Diagnostic Coding Intensity among a Pneumonia Inpatient Cohort Using a Risk-Adjustment Model and Claims Data: A U.S. Population-Based Study

Abstract

1. Introduction

2. Materials and Methods

2.1. Data

2.2. Statistical Analysis

3. Results

3.1. Descriptive Statistics

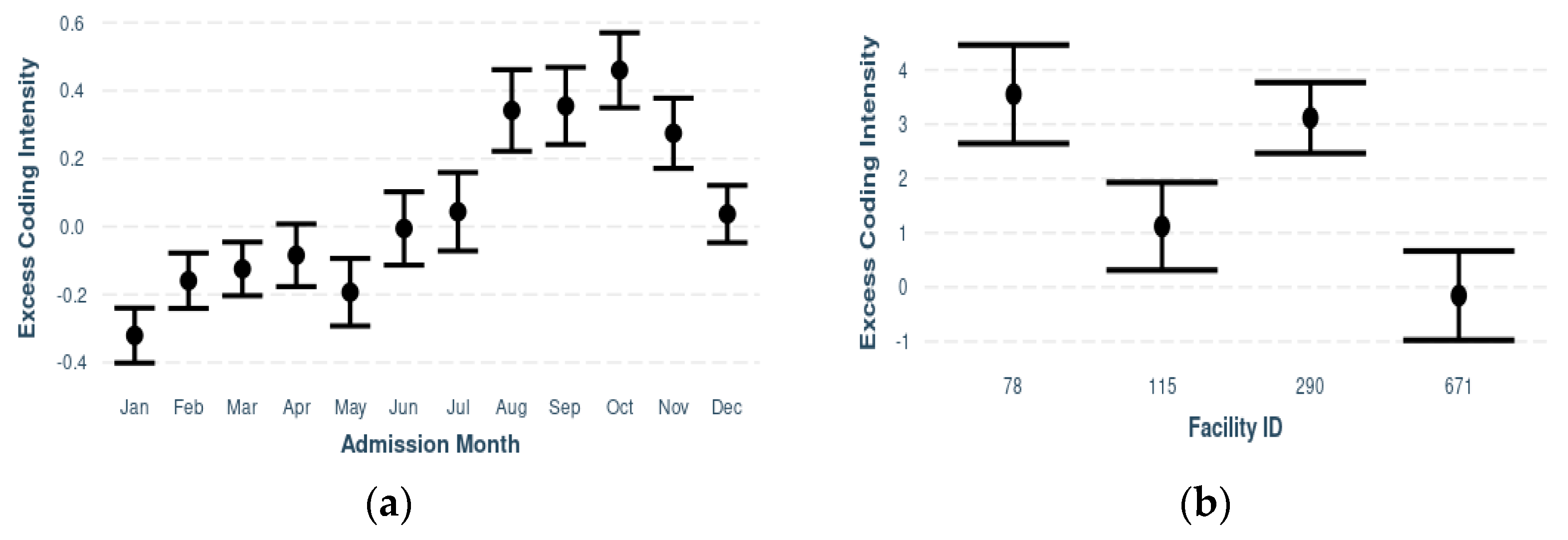

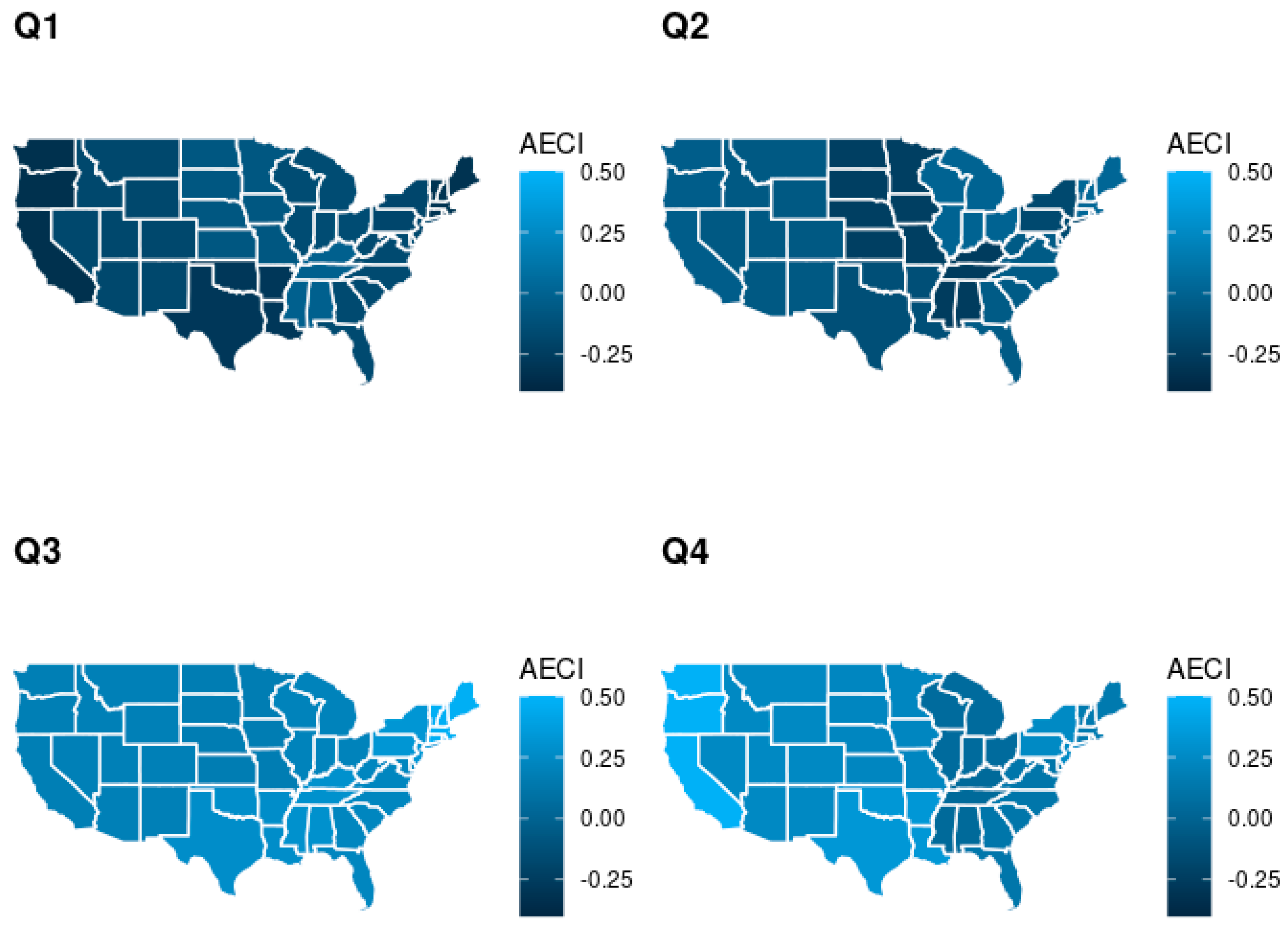

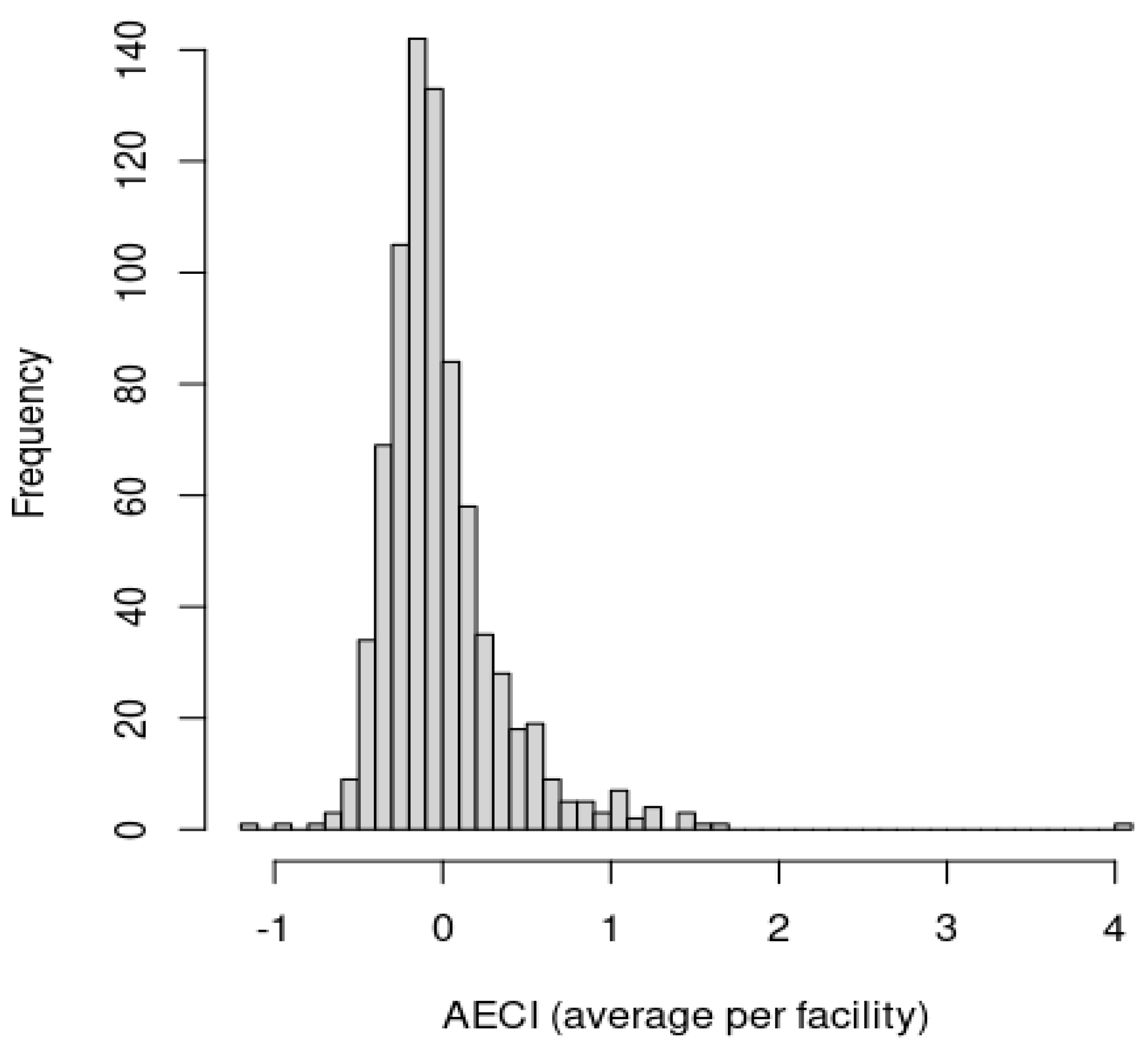

3.2. Model Outcomes

4. Discussion

Strengths and Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Characteristics | Count (%) or Mean (SD) |
|---|---|
| Outcome | |
| Additional Diagnoses (mean, SD) | 14.18 (7.74) |
| Patient-Level Characteristics | |
| Age (years) | |
| <1 | 2685 (1.47%) |
| 1–4 | 6576 (3.60%) |
| 5–9 | 3213 (1.76%) |
| 10–14 | 1406 (0.77%) |
| 15–19 | 1177 (0.64%) |
| 20–24 | 1459 (0.80%) |
| 25–34 | 5159 (2.82%) |
| 35–44 | 7798 (4.27%) |
| 45–54 | 14,812 (8.11%) |
| 55–59 | 12,980 (7.11%) |
| 60–64 | 16,335 (8.94%) |
| 65–69 | 17,767 (9.73%) |
| 70–74 | 20,453 (11.20%) |
| 75–79 | 20,695 (11.33%) |
| 80–84 | 19,104 (10.46%) |
| ≥85 | 31,047 (17.00%) |
| Sex | |
| Female | 98,828 (54.10%) |
| Male | 83,768 (45.86%) |
| Unknown | 70 (0.04%) |
| Race | |
| American Indian | 1418 (0.78%) |
| Asian | 3319 (1.82%) |
| Black | 22,954 (12.57%) |
| Pacific Islander | 1194 (0.65%) |
| White | 140,060 (76.68%) |
| Other | 10,301 (5.64%) |
| Unknown | 3420 (1.87%) |
| AHRQ Overall Tract Summary (mean, SD) | 0.54 (0.24) |
| Primary Payor | |
| Charity | 659 (0.36%) |
| Commercial Indemnity | 7808 (4.27%) |
| Direct Employer Contract | 359 (0.20%) |
| Managed Care Capitated | 399 (0.22%) |
| Managed Care Non-Capitated | 19,645 (10.75%) |
| Medicaid–Managed Care Capitated | 2620 (1.43%) |
| Medicaid–Managed Care Non-Capitated | 14,695 (8.04%) |
| Medicaid Traditional | 8776 (4.80%) |
| Medicare–Managed Care Capitated | 6160 (3.37%) |
| Medicare–Managed Care Non-Capitated | 35,100 (19.22%) |
| Medicare Traditional | 77,108 (42.21%) |
| Other Government Payors | 2515 (1.38%) |
| Self-Pay | 5319 (2.91%) |
| Other | 1503 (0.82%) |
| Point of Origin | |
| Clinic | 14,013 (7.67%) |
| Non-Healthcare Facility Point of Origin | 153,451 (84.01%) |
| Transferred from a Hospital (Different Facility) | 7695 (4.21%) |
| Transferred from Department Unit in Same Hospital | 989 (0.54%) |
| Transferred from Health Facilities | 1642 (0.90%) |
| Transferred from Skilled Nursing Facility or Intermediate Care Facility | 3888 (2.13%) |
| Other | 175 (0.10%) |
| Information Not Available | 813 (0.45%) |
| Discharge Status | |
| Discharged to Home Health Organization | 27,954 (15.30%) |
| Discharged to Home or Self-Care | 115,553 (63.26%) |
| Discharged to Hospice–Home | 2416 (1.32%) |
| Discharged to Hospice–Medical Facility | 2021 (1.11%) |
| Discharged/Transferred to ICF | 1810 (0.99%) |
| Discharged/Transferred to Other Facility | 2095 (1.15%) |
| Discharged/Transferred to Psychiatric Hospital | 325 (0.18%) |
| Discharged/Transferred to SNF | 21,544 (11.79%) |
| Discharged/Transferred to Swing Bed | 509 (0.28%) |
| Discharged/Transferred to Other Health Institute Not in List | 242 (0.13%) |
| Discharged/Transferred to a Long-Term Care Hospital | 711 (0.39%) |
| Discharged/Transferred to Another Rehabilitation Facility | 2079 (1.14%) |
| Expired | 1963 (1.07%) |
| Left Against Medical Advice | 2547 (1.39%) |
| Other | 897 (0.49%) |
| ICD-10-CM Principal Diagnosis Code | |
| J09: Influenza due to certain identified influenza viruses | 1004 (0.55%) |
| J10: Influenza due to other identified influenza virus | 25,419 (13.92%) |
| J11: Influenza due to unidentified influenza virus | 1368 (0.75%) |
| J12: Viral pneumonia, not elsewhere classified | 8590 (4.70%) |
| J13: Pneumonia due to Streptococcus pneumoniae | 1977 (1.08%) |
| J14: Pneumonia due to Hemophilus influenzae | 583 (0.32%) |
| J15: Bacterial pneumonia, not elsewhere classified | 13,517 (7.40%) |
| J16: Pneumonia due to other infectious organisms, not elsewhere classified | 523 (0.29%) |
| J18: Pneumonia, unspecified organism | 129,404 (70.84%) |
| R09: Other symptoms and signs involving the circulatory and respiratory system (as a primary diagnosis code) | 243 (0.13%) |
| Other | 38 (0.02%) |
| MS-DRG Code | |
| 193: Simple Pneumonia and Pleurisy with MCC | 92,239 (50.50%) |
| 194: Simple Pneumonia and Pleurisy with CC | 66,386 (36.34%) |
| 195: Simple Pneumonia and Pleurisy without CC/MCC | 24,041 (13.16%) |
| Length of Stay (days; mean, SD) | 4.09 (3.53) |
| Facility-Level Characteristics | |
| Teaching Status | |
| No | 148,656 (81.38%) |
| Yes | 31,355 (17.17%) |
| To Be Determined | 2655 (1.45%) |
| Academic Status | |
| No | 161,362 (88.34%) |
| Yes | 21,304 (11.66%) |
| Urban/Rural Status | |
| Rural | 29,964 (16.40%) |
| Urban | 152,702 (83.60%) |
| Ownership Status | |
| Government–Federal | 567 (0.31%) |
| Government–Hospital District or Authority | 11,565 (6.33%) |
| Government–Local | 4342 (2.38%) |
| Government–State | 971 (0.53%) |
| Physician | 271 (0.15%) |
| Proprietary | 11,771 (6.44%) |
| Voluntary Non-Profit (Church) | 26,489 (14.50%) |
| Voluntary Non-Profit (Private) | 116,090 (63.55%) |
| Voluntary Non-Profit (Other) | 10,600 (5.80%) |
| Size (Bed Count) | |
| [1, 100] | 18,437 (10.09%) |
| (100, 200] | 30,232 (16.55%) |
| (200, 300] | 37,147 (20.34%) |
| (300, 400] | 32,176 (17.61%) |
| (400, 500] | 19,970 (10.93%) |
| (500, 600] | 14,741 (8.07%) |
| (600, 700] | 9347 (5.12%) |
| (700, 800] | 9046 (4.95%) |
| (800, 900] | 5789 (3.17%) |
| (900, 1000] | 2355 (1.29%) |
| (1000, 2000] | 3426 (1.88%) |
| Case Mix Index (rounded) | |
| 0 | 5931 (3.25%) |
| 1 | 75,516 (41.34%) |
| 2 | 100,970 (55.28%) |
| 3 | 242 (0.13%) |
| 4 | 7 (0.01%) |
| Census Region | |
| East North Central | 36,144 (19.79%) |
| East South Central | 15,916 (8.71%) |
| Middle Atlantic | 23,642 (12.94%) |
| Mountain | 9886 (5.41%) |
| New England | 4166 (2.28%) |
| Pacific | 13,568 (7.43%) |
| South Atlantic | 49,091 (26.87%) |
| West North Central | 9327 (5.11%) |
| West South Central | 20,926 (11.46%) |
| Admission Month | |
| January | 21,438 (11.74%) |
| February | 21,040 (11.52%) |
| March | 22,458 (12.29%) |
| April | 16,411 (8.98%) |
| May | 14,129 (7.73%) |
| June | 12,014 (6.58%) |
| July | 10,490 (5.74%) |
| August | 9708 (5.31%) |
| September | 10,815 (5.92%) |
| October | 11,463 (6.28%) |
| November | 13,056 (7.15%) |
| December | 19,644 (10.75%) |

AHRQ, Agency for Healthcare Research and Quality; CC, complication or comorbidity; ICF, intermediate care facility; MCC, major complication or comorbidity; MS-DRG, Medicare Severity Diagnosis-Related Group; SD, standard deviation; SNF, skilled nursing facility.
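
Each categorical row in the descriptive table reports a count and its share of the cohort. Assuming every percentage is computed over the full cohort and rounded to two decimals, the cohort size N can be recovered from any exhaustive grouping (here, Sex), and an independent grouping (here, the 16 Age rows) should sum to the same N. The Python sketch below checks that arithmetic on counts copied from the table; the helper name `summarize_counts` is hypothetical and not taken from the study's code.

```python
def summarize_counts(counts):
    """Return the total N and, per category, (count, percent of N rounded to 2 dp)."""
    total = sum(counts.values())
    rows = {label: (c, round(100 * c / total, 2)) for label, c in counts.items()}
    return total, rows


# Counts copied from the Sex rows of the descriptive table
sex_counts = {"Female": 98_828, "Male": 83_768, "Unknown": 70}

# Counts copied from the 16 Age rows (<1 through >=85), in table order
age_counts = [2685, 6576, 3213, 1406, 1177, 1459, 5159, 7798,
              14_812, 12_980, 16_335, 17_767, 20_453, 20_695, 19_104, 31_047]

n, rows = summarize_counts(sex_counts)
print(f"N = {n:,}")
for label, (count, pct) in rows.items():
    print(f"{label}: {count:,} ({pct:.2f}%)")

# Consistency check: an independent exhaustive grouping should give the same N
assert sum(age_counts) == n, "Age and Sex rows imply different cohort sizes"
```

Run as written, this prints N = 182,666 followed by the three Sex rows exactly as they appear in the table, and the Age rows sum to the same N.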

| Patient-Level Characteristics | IRR | 95% CI | p-Value |
|---|---|---|---|
| Intercept | 16.27 | 16.19–16.35 | <0.0001 |
| Age (Reference: ≥85) | | | |
| <1 | 0.36 | 0.35–0.36 | <0.0001 |
| 1–4 | 0.42 | 0.41–0.42 | <0.0001 |
| 5–9 | 0.46 | 0.45–0.47 | <0.0001 |
| 10–14 | 0.53 | 0.52–0.55 | <0.0001 |
| 15–19 | 0.57 | 0.56–0.59 | <0.0001 |
| 20–24 | 0.68 | 0.66–0.69 | <0.0001 |
| 25–34 | 0.76 | 0.75–0.76 | <0.0001 |
| 35–44 | 0.90 | 0.89–0.91 | <0.0001 |
| 45–54 | 1.02 | 1.01–1.03 | <0.0001 |
| 55–59 | 1.07 | 1.06–1.08 | <0.0001 |
| 60–64 | 1.09 | 1.08–1.10 | <0.0001 |
| 65–69 | 1.05 | 1.04–1.05 | <0.0001 |
| 70–74 | 1.07 | 1.06–1.07 | <0.0001 |
| 75–79 | 1.07 | 1.06–1.07 | <0.0001 |
| 80–84 | 1.05 | 1.05–1.06 | <0.0001 |
| Sex (Reference: Female) | | | |
| Male | 1.01 | 1.00–1.01 | <0.0001 |
| Unknown | 1.03 | 0.96–1.10 | 0.3900 |
| Race (Reference: White) | | | |
| American Indian | 1.05 | 1.03–1.06 | <0.0001 |
| Asian | 0.85 | 0.84–0.86 | <0.0001 |
| Black | 0.99 | 0.99–1.00 | <0.0001 |
| Pacific Islander | 0.89 | 0.87–0.90 | <0.0001 |
| Other | 0.93 | 0.92–0.93 | <0.0001 |
| Unknown | 0.87 | 0.87–0.88 | <0.0001 |
| AHRQ Overall Tract Summary | 0.95 | 0.94–0.95 | <0.0001 |
| Primary Payor (Reference: Medicare Traditional) | | | |
| Charity | 0.71 | 0.70–0.73 | <0.0001 |
| Commercial Indemnity | 0.86 | 0.85–0.86 | <0.0001 |
| Direct Employer Contract | 0.86 | 0.83–0.89 | <0.0001 |
| Managed Care Capitated | 0.86 | 0.83–0.88 | <0.0001 |
| Managed Care Non-Capitated | 0.81 | 0.80–0.81 | <0.0001 |
| Medicaid–Managed Care Capitated | 0.87 | 0.86–0.88 | <0.0001 |
| Medicaid–Managed Care Non-Capitated | 0.91 | 0.90–0.91 | <0.0001 |
| Medicaid Traditional | 0.94 | 0.93–0.95 | <0.0001 |
| Medicare–Managed Care Capitated | 0.99 | 0.99–1.00 | 0.1072 |
| Medicare–Managed Care Non-Capitated | 0.99 | 0.99–0.99 | <0.0001 |
| Other Government Payors | 0.94 | 0.93–0.95 | <0.0001 |
| Self-Pay | 0.73 | 0.73–0.74 | <0.0001 |
| Other | 0.87 | 0.86–0.88 | <0.0001 |
| Point of Origin (Reference: Non-Healthcare Facility) | | | |
| Clinic | 0.96 | 0.96–0.97 | <0.0001 |
| Transferred from a Hospital (Different Facility) | 1.00 | 0.99–1.01 | 0.7277 |
| Transferred from Department Unit in Same Hospital; Separate Claim | 0.87 | 0.85–0.88 | <0.0001 |
| Transferred from Health Facility | 0.97 | 0.95–0.98 | <0.0001 |
| Transferred from Skilled Nursing Facility or Intermediate Care Facility | 1.02 | 1.01–1.03 | <0.0001 |
| Other | 0.97 | 0.93–1.01 | 0.1328 |
| Information Not Available | 0.98 | 0.96–0.99 | 0.0081 |
| Patient Discharge Status (Reference: Discharged to Home or Self-Care) | | | |
| Discharged to Home Health Organization | 1.11 | 1.11–1.12 | <0.0001 |
| Discharged to Hospice–Home | 1.22 | 1.21–1.23 | <0.0001 |
| Discharged to Hospice–Medical Facility | 1.26 | 1.25–1.28 | <0.0001 |
| Discharged/Transferred to Intermediate Care Facility | 1.07 | 1.06–1.09 | <0.0001 |
| Discharged/Transferred to Other Facility | 1.13 | 1.12–1.15 | <0.0001 |
| Discharged/Transferred to Psychiatric Hospital | 0.98 | 0.96–1.01 | 0.3130 |
| Discharged/Transferred to Skilled Nursing Facility | 1.12 | 1.12–1.13 | <0.0001 |
| Discharged/Transferred to Swing Bed | 1.07 | 1.05–1.10 | <0.0001 |
| Discharged/Transferred to Other Health Institute Not in List | 1.04 | 1.00–1.07 | 0.0294 |
| Discharged/Transferred to a Long-Term Care Hospital | 1.15 | 1.13–1.17 | <0.0001 |
| Discharged/Transferred to Another Rehabilitation Facility | 1.13 | 1.12–1.14 | <0.0001 |
| Expired | 1.32 | 1.31–1.33 | <0.0001 |
| Left Against Medical Advice | 1.07 | 1.06–1.08 | <0.0001 |
| Other | 1.10 | 1.08–1.12 | <0.0001 |
| ICD-10-CM Principal Diagnosis (Reference: J18: Pneumonia, unspecified organism) | | | |
| J09: Influenza due to certain identified influenza viruses | 0.87 | 0.85–0.89 | <0.0001 |
| J10: Influenza due to other identified influenza virus | 0.93 | 0.93–0.93 | <0.0001 |
| J11: Influenza due to unidentified influenza virus | 0.91 | 0.90–0.92 | <0.0001 |
| J12: Viral pneumonia, not elsewhere classified | 1.00 | 1.00–1.01 | 0.6140 |
| J13: Pneumonia due to Streptococcus pneumoniae | 0.95 | 0.94–0.97 | <0.0001 |
| J14: Pneumonia due to Hemophilus influenzae | 1.01 | 0.99–1.03 | 0.3320 |
| J15: Bacterial pneumonia, not elsewhere classified | 1.00 | 0.99–1.00 | 0.2477 |
| J16: Pneumonia due to other infectious organisms, not elsewhere classified | 0.96 | 0.94–0.99 | 0.0020 |
| R09: Other symptoms and signs involving the circulatory and respiratory system (as a primary diagnosis code) | 1.15 | 1.11–1.19 | <0.0001 |
| Other | 1.08 | 1.00–1.17 | 0.0489 |
| MS-DRG Code (Reference: 193: Simple Pneumonia and Pleurisy with MCC) | | | |
| 194: Simple Pneumonia and Pleurisy with CC | 0.89 | 0.89–0.89 | <0.0001 |
| 195: Simple Pneumonia and Pleurisy without CC/MCC | 0.60 | 0.60–0.61 | <0.0001 |
| Log of Length of Stay (spline terms): χ² = 25,873 (p < 0.0001) | | | |
| Model fit: deviance explained 43.5%; adjusted R² 0.392 | | | |

AHRQ, Agency for Healthcare Research and Quality; CC, complication or comorbidity; CI, confidence interval; IRR, incidence rate ratio; MCC, major complication or comorbidity; MS-DRG, Medicare Severity Diagnosis-Related Group.
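
The patient-level estimates are reported as incidence rate ratios (IRRs). Assuming the model uses a log link, as count models such as Poisson or negative binomial regression do (the methods text is not reproduced in this section), an IRR translates into a percentage change in the expected number of additional diagnoses as (IRR − 1) × 100, its logarithm recovers the coefficient on the linear-predictor scale, and IRRs for different covariates multiply. The Python sketch below applies that arithmetic to three rows copied from the table; the dictionary labels and the helper `pct_change` are illustrative, not the study's code.

```python
import math

# IRRs copied from the patient-level table; reference categories in parentheses
irrs = {
    "Expired (vs. Discharged to Home or Self-Care)": 1.32,
    "Self-Pay (vs. Medicare Traditional)": 0.73,
    "MS-DRG 195 (vs. MS-DRG 193)": 0.60,
}


def pct_change(irr):
    """Percentage change in the expected count implied by an IRR (log link assumed)."""
    return (irr - 1.0) * 100.0


for label, irr in irrs.items():
    # log(IRR) recovers the coefficient on the linear-predictor (log) scale
    print(f"{label}: {pct_change(irr):+.0f}% (log-scale coefficient {math.log(irr):+.3f})")

# Under a log link, effects add on the log scale, so IRRs multiply
combined = (irrs["Expired (vs. Discharged to Home or Self-Care)"]
            * irrs["Self-Pay (vs. Medicare Traditional)"])
print(f"Expired and Self-Pay together: IRR {combined:.3f} ({pct_change(combined):+.1f}%)")
```

For example, the Expired row (IRR 1.32) corresponds to roughly 32% more additional diagnoses than discharge to home or self-care, other covariates held fixed. The combined figure assumes no interaction between the two covariates, which the table alone cannot confirm.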

| Facility-Level Characteristics | Estimate | SE | p-Value |
|---|---|---|---|
| Intercept | −0.38 | 0.10 | 0.0002 |
| Teaching Status (Reference: No) | | | |
| Yes | 0.56 | 0.06 | <0.0001 |
| TBD | 0.02 | 0.18 | 0.8601 |
| Academic Status (Reference: No) | | | |
| Yes | −0.47 | 0.07 | <0.0001 |
| Urban/Rural Status (Reference: Urban) | | | |
| Rural | 0.04 | 0.04 | 0.4191 |
| Ownership Status (Reference: Voluntary Non-Profit (Private)) | | | |
| Government–Federal | −0.06 | 0.27 | 0.8309 |
| Government–Hospital District or Authority | −0.15 | 0.06 | 0.0130 |
| Government–Local | −2.07 | 0.10 | <0.0001 |
| Government–State | −1.44 | 0.20 | <0.0001 |
| Physician | −0.32 | 0.37 | 0.3812 |
| Proprietary | −0.74 | 0.06 | <0.0001 |
| Voluntary Non-Profit (Church) | −0.15 | 0.04 | 0.0004 |
| Voluntary Non-Profit (Other) | −0.27 | 0.06 | <0.0001 |
| Size (Bed Count) (Reference: [1, 100]) | | | |
| (100, 200] | 0.02 | 0.06 | 0.7529 |
| (200, 300] | 0.04 | 0.06 | 0.4527 |
| (300, 400] | 0.29 | 0.06 | <0.0001 |
| (400, 500] | 0.31 | 0.07 | <0.0001 |
| (500, 600] | 0.42 | 0.07 | <0.0001 |
| (600, 700] | −0.21 | 0.09 | 0.0177 |
| (700, 800] | 0.51 | 0.09 | <0.0001 |
| (800, 900] | 0.16 | 0.11 | 0.1433 |
| (900, 1000] | 0.26 | 0.15 | 0.0792 |
| (1000, 2000] | 0.57 | 0.12 | <0.0001 |
| Census Region (Reference: South Atlantic) | | | |
| East North Central | −0.01 | 0.04 | 0.8572 |
| East South Central | −0.68 | 0.06 | <0.0001 |
| Middle Atlantic | −1.59 | 0.05 | <0.0001 |
| Mountain | −0.55 | 0.07 | <0.0001 |
| New England | −1.45 | 0.10 | <0.0001 |
| Pacific | −1.11 | 0.06 | <0.0001 |
| West North Central | −0.43 | 0.07 | <0.0001 |
| West South Central | −0.36 | 0.05 | <0.0001 |
| Case Mix Index (Reference: 0) | | | |
| 1 | 0.80 | 0.08 | <0.0001 |
| 2 | 0.84 | 0.08 | <0.0001 |
| 3 | 2.34 | 0.40 | <0.0001 |
| 4 | −1.45 | 2.26 | 0.5225 |

SE, standard error; TBD, to be determined.
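
The facility-level table reports an estimate, its standard error (SE), and a p-value for each term. Assuming these are two-sided Wald tests with a normal reference distribution (this section does not state which test was used), z = estimate / SE, the p-value is 2 × (1 − Φ(|z|)), and an approximate 95% interval is estimate ± 1.96 × SE. The Python sketch below recomputes these quantities for three rows; `wald_test` is a hypothetical helper, not the study's code. Because the published estimates and SEs are rounded to two decimals, recomputed p-values only approximate the reported ones when |z| is small: the Rural row (0.04, 0.04) gives z = 1 and p ≈ 0.32 against the reported 0.4191, and the Physician row gives p ≈ 0.39 against 0.3812.

```python
import math


def wald_test(estimate, se, z_crit=1.96):
    """Two-sided Wald z-test and approximate 95% CI, assuming a normal reference."""
    z = estimate / se
    # 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)); erfc avoids cancellation for large |z|
    p = math.erfc(abs(z) / math.sqrt(2.0))
    ci = (estimate - z_crit * se, estimate + z_crit * se)
    return z, p, ci


# (estimate, SE) pairs copied from the facility-level table
facility_rows = {
    "Teaching Status: Yes": (0.56, 0.06),
    "Ownership: Physician": (-0.32, 0.37),
    "Urban/Rural: Rural": (0.04, 0.04),
}

for label, (est, se) in facility_rows.items():
    z, p, (lo, hi) = wald_test(est, se)
    print(f"{label}: z = {z:.2f}, p = {p:.4g}, 95% CI {lo:.2f} to {hi:.2f}")
```

Using `math.erfc` directly, rather than computing 1 − Φ(|z|), keeps very small p-values such as the Teaching Status row from collapsing to zero through floating-point cancellation.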

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mishra, R.; Verma, H.; Aynala, V.B.; Arredondo, P.R.; Martin, J.; Korvink, M.; Gunn, L.H. Diagnostic Coding Intensity among a Pneumonia Inpatient Cohort Using a Risk-Adjustment Model and Claims Data: A U.S. Population-Based Study. Diagnostics 2022, 12, 1495. https://doi.org/10.3390/diagnostics12061495
