Interpretation of Thoracic Radiography Shows Large Discrepancies Depending on the Qualification of the Physician—Quantitative Evaluation of Interobserver Agreement in a Representative Emergency Department Scenario

Abstract

1. Introduction

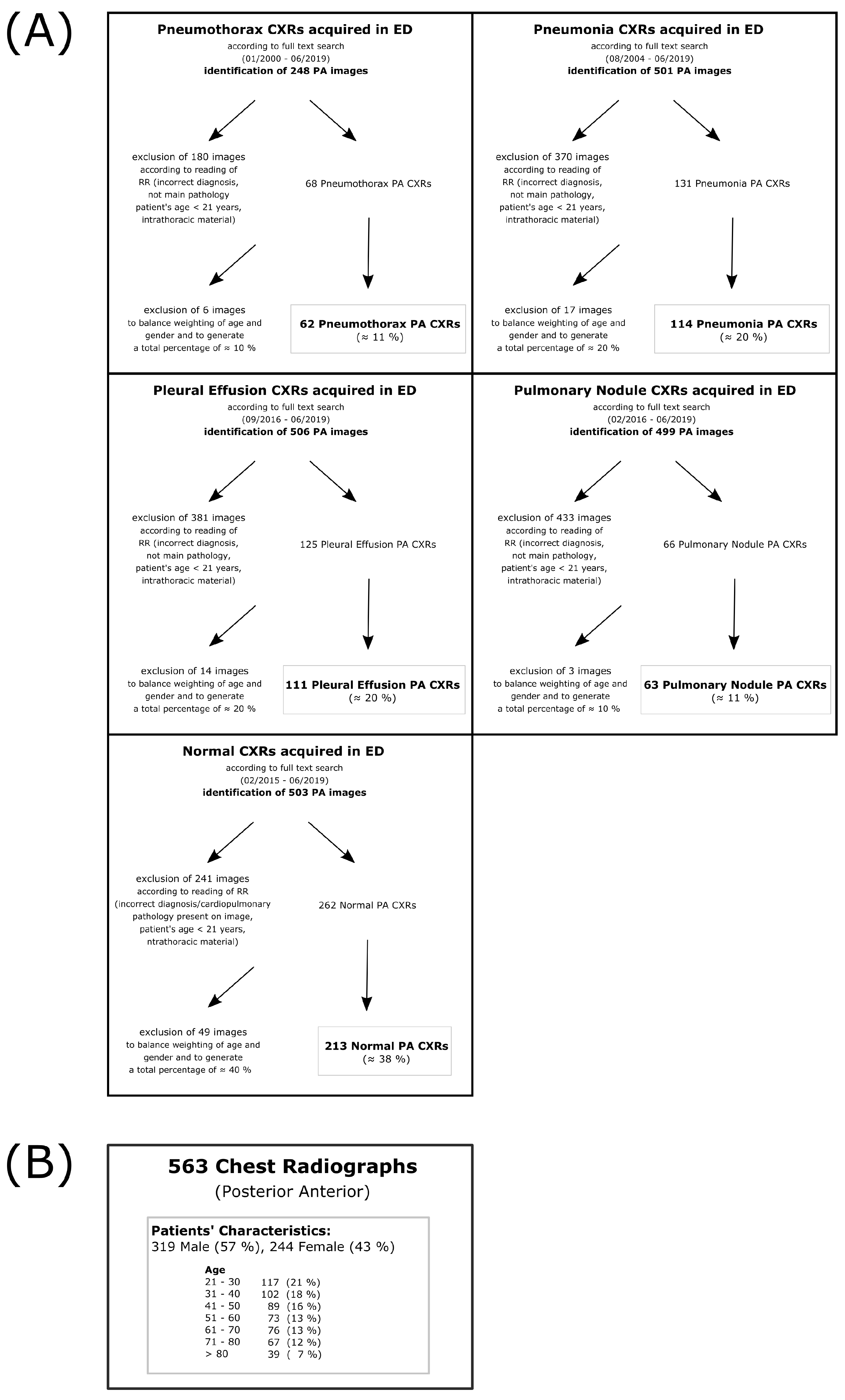

2. Materials and Methods

2.1. Patient Identification and Reading

2.2. Statistics

3. Results

3.1. Reading Duration

3.2. Distribution of Likert Scale-Based Diagnosis

3.3. Interrater Reliability

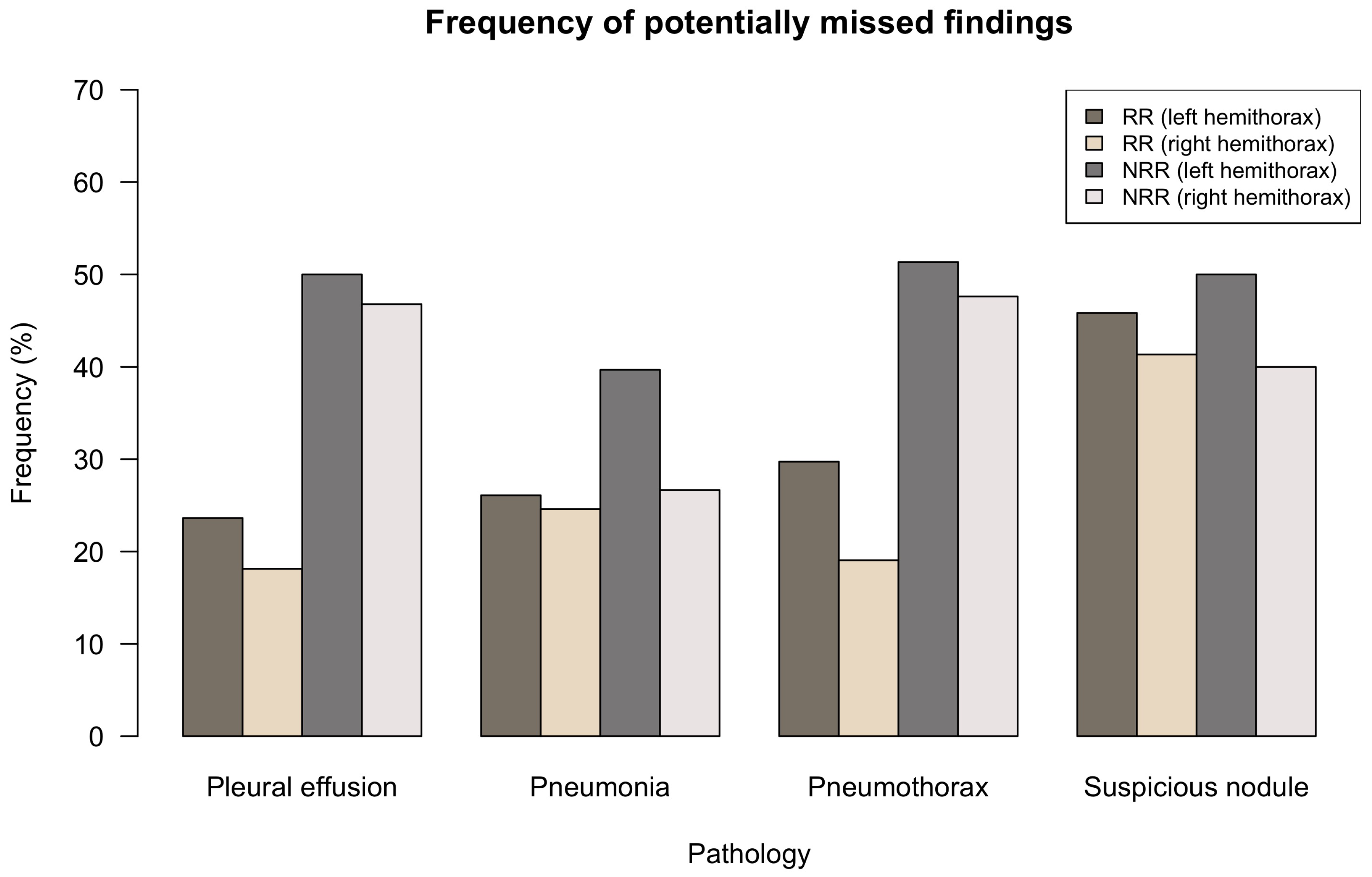

3.4. Potentially Missed Findings

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | artificial intelligence |
| BCR | board-certified radiologist(s) |
| CT | computed tomography |
| CXR | chest radiography |
| CXRs | chest radiographs |
| ED | emergency department |
| IM | internal medicine |
| LH | left hemithorax |
| NRR | non-radiology resident(s) |
| PA | posteroanterior projection |
| PACS | picture archiving and communication system |
| RH | right hemithorax |
| RR | radiology resident(s) |
| YOE | years of experience |


| | Left Hemithorax (LH) | Right Hemithorax (RH) |
|---|---|---|
| Pleural effusion | | |
| Kruskal–Wallis | p < 0.001 | p < 0.001 |
| BCR–RR | p = 0.968 | p = 0.898 |
| BCR–NRR | p < 0.001 | p < 0.001 |
| RR–NRR | p < 0.001 | p < 0.001 |
| Pneumonia | | |
| Kruskal–Wallis | p = 0.894 | p < 0.001 |
| BCR–RR | (*) | p = 0.789 |
| BCR–NRR | (*) | p < 0.001 |
| RR–NRR | (*) | p = 0.007 |
| Pneumothorax | | |
| Kruskal–Wallis | p = 0.066 | p = 0.986 |
| BCR–RR | (*) | (*) |
| BCR–NRR | (*) | (*) |
| RR–NRR | (*) | (*) |
| Suspicious nodule | | |
| Kruskal–Wallis | p < 0.001 | p < 0.001 |
| BCR–RR | p = 0.854 | p = 0.796 |
| BCR–NRR | p = 0.001 | p < 0.001 |
| RR–NRR | p < 0.001 | p < 0.001 |
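The Kruskal–Wallis rows in the table above presumably test whether the Likert-scale ratings differ across the three reader groups (BCR, RR, NRR) before the pairwise comparisons are examined. As a hedged illustration of what that omnibus test computes on ordinal scores, the sketch below implements the tie-corrected H statistic in plain Python. It is not the authors' code: the function name `kruskal_wallis_3` is hypothetical, it assumes exactly three groups so that the chi-square approximation has 2 degrees of freedom (whose survival function is simply exp(−H/2)), and it does not reproduce the pairwise post hoc procedure, which the table does not name.

```python
import math
from collections import Counter
from typing import Sequence


def average_ranks(values: Sequence[float]) -> list[float]:
    """Rank values from 1..n; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_3(*groups: Sequence[float]) -> tuple[float, float]:
    """Tie-corrected Kruskal-Wallis H for three groups, with its p-value.

    The p-value uses the chi-square approximation with k - 1 = 2 degrees
    of freedom, whose survival function has the closed form exp(-H / 2).
    """
    if len(groups) != 3:
        raise ValueError("this sketch handles exactly three groups (df = 2)")
    pooled = [x for g in groups for x in g]
    n_total = len(pooled)
    ranks = average_ranks(pooled)  # rank all scores together
    weighted = 0.0
    start = 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])  # this group's ranks
        weighted += rank_sum**2 / len(g)
        start += len(g)
    h = 12 * weighted / (n_total * (n_total + 1)) - 3 * (n_total + 1)
    # Divide by the tie correction 1 - sum(t^3 - t) / (N^3 - N).
    ties = sum(t**3 - t for t in Counter(pooled).values())
    correction = 1 - ties / (n_total**3 - n_total)
    if correction == 0:
        raise ValueError("all scores are identical; H is undefined")
    h /= correction
    return h, math.exp(-h / 2)
```

For example, `kruskal_wallis_3([1, 2, 3], [4, 5, 6], [7, 8, 9])` gives H ≈ 7.2 and p ≈ 0.027. Tie correction matters here because 5-point Likert data contain many tied scores; in practice, `scipy.stats.kruskal` computes the same tie-corrected statistic for any number of groups.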

| | Overall Inter-Individual Agreement (n = 9), Kendall | BCR/RR Consensus Agreement, Spearman | BCR/NRR Consensus Agreement, Spearman | RR/NRR Consensus Agreement, Spearman | Overall Consensus Agreement (n = 3), Kendall | BCR Inter-Individual Agreement (n = 3), Kendall | RR Inter-Individual Agreement (n = 3), Kendall | NRR Inter-Individual Agreement (n = 3), Kendall |
|---|---|---|---|---|---|---|---|---|
| Pleural effusion | | | | | | | | |
| Left hemithorax (LH) | 0.562 (p < 0.001) | 0.774 (p < 0.001) | 0.626 (p < 0.001) | 0.648 (p < 0.001) | 0.787 (p < 0.001) | 0.654 (p < 0.001) | 0.756 (p < 0.001) | 0.663 (p < 0.001) |
| Right hemithorax (RH) | 0.647 (p < 0.001) | 0.799 (p < 0.001) | 0.671 (p < 0.001) | 0.693 (p < 0.001) | 0.812 (p < 0.001) | 0.742 (p < 0.001) | 0.772 (p < 0.001) | 0.750 (p < 0.001) |
| Pneumonia | | | | | | | | |
| Left hemithorax (LH) | 0.532 (p < 0.001) | 0.696 (p < 0.001) | 0.509 (p < 0.001) | 0.590 (p < 0.001) | 0.732 (p < 0.001) | 0.685 (p < 0.001) | 0.703 (p < 0.001) | 0.584 (p < 0.001) |
| Right hemithorax (RH) | 0.568 (p < 0.001) | 0.709 (p < 0.001) | 0.550 (p < 0.001) | 0.669 (p < 0.001) | 0.760 (p < 0.001) | 0.676 (p < 0.001) | 0.763 (p < 0.001) | 0.623 (p < 0.001) |
| Pneumothorax | | | | | | | | |
| Left hemithorax (LH) | 0.719 (p < 0.001) | 0.773 (p < 0.001) | 0.665 (p < 0.001) | 0.725 (p < 0.001) | 0.806 (p < 0.001) | 0.827 (p < 0.001) | 0.898 (p < 0.001) | 0.718 (p < 0.001) |
| Right hemithorax (RH) | 0.710 (p < 0.001) | 0.825 (p < 0.001) | 0.515 (p < 0.001) | 0.521 (p < 0.001) | 0.747 (p < 0.001) | 0.861 (p < 0.001) | 0.843 (p < 0.001) | 0.726 (p < 0.001) |
| Suspicious nodule | | | | | | | | |
| Left hemithorax (LH) | 0.391 (p < 0.001) | 0.561 (p < 0.001) | 0.300 (p < 0.001) | 0.303 (p < 0.001) | 0.578 (p < 0.001) | 0.607 (p < 0.001) | 0.679 (p < 0.001) | 0.502 (p < 0.001) |
| Right hemithorax (RH) | 0.417 (p < 0.001) | 0.623 (p < 0.001) | 0.359 (p < 0.001) | 0.291 (p < 0.001) | 0.595 (p < 0.001) | 0.686 (p < 0.001) | 0.632 (p < 0.001) | 0.509 (p < 0.001) |
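The agreement table above mixes two rank-based coefficients: "Kendall" for groups of n raters and "Spearman" for pairs of consensus readings. Assuming the Kendall columns report Kendall's coefficient of concordance W (with tie correction) and the Spearman columns report Spearman's rank correlation, the sketch below shows how each is computed from Likert scores. It is a minimal illustration, not the authors' implementation; the function names and the toy reader scores are hypothetical, and `ratings` is laid out as one row per rater and one column per radiograph.

```python
from collections import Counter
from math import sqrt
from typing import Sequence


def average_ranks(values: Sequence[float]) -> list[float]:
    """Rank values from 1..n; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kendalls_w(ratings: Sequence[Sequence[float]]) -> float:
    """Tie-corrected Kendall's W; ratings[r][s] = score of rater r for case s."""
    m = len(ratings)      # number of raters (e.g., 9 readers, or 3 per group)
    n = len(ratings[0])   # number of rated cases (radiographs)
    rank_rows = [average_ranks(row) for row in ratings]
    # Sum of each case's ranks across all raters.
    rank_sums = [sum(row[s] for row in rank_rows) for s in range(n)]
    mean_sum = m * (n + 1) / 2
    spread = sum((r - mean_sum) ** 2 for r in rank_sums)
    # Tie correction: (t^3 - t) summed over every tie group of every rater.
    ties = sum(t**3 - t for row in ratings for t in Counter(row).values())
    return 12 * spread / (m**2 * (n**3 - n) - m * ties)


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rank correlation: Pearson's r computed on average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / sqrt(var_x * var_y)


if __name__ == "__main__":
    # Hypothetical Likert scores (1-5) from three readers for six radiographs.
    readers = [
        [1, 2, 5, 4, 1, 3],
        [1, 3, 5, 4, 2, 3],
        [2, 2, 4, 5, 1, 3],
    ]
    print(f"Kendall's W: {kendalls_w(readers):.3f}")
    print(f"Spearman rho (reader 1 vs 2): {spearman_rho(readers[0], readers[1]):.3f}")
```

W ranges from 0 (no concordance) to 1 (identical rankings), so identical rows, even with tied scores, yield W = 1. Because W pools any number of raters while Spearman's rho compares exactly two series, the sketch mirrors why the table pairs Kendall with n-rater groups and Spearman with two-way consensus comparisons. `scipy.stats.spearmanr` offers a library alternative for the pairwise coefficient.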

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Rudolph, J.; Fink, N.; Dinkel, J.; Koliogiannis, V.; Schwarze, V.; Goller, S.; Erber, B.; Geyer, T.; Hoppe, B.F.; Fischer, M.; Ben Khaled, N.; Jörgens, M.; Ricke, J.; Rueckel, J.; Sabel, B.O. Interpretation of Thoracic Radiography Shows Large Discrepancies Depending on the Qualification of the Physician—Quantitative Evaluation of Interobserver Agreement in a Representative Emergency Department Scenario. Diagnostics 2021, 11, 1868. https://doi.org/10.3390/diagnostics11101868