Ensuring Driving and Road Safety of Autonomous Vehicles Using a Control Optimiser Interaction Framework Through Smart “Thing” Information Sensing and Actuation

Abstract

1. Introduction

- A brief discussion of previous work on AV safe driving and navigation support using different learning and optimisation methods

- An introduction to, and theoretical explanation of, the proposed framework, with illustrations and mathematical models

- A discussion covering data-based analysis, functional representations, and partial outputs

- A metric-centric comparison against comparable methods on related metrics, reported as percentage improvement
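The percentage improvement mentioned in the last item can be illustrated with a minimal sketch. The function name, the `higher_is_better` flag, and the sample values are assumptions made for illustration; they are not taken from the paper, and the paper may define the improvement differently.

```python
def percentage_improvement(baseline: float, proposed: float,
                           higher_is_better: bool = True) -> float:
    """Relative change of `proposed` over `baseline`, in percent.

    A positive return value means the proposed method is better.
    For metrics where lower is better (e.g. response lag), set
    higher_is_better=False so that a reduction counts as an improvement.
    """
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    change = (proposed - baseline) / abs(baseline) * 100.0
    return change if higher_is_better else -change


if __name__ == "__main__":
    # Hypothetical values, for illustration only (not results from the paper).
    print(percentage_improvement(0.80, 0.90))                       # accuracy-like metric
    print(percentage_improvement(2.0, 1.5, higher_is_better=False))  # lag-like metric
```

Dividing by the baseline value makes the figure relative to the compared method, which is the usual convention when reporting an improvement over existing approaches.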

2. Related Works

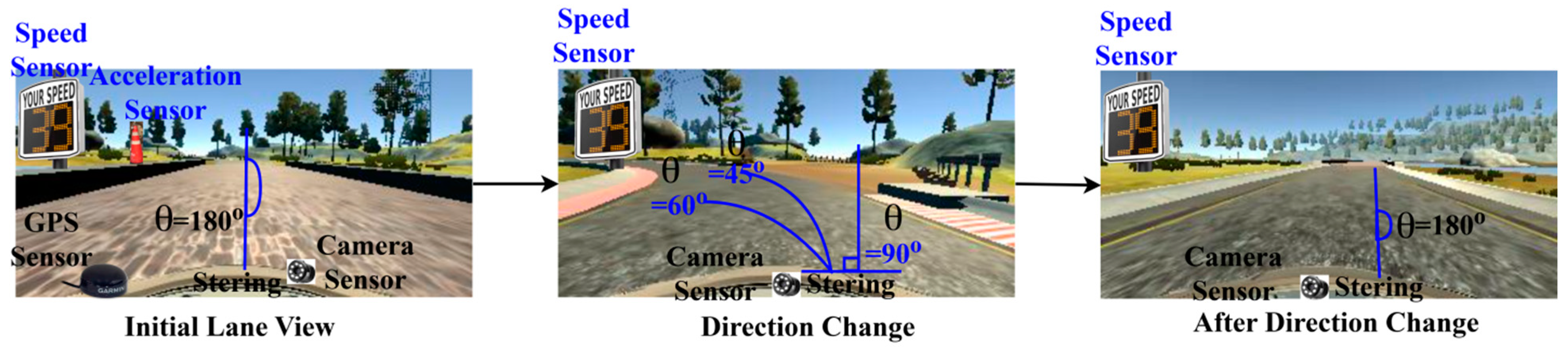

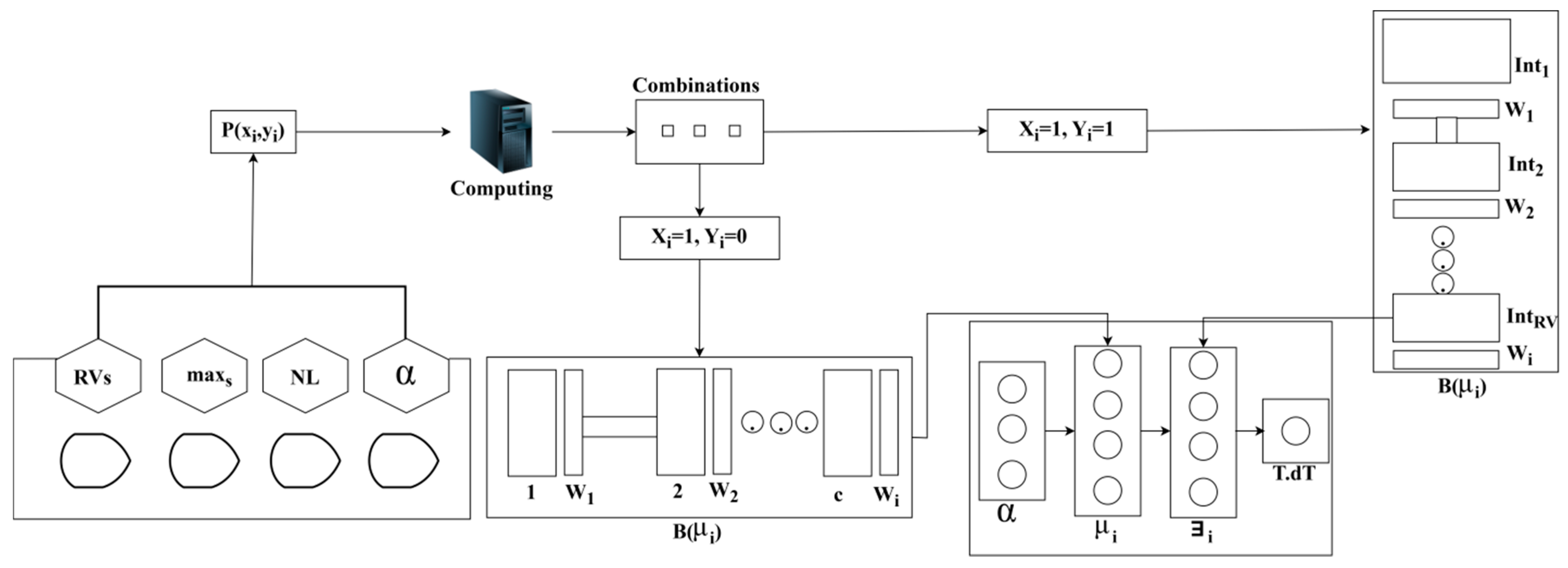

3. Proposed Control Optimiser Interaction Framework

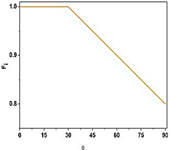

3.1. Data Collection and Description

3.2. Framework Description

3.3. Information-Sharing Process

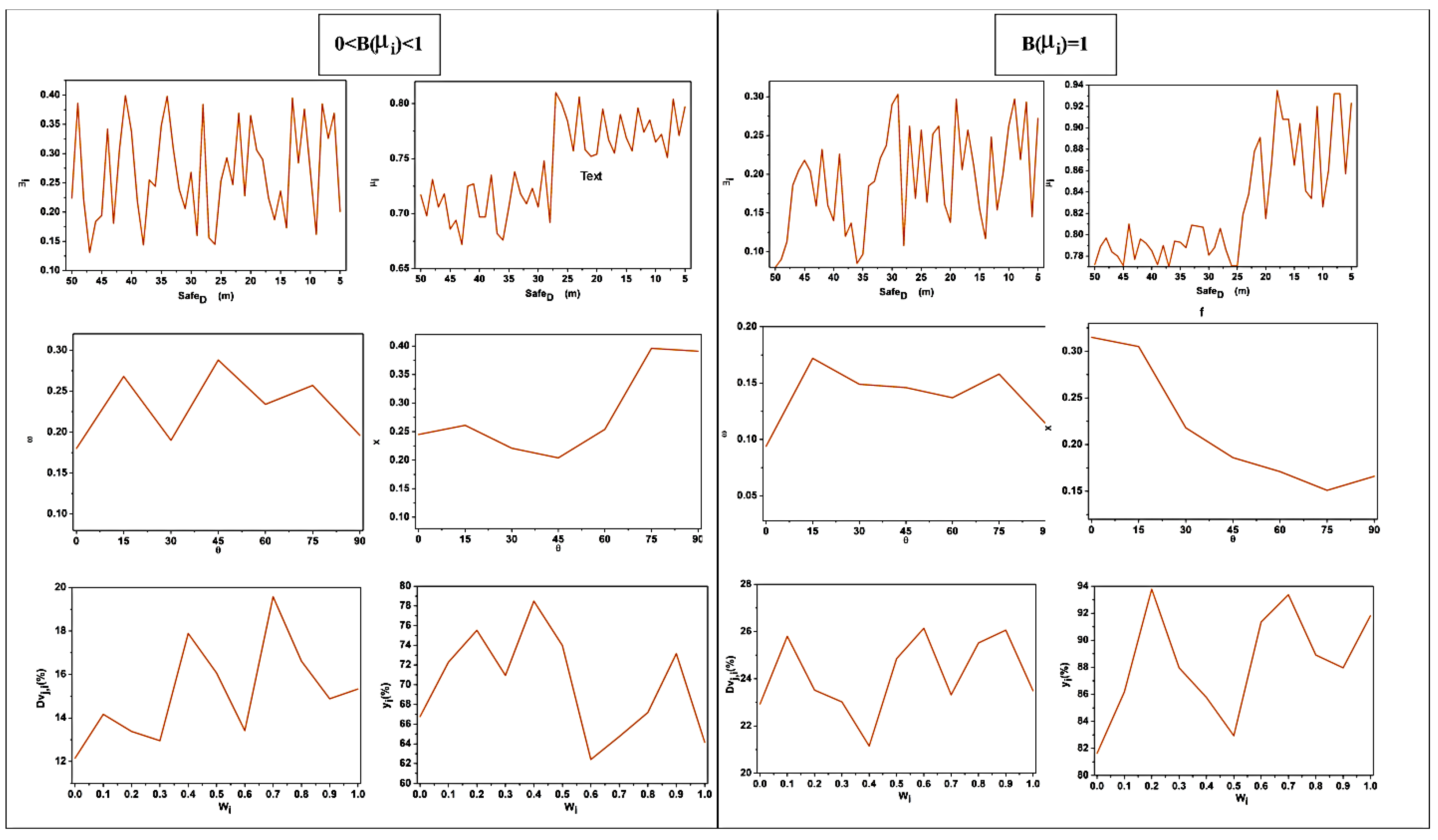

3.4. Batch Optimisation Algorithm

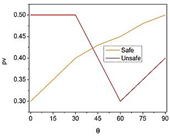

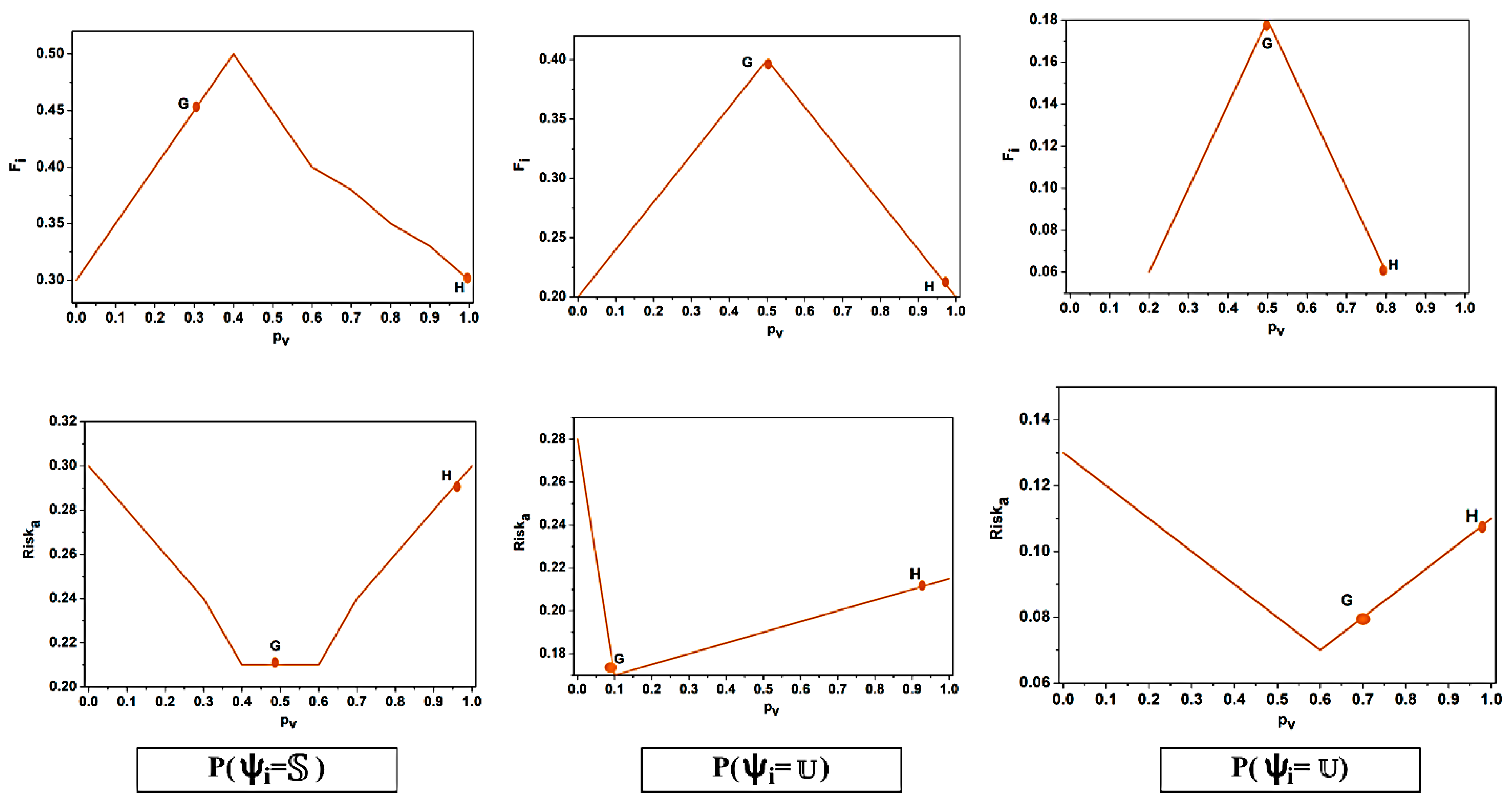

3.5. Risk Assessment

3.6. Driving Control Design

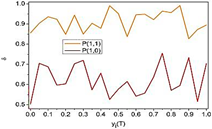

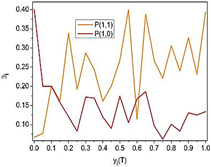

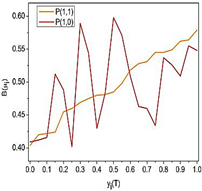

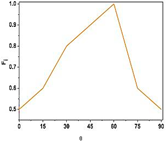

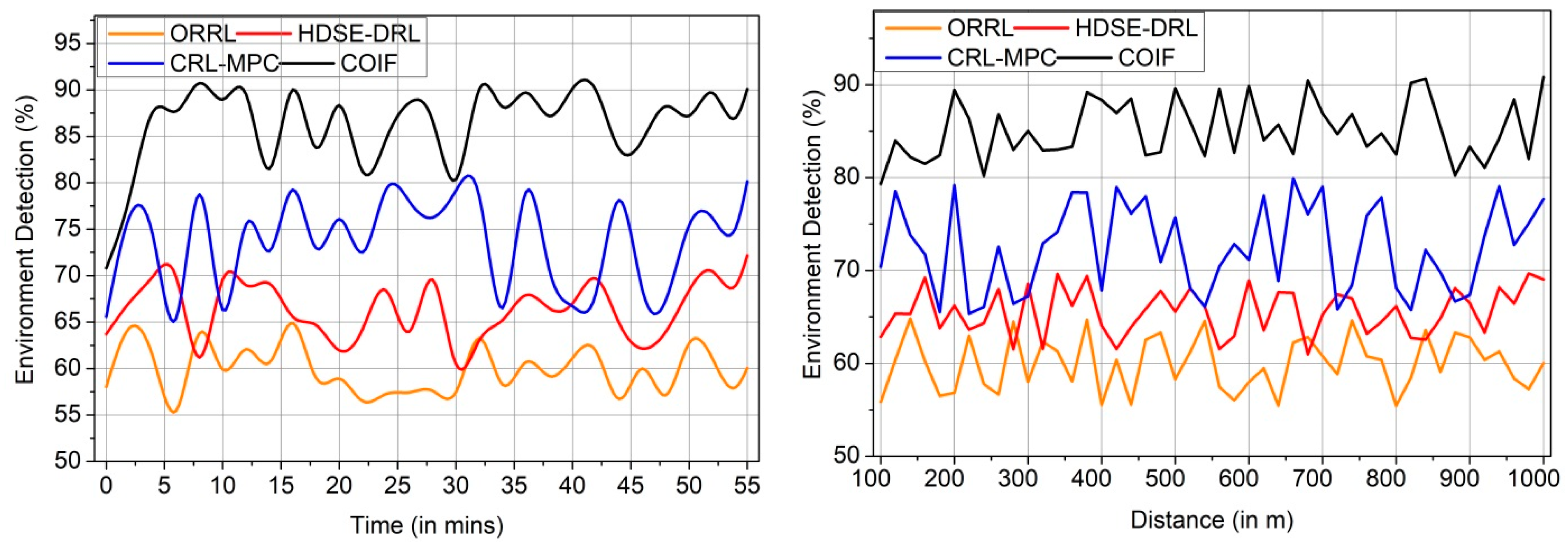

4. Results

5. Discussion

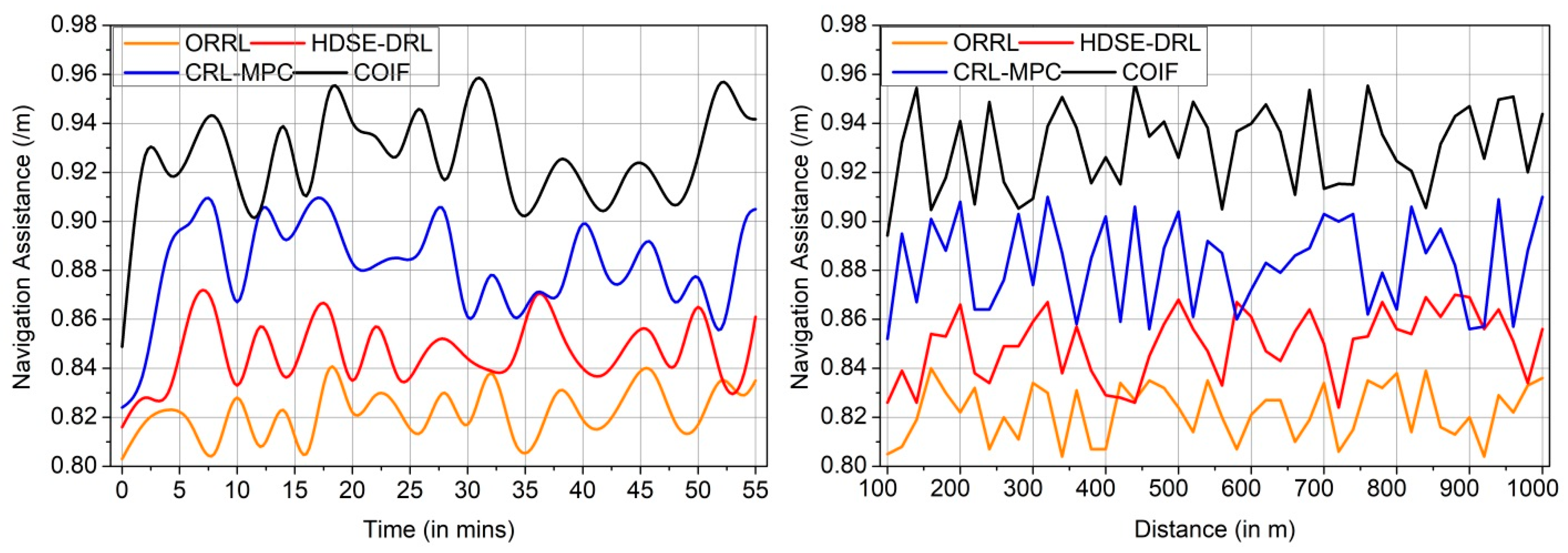

- (i) ORRL shares COIF’s primary goal of keeping AV decisions robust in unpredictable environments and improving AV performance in novel ones. COIF additionally improves information-sharing consistency and control over safety responses by communicating in real time with external “Things” in the environment via neuro-batch learning.

- (ii) HDSE-DRL was selected for its strategic flexibility in uncertain highway driving, which aligns with COIF’s emphasis on resilience. COIF extends this versatility by classifying and batching different types of data (such as traffic density, objects, and obstacles), which yields more detailed environmental information and supports the real-time detection of harmful activities.

- (iii) CRL-MPC was included because it improves vehicle navigation through predictive control and focuses on managing AV behaviour during car-following manoeuvres. Its predicted performance serves as a yardstick for assessing COIF’s real-time adaptability and decision consistency. Whereas CRL-MPC combines reinforcement learning with model predictive control to anticipate vehicle behaviour and ensure safe following distances, COIF uses adaptive neuro-batch learning to improve real-time responses to varying road conditions.
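The classify-and-batch step described in item (ii) can be sketched as follows. This is only an illustration of grouping sensed information by type and splitting each group into fixed-size batches; it is not COIF’s neuro-batch learning. The `Observation` class, its fields, the `batch_by_kind` function, and the `batch_size` parameter are all hypothetical names introduced here.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    """One sensed item shared by a "Thing" (hypothetical structure)."""
    kind: str         # e.g. "traffic_density", "object", "obstacle"
    value: float      # sensed measurement, units depend on the kind
    timestamp: float  # sensing time in seconds


def batch_by_kind(observations, batch_size=2):
    """Group observations by kind, then split each group into
    fixed-size batches in timestamp order."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    groups = defaultdict(list)
    for obs in sorted(observations, key=lambda o: o.timestamp):
        groups[obs.kind].append(obs)
    return {
        kind: [items[i:i + batch_size] for i in range(0, len(items), batch_size)]
        for kind, items in groups.items()
    }


if __name__ == "__main__":
    # Hypothetical readings, for illustration only.
    readings = [
        Observation("object", 1.0, 0.3),
        Observation("traffic_density", 0.4, 0.1),
        Observation("object", 2.0, 0.1),
        Observation("obstacle", 5.5, 0.2),
    ]
    for kind, batches in batch_by_kind(readings).items():
        print(kind, [[o.value for o in batch] for batch in batches])
```

Keeping each data type in its own batch lets a downstream model process, say, obstacle readings separately from traffic-density readings, which is one plausible reading of how per-type batching gives more detailed environmental information.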

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

[Algorithm listings: symbols and conditions lost in extraction. The surviving text assigns two impact levels, Impact 1 (low) and Impact 2 (high).]

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Almutairi, A.; Asmari, A.F.A.; Alqubaysi, T.; Alanazi, F.; Armghan, A. Ensuring Driving and Road Safety of Autonomous Vehicles Using a Control Optimiser Interaction Framework Through Smart “Thing” Information Sensing and Actuation. Machines 2024, 12, 798. https://doi.org/10.3390/machines12110798
