Multimodal Interface for Human–Robot Collaboration

Abstract

1. Introduction

2. Literature Review and Related Work

2.1. Smart Factories

2.2. Methodologies for Human–Robot Interaction and Communication

3. Proposed HRI Component for Smart Factory Environment

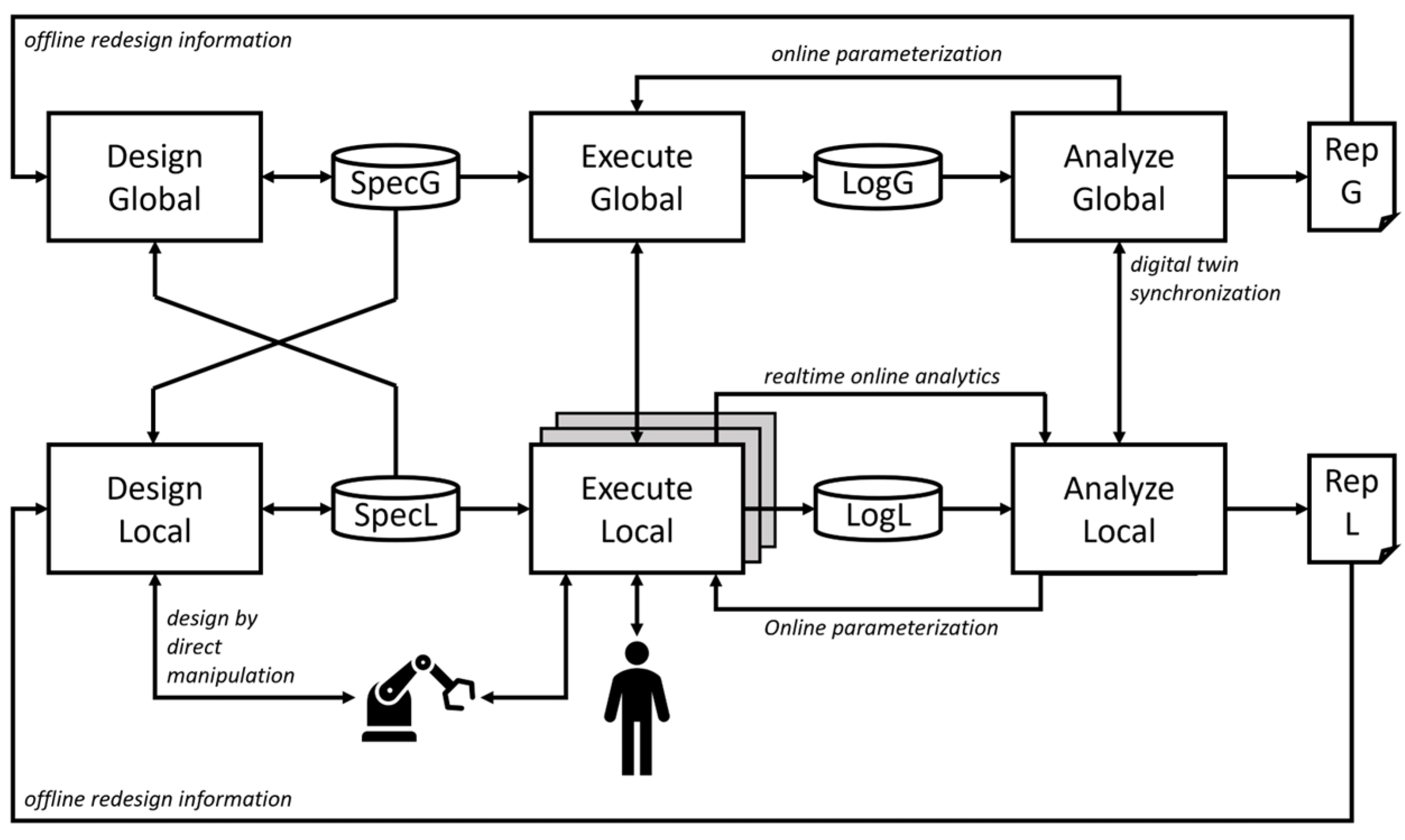

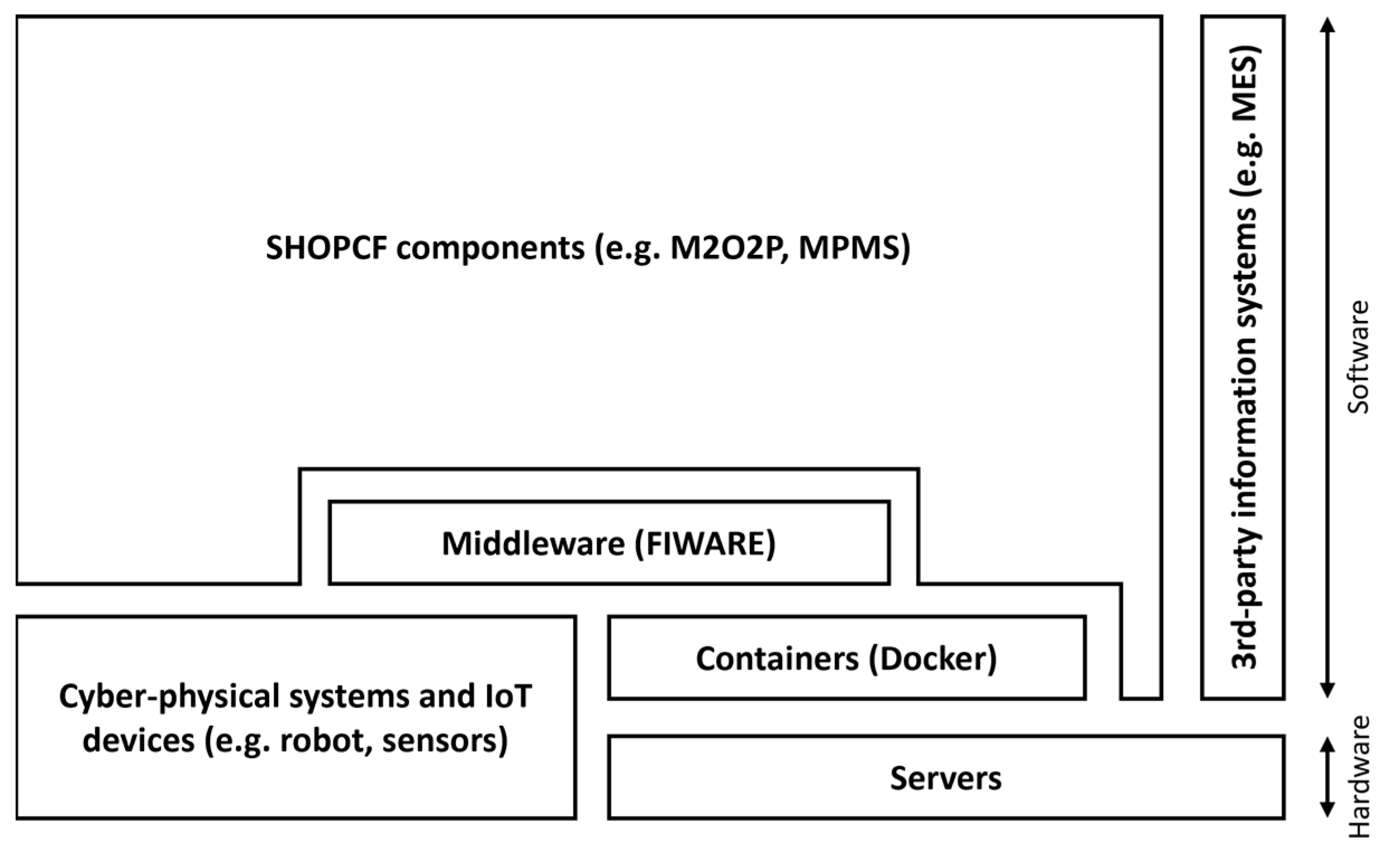

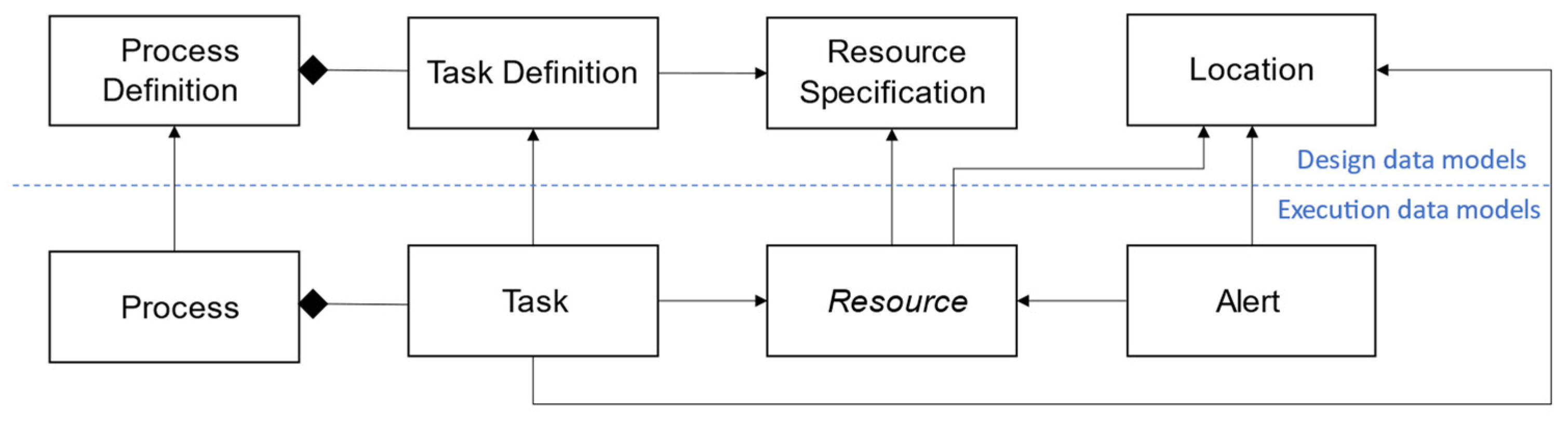

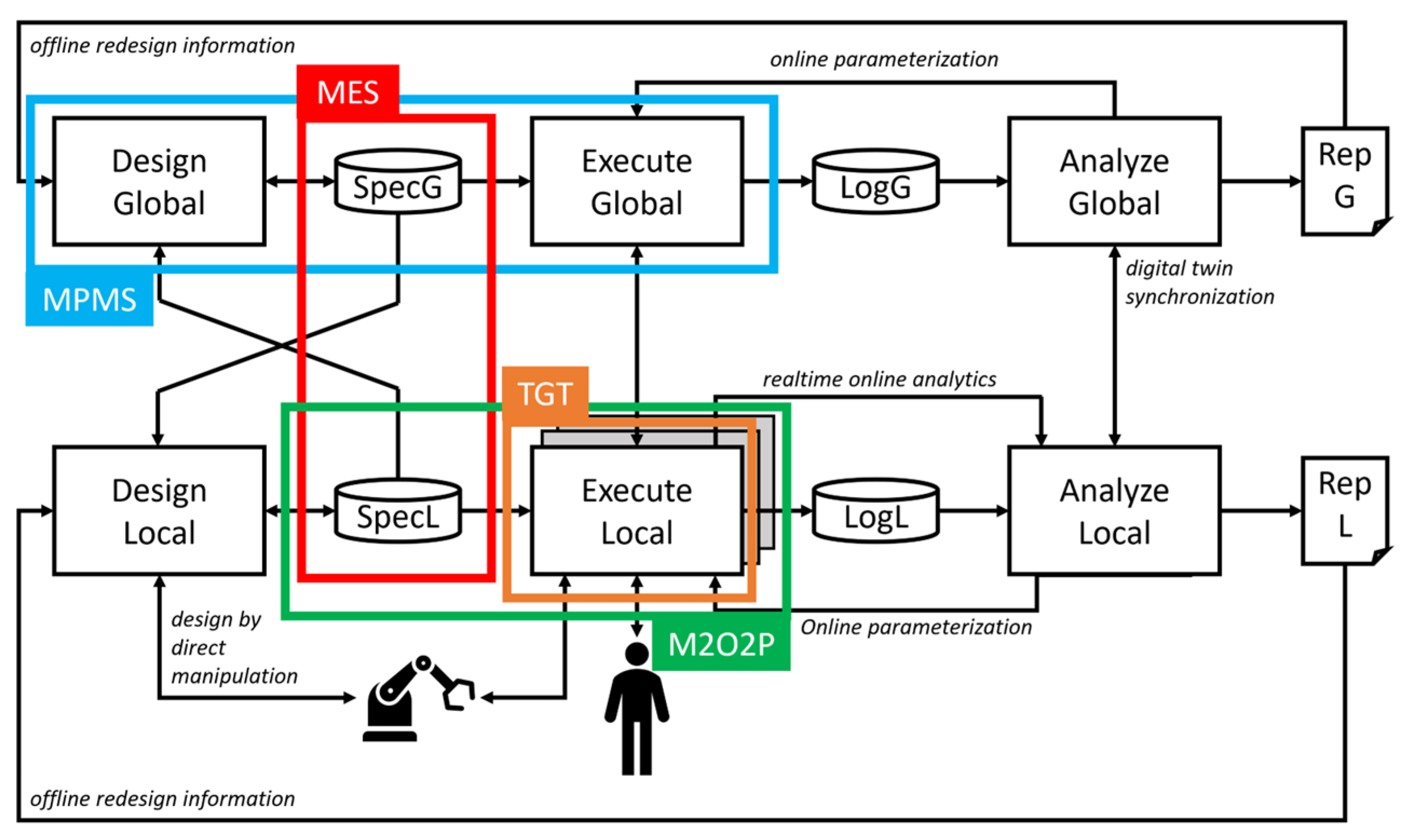

3.1. SHOP4CF Architecture

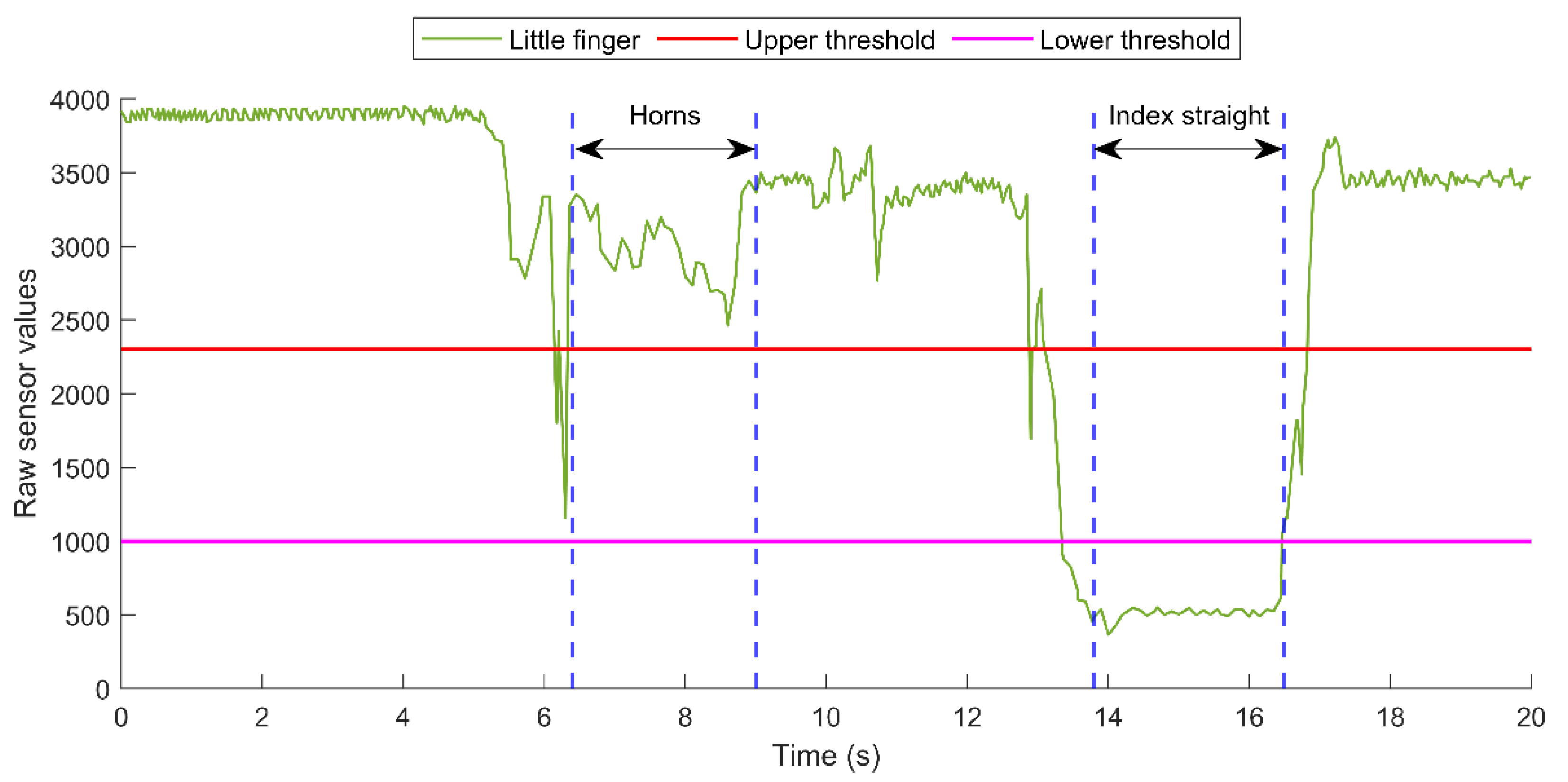

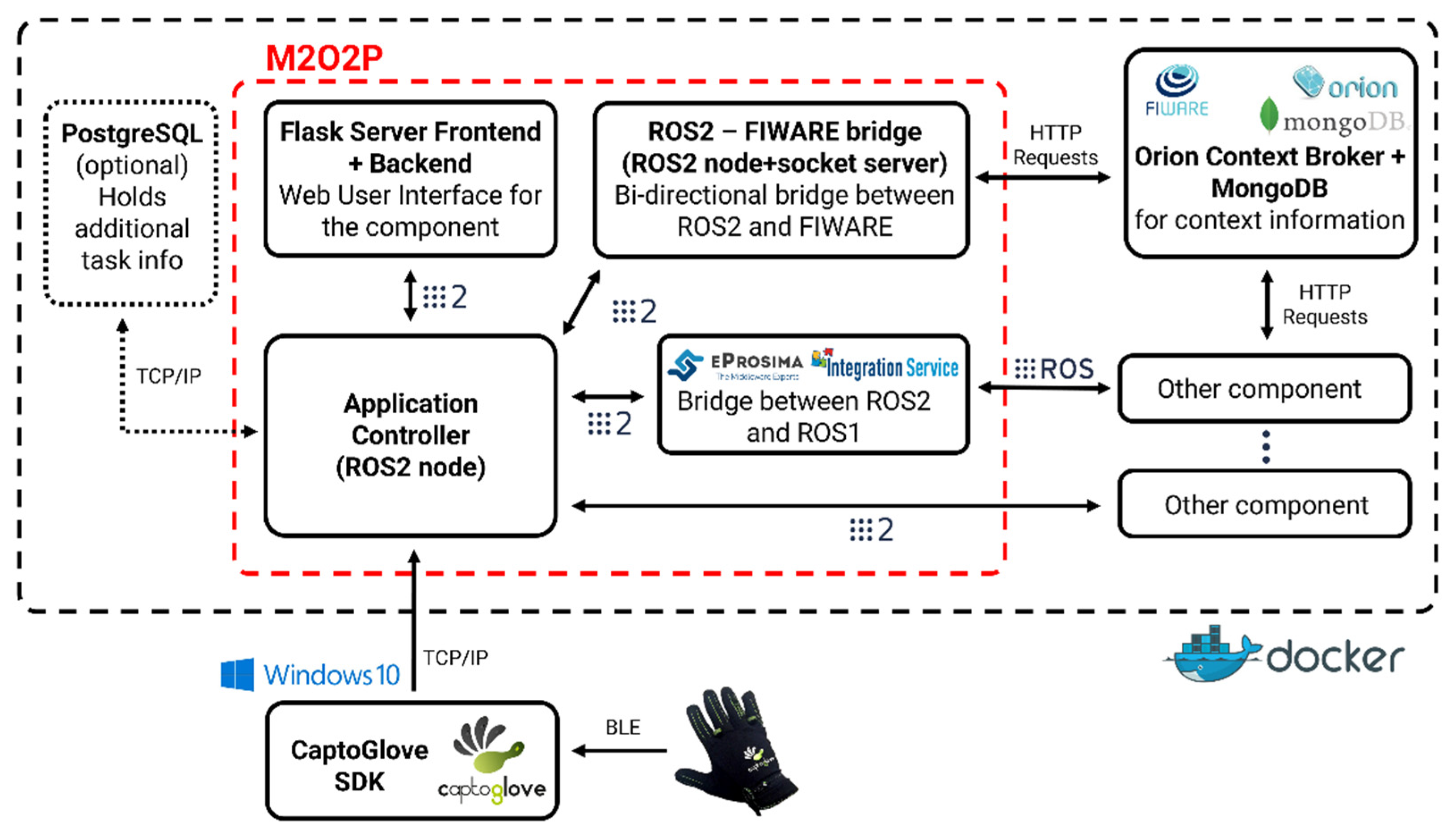

3.2. M2O2P

3.2.1. Application Controller

- Establish a TCP/IP connection with the SDK;

- Transform raw sensor data to states, gestures, and commands;

- Receive tasks from FIWARE and, if necessary, retrieve additional task information from PostgreSQL (communication is explained in Section 4.3.2);

- Provide additional options such as calibration, filtering by the task, and testing mode (these options are further elaborated in Section 3.2.2).
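The step of transforming raw sensor data to states, listed above, can be pictured as a simple quantization. The following is a minimal sketch only: it assumes each finger reports a normalized bend value between 0.0 (straight) and 1.0 (fully bent) and maps it to one of three discrete states (0, 1, or 2). The thresholds, the function names, and the normalized input range are assumptions made for illustration and are not taken from the glove SDK or from M2O2P.

```python
from typing import Sequence, Tuple

# Hypothetical thresholds on a normalized bend value
# (0.0 = straight, 1.0 = fully bent). Not taken from the SDK.
STRAIGHT_MAX = 0.3
BENT_MIN = 0.7


def bend_to_state(bend: float) -> int:
    """Map one bend value to a state: 0 straight, 1 in between, 2 bent."""
    if not 0.0 <= bend <= 1.0:
        raise ValueError(f"bend value out of range: {bend}")
    if bend <= STRAIGHT_MAX:
        return 0
    if bend >= BENT_MIN:
        return 2
    return 1


def hand_to_states(bends: Sequence[float]) -> Tuple[int, ...]:
    """Convert five bend values (thumb, index, middle, ring, little) to states."""
    if len(bends) != 5:
        raise ValueError("expected one bend value per finger (5 values)")
    return tuple(bend_to_state(b) for b in bends)
```

In practice the thresholds would likely come from the calibration option mentioned in the list, since hand sizes and sensor ranges differ between operators.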

| Algorithm 1: How Application Controller transforms raw sensor data to commands |

| Result: From raw sensor data to commands |

| while True do |

| if Message from any glove received then |

| Retrieve sensor data, transform to states, recognize gesture; |

| if Gesture is recognized then |

| while Time is less than 1500ms do |

| if Same gesture is held for 500ms then |

| if Gesture = Gesture set by the task then |

| print Hold gesture for a second to send the command; |

| else |

| print Doesn’t correspond to the gesture set by the task; |

| break loop; |

| end |

| end |

| Retrieve sensor data, transform to states, recognize gesture; |

| if Gesture = Gesture set by the task AND gesture is held 1500ms then |

| Update task entity status from “inProgress” to “completed”; |

| Update Device entity command id; |

| end |

| end |

| end |

| end |

| end |
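The timing logic of Algorithm 1 can be sketched as a small hold tracker. This is an illustrative reading of the pseudocode, not the M2O2P implementation: the class name, the event names (`"prompt"`, `"mismatch"`, `"command"`), and the choice to pass timestamps in explicitly instead of polling inside a loop are assumptions. Only the 500 ms and 1500 ms hold times come from the algorithm.

```python
from typing import Optional

PROMPT_MS = 500    # hold time before feedback is shown (Algorithm 1)
COMMAND_MS = 1500  # hold time before the command is sent (Algorithm 1)


class HoldTracker:
    """Tracks how long one gesture is held and reports Algorithm 1 events."""

    def __init__(self, task_gesture: str) -> None:
        self.task_gesture = task_gesture
        self._current: Optional[str] = None
        self._start_ms = 0
        self._prompted = False

    def update(self, gesture: Optional[str], now_ms: int) -> Optional[str]:
        # A new, changed, or lost gesture restarts the hold timer.
        if gesture != self._current:
            self._current = gesture
            self._start_ms = now_ms
            self._prompted = False
            return None
        if gesture is None:
            return None
        held = now_ms - self._start_ms
        if held >= COMMAND_MS and gesture == self.task_gesture:
            # Forget the gesture so the next command needs a fresh hold.
            self._current = None
            return "command"
        if held >= PROMPT_MS and not self._prompted:
            self._prompted = True
            # Feedback is reported once per hold, as in the 500 ms branch.
            return "prompt" if gesture == self.task_gesture else "mismatch"
        return None
```

On a `"command"` event, a caller would perform the two updates named in Algorithm 1: setting the task entity status from “inProgress” to “completed” and updating the Device entity command id. Passing `now_ms` in, instead of reading a clock inside the class, keeps the sketch easy to test with simulated timestamps.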

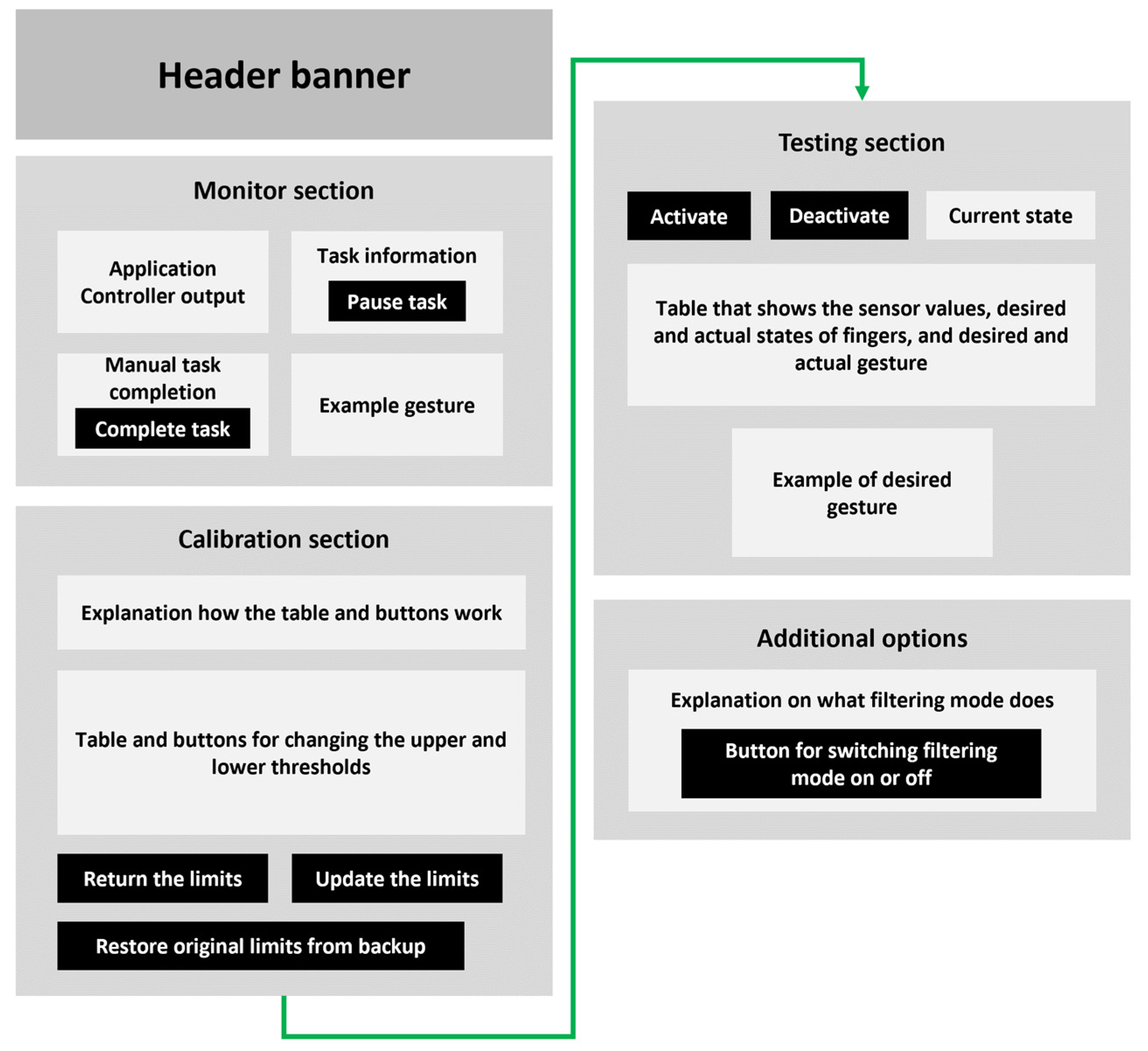

3.2.2. Web User Interface

4. Smart Factory Use Case

4.1. Use Case Description and Envisioned Interaction

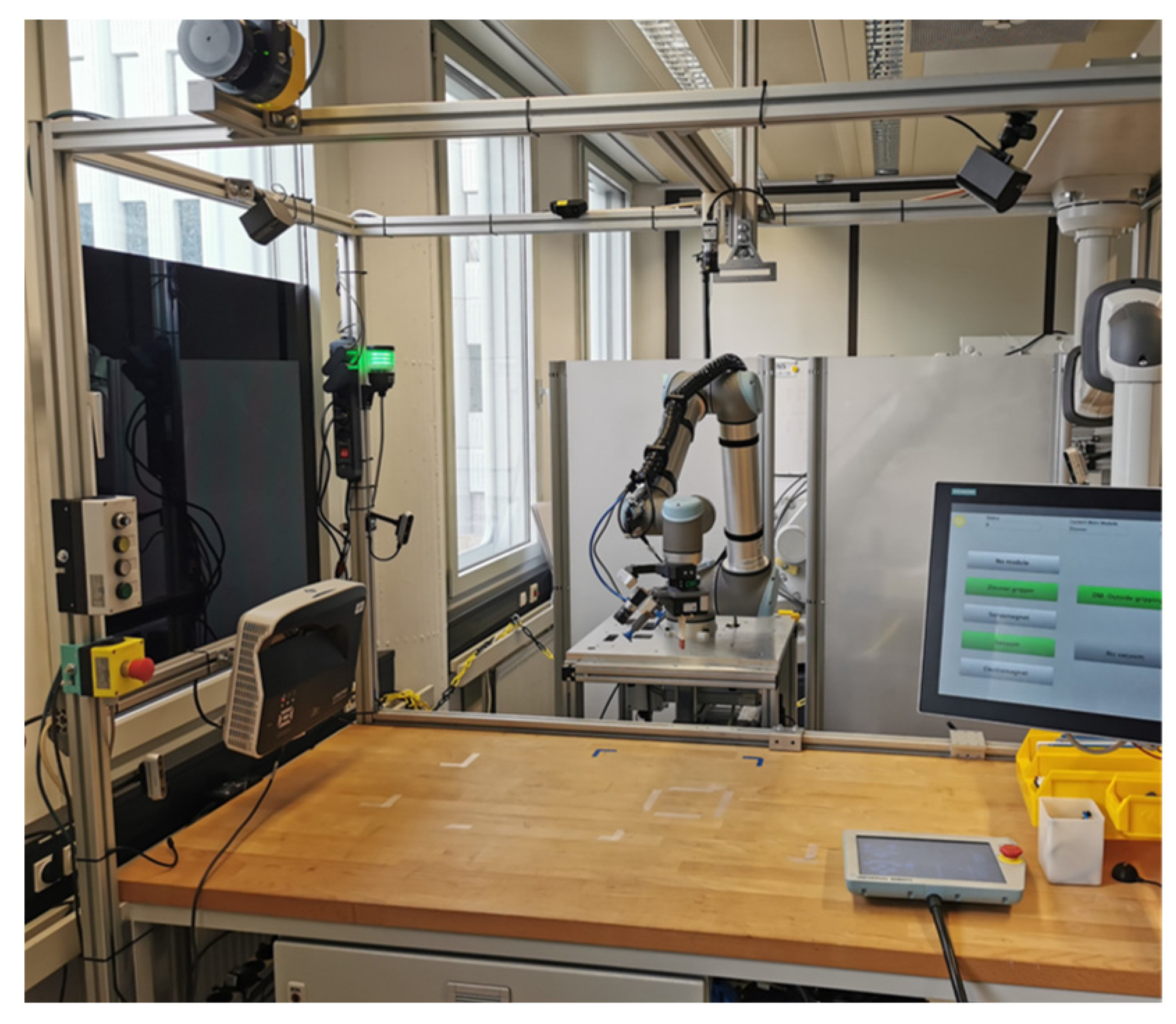

4.2. Use Case Hardware

4.3. Integrated Solution

4.3.1. Components and Architecture

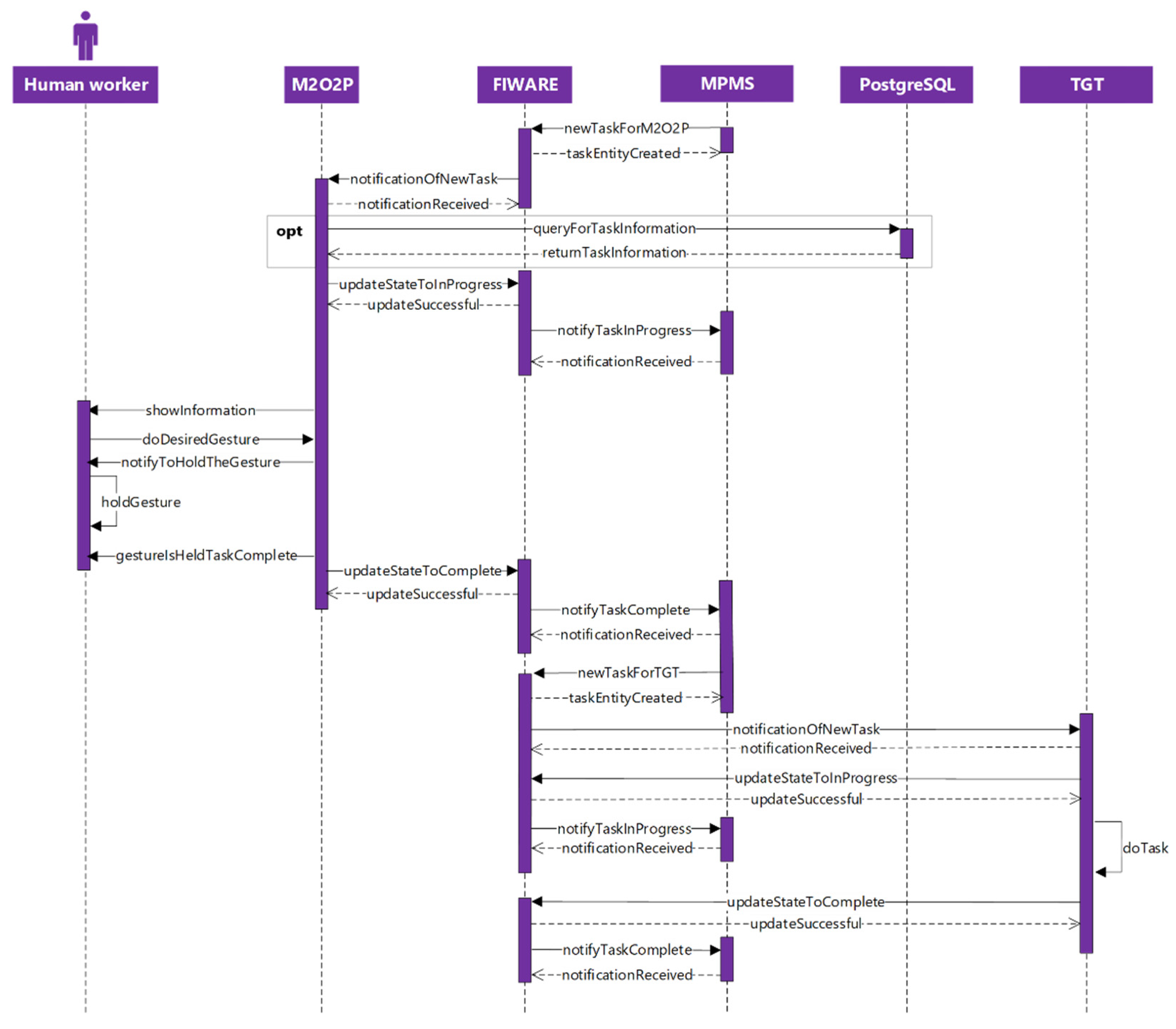

4.3.2. Communication between Components

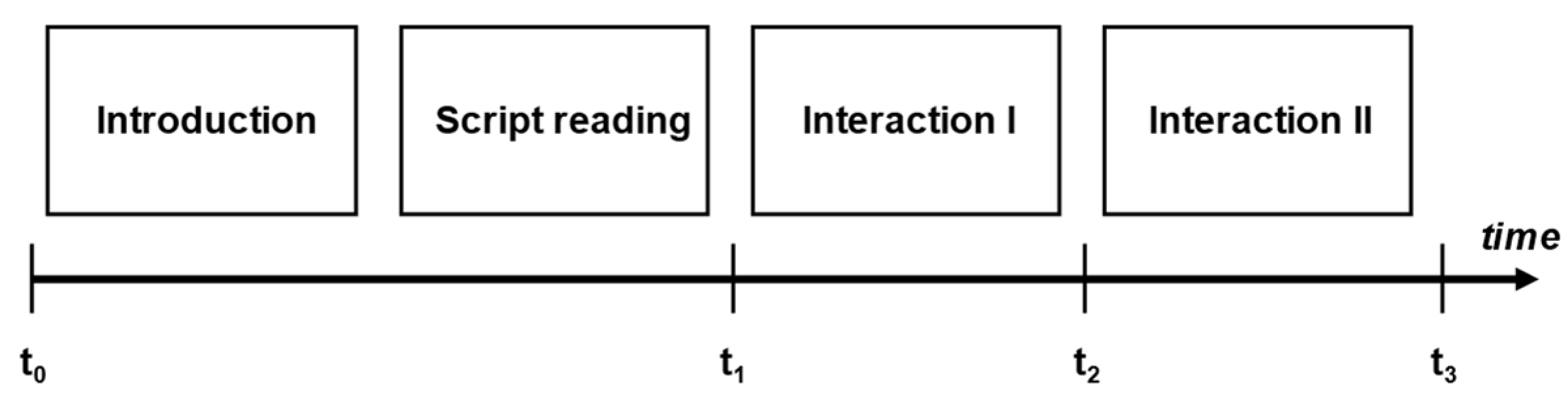

5. Evaluation

6. Results and Discussion

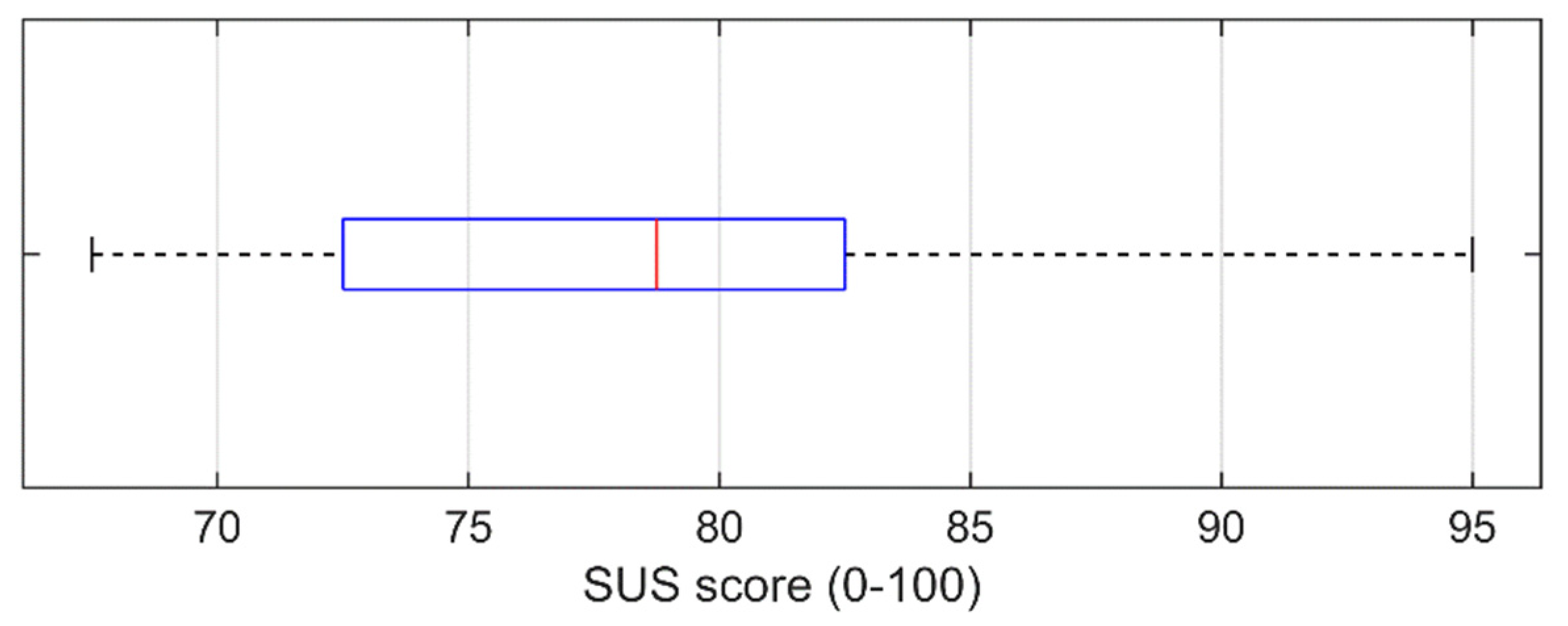

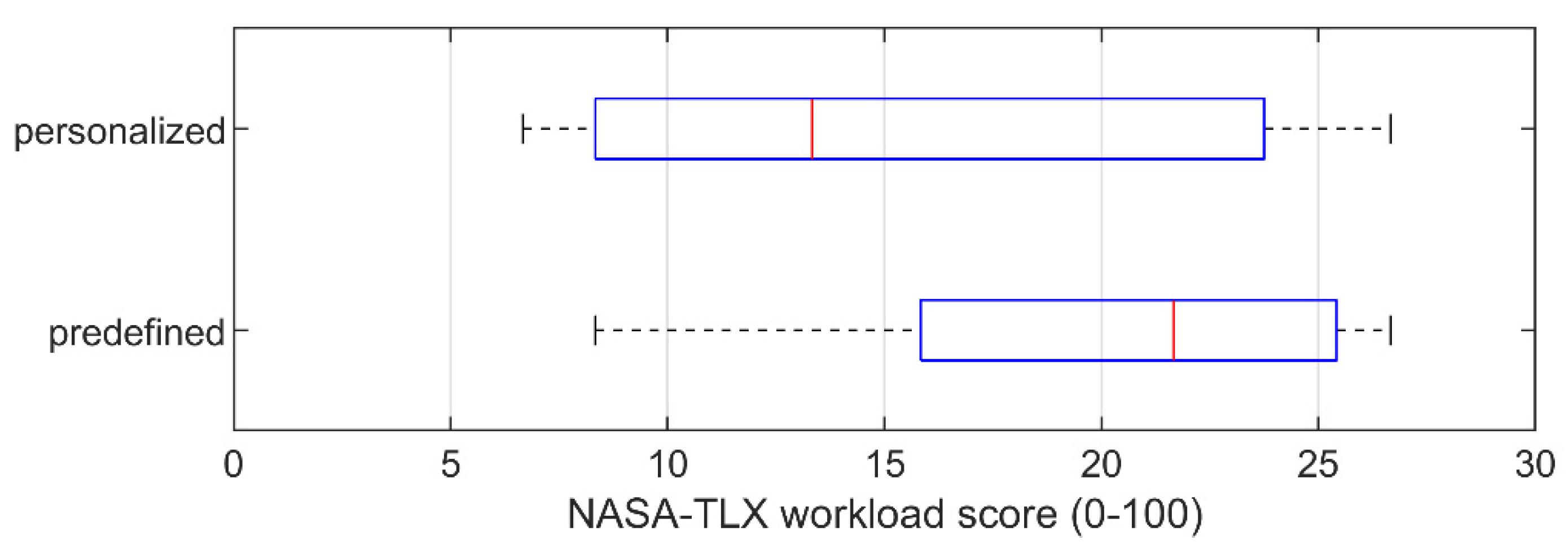

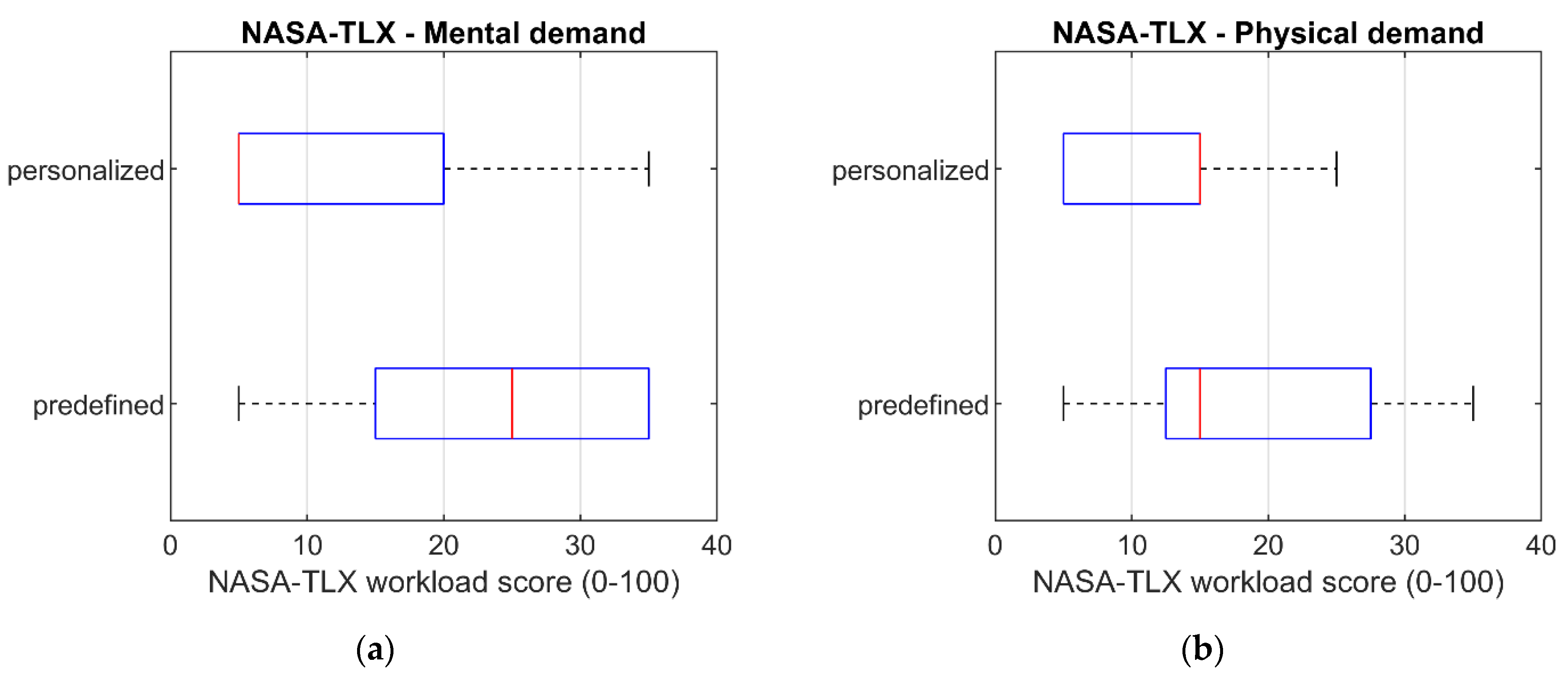

6.1. Test Results

6.2. Comparison

7. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Gesture | Thumb | Index | Middle | Ring | Little |
|---|---|---|---|---|---|
| Horns | 2 | 0 | 2 | 2 | 0 |
| Index and middle straight | 2 | 0 | 0 | 2 | 2 |
| Index and ring straight | 2 | 0 | 2 | 0 | 2 |
| Index, middle, and little straight | 2 | 0 | 0 | 2 | 0 |
| Index, middle, and ring straight | 2 | 0 | 0 | 0 | 2 |
| Index, ring, and little straight | 2 | 0 | 2 | 0 | 0 |
| Middle and little straight | 2 | 2 | 0 | 2 | 0 |
| Middle and ring straight | 2 | 2 | 0 | 0 | 2 |
| Middle straight | 2 | 2 | 0 | 2 | 2 |
| Middle, ring, and little straight | 2 | 2 | 0 | 0 | 0 |
| Little straight | 2 | 2 | 2 | 2 | 0 |
| Point with index | 2 | 0 | 2 | 2 | 2 |
| Ring and little straight | 2 | 2 | 2 | 0 | 0 |
| Ring straight | 2 | 2 | 2 | 0 | 2 |
| Thumb and index straight | 0 | 0 | 2 | 2 | 2 |
| Thumb and little straight | 0 | 2 | 2 | 2 | 0 |
| Thumb, index, and middle straight | 0 | 0 | 0 | 2 | 2 |
| Thumb, index, and little straight | 0 | 0 | 2 | 2 | 0 |
| Thumb, index, middle, and little straight | 0 | 0 | 0 | 2 | 0 |
| Thumb, middle, and little straight | 0 | 2 | 0 | 2 | 0 |
| Thumbs up | 0 | 2 | 2 | 2 | 2 |
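Recognizing a gesture from finger states amounts to an exact lookup on the five-value tuple. The sketch below copies a handful of rows from the table above; reading 0 as "straight" and 2 as "bent" is inferred from the gesture names rather than stated in the table, and the names `GESTURES` and `recognize` are hypothetical.

```python
from typing import Dict, Optional, Tuple

# Order of values: thumb, index, middle, ring, little.
FingerStates = Tuple[int, int, int, int, int]

# A few rows from the gesture table; 0 is read as straight, 2 as bent (inferred).
GESTURES: Dict[FingerStates, str] = {
    (0, 2, 2, 2, 2): "Thumbs up",
    (2, 0, 2, 2, 0): "Horns",
    (2, 2, 0, 2, 2): "Middle straight",
    (2, 2, 2, 0, 2): "Ring straight",
    (2, 2, 2, 2, 0): "Little straight",
}


def recognize(states: FingerStates) -> Optional[str]:
    """Return the gesture name for an exact state match, or None if unknown."""
    return GESTURES.get(states)
```

An exact-match lookup only works if every gesture has a unique state tuple, so two rows with identical finger states could not be told apart by this approach.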

| Component Name | Functionality in the Use Case | Level |
|---|---|---|
| Multi-Modal Offline and Online Programming solution (M2O2P) | Enables human–robot interactions with sensor glove | Local |
| Manufacturing Process Management System (MPMS) | Orchestrator application that handles process enactment and task assignment | Global |
| Siemens Trajectory Generation tool (TGT) | Provides trajectory and motion control for the robot | Local |

| Problematic Gesture | Number of Problematic Interactions |
|---|---|
| Middle and ring straight | 1 |
| Index and ring straight | 1 |

| Metric | Value |
|---|---|
| Number of successful interactions | 208 |
| Number of problematic interactions | 2 |
| Accuracy of the gesture recognition without readjusting fingers | 99.05% |
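The reported accuracy follows directly from the two interaction counts, taking the total as the sum of successful and problematic interactions. A short check, with variable names chosen for illustration:

```python
successful = 208  # successful interactions, from the table
problematic = 2   # problematic interactions, from the table

total = successful + problematic
accuracy = successful / total

print(f"{total} interactions, accuracy {accuracy:.2%}")
# prints "210 interactions, accuracy 99.05%"
```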

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rautiainen, S.; Pantano, M.; Traganos, K.; Ahmadi, S.; Saenz, J.; Mohammed, W.M.; Martinez Lastra, J.L. Multimodal Interface for Human–Robot Collaboration. Machines 2022, 10, 957. https://doi.org/10.3390/machines10100957
