An Inexact Nonsmooth Quadratic Regularization Algorithm

Abstract

1. Introduction

2. Preliminaries

3. Inexact Nonsmooth Quadratic Regularization Algorithm

- The first-order term of φ: Algorithm 1 uses the inexact gradient g as stated in (8), while ([23], Algorithm 6.1) uses the full gradient.

We adopt a different tolerance that is easier to verify, which in turn requires a slightly modified update rule for the regularization parameters in Algorithm 1. These are specified as follows; see Remark 2 for further explanation.

- Different stopping criterion: Algorithm 1 stops according to the criterion in its Step 5, which differs from the stopping criterion of ([23], Algorithm 6.1).

- Different update rule for the regularization parameters: Algorithm 1 uses a parameter to ensure that all regularization parameters have a positive lower bound, while ([23], Algorithm 6.1) does not use such a bound.

Algorithm 1: Inexact Nonsmooth Quadratic Regularization Algorithm
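The following is a minimal sketch of a quadratic regularization iteration with a positive lower bound on the regularization parameter. It is not a transcription of Algorithm 1. It assumes a least-squares smooth term and an ℓ1 nonsmooth term, and the names `sigma_min`, `eta1`, `eta2`, the factors 0.5 and 2.0, and the stopping test on the predicted decrease are all hypothetical choices made for illustration.

```python
import numpy as np

def soft_threshold(z, t):
    """Proximal mapping of t*||.||_1 (the nonsmooth term assumed here)."""
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)

def qr_step(x, g, sigma, lam):
    """Minimizer over s of g@s + (sigma/2)*||s||^2 + lam*||x+s||_1."""
    return soft_threshold(x - g / sigma, lam / sigma) - x

def update_sigma(sigma, rho, sigma_min, eta1=0.25, eta2=0.75):
    """Hypothetical rule: shrink after very successful steps, grow after
    unsuccessful ones, and never drop below the lower bound sigma_min."""
    if rho >= eta2:
        sigma *= 0.5
    elif rho < eta1:
        sigma *= 2.0
    return max(sigma, sigma_min)

def quadratic_regularization(A, b, lam, grad, sigma0=1.0, sigma_min=1e-3,
                             eta1=0.25, tol=1e-10, max_iter=500):
    F = lambda x: 0.5 * np.mean((A @ x - b) ** 2) + lam * np.abs(x).sum()
    x, sigma, sigmas = np.zeros(A.shape[1]), sigma0, []
    for _ in range(max_iter):
        g = grad(x)  # may be an exact or an inexact gradient
        s = qr_step(x, g, sigma, lam)
        # Decrease predicted by the first-order model (quadratic term excluded).
        pred = -(g @ s) + lam * (np.abs(x).sum() - np.abs(x + s).sum())
        if pred <= tol:  # assumed stopping test, not the paper's criterion
            break
        rho = (F(x) - F(x + s)) / pred
        if rho >= eta1:  # accept the step only if the decrease is sufficient
            x = x + s
        sigma = update_sigma(sigma, rho, sigma_min, eta1)
        sigmas.append(sigma)
    return x, sigmas, F

rng = np.random.default_rng(0)
A = rng.standard_normal((200, 10))
b = A @ rng.standard_normal(10)
exact_grad = lambda x: A.T @ (A @ x - b) / A.shape[0]
x_hat, sigmas, F = quadratic_regularization(A, b, lam=0.01, grad=exact_grad)
print(F(np.zeros(10)), F(x_hat), min(sigmas))
```

Because `update_sigma` clips at `sigma_min`, every regularization parameter produced by the loop stays bounded away from zero, which is the property the third item above attributes to Algorithm 1.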

4. Implementation and Numerical Results

- (1)

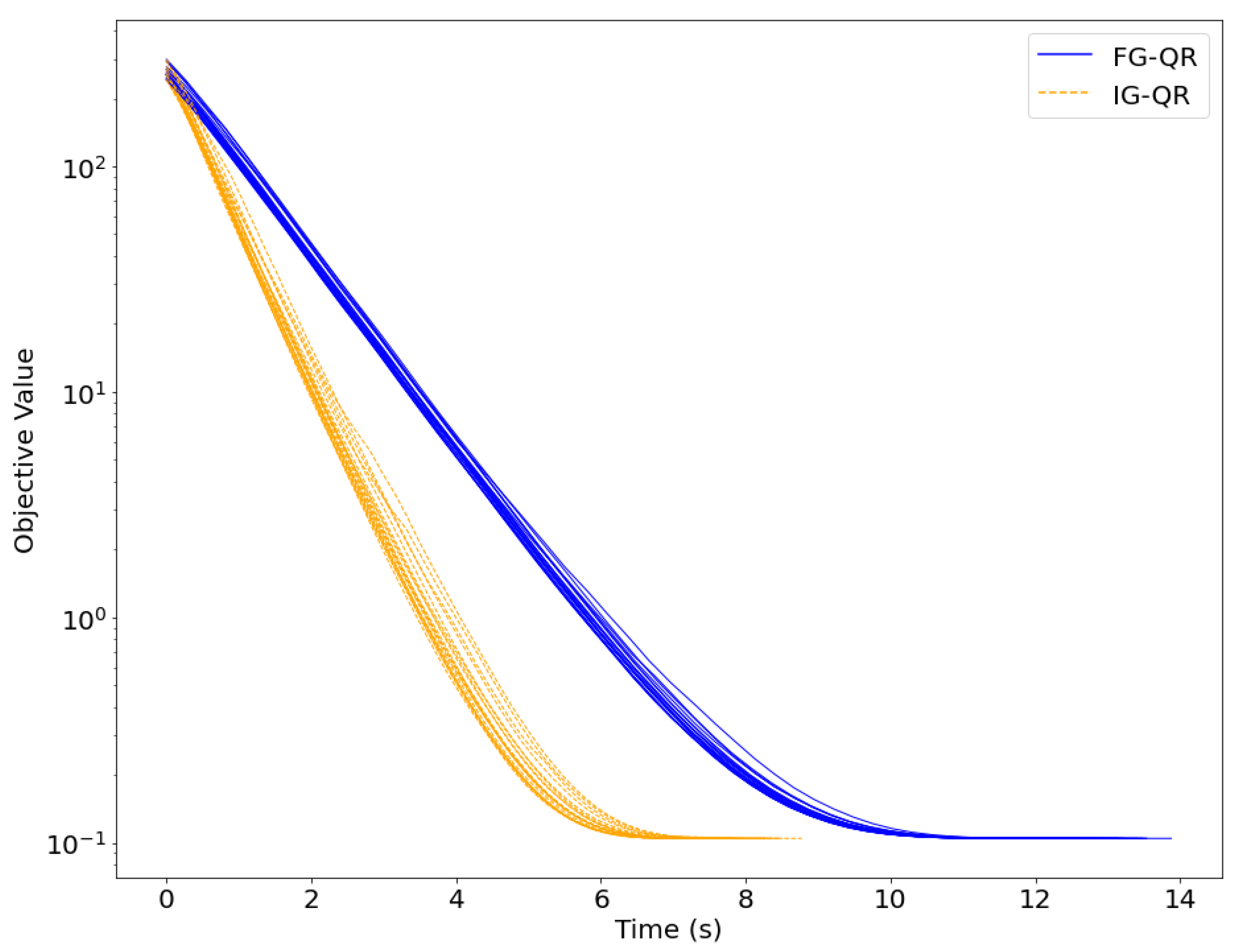

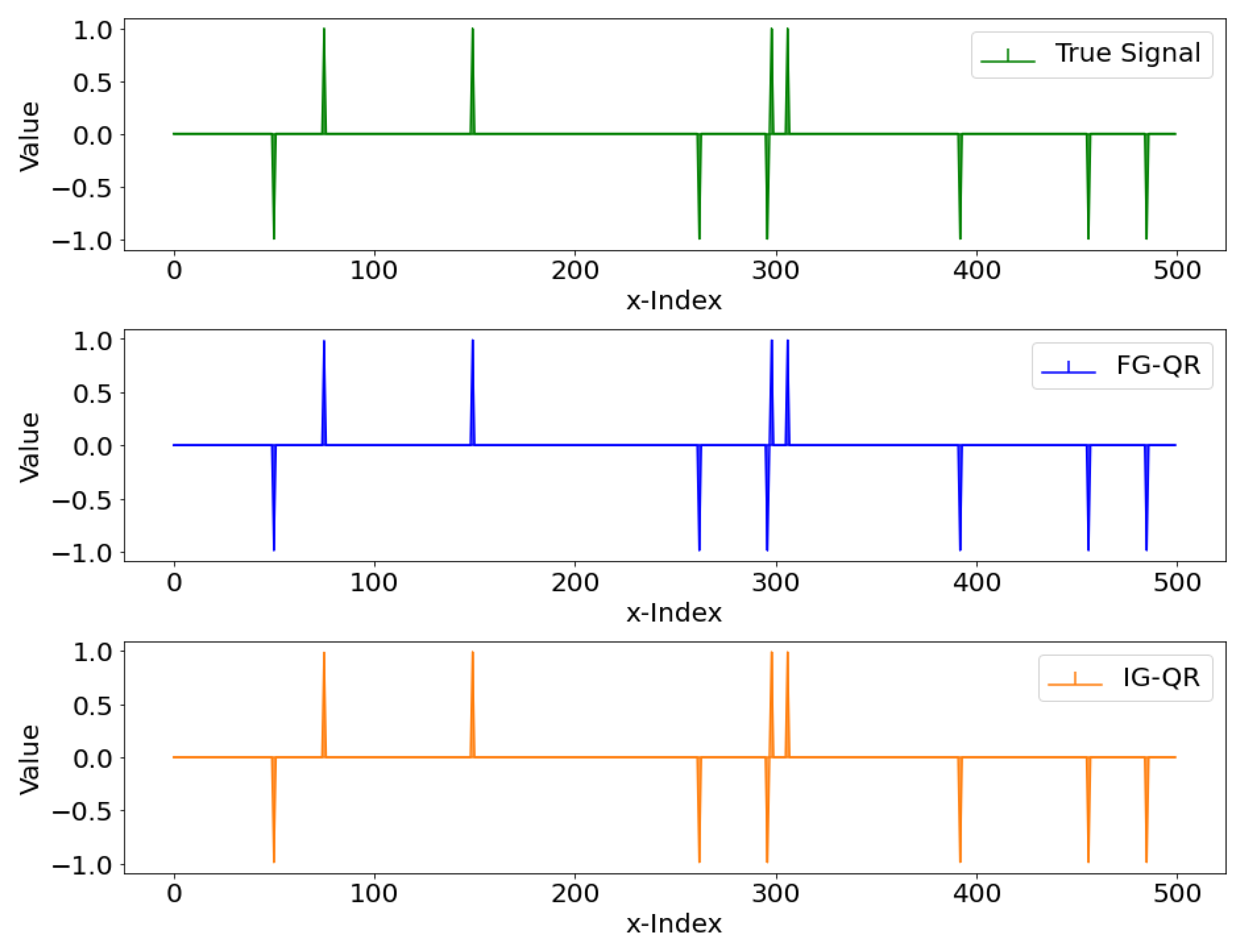

- Algorithm 1 employs inexact gradients, which are referred to as IG-QR for short. That is, in the subproblem (11) in the k-th iteration, where is an index subset randomly sampled from I without replacement. The sampling technique is referred to the stochastic gradient algorithm, for example [25,26,31]. More specifically, we set the sampling ratio at .

- (2)

- Algorithm 1 employs full gradients, which are referred to as FG-QR for short. That is, in the subproblem (11) in the k-th iteration.
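The difference between the two variants can be sketched as follows, assuming a finite-sum least-squares objective f(x) = (1/(2n)) Σ (aᵢᵀx − bᵢ)². The function names and the value `ratio=0.1` are hypothetical; they only illustrate sampling an index subset without replacement and averaging the component gradients over it.

```python
import numpy as np

def full_gradient(A, b, x):
    """Gradient of (1/(2n)) * ||A x - b||^2 over all n samples (FG-QR style)."""
    return A.T @ (A @ x - b) / A.shape[0]

def sampled_gradient(A, b, x, ratio, rng):
    """Gradient averaged over a random index subset drawn without replacement
    (IG-QR style). `ratio` is the fraction of indices that is sampled."""
    n = A.shape[0]
    m = max(1, int(round(ratio * n)))
    idx = rng.choice(n, size=m, replace=False)  # no index is drawn twice
    A_s, b_s = A[idx], b[idx]
    return A_s.T @ (A_s @ x - b_s) / m, idx

rng = np.random.default_rng(0)
A = rng.standard_normal((1000, 5))
b = rng.standard_normal(1000)
x = np.zeros(5)

g_full = full_gradient(A, b, x)
g_sub, idx = sampled_gradient(A, b, x, ratio=0.1, rng=rng)
print(np.linalg.norm(g_sub - g_full))  # error of the inexact gradient
```

With `ratio=1.0` the subset is a permutation of all indices, so the sampled gradient coincides with the full gradient up to rounding.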

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Conn, A.R.; Gould, N.I.M.; Toint, P.L. Trust Region Methods, 1st ed.; SIAM: Philadelphia, PA, USA, 2000; pp. 113–434. ISBN 978-0-89871-985-7.

2. Toint, P.L. Nonlinear stepsize control, trust regions and regularizations for unconstrained optimization. Optim. Method Softw. 2013, 28, 82–95.

3. Grapiglia, G.N.; Yuan, J.Y.; Yuan, Y.X. Nonlinear stepsize control algorithms: Complexity bounds for first- and second-order optimality. J. Optim. Theory Appl. 2016, 171, 980–997.

4. Bergou, E.H.; Diouane, Y.; Gratton, S. A line-search algorithm inspired by the adaptive cubic regularization framework and complexity analysis. J. Optim. Theory Appl. 2018, 178, 885–913.

5. Dennis, J.E., Jr.; Li, S.B.B.; Tapia, R.A. A unified approach to global convergence of trust region methods for nonsmooth optimization. Math. Program. 1995, 68, 319–346.

6. Qi, L.Q.; Sun, J. A trust region algorithm for minimization of locally Lipschitzian functions. Math. Program. 1994, 66, 25–43.

7. Mashreghi, Z.; Nasri, M. Bregman distance regularization for nonsmooth and nonconvex optimization. Can. Math. Bul.-Bul. Can. Math. 2024, 67, 415–424.

8. Wang, X.M. Subgradient algorithms on Riemannian manifolds of lower bounded curvatures. Optimization 2018, 67, 179–194.

9. Wang, J.H.; Wang, X.M.; Li, C.; Yao, J.C. Convergence analysis of gradient algorithms on Riemannian manifolds without curvature constraints and application to Riemannian Mass. SIAM J. Optim. 2021, 31, 172–199.

10. Sun, W.M.; Liu, H.W.; Liu, Z.X. A regularized limited memory subspace minimization conjugate gradient method for unconstrained optimization. Numer. Algorithms 2023, 94, 1919–1948.

11. Lee, J.D.; Sun, Y.K.; Saunders, M.A. Proximal Newton-type methods for minimizing composite functions. SIAM J. Optim. 2014, 24, 1420–1443.

12. Kim, D.; Sra, S.; Dhillon, I. A scalable trust-region algorithm with application to mixed-norm regression. In Proceedings of the 27th International Conference on Machine Learning (ICML 2010), Haifa, Israel, 21–24 June 2010; pp. 519–526.

13. Cartis, C.; Gould, N.I.M.; Toint, P.L. On the evaluation complexity of composite function minimization with applications to nonconvex nonlinear programming. SIAM J. Optim. 2011, 21, 1721–1739.

14. Chen, Z.A.; Milzarek, A.; Wen, Z.W. A trust-region method for nonsmooth nonconvex optimization. J. Comput. Math. 2023, 41, 683–716.

15. Liu, R.Y.; Pan, S.H.; Wu, Y.Q.; Yang, X.Q. An inexact regularized proximal Newton method for nonconvex and nonsmooth optimization. Comput. Optim. Appl. 2024, 88, 603–641.

16. Bolte, J.; Sabach, S.; Teboulle, M. Proximal alternating linearized minimization for nonconvex and nonsmooth problems. Math. Program. 2014, 146, 459–494.

17. Fukushima, M.; Mine, H. A generalized proximal point algorithm for certain non-convex minimization problems. Int. J. Syst. Sci. 1981, 12, 989–1000.

18. Tseng, P. On accelerated proximal gradient methods for convex-concave optimization. SIAM J. Optim. 2008, 2, 1–20.

19. Tseng, P.; Yun, S. Block-coordinate gradient descent method for linearly constrained nonsmooth separable optimization. J. Optim. Theory Appl. 2009, 140, 513–535.

20. Nesterov, Y. Gradient methods for minimizing composite functions. Math. Program. 2013, 140, 125–161.

21. Wu, Q.Q.; Peng, D.T.; Zhang, X. Continuous exact relaxation and alternating proximal gradient algorithm for partial sparse and partial group sparse optimization problems. J. Sci. Comput. 2024, 100, 20.

22. Li, H.; Lin, Z.C. Accelerated proximal gradient methods for nonconvex programming. In Proceedings of the 29th International Conference on Neural Information Processing Systems (NIPS’15), Montreal, QC, Canada, 7–12 December 2015; pp. 379–387.

23. Aravkin, A.Y.; Baraldi, R.; Orban, D. A proximal quasi-Newton trust-region method for nonsmooth regularized optimization. SIAM J. Optim. 2022, 32, 900–929.

24. Yang, D.; Wang, X.M. Incremental subgradient algorithms with dynamic step sizes for separable convex optimizations. Math. Meth. Appl. Sci. 2023, 46, 7108–7124.

25. Robbins, H.; Monro, S. A stochastic approximation method. Ann. Math. Statist. 1951, 22, 400–407.

26. Franchini, G.; Porta, F.; Ruggiero, V.; Trombini, I.; Zanni, L. A stochastic gradient method with variance control and variable learning rate for Deep Learning. J. Comput. Appl. Math. 2024, 451, 116083.

27. Johnson, R.; Zhang, T. Accelerating stochastic gradient descent using predictive variance reduction. In Proceedings of the 27th International Conference on Neural Information Processing Systems (NIPS’13), Lake Tahoe, NV, USA, 5–10 December 2013; pp. 315–323.

28. Rockafellar, R.T.; Wets, R.J.B. Variational Analysis, 3rd ed.; Springer Science & Business Media: Berlin, Germany, 2009; pp. 1–74. ISBN 978-3-642-02431-3.

29. Roosta-Khorasani, F.; Mahoney, M.W. Sub-sampled Newton methods. Math. Program. 2019, 174, 293–326.

30. Shen, H.L.; Peng, D.T.; Zhang, X. Smoothing composite proximal gradient algorithm for sparse group Lasso problems with nonsmooth loss functions. J. Appl. Math. Comput. 2024, 70, 1887–1913.

31. Yang, J.D.; Song, H.M.; Li, X.X.; Hou, D. Block Mirror Stochastic Gradient Method For Stochastic Optimization. J. Sci. Comput. 2023, 94, 69.

| Notation | Description |
|---|---|
| | gradient of function f at point x |
| | approximation of |
| | Fréchet subdifferential of h at x |
| | limiting subdifferential of h at x |
| | Moreau envelope of h at x with parameter |
| | proximal mapping of h at x with parameter |
| | threshold of prox-boundedness of function h |
| | regularization parameter in the k-th iteration |
| IG-QR | quadratic regularization algorithm employing inexact gradients |
| FG-QR | quadratic regularization algorithm employing full gradients |
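As a concrete instance of two entries in the notation table, the sketch below evaluates the proximal mapping and the Moreau envelope for the assumed choice h = ‖·‖₁, using the standard convention of [28]: the envelope is the minimum over w of h(w) + ‖w − x‖²/(2λ), and the proximal mapping is the minimizer. The parameter name `lam` and the function names are hypothetical.

```python
import numpy as np

def prox_l1(x, lam):
    """Proximal mapping of h = ||.||_1 with parameter lam (soft-thresholding)."""
    return np.sign(x) * np.maximum(np.abs(x) - lam, 0.0)

def moreau_envelope_l1(x, lam):
    """Moreau envelope of h = ||.||_1 with parameter lam, evaluated at the prox."""
    w = prox_l1(x, lam)
    return np.abs(w).sum() + np.sum((w - x) ** 2) / (2.0 * lam)

x = np.array([2.0, 0.3, -1.0])
lam = 0.5
p = prox_l1(x, lam)             # components shrink toward zero by lam
e = moreau_envelope_l1(x, lam)  # smooth (Huber-type) approximation of ||x||_1
print(p, e)
```

Since ‖·‖₁ is bounded below, it is prox-bounded for every positive parameter, so any `lam > 0` is admissible in this example.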

| n | d | True O-v | FG-QR O-v | FG-QR R-e | FG-QR I-k | FG-QR T-s | IG-QR O-v | IG-QR R-e | IG-QR I-k | IG-QR T-s |
|---|---|---|---|---|---|---|---|---|---|---|
| 100,000 | 200 | 0.105016 | 0.104550 | 0.011752 | 49 | 4.601011 | 0.104558 | 0.012024 | 51 | 2.642049 |
| 100,000 | 500 | 0.105025 | 0.104531 | 0.011712 | 48 | 12.862375 | 0.104545 | 0.011038 | 52 | 7.583251 |
| 100,000 | 800 | 0.104999 | 0.104523 | 0.012177 | 50 | 20.439099 | 0.104526 | 0.011822 | 57 | 13.037192 |
| 150,000 | 200 | 0.104985 | 0.104511 | 0.011667 | 49 | 5.919224 | 0.104521 | 0.011709 | 50 | 3.301263 |
| 150,000 | 500 | 0.105012 | 0.104526 | 0.012019 | 50 | 13.428264 | 0.104523 | 0.011853 | 52 | 8.217642 |
| 150,000 | 800 | 0.105001 | 0.104528 | 0.011814 | 50 | 25.827659 | 0.104539 | 0.011963 | 56 | 16.310059 |
| 200,000 | 200 | 0.105009 | 0.104548 | 0.011770 | 49 | 7.683134 | 0.104547 | 0.011395 | 50 | 4.764928 |
| 200,000 | 500 | 0.104975 | 0.104503 | 0.011600 | 49 | 26.579966 | 0.104510 | 0.011754 | 52 | 15.295921 |
| 200,000 | 800 | 0.105024 | 0.104552 | 0.012110 | 50 | 39.642553 | 0.104554 | 0.011776 | 55 | 24.811985 |
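To make the timing comparison in the table easier to read, the short script below computes the ratio of the IG-QR to the FG-QR value in the T-s column for each of the nine problem sizes. The numbers are copied from the table; reading T-s as a running time is an assumption, since the column abbreviation is not expanded here.

```python
# T-s columns copied from the results table, in row order.
fg_time = [4.601011, 12.862375, 20.439099, 5.919224, 13.428264,
           25.827659, 7.683134, 26.579966, 39.642553]
ig_time = [2.642049, 7.583251, 13.037192, 3.301263, 8.217642,
           16.310059, 4.764928, 15.295921, 24.811985]

# Ratio below 1 means IG-QR needed less time than FG-QR on that instance.
ratios = [ig / fg for ig, fg in zip(ig_time, fg_time)]
for r in ratios:
    print(f"{r:.3f}")
print(f"min {min(ratios):.3f}, max {max(ratios):.3f}")
```

On these nine instances every ratio lies between roughly 0.55 and 0.64, even though IG-QR takes slightly more iterations (I-k) than FG-QR in each row.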


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, A.; Wang, X.; Liao, C. An Inexact Nonsmooth Quadratic Regularization Algorithm. Axioms 2025, 14, 604. https://doi.org/10.3390/axioms14080604
