On the Convergence Order of Jarratt-Type Methods for Nonlinear Equations

Abstract

1. Introduction

2. Convergence Order of Iterative Scheme (3)

- (A1)

- exists and ∃ such that

- (A2)

- ∃ such that

- (A3)

- ∃ such that and

- (A4)

- ∃ such that

3. Analysis of Convergence Order of (2)

4. Analysis of Convergence Order of (4)

5. Convergence Under Generalized Conditions

- (H1)

- Consider a CNF for which the smallest positive solution to is . Let be the interval .

- (H2)

- Let be the SPS of , where the function is given by for some CNF

- (H3)

- Let have an SPS given as where is given by Let

- (H4)

- The equation has an SPS denoted by where is given by where

- (H5)

- The equation has an SPS denoted by where is given by Let

- (H6)

- The equation has an SPS denoted by where is given as where

- (H7)

- There exists an invertible linear operator L and solving the equation such that for each ,

- (H8)

- for each and

- (H9)

- (e1)

- There exist as CNF such that has an SPS denoted by Set Let be a CNF. Define the sequence for and each by and

- (e2)

- There exists such that for each Consequently, and there exists such that The functions and are connected to the operators in the iterative scheme (4).

- (e3)

- There exists such that Let Notice that (e1) and (e3) imply that the operator is invertible. Let

- (e4)

- for each and

- (e5)
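Conditions (H1)–(H6) share one mechanism: each radius is the smallest positive solution (SPS) of a scalar equation built from continuous nondecreasing majorant functions, and in analyses of this type the convergence radius is usually the minimum of these radii. The actual functions of (H2)–(H6) are not recoverable in this version, so the following is only a minimal sketch under stated assumptions. It uses hypothetical Lipschitz-type majorants w0(t) = L0·t and w(t) = L·t, with hypothetical constants L0 = 1.0 and L = 1.5. It also uses a hypothetical Newton-step function g1(t) = L·t / (2(1 − L0·t)), chosen because the SPS of g1(t) = 1 has the known closed form 2/(2L0 + L). The helper `smallest_positive_solution` is likewise hypothetical: it brackets the first sign change of h(t) on a uniform grid and then refines it by bisection.

```python
# Illustrative sketch (hypothetical functions): computing the smallest positive
# solution (SPS) of a scalar equation h(t) = 0, the kind of radius used in (H1)-(H6).


def smallest_positive_solution(h, upper, samples=10_000, tol=1e-15):
    """Return the smallest t in (0, upper) with h(t) = 0.

    Assumes h is continuous on [0, upper) and h(t) < 0 for small t > 0.
    A uniform grid brackets the first sign change; bisection refines it.
    """
    step = upper / samples
    lo, hi = 0.0, None
    for k in range(1, samples):
        t = k * step
        if h(t) >= 0.0:
            hi = t
            break
        lo = t
    if hi is None:
        raise ValueError("no sign change of h found in (0, upper)")
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        # Stop at the tolerance or when lo and hi are adjacent floats.
        if hi - lo <= tol or mid in (lo, hi):
            break
        if h(mid) >= 0.0:
            hi = mid
        else:
            lo = mid
    return hi


# Hypothetical Lipschitz-type majorant constants: w0(t) = L0*t, w(t) = L*t.
L0, L = 1.0, 1.5


def w0(t):
    return L0 * t


def g1(t):
    # Hypothetical first-substep function of a Newton-type step.
    return L * t / (2.0 * (1.0 - w0(t)))


# rho0: SPS of w0(t) - 1 = 0; on (0, rho0) we have 1 - w0(t) > 0.
rho0 = smallest_positive_solution(lambda t: w0(t) - 1.0, upper=10.0)
# r1: SPS of g1(t) - 1 = 0, searched inside (0, rho0) to avoid the pole of g1.
r1 = smallest_positive_solution(lambda t: g1(t) - 1.0, upper=rho0)

print(f"rho0 = {rho0:.15f}  (closed form 1/L0 = {1.0 / L0:.15f})")
print(f"r1   = {r1:.15f}  (closed form 2/(2L0+L) = {2.0 / (2.0 * L0 + L):.15f})")
```

With more substeps, each further radius would be computed in the same way, and the smallest one would be kept. The grid scan is what guarantees that the *smallest* positive root is found, rather than whichever root a plain bisection over (0, upper) happens to reach.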

6. Efficiency Indices

7. Numerical Example

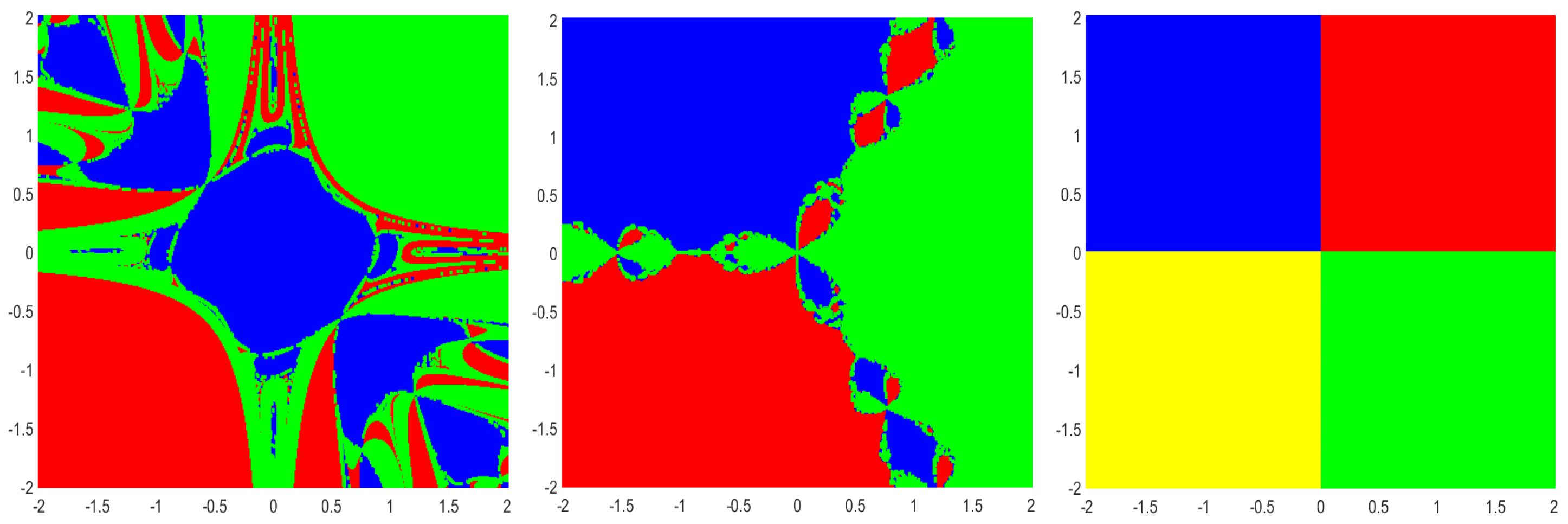

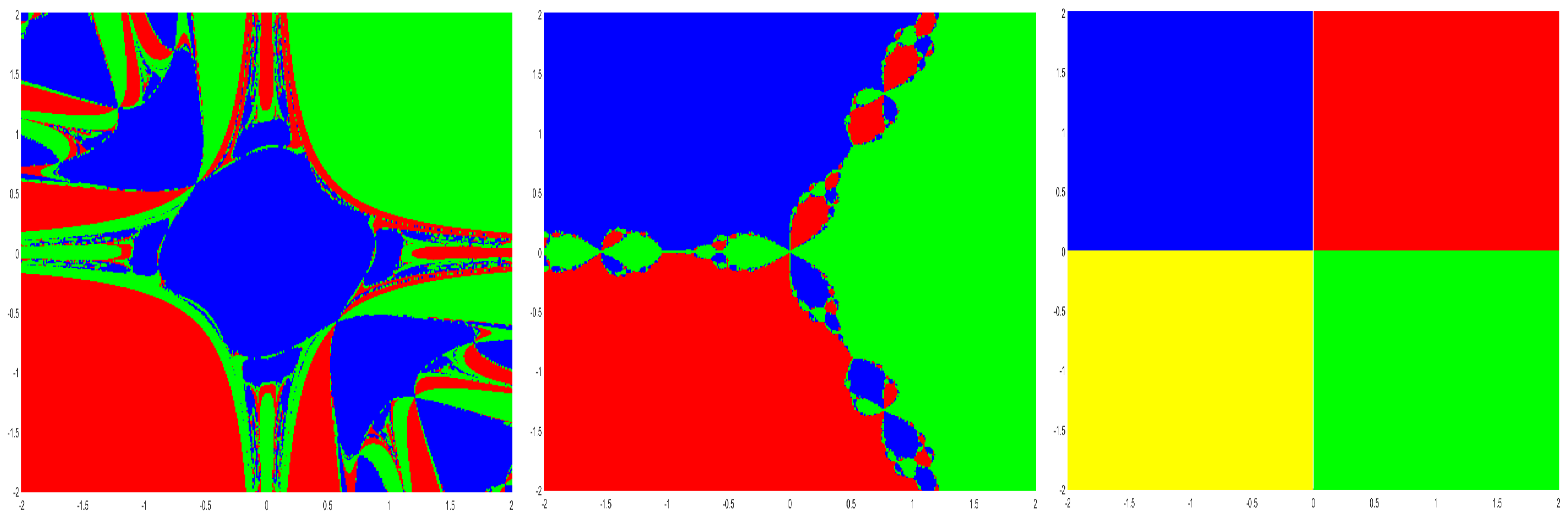

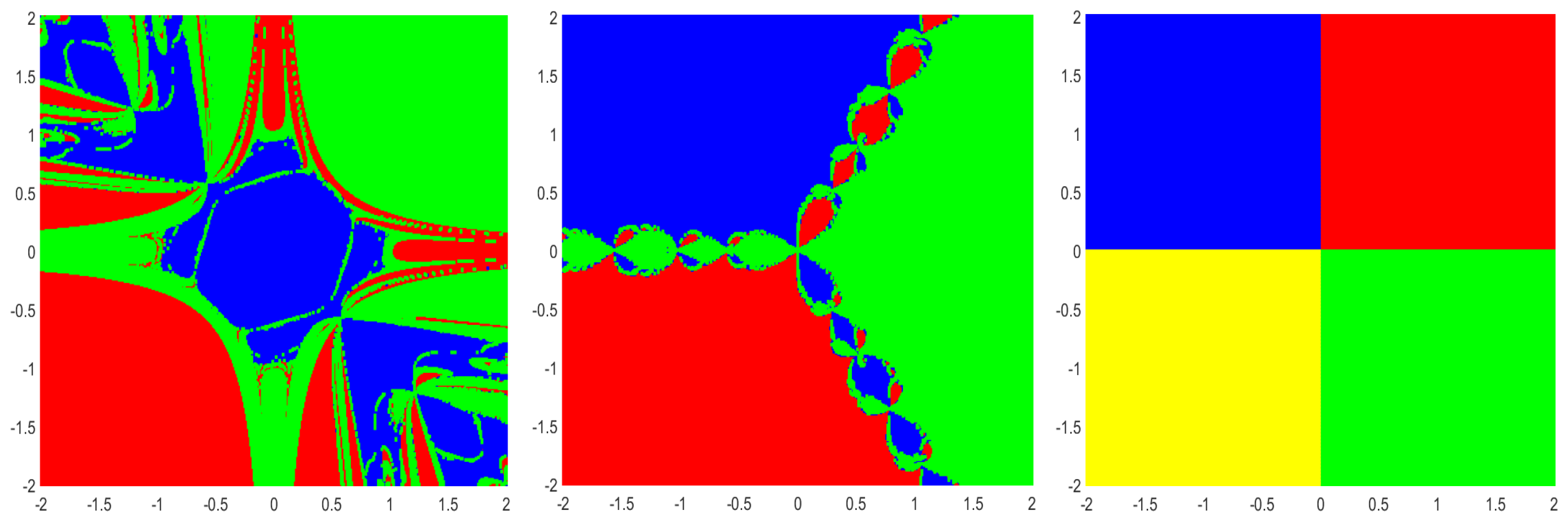
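To make the kind of experiment reported in this section concrete, the sketch below implements the classical fourth-order scheme of Jarratt in its usual form for systems. The first substep is y_n = x_n − (2/3)F'(x_n)^{-1}F(x_n). The second substep is x_{n+1} = x_n − (1/2)[3F'(y_n) − F'(x_n)]^{-1}[3F'(y_n) + F'(x_n)]F'(x_n)^{-1}F(x_n). The sketch then estimates the approximated computational order of convergence (ACOC) from successive step lengths. This is an assumption-laden illustration, not necessarily any of the schemes (2)–(4) analysed here. The 2×2 test system F(x, y) = (x² + y² − 1, x − y), with root (√2/2, √2/2), is hypothetical, as are the starting point (1, 0.5) and the helper names `solve2`, `jarratt_step`, `iterate` and `acoc`. The "Ratio" columns of the tables are not reproduced.

```python
import math


# Hypothetical test system F(x, y) = (x^2 + y^2 - 1, x - y) and its Jacobian.
def F(v):
    x, y = v
    return [x * x + y * y - 1.0, x - y]


def J(v):
    x, y = v
    return [[2.0 * x, 2.0 * y], [1.0, -1.0]]


def solve2(a, b):
    """Solve the 2x2 linear system a @ s = b by Cramer's rule."""
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    if det == 0.0:
        raise ZeroDivisionError("singular 2x2 matrix")
    return [(b[0] * a[1][1] - a[0][1] * b[1]) / det,
            (a[0][0] * b[1] - b[0] * a[1][0]) / det]


def jarratt_step(x):
    """One step of the classical fourth-order Jarratt method for systems."""
    jx = J(x)
    u = solve2(jx, F(x))  # u = F'(x)^{-1} F(x)
    y = [x[i] - (2.0 / 3.0) * u[i] for i in range(2)]
    jy = J(y)
    a = [[3.0 * jy[i][j] - jx[i][j] for j in range(2)] for i in range(2)]  # 3F'(y) - F'(x)
    b = [[3.0 * jy[i][j] + jx[i][j] for j in range(2)] for i in range(2)]  # 3F'(y) + F'(x)
    bu = [b[i][0] * u[0] + b[i][1] * u[1] for i in range(2)]
    v = solve2(a, bu)  # v = [3F'(y) - F'(x)]^{-1} [3F'(y) + F'(x)] u
    return [x[i] - 0.5 * v[i] for i in range(2)]


def iterate(x0, tol=1e-13, max_iter=20):
    """Run the scheme until the step length (max norm) drops below tol."""
    xs = [list(x0)]
    for _ in range(max_iter):
        xs.append(jarratt_step(xs[-1]))
        if max(abs(p - q) for p, q in zip(xs[-1], xs[-2])) < tol:
            break
    return xs


def acoc(xs):
    """ACOC estimates ln(d_{k+1}/d_k) / ln(d_k/d_{k-1}), d_k = ||x_{k+1} - x_k||."""
    d = [max(abs(p - q) for p, q in zip(b, a)) for a, b in zip(xs, xs[1:])]
    est = []
    for k in range(1, len(d) - 1):
        if d[k + 1] > 0.0 and d[k] > 0.0 and d[k - 1] > 0.0 and d[k] != d[k - 1]:
            est.append(math.log(d[k + 1] / d[k]) / math.log(d[k] / d[k - 1]))
    return est


xs = iterate([1.0, 0.5])
for k, x in enumerate(xs):
    print(k, x)
print("ACOC estimates:", acoc(xs))
```

Each ACOC estimate needs three consecutive step lengths. Once the iterates reach double precision, the step lengths are dominated by rounding. As a result, only the early estimates are informative for a fourth-order method, and confirming such orders numerically normally calls for higher-precision arithmetic.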

8. Basins of Attraction

9. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Ahmad, F.; Tohidi, E.; Ullah, M.Z. Higher order multi-step Jarratt-like method for solving systems of nonlinear equations: Application to PDEs and ODEs. Comput. Math. Appl. 2015, 70, 624–636.

- Ullah, M.Z.; Serra-Capizzano, S.; Ahmad, F. An efficient multi-step iterative method for computing the numerical solution of systems of nonlinear equations associated with ODEs. Appl. Math. Comput. 2015, 250, 249–259.

- Argyros, I.K. The Theory and Applications of Iteration Methods; Taylor and Francis Group, CRC Press: Boca Raton, FL, USA, 2022; Volume 2.

- Ullah, M.Z.; Soleymani, F.; Al-Fhaid, A.S. Numerical solution of nonlinear systems by a general class of iterative methods with application to nonlinear PDEs. Numer. Algorithms 2014, 67, 223–242.

- Yu, J.; Wang, X. A single parameter fourth-order Jarratt type iterative method for solving nonlinear systems. AIMS Math. 2025, 10, 7847–7863.

- Bartle, R.G. Newton’s method in Banach spaces. Proc. Am. Math. Soc. 1955, 6, 827–831.

- Ben-Israel, A. A Newton-Raphson method for the solution of systems of equations. J. Math. Anal. Appl. 1966, 15, 243–252.

- Jarratt, P. Some fourth order multipoint iterative methods for solving equations. Math. Comput. 1966, 20, 434–437.

- Saheya, B.; Chen, G.Q.; Sui, Y.K.; Wu, C.Y. A new Newton-like method for solving nonlinear equations. SpringerPlus 2016, 5, 1269.

- Ren, H.; Wu, Q.; Bi, W. New variants of Jarratt’s method with sixth-order convergence. Numer. Algorithms 2009, 52, 585–603.

- Weerakoon, S.; Fernando, T. A variant of Newton’s method with accelerated third-order convergence. Appl. Math. Lett. 2000, 13, 87–93.

- Petković, M.S.; Neta, B.; Petković, L.D.; Džunić, J. Multipoint methods for solving nonlinear equations: A survey. Appl. Math. Comput. 2014, 226, 635–660.

- Cordero, A.; Hueso, J.L.; Martínez, E.; Torregrosa, J.R. A modified Newton-Jarratt’s composition. Numer. Algorithms 2010, 55, 87–99.

- Behl, R.; Cordero, A.; Motsa, S.S.; Torregrosa, J.R. On developing fourth-order optimal families of methods for multiple roots and their dynamics. Appl. Math. Comput. 2015, 265, 520–532.

- Magreñán, A.A. Different anomalies in a Jarratt family of iterative root finding methods. Appl. Math. Comput. 2014, 233, 29–38.

- Shakhno, S.M.; Iakymchuk, R.P.; Yarmola, H.P. Convergence analysis of a two step method for the nonlinear least squares problem with decomposition of operator. J. Numer. Appl. Math. 2018, 128, 82–95.

- Shakhno, S.M.; Gnatyshyn, O.P. On an iterative algorithm of order 1.839… for solving nonlinear operator equations. Appl. Math. Appl. 2005, 161, 253–264.

- Cárdenas, E.; Castro, R.; Sierra, W. A Newton-type midpoint method with high efficiency index. J. Math. Anal. Appl. 2020, 491, 124381.

- Traub, J.F. Iterative methods for the solution of equations. Am. Math. Soc. 1982, 312.

- Ostrowski, A.M. Solution of Equations and Systems of Equations: Pure and Applied Mathematics; A Series of Monographs and Textbooks; Elsevier: Amsterdam, The Netherlands, 2016; Volume 9.

- Noor, M.A.; Waseem, M.; Noor, K.I. New iterative technique for solving a system of nonlinear equations. Appl. Math. Comput. 2015, 271, 446–466.

- Alqahtani, H.F.; Behl, R.; Kansal, M. Higher-Order Iteration Schemes for Solving Nonlinear Systems of Equations. Mathematics 2019, 7, 937.

- Jayakumar, J. Generalized Simpson-Newton’s Method for Solving Nonlinear Equations with Cubic Convergence. IOSR J. Math. 2013, 7, 58–61.

- Iliev, A.; Iliev, I. Numerical method with order t for solving system nonlinear equations. Collect. Sci. Work. 2000, 30, 3–4.

- Chun, C.; Lee, M.Y.; Neta, B.; Džunić, J. On optimal fourth-order iterative methods free from second derivative and their dynamics. Appl. Math. Comput. 2012, 218, 6427–6438.

- Scott, M.; Neta, B.; Chun, C. Basin attractors for various methods. Appl. Math. Comput. 2011, 218, 2584–2599.

- Ortega, J.M.; Rheinboldt, W.C. Iterative solution of nonlinear equations in several variables. In Classics in Applied Mathematics, Philadelphia: Society for Industrial and Applied Mathematics; Academic Press: Cambridge, MA, USA, 2000; Volume 14.

- Werner, W. Über ein Verfahren der Ordnung 1 + √2 zur Nullstellenbestimmung. Numer. Math. 1979, 32, 333–342.

| No. of Iterations (n) | Method (2) | Method (3) |
|---|---|---|
| 1 | (−0.285212504390587, 1.085353003161222) | (−0.291165568093180, 1.084718626530083) |
| 2 | (−0.290514555536393, 1.084215081898184) | (−0.290514555507251, 1.084215081491351) |
| 3 | (−0.290514555536393, 1.084215081898184) | (−0.290514555507251, 1.084215081491351) |
| 4 | (−0.290514555536393, 1.084215081898184) | (−0.290514555507251, 1.084215081491351) |

| k | Noor Waseem Method (65) | Ratio | Newton Simpson Method (68) | Ratio | Method (3) | Ratio |
|---|---|---|---|---|---|---|
| 0 | (2.000000, −1.000000) | | (2.000000, −1.000000) | | (2.000000, −1.000000) | |
| 1 | (1.264067, −0.166747) | 0.052791 | (1.263927, −0.166887) | 0.052792 | (1.151437, 0.051449) | 0.040459 |
| 2 | (1.019624, 0.265386) | 0.259247 | (1.019452, 0.265424) | 0.259156 | (0.994771, 0.304342) | 0.536597 |
| 3 | (0.992854, 0.306346) | 1.578713 | (0.992853, 0.306348) | 1.580144 | (0.992780, 0.306440) | 1.951273 |
| 4 | (0.992780, 0.306440) | 1.977941 | (0.992780, 0.306440) | 1.977957 | (0.992780, 0.306440) | 1.979028 |
| 5 | (0.992780, 0.306440) | 1.979028 | (0.992780, 0.306440) | 1.979028 | (0.992780, 0.306440) | 1.979028 |

| k | Noor Waseem Method (66) | Ratio | Newton Simpson Method (69) | Ratio | Method (4) | Ratio |
|---|---|---|---|---|---|---|
| 0 | (2.000000, −1.000000) | | (2.000000, −1.000000) | | (2.000000, −1.000000) | |
| 1 | (1.127204, 0.054887) | 0.004363 | (1.127146, 0.054883) | 0.004363 | (1.144528, 0.069067) | 0.004375 |
| 2 | (0.993331, 0.305731) | 0.501551 | (0.993328, 0.305734) | 0.501670 | (0.994305, 0.304922) | 0.495553 |
| 3 | (0.992780, 0.306440) | 3.889725 | (0.992780, 0.306440) | 3.889832 | (0.992780, 0.306440) | 3.847630 |
| 4 | (0.992780, 0.306440) | 3.916553 | (0.992780, 0.306440) | 3.916553 | (0.992780, 0.306440) | 3.916553 |

| k | Noor Waseem Method (67) | Ratio | Newton Simpson Method (70) | Ratio | Method (2) | Ratio |
|---|---|---|---|---|---|---|
| 0 | (2.000000, −1.000000) | | (2.000000, −1.000000) | | (2.000000, −1.000000) | |
| 1 | (1.067979, 0.174843) | 0.001211 | (1.067906, 0.174885) | 0.001211 | (1.027012, 0.256566) | 0.001057 |
| 2 | (0.992784, 0.306436) | 1.383068 | (0.992784, 0.306436) | 1.384152 | (0.992780, 0.306440) | 3.122403 |
| 3 | (0.992780, 0.306440) | 5.509412 | (0.992780, 0.306440) | 5.509414 | (0.992780, 0.306440) | 5.509727 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Erappa, S.M.; Bheemaiah, S.P.; George, S.; Karuppaiah, K.; Argyros, I.K. On the Convergence Order of Jarratt-Type Methods for Nonlinear Equations. Axioms 2025, 14, 401. https://doi.org/10.3390/axioms14060401
