Abstract

In this survey, we provide an overview of category theory-derived machine learning from four mainstream perspectives: gradient-based learning, probability-based learning, invariance and equivalence-based learning, and topos-based learning. For the first three topics, we primarily review research from the past five years, updating and expanding on the previous survey by Shiebler et al. The fourth topic, which delves into higher category theory, particularly topos theory, is surveyed for the first time in this paper. In certain machine learning methods, the compositionality of functors plays a vital role, prompting the development of specific categorical frameworks. However, when considering how the global properties of a network are reflected in its local structures, and how geometric properties and semantics can be expressed in logic, the topos structure becomes particularly significant.

Keywords:

machine learning; category theory; topos theory; gradient-based learning; categorical probability; Bayesian learning; functorial manifold learning; persistent homology

MSC:

68T01; 68-02; 18A99; 18C10

1. Introduction and Background

In recent years, there has been an increasing amount of research involving category theory in machine learning. This survey primarily reviews recent research on the integration of category theory in various machine learning paradigms. Roughly, we divide this research into two main directions:

- Studies on specific categorical frameworks corresponding to specific machine learning methods. Backpropagation is formalized within Cartesian (reverse) differential categories, structuring gradient-based learning. Probabilistic models, including Bayesian inference, are studied in Markov categories, capturing stochastic dependencies. Clustering algorithms are analyzed in the category of metric spaces, providing a structured view of similarity-based learning.

- Methodological approaches that explore the potential applications of category theory to various aspects of machine learning from a broad mathematical perspective. For example, research has examined how topoi capture the internal properties of neural networks, how 2-categories formalize component compositions in learning models, and how toposes and stacks provide structured frameworks for encoding learning dynamics and invariances.

We begin by introducing the key terms category theory, functoriality, and topos for readers who may not be familiar with these mathematical concepts. Category theory is a branch of mathematics that provides a unifying framework for describing mathematical structures and their relationships in an abstract manner. Mathematical domains such as algebra, topology, and logic can all be described within this framework. A fundamental concept in category theory, functoriality, refers to a method of mapping one category to another. It provides a systematic way to translate concepts and results from one mathematical context to another, enabling the study of similarities and connections across different areas of mathematics. As a higher-order category, a topos behaves like the category of sets in that it supports operations such as taking limits, colimits, and exponentials, and it also has an internal logic (often intuitionistic rather than classical). Topoi are used in fields such as logic, geometry, and computer science, particularly in areas like type theory and the semantics of programming languages, where they provide a versatile and abstract framework for representing data structures and reasoning about computations. We provide the specific definitions as follows.

Definition 1

(Category). A category consists of objects, morphisms (also called arrows) between these objects, and an operation composing morphisms end to end, subject to two key properties: associativity (the composition of morphisms is associative) and identity (each object has an identity morphism that acts as a neutral element under composition).

Definition 2

(Functor). A functor is a structure-preserving map between categories that assigns to each object in one category an object in another category, and to each morphism in the first category a morphism in the second, while preserving the composition of morphisms and identity morphisms.

Definition 3

(Topos). A topos is a category that generalizes the category of sets and is equipped with additional logical and topological structures. It serves as a generalized space where various mathematical concepts can be interpreted.

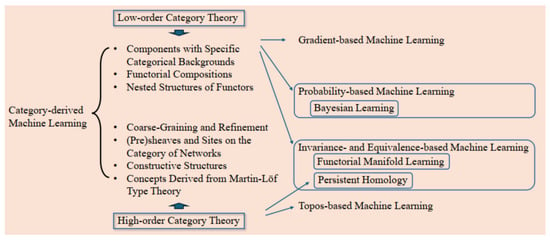

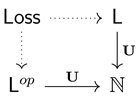

Our framework, shown in Figure 1, structures category-derived learning into two levels. Low-order category theory provides functorial frameworks, where components—defined with their own inputs, outputs, and parameters—are composed using functors. This modular perspective parallels programming languages and has influenced approaches in gradient-based learning, Bayesian learning, and functorial manifold learning for invariance and equivalence. High-order category theory captures global properties relevant to learning. For instance, coarse-graining and refinement can be structured using sites on networks, and constructive frameworks like stacks may offer further insights.

Figure 1.

Category-derived machine learning framework.

For the first direction, the survey by Shiebler et al. [1] provided a detailed overview of the main results up to 2021. We complement it by including results from 2021 to the present, and we also introduce some branches beyond these mainstream results. The second direction, learning derived from higher-order category theory, is not covered in Shiebler et al.’s survey and is emphasized here. One of its themes is causality, approached through the rich structures of sheaves and presheaves. These constructs capture local–global relationships in datasets, such as the impact of local interventions on global outcomes, and the internal logic of a topos provides a robust foundation for reasoning about counterfactuals and interventions, both of which are central to causal inference. The two directions also differ in what they can express. A systematic framework based on functor composition provides a design-oriented approach and effectively encodes ‘parallelism’ by describing independent processes. However, it struggles to fully encode ‘concurrency’, where events can be both ‘fired’ and ‘enabled’ at the same time, and to model the dynamic state transitions of these events. A topos, by contrast, naturally accommodates graph-like networks through its inherent (co)sheaf structures. When applied in this context, it implicitly supports a Petri net structure, which excels at capturing the dynamic activation and interaction of events, including concurrency and state changes [2]. The comparison between our work and Shiebler et al.’s is summarized in Table 1.

Table 1.

Comparison between Shiebler et al. [1] (2021) and this survey.

Next, we provide practical examples illustrating the benefits of incorporating topos theory into machine learning.

- Example 1. Dimensionality Reduction: Traditional methods risk information loss. Topos theory leverages its algebraic properties and thus enables dimensionality reduction while preserving structural integrity and extracting key features.

- Example 2. Interpretability of Machine Learning Models: Deep learning models are often ‘black boxes’. Topos theory provides logical reasoning to interpret internal structures, outputs, and emergent phenomena in large-scale models.

- Example 3. Dynamic Data Analysis: Traditional static analysis struggles with evolving data. Topos theory naturally captures temporal changes and local–global relationships.

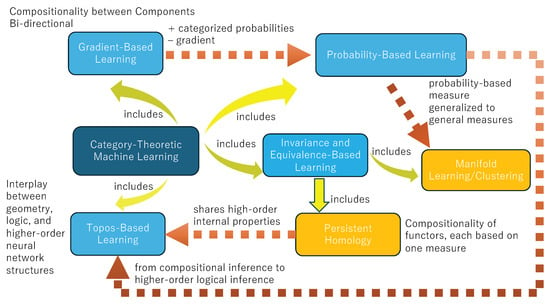

The diagram in Figure 2 illustrates category-theoretic machine learning as a unifying framework, integrating gradient-based, probability-based, invariance and equivalence-based, and topos-based learning, each of which will be introduced in subsequent sections. Gradient-based learning interacts bidirectionally with probability-based learning, where optimization techniques such as gradient descent refine probabilistic models, and probability distributions enhance optimization efficiency. Probability-based learning further connects to manifold learning and clustering, generalizing probabilistic measures to structured geometric representations. Invariance and equivalence-based learning builds on these foundations, incorporating persistent homology to capture topological invariants and structural consistency. Topos-based learning extends categorical structures to higher-order logic, providing a framework to analyze compositional relationships and logical inference in neural networks. This integrated framework emphasizes structured learning, ensuring coherence across different learning paradigms.

Figure 2.

Category-theoretic machine learning framework.

The following develops a categorical and topos-theoretic approach to machine learning, structured as follows:

- In gradient-based learning, we introduce base categories, functors, and the structure of compositional optimization.

- In probability-based learning, we present categorical probability models, Bayesian inference, and probabilistic programming.

- In invariance and equivalence-based learning, we explore categorical clustering, manifold learning, and persistent homology.

- In topos-based learning, we apply sheaf and stack structures to machine learning, building on Lafforgue’s reports.

- Finally, we discuss applications and future directions, covering frustrated AI systems, categorical modeling, and emerging challenges.

In the following, we generally use a sans serif font to represent categories and a bold font to represent functors, though we may occasionally emphasize sheaves/toposes with different fonts.

2. Developments in Gradient-Based Learning

The primary objective of integrating concepts from category theory into concrete, modularizable learning processes or methods is to leverage compositionality: existing processes can then be expressed through graphical (diagrammatic) representations and calculi, and the modular design makes individual components replaceable. Among existing work, [3] lists different gradient descent optimization algorithms and compares their behavior. The categorical approach, by contrast, highlights their similarities and integrates different algorithms and optimizers into a common framework within the learning process. We summarize the differences and characteristics of gradient descent optimizers within a categorical framework in Table 2. In [4], the authors developed categorical frameworks for the most classical and straightforward gradient-based learning methods, demonstrating the variations achievable by composing the categorical components they defined. In particular, graphical representation, semantic properties, and diagrammatic reasoning, which are key aspects of category theory, serve as a rigorous semantic foundation for learning and, through compositionality, facilitate the construction of scalable deep learning systems.

Table 2.

Comparison of gradient descent optimizers in a categorical framework.

In this section, we aim to explain how (low-order) category semantics are applied to understand the fundamental structures of gradient-based learning, which is a core component of the deep learning paradigm. The mainstream approach is to decompose learning into independent components: the parameter component, the bidirectional dataflow component, and the differentiation component. These components, especially the bidirectional components—lenses and optics—are widely applied in other categorical frameworks of machine learning. After introducing the basic framework, we will present related research in Section 2.3.

Shiebler et al. [1] provided an overview of the fundamental structures in their survey. However, their definitions and explanations may be challenging for readers without a mathematical background, so we briefly outline the most commonly used concepts in a more accessible manner. To introduce the critical parametric lens structure, we begin with the three key characteristics of gradient-based learning identified in [4,5]; each characteristic motivates its own categorical construction.

These concepts are summarized in Table 3, which pairs each key characteristic of neural network learning with its categorical construction. Parametricity is represented by Para, which captures parameterized mappings in supervised learning. Bidirectionality, modeled by Lens, describes the forward and backward flow of information that is essential for backpropagation. Differentiation, expressed using Cartesian reverse differential categories (CRDCs), formalizes loss function optimization by differentiating with respect to the parameters to minimize the loss. Together, these constructions provide a categorical perspective on neural network structures and learning dynamics.

Table 3.

Summary of the key characteristics in gradient-based learning with corresponding construction.

These basic settings can be extended or modified to accommodate various learning tasks, methods, or datasets. For example, bicategory- and actegory-based approaches adapt to different objects (e.g., polynomial circuits) or methodologies (e.g., Bayesian learning using Grothendieck lenses instead of standard functors). The Cartesian property of the background category enables duality, particularly through products and coproducts. Works like [6,7] extend this framework with dual components, introducing concepts such as parametrized/coparametrized morphisms and algebra/coalgebra. These enrichments integrate diverse networks (e.g., GCNNs, GANs) into a unified framework, while the Cartesian structure also allows Lawvere theory to model algebraic structures within the base category.

In complex network architectures, gradient-based composition alone is insufficient because it lacks structural constraints to ensure semantic consistency, logical validity, and global coherence. Therefore, topos-based learning provides a solution by introducing sheaf and stack structures, which enforce hierarchical dependencies and maintain local–global consistency. Additionally, subobject classifiers and fibered categories regulate module composition, preventing information distortion. Homotopy theory and categorical invariants further enable scalable modeling, preserving structural integrity across expanding architectures. Thus, topos theory extends compositional optimization, ensuring interpretable, adaptable, and logically consistent AI systems.

Now we give the definitions of these functors and categories in order.

2.1. Fundamental Components: The Base Categories and Functors

Definition 4

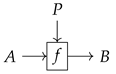

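($\mathbf{Para}$ functor [4]). Let $\mathsf{C}$ be a strict symmetric monoidal category. Applying $\mathbf{Para}$ to $\mathsf{C}$ yields a category $\mathbf{Para}(\mathsf{C})$ with the following structure:

- Objects: the objects of $\mathsf{C}$.

- Morphisms: pairs of the form $(P, f)$, representing a map from the input $A$ to the output $B$, with $P$ an object of $\mathsf{C}$ (the parameter object) and $f: P \otimes A \to B$.

- Composition of morphisms: the composite of $(P, f): A \to B$ and $(Q, g): B \to C$ is the pair $(Q \otimes P, (1_Q \otimes f) ; g)$.

- Identity: the identity endomorphism on $A$ is the pair $(I, 1_A)$, where $I$ is the monoidal unit ($I \otimes A = A$ due to the strict monoidal property).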

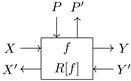

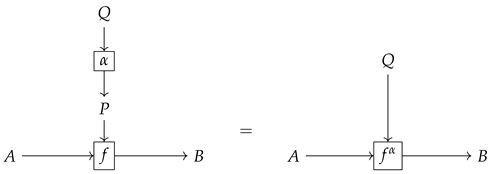

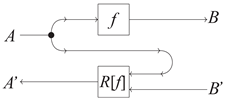

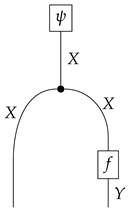

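Every parametric morphism has a horizontal component and a vertical component, as emphasized by its string diagram representation: the horizontal wires carry the input and output, while the vertical wire carries the parameter.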

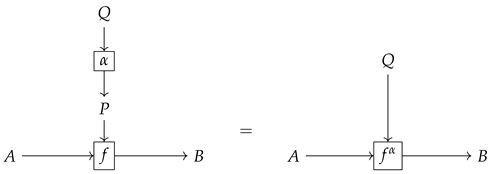

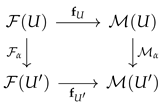

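In supervised parameter learning, parameter updates are formulated as 2-morphisms in $\mathbf{Para}(\mathsf{C})$, called reparameterizations. A reparameterization from $(P, f)$ to $(Q, f')$ is a morphism $\alpha: Q \to P$ in $\mathsf{C}$ such that the triangle formed by $\alpha \otimes 1_A: Q \otimes A \to P \otimes A$, $f: P \otimes A \to B$, and $f': Q \otimes A \to B$ commutes in $\mathsf{C}$, that is, $(\alpha \otimes 1_A) ; f = f'$. This yields a new map $(Q, (\alpha \otimes 1_A) ; f): A \to B$. Following the authors, we write $f^{\alpha}$ for the reparameterization of $f$ with $\alpha$; in its string diagram representation, $\alpha$ is plugged into the vertical parameter wire of $f$.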

Here, $f^{\alpha} = (\alpha \otimes 1_A) ; f$. Using this vertical (parameter) direction, $\mathbf{Para}(\mathsf{C})$ can be viewed as a bicategory whose 1-morphisms are models, connecting inputs to outputs, and whose 2-morphisms are reparameterizations, connecting models.

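To make the $\mathbf{Para}$ construction concrete, the following Python sketch takes $\mathsf{C}$ to be Python functions with pairs as the monoidal product. This is an illustrative assumption: nested tuples are associative only up to re-bracketing, so the sketch is not strictly monoidal. The names `ParaMap`, `compose`, and `reparameterize` are hypothetical and are not taken from [4].

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class ParaMap:
    """A parametric morphism (P, f): A -> B, where f maps (p, a) to an output in B."""
    param: str                       # label for the parameter object P (illustrative only)
    f: Callable[[Any, Any], Any]


def compose(first: ParaMap, second: ParaMap) -> ParaMap:
    """(P, f) ; (Q, g) = (Q x P, (1_Q x f) ; g): the composite's parameter is the pair (q, p)."""
    def h(qp, a):
        q, p = qp
        return second.f(q, first.f(p, a))
    return ParaMap(f"{second.param} x {first.param}", h)


def identity() -> ParaMap:
    """(I, 1_A): the unit parameter (modelled here as None) is ignored."""
    return ParaMap("I", lambda _unit, a: a)


def reparameterize(model: ParaMap, alpha: Callable[[Any], Any], new_param: str) -> ParaMap:
    """f^alpha = (alpha x 1_A) ; f for a reparameterization alpha: Q -> P."""
    return ParaMap(new_param, lambda q, a: model.f(alpha(q), a))


# Usage: an affine layer a -> w*a + b followed by a scaling layer a -> s*a.
affine = ParaMap("W x B", lambda wb, a: wb[0] * a + wb[1])
scale = ParaMap("S", lambda s, a: s * a)
net = compose(affine, scale)                              # parameter space S x (W x B)
tied = reparameterize(net, lambda t: (t, (t, t)), "T")    # weight tying: one parameter t
print(net.param, net.f((2, (3, 1)), 4), tied.f(2, 4))     # prints: S x W x B 26 20
```

Here `tied` plays the role of $f^{\alpha}$ with $\alpha(t) = (t, (t, t))$: a map from a one-dimensional parameter space into the composite's parameter space, so all three weights are learned as one.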
The following construction facilitates bidirectional data transmission.

Definition 5

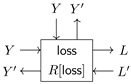

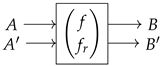

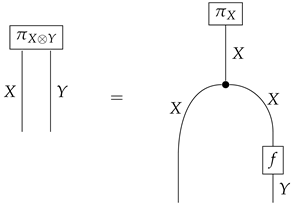

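($\mathbf{Lens}$ functor [4]). For any Cartesian category $\mathsf{C}$, applying $\mathbf{Lens}$ yields the category $\mathbf{Lens}(\mathsf{C})$ with the following data:

- Objects are pairs $(A, A')$ of objects in $\mathsf{C}$.

- A morphism from $(A, A')$ to $(B, B')$ consists of a pair of morphisms in $\mathsf{C}$, denoted $(f, f^{*})$, where $f: A \to B$ is called the get or forward part of the lens and $f^{*}: A \times B' \to A'$ is called the put or backward part of the lens. (String diagram illustrations of a lens and of its internal construction are given in [4].)

- The composite of $(f, f^{*}): (A, A') \to (B, B')$ and $(g, g^{*}): (B, B') \to (C, C')$ has get $f ; g$ and put $\langle \pi_0, \langle \pi_0 ; f, \pi_1 \rangle ; g^{*} \rangle ; f^{*}$.

- The identity on $(A, A')$ is the pair $(1_A, \pi_1)$.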
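The lens composition rule can be checked with a small Python sketch, again taking $\mathsf{C}$ to be Python functions with pairs as products. The class `Lens` and the field-access example are hypothetical illustrations: the classic "focus on the first component" lens is not a learning component, but it exercises the same get/put composition law.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Lens:
    """A lens (f, f*): (A, A') -> (B, B') with get f: A -> B and put f*: A x B' -> A'."""
    get: Callable[[Any], Any]
    put: Callable[[Any, Any], Any]


def compose(l1: Lens, l2: Lens) -> Lens:
    """get = f ; g and put = <pi0, <pi0 ; f, pi1> ; g*> ; f*."""
    return Lens(
        get=lambda a: l2.get(l1.get(a)),
        # Re-run l1's forward pass to know where l2's backward pass is evaluated.
        put=lambda a, c_back: l1.put(a, l2.put(l1.get(a), c_back)),
    )


def identity() -> Lens:
    """(1_A, pi1): the forward part is the identity, the backward part returns the feedback."""
    return Lens(get=lambda a: a, put=lambda _a, a_back: a_back)


# Example: focus on the first component of a pair; put overwrites that component.
first = Lens(get=lambda pair: pair[0], put=lambda pair, new: (new, pair[1]))
nested = compose(first, first)                  # focus on x inside ((x, y), z)
print(nested.get(((1, 2), 3)), nested.put(((1, 2), 3), 9))   # prints: 1 ((9, 2), 3)
```

Note that the composite put needs the original input `a` twice, once directly and once through `l1.get`; this is exactly the role of the projection $\pi_0$ in the formula.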

Definition 6

(Cartesian left additive category [8]). A Cartesian left additive category is both a Cartesian category and a left additive category. A Cartesian category is specified by four components: binary products $\times$, projection maps $\pi_i$, a pairing operation $\langle -, - \rangle$, and a terminal object. A left additive category is one whose hom-sets are commutative monoids, with an addition operation $+$ and zero maps $0$, such that composition on the left is compatible with addition: $f ; (g + h) = f ; g + f ; h$ and $f ; 0 = 0$. The compatibility of the two structures in $\mathsf{C}$ is reflected in the fact that the projection maps of $\mathsf{C}$ as a Cartesian category are additive.

Definition 7

(Cartesian differential category [8]). A Cartesian differential category is a Cartesian left additive category with a differential combinator $D$, given by the inference rule

$$\frac{f: A \to B}{D[f]: A \times A \to B}$$

where $D[f]$, which satisfies the axioms listed in [8], is called the derivative of $f$.

We highlight in particular the chain rule axiom, which defines the derivative of a composite map: $D[f ; g] = \langle \pi_0 ; f, D[f] \rangle ; D[g]$, where $;$ denotes diagrammatic composition ($f$ first, then $g$).
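For smooth real functions, a forward derivative combinator can be sketched with dual numbers: $D[f](x, v)$ is the tangent component of $f$ evaluated at $x + v\varepsilon$ with $\varepsilon^2 = 0$. The Python sketch below (the helpers `Dual`, `dsin`, `D`, and `then` are hypothetical) checks the chain rule axiom numerically for one composite.

```python
import math
from dataclasses import dataclass


@dataclass
class Dual:
    """A dual number x + v*eps with eps**2 = 0: a point x paired with a tangent v."""
    x: float
    v: float

    def __add__(self, other):
        other = lift(other)
        return Dual(self.x + other.x, self.v + other.v)

    __radd__ = __add__

    def __mul__(self, other):
        other = lift(other)
        # Product rule: (x + v eps)(y + w eps) = xy + (xw + vy) eps.
        return Dual(self.x * other.x, self.x * other.v + self.v * other.x)

    __rmul__ = __mul__


def lift(c):
    """Treat a plain number as a constant (zero tangent)."""
    return c if isinstance(c, Dual) else Dual(float(c), 0.0)


def dsin(d: Dual) -> Dual:
    return Dual(math.sin(d.x), math.cos(d.x) * d.v)


def D(f):
    """Forward derivative D[f]: A x A -> B, the tangent part of f(x + v eps)."""
    return lambda x, v: f(Dual(x, v)).v


def then(f, g):
    """Diagrammatic composition f ; g (apply f first)."""
    return lambda x: g(f(x))


f = lambda x: x * x + 3 * x      # f(x) = x^2 + 3x, works on floats and Dual numbers
g = dsin                         # g(y) = sin(y), defined on Dual numbers only

x, v = 0.7, 2.0
lhs = D(then(f, g))(x, v)                  # D[f ; g](x, v)
rhs = D(g)(f(x), D(f)(x, v))               # (<pi0 ; f, D[f]> ; D[g])(x, v)
print(math.isclose(lhs, rhs))
```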

Definition 8

(Cartesian reverse differential category (CRDC), first introduced by [8] and first applied to the context of machine learning and automatic differentiation by [9]). A Cartesian reverse differential category is a Cartesian left additive category with a reverse differential combinator $R$, given by the inference rule

$$\frac{f: A \to B}{R[f]: A \times B \to A}$$

where $R[f]$, which satisfies the axioms listed in [9], is called the reverse derivative of $f$. We highlight in particular the reverse chain rule axiom, which defines the reverse derivative of a composite map: $R[f ; g] = \langle \pi_0, \langle \pi_0 ; f, \pi_1 \rangle ; R[g] \rangle ; R[f]$.

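A more straightforward relationship between the forward and reverse derivatives in standard computations, in terms of function approximation, is as follows. For a smooth map $f: \mathbb{R}^n \to \mathbb{R}^m$ with Jacobian $J_f(x)$,

$$f(x + v) \approx f(x) + D[f](x, v), \qquad D[f](x, v) = J_f(x)\, v, \qquad R[f](x, w) = J_f(x)^{\top} w.$$

The forward and reverse derivatives therefore correspond to each other by transposition:

$$\langle D[f](x, v), w \rangle = \langle v, R[f](x, w) \rangle \quad \text{for all } v \in \mathbb{R}^n,\ w \in \mathbb{R}^m.$$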
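For maps between Euclidean spaces, the forward derivative is a Jacobian-vector product and the reverse derivative is a vector-Jacobian product. The short Python check below uses a hand-written Jacobian for the illustrative map $f(x_0, x_1) = (x_0 x_1, \sin x_0)$ (an assumption chosen for the example) to verify the first-order approximation $f(x + v) \approx f(x) + J_f(x)\,v$ and the transposition identity $\langle J_f(x)\,u, w \rangle = \langle u, J_f(x)^{\top} w \rangle$.

```python
import math


def f(x):
    """Illustrative map f: R^2 -> R^2, f(x0, x1) = (x0 * x1, sin(x0))."""
    return (x[0] * x[1], math.sin(x[0]))


def jacobian(x):
    """Hand-written Jacobian J_f(x), stored as a list of rows."""
    return [[x[1], x[0]],
            [math.cos(x[0]), 0.0]]


def D_f(x, v):
    """Forward derivative D[f](x, v) = J_f(x) v (Jacobian-vector product)."""
    J = jacobian(x)
    return tuple(sum(J[i][j] * v[j] for j in range(2)) for i in range(2))


def R_f(x, w):
    """Reverse derivative R[f](x, w) = J_f(x)^T w (vector-Jacobian product)."""
    J = jacobian(x)
    return tuple(sum(J[i][j] * w[i] for i in range(2)) for j in range(2))


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


x = (0.5, -1.2)

# First-order approximation f(x + v) ~ f(x) + D[f](x, v) for a small v.
v = (1e-6, 2e-6)
approx = tuple(fx + d for fx, d in zip(f(x), D_f(x, v)))
exact = f((x[0] + v[0], x[1] + v[1]))

# Transposition: <D[f](x, u), w> = <u, R[f](x, w)> for any u, w.
u, w = (1.5, -0.4), (0.3, 0.8)
forward_side, reverse_side = dot(D_f(x, u), w), dot(u, R_f(x, w))
print(forward_side, reverse_side)
```

Automatic differentiation libraries compute these same two products without materializing the Jacobian; the explicit matrix here is only for readability.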

The $\mathbf{Lens}$ structure integrates seamlessly with the reverse differential combinator $R$ of a CRDC. Specifically, the pair $(f, R[f])$ forms a morphism $(A, A) \to (B, B)$ in $\mathbf{Lens}(\mathsf{C})$ when $\mathsf{C}$ is a CRDC. In the context of learning, $R[f]$ functions as a backward map, enabling the ‘learning’ of $f$. The type assignment $R[f]: A \times B \to A$ conceals an essential distinction: $R[f]$ takes a tangent vector (a change, such as a gradient) at $B$ and outputs one at $A$. Since the inputs and outputs on the $R[f]$ side differ in nature from those on the $f$ side, the diagram representation of the lens is revised accordingly, with the backward wires drawn separately from the forward ones.

The fundamental category in which gradient-based learning takes place is $\mathbf{Para}(\mathbf{Lens}(\mathsf{C}))$, the composite of the $\mathbf{Para}$ and $\mathbf{Lens}$ constructions applied to the base CRDC $\mathsf{C}$.
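Because the put part of a composite lens, $\langle \pi_0, \langle \pi_0 ; f, \pi_1 \rangle ; g^{*} \rangle ; f^{*}$, has exactly the shape of the reverse chain rule, composing the lenses $(f, R[f])$ and $(g, R[g])$ yields $(f ; g, R[f ; g])$. A minimal scalar sketch in Python, assuming $R[f](x, \delta) = f'(x)\,\delta$ for smooth $f: \mathbb{R} \to \mathbb{R}$ (the helper `rd_lens` is hypothetical), shows backpropagation arising purely from lens composition:

```python
import math
from dataclasses import dataclass
from typing import Callable


@dataclass
class Lens:
    get: Callable[[float], float]            # forward map f: A -> B
    put: Callable[[float, float], float]     # backward map R[f]: A x B -> A


def compose(l1: Lens, l2: Lens) -> Lens:
    """Lens composition: get = f ; g, put = <pi0, <pi0 ; f, pi1> ; R[g]> ; R[f]."""
    return Lens(get=lambda a: l2.get(l1.get(a)),
                put=lambda a, dc: l1.put(a, l2.put(l1.get(a), dc)))


def rd_lens(f, df) -> Lens:
    """Package f with its reverse derivative R[f](x, dy) = f'(x) * dy (scalar case)."""
    return Lens(get=f, put=lambda x, dy: df(x) * dy)


square = rd_lens(lambda x: x * x, lambda x: 2 * x)
sine = rd_lens(math.sin, math.cos)
pipeline = compose(square, sine)                 # x -> sin(x^2)

x, dy = 1.3, 0.5
backward = pipeline.put(x, dy)                   # obtained only by composing lenses
direct = math.cos(x * x) * 2 * x * dy            # R[square ; sine](x, dy) by hand
print(backward, direct)
```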

2.2. Composition of Components

This section highlights principal results from Deep Learning with Parametric Lenses [5], which describes parametric lenses as homogeneous components functioning in the gradient-based learning process.

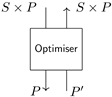

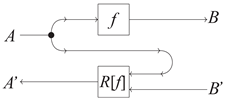

In the most basic situation, the authors discuss a typical supervised learning scenario and its categorical formulation. It involves finding a parametrized model $f$ with parameters $p \in P$, which are updated step by step until certain requirements are met. The gradient-based updating algorithm, referred to as the optimizer, updates these parameters iteratively based on a loss map and is controlled by a learning rate $\alpha$. The authors of [5] emphasize that each component, including the model, loss map, optimizer, and learning rate, can vary independently, yet all of them are uniformly formalized as parametric lenses. Their pictorial definitions and types are summarized in Table 4; the key components of categorical supervised learning with parametric lenses are as follows.

Table 4.

Summary of key components at work in the learning process: parametric lenses [5].

- Model: A parameterized function maps inputs to outputs, with reparameterization enabling parameter updates.

- Loss Map: Computes error; its reverse map aids in gradient-based updates.

- Gradient Descent: Iteratively updates parameters using gradient information.

- Optimizer: Includes basic and stateful variants, the latter incorporating memory for adaptive updates.

- Learning Rate: A scalar controlling update step size.

- Corner Structure: Ensures compatibility between learning components.

This formalism unifies learning processes into a modular and compositional framework. Moreover, a category of polynomial circuits can be taken as the base category, so that the learning of digital circuits (as inputs) fits into the same framework [10].

In Ref. [5], the authors present a comprehensive framework of parametric lenses that accommodates a wide range of variations, including different models (such as linear-bias-activation neural networks, Boolean circuits [11], and polynomial circuits), loss functions (such as mean squared error and softmax cross-entropy), and gradient update algorithms. These algorithms include well-known optimizers such as momentum, Nesterov momentum, and Adaptive Moment Estimation (Adam), all of which are captured within the parametric lens framework.

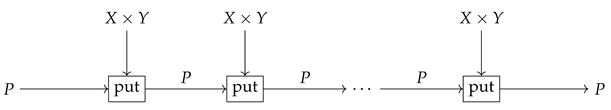

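The stateful parameter update proposed by the authors involves selecting an object $S$ (the state object) and a lens $U: (S \times P, S \times P) \to (P, P)$. In the momentum variant of gradient descent, the previous change is tracked and used to adjust the current parameter. Specifically, the authors set $S = P$, fix some $\gamma > 0$, and define the momentum lens $(U, U^{*}): (P \times P, P \times P) \to (P, P)$ by $U(s, p) = p$ and $U^{*}(s, p, p') = (s', p + s')$, where the new state $s'$ combines the previous change $s$, weighted by $\gamma$, with the incoming change $p'$. This formulation reduces to standard gradient descent when $\gamma = 0$.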

For Nesterov momentum, the authors use the momentum from previous updates to modify the parameter supplied to the network. This behavior is captured precisely by a small variation of the momentum lens: again they set $S = P$, fix some $\gamma > 0$, and define the Nesterov momentum lens $(U, U^{*}): (P \times P, P \times P) \to (P, P)$ by $U(s, p) = p + \gamma s$ and $U^{*}$ as in the previous case.

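Additionally, the authors discuss Adaptive Moment Estimation (Adam), a method that computes adaptive learning rates for each parameter by storing exponentially decaying averages of past gradients ($m$) and squared gradients ($v$). For fixed $\beta_1, \beta_2 \in [0, 1)$ and $\epsilon > 0$, with learning rate $\alpha$, Adam is given by the state object $S = P \times P$ and the lens whose get part is $(m, v, p) \mapsto p$ and whose put part is

$$(m, v, p, p') \mapsto \left(m', v', p - \alpha \frac{\hat{m}'}{\sqrt{\hat{v}'} + \epsilon}\right),$$

where $p'$ is the incoming gradient, $m' = \beta_1 m + (1 - \beta_1) p'$, $v' = \beta_2 v + (1 - \beta_2) p'^2$, and $\hat{m}' = m' / (1 - \beta_1^t)$, $\hat{v}' = v' / (1 - \beta_2^t)$ are the bias-corrected estimates at step $t$.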
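The stateful optimizers above can be imitated as get/put pairs. The following Python sketch is an illustration under stated assumptions rather than the construction of [5]: `get(s, p)` returns the parameter at which the model is evaluated, `put(s, p, g)` takes the gradient `g` at that point and returns the new state and parameter, and the update rules are the standard textbook forms of gradient descent, heavy-ball momentum, Nesterov momentum, and Adam (Adam's state carries an explicit step counter for bias correction). The names `StatefulOptimizer` and `minimize` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Any, Callable, Tuple


@dataclass
class StatefulOptimizer:
    """A lens-like optimizer: get(s, p) is the parameter the model is evaluated at,
    put(s, p, g) maps the gradient g at that point to a new (state, parameter)."""
    init: Any
    get: Callable[[Any, float], float]
    put: Callable[[Any, float, float], Tuple[Any, float]]


def gradient_descent(lr):
    return StatefulOptimizer(None, lambda s, p: p, lambda s, p, g: (None, p - lr * g))


def momentum(lr, gamma):
    def put(s, p, g):
        s_new = gamma * s - lr * g        # decayed old velocity plus the new gradient step
        return s_new, p + s_new
    return StatefulOptimizer(0.0, lambda s, p: p, put)   # gamma = 0 gives plain descent


def nesterov(lr, gamma):
    # Same put as momentum; get evaluates the model at the look-ahead point p + gamma * s.
    return StatefulOptimizer(0.0, lambda s, p: p + gamma * s, momentum(lr, gamma).put)


def adam(lr, beta1=0.9, beta2=0.999, eps=1e-8):
    def put(s, p, g):
        m, v, t = s
        t += 1
        m = beta1 * m + (1 - beta1) * g                 # decaying average of gradients
        v = beta2 * v + (1 - beta2) * g * g             # decaying average of squared gradients
        m_hat, v_hat = m / (1 - beta1 ** t), v / (1 - beta2 ** t)   # bias correction
        return (m, v, t), p - lr * m_hat / (math.sqrt(v_hat) + eps)
    return StatefulOptimizer((0.0, 0.0, 0), lambda s, p: p, put)


def minimize(opt, grad_fn, p0, steps):
    """Repeatedly query get, compute the gradient there, and apply put."""
    s, p = opt.init, p0
    for _ in range(steps):
        s, p = opt.put(s, p, grad_fn(opt.get(s, p)))
    return p


def grad(p):
    return 2.0 * (p - 3.0)                # gradient of the loss (p - 3)^2


for name, opt in [("gd", gradient_descent(0.1)), ("momentum", momentum(0.05, 0.9)),
                  ("nesterov", nesterov(0.05, 0.9)), ("adam", adam(0.05))]:
    print(name, round(minimize(opt, grad, 0.0, 1000), 4))
```

Each optimizer should drive $p$ from $0$ towards the minimizer $3$. Only the state type and the get/put pair change between variants, which mirrors the point that all of them fit the same lens interface.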

Thus, the parametric lens framework introduced by the authors provides a unified approach to modeling different optimization algorithms, each with its own distinctive characteristics. The pictorial definitions of parametric lenses rely on three types of interfaces: inputs, outputs, and parameters, which serve as the foundation of the component-based approach.

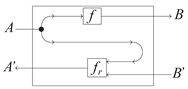

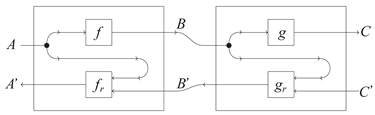

The composition of learning components can be viewed as a ‘plugging’ operation in the graphical description of lenses. A model is a parametric lens, while the gradient descent optimizer $G$ updates parameters by adjusting their values. Connecting $G$ to the model through their shared parameter interface reparameterizes the model via $G$; this discards the corresponding backward wires and results in another parametric lens.

This modular approach integrates the components in Table 4 to construct a gradient-based learning system for the following two case studies (Figure 3 and Figure 4).

Figure 3.

Model reparameterized by basic gradient descent (left, adapted from Figure 4 in [5]) and a full picture of an end-to-end supervised learning process (right, adapted from Equation (5.1) in [5]).

Figure 4.

Deep dreaming as a parametric lens, adapted from Equation (4.3) in [5].

- Case Study 1. Supervised Learning: In the first case (Figure 3), fixing the optimizer turns parameter learning into a single morphism, yielding a lens whose ‘get’ is the identity morphism and whose ‘put’ maps inputs to updated parameters. In [5], the learning process is summarized by formulas that compute:

  - the parameter update for the network, given the optimizer state and step size;

  - the predicted output of the network;

  - the difference between the prediction and the true value;

  - the resulting changes to the parameters and the input.

- Case Study 2. DeepDream Framework: This uses the parameters $p$ of a trained classifier network to generate or amplify specific features and shapes of a given type $b$ in the input $a$, enabling the network to enhance selected features in an image. The corresponding categorical framework (Figure 4) formalizes how the gradient descent lens connects to the input, so that the image is modified in a structured way to elicit a specific interpretation. Here the system learns a morphism whose parameter space is the input rather than the network weights; the resulting lens has a trivial ‘get’ function, and its ‘put’ function returns the updated input. In [5], the learning process is summarized by formulas that compute:

  - the updated input, given the optimizer state and step size;

  - the prediction of the network;

  - the difference between the prediction and the target value;

  - the resulting changes to the parameters and the input.
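The two case studies differ only in which wire the optimizer is attached to. A minimal Python sketch, assuming a scalar model $f(p, a) = p\,a$ with a squared-error loss (all function names are hypothetical, and the lens plumbing is flattened into plain functions), makes this explicit: the reverse derivative returns changes for both the parameter and the input, supervised learning uses the parameter change, and the deep-dream variant freezes $p$ and uses the input change to drive the output towards a chosen target $b$.

```python
def model(p, a):
    """Scalar parametric model f(p, a) = p * a."""
    return p * a


def model_reverse(p, a, db):
    """Reverse derivative of f at (p, a): a change db at the output is sent to (dp, da)."""
    return a * db, p * db


def loss_reverse(b_pred, b_target):
    """Reverse derivative of the squared error (b_pred - b_target)^2, evaluated at change 1."""
    return 2.0 * (b_pred - b_target)


def train_step(p, a, b, lr):
    """Supervised learning: the gradient-descent update is attached to the parameter wire."""
    dp, _da = model_reverse(p, a, loss_reverse(model(p, a), b))
    return p - lr * dp


def dream_step(p, a, b, lr):
    """Deep-dream style: p is frozen and the update is attached to the input wire."""
    _dp, da = model_reverse(p, a, loss_reverse(model(p, a), b))
    return a - lr * da


p = 0.0                                  # learn p such that f(p, 2) = 6, i.e. p -> 3
for _ in range(200):
    p = train_step(p, a=2.0, b=6.0, lr=0.05)

a = 1.0                                  # with p = 3 frozen, push the output to 12, i.e. a -> 4
for _ in range(200):
    a = dream_step(3.0, a, b=12.0, lr=0.01)

print(round(p, 6), round(a, 6))
```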

Notably, the authors also illustrate how the component-based approach covers the iterative learning process itself, which is again a parametric morphism (Figure in [4], page 20).

As another case study, a notable practical implementation of category theory in gradient-based learning is the ‘numeric-optics-python’ library, which applies categorical concepts such as lenses and reverse derivatives to neural network construction and optimization [12]. The library follows a compositional approach, allowing neural network architectures to be systematically assembled from primitive categorical components; this enhances modularity and interpretability while remaining compatible with conventional deep learning frameworks. It includes experiments on standard machine learning benchmarks such as Iris and MNIST, and equivalent implementations using Keras provide a direct comparison, showing how categorical methods can be integrated into existing gradient-based optimization pipelines. By leveraging categorical optics and functorial differentiation, this case study exemplifies how category theory can improve the structure, modularity, and interpretability of gradient-based learning models, paving the way for more compositional and mathematically grounded machine learning frameworks.

Building upon [4,5], data-parallel algorithms operating on string diagrams have been developed to efficiently compute symbolic representations of gradient-based learners from reverse derivatives [13]. Several Python implementations of these algorithms have been released [14,15,16]; they are fast, data-parallel, and GPU-compatible, require minimal primitives and dependencies, and are straightforward to implement correctly.

2.3. Other Related Research

The categorical framework for gradient-based learning, introduced by Cruttwell et al. [4] and refined in later works [5,17,18], builds on foundational research by Fong et al. [19,20]. Initially developed for supervised learning, it later incorporated probabilistic learning to handle uncertainty [7,21].

Cruttwell et al. emphasized the compositional nature of learning processes, unifying models, optimizers, and loss functions as parametric lenses. This abstraction enables a modular and integrable system where components interact through functorial compositions, visually represented using graphical notation. The resulting open system structure aligns with frameworks like open Petri nets, ensuring flexibility in learning model design.

An extension of lenses is given by ‘optics’. Optics correspond to the case where learning processes do not involve ‘differentiation’ and therefore do not require the background category to be Cartesian. The base category must still permit the parallel composition of processes, so the requirement weakens to a monoidal structure [7,20].

In [22], the authors discuss aggregating data in a database using optics structures. They also consider the category Poly, which is closely related to machine learning in (analog) circuit design [10,23]. Another work, [24], demonstrates that composable servers can also be abstracted using the lens structure.

In [25], the higher categorical (2-categorical) properties of optics are discussed specifically for programming their internal setups: the internal configuration of an optic is described by its 2-cells. From another viewpoint, 2-cells encapsulate homotopical properties, which are often regarded as the internal semantics of a categorical system. This aligns with the topos-based research of [26]; related content also appears in [27].

In [28], game-theoretic elements are also integrated into this categorical framework, with the focus on the feedback mechanism rather than on the learning algorithm. We consider combining these ideas with reinforcement learning [29] (see the next paragraph) an interesting direction, with existing instances provided in [30].

A critical example is the use of optics, rather than lenses, to compose the Bayesian learning process [31]; we introduce this work in detail in the next section. Because decisions are made based on policies and Bayesian inference, its authors introduced the concept of an ‘actegory’ to express actions of monoidal categories on categories, which is also related to monoidal structure (Cartesian categories are a specific type of monoidal category with additional structure, such as symmetric tensor products and projection maps). A similar structure is employed in [29], where the component-compositional framework is extended to reinforcement learning. The authors demonstrated how to fit several major reinforcement learning algorithms into the framework of categorical parametrized bidirectional processes, where the actions available to a reinforcement learning agent depend on the current state of the Markov chain. In [32], the authors considered how to combine the top-down specification of constraints with bottom-up implementations. This approach introduces algebraic structures to play the role of ‘action’, or more precisely, of invariance or equivariance under an action. In this context, they introduce monads and use monad algebra homomorphisms to describe equivariance. An important instance of their framework is geometric deep learning, where the main objective is to find neural network layers that are monad algebra homomorphisms for monads associated with group actions. This structure is further related to the ‘sheaf action’ in [26].
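For the action of a group $G$, algebras of the associated action monad $G \times (-)$ are $G$-sets, and algebra homomorphisms are exactly $G$-equivariant maps. A short Python check of this property for the cyclic group $\mathbb{Z}_n$ acting on signals by rotation (an illustrative choice, not the construction of [32]; all names are hypothetical) contrasts a circular convolution layer, which commutes with the action, with a generic dense layer, which does not:

```python
def rotate(x, k):
    """Action of the cyclic group Z_n on R^n: shift the signal circularly by k positions."""
    n = len(x)
    return [x[(i - k) % n] for i in range(n)]


def circular_conv(x, w):
    """Convolution layer with circular padding: y[i] = sum_j w[j] * x[(i - j) mod n]."""
    n = len(x)
    return [sum(w[j] * x[(i - j) % n] for j in range(len(w))) for i in range(n)]


def dense(x, W):
    """A generic fully connected layer y = W x, which is not equivariant in general."""
    return [sum(W[i][j] * x[j] for j in range(len(x))) for i in range(len(W))]


x = [1.0, 4.0, -2.0, 0.5, 3.0]
w = [0.2, -1.0, 0.7]
W = [[float(5 * i + j) for j in range(5)] for i in range(5)]

conv_equivariant = all(circular_conv(rotate(x, k), w) == rotate(circular_conv(x, w), k)
                       for k in range(len(x)))
dense_equivariant = dense(rotate(x, 1), W) == rotate(dense(x, W), 1)
print(conv_equivariant, dense_equivariant)     # prints: True False
```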

When this framework is further expanded to learning on manifolds, where a point has additional data (in its tangent space), reverse differential categories considering only the data themselves are not sufficient. Ref. [18] extended the base category to reverse tangent categories, i.e., categories with an involution operation for their differential bundles.

Other research applying category theory to gradient-based learning includes [33]. This work primarily serves programming languages with expressive features. The authors built their results on the original automatic differentiation algorithm, considering it as a homomorphic (structure-preserving) functor from the syntax of its source programming language to the syntax of its target language. This approach is extensible to higher-order primitives, such as map operations over constructive data structures. Consequently, they can use automatic differentiation to build instances such as differential and algebraic equation solvers.

Some studies, such as [13,34,35], have also given comprehensive accounts of the compositional, graphical, and symbolic properties of categorical learning in the setting of neural circuit diagrams and deep learning, and have discussed the associated semantics.

3. Developments in Probability-Based Learning

Probability plays a key role in machine learning, particularly in probabilistic modeling, Bayesian inference, and generative models. Many machine learning tasks can be framed as optimization problems in which the objective is to minimize a loss function. Solving such problems effectively requires careful consideration of the dataset's origins and limitations, such as biases and data quality. In probabilistic approaches, uncertainty is modeled through probability theory and Bayesian inference, turning supervised learning into a problem of approximating distributions over outputs.

In category theory, stochastic behavior is studied in categories such as Markov categories and categories of stochastic maps (e.g., $\mathbf{Stoch}$, $\mathbf{FinStoch}$), where morphisms represent probabilistic transitions, modeling the evolution of uncertainty over time.

Here is an overview of key probability types relevant to ML:

- Empirical Probability: Defined as the ratio of event occurrences to total observations. In category theory, empirical probability is represented in three distinct ways: first, as a functor mapping observations A to distributions $\mathcal{D}(A)$; second, through the Giry monad, which assigns to each measurable space its probability measures (empirical distributions being the finitely supported ones); and third, within measure-theoretic probability, where categories such as $\mathbf{Meas}$ formalize measurable spaces and measurable functions.

- Theoretical Probability: Defined as the ratio of favorable outcomes to possible outcomes. Within category theory, theoretical probability is modeled using the Giry monad to represent probability distributions, and categories such as $\mathbf{Meas}$ formalize measurable spaces and measurable functions. Furthermore, monoidal categories provide a structured framework for combining distributions, facilitating the modeling of probabilistic processes in machine learning.

- Joint Probability: Joint probability, $P(A \cap B)$, quantifies the likelihood of two events occurring together. In category theory, it is modeled using the copying structure in Markov categories or the tensor product in monoidal categories.

- Conditional Probability and Bayes' Theorem in Machine Learning: Conditional probability plays a fundamental role in probabilistic reasoning, allowing beliefs to be updated on the basis of new information. In machine learning, it is widely used in probabilistic models such as Bayesian networks and hidden Markov models. The joint probability of two events can be expressed as
$$P(A \cap B) = P(A \mid B)\,P(B),$$
which forms the basis for sequential decision-making and inference in learning algorithms. A direct application of conditional probability is Bayes' theorem, which updates the probability of a hypothesis given new evidence:
$$P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}, \qquad P(B) > 0.$$
This theorem is central to Bayesian inference and is widely applied in generative models, reinforcement learning, and uncertainty quantification. In category-theoretic terms, probabilistic transitions can be modeled using Markov categories, where conditional dependencies are represented naturally. For practical machine learning applications, however, the focus remains on efficient approximation techniques, such as variational inference (VI) and Markov chain Monte Carlo (MCMC) methods, to handle complex distributions.
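As a small numerical illustration of these quantities, the following Python sketch computes an empirical joint distribution from a handful of hypothetical paired observations, derives marginals and conditionals from it, and checks the product rule and Bayes' theorem with exact rational arithmetic. The data and the helper names (`marginal`, `conditional`, `likelihood`) are assumptions for illustration only.

```python
from collections import Counter
from fractions import Fraction

# Hypothetical observations: (weather, umbrella) pairs.
observations = [
    ("rain", "yes"), ("rain", "yes"), ("rain", "no"),
    ("sun", "no"), ("sun", "no"), ("sun", "yes"),
    ("sun", "no"), ("rain", "yes"),
]
n = len(observations)

# Empirical joint distribution P(A, B): relative frequencies of each pair.
joint = {k: Fraction(v, n) for k, v in Counter(observations).items()}


def marginal(index: int) -> dict:
    """Sum the joint distribution over the other coordinate."""
    out: dict = {}
    for pair, p in joint.items():
        out[pair[index]] = out.get(pair[index], 0) + p
    return out


p_weather = marginal(0)
p_umbrella = marginal(1)


def conditional(a, b) -> Fraction:
    """P(weather = a | umbrella = b) = P(a, b) / P(b)."""
    return joint.get((a, b), Fraction(0)) / p_umbrella[b]


def likelihood(b, a) -> Fraction:
    """P(umbrella = b | weather = a) = P(a, b) / P(a)."""
    return joint.get((a, b), Fraction(0)) / p_weather[a]


# Bayes' theorem: P(a | b) = P(b | a) P(a) / P(b).
bayes = likelihood("yes", "rain") * p_weather["rain"] / p_umbrella["yes"]
print(conditional("rain", "yes"), bayes)  # 3/4 3/4
```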

Statistics forms the backbone of machine learning, offering essential methodologies for data analysis, interpretation, and inference. Statistical tools used in machine learning include Poisson processes, martingales, probability metrics, empirical processes, Vapnik-Chervonenkis (VC) theory, large deviations, the exponential family, and simulation techniques such as Markov chain Monte Carlo. We also highlight that algebraic geometry is closely related to statistical methods in machine learning [36]. Statistical methods in machine learning are broadly classified into two key areas:

- Descriptive Statistics: This summarizes data characteristics through measures such as central tendency, dispersion, and visualization techniques, offering insights into distribution patterns and trends.

- Inferential Statistics: This uses sample data to estimate population parameters, facilitating hypothesis testing, interval estimation, and predictive modeling.

3.1. Categorical Background of Probability and Statistics Learning

In machine learning, predefined datasets are commonly used to formulate optimization problems. Addressing these problems effectively requires a thorough understanding of the data's provenance, biases, and inherent limitations, and in particular of the data distribution. Random uncertainties are modeled using probability theory and Bayesian inference, which shifts supervised learning from function approximation to distribution approximation. This shift makes it possible to account for aleatoric uncertainty, which cannot be reduced simply by increasing the dataset size.

Category theory is a powerful framework for analyzing and interpreting randomness within various models, connecting probability, statistics, and information theory. This approach offers a solid foundation for developing robust and widely applicable learning models.

A significant advancement in this field is the formal categorization of the Bayesian learning framework. Bayesian learning combines prior knowledge with observational data to refine model parameters. The prior distribution represents beliefs before observing data, while the posterior distribution updates these beliefs by incorporating new evidence. Bayes’ rule derives the posterior distribution from the prior and likelihood, ensuring parameter estimates align with observed data. Bayesian methods not only provide optimal parameter estimates but also quantify uncertainty through the posterior, which captures both data variability and inherent randomness. This makes Bayesian learning a robust and adaptive framework for model development. Category-theoretic approaches to probability theory provide an abstract and unifying framework, which can be broadly summarized as follows:

- Categorization of traditional probability theory structures: Constructs such as probability spaces and integration can be expressed categorically using structures such as the Giry monad, which sends each measurable space to the space of probability measures on it and each measurable map to the corresponding pushforward map. These frameworks formalize relationships between spaces and probability measures, enabling compositional probabilistic models.

- Categorization of synthetic probability and statistical concepts: Here, a small set of axioms and structures is taken as fundamental to probabilistic reasoning, and inference processes are derived from them. Measure-theoretic models serve as concrete instances of these abstract frameworks. Markov categories are used to represent stochastic maps, conditional probabilities, and compositional reasoning in probabilistic systems.

Early categorical frameworks formalized probability measures: Lawvere [37] and Giry [38] introduced a categorical perspective on probability measures. Ref. [39] focused on the ultrafilter monad, which arises as a codensity monad, i.e., a monad induced by a functor that need not have a left adjoint. They showed that an ultrafilter on a set can be interpreted as a functional mapping subsets to the two-element set {0, 1} that satisfies the properties of a finitely additive probability measure. Ref. [40] later generalized this framework, describing such a probability measure as a weakly averaging, affine measurable functional into [0, 1]. Probability measures on a space were thereby shown to form a submonad of the Giry monad.

Discreteness plays a crucial role when working with real-world datasets. Ref. [41] shows that the Giry monad can be restricted to countable measurable spaces with discrete σ-algebras, yielding a restricted Giry functor that arises as a codensity monad. This suggests that the natural numbers $\mathbb{N}$ are ‘sufficient’ for such applications. In this setting, standard measurable spaces are replaced by Polish spaces, a class that has not been fully explored in categorical approaches to probability-based machine learning.

The Giry monad structure has been applied in many areas; for example, in [42], stochastic automata are built as algebras over the monoid and Giry monads. This survey emphasizes the categorical approach to Bayesian networks [21], Bayesian reasoning, and inference [43,44] using the Giry monad. To illustrate how the measure-theoretic perspective is incorporated into probabilistic inference in machine learning, we summarize the role of the Giry monad in Table 5.

Table 5.

Role of the Giry monad in probabilistic inference for machine learning.

Markov categories, which represent ‘randomness’ through morphisms that behave like random functions, offer a distinctive synthetic approach to probability. The origins of Markov categories go back to Lawvere [37] and Chentsov [45]. Related studies include early synthetic approaches [46,47,48] and the study of Markov categories with additional restrictions by Kallenberg [49], Fritz [50,51,52,53,54], and Sabok [55], among others. An advantage of Markov categories is their graphical calculus, which simplifies translating diagram-based operations into programming languages [31,55,56].

In probabilistic machine learning, category theory is applied to clarify the structure of probabilistic models and to support belief updating, especially for probability distributions and uncertainty inference. Key steps include formalizing random variables, modeling learning processes in a categorical framework, and establishing principles for probabilistic reasoning. For instance, random variables are treated as morphisms in a category, and learning processes as functors between categories of data and models. Bayesian machine learning uses categorical methods for both parametric and nonparametric reasoning on function spaces, representing priors, likelihoods, and posteriors with categorical structures. These frameworks enable compositional reasoning, in which probabilistic updates are modeled compositionally, especially in supervised learning. Categorical Bayesian probability formalizes prior knowledge and belief updates based on data.

The categorical approach to probabilistic modeling provides practical advantages, simplifying proofs, complementing measure-theoretic methods, and intuitively representing complex probabilistic relationships. For example, Markov categories offer a framework for reasoning about stochastic processes, conditional probability, and independence. Research includes contributions by [1,21,31,43,44], among others. Experimental studies, such as [57], showcase the use of categorical theories in automatic inference systems [58,59,60], demonstrating their practical value in machine learning.

3.2. Preliminaries and Notions

In this section, we introduce key preliminaries and notions relevant to the research.

In traditional probability theory, a measurable space $(X, \Sigma_X)$ consists of a set X equipped with a σ-algebra $\Sigma_X$, which specifies which subsets of X can be assigned probabilities. A function between measurable spaces is called measurable if the preimage of every measurable set is measurable. A measure space $(X, \Sigma_X, \mu)$ extends this by introducing a measure $\mu: \Sigma_X \to [0, \infty]$, a function that assigns a non-negative value to each set in $\Sigma_X$ and is countably additive. When the total measure is normalized, $\mu(X) = 1$, the space is called a probability space, commonly used in probabilistic modeling.

While these classical structures provide a foundation for probability theory, they are not always well suited to representing function spaces in machine learning, which has led to alternative frameworks such as quasi-Borel spaces, which define measurability in terms of random variables rather than σ-algebras. Probabilistic learning algorithms rely on distributions defined over a fixed global sample space, focusing on random variables characterized by predefined spaces. In probabilistic programming, reasoning often requires the Cartesian closed property. However, $\mathbf{Meas}$, the category of measurable spaces, is not Cartesian closed: in general, there is no σ-algebra on the set of measurable functions between two measurable spaces that makes the evaluation map measurable (this already fails for functions $\mathbb{R} \to \mathbb{R}$). To overcome this limitation, two main approaches have been proposed. The first augments $\mathbf{Meas}$ with various monoidal products, enabling probabilistic reasoning and compositionality within the existing framework [43]. The second generalizes measurable spaces to categories that are inherently Cartesian closed, such as quasi-Borel spaces (QBSs) [61].

Definition 9

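(Quasi-Borel space [61]). A quasi-Borel space is a pair $(X, M_X)$, where X is a set and $M_X \subseteq [\mathbb{R} \to X]$ is a collection of ‘measurable’ maps (random elements) from the standard Borel space $\mathbb{R}$ to X, satisfying the following conditions:

1. For every $\alpha \in M_X$ and every measurable $f: \mathbb{R} \to \mathbb{R}$, the map $\alpha \circ f$ defined by $(\alpha \circ f)(r) = \alpha(f(r))$ is in $M_X$.

2. If $\alpha_1, \alpha_2, \ldots$ are in $M_X$ and $\mathbb{R} = \biguplus_{i \in \mathbb{N}} S_i$ is a partition into Borel sets, then the map $\beta$ defined by $\beta(r) = \alpha_i(r)$ for $r \in S_i$ is also in $M_X$.

3. $M_X$ contains all constant functions.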

A fundamental issue with $\mathbf{Meas}$ is that it is not Cartesian closed: function spaces do not, in general, inherit a measurable structure, which complicates higher-order probabilistic reasoning and disrupts composability in probabilistic inference. QBS addresses this by defining measurability in terms of random variables rather than σ-algebras, so that function spaces remain well structured; indeed, $\mathbf{QBS}$ is Cartesian closed. According to Proposition 15 in [61], there is an adjunction between $\mathbf{Meas}$ and $\mathbf{QBS}$, which relates the two settings. Moreover, Example 12 in [61] illustrates two ways to equip a set X with a quasi-Borel structure, demonstrating its adaptability for probabilistic models. Finally, QBS is compatible with probability measures, preserving the structure necessary for probabilistic reasoning.

Definition 10

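([61] Probability measure on QBS). A probability measure on a quasi-Borel space $(X, M_X)$ is a pair $(\alpha, \mu)$ of a random element $\alpha \in M_X$ and a probability measure μ on $\mathbb{R}$ (two such pairs are identified when they induce the same pushforward measure $\alpha_*\mu$).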

The key operations, such as pushforward and integration, are also well-defined in QBS, ensuring the preservation of probabilistic structures. Furthermore, in applications to Probabilistic Machine Learning, QBS facilitates structured reasoning in Bayesian inference, stochastic maps, and probabilistic programming, offering a compositional framework for complex models.
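The following Python sketch illustrates, under simplifying assumptions, how pushforward and integration work for a probability measure $(\alpha, \mu)$ on a quasi-Borel space: pushing forward along f just postcomposes α, and integrating g against $\alpha_*\mu$ reduces to integrating $g \circ \alpha$ against μ on $\mathbb{R}$. Here μ is assumed to be the standard normal distribution, it is represented by a sampler rather than an exact measure, and the integral is estimated by Monte Carlo. The random element is a random affine function, the kind of higher-order value that quasi-Borel spaces are designed to handle; all names are hypothetical.

```python
import random
from typing import Callable, Tuple

# Probability measure on a QBS X, sketched as (alpha, sample_mu):
# alpha: R -> X is a random element, sample_mu draws r ~ mu on R.
QBSMeasure = Tuple[Callable[[float], object], Callable[[random.Random], float]]


def pushforward(f, m: QBSMeasure) -> QBSMeasure:
    """Pushforward along f: only alpha changes (f o alpha); mu is untouched."""
    alpha, sample_mu = m
    return (lambda r: f(alpha(r)), sample_mu)


def integrate(g, m: QBSMeasure, n: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of the integral of g against alpha_* mu,
    computed as the integral of g o alpha against mu."""
    alpha, sample_mu = m
    rng = random.Random(seed)
    return sum(g(alpha(sample_mu(rng))) for _ in range(n)) / n


def std_normal(rng: random.Random) -> float:
    return rng.gauss(0.0, 1.0)


# A random *function*: alpha(r) is the affine map t -> r * t + 1, r ~ N(0, 1).
random_line: QBSMeasure = (lambda r: (lambda t: r * t + 1.0), std_normal)

# Push forward along evaluation at t = 2, then integrate the identity.
at_two = pushforward(lambda line: line(2.0), random_line)
print(integrate(lambda v: v, at_two))  # close to E[2r + 1] = 1
```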

In conclusion, quasi-Borel spaces restore Cartesian closure, making them a powerful tool for structuring higher-order probabilistic computations in machine learning while ensuring compatibility with traditional probability theory.

Definition 11

(Stochastic process). A stochastic process is a collection of random variables $\{X_t\}_{t \in T}$ defined on a common probability space $(\Omega, \mathcal{F}, P)$, where Ω is a sample space, $\mathcal{F}$ is a σ-algebra, and P is a probability measure; the random variables, indexed by some set T, all take values in the same space S, which is equipped with a σ-algebra $\Sigma$ with respect to which each $X_t$ is measurable.

Definition 12

(Stochastic Process [62]). A stochastic process is a collection of random variables $\{X_t : t \in T\}$ defined on a common probability space $(\Omega, \mathcal{F}, P)$, where:

- Ω is the sample space;

- $\mathcal{F}$ is a σ-algebra of subsets of Ω;

- P is a probability measure on $(\Omega, \mathcal{F})$.

Each random variable $X_t$ is indexed by $t \in T$ and takes values in a measurable space $(S, \Sigma)$, where S is the state space and Σ is a σ-algebra on S.

Definition 13

(Stochastic Map [62]). Let X and Y be sets. A stochastic map (also known as a stochastic matrix in the finite case) from X to Y is a function $f: X \times Y \to [0, 1]$, $(x, y) \mapsto f(y \mid x)$, that represents the conditional probability of transitioning from $x \in X$ to $y \in Y$. It satisfies the following properties:

1. Non-negativity: $f(y \mid x) \geq 0$ for all $x \in X$ and $y \in Y$.

2. Finiteness: For each $x \in X$, the number of $y \in Y$ with $f(y \mid x) \neq 0$ is finite.

3. Normalization: For each $x \in X$, the transition probabilities sum to one:
$$\sum_{y \in Y} f(y \mid x) = 1.$$

A stochastic map models uncertainty by specifying probabilistic transitions between states rather than deterministic ones. In machine learning, it plays a fundamental role in Markov decision processes (MDPs), reinforcement learning, and probabilistic graphical models. Specifically:

- In Markov chains, a stochastic map describes the transition probabilities between states, forming the basis for modeling sequential dependencies.

- In reinforcement learning, policy functions and transition models are often represented as stochastic maps, capturing the inherent randomness in environment dynamics.

- In probabilistic inference, stochastic maps define the conditional distributions in Bayesian networks and hidden Markov models.
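A stochastic map between finite sets can be stored as a table of its finitely many nonzero transition probabilities. The Python sketch below, using a hypothetical two-state weather chain, checks non-negativity and normalization, composes two transitions by summing over the intermediate state (the Chapman-Kolmogorov rule), and pushes a distribution forward one step. The dictionary encoding and helper names are assumptions for illustration.

```python
from fractions import Fraction as F

# Stochastic map X -> Y as a dict x -> {y: probability}, storing only the
# finitely many nonzero entries (the finiteness condition).
weather = {
    "sun": {"sun": F(3, 4), "rain": F(1, 4)},
    "rain": {"sun": F(1, 2), "rain": F(1, 2)},
}


def is_stochastic(k) -> bool:
    """Check non-negativity and normalization for every row."""
    return all(
        all(p >= 0 for p in row.values()) and sum(row.values()) == 1
        for row in k.values()
    )


def compose(k1, k2):
    """Two-step transition: (k2 . k1)(z | x) = sum_y k2(z | y) k1(y | x)."""
    out = {}
    for x, row in k1.items():
        acc = {}
        for y, p in row.items():
            for z, q in k2[y].items():
                acc[z] = acc.get(z, 0) + p * q
        out[x] = acc
    return out


def push(dist, k):
    """Push a distribution on X forward along k to a distribution on Y."""
    acc = {}
    for x, p in dist.items():
        for y, q in k[x].items():
            acc[y] = acc.get(y, 0) + p * q
    return acc


two_step = compose(weather, weather)
print(two_step["sun"]["sun"], two_step["sun"]["rain"])  # 11/16 5/16
print(push({"sun": F(2, 3), "rain": F(1, 3)}, weather))
```

The distribution (2/3, 1/3) used in the last line is stationary for this chain: pushing it forward returns the same distribution.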

Definition 14

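(Stochastic Kernel [62]). A stochastic kernel (or probability kernel) from a measurable space $(X, \Sigma_X)$ to another measurable space $(Y, \Sigma_Y)$ is a function $\kappa: X \times \Sigma_Y \to [0, 1]$ satisfying:

1. Probability Measure Condition: For each $x \in X$, the function $\kappa(x, \cdot): \Sigma_Y \to [0, 1]$ is a probability measure on $(Y, \Sigma_Y)$, meaning:
$$\kappa(x, Y) = 1, \qquad \kappa\Big(x, \bigcup_{i=1}^{\infty} A_i\Big) = \sum_{i=1}^{\infty} \kappa(x, A_i)$$
for every sequence of pairwise disjoint sets $A_i \in \Sigma_Y$.

2. Measurability Condition: For each $A \in \Sigma_Y$, the function $x \mapsto \kappa(x, A)$ is $\Sigma_X$-measurable.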

A stochastic kernel generalizes a stochastic map to continuous spaces by assigning probabilities to arbitrary measurable subsets of Y rather than only to individual points. It is a crucial tool in:

- Bayesian learning: Modeling posterior distributions in Bayesian inference.

- Sequential decision-making: Representing transition dynamics in stochastic control and reinforcement learning.

- Variational inference: Defining probability measures in stochastic optimization and Monte Carlo methods.
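As a continuous counterpart, the following Python sketch implements a Gaussian random-walk kernel on $\mathbb{R}$, assumed here for illustration: $\kappa(x, [a, b])$ is the probability that a normal variable with mean x and standard deviation σ lands in $[a, b]$. It also composes the kernel with itself by numerical integration over the intermediate state, which for this kernel should agree with a single Gaussian step of variance $2\sigma^2$. The function names and the midpoint-rule integration are choices of this sketch.

```python
import math


def gaussian_kernel(x: float, a: float, b: float, sigma: float = 1.0) -> float:
    """kappa(x, [a, b]) for the kernel y ~ N(x, sigma^2)."""
    def cdf(t: float) -> float:
        return 0.5 * (1.0 + math.erf((t - x) / (sigma * math.sqrt(2.0))))
    return cdf(b) - cdf(a)


def normal_pdf(y: float, mean: float, sigma: float = 1.0) -> float:
    return math.exp(-0.5 * ((y - mean) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))


def composed_kernel(x: float, a: float, b: float,
                    steps: int = 20_000, width: float = 10.0) -> float:
    """Two kernel steps: integrate kappa(y, [a, b]) against kappa(x, dy)
    with the midpoint rule on [x - width, x + width]."""
    h = 2.0 * width / steps
    total = 0.0
    for i in range(steps):
        y = x - width + (i + 0.5) * h
        total += normal_pdf(y, x) * gaussian_kernel(y, a, b) * h
    return total


print(gaussian_kernel(0.0, -math.inf, math.inf))  # 1.0: kappa(x, .) is a probability measure
print(composed_kernel(0.3, -1.0, 2.0), gaussian_kernel(0.3, -1.0, 2.0, sigma=math.sqrt(2.0)))
```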

In machine learning, stochastic maps and stochastic kernels enable structured uncertainty modeling, facilitating robust decision-making under probabilistic assumptions.

Using stochastic kernels and Markov kernels, we can define the following categories commonly used in categorical probabilistic learning research, where these kernels serve as morphisms (See Table 6).

Table 6.

Categories in categorical probabilistic learning.

The categorization of probability-based learning processes uses several monads to handle probability measures (see Table 7; definitions in [46,63]).

As mentioned earlier, Markov categories are categories where morphisms encode ‘randomness’. In categorical probability-based learning, a Markov category background is necessary to describe Bayesian inversion, stochastic dependencies, and the interplay between randomness and determinism.

Definition 15

(Markov Category [53]). A Markov category is a symmetric monoidal category $(\mathcal{C}, \otimes, I)$ in which every object X is equipped with a commutative comonoid structure, consisting of:

- A comultiplication map $\mathrm{copy}_X: X \to X \otimes X$;

- A counit map $\mathrm{del}_X: X \to I$.

These maps must satisfy coherence laws with the monoidal structure. Additionally, the counit map del must be natural with respect to every morphism f, i.e., $\mathrm{del}_Y \circ f = \mathrm{del}_X$ for every $f: X \to Y$.
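The finite case makes these axioms easy to check. In the Python sketch below, morphisms of $\mathbf{FinStoch}$ are row-stochastic matrices with exact rational entries (an encoding chosen for illustration), the tensor product is the Kronecker product, and `copy_map` and `del_map` are the deterministic copy and discard maps. It confirms that del is natural for every channel, whereas copy commutes with a channel only when the channel is deterministic, which is exactly how randomness shows up in a Markov category.

```python
from fractions import Fraction as F
from itertools import product

# Morphisms X -> Y in FinStoch as row-stochastic matrices: M[x][y] = P(y | x).


def compose(f, g):
    """g . f (first f, then g): (g . f)[x][z] = sum_y f[x][y] g[y][z]."""
    return [[sum(f[x][y] * g[y][z] for y in range(len(g))) for z in range(len(g[0]))]
            for x in range(len(f))]


def tensor(f, g):
    """f (x) g, indexing a pair (a, b) as a * (size of b's space) + b."""
    return [[f[x1][y1] * g[x2][y2] for y1, y2 in product(range(len(f[0])), range(len(g[0])))]
            for x1, x2 in product(range(len(f)), range(len(g)))]


def copy_map(n):
    """copy_X : X -> X (x) X, sending x to (x, x) deterministically."""
    return [[F(1) if (a, b) == (x, x) else F(0) for a, b in product(range(n), range(n))]
            for x in range(n)]


def del_map(n):
    """del_X : X -> I, where I is the one-point space."""
    return [[F(1)] for _ in range(n)]


noisy = [[F(1, 2), F(1, 2)], [F(0), F(1)]]  # a non-deterministic channel 2 -> 2
flip = [[F(0), F(1)], [F(1), F(0)]]          # a deterministic channel 2 -> 2

print(compose(noisy, del_map(2)) == del_map(2))                                # True
print(compose(noisy, copy_map(2)) == compose(copy_map(2), tensor(noisy, noisy)))  # False
print(compose(flip, copy_map(2)) == compose(copy_map(2), tensor(flip, flip)))     # True
```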

Next, some commonly used probabilistic monads in this context are listed in Table 7.

Table 7.

Summary of probabilistic monads in measurable spaces.

One of the most common ways to construct a Markov category is as a Kleisli category: one adds a commutative monad to a base category with finite products (the monad must also preserve the terminal object for the counit to be natural). A typical example is the Giry monad on $\mathbf{Meas}$. The Kleisli morphisms of the Giry monad on $\mathbf{Meas}$ (and related subcategories) are Markov kernels; therefore, its Kleisli category is the category $\mathbf{Stoch}$, which is an essential example of a Markov category.

Definition 16

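(Kleisli Category [64]). Given a monad $(T, \eta, \mu)$ on a category $\mathcal{C}$, the Kleisli category $\mathcal{C}_T$ associated with T is defined as follows:

- Objects: The objects of $\mathcal{C}_T$ are the same as the objects of $\mathcal{C}$.

- Morphisms: For objects $A, B$, the morphisms in $\mathcal{C}_T$ are given by
$$\mathcal{C}_T(A, B) = \mathcal{C}(A, TB),$$
where $\mathcal{C}(A, TB)$ denotes the set of morphisms in $\mathcal{C}$ from A to TB.

- Composition: For $f: A \to TB$ and $g: B \to TC$, their composition in $\mathcal{C}_T$ is defined as
$$g \circ_T f = \mu_C \circ Tg \circ f,$$
where Tg is the functorial action of T on g, and μ is the monad multiplication.

- Identity: For each object A, the identity morphism is given by the unit of the monad, $\eta_A: A \to TA$.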
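The Kleisli composition formula $g \circ_T f = \mu_C \circ Tg \circ f$ can be transcribed almost literally for the finite distribution monad. In the Python sketch below, which is an illustrative encoding rather than a library API, a finitely supported distribution is a list of (outcome, probability) pairs, so that distributions over distributions need no hashing; `fmap`, `mu`, and `unit` play the roles of T, μ, and η, and the channels `noisy_parity` and `toss` are hypothetical.

```python
from fractions import Fraction as F

# An element of D(X) is a finite formal sum, stored as a list of (x, p) pairs.


def unit(x):
    """eta_X : X -> D(X), the Dirac distribution."""
    return [(x, F(1))]


def fmap(h, dist):
    """D(h): apply a function to each outcome, keeping the weights."""
    return [(h(x), p) for x, p in dist]


def mu(dd):
    """Monad multiplication D(D(X)) -> D(X): flatten, multiplying weights."""
    return [(x, p * q) for inner, p in dd for x, q in inner]


def kleisli(f, g):
    """Kleisli composite mu . D(g) . f for f: A -> D(B) and g: B -> D(C)."""
    return lambda a: mu(fmap(g, f(a)))


def as_dict(dist):
    """Merge duplicate outcomes so that distributions can be compared."""
    out = {}
    for x, p in dist:
        out[x] = out.get(x, 0) + p
    return out


def noisy_parity(n):
    """Hypothetical channel int -> D({0, 1}): reports the parity of n, flipped w.p. 1/4."""
    return [(n % 2, F(3, 4)), (1 - n % 2, F(1, 4))]


def toss(bit):
    """Hypothetical channel {0, 1} -> D({H, T}): a biased coin if bit = 1, fair otherwise."""
    return [("H", F(2, 3)), ("T", F(1, 3))] if bit else [("H", F(1, 2)), ("T", F(1, 2))]


print(as_dict(kleisli(noisy_parity, toss)(3)))  # H with probability 5/8, T with 3/8
```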

To generalize, consider the finite distribution monad $\mathcal{D}$ on $\mathbf{Set}$, where
$$\mathcal{D}(X) = \Big\{ p: X \to [0,1] \;\Big|\; \mathrm{supp}(p) \text{ finite},\ \sum_{x \in X} p(x) = 1 \Big\}.$$

The corresponding Kleisli category (a monad structure added to a base category, with morphisms $f: X \to \mathcal{D}(Y)$) describes the probability distribution of one object conditioned on another and forms a Markov category. Hence, each such probability (distribution) monad yields a corresponding Markov category.

Next, we introduce the Bayesian inverse, which formalizes the posterior distribution in probability-based learning.

Definition 17

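(Bayesian Inverse (Posterior Distribution) [62]). Let $f: X \to Y$ be a Markov kernel from a measurable space $(X, \Sigma_X)$ to a measurable space $(Y, \Sigma_Y)$ with density (likelihood) $p(y \mid x)$, and let π be a prior probability measure on X. The Bayesian inverse or posterior distribution $f^{\dagger}_{\pi}: Y \to X$ is a Markov kernel defined for $y \in Y$ and measurable sets $A \in \Sigma_X$ by:

$$f^{\dagger}_{\pi}(A \mid y) = \frac{\int_A p(y \mid x)\, \pi(dx)}{\int_X p(y \mid x)\, \pi(dx)},$$

provided that $\int_X p(y \mid x)\, \pi(dx) > 0$.

Here:

- $p(y \mid x)$ represents the probability density or likelihood of y given x;

- $\int_X p(y \mid x)\, \pi(dx)$ is the marginal probability (density) of y under the prior π;

- $f^{\dagger}_{\pi}(\cdot \mid y)$ is a probability measure on $(X, \Sigma_X)$, representing the conditional distribution of x given y (the posterior distribution).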
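For finite X and Y, the integrals in Definition 17 become sums. The Python sketch below computes the Bayesian inverse of a likelihood kernel with respect to a prior for a hypothetical two-state diagnostic example, skipping observations with zero marginal probability as the definition requires. The function name and the numbers are assumptions for illustration.

```python
from fractions import Fraction as F


def bayesian_inverse(prior, lik):
    """Posterior kernel: post[y][x] = lik[x][y] prior[x] / sum_x' lik[x'][y] prior[x'].

    prior: {x: pi(x)}; lik: {x: {y: p(y | x)}}. Returns {y: {x: posterior}},
    only for observations y with positive marginal probability.
    """
    ys = {y for row in lik.values() for y in row}
    post = {}
    for y in ys:
        evidence = sum(prior[x] * lik[x].get(y, 0) for x in prior)
        if evidence > 0:  # the definition requires a positive marginal
            post[y] = {x: prior[x] * lik[x].get(y, 0) / evidence for x in prior}
    return post


prior = {"healthy": F(9, 10), "ill": F(1, 10)}
lik = {
    "healthy": {"pos": F(1, 20), "neg": F(19, 20)},
    "ill": {"pos": F(9, 10), "neg": F(1, 10)},
}
post = bayesian_inverse(prior, lik)
print(post["pos"]["ill"])  # 2/3
```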

Finally, the concept of entropy is introduced to measure the information (uncertainty) in probability distributions.

Definition 18

(Entropy [65]). The entropy of a random variable X quantifies the uncertainty or information content associated with its probability distribution. It is defined as follows:

- Discrete Case: If X is a discrete random variable with a probability mass function $p(x)$ defined on a finite set $\mathcal{X}$, the entropy is given by
$$H(X) = -\sum_{x \in \mathcal{X}} p(x) \log p(x),$$
with the convention $0 \log 0 = 0$, where the base of the logarithm determines the unit of entropy (bits for base 2, nats for base e).

- Continuous Case: If X is a continuous random variable with a probability density function $f(x)$ defined on a support set $\mathcal{S}$, the entropy $h(X)$, also referred to as differential entropy, is given by
$$h(X) = -\int_{\mathcal{S}} f(x) \log f(x)\, dx.$$

The discrete entropy is always non-negative, whereas the differential entropy can take negative values because densities can exceed one. In both cases, $H(X)$ (respectively $h(X)$) measures the average uncertainty or surprise in the random variable X.
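The following Python sketch evaluates both definitions in base 2 (bits). The differential entropy is approximated by a midpoint Riemann sum, which is an assumption of this sketch rather than part of the definition; the uniform density on [0, 0.5] equals 2, so its differential entropy is $\log_2 0.5 = -1$ bit, illustrating the negativity noted above.

```python
import math


def entropy(pmf, base=2.0):
    """Shannon entropy -sum p log p of a finite pmf (terms with p = 0 contribute 0)."""
    return -sum(p * math.log(p, base) for p in pmf if p > 0)


def differential_entropy(pdf, lo, hi, steps=10_000, base=2.0):
    """Midpoint-rule approximation of -integral of f log f over [lo, hi]."""
    h = (hi - lo) / steps
    total = 0.0
    for i in range(steps):
        f = pdf(lo + (i + 0.5) * h)
        if f > 0:
            total -= f * math.log(f, base) * h
    return total


def uniform_half(x):
    """Density of the uniform distribution on [0, 0.5]."""
    return 2.0


print(entropy([0.5, 0.5]))                               # 1.0 (one bit for a fair coin)
print(differential_entropy(uniform_half, 0.0, 0.5))      # approximately -1.0
```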

3.3. Framework of Categorical Bayesian Learning

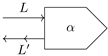

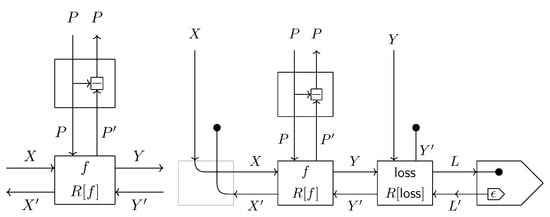

In the work of Kamiya et al. [31], the authors introduced a categorical framework for Bayesian inference and learning. Their methodology is mainly based on the ideas of [4,19], with concepts from Markov categories introduced to formalize the entire framework. The key ideas can be summarized in two points:

- Bayesian inference is combined with the backpropagation structure of [4], with the Bayesian inverse playing the role of the backward pass.

- The learning process is formalized as a functor, analogous to the gradient-based learning functor of [4].

As a result, they found Bayesian learning to be the simplest case of the learning paradigm described in [4]. The authors also constructed a categorical formulation for batch Bayesian updating and sequential Bayesian updating and verified the consistency of these two in a special case.

3.3.1. Probability Model

The basic idea of their work is to model the relationship between two random variables x and y using conditional probabilities $P(y \mid x)$. Unlike gradient-based learning methods, Bayesian machine learning updates the prior distribution $P(\theta)$ on the parameters θ using Bayes' theorem. Given a training pair $(x, y)$, the posterior distribution is defined by the following formula, up to a normalization constant:
$$P(\theta \mid x, y) \propto P(y \mid x, \theta)\, P(\theta).$$

This approach is fundamental to Bayesian machine learning, as it leverages Bayes’ theorem to update parameter distributions rather than settling on fixed values. By focusing on these distributions instead of fixed estimates, the Bayesian framework enhances its ability to manage data uncertainty, thereby improving model generalization.
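The consistency between batch and sequential Bayesian updating mentioned above can be checked directly in a toy setting. The Python sketch below assumes, purely for illustration, a coin with unknown bias θ restricted to a finite grid, a uniform prior, and no input variable x; it updates once on the whole dataset and once observation by observation, and with exact arithmetic the two posteriors coincide.

```python
from fractions import Fraction as F

# Hypothetical discrete grid for a coin's bias theta, with a uniform prior.
thetas = [F(k, 10) for k in range(1, 10)]
prior = {t: F(1, len(thetas)) for t in thetas}


def likelihood(y: int, theta: F) -> F:
    """Bernoulli likelihood p(y | theta) for one observation y in {0, 1}."""
    return theta if y == 1 else 1 - theta


def normalize(weights):
    z = sum(weights.values())
    return {t: w / z for t, w in weights.items()}


def batch_update(prior, data):
    """p(theta | D) proportional to prod_i p(y_i | theta) p(theta), normalized once."""
    weights = {}
    for t, p in prior.items():
        for y in data:
            p = p * likelihood(y, t)
        weights[t] = p
    return normalize(weights)


def sequential_update(prior, data):
    """Each posterior becomes the prior for the next observation."""
    post = dict(prior)
    for y in data:
        post = normalize({t: p * likelihood(y, t) for t, p in post.items()})
    return post


data = [1, 1, 0, 1]
post = batch_update(prior, data)
print(post == sequential_update(prior, data), max(post, key=post.get))  # True 7/10
```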

The model of [4], a function f that takes inputs and parameters to outputs, is adapted to the probabilistic setting. When the input data and parameters are distributions rather than points, combining them in parallel and fusing them becomes considerably more complicated. Therefore, the following concepts (joint distributions, disintegrations, and conditional distributions) become necessary.

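- A morphism $p: I \to X$ in a Markov category, called a state, can be viewed as a probability distribution on X. A morphism $f: X \to Y$ is called a channel.

- Given a pair $p: I \to X$ and $f: X \to Y$, a state on $X \otimes Y$ can be defined as the composite
$$(\mathrm{id}_X \otimes f) \circ \mathrm{copy}_X \circ p: I \to X \otimes Y,$$
referred to as the jointification of f and p.

- Let $\psi: I \to X \otimes Y$ be a joint state. A disintegration of ψ consists of a channel $f: X \to Y$ and a state $p: I \to X$ whose jointification is ψ, i.e., $\psi = (\mathrm{id}_X \otimes f) \circ \mathrm{copy}_X \circ p$. If every joint state in a category admits a disintegration, then the category is said to admit conditional distributions.

- Let $\mathcal{C}$ be a Markov category. If for every morphism $f: A \to X \otimes Y$ there exists a morphism $f_{|X}: X \otimes A \to Y$ such that
$$f = (\mathrm{id}_X \otimes f_{|X}) \circ (\mathrm{copy}_X \otimes \mathrm{id}_A) \circ (f_X \otimes \mathrm{id}_A) \circ \mathrm{copy}_A,$$
where $f_X = (\mathrm{id}_X \otimes \mathrm{del}_Y) \circ f$ is the marginal of f on X, then $\mathcal{C}$ is said to have conditional distributions (i.e., X can be factored out as a premise for Y).

- Equivalence of Channels: Let $p: I \to X$ be a state on the object X, and let $f, g: X \to Y$ be morphisms in $\mathcal{C}$. Then f is said to be p-almost everywhere equal to g if their jointifications with p coincide: $(\mathrm{id}_X \otimes f) \circ \mathrm{copy}_X \circ p = (\mathrm{id}_X \otimes g) \circ \mathrm{copy}_X \circ p$. If $\psi: I \to X \otimes Y$ is a state with marginal p on X, and $f, g: X \to Y$ are channels such that $(f, p)$ and $(g, p)$ are both disintegrations of ψ, then f is p-almost everywhere equal to g.

- Bayesian Inverse: Let $p: I \to X$ be a state on X and $f: X \to Y$ a channel. A Bayesian inverse of f with respect to p is a channel $f^{\dagger}_p: Y \to X$ such that
$$(\mathrm{id}_X \otimes f) \circ \mathrm{copy}_X \circ p = (f^{\dagger}_p \otimes \mathrm{id}_Y) \circ \mathrm{copy}_Y \circ f \circ p.$$
If a Bayesian inverse exists for every state p and channel f, then $\mathcal{C}$ is said to support Bayesian inverses. Equivalently, the Bayesian inverse is obtained by disintegrating the joint distribution of p and f with respect to its marginal $f \circ p$ on Y. The Bayesian inverse is not necessarily unique. However, if g and g' are both Bayesian inverses of f with respect to p, then g is $(f \circ p)$-almost everywhere equal to g'.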

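As an example, in $\mathbf{FinStoch}$, a joint state $\psi: I \to X \otimes Y$ corresponds to a probability distribution $\psi(x, y)$ on $X \times Y$. The disintegration of ψ is given by the related conditional distribution and the state $\psi_X$ obtained by marginalizing y out of ψ, i.e., $\psi_X(x) = \sum_{y \in Y} \psi(x, y)$. Define the conditional distribution $f: X \to Y$ such that, if $\psi_X(x)$ is non-zero, then
$$f(y \mid x) = \frac{\psi(x, y)}{\psi_X(x)}.$$

If $\psi_X(x) = 0$, then define $f(\cdot \mid x)$ as an arbitrary distribution on Y. This can also be discussed in the subcategory $\mathbf{BorelStoch}$ of $\mathbf{Stoch}$, whose objects are standard Borel spaces.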
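The non-uniqueness of disintegrations can be seen in a small finite example. In the Python sketch below (hypothetical numbers), the point x = 2 has zero marginal mass, so its conditional row may be chosen arbitrarily; two different choices give different channels that nevertheless rebuild the same joint state, i.e., they are equal almost everywhere with respect to the marginal. The helper names are assumptions for illustration.

```python
from fractions import Fraction as F

# Joint state psi on X (x) Y as {(x, y): probability}; x = 2 has zero marginal mass.
psi = {(0, "a"): F(1, 4), (0, "b"): F(1, 4), (1, "a"): F(1, 2)}
X, Y = [0, 1, 2], ["a", "b"]


def disintegrate(psi, fallback):
    """Return (marginal on X, conditional channel X -> Y). Rows with zero
    marginal mass receive the arbitrary distribution `fallback`."""
    marg = {x: sum(psi.get((x, y), 0) for y in Y) for x in X}
    cond = {
        x: ({y: psi.get((x, y), 0) / marg[x] for y in Y} if marg[x] > 0 else dict(fallback))
        for x in X
    }
    return marg, cond


def jointify(marg, cond):
    """Rebuild the joint state (id (x) f) . copy . p, keeping nonzero entries."""
    return {(x, y): marg[x] * cond[x][y] for x in X for y in Y if marg[x] * cond[x][y] != 0}


m1, f1 = disintegrate(psi, {"a": F(1), "b": F(0)})
m2, f2 = disintegrate(psi, {"a": F(0), "b": F(1)})
print(f1[2] == f2[2], jointify(m1, f1) == psi == jointify(m2, f2))  # False True
```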

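However, Bayesian inverses are determined only up to almost-everywhere equality, so their composition is not strictly well defined on individual channels. To obtain a strict composition (and thereby define a functor similar to the gradient learning functors), one works instead in the category $\mathbf{ProbStoch}(\mathcal{C})$ (abbreviated to $\mathbf{PS}(\mathcal{C})$ in some references), whose morphisms are almost-everywhere equivalence classes of state-preserving channels. This is justified by the following propositions.

- If a category admits conditional probabilities, it is causal. However, the converse does not hold.

- The categories $\mathbf{FinStoch}$ and $\mathbf{BorelStoch}$ are both causal.

- If $\mathcal{C}$ is causal, then $\mathbf{ProbStoch}(\mathcal{C})$ (also written $\mathbf{PS}(\mathcal{C})$) is symmetric monoidal.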

3.3.2. Introduction of Functor

Parameterized functions have a natural expression in the categorification of machine learning models: the $\mathbf{Para}$ construction. By modifying the $\mathbf{Para}$ construction used for gradient learning algorithms, the objective here is to learn conditional distributions between random variables, that is, morphisms in a Markov category satisfying certain constraints. In this context, a parameterized function with parameter θ is used to model the conditional distribution $P(y \mid x)$, and the learning algorithm updates θ using a given training set. In a Markov category, the type of the parameter need not be the same as the type of the variable. By leveraging actegories (categories equipped with an action of a monoidal category), parameters are considered to act on the model.

Definition 19

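(Actegory [66]). Let $(\mathcal{M}, \otimes, J)$ be a monoidal category, and let $\mathcal{C}$ be a category.

- $\mathcal{C}$ is called an $\mathcal{M}$-actegory if there exists a strong monoidal functor $\bullet: \mathcal{M} \to \mathrm{End}(\mathcal{C})$, where $\mathrm{End}(\mathcal{C})$ is the category of endofunctors on $\mathcal{C}$, with composition as the monoidal operation. For $M \in \mathcal{M}$ and $C \in \mathcal{C}$, the action is denoted by $M \bullet C$.

- $\mathcal{C}$ is called a right $\mathcal{M}$-actegory if it is an $\mathcal{M}$-actegory and is equipped with a natural isomorphism
$$\kappa_{M, C, D}: M \bullet (C \otimes D) \xrightarrow{\;\sim\;} C \otimes (M \bullet D),$$
where $(\mathcal{C}, \otimes, I)$ is a monoidal category, and κ satisfies the coherence conditions.

- If $\mathcal{C}$ is a right $\mathcal{M}$-actegory, the following natural isomorphisms must exist:
$$\alpha_{M, C, D}: M \bullet (C \otimes D) \xrightarrow{\;\sim\;} (M \bullet C) \otimes D,$$
called the mixed associator, and
$$\iota_{M, N, C, D}: (M \otimes N) \bullet (C \otimes D) \xrightarrow{\;\sim\;} (M \bullet C) \otimes (N \bullet D),$$
called the mixed interchanger.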

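The structure of $\mathbf{Para}$ is then adjusted as follows. Let $\mathcal{M}$ be a symmetric monoidal category and $\mathcal{C}$ be an $\mathcal{M}$-actegory. Then $\mathbf{Para}_{\mathcal{M}}(\mathcal{C})$ becomes a 2-category, defined as follows:

- Objects: The objects of $\mathcal{C}$.

- 1-morphism: A 1-morphism $X \to Y$ in $\mathbf{Para}_{\mathcal{M}}(\mathcal{C})$ consists of a pair $(P, f)$, where $P \in \mathcal{M}$ and $f: P \bullet X \to Y$ is a morphism in $\mathcal{C}$.

- Composition of 1-morphisms: Let $(P, f): X \to Y$ and $(Q, g): Y \to Z$. The composition is the pair $(Q \otimes P, h)$, where h is the morphism in $\mathcal{C}$ given by
$$h: (Q \otimes P) \bullet X \cong Q \bullet (P \bullet X) \xrightarrow{\;Q \bullet f\;} Q \bullet Y \xrightarrow{\;g\;} Z.$$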

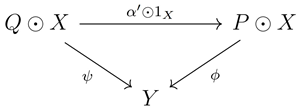

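- 2-morphism: Let $(P, f), (P', f'): X \to Y$. A 2-morphism $(P, f) \Rightarrow (P', f')$ is given by a morphism $r: P' \to P$ in $\mathcal{M}$ such that $f' = f \circ (r \bullet X)$.

- Identity morphisms and composition: The identity 2-morphisms and the composition of 2-morphisms are inherited from the identity morphisms and composition in $\mathcal{M}$.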
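To see how composition of parameterized morphisms (the 2-category described above, often written $\mathbf{Para}$) accumulates parameters, the following Python sketch takes the simplest assumed case, in which $(\mathbf{Set}, \times)$ acts on itself by $P \bullet X = P \times X$. A 1-morphism is then a function of a parameter and an input, composites carry paired parameters $(q, p) \in Q \times P$, and reparametrization precomposes the parameter with a map r. The class `ParaMap` and the example layers are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class ParaMap:
    """A 1-morphism (P, f): X -> Y for the action P . X = P x X of (Set, x) on
    itself. `param_name` only labels the parameter object P."""
    param_name: str
    f: Callable[[Any, Any], Any]  # f(p, x) -> y

    def then(self, other: "ParaMap") -> "ParaMap":
        """Composite (Q x P, g . (Q . f)): parameters pair up as (q, p)."""
        def h(qp, x):
            q, p = qp
            return other.f(q, self.f(p, x))
        return ParaMap(f"{other.param_name} x {self.param_name}", h)

    def reparametrize(self, r: Callable[[Any], Any], new_name: str) -> "ParaMap":
        """Precompose the parameter with r: P' -> P, giving (P', f . (r . X));
        r is the reparametrization 2-morphism relating it to (P, f)."""
        return ParaMap(new_name, lambda p2, x: self.f(r(p2), x))


# Hypothetical layers: affine map with parameter (w, b), then scaling by s.
affine = ParaMap("R^2", lambda wb, x: wb[0] * x + wb[1])
scale = ParaMap("R", lambda s, y: s * y)
model = affine.then(scale)
print(model.param_name, model.f((3.0, (2.0, 1.0)), 5.0))  # R x R^2 33.0
```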

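$\mathbf{Para}_{\mathcal{M}}$ defines a pseudomonad on the category of $\mathcal{M}$-actegories (its multiplication $\mathbf{Para}(\mathbf{Para}(\mathcal{C})) \to \mathbf{Para}(\mathcal{C})$ merges nested parameters into a single one, giving a multi-parameter setting). In particular, a functor $F: \mathcal{C} \to \mathcal{D}$ between $\mathcal{M}$-actegories that is compatible with the actions induces a related functor
$$\mathbf{Para}(F): \mathbf{Para}_{\mathcal{M}}(\mathcal{C}) \to \mathbf{Para}_{\mathcal{M}}(\mathcal{D}).$$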

3.3.3. The Final Combination: Functor

The ultimate goal is to synthesize the entire Bayesian learning process into a single functor whose construction captures the characteristics of Bayesian learning. Beyond the parameterization construction above, a reverse feedback mechanism (lenses) needs to be incorporated. To retain the actegory structure, Grothendieck lenses are used in the construction.


Definition 20

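(Grothendieck Lenses [64]). Let $F: \mathcal{C}^{\mathrm{op}} \to \mathbf{Cat}$ be a (pseudo)functor, and let $\int F$ denote the total category arising from the Grothendieck construction of F. The category $\mathbf{Lens}_F$ of Grothendieck lenses (or category of F-lenses) is defined as follows:

- Objects: Pairs $(C, X)$, where $C \in \mathcal{C}$ and $X \in F(C)$.

- Morphisms: A morphism $(C, X) \to (C', X')$ consists of a pair $(f, f^{\sharp})$, where:

  1. $f: C \to C'$ is a morphism in $\mathcal{C}$;
  2. $f^{\sharp}: F(f)(X') \to X$ is a morphism in $F(C)$.

- Hom-Sets: The Hom-set is given by the dependent sum
$$\mathbf{Lens}_F\big((C, X), (C', X')\big) = \sum_{f: C \to C'} F(C)\big(F(f)(X'), X\big).$$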

Let $\mathcal{C}$ be a Markov category with conditional probabilities (which guarantees that Bayesian inverses exist in $\mathcal{C}$). Bayesian inverses are, in general, defined only up to almost-everywhere equality. In the category $\mathbf{ProbStoch}(\mathcal{C})$, where this equivalence is built into the morphisms, Bayesian inversion defines a symmetric monoidal dagger functor.

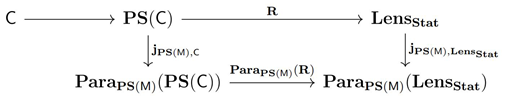

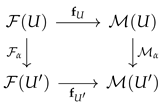

The gradient learning functor of [4] is defined based on a functor from $\mathcal{C}$ to its category of lenses, where $\mathcal{C}$ is a Cartesian reverse differential category. In the context of Bayesian learning, this structure is reflected through the construction of Bayesian inverses and generalized lenses. Therefore, the goal is to define an analogous functor into a category of Grothendieck lenses associated with a functor into $\mathbf{Cat}$, the category of small categories.

The entire construction is as follows.

- Define the functor : given , let .

- Define as the lens corresponding to the functor with objects and morphisms as follows.

- Objects: For , where and .

- Morphisms: A morphism is given by a morphism in and a morphism in .

- Combining with reverse derivatives, define the functor : given , let . If is a morphism in , thenis defined as the pair , where is the Bayesian inverse of f with respect to the state on X.

- Let and be Markov categories, with being causal. Assume is a symmetric monoidal -actegory consistent with . Then is a symmetric monoidal category. The categories and are -actegories. is a functor that, when applied to , yields:If is a symmetric monoidal category, then a -actegory allows a canonical functor , where . The functor is the unit of the pseudomonad defined by . Thus, we obtain the following diagram:

- Define the functor .

Unlike the gradient learning functor, this functor does not include update or shift components. This is because Bayesian learning is structurally simpler: here, parameter updating corresponds to obtaining the posterior distribution from the prior and the likelihood, rather than to optimizing a loss function.

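The method of using the posterior distribution for prediction can also be formalized within the category: composing the parameterized channel $f: \Theta \otimes X \to Y$ with the posterior state $\pi': I \to \Theta$ gives the predictive channel
$$f \circ (\pi' \otimes \mathrm{id}_X): X \to Y,$$
which, in the discrete case, is the posterior predictive distribution $p(y \mid x, \mathcal{D}) = \sum_{\theta} p(y \mid x, \theta)\, p(\theta \mid \mathcal{D})$.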
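In the finite case, using the posterior for prediction amounts to a weighted sum, the posterior predictive distribution. The Python sketch below assumes a hypothetical posterior over three threshold parameters and a hypothetical channel in which y = 1 is likely when the input exceeds the threshold; composing the posterior state with the channel yields the predictive distribution for a new input.

```python
from fractions import Fraction as F


def predictive(posterior, channel, x):
    """Posterior predictive p(y | x, D) = sum_theta p(y | x, theta) p(theta | D)."""
    out = {}
    for theta, w in posterior.items():
        for y, q in channel(theta, x).items():
            out[y] = out.get(y, 0) + w * q
    return out


def threshold_channel(theta, x):
    """Hypothetical likelihood: y = 1 w.p. 9/10 if x exceeds theta, else 1/10."""
    p1 = F(9, 10) if x > theta else F(1, 10)
    return {1: p1, 0: 1 - p1}


posterior = {1: F(1, 4), 2: F(1, 2), 3: F(1, 4)}
pred = predictive(posterior, threshold_channel, 2.5)
print(pred[1])  # 7/10
```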

3.4. Other Related Research

3.4.1. Categorical Probability Framework and Bayesian Inference

Fritz et al. [53] developed a categorical framework for probability theory, which underpins many modern approaches in probability-based machine learning. By utilizing concepts such as the Giry monad and Markov categories, this framework bridges classical probability theory and categorical structures. Similar models, such as those in [31,43,59], further refine this connection, emphasizing coherence and adaptability.

A primary application of this framework is Bayesian inference, where log-linear models are used to express relationships and conditional independencies in multivariate categorical data. Categorical-from-binary models enable efficient Bayesian analysis of generalized linear models, particularly when there are many categories. These models simplify computations by reducing the complexity of encoding and manipulating the data. Bayesian methods, including conjugate priors, asymmetric hyperparameters, and MCMC techniques, fit into this setting and support efficient inference while remaining compatible with categorical structures. For instance, MCMC procedures can be interpreted as morphisms within Markov categories, matching the stochastic transitions inherent in probabilistic systems.
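In the finite case, reading an MCMC procedure as a morphism means viewing its transition kernel as a stochastic map K: X → X. The target distribution π is stationary exactly when composing the state π with K returns π. The following is a minimal sketch, assuming a finite state space with at least two states and a uniform proposal over the other states. The Metropolis rule shown is one standard choice, and the function name is hypothetical.

```python
from fractions import Fraction


def metropolis_kernel(target):
    """Metropolis transition kernel on a finite state space (at least two states).

    Proposals are uniform over the other states and are accepted with
    probability min(1, target[y] / target[x]). Returns K[x][y] = P(x -> y).
    """
    states = list(target)
    n = len(states)
    kernel = {}
    for x in states:
        row = {}
        for y in states:
            if y != x:
                acceptance = min(Fraction(1), target[y] / target[x])
                row[y] = Fraction(1, n - 1) * acceptance
        row[x] = 1 - sum(row.values())  # rejected proposals keep the chain at x
        kernel[x] = row
    return kernel
```

Because the proposal is symmetric, this kernel satisfies detailed balance with respect to the target, so the target is stationary. The test below checks this exactly.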

Probability measures within this framework are viewed as weakly averaging affine measurable functionals that preserve limits, forming a submonad of the double dualization monad on the measurable space category . This submonad is isomorphic to the Giry monad [54], so the functional description recovers classical measure-theoretic probability. The Giry monad thus serves as a formal bridge for describing and manipulating probability measures.
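On a finite set, the correspondence between measures and functionals is easy to see. A distribution defines its expectation functional on [0, 1]-valued functions. This functional is affine and weakly averaging, meaning it sends each constant function to that constant. Evaluating it on indicator functions recovers the distribution. The following is a minimal sketch under the assumption of a finite set and exact rational arithmetic. It does not model measurability or the limit-preservation condition, which only matter for infinite spaces. The function names are hypothetical.

```python
from fractions import Fraction


def expectation_functional(dist):
    """Map a finite distribution to the functional f -> sum_x f(x) * dist[x]."""
    return lambda f: sum(p * f(x) for x, p in dist.items())


def recover_distribution(functional, points):
    """Read the distribution back off by evaluating the functional on indicator functions."""
    return {
        x: functional(lambda s, x=x: Fraction(1) if s == x else Fraction(0))
        for x in points
    }
```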

Beyond classical probability, this framework accommodates generalized models, including fuzzy probability theories. By modeling probability measures using enriched categories and submonads, it provides a flexible approach to handle uncertainty across diverse contexts. This adaptability positions the framework for integration with future models that deal with imprecision or ambiguity in data.

3.4.2. Generalized Models and Probabilistic Programming

Baez et al. [67] first discussed the connection between categorical perspectives and statistical field theories, including quantum mechanics and quantum field theory in their Euclidean form. They identified a similarity to nonparametric Bayesian approaches and explored the construction of probability theory using a monad. This construction aligns with the Bayesian perspective of distributions over distributions, such as Dirichlet processes. In [68], the authors highlighted that in statistical learning theory, the richness of the hypothesis space is determined not by the number of parameters but by the VC-dimension, which measures falsifiability. Thus, the introduction of logical classification, such as topos structure, is natural in this context.

Further research emphasizes the role of syntax in machine learning, particularly in fields like natural language processing. Syntax ensures the preservation of structural and relational properties during data encoding. Categorical methods, through a functorial framework, offer robustness in both maintaining structural consistency and clarifying data relationship propagation [69,70].

To make such structure comparable across datasets, [70] introduced a classification pseudodistance, derived from the softmax function, that turns datasets into generalized metric spaces. This approach quantifies structural differences and facilitates syntax-based comparisons while preserving relational information.

At the application level, Bayesian synthesis advances probabilistic programming by efficiently managing uncertainty in complex statistical models. Central to this approach are Bayesian networks, which represent dependency structures using directed acyclic graphs. They simplify joint distribution representation and enable efficient inference by extracting conditional independence relationships, reducing computational complexity. Additionally, Markovian stochastic processes in probabilistic graphical models support scalable inference [59].
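The gain from a directed acyclic graph is that the joint distribution factorizes into one conditional table per node. Queries can then be answered from these small tables. The following is a minimal sketch using the textbook rain/sprinkler/wet-grass network. The graph, the probability tables, and the function names are all illustrative assumptions. Inference is done by plain enumeration rather than by any of the scalable methods cited above.

```python
from fractions import Fraction
from itertools import product

# DAG: Rain -> Sprinkler, and {Rain, Sprinkler} -> Wet. Numbers are illustrative.
P_RAIN = {1: Fraction(1, 5), 0: Fraction(4, 5)}
P_SPRINKLER_ON = {1: Fraction(1, 100), 0: Fraction(2, 5)}  # P(S=1 | R=r)
P_WET = {(0, 0): Fraction(0), (0, 1): Fraction(4, 5),      # P(W=1 | R=r, S=s)
         (1, 0): Fraction(4, 5), (1, 1): Fraction(99, 100)}


def bern(p_one, value):
    """Probability of a binary value given P(value = 1)."""
    return p_one if value == 1 else 1 - p_one


def joint(r, s, w):
    """Chain-rule factorization along the DAG: P(r) * P(s | r) * P(w | r, s)."""
    return P_RAIN[r] * bern(P_SPRINKLER_ON[r], s) * bern(P_WET[(r, s)], w)


def prob_rain_given_wet(w=1):
    """P(R=1 | W=w) by enumerating the unobserved variables."""
    numerator = sum(joint(1, s, w) for s in (0, 1))
    denominator = sum(joint(r, s, w) for r, s in product((0, 1), repeat=2))
    return numerator / denominator
```

The factorized joint needs 1 + 2 + 4 parameters instead of the 7 free entries of an unrestricted joint table over three binary variables. The saving grows quickly with the number of variables when each node has few parents.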

Another key component is probabilistic couplings, based on Strassen’s theorem [71], which enable coupling between distributions without requiring a bijection between sampling spaces. This enhances the expressivity of probabilistic programs. Lastly, Bayesian lenses simplify Bayesian updating, analogous to automatic differentiation in numerical computation, offering flexibility and adaptability in probabilistic programming languages [60].
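A familiar consequence of Strassen's theorem is that p is stochastically dominated by q exactly when there is a coupling of p and q supported on pairs with x ≤ y. On a finite, totally ordered set, such a coupling can be built by matching quantiles. The following is a minimal sketch of that special case only. It assumes exact `Fraction` probabilities so that the remaining masses reach zero exactly, and the function name is hypothetical.

```python
from fractions import Fraction


def quantile_coupling(p, q):
    """Couple two distributions on a common finite ordered set by matching quantiles.

    Returns joint[(x, y)] with marginals p and q. If p is stochastically
    dominated by q, every pair with positive mass satisfies x <= y.
    """
    xs = sorted(k for k, v in p.items() if v > 0)
    ys = sorted(k for k, v in q.items() if v > 0)
    i = j = 0
    a, b = p[xs[0]], q[ys[0]]  # mass of the current x and y not yet assigned
    joint = {}
    while i < len(xs) and j < len(ys):
        m = min(a, b)
        if m > 0:
            joint[(xs[i], ys[j])] = joint.get((xs[i], ys[j]), 0) + m
        a -= m
        b -= m
        if a == 0:  # current x exhausted: move to the next x
            i += 1
            if i < len(xs):
                a = p[xs[i]]
        if b == 0:  # current y exhausted: move to the next y
            j += 1
            if j < len(ys):
                b = q[ys[j]]
    return joint
```

No bijection between the two sample spaces is needed. The coupling splits mass freely between points, which is the flexibility the paragraph above refers to.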

3.4.3. Applications and Advanced Techniques in Categorical Structures

The synthetic approach to probability, focusing on relationships between probabilistic objects rather than their concrete definitions, offers a robust foundation for probability and statistics within the framework of Markov categories. By abstracting away from specific representations, it emphasizes the compositional and structural properties of probabilistic systems, facilitating the development of fundamental results, such as zero-one laws, that describe extreme probabilistic behaviors [52]. This framework also enhances probabilistic programming, particularly in languages like Stan and WebPPL, through Bayesian synthesis. Bayesian synthesis supports both soft constraints—allowing flexible regularization or partial observations—and exact conditioning, ensuring strict conformity to observed data. This dual capability enables precise and adaptable statistical modeling, with semantic analyses in Gaussian probabilistic languages demonstrating the operation of Bayesian synthesis within the structured framework of Markov categories.
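The Gaussian setting gives a compact illustration of the difference between a soft constraint and exact conditioning. The following is a minimal sketch, assuming a jointly Gaussian vector and a single linear observation. The function name is hypothetical, and this is plain NumPy linear algebra, not the semantics of Stan, WebPPL, or any Gaussian probabilistic language. With positive observation noise, the update is an ordinary (Kalman-style) Bayesian update. With zero noise, it conditions exactly on the constraint.

```python
import numpy as np


def condition_on_linear_observation(mean, cov, a, b, noise_var=0.0):
    """Condition z ~ N(mean, cov) on observing a @ z + eps = b, eps ~ N(0, noise_var).

    noise_var > 0 models a soft constraint (a noisy observation);
    noise_var == 0 is exact conditioning on the hyperplane a @ z == b.
    Assumes a @ cov @ a + noise_var > 0.
    """
    mean = np.asarray(mean, dtype=float)
    cov = np.asarray(cov, dtype=float)
    a = np.asarray(a, dtype=float)
    s = a @ cov @ a + noise_var      # variance of the observed scalar
    gain = cov @ a / s               # shift of each coordinate per unit of residual
    new_mean = mean + gain * (b - a @ mean)
    new_cov = cov - np.outer(gain, a @ cov)
    return new_mean, new_cov
```

Take two independent standard normals. Conditioning exactly on x − y = 0 yields covariance [[0.5, 0.5], [0.5, 0.5]], so the variables become perfectly correlated with variance 1/2. Treating the same constraint as an observation with unit noise gives the softer [[2/3, 1/3], [1/3, 2/3]].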

Shiebler et al. [1] offer a categorical perspective on causality, covering key components such as causal independence, conditionals, and intervention effects. This perspective leverages the abstraction and compositionality of category theory to model and infer causal relationships. In the Bayesian framework for causal inference, the potential outcomes approach addresses causal estimands, identification assumptions, Bayesian estimation, and sensitivity analysis. Tools such as propensity scores, identifiability techniques, and prior selection are integral to robust causal modeling. A formal graphical framework, grounded in monoidal categories and causal theories, introduces algebraic structures that enhance our understanding of causal relationships. Monoidal categories represent independent or parallel processes using tensor products, while causal theories formalize their interactions. Markov categories, in particular, offer a compositional framework for reasoning about random mappings and noisy processing units [72,73]. Objects in Markov categories represent spaces of possible states, and morphisms act as channels that may introduce noise, enabling precise interpretations of causal relationships [1].
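The difference between conditioning and intervention can be seen in a three-variable example with a confounder Z that influences both X and Y. Conditioning on X = x reweights Z by Bayes' rule. The intervention do(X = x) instead replaces the mechanism Z → X by a constant and leaves Z at its prior. In string-diagram terms, the box producing X is swapped for a fixed state. The following is a minimal sketch with illustrative probability tables and hypothetical function names.

```python
from fractions import Fraction

# Causal graph: Z -> X, Z -> Y, X -> Y. Numbers are illustrative.
P_Z = {0: Fraction(1, 2), 1: Fraction(1, 2)}
P_X1_GIVEN_Z = {0: Fraction(1, 5), 1: Fraction(4, 5)}


def p_y1(x, z):
    """P(Y=1 | X=x, Z=z)."""
    return Fraction(x + 2 * z, 4)


def bern(p_one, value):
    return p_one if value == 1 else 1 - p_one


def p_y1_given_x(x):
    """Observational P(Y=1 | X=x): Z is reweighted by how likely it makes X=x."""
    num = sum(P_Z[z] * bern(P_X1_GIVEN_Z[z], x) * p_y1(x, z) for z in (0, 1))
    den = sum(P_Z[z] * bern(P_X1_GIVEN_Z[z], x) for z in (0, 1))
    return num / den


def p_y1_do_x(x):
    """Interventional P(Y=1 | do(X=x)): the Z -> X mechanism is cut, Z keeps its prior."""
    return sum(P_Z[z] * p_y1(x, z) for z in (0, 1))
```

Here P(Y=1 | X=1) = 13/20 but P(Y=1 | do(X=1)) = 1/2. The gap is exactly the confounding through Z.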

String diagrams serve as powerful tools for visualizing and analyzing causal models. These diagrams align with morphisms in Markov categories when decomposed according to the specifications of the causal model. For instance, the decomposition of a string diagram might represent a causal system where variables influence each other through noisy channels, reflecting probabilistic dependencies. This compatibility highlights the deep connection between causal structures and probabilistic relationships [74]. Key concepts, such as second-order stochastic dominance and the Blackwell-Sherman-Stein Theorem, further refine our understanding of causal inference [53]. In this categorical framework, causal models abstractly represent causal independence, conditionals, and intervention effects, moving beyond model-specific methods like structural equation models or causal Bayesian networks. Instead, causal models are formalized as probabilistic interpretations of string diagrams, with equivalence established through natural transformations between functors, known as abstractions and equivalences. This abstraction enables a more general and unified understanding of causality, providing a principled foundation for reasoning about causal relationships and intervention effects.

In [57], the integration of categorical structures such as symmetric monoidal categories and operads with amortized variational inference is explored within frameworks like DisCoPyro. Symmetric monoidal categories enable modeling of compositional processes, while operads formalize hierarchical and modular structures. These structures align naturally with the iterative and modular nature of variational inference frameworks. This integration demonstrates how Markov categories bridge abstract mathematical foundations and practical machine learning applications, enhancing both the efficiency and expressiveness of Bayesian models. By leveraging categorical methods, it is possible to construct models for parametric and nonparametric Bayesian reasoning on function spaces, such as Gaussian processes, and to define inference maps analytically within symmetric monoidal weakly closed categories. These developments highlight the potential of Markov categories to provide a unified and robust foundation for supervised learning and general stochastic processes.
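Gaussian processes are the standard example of nonparametric Bayesian reasoning on a function space where the inference map has a closed form. The following is a minimal sketch of exact GP regression, assuming a zero prior mean, a squared-exponential (RBF) kernel, and Gaussian observation noise. It is the usual textbook computation, not the categorical construction of [57], and the function names are hypothetical.

```python
import numpy as np


def rbf(a, b, lengthscale=1.0):
    """Squared-exponential kernel matrix between 1-D input arrays a and b."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / lengthscale) ** 2)


def gp_posterior(x_train, y_train, x_test, noise_var=1e-2, lengthscale=1.0):
    """Posterior mean and covariance of f(x_test) given noisy observations y_train."""
    k_tt = rbf(x_train, x_train, lengthscale) + noise_var * np.eye(len(x_train))
    k_st = rbf(x_test, x_train, lengthscale)
    k_ss = rbf(x_test, x_test, lengthscale)
    chol = np.linalg.cholesky(k_tt)                            # k_tt = L @ L.T
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))  # k_tt^{-1} y
    mean = k_st @ alpha
    v = np.linalg.solve(chol, k_st.T)
    cov = k_ss - v.T @ v                                       # k_ss - k_st k_tt^{-1} k_ts
    return mean, cov
```

The posterior is again a Gaussian process, so conditioning maps one Gaussian process to another. Near the data the posterior variance shrinks toward zero, and far from the data it returns to the prior variance.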

From an algebraic perspective, Ref. [75] introduces the concepts of categoroid and functoroid to characterize universal properties of conditional independence. Categoroids extend traditional categories to account for probabilistic structures, while functoroids generalize functors to capture relationships of conditional independence. As previously mentioned, research on quasi-Borel spaces, which form a Cartesian closed category, supports higher-order functions and continuous distributions, providing a robust framework for probabilistic reasoning [60]. This approach aligns with the Curry-Howard isomorphism, providing a universal representation for finite discrete distributions. The integration of these advanced techniques facilitates the development of sophisticated models and inference algorithms for probabilistic queries and for assigning probabilities to complex events [72].

For applications, Ref. [76] employed the monad structure to perform automatic differentiation in reverse mode for statically typed functions containing multiple trainable variables.
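For readers unfamiliar with reverse-mode differentiation, the following is a minimal sketch of the underlying computation for a scalar function with several trainable variables. It records a computation graph and propagates adjoints backwards in reverse topological order. This is a plain graph-based technique swapped in for illustration, not the monadic, statically typed construction of [76]. The class and function names are hypothetical.

```python
class Var:
    """A scalar node in a computation graph for reverse-mode differentiation."""

    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # pairs (parent_node, local_partial_derivative)
        self.grad = 0.0

    def __add__(self, other):
        other = other if isinstance(other, Var) else Var(other)
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        other = other if isinstance(other, Var) else Var(other)
        return Var(self.value * other.value, ((self, other.value), (other, self.value)))

    __radd__ = __add__
    __rmul__ = __mul__


def backward(output):
    """Accumulate d(output)/d(node) into node.grad for every node in the graph."""
    order, seen = [], set()

    def visit(node):  # depth-first post-order gives a topological order
        if id(node) not in seen:
            seen.add(id(node))
            for parent, _ in node.parents:
                visit(parent)
            order.append(node)

    visit(output)
    output.grad = 1.0
    for node in reversed(order):  # outputs before inputs
        for parent, local in node.parents:
            parent.grad += local * node.grad
```

For the loss (3w + b)² at w = 2 and b = 0.5, a single backward pass yields both partial derivatives, 39 and 13. This one-pass property makes reverse mode attractive when there are many trainable variables.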

4. Developments in Invariance and Equivalence-Based Learning

In machine learning, invariance and equivalence are key concepts, ensuring models produce consistent results under transformations like image splitting, zooming, or rotation. These transformations often reflect the geometric structure of data, viewed as lying on a manifold. Similarly, analyzing how shared network components affect data or how semantics evolve during training is essential for understanding equivalence. Despite their differences, these concepts all relate to forms of invariance or equivalence.

Category theory offers two main approaches to studying these concepts. The first uses functors, which map between categories while preserving structural relationships. This method is straightforward, computationally efficient, and practical. The second approach involves higher-order categories—such as topoi, stacks, or infinity categories—to capture complex relationships and multi-scale dependencies. While powerful, these methods are computationally intensive and harder to implement. The following sections will explore both approaches and their relevance to machine learning. We briefly introduce common homological methodologies, focusing on:

- Shiebler’s work [77,78], which leverages functorial constructions;

- Other methods based on functorial constructions;

- Persistent homology approaches for analyzing topological features in data [79,80].

Before delving into the details, we compare traditional invariant learning methods with a novel categorical approach, highlighting differences in theoretical formulation and computational complexity.

Traditional methods rely on explicitly defining transformation groups (e.g., rotations, translations) to ensure model invariance. CNNs, for example, use shared filters to achieve translation invariance. However, extending this approach to other symmetries, like rotation or scaling, requires additional layers or data augmentations, increasing computational overhead and design complexity. The categorical approach abstracts these transformations using category theory. Instead of manually applying transformations, data points and their transformations are represented as objects and morphisms within a category. Functors and natural transformations capture relationships between these objects, enabling the model to generalize beyond predefined transformations. This abstraction simplifies handling complex symmetries without needing specific modifications.

As a running example for this section, consider image recognition. A traditional CNN processes image data using convolutional filters that slide across the image, detecting features that are robust to spatial translations. However, making the model invariant to rotations or scaling requires additional mechanisms, which increases complexity. In the categorical approach, transformations such as translation, rotation, and scaling are not applied directly to the data; instead, they are represented as morphisms between image objects. A functor captures how these transformations relate, enabling the model to generalize across different symmetries without additional layers. By abstracting transformations through functors and morphisms, the categorical approach offers greater flexibility and efficiency, reducing the need for explicit definitions and improving scalability for complex data and symmetries.
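To see what the traditional, explicitly group-based route involves, consider the four 90° rotations of a square image (the group C4). A standard way to make an arbitrary feature invariant is to average it over the orbit of the image under the group. Every symmetry must be enumerated by hand, and this is the design burden the categorical approach aims to lift. The following is a minimal sketch of that traditional technique, not of the categorical method. The feature and function names are hypothetical.

```python
import numpy as np


def top_row_feature(image):
    """A deliberately non-invariant feature: mean intensity of the top row."""
    return float(image[0].mean())


def rotation_pooled(feature, image):
    """Average a feature over the C4 orbit (0, 90, 180, 270 degree rotations) of the image."""
    return float(np.mean([feature(np.rot90(image, k)) for k in range(4)]))
```

Rotating the input only permutes the four terms of the average, so the pooled value is unchanged. For a 4×4 image with entries 0 to 15, it equals 7.5 for both the image and its rotation, while the raw top-row feature changes from 1.5 to 9.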

4.1. Functorial Constructions and Properties

The thesis [81] explores the compositional and functorial structure of machine learning systems, focusing on how assumptions, problems, and models interact and adapt to change. It addresses two key questions: How does a model’s structure reflect its training dataset? Can common structures underlie seemingly different machine learning systems?

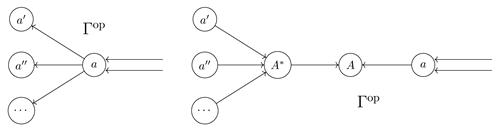

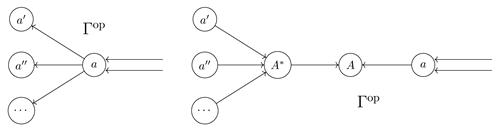

Shiebler [77] models hierarchical overlapping clustering algorithms as functors factoring through a category of simplicial complexes. He defines a pair of adjoint functors that link simplicial complexes with clustering algorithm outputs. In [78], he characterizes manifold learning algorithms as functors mapping metric spaces to optimization objectives, building on hierarchical clustering functors. This approach proves refinement bounds and organizes manifold learning algorithms into a hierarchy based on their equivariants. By projecting these algorithms along specific criteria, new manifold learning algorithms can be derived.
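Treating a clustering algorithm as a functor rests on a classical observation: single-linkage clustering at a fixed scale is functorial with respect to non-expansive maps. If f never increases distances, points that share a cluster in X are sent into a single cluster of Y. Clusters may merge but are never split. The following is a minimal sketch that computes single-linkage clusters with union-find and checks this property. It assumes a finite point set with a user-supplied distance, and the function names are hypothetical, not Shiebler's construction.

```python
from itertools import combinations


def single_linkage(points, dist, delta):
    """Single-linkage clusters at scale delta: connected components of the delta-neighborhood graph."""
    parent = {p: p for p in points}

    def find(p):
        while parent[p] != p:
            parent[p] = parent[parent[p]]  # path halving
            p = parent[p]
        return p

    for a, b in combinations(points, 2):
        if dist(a, b) <= delta:
            parent[find(a)] = find(b)
    clusters = {}
    for p in points:
        clusters.setdefault(find(p), set()).add(p)
    return list(clusters.values())


def respects_clusters(f, clusters_x, clusters_y):
    """Functoriality check: f maps every cluster of X inside a single cluster of Y."""
    return all(any({f(p) for p in c} <= d for d in clusters_y) for c in clusters_x)
```

On the points 0, 1, 5, 6 of the real line at scale 1, halving (a non-expansive map) preserves the two clusters. Tripling (an expansive map) splits {0, 1} into singletons, so the check fails.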