Abstract

This paper investigates the long-time behavior of fractional-order complex memristive neural networks in order to analyze the synchronization of both anatomical and functional brain networks, to predict therapy response, and to ensure safe diagnosis and treatment of neurological disorders (such as epilepsy, Alzheimer’s disease, or Parkinson’s disease). A new mathematical brain connectivity model is proposed that takes into account the memory characteristics of neurons and their past history, the heterogeneity of brain tissue, and the local anisotropy of cell diffusion. The model, which depends on topology, interactions, and local dynamics, is a set of coupled nonlinear Caputo fractional reaction–diffusion equations, in the form of a fractional-order ODE coupled with a set of time fractional-order PDEs, interacting via an asymmetric complex network. To incorporate the connection structure between neurons (or brain regions) into the model, graph theory is used, in which the discrete Laplacian matrix of the communication graph plays a fundamental role. The existence of an absorbing set in the state spaces of the system is discussed, and the dissipative dynamics result, with absorbing sets, is then proved. Finally, some Mittag–Leffler synchronization results are established for this complex memristive neural network under certain threshold values of coupling forces, memristive weight coefficients, and diffusion coefficients.

Keywords:

fractional-order dynamics; graph Laplacian; asymmetric complex networks; complex memristive neural networks; connected network on boundary; complete synchronization; pinning control; dissipativity; absorbing set; local anisotropy; cellular heterogeneity; spatio-temporal patterns

MSC:

34K24; 35R11; 92B20; 35R02; 35B36; 05C40; 92B25

1. Introduction and Mathematical Setting of the Problem

Complex networks of dynamical systems appear naturally in real-world systems such as biology, physics (e.g., plasma, laser cooling), intelligent grid technologies (e.g., power grid networks, communications networks), neuromorphic engineering, social networks, as well as neuronal networks. Of particular interest are complex memristive neural networks in the brain network. The macroscopic anatomical brain network, which is composed of a large number of neurons (≈) and their connections (≈), is a complex large-scale network system that exhibits various subsystems at different spatial scales (micro and macro) and timescales, yet is capable of integrated real-time performance. These subsystems are huge networks of neurons, which are connected with each other and are modified based on the activation of neurons. The communication, via a combination of electrical and chemical signals between neurons, occurs at small gaps called synapses (see, e.g., [1]). This brain network structure implements mechanisms of regulation at different scales (from microscopic to macroscopic scales). On the microscopic level, neural plasticity regulates the formation and behavior of synaptic connections between individual neurons in response to new information. The association matrix of pair-wise synaptic weights between neurons will be of the order of . In view of the scale of the network and the specific difficulties of accurately measuring all synaptic connections, an accurate description of a complete network diagram of brain connections is an ongoing challenge and a task of great difficulty (see, e.g., [2]).

However, the rise of functional neuroimaging and related neuroimaging techniques, such as Electroencephalography (EEG), Magnetoencephalography (MEG), functional Magnetic Resonance Imaging (fMRI), diffusion-weighted Magnetic Resonance Imaging (dMRI), and Transcranial Magnetic Stimulation (TMS), has led to good mapping and deeper understanding of the large-scale network organization of the human brain. The fMRI technique can be used to determine which regions of the brain are in charge of crucial functions, and to assess the consequences of stroke, malignant brain tumors, abscess, other structural alterations, or direct brain therapy. For the latter, see, e.g., [3] for the use of online sensor information to automatically adjust, in real time, the delivery of brain tumor drugs through MRI-compatible catheters, via nonlinear model-based control techniques and a mathematical model describing tumor-normal cell interaction dynamics.

The EEG and MEG signals measure, respectively, electrical activity and magnetic fields induced by the electrical activity, from various brain regions with a high temporal resolution (but with limited spatial coverage). However, fMRI measures whole-brain activity indirectly (by detecting changes associated with blood flow for each network, over a specified interval of time) with a high spatial resolution (but with limited temporal resolution). Consequently, in order to improve both spatial and temporal resolution, EEG and MEG signals are often associated with fMRI (see, e.g., [4]).

This whole-brain connectomics approach, which relies on macroscopic measurements of structural and functional connectivity, has notably favored a major development in the identification and analysis of effects of brain injury or neurodegenerative and psychiatric diseases on brain systems related to cognition and behavior, for better diagnosis and treatments (see, e.g., [5,6,7]).

The dynamic interaction between neuronal networks and systems, which takes into account the dynamic flows of information that pass through different interconnected, widely distributed, and functionally specialized brain regions, is crucial for normal brain function (see, e.g., [8,9]). Measuring electrical activity (from, e.g., dMRI connectivity, magnetoencephalography or electroencephalography recordings) has allowed researchers to point out the existence of oscillations characterized by their frequency, amplitude, and phase (see, e.g., [8,10,11,12]). This phenomenon is considered to result from oscillatory neuronal (local) synchronization and long-range cortical synchronization (which is linked to many cognitive and memory functions). Moreover, several studies have established that the activity pattern of cortical neurons depends on the history of electrical activities (e.g., caused by the long-range interaction of ionic conductances), as a result of changes in synaptic strength or shape of synaptic plasticity (see, e.g., [13,14,15]). In addition, the diffusion terms play an important role in dynamics and stability of neural networks when, e.g., electrons are moving in asymmetric electromagnetic fields (see, e.g., [16,17,18]). So, diffusion phenomena cannot be neglected. Understanding mechanisms behind these synchronized oscillations and their alterations is very important for better diagnosis and treatments of neurological disorders. In particular, the relationship between stability of synchronization and graph theory is established. This relationship characterizes the impact of network topology on the disturbances. Disturbances or alteration of such synchronized networks, taking into account memory characteristics of neurons and their past history, play an important role in several brain disorders, such as neuropsychiatric diseases, epileptic seizures, Alzheimer’s disease, and Parkinson’s disease (see, e.g., [19,20,21,22,23] and references therein).

Moreover, noninvasive brain stimulation is attracting considerable attention due to its potential for safe and effective modulation of brain network dynamics. In particular, in the context of human cognition and behavior, the targeting of cortical oscillations by brain stimulation with electromagnetic waveforms has been widely used, whether to ensure a safe treatment, to improve quality of life, or to understand and explain the contribution of different brain regions to various human cognitive brain functions (see, e.g., [24] and references therein).

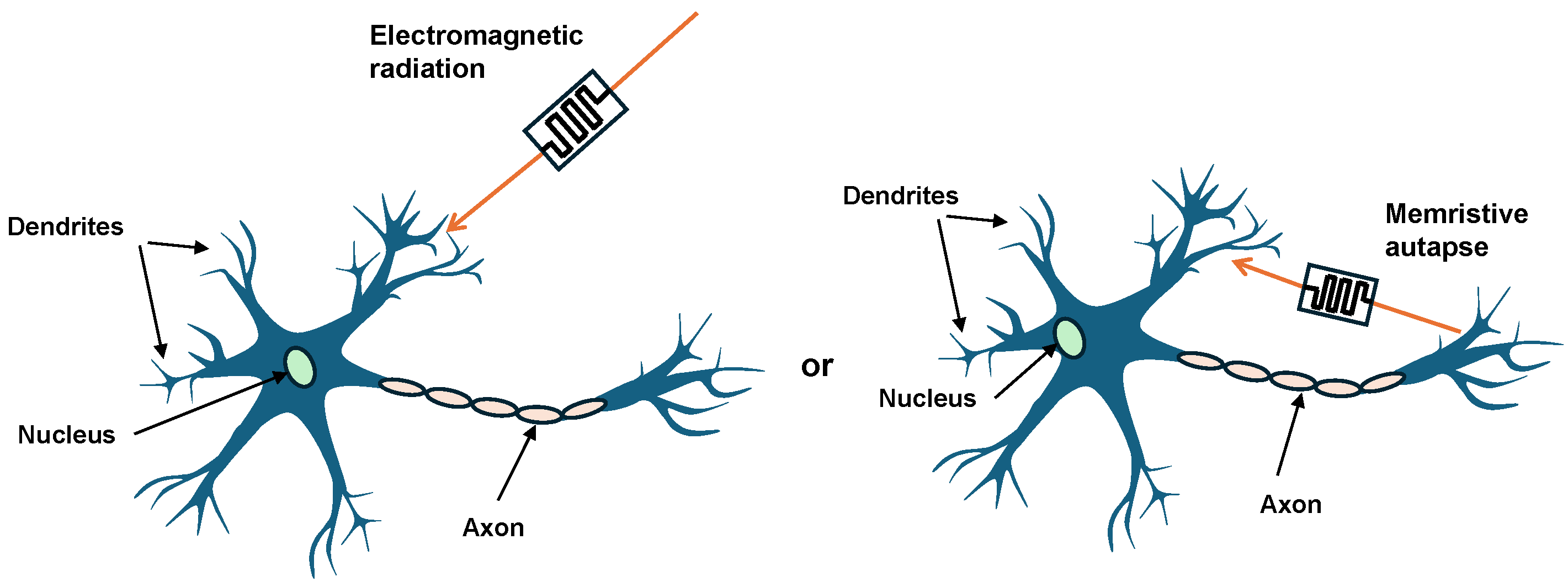

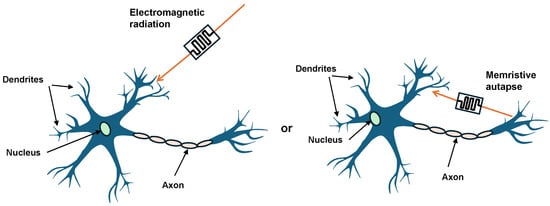

It is well known that the dynamic behavior of neurons depends on the architecture of the network and on external perturbations such as electromagnetic radiation or stimulation by an electric field. A memristor, with plasticity and bionic characteristics, is a nonvolatile electrical component (i.e., retains memory without power) that limits or regulates the flow of electrical current and is capable of describing the impacts of electromagnetic induction (radiation) in neurons, by coupling membrane potential and magnetic flux. Moreover, electromagnetic induction currents in the nervous system can be emulated by memristive autapses (an autapse is a synaptic coupling of a neuron’s axon to its own dendrite and soma), which play a critical role in regulating physiological functions (see Figure 1).

Figure 1. Schematic design of a biological neuron.

The concept of a memristor, which is a passive and nonlinear circuit element, was first introduced by Chua [25], who argued that the memristor should be regarded as being as fundamental as the three classical passive electronic elements: the resistor, the capacitor, and the inductor. In the resistor, there is a relation between the instantaneous value of voltage and the instantaneous value of current. Unlike the resistance of a resistor, the memristance depends on the amount of charge that has passed through the device; consequently, the memristor can remember its past dynamic history. It is a natural nonvolatile memory.

Memristor-based neural network models can be divided into (see, e.g., [26,27]) (a) memristive synaptic (autapse or synapse) models, in which a memristor is used as a variable connection weight for neurons; (b) neural network models affected by electromagnetic radiation, in which a memristor is used to simulate the electromagnetic induction effects. The memristive synaptic model uses the bionic properties of the memristor to realistically mimic biological synaptic functions, while the neural network model under electromagnetic radiation employs a magnetic flux-controlled memristor to imitate the electromagnetic induction effect on membrane potential.

Recently, considerable efforts have been made to mimic significant neural behaviors of the human brain by means of artificial neural networks. Owing to its attractive properties as a new type of information storage and processing device, the memristor can be used to implement synaptic weights in artificial neural networks. It can also be an ideal component for simulating neural synapses in brain networks due to its nano-scale size, fast switching, passive nature, and remarkable memory characteristics (see, e.g., [28,29,30] and references therein). In recent years, the dynamical characteristics of memristor-based neural networks have been extensively analyzed and, in this context, several studies have been reported on chaos, passivity, stability, and synchronization (see, e.g., [31,32,33,34,35,36,37] and references therein).

Currently, fractional calculus is particularly efficient, when compared to the classical integer-order models, for describing the long memory and hereditary behaviors of many complex systems. Fractional-order differential systems have been studied by many researchers in recent years; the genesis of fractional-order derivatives dates back to Leibniz. Since the beginning of the 19th century, many authors have addressed this problem and have devoted their attention to developing several fractional operators to represent nonlinear and nonlocal phenomena (such as Riemann, Liouville, Hadamard, Littlewood, Hilfer, and Caputo among others). Fractional integrals and fractional derivatives have proved to be useful in many real-world applications; in particular, they appear naturally in a wide variety of biological and physical models (see, for instance, Refs. [38,39,40,41,42,43] and references therein). The Riemann–Liouville and Caputo derivative operators are the most common and widely used ways of defining fractional derivatives. When solving a fractional differential equation, we use the Caputo fractional operator [44] for which, unlike the Riemann–Liouville operator, there is no need to define fractional-order initial conditions. One of the most important characteristics of fractional operators is their nonlocal nature, which accounts for the infinite memory and hereditary properties of underlying phenomena. Recently, in [39], we proposed and analyzed a mathematical model of the electrical activity in the heart through the torso, which takes into account cardiac memory phenomena (this phenomenon, also known as the Chatterjee phenomenon, can cause dynamical instabilities (such as alternans) and give rise to highly complex behavior including oscillations and chaos). We have shown numerically the interest of modeling memory through fractional-order derivatives, and that, with this model, we are able to analyze the influence of memory on some electrical properties, such as the action potential duration (APD), action potential morphology, and spontaneous activity.
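To make the nonlocal character of the Caputo operator concrete, the following minimal sketch approximates a Caputo derivative of order in (0, 1) with the classical L1 discretization. The function name `caputo_l1`, the grid, and the test function f(t) = t are illustrative assumptions and are not taken from the model of this paper; the point is only that the value at the current time is a weighted sum over the whole past history of the function.

```python
import math

def caputo_l1(values, dt, alpha):
    """Approximate the Caputo derivative of order alpha in (0, 1) at the last
    grid point, given samples values[k] = f(k * dt), using the L1 scheme."""
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must lie in (0, 1)")
    n = len(values) - 1  # index of the evaluation time t_n = n * dt
    total = 0.0
    for k in range(n):
        # The weight (k+1)^(1-alpha) - k^(1-alpha) multiplies the increment of f
        # over [t_{n-k-1}, t_{n-k}]; older increments receive smaller weights,
        # but every past increment contributes (long memory).
        weight = (k + 1) ** (1.0 - alpha) - k ** (1.0 - alpha)
        total += weight * (values[n - k] - values[n - k - 1])
    return total * dt ** (-alpha) / math.gamma(2.0 - alpha)

# Illustrative check with f(t) = t, whose Caputo derivative of order alpha
# is t^(1 - alpha) / Gamma(2 - alpha).
alpha, dt, steps = 0.5, 0.01, 200
samples = [k * dt for k in range(steps + 1)]
approx = caputo_l1(samples, dt, alpha)
exact = (steps * dt) ** (1.0 - alpha) / math.gamma(2.0 - alpha)
```

For a linear function the L1 scheme reproduces the closed-form value up to rounding, which makes this a convenient sanity check before applying such a scheme to less regular data.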

On the other hand, synchronization of neural networks plays a significant role in the activities of different brain regions. In addition, compared with the concept of stability, the synchronization mechanism (with possible control), for two or more apparently independent systems, is receiving increasing attention in neuroscience research and medical science because of its practicality. The study of dynamical systems and the synchronization of biological neural networks, with different types of coupling, has attracted a large amount of theoretical research, and consequently the literature in this field is very extensive, especially in the context of integer-order differential systems. Given the size of this literature, it is not our intention to comment in detail here on all the works cited. For a general presentation of the synchronization phenomenon and its mathematical analysis, we can cite, e.g., [45,46,47,48]. Concerning problems associated with integer-order partial differential equations and the various methods and techniques used, we can refer to, e.g., [49,50,51,52,53,54]. Finally, for problems associated with fractional-order partial differential equations, various methods have recently been studied in the literature: for example, [55] considers the synchronization control of a neural network’s equation via pinning control, [56] investigates the dissipativity and synchronization control of memristive neural networks via a feedback controller, and [57] explores the stability and pinning synchronization of a memristive neural network’s equation.

Motivated by the above discussions, to take into account noninvasive brain stimulation and the effect of memory in the propagation of brain waves, together with other critical brain material parameters, we propose and analyze a new mathematical model of fractional-order memristor-based neural networks with multidimensional reaction–diffusion terms, by combining memristor with fractional-order neural networks. The models with time fractional-order derivatives integrate all the past activities of neurons and are capable of capturing the long-term history-dependent functional activity in a network. The diffusion can be seen as a local connection (at a lower scale), like, e.g., in [58], whereas the coupling topology relates to dynamical properties of network dynamical systems, corresponding to physical or functional connections at the upper scale.

Thus, the derived brain neural network model is precisely the system (1)–(5) (see further), which is a nonlinear coupled reaction–diffusion system in the shape of a set of fractional-order differential equations coupled with a set of fractional-order partial differential equations (interacting via a complex network).

In the present work, we are interested in the synchronization phenomenon in a whole network of diffusively and nonlinearly coupled systems, which combines past and present interactions. First, we impose initial data on a closed and bounded spatial domain, and analyze some complex dynamical properties of the long-time behavior of the derived fractional-order large-scale neural network model. After a rigorous investigation of dissipative dynamics, different synchronization problems of the developed complex dynamical networks are studied.

2. Formulation of Memristive-Based Neural Network Problem

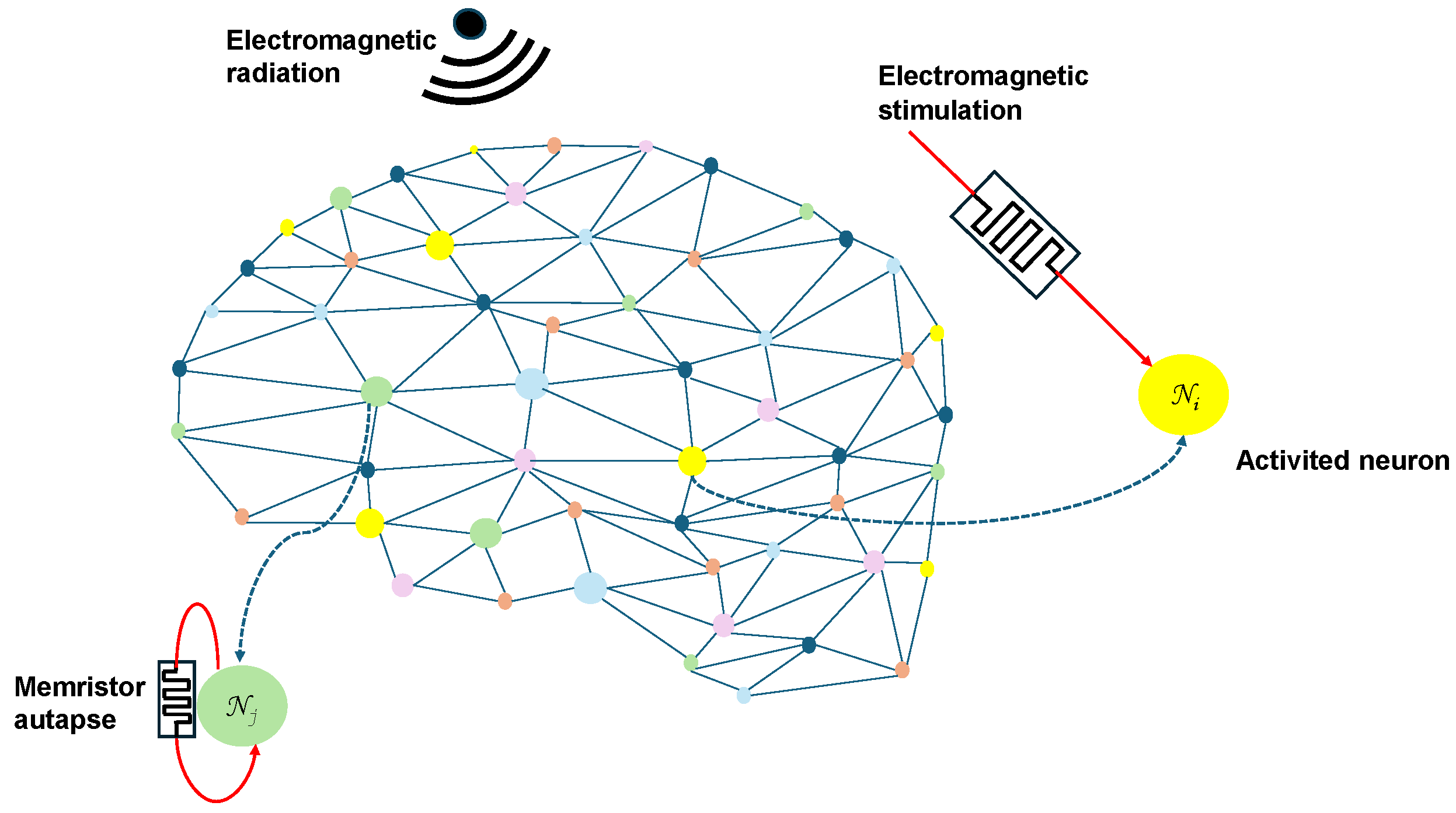

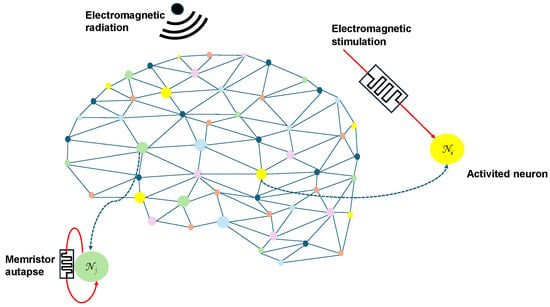

We shall consider a network of m coupled neurons denoted by , where the network size is a positive integer. Our model of a memristor-autapse-based neural network with external electromagnetic radiation can be depicted as in Figure 2.

Figure 2. Concept map of the coupled neural network.

In this paper, motivated by the above discussions (see Section 1), we introduce the following new fractional-order memristive neural network of coupled neurons, modeled by the following Caputo-fractional system, including the magnetic flux coupling (on each neuron of the network, for ):

where and the spatial domain is a bounded open subset and its boundary denoted by is locally Lipschitz continuous. Here, denotes the forward Caputo fractional derivative with a real value in . The state variables , , and describe, respectively, the membrane potential, the ionic variable, and the magnetic flux across the membrane of the i-th neuron , for . The term F is the nonlinear activation operator. The functions and represent the external forcing current and the external field electromagnetic radiation, respectively. The parameters , , are the coupling strength constants with . The values a and can be any nonzero number constants and all the parameters b, , , , , and can be any positive constants.

The fractional parameter is , where is the membrane pseudo-capacitance per unit area and is the surface area-to-volume ratio (homogenization parameter). The membrane is assumed to be passive, so the pseudo-capacitance can be assumed to be constant. Moreover, since the electrical restitution curve (ERC) is affected by the action potential history through ionic memory, we have represented the memory via u (respectively, via w) by a time fractional-order dynamic term (respectively, by ), where the positive parameters and are assumed to be constants. The fractional parameters , , and depend on the fractional-order .

Remark 1.

According to the expression of the Caputo derivative, we can obtain that the unit for the dimension of is , with s the unit for the dimension of time and the capacitance for (this result remains valid for and ).

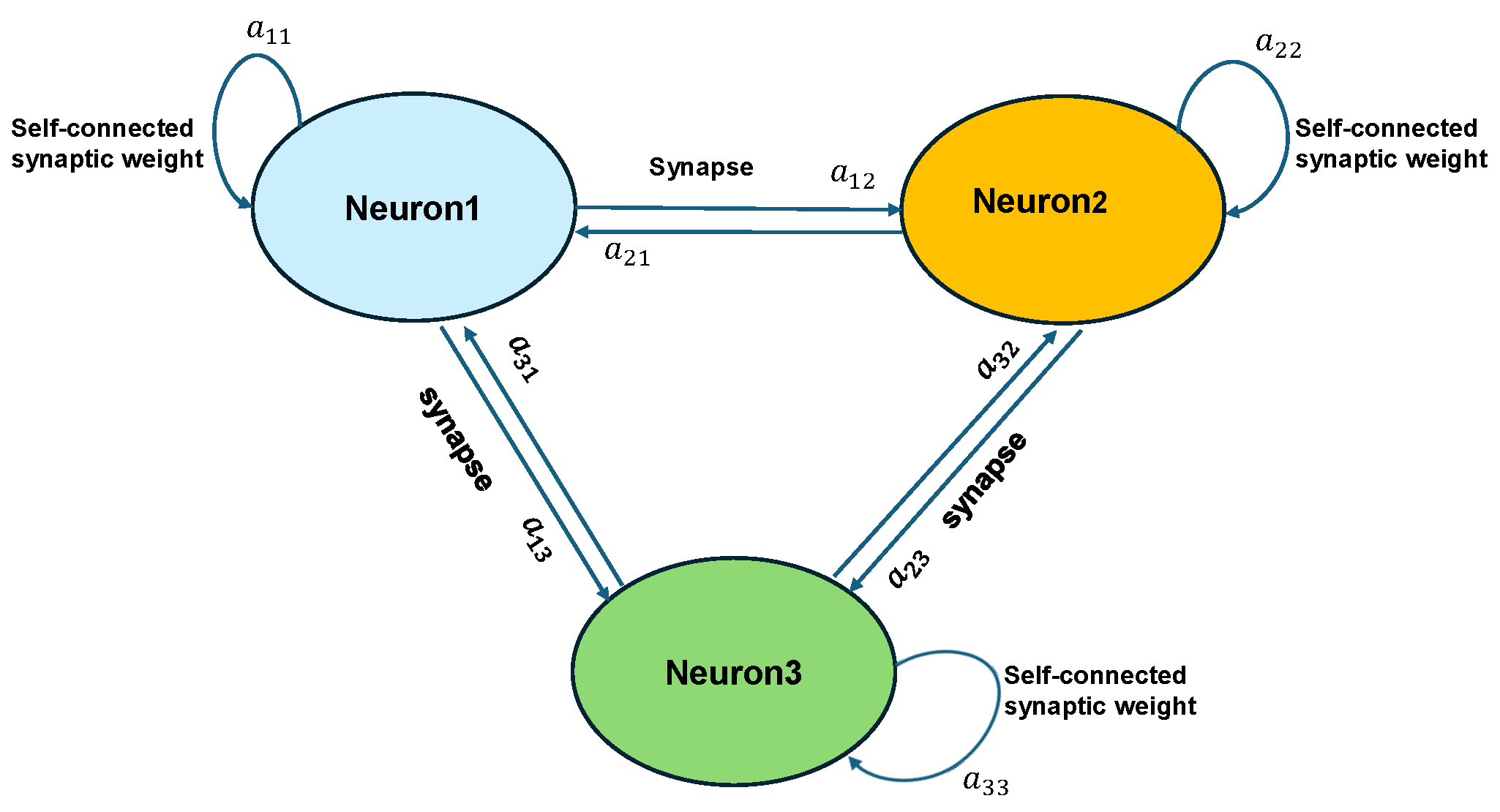

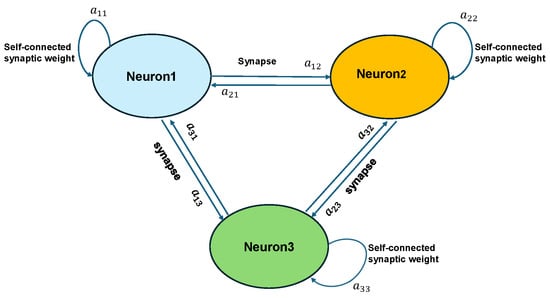

The functions , for , represent the memristor’s synaptic connection weight coefficient (an example with three neurons is depicted in Figure 3).

Figure 3. Abridged general view of synaptic connection.

The term could be regarded as an emulation of a neurological disease, e.g., epileptic seizures, in which the nonlinear operators are some activation functions. We assume that (for )

where are in , and we denote

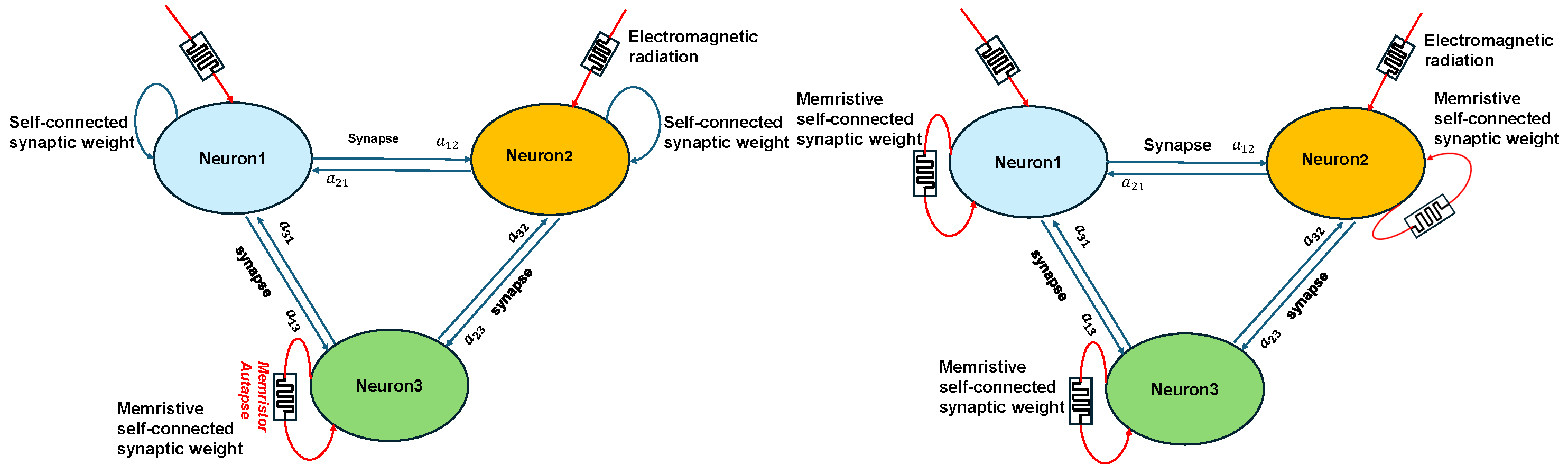

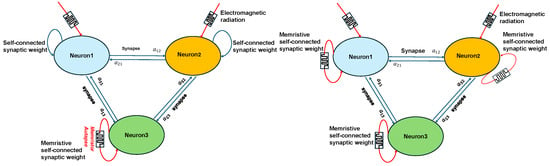

The operator is the memory conductance of the flux-controlled memristor, where , , all the parameters , , , , can be any positive constant, and , with , for . The magnetic flux coupling could be regarded as an additive induction current on the membrane and represents the dynamic effect of electromagnetic induction on neurological diseases (examples with three neurons are depicted in Figure 4). For simplicity, we can write , where , , , and .

Figure 4. Two examples of connection topology of the neural network with three neurons (based on memristor–autapse and under electromagnetic radiation effects).

The operator , which contains the information of network topology, is defined by (for )

with the coupling (or connectivity) matrix ( is called the Laplacian matrix of the graph), in which are the coefficients of connection from the i-th to the j-th neuron, satisfying the assumption

- (HG)

- for and , i.e., the matrix has vanishing row and column sums and non-negative off-diagonal elements.

Then, can be written as

In graph theory, the Laplacian matrix , also called the graph Laplacian or Kirchhoff matrix, defines the graph topology with m the number of vertices/nodes in graph (with set of vertices and set of edges/links). The diagonal matrix is called the degree matrix of graph and is called the adjacency matrix of the graph.
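As an illustration of how a coupling matrix with the properties required in (HG) can be assembled from a weighted adjacency matrix, here is a minimal sketch. It assumes the sign convention "adjacency minus degree", which is suggested by the requirement of non-negative off-diagonal elements; the helper names `coupling_matrix` and `satisfies_hg` and the two example graphs are hypothetical and not part of the paper.

```python
def coupling_matrix(adjacency):
    """Return G = A - D for a weighted adjacency matrix A (list of lists),
    where D is the diagonal matrix of the row sums (out-degrees) of A."""
    m = len(adjacency)
    G = [[float(adjacency[i][j]) for j in range(m)] for i in range(m)]
    for i in range(m):
        G[i][i] = -sum(adjacency[i][j] for j in range(m) if j != i)
    return G

def satisfies_hg(G, tol=1e-12):
    """Check non-negative off-diagonal entries and vanishing row and column sums."""
    m = len(G)
    off_diag_ok = all(G[i][j] >= 0 for i in range(m) for j in range(m) if i != j)
    rows_ok = all(abs(sum(G[i])) <= tol for i in range(m))
    cols_ok = all(abs(sum(G[i][j] for i in range(m))) <= tol for j in range(m))
    return off_diag_ok and rows_ok and cols_ok

# Directed 3-cycle with equal weights: asymmetric, yet in- and out-degrees match.
A_cycle = [[0, 2, 0],
           [0, 0, 2],
           [2, 0, 0]]
# Directed path 1 -> 2 -> 3: row sums vanish by construction, column sums do not.
A_path = [[0, 1, 0],
          [0, 0, 1],
          [0, 0, 0]]
G_cycle = coupling_matrix(A_cycle)
G_path = coupling_matrix(A_path)
```

The directed cycle shows that an asymmetric network can still have vanishing row and column sums, whereas the directed path fails the column-sum condition, so the vanishing column sums are a genuine restriction on the admissible topologies.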

The state variable , for in this network, is coupled with the other neurons in the network through the state equation by and/or through the boundary conditions as follows (fully connected network on boundary):

where is the outward normal to and , are the coupling strength constants on boundary. The tensors and are the effective diffusion tensors that describe the heterogeneity of brain tissue and local anisotropy of cell diffusion.

The initial conditions of (1) to be specified will be denoted by (for )

The rest of the paper is organized as follows. In the next section, we give some preliminary results useful in the sequel. In Section 4, we shall prove the existence, stability, and uniqueness of weak solutions of the derived model, under some hypotheses for data and some regularity of nonlinear operators. An important feature of the uniqueness of the solution is the semiflow physical property of the system; starting the system at time , letting it run until time , and then restarting it and letting it run from time s to the final time of amounts to running the system directly from time to final time . In Section 5, the existence of an absorbing set in state spaces for the system is discussed, an estimate of the solutions is derived when time is large enough, and then the dissipative dynamics result, with absorbing sets, is proved. In Section 6, synchronization phenomena are discussed and some Mittag–Leffler synchronization criteria for such complex dynamical networks are established in different situations. Precisely, some sufficient conditions for the synchronization are obtained first for the complete (or identical) synchronization (which refers to the process by which two or more identical dynamical systems adjust their motion in order to converge to the same dynamical state as time approaches infinity) problem and then for the master–slave synchronization problem via appropriate pinning feedback controllers and adaptive controllers. In Section 7, conclusions are discussed. In Appendix A, the full proof of well-posedness of the derived system is shown, and in Appendix B, a brief introduction to some definitions and basic results in fractional calculus in the Riemann–Liouville sense and Caputo sense is given.

3. Assumptions, Notations, and Some Fundamental Inequalities

Some basic definitions, notations, fundamental inequalities, and preliminary lemmas are introduced and other results are developed.

Let , , be an open and bounded set with a smooth boundary and . We use the standard notation for Sobolev spaces (see [59]), denoting the norm of (, ) by . In the special case , we use instead of . The duality pairing of a Banach space X with its dual space is given by . For a Hilbert space Y, the inner product is denoted by and the inner product in is denoted by . For any pair of real numbers , we introduce the Sobolev space which is a Hilbert space normed by , where space denotes the Sobolev space of order s of functions defined on and taking values in , and defined by interpolation as , for with and , and .

We now recall the following Poincaré–Steklov inequalities (see, e.g., [60]):

Lemma 1.

(Poincaré–Steklov inequality) Assume that ℧ is a bounded connected open subset of with a sufficiently regular boundary (e.g., a Lipschitz boundary). Then,

where and is the smallest eigenvalue of the Laplacian supplemented with the Robin boundary condition on (with any positive constant).

Lemma 2.

(Extended Poincaré–Steklov inequality). Assume that and that ℧ is a bounded connected open subset of with a sufficiently regular boundary (e.g., a Lipschitz boundary). Let R be a bounded linear form on whose restriction on constant functions is not zero. Then, there exists a Poincaré–Steklov constant such that

Remark 2.

Let q be a nonnegative integer and ℧ be a bounded connected open subset of with a sufficiently regular boundary . We have the following results (see, e.g., [59]):

(i) , , with continuous embedding (with the exception that if , then and if , then ).

(ii) (Gagliardo–Nirenberg inequalities) There exists such that

where , , and such that (with the exception that if is a nonnegative integer, ).

Definition 1

(see, e.g., [61]). A real valued function defined on , , is a Carathéodory function iff is measurable for all and is continuous for almost all .

Our study involves the following fundamental inequalities, which are repeated here for review:

- (i) Hölder’s inequality: , where

- (ii) Young’s inequality ( and ):

- (iii) Minkowski’s integral inequality ():
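For reference, the three inequalities listed above can be written in their standard textbook forms as follows. These are the usual statements; the notation ($f$, $g$, $F$, $p$, $q$, $\epsilon$, and the measure spaces $X$, $Y$) is chosen here for illustration, and the exact normalization used in the rest of the paper may differ.

```latex
% Hoelder's inequality, with 1/p + 1/q = 1 and 1 \le p, q \le \infty:
\int_{X} |f g| \, dx \;\le\; \|f\|_{L^{p}(X)} \, \|g\|_{L^{q}(X)} .

% Young's inequality, for a, b \ge 0, \epsilon > 0, and 1/p + 1/q = 1 with 1 < p, q < \infty:
a b \;\le\; \frac{\epsilon^{p}}{p}\, a^{p} + \frac{\epsilon^{-q}}{q}\, b^{q} .

% Minkowski's integral inequality, for 1 \le p < \infty:
\left( \int_{X} \left| \int_{Y} F(x, y) \, dy \right|^{p} dx \right)^{1/p}
\;\le\; \int_{Y} \left( \int_{X} |F(x, y)|^{p} \, dx \right)^{1/p} dy .
```

The weighted form of Young's inequality follows from the unweighted one, $ab \le a^{p}/p + b^{q}/q$, applied to the product $(\epsilon a)(b/\epsilon)$.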

Finally, we denote by the Lebesgue measure of ℧, by the sign of the scalar x, and by the set of linear and continuous operators from a vector space A into a vector space B. The operator stands for the adjoint to linear operator between Banach spaces.

From now on, we assume that the following assumptions hold for nonlinear operators, matrix coupling, and tensor functions appearing in our model on .

First we introduce the following spaces: and (endowed with their usual norms). We will denote by the dual of . We have the following continuous embeddings ( is such that ):

and the injection is compact, where if and if .

For tensor functions, we assume that the following assumptions hold.

(H1)

We assume that the conductivity tensor functions , , are symmetric, positive definite matrix functions and that they are uniformly elliptic, i.e., there exist constants and such that ()

The operators F and , which describe behavior of the system, are supposed to satisfy the following assumptions.

(H2)

The operators F and are Carathéodory functions from into . Furthermore, the following requirements hold.

- The nonlinear scalar activation function , which can be taken as with as a decreasing function on the second variable, satisfies ():

- (i)

- ;

- (ii)

- ;

- (iii)

- and ;

- (iv)

- ;

- (v)

- and .

, , and , for , are positive constants, , for , are given functions, and is the primitive function of .

- The nonlinear scalar activation functions are bounded with and satisfy -Lipschitz condition, i.e.,

- (vi)

- and , , with and .

For the operators , which are defined from the matrix coupling G, we introduce first the following notations: the matrix , where and the matrix , where , for . Then, the matrix is symmetric, the matrix is antisymmetric, and can be represented uniquely as . It is evident that both and have zero row sums (i.e., and ) and . Then, we can now derive the following two lemmas.
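The decomposition described above can be checked numerically. The sketch below uses the standard splitting of a square matrix into half the sum and half the difference of the matrix and its transpose, which is the only way to write a matrix as a symmetric part plus an antisymmetric part; the function names and the 3-by-3 example matrix are hypothetical. It also evaluates the quadratic form of the antisymmetric part, which vanishes for every vector.

```python
def split_symmetric_antisymmetric(G):
    """Return (S, A) with S = (G + G^T)/2 symmetric, A = (G - G^T)/2
    antisymmetric, and G = S + A."""
    m = len(G)
    sym = [[0.5 * (G[i][j] + G[j][i]) for j in range(m)] for i in range(m)]
    anti = [[0.5 * (G[i][j] - G[j][i]) for j in range(m)] for i in range(m)]
    return sym, anti

def quadratic_form(M, w):
    """Evaluate w^T M w for a square matrix M and a vector w."""
    m = len(M)
    return sum(w[i] * M[i][j] * w[j] for i in range(m) for j in range(m))

# Asymmetric example with vanishing row and column sums and
# non-negative off-diagonal entries (illustrative values).
G = [[-3.0, 1.0, 2.0],
     [2.0, -3.0, 1.0],
     [1.0, 2.0, -3.0]]
S, A = split_symmetric_antisymmetric(G)
w = [1.0, -2.0, 0.5]
form_G = quadratic_form(G, w)
form_S = quadratic_form(S, w)
form_A = quadratic_form(A, w)
```

Because the antisymmetric part contributes nothing to the quadratic form, energy-type estimates built on such forms only see the symmetric part of the coupling.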

Lemma 3.

For in and , we have the following inequalities:

where , and (υ is any positive constant, to be chosen appropriately).

Proof.

Since , we have (according to assumption )

and

This completes the proof. □

The estimates of the previous lemma are needed to construct a priori estimates (to establish the existence result).

Lemma 4.

For all , we have the following relations (with for ).

,

Proof.

Since , we can deduce that

and then the relation (i). For the relation (ii), we have (we proceed in a similar way as in [62])

Since and , we can deduce that

We can deduce that (since ), and then , with , for . For the term I, we have that

and then

This completes the proof. □

Remark 3.

(a) If then (for all ) and consequently (with ) and (in Lemma 4).

(b) The matrix is symmetric and satisfies .

Remark 4.

Let be an arbitrary antisymmetric matrix. Then, for any vector w, we have

To end this section, we give the following lemmas and definitions. From, e.g., [63,64], we can deduce, respectively, the following Lemmas.

Lemma 5.

(A generalized Gronwall’s inequality) Assume , h is a nonnegative function locally integrable on (some ) and b is a nonnegative, bounded, nondecreasing continuous function defined on . Let f be a nonnegative and locally integrable function on with, for , Then (for ), If, in addition, h is a nondecreasing function on , then

The used function is the classical two-parametric Mittag–Leffler function (usually denoted by if ), which is defined by The function is an entire function of the variable z for any , .
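For readers who want to experiment with the two-parametric Mittag–Leffler function, a minimal sketch based on its standard power series (the sum over k of z^k divided by Gamma(alpha k + beta)) is given below. The function name `mittag_leffler` and the truncation length are assumptions made for illustration; a plain truncated series is only reliable for arguments of moderate size and is not a substitute for a dedicated algorithm.

```python
import math

def mittag_leffler(z, alpha, beta=1.0, terms=120):
    """Truncated power series sum_{k < terms} z^k / Gamma(alpha*k + beta)
    for real z, alpha > 0, beta > 0 (moderate |z| only)."""
    if alpha <= 0.0 or beta <= 0.0:
        raise ValueError("alpha and beta must be positive")
    if z == 0.0:
        return 1.0 / math.gamma(beta)
    total = 0.0
    log_abs_z = math.log(abs(z))
    for k in range(terms):
        # Work with logarithms so that large Gamma values do not overflow.
        log_term = k * log_abs_z - math.lgamma(alpha * k + beta)
        sign = -1.0 if (z < 0.0 and k % 2 == 1) else 1.0
        total += sign * math.exp(log_term)
    return total

# Classical special cases used as sanity checks.
exp_case = mittag_leffler(1.5, 1.0, 1.0)    # equals exp(1.5)
cos_case = mittag_leffler(-4.0, 2.0, 1.0)   # equals cos(2)
half_case = mittag_leffler(-1.0, 0.5, 1.0)  # equals exp(1) * erfc(1)
```

The three special cases recover the exponential, the cosine, and a scaled complementary error function, which is a quick way to validate any implementation before using it in decay estimates.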

Lemma 6.

Let ω be a locally integrable nonnegative function on such that . Then, we have

The invertibility of follows from the complete monotonicity property of . As shown in [65], this function is completely monotone if and only if and . Since and , then the inverse function of f, is the function ( and ).

Definition 2

(see, e.g., [66]). The initial-boundary value problem (1)–(6) is called dissipative in a Banach space if there is a bounded set, in , for some positive constants such that for any bounded subsets (of ) and all initial data belonging to , there exists a time , depending on , such that the corresponding solution is contained in for all . The set is called an absorbing set and r is called a radius of dissipativity.

Remark 5.

(i) We can also express the previous definition as follows. For any bounded set , there exists a finite time such that any solution started with initial condition in remains within the bounded ball for all time .

(ii) The study of dissipative dynamics opens the way to the analysis of the synchronization problem.

Definition 3

4. Well-Posedness of the System

This section concerns the existence and uniqueness of a weak solution to problem (1), under Lipschitz and boundedness assumptions on the non-linear operators. We now define the following bilinear forms: Under hypothesis (8), it can easily be shown that the forms (for ) are symmetric coercive and continuous on . We can now write the weak formulation of the initial-boundary value problem (1)–(6) (for all v, , in and , with ):

The first main theorem of this paper is then the following result.

Theorem 1.

Let assumptions (H1) and be fulfilled and . Assume that there exists such that

Then, for any (for ) and , there exists a unique weak solution to problem (1) verifying (for )

Moreover, if , then and .

Proof.

To establish the existence of a weak solution to system (1), we proceed as in [39] by applying the Faedo–Galerkin method, deriving a priori estimates, and then passing to the limit in the approximate solutions using compactness arguments. The uniqueness result follows by a classical argument. The full proof is given in Appendix A. □

5. Dissipative Dynamics of the Solution

In this section, first we prove that all the weak solutions of initial value problem (1) exist for time . Then, we show that there exists an absorbing set in space for the solution semiflow, which is dissipative in space in the sense of Definition 2.

Theorem 2.

Under the assumptions of Theorem 1, there exists a unique global weak solution

Proof.

Taking the scalar product of the equations of system (1) by , , and , respectively, and adding these equations for , we obtain, according to Lemma 4 and assumption :

By using similar arguments to derive (A9), we can deduce

where , and , with , , , and . The values and are chosen appropriately so that and , i.e., and . In particular, we have

We can solve this Caputo fractional differential inequality (17) to obtain the following estimate in maximal existence time interval (from Lemma 6):

where , and

.

Since the solution never blows up in finite time, the maximal existence time interval is . Consequently, the solution of the initial-boundary value problem (1)–(6) exists in space , for any time . We thus have the existence of a solution semiflow for (1)–(6), that is, a mapping , enjoying the semigroup property for any . Moreover, from (18), we can deduce that for any (in view of the asymptotic property of the Mittag–Leffler function):

where (since ) and then .

Afterwards, for any bounded set (of ), with , we have, if :

and then there exists a finite , given by such that the solution trajectories that started at initial time from the set will permanently enter the set , for all .

So, is an absorbing set in for the semiflow and this semiflow is dissipative. This completes the proof. □
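The way an absorbing ball emerges from a Caputo differential inequality can be illustrated numerically. The sketch below assumes, as a simplification, a scalar inequality of the form D^α v + λ v ≤ C with constants λ, C > 0, for which the standard comparison bound is v(t) ≤ v(0) E_α(−λ t^α) + (C/λ)(1 − E_α(−λ t^α)). The names `lam`, `c`, `v0`, `margin`, and all function names are illustrative choices, not the notation of the paper, and the Mittag–Leffler function is evaluated by a truncated power series that is only reliable for moderate arguments.

```python
import math


def mittag_leffler(alpha, z, terms=200):
    """One-parameter Mittag-Leffler function E_alpha(z) by its power series.

    Only reliable for moderate |z| (roughly |z| <= 5 when alpha is close to 1),
    because the alternating series loses accuracy for large negative z.
    """
    total = 0.0
    for k in range(terms):
        arg = alpha * k + 1.0
        if arg > 170.0:  # math.gamma overflows slightly above this value
            break
        total += z ** k / math.gamma(arg)
    return total


def comparison_bound(t, v0, lam, c, alpha):
    """Upper bound for v(t) when D^alpha v + lam * v <= c and v(0) = v0."""
    e = mittag_leffler(alpha, -lam * t ** alpha)
    return v0 * e + (c / lam) * (1.0 - e)


def entry_time(v0, lam, c, alpha, margin=1.0, dt=0.01, t_max=5.0):
    """First grid time at which the bound is below c/lam + margin (None if never)."""
    radius = c / lam + margin
    t = 0.0
    while t <= t_max:
        if comparison_bound(t, v0, lam, c, alpha) <= radius:
            return t
        t += dt
    return None


if __name__ == "__main__":
    # Hypothetical values: initial size 10, decay rate 1, forcing 2, order 0.8.
    for t in (0.0, 0.5, 1.0, 2.0, 4.0):
        print(t, comparison_bound(t, 10.0, 1.0, 2.0, 0.8))
    print("entry time:", entry_time(10.0, 1.0, 2.0, 0.8))
```

With these hypothetical values, the bound decreases from v0 toward C/λ, so trajectories starting in a bounded set enter any ball of radius slightly larger than C/λ after a finite time, which is the mechanism behind the absorbing set.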

6. Synchronization Phenomena

Before analyzing the synchronization problems, we will examine the uniform boundedness of the solution in .

6.1. Uniform Boundedness in

In this section, we prove a result on the ultimate uniform boundedness of the solution in

Lemma 7.

For all , we have the following results.

- (i)

- ;

- (ii)

Proof.

Since , then (since )

Because , we derive For (ii), we have . Since (from Young’s inequality and the fact that , for )

we obtain that

This completes the proof. □

Theorem 3.

Proof.

As is an increasing function, the primitive is convex and we have, from [68] (since is independent of time), Consequently, by taking the scalar product of the first and third equations of system (1) with and , respectively, and adding these equations for , we can deduce (according to Lemma 7)

where and (by hypothesis).

Since , we have and ; then (by using Young’s inequality),

From (20), (22) and (23), we can deduce

with to be chosen appropriately. In particular, we can deduce

where and

, with for an appropriate .

In the sequel, we assume that is in a bounded set of , and then, from Theorem 3, there exists , such that for , is in a ball of , where depend on some given parameters of the problem.

6.2. Local Complete Synchronization

In this section, we assume that and we consider the local synchronization of the solutions of (1), that is, whether the synchronous state is robust to perturbations whenever the initial conditions belong to some appropriate open and bounded set. Set , and on . Then, is a solution of

with the boundary conditions

Theorem 4.

Under the assumptions of Theorem 3, if there exist appropriate constants and , with , such that

where , and (which can be appropriately and arbitrarily selected), then the response system (1) is locally completely (Mittag–Leffler) synchronized in at a uniform Mittag–Leffler rate.

Proof.

Multiply (28) by the function and integrate the resulting system over all of to obtain (according to (29) and Lemma 4)

Since we have (because, for any , , and ), according to assumption (H1):

Then (from Young’s inequality),

where is any positive parameter (to be chosen appropriately).

Consequently (by summing the first and the second inequalities of (33)),

We take such that , i.e., . From Gagliardo–Nirenberg inequalities, we have that there exists such that () . Then,

where (since for all , ). Thus, the previous relations with (34) yield the following inequalities:

By summing (for all ), we can deduce that (according to Lemma 4)

By adding the above two inequalities, we can deduce that

From the Poincaré–Steklov inequality, we can deduce that

and then,

Since, from (30), there exist appropriate constants and such that

and

it holds that (for )

where , and .

Consequently (from Lemma 6),

where is the Mittag–Leffler synchronization rate and is given by

Finally, □

Corollary 1.

Assume that there exist appropriate positive constants and , for with , such that

where , and is any positive parameter (to be chosen appropriately). Then, the response system (1) is locally completely (Mittag–Leffler) synchronized in at a uniform Mittag–Leffler rate.
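The effect of a coupling threshold on complete synchronization can be observed on a toy example. The sketch below is not the reaction–diffusion system (1): it assumes two scalar nodes obeying D^α u_i = −u_i + a tanh(u_i) + σ(u_j − u_i), for which the error u_1 − u_2 is expected to decay at a Mittag–Leffler rate when 1 + 2σ > a. The time stepping is a semi-implicit L1 scheme (linear terms implicit, tanh explicit), and all parameter values and names (`sigma`, `a`, `simulate_pair`) are hypothetical.

```python
import math


def l1_weights(n, alpha):
    """L1 weights b_k = (k+1)^(1-alpha) - k^(1-alpha), k = 0..n-1."""
    return [(k + 1) ** (1.0 - alpha) - k ** (1.0 - alpha) for k in range(n)]


def simulate_pair(alpha=0.8, sigma=1.0, a=0.5, u0=(1.0, 0.2), t_end=5.0, steps=500):
    """Integrate D^alpha u_i = -u_i + a*tanh(u_i) + sigma*(u_j - u_i), i != j.

    Returns the synchronization errors |u_1 - u_2| at every time level.
    """
    tau = t_end / steps
    mu = tau ** (-alpha) / math.gamma(2.0 - alpha)
    b = l1_weights(steps, alpha)
    u = [list(u0)]  # u[n] = [u_1(t_n), u_2(t_n)]
    for n in range(1, steps + 1):
        prev = u[n - 1]
        # History part of the L1 approximation of the Caputo derivative.
        hist = [0.0, 0.0]
        for k in range(1, n):
            for i in range(2):
                hist[i] += b[k] * (u[n - k][i] - u[n - k - 1][i])
        rhs = [mu * (prev[i] - hist[i]) + a * math.tanh(prev[i]) for i in range(2)]
        # Solve the 2x2 system (mu + 1 + sigma) x_i - sigma x_j = rhs_i.
        d = mu + 1.0 + sigma
        det = d * d - sigma * sigma
        x1 = (d * rhs[0] + sigma * rhs[1]) / det
        x2 = (sigma * rhs[0] + d * rhs[1]) / det
        u.append([x1, x2])
    return [abs(p[0] - p[1]) for p in u]


if __name__ == "__main__":
    errors = simulate_pair()
    print("initial error:", errors[0], "final error:", errors[-1])
```

The decay observed here is algebraic in time rather than exponential, which is the typical signature of Mittag–Leffler synchronization for fractional orders below one.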

6.3. Master–Slave Synchronization via Pinning Control

The goal of pinning control is to synchronize the whole memristor-based neural network by controlling a selected subset of its neurons. Without loss of generality, we may assume that the first q () neurons are pinned. Controlled synchronization refers to the case in which the synchronization phenomenon is artificially induced by a suitably designed control law. In order to explore the synchronization behavior via pinning control, we introduce the slave system corresponding to the master system (1) by (for )

where, for , are reasonable controllers and satisfies the assumption (2). The initial and boundary conditions of (38) are

Introduce now the symmetric matrix such that if and . Then .
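The role of a symmetrized coupling matrix in pinning arguments can be sketched as follows. The code assumes, for illustration only, that the symmetric matrix is the graph Laplacian built from the averaged weights (w_ij + w_ji)/2 and that pinning adds nonnegative gains on the diagonal entries of the pinned nodes; the exact matrices used in the paper may differ. The weight matrix `W`, the gains, and the helper names are hypothetical. Positive definiteness is tested by attempting a Cholesky factorization.

```python
def symmetrized_laplacian(weights):
    """Graph Laplacian of the symmetrized weights (w_ij + w_ji) / 2."""
    n = len(weights)
    lap = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                w = 0.5 * (weights[i][j] + weights[j][i])
                lap[i][j] = -w
                lap[i][i] += w
    return lap


def is_positive_definite(matrix, tol=1e-12):
    """Return True if a Cholesky factorization of the symmetric matrix succeeds."""
    n = len(matrix)
    chol = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = matrix[i][j] - sum(chol[i][k] * chol[j][k] for k in range(j))
            if i == j:
                if s <= tol:  # zero or negative pivot: not positive definite
                    return False
                chol[i][i] = s ** 0.5
            else:
                chol[i][j] = s / chol[j][j]
    return True


def pinned_matrix(weights, gains):
    """Symmetrized Laplacian plus diag(gains); gains[i] = 0 for unpinned nodes."""
    lap = symmetrized_laplacian(weights)
    for i, g in enumerate(gains):
        lap[i][i] += g
    return lap


# Hypothetical 4-node directed network; only node 0 is pinned in the second call.
W = [[0.0, 1.0, 0.0, 0.0],
     [0.0, 0.0, 2.0, 0.0],
     [0.0, 0.0, 0.0, 1.0],
     [1.5, 0.0, 0.5, 0.0]]
unpinned_ok = is_positive_definite(pinned_matrix(W, [0.0, 0.0, 0.0, 0.0]))
pinned_ok = is_positive_definite(pinned_matrix(W, [3.0, 0.0, 0.0, 0.0]))
```

For a connected symmetrized graph, the Laplacian alone is only positive semidefinite, while pinning a single node with a positive gain already makes the matrix positive definite; this is why controlling a few neurons can be sufficient.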

Remark 6.

We can prove easily that (for )

and

Set , , , , and .

Thus, from (38), (39), (1), (5), and (6), we can deduce that (for )

with the initial and boundary conditions of (38) (for )

6.3.1. Feedback Control

In this section, we assume that the control is a feedback law.

Theorem 5.

Assume that the assumptions of Theorem 3 hold. Suppose that the pinning feedback control functions (for ) satisfy

where (for ) and (for ) are positive constants, and , for . Then, if there exist appropriate constants and , for , such that (with )

where is any positive parameter (to be chosen appropriately) and

the master–slave systems (1) and (42) can achieve, in , Mittag–Leffler synchronization via the pinning feedback control functions .

Proof.

Multiply (42) by the function and integrate the resulting system over all of to obtain (according to (43) and Lemma 4)

By using a similar argument as in (32), we can deduce that

Then (according to (48), (3), (2), and assumptions (H1)):

where is any positive parameter (to be chosen appropriately).

Combining the first and second inequalities of (49), we obtain

By taking such that and by using similar arguments to derive (34), we can deduce that

with .

Substituting (50) into the previous inequalities, we obtain

Adding the above inequalities, we can write

From (41), we can deduce that

According to Poincaré–Steklov, we obtain (using the expression of )

Let us put

Then (with )

From (44), we can deduce (with )

According to assumption (45), we can deduce that

where . Then, by Lemma 6, we can deduce that

where . Hence, the error system (42) is globally asymptotically stable, and the master system (1) and the response system (38) are globally (Mittag–Leffler) synchronized in under the feedback controllers (at a uniform Mittag–Leffler rate). This completes the proof. □

Corollary 2.

Assume that the assumptions of Theorem 3 hold. Suppose that the pinning feedback control functions (for ) satisfy assumption (44). Then, if , for , and if there exist diagonal matrices and with strictly positive diagonal terms such that

Example 1.

The pinning feedback control functions can have, for example, the following forms.

- - First form:

- where (for ) and (for ) are arbitrary positive constants, and , for . We prove easily that satisfies the condition (44) (for ).

- - Second form:

- where if and otherwise, , (for ) and (for ) are arbitrary positive constants, and , for . For this example, we have if and then Consequently, we obtain that

6.3.2. Adaptive Control

Now, we will consider the case when the pinning controllers are defined as

where and are solutions of (for and )

and and , for , with , , and positive constants (for and ). Finally, we consider the following functions (for ):

where , for , , for , and , for .

Theorem 6.

When the assumptions of Theorem 3 are satisfied, the master–slave system can achieve Mittag–Leffler synchronization via pinning feedback controllers (58) if there exist appropriate positive constants and (for ) such that (with )

where is any positive parameter (to be chosen appropriately), , , the matrices , and , which depend on the parameter are given by (46) and the matrix .

Proof.

According to (58) and (59), we can have that (from the expression of ) with

Consequently, we derive (according to (55))

and then

with . According to assumption (61), we can deduce that

where .

Then, by Lemma 4.3 of [33], there exists a such that (for )

where and h is any positive constant. Consequently,

We end this analysis with the following corollary (of Theorem 6).

Corollary 3.

When the assumptions of Theorem 3 are satisfied, the master–slave system can achieve Mittag–Leffler synchronization via pinning feedback controllers (58) if (for all ) and if there exist diagonal matrices and with strictly positive diagonal terms such that and are positive-definite matrices.

7. Conclusions

Recent studies in neuroscience have shown the need to develop and implement reliable mathematical models in order to understand and effectively analyze the various neurological activities and disorders in the human brain. In this article, we mainly investigate the long-time behavior of the proposed fractional-order complex memristive neural network model in asymmetrically coupled networks, and the Mittag–Leffler synchronization problems for such coupled dynamical systems when different types of interactions are simultaneously present. A new mathematical brain connectivity model, taking into account the memory characteristics of neurons and their past history, the heterogeneity of brain tissue, and the local anisotropy of cell diffusion, is developed. This model is a set of coupled nonlinear Caputo fractional reaction–diffusion equations, in the shape of a fractional-order differential equation coupled with a set of time fractional-order partial differential equations, interacting via an asymmetric complex network. The existence and uniqueness of the weak solution, as well as a regularity result, are established under assumptions on the nonlinear terms. The existence of some absorbing sets for this model is established, and then the dissipative dynamics of the model (with absorbing sets) are shown. Finally, some synchronization problems are investigated. A few synchronization criteria are derived to obtain some Mittag–Leffler synchronizations for such complex dynamical networks. More precisely, some sufficient conditions are obtained first for the complete synchronization problem and then for the master–slave synchronization problem via appropriate pinning feedback controllers and adaptive controllers.

The developed analysis in this work can be further applied to extended impulsive models by using the approach developed in [16]. Moreover, it would be interesting to extend this study to synchronization problems for fractional-order coupled dynamical networks in the presence of disturbances and multiple time-varying delays. For predicting and acting on phenomena and undesirable behavior (as opposed to a synchronous state) occurring in brain network dynamics, the established synchronization results can be applied in the study of robustness behavior of uncertain fractional-order neural network models by considering the approach developed in [61].

The future objective is to simulate and validate numerically the developed theoretical results. These studies will be the subject of a forthcoming paper. Given the stochastic nature of synapses (and hence the presence of noise), it would also be interesting to investigate the stochastic processes in the brain’s neural network and their impact on the synchronization of network dynamics.

Funding

This research received no external funding.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The author declares no conflict of interest.

Appendix A

Proof of Theorem 1.

To establish the existence result of a weak solution to system (1), we proceed as in [39] by applying the Faedo–Galerkin method.

Let be a Hilbert basis and orthogonal in of . For all , we denote by the space generated by , and we introduce the orthogonal projector on the spaces . For each N, we would like to define the approximate solution of problem (1). Setting

where are unknown functions, and replacing by in (1), we obtain and , the system of Galerkin equations ():

where satisfies (by construction)

- Step 1. We show first that, for every N, the system (A1) admits a local solution. The system (A1) is equivalent to an initial value problem for a system of nonlinear fractional differential equations for functions , in which the nonlinear term is a Carathéodory function. The existence of a local absolutely continuous solution on interval , with , is ensured by the standard FODE theory (see, e.g., [69,70]). Thus, we have a local solution of (A1) on .

- Step 2. We next derive a priori estimates for functions , which entail that , by applying Step 1 iteratively. For simplicity, in the next step, we omit the “ ” on T. Now, we set

where , and are absolutely continuous coefficients.

Then, from (A1), the approximate solution satisfies the following weak formulation:

Take and add these equations for .

We obtain, according to Lemma 4 and assumption ,

From Lemma 3 and assumption , we can deduce that

where , and to be chosen appropriately.

Consequently (from the assumption (13)),

and

with the constants chosen appropriately and such that (by hypothesis).

Choosing and such that and , we can deduce, by summing the previous three inequalities:

where and . Because , we have

we can deduce that

and then we have (from (A7))

where , and .

In particular, we have

From Lemma 6 and the uniform boundedness of (from (A2)), we can deduce that (for all )

and then is uniformly bounded with respect to N. This ensures that, for ,

Moreover, from the inequality of (A9), relations (A32) and (A2), we have that for (since )

We can deduce that

Hence (since and , then )

Now we estimate the fractional derivative .

Taking , and in (A3) and using uniform coercivity of forms and , we can deduce

According to assumptions (H1)-(H2) and the boundedness of function tanh, we obtain from (A16) that

Then, from (A12)–(A15), the regularity of , and the continuous embedding of in , we can derive (according to Young and Hölder inequalities)

Moreover, since is an increasing function, the primitive of is convex. Hence, from [68], we can deduce (since is independent of time)

Now, we estimate the following term

From we have and and then (according to the regularity of and (A12))

Consequently,

According to (A12)–(A15), the continuous embedding of in , and the uniform boundedness of quantities , , and in , we obtain from the third equation of (A19) that the sequence is uniformly bounded in and we can derive the following estimate (for all ):

We now prove that is uniformly bounded in .

Since satisfies , then, we have

This implies (since )

and then (using Minkowski inequality and the continuous embedding of in )

Using (A12), (A14) and uniform boundedness of quantities in (from (A2)), we obtain and then (from Lemma 5)

This estimate enables us to say that

In order to prove that the local solution can be extended to the whole interval , we use the following process. We suppose that a solution of (A1) on has already been defined and we derive the local solution on (where is small enough) by making use of the a priori estimates and the fractional derivative with starting point . By this iterative process, we deduce that the Faedo–Galerkin solutions are well defined on the interval . We omit the details.

- Step 3. We can now show the existence of weak solutions to (1). From results (A12), (A15), (A21) and (A20), Theorem A1 and compactness argument, it follows that there exist and such that there exists a subsequence of also denoted by , such that

First, we show that exists in the weak sense and that . Indeed, we take and (then ).

Then, by the weak convergence and Lebesgue’s dominated convergence arguments, we have

Consequently, in the weak sense. In the same way, we prove, in the weak sense, that .

Consider and . According to (A1), we can deduce that

According to (A14), (A23) and to density properties of the space spanned by , and using similar arguments as used to obtain relation (A24), passing to the limit when N goes to infinity is easy for the linear terms. For passing to the limit () in the nonlinear terms, which requires hypothesis , we can use the standard technique, which consists of writing the difference between the sequence and its limit as the sum of two quantities such that the first uses the strong convergence property and the second uses the weak convergence property. We omit the details.

Thus, the limiting function satisfies the system (for any elements and of )

In the case of , the continuity of the solution at and the equalities , and are consequences of Lemma A2. This completes the proof of the existence result.

For the uniqueness result, let be given such that and , for . Let be the weak solutions to (1) (for ), which correspond to data , and . According to Lemma 4 and assumption , we can deduce (for ) the following relation:

Because for any , and , we can deduce according to assumptions (H1)–(H2) (since and , for , are in )

Then, by summing for all in (A26), we can deduce that (using the Minkowski inequality, continuous embedding of in , and boundedness of and in )

Consequently,

where and . So

By Lemma 5, we can deduce (since , for ). This completes the proof. □
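The Faedo–Galerkin construction used in this proof can be illustrated on a much simpler model problem. The sketch below assumes a scalar time-fractional diffusion equation D^α u = d u_xx on (0, 1) with homogeneous Dirichlet conditions (not the coupled system (1)), for which the sine functions form an orthogonal basis and each Galerkin coefficient solves the decoupled fractional ODE D^α c_k = −d (kπ)^2 c_k. Each mode is advanced with the implicit L1 discretization of the Caputo derivative. The function names, the diffusion constant, and the initial coefficients are hypothetical.

```python
import math


def l1_solve_mode(lam, c0, alpha, t_end, steps):
    """Implicit L1 scheme for D^alpha c = -lam * c, c(0) = c0.

    Returns the list of approximations of c at t_n = n * t_end / steps.
    """
    tau = t_end / steps
    mu = tau ** (-alpha) / math.gamma(2.0 - alpha)
    b = [(k + 1) ** (1.0 - alpha) - k ** (1.0 - alpha) for k in range(steps)]
    c = [c0]
    for n in range(1, steps + 1):
        # Contribution of the past increments (memory of the Caputo derivative).
        hist = sum(b[k] * (c[n - k] - c[n - k - 1]) for k in range(1, n))
        c.append(mu * (c[n - 1] - hist) / (mu + lam))
    return c


def galerkin_solution(coeffs0, diffusion, alpha, t_end, steps):
    """Return x -> u_N(x, t_end) = sum_k c_k(t_end) sin(k pi x) on (0, 1)."""
    final = []
    for k, c0 in enumerate(coeffs0, start=1):
        lam = diffusion * (k * math.pi) ** 2  # eigenvalue of the k-th sine mode
        final.append(l1_solve_mode(lam, c0, alpha, t_end, steps)[-1])
    return lambda x: sum(ck * math.sin(k * math.pi * x)
                         for k, ck in enumerate(final, start=1))


if __name__ == "__main__":
    # Two-mode approximation with hypothetical initial coefficients.
    u = galerkin_solution([1.0, 0.5], diffusion=0.1, alpha=0.8, t_end=1.0, steps=400)
    print(u(0.25), u(0.5))
```

In this toy setting every coefficient stays bounded by its initial value uniformly in the number of modes, which mirrors the role of the a priori estimates of Step 2; the nonlinear coupled case additionally requires the compactness argument of Step 3.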

Appendix B

The purpose of what follows is to recall some basic definitions and results of fractional integrals and derivatives in the Riemann–Liouville sense and Caputo sense. We start from a formal level and, for a given Banach space X, we consider a sufficiently smooth function with values in X, (with ). Each fractional-order parameter is assumed to be in .

Definition A1.

For each fractional-order parameter γ, the forward and backward γth-order Riemann–Liouville fractional integrals are defined, respectively, by ( and )

where is the Euler Γ-function.

For any fractional-order parameters and , the following equality for the fractional integral

holds for an -function f ().
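The definition of the forward Riemann–Liouville integral and the composition rule above can be checked numerically. The sketch below assumes the interval starts at 0 and uses a product midpoint rule in which the weakly singular kernel (t − s)^(γ−1) is integrated exactly on each subinterval. It compares the result with the classical power rule I^γ s^p = Γ(p+1)/Γ(p+γ+1) t^(p+γ); the function names and the test values of the orders are illustrative.

```python
import math


def rl_integral(f, t, gamma, n=2000):
    """Forward Riemann-Liouville integral (I^gamma f)(t) on [0, t].

    Product midpoint rule: the kernel (t - s)^(gamma - 1) is integrated
    exactly on each subinterval and f is frozen at the subinterval midpoint.
    """
    h = t / n
    total = 0.0
    for j in range(n):
        left, right = j * h, (j + 1) * h
        # max(..., 0.0) guards against a tiny negative base from rounding.
        weight = ((t - left) ** gamma - max(t - right, 0.0) ** gamma) / gamma
        total += f(0.5 * (left + right)) * weight
    return total / math.gamma(gamma)


def power_rule(p, gamma, t):
    """Closed form of I^gamma applied to s -> s^p, evaluated at t."""
    return math.gamma(p + 1.0) / math.gamma(p + gamma + 1.0) * t ** (p + gamma)


if __name__ == "__main__":
    # Power rule for f(s) = s with gamma = 0.5.
    print(rl_integral(lambda s: s, 1.0, 0.5), power_rule(1.0, 0.5, 1.0))
    # Composition rule: I^0.4 applied to I^0.3 f should match I^0.7 f.
    inner = lambda s: power_rule(1.0, 0.3, s)
    print(rl_integral(inner, 1.0, 0.4), power_rule(1.0, 0.7, 1.0))
```

The second comparison is an instance of the composition (semigroup) property: integrating of order 0.3 and then of order 0.4 agrees, up to quadrature error, with a single integration of order 0.7.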

Definition A2.

For each fractional-order parameter γ, the γth-order Riemann–Liouville and γth-order Caputo fractional derivatives on are defined, respectively, by ()

From (A30), we can derive the following relation

Definition A3.

For each fractional-order parameter γ, the backward γth-order Riemann–Liouville and backward γth-order Caputo fractional derivatives, on are defined, respectively, by ( and )

Remark A1.

- For , the forward (respectively, backward) γth-order Riemann–Liouville and Caputo fractional derivatives of f converge to the classical derivative (respectively, to ). Moreover, the γth-order Riemann–Liouville fractional derivative of constant function (with k a constant) is not 0, since

- We can show that the difference between the Riemann–Liouville and Caputo fractional derivatives depends only on the values of f at the endpoint. More precisely, for , we have ( and )
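For reference, the two facts mentioned in this remark take the following standard form in the forward case. The notation is an assumption made here for illustration (it may differ from that of Definitions A2 and A3): the derivatives are taken on an interval starting at 0, with 0 < γ < 1 and f absolutely continuous.

```latex
% Assumed notation: {}^{RL}D^{\gamma}_{0^+} and {}^{C}D^{\gamma}_{0^+} denote the forward
% Riemann--Liouville and Caputo derivatives on [0,T], with 0 < \gamma < 1.
\begin{align*}
  {}^{RL}D^{\gamma}_{0^+}\, k
    &= \frac{k\, t^{-\gamma}}{\Gamma(1-\gamma)} \neq 0
    \qquad (k \neq 0 \ \text{constant},\ t > 0), \\
  {}^{RL}D^{\gamma}_{0^+} f(t)
    &= {}^{C}D^{\gamma}_{0^+} f(t) + \frac{f(0)\, t^{-\gamma}}{\Gamma(1-\gamma)} .
\end{align*}
```

In particular, the two derivatives coincide when f(0) = 0, and the Caputo derivative of a constant vanishes.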

From [71], we have the following Lemma.

Lemma A1

(Continuity properties of fractional integral in spaces on ). The fractional integral is a continuous operator from:

- (i)

- into , for any ;

- (ii)

- into , for any and ;

- (iii)

- into , for any ;

- (iv)

- into , for any ;

- (v)

- into .

From Lemma A1 and (A32), we can deduce the following corollary.

Lemma A2.

Let X be a Banach space and . Suppose the Caputo derivative and , then .

We also recall the fractional integration by parts formulas (see, e.g., [64,72]):

Lemma A3.

Let and with . Then

- (i)

- if f is an -function on with values in X and g is an -function on with values in X, then

- (ii)

- if and , then

Lemma A4.

Let , g be an -function on with values in X (for ) and f be an absolutely continuous function on with values in X. Then,

- (i)

- (ii)

- (iii)

We end this appendix by giving a compactness theorem in Hilbert spaces. Assume that , , and are Hilbert spaces with

We define the Hilbert space , for a given , by

endowed with the norm For any subset K of , we define the subspace of by

As, e.g., in [39], we have the following compactness result.

Theorem A1.

Let , , and be Hilbert spaces with the injection (A35). Then, for any bounded set K and any , the injection of into is compact.

References

- Sporns, O.; Zwi, J.D. The small world of the cerebral cortex. Neuroinformatics 2004, 2, 145–162.

- Barrat, A.; Barthelemy, M.; Vespignani, A. Dynamical Processes on Complex Networks; Cambridge University Press: Cambridge, UK, 2008.

- Belmiloudi, A. Mathematical modeling and optimal control problems in brain tumor targeted drug delivery strategies. Int. J. Biomath. 2017, 10, 1750056.

- Venkadesh, S.; Horn, J.D.V. Integrative Models of Brain Structure and Dynamics: Concepts, Challenges, and Methods. Front. Neurosci. 2021, 15, 752332.

- Craddock, R.C.; Jbabdi, S.; Yan, C.G.; Vogelstein, J.T.; Castellanos, F.X.; Di Martino, A.; Kelly, C.; Heberlein, K.; Colcombe, S.; Milham, M.P. Imaging human connectomes at the macroscale. Nat. Methods 2013, 10, 524–539.

- Deco, G.; McIntosh, A.R.; Shen, K.; Hutchison, R.M.; Menon, R.S.; Everling, S.; Hagmann, P.; Jirsa, V.K. Identification of optimal structural connectivity using functional connectivity and neural modeling. J. Neurosci. 2014, 34, 7910–7916.

- Damascelli, M.; Woodward, T.S.; Sanford, N.; Zahid, H.B.; Lim, R.; Scott, A.; Kramer, J.K. Multiple functional brain networks related to pain perception revealed by fMRI. Neuroinformatics 2022, 20, 155–172.

- Hipp, J.F.; Engel, A.K.; Siegel, M. Oscillatory synchronization in large-scale cortical networks predicts perception. Neuron 2011, 69, 387–396.

- Varela, F.; Lachaux, J.P.; Rodriguez, E.; Martinerie, J. The brainweb: Phase synchronization and large-scale integration. Nat. Rev. Neurosci. 2001, 2, 229–239.

- Brookes, M.J.; Woolrich, M.; Luckhoo, H.; Price, D.; Hale, J.R.; Stephenson, M.C.; Barnes, G.R.; Smith, S.M.; Morris, P.G. Investigating the electrophysiological basis of resting state networks using magnetoencephalography. Proc. Natl. Acad. Sci. USA 2011, 108, 16783–16788.

- Das, S.; Maharatna, K. Fractional dynamical model for the generation of ECG like signals from filtered coupled Van-der Pol oscillators. Comput. Methods Programs Biomed. 2013, 122, 490–507.

- Schirner, M.; Kong, X.; Yeo, B.T.; Deco, G.; Ritter, P. Dynamic primitives of brain network interaction. NeuroImage 2022, 250, 118928.

- Axmacher, N.; Mormann, F.; Fernández, G.; Elger, C.E.; Fell, J. Memory formation by neuronal synchronization. Brain Res. Rev. 2006, 52, 170–182.

- Breakspear, M. Dynamic models of large-scale brain activity. Nat. Neurosci. 2017, 20, 340–352.

- Lundstrom, B.N.; Higgs, M.H.; Spain, W.J.; Fairhall, A.L. Fractional differentiation by neocortical pyramidal neurons. Nat. Neurosci. 2008, 11, 1335–1342.

- Belmiloudi, A. Dynamical behavior of nonlinear impulsive abstract partial differential equations on networks with multiple time-varying delays and mixed boundary conditions involving time-varying delays. J. Dyn. Control Syst. 2015, 21, 95–146.

- Gilding, B.H.; Kersner, R. Travelling Waves in Nonlinear Diffusion-Convection Reaction; Birkhäuser: Basel, Switzerland, 2012.

- Kondo, S.; Miura, T. Reaction-diffusion model as a framework for understanding biological pattern formation. Science 2010, 329, 1616–1620.

- Babiloni, C.; Lizio, R.; Marzano, N.; Capotosto, P.; Soricelli, A.; Triggiani, A.I.; Cordone, S.; Gesualdo, L.; Del Percio, C. Brain neural synchronization and functional coupling in Alzheimer’s disease as revealed by resting state EEG rhythms. Int. J. Psychophysiol. 2016, 103, 88–102.

- Lehnertz, K.; Bialonski, S.; Horstmann, M.T.; Krug, D.; Rothkegel, A.; Staniek, M.; Wagner, T. Synchronization phenomena in human epileptic brain networks. J. Neurosci. Methods 2009, 183, 42–48.

- Schnitzler, A.; Gross, J. Normal and pathological oscillatory communication in the brain. Nat. Rev. Neurosci. 2005, 6, 285–296.

- Touboul, J.D.; Piette, C.; Venance, L.; Ermentrout, G.B. Noise-induced synchronization and antiresonance in interacting excitable systems: Applications to deep brain stimulation in Parkinson’s disease. Phys. Rev. X 2020, 10, 011073.

- Uhlhaas, P.J.; Singer, W. Neural synchrony in brain disorders: Relevance for cognitive dysfunctions and pathophysiology. Neuron 2006, 52, 155–168.

- Bestmann, S. (Ed.) Computational Neurostimulation; Elsevier: Amsterdam, The Netherlands, 2015.

- Chua, L.O. Memristor-the missing circuit element. IEEE Trans. Circuit Theory 1971, 18, 507–519.

- Lin, H.; Wang, C.; Tan, Y. Hidden extreme multistability with hyperchaos and transient chaos in a Hopfield neural network affected by electromagnetic radiation. Nonlinear Dyn. 2020, 99, 2369–2386.

- Njitacke, Z.T.; Kengne, J.; Fotsin, H.B. A plethora of behaviors in a memristor based Hopfield neural networks (HNNs). Int. J. Dyn. Control 2019, 7, 36–52.

- Farnood, M.B.; Shouraki, S.B. Memristor-based circuits for performing basic arithmetic operations. Procedia Comput. Sci. 2011, 3, 128–132.

- Jo, S.H.; Chang, T.; Ebong, I.; Bhadviya, B.B.; Mazumder, P.; Lu, W. Nanoscale memristor device as synapse in neuromorphic systems. Nano Lett. 2010, 10, 1297–1301.

- Snider, G.S. Cortical computing with memristive nanodevices. SciDAC Rev. 2008, 10, 58–65.

- Anbalagan, P.; Ramachandran, R.; Alzabut, J.; Hincal, E.; Niezabitowski, M. Improved results on finite-time passivity and synchronization problem for fractional-order memristor-based competitive neural networks: Interval matrix approach. Fractal Fract. 2022, 6, 36.

- Bao, G.; Zeng, Z.G.; Shen, Y.J. Region stability analysis and tracking control of memristive recurrent neural network. Neural Netw. 2018, 98, 51–58.

- Chen, J.; Chen, B.; Zeng, Z. O(t-α)-synchronization and Mittag-Leffler synchronization for the fractional-order memristive neural networks with delays and discontinuous neuron activations. Neural Netw. 2018, 100, 10–24.

- Rakkiyappan, R.; Sivaranjani, K.; Velmurugan, G. Passivity and passification of memristor-based complex-valued recurrent neural networks with interval time-varying delays. Neurocomputing 2014, 144, 391–407.

- Takembo, C.N.; Mvogo, A.; Ekobena Fouda, H.P.; Kofané, T.C. Effect of electromagnetic radiation on the dynamics of spatiotemporal patterns in memristor-based neuronal network. Nonlinear Dyn. 2018, 95, 1067–1078.

- Tu, Z.; Wang, D.; Yang, X.; Cao, J. Lagrange stability of memristive quaternion-valued neural networks with neutral items. Neurocomputing 2020, 399, 380–389.

- Zhu, S.; Bao, H. Event-triggered synchronization of coupled memristive neural networks. Appl. Math. Comput. 2022, 415, 126715.

- Baleanu, D.; Lopes, A.M. (Eds.) Applications in engineering, life and social sciences. In Handbook of Fractional Calculus with Applications; De Gruyter: Berlin, Germany, 2019.

- Belmiloudi, A. Cardiac memory phenomenon, time-fractional order nonlinear system and bidomain-torso type model in electrocardiology. AIMS Math. 2021, 6, 821–867.

- Hilfer, R. Applications of Fractional Calculus in Physics; World Scientific: Singapore, 2000.

- Maheswari, M.L.; Shri, K.S.; Sajid, M. Analysis on existence of system of coupled multifractional nonlinear hybrid differential equations with coupled boundary conditions. AIMS Math. 2024, 9, 13642–13658.

- Magin, R.L. Fractional calculus models of complex dynamics in biological tissues. Comput. Math. Appl. 2010, 59, 1586–1593.

- West, B.J.; Turalska, M.; Grigolini, P. Networks of Echoes: Imitation, Innovation and Invisible Leaders; Springer: Berlin/Heidelberg, Germany, 2014.

- Caputo, M. Linear models of dissipation whose Q is almost frequency independent. II. Fract. Calc. Appl. Anal. 2008, 11, 414, Reprinted from Geophys. J. R. Astr. Soc. 1967, 13, 529–539.

- Ermentrout, G.B.; Terman, D.H. Mathematical Foundations of Neuroscience; Springer: Berlin/Heidelberg, Germany, 2010.

- Izhikevich, E.M. Dynamical Systems in Neuroscience; The MIT Press: Cambridge, MA, USA, 2007.

- Osipov, G.V.; Kurths, J.; Zhou, C. Synchronization in Oscillatory Networks; Springer: Berlin/Heidelberg, Germany, 2007.

- Wu, C.W. Synchronization in Complex Networks of Nonlinear Dynamical Systems; World Scientific: Singapore, 2007.

- Ambrosio, B.; Aziz-Alaoui, M.; Phan, V.L. Large time behaviour and synchronization of complex networks of reaction–diffusion systems of FitzHugh-Nagumo type. IMA J. Appl. Math. 2019, 84, 416–443.

- Ding, K.; Han, Q.-L. Synchronization of two coupled Hindmarsh-Rose neurons. Kybernetika 2015, 51, 784–799.

- Huang, Y.; Hou, J.; Yang, E. Passivity and synchronization of coupled reaction-diffusion complex-valued memristive neural networks. Appl. Math. Comput. 2020, 379, 125271.

- Miranville, A.; Cantin, G.; Aziz-Alaoui, M.A. Bifurcations and synchronization in networks of unstable reaction–diffusion system. J. Nonlinear Sci. 2021, 6, 44.

- Yang, X.; Cao, J.; Yang, Z. Synchronization of coupled reaction-diffusion neural networks with time-varying delays via pinning-impulsive controllers. SIAM J. Contr. Optim. 2013, 51, 3486–3510.

- You, Y. Exponential synchronization of memristive Hindmarsh–Rose neural networks. Nonlinear Anal. Real World Appl. 2023, 73, 103909.

- Hymavathi, M.; Ibrahim, T.F.; Ali, M.S.; Stamov, G.; Stamova, I.; Younis, B.A.; Osman, K.I. Synchronization of fractional-order neural networks with time delays and reaction-diffusion Terms via Pinning Control. Mathematics 2022, 10, 3916.

- Li, W.; Gao, X.; Li, R. Dissipativity and synchronization control of fractional-order memristive neural networks with reaction-diffusion terms. Math. Methods Appl. Sci. 2019, 42, 7494–7505.

- Wu, X.; Liu, S.; Wang, H.; Wang, Y. Stability and pinning synchronization of delayed memristive neural networks with fractional-order and reaction–diffusion terms. ISA Trans. 2023, 136, 114–125.

- Tonnesen, J.; Hrabetov, S.; Soria, F.N. Local diffusion in the extracellular space of the brain. Neurobiol. Dis. 2023, 177, 105981.

- Adams, R.A. Sobolev Spaces; Academic Press: New York, NY, USA, 1975.

- Ern, A.; Guermond, J.L. Finite Elements I: Approximation and Interpolation, Texts in Applied Mathematics; Springer: Cham, Switzerland, 2021.

- Belmiloudi, A. Stabilization, Optimal and Robust Control: Theory and Applications in Biological and Physical Sciences; Springer: Berlin/Heidelberg, Germany, 2008.

- Belykh, I.; Belykh, V.N.; Hasler, M. Synchronization in asymmetrically coupled networks with node balance. Chaos 2006, 16, 015102.

- Ye, H.; Gao, J.; Ding, Y. A generalized Gronwall inequality and its application to a fractional differential equation. J. Math. Anal. Appl. 2007, 328, 1075–1081.

- Kilbas, A.A.; Srivastava, H.M.; Trujillo, J.J. Theory and Applications of Fractional Differential Equations; Elsevier: Amsterdam, The Netherlands, 2006.

- Gorenflo, R.; Kilbas, A.A.; Mainardi, F.; Rogosin, S.V. Mittag-Leffler Functions: Related Topics and Applications; Springer: Berlin/Heidelberg, Germany, 2014.

- Chueshov, I.D. Introduction to the Theory of Infinite-Dimensional Dissipative Systems; ACTA Scientific Publishing House: Kharkiv, Ukraine, 2002.

- Bauer, F.; Atay, F.M.; Jost, J. Synchronization in time-discrete networks with general pairwise coupling. Nonlinearity 2009, 22, 2333–2351.

- Li, L.; Liu, J.G. A generalized definition of Caputo derivatives and its application to fractional ODEs. SIAM J. Math. Anal. 2018, 50, 2867–2900.

- Diethelm, K.; Ford, N.J. Analysis of fractional differential equations. J. Math. Anal. Appl. 2002, 265, 229–248.

- Kubica, A.; Yamamoto, M. Initial-boundary value problems for fractional diffusion equations with time-dependent coefficients. Fract. Calc. Appl. Anal. 2018, 21, 276–311.

- Hardy, G.H.; Littlewood, J.E. Some properties of fractional integrals I. Math. Z. 1928, 27, 565–606.

- Zhou, Y. Basic Theory of Fractional Differential Equations; World Scientific: Singapore, 2014.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).