Abstract

As a new member of the NA (negatively associated) family, the m-AANA (m-asymptotically almost negatively associated) sequence has many statistical properties that have not yet been developed. This paper studies its properties in the gradual change point model. First, we propose a least-squares-type change point estimator; we then derive its consistency and convergence rates and provide its limit distributions. Interestingly, the convergence rates of the estimator are the same as those of the change point estimator for independent and identically distributed observations. Finally, the effectiveness of the estimator in finite samples is verified through several sets of simulation experiments and a real hydrological example.

Keywords:

least squares estimator; gradual change; m-AANA sequence; convergence rates; limit distributions

MSC:

62E20; 62D05

1. Introduction

Change point problems originally arose in quality control engineering [1]. Because real data sequences are often heterogeneous, the estimation and detection of change points in such sequences have drawn considerable attention, and many methods have been proposed for various data problems (see [2,3,4,5,6,7,8,9]). The purpose of change point detection and estimation is to divide a data sequence into several homogeneous segments, and the theory has been applied in many fields, such as finance [10], medicine [11], and the environment [12]. For some special problems, such as hydrological and meteorological problems, most of the change points that occur are gradual rather than abrupt. Therefore, research on gradual change problems is very meaningful.

In earlier research, most theories of gradual changes were derived from two-stage regression models [13,14]. Hušková [15] used the least-squares method to estimate an unknown gradual change point in the following model:

For , observations , , ⋯, shall satisfy:

where , , m is the location of the change, are i.i.d. random variables with , , for some . In the same year, Jarušková [16] conducted a log likelihood ratio test of the model on the basis of Hušková [15], and obtained that the asymptotic distribution of the test statistic is Gumbel distribution.

Later, Wang [17] extended the error terms from traditional i.i.d. sequences to long memory sequences and obtained the consistency of the estimator of the gradual mean change point and the limit distribution of the test statistic. Then, Timmermann [18] tested for gradual changes in general random processes and renewal processes, respectively, and also obtained the limit distribution of the test statistic. Most previous studies focus on abrupt changes. For some important time series, however, such as temperature and hydrological series, a gradual mean change is more likely.

Therefore, this paper considers the gradual change problem and the following model based on Hušková [15] is constructed:

where , , , ⋯, are observations. , , and is the unknown change point location. , are unknown parameters, with . are random variables with zero mean.

The least squares method is a classical method (see [19,20]). In model (1), the least-squares-type estimators of and are, respectively:

where:

For convenience of illustration, and are and , respectively.
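A least-squares-type estimator of this kind can be sketched numerically. The display formulas are not reproduced here, so the following is only a minimal sketch under the assumption that the mean follows Hušková's gradual change form, a constant plus `delta * ((i - k) / n)_+ ** gamma`. The function name `gradual_change_ls` and the names `gamma`, `k_hat` and `delta_hat` are illustrative and are not the paper's notation.

```python
import numpy as np


def gradual_change_ls(y, gamma):
    """Least-squares-type estimate of a gradual change point (sketch).

    Assumed model: y[i-1] = mu + delta * ((i - k) / n)_+ ** gamma + e_i,
    with gamma > 0. For each candidate k the intercept and slope are
    profiled out, so minimising the residual sum of squares over k is
    the same as maximising sxy**2 / sxx below.
    Returns (k_hat, delta_hat).
    """
    if gamma <= 0:
        raise ValueError("gamma must be positive")
    y = np.asarray(y, dtype=float)
    n = y.size
    i = np.arange(1, n + 1)
    best_k, best_score, best_delta = None, -np.inf, np.nan
    for k in range(1, n - 1):  # candidates k = 1, ..., n - 2
        x = np.clip((i - k) / n, 0.0, None) ** gamma  # ((i - k)/n)_+^gamma
        xc = x - x.mean()                             # centred regressor
        sxx = np.dot(xc, xc)
        sxy = np.dot(xc, y)
        score = sxy**2 / sxx                          # explained sum of squares
        if score > best_score:
            best_k, best_score, best_delta = k, score, sxy / sxx
    return best_k, best_delta


if __name__ == "__main__":
    # Noise-free toy series with a linear ramp starting after index 60.
    n = 100
    i = np.arange(1, n + 1)
    y = 1.0 + 2.0 * np.clip((i - 60) / n, 0.0, None)
    print(gradual_change_ls(y, gamma=1.0))
```

The loop costs O(n^2) operations, which is adequate for series of a few hundred observations; the exponent `gamma` is treated as known in this sketch.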

Most previous studies have one thing in common: the error terms are independent and identically distributed. However, independence is a strict constraint, and in practice many time series do not satisfy it. This raises the classical question of whether the error terms in the model can be generalized to more general cases. Therefore, this paper extends the error terms to m-AANA sequences. Before presenting the main asymptotic results, we recall the following definition:

Definition 1 ([21]).

There is a fixed integer , the random variable sequence is called an m-AANA sequence if there exists a nonnegative sequence as such that:

for all , and for all coordinatewise nondecreasing continuous functions f and g whenever the variances exist. The sequence is called the mixing coefficients of . It is not difficult to see that the NA family includes NA [22], m-NA [23], AANA [24], and independent sequences.
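For reference, the covariance condition in the definition of m-AANA sequences is usually stated in the literature in the following form. This is a sketch of the standard statement rather than a reproduction of the display above; the symbols $X_n$ for the random variables and $q(n)$ for the mixing coefficients are notation assumed here.

```latex
\operatorname{Cov}\bigl(f(X_n),\, g(X_{n+m}, \ldots, X_{n+k})\bigr)
  \le q(n)\,\Bigl[\operatorname{Var}\bigl(f(X_n)\bigr)\,
      \operatorname{Var}\bigl(g(X_{n+m}, \ldots, X_{n+k})\bigr)\Bigr]^{1/2},
  \qquad n \ge 1,\; k \ge m,
  \qquad q(n) \to 0 \ \text{as}\ n \to \infty .
```

With $m = 1$ this reduces to the usual AANA condition.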

Sequences in the NA family have attracted great interest. For example, NA sequences are the counterpart of PA (positively associated) sequences, and they have better properties than other existing ND (negatively dependent) sequences: increasing functions of disjoint subsets of an NA sequence are still NA. NA sequences have therefore appeared widely in the literature. Matuła [25] obtained the convergence of partial sums of NA sequences; Yang [26] obtained Bernstein-type inequalities for NA sequences; and Cai [27] obtained the Marcinkiewicz–Zygmund-type strong law of large numbers for NA sequences.

There are many classical studies of m-NA and AANA sequences as generalizations of NA sequences. Hu [23] discussed the complete convergence of m-NA sequences; Yuan [28] proposed a Marcinkiewicz–Zygmund-type moment inequality for the maximum partial sum of AANA sequences.

The m-AANA sequence considered in this paper is a relatively new concept, and fewer results are available for it than for other sequences in the NA family. Ko [29] extended the Hájek–Rényi inequalities and the strong law of large numbers of Nam [21] to Hilbert space; Ding [30] proposed a CUSUM method to estimate an abrupt change point in a sequence with m-AANA errors but did not discuss the more general case of gradual change. Another motivation for this paper is therefore to fill the research gap left by Ding [30].

The rest of the paper is organized as follows: Section 2 presents the main results. A small simulation study under different parameters and a real-data example are provided in Section 3. Section 4 contains the conclusions and outlook, and the main results are proved in Appendix A.

2. Main Results

For our asymptotic results, we assume that the model satisfies the following assumptions:

Assumption 1.

is a sequence of m-AANA random variables with , , and there is a , .

Assumption 2.

The mixing coefficient sequence satisfies .

Assumption 3.

We note , . As ,

In addition, there exists a strictly increasing sequence of natural numbers with and . And for some , , .

Remark 1.

Assumptions 1 and 2 are the basic assumptions; if they are not met, serious problems such as bias, inconsistency, and invalidity of the estimates may arise.

In addition, it can be verified that the m-AANA random variables satisfy the central limit theorem when Assumptions 1–3 are true.

Let , ; we then obtain Theorem 1.

Theorem 1.

If Assumptions 1 and 2 are true, when ,

Theorem 2.

If Theorem 1 holds, when ,

Remark 2.

Interestingly, these convergence rates are the same as those in Hušková [31], even though m-AANA sequences contain independent sequences as a special case.

Theorem 3.

Case 1. Suppose that Assumptions 1–3 hold. If , as , then:

where with is a Gaussian process with zero mean and covariance function.

Case 2. Suppose that Assumptions 1–3 hold. If , as , then:

where is a standard normal variable.

Case 3. Suppose that Assumptions 1–3 hold. If , as , then:

where is a normal random variable with:

are constants and are described in the proofs in Appendix A.

3. Simulations and Example

In this section, assume that there is only one gradual change point of mean at in (1), such that:

satisfy:

where , , is an identity matrix, satisfies:

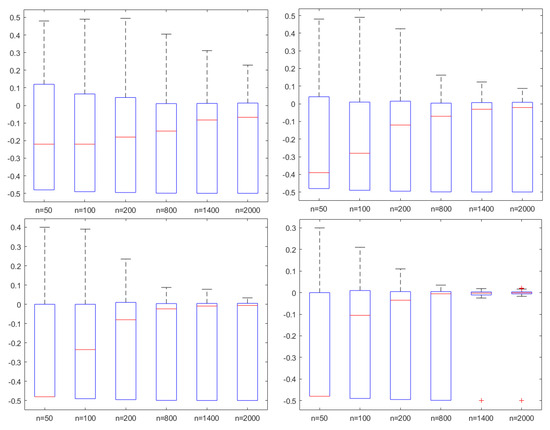

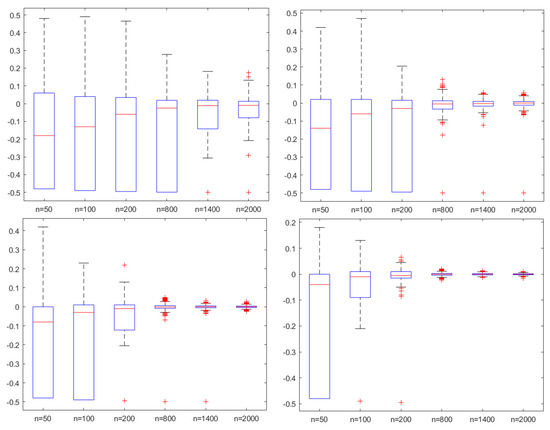

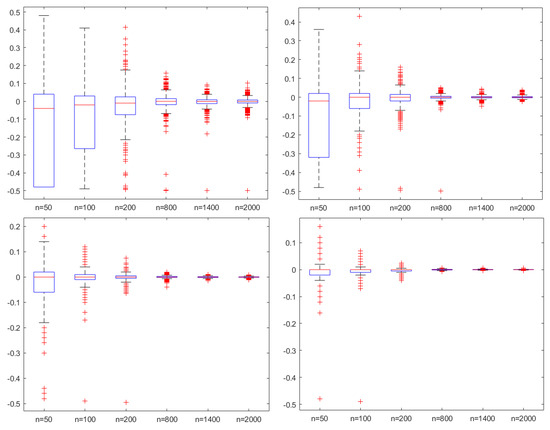

where . It can be verified that is an m-AANA sequence with and . For comparison, we take , , , and , . It is worth noting that, under these conditions, is verified to be a nonsingular matrix, so it can be used for the simulation experiments. Then, 1000 simulation runs are carried out. Figure 1, Figure 2 and Figure 3 are based on the simulation of from left to right.

Figure 1.

The box plots of with , and .

Figure 2.

The box plots of with , and .

Figure 3.

The box plots of with , and .

In Figure 1, Figure 2 and Figure 3, the ordinate axis represents the value of , and the abscissa is the sample size n. The larger the gradual coefficient , the worse the performance of our estimator. As n increases, the estimation becomes more accurate, which is consistent with the result in Theorem 1. For different and , similar results can be obtained, which are not repeated here.
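A simulation study of this type can be sketched as follows. This is not the construction used for the figures: as a stand-in, the errors are generated from a Gaussian MA(1) process with a negative coefficient, which has nonpositive covariances and is therefore negatively associated (a special case of the NA family). The mean is assumed to follow the ramp form `delta * ((i - k0) / n)_+ ** gamma`, and the names `ma1_errors`, `centred_design`, `estimate_change_point` and `monte_carlo`, as well as all default parameter values, are hypothetical.

```python
import numpy as np


def ma1_errors(n, theta, rng):
    """Zero-mean Gaussian errors e_t = z_t - theta * z_{t-1}, theta >= 0.

    The lag-1 covariance is -theta <= 0 and all other covariances vanish.
    """
    z = rng.standard_normal(n + 1)
    return z[1:] - theta * z[:-1]


def centred_design(n, gamma):
    """Centred regressors ((i - k)/n)_+^gamma, one row per k = 1, ..., n - 2."""
    i = np.arange(1, n + 1)
    ks = np.arange(1, n - 1)
    x = np.clip((i[None, :] - ks[:, None]) / n, 0.0, None) ** gamma
    return ks, x - x.mean(axis=1, keepdims=True)


def estimate_change_point(y, ks, xc):
    """Return the candidate k that maximises the explained sum of squares."""
    score = (xc @ y) ** 2 / np.sum(xc**2, axis=1)
    return int(ks[np.argmax(score)])


def monte_carlo(sizes, tau=0.5, delta=2.0, gamma=1.0, theta=0.5,
                reps=200, seed=0):
    """Mean absolute error of k_hat / n for each sample size in `sizes`."""
    rng = np.random.default_rng(seed)
    results = {}
    for n in sizes:
        k0 = int(tau * n)                      # true change point location
        ks, xc = centred_design(n, gamma)
        i = np.arange(1, n + 1)
        signal = delta * np.clip((i - k0) / n, 0.0, None) ** gamma
        errs = []
        for _ in range(reps):
            y = signal + ma1_errors(n, theta, rng)
            errs.append(abs(estimate_change_point(y, ks, xc) - k0) / n)
        results[n] = float(np.mean(errs))
    return results


if __name__ == "__main__":
    for n, mae in monte_carlo((100, 200, 400)).items():
        print(f"n = {n}: mean |k_hat - k0| / n = {mae:.3f}")
```

Collecting the individual values of `k_hat / n` instead of their mean absolute error would give the raw material for box plots by sample size.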

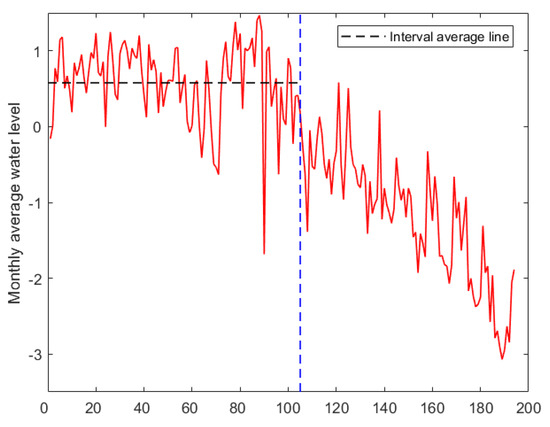

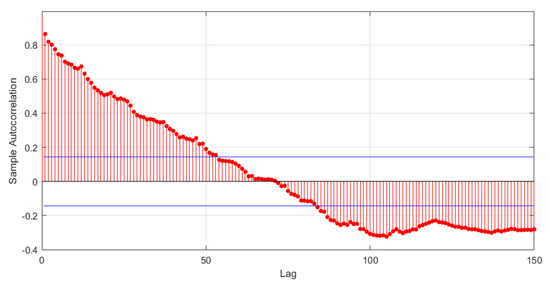

Finally, we carry out a change point analysis of the sequence of monthly average water levels of Hulun Lake in China from 1992 to 2008. For convenience of description, we subtract the median from the observations. Figure 4 and Figure 5 are then obtained:

Figure 4.

Plot of the monthly average water levels of Hulun Lake from 1992 to 2008.

Figure 5.

Autocorrelation function.

Figure 5 gives no sufficient reason to believe that the water levels of Hulun Lake fail to meet the conditions of an m-AANA sequence. According to Sun [32], the water levels of Hulun Lake have declined rapidly since 2000, due to the influence of the monsoon, changes in precipitation patterns, and the degradation of frozen soil. Using the method in this paper, Table 1 shows the values of under different .

Table 1.

The values of based on water level.

The position of 105 represents the year 2000. The average water levels of Hulun Lake have been decreasing since 2000.
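The preprocessing and the autocorrelation check used in this example can be sketched as follows. The water level data are not reproduced here, so the series below is synthetic and purely illustrative; the function name `sample_acf` and the `1.96 / sqrt(n)` reference band (the usual approximate 95% band for white noise) are assumptions of this sketch, not taken from the paper.

```python
import numpy as np


def sample_acf(x, max_lag):
    """Sample autocorrelations r(0), ..., r(max_lag) of a 1-D series."""
    x = np.asarray(x, dtype=float)
    xc = x - x.mean()              # centring makes any constant shift irrelevant
    denom = np.dot(xc, xc)
    n = x.size
    return np.array([np.dot(xc[: n - h], xc[h:]) / denom
                     for h in range(max_lag + 1)])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic stand-in for 17 years of monthly observations (204 values).
    levels = 540.0 + rng.standard_normal(204)
    centred = levels - np.median(levels)   # subtract the median, as in the text
    acf = sample_acf(centred, max_lag=24)
    band = 1.96 / np.sqrt(centred.size)    # approximate white-noise band
    print(np.round(acf[:6], 3), round(band, 3))
```

Because the autocorrelation is computed after mean centring, subtracting the median beforehand changes only the level of the plotted series and not the autocorrelation function.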

4. Conclusions

This paper proposes a least-squares-type estimator of the gradual change point of a sequence with m-AANA noise and studies the consistency of the estimator. In addition, the convergence rates are obtained in Theorem 2:

Therefore, Theorems 1 and 2 generalize the results in Hušková [31]. Furthermore, owing to the asymptotic normality of m-AANA sequences, this paper also derives the limit distributions of the estimator under different in Theorem 3. An inappropriate has a great impact on the change point estimator. If , the gradual change point in (2) may be very similar to an abrupt change point and lose its gradual change properties. If , the dispersion of the data may be very large, which is not conducive to determining the correct change point position. We therefore conduct several simulations to verify the results, and they show that the larger is, the worse the estimation is, although consistency still holds. Finally, the paper discusses the gradual change of the water levels of Hulun Lake in Section 3, and the estimator successfully finds the position of the change point.

This paper also has some limitations. For example, for series whose variances cannot be estimated, such as a heavy-tailed financial sequence with a heavy-tailed index , the location of the change point cannot be obtained using the method in this paper. More suitable methods should therefore be developed in future work. Moreover, we suspect that there may be more general cases in the selection of ; this is also one of the key points to be addressed in the future.

Author Contributions

Methodology, T.X.; Software, T.X.; Writing—original draft, T.X.; Supervision, Y.W.; Funding acquisition, Y.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, 61806155; the Natural Science Foundation of Anhui Province, 1908085MF186; and the Natural Science Foundation of Anhui Higher Education Institutions of China, KJ2020A0024.

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request.

Acknowledgments

The authors are very grateful to the editor and anonymous reviewers for their comments, which enabled the authors to greatly improve this paper.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Proof of Theorem 1.

Let ; then (2) is equivalent to:

After elementary operations, can be divided into five parts:

where:

and . At this moment, a lemma is needed. □

Lemma A1.

If Assumptions 1 and 2 are true, when , then,

Proof of Lemma A1.

Then, another result can be obtained:

Similar to the proof of Equation (A5), the following can be obtained:

from Lemma 2.2 in Hušková [31], uniformly for , as ,

then,

where:

Now suppose and . Obviously, , . Therefore,

By Lemma A1, the following can be obtained:

by the same token,

By combining (A13)–(A17), it can be found that when , ,

Therefore, only when , takes the maximum value. Theorem 1 is proved.

Proof of Theorem 2.

Consider first.

According to Lemmas 2.2–2.4 in Hušková [31], for any , and as . Then, the following can be obtained:

uniformly for . The problem can be considered in three parts. When , combining (A5), (A11), (A19) and (A20), it is obvious that ,

uniformly for .

Next, for ,

When ,

similarly,

In order to obtain the asymptotic distributions of the estimator, it is necessary to prove several lemmas about random terms.

Lemma A2.

Suppose that Assumptions 1 and 2 are true. If , as , then,

for any .

Proof of Lemma A2.

Then, it is necessary to define that:

and

It is not difficult to see that:

and at this point, another lemma is required:

Lemma A3 ([21]).

Let be a sequence of m-AANA random variables with . It has mixing coefficients . If , there exists a positive constant such that:

where .

After simple calculation, the following can be obtained:

where is a constant. And similarly, for ,

where , and are constants. Next, we consider several cases separately.

Lemma A4.

If , and Assumptions 1–3 are true, then for any , there exist and such that for ,

where is given in the proof of Theorem 2, and as ,

where and .

Proof of Lemma A4.

By the proof of Lemma 2.3 in [31], for any ,

if or with some . Then, for ,

with some . According to Theorem 12.2 of [33], for all , there exists , then:

(A34) can be obtained by choosing a sufficiently small H.

By Assumption 3 and Theorem 12.3 in [33], to prove the convergence in (A35) it suffices to show that converges in distribution to the distributed random variables with any and , together with the tightness. Since are sums of m-AANA random variables, the first property can be obtained by applying the central limit theorem. By the tightness conditions from Theorem 12.3 in [33], for , after a simple calculation,

with some . □

Lemma A5.

If , and Assumptions 1–3 are true, then for any , there exist and such that for ,

where is given in the proof of Theorem 2, and as ,

where Y is a normal random variable.

Proof of Lemma A5.

Therefore, the limit distribution is Gaussian with zero mean and the covariance function , which implies (A41). □

Lemma A6.

If , and Assumptions 1–3 are true, then for any , there exist and such that for ,

where is given in the proof of Theorem 2, and as ,

where Y is a normal random variable.

Proof of Lemma A6.

Therefore, the limit distribution is Gaussian with zero mean and the covariance function , which implies (A45). □

References

- Page, E.S. Continuous inspection schemes. Biometrika 1954, 41, 100–115.

- Csörgő, M.; Horváth, L. Limit Theorems in Change-Point Analysis; Wiley: Chichester, UK, 1997.

- Horváth, L.; Hušková, M. Change-point detection in panel data. J. Time Ser. Anal. 2012, 33, 631–648.

- Horváth, L.; Rice, G. Extensions of some classical methods in change point analysis. Test 2014, 23, 219–255.

- Xu, M.; Wu, Y.; Jin, B. Detection of a change-point in variance by a weighted sum of powers of variances test. J. Appl. Stat. 2019, 46, 664–679.

- Gao, M.; Ding, S.S.; Wu, S.P.; Yang, W.Z. The asymptotic distribution of CUSUM estimator based on α-mixing sequences. Commun. Stat. Simul. Comput. 2020, 51, 6101–6113.

- Jin, H.; Wang, A.M.; Zhang, S.; Liu, J. Subsampling ratio tests for structural changes in time series with heavy-tailed AR(p) errors. Commun. Stat. Simul. Comput. 2022, 2022, 2111584.

- Hušková, M.; Prášková, Z.; Steinebach, G.J. Estimating a gradual parameter change in an AR(1)–process. Metrika 2022, 85, 771–808.

- Tian, W.Z.; Pang, L.Y.; Tian, C.L.; Ning, W. Change Point Analysis for Kumaraswamy Distribution. Mathematics 2023, 11, 553.

- Pepelyshev, A.; Polunchenko, A. Real-time financial surveillance via quickest change-point detection methods. Stat. Interface 2017, 10, 93–106.

- Ghosh, P.; Vaida, F. Random change point modelling of HIV immunologic responses. Stat. Med. 2007, 26, 2074–2087.

- Punt, A.E.; Szuwalski, C.S.; Stockhausen, W. An evaluation of stock-recruitment proxies and environmental change points for implementing the US Sustainable Fisheries Act. Fish Res. 2014, 157, 28–40.

- Hinkley, D. Inference in two-phase regression. J. Am. Stat. Assoc. 1971, 66, 736–763.

- Feder, P.I. On asymptotic distribution theory in segmented regression problems. Ann. Stat. 1975, 3, 49–83.

- Hušková, M. Estimators in the location model with gradual changes. Comment. Math. Univ. Carolin. 1998, 1, 147–157.

- Jarušková, D. Testing appearance of linear trend. J. Stat. Plan. Infer. 1998, 70, 263–276.

- Wang, L. Gradual changes in long memory processes with applications. Statistics 2007, 41, 221–240.

- Timmermann, H. Monitoring Procedures for Detecting Gradual Changes. Ph.D. Thesis, Universität zu Köln, Köln, Germany, 2014.

- Schimmack, M.; Mercorelli, P. Contemporary sinusoidal disturbance detection and nano parameters identification using data scaling based on Recursive Least Squares algorithms. In Proceedings of the 2014 International Conference on Control, Decision and Information Technologies (CoDIT), Metz, France, 3–5 November 2014; pp. 510–515.

- Schimmack, M.; Mercorelli, P. An Adaptive Derivative Estimator for Fault-Detection Using a Dynamic System with a Suboptimal Parameter. Algorithms 2019, 12, 101.

- Nam, T.; Hu, T.; Volodin, A. Maximal inequalities and strong law of large numbers for sequences of m-asymptotically almost negatively associated random variables. Commun. Stat. Theory Methods 2017, 46, 2696–2707.

- Block, H.W.; Savits, T.H.; Shaked, M. Some concepts of negative dependence. Ann. Probab. 1982, 10, 765–772.

- Hu, T.C.; Chiang, C.Y.; Taylor, R.L. On complete convergence for arrays of rowwise m-negatively associated random variables. Nonlinear Anal. 2009, 71, e1075–e1081.

- Chandra, T.K.; Ghosal, S. The strong law of large numbers for weighted averages under dependence assumptions. J. Theor. Probab. 1996, 9, 797–809.

- Matuła, P. A note on the almost sure convergence of sums of negatively dependent random variables. Stat. Probab. Lett. 1992, 15, 209–213.

- Yang, S.C. Uniformly asymptotic normality of the regression weighted estimator for negatively associated samples. Stat. Probab. Lett. 2003, 62, 101–110.

- Cai, G.H. Strong laws for weighted sums of NA random variables. Metrika 2008, 68, 323–331.

- Yuan, D.M.; An, J. Rosenthal type inequalities for asymptotically almost negatively associated random variables and applications. Chin. Ann. Math. B 2009, 40, 117–130.

- Ko, M. Hájek-Rényi inequality for m-asymptotically almost negatively associated random vectors in Hilbert space and applications. J. Inequal. Appl. 2018, 2018, 80.

- Ding, S.S.; Li, X.Q.; Dong, X.; Yang, W.Z. The consistency of the CUSUM–type estimator of the change-Point and its application. Mathematics 2020, 8, 2113.

- Hušková, M. Gradual changes versus abrupt changes. J. Stat. Plan. Inference 1999, 76, 109–125.

- Sun, Z.D.; Huang, Q.; Xue, B. Recent hydrological dynamic and its formation mechanism in Hulun Lake catchment. Arid Land Geogr. 2021, 44, 299–307.

- Billingsley, P. Convergence of Probability Measures; Wiley: New York, NY, USA, 1968.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).