1. Introduction

Efficient and accurate monitoring of water area variation is of paramount importance for disaster prevention and for the rational planning and utilization of water resources. In recent years, with the rapid advancement of remote sensing technology, water body extraction from remote sensing images has become a crucial approach for monitoring dynamic changes in water area.

The thresholding method [1,2,3] is the most classical approach to water body extraction in remote sensing imagery; it distinguishes water bodies from other land cover by leveraging their different spectral characteristics. Although thresholding is effective for some remote sensing images, its degree of automation is limited by the reliance on manual experience in threshold selection. Furthermore, it generalizes poorly because it is highly sensitive to factors such as image preprocessing techniques and the coverage location of the remote sensing data. With the advancement of machine learning, numerous machine learning-based approaches have been introduced to address these problems, including support vector machines (SVMs) [4], principal component analysis (PCA) [5], and clustering methods [6]. These methods are more accurate than the traditional thresholding method. However, they exploit only shallow information within the remote sensing images, which inherently limits their extraction performance and noise robustness when processing large-scale datasets.
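As a minimal illustration of index thresholding, the sketch below computes a normalized difference water index (NDWI) from green and near-infrared reflectance and applies a fixed threshold. The choice of NDWI, the threshold of 0.0, and the sample reflectance values are assumptions made for illustration; the cited works [1,2,3] may rely on other indices and thresholds.

```python
def ndwi(green, nir):
    """Normalized difference water index for one pixel: (G - NIR) / (G + NIR)."""
    total = green + nir
    return 0.0 if total == 0 else (green - nir) / total


def threshold_water(green_band, nir_band, threshold=0.0):
    """Return a binary mask (1 = water) for two equally sized 2-D bands."""
    return [
        [1 if ndwi(g, n) > threshold else 0 for g, n in zip(g_row, n_row)]
        for g_row, n_row in zip(green_band, nir_band)
    ]


# Hypothetical reflectance values: water reflects more green than NIR.
green = [[0.10, 0.08], [0.09, 0.30]]
nir = [[0.03, 0.35], [0.02, 0.40]]
mask = threshold_water(green, nir)
```

The fixed `threshold` argument is the weak point described above: in practice it has to be retuned by hand for each sensor, preprocessing chain, and scene.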

Deep learning has been widely applied in computer vision tasks such as object detection, image classification, and semantic segmentation, and it has also provided new solutions for water body extraction. Deep learning-based extraction methods not only identify water body boundaries more accurately but also show superior robustness and generalization in complex scenes. In 2017, Isikdogan et al. [7] designed a convolutional neural network called Deep-WaterMap based on FCN, which effectively distinguishes water from land, snow, rain and clouds. In 2019, Li et al. [8] used the FCN model to extract water bodies from very high spatial resolution (VHR) images, and the results demonstrated that FCN has excellent water body extraction capabilities. In 2021, Yuan et al. [9] designed a multi-channel water body detection network (MC-WBDN) based on ResNet and DeepLabV3+ to make more effective use of multispectral information in remote sensing images. This network exhibited enhanced robustness against variations in lighting and weather conditions. In 2023, Lyu et al. [10] designed the successive attention fusion module (SAFM) and the joint attention module (JAM) to capture spatial details and abstract semantics at different levels simultaneously. They combined these modules with ResNet to propose the multi-scale sequential attention fusion network (MSAFNet).

In recent years, the transformer architecture [11,12], with its strong global information modeling capabilities, has become a research hotspot in image processing. It has demonstrated considerable potential and has gained increasing traction in water body extraction applications. In 2023, Chen et al. [13] proposed a double-branch parallel network by combining ResNet and Swin Transformer. This network leverages the deep feature extraction capabilities of ResNet and the global information fusion capabilities of Swin Transformer, thereby improving the accuracy of water body extraction. Zhao et al. [14] replaced the convolutional layers in the encoder of U-Net [15] with Vision Transformer and incorporated a convolutional block attention module (CBAM) in the decoder, proposing the ViTenc-Unet. This network has demonstrated outstanding performance in water body extraction tasks on the Qinghai–Tibet Plateau. Kang et al. [16] proposed the WaterFormer network by embedding Vision Transformer between two different CNNs, aiming to explore long-range dependencies between low-level spatial information and high-level semantic features.

Deep learning-based water body extraction methods demonstrate excellent performance in terms of accuracy, robustness, and generalization. However, their large parameter count and high computational complexity significantly hinder deployment practicality. This limitation prevents these networks from reaching their full performance potential on devices with constrained computational and storage resources or limited size and power consumption. Furthermore, the high latency of these networks hinders their applicability in real-time applications, such as flood disaster monitoring. Therefore, it is of great practical significance to design a lightweight water extraction network that balances accuracy and efficiency.

In contrast to existing water extraction networks, this study prioritizes real-time inference capability as a primary constraint and pursues enhanced segmentation accuracy under this condition. We investigate the impact of network architectures on inference latency to design low-latency modules that improve extraction accuracy. Finally, this paper proposes a lightweight U-shaped network (LU-Net) with an encoder–decoder architecture for water body extraction. The proposed network employs lightweight RepViT [17] as the encoder for rapid feature extraction. Additionally, a lightweight convolutional block attention module (LCBAM) is designed to replace the squeeze-and-excitation (SE) [18] attention module in RepViT to enhance the network’s discriminative capability. A lightweight decoding block (LDB) is designed for rapid decoding, and a structurally re-parameterized multi-scale fusion prediction module (SRMFPM) is designed to improve water boundary extraction. The LDB, together with the SRMFPM, constitutes the decoder of LU-Net. Our work adapts the official codebase of RepViT [17]. The implementation of the network architecture is substantially modified by integrating the proposed LCBAM, LDB, and SRMFPM. Furthermore, the training pipeline is adjusted for our specific task.

The main contributions of this work are summarized as follows:

The CBAM [19] is introduced to simultaneously capture the importance of different channels and spatial regions in feature maps and enhance the network’s ability to identify water bodies. Specifically, the LCBAM is designed to mitigate the impact of CBAM on inference latency.

The LDB is designed to reduce network parameters and computational complexity for improved inference speed and deployment efficiency.

The SRMFPM is designed to capture multi-scale water body features for enhanced prediction accuracy of multi-scale water boundaries.
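Structural re-parameterization relies on the linearity of convolution: parallel branches used during training can be folded into a single kernel for inference. The sketch below shows the idea in one dimension by merging a 3-tap branch and a 1-tap branch. It is a generic illustration with made-up weights, not the actual branch layout of SRMFPM or RepViT, which this section does not specify.

```python
def conv1d_same(signal, kernel):
    """Zero-padded 1-D cross-correlation with an odd-length kernel."""
    half = len(kernel) // 2
    padded = [0.0] * half + list(signal) + [0.0] * half
    return [
        sum(k * padded[i + j] for j, k in enumerate(kernel))
        for i in range(len(signal))
    ]


def merge_branches(kernel3, kernel1):
    """Fold a 1-tap branch into a 3-tap branch by padding it to [0, w, 0]."""
    padded1 = [0.0, kernel1[0], 0.0]
    return [a + b for a, b in zip(kernel3, padded1)]


x = [1.0, 2.0, 3.0, 4.0]
k3 = [0.5, -1.0, 0.25]   # hypothetical training-time 3-tap branch
k1 = [2.0]               # hypothetical training-time 1-tap branch

# Training-time form: two branches, outputs summed.
two_branch = [a + b for a, b in zip(conv1d_same(x, k3), conv1d_same(x, k1))]
# Inference-time form: one merged kernel, one pass over the input.
merged = conv1d_same(x, merge_branches(k3, k1))
```

Both forms produce the same output, so the merged kernel keeps the behaviour learned with several branches while executing only one branch at inference time.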

3. Experimental Results and Discussion

3.1. Datasets

To evaluate the effectiveness of all models, experiments were conducted on the GID [24] and LoveDA [25] datasets. To apply the GID dataset to our experiments, we randomly selected 61 remote sensing images from its large-scale classification set. Each image was cropped into 512 × 512 patches, with the corresponding label maps reclassified into two categories: water bodies and non-water bodies. Furthermore, some patches with excessive non-water body areas were excluded from the dataset to maintain a balance between positive and negative samples. The final dataset comprised 10,218 remote sensing images of 512 × 512 pixels, divided into 8211 training images, 1003 validation images, and 1004 test images.

Following the processing approach used for the GID dataset, 4191 images were selected from the LoveDA dataset, cropped into 512 × 512 patches, and relabeled into two classes. Additionally, some patches exhibiting excessive non-water coverage were discarded. The final curated dataset consists of 8694 remote sensing images of 512 × 512 pixels, with 6816 images allocated to the training set, 939 to the validation set, and 939 to the test set.
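The preparation steps described for both datasets (binary relabeling, cropping into fixed-size patches, and discarding patches dominated by non-water pixels) can be sketched as follows. The water class id, the non-overlapping crop layout, and the `min_water` cut-off are hypothetical, since the text does not state them; a toy 4 × 4 label map with 2 × 2 tiles stands in for real 512 × 512 patches.

```python
def to_binary(label_map, water_class):
    """Relabel a multi-class map into water (1) and non-water (0)."""
    return [[1 if v == water_class else 0 for v in row] for row in label_map]


def crop_tiles(grid, tile):
    """Split a 2-D grid into non-overlapping tile x tile patches, dropping remainders."""
    rows, cols = len(grid), len(grid[0])
    return [
        [row[c:c + tile] for row in grid[r:r + tile]]
        for r in range(0, rows - tile + 1, tile)
        for c in range(0, cols - tile + 1, tile)
    ]


def water_fraction(mask):
    """Share of water pixels in a binary mask."""
    total = sum(len(row) for row in mask)
    return sum(map(sum, mask)) / total


def keep_balanced(tiles, min_water=0.05):
    """Discard tiles whose water share is below min_water (hypothetical cut-off)."""
    return [t for t in tiles if water_fraction(t) >= min_water]


# Toy label map; class 3 stands in for the water class.
labels = [
    [3, 3, 0, 0],
    [3, 1, 0, 2],
    [0, 0, 2, 2],
    [0, 3, 1, 1],
]
tiles = crop_tiles(to_binary(labels, water_class=3), tile=2)
kept = keep_balanced(tiles, min_water=0.25)
```

In this toy case two of the four tiles contain no water at all and are dropped, which mirrors the balancing step applied to the real patches.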

3.2. Evaluation Metrics

3.2.1. Metrics of Extraction Performance Evaluation

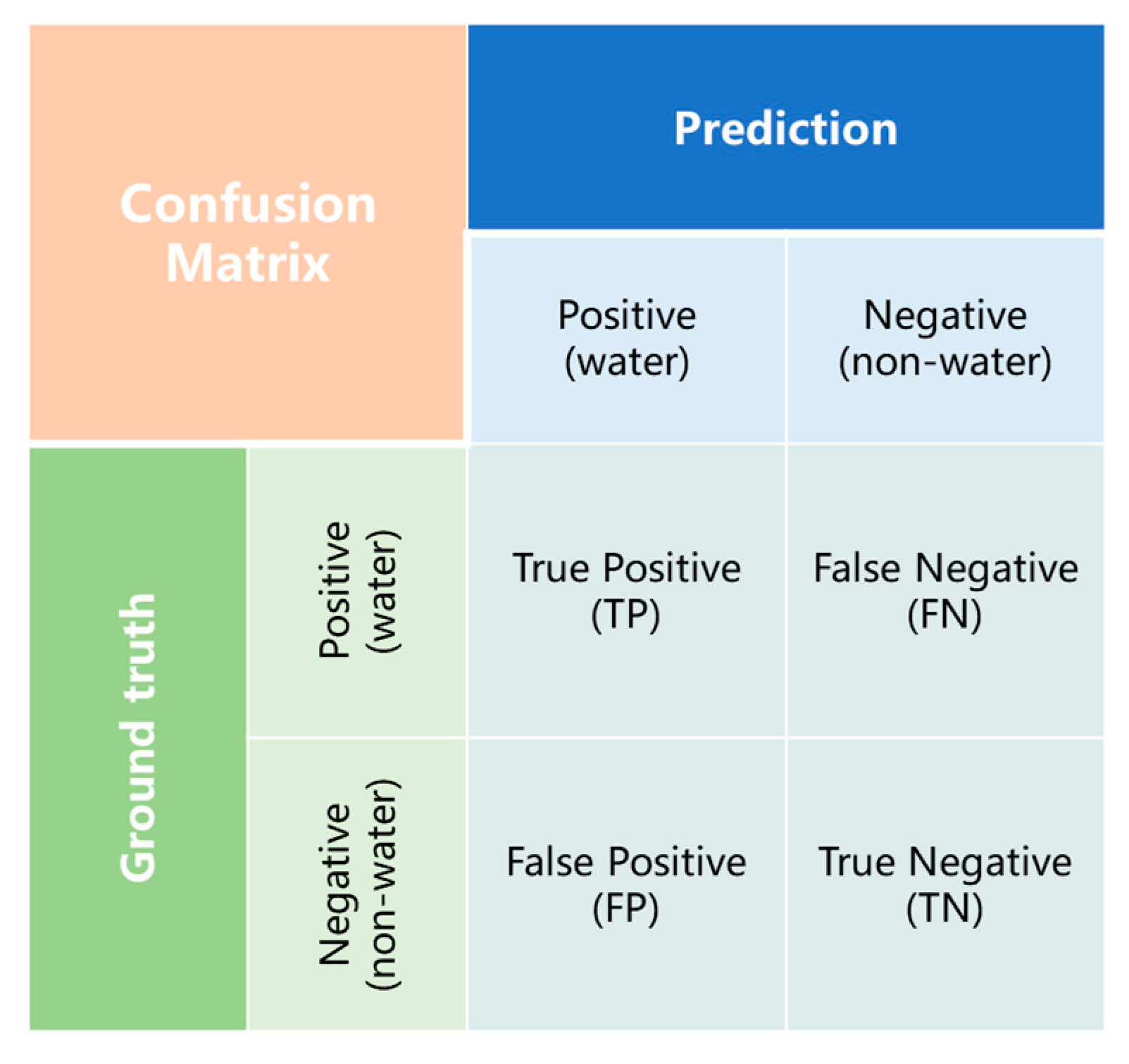

To evaluate the extraction performance of all models in this experiment, a set of metrics is employed, including mean intersection over union (mIoU), overall accuracy (OA),

,

, and

. The Kappa coefficient measures the agreement between predictions and ground truth. According to the Landis & Koch benchmark [26], Kappa values indicate: <0.00 (Poor), 0.00–0.20 (Slight), 0.21–0.40 (Fair), 0.41–0.60 (Moderate), 0.61–0.80 (Substantial), and 0.81–1.00 (Almost Perfect). Additionally, this study employs the receiver operating characteristic (ROC) curve for a further evaluation of model performance, which plots the false positive rate (FPR) on the x-axis against the true positive rate (TPR) on the y-axis. A curve that hugs the upper-left corner indicates superior prediction performance.
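A ROC curve of this kind can be traced by sweeping a decision threshold over the predicted water probabilities and recording one (false positive rate, true positive rate) pair per threshold. The sketch below is a minimal, dependency-free version; the scores and labels are invented for illustration, and the exact thresholding protocol used in the experiments is not stated in the text.

```python
def roc_points(scores, labels):
    """Return (FPR, TPR) pairs obtained by sweeping a threshold over the scores."""
    positives = sum(labels)
    negatives = len(labels) - positives
    points = [(0.0, 0.0)]
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points


# Hypothetical per-pixel water probabilities and ground-truth labels (1 = water).
scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.1]
labels = [1, 1, 0, 1, 0, 0]
curve = roc_points(scores, labels)
```

The curve starts at (0, 0) and ends at (1, 1); the earlier it rises toward a true positive rate of 1 while the false positive rate is still small, the closer it sits to the upper-left corner.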

The calculation formulas for these metrics are defined as follows:

where TP refers to the number of pixels correctly classified as a water body; TN is the number of pixels correctly predicted as a non-water body; FP denotes the number of pixels incorrectly classified as a water body; and FN represents the number of pixels incorrectly classified as a non-water body.

Figure 5 provides a visual illustration of TP, TN, FP, and FN.
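For reference, the sketch below computes the metrics that can be identified unambiguously in this section from the four pixel counts. It uses the standard textbook definitions for a binary problem (mIoU as the mean of the water and non-water IoU, Kappa as Cohen's kappa); these are assumed to match the paper's formulas, which are not reproduced in this text. The pixel counts in the example are invented.

```python
def water_metrics(tp, tn, fp, fn):
    """Binary water/non-water metrics from pixel counts."""
    total = tp + tn + fp + fn
    oa = (tp + tn) / total
    iou_water = tp / (tp + fp + fn)
    iou_background = tn / (tn + fn + fp)
    miou = (iou_water + iou_background) / 2
    # Chance agreement for Cohen's kappa, from the prediction and label marginals.
    pe = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / total ** 2
    kappa = (oa - pe) / (1 - pe)
    return {
        "mIoU": miou, "OA": oa, "Kappa": kappa,
        "TPR": tp / (tp + fn), "FPR": fp / (fp + tn),
    }


def landis_koch(kappa):
    """Map a Kappa value to the Landis & Koch agreement band quoted in the text."""
    if kappa < 0.0:
        return "Poor"
    for upper, name in [(0.20, "Slight"), (0.40, "Fair"),
                        (0.60, "Moderate"), (0.80, "Substantial")]:
        if kappa <= upper:
            return name
    return "Almost Perfect"


# Hypothetical confusion counts for a 100-pixel image.
m = water_metrics(tp=40, tn=50, fp=5, fn=5)
band = landis_koch(m["Kappa"])
```

With these counts the overall accuracy is 0.90 while Kappa is about 0.80, which illustrates why Kappa is reported alongside OA: it discounts the agreement expected by chance.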

3.2.2. Metrics of Lightweight Performance Evaluation

Three metrics, Params, MACs, and Latency, are adopted to assess the lightweight performance of all models. Params refer to the total count of learnable parameters in the model. MACs represent the total number of multiply-accumulate operations during model inference. Latency denotes the time required for the model to complete a single inference. Additionally, to provide a more comprehensive evaluation of the model’s lightweight performance, the latency is measured separately on both GPU and CPU.
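Single-inference latency is usually measured with a warm-up phase followed by repeated timed runs. The harness below is a minimal sketch of that procedure; the warm-up count, the number of runs, the use of the median, and the `dummy_model` workload are assumptions, as the text does not describe the measurement protocol.

```python
import statistics
import time


def measure_latency_ms(infer, sample, warmup=10, runs=50):
    """Median wall-clock time of one call to infer(sample), in milliseconds."""
    for _ in range(warmup):          # let caches and lazy initialisation settle
        infer(sample)
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(sample)
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings)


def dummy_model(pixels):
    """Stand-in for a network forward pass (hypothetical workload)."""
    return [1 if p > 0.5 else 0 for p in pixels]


latency = measure_latency_ms(dummy_model, [0.1 * (i % 10) for i in range(10_000)])
```

When timing on a GPU, the device has to be synchronized before each clock reading, because kernels are launched asynchronously and the call would otherwise return before the computation has finished.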

3.3. Experimental Details

In our study, experiments were conducted on GPU NVIDIA RTX 4070TI SUPER and CPU AMD 7500F with the following configurations: a batch size of 32, maximum epochs of 100, and an initial learning rate of 0.001. Moreover, the AdamW optimizer was used for training, with a weight decay coefficient set to 0.025. The binary cross-entropy (BCE) loss was employed as the objective function for optimization.
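The stated training settings and the loss can be summarized as in the sketch below. The configuration values are taken from the paragraph above; the dictionary layout, the `bce_loss` helper, the clamping constant `eps`, and the sample predictions are illustrative assumptions rather than the authors' implementation.

```python
import math

# Hyperparameters as stated in the text.
TRAIN_CONFIG = {
    "batch_size": 32,
    "max_epochs": 100,
    "initial_lr": 1e-3,
    "optimizer": "AdamW",
    "weight_decay": 0.025,
    "loss": "BCE",
}


def bce_loss(probabilities, targets, eps=1e-7):
    """Mean binary cross-entropy over pixels; probabilities lie in (0, 1)."""
    total = 0.0
    for p, y in zip(probabilities, targets):
        p = min(max(p, eps), 1.0 - eps)   # clamp to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(targets)


# Hypothetical predicted water probabilities and labels for three pixels.
loss = bce_loss([0.9, 0.2, 0.7], [1, 0, 1])
```

The loss penalizes each pixel independently, so confident wrong predictions dominate the average while correct, confident predictions contribute almost nothing.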

3.4. Comparative Experiments

To verify the performance of our approach, we compared it with six networks: EfficientNet-U, ShuffleNetV2-U, MobileNetV3-U, ENet [27], ERFNet [28], and CGNet [29]. EfficientNet-U, ShuffleNetV2-U, and MobileNetV3-U all adopt a U-shaped architecture. Their respective encoders are EfficientNet-B0 [30], ShuffleNetV2 0.5× [31], and MobileNetV3-Large [32]. Their decoders share a unified progressive reconstruction architecture, in which each decoding stage sequentially applies two 3 × 3 convolutional layers for feature fusion, followed by a 2 × 2 transposed convolution layer to restore resolution. The three networks, ENet, ERFNet, and CGNet, are lightweight networks specifically designed for semantic segmentation.
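To give a sense of the cost of such a decoding stage, the sketch below counts the learnable parameters of two 3 × 3 convolutions followed by one 2 × 2 transposed convolution. The channel widths are hypothetical, since the text does not list them, and normalization layers are ignored.

```python
def conv_params(kernel, in_ch, out_ch, bias=True):
    """Learnable parameters of a 2-D convolution with a square kernel."""
    return kernel * kernel * in_ch * out_ch + (out_ch if bias else 0)


def decoder_stage_params(in_ch, mid_ch, out_ch):
    """Two 3 x 3 convolutions followed by one 2 x 2 transposed convolution."""
    return (
        conv_params(3, in_ch, mid_ch)
        + conv_params(3, mid_ch, mid_ch)
        + conv_params(2, mid_ch, out_ch)   # a transposed conv has the same weight count
    )


# Hypothetical channel widths for a single stage.
stage = decoder_stage_params(in_ch=128, mid_ch=64, out_ch=32)
```

With these widths the two 3 × 3 convolutions account for more than 90% of the stage's parameters, which is why standard decoder blocks of this kind are a natural target when reducing model size.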

3.4.1. Lightweight Performance Comparison Results

In the lightweight experiments, 4-channel images are used to measure latency and computational cost. The results are summarized in Table 2. Although LU-Net has more parameters and higher MACs than ENet, its latency is 3.4 ms lower on the GPU and 11 ms lower on the CPU owing to its more efficient network architecture. Compared to EfficientNet-U, LU-Net achieves latency reductions of 1.2 ms on GPU and 29 ms on CPU. Compared to ShuffleNetV2-U, which has minimal

, LU-Net exhibits higher CPU latency but achieves better GPU latency. Relative to MobileNetV3-U, which shares comparable computational complexity and parameter count, LU-Net reduces the latency by 0.3 ms on the GPU and 3 ms on the CPU. Against CGNet, LU-Net provides 2.4 ms GPU and 32 ms CPU latency reductions thanks to its fewer branches during inference. Furthermore, LU-Net achieves latency reductions of 0.8 ms on the GPU and 49 ms on the CPU in comparison to ERFNet.

3.4.2. Extraction Performance Comparison Results on the GID Dataset

Table 3 presents the experimental results of various networks on the GID dataset. The results demonstrate that LU-Net achieves the best performance on the three metrics that evaluate global classification quality and categorical consistency: mIoU at 91.36%, OA at 96.71%, and Kappa coefficient at 0.9083. Moreover, LU-Net also demonstrates strong performance on metrics that focus on positive-class classification. It achieves a

of 92.24%, second only to MobileNetV3-U, and a

of 94.04%, the highest among all networks.

Figure 6 presents the ROC curves of various networks on the GID. As shown in the figure, the ROC curve of LU-Net is closest to the top-left corner compared to other networks. These results indicate the superior performance of LU-Net over other methods on the GID.

Compared to other U-shaped lightweight networks, such as ShuffleNetV2-U, LU-Net with its more efficient architecture achieves varying degrees of improvement in mIoU, OA, Kappa, and , and is only slightly lower than MobileNetV3-U, by 0.09%, in . Compared to the lightweight networks specifically designed for semantic segmentation, such as ERFNet, LU-Net achieves improvements across all metrics. Even compared to CGNet, the best performer among them, LU-Net achieves consistent gains: 0.36% higher in mIoU, 0.13% higher in OA, 0.0041 higher in Kappa, 0.58% higher in , and 0.04% higher in .

Figure 7 presents the visualization results for various networks on the GID dataset. The LU-Net outperforms other networks in identifying fine-scale and slender water bodies. The advantage of LU-Net in extracting fine-scale water bodies primarily stems from its multi-scale architecture, where multi-scale convolutions can effectively capture the features of minute water bodies, thereby enhancing recognition performance. For the slender water bodies in row 4, the upper region of row 7, and row 9, the LU-Net demonstrates the optimal identification capability. MobileNetV3-U and EfficientNet-U exhibit the second-best identification results, while ERFNet, ShuffleNetV2-U, ENet, and CGNet show significant breaks in their results. For the small water bodies in rows 2 and 8, LU-Net achieves more complete segmentation. Other networks exhibit varying degrees of omission, with ERFNet and MobileNetV3-U failing to detect nearly all small water bodies.

In addition to having a better recognition effect on fine-scale water bodies, the LU-Net also performs more effectively in identifying water bodies along image edges. As shown in rows 1 and 6, LU-Net achieves more complete segmentation of water bodies within the red circles compared to other networks.

Furthermore, LU-Net exhibits enhanced discrimination between water bodies and non-water regions due to the incorporation of LCBAM. As shown in the red-circled regions in the middle part of row 7, ERFNet, ShuffleNetV2-U, ENet, MobileNetV3-U, and EfficientNet-U misclassify the unmarked dark green background as water. In contrast, LU-Net avoids significant false detections. The LU-Net also demonstrates superior adaptability in some challenging scenarios. For algae-covered fishponds in row 3 and densely distributed small fishponds in row 5, LU-Net achieves more accurate and complete segmentation than other networks.

3.4.3. Extraction Performance Comparison Results on the LoveDA Dataset

Table 4 presents the results of various networks on the LoveDA dataset. The results indicate that LU-Net achieves competitive performance on LoveDA, with metrics of 86.32% mIoU, 95.58% OA, 0.8481 Kappa, 89.37%

, and 85.83%

. Compared to top-performing CGNet, the LU-Net shows only minor performance drops, with the largest decrease not exceeding 0.08%. Compared to EfficientNet-U, LU-Net exhibits 0.03% lower

and 1.29% reduced

but achieves 0.12% higher

, 1.55% improved

, and 0.0017 increased Kappa. Overall, LU-Net is superior to EfficientNet-U in water extraction performance. Moreover, LU-Net consistently outperforms the other extraction networks across all evaluation metrics.

Figure 8 presents the ROC curves of various networks on the LoveDA dataset. As shown in the figure, LU-Net’s ROC curve ranks second only to CGNet in the low FPR range. As the FPR increases, LU-Net’s ROC curve outperforms other networks. These results demonstrate that LU-Net achieves the second-best performance on the LoveDA dataset, slightly inferior to CGNet.

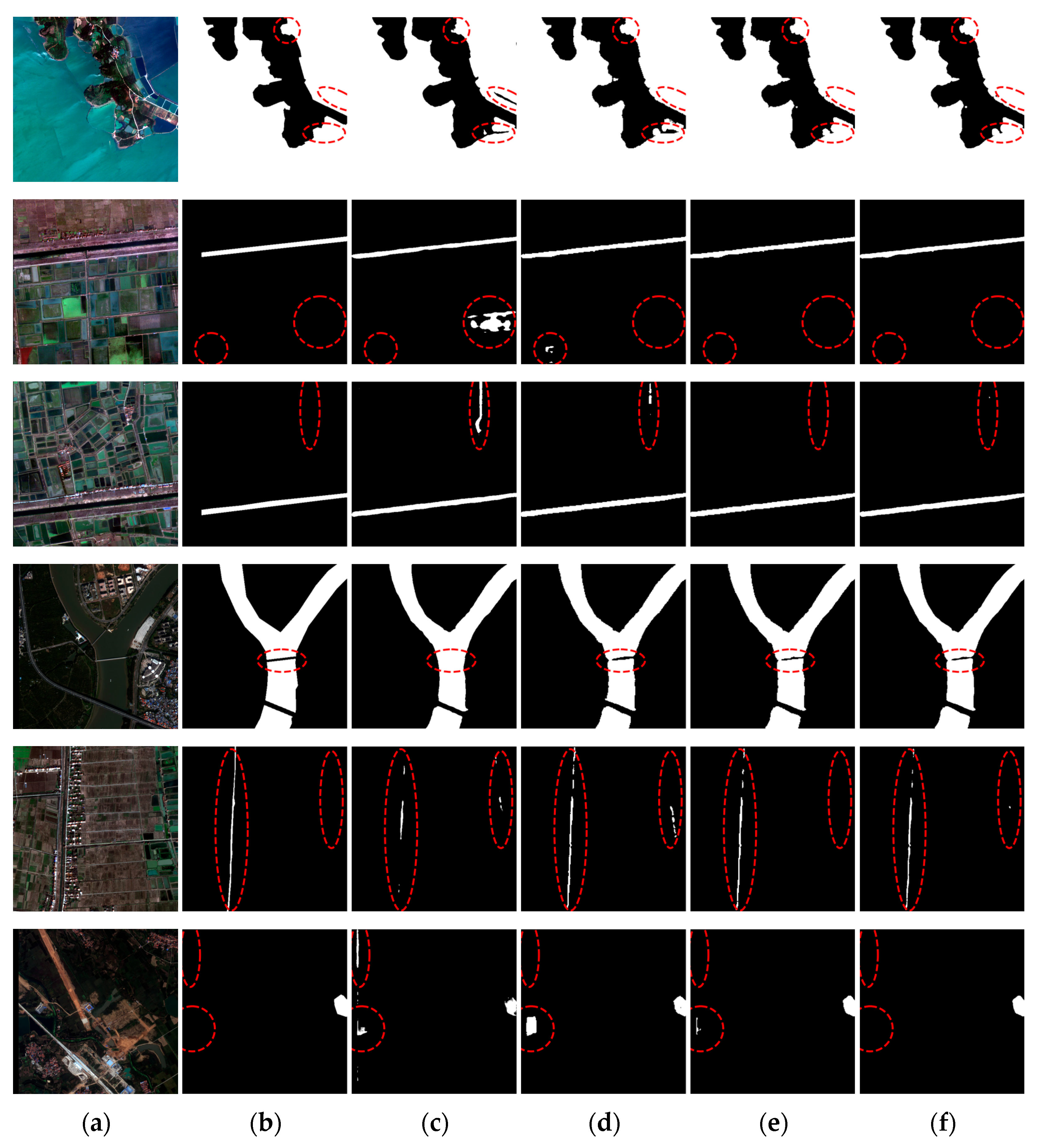

Figure 9 presents the visualization results for various networks on the LoveDA dataset. The results indicate that LU-Net performs better in identifying water bodies than other networks.

For the reflective water surface and the small water body in row 1, LU-Net achieves the best recognition. For the elongated water body in the upper-left section of row 5, only LU-Net and CGNet achieve near-complete segmentation, while other networks, especially ShuffleNetV2-U and EfficientNet-U, show almost no detection. The LU-Net also achieves optimal extraction performance in some challenging scenarios. As illustrated by the semi-transparent water body in the upper red circle of row 6 and the algae-covered water body in row 7, LU-Net provides more complete segmentation than other networks.

Furthermore, LU-Net shows a greater capability to discriminate between water bodies and other land cover types due to the incorporation of LCBAM. For the farmland and wasteland in rows 2 and 4, only LU-Net achieved accurate segmentation of these regions, while other networks showed varying degrees of misclassification. For the building shadows in the red circle of row 3, LU-Net, ERFNet, EfficientNet-U, and CGNet produced no false positives, whereas the other three networks misclassified the shadows as water bodies.

Considering both computational efficiency and extraction accuracy, LU-Net achieves the best overall performance among all evaluated networks. Compared to ShuffleNetV2-U, LU-Net achieves lower GPU latency and better extraction performance on the two datasets, despite exhibiting higher CPU inference time. Compared to CGNet, LU-Net achieves faster inference speed and better extraction performance on the GID dataset, although it shows slightly lower accuracy on the LoveDA dataset. Moreover, LU-Net demonstrates significant advantages in both extraction performance and latency compared to the remaining networks.

3.5. Ablation Study

To analyze the effectiveness of the proposed modules, we first constructed a baseline network. Its encoder is a RepViT network, and its decoder comprises multiple blocks, each consisting of two 3 × 3 convolution layers and one 2 × 2 transposed convolution layer. Then, we developed various network variants by incrementally adding or replacing modules on this baseline. Finally, we evaluated various performance metrics for these variants. The ablation study follows the same experimental setup as the comparison experiments.

3.5.1. Performance Metrics Results

Table 5 presents the lightweight performance results of various network variants. Table 6 and Table 7 present the extraction performance results on the GID and LoveDA datasets, respectively.

The results show that introducing LDB reduces the baseline network’s GPU latency by 0.5 ms and its CPU latency by 7 ms. Moreover, LDB enhances the network’s water extraction performance by leveraging its multi-scale feature fusion and large receptive field. On the GID dataset, the LDB increases the baseline’s mIoU, OA, and Kappa by 0.07%, 0.03%, and 0.0007, respectively. On the LoveDA dataset, the improvements in the same metrics are 0.24%, 0.09%, and 0.0030, respectively.

The LCBAM improves the network’s extraction performance through its superior ability to focus on critical features, despite increasing GPU latency by 0.5 ms and CPU latency by 3 ms. On the GID dataset, LCBAM improves mIoU by 0.11%, OA by 0.04%, Kappa by 0.0012, and by 0.35%, although drops by 0.17%. On the LoveDA dataset, the LCBAM boosts mIoU, OA, and Kappa by 0.23%, 0.1%, and 0.0029, respectively.

The SRMFPM also enhances segmentation performance through multi-scale prediction, although it slightly increases GPU latency by 0.1 ms and CPU latency by 2 ms. On the GID dataset, SRMFPM improves mIoU, OA, and Kappa by 0.06%, 0.02%, and 0.0007, respectively. Notably, it increases by 0.09% with no drop in . On the LoveDA dataset, SRMFPM improves mIoU, OA, Kappa, and by 0.08%, 0.01%, 0.0009, and 0.57%, respectively, while only reducing by 0.42%.

3.5.2. Visualization Results Analysis

Figure 10 presents the visualization results of network variants on the GID dataset. The results show that progressive integration of the proposed modules into the baseline leads to noticeable improvements in fine-scale water body identification, false positive suppression, and edge extraction.

Replacing the baseline decoder blocks with LDBs significantly improves the identification of small water bodies, thanks to their multi-scale characteristics. As shown in the top red-circled regions of row 1, the small fish ponds are identified more completely after introducing LDBs. Likewise, the slender bridge in row 4 and the narrow river in row 5 are also detected with greater completeness.

The LCBAM enhances the model’s ability to capture subtle differences between the water and background by enhancing water body features. Thus, the integration of LCBAM into the baseline-LDB leads to a notable reduction in false detections. As shown in rows 2 and 3, the extraction results of baseline-LDB-LCBAM are superior to those of baseline-LDB, with almost no false detections in the paddy fields within the red-circled regions.

The proposed SRMFPM further enhances the network’s extraction performance. As shown in row 6, the identification of non-water regions is more accurate in LU-Net than in baseline-LDB-LCBAM. Additionally, SRMFPM enhances the accuracy and smoothness of water body edge segmentation. As illustrated in Figure 11, the edge segmentation results produced by LU-Net are closer to the ground truth image than those of baseline-LDB-LCBAM.

Figure 12 presents the visualization results of network variants on the LoveDA dataset. As the proposed modules are added progressively, the network demonstrates improved performance in water body extraction.

The integration of LDB enhances the performance in identifying algae-covered water bodies. As shown in rows 1 and 2, the baseline fails to extract many algae-covered water bodies, while the baseline-LDB captures much more of these areas. Moreover, LDB improves the network’s capability to extract small water bodies that are located near image boundaries. As shown in rows 4 and 6, the extraction performance of baseline-LDB is superior to that of the baseline network.

The LCBAM enhances the network’s segmentation accuracy by amplifying the feature differences between water and background. The baseline-LDB produces varying degrees of false detections for the agricultural land in row 3 and the forested area in row 5. However, the baseline-LDB-LCBAM significantly reduces these false positives.

The introduction of SRMFPM further improves the network’s extraction performance. As illustrated in row 5, LU-Net produces fewer false positives than baseline-LDB-LCBAM. Moreover, adding SRMFPM also improves the recognition of water body edges on the LoveDA dataset. As illustrated in Figure 13, the network with SRMFPM produces edge segmentation that is closer to the ground truth.

4. Conclusions

This research proposes a lightweight U-shaped water body extraction network named LU-Net, aiming to meet the requirements for real-time extraction and deployment convenience. The LU-Net adopts an encoder–decoder architecture, where RepViT serves as the encoder to enable efficient feature extraction, and the LDB is designed as the decoder to support fast decoding. Furthermore, two key components—LCBAM and SRMFPM—are designed to enhance this network’s discriminative capability and ability to identify water body boundaries. The experimental results demonstrate that LU-Net achieves the best or near-best extraction performance on the GID and LoveDA datasets. Moreover, LU-Net achieves the lowest latency on GPU and the second-lowest latency on CPU, indicating its efficiency across different computing platforms. Considering all aspects, LU-Net strikes the optimal trade-off between extraction accuracy and inference speed among all tested methods. However, this study was primarily validated on the GID and LoveDA datasets. Although they include diverse scenarios, this remains insufficient to comprehensively evaluate the model’s generalization capability and noise robustness across different sensors, seasons, and extreme terrain conditions. In the future, we plan to explore and improve LU-Net’s performance in such complex environments to meet the diverse requirements of real-world water body extraction tasks. Additionally, we will investigate LU-Net’s application potential in multispectral and hyperspectral remote sensing imagery to broaden its deployment scenarios and extend its performance boundaries.