Abstract

Water surface garbage has a significant impact on the protection of water environments and ecological balance, making water surface garbage object detection a critical task. Traditional supervised object detection methods require a large amount of annotated data. To address this issue, we propose a method that combines strong and weak supervision with CLIP (Contrastive Language–Image Pretraining) for water surface garbage object detection. First, we train on a dataset annotated with strong supervision, using traditional object detection algorithms to learn the location information of water surface garbage. Then, we input the water surface garbage images into CLIP’s visual encoder to obtain visual feature representations. Simultaneously, we train CLIP’s text encoder using textual description annotations to obtain textual feature representations of the images. By fusing the visual and textual features, we obtain comprehensive feature representations. In the weak supervision training phase, we input the comprehensive feature representations into the object detection model and employ a training strategy that combines strong and weak supervision to detect and localize water surface garbage. To further improve the model’s performance, we introduce attention mechanisms and data augmentation techniques to enhance the model’s focus and robustness towards water surface garbage. By conducting experiments on two water surface garbage datasets, we validate the effectiveness of the proposed method based on the combination of strong and weak supervision with CLIP for water surface garbage object detection tasks.

1. Introduction

In recent years, the issue of water surface garbage has attracted increasing attention worldwide. The presence of water surface garbage poses threats to marine ecosystems and affects the cleanliness and aesthetics of water bodies. Therefore, developing advanced techniques for detecting and monitoring water surface garbage has become crucial [1,2].

The early detection models related to water surface garbage are mainly based on traditional methods. With the improvement of computer computing power and the rapid development of deep learning models, more and more deep learning models are being applied to the field of water surface garbage detection. Based on relevant object detection algorithms, researchers have developed a series of water surface garbage detectors. However, algorithm performance is the core condition determining the accuracy of water surface garbage detectors. Therefore, we must focus more on the research of water surface garbage detection algorithms.

Object detection, a fundamental task in computer vision, identifies and locates objects of interest in images or videos. Its development has gone through several stages. Early machine-learning-based methods, such as the Viola–Jones detector [3], the HOG detector [4], and the DPM detector [5], relied on manually designed features and classifiers; they achieved some success but performed poorly in complex scenarios. With the rise of deep learning, object detection algorithms improved significantly. The R-CNN (Region-based Convolutional Neural Network) series applied convolutional neural networks (CNNs) to region proposals, and Faster R-CNN added the region proposal network (RPN); together, R-CNN [6], Fast R-CNN [7], and Faster R-CNN [8] brought substantial gains in accuracy and efficiency. Subsequently, single-stage detectors such as YOLO (You Only Look Once) [9] and SSD (Single Shot MultiBox Detector) [10] emerged, enabling faster detection by directly regressing bounding boxes and class information. However, these algorithms still struggle with small objects and objects with large scale variations. More recently, attention-based object detection algorithms, such as Attention R-CNN [11] and SNIPER [12], have gained widespread attention; they use attention mechanisms to focus on the image regions relevant to the object, which improves detection performance, particularly in complex scenes and under occlusion. Although these object detection methods have achieved remarkable success in various domains, they often face significant challenges in water surface garbage detection: the dynamic nature of water bodies, the irregular shapes and sizes of floating garbage, and varying lighting conditions make it difficult to detect surface garbage accurately.

Recent advances in visual–linguistic representation learning have produced remarkable models such as CLIP [13], ALIGN [14], and Florence [15]. Trained on hundreds of millions to billions of image–text pairs, with the matching between images and captions as the only supervision, these models achieve impressive results without manual labels, recognize a vast range of concepts, and transfer to many visual recognition tasks. CLIP (Contrastive Language–Image Pretraining) learns joint representations of images and text through joint training, which lets it relate image content to the associated textual descriptions and provides new insights and performance improvements for object detection tasks. This cross-modal understanding allows CLIP to generalize knowledge across modalities and facilitates transfer learning from one domain to another. By applying CLIP to water surface garbage detection, we can combine textual descriptions of garbage objects with visual cues to enhance the accuracy and robustness of detection algorithms.

This paper explores the application of the CLIP model to object detection, specifically water surface garbage detection. We propose a method that combines strong and weak supervision with CLIP (Contrastive Language–Image Pretraining). First, we train the model on strongly annotated datasets to learn the spatial information of water surface garbage through conventional object detection algorithms. Then, we input the water surface garbage images into the visual encoder of CLIP to obtain visual feature representations and simultaneously train the text encoder of CLIP using textual description annotations to obtain textual feature representations. Fusing the visual and textual features yields comprehensive feature representations. In the weak supervision training phase, we input these representations into the object detection model and combine strong and weak supervision training strategies to detect and localize water surface garbage. To further enhance performance, we introduce attention mechanisms and data augmentation techniques that improve the model’s focus on, and robustness to, water surface garbage. Experiments and comparative analyses on two water surface garbage datasets validate the effectiveness of the proposed method, which achieves satisfactory performance with limited annotated data compared to traditional strong supervision methods. By combining textual descriptions with visual cues, the CLIP-based model can enhance detection results, reduce false positives, and facilitate transfer learning, thereby advancing the field of water pollution monitoring.

The remainder of this paper is organized as follows: Section 2 provides an overview of water surface garbage detection and the development of the CLIP model and relevant state-of-the-art techniques. Section 3 introduces the overall framework and details of the proposed method. Section 4 presents the experimental datasets, implementation, and result evaluation. Finally, Section 5 provides conclusions and possible suggestions for future work.

2. Related Work

2.1. Water Surface Garbage Detection

Water surface garbage detection is an important application of deep-learning-based object detection algorithms in the field of environmental protection, and many research works have been devoted to developing efficient and accurate algorithms for it. Pu et al. [16] evaluated five classic object detection algorithms (Faster R-CNN, Cascade R-CNN, YOLOv3, YOLOv5-s, and YOLOv5-m) on the FloW-Img dataset and experimentally compared their detection accuracy and efficiency; YOLOv3 achieved higher detection accuracy than the other four algorithms. Yang et al. [17] designed a garbage collection system with autonomous cruising, recognition, and detection capabilities; the system runs on the Robot Operating System (ROS) and uses the YOLOv4 object detection model, whose detected targets and location information drive the intelligent garbage collection. Kong et al. [18] proposed an intelligent robot system, IWSCR, for water surface cleaning, in which the core component of the visual system is based on YOLOv3. Ying et al. [19] also designed an intelligent underwater robot for surface garbage cleaning. They applied a simple CNN-based method for surface garbage detection, achieving satisfactory performance in the automatic detection and classification of water surface garbage in a laboratory environment. Li et al. [20] proposed an improved YOLOv3-based garbage detection method that enables fast and accurate detection in varying aquatic environments. Zhang et al. [21] improved Faster R-CNN by combining low-level and high-level features; the experimental results showed good real-time performance for detecting floating water surface garbage. Ning et al. [22] proposed a target detection algorithm named PC-Net to address the challenges of complex backgrounds and small objects in floating garbage detection; compared to existing detection methods, it significantly improved the average precision. Matias et al. [23] trained a convolutional neural network to classify 96 × 96 images and used it as an object detector with a sliding window approach. The results demonstrated that combining neural networks with forward-looking sonar (FLS) forms a powerful object detection system suitable for small objects in underwater environments. Cai et al. [24] applied the CBAM attention module to the YOLOv5 object detection algorithm to develop a target detection model for plastic waste, aiding in the automatic capture of marine debris. Guo et al. [25] proposed a neural-network-based water surface garbage object detection framework and introduced a data augmentation strategy that synthesizes degraded images under different weather conditions to improve the network’s generalization and feature representation capabilities. Yang et al. [26] proposed a big-data detection and recognition method and system for moving floating garbage on the water surface based on blockchain technology. The detection rates of the system in three working environments were 93%, 96%, and 74%, with an average detection rate of 84.3%. Yi et al. [27] introduced the GFL_HAM algorithm, based on GFL (Generalized Focal Loss), to improve the accuracy of water surface garbage detection, achieving improvements of 1.09%, 1.36%, and 1.61% in mAP compared to YOLOv3, SSD, and GFL, respectively. Ai et al. [28] proposed a small object localization method based on the lateral inhibition (LI) neural biological phenomenon, discrete wavelet transform (DWT), and parameter-designed pulse-coupled neural network (PD-FC-MSPCNN) to track floating water garbage. Ma et al. [29] presented a floating garbage recognition algorithm that combines U-Net and an improved YOLOv5s, providing a new effective approach for the management of floating waste on water.

Unlike commonly used object detection models, which require pre-training followed by fine-tuning, cross-modal models such as CLIP can perform zero-shot image classification directly, that is, classification on a specific downstream task without any task-specific training data. CLIP consists of two models, a text encoder and an image encoder. The text encoder extracts text features and can be a transformer model of the kind commonly used in NLP, while the image encoder extracts image features and can be either a CNN or a vision transformer. Cross-modal models such as CLIP can therefore draw on the strengths of both vision transformers and CNNs. For these reasons, this study explores the application of strong and weak supervision combined with CLIP in water surface garbage detection.
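The zero-shot classification step described above can be illustrated with a minimal sketch. It assumes that the image and text embeddings have already been produced by the two encoders; the toy vectors, the `temperature` value, and the helper names are hypothetical and are not taken from the paper or from the CLIP code base.

```python
import math


def l2_normalize(vector):
    """Scale a vector to unit length so that dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def zero_shot_classify(image_emb, text_embs, class_names, temperature=0.01):
    """Pick the class whose text embedding is most similar to the image embedding."""
    image = l2_normalize(image_emb)
    logits = []
    for text_emb in text_embs:
        text = l2_normalize(text_emb)
        cosine = sum(a * b for a, b in zip(image, text))
        logits.append(cosine / temperature)  # temperature is an assumed value
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda k: probs[k])
    return class_names[best], probs


# Toy embeddings standing in for encoder outputs (hypothetical values).
class_names = ["bottle", "plastic bag", "leaf"]
text_embs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
image_emb = [0.9, 0.1, 0.2]

label, probs = zero_shot_classify(image_emb, text_embs, class_names)
print(label)
```

No training happens in this sketch: the class list can be changed at inference time simply by encoding different text prompts.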

2.2. Cross-Modal Correlation Algorithm

Cross-modal correlation algorithms have become an important research direction in the fields of computer vision and natural language processing in recent years. These algorithms aim to achieve joint representation learning between images and text, enabling effective matching and understanding of data from different modalities [30,31]. In the past, dealing with the correlation between images and text often involved manually designed features and rules. These methods required manual feature extraction and matching, leading to issues such as modality inconsistency and dimension mismatch, limiting their applicability in complex tasks. With the rapid development of deep learning, deep neural-network-based cross-modal correlation algorithms have made significant progress. These methods utilize deep convolutional neural networks (CNNs) [32,33] and recurrent neural networks (RNNs) [34,35] to handle image and text data separately and then fuse and match their representations. These methods are typically trained through supervised learning, using large-scale annotated image–text pairs for training. However, these methods face challenges when dealing with scarce annotated data and modality mismatch situations.

To address the issues of scarce annotated data and modality mismatch, cross-modal self-supervised learning has become a research hotspot. These methods leverage large-scale unlabeled image and text data for pretraining and learning aligned representations for images and text. Some methods incorporate techniques like generative adversarial networks (GANs) and variational autoencoders (VAEs) to generate richer and continuous cross-modal representations [36,37]. Others introduce image and text reconstruction tasks in self-supervised learning to further enhance the model’s learning capability [38,39]. Additionally, some research explores methods such as cross-modal knowledge distillation and transfer learning to improve the model’s generalization and adaptability [40].

In recent years, cross-modal correlation algorithms have made significant developments in the field of object detection. CLIP utilizes large-scale image and text data for pretraining and learns semantic correlations between images and text. By contrasting images and text samples in the embedding space, CLIP is able to bring relevant image and text samples closer, thus achieving cross-modal object detection. The emergence of CLIP is of great significance in addressing the issue of annotated data in traditional object detection algorithms. ViLBERT (Vision-and-Language BERT) [41] is another important cross-modal correlation algorithm that achieves joint understanding between images and text by pretraining a joint visual and language encoder. ViLBERT can not only perform object detection but also tasks such as image question answering and visual reasoning, enriching the application scenarios of cross-modal correlation algorithms in the field of object detection. LXMERT (Cross-Modality Encoder Representations from Transformers) [42] is a cross-modal correlation algorithm based on the transformer architecture. LXMERT achieves alignment between images and text in the embedding space by jointly training encoders for images and text. It has demonstrated excellent performance in the field of object detection, especially in visual question-answering tasks. UNITER (UNiversal Image–TExt Representation) [43] is a transformer-based cross-modal correlation algorithm that achieves semantic alignment between images and text by jointly training encoders for images and text. The application of UNITER in object detection tasks suggests the potential and advantages of cross-modal correlation algorithms in solving cross-modal object detection problems.

3. Methodology

3.1. Overall Framework

CLIP has achieved tremendous success in various downstream tasks in the field of computer vision, including image classification. The reason behind this success lies in CLIP’s ability to acquire highly generalized common knowledge from a dataset of 400 million text–image pairs collected from the internet through cross-modal contrastive learning. This also enables CLIP to possess zero-shot learning capabilities for unknown classes, which provides a potential solution for recognizing unknown categories of water surface garbage. However, using the vanilla CLIP model directly for water surface garbage detection does not yield satisfactory results, and the reasons for this are as follows:

- The 400 million data samples collected by CLIP from the internet provide general knowledge, but they lack specialization for the specific domain of water surface garbage recognition.

- Compared to the original CLIP used for image classification, water surface garbage detection as an object detection task involves more background interference.

- Directly applying image–text contrastive learning by cropping out garbage objects may lead to different instances of the same class appearing in a single batch. Treating these instances as equal negatives in traditional contrastive loss functions is not reasonable.

- Utilizing annotated samples for strong supervision fine-tuning is labor-intensive.

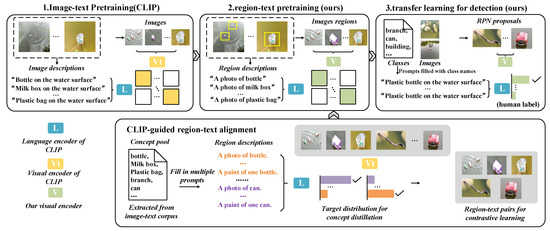

To address the challenges of domain disparity and data labeling mentioned above, this paper proposes a water surface garbage detection method based on domain self-supervised visual transformer pretraining. As shown in Figure 1, the technical roadmap for water surface garbage detection using the combination of strong and weak supervision with CLIP is as follows:

Figure 1. Overall technology roadmap.

- Data preparation stage: Collect a dataset of water surface garbage images. Perform strong supervision annotation by labeling the pixel-level garbage positions in each image. Perform weak supervision annotation by labeling the presence of garbage at the image level for each image.

- Strong supervision training stage: Use traditional object detection algorithms (such as Faster R-CNN, YOLO, etc.) for strong supervision training. Utilize the data annotated with strong supervision to learn the location information of water surface garbage.

- Weak supervision training stage: Input the images into CLIP’s visual encoder to obtain visual feature representations. Input the image-level textual descriptions into CLIP’s text encoder to obtain textual feature representations for the images. Then, fuse the visual and textual features to obtain comprehensive feature representations, and combine them with traditional object detection algorithms for water surface garbage detection and inference.
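The fusion step in the weak supervision stage is not specified further in the text. As a minimal sketch, assuming the simplest possible operator (concatenation of L2-normalized vectors, which is an assumption and not necessarily the operator used in this work), it could look as follows; `fuse_features` and the toy vectors are hypothetical.

```python
import math


def l2_normalize(vector):
    """Scale a vector to unit length; an all-zero vector is returned unchanged."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm > 0 else list(vector)


def fuse_features(visual, textual):
    """Assumed fusion: concatenate the normalized visual and textual features."""
    return l2_normalize(visual) + l2_normalize(textual)


visual = [3.0, 4.0]        # stand-in for a visual-encoder output
textual = [0.0, 2.0, 0.0]  # stand-in for a text-encoder output
fused = fuse_features(visual, textual)
print(len(fused))
```

Normalizing before concatenation keeps either modality from dominating the fused vector purely because of its scale.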

3.2. Strong and Weak Supervision Combined with CLIP

CLIP (Contrastive Language–Image Pretraining) is a cross-modal learning model that has garnered significant attention and application in the field of computer vision. It was introduced by the OpenAI team in 2021 and aims to establish a strong connection between images and text through joint training.

Traditional computer vision models typically focus on processing image data, but CLIP’s innovation lies in the joint training of both images and text. This approach enables CLIP to possess a cross-modal understanding, allowing it to comprehend the relationship between images and text simultaneously. This capability has demonstrated remarkable performance in various computer vision tasks, including image classification, object detection, and image generation.

The core idea of CLIP is to learn the correlation between images and text through contrastive learning. During training, CLIP is exposed to a large-scale dataset of image–text pairs, where the images and text are paired together. The model learns to match related image–text pairs while distinguishing unrelated ones. Through this contrastive learning process, CLIP captures the semantic relationship and shared features between images and text.

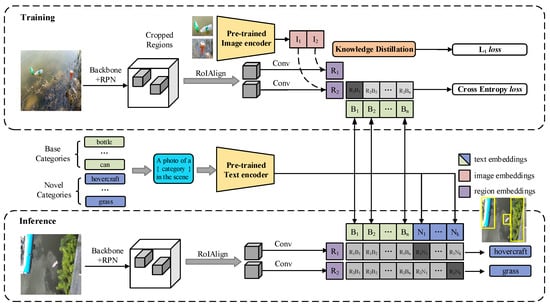

The overall architecture of CLIP, shown in Figure 2, consists of two key components: the visual encoder and the text encoder. The visual encoder, a convolutional neural network (such as a ResNet) or a vision transformer, converts images into low-dimensional visual feature representations. The text encoder is a transformer model that encodes text sequences into semantic feature representations. The outputs of the two encoders are projected into a shared embedding space, so that image and text features lie in the same vector space.

Figure 2. Overall architecture of CLIP.

To begin, a dataset of water surface garbage images needs to be prepared, where each image has corresponding strong supervision and weak supervision annotations. Strong supervision refers to pixel-level annotations for each image, accurately indicating the location of the water surface garbage. Weak supervision, on the other hand, involves image-level annotations, indicating only the presence or absence of water surface garbage without providing specific location information.

The approach of combining strong supervision with weak supervision and CLIP consists of two steps: training and inference. In the training phase, the following operations are performed: Firstly, the images are cropped to extract the annotated regions, and the pre-trained image encoder is used to encode these regions, resulting in image embeddings (red portion). Taking the Mask R-CNN algorithm as an example, the Mask R-CNN (Backbone + RPN + RoIAlign branch) is used to generate class-agnostic region embeddings (purple portion). The image embeddings and region embeddings need to undergo knowledge distillation. Basic categories (green portion) are transformed into text and fed into the pre-trained text encoder to generate text embeddings (green portion). The region embeddings and text embeddings are then subjected to dot product and softmax normalization. The supervision signal consists of a 1 at the corresponding class position and a 0 for the remaining positions.
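The two training signals described above can be sketched for a single region proposal. The sketch assumes that the region, image, and text embeddings are already available as plain vectors, and it assumes an L1 distance for the knowledge distillation term, which the text does not specify; all names and values are hypothetical.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classification_loss(region_emb, text_embs, target_index):
    """Cross-entropy between softmax(region . text) and a one-hot class target."""
    probs = softmax([dot(region_emb, text_emb) for text_emb in text_embs])
    return -math.log(probs[target_index])


def distillation_loss(region_emb, image_emb):
    """Mean absolute difference between region and image embeddings (assumed L1)."""
    diffs = [abs(r - i) for r, i in zip(region_emb, image_emb)]
    return sum(diffs) / len(diffs)


text_embs = [[1.0, 0.0], [0.0, 1.0]]  # base categories, e.g. "bottle" and "leaf"
region_emb = [2.0, 0.0]               # detector output for one region proposal
image_emb = [1.5, 0.5]                # image-encoder output for the same crop

cls_loss = classification_loss(region_emb, text_embs, target_index=0)
kd_loss = distillation_loss(region_emb, image_emb)
print(cls_loss, kd_loss)
```

The one-hot supervision appears here as `target_index`: only the probability at the annotated class position contributes to the cross-entropy.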

In the inference phase, the following operations are performed: The basic categories and new categories are transformed into text and fed into the pre-trained text encoder to generate text embeddings (green and blue portions, respectively). Simultaneously, the Mask R-CNN is used to generate class-agnostic region embeddings. The region embeddings and text embeddings are then subjected to dot product and softmax normalization, and the predicted result for each region is determined by selecting the class with the highest value among the new categories (blue portion).
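A minimal sketch of this inference step is given below, again assuming precomputed embeddings. Here the argmax runs over every category that is passed in; restricting the prediction to the new categories only, as described above, amounts to passing only those names and text embeddings. The function name and toy values are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_regions(region_embs, text_embs, class_names):
    """Assign each region the category whose text embedding scores highest."""
    predictions = []
    for region in region_embs:
        scores = [sum(r * t for r, t in zip(region, text)) for text in text_embs]
        probs = softmax(scores)
        best = max(range(len(probs)), key=lambda k: probs[k])
        predictions.append((class_names[best], probs[best]))
    return predictions


# "bottle" and "leaf" play the role of base categories, "ball" of a new category.
class_names = ["bottle", "leaf", "ball"]
text_embs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
region_embs = [[0.1, 0.2, 3.0], [2.5, 0.3, 0.1]]

predictions = predict_regions(region_embs, text_embs, class_names)
for name, prob in predictions:
    print(name, round(prob, 3))
```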

By combining the strong supervision of traditional object detection algorithms and the cross-modal understanding capability of CLIP, the proposed approach for water surface garbage detection can effectively leverage the localization ability of object detection algorithms and the cross-modal understanding of CLIP to detect water surface garbage from both image and text perspectives. By incorporating different types of supervision information, this method can improve the accuracy and robustness of water surface garbage detection and has practical applications. However, the effectiveness of this method is influenced by the quality of strong and weak supervision annotations, requiring accurate and reliable annotations during the data preparation stage. Additionally, the training and optimization of the model also require large-scale datasets and computational resources to achieve better performance.

3.3. Loss Function of Object Detection

The loss function computation in CLIP for object detection tasks involves two aspects of contrastive learning: positive pair contrast and negative pair contrast. Positive pair contrast refers to comparing the embedding vectors of each image with their corresponding text embedding vectors to maximize their similarity. Negative pair contrast refers to comparing the embedding vectors of each image with the text embedding vectors of other images to minimize their similarity. This contrastive learning can be achieved by calculating cosine similarity or other similarity measures.

Specifically, the positive pair contrast loss function is typically computed by maximizing the margin cosine similarity. For each image and its corresponding text description, the cosine similarity is calculated, and the goal is to maximize this similarity. On the other hand, the negative pair contrast loss function is computed by minimizing the margin cosine similarity. For each image and the text descriptions of other images, the cosine similarity is calculated, and the goal is to minimize this similarity.

Combining the positive pair contrast loss and the negative pair contrast loss gives a comprehensive loss function. The loss function for CLIP in object detection tasks can be defined as follows:

$$L = L_{pos} + \lambda L_{neg}$$

where $L_{pos}$ represents the positive pair contrast loss, $L_{neg}$ represents the negative pair contrast loss, and $\lambda$ is a hyperparameter that balances the two.

The positive pair contrast loss can use a contrastive loss function, such as the cosine similarity loss:

where $s_{ii}$ represents the similarity score between the $i$-th image and its own text description (a positive pair) and $N$ represents the total number of samples. By calculating the cosine similarity scores between positive pairs and applying the softmax operation, the similarity scores are transformed into a probability distribution. The positive pair contrast loss function encourages the model to establish a close relationship between the image embedding vectors and their corresponding text embedding vectors by maximizing the similarity scores of positive pairs.

The negative pair contrast loss can use a contrastive loss function, such as the cosine similarity loss:

where $s_{ij}$ ($j \neq i$) represents the similarity score between the $i$-th image and the text description of the $j$-th image (a negative pair) and $N$ represents the total number of samples. By calculating the cosine similarity scores between negative pairs and applying the softmax operation, the similarity scores are transformed into a probability distribution. The negative pair contrast loss function encourages the model to establish a lower similarity between the image embedding vectors and the text embedding vectors of other images by minimizing the similarity scores of negative pairs.
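One possible form of the two losses that is consistent with the description above (cosine similarities passed through a softmax, positive similarities pushed up, negative similarities pushed down) is sketched here. It is an illustrative assumption rather than the exact formulation of this work. Let $s_{ij}$ be the cosine similarity between the embedding of image $i$ and the text embedding of image $j$, and let $p_{ij}$ be its softmax-normalized value over the $N$ samples:

$$p_{ij} = \frac{\exp(s_{ij})}{\sum_{k=1}^{N} \exp(s_{ik})}$$

$$L_{pos} = -\frac{1}{N} \sum_{i=1}^{N} \log p_{ii}, \qquad L_{neg} = -\frac{1}{N} \sum_{i=1}^{N} \frac{1}{N-1} \sum_{j \neq i} \log\left(1 - p_{ij}\right)$$

Under this assumed form, $L_{pos}$ decreases as each positive similarity $s_{ii}$ grows relative to the others, and $L_{neg}$ decreases as the probability mass assigned to negative pairs shrinks.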

By jointly optimizing the positive pair contrast loss and the negative pair contrast loss, CLIP in object detection tasks can learn the correlation between images and text and provide accurate object detection results. This joint training approach fully utilizes the interaction between images and text, enabling the model to possess strong cross-modal understanding and contextual reasoning capabilities, thereby improving the accuracy and robustness of object detection.

By considering both strong supervision loss and weak supervision loss, the entire network is jointly optimized using optimization algorithms such as gradient descent to minimize the overall loss. Through the iterative optimization process, the model’s parameters are continuously updated, enabling the model to perform effective water surface garbage detection guided by both strong and weak supervision.
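The contrastive part of the objective can be sketched numerically. The sketch assumes one plausible form of the losses, which is an illustrative choice and not necessarily the formulation used in this work: similarities are softmax-normalized per image, the positive loss is the mean negative log-probability of the matching pair, the negative loss is the mean of $-\log(1 - p)$ over non-matching pairs, and the two are combined with a weight `lam`. All names are hypothetical.

```python
import math


def softmax(row):
    """Numerically stable softmax over one row of similarity scores."""
    top = max(row)
    exps = [math.exp(s - top) for s in row]
    total = sum(exps)
    return [e / total for e in exps]


def contrastive_losses(sim):
    """Return (positive loss, negative loss) for a square similarity matrix.

    sim[i][j] is the cosine similarity between image i and text j;
    the diagonal entries are the positive pairs. Requires at least 2 samples.
    """
    n = len(sim)
    l_pos = 0.0
    l_neg = 0.0
    for i, row in enumerate(sim):
        probs = softmax(row)
        l_pos += -math.log(probs[i])
        l_neg += -sum(math.log(1.0 - probs[j]) for j in range(n) if j != i) / (n - 1)
    return l_pos / n, l_neg / n


def total_loss(sim, lam=1.0):
    """Weighted sum of the two losses; lam is the balancing hyperparameter."""
    l_pos, l_neg = contrastive_losses(sim)
    return l_pos + lam * l_neg


aligned = [[1.0, 0.0], [0.0, 1.0]]     # each image matches its own text
misaligned = [[0.0, 1.0], [1.0, 0.0]]  # each image matches the wrong text
print(total_loss(aligned), total_loss(misaligned))
```

Minimizing this quantity by gradient descent moves a batch from the misaligned case toward the aligned case, which is the behaviour the two contrast terms are meant to encourage.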

3.4. Model Evaluation Indicators

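mAP (mean Average Precision) is a commonly used metric for evaluating object detection models. It is computed by calculating the Average Precision (AP) for each object class and then averaging over classes. AP is obtained from the precision–recall (PR) curve, which is built separately for each class by ranking that class's predicted bounding boxes by confidence and connecting the resulting precision–recall points. The corresponding formulas are as follows:

$$Precision = \frac{TP}{TP + FP}, \qquad Recall = \frac{TP}{TP + FN}$$

$$AP = \int_{0}^{1} P(R)\, dR, \qquad mAP = \frac{1}{K} \sum_{k=1}^{K} AP_k$$

where $TP$, $FP$, and $FN$ denote the numbers of true positives, false positives, and false negatives, $P(R)$ is the precision at recall $R$, and $K$ is the number of classes.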
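As a worked illustration of the metric, the sketch below computes AP for one class as the area under the interpolated precision–recall curve and then averages per-class values into mAP. It assumes that each prediction has already been matched to the ground truth (for example by an IoU threshold) and marked as a true or false positive; the interpolation variant and all names are assumptions, since the text does not state which AP protocol was used.

```python
def average_precision(scores, is_true_positive, num_ground_truth):
    """Area under the interpolated precision-recall curve for one class."""
    order = sorted(range(len(scores)), key=lambda k: scores[k], reverse=True)
    tp = 0
    fp = 0
    precisions = []
    recalls = []
    for k in order:
        if is_true_positive[k]:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_ground_truth)
    # Interpolate: make precision non-increasing as recall grows.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap = 0.0
    previous_recall = 0.0
    for precision, recall in zip(precisions, recalls):
        ap += (recall - previous_recall) * precision
        previous_recall = recall
    return ap


def mean_average_precision(per_class_ap):
    """Average the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)


# Three predictions for one class, two ground-truth objects (toy values).
ap_bottle = average_precision(
    scores=[0.9, 0.8, 0.7],
    is_true_positive=[True, False, True],
    num_ground_truth=2,
)
map_value = mean_average_precision([ap_bottle, 1.0])
print(ap_bottle, map_value)
```

In the toy example the ranked predictions are a hit, a miss, and a hit, giving recall levels 0.5 and 1.0 with interpolated precisions 1 and 2/3, so the AP is 0.5 × 1 + 0.5 × 2/3 = 5/6.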

4. Experiments

4.1. Dataset Introduction

The experiments were conducted on water surface garbage detection data comprising a public dataset of 3090 annotated images and a private dataset of 7350 annotated images. Both datasets contain a diverse range of water surface garbage image samples, covering various environmental conditions, types of garbage, and garbage sizes. The public dataset, for example, consists of 11 categories: cans, leaves, tree branches, grass, milk cartons, plastic bags, bottles, plastic containers, glass bottles, rotten fruits, and balls. The dataset is split so that 2/3 of the annotated images form the training set and the remaining 1/3 form the test set. The class statistics for this dataset are shown in Table 1 and Table 2.
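The 2/3 to 1/3 split can be sketched as follows for the 3090 images of the public dataset. The random shuffle and the fixed `seed` are assumptions made for illustration; the text does not say how images were assigned to the two subsets.

```python
import random


def split_dataset(image_ids, train_fraction=2 / 3, seed=0):
    """Shuffle the ids and split them into training and test subsets."""
    ids = list(image_ids)
    random.Random(seed).shuffle(ids)  # seeded for a reproducible split
    n_train = round(len(ids) * train_fraction)
    return ids[:n_train], ids[n_train:]


train_ids, test_ids = split_dataset(range(3090))
print(len(train_ids), len(test_ids))
```

With 3090 images this gives 2060 training images and 1030 test images.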

Table 1. Statistics of training dataset.

Table 2. Statistics of test dataset.

4.2. Experiment Setting

To evaluate the water surface garbage object detection algorithm based on the combination of strong supervision and weak supervision with CLIP, we set up the following experimental environment. The hardware was an NVIDIA GeForce RTX 3060 GPU with 12 GB of VRAM. The software was the Ubuntu 20.04 LTS operating system, the PyTorch 1.9.0 deep learning framework, and Python 3.8. Model parameters were updated by mini-batch gradient descent (using the Adam optimizer, as detailed below), together with a learning rate decay strategy to accelerate model convergence. The experimental hyperparameters are shown in Table 3.

Table 3. Experimental hyperparameter settings.

During the experiment, we fully utilized the GPU resources in the experimental environment to accelerate the training and inference speed, thereby obtaining the results of water surface garbage object detection faster. Specifically, by utilizing the powerful computing capability and VRAM capacity of the GeForce RTX 3060 GPU, we achieved fast and efficient deep-learning computations.

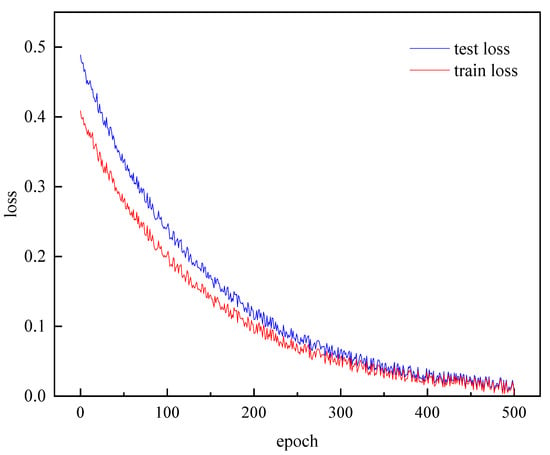

In the experiment on water surface garbage object detection based on the combination of strong supervision and weak supervision with CLIP, we trained the model on both the public dataset and the private dataset. Training used a batch size of 128 and the Adam optimizer with an initial learning rate of 0.000075. The training loss curve is shown in Figure 3.

Figure 3. Domain-specific pretraining loss curve.

In the downstream water surface garbage detection training, we trained the model for 100 epochs. We used the Adam optimizer with an initial learning rate of 0.0025 and applied a weight decay of 0.0001. The learning rate was reduced to one-tenth of the initial learning rate at the 50th and 80th epochs.
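The learning rate schedule described above can be written as a small function. The sketch assumes step decay in which each milestone multiplies the current rate by 0.1, so the rate is 0.00025 from epoch 50 and 0.000025 from epoch 80; the text could also be read as a single reduction to one-tenth of the initial rate, so this interpretation is an assumption, as is the 1-indexed epoch convention.

```python
def learning_rate(epoch, base_lr=0.0025, milestones=(50, 80), gamma=0.1):
    """Return the learning rate for a 1-indexed epoch under step decay."""
    passed = sum(1 for milestone in milestones if epoch >= milestone)
    return base_lr * gamma ** passed


for epoch in (1, 49, 50, 79, 80, 100):
    print(epoch, learning_rate(epoch))
```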

4.3. Performance Evaluation

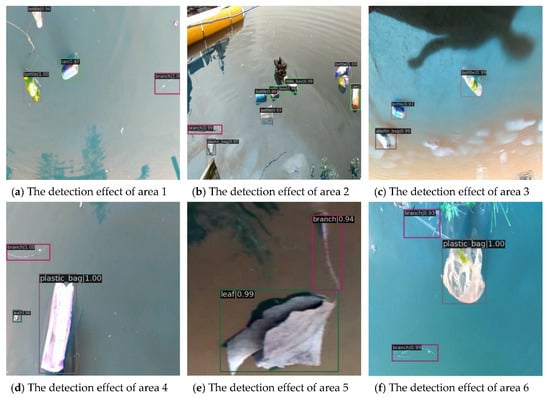

The experimental results of the water surface garbage object detection algorithm are shown in Figure 4. From Figure 4, it can be observed that the algorithm performs well on different types of water surface garbage detection tasks, accurately identifying and localizing garbage objects. These results provide a theoretical basis and technical support for subsequent water surface garbage management.

Figure 4.

Detection results for water surface garbage.

The mAP results on the public dataset are shown in Tables 4, 5, and 6. In total, 24 experiments were conducted, pairing three object detection algorithms (Faster R-CNN, Mask R-CNN, and Cascade R-CNN) with eight backbones (R-50, R-101, R-50x4, R-50x16, R-50x64, ViT-B/32, ViT-B/16, and ViT-B/14).
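As a small illustration of how the 24 configurations arise, the grid of detectors and backbones can be enumerated as follows. The variable names are illustrative and are not taken from the authors' code. The backbone labels are those listed above, and the third detector is labelled Cascade R-CNN, following the caption of Table 6.

```python
from itertools import product

DETECTORS = ("Faster R-CNN", "Mask R-CNN", "Cascade R-CNN")
BACKBONES = ("R-50", "R-101", "R-50x4", "R-50x16", "R-50x64",
             "ViT-B/32", "ViT-B/16", "ViT-B/14")

# Every detector is paired with every backbone: 3 x 8 = 24 runs per dataset.
EXPERIMENTS = list(product(DETECTORS, BACKBONES))

for detector, backbone in EXPERIMENTS:
    print(f"{detector} + {backbone}")

print("total configurations:", len(EXPERIMENTS))
```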

Table 4.

Experimental results of Faster R-CNN on public datasets.

Table 5.

Experimental results of Mask R-CNN on public datasets.

Table 6.

Experimental results of Cascade R-CNN on public datasets.

Consistent patterns can be observed across the 24 experiments on the public dataset. For a given backbone, Faster R-CNN always performs the worst, while Cascade R-CNN always performs the best. For a given algorithm, ViT-B/14 always achieves the highest performance and R-50 the lowest, which is consistent with the expected pattern. In addition, the models based on ResNet-101 outperform those based on ResNet-50, which may be because ResNet-101 is deeper and larger.

Across these 24 experiments, the proposed model, which combines strong and weak supervision with CLIP, improves mAP over the corresponding baseline object detection model in every configuration. For Faster R-CNN (Table 4), the largest improvement is 3.25 mAP with the R-50x4 backbone, and the smallest is 0.12 mAP with ViT-B/16. For Mask R-CNN (Table 5), the largest improvement is 2.37 mAP with R-50x64, and the smallest is 0.67 mAP with R-50. For Cascade R-CNN (Table 6), the largest improvement is 1.59 mAP with ViT-B/14, and the smallest is 0.41 mAP with R-101. These results indicate that combining strong and weak supervision with CLIP has great potential for water surface garbage object detection.
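The per-detector extremes quoted above can be collected and compared with a short script. This is only a convenience sketch. The values are the mAP gains stated in this paragraph for the public dataset, the full per-backbone values in Tables 4 to 6 are not reproduced here, and the names `REPORTED_GAINS` and `overall_extreme` are hypothetical.

```python
# mAP gains (CLIP-based model minus baseline) quoted in the text for the
# public dataset, stored as {detector: {"max": (backbone, gain), "min": ...}}.
REPORTED_GAINS = {
    "Faster R-CNN": {"max": ("R-50x4", 3.25), "min": ("ViT-B/16", 0.12)},
    "Mask R-CNN": {"max": ("R-50x64", 2.37), "min": ("R-50", 0.67)},
    "Cascade R-CNN": {"max": ("ViT-B/14", 1.59), "min": ("R-101", 0.41)},
}


def overall_extreme(gains, kind):
    """Return (detector, backbone, gain) for the overall 'max' or 'min' gain."""
    pick = max if kind == "max" else min
    detector = pick(gains, key=lambda name: gains[name][kind][1])
    backbone, gain = gains[detector][kind]
    return detector, backbone, gain


largest = overall_extreme(REPORTED_GAINS, "max")
smallest = overall_extreme(REPORTED_GAINS, "min")
# If even the smallest gain per detector is positive, no configuration got worse.
all_positive = all(entry["min"][1] > 0 for entry in REPORTED_GAINS.values())

print("largest gain:", largest)
print("smallest gain:", smallest)
print("all gains positive:", all_positive)
```

Both the largest and the smallest gain on the public dataset fall to Faster R-CNN, with the R-50x4 and ViT-B/16 backbones, respectively.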

Among all the CLIP-based algorithms evaluated on the public dataset, the Cascade R-CNN model with ViT-B/14 as the backbone achieved the best performance, with 57.46 mAP. It outperformed the Faster R-CNN and Mask R-CNN models with the same backbone, which achieved 56.32 mAP and 56.42 mAP, by 1.14 mAP and 1.04 mAP, respectively. We therefore recommend the Cascade R-CNN model with a ViT-B/14 backbone as the final model.

The mAP results on the private dataset are shown in Tables 7, 8, and 9. As on the public dataset, 24 experiments were conducted, pairing three object detection algorithms (Faster R-CNN, Mask R-CNN, and Cascade R-CNN) with eight backbones (R-50, R-101, R-50x4, R-50x16, R-50x64, ViT-B/32, ViT-B/16, and ViT-B/14).

Table 7.

Experimental results of Faster R-CNN on private datasets.

Table 8.

Experimental results of Mask R-CNN on private datasets.

Table 9.

Experimental results of Cascade R-CNN on private datasets.

The same patterns can be observed across the 24 experiments on the private dataset. For a given backbone, Faster R-CNN always performs the worst, while Cascade R-CNN always performs the best. For a given algorithm, ViT-B/14 always achieves the highest performance and R-50 the lowest, which is consistent with the expected pattern. In addition, the models based on ResNet-101 outperform those based on ResNet-50, which may be because ResNet-101 is deeper and larger.

Across these 24 experiments, the proposed model, which combines strong and weak supervision with CLIP, again improves mAP over the corresponding baseline object detection model in every configuration. For Faster R-CNN (Table 7), the largest improvement is 1.55 mAP with the ViT-B/16 backbone, and the smallest is 0.27 mAP with R-50x4. For Mask R-CNN (Table 8), the largest improvement is 1.62 mAP with R-50x64, and the smallest is 0.37 mAP with R-50. For Cascade R-CNN (Table 9), the largest improvement is 1.38 mAP with ViT-B/14, and the smallest is 0.25 mAP with R-50. These results indicate that combining strong and weak supervision with CLIP has great potential for water surface garbage object detection.

Among all the CLIP-based algorithms evaluated on the private dataset, the Cascade R-CNN model with ViT-B/14 as the backbone achieved the best performance, with 79.69 mAP. It outperformed the Faster R-CNN and Mask R-CNN models with the same backbone, which achieved 77.72 mAP and 77.27 mAP, by 1.97 mAP and 2.42 mAP, respectively. We therefore recommend the Cascade R-CNN model with a ViT-B/14 backbone as the final model.
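The margins quoted above are plain differences between mAP values. The sketch below recomputes them for the private dataset from the three ViT-B/14 figures given in this paragraph. `margins_over` is a hypothetical helper name, and the results are rounded to two decimals to avoid floating-point noise.

```python
# mAP of the CLIP-based models with the ViT-B/14 backbone on the private
# dataset, as quoted in the text.
PRIVATE_VIT_B14_MAP = {
    "Faster R-CNN": 77.72,
    "Mask R-CNN": 77.27,
    "Cascade R-CNN": 79.69,
}


def margins_over(scores, best):
    """Return how far `best` leads every other entry, in mAP points."""
    return {
        name: round(scores[best] - value, 2)
        for name, value in scores.items()
        if name != best
    }


# Pick the detector with the highest mAP, then measure its lead over the rest.
best = max(PRIVATE_VIT_B14_MAP, key=PRIVATE_VIT_B14_MAP.get)
margins = margins_over(PRIVATE_VIT_B14_MAP, best)
print(best, margins)
```

The same helper applies unchanged to the public dataset figures (57.46, 56.32, and 56.42 mAP).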

In addition, we also evaluated the performance of the algorithm on different categories of water surface garbage. The results showed that the CLIP-based algorithm achieved good performance in detecting various types of garbage. Whether it was plastic bottles, paper, metal cans, or other types of garbage, the algorithm was able to accurately detect and locate them.

In terms of performance comparison, the CLIP-based algorithm outperformed traditional CNN-based methods in water surface garbage detection. This result further validates the effectiveness and superiority of the proposed approach of combining strong supervision and weak supervision with CLIP. It is particularly noteworthy that the Cascade R-CNN model pre-trained and fine-tuned with domain-specific self-supervised visual transformers achieved the best performance across all metrics. This indicates that combining the CLIP model with self-supervised learning methods can improve the accuracy and robustness of the algorithm in water surface garbage object detection.

5. Conclusions

The proposed water surface garbage object detection algorithm based on the combination of strong supervision and weak supervision with CLIP has made significant progress in addressing the existing challenges. This method leverages the localization capability of traditional object detection algorithms and the cross-modal understanding ability of the CLIP model, enabling the model to detect water surface garbage from both image and text perspectives. By combining different types of supervision information, this approach can improve the accuracy and robustness of water surface garbage detection and has practical applicability in real-world scenarios. The specific conclusions can be summarized as follows:

- (1) Across all experiments on the two datasets, which pair three object detection algorithms with eight backbones, Faster R-CNN consistently performs the worst and Cascade R-CNN consistently performs the best for a given backbone. For a given algorithm, ViT-B/14 consistently achieves the highest performance and R-50 the lowest, which aligns with the expected pattern.

- (2) Across the same experiments, the proposed approach of combining strong supervision and weak supervision with CLIP consistently improves mAP compared to the baseline object detection models.

- (3) Among all the CLIP-based algorithms evaluated on the public dataset, the largest improvement is observed in Faster R-CNN with R-50x4 as the backbone, achieving a 3.25 mAP increase. Among all the CLIP-based algorithms evaluated on the private dataset, the largest improvement is observed in Faster R-CNN with ViT-B/16 as the backbone, achieving a 2.05 mAP increase.

- (4) Among all the CLIP-based algorithms evaluated on the two datasets, the Cascade R-CNN model with ViT-B/14 as the backbone consistently achieves the best performance, reaching 57.46 mAP on the public dataset and 79.69 mAP on the private dataset.

In conclusion, the water surface garbage object detection algorithm based on the combination of strong supervision and weak supervision with CLIP demonstrates superior performance in the experiments. Its successful application provides new insights and technical support for addressing the challenges in water surface garbage detection and offers valuable guidance for future research and practical implementations.

Author Contributions

Y.M.: methodology and writing—original draft. Z.C.: data curation and writing—review and editing. H.L.: supervisor. Y.Z.: conceptualization and coding. C.L.: software. D.L.: investigation. W.H.: resources. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data generated and analyzed during this study are available from the corresponding author by request.

Conflicts of Interest

The authors declare that they have no known competing financial interest or personal relationship that could have appeared to influence the work reported in this paper.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).