1. Introduction

Teamwork permeates economic activities. In some cases, complementary skills are needed to complete a project; in other cases, division of labor plays a crucial role in the timely delivery of a product. Partnerships, group projects and team sports are but a few examples in which individuals team up to achieve a certain goal. With the exception of partnerships, it falls upon an employer to hire the team of employees to fulfill the task at hand. In particular, as expenditure on recruitment and assessment surges¹, with U.S. companies spending on average around US$… per hire, an increase of nearly …% in the last four years, it is clear that recruitment and talent research divisions have turned their attention to more than the job applicants' professional abilities. As a matter of fact, common practice includes the analysis of criminal² and credit histories³ and, more recently, of social networks as well⁴.

While employees' technical skills are important, interest in their personal characteristics other than job-relevant skills may be related to the now widespread knowledge that economic agents are not purely selfish, often displaying other-regarding preferences [3,4,5,6,7]. The literature in behavioral and experimental economics strongly suggests that social preferences affect outcomes in standard economic models [8,9]. The works in [10,11,12,13] analyze, in particular, the role other-regarding preferences play in interactions among employees in models of the workplace. The main findings in this literature show that the employees' concerns towards one another affect not only the provision of effort, but also the compensation schemes that are offered. Thus, it is only natural to wonder what an ideal team would look like, for a given set of skills: Would it be a team composed of selfish individuals, whose only concern is their own gains, or maybe a group of altruistic agents, who would be content with increasing their workmates' wellbeing? Perhaps a crew of moral employees deriving satisfaction from choosing actions they think are the right ones? These are the questions I address in this paper.

In view of the overwhelming experimental evidence of behaviors that are incompatible with purely selfish preferences [4,5,14,15], it is important to understand how prosocial preferences affect behavior in the workplace and, by extension, the design of employment contracts. I propose a model to address this question.

Specifically, I focus on the optimal compensation schemes that should be used in a standard moral hazard setting to incentivize employees to fulfill their tasks. This allows me to compare the profits the employer obtains from teams composed of individuals with different kinds of prosocial preferences. Although I do not study the recruitment process per se, I am able to make predictions about which preferences the principal would favor.

The framework is the multiagent moral hazard model first proposed by [16,17], where a risk-neutral principal hires a team of two risk-averse agents. The agents can exert costly effort in order to stochastically affect the realization of output. By assumption, efforts are chosen simultaneously and independently by the agents and cannot be observed by the principal. Output, on the other hand, is observable by third parties after being realized and can thus be contracted upon.

In the behavioral economics literature, several classes of prosocial preferences have been proposed. I analyze two of them. The first is altruism [18]⁵, a class that has been extensively used in the literature on voluntary contributions to public goods. This is natural, since efforts made in the context of teamwork in a firm can be thought of as contributions to a public good (the firm's profit). Second, in light of recent results by [20,21], who show that a particular, novel class of preferences stands out as being favored by evolution, I compare the optimal contract under altruism with the optimal contract under this class of homo moralis preferences, a convex combination of selfishness and morality. In sum, my model addresses the following question: if an employer could choose between a team of two moral agents and a team of two altruists, which team would he/she prefer, and why⁶?

I characterize the optimal contract for a team of equally altruistic agents and for a team of equally moral agents, and compare them. First, I find that the trade-off between risk sharing and incentive provision is present, as in the case with standard selfish preferences. However, as intuition would suggest, less high-powered incentives are needed to induce effort as the agents become more concerned about the right thing to do or about each other's material payoff, and the principal's expected profit from each team is increasing in the team's degree of morality or altruism. Second, if efforts are symmetric and could be contracted upon, the principal would be better off hiring a team of altruistic agents over the other teams, for any degree of morality and altruism, because altruism towards one's partner reduces the payment necessary to induce participation, an effect that is not present with selfish or moral preferences. On the other hand, when efforts are not observable, which team is preferred depends on the production technology: in particular, if the stochastic production technology displays increasing returns to efforts, the altruistic team is the least expensive to hire.

This is a consequence of the different nature of each class of preferences. While altruistic agents derive benefits from increased material payoffs of their fellows, moral agents take satisfaction in doing the right thing. Intuitively, under increasing returns a higher effort drastically increases the expected material payoff of the agents, on which altruism is based. Meanwhile, the choice of the right thing to do depends only on the contract offered by the principal, and not on the underlying production technology. Therefore, under increasing returns, altruistic agents have a stronger intrinsic motivation to exert the high level of effort, thus demanding a less high-powered contract and saving costs for the principal.

This paper is closely related to the moral hazard literature, in particular to two of its strands: moral hazard in teams and moral hazard with prosocial preferences. References [17,23] characterize the basic results on moral hazard in teams that are used to build the model below⁷. References [25,26,27,28] study optimal incentive schemes under different prosocial preferences: the first three focus on inequity aversion, while the last models agents exhibiting reciprocity concerns towards each other. References [29,30,31] consider moral hazard in teams where the agents can monitor one another and, therefore, study the effects of peer pressure on optimal contract design. Furthermore, [32,33,34] study problems where the agents' prosocial preferences are private information and give conditions for separating equilibria and self-selection by different types. None of them, however, raises the question of which preferences yield the lowest cost to the principal.

The analysis below differs from the previous literature in three crucial respects. First, it considers homo moralis preferences, which, to the best of my knowledge, has not been done before in a contracting setting, and thus presents a simple environment where the principal can profitably exploit idiosyncrasies generated by these and by altruistic preferences. Second, it allows neither for monitoring nor for private information about the agents' preferences, so that I can focus solely on the effect of prosocial preferences on optimal contract design. Last, and more importantly, the analysis contrasts the optimal contracts under each class of preferences and derives conditions under which the principal would prefer hiring one team over the other, therefore providing a rationale for firms to collect soft information on potential employees in order to compose teams that minimize the total payments to be made.

I proceed as follows. Section 2 presents the environment, while Sections 3 and 4 study the optimal contract assuming efforts are contractible and non-contractible, respectively. Section 5 concludes. For ease of exposition, all proofs are relegated to Appendix C.

2. The Model

I analyze the interaction between a principal and two agents, denoted by $i \in \{1, 2\}$. The principal hires the two agents to work on a joint task, which generates revenue $R \in \{R_L, R_H\}$ for the principal, where $R_H > R_L$. Each agent can exert either a low or a high effort level, $e_i \in \{0, 1\}$. Efforts determine revenues stochastically, according to the following probability distribution:

$$\Pr(R = R_H \mid e_1, e_2) = p_{e_1 + e_2}, \qquad \Pr(R = R_L \mid e_1, e_2) = 1 - p_{e_1 + e_2}.$$

Throughout, I assume that revenue is never certain and that the probability of achieving a high outcome is increasing in the total effort exerted by the agents: $0 < p_0 < p_1 < p_2 < 1$.

If effort were costless, the assumption above would indicate a preference of the principal for both agents to exert effort. However, effort is costly to each agent; for each $i \in \{1, 2\}$, exerting effort $e_i$ costs $c\,e_i$, with $c > 0$⁸.

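The principal offers the agents contracts $w_i = (w_i^H, w_i^L)$, $i \in \{1, 2\}$, specifying the payments that follow each realization of revenues. The principal is assumed to be risk neutral, and his/her payoff is given by:

$$\Pi = p_{e_1+e_2}\left(R_H - w_1^H - w_2^H\right) + \left(1 - p_{e_1+e_2}\right)\left(R_L - w_1^L - w_2^L\right).$$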

Denote by $\pi_i(e_i, e_j, w_i)$ the expected material payoff accruing to agent $i$ from the effort choices $(e_i, e_j)$ and wage schedule $w_i$, for $i, j \in \{1, 2\}$, $i \neq j$. I restrict attention to wage schedule pairs $w_i = (w_i^H, w_i^L)$ determining the payments following good and bad realizations of revenues. In what follows, the material payoff function takes the expected additively separable form:

$$\pi_i(e_i, e_j, w_i) = p_{e_i+e_j}\, u(w_i^H) + \left(1 - p_{e_i+e_j}\right) u(w_i^L) - c\, e_i,$$

where $u$ is the function that associates the agent's consumption utility with each amount of money. The dependence of $i$'s expected material payoff on $e_j$ comes from the effect of the other agent's effort on the probability distribution of revenues. The agents are risk averse towards wages: $u$ is assumed to be twice continuously differentiable, strictly increasing and strictly concave⁹.

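The principal faces either a team consisting of two agents characterized by homo moralis preferences with degree of morality $\kappa_i \in [0, 1]$, represented by the utility function:

$$U_i^{\kappa}(e_i, e_j) = (1 - \kappa_i)\, \pi_i(e_i, e_j, w_i) + \kappa_i\, \pi_i(e_i, e_i, w_i),$$

or a team comprised of two altruistic agents, whose preferences are summarized by the utility function:

$$U_i^{\alpha}(e_i, e_j) = \pi_i(e_i, e_j, w_i) + \alpha_i\, \pi_j(e_j, e_i, w_j),$$

for $\alpha_i \in [0, 1]$ and $i, j \in \{1, 2\}$, $i \neq j$. Both specifications take the standard selfish preferences as a special case ($\kappa_i = \alpha_i = 0$), and this will allow comparisons between the results presented below and the benchmark moral hazard problem.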

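As pointed out in [20,22], this specification of preferences for altruistic agents gives rise to a behavioral equivalence between homo moralis preferences and altruism for $\kappa_i = \alpha_i$ in many classes of games. With that in mind, I make the following assumption for the rest of the exposition.

Assumption 1. $\kappa_1 = \kappa_2 = \alpha_1 = \alpha_2 \equiv \theta \in [0, 1]$.

Thus, the agents' utility functions simplify to:

$$U_i^{\kappa}(e_i, e_j) = (1 - \theta)\, \pi_i(e_i, e_j, w_i) + \theta\, \pi_i(e_i, e_i, w_i), \qquad U_i^{\alpha}(e_i, e_j) = \pi_i(e_i, e_j, w_i) + \theta\, \pi_j(e_j, e_i, w_j).$$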

The relationship among the three parties unfolds as follows. First, the principal offers each agent a contract $w_i$, which can be either accepted or rejected. If at least one agent rejects the contract, the game ends, and every party receives his/her own reservation utility. If both agents accept the principal's offers, they play a normal form game¹⁰: both must simultaneously and independently choose an effort level, after which revenues are realized according to the probability distribution given by the production technology above. Payments are made according to the schedules proposed by the firm, and the agents' payoffs in the normal form game are given by their expected utilities with regard to received wages, efforts and preferences. While each agent's effort choice is private information, revenues and wages are publicly observable. It is also assumed that the agents' preferences are common knowledge¹¹.
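The material payoff and the two utility specifications of this section can be sketched in a few lines of Python. This is a minimal illustration, not the author's code: the function names, the choice of log utility $u = \ln$ (strictly increasing and strictly concave, as required) and the parameter values in the checks are assumptions made here.

```python
import math


def material_payoff(e_i, e_j, w, p, c, u=math.log):
    """pi_i(e_i, e_j, w): expected utility from w = (w_H, w_L) minus effort cost.

    p = (p_0, p_1, p_2) gives the probability of high revenue for total
    effort 0, 1 or 2; log utility is an illustrative assumption.
    """
    w_high, w_low = w
    prob_high = p[e_i + e_j]
    return prob_high * u(w_high) + (1 - prob_high) * u(w_low) - c * e_i


def homo_moralis_utility(e_i, e_j, w, p, c, theta):
    """(1 - theta) * pi_i(e_i, e_j) + theta * pi_i(e_i, e_i).

    The moral term evaluates i's effort as if the partner chose the same effort.
    """
    return ((1 - theta) * material_payoff(e_i, e_j, w, p, c)
            + theta * material_payoff(e_i, e_i, w, p, c))


def altruistic_utility(e_i, e_j, w_i, w_j, p, c, theta):
    """pi_i(e_i, e_j, w_i) + theta * pi_j(e_j, e_i, w_j)."""
    return (material_payoff(e_i, e_j, w_i, p, c)
            + theta * material_payoff(e_j, e_i, w_j, p, c))
```

For symmetric efforts, `homo_moralis_utility(1, 1, ...)` coincides with the material payoff for every $\theta$, which is the property exploited in the participation constraint of Section 3.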

3. Studying the Benchmark: The Contractible Effort Case

As a starting point, I derive the optimal contract assuming efforts are observable and contractible by the principal, to serve as a benchmark for later results. In what follows, I assume that each agent possesses an outside option that gives him/her utility $\bar{u} \geq 0$ if he/she does not accept the principal's contract offer. Therefore, agent $i$ is willing to participate in the proposed relationship iff:

$$U_i(e_i, e_j) \geq \bar{u},$$

where $U_i$ denotes agent $i$'s utility under the relevant class of preferences.

As discussed in the previous section, standard selfish preferences are a particular case of both homo moralis and altruistic preferences, and for ease of exposition, I begin this and the next section by analyzing the optimal contract for that instance. Thus, under contractible efforts, the standard Borch rule:

$$\frac{1}{u'(w_i^H)} = \frac{1}{u'(w_i^L)} = \lambda_i,$$

where $\lambda_i$ is the multiplier on agent $i$'s participation constraint, gives:

$$u'(w_i^H) = u'(w_i^L),$$

which, by the strict concavity of $u$ and the binding participation constraint, implies

$$w_i^H = w_i^L = u^{-1}\left(\bar{u} + c\, e_i\right)$$

for $i \in \{1, 2\}$. The intuition here is the same as in the classical moral hazard problem with one principal and one agent: if effort is contractible, the principal optimally offers a constant wage schedule remunerating the agent according to his/her reservation utility and the cost of the principal's desired level of effort.

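When the principal faces a team of altruistic agents, he/she solves:

$$\max_{w_1, w_2}\; p_{e_1+e_2}\left(R_H - w_1^H - w_2^H\right) + \left(1 - p_{e_1+e_2}\right)\left(R_L - w_1^L - w_2^L\right) \quad \text{s.t.} \quad \pi_i(e_i, e_j, w_i) + \theta\, \pi_j(e_j, e_i, w_j) \geq \bar{u}, \quad i \in \{1, 2\}.$$

An interior solution is characterized by the KKT first-order conditions (after dividing by the corresponding probabilities):

$$-1 + \left(\lambda_i + \theta \lambda_j\right) u'(w_i^s) = 0, \qquad s \in \{H, L\},\ i, j \in \{1, 2\},\ i \neq j,$$

so the Borch rule becomes¹²:

$$\frac{1}{u'(w_i^H)} = \frac{1}{u'(w_i^L)} = \lambda_i + \theta \lambda_j$$

for any choices of effort $e_i$ and $e_j$, $i \neq j$. Therefore, if agents are altruistic, the optimal contract under verifiable efforts proposes a constant wage schedule, just as in the benchmark with selfish preferences, given by:

$$w_i^H = w_i^L = u^{-1}\left(\frac{\bar{u}}{1 + \theta} + c\, e_i\right),$$

which is well defined for all $\theta \in [0, 1]$. It is easy to see that for $\theta = 0$, this is exactly the same expression as for the optimal contract under verifiable efforts in the benchmark case, while for any positive degree of altruism (and $\bar{u} > 0$) the wage is lower than it would be for selfish agents. Intuitively, each altruistic agent recognizes that, by participating in the relationship, he/she increases his/her partner's material payoff, and therefore the wage demanded for participation declines as the degree of altruism grows.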
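The first-best wages can be computed directly from the closed form above. The sketch below assumes log utility, so that $u^{-1} = \exp$; the function name and the parameter values are hypothetical. Setting $\theta = 0$ gives the selfish benchmark, which, with symmetric efforts, also applies to the moral team.

```python
import math


def first_best_wage(u_bar: float, c: float, effort: int, theta: float = 0.0) -> float:
    """Constant first-best wage u^{-1}(u_bar / (1 + theta) + c * effort).

    Assumes log utility, so u^{-1} = exp. theta = 0 is the selfish benchmark
    (and the moral team under symmetric efforts); theta > 0 is the altruistic team.
    """
    return math.exp(u_bar / (1 + theta) + c * effort)


if __name__ == "__main__":
    # Hypothetical parameters: outside option 0.5, effort cost 0.2, high effort.
    for theta in (0.0, 0.5, 1.0):
        print(theta, first_best_wage(0.5, 0.2, 1, theta))
```

With a zero outside option the altruistic discount vanishes, because $\bar{u}/(1+\theta) = 0$ for every $\theta$.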

Finally, consider the team with homo moralis preferences, in which agent $i$ is willing to participate in the proposed relationship iff:

$$(1 - \theta)\, \pi_i(e_i, e_j, w_i) + \theta\, \pi_i(e_i, e_i, w_i) \geq \bar{u},$$

i.e., iff

$$\left[(1-\theta)\, p_{e_i+e_j} + \theta\, p_{2e_i}\right] u(w_i^H) + \left[1 - (1-\theta)\, p_{e_i+e_j} - \theta\, p_{2e_i}\right] u(w_i^L) - c\, e_i \geq \bar{u}.$$

Some points are noteworthy. First, as mentioned before, in the case where $\theta = 0$, this participation constraint reduces to the usual individual rationality constraint in the benchmark moral hazard problem, since selfish preferences are a particular case of this framework. Second, for $\theta = 1$, $U_i^{\kappa}(e_i, e_j) = \pi_i(e_i, e_i, w_i)$. In this case, agent $i$'s choice of effort does not depend on agent $j$'s effort choice, and choosing $e_i$ becomes an individual decision problem. Third, if $e_i = e_j$, the participation constraint collapses into:

$$\pi_i(e_i, e_i, w_i) \geq \bar{u}.$$

Note here that the agents' degrees of morality are irrelevant, and the participation constraints are exactly the same as those that would be obtained in a symmetric equilibrium in the benchmark moral hazard problem: by imposing $e_i = e_j$, both expected material payoff terms are identical, and since the utility function is constructed as a convex combination of these terms, the expressions above are obtained.

By Assumption 1, every agent in each team is identical to his/her partner, since the only source of heterogeneity in the general formulation was the preferences. Therefore, I restrict attention to symmetric choices of effort, $e_1 = e_2 = e \in \{0, 1\}$, in the rest of the discussion¹³.

Proposition 1. Suppose Assumption 1 holds. Then, there exists $\bar{c} > 0$ such that, for all $c \in (0, \bar{c})$, the principal induces agents in the teams of moral or altruistic agents to exert high effort by means of a constant wage.

This result is not surprising: if efforts are contractible, the principal compensates the agents with a fixed transfer if they exert the desired level of effort and punishes them if they deviate. Furthermore, if the cost of exerting effort is small, then the amount the principal has to transfer to the agents in order to increase the chance of a high realization of revenues is also small and, thus, profitable to pay. Moreover, since I restrict attention to symmetric equilibria, the degree of morality plays no role in a team of homo moralis agents: the compensation schedule and effort choices are exactly the same as those obtained in the benchmark problem.

Now, I can focus on the central question of the paper: given the optimal contracts that induce the desired level of effort, which team should the principal hire?

Proposition 2. Suppose Assumption 1 holds, efforts are verifiable, and the principal wants both agents to exert high effort. For any $\theta \in (0, 1]$, the principal prefers hiring the team of altruistic agents over the team of selfish or moral agents.

Intuitively, in situations where someone fails to do the right thing, a moral agent derives part of his/her utility from contemplating what would happen if everyone did the right thing. If both agents do in fact exert high effort, this contemplation adds no utility beyond the material utility the agents obtain. By contrast, for altruistic agents, any choice but high effort decreases the material payoffs of both agents and, consequently, both components of each altruistic employee's utility. Therefore, intrinsic motivation is larger for altruistic agents, and a team comprised of such employees is less costly for the principal.

4. Moving to the Second Best: Non-Contractible Efforts

Throughout the rest of the exposition, I focus on contracts that induce both agents to participate in the relationship and also to exert the high level of effort ($e_1 = e_2 = 1$).

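As a benchmark, focus first on standard selfish preferences. If efforts are non-contractible and the principal wishes to induce both agents to exert effort, he/she must solve, for $i, j \in \{1, 2\}$, $i \neq j$,

$$\begin{aligned}
\max_{w_1, w_2}\quad & p_2\left(R_H - w_1^H - w_2^H\right) + (1 - p_2)\left(R_L - w_1^L - w_2^L\right)\\
\text{s.t.}\quad & \pi_i(1, 1, w_i) \geq \bar{u} \quad (\mathrm{IR}_i)\\
& \pi_i(1, 1, w_i) \geq \pi_i(0, 1, w_i) \quad (\mathrm{IC}_i)
\end{aligned}$$

Manipulating the incentive compatibility constraint yields:

$$u(w_i^H) - u(w_i^L) \geq \frac{c}{p_2 - p_1}.$$

By assumption, $p_2 - p_1 > 0$ and $c > 0$. Thus, the incentive compatibility constraint implies a monotonicity constraint on the wages following a good and a bad realization of output, since $u$ is assumed to be strictly increasing. Standard arguments show that both the incentive compatibility and the individual rationality constraints must bind at the optimum, so that the solution to the principal's problem is a contract $w = (w^H, w^L)$ such that:

$$p_2\, u(w^H) + (1 - p_2)\, u(w^L) - c = \bar{u}, \qquad u(w^H) - u(w^L) = \frac{c}{p_2 - p_1}.$$

Given the incentive compatibility constraint, it is clear that $w^H > w^L$.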
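Solving the two binding constraints explicitly shows how the spread of the selfish contract depends on the technology. The short derivation below uses the shorthand $u_H = u(w^H)$ and $u_L = u(w^L)$, introduced here only for brevity, with $p_1 < p_2$ the probabilities of a high revenue when total effort is one and two:

```latex
\begin{aligned}
u_H - u_L &= \frac{c}{p_2 - p_1} && \text{(binding IC)}\\
u_L + p_2\,(u_H - u_L) - c &= \bar{u} && \text{(binding IR, rearranged)}\\
\Longrightarrow\quad u_L &= \bar{u} + c - \frac{p_2\, c}{p_2 - p_1} = \bar{u} - \frac{p_1\, c}{p_2 - p_1},\\
u_H &= u_L + \frac{c}{p_2 - p_1} = \bar{u} + \frac{(1 - p_1)\, c}{p_2 - p_1}.
\end{aligned}
```

As $p_2 - p_1$ shrinks, the utility spread $u_H - u_L$ grows and the risk-averse agents bear more risk, which is the risk-sharing versus incentive-provision trade-off mentioned in the introduction.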

Of course, if the principal wished to induce the agents not to exert effort, a constant wage schedule would be optimal. Comparing the principal's profits when agents exert effort and when they shirk shows that the former is preferred by the employer for any sufficiently small cost of effort $c$.

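Under altruistic preferences for the agents, the principal's problem is:

$$\begin{aligned}
\max_{w_1, w_2}\quad & p_2\left(R_H - w_1^H - w_2^H\right) + (1 - p_2)\left(R_L - w_1^L - w_2^L\right)\\
\text{s.t.}\quad & \pi_i(1, 1, w_i) + \theta\, \pi_j(1, 1, w_j) \geq \bar{u} \quad (\mathrm{IR}_i)\\
& \pi_i(1, 1, w_i) + \theta\, \pi_j(1, 1, w_j) \geq \pi_i(0, 1, w_i) + \theta\, \pi_j(1, 0, w_j) \quad (\mathrm{IC}_i)
\end{aligned}$$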

For a common contract $w_1 = w_2 = w$, rewrite the incentive compatibility constraint as:

$$u(w^H) - u(w^L) \geq \frac{c}{(1 + \theta)(p_2 - p_1)},$$

and notice that the right-hand side is strictly decreasing in the degree of altruism $\theta$. The intuition behind this is the trade-off between explicit and intrinsic incentives. Indeed, as the agent cares more about his/her teammate's material payoff relative to his/her own, the intrinsic incentive derived from an increase in the probability of a high realization of output (and the consequent increase in the expected material benefit of his/her partner) grows, so that fewer explicit incentives from a high-powered contract are needed to induce the agent to exert the high level of effort.
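The rewritten altruistic constraint follows from expanding both sides for a common contract $w = (w^H, w^L)$. A sketch, with $u_H = u(w^H)$ and $u_L = u(w^L)$ as shorthand and agent $j$ exerting effort in both cases:

```latex
\begin{aligned}
U_i^{\alpha}(1,1) - U_i^{\alpha}(0,1)
  &= \bigl[\pi_i(1,1,w) - \pi_i(0,1,w)\bigr] + \theta\,\bigl[\pi_j(1,1,w) - \pi_j(1,0,w)\bigr]\\
  &= \bigl[(p_2 - p_1)(u_H - u_L) - c\bigr] + \theta\,(p_2 - p_1)(u_H - u_L)\\
  &= (1 + \theta)(p_2 - p_1)(u_H - u_L) - c \;\geq\; 0 .
\end{aligned}
```

The cost $c$ enters only once because agent $i$'s deviation leaves the partner's effort, and hence the partner's effort cost, unchanged; the partner is affected only through the lower probability of a high revenue.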

The proposition below characterizes the optimal contract¹⁴.

Proposition 3. Suppose Assumption 1 holds. There exists $\bar{c} > 0$ such that, for all $c \in (0, \bar{c})$, it is optimal for the principal to induce both altruistic agents to exert effort, $e_1 = e_2 = 1$, by means of a contract $w^{\alpha} = (w^{\alpha H}, w^{\alpha L})$ such that:

$$(1 + \theta)\left[p_2\, u(w^{\alpha H}) + (1 - p_2)\, u(w^{\alpha L}) - c\right] = \bar{u}, \qquad u(w^{\alpha H}) - u(w^{\alpha L}) = \frac{c}{(1 + \theta)(p_2 - p_1)} \leq \frac{c}{p_2 - p_1},$$

with strict inequality for any $\theta \in (0, 1]$.

Close inspection of the incentive compatibility constraint shows that any contract that would induce a selfish agent to exert high effort would also induce an altruistic employee to do the same. Furthermore, for any given contract, an increase in $\theta$ would increase the utility of each agent. Hence, the principal can profit by reducing both wages¹⁵. This argument is formally stated below.

Corollary 1. Suppose Assumption 1 holds. Then, the principal’s expected profits are strictly increasing in θ.

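Last, I consider homo moralis preferences. The principal must choose wage vectors $w_1, w_2$ to solve:

$$\begin{aligned}
\max_{w_1, w_2}\quad & p_2\left(R_H - w_1^H - w_2^H\right) + (1 - p_2)\left(R_L - w_1^L - w_2^L\right)\\
\text{s.t.}\quad & (1 - \theta)\, \pi_i(1, 1, w_i) + \theta\, \pi_i(1, 1, w_i) \geq \bar{u} \quad (\mathrm{IR}_i)\\
& (1 - \theta)\, \pi_i(1, 1, w_i) + \theta\, \pi_i(1, 1, w_i) \geq (1 - \theta)\, \pi_i(0, 1, w_i) + \theta\, \pi_i(0, 0, w_i) \quad (\mathrm{IC}_i)
\end{aligned}$$

for $i, j \in \{1, 2\}$, $i \neq j$. Note that the individual rationality constraint can be rewritten in the simpler form:

$$\pi_i(1, 1, w_i) \geq \bar{u},$$

since, in equilibrium, the principal's offer induces the symmetric effort choice $e_1 = e_2 = 1$.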

The incentive compatibility constraint also simplifies to:

$$\pi_i(1, 1, w_i) \geq (1 - \theta)\, \pi_i(0, 1, w_i) + \theta\, \pi_i(0, 0, w_i).$$

The right-hand side of this inequality highlights an interesting fact: a positive degree of morality implies that the agent internalizes the cost of choosing a low effort by evaluating what would happen if the other agent were to make the same decision. This is very different in nature from how an altruistic agent evaluates a deviation: while the latter considers only the effects of his/her own deviation on his/her own material payoff and on his/her partner's, the former considers the effect of the same deviation being made by his/her partner on his/her own payoff. Moreover, given the assumptions above, further manipulation of the incentive compatibility constraint yields:

$$u(w_i^H) - u(w_i^L) \geq \frac{c}{(p_2 - p_1) + \theta\,(p_1 - p_0)},$$

where $p_2 - p_1 > 0$ and $p_1 - p_0 > 0$. Because $u$ is strictly increasing, the incentive compatibility constraint for moral agents also implies a monotonicity condition on the optimal compensation schedules offered by the principal, even when the agents display the highest degree of morality. This last remark implies that the intrinsic incentives of the most moral agent are not large enough to remove the need to provide him/her with explicit incentives to exert the high level of effort.
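The denominator in the moral agents' constraint can be obtained by collecting terms. A sketch, writing $\pi_i(e_i, e_j, w) = u_L + p_{e_i+e_j}(u_H - u_L) - c\,e_i$ with the shorthand $u_H = u(w^H)$, $u_L = u(w^L)$ introduced here:

```latex
\begin{aligned}
&\pi_i(1,1,w) - (1-\theta)\,\pi_i(0,1,w) - \theta\,\pi_i(0,0,w)\\
&\quad= \bigl[p_2 - (1-\theta)\,p_1 - \theta\,p_0\bigr](u_H - u_L) - c\\
&\quad= \bigl[(p_2 - p_1) + \theta\,(p_1 - p_0)\bigr](u_H - u_L) - c \;\geq\; 0 .
\end{aligned}
```

The $u_L$ terms cancel because the weights $1$, $1-\theta$ and $\theta$ satisfy $1 - (1-\theta) - \theta = 0$. Setting $\theta = 0$ recovers the selfish denominator $p_2 - p_1$, while $\theta = 1$ gives $p_2 - p_0$, the purely Kantian case.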

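Proposition 4. Suppose Assumption 1 holds. There exists $\bar{c} > 0$ such that, for all $c \in (0, \bar{c})$, it is optimal for the principal to induce both moral agents to exert effort, $e_1 = e_2 = 1$, by means of a contract $w^{\kappa} = (w^{\kappa H}, w^{\kappa L})$ such that:

$$p_2\, u(w^{\kappa H}) + (1 - p_2)\, u(w^{\kappa L}) - c = \bar{u}, \qquad u(w^{\kappa H}) - u(w^{\kappa L}) = \frac{c}{(p_2 - p_1) + \theta\,(p_1 - p_0)} \leq \frac{c}{p_2 - p_1},$$

with strict inequality for $\theta \in (0, 1]$.

The intuition behind the monotonicity constraint is the same as in the benchmark model: if wages following a low realization of revenues were larger than their counterparts after a good realization, then agents would prefer to exert low effort in order to receive this higher compensation and save on the cost of exerting effort.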

The novelty in the results relates to how the compensation schedules vary with the degree of morality $\theta$. Keeping in mind that $p_1 - p_0 > 0$, one can see that as $\theta$ increases, the right-hand side of the incentive compatibility constraint becomes ever smaller, albeit positive. This implies that the gap between wages following good and bad realizations of revenues must decrease, since the incentive compatibility constraint binds, but monotonicity still holds. Intuitively, the principal can reduce the compensation after high realizations of revenues given to an agent who is very concerned about doing the right thing. However, at the same time, he/she must increase wages after bad outcomes in order to satisfy the participation constraint.

Because of this diminishing wage gap, intuition would suggest that the first-best result is obtained for a sufficiently high degree of morality. However, this is not the case. To see this, take $\theta = 1$, where agent $i$'s preferences are purely Kantian, and thus his/her utility is completely characterized by the expected material payoff $\pi_i(e_i, e_i, w_i)$. Although the participation constraint does not vary with the agent's degree of morality¹⁶, the same is not true for the incentive compatibility constraint. Now, when considering the pros and cons of deviating in terms of effort choice, agent $i$ internalizes what would happen if agent $j$ were to do the same. Specifically, this entails a reduction in the probability of the good revenue being realized from $p_2$ to $p_0$, instead of the reduction to $p_1$ in the selfish term. This internalization is reflected in the incentive compatibility constraint, which becomes:

$$u(w_i^H) - u(w_i^L) \geq \frac{c}{p_2 - p_0}.$$

The denominator on the right-hand side is exactly the difference in the probabilities discussed above. Taking $\theta = 1$ makes the incentive compatibility constraint for a team of moral agents as easy to satisfy as possible, but it still binds, thus pushing the optimal contract away from the first-best one (a constant wage schedule).

Given this behavior of wage schedules with respect to the degree of morality, a natural question to be asked is whether the principal is better off with highly moral agents or not. The answer is unconditional and presented in the following result.

Corollary 2. The principal’s expected profit is strictly increasing in θ.

Corollary 2 contrasts with the contractible effort case, where the principal's profits were identical when hiring a team of selfish agents or a team of moral agents, for any degree of morality the latter might display. Mathematically, the result is a consequence of the individual rationality constraints being identical in both cases, while the incentive compatibility constraint has a smaller right-hand side under moral agents than under selfish ones. Intuitively, the principal exploits the agents' morality, as he/she did with altruistic employees, to induce high effort by means of less high-powered incentives, while inducing participation with a slightly increased payment after a bad realization of output. Thus, the principal's expected savings on wages after a good realization, obtained by hiring a more moral agent, offset the expected increase in payments after low revenues.
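The monotonicity in Corollaries 1 and 2 can be checked numerically. The sketch below solves the binding participation and incentive constraints for a common contract and reports the expected wage per agent. Log utility, the function names and all parameter values are illustrative assumptions, not taken from the paper. Because both agents exert high effort in every case, expected revenue is fixed, so a lower expected wage bill means a higher expected profit.

```python
import math


def second_best(p, c, u_bar, theta, team):
    """Second-best common contract (w_H, w_L) inducing e_1 = e_2 = 1.

    Solves the binding IR and IC constraints under log utility
    (u = ln, u^{-1} = exp), an illustrative assumption.
    p = (p_0, p_1, p_2); team is "selfish", "altruistic" or "moral".
    """
    p0, p1, p2 = p
    if team == "selfish":
        level, denom = u_bar, p2 - p1
    elif team == "altruistic":
        # Altruists' IR: (1 + theta) * pi >= u_bar; IC denominator (1 + theta)(p2 - p1).
        level, denom = u_bar / (1 + theta), (1 + theta) * (p2 - p1)
    elif team == "moral":
        # Moral agents' IR: pi >= u_bar; IC denominator (p2 - p1) + theta * (p1 - p0).
        level, denom = u_bar, (p2 - p1) + theta * (p1 - p0)
    else:
        raise ValueError(f"unknown team: {team!r}")
    gap = c / denom                  # binding IC: u(w_H) - u(w_L) = gap
    u_low = level + c - p2 * gap     # binding IR: p2*u_H + (1 - p2)*u_L - c = level
    return math.exp(u_low + gap), math.exp(u_low)


def expected_wage(p, contract):
    """Expected payment to one agent when both exert effort (success prob. p_2)."""
    w_high, w_low = contract
    return p[2] * w_high + (1 - p[2]) * w_low


if __name__ == "__main__":
    p, c, u_bar = (0.1, 0.3, 0.8), 0.2, 0.5  # hypothetical parameters
    for theta in (0.0, 0.5, 1.0):
        for team in ("altruistic", "moral"):
            cost = expected_wage(p, second_best(p, c, u_bar, theta, team))
            print(f"theta={theta:.1f} {team:>10}: expected wage {cost:.4f}")
```

At $\theta = 1$ the moral contract still has $u(w^H) - u(w^L) = c/(p_2 - p_0) > 0$, so the sketch also reproduces the observation that even purely Kantian agents need explicit incentives.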

One remark is in order here. Because of the assumption that $p_1 > p_0$ and the monotonicity condition implied by the incentive compatibility constraint for each class of preferences, it is the case that $\pi_i(0, 1, w^*) > \pi_i(0, 0, w^*)$, where $w^*$ is the optimal contract offered by the principal. Thus, using the constraints again, I have that $\pi_i(1, 1, w^*) \geq \pi_i(0, 0, w^*)$, so the agents have no incentive to jointly deviate to shirking. The same is also true for altruistic agents.
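For moral agents, the chain of inequalities behind this remark can be written out as follows (a sketch, using $u_H - u_L > 0$ at the optimal contract $w^*$, with the shorthand $u_H = u(w^{*H})$, $u_L = u(w^{*L})$, and $\theta \in [0, 1]$):

```latex
\begin{aligned}
\pi_i(0,1,w^*) - \pi_i(0,0,w^*) &= (p_1 - p_0)\,(u_H - u_L) > 0,\\
\pi_i(1,1,w^*) &\geq (1-\theta)\,\pi_i(0,1,w^*) + \theta\,\pi_i(0,0,w^*) && \text{(IC)}\\
&\geq (1-\theta)\,\pi_i(0,0,w^*) + \theta\,\pi_i(0,0,w^*) = \pi_i(0,0,w^*).
\end{aligned}
```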

So far, I have shown that the principal can attain higher profits by exploiting the agents' morality or altruism, reducing the high-powered explicit incentives in the optimal contract in such a way that the participation and incentive constraints are still satisfied. Therefore, from the employer's perspective, knowing which class of preferences demands the least amount of explicit incentives is crucial. Lemma 1 shows that the answer to this question depends on the stochastic production technology.

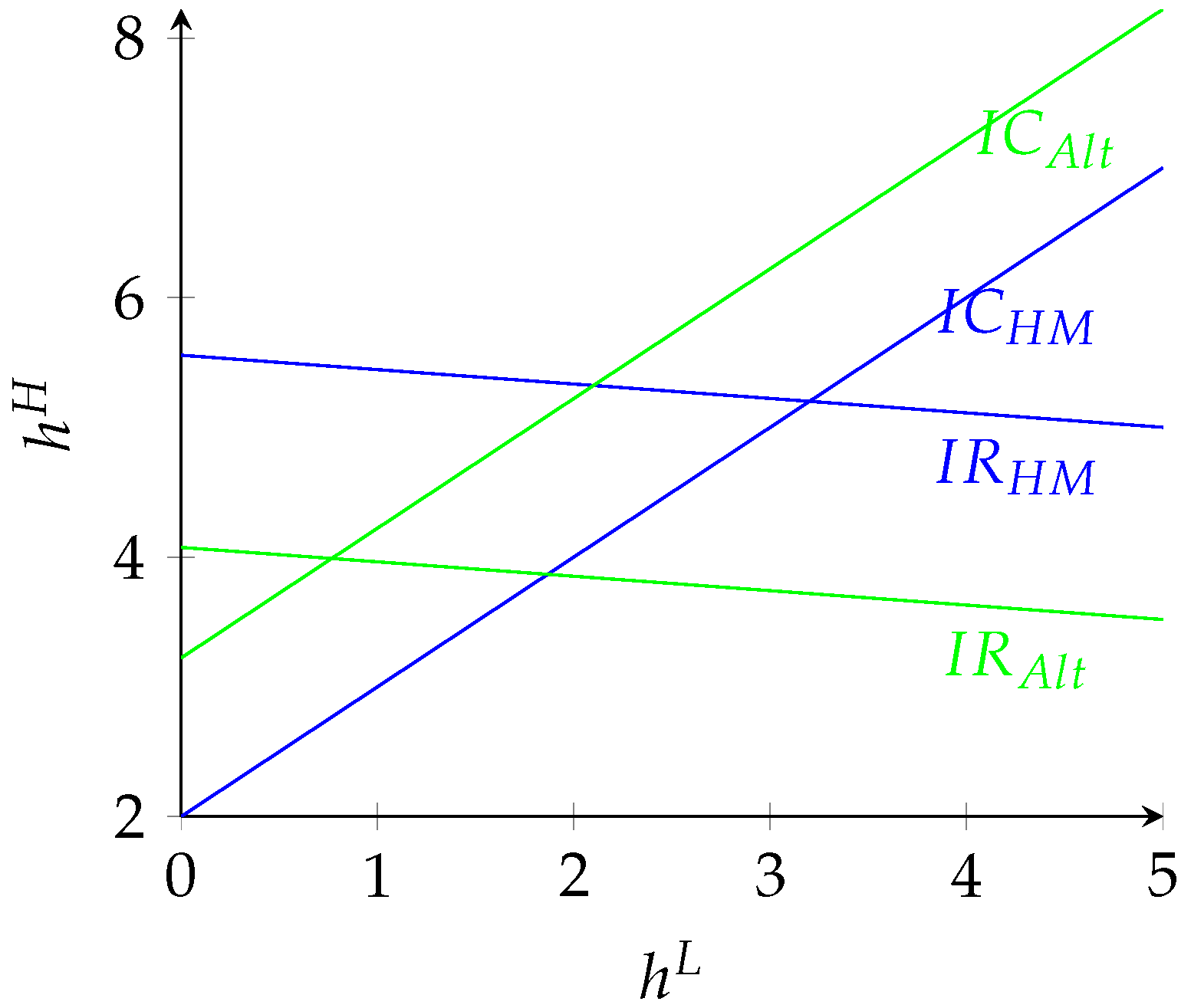

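Lemma 1. Under Assumption 1, $\left[u(w^{\kappa H}) - u(w^{\kappa L})\right] - \left[u(w^{\alpha H}) - u(w^{\alpha L})\right]$ has the same sign as $\theta\left[(p_2 - p_1) - (p_1 - p_0)\right]$.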

In other words, if the stochastic technology presents increasing returns to aggregate efforts, the optimal contract under homo moralis preferences is (weakly) more high-powered than its counterpart under altruism for all $\theta \in [0, 1]$: any contract inducing moral agents to exert high effort would do the same for altruistic employees. The converse is true if the technology has decreasing returns to efforts. This can be seen in Figure 1. The middle (green) line represents the incentive compatibility constraint for altruistic agents, whose format is not affected by the production technology. The top (red) and bottom (blue) lines are graphic representations of the incentive compatibility constraint for moral agents, one for a technology with increasing returns to efforts and the other for a technology with decreasing returns¹⁷.
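Because the incentive constraints bind, the power of each contract, measured by the required utility gap, is the cost $c$ divided by a team-specific denominator taken from the two incentive constraints derived earlier. The short calculation below compares those denominators; the labels $D^{\alpha}$ and $D^{\kappa}$ are introduced here only for this sketch:

```latex
\begin{aligned}
D^{\alpha} &= (1+\theta)(p_2 - p_1), \qquad D^{\kappa} = (p_2 - p_1) + \theta\,(p_1 - p_0),\\
D^{\alpha} - D^{\kappa} &= \theta\,\bigl[(p_2 - p_1) - (p_1 - p_0)\bigr] = \theta\,(p_2 - 2p_1 + p_0),\\
\frac{c}{D^{\kappa}} - \frac{c}{D^{\alpha}} &= \frac{c\,(D^{\alpha} - D^{\kappa})}{D^{\alpha} D^{\kappa}} .
\end{aligned}
```

Since $c$, $D^{\alpha}$ and $D^{\kappa}$ are positive, the moral team requires the larger utility gap exactly when $\theta > 0$ and $p_2 - p_1 > p_1 - p_0$, and the two gaps coincide under constant returns or when $\theta = 0$.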

Given the result above, one would expect the principal's expected payoff to be uniformly higher if the agents are altruistic rather than moral when the production technology presents increasing returns to efforts, while the opposite would be true under decreasing returns. The flaw in this logic is that it ignores the effects of the binding individual rationality constraints, which, under contractible efforts, made the team of altruistic agents always the least expensive to hire. In particular, recall that for altruistic agents the outside option $\bar{u}$ is divided by $1 + \theta$ in the participation constraint, a factor that is not present under selfish and moral preferences. This implies that the payments needed to secure the participation of altruistic agents should also be smaller than those needed for moral agents¹⁸. Nevertheless, the principal has clear preferences over the composition of the team, and the result below states precisely when one team is preferred over the other.

Theorem 1. Assume the principal offers contracts $w^{\kappa}$ and $w^{\alpha}$ to homo moralis and altruistic agents, respectively, inducing them to exert the high level of effort. Furthermore, assume Assumption 1 holds. Then, if the stochastic production technology exhibits:

1. increasing returns to efforts ($p_2 - p_1 > p_1 - p_0$), the principal is better off hiring a team of altruistic agents over a team of moral agents;

2. decreasing returns to efforts ($p_2 - p_1 < p_1 - p_0$) and

   (a) the outside option is zero ($\bar{u} = 0$), the principal prefers a team of moral agents; or

   (b) the outside option is positive and the degree of morality is sufficiently low ($\bar{u} > 0$, $\theta$ close to zero), the principal prefers a team of moral agents only if the probability of success when both agents exert effort, $p_2$, is sufficiently high.

Under increasing returns to efforts, an altruistic team is less expensive for the principal for two reasons. First, the wage that must be paid after a bad realization of output is smaller than its counterparts under selfish or moral preferences, a consequence of the fact that each altruistic agent's concern for the payoff of his/her partner slackens the participation constraint. Second, such a concern also slackens the incentive compatibility constraint in this case, because exerting effort drastically increases the probability of success, thus providing implicit incentives for the altruistic worker to exert effort and requiring a less high-powered contract from the employer.

Such a difference in the intrinsic incentives to exert effort disappears when the production technology has constant returns, so that the power of the contract is the same for both teams. However, it is still the case that the principal exploits the fact that altruistic agents derive utility from each other's material payoff and can thus pay them less.

The third case, with decreasing returns to efforts, is the most interesting, because the principal's preference results from the net effect of two opposing forces. While it is still true that the altruistic agents' participation constraint is easier to satisfy, Lemma 1 states that the power of the contract required by moral agents is now smaller than the one required by altruistic agents. In the range where this reduction is the most drastic, the principal prefers the team of moral agents to the altruistic one. The first condition for this to happen is that the probability of success when both agents exert effort is sufficiently high, as can be seen from the incentive compatibility constraints. The second condition is that either the outside option for the agents is zero or, if it is positive, the degree of morality or altruism is close to zero. In both cases, the participation constraints for moral and altruistic agents become arbitrarily close (identical if $\bar{u} = 0$), so that the exploitability of altruistic preferences described in the preceding paragraph becomes small, and the principal profits by hiring the agents demanding the least high-powered contracts: the moral agents in this case.
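To see the two opposing forces of Theorem 1 at work, the sketch below compares the expected wage bill of each team under the binding constraints. It assumes log utility, under which the wage bill per agent has the closed form $e^{\ell + c}\left[p_2 e^{(1-p_2)g} + (1-p_2)e^{-p_2 g}\right]$, where $g$ is the utility gap required by the incentive constraint and $\ell$ the participation level; the function names and parameter values are hypothetical illustrations, not the paper's calibration.

```python
import math


def wage_bill(p, c, u_bar, theta, team):
    """Expected wage paid to one agent under the second-best contract.

    Under log utility (an illustrative assumption) the binding IR and IC give
    exp(level + c) * [p2 * exp((1 - p2) * g) + (1 - p2) * exp(-p2 * g)],
    where g is the required utility gap and level the IR right-hand side.
    """
    p0, p1, p2 = p
    if team == "altruistic":
        level, g = u_bar / (1 + theta), c / ((1 + theta) * (p2 - p1))
    elif team == "moral":
        level, g = u_bar, c / ((p2 - p1) + theta * (p1 - p0))
    else:
        raise ValueError(f"unknown team: {team!r}")
    return math.exp(level + c) * (p2 * math.exp((1 - p2) * g)
                                  + (1 - p2) * math.exp(-p2 * g))


def preferred_team(p, c, u_bar, theta):
    """Team with the lower expected wage bill, hence the higher expected profit,
    since expected revenue is the same when both teams exert high effort."""
    moral = wage_bill(p, c, u_bar, theta, "moral")
    altruistic = wage_bill(p, c, u_bar, theta, "altruistic")
    if math.isclose(moral, altruistic):
        return "indifferent"
    return "moral" if moral < altruistic else "altruistic"


if __name__ == "__main__":
    c = 0.2  # hypothetical effort cost
    print(preferred_team((0.1, 0.3, 0.8), c, 0.5, 0.5))  # increasing returns
    print(preferred_team((0.1, 0.6, 0.8), c, 0.0, 0.5))  # decreasing returns, zero outside option
    print(preferred_team((0.1, 0.6, 0.8), c, 1.0, 0.9))  # decreasing returns, positive outside option, high theta
```

With these hypothetical values the three calls return "altruistic", "moral" and "altruistic", respectively. The last case mirrors the discussion of Figure 2c: with a positive outside option and a high degree of altruism, the participation discount outweighs the moral team's less high-powered contract even under decreasing returns.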

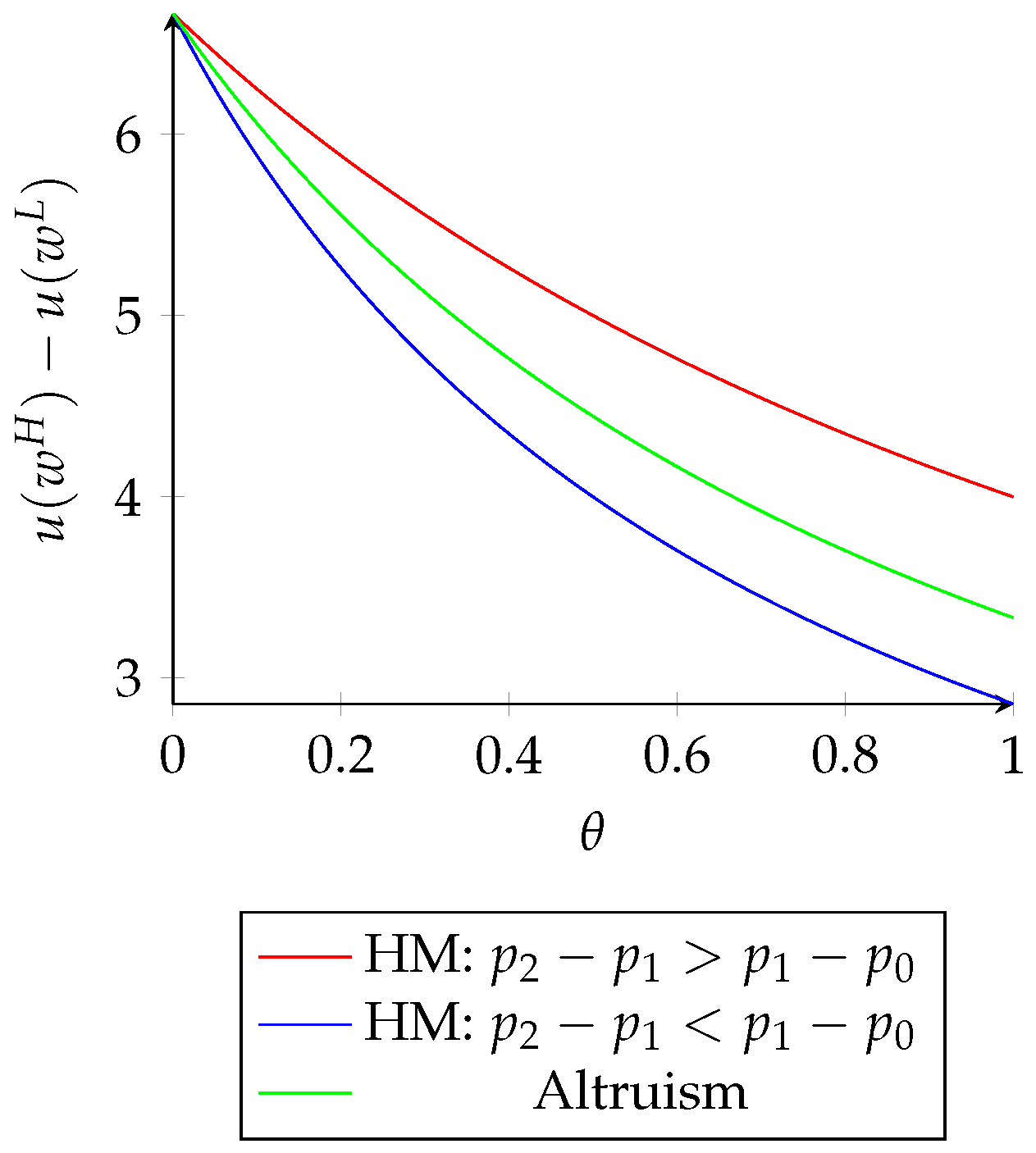

Figure 2a–c provides an example of Theorem 1 for ….

Figure 2a represents the case where the production technology exhibits increasing returns to efforts. With the exception of $\theta = 0$, where both teams are identical to a team of selfish agents, the principal's profit is higher with a team of altruistic agents than with a team of moral agents.

Figure 2b illustrates the case with decreasing returns to efforts, a zero outside option for the agents, and a high probability of success if both agents exert effort (namely, I set …). As Theorem 1 states, under these conditions, the principal's profit is higher with the moral team than with the altruistic team for all equal degrees of morality and altruism.

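Finally, Figure 2c plots the ratio between the principal's profits under the two teams for decreasing returns to efforts and a positive outside option ($\bar{u} > 0$). In contrast to the previous case, where $\bar{u} = 0$, the difference between the participation constraints of moral and altruistic agents makes it unprofitable for the employer to hire the moral team as $\theta$ becomes larger, since the decrease in the payment required for the altruistic agents' participation would, in expected terms, be sufficient to outweigh the savings related to the power of the contract. This is represented by the region of the figure in which the moral team yields lower profits than the altruistic team.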

Therefore, the principal's expected profits provide a rationale for trying to sort employees with respect to their preferences. If the production technology exhibits increasing returns to efforts, the principal's choice is straightforward: always employ altruistic agents. However, if this condition does not hold, employing moral individuals may lead to higher profits than employing either altruistic or purely selfish agents.