Abstract

A new model of strategic networking is developed and analyzed, where an agent's investment in links is nonspecific. The model comprises a large class of games which are both potential and super- or submodular games. We obtain comparative statics results for Nash equilibria with respect to investment costs for supermodular as well as submodular networking games. We also study supermodular games with potentials. We find that the set of potential maximizers forms a sublattice of the lattice of Nash equilibria and derive comparative statics results for the smallest and the largest potential maximizer. Finally, we provide a broad spectrum of applications from social interaction to industrial organization.

To network or not to network, that is the question.

1. Introduction

Models of strategic network formation typically assume that each agent selects his direct links to other agents in which to invest. Yet in practice, a person's networking efforts may not only establish or strengthen desirable links to specific agents, but also create or reinforce links to many if not all other individuals. Beneficial links may come along with detrimental ones. For example, being better connected and more accessible implies potentially more calls from phone banks, more “spam”, more encounters with annoying or hostile people.

To illustrate the latter, consider a population of four persons where each has two friends and one enemy. Each individual i has a binary choice, to network and choose si = 1 at cost 1.5 or not to network and choose si = 0 at zero cost. The intensity of a link between two persons i and j is si + sj. A person likes interacting with friends and dislikes interacting with enemies. Specifically, i enjoys the benefit +(si + sj) when j is a friend and −(si + sj) when j is an enemy. Friendship and enmity between persons are represented by the diagram

si = 0 to si = 1, the person enjoys added benefits +2 from the two friends, −1 from the enemy, and incurs the cost 1.5. Hence the net gain is −0.5. However, a person does not internalize the externalities of her networking effort when she plays her strictly dominant strategy. A switch from si = 0 to si = 1 would create extra benefits +2 for her friends and −1 for her enemy. Hence efficiency requires that everybody is networking.

si = 0 to si = 1, the person enjoys added benefits +2 from the two friends, −1 from the enemy, and incurs the cost 1.5. Hence the net gain is −0.5. However, a person does not internalize the externalities of her networking effort when she plays her strictly dominant strategy. A switch from si = 0 to si = 1 would create extra benefits +2 for her friends and −1 for her enemy. Hence efficiency requires that everybody is networking.

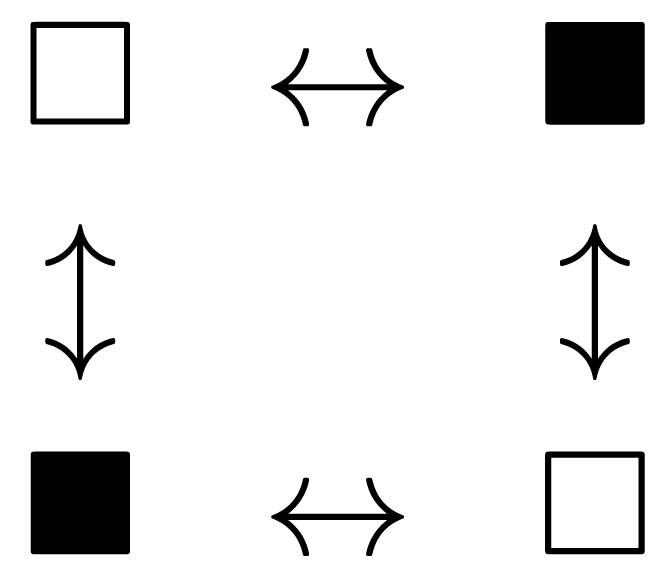
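The arithmetic of this four-person example can be checked by brute force. The sketch below is only illustrative: the friendship pattern is a hypothetical one (enemy pairs {0, 2} and {1, 3}, everyone else friends) chosen to match "two friends and one enemy", since the diagram itself is not reproduced in the text, and the function names are ours.

```python
from itertools import product

# Hypothetical relationship pattern (assumption): persons 0..3,
# mutual enemies are the pairs {0, 2} and {1, 3}; all other pairs are friends.
N = 4
ENEMY = {0: 2, 2: 0, 1: 3, 3: 1}
COST = 1.5

def payoff(i, s):
    """+(s_i + s_j) for each friend j, -(s_i + s_j) for the enemy, minus cost 1.5 * s_i."""
    total = 0.0
    for j in range(N):
        if j != i:
            sign = -1 if ENEMY[i] == j else 1
            total += sign * (s[i] + s[j])
    return total - COST * s[i]

def welfare(s):
    """Sum of all players' payoffs."""
    return sum(payoff(i, s) for i in range(N))

profiles = list(product((0, 1), repeat=N))

# Net gain from switching s_i = 0 to s_i = 1, for every player and every choice of the others.
gains = {
    payoff(i, s[:i] + (1,) + s[i + 1:]) - payoff(i, s[:i] + (0,) + s[i + 1:])
    for i in range(N) for s in profiles
}
best = max(profiles, key=welfare)
print(gains, best, welfare(best))
```

Under these assumptions the set of net gains contains the single value −0.5, so not networking is strictly dominant, while aggregate payoffs are largest when all four persons network.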

Nonspecific networking does not mean that an individual's networking effort affects everybody else. As a practical matter, networking may be possible between certain persons and not between others. We employ graphs to model restrictions on networking. Then formally, networking takes place within a given network or graph. Not only may different persons be affected differently by an individual's networking effort; but individuals may also differ in their networking efforts, even if the same range of effort levels is available to them. Both in traditional and in electronic interactions, some agents are much more active in networking than others and might be called “networkers”. Some might be considered designated networkers because they have higher benefits or lower costs from networking than others. To fix ideas, consider a population of four individuals. Their networking possibilities are described by a circular graph as follows where ↔ means that networking between the two persons is possible. ■ stands for a high cost person and □ stands for a low cost person or “natural networker”. Individuals are identical ex ante in all other respects.

If benefits and costs of networking are positive, one would expect low cost □-persons to network more (or at least not less) than high cost ■-persons. Indeed, this is the case for a wide range of model parameters. But it is not necessarily the case when low costs and high costs are very close: high cost individuals may be networking in equilibrium while low cost individuals are not. This intriguing result is driven by strategic substitutes in networking. In that case, if a □-person has two neighbors whose networking efforts are high, no effort may be the best response, and if a ■-person has two neighbors choosing zero effort, a significant effort may be the best response. For details, we refer to Example 2. A related yet different question is whether more networking occurs when, ceteris paribus, networking becomes less expensive in a society. The comparative statics in Section 5 addresses this question.

To recapitulate, we develop and analyze a new model of strategic network formation—or rather network utilization in many instances—where

an agent's effort or investment in links is nonspecific;

the intensity and impact of links can differ, possibly with a negative impact of certain links;

networking may take place within a given network (graph).

Our model holds much promise for several reasons:

A broad spectrum of applications.

A rich class of games which are both potential and supermodular games.

Possibility of comparative statics with respect to networking costs.

Possibility of stochastic stability analysis.

Possibility to explore networking within a “social structure”.

In the remainder of the section, we shall elaborate on these points.

Nonspecific networking games

Here we focus on nonspecific networking, meaning that an agent cannot select a specific subset of feasible links which he wants to establish or strengthen. Rather, each agent chooses an effort level or intensity of networking. In the simplest case, the agent faces a binary choice: to network or not to network. If an agent increases his networking effort, all direct links to other agents are strengthened to various degrees. We assume that benefits accrue only from direct links. The set of agents or players is finite. Each agent has a finite strategy set consisting of the networking levels to choose from. For any pair of agents, their networking levels determine the individual benefits which they obtain from interacting with each other. An agent derives an aggregate benefit from the pairwise interactions with all others. This aggregate benefit is a function of the chosen profile of networking levels. In addition, the agent incurs networking costs, which are a function of the agent's own networking level. The agent's payoff is his aggregate benefit minus his cost. The set of agents together with the individual strategy sets and payoff functions constitute a game in strategic form. Equilibrium means Nash equilibrium. 1

Instances of networking

Despite its apparent simplicity, our hitherto unexplored model of nonspecific networking covers a broad spectrum of applications. It allows for social networking where some persons are more attractive than others, and some even possess negative attraction. Attraction or repulsion can be mutual or not. Certain individuals can have greater advantages from networking or smaller costs of networking than others and, therefore, may be considered natural networkers. To the extent that benefits are positive, under-investment in links can occur in equilibrium. When one allows for the possibility that benefits from interactions with certain agents are negative, a player prefers not to have links and interactions with such “bad neighbors”. Therefore, agents may refrain from networking even when link formation is costless. But an agent cannot prevent bad neighbors from networking and, consequently, may suffer from their efforts. Thus, there can be over-investment in the sense that less investment would increase aggregate welfare. The above 4-player game among friends and enemies demonstrates that under-investment is a possibility as well. In Section 6, we shall present an example where under-investment by one group of agents and over-investment by a second group coexist in equilibrium. A person's social networking activities may consist in joining social or sports clubs. Our general framework encompasses such instances of social networking, like the example following Fact 1 in subsection 3.3. In addition to social interaction and networking, the model is applicable in economics, in particular in the context of industrial organization. We mention in Section 8 that the model encompasses specific cases of user network formation.

Potential and supermodular games

The model comprises a large class of games which are both potential and supermodular (strategic complements) games. Finite potential games and finite supermodular games have in common that a Nash equilibrium in pure strategies exists. The literature on games which share both properties is scarce. Dubey, Haimanko, and Zapechelnyuk [3] show that games of strategic substitutes or complements with aggregation are “pseudo-potential” games.2 As a consequence, they obtain existence of a Nash equilibrium and convergence to Nash equilibrium of certain deterministic best response processes. Brânzei, Mallozzi and Tijs [5] investigate the relationship between the class of potential games and the class of supermodular games. They essentially focus on two-person zero-sum games (and a special case of Cournot duopoly). Their main result is that two-player zero-sum supermodular games are potential games and conversely that two-player zero-sum potential games can be transformed into supermodular games. In our model, suitable assumptions on the benefits from pairwise interaction give rise to a novel class of games which are both potential and supermodular games. A different set of assumptions generates an equally rich family of networking games which have both a potential and the strategic substitutes property.

Potential maximization, if applicable, has several strong implications. First of all, the set of potential maximizers is a subset of the set of Nash equilibria. Hence potential maximization constitutes a refinement of Nash equilibrium in potential games.3 If in addition, the game is supermodular, the set of potential maximizers forms a sublattice of the lattice of Nash equilibria. Consequently, if the game is also a symmetric game, then it has at least one symmetric potential maximizer. Finally, again in the case of supermodular potential games, one obtains comparative statics results for the smallest and the largest potential maximizer.

Comparative statics

In Section 5, we obtain comparative statics results for Nash equilibria with respect to networking costs for either class of networking games, those with strategic complements and those with strategic substitutes. For networking games which are both potential and supermodular games, we obtain comparative statics results for the smallest and the largest potential maximizer.

Stochastic stability

If a finite strategic game and specifically a networking game is a potential game, then perturbed best response dynamics with logit trembles yield the maximizers of the potential as the stochastically stable states, as shown by Blume [7,8], Young [9], Baron et al. [10], among others. Hence in this case, all results for potential maximizers apply to stochastically stable states as well. Two qualifications are warranted. First, the coincidence of the set of potential maximizers and the set of stochastically stable states need not hold if the potential is not exact or updating is not asynchronous (as in the above papers), as Alós-Ferrer and Netzer (2010) have shown. Second, this is not to say that the study of stochastically stable states under logit perturbations has to be confined to games with exact potentials. See Alós-Ferrer and Netzer [11], Baron et al. [12], and Section 7 of Baron et al. [10].

Social structure

Nonspecific networking admits a differential impact of an agent's networking efforts on the strength of links to various other agents. In particular, undirected graphs serve as a descriptive tool throughout the paper to distinguish between pairs of agents which can form links among themselves and those pairs which cannot reach each other. Such a graph represents a “social structure” in the sense of Chwe [13]. Chwe investigates which social structures are conducive to coordination in a “local information game”. In contrast to Chwe's, our model falls under the rubric of “local interaction games”. Our concern is not whether people coordinate, but who networks and how much, e.g., whether natural networkers invest more in networking than others.

Related Work

The model of Bramoullé and Kranton [14] is similar to ours in many respects, with one important exception: Interaction is not pairwise but rather with the entire group of one's neighbors. For further details, see Remark (c) at the end of Section 4.

The model of Cabrales, Calvó-Armengol and Zenou [15] constitutes an instance of nonspecific networking, both with respect to network formation (socialization) and with respect to network utilization. In both respects, the model exhibits strategic complements and quadratic costs. The investments in networks (socialization) give rise to a weighted graph or network. Given the network, a productive investment, say a parent's time spent on homework with their child, not only affects their own child's scholarly achievement, but also the achievement of other children with whose parents the parent is linked via the network. Under certain conditions, the model has three Nash equilibria: an unstable one where nobody invests in networks and two stable ones with positive investments in networks, one with low levels of networks and production (resulting in under-investment relative to the efficient outcome) and one with high levels (resulting in over-investment). The model shares several traits with ours: Nash equilibrium, pairwise interactions, comparative statics, among others. Their model differs in that investment is in two dimensions, networks and production, payoffs are of a special functional form, and results obtain asymptotically, for replica games.

Galeotti and Merlino [16] adopt the network formation (socialization) part of the Cabrales et al. [15] model, with linear costs and link weight or strength replaced by link reliability or probability. Then the investments in networks yield a random graph. In the realized network, a worker with a “needless job offer” can pass on the offer to an adjacent job seeker. The authors find that investment in the network is high and the resulting networks are well connected when the job destruction rate is at intermediate levels, whereas investment is low and the emerging networks are not well connected when the job destruction rate is either low or high.

Goyal and Moraga-González [17] consider a finite number of quantity-setting firms. In the first stage of a three-stage game, costless specific (directed, earmarked) network formation occurs, with pairwise stability à la Jackson and Wolinsky as the equilibrium concept. At the second stage, each firm makes a costly investment in R&D which reduces its marginal cost of production in the third stage. There are nonspecific networking effects in that a firm's investment not only reduces its own marginal costs but also those of other firms, and more so the costs of its direct neighbors. If at the third stage the firms operate in independent markets, the complete network is stable and serves them best. If at the third stage all firms compete in the same market, then each faces a trade-off: To the extent it benefits from the marginal cost reduction efforts of its competitors, its own investment also reduces the marginal costs of the competitors. Hence, with more connectivity, firms invest less, which increases marginal costs and reduces equilibrium outputs. Therefore, while stable, the complete network is undesirable both in terms of industry profits and total surplus.

Outline

In Section 2, we introduce concepts which are of interest not only for networking games. We set the stage in Section 3, where we develop the general model and some of the main results about Nash equilibria, potentials, and potential maximizers. In Section 4, we examine the question of networkers and networking in a class of games with pairwise symmetry. Section 5 is devoted to comparative statics. In Section 6, we present two classes of games with linear benefits and costs. In Section 7, we elaborate on stochastic stability under logit perturbations. Section 8 contains conclusions and extensions.

2. Preliminaries

Here we collect definitions and results that are of interest beyond the investigation of nonspecific networking. Throughout, we consider finite games in strategic or normal form.

2.1. Lattices

Let X be a partially ordered set, with partial order ≥. That is, ≥ is a reflexive, transitive and antisymmetric binary relation on X. Antisymmetric means that for any x, y ∈ X, if x ≥ y and y ≥ x then x = y. Given elements x and z in X, denote by x ∨ z or sup{x, z} the least upper bound or join of x and z in X, provided it exists, and by x ∧ z or inf{x, z} the greatest lower bound or meet of x and z in X, provided it exists. A partially ordered set X that contains the join and the meet of each pair of its elements is called a lattice. A lattice in which each nonempty subset has a supremum and an infimum is complete. In particular, a finite lattice is complete. If Y is a subset of a lattice X and Y contains the join and the meet with respect to X of each pair of elements of Y, then Y is a sublattice of X.
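For finite subsets of ℝ^N with the componentwise order, join and meet are the componentwise maximum and minimum, and the sublattice property can be tested directly. The following is a minimal sketch with helper names of our own choosing; the sample sets are illustrative only.

```python
def join(x, z):
    """Least upper bound in the componentwise order."""
    return tuple(max(a, b) for a, b in zip(x, z))

def meet(x, z):
    """Greatest lower bound in the componentwise order."""
    return tuple(min(a, b) for a, b in zip(x, z))

def is_sublattice(Y):
    """True if Y contains the join and the meet (taken in the ambient product) of each pair."""
    Y = set(Y)
    return all(join(x, z) in Y and meet(x, z) in Y for x in Y for z in Y)

chain = {(0, 0), (1, 1)}
antichain = {(0, 1), (1, 0)}
# A lattice in its own induced order (the join of (1,0) and (0,1) within Y is (2,2)),
# but not a sublattice of {0,1,2}^2, where their join is (1,1).
Y = {(0, 0), (1, 0), (0, 1), (2, 2)}

print(is_sublattice(chain), is_sublattice(antichain), is_sublattice(Y))
```

The third set illustrates the distinction, used later in the text, between being a lattice under the induced order and being a sublattice of the ambient lattice.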

2.2. Supermodular Games

Let X and Y be two partially ordered sets and U : X × Y → ℝ.

Definition 1

The function U satisfies decreasing differences in (x,y) ∈ X×Y if for all pairs (x,y) ∈ X×Y and (x′,y′) ∈ X×Y, it is the case that x ≥ x′ and y ≥ y′ implies

U(x, y) − U(x′, y) ≤ U(x, y′) − U(x′, y′).

The function U satisfies increasing differences in (x,y) ∈ X×Y if for all pairs (x,y) ∈ X×Y and (x′,y′) ∈ X×Y, it is the case that x ≥ x′ and y ≥ y′ implies

U(x, y) − U(x′, y) ≥ U(x, y′) − U(x′, y′).
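On finite sets, Definition 1 can be verified exhaustively. The sketch below assumes the standard inequality U(x, y) − U(x′, y) ≥ U(x, y′) − U(x′, y′) for x ≥ x′, y ≥ y′ (reversed for decreasing differences); the function names and test functions are illustrative.

```python
from itertools import product

def has_increasing_differences(U, X, Y):
    """Check U(x,y) - U(x2,y) >= U(x,y2) - U(x2,y2) for all x >= x2 in X and y >= y2 in Y."""
    return all(
        U(x, y) - U(x2, y) >= U(x, y2) - U(x2, y2)
        for x, x2 in product(X, repeat=2) if x >= x2
        for y, y2 in product(Y, repeat=2) if y >= y2
    )

def has_decreasing_differences(U, X, Y):
    """U has decreasing differences exactly when -U has increasing differences."""
    return has_increasing_differences(lambda x, y: -U(x, y), X, Y)

X = Y = range(3)
print(has_increasing_differences(lambda x, y: x * y, X, Y))    # product of levels
print(has_decreasing_differences(lambda x, y: -x * y, X, Y))   # negative product
print(has_increasing_differences(lambda x, y: x + y, X, Y),
      has_decreasing_differences(lambda x, y: x + y, X, Y))    # additive: both hold
```

An additively separable function satisfies both properties with equality, which is why cost terms depending only on a player's own action do not affect either condition.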

Definition 2

Let X be a lattice and U: X → ℝ. The function U is supermodular on X if for all pairs (x, y) ∈ X × X, it is the case that

U(x ∨ y) + U(x ∧ y) ≥ U(x) + U(y).

Let Euclidean spaces ℝ^l and subsets thereof be endowed with the canonical partial order. In the sequel, let Si ⊆ ℝ, i = 1,…, N, with N > 1. Set S = S1 × … × SN ⊆ ℝ^N. For s = (s1,…, sN) ∈ S and i ∈ {1,…, N}, we adopt the game-theoretical notation s = (si, s−i). Similarly, we shall write s = (si, sj, s−ij) in case i, j ∈ {1,…, N}, i ≠ j, and S−ij instead of ∏k≠i,j Sk.

Definition 3

A function u: S → ℝ is pairwise supermodular if u(·,·, s−ij): Si × Sj → ℝ satisfies increasing differences for all pairs i, j ∈ {1,…, N}, i ≠ j and s−ij ∈ S−ij.
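On a finite product of subsets of ℝ, pairwise supermodularity (Definition 3) and supermodularity (Definition 2) can both be checked by enumeration. The sketch below compares the two tests on two illustrative functions; all names are ours, and the code is a numerical check on small examples rather than a proof of any general relationship.

```python
from itertools import product, combinations

def join(x, y):
    return tuple(max(a, b) for a, b in zip(x, y))

def meet(x, y):
    return tuple(min(a, b) for a, b in zip(x, y))

def is_supermodular(u, S):
    """u(x v y) + u(x ^ y) >= u(x) + u(y) for all x, y in the product of the sets in S."""
    pts = list(product(*S))
    return all(u(join(x, y)) + u(meet(x, y)) >= u(x) + u(y) for x in pts for y in pts)

def with_values(s, changes):
    """Copy of profile s with the coordinates in `changes` replaced."""
    t = list(s)
    for k, v in changes.items():
        t[k] = v
    return tuple(t)

def is_pairwise_supermodular(u, S):
    """Increasing differences in (s_i, s_j) for every pair i != j and every fixed s_-ij."""
    for s in product(*S):
        for i, j in combinations(range(len(S)), 2):
            for a in S[i]:
                for b in S[j]:
                    if a >= s[i] and b >= s[j]:
                        lhs = u(with_values(s, {i: a, j: b})) - u(with_values(s, {j: b}))
                        rhs = u(with_values(s, {i: a})) - u(s)
                        if lhs < rhs:
                            return False
    return True

S = [(0, 1, 2)] * 3
u = lambda s: s[0] * s[1] + s[1] * s[2] + s[0] * s[2]   # complements in every pair
v = lambda s: s[0] * s[1] - s[1] * s[2]                 # substitutes in the pair (1, 2)
print(is_pairwise_supermodular(u, S), is_supermodular(u, S))
print(is_pairwise_supermodular(v, S), is_supermodular(v, S))
```

In both examples the two tests agree, as one would expect for products of totally ordered sets.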

Since S = S1 × … × SN and Si ⊆ ℝ for all i, the following two properties hold by Theorems 2.6.1, 2.6.2, and Corollary 2.6.1 of Topkis [18]:

For a finite N-player game G = (I, (Si)i∈I, (ui)i∈I) with I = {1,…, N} and Si⊆ ℝ for all i ∈ I, supermodularity amounts to the following

Definition 4

The game G is supermodular if each payoff function ui satisfies increasing differences in (si, s−i) ∈ Si × S−i.4

Pairwise supermodularity is a strategic complements condition when reaction functions exist and is equivalent to ∂²ui/∂sj∂si ≥ 0 for i ≠ j when strategy sets are intervals and payoff functions are sufficiently smooth. For details and further references on lattices and supermodularity see Topkis [18] and Chapter 2 of Vives [19]. Notice that in our context, S is trivially compact and, therefore, Theorem 2 of Zhou [20] and its proof apply:

If G is a supermodular game, then the set of Nash equilibria of G is a nonempty complete lattice.    (2.4)

2.3. Potential Games

When appropriate, we shall employ the concept of a potential P for a game G = (I, (Si)i∈I, (ui)i∈I) pioneered by Monderer and Shapley [21], i.e., a function P : S → ℝ such that

ui(si, s−i) − ui(si′, s−i) = P(si, s−i) − P(si′, s−i)

for all i ∈ I, si, si′ ∈ Si, and s−i ∈ S−i.
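For a finite game, whether a given function is a potential can be tested by enumerating all unilateral deviations. The sketch assumes the exact potential condition of Monderer and Shapley, i.e., that every unilateral payoff change equals the corresponding change in P; the two small games are illustrative and not taken from the paper.

```python
from itertools import product

def is_exact_potential(P, payoffs, S):
    """True if u_i(t) - u_i(s) == P(t) - P(s) whenever t differs from s only in player i's action."""
    for s in product(*S):
        for i, Si in enumerate(S):
            for d in Si:
                t = s[:i] + (d,) + s[i + 1:]
                if payoffs[i](t) - payoffs[i](s) != P(t) - P(s):
                    return False
    return True

# Two players, benefit s_0 * s_1 for each, own cost 2 * s_i (integer data, illustrative).
S = [(0, 1, 2), (0, 1, 2)]
payoffs = [lambda s: s[0] * s[1] - 2 * s[0],
           lambda s: s[0] * s[1] - 2 * s[1]]
P = lambda s: s[0] * s[1] - 2 * s[0] - 2 * s[1]
print(is_exact_potential(P, payoffs, S))

# Matching pennies: the constant function is not a potential of this game.
S2 = [(0, 1), (0, 1)]
pennies = [lambda s: 1 if s[0] == s[1] else -1,
           lambda s: -1 if s[0] == s[1] else 1]
print(is_exact_potential(lambda s: 0, pennies, S2))
```

Note that the shared benefit term enters P once, while each player's own cost enters P in full.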

3. The Networking Game

Our model of nonspecific networking constitutes a game in strategic form. There is a finite player set I = {1,…, N} where N > 1. Every player i ∈ I has strategy set Si = K = {k0, k1,…, kT} ⊆ ℝ, where 0 = k0 < k1 < … < kT and T ≥ 1.

Players receive benefits from pairwise interaction with others: For any pair (i, j) ∈ I × I, i ≠ j, player i receives a benefit bij(si, sj) ∈ ℝ from interacting with j, if i chooses si ∈ Si and j chooses sj ∈ Sj. At this preliminary stage, the benefit function bij should be viewed as a reduced form that convolutes several effects. Subsequently, special cases of benefit functions will be considered, where the different aspects of nonspecific networking become more explicit and transparent. Player i ∈ I incurs a cost ci(si) when choosing si ∈ Si. As a rule, the choice of a higher networking level is more costly: 0 = ci(0) < ci(k1), …, ci(kT). However, in some applications, k0, k1,…, kT may just be labels for different technologies, user networks, natural or artificial languages, etc. which cannot be unambiguously ranked in terms of benefits or costs. The payoff ui(s) for player i depends on the strategy profile (joint strategy) of all players, s = (s1,…, sN) ∈ S, and consists of i's total benefit from interacting with other players minus i's cost:

ui(s) = Σ_{j≠i} bij(si, sj) − ci(si).    (3.1)

For specific interpretations, it proves advantageous to decompose benefit functions as follows:

The list G = (I, (Si)i∈I, (ui)i∈I) constitutes a game in strategic or normal form and summarizes our model of nonspecific networking. The game G will be referred to as the networking game. The equilibrium concept is Nash equilibrium. Let SNE denote the set of Nash equilibria of G.
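The networking game is small enough to be explored by enumeration when N and K are small. The sketch below assumes payoffs of the form "sum of pairwise benefits minus own cost" as described above; the helper names and the three-player example (benefit si·sj between every pair, cost 1.5·si) are illustrative assumptions.

```python
from itertools import product

def make_payoff(i, b, c, N):
    """Payoff of player i: sum over j != i of b(i, j, s_i, s_j), minus c(i, s_i)."""
    def u(s):
        return sum(b(i, j, s[i], s[j]) for j in range(N) if j != i) - c(i, s[i])
    return u

def nash_equilibria(payoffs, S):
    """All pure strategy profiles from which no player gains by a unilateral deviation."""
    return [
        s for s in product(*S)
        if all(payoffs[i](s) >= payoffs[i](s[:i] + (d,) + s[i + 1:])
               for i in range(len(S)) for d in S[i])
    ]

# Illustrative instance: three players, binary choice, complete interaction.
N, K = 3, (0, 1)
b = lambda i, j, si, sj: si * sj        # benefit accrues only if both network
c = lambda i, si: 1.5 * si              # linear cost
payoffs = [make_payoff(i, b, c, N) for i in range(N)]
SNE = nash_equilibria(payoffs, [K] * N)
print(SNE)
```

In this instance a player gains from networking only if both others network, so the equilibria are the two uniform profiles.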

We adopt the standard notion of efficiency in the literature on networks. Let W : S → ℝ be the aggregate or utilitarian welfare function given by

W(s) = Σ_{i∈I} ui(s).

A strategy profile s ∈ S is efficient if it maximizes W on S.

It proves convenient and instructive to distinguish the pairs (i,j) with bij ≠ 0 as the edges or links of an undirected graph on the player set I. To this end, we shall use the following terminology and notation related to graphs and networks. Let F = {J ⊆ I: |J| = 2}. A pair Γ = (I, E) with E ⊆ F is called an undirected graph with vertex set I and edge set E. Then the elements of I are called the vertices or nodes of the graph and the elements of E are called the edges or links of the graph. In case {i, j} ∈ E, i.e., in case {i, j} is an edge (link) of the graph, we also say that {i, j} “belongs to the graph” and that i and j are “neighbors” or “adjacent”. Throughout, without further mention, we are restricting ourselves to graphs without isolated nodes. In such a graph, every node has at least one neighbor. Finally, we use the shorthand notation ij for (i,j).

In the sequel, we frequently assume a graph (I, E) such that bij = 0 for all (i, j) with {i, j} ∈ F\E. In that case, networking takes place within the given network or graph E so that a player can only network with his neighbors in E. If two persons are not neighbors, then interaction between them may be impossible or to no avail. Infeasible could simply mean exorbitantly costly. One possible interpretation is that E represents a preexisting network and players decide to what extent they utilize the network. For example, the network could be a physical infrastructure, like fiber-optical cables, which determines who can network with whom. The network could reflect geographical, legal, language, and a variety of other barriers as well.

Several of the subsequent examples will be based on the circular network (I, E0) with

E0 = {{i, i + 1} : i = 1,…, N − 1} ∪ {{N, 1}}.

We are going to explore the implications of two opposite conditions, (A) and (B), on the benefits from networking. We will further consider condition (C) on networking costs and condition (D) on best responses:

(A) There exists an undirected graph (without isolated nodes) (I, E) such that bij = 0 for {i, j} ∉ E and bij satisfies increasing differences in (si, sj) ∈ Si × Sj for {i, j} ∈ E.

(B) There exists an undirected graph (without isolated nodes) (I, E) such that bij = 0 for {i, j} ∉ E and bij satisfies decreasing differences in (si, sj) ∈ Si × Sj for {i, j} ∈ E.

(C) There exist C1 > 0,…, CN > 0 such that ci(si) = Ci·si for i ∈ I, si ∈ Si.

(D) For i ∈ I, there exists a unique best response against each s−i ∈ S−i.

Let us consider Igor, player i who helps his daughter Olga with her homework. Similarly, José, player j, helps his daughter Laura with her homework. Olga and Laura are classmates. It is plausible to assume that greater effort by Igor improves Olga's scholarly achievement and greater effort by José improves Laura's. But there may also be cross-effects. First suppose, as is often assumed, that there exist positive peer effects: Laura's achievement motivates Olga the more the higher Laura's achievement and vice versa. For instance, if José makes a greater effort, then Laura does better, but this also enhances the positive impact of greater effort by Igor on Olga's performance. The same holds true for the cross-effect in the opposite direction. Under those circumstances, (A) is satisfied for i and j. Second, one can also imagine negative peer effects: Olga is frustrated and de-motivated by Laura's success and vice versa. Then (B) is satisfied by i and j. Condition (C) simply means positive linear networking costs. (D) implies that all Nash equilibria are strict. More interestingly, the condition helps strengthen some comparative statics results: Compare Propositions 4 and 5. Ceteris paribus, (D) is generically satisfied with respect to cost parameters.
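As a worked illustration of conditions (A) and (B), consider a bilinear benefit function. The functional form and the coefficient a_{ij} are assumptions made only for this example; they are not part of the general model.

```latex
% Assumed example: b_{ij}(s_i, s_j) = a_{ij} s_i s_j for a neighboring pair {i, j}.
% For s_i \ge s_i' and s_j \ge s_j':
\begin{align*}
&\bigl[b_{ij}(s_i, s_j) - b_{ij}(s_i', s_j)\bigr]
 - \bigl[b_{ij}(s_i, s_j') - b_{ij}(s_i', s_j')\bigr] \\
&\qquad = a_{ij}\bigl(s_i s_j - s_i' s_j - s_i s_j' + s_i' s_j'\bigr)
 = a_{ij}\,(s_i - s_i')(s_j - s_j').
\end{align*}
% Since (s_i - s_i')(s_j - s_j') \ge 0, the difference is nonnegative when a_{ij} \ge 0
% (increasing differences, as in (A)) and nonpositive when a_{ij} \le 0
% (decreasing differences, as in (B)).
```

In the homework story, a positive coefficient would correspond to positive peer effects and a negative coefficient to negative peer effects.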

3.1. Implications of Increasing Differences in Benefits

As a first result, we obtain

Proposition 1

Let G be a networking game where pairwise benefits satisfy (A). Then the set of Nash equilibria SNE ⊆ S is nonempty and the partially ordered set SNE is a lattice.

PROOF

The proof consists in verifying that the hypothesis of (2.4) is satisfied. S = ∏i∈I Si is a finite lattice as the cartesian product of finite lattices. Pick any i ∈ I. For each j ≠ i, ui(si, sj, s−ij) satisfies increasing differences in (si, sj) on Si × Sj for each fixed s−ij ∈ S−ij because of the functional form (3.1) and assumption (A). This implies that ui(si, s−i) has increasing differences in (si, s−i) on Si × S−i. Hence G is a supermodular game. The assertion follows from Zhou's Theorem (2.4). ■

Since S is finite, the lattice property of the set of Nash equilibria implies that there exists a Nash equilibrium where every player networks at least as much as in any other Nash equilibrium. If in addition, the game is symmetric, one obtains as a corollary that such an equilibrium is symmetric, hence existence of a symmetric equilibrium. The specific cases examined in subsection 3.4 and Section 6, and the examples given in Section 5 satisfy the assumptions of the proposition.
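The lattice structure of the equilibrium set can be inspected in a small instance satisfying (A). The sketch below uses hypothetical data: four players, a graph with the two edges {0, 1} and {2, 3}, binary choices, benefit si·sj between neighbors and cost 0.5·si. It enumerates the Nash equilibria and computes the componentwise largest and smallest ones.

```python
from itertools import product

# Hypothetical instance (assumption): two disjoint pairs of neighbors.
EDGES = [(0, 1), (2, 3)]
N, K, COST = 4, (0, 1), 0.5

def neighbors(i):
    return [b if a == i else a for a, b in EDGES if i in (a, b)]

def payoff(i, s):
    """Benefit s_i * s_j from each neighbor j, minus linear cost."""
    return sum(s[i] * s[j] for j in neighbors(i)) - COST * s[i]

def is_nash(s):
    return all(payoff(i, s) >= payoff(i, s[:i] + (d,) + s[i + 1:])
               for i in range(N) for d in K)

SNE = [s for s in product(K, repeat=N) if is_nash(s)]
top = tuple(max(s[i] for s in SNE) for i in range(N))      # componentwise maximum
bottom = tuple(min(s[i] for s in SNE) for i in range(N))   # componentwise minimum
print(SNE, top in SNE, bottom in SNE)
```

Here the componentwise maximum of all equilibria is itself an equilibrium, namely the one in which every player networks at least as much as in any other equilibrium.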

In general, a networking game need not have a Nash equilibrium in pure strategies:

Example 1

We consider a population of N = 4 players who form the circular network Γ = (I, E0). The strategy set is K = {0,1}, so that each player has a binary choice: to network or not to network. The cost functions are ci(si) = (3/2)·si. Payoffs are such that even numbered players exhibit strategic substitutes and odd numbered players exhibit strategic complements:

In this example, SNE is empty. Namely, if at least one of the even numbered players plays 0, then the best response of both odd numbered players is to play 0. Against the latter, the best response of both even numbered players is 1. In turn, the best response of both odd numbered players is 1. Against the latter, the best response of both even numbered players is 0, and we have reached a cycle where players alternate their choices. If none of the even numbered players plays 0, we also reach a cycle where players alternate their choices.
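The absence of a pure strategy equilibrium in a game of this kind can be confirmed by enumeration. Since the payoff specification is not reproduced above, the sketch uses hypothetical benefit functions that are consistent with the description: si·sj for odd numbered players (strategic complements) and si·(1 − sj) for even numbered players (strategic substitutes), on the circle with cost (3/2)·si. These benefit functions are our assumption, not necessarily those of Example 1.

```python
from itertools import product

N, K, COST = 4, (0, 1), 1.5

def neighbors(i):
    """Neighbors on the circle 0 - 1 - 2 - 3 - 0."""
    return ((i - 1) % N, (i + 1) % N)

def benefit(i, si, sj):
    # Indices 0 and 2 are players 1 and 3 (odd numbered): complements (assumed form).
    # Indices 1 and 3 are players 2 and 4 (even numbered): substitutes (assumed form).
    return si * sj if i % 2 == 0 else si * (1 - sj)

def payoff(i, s):
    return sum(benefit(i, s[i], s[j]) for j in neighbors(i)) - COST * s[i]

def is_nash(s):
    return all(payoff(i, s) >= payoff(i, s[:i] + (d,) + s[i + 1:])
               for i in range(N) for d in K)

SNE = [s for s in product(K, repeat=N) if is_nash(s)]
print(SNE)
```

With these assumed payoffs, an odd numbered player networks only if both neighbors do, while an even numbered player networks only if neither neighbor does, which reproduces the best response cycle described in the text.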

3.2. Implications of Increasing Differences in Benefits and of the Existence of a Potential

Obviously, every finite potential game has a Nash equilibrium. Moreover, for a networking game that has a potential and satisfies assumption (A) of Proposition 1, the set S* of potential maximizers forms a nonempty sublattice of the equilibrium set SNE:

Proposition 2

Suppose G is a networking game which has a potential P: S → ℝ and satisfies (A). Then:

(α) The potential P is supermodular on S.

(β) The set S* is a nonempty sublattice of SNE and of S.

(γ) Moreover, if ui is supermodular on S for each i∈I, then the set of states s ∈ S which are both efficient and potential maximizing constitutes a sublattice of S.

PROOF

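(α): Pick any i ∈ I. For all j ≠ i and for all s, s′ ∈ S such that si ≥ si′, sj ≥ sj′, and s−ij = s′−ij, we have

P(si, sj, s−ij) − P(si′, sj, s−ij) = ui(si, sj, s−ij) − ui(si′, sj, s−ij) ≥ ui(si, sj′, s−ij) − ui(si′, sj′, s−ij) = P(si, sj′, s−ij) − P(si′, sj′, s−ij).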

The two equalities follow from the definition of a potential P. The inequality follows from (A). This means that P satisfies increasing differences on Si × Sj for each j ≠ i and each fixed s−ij ∈ S−ij. As this property holds for all i ∈ I, we conclude that P is pairwise supermodular and so supermodular on S by (2.2).

(β): The set S* of maximizers of P is nonempty because S is a finite set. By (α) and Theorem 2.7.1 of Topkis [18], S* is a sublattice of S. Moreover, by Proposition 1, SNE is a lattice with respect to the partial order induced by the partial order of S, but not necessarily a sublattice of S. Now S* ⊆ SNE. Thus we have that S* ⊆ SNE ⊆ S and S* is a sublattice of SNE.

(γ): Because the payoff function ui is supermodular on S for each i ∈ I, the utilitarian welfare function W is supermodular on S as the finite sum of supermodular functions by Lemma 2.6.1 in Topkis [18]. It follows that S∞ = arg maxs∈S W(s) is a sublattice of S by Theorem 2.7.1 in Topkis [18]. Now the set of states which are both efficient and potential maximizing is S∞ ∩ S*. Because S* is a sublattice of S by (β) and we demonstrated that S∞ is a sublattice of S as well, it follows that S∞ ∩ S* is a sublattice of S as the intersection of sublattices of S by Lemma 2.2.2 in Topkis [18]. ■
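Assertion (β) can be illustrated numerically. The sketch below uses a hypothetical instance with integer data: four players, edges {0, 1} and {2, 3}, benefit 2·si·sj between neighbors, cost si, and the candidate potential P(s) equal to the sum of edge benefits minus the sum of costs. It computes the potential maximizers and checks that they are equilibria and closed under componentwise maximum and minimum.

```python
from itertools import product

# Hypothetical instance (assumption): identical pairwise benefits 2 * s_i * s_j on two edges.
EDGES = [(0, 1), (2, 3)]
N, K = 4, (0, 1)

def neighbors(i):
    return [b if a == i else a for a, b in EDGES if i in (a, b)]

def payoff(i, s):
    return sum(2 * s[i] * s[j] for j in neighbors(i)) - s[i]

def P(s):
    """Candidate potential: each edge benefit counted once, minus all costs."""
    return sum(2 * s[i] * s[j] for i, j in EDGES) - sum(s)

profiles = list(product(K, repeat=N))
# Check the exact potential property over all unilateral deviations.
is_potential = all(
    payoff(i, t) - payoff(i, s) == P(t) - P(s)
    for s in profiles for i in range(N) for d in K
    for t in [s[:i] + (d,) + s[i + 1:]]
)
SNE = {s for s in profiles
       if all(payoff(i, s) >= payoff(i, s[:i] + (d,) + s[i + 1:]) for i in range(N) for d in K)}
best = max(P(s) for s in profiles)
S_star = {s for s in profiles if P(s) == best}
closed = all(tuple(map(max, x, z)) in S_star and tuple(map(min, x, z)) in S_star
             for x in S_star for z in S_star)
print(is_potential, S_star <= SNE, closed, sorted(S_star))
```

In this instance the potential is maximized at four profiles, including (1,1,0,0) and (0,0,1,1), whose join (1,1,1,1) and meet (0,0,0,0) are potential maximizers as well.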

Remarks

Observe that if in addition, G is a symmetric game, then assertion (β) of the proposition implies that G has at least one symmetric potential maximizer.

The result that the set of potential maximizers forms a nonempty sublattice of S (rather than merely a lattice) is also of some practical interest. Namely, one can then easily find a new potential maximizer knowing that two profiles (equilibria) are potential maximizers: If s = (s1,…, sN) and s′ = (s1′,…, sN′) are in S*, then so are s ∨ s′ = (max{s1, s1′},…, max{sN, sN′}) and s ∧ s′ = (min{s1, s1′},…, min{sN, sN′}). One cannot necessarily proceed this way within the equilibrium set SNE. For the conclusion of Proposition 1 that the set of Nash equilibria SNE is a nonempty lattice can hardly be replaced by the stronger assertion that SNE is a sublattice of the set of strategy profiles S. The reason is that Zhou's Fixed-Point Theorem ([20], p. 297) cannot be generalized to the effect that the set of fixed points of an increasing correspondence from a nonempty complete lattice X into itself is a sublattice of X; see Zhou ([20], p. 298) and Example 2.5.1 of Topkis ([18], p. 40). For the specific case of a two-player supermodular game where players' strategy sets are totally ordered, Echenique [27] establishes that the set of Nash equilibria is a sublattice of the set of strategy profiles. But he observes that a supermodular game with more than two players need not have an equilibrium set that is a sublattice even if players' strategy sets are totally ordered.

Part (γ) of Proposition 2 does not assert that S∞ ∩ S* is nonempty. See particular instances of inefficient Nash equilibria (and potential maximizers) in subsection 6.1.

The results contained in Propositions 1 and 2 do not depend on the particular form of the payoff functions (3.1). They also hold if (A) is replaced by the more general condition that each payoff function ui satisfies increasing differences in (si, s−i) ∈ Si × S−i.

In general, a networking game satisfies neither condition (A) nor condition (B) as Example 1 demonstrates. A networking game need not be a potential game either. But which restrictions on benefit functions would yield a potential game?

3.3. Existence of a Potential

To formulate sufficient conditions on benefit functions for the existence of a potential of G, let us consider for any pair of distinct players ij, the two-player game Gij with:

player set Iij = {i, j};

strategy sets Si = Sj = K;

payoffs bij(si, sj) for i and bji(sj, si) for j when they play the joint strategy (si, sj) ∈ K × K.

Suppose βij is a potential for Gij. We say that βij is symmetric if βij(si, sj) = βij(sj, si) for all (si, sj) ∈ K × K. Existence of a symmetric potential for all pairwise interactions is sufficient for the existence of a potential of the entire networking game:

Fact 1

If for each distinct pair ij, βij is a symmetric potential of Gij, then the function P given by

PROOF

Analogous to proof of Proposition 1 in Baron et al. [10]. ■
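The statement of Fact 1 can be checked numerically on small instances. The following is a minimal sketch, not the authors' construction: it assumes that payoffs take the additive form ui(s) = Σj≠i bij(si, sj) − ci(si), that bij = bji = βij with βij symmetric, and that the candidate potential is the sum of βij over unordered pairs minus total costs. The helper names (`make_game`, `payoff`, `potential`, `is_exact_potential`) and the random integer tables are hypothetical.

```python
import itertools
import random


def make_game(n, levels, seed=0):
    """Draw random symmetric pairwise tables and cost tables (integers, so checks are exact)."""
    rng = random.Random(seed)
    beta = {}
    for i, j in itertools.combinations(range(n), 2):
        table = {}
        for a, b in itertools.combinations_with_replacement(levels, 2):
            value = rng.randint(-5, 5)
            table[(a, b)] = value
            table[(b, a)] = value  # symmetry: beta_ij(a, b) = beta_ij(b, a)
        beta[(i, j)] = table
    cost = [{a: rng.randint(0, 5) for a in levels} for _ in range(n)]
    return beta, cost


def payoff(beta, cost, i, s):
    """Assumed payoff form: sum of pairwise benefits minus own networking cost."""
    total = 0
    for j in range(len(s)):
        if j != i:
            key = (i, j) if i < j else (j, i)
            total += beta[key][(s[i], s[j])]
    return total - cost[i][s[i]]


def potential(beta, cost, s):
    """Candidate potential: each unordered pair counted once, minus all costs."""
    n = len(s)
    pairs = sum(beta[(i, j)][(s[i], s[j])]
                for i, j in itertools.combinations(range(n), 2))
    return pairs - sum(cost[i][s[i]] for i in range(n))


def is_exact_potential(beta, cost, n, levels):
    """Check that every unilateral payoff change equals the change in the candidate potential."""
    for s in itertools.product(levels, repeat=n):
        for i in range(n):
            for t in levels:
                d = s[:i] + (t,) + s[i + 1:]
                du = payoff(beta, cost, i, d) - payoff(beta, cost, i, s)
                dp = potential(beta, cost, d) - potential(beta, cost, s)
                if du != dp:
                    return False
    return True
```

Calling `is_exact_potential(*make_game(3, (0, 1, 2)), 3, (0, 1, 2))` runs the exhaustive check on one randomly drawn three-player game. Integer tables are used so that the comparison is exact rather than subject to rounding.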

Suppose that each player i has four choices si = 0, 1, 2, 3. si = 0 stands for joining no club. si = 1 stands for joining only the chess club at cost c1 > 0 and basic benefit b1. si = 2 stands for joining only the tennis club at cost c2 > c1 and basic benefit b2. si = 3 stands for joining both clubs at cost c1 + c2 and basic benefit b3. Moreover, player i obtains an added benefit (or disutility) if a neighbor belongs to the same club(s). Neighbors i and j both enjoy the added benefit βij(si, sj) which depends on the common club memberships. Set ci(0) = 0, ci(1) = c1 − b1, ci(2) = c2 − b2, ci(3) = c1 + c2 − b3. Then each game Gij has the symmetric potential βij and G has the potential (3.3). The games Gij are congestion games in the sense of Rosenthal [28] which are potential games (and vice versa); see Rosenthal [28], Monderer and Shapley [21], and Voorneveld et al. [29].

Next we impose directly certain restrictions on the pairwise benefit functions and discuss how they relate to the existence of symmetric potentials. For any distinct pair of players ij, we consider the following three conditions:

(I) Identical Benefits: bij(si, sj) = bji(sj, si) for all (si, sj) ∈ K × K.

(II) Symmetric Benefits: bij(si, sj) = bji(si, sj) for all (si, sj) ∈ K × K.

(III) Interchangeable Actions: bij(si, sj) = bij(sj, si), bji(si, sj) = bji(sj, si) for all (si, sj) ∈ K × K.

Condition (I) is tantamount to bij being a (not necessarily symmetric) potential of Gij and bji being a (not necessarily symmetric) potential of Gji. Condition (II) implies existence of a symmetric potential of Gij in case T = 1, but not otherwise. Conditions (I) and (II) combined are equivalent to bij =bji being a symmetric potential of Gij. Any two of the three conditions imply the third one. As an immediate consequence, we obtain

Lemma 1

If the conditions (I)–(III) hold, then the game G has a potential P of the form (3.3) with βij = bij.

While obviously restrictive, existence of a symmetric potential for Gij still leaves a lot of flexibility in terms of functional form and interpretation. To illustrate the scope of applications, let us specialize and assume a decomposition (3.2) with πij ≥ 0. Then (I)–(III) have the following counterparts:

(i) Identity: πij(si, sj) = πji(sj, si) for all (si, sj) ∈ K × K.

(ii) Symmetry: πij(si, sj) = πji(si, sj) for all (si, sj) ∈ K × K.

(iii) Interchangeability: πij(si, sj) = πij(sj, si), πji(si, sj) = πji(sj, si) for all (si, sj) ∈ K × K.

We also consider a symmetry condition for the valuations υij:

(iv) Mutual Affinity: υij = υji.

Mutual affinity can result, e.g., from similarity (kindred spirits) or from complementarity (attraction of opposites). There can be mutual lack of interest, υij = υji = 0, and mutual dislike or disadvantage, υij = υji < 0. Any two of the conditions (i)–(iii) imply the third one. Conditions (i)–(iv) imply (I)–(III).

3.4. Adversity

We repeatedly consider games exhibiting pairwise symmetry of the form (3.2) with (ii) and (iv). But part of the appeal of our approach rests on the fact that it encompasses asymmetric scenarios. For instance, case 2 of Example 2 below can be transformed into a strategically equivalent game where some players are more attractive to their neighbors than the neighbors are to them, which constitutes a violation of (iv). In the current subsection (and in subsection 6.2), we set out to study more systematically networking games with variably attractive players.

In certain pairwise interactions, one party gains when the other loses and vice versa. One can think of chess matches, instances of gambling, or mutual industrial espionage. This means that for such a pair of players ij, the game Gij is a zero-sum game:5 bij(si, sj) = −bji(sj, si) for any pair of networking levels (si, sj) ∈ K × K. If one assumes the functional form (3.2) and equal intensities of interaction, that is (i), then such an adversarial interaction amounts to υij = −υji. It turns out that if Gij is zero-sum, then existence of a potential of Gij and supermodularity of Gij are equivalent.

Proposition 3

Suppose the game Gij is zero-sum. Then the following properties are equivalent:

(α) Gij has a potential.

(β) There exist functions fij: K → ℝ and gij: K → ℝ such that bij(si, sj) = fij(si) − gij(sj), bji(sj, si) = gij(sj) − fij(si) for all (si, sj) ∈ K × K.

(γ) Gij is supermodular.

PROOF

By Theorem 1 of Brânzei et al. [5], (α) and (β) are equivalent. The separation property (β) implies increasing differences (in fact constant differences) and, since K ⊆ ℝ, supermodularity. Hence (β) implies (γ). By Theorem 4 of Brânzei et al. [5], (γ) implies (α). ■
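To make the separation property (β) concrete, the sketch below builds one zero-sum pairwise game from two arbitrary functions and checks three things by enumeration: that the game is zero-sum, that the sum of the two functions is an exact potential, and that each payoff has increasing (here constant) differences. The level set `K` and the tables `f` and `g` are hypothetical illustrative values, not taken from the paper.

```python
import itertools

K = [0, 1, 2, 3]                  # hypothetical networking levels
f = {0: 0, 1: 3, 2: 4, 3: 2}      # hypothetical f_ij
g = {0: 1, 1: 0, 2: 5, 3: 2}      # hypothetical g_ij


def b_ij(si, sj):
    """Payoff of player i (own action first)."""
    return f[si] - g[sj]


def b_ji(sj, si):
    """Payoff of player j (own action first)."""
    return g[sj] - f[si]


def beta(si, sj):
    """Candidate potential of the pairwise game: f_ij(s_i) + g_ij(s_j)."""
    return f[si] + g[sj]


def is_zero_sum():
    return all(b_ij(a, b) + b_ji(b, a) == 0 for a, b in itertools.product(K, K))


def is_exact_potential():
    for a, b in itertools.product(K, K):
        for d in K:
            # i deviates from a to d while j plays b
            if b_ij(d, b) - b_ij(a, b) != beta(d, b) - beta(a, b):
                return False
            # j deviates from b to d while i plays a
            if b_ji(d, a) - b_ji(b, a) != beta(a, d) - beta(a, b):
                return False
    return True


def has_increasing_differences(payoff):
    """Gain from raising the own action must not fall when the opponent's action rises."""
    for lo_own, hi_own in itertools.combinations(K, 2):
        for lo_other, hi_other in itertools.combinations(K, 2):
            gain_high = payoff(hi_own, hi_other) - payoff(lo_own, hi_other)
            gain_low = payoff(hi_own, lo_other) - payoff(lo_own, lo_other)
            if gain_high < gain_low:
                return False
    return True
```

With these tables, `beta(0, 1)` and `beta(1, 0)` differ, which illustrates that the potential of a zero-sum pairwise game is in general not symmetric.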

If a zero-sum game Gij has a potential, then the function βij(si, sj) = fij(si) + gij(sj), with fij and gij as in (β), is a potential. The potential is asymmetric unless fij and gij are identical up to an additive constant. Hence, in general, Proposition 1 will not apply. Nevertheless, if each basic game Gij satisfies the separation property (β), then G has a potential given by

4. Networkers and Networking

Both in traditional and in electronic interactions, some agents are much more active in networking than others and might be called “networkers”. Some might be considered designated or natural networkers because they have higher benefits or lower costs from networking than others. In the Introduction, we already raised the question whether natural networkers would necessarily network more. To address this question, we examine the following example.

Example 2

We consider a population of N = 2M players with M ≥ 2. The players form the circular undirected graph Γ = (I, E0). The set of available networking levels is K = {h/2: h = 0,1,…, 10}. The pairwise benefit functions constitute a special case of (3.2):

Costs are of the linear form ci(si) = Ci · si with Ci > 0.

CASE 1

υij = 1 for all ij and Ci = 1 for all i.

Then the networking game G = (I, (Si)i∈I, (ui)i∈I) is symmetric and has the symmetric equilibrium . G has at least two asymmetric equilibria, sΔ = (0,1, 0,1,…, 0,1) and s∇ = (1,0,1,0,…,1,0).6

All three equilibria are inefficient, with the same value W = 2N − M whereas the maximum value of W is 4N − 2N = 2N, since maximization of the welfare function W requires that si + sj = 4 for {i, j} ∈ E0. The game has a potential P given by (3.3) and all three equilibria are maximizers of P.

CASE 2

υij = 1 for all ij and Ci = 1 for i even, Ci = C < 1 for i odd.

Then the odd numbered players have a cost advantage and are the “natural networkers”.

If the cost advantage is rather small, e.g., C = 0.9, then s*, sΔ, and s∇ are still Nash equilibria. In s∇, the natural networkers are not networking while the other players are. However, the cost difference does have an impact: The Nash equilibrium sΔ—where the natural networkers are networking and others are not — is the only potential maximizer.

If the cost advantage is sufficiently large, then only natural networkers are networking in equilibrium. E.g., if C = 0.5, then s** = (4, 0, 4, 0,…, 4, 0) is the only Nash equilibrium and the only potential maximizer.

Remarks

The equilibria s*, sΔ, s∇ and s** discussed in the example are inefficient in that there is under-investment in networking.

If in CASE 2, the payoff function ui of each odd numbered player i is replaced by ui/C, then the Nash equilibria remain the same, although the game is no longer a potential game after these payoff transformations. In the modified game, all players have the same cost functions, but the odd numbered players have greater benefits from networking than the even numbered players. A possible interpretation is that the even numbered players are more attractive to their neighbors than the odd numbered players.

Bramoullé and Kranton [14] consider a different way of nonspecific networking. They assume an undirected graph Γ = (I, E) with vertex set I and edge set E, continuous actions si ≥ 0 for i ∈ I, a C²-function B : ℝ+ → ℝ+ with B(0) = 0, B′ > 0, B″ < 0, and linear cost functions ci(si) = C·si so that B′(e*) = C for some e* > 0. Player i ∈ I has payoffs

$$U_i(s) = B\Big(s_i + \sum_{j \in N_i} s_j\Big) - C \cdot s_i,$$

where Ni is the set of i′s neighbors in (I, E). If E is a circle, then equilibria similar to s*, sΔ, and s∇ above arise.

5. Comparative Statics in Networking Costs

Intuitively, one would expect that networking activities intensify if networking costs decline. This conjecture proves at least partially true in the presence of strategic substitutes in pairwise interactions. To be precise, we consider conditions (B)–(D). Notice that condition (B) constitutes the antithesis of condition (A). It is satisfied in Example 2. Both (A) and (B) hold for the linear models of subsections 6.1 and 6.2.

Proposition 4

Let G be a networking game satisfying (B)–(D) and let G′ be a second networking game that differs from G only in the marginal networking costs, which are C′1,…, C′N in G′. Further, let s ∈ S be an equilibrium of G and s′ ∈ S be an equilibrium of G′. Suppose C′i ≤ Ci for all i and s′ ≠ s. Then s′i > si for some i.

PROOF

Let G, G′, C1,…, CN, C′1,…, C′N, s, s′ be as hypothesized. Since s ≠ s′, there is i ∈ I such that si ≠ s′i. Consider this player i and suppose the conclusion is false, that is s′j ≤ sj for all j ∈ I. We have:

The assumption (D) of unique best responses can be disposed of if one postulates strict cost reductions instead:

Proposition 5

Let G be a networking game that satisfies (B) and (C) and let G′ be a second networking game that differs from G only in the marginal networking costs, which are C′1,…, C′N in G′. Further, let s ∈ S be an equilibrium of G and s′ ∈ S be an equilibrium of G′. Suppose C′i < Ci for all i and s′ ≠ s. Then s′i > si for some i.

PROOF

Let G, G′, C1,…, CN, C′1,…, C′N, s, s′ be as hypothesized. Suppose the conclusion is false, that is s′i ≤ si for all i ∈ I. Now take any i ∈ I. By assumption, si is a best response of i against s−i in G. Since s′j ≤ sj for all j ≠ i and (B) and (C) hold, the largest best response ŝi of i against s′−i in G satisfies ŝi ≥ si. Since C′i < Ci, (B) and (C) hold, and G and G′ differ only in marginal networking costs, one obtains s̃i ≥ ŝi for any best response s̃i of i against s′−i in G′ and any best response ŝi of i against s′−i in G. It follows that s′i ≥ si because s′i is a best response of i against s′−i in G′. But s′i ≤ si and s′i ≥ si imply s′i = si. Since i was arbitrary, s′ = s, which contradicts the hypothesis of the proposition. Hence, to the contrary, the conclusion has to be true. ■

Notice that the conclusion of Propositions 4 and 5 cannot be substantially strengthened for two reasons. For one, G and G′ may have the same equilibria, even if C′i < Ci for all i. This follows from the discreteness of the model. Secondly, let G be the game of CASE 1 of Example 2 which satisfies (B)–(D) with Ci = 1 for all i. Let G′ be a game that differs from G only with respect to marginal networking costs. Specifically, set C′i = C′ < 1 for i odd and C′j = 1 for j even. If C′ is sufficiently close to 1, then the conclusion in CASE 2 of Example 2 still applies: s** = (4, 0,…, 4, 0) is an equilibrium of G′ while s* = (2, 2,…, 2, 2) is an equilibrium of G. Obviously s** ≠ s*. But some players have lowered their efforts in s** relative to s*.

Without a strategic substitutes assumption, a cost decline is consistent with a universal reduction of networking activities. Next we provide a numerical example with this property.

Example 3

We consider a population of N = 2M players with M ≥ 2. The players form the circular undirected graph Γ = (I, E0). The set of available networking levels is K = {0, e^{1/4} − 1, e − 1} where e = exp(1) is the Euler number. Put bij(si, sj) = 0 for {i,j} ∉ E and for {i,j} ∈ E. Then the pairwise interactions exhibit weak strategic complements rather than strategic substitutes.

With Ci = e−1 for all i, we obtain a game G which has two symmetric equilibria, s0 = (0,…, 0) and s● = (e − 1,…, e − 1).

Setting for all i defines a game G′ which has three symmetric equilibria, s0, s●, and s●● = (e^{1/4} − 1,…, e^{1/4} − 1).

Thus, the example has actually several interesting features. First, there exists the equilibrium s0, an instance of mutual obstruction where nobody has an incentive to network if nobody else is networking. Next there exists the equilibrium s● where everybody exerts maximum networking effort. Further, a cost reduction leads to the emergence of a third equilibrium, s●● where everyone makes a positive but less than maximal effort. Regarding our original point, the conclusion of Propositions 4 and 5 obviously need not hold if the strategic substitutes assumption of the form (B) is violated.

The example satisfies assumptions (A) and (C). In addition, the games G and G′ are symmetric. As a consequence of Proposition 1, G and G′ have smallest and largest equilibria which are symmetric. s0 is the smallest equilibrium and s● is the largest equilibrium in both games. Thus, the smallest and the largest equilibrium prove immune to a cost reduction. This observation is consistent with the claim that in response to a cost decrease, the smallest and the largest equilibrium will never decrease. Formally, we obtain a weak monotonicity result by applying an earlier result of Milgrom and Roberts [30]:

Proposition 6

Consider a family of networking games Gτ satisfying (A) and (C) which differ in the marginal cost parameters τ = (C1,…, CN). Then the smallest and the largest equilibrium of Gτ are non-increasing functions of τ.

PROOF

Endow the parameter space with the reverse ⊵ of its canonical partial order, that is, for τ = (C1,…, CN) and τ′ = (C′1,…, C′N), τ ⊵ τ′ if and only if Ci ≤ C′i for all i. Then the payoff functions given by (3.1) satisfy condition (A5) of Milgrom and Roberts [30]. (A) and (C) imply that each game Gτ is supermodular. Hence by Theorem 6 of Milgrom and Roberts, the smallest and the largest equilibrium of Gτ are non-decreasing in τ with respect to the reverse canonical partial order ⊵ of the parameter space. Therefore, the assertion holds with respect to the canonical partial order ≥ of the parameter space. ■

By Proposition 2, if in addition to satisfying (A) and (C), a networking game is a potential game, then the set of potential maximizers forms a nonempty sublattice of the set of equilibrium points. As a consequence of this added structure, there exist a smallest and a largest potential maximizer. Interestingly enough, the comparative statics à la Milgrom and Roberts for supermodular games extend to the smallest and largest potential maximizer. We choose a more abstract formulation in this instance than before. Let Θ be a nonempty subset of some Euclidean space ℝn, n ∈ ℕ, with generic elements θ, θ′, and ϑ.

Proposition 7

Suppose that $G^\theta = (I, (S_i)_{i \in I}, (u_i^\theta)_{i \in I})$, θ ∈ Θ, is a collection of finite potential games with respective potentials Pθ, θ ∈ Θ. Further suppose that:

For each i ∈ I, Si is a finite subset of ℝ.

For each i ∈ I and each θ ∈ Θ, the payoff function $u_i^\theta$ satisfies increasing differences in (si, s−i) ∈ Si × S−i.

For each i ∈ I and each s−i ∈ S−i, the payoff function $u_i^\theta(s_i, s_{-i})$ satisfies increasing differences in (si, θ) ∈ Si × Θ.

Then the largest (smallest) potential maximizer for each game Gθ is weakly increasing in θ on Θ.

PROOF

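Pick any s, s′ ∈ S with s ≥ s′ and any θ, ϑ ∈ Θ with θ ≥ ϑ. Define s(0), s(1),…, s(N) ∈ S as follows: s(0) = s, si(k) = s′i for i, k ∈ I, i ≤ k, and si(k) = si for i, k ∈ I, i > k. By construction, s(N) = s′ and s(k) ≥ s(k + 1) for k = 0, 1, 2,…, N − 1, where s(k − 1) and s(k) differ at most in the strategy of player k. Because Gθ and Gϑ are potential games and the payoff function of each player i satisfies increasing differences on Si × Θ, it is the case that s ≥ s′ and θ ≥ ϑ implies

$$P^\theta(s) - P^\theta(s') = \sum_{k=1}^{N} \left[ u_k^\theta(s(k-1)) - u_k^\theta(s(k)) \right] \ge \sum_{k=1}^{N} \left[ u_k^\vartheta(s(k-1)) - u_k^\vartheta(s(k)) \right] = P^\vartheta(s) - P^\vartheta(s').$$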

This means that Pθ(s) satisfies increasing differences in (s,θ) on S × Θ. For each θ ∈ Θ, Pθ(s) is supermodular in s on S by assertion (α) of Proposition 2 and Remark (d) following Proposition 2. Then the correspondence S* : Θ ↠ S, θ ↦ arg maxs∈S Pθ(s) is increasing7 in θ ∈ Θ by Theorem 2.8.1 of Topkis [18].

Now consider θ, θ′ ∈ Θ with θ ≥ θ′ and pick any s ∈ S*(θ) and s′ ∈ S*(θ′). Because S*(θ) ≥p S*(θ′), supS{s, s′} ∈ S*(θ) and infS{s, s′} ∈ S*(θ′). Since S*(θ) and S*(θ′) are finite sublattices of S, supS S*(θ) and supS S*(θ′) are the largest elements of S*(θ) and S*(θ′) respectively. Then s′ ≤ supS{s, s′} ≤ supS S*(θ) and so supS S*(θ) is an upper bound for S*(θ′). But supS S*(θ′) is the least upper bound for S*(θ′), so supS S*(θ′) ≤ supS S*(θ) as asserted. By next comparing infS S*(θ) and infS S*(θ′), we reach a similar conclusion for the smallest elements of S*(θ) and S*(θ′), respectively. The proof is complete. ■

Example 4

Suppose that for some integer m > 1, Θ = {1, 2,…, m}. Moreover, Si = Θ for each i ∈ I and $u_i^\theta(s)$ = min{θ, s1,…, sN} for all i ∈ I, θ ∈ Θ, s ∈ S. Then the game Gθ has the potential Pθ(s) = min{θ, s1,…, sN}. For any θ ∈ Θ, the smallest potential maximizer is (θ,…, θ) and the largest potential maximizer is (m, …, m).
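The claims of Example 4 can be confirmed by brute force for small m and N. The sketch below enumerates all strategy profiles, collects the maximizers of Pθ(s) = min{θ, s1,…, sN}, and returns their componentwise minimum and maximum. The function names and the choice m = 4, N = 3 in the usage note are illustrative assumptions.

```python
import itertools


def potential(theta, s):
    """P^theta(s) = min{theta, s_1, ..., s_N} as in Example 4."""
    return min(theta, *s)


def potential_maximizers(theta, m, n):
    """All profiles in {1, ..., m}^n that maximize the potential for a given theta."""
    profiles = list(itertools.product(range(1, m + 1), repeat=n))
    best = max(potential(theta, s) for s in profiles)
    return [s for s in profiles if potential(theta, s) == best]


def extreme_maximizers(theta, m, n):
    """Componentwise infimum and supremum of the set of potential maximizers."""
    maximizers = potential_maximizers(theta, m, n)
    smallest = tuple(min(s[i] for s in maximizers) for i in range(n))
    largest = tuple(max(s[i] for s in maximizers) for i in range(n))
    # The maximizers form a sublattice, so both bounds are maximizers themselves.
    assert smallest in maximizers and largest in maximizers
    return smallest, largest
```

For instance, `extreme_maximizers(2, 4, 3)` evaluates to `((2, 2, 2), (4, 4, 4))`: the smallest maximizer moves up with θ while the largest stays at (m,…, m), so both are weakly increasing in θ, in line with Proposition 7.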

Note that the potential does not necessarily depend on θ even when each payoff function does. For instance, suppose that Si = {1,…, m} for each i ∈ I, Θ ⊆ ℝ+ and the payoff function is defined by for all i ∈I, θ ∈ Θ, s ∈ S. Then, for all θ ∈ Θ, Pθ(s) = mini∈I{si}. Proposition 7 still applies: The set of potential maximizers is the singleton set {(m, m, …, m)} for each θ ∈ Θ.

Further note that the pairwise benefit function used in the first example of this section is just one from a rich family of functions of the multiplicatively separable form f(si)f(sj) and generalizations thereof, e.g., f(si)f(sj) + g(si)g(sj) with f, g ≥ 0, f′, g′ ≥ 0, f″, g″ ≤ 0, etc., which all present instances of increasing differences.

6. Two Linear Models

6.1. A Linear Model of Mutual Sympathy or Antipathy

Sympathy or antipathy among people need not be mutual, but often they are and here we assume that they are. We consider the special case of (3.2) with bij(si, sj) = (si + sj)·υij. Let (I, E) be any undirected graph on I. If (iv) holds, then (i)–(iv) and, therefore, (I)–(III) hold and G is a potential game. Moreover, (A) and (B) hold. Hence with (iv), the assumptions of Propositions 1 and 2 are met.

Let us specialize further and postulate the mutual affinity condition (iv) and linear networking cost functions satisfying (C). Finally, we assume that players make binary choices, to network, si = 1, or not to network, si = 0. Accordingly, K = {0,1}.

To analyze the specific game G, let Ni be the set of player i′s neighbors and define Wi = Σj∈Ni υij for i ∈ I. Each player i has weakly dominant strategies. Namely, the player's best responses are 1 if Wi − Ci > 0; 0 if Wi − Ci < 0; 0 and 1 if Wi = Ci.

A player's decision creates own payoff (Wi − Ci)si and the surplus (2Wi − Ci)si. Hence a player's best response is inefficient in two instances, if Wi = Ci and the player chooses si = 0 and if Wi < Ci < 2Wi. Therefore, inefficiencies always constitute under-investments. The aggregate functions P and W assume correspondingly simple forms:

$$P(s) = \sum_{i \in I} (W_i - C_i)\, s_i, \qquad W(s) = \sum_{i \in I} (2W_i - C_i)\, s_i.$$

In particular, all equilibria are potential maximizers. Depending on model parameters and tie-breaking, equilibria may be efficient or inefficient.
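The four-person example from the Introduction is an instance of this binary linear model and can be worked through by enumeration. The sketch below is illustrative only: the friendship diagram is not reproduced here, so it assumes a complete graph on four players in which the pairs {0, 1} and {2, 3} are enemies and all other pairs are friends, which gives each player two friends and one enemy. Affinities are ±1 and every Ci equals 1.5, as in the Introduction.

```python
import itertools

N = 4
ENEMY_PAIRS = {frozenset((0, 1)), frozenset((2, 3))}  # assumed labeling of the diagram
COST = [1.5] * N                                      # marginal networking cost C_i


def affinity(i, j):
    """Symmetric valuation: +1 for friends, -1 for enemies."""
    return -1.0 if frozenset((i, j)) in ENEMY_PAIRS else 1.0


# W_i: sum of affinities over the neighbors of i
W_AGG = [sum(affinity(i, j) for j in range(N) if j != i) for i in range(N)]


def payoff(i, s):
    """u_i(s) = sum_j (s_i + s_j) * v_ij - C_i * s_i."""
    benefit = sum((s[i] + s[j]) * affinity(i, j) for j in range(N) if j != i)
    return benefit - COST[i] * s[i]


def potential(s):
    return sum((W_AGG[i] - COST[i]) * s[i] for i in range(N))


def welfare(s):
    return sum((2 * W_AGG[i] - COST[i]) * s[i] for i in range(N))


PROFILES = list(itertools.product((0, 1), repeat=N))


def is_nash(s):
    """No player gains by switching her binary choice."""
    return all(payoff(i, s) >= payoff(i, s[:i] + (1 - s[i],) + s[i + 1:])
               for i in range(N))


equilibria = [s for s in PROFILES if is_nash(s)]
best_p = max(potential(s) for s in PROFILES)
potential_maximizers = [s for s in PROFILES if potential(s) == best_p]
best_w = max(welfare(s) for s in PROFILES)
welfare_maximizers = [s for s in PROFILES if welfare(s) == best_w]
```

Under these assumptions Wi = 1 for every player, so Wi − Ci = −0.5 and 2Wi − Ci = 0.5. The only equilibrium and the only potential maximizer is (0, 0, 0, 0), whereas welfare is maximized at (1, 1, 1, 1), which is the under-investment described in the Introduction.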

Now mutual affinity allows for mutual lack of interest, υij = υji = 0 and mutual dislike, disadvantage, animosity, antipathy, enmity, or hostility, υij = υji < 0. In the beginning of the Introduction, we have presented an example of four players where each has two friends and one enemy. Obviously, affinities and adversities can give rise to a host of interesting social spill-overs, where a player is affected by affinities between other players. We confine ourselves to one more instructive example.

“The enemy of my enemy is my friend” usually means that if j is i's enemy and k is j's enemy, then i and k might form an alliance against j. Yet in the present situation, i may benefit from hostility between j and k in a different way: If i and j are enemies, υij < 0, then i prefers that j is not networking. This is certainly the case if υjk < 0 for all k, that is if j has only enemies. For instance, let N = 3, υ12 = υ21 < 0, and υ13 = 0. Then 1 prefers that 2 is not networking. This is guaranteed if W2 = υ23 + υ21 < C2. Since υ21 < 0 the latter holds if 2 and 3 are enemies, υ23 < 0, or not too close friends, 0 ≤ υ23 < |υ21| + C2.

6.2. A Linear Model with Variably Attractive Players

We consider networking games of the form (3.2) with (ii) which differ from games with pairwise symmetry. We postulate numbers V1,…, VN such that

If the Vi differ, then (iv) is violated and, as a rule, the pairwise interaction games Gij do not have symmetric potentials. Consequently, Propositions 1 and 2 need not apply. In the sequel, we focus on a linear model which allows a systematic inquiry. This linear model is essentially identical with the one developed and analyzed in the previous subsection, with the crucial exception of condition (v):

Linear Model

We assume an undirected graph (I,E) such that bij(si,sj) = (si + sj)·Vj if {i,j} ∈ E and bij(si,sj) = 0 if {i,j} ∉ E. We assume binary choices, K = {0,1}, and linear networking cost functions satisfying (C). Now let Ni be the set of player i′s neighbors and Zi = |Ni| be the number of his neighbors. Since we always assume that nobody is isolated, Zi ≥ 1. Further define Wi = Σj∈Ni Vj. Then si = 1 is a best response for i iff Wi ≥ Ci and si = 0 is a best response for i iff Wi ≤ Ci. Moreover, G has the potential P(s) = Σi(Wi − Ci)si. The social welfare function W assumes the particular form W(s) = Σi(Wi + ZiVi− Ci)si. It follows that all equilibria are in weakly dominant strategies and potential maximizers. In general, the maximizers of P and W will not coincide. In fact, there can be under- or over-investment. Let us add two more observations.

First, “bad neighbors” may not only harm “good neighbors”, but can also harm each other through their networking efforts. For example, let N = 4, E = F, V1 = V2 = −1, V3 = V4 = 1, 0 < Ci < 1 for all i. Then the unique equilibrium is s = (1, 1, 0, 0) with utilities u1(s) = −C1, u2(s) = −C2 and u3(s) = u4(s) = −2. Everybody would be better off at s0 = (0, 0, 0, 0). But given any choices by 3 and 4, players 1 and 2 find themselves in a Prisoner's Dilemma. Incidentally, the efficient outcome would be t = (0, 0, 1, 1) with W(t) = 4 − (C3 + C4). Hence, the equilibrium s, which is in strictly dominant strategies and potential maximizing, exhibits over-investment by 1 and 2 and under-investment by 3 and 4.
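The numbers in this four-player example can be reproduced by enumeration. The sketch below assumes that the edge set is the complete graph on the four players (the reported utilities are consistent with this reading) and picks Ci = 0.5 for all i as one admissible value in (0, 1). Players are indexed 0 to 3 in the code, so the paper's players 1 and 2 are indices 0 and 1.

```python
import itertools

N = 4
V = [-1.0, -1.0, 1.0, 1.0]   # attractiveness V_1, ..., V_4 from the example
COST = [0.5] * N             # assumed value with 0 < C_i < 1


def payoff(i, s):
    """u_i(s) = sum over neighbors j of (s_i + s_j) * V_j, minus C_i * s_i (complete graph assumed)."""
    benefit = sum((s[i] + s[j]) * V[j] for j in range(N) if j != i)
    return benefit - COST[i] * s[i]


def welfare(s):
    return sum(payoff(i, s) for i in range(N))


PROFILES = list(itertools.product((0, 1), repeat=N))


def is_nash(s):
    """No player gains by switching her binary choice."""
    return all(payoff(i, s) >= payoff(i, s[:i] + (1 - s[i],) + s[i + 1:])
               for i in range(N))


equilibria = [s for s in PROFILES if is_nash(s)]
best_w = max(welfare(s) for s in PROFILES)
efficient = [s for s in PROFILES if welfare(s) == best_w]

s_eq = (1, 1, 0, 0)
s_zero = (0, 0, 0, 0)
eq_utilities = [payoff(i, s_eq) for i in range(N)]
```

With these values the only equilibrium is (1, 1, 0, 0) with utilities −0.5, −0.5, −2, −2, every player is strictly better off at (0, 0, 0, 0), and welfare is maximized at (0, 0, 1, 1) with W = 4 − (C3 + C4) = 3.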

Second, the particular networking game G has a potential, even though the games Gij do not have symmetric potentials, thus violating the premise of Fact 1. Incidentally, a game Gij does possess a potential βij given by βij(0,0) = 0, βij(0,1) = Vi, βij(1, 0) = Vj, βij(1, 1) = Vi + Vj. However, βij is asymmetric unless Vi = Vj.

7. Stochastic Stability

It turns out that potential maximizers in finite games are the stochastically stable states for a particular kind of stochastically perturbed best response dynamics. Therefore, any results obtained for potential maximizers also hold for those stochastically stable states. Our specific concept of stochastic stability of outcomes (joint strategies) in a finite N-player game

The perturbed adaptive rule is a logit rule: Suppose the current state is s = (sj)j∈I. In principle, the updating player i wants to play a best reply against s−i = (sj)j≠i. But with some small probability, the player trembles and plays a non-best reply. If the player follows a logit rule, then for all ti ∈ Si, the probability that i chooses ti in state s is given by

The profiles in S̃ will be referred to as stochastically stable states. These are the states in which the system stays most of the time when very little, but still some noise remains. Baron et al. [12] show that S̃ can be partitioned into minimal sets closed under asynchronous best replies. It turns out that the limit stationary distribution exists and the stochastically stable states are the maximizers of the potential, if the underlying game G has a potential:

As an immediate consequence of (7.2), we obtain:

Corollary 1

In Propositions 2 and 7 and elsewhere in Sections 3 to 6, S*, potential maximizer(s) and potential maximizing, respectively, can be replaced by S̃, stochastically stable state(s) and stochastically stable, respectively.
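The link between logit dynamics and potential maximizers can be illustrated on a small binary networking game. The sketch below rests on several assumptions that are not taken from the paper: a noise level `eps` that scales payoffs as exp(ui/eps), a revision protocol in which one uniformly drawn player updates per period, and a hypothetical three-player linear game with symmetric affinities. Under these assumptions it checks detailed balance of the distribution proportional to exp(P(s)/eps), which implies that this distribution is stationary, and then shows that for small noise almost all mass sits on the potential maximizer.

```python
import itertools
import math

N = 3
V = {(0, 1): 1.0, (0, 2): 1.0, (1, 2): -1.0}   # hypothetical symmetric affinities
C = [1.0, 0.5, 0.5]                            # hypothetical marginal costs
ACTIONS = (0, 1)
STATES = list(itertools.product(ACTIONS, repeat=N))


def affinity(i, j):
    return V[(i, j)] if i < j else V[(j, i)]


def payoff(i, s):
    return sum((s[i] + s[j]) * affinity(i, j) for j in range(N) if j != i) - C[i] * s[i]


def potential(s):
    return sum((sum(affinity(i, j) for j in range(N) if j != i) - C[i]) * s[i]
               for i in range(N))


def replace(s, i, t):
    return s[:i] + (t,) + s[i + 1:]


def logit_prob(i, t, s, eps):
    """Probability that revising player i picks action t in state s."""
    weights = {r: math.exp(payoff(i, replace(s, i, r)) / eps) for r in ACTIONS}
    return weights[t] / sum(weights.values())


def transition(s, s_next, eps):
    """One-step probability when a uniformly drawn player revises by the logit rule."""
    total = 0.0
    for i in range(N):
        if replace(s, i, s_next[i]) == s_next:
            total += logit_prob(i, s_next[i], s, eps) / N
    return total


def gibbs(eps):
    """Distribution proportional to exp(P(s) / eps)."""
    weights = {s: math.exp(potential(s) / eps) for s in STATES}
    z = sum(weights.values())
    return {s: w / z for s, w in weights.items()}


def satisfies_detailed_balance(eps):
    pi = gibbs(eps)
    return all(math.isclose(pi[s] * transition(s, t, eps),
                            pi[t] * transition(t, s, eps), rel_tol=1e-9)
               for s in STATES for t in STATES)
```

In this hypothetical game the potential is P(s) = s0 − 0.5·s1 − 0.5·s2, so the unique potential maximizer is (1, 0, 0). Calling `gibbs(0.05)` puts more than 99 percent of the mass on that state, which is the sense in which stochastically stable states coincide with potential maximizers in this sketch.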

For instance, Lemma 1, Fact 1 and (7.2) apply to Example 3. There, it turns out that with a slight logit perturbation, the best response dynamics would stay most of the time in the equilibrium s●, which is the unique stochastically stable state of the evolutionary model based on G or G′. In Example 2, case 1, all three equilibria are maximizers of the potential P and, therefore, stochastically stable states. Consequently, under very small random perturbations, asymmetric outcomes are more likely (since they outnumber the symmetric one) than the symmetric equilibrium. Hence very likely, one observes that some players network more than others, although none of the players are distinguished as natural networkers.

Logit trembles have the appealing feature that mistake probabilities are state-dependent and the probability of making a specific mistake, that is of playing a specific non-best response, is inversely related to the opportunity cost of making the mistake.8 Furthermore, Mattsson and Weibull [31] and Baron et al. [10,12] derive a logit rule as the solution of a maximization problem involving a trade-off between the magnitude of trembles and control costs.

The investigation of logit perturbed best response dynamics for supermodular games with potentials and the associated set of stochastically stable states is one of the original contributions of the current paper. Dubey, Haimanko, and Zapechelnyuk [3] do not consider stochastic perturbations or “noise” and stochastic stability. To our knowledge only two earlier papers, Kandori and Rob [32] and Kaarboe and Tieman [33], combine stochastic stability and supermodularity in a general setting.9 These two papers focus on a class of global interaction games based on two-player and symmetric strict supermodular games. Players gradually adjust their behavior in taking a summary statistic into account. The adjustment process is perturbed by Bernoulli or uniform trembles or slight generalizations thereof. All authors obtain monotonicity results of best responses over the set of states and show that the limit sets of the unperturbed process correspond one-to-one with the set of (strict) Nash equilibria of the recurrent game. Consequently, the set of stochastically stable states is contained in the set of Nash equilibria. Hence supermodular games exposed to uniform trembles and potential games exposed to logit trembles both induce perturbed dynamics under which the stochastically stable states form a subset of the set of Nash equilibria. Unlike the present paper, the earlier literature does not examine the structure of the set of stochastically stable states and its variation in response to parametric changes.

8. Conclusions and Ramifications

Nonspecific networking means that an individual's networking effort establishes or strengthens links to a multitude of people. The individual cannot single out specific persons with whom she is going to form links. In the simplest case, the individual has a binary choice, to network or not to network. This particular case covers already a variety of interesting scenarios and phenomena. It encompasses scenarios with differential benefits across pairs of individuals, mutual versus non-mutual (positive or negative) affinities, leading for instance to second-order externalities such as the impact of an enemy of an enemy or to the co-existence of under-investment and over-investment in networking as exemplified in Section 6. Often, however, networking efforts are gradual and our model accommodates this possibility as well. Beyond expanding the descriptive scope of the model, the availability of several levels of networking effort makes the question of Section 5—how networking efforts respond to a change in networking costs—much more interesting. One conceivable generalization of our analysis, including the comparative statics, would assume multi-dimensional effort choices, like choosing software-hardware combinations.

The model also encompasses the formation of user networks, not dealt with in this paper. In that particular application, the player set I is interpreted as a finite population of users or adopters. Each player has to adopt exactly one technology or network good from the list K. The list K may consist of computer systems, word processors, internet providers, etc. The adopters of the same good constitute a user network. Baron et al. ([12], p. 574) consider the case of partial but imperfect compatibility of different technologies. Furthermore, two prominent classes of spatial games, both analyzed in detail in Baron et al. ([10], pp. 555-557) permit a novel interpretation as user network formation games. The first class consists of coordination games which can be reinterpreted as network formation games with perfect incompatibility of different technologies. The second class consists of minimum effort coordination games which allow an interpretation of network formation games with downward compatibility of technologies.

Supermodularity and increasing differences, utilized in some of our comparative statics, are cardinal properties. As Milgrom and Shannon [36] point out, comparative statics questions are inherently ordinal questions, and the conditions on objective functions and constraints necessary for comparative statics conclusions should possibly be ordinal. Indeed, Milgrom and Shannon [36] find such ordinal conditions for monotone comparative statics. They introduce and study quasi-supermodular functions and functions with the single crossing property. These functions generalize supermodular functions and functions with increasing differences and preserve the monotonicity conclusion for parametric optimization problems. A list of a wide variety of problems in economics and in noncooperative games presented by Milgrom and Shannon [36] makes a convincing case for the value added of their ordinal extension of complementarity conditions. In view of these results, one might ask whether Proposition 2 can be extended further by invoking such ordinal conditions. Precisely, if we assume that each bij satisfies the single crossing property on Si × Sj, are we then able to show that the potential P is quasi-supermodular on S? Unfortunately, one cannot draw such a conclusion. The reason is that the generality of the single crossing property has its drawbacks: Namely, in the proof of Proposition 2 we make use of Corollary 2.6.1 in Topkis [18] which states that for a function defined on a finite product of totally ordered sets, pairwise supermodularity implies supermodularity. This crucial auxiliary result no longer holds when the single crossing property is substituted for the pairwise supermodularity property: Shannon ([37], p. 220) demonstrates that the single crossing property in each pair of variables does not imply quasi-supermodularity in all variables.

Proposition 7 establishes a weak monotonicity result on the set of potential maximizers. It states that the largest (smallest) potential maximizer at a lower parameter value is weakly smaller than the largest (smallest) potential maximizer at a higher parameter value. But this result does not assert that a given potential maximizer at a lower parameter value is smaller than any other potential maximizer at a higher parameter value. Echenique and Sabarwal ([38], p. 309) give a condition on a pair of parameters θ, θ′ ∈ Θ, θ ≤ θ′, which implies sup SNE(θ) ≤ inf SNE(θ′) for the two equilibrium sets SNE(θ) and SNE(θ′). Since S*(θ) ⊆ SNE(θ) and S*(θ′) ⊆ SNE(θ′), their condition also implies sup S*(θ) ≤ inf S*(θ′).

A further alternative could make the set of available efforts a (one- or multi-dimensional) interval or convex set and assume sufficient differentiability of the cost and benefit functions. As Brueckner [39] demonstrates in the context of specific networking, one arrives at some conclusions very elegantly if such a continuous model is highly symmetric, but does not get very far otherwise. Most of our subcases and examples can be easily embedded into a larger continuous model. But again, while this might produce some elegance and quickness of derivations in some cases, it would only render the analysis more complicated in others. An added complication stems from the fact that the concept of stochastic stability developed in the literature so far (based on logit or other perturbations) and employed in the present paper relies on a finite state space.

The idea that the strength or reliability of a link might depend on the efforts of both agents involved is also central to the model of Brueckner [39] (see footnote 10). Similarly, Haller and Sarangi [41] and Baron et al. [42] consider the possibility that the reliability of a link between two agents depends on the efforts of both agents. Bloch and Dutta [43] consider the possibility that the strength of a link between two agents depends on the efforts of both agents. In Cabrales et al. [15], link intensity depends on the socialization or networking efforts of both players constituting the link. Moreover, their model exhibits nonspecific networking and productive investments with spill-overs across the network. Since we allow for negative affinity or attraction, some agents might not only abstain from networking but might take counter-measures against the networking attempts of others and be willing to incur costs in order to weaken or sever links. This eventuality suggests a further extension of the formal model.

Acknowledgments

We would like to thank the two referees and the editor for helpful suggestions. Financial support by the French National Agency for Research (ANR)—research program “Models of Influence and Network Theory” ANR.09.BLANC-0321.03—is gratefully acknowledged.

References

- Jackson, M.O.; Wolinsky, A. A Strategic Model of Economic and Social Networks. J. Econ. Theory 1996, 71, 44–74.

- Bala, V.; Goyal, S. A Non-Cooperative Model of Network Formation. Econometrica 2000, 68, 1181–1229.

- Dubey, P.; Haimanko, O.; Zapechelnyuk, A. Strategic Complements and Substitutes, and Potential Games. Games Econ. Behav. 2006, 54, 77–94.

- Voorneveld, M. Best-Response Potential Games. Econ. Lett. 2000, 66, 289–295.

- Brânzei, R.; Mallozzi, L.; Tijs, S. Supermodularity and Potential Games. J. Math. Econ. 2003, 39, 39–49.

- Peleg, B.; Potters, J.; Tijs, S. Minimality of Consistent Solutions for Strategic Games, in Particular for Potential Games. Econ. Theory 1996, 7, 81–93.

- Blume, L. Statistical Mechanics of Strategic Interaction. Games Econ. Behav. 1993, 5, 387–426.

- Blume, L. Population Games. In The Economy as an Evolving Complex System II; Arthur, B., Durlauf, S., Lane, D., Eds.; Addison Wesley: Reading, MA, USA, 1997; pp. 425–460.

- Young, P. Individual Strategy and Social Structure; Princeton University Press: Princeton, NJ, USA, 1998.

- Baron, R.; Durieu, J.; Haller, H.; Solal, P. Control Costs and Potential Functions for Spatial Games. Int. J. Game Theory 2002, 31, 541–561.

- Alós-Ferrer, C.; Netzer, N. The Logit-Response Dynamics. Games Econ. Behav. 2010, 68, 413–427.

- Baron, R.; Durieu, J.; Haller, H.; Solal, P. A Note on Control Costs and Logit Rules for Strategic Games. J. Evol. Econ. 2002, 12, 563–575.

- Chwe, M.S.-Y. Communication and Coordination in Social Networks. Rev. Econ. Stud. 2000, 67, 1–16.

- Bramoullé, Y.; Kranton, R. Public Goods in Networks. J. Econ. Theory 2007, 135, 478–494.

- Cabrales, A.; Calvó-Armengol, A.; Zenou, Y. Social Interactions and Spillovers. Games Econ. Behav. 2010. Available online: http://dx.doi.org/10.1016/j.geb.2010.10.010 (accessed on 15 February 2011) (forthcoming).

- Galeotti, A.; Merlino, L.P. Endogenous Job Contact Networks; Working Paper; 2009.

- Goyal, S.; Moraga-González, J.L. R&D Networks. RAND J. Econ. 2001, 32, 686–707.

- Topkis, D. Supermodularity and Complementarity; Princeton University Press: Princeton, NJ, USA, 1998.

- Vives, X. Oligopoly Pricing. Old Ideas and New Tools; MIT Press: Cambridge, MA, USA, 1999.

- Zhou, L. The Set of Nash Equilibria of a Supermodular Game is a Complete Lattice. Games Econ. Behav. 1994, 7, 295–300.

- Monderer, D.; Shapley, L.S. Potential Games. Games Econ. Behav. 1996, 14, 124–143.

- Ellison, G. Learning, Local Interaction, and Coordination. Econometrica 1993, 61, 1047–1071.

- Schelling, T. Dynamic Models of Segregation. J. Math. Sociol. 1971, 1, 143–186.

- Weidenholzer, S. Coordination Games and Local Interactions: A Survey of the Game Theoretic Literature. Games 2010, 1, 551–585.

- Salop, S.C. Monopolistic Competition with Outside Goods. Bell J. Econ. 1979, 10, 141–156.

- Berninghaus, S.K.; Schwalbe, U. Conventions, Local Interaction, and Automata Networks. J. Evol. Econ. 1996, 6, 297–312.

- Echenique, F. The Equilibrium Set of Two-Player Games with Complementarities is a Sublattice. Econ. Theory 2003, 22, 903–905.

- Rosenthal, R.W. A Class of Games Possessing Pure-Strategy Nash Equilibria. Int. J. Game Theory 1973, 2, 65–67.

- Voorneveld, M.; Borm, P.; Facchini, G.; van Megen, F.; Tijs, S. Congestion Games and Potentials Reconsidered. Int. Game Theory Rev. 1999, 1, 283–299.

- Milgrom, P.; Roberts, J. Rationalizability, Learning, and Equilibrium in Games with Strategic Complementarities. Econometrica 1990, 58, 1255–1277.

- Mattsson, L.-G.; Weibull, J.W. Probabilistic Choice and Procedurally Bounded Rationality. Games Econ. Behav. 2002, 41, 61–78.

- Kandori, M.; Rob, R. Evolution of Equilibria in the Long Run: A General Theory and Applications. J. Econ. Theory 1995, 65, 383–414.

- Kaarboe, O.; Tieman, A. Equilibrium Selection in Games with Macroeconomic Complementarities; Tinbergen Institute Discussion Paper No. 99-096/1; Amsterdam, The Netherlands, 1999.

- Alós-Ferrer, C.; Ania, A.B. The Evolutionary Stability of Perfectly Competitive Behavior. Econ. Theory 2005, 26, 497–516.

- Schipper, B.C. Submodularity and the Evolution of Walrasian Behavior. Int. J. Game Theory 2003, 32, 471–477.

- Milgrom, P.; Shannon, C. Monotone Comparative Statics. Econometrica 1994, 62, 157–180.

- Shannon, C. Weak and Strong Comparative Statics. Econ. Theory 1995, 5, 209–227.

- Echenique, F.; Sabarwal, T. Strong Comparative Statics of Equilibria. Games Econ. Behav. 2003, 42, 307–314.

- Brueckner, J.K. Friendship Networks. J. Regional Sci. 2006, 46, 847–865.

- Roy, A.; Sarangi, S. Revisiting Friendship Networks. Econ. Bull. 2009, 29, 2640–2647.

- Haller, H.; Sarangi, S. Nash Networks with Heterogeneous Links. Math. Soc. Sci. 2005, 50, 181–201.

- Baron, R.; Durieu, J.; Haller, H.; Solal, P. Complexity and Stochastic Evolution of Dyadic Networks. Comput. Oper. Res. 2006, 33, 312–327.

- Bloch, F.; Dutta, B. Communication Networks with Endogenous Link Strength. Games Econ. Behav. 2009, 66, 39–56.

- 1 The recent literature on network formation employs mainly two alternative equilibrium concepts, and combinations thereof. Jackson and Wolinsky [1] introduced pairwise stability as a solution concept for strategic models of network formation. Here we follow Bala and Goyal [2] in adopting Nash equilibrium as the solution concept.

- 2 The notion of pseudo-potential games is a generalization of the notion of best-response potential games introduced by Voorneveld [4].

- 3 Peleg, Potters and Tijs [6] provide an axiomatic characterization of the solution given by the set of potential maximizers on the class of potential games with potential maximizers. They obtain the result with the same axioms that characterize Nash equilibrium on the class of strategic games with at least one Nash equilibrium.

- 4 In case Si is a sublattice of some Euclidean space ℝ^l, l ≥ 2, the definition imposes that the payoff function ui is supermodular in si ∈ Si for each fixed s−i ∈ S−i. In our case, l = 1, and this condition is trivially met. This is the reason why such games are called supermodular games.

- 5 Or strategically equivalent to a zero-sum game.

- 6 For M = 2, these are obviously the only other equilibria. For M > 2, there exist also equilibria with strings 1/2, 1, 0, 1, 1/2.

- 7 For all θ, θ′ ∈ Θ, θ ≥ θ′ implies S*(θ) ≥p S*(θ′), where ≥p is the strong set order. Precisely, S*(θ) ≥p S*(θ′) means that for each s ∈ S*(θ) and s′ ∈ S*(θ′), sup_S{s, s′} ∈ S*(θ) and inf_S{s, s′} ∈ S*(θ′).

- 8 The most prominent alternative, Bernoulli or uniform trembles, does not have this feature. Both types of trembles often, but not always, lead to the same set of stochastically stable states or long-run equilibria.

- 9 Other papers on stochastic stability and supermodularity (or submodularity) exist, but they exclusively deal with symmetric aggregative games that are either submodular or supermodular (Alós-Ferrer and Ania [34], Schipper [35]).

- 10 After learning about our work, Sudipta Sarangi pointed us to Brueckner's paper and to a further common trait of the two papers: Brueckner presents two asymmetric examples, one with an agent who creates higher benefits than others and a second example with an agent who is more accessible than others. See Roy and Sarangi [40] for extensions.

© 2011 by the authors; licensee MDPI, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).