ConoGPT: Fine-Tuning a Protein Language Model by Incorporating Disulfide Bond Information for Conotoxin Sequence Generation

Abstract

1. Introduction

2. Results

2.1. Comparison of Generated Sequences with Real Conotoxin Sequences

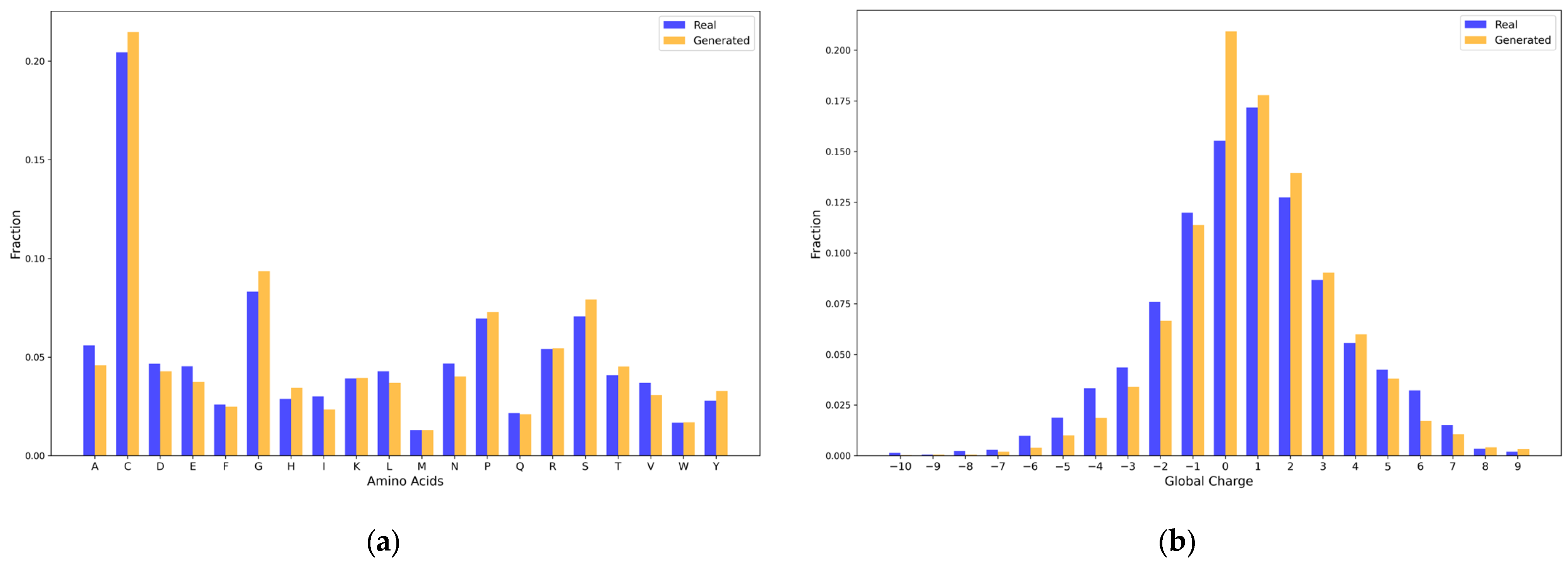

2.1.1. Amino Acid Composition and Physicochemical Properties

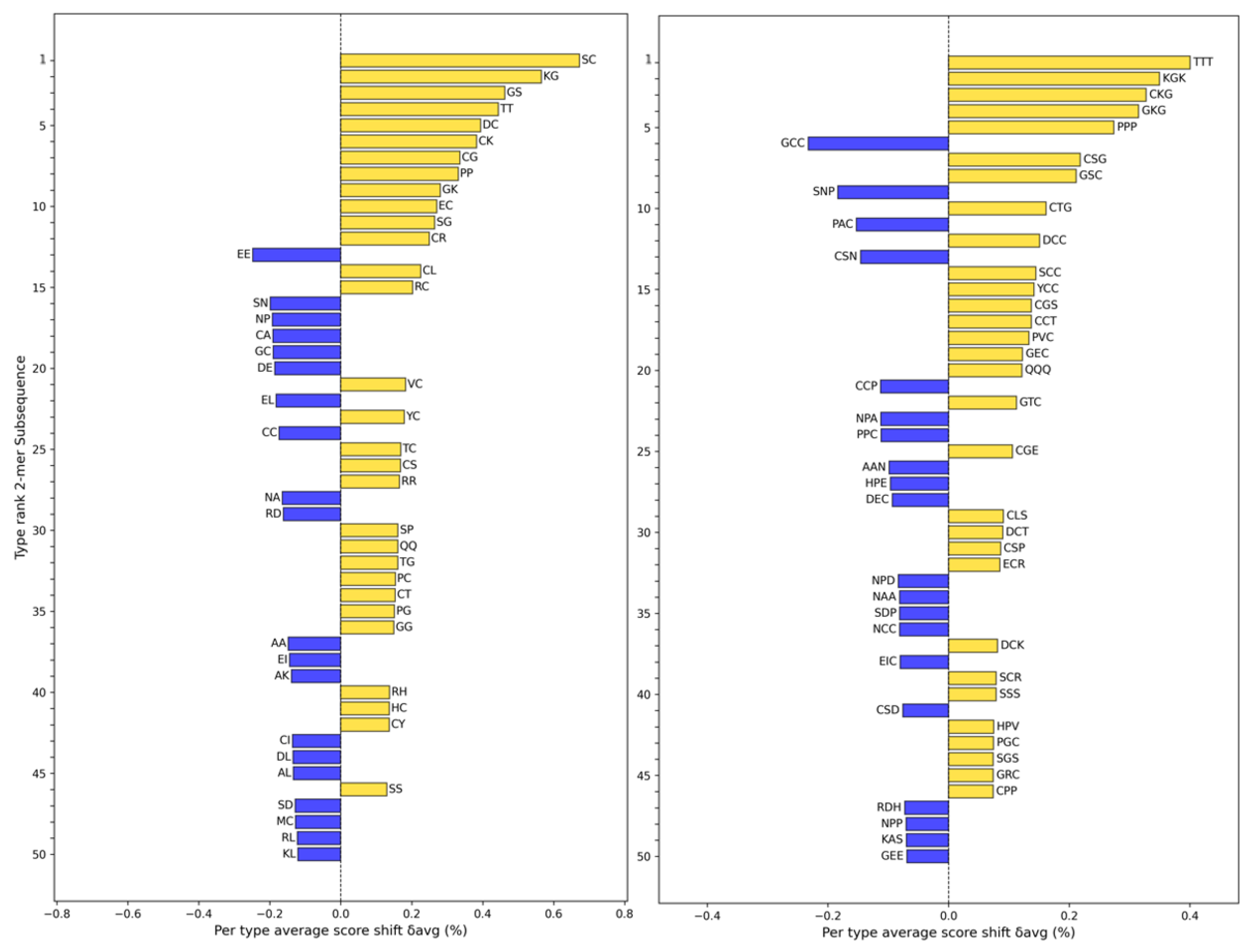

2.1.2. Shannon Entropy of Subsequence Distribution

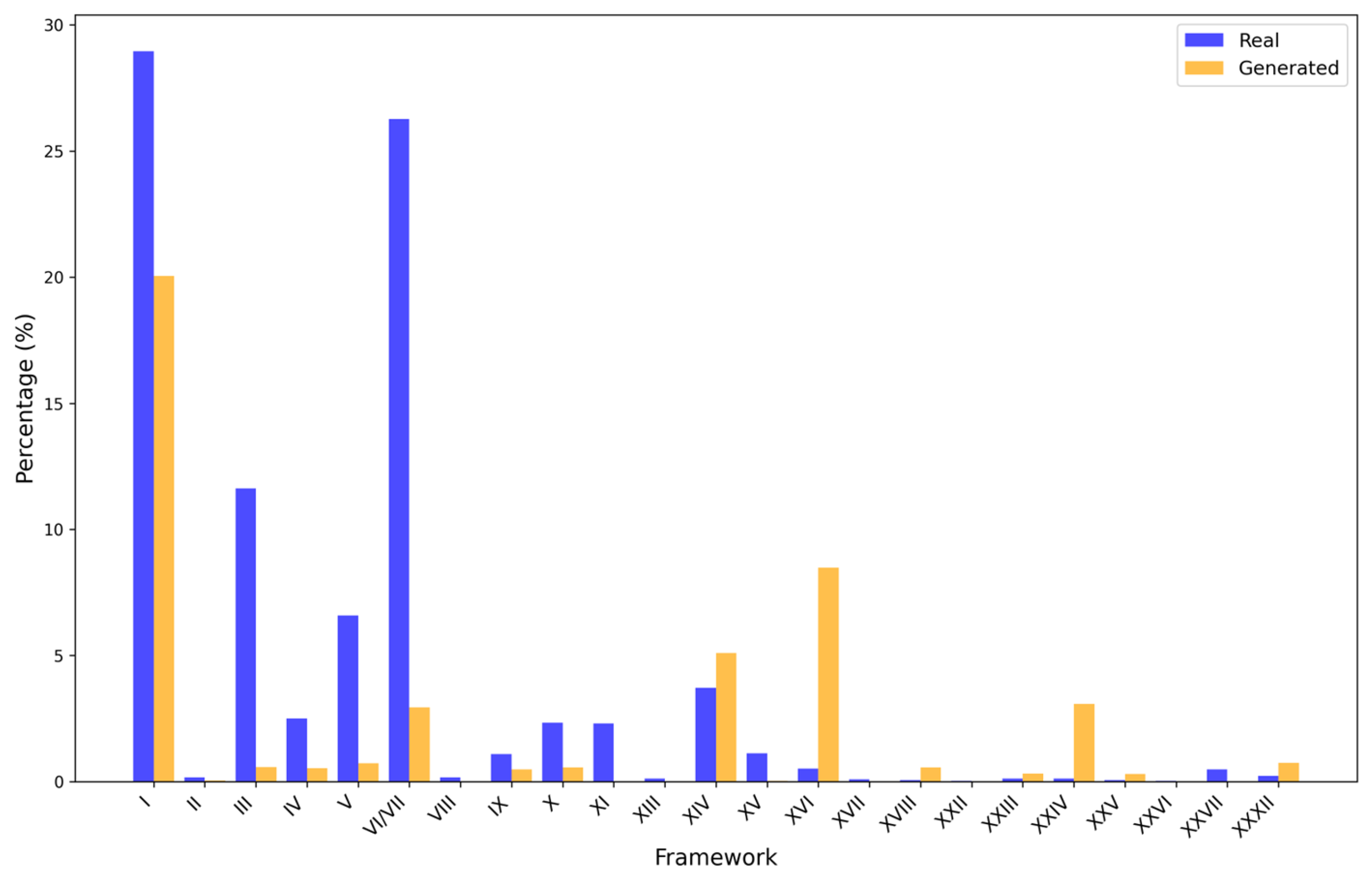

2.1.3. Cysteine Framework Distribution Analysis

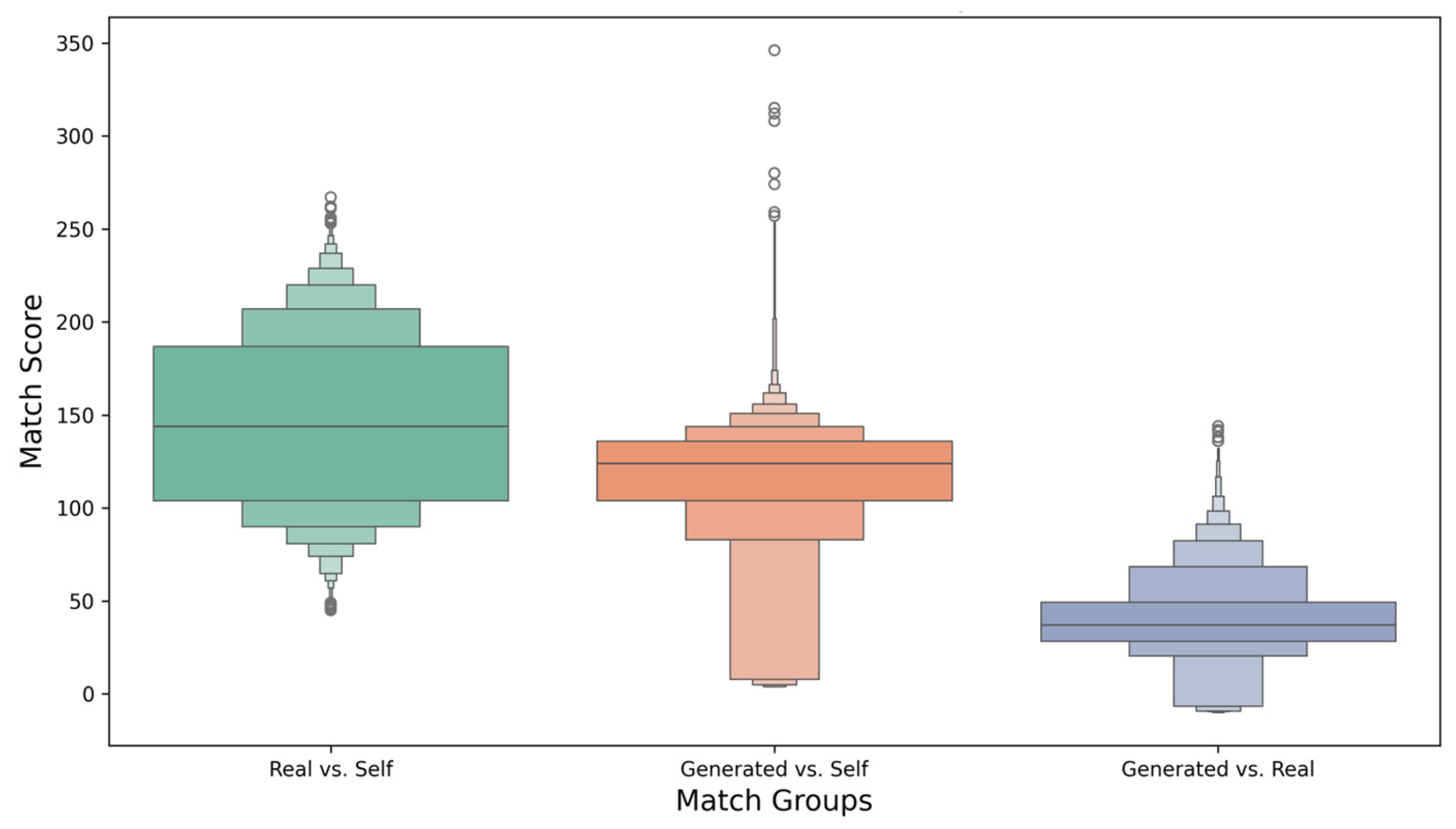

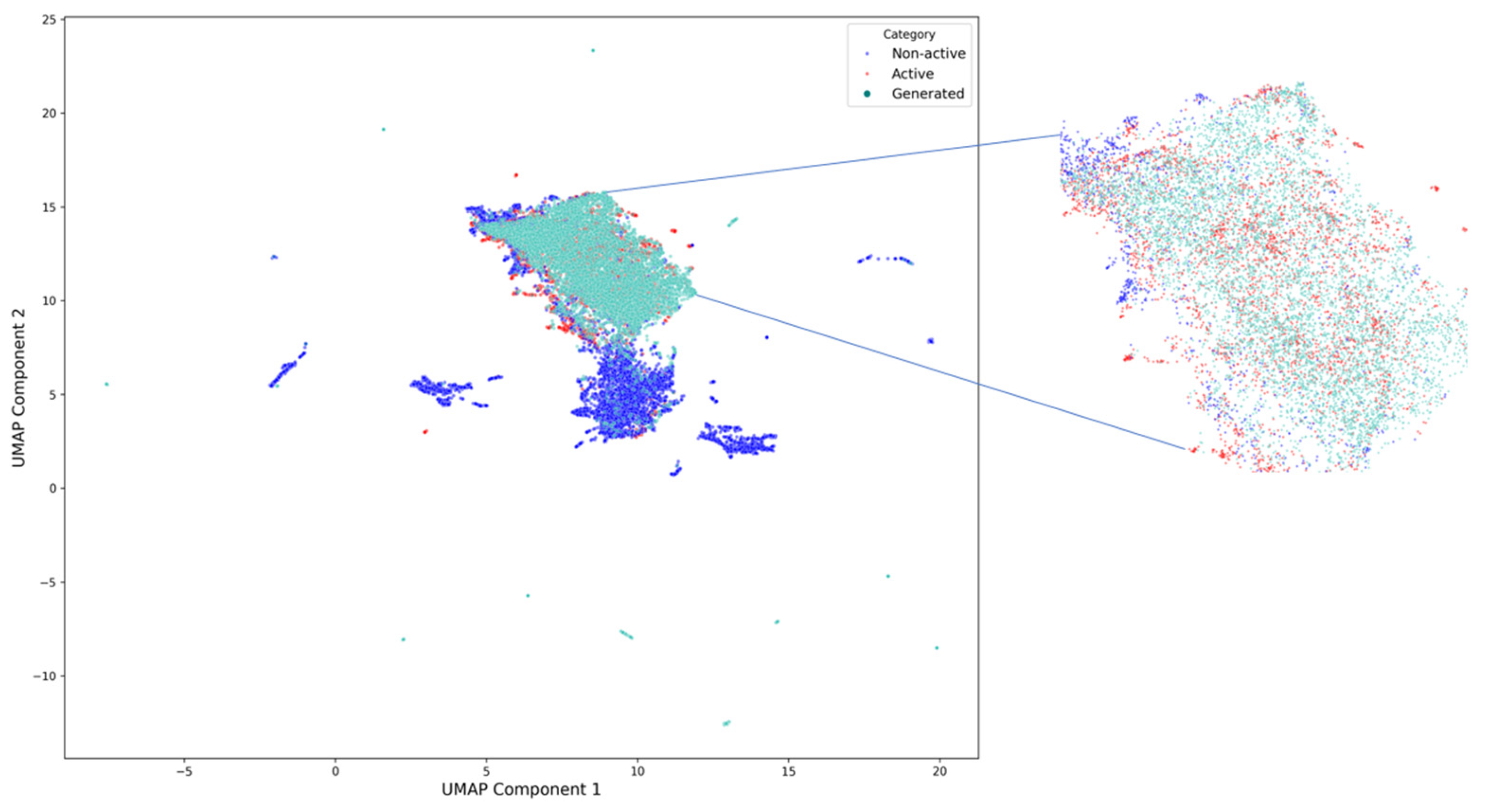

2.1.4. Diversity Analysis

2.1.5. Activity Assessment

2.2. Comparative Analysis of Model Performance

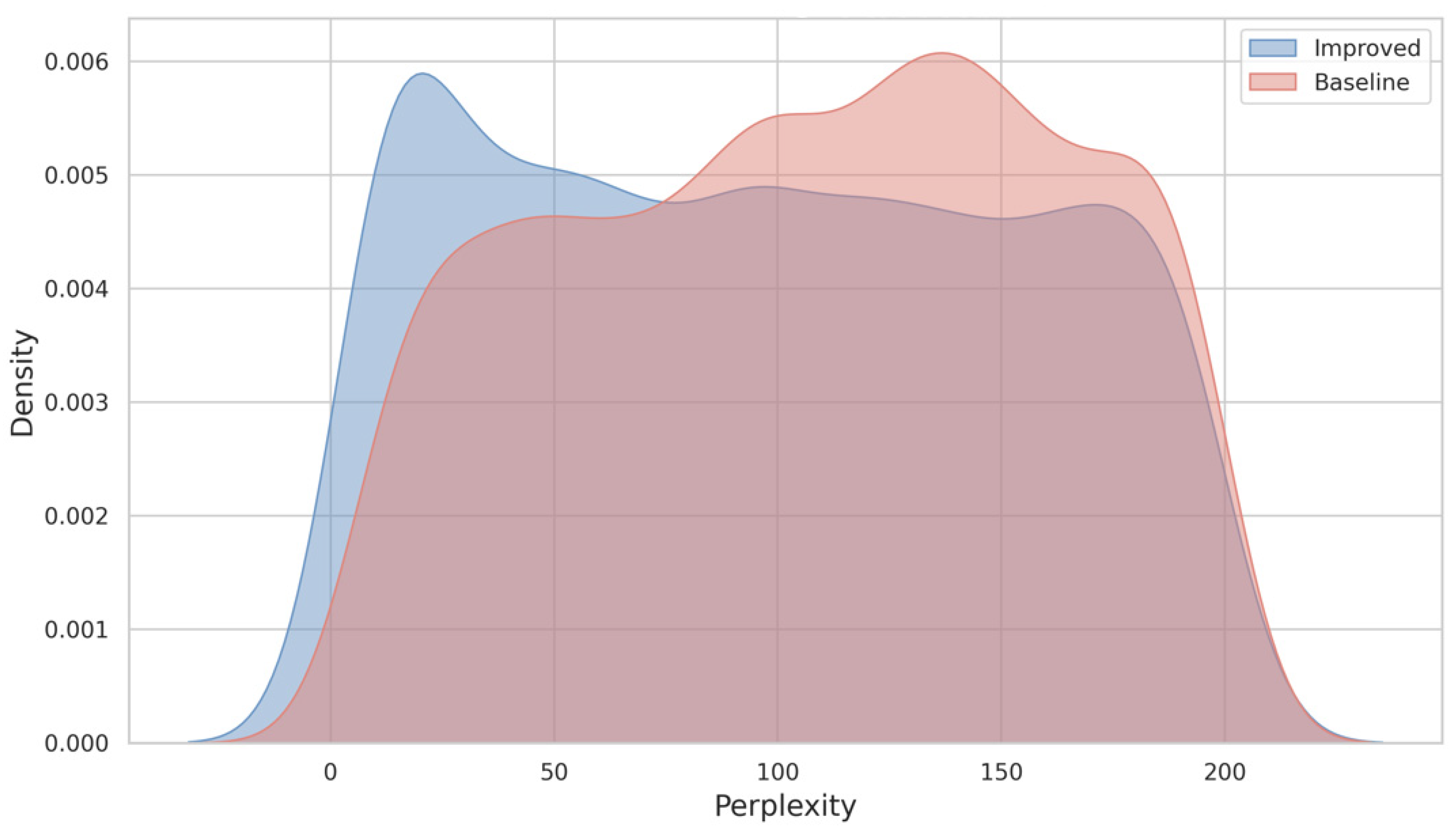

2.2.1. Perplexity Comparison

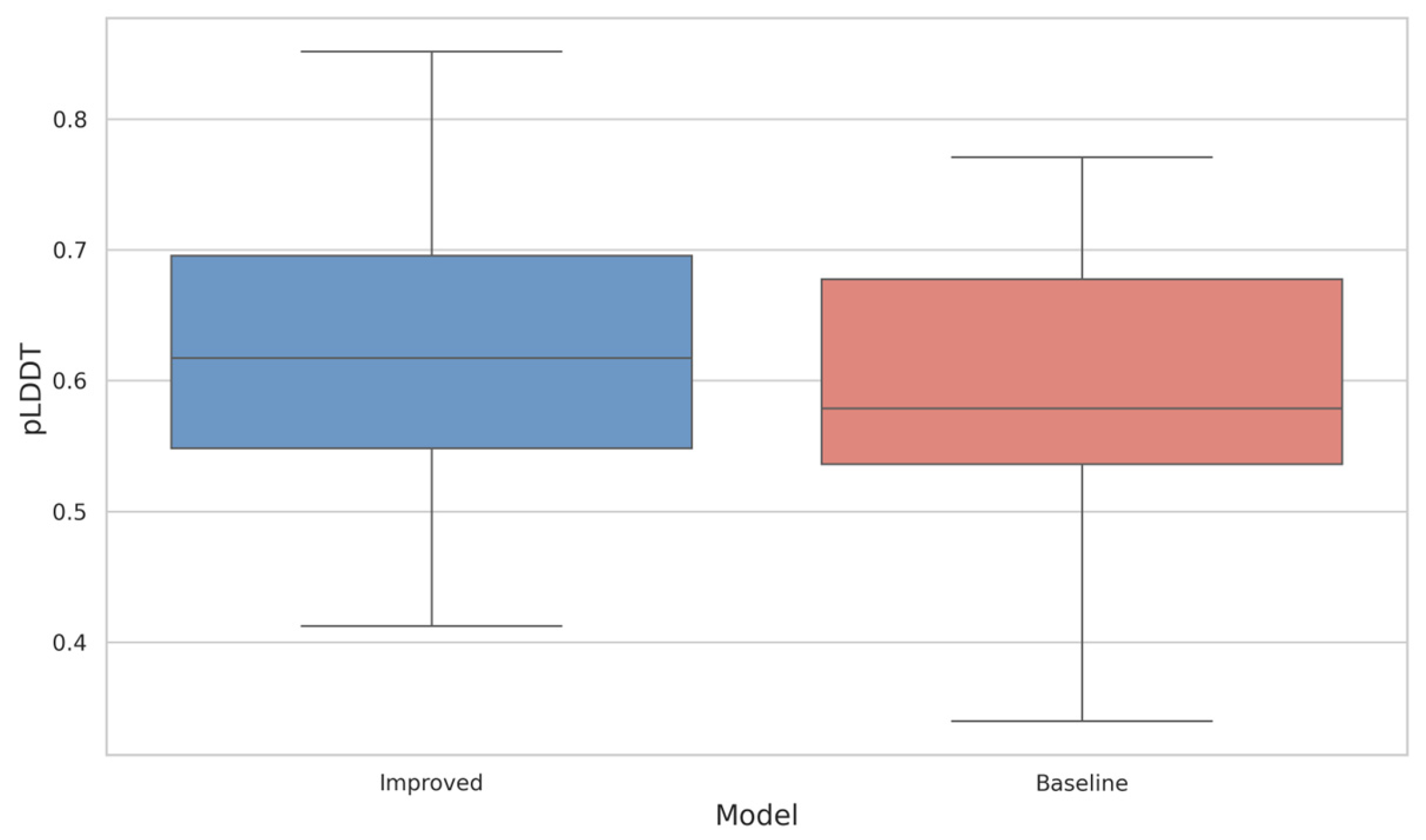

2.2.2. Evaluation of Structural Orderliness in Generated Sequences

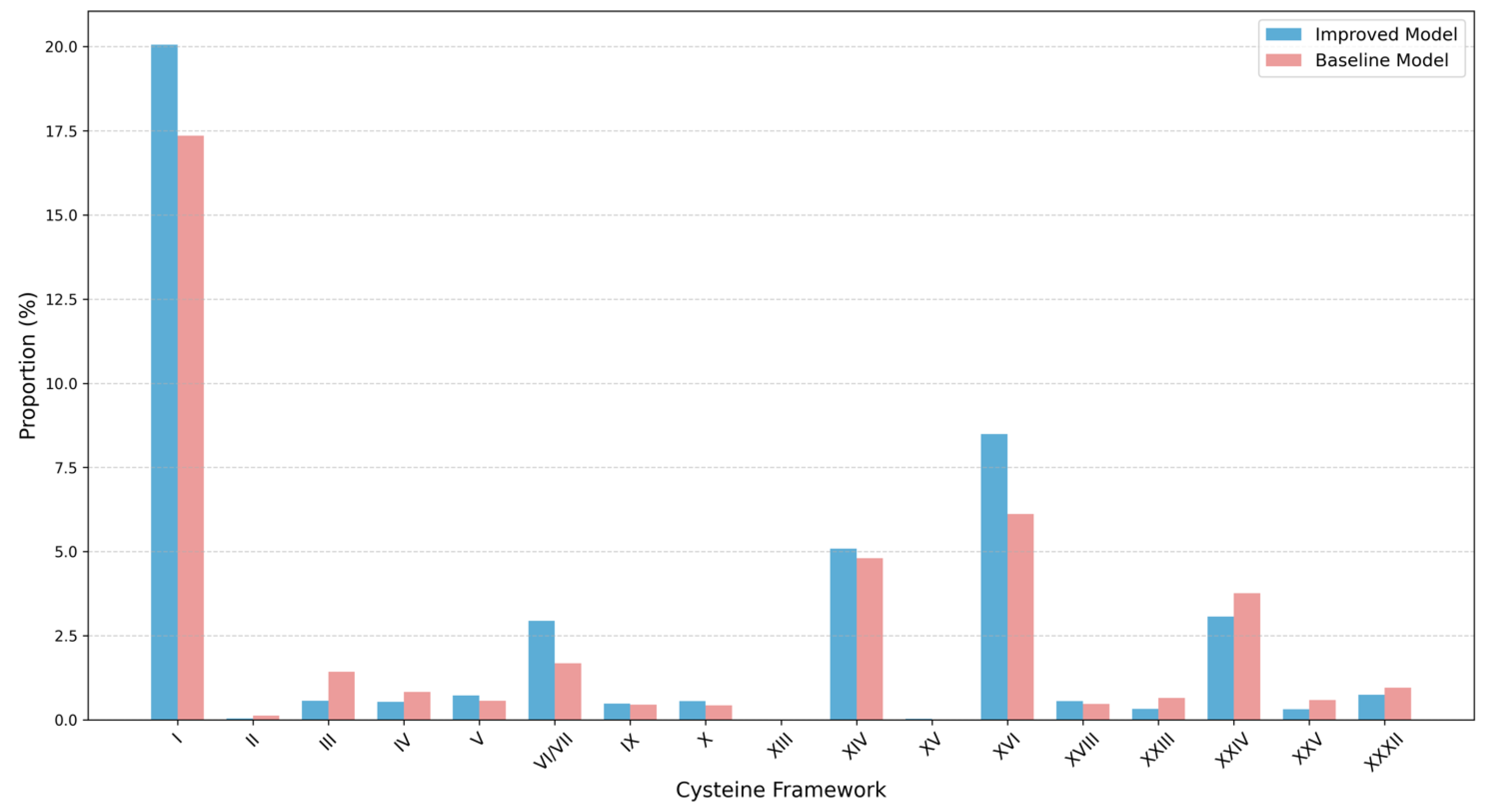

2.2.3. Evaluation of Cysteine Framework Distributions

2.2.4. Analysis of Activity Prediction

2.3. Functional Validation of Generated Sequences

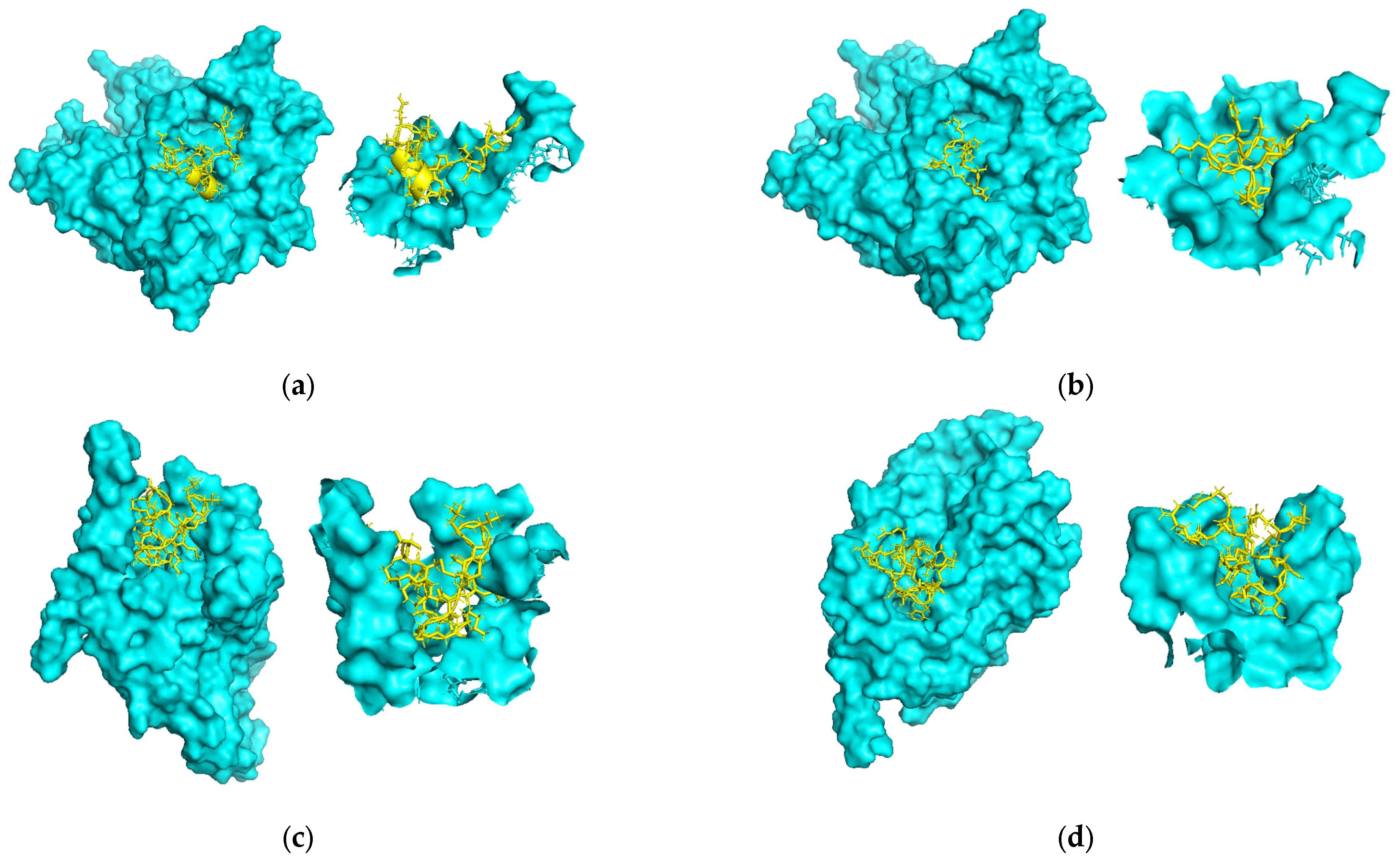

2.3.1. Analysis of Molecular Docking

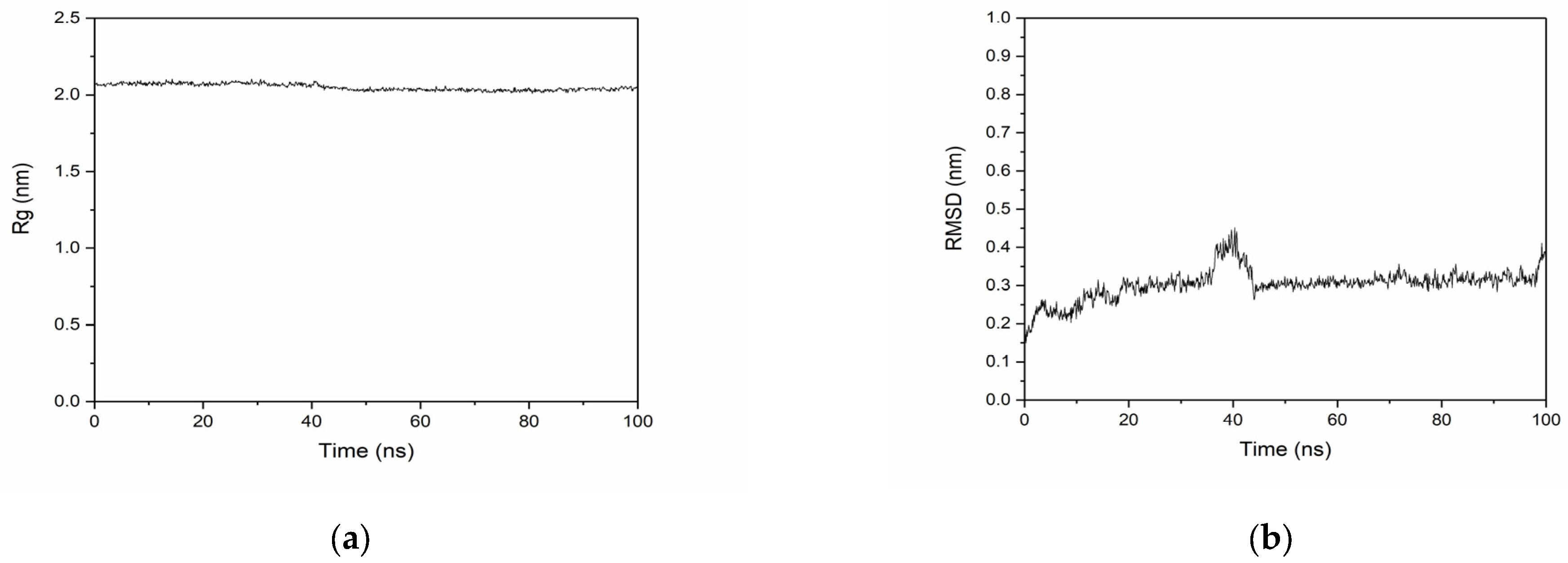

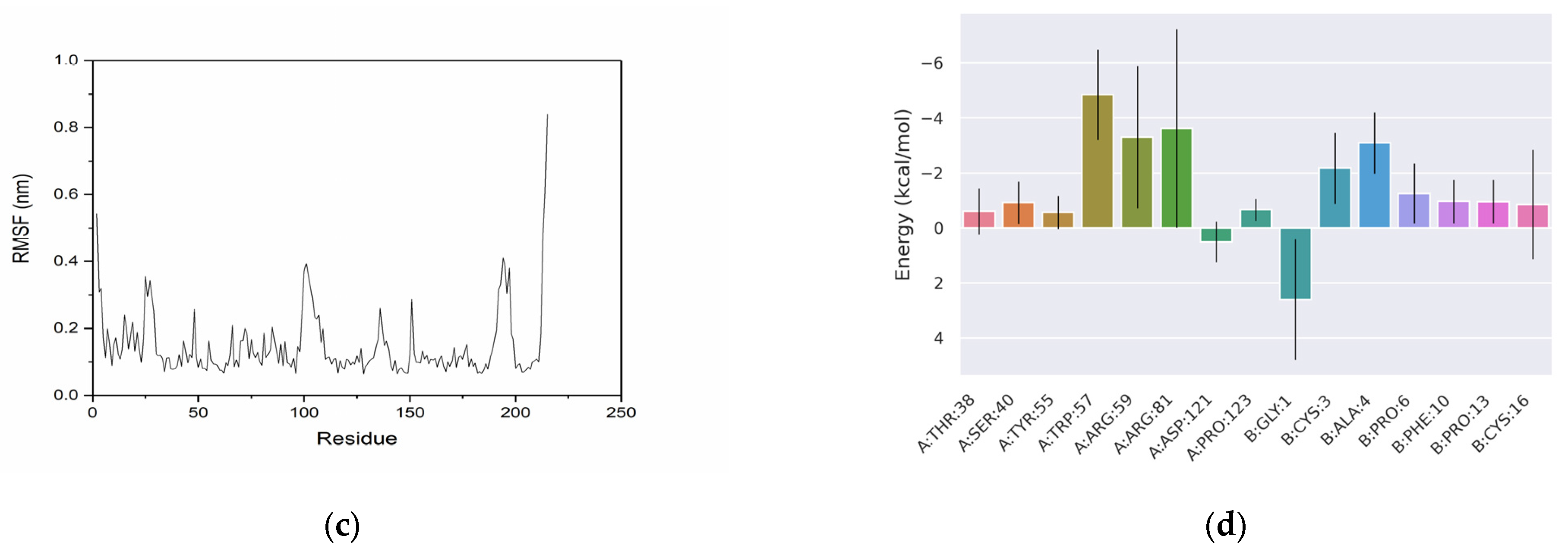

2.3.2. Analysis of Molecular Dynamics Simulation

3. Discussion

4. Materials and Methods

4.1. Data Collection and Preprocessing

4.2. Generation Model

4.3. pLDDT Prediction

4.4. Activity Prediction

4.5. Molecular Docking

4.6. Molecular Dynamics Simulation

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Model | Sequences with pLDDT > 0.7 (%) | Mean pLDDT |
|---|---|---|
| Improved | 21.08 ± 0.56 | 0.6090 ± 0.0033 |
| Baseline | 14.52 ± 1.23 | 0.5820 ± 0.0019 |
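The two columns of this table summarize per-sequence pLDDT scores in two ways. One way to compute them is sketched below. It assumes that pLDDT is on a 0–1 scale, as the values suggest, and that each "±" is the sample standard deviation over repeated generation runs; the source does not say how the spread was obtained. The function names and the per-run scores are hypothetical.

```python
import statistics
from typing import Sequence, Tuple


def plddt_summary(scores: Sequence[float], threshold: float = 0.7) -> Tuple[float, float]:
    """Return (percentage of sequences with pLDDT > threshold, mean pLDDT) for one run.

    Assumes scores are on a 0-1 scale, matching the values in the table.
    """
    if not scores:
        raise ValueError("no pLDDT scores given")
    above = sum(1 for s in scores if s > threshold)  # strict ">" as in the column header
    return 100.0 * above / len(scores), statistics.fmean(scores)


def mean_and_sd(per_run_values: Sequence[float]) -> Tuple[float, float]:
    """Aggregate one metric over repeated runs as mean and sample standard deviation."""
    return statistics.fmean(per_run_values), statistics.stdev(per_run_values)


# Hypothetical usage: three generation runs, each scored with a structure predictor.
runs = [
    [0.55, 0.72, 0.61, 0.80],
    [0.58, 0.69, 0.75, 0.49],
    [0.62, 0.71, 0.52, 0.66],
]
summaries = [plddt_summary(r) for r in runs]
pct_mean, pct_sd = mean_and_sd([p for p, _ in summaries])
plddt_mean, plddt_sd = mean_and_sd([m for _, m in summaries])
print(f"{pct_mean:.2f} ± {pct_sd:.2f} % above 0.7; mean pLDDT {plddt_mean:.4f} ± {plddt_sd:.4f}")
```

The strict inequality follows the column header. A score of exactly 0.7 is therefore not counted as above the threshold.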

| Target | Number of Sequences | Proportion (%) |
|---|---|---|
| nAChR | 173 | 90.57 |
| Na⁺ channel | 1 | 0.52 |
| Ca²⁺ channel | 2 | 1.05 |
| K⁺ channel | 0 | 0.00 |
| Unknown | 15 | 7.85 |

| Framework | Cysteine Pattern | Cysteines | Connectivity | Percentage (%) |
|---|---|---|---|---|
| I | CC-C-C | 4 | I–III, II–IV | 28.95 |
| IV | CC-C-C-C-C | 6 | I–V, II–III, IV–VI | 2.50 |
| V | CC-CC | 4 | I–III, II–IV | 6.59 |
| VI/VII | C-C-CC-C-C | 6 | I–IV, II–V, III–VI | 26.27 |
| IX | C-C-C-C-C-C | 6 | I–IV, II–V, III–VI | 1.09 |
| X | CC-C.[PO]C | 4 | I–IV, II–III | 2.33 |
| XI | C-C-CC-CC-C-C | 8 | I–IV, II–VI, III–VII, V–VIII | 2.30 |
| XIV | C-C-C-C | 4 | I–III, II–IV | 3.71 |
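The Cysteine Pattern column appears to follow the convention used by ConoServer. Adjacent cysteines are written together, and "-" stands for one or more non-cysteine residues. Framework X also uses regex syntax: "." is any single residue, and "[PO]" is proline or hydroxyproline (O).

The sketch below collapses a sequence to such a pattern and matches it against the eight frameworks listed here. It is a minimal illustration under these assumptions, not the authors' code, and the function names are hypothetical. It treats "." as a single non-cysteine residue, which is an assumption. It also ignores the Connectivity column, because disulfide pairing cannot be read from the sequence alone.

```python
import re
from typing import List, Optional, Tuple

# Frameworks and cysteine patterns copied from the table. Framework X is listed
# first because every framework X sequence also satisfies the looser I pattern.
FRAMEWORKS: List[Tuple[str, str]] = [
    ("X", "CC-C.[PO]C"),
    ("I", "CC-C-C"),
    ("IV", "CC-C-C-C-C"),
    ("V", "CC-CC"),
    ("VI/VII", "C-C-CC-C-C"),
    ("IX", "C-C-C-C-C-C"),
    ("XI", "C-C-CC-CC-C-C"),
    ("XIV", "C-C-C-C"),
]


def pattern_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a cysteine pattern into a regex over the full mature sequence."""
    body = pattern.replace("-", "[^C]+")  # "-" = one or more non-Cys residues
    body = body.replace(".", "[^C]")      # "." = exactly one non-Cys residue (assumption)
    # Non-cysteine residues may precede the first and follow the last cysteine.
    return re.compile(rf"[^C]*{body}[^C]*")


COMPILED = [(name, pattern_to_regex(p)) for name, p in FRAMEWORKS]


def cysteine_pattern(seq: str) -> str:
    """Collapse a sequence to its cysteine pattern, e.g. 'GCCSDPRCAWRC' -> 'CC-C-C'."""
    s = seq.upper()
    if "C" not in s:
        return ""
    core = s[s.find("C"): s.rfind("C") + 1]  # trim residues outside the first/last Cys
    return re.sub(r"[^C]+", "-", core)


def assign_framework(seq: str) -> Optional[str]:
    """Return the first framework from the table whose pattern matches, else None."""
    s = seq.upper()
    for name, regex in COMPILED:
        if regex.fullmatch(s):
            return name
    return None


# α-conotoxin ImI; prints: CC-C-C I
print(cysteine_pattern("GCCSDPRCAWRC"), assign_framework("GCCSDPRCAWRC"))
```

Framework X must be tested before framework I. The simple collapsing rule maps both to "CC-C-C", so only the full regex can separate them.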

| Metric | Value |
|---|---|
| Accuracy (ACC) | 0.959 |
| Balanced accuracy (BACC) | 0.955 |
| Sensitivity (Sn) | 0.969 |
| Specificity (Sp) | 0.942 |
| Matthews correlation coefficient (MCC) | 0.911 |
| Area under the curve (AUC) | 0.991 |
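The metrics in this table are standard binary-classification scores. BACC is the mean of Sn and Sp, so (0.969 + 0.942) / 2 = 0.9555 is consistent with the reported 0.955 up to rounding.

The sketch below shows how these scores are usually computed from true labels and predicted probabilities. It assumes a 0.5 decision threshold, which the source does not state. AUC is computed as the probability that a randomly chosen positive scores higher than a randomly chosen negative, with ties counted as one half. The function names and toy data are hypothetical.

```python
import math
from typing import Dict, Sequence


def roc_auc(y_true: Sequence[int], y_score: Sequence[float]) -> float:
    """AUC as P(score of a random positive > score of a random negative); ties count 0.5."""
    pos = [s for t, s in zip(y_true, y_score) if t == 1]
    neg = [s for t, s in zip(y_true, y_score) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def binary_metrics(y_true: Sequence[int], y_score: Sequence[float],
                   threshold: float = 0.5) -> Dict[str, float]:
    """Compute ACC, BACC, Sn, Sp, MCC and AUC for labels in {0, 1}."""
    auc = roc_auc(y_true, y_score)  # also validates that both classes are present
    y_pred = [1 if s >= threshold else 0 for s in y_score]  # assumed 0.5 cut-off
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    sn = tp / (tp + fn)  # sensitivity: recall on positives
    sp = tn / (tn + fp)  # specificity: recall on negatives
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "ACC": (tp + tn) / len(pairs),
        "BACC": (sn + sp) / 2,
        "Sn": sn,
        "Sp": sp,
        "MCC": (tp * tn - fp * fn) / denom if denom else 0.0,
        "AUC": auc,
    }


# Toy example with made-up labels and scores.
print(binary_metrics([1, 1, 1, 0, 0, 0], [0.9, 0.8, 0.4, 0.6, 0.2, 0.1]))
```

Only AUC is independent of the threshold. The other five metrics change if a different cut-off is used.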


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhao, G.; Ge, C.; Han, W.; Yu, R.; Liu, H. ConoGPT: Fine-Tuning a Protein Language Model by Incorporating Disulfide Bond Information for Conotoxin Sequence Generation. Toxins 2025, 17, 93. https://doi.org/10.3390/toxins17020093
