Reducing Risky Security Behaviours: Utilising Affective Feedback to Educate Users †

Abstract

1. Introduction

2. Background

2.1. Risky Security Behaviour

2.2. Affective Feedback

2.3. Potential Threats

3. Analysis

3.1. Keeping Users Safe and Preventing Attacks

3.2. Issues with Traditional Security Tools and Advice

4. Methodology

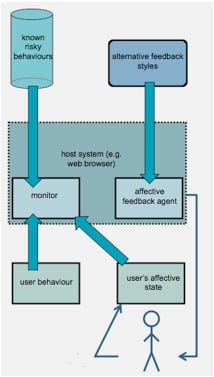

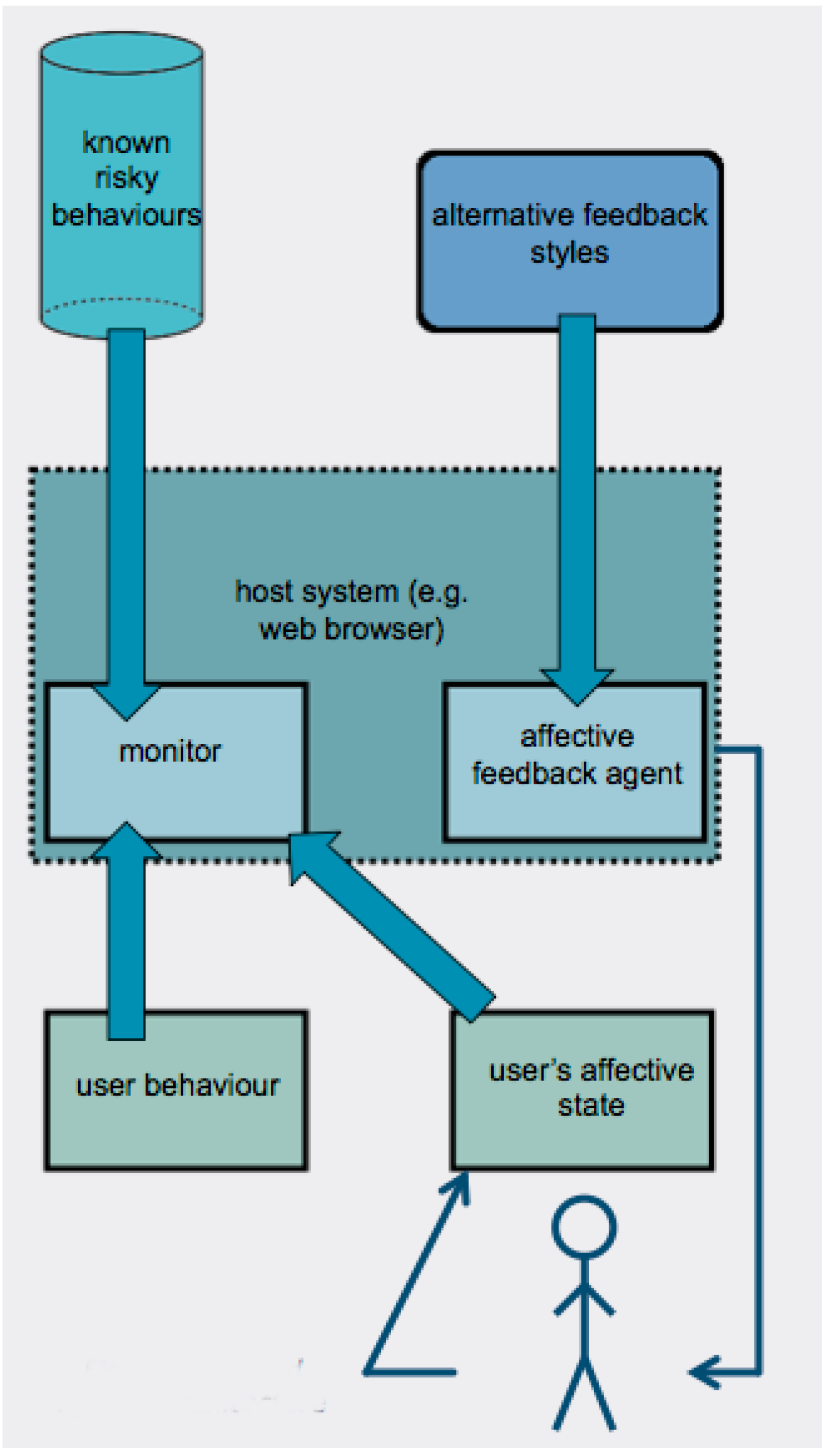

4.1. Proposed System Overview

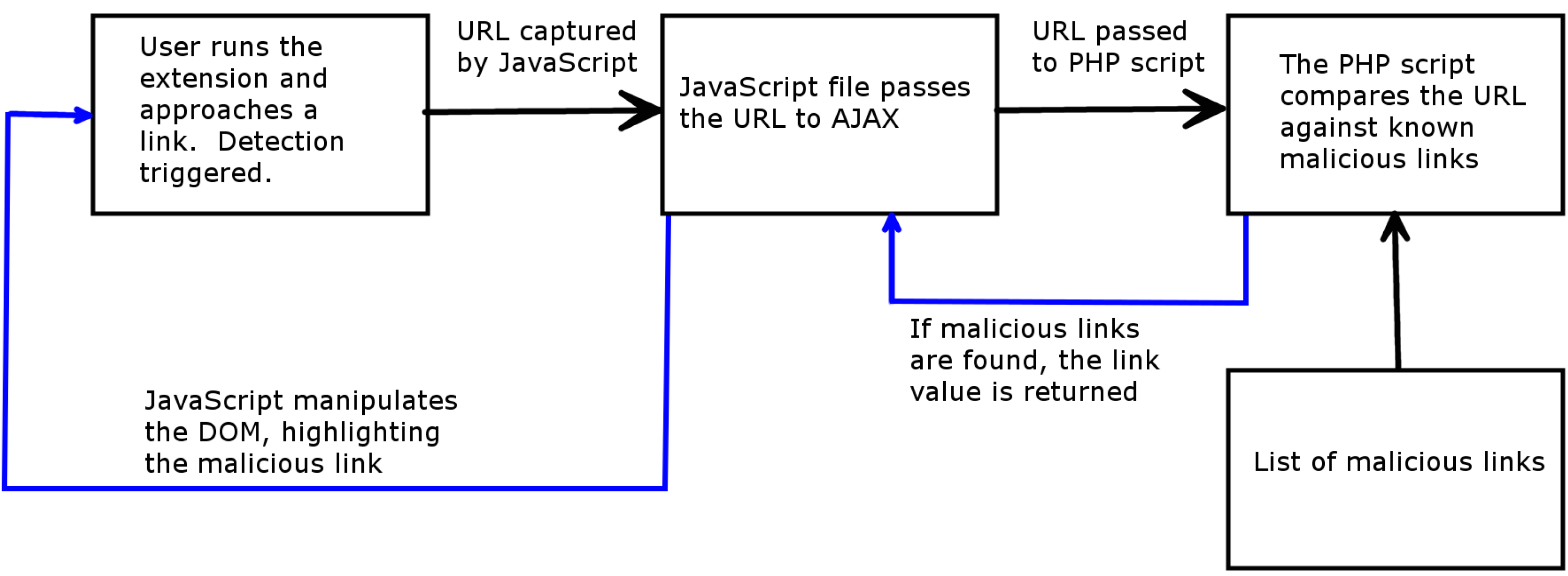

4.2. Technical Details

5. Discussion

6. Conclusions and Future Work

Acknowledgments

Author Contributions

Conflicts of Interest


© 2014 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shepherd, L.A.; Archibald, J.; Ferguson, R.I. Reducing Risky Security Behaviours: Utilising Affective Feedback to Educate Users. Future Internet 2014, 6, 760-772. https://doi.org/10.3390/fi6040760
