Distributed Performance Measurement and Usability Assessment of the Tor Anonymization Network

Abstract

1. Introduction

2. Related Work

| Author | Critical Latency Threshold (s) | Description | Year | Source Classification |
|---|---|---|---|---|
| Tolia [32] | 1 | Thin client response time: annoying | 2006 | Journal |
| Nah [33] | 2 | For simple information retrieval tasks | 2004 | Journal |
| Tolia [32] | 2 | Thin client response time: unacceptable | 2006 | Journal |
| Tolia [32] | 5 | Thin client response time: unusable | 2006 | Journal |
| AccountingWEB [34] | 8 | Optimal web page waiting time | 2000 | Practical advice |
| Bhatti [35] | 8.57 | Average tolerable delay (with a high standard deviation of 5.85 s) | 2000 | Conference |
| Selvidge [36] | 10 | Delay tolerated by users | 1999 | Practical advice |
| Nielsen [28] | 10 | Optimal web page waiting time | 1999 | Practical advice |
| Galletta [37] | 12 | Start of a significant decrease in user satisfaction | 2004 | Journal |
| Nah [33] | 15 | Free user from physical and mental captivity | 2004 | Journal |
| Ramsay [38] | 41 | Suggested cut-off for long delays | 1998 | Journal |
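To illustrate how a measured latency can be read against these thresholds, the Python sketch below transcribes the table into a list and returns every threshold that a given latency exceeds. The `Threshold` type and the `exceeded_thresholds` function are hypothetical helpers and not part of the study's tooling. Because the thresholds come from heterogeneous studies and task types, such a lookup is only a rough guide.

```python
from typing import NamedTuple


class Threshold(NamedTuple):
    """One row of the latency-threshold table (hypothetical helper type)."""
    source: str
    seconds: float
    description: str


# Rows transcribed from the table above; descriptions are shortened.
THRESHOLDS = [
    Threshold("Tolia [32]", 1, "thin client: annoying"),
    Threshold("Nah [33]", 2, "simple information retrieval tasks"),
    Threshold("Tolia [32]", 2, "thin client: unacceptable"),
    Threshold("Tolia [32]", 5, "thin client: unusable"),
    Threshold("AccountingWEB [34]", 8, "optimal web page waiting time"),
    Threshold("Bhatti [35]", 8.57, "average tolerable delay"),
    Threshold("Selvidge [36]", 10, "delay tolerated by users"),
    Threshold("Nielsen [28]", 10, "optimal web page waiting time"),
    Threshold("Galletta [37]", 12, "significant decrease in satisfaction begins"),
    Threshold("Nah [33]", 15, "free user from physical and mental captivity"),
    Threshold("Ramsay [38]", 41, "suggested cut-off for long delays"),
]


def exceeded_thresholds(latency_s: float) -> list[Threshold]:
    """Return every threshold strictly below the measured latency, lowest first."""
    return sorted(
        (t for t in THRESHOLDS if latency_s > t.seconds),
        key=lambda t: t.seconds,
    )
```

For example, `exceeded_thresholds(9.0)` returns the six rows from 1 s up to Bhatti's 8.57 s. The thresholds are kept as data rather than as hard-coded comparisons so that further studies can be appended as rows.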

3. Measurement Setup

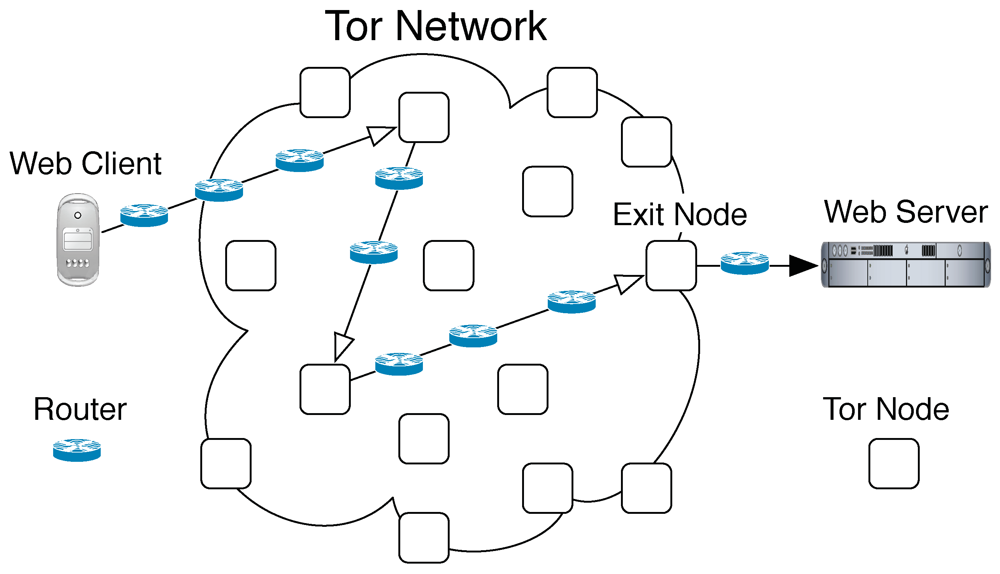

3.1. Introduction to Tor

| Study part | Element | Content |
|---|---|---|
| Setup | Metrics (HTTP) | Latency (core, page); download throughput |
| Setup | Locations | Australia, Brazil, Canada, Germany, Russia, Taiwan, UK, USA (2×) |
| Setup | Time frame | 28 days |
| Results | Analyses | Tor vs. direct; exit nodes; daytime comparison; mapping of user cancelation rates |

3.2. Metrics

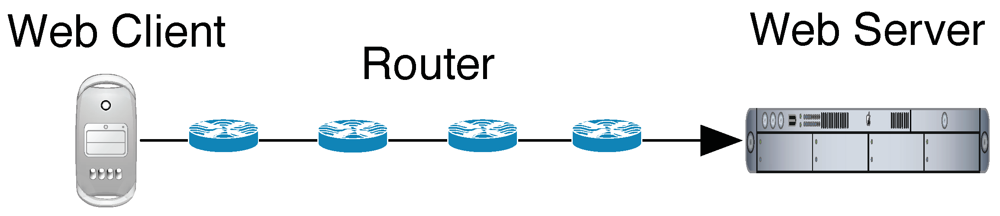

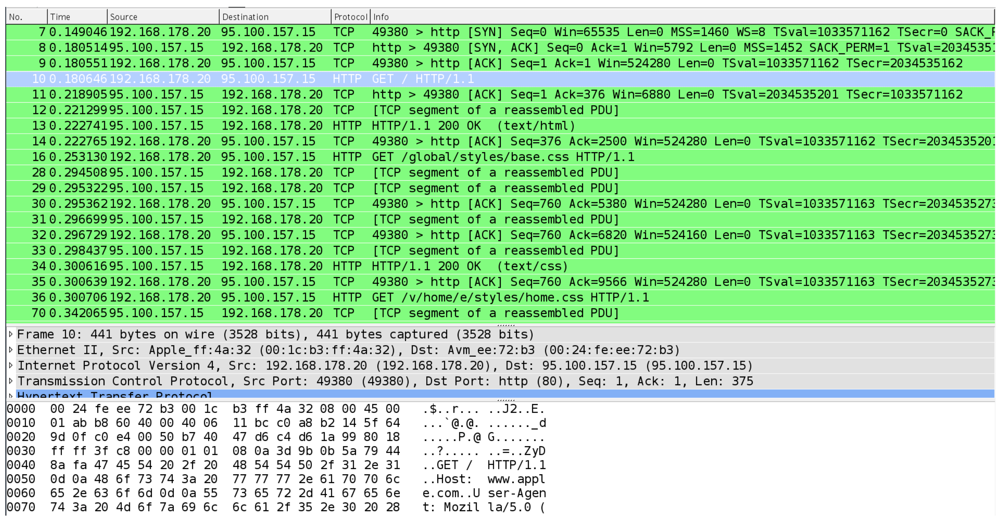

3.2.1. Core Latency: Duration of the First HTTP Request

3.2.2. Page Latency: Duration of a Complete Web Page Download

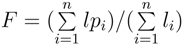


where n is the number of web pages (here 500), l_p is the latency measured with wget -p, and l is the latency measured with wget. Both latencies were measured five times to smooth out one-time effects. Our results indicate an extrapolation factor F of 2.4, which means that, on average, the following approximation holds: Page Latency ≈ 2.4 × Core Latency. Parallel control experiments using the YSlow plugin for Firefox indicated that this approach provides a good estimate in the average case for our set of websites. However, current web browsers download web pages via parallel connections, which should speed up the download of a complete web page.
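The extrapolation can be sketched in Python. The exact definition of F is given in the equation shown in the figure above and is not reproduced here. This sketch assumes that F is the mean, over the n pages, of the ratio between a page's averaged wget -p latency and its averaged wget latency. The function names are hypothetical.

```python
from statistics import mean


def extrapolation_factor(page_runs: list[list[float]],
                         core_runs: list[list[float]]) -> float:
    """Estimate F from repeated measurements (ASSUMED definition, see lead-in).

    page_runs[i] holds the repeated `wget -p` latencies (seconds) for page i;
    core_runs[i] holds the repeated plain `wget` latencies for the same page.
    Each page's runs are averaged first, then the per-page ratios are averaged.
    """
    if not page_runs or len(page_runs) != len(core_runs):
        raise ValueError("need the same, non-zero number of pages for both measurements")
    ratios = [mean(lp) / mean(l) for lp, l in zip(page_runs, core_runs)]
    return mean(ratios)


def estimate_page_latency(core_latency_s: float, factor: float) -> float:
    """Approximate page latency from a core latency: Page ≈ F × Core."""
    return factor * core_latency_s
```

With the reported F of 2.4, a core latency of 1.5 s corresponds to an estimated page latency of about 3.6 s. Averaging the repetitions per page before taking the ratio keeps one slow run from dominating that page's contribution.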

3.2.3. Download Throughput via HTTP

3.3. Experimental Setup on PlanetLab

| PlanetLab Nodes as Web Clients | Country | Uptime (days) | HTTP Requests | Downloads |
|---|---|---|---|---|
| pl2.eng.monash.edu.au | Australia | 38 | 592,773 | 43,212 |
| planetlab2.pop-parnp.br | Brazil | 38 | 525,863 | 42,712 |
| mercury.silicon-valley.ru | Russia | 38 | 605,313 | 44,850 |
| planetlab2.aston.ac.uk | United Kingdom | 36 | 418,217 | 37,023 |
| planetlab2.wiwi.hu-berlin.de | Germany | 33 | 464,604 | 37,700 |
| planet-lab1.cs.ucr.edu | USA (West Coast) | 30 | 325,305 | 38,759 |
| orbpl1.rutgers.edu | USA (East Coast) | 25 | 279,339 | 24,684 |
| planetlab02.erin.utoronto.ca | Canada | 24 | 355,481 | 32,747 |
| adam.ee.ntu.edu.tw | Taiwan | 23 | 252,936 | 25,598 |
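For readers who want to work with the per-node figures, the sketch below re-enters the table as Python data and derives aggregate counts and a per-day request rate. The `Node` type, the helper functions, and the derived rate are illustrative additions, not figures reported by the study.

```python
from typing import NamedTuple


class Node(NamedTuple):
    """One PlanetLab web client from the table above (hypothetical helper type)."""
    host: str
    country: str
    uptime_days: int
    http_requests: int
    downloads: int


NODES = [
    Node("pl2.eng.monash.edu.au", "Australia", 38, 592_773, 43_212),
    Node("planetlab2.pop-parnp.br", "Brazil", 38, 525_863, 42_712),
    Node("mercury.silicon-valley.ru", "Russia", 38, 605_313, 44_850),
    Node("planetlab2.aston.ac.uk", "United Kingdom", 36, 418_217, 37_023),
    Node("planetlab2.wiwi.hu-berlin.de", "Germany", 33, 464_604, 37_700),
    Node("planet-lab1.cs.ucr.edu", "USA (West Coast)", 30, 325_305, 38_759),
    Node("orbpl1.rutgers.edu", "USA (East Coast)", 25, 279_339, 24_684),
    Node("planetlab02.erin.utoronto.ca", "Canada", 24, 355_481, 32_747),
    Node("adam.ee.ntu.edu.tw", "Taiwan", 23, 252_936, 25_598),
]


def totals(nodes: list[Node]) -> tuple[int, int]:
    """Sum HTTP requests and downloads over all nodes."""
    return (sum(n.http_requests for n in nodes),
            sum(n.downloads for n in nodes))


def requests_per_day(node: Node) -> float:
    """Average number of HTTP requests per day of node uptime."""
    return node.http_requests / node.uptime_days
```

Normalizing by uptime matters when comparing nodes, because the uptimes in the table range from 23 to 38 days.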

3.4. Time Frame

4. Measurement Results

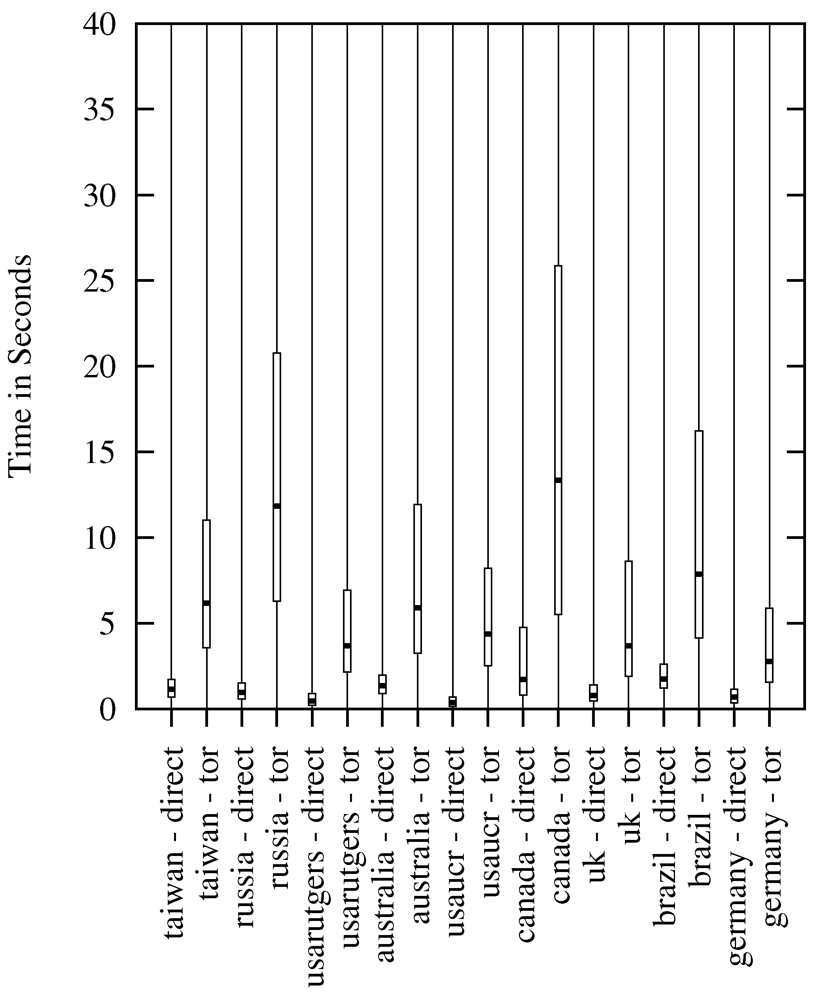

4.1. HTTP Requests

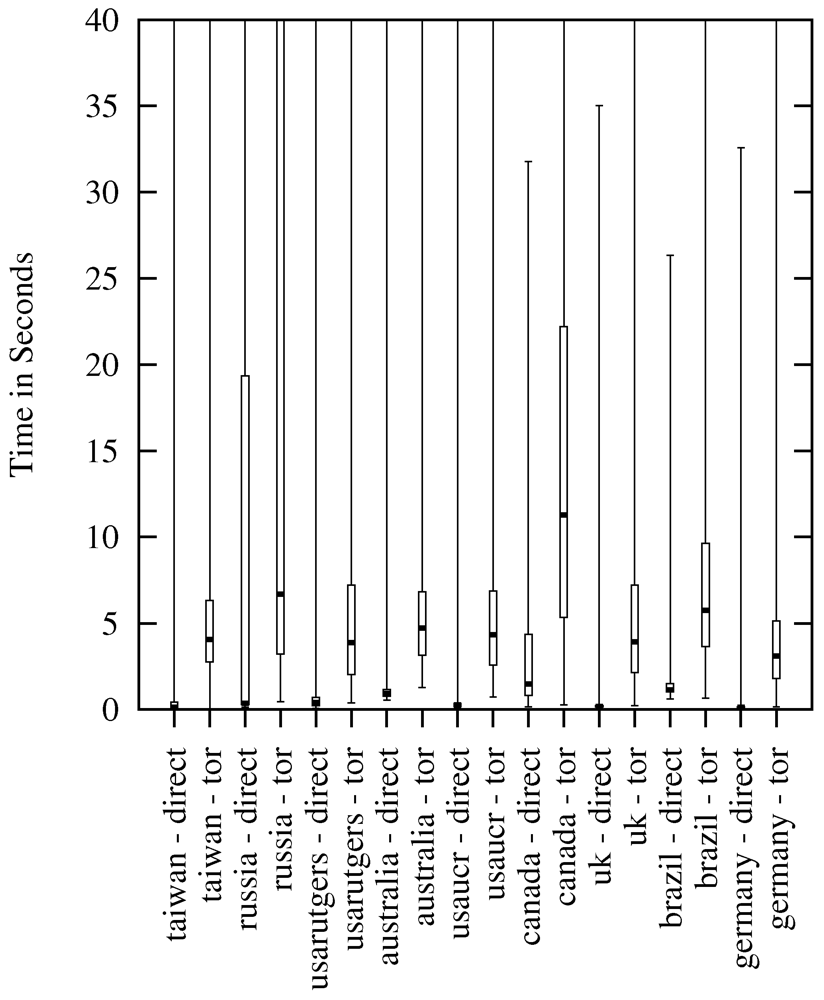

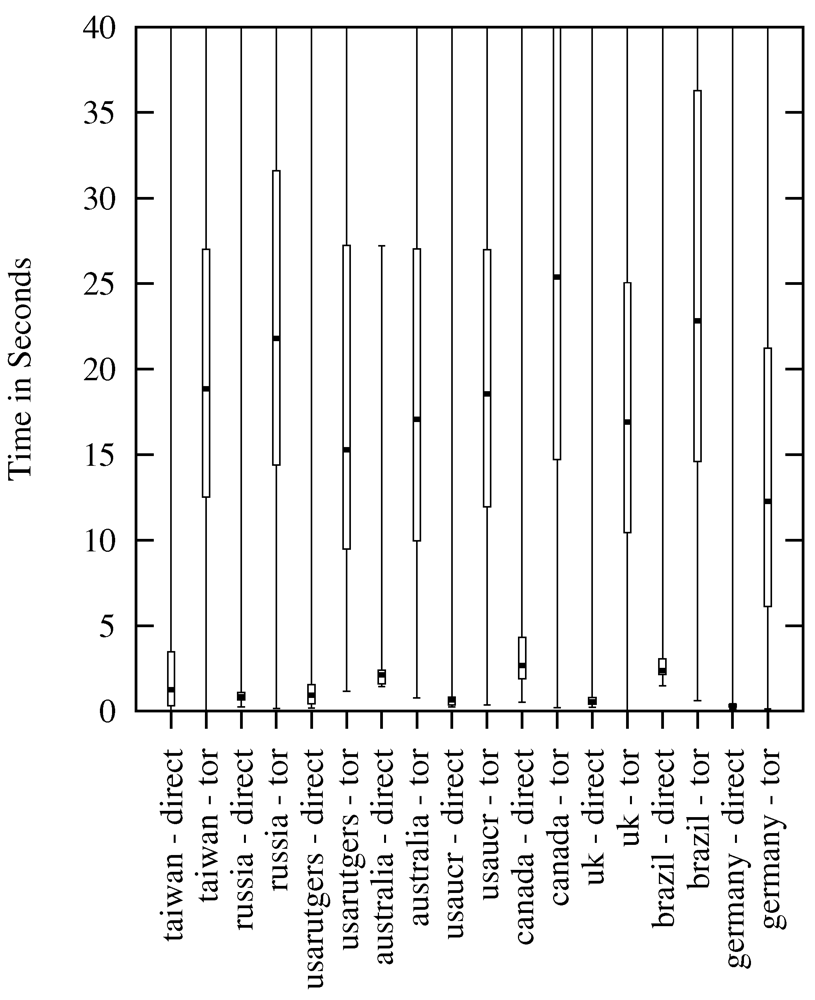

4.2. Download Requests

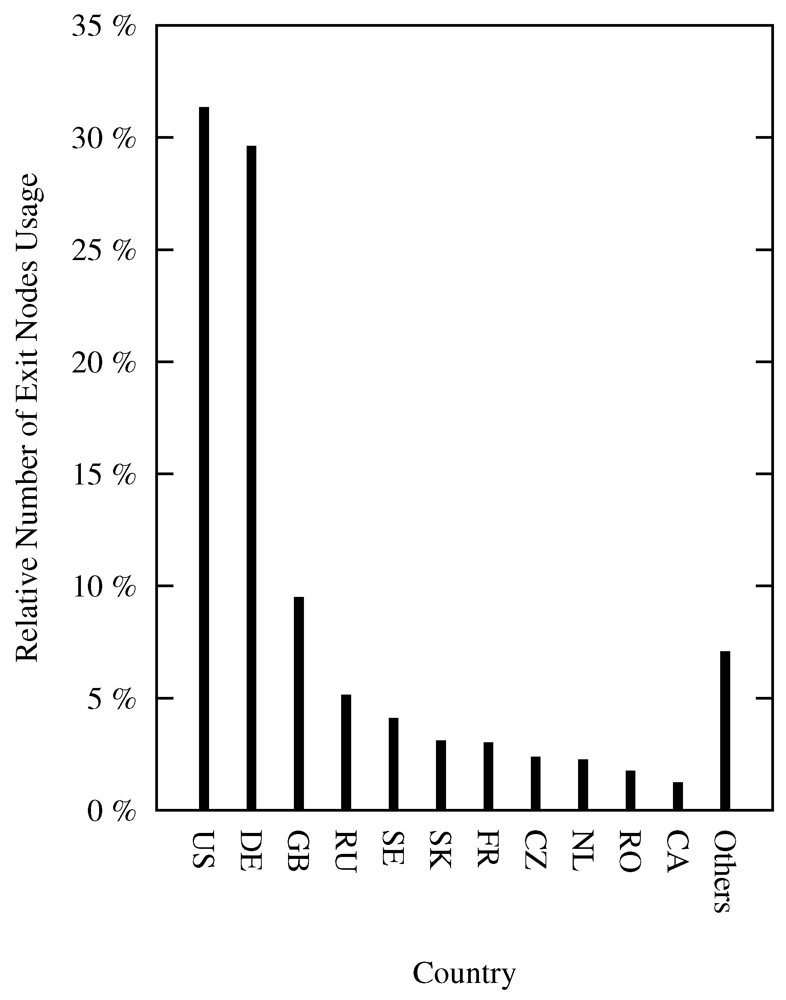

4.3. Exit Nodes

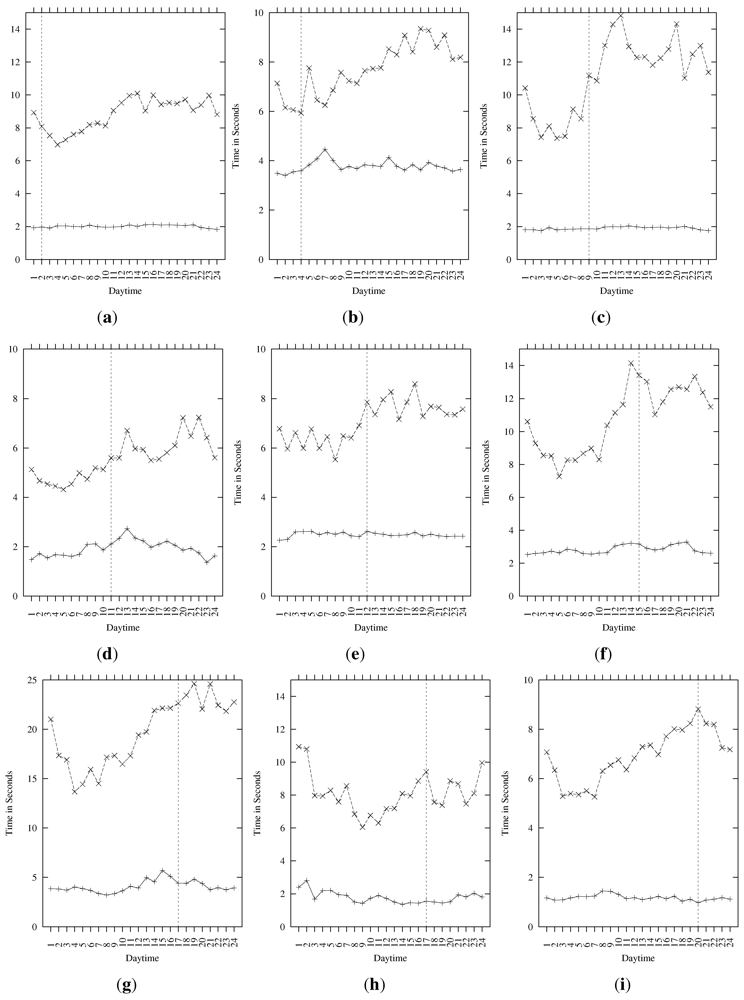

4.4. Daytime View

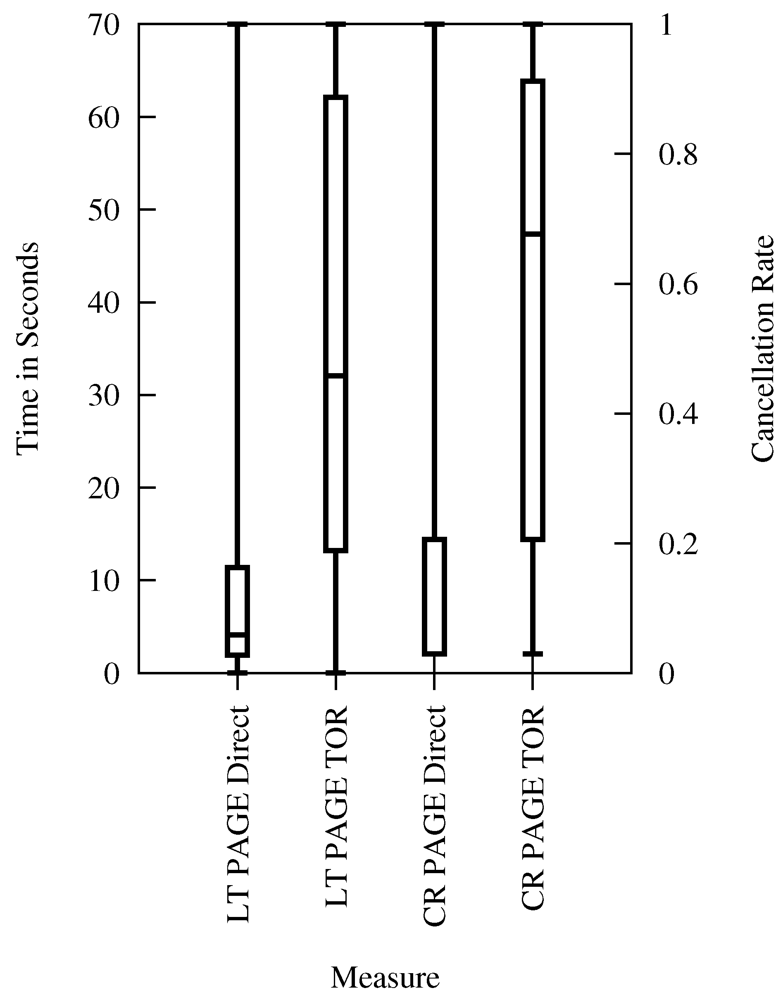

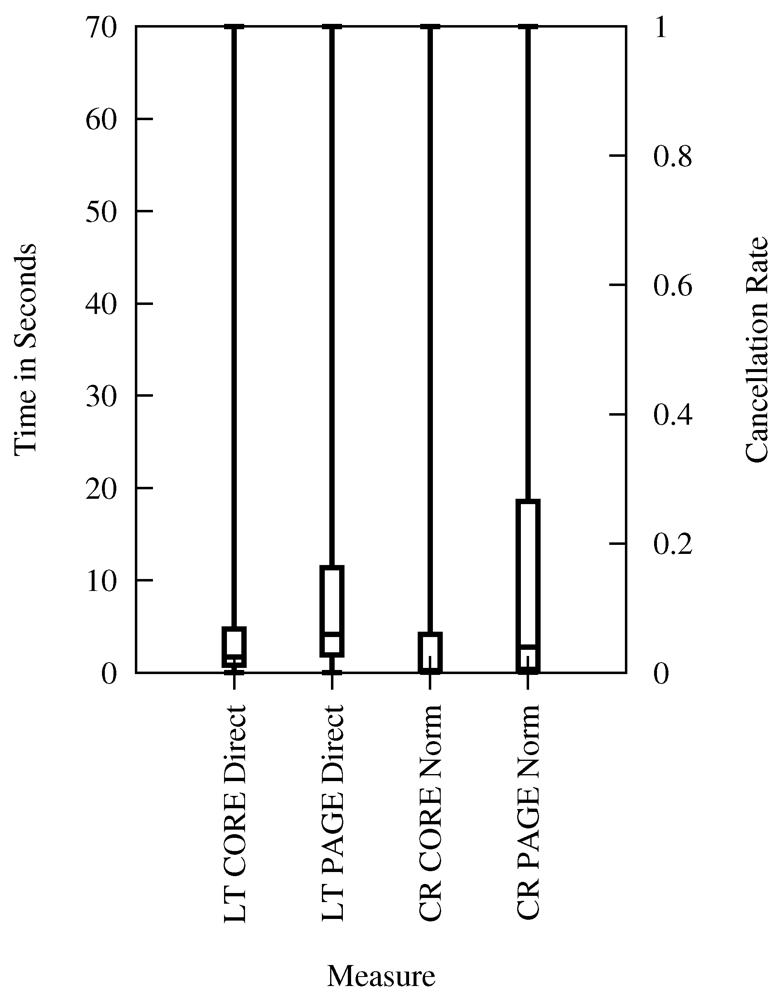

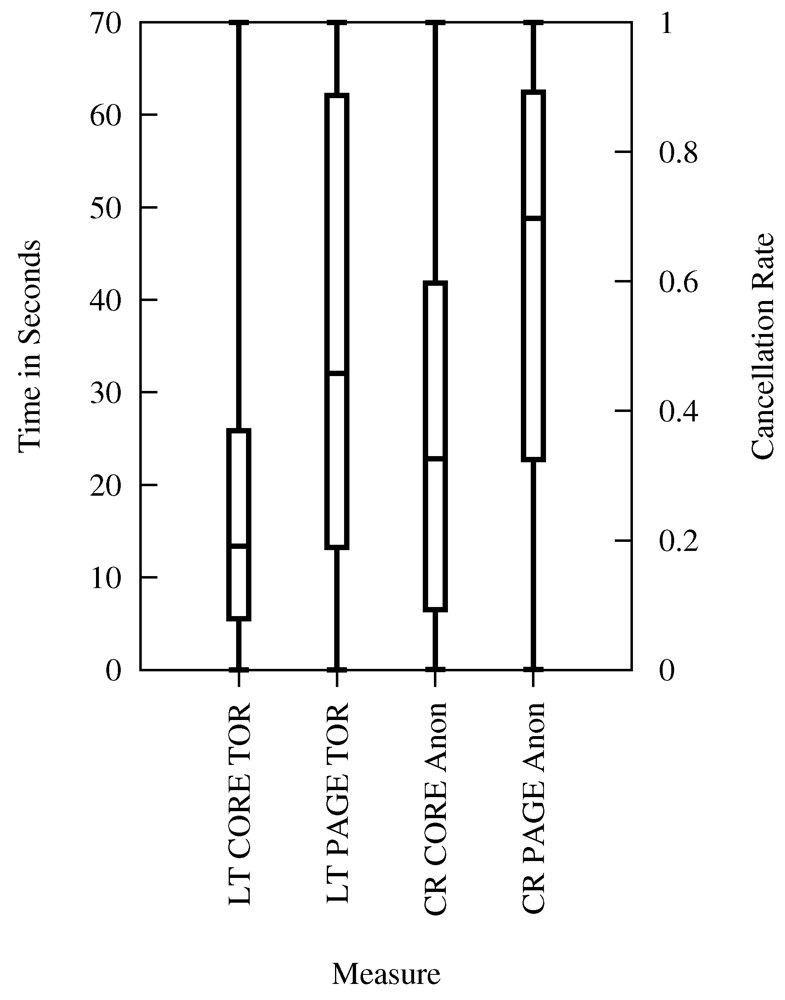

5. Interpretation of Results with Respect to Usability

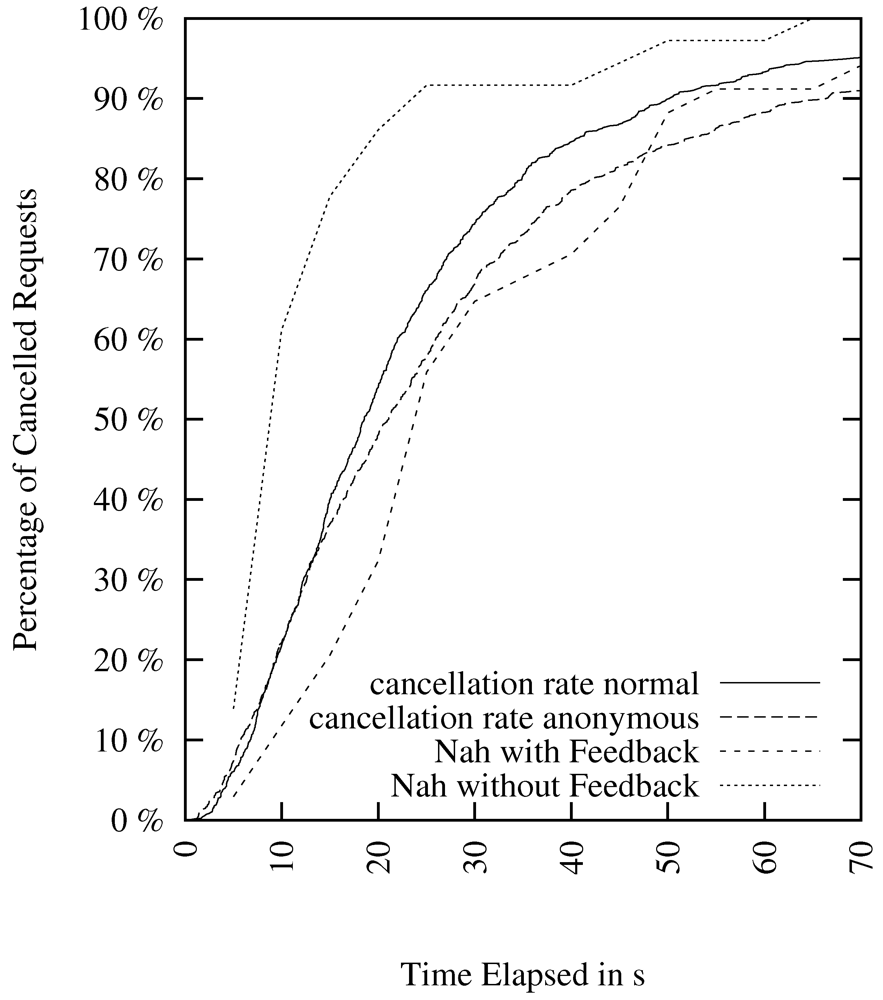

Of the possible combinations of cancelation-rate type and latency measurement, Table 4 shows the meaningful mappings. The results from the lab experiment with feedback can be mapped to page latency because the user receives feedback while the page loads (the page builds up stepwise). The lab experiment without feedback should be mapped to core latency because the user gets no detailed visual progress feedback until the first data is retrieved. The stated cancelation rates for direct and anonymous browsing are mapped to the direct and Tor measurements, respectively.

| Type of Cancelation Rate | Direct, Core Latency | Tor, Core Latency | Direct, Page Latency | Tor, Page Latency |
|---|---|---|---|---|
| Lab with Feedback | – | – | X | X |
| Lab without Feedback | X | X | – | – |
| Stated Direct | X | – | X | – |
| Stated Anonymous | – | X | – | X |
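The mapping in Table 4 follows from two rules implied by the text and the table. Lab rates are tied to one latency metric (page latency with feedback, core latency without) but apply to both connection types. Stated rates are tied to one connection type but apply to both metrics. The minimal Python sketch below rebuilds the X/– grid from these rules. The names `is_mapped`, `applicable_rates`, and `mapping_row` are hypothetical.

```python
# Rule 1: which latency metric(s) each cancelation-rate type is compared with.
LATENCY_FOR_RATE = {
    "Lab with Feedback": {"Page"},     # page builds up stepwise -> page latency
    "Lab without Feedback": {"Core"},  # no progress feedback before first data -> core latency
    "Stated Direct": {"Core", "Page"},
    "Stated Anonymous": {"Core", "Page"},
}

# Rule 2: which connection type(s) each cancelation-rate type applies to.
CONNECTION_FOR_RATE = {
    "Lab with Feedback": {"Direct", "Tor"},
    "Lab without Feedback": {"Direct", "Tor"},
    "Stated Direct": {"Direct"},
    "Stated Anonymous": {"Tor"},
}

# Column order of Table 4.
COLUMNS = [("Direct", "Core"), ("Tor", "Core"), ("Direct", "Page"), ("Tor", "Page")]


def is_mapped(rate: str, connection: str, latency: str) -> bool:
    """True if both rules allow comparing this rate with this measurement."""
    return latency in LATENCY_FOR_RATE[rate] and connection in CONNECTION_FOR_RATE[rate]


def applicable_rates(connection: str, latency: str) -> list[str]:
    """Cancelation-rate types meaningfully mapped to one latency measurement."""
    return [rate for rate in LATENCY_FOR_RATE if is_mapped(rate, connection, latency)]


def mapping_row(rate: str) -> list[str]:
    """Rebuild one row of the X/– grid in Table 4's column order."""
    return ["X" if is_mapped(rate, c, l) else "–" for c, l in COLUMNS]
```

For instance, `applicable_rates("Tor", "Core")` yields the lab rate without feedback and the stated anonymous rate. Encoding the two rules separately, rather than the grid itself, makes it explicit why half of the combinations are left out.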

6. Limitations and Future Work

7. Conclusions

References

1. Amnesty International. Undermining Freedom of Expression in China. 2006. Available online: http://www.amnestyusa.org/business/Undermining_Freedom_of_Expression_in_China.pdf (accessed on 8 May 2012).

2. Dingledine, R.; Mathewson, N.; Syverson, P. Tor: The Second-Generation Onion Router. In Proceedings of the 13th USENIX Security Symposium, San Diego, CA, USA, 9–13 August 2004.

3. Tor Project Web Site. 2010. Available online: https://www.torproject.org/ (accessed on 8 May 2012).

4. Dingledine, R.; Mathewson, N. Anonymity Loves Company: Usability and the Network Effect. In Proceedings of the 5th Workshop on the Economics of Information Security (WEIS 2006), Cambridge, UK, 26–28 June 2006.

5. Palme, J.; Berglund, M. Anonymity on the Internet. 2002. Available online: http://people.dsv.su.se/jpalme/society/anonymity.html (accessed on 8 May 2012).

6. Vitone, D. Anonymous Networks. 2008. Available online: http://blag.cerebralmind.net/wp-content/uploads/2008/05/tor.pdf (accessed on 8 May 2012).

7. Loesing, K.; Murdoch, S.J.; Dingledine, R. A Case Study on Measuring Statistical Data in the Tor Anonymity Network. In Proceedings of the Workshop on Ethics in Computer Security Research (WECSR 2010), Canary Islands, Spain, 28–29 January 2010.

8. Dingledine, R.; Murdoch, S.J. Performance Improvements on Tor. 2009. Available online: https://www.torproject.org/press/presskit/2009-03-11-performance.pdf (accessed on 8 May 2012).

9. Loesing, K. Measuring the Tor Network from Public Directory Information. In Proceedings of the 2nd Hot Topics in Privacy Enhancing Technologies (HotPETs), Seattle, WA, USA, 5–7 August 2009.

10. PlanetLab User’s Guide. 2011. Available online: http://www.planet-lab.org/doc/guides/user (accessed on 8 May 2012).

11. Wright, T. Security, privacy, and anonymity. ACM 2004, 11.

12. Mannan, M.; van Oorschot, P.C. Security and Usability: The Gap in Real-World Online Banking. In Proceedings of the 2007 Workshop on New Security Paradigms, North Conway, NH, USA, 18–21 September 2007; pp. 1–14.

13. Acquisti, A.; Dingledine, R.; Syverson, P. On the Economics of Anonymity. In Proceedings of Financial Cryptography (FC ’03); Wright, R.N., Ed.; Springer: Berlin, Germany, 2003; pp. 84–102.

14. Bellovin, S.M.; Clark, D.D.; Perrig, A. A Clean-Slate Design for the Next-Generation Secure Internet. Available online: http://mars.cs.kent.edu/peyravi/Net208S/Lec/NextGenInternet.pdf (accessed on 10 May 2012).

15. Chaum, D. Untraceable electronic mail, return addresses, and digital pseudonyms. Commun. ACM 1981, 24, 84–88.

16. Danezis, G. Mix-Networks with Restricted Routes. In Proceedings of the 3rd Privacy Enhancing Technologies Workshop (PET 2003); Dingledine, R., Ed.; Springer: Berlin, Germany, 2003; Volume 2760, pp. 1–17.

17. Diaz, C.; Murdoch, S.J.; Troncoso, C. Impact of Network Topology on Anonymity and Overhead in Low-Latency Anonymity Networks. In Proceedings of the 10th International Symposium on Privacy Enhancing Technologies (PETS 2010), Berlin, Germany, 21–23 July 2010.

18. Murdoch, S.J.; Watson, R.N.M. Metrics for Security and Performance in Low-Latency Anonymity Networks. In Proceedings of the 8th International Symposium on Privacy Enhancing Technologies (PETS 2008); Springer: Berlin, Germany, 2008; pp. 115–132.

19. Loesing, K.; Sandmann, W.; Wilms, C.; Wirtz, G. Performance Measurements and Statistics of Tor Hidden Services. In Proceedings of the 2008 International Symposium on Applications and the Internet (SAINT); IEEE CS Press: Turku, Finland, 2008.

20. Lenhard, J.; Loesing, K.; Wirtz, G. Performance Measurements of Tor Hidden Services in Low-Bandwidth Access Networks. In Proceedings of the 7th International Conference on Applied Cryptography and Network Security (ACNS 09), Paris-Rocquencourt, France, 2–5 June 2009; Volume 5536.

21. Tor Metrics Portal. 2010. Available online: http://metrics.torproject.org/ (accessed on 8 May 2012).

22. Fabian, B.; Goertz, F.; Kunz, S.; Müller, S.; Nitzsche, M. Privately Waiting—A Usability Analysis of the Tor Anonymity Network. In Proceedings of the 16th Americas Conference on Information Systems (AMCIS 2010); Springer: Berlin, Germany, 2010; 58.

23. McCoy, D.; Bauer, K.; Grunwald, D.; Kohno, T.; Sicker, D. Shining Light in Dark Places: Understanding the Tor Network. In Proceedings of the 8th International Symposium on Privacy Enhancing Technologies (PETS 2008); Springer: Berlin, Germany, 2008; pp. 63–76.

24. Perry, M. TorFlow: Tor Network Analysis. Available online: http://fscked.org/talks/TorFlow-HotPETS-final.pdf (accessed on 10 May 2012).

25. Dhungel, P.; Steiner, M.; Rimac, I.; Hilt, V.; Ross, K.W. Waiting for Anonymity: Understanding Delays in the Tor Overlay. In Proceedings of the IEEE 10th International Conference on Peer-to-Peer Computing (P2P 2010), Delft, The Netherlands, 25–27 August 2010.

26. Hopper, N.; Vasserman, E.Y.; Chan-Tin, E. How much anonymity does network latency leak? ACM Trans. Inf. Syst. Secur. 2010, 13.

27. Brecht, F.; Fabian, B.; Kunz, S.; Müller, S. Are You Willing to Wait Longer for Internet Privacy? In Proceedings of the European Conference on Information Systems (ECIS 2011), Helsinki, Finland, 9–11 June 2011.

28. Nielsen, J. “Top Ten Mistakes” in Web Design—Revisited Three Years Later. Available online: http://www.useit.com/alertbox/990502.html (accessed on 8 May 2012).

29. Rose, G.; Khoo, H.; Straub, D.W. Current technological impediments to business-to-consumer electronic commerce. Commun. AIS 1999, 1, 1.

30. Ryan, G.; Valverde, M. Waiting online: A review and research agenda. Int. Res. Electron. Netw. Appl. Policy 2003, 13, 195–205.

31. Stockport, G.J.; Kunnath, G.; Sedick, R. Boo.com—The path to failure. J. Interact. Mark. 2001, 15, 56–70.

32. Tolia, N.; Andersen, D.; Satyanarayanan, M. Quantifying interactive user experience on thin clients. IEEE Comput. 2006, 39, 46–52.

33. Nah, F.F. A study on tolerable waiting time: How long are web users willing to wait? Behav. Inf. Technol. 2004, 23, 153–163.

34. AccountingWEB. Is Your Web Site Too Big? 2000. Available online: http://www.accountingweb.com/item/29331 (accessed on 8 May 2012).

35. Bhatti, N.; Bouch, A.; Kuchinsky, A. Integrating user-perceived quality into Web server design. Comput. Netw. (Amst. Neth. 1999) 2000, 33, 1–16.

36. Selvidge, P. How Long is Too Long to Wait for a Website to Load? 1999. Available online: http://www.surl.org/usabilitynews/12/time_delay.asp (accessed on 8 May 2012).

37. Galletta, D.F.; Henry, R.M.; McCoy, S.; Polak, P. Web site delays: How tolerant are users? J. AIS 2004, 5, 1–28.

38. Ramsay, J.; Barbesi, A.; Preece, J. A psychological investigation of long retrieval times on the World Wide Web. Interact. Comput. 1998, 10, 77–86.

39. Huber, M.; Mulazzani, M.; Weippl, E. Tor HTTP Usage and Information Leakage. In Proceedings of Communications and Multimedia Security (CMS 2010); Springer: Berlin, Germany, 2010; Volume 6109, pp. 245–255.

40. SEOmoz: The 500 Most Important Websites on the Internet. 2011. Available online: http://www.seomoz.org/top500 (accessed on 8 May 2012).

41. Wikipedia. Goodput. 2011. Available online: https://secure.wikimedia.org/wikipedia/en/wiki/Goodput (accessed on 8 May 2012).

42. Peterson, L.; Pai, V.S. Experience-driven experimental systems research. Commun. ACM 2007, 50, 38–44.

43. Tor partially blocked in China. 2009. Available online: https://blog.torproject.org/blog/tor-partially-blocked-china/ (accessed on 8 May 2012).

44. Talbot, D. China Cracks Down on Tor Anonymity Network. Technology Review. 2009. Available online: http://www.technologyreview.com/web/23736/ (accessed on 8 May 2012).

45. Berger, M. Tor Nodes Statistics. 2011. Available online: http://www.dianacht.de/torstat/ (accessed on 8 May 2012).

46. Panchenko, A.; Renner, J. Path Selection Metrics for Performance-Improved Onion Routing. In Proceedings of the 2009 9th Annual International Symposium on Applications and the Internet; IEEE Computer Society: Washington, DC, USA, 2009; pp. 114–120.

47. Map of all Google data center locations. Pingdom Blog. 2008. Available online: http://royal.pingdom.com/2008/04/11/map-of-all-google-data-center-locations/ (accessed on 8 May 2012).

48. CDN performance. Pingdom Blog. 2010. Available online: http://royal.pingdom.com/2010/05/11/cdn-performance-downloading-jquery-from-google-microsoft-and-edgecast-cdns/ (accessed on 8 May 2012).

49. Utrace—Locate IP Addresses and Domain Names. 2011. Available online: http://www.utrace.de/ (accessed on 8 May 2012).

50. New Cisco Study Reveals Peak Internet Traffic Increases Due to Social Networking and Broadband Video Usage. 2009. Available online: http://newsroom.cisco.com/dlls/2009/prod_102109.html (accessed on 8 May 2012).

51. Cisco Visual Networking Index: Usage Study. 2010. Available online: http://www.cisco.com/en/US/solutions/collateral/ns341/ns525/ns537/ns705/Cisco_VNI_Usage_WP.html (accessed on 8 May 2012).

52. Dingledine, R.; Mathewson, N. Tor Path Specification. 2011. Available online: https://git.torproject.org/checkout/tor/master/doc/spec/path-spec.txt (accessed on 8 May 2012).

53. Herrmann, M.; Grothoff, C. Privacy-Implications of Performance-Based Peer Selection by Onion-Routers: A Real-World Case Study Using I2P. In Proceedings of the 11th International Symposium on Privacy Enhancing Technologies (PETS 2011); Springer: Berlin, Germany, 2011; pp. 155–174.

© 2012 by the authors; licensee MDPI, Basel, Switzerland. This article is an open-access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).


Müller, S.; Brecht, F.; Fabian, B.; Kunz, S.; Kunze, D. Distributed Performance Measurement and Usability Assessment of the Tor Anonymization Network. Future Internet 2012, 4, 488-513. https://doi.org/10.3390/fi4020488
