1. Introduction

Fake profiles on social media demand an urgent response, because new fraudulent methods keep emerging alongside technological development [1]. The use and abuse of artificial intelligence (AI) to create fake accounts has become a major concern as these accounts have grown more realistic. AI-generated profiles use realistic-looking images and personas to masquerade as real users. They are mainly created to spread false information and to run scams through networks that operate predominantly on Twitter [2]. Such sophisticated fake account systems pose serious risks to online communities and to the information shared within them. Machine learning algorithms detect fake profiles by examining numerous account attributes, including interactions among accounts, behaviors, and network properties. New methods are also being developed, including keystroke dynamics analysis [3], which applies behavioral biometrics to typing characteristics to detect anomalies that fake accounts display. Other recent technologies have produced better detection tools with new capabilities against advanced threats. The area continues to present significant challenges, though. The rapid progress of AI enables scammers to create more sophisticated fake accounts that evade existing protection and detection mechanisms [4]. Deepfake technology poses serious problems for information authentication, because fabricated content can appear extremely realistic to both humans and automated systems. Deactivation of fraudulent accounts on social media platforms, such as those operated by Meta, is slow. These delays lead to two types of negative outcomes: monetary losses for users and reputational damage to legitimate businesses and individuals [5].

In recent years, the industrial relevance of AI-powered social media monitoring systems has become critical, especially as organizations and platforms struggle to ensure security, transparency, and trust. The threat posed by fake profiles not only affects individual users but also damages public institutions, influences democratic processes, and undermines the integrity of large-scale digital ecosystems. Therefore, robust AI-driven frameworks that are scalable, interpretable, and resistant to adversarial manipulation are now essential to the future of secure social computing.

RoBERTa, built on the BERT architecture, demonstrates a strong capability in capturing deep semantic meaning in textual data, outperforming conventional methods in tasks such as sentiment analysis and fake news detection. It is employed to identify fraudulent content in user-generated text, including bios, posts, and comments. ConvNeXt, a state-of-the-art convolutional model, enhances accuracy in image classification and is effective for verifying profile images and detecting manipulated visuals. Hetero-GAT, designed to model complex relationships within social networks, identifies irregular patterns by analyzing interactions such as likes, comments, and shares. When combined, these models provide a comprehensive solution for detecting fake profiles by integrating textual, visual, and social network data.

Counterfeit profiles harm brands, chiefly by eroding consumer trust, which is essential to brand equity. Beyond undermining platform security, misinformation, scams, and impersonation damage the authenticity of user interactions, which in turn lowers engagement and brand value. The marketing literature shows that digital trust is central to brand credibility; misleading accounts that trade on a brand's name erode brand equity by distorting how consumers perceive and use the brand. Brand credibility depends on more than protecting logos and intellectual property: it also rests on genuine interactions that build consumer confidence and loyalty. These factors are critical to maintaining a brand's reputation.

Fake social media profiles pose a significant problem that compromises the security, integrity, and reliability of online platforms. Online career profiles that lack authenticity serve criminal purposes, including financial crimes, fraudulent promotion, and coordinated fake schemes [6]. AI technology has become so advanced at creating artificial profiles that detection has become very complex. Deepfake technology has given rise to three key issues: identity theft, privacy breaches, and the defamation of individuals through highly realistic fake images and videos. Addressing these issues requires improving safety standards and user trust on social media platforms [7]. Adequate identification and removal of fake profiles protects online integrity and helps prevent the spread of misinformation and the activities of online scammers. Resolving this problem benefits user data security and democratic practices by minimizing the influence of fake accounts in societal discussions [8]. This study aims to help build safe digital communities through detection techniques based on machine learning, behavioral analytics, and biometric verification technology.

Fake profiles have significant economic implications, contributing to ad fraud, scam-induced losses, and influencer marketing fraud, costing businesses billions annually. Consumer purchase decisions are heavily influenced by trust signals on social media, such as reviews, follower authenticity, and endorsements. Studies have shown that consumers are more likely to convert, remain loyal, and retain long-term relationships with brands they trust. Trust in digital platforms directly impacts conversion rates, with fraudulent accounts undermining these trust signals. As such, combating fake profiles is crucial not only for security but also for maintaining financial integrity and customer behavior stability in digital ecosystems [9].

Platform credibility serves as a valuable marketing asset, as platforms with high trust ratings attract advertisers, partners, and influencers seeking to reach authentic audiences. By reducing fake profiles, platforms can differentiate themselves in a competitive market, marketing features like “99% verified accounts” to highlight their commitment to authenticity and security. Implementing an AI-driven system to detect and mitigate fake profiles not only protects the platform but also directly ties to customer acquisition. Users are more likely to engage with platforms they perceive as safe and authentic, enhancing user retention and fostering a trustworthy digital environment for all stakeholders.

The AI model should be viewed not just as a technical security layer but as an integral part of brand risk management. By detecting fake profiles and impersonation accounts, this AI-driven system can be incorporated into brand monitoring tools to identify and mitigate threats targeting corporate brands or public figures. For instance, companies like Twitter and Facebook have faced significant brand damage due to the proliferation of fake accounts and impersonation, which has led to consumer mistrust and diminished brand value. By proactively addressing these risks, brands can better safeguard their reputation, preserve customer trust, and prevent financial losses.

Moreover, as the use of AI technologies grows in industrial sectors, including healthcare, finance, the Internet of Things, and autonomous systems, ensuring the security of social media platforms becomes central to cybersecurity measures in all organizations. This study also aligns with new trends in AI applications, focusing on the ethical use of AI, leveraging unstructured information across various fields of application, and achieving operational speed in responding to online hostilities in real time.

RoBERTa, ConvNeXt, and Hetero-GAT each demonstrate strong performance individually, but their integration into a unified model remains limited. Existing approaches often focus solely on either textual or visual features, which restricts their ability to detect advanced fake profiles. A more robust solution emerges by integrating textual, visual, and social interaction information. Furthermore, many existing models lack scalability across platforms and are vulnerable to adversarial attacks. The proposed framework combines RoBERTa, ConvNeXt, Hetero-GAT, and attention mechanisms, providing adaptability to evolving deception strategies and enabling real-time detection across diverse platforms with large-scale data volumes.

The increasing prevalence of fake profiles on social media has significant adverse effects, making online authenticity checks a critical necessity now that online platforms encompass all facets of human communication, including economic operations and learning [10]. Fake profile users cause four major issues: they distribute inaccurate information, engage in financial manipulation, compromise the privacy and anonymity of individuals, and mislead others to their psychological harm. These threats erode trust in the digital ecosystem, as they compromise individual safety, societal institutions, and democratic structures [11]. This study responds to the need to combat new deceptions based on AI-created artificial profiles and fake content. Current state-of-the-art threats require innovative solution strategies, as existing protection mechanisms are not effective enough. This study employs artificial intelligence techniques and incorporates behavioral information analysis and session authentication, improving the potential for detecting fake accounts [12]. The investigation aims to create social media spaces that offer stronger security guarantees and credibility. To this end, the proposed research aims to develop a reliable detection technique that assists social media networks, cybersecurity experts, and government officials who deal with online deception [13]. The project aims to establish two key points: the adoption of new technologies, and operational advantages that protect users against threats while maintaining data credibility and fostering better online communities [14].

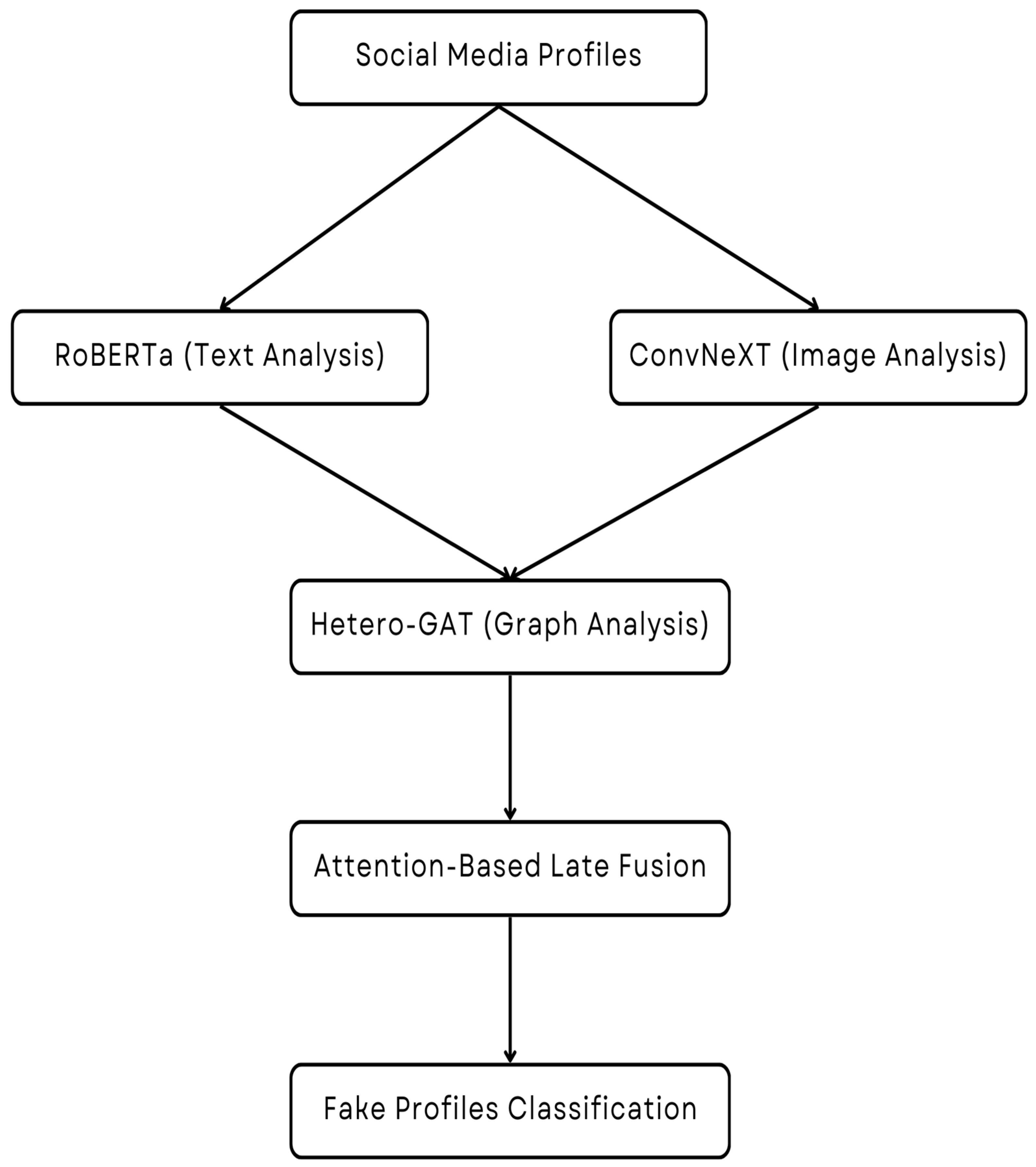

The proposed framework combines RoBERTa to analyze written text, ConvNeXt to verify the authenticity of images, and Hetero-GAT to investigate complex social networks. An attention-based late fusion approach incorporates information across these modalities and supports precise, understandable detection of fake profiles. The ultimate goal is to address the significant issues of cybersecurity and misinformation by implementing a comprehensive plan that enhances the authenticity of online communication.

Despite advancements in fake profile detection, existing methods often focus on isolated modalities (text, image, or network interactions) and struggle to capture the full complexity of deceptive profiles. Many approaches fail to scale effectively across different platforms, and few are designed to withstand adversarial manipulation. Furthermore, many models lack interpretability, which limits their application in real-world scenarios.

The key contributions of this study are as follows:

An innovative multi-modal detection system is proposed that integrates RoBERTa for textual analysis, ConvNeXt for image verification, and Hetero-GAT for modeling complex social interactions.

An attention-based late fusion strategy is introduced for cross-modal learning, enhancing detection accuracy and robustness.

The model demonstrates real-time applicability, achieving 98.9% accuracy and a 98.6% F1-score.

The system is shown to be resilient against diverse adversarial attacks, ensuring suitability for deployment in dynamic cybersecurity environments.

This study is organized as follows.

Section 1 presents the background, motivation, and critical research gaps in fake profile detection in social media. It describes the necessity of AI-based, multi-modal options and provides a sketch of the suggested way forward.

Section 2 provides a detailed survey of current detection methods, including rule-based approaches, machine learning classifiers, and novel deep learning-based models, as well as their limitations in detecting modern fake profile generation techniques.

Section 3 gives further details of the proposed multi-modal framework. It describes how RoBERTa is used to analyze text, ConvNeXt to verify images, and Hetero-GAT to model social interactions. This section also explains the attention-based late fusion mechanism that combines the outputs of all modalities.

Section 4 contains a description of the data collection process, data preprocessing, and data implementation. It establishes the evaluation parameters and the experimental conditions in which the model is trained and tested.

Section 5 presents the experimental outcomes, including performance metrics, ablation studies, inference time comparisons, and an assessment of adversarial robustness in various attack settings.

Section 6 concludes this study with key findings and recommendations on how the findings can be applied in the real world, including suggestions for future study on cross-platform generalization and privacy-preserving AI mechanisms.

2. Literature Review

To detect social media imposters, a machine learning detector was created to evaluate user-contributed text and behavioral characteristics. The experimental findings validated the system's superiority over rule-based models and showed that it could detect deceptive accounts at very high success rates. The work contributed to safer web environments by addressing major digital security risks such as misinformation, deception, and manipulation [15].

Another study developed a monitoring system to identify distributors of fake news on social media platforms by examining profile metadata and post content. The findings revealed that combining text analysis with profile characteristics yields more accurate misinformation detection and tracking. This combination of semantic traits and behavioral markers enabled better grouping of profiles and sources of information propagation, which in turn increased the efficiency of digital forensics in a social media setting [16].

To detect malicious user behavior, one study applied a deep learning approach to enhance cybersecurity on social media. Using several deep learning models, such as convolutional neural networks (CNNs), recurrent neural networks (RNNs), and generative adversarial networks (GANs), the system identified behavioral abnormalities, improving threat detection and security enforcement. The multi-architecture design positioned the system to respond to a wide variety of deceptive behaviors, which kept detection rates high and improved the system's portability and fitness across platforms [17].

A group of researchers proposed a blockchain-based framework that combines decentralized transparency with machine learning to detect and map fraudulent profiles. Blockchain integration made the storage of suspicious-activity records secure and improved user accountability and trust. The combined solution provided immutable, tamper-evident records, paving the way for more reliable identity validation in digital environments [18].

Another study detected fake profiles with a hybrid of a deep convolutional neural network and a Random Forest (RF) classifier, and used the same hybrid method to forecast stalking behavior on the X (formerly Twitter) network. The hybrid combined statistical feature engineering with detailed visual feature extraction to model recurring patterns of deception and irregular engagement. The technique detected fake profiles more accurately than classic models and improved user safety by a significant margin by predicting active threats [19].

Multi-modal approaches employing spatiotemporal and contextual cues were used to examine the factors behind the diffusion of misinformation on digital platforms. Adding attention mechanisms improved model performance significantly by letting the model learn which aspects of the data are meaningful when identifying fake content. The strategy also fostered platform transparency and digital security by increasing the granularity of detection at both the platform and user levels [20].

To identify fraudulent profiles, a Multinomial Naive Bayes classifier was used to examine user-generated text and metadata. The model was very accurate, indicating that lightweight statistical procedures can deliver real-time solutions in low-resource environments. Nevertheless, the study also underlined the need for continuous feature engineering, because fraudsters keep changing their behavioral patterns [21].

Machine learning and deep learning meta-models were reviewed systematically to determine their applicability to detecting deception in social networks. The review considered evidence from 36 studies and pointed to several problems, among them biased datasets, a lack of model validation across platforms, and the absence of standard evaluation criteria. This scrutiny made it evident that detection reliability in real environments should be improved through consistent analysis methods and robust preprocessing pipelines [22].

A machine learning-based model was proposed to detect fake Instagram accounts based on a set of behavioral and profile features. The study demonstrated that high performance can be achieved with reduced feature sets, particularly by prioritizing engagement rates and content frequency. Nevertheless, issues such as generalization to unseen datasets and limited coverage of user behavior patterns remain important areas for improvement [23].

A multi-modal deep learning model was proposed to track fake news by leveraging textual, visual, and contextual information on social networks. Based on CLIP models and LSTM networks, the framework achieves high detection rates and shows that integrating multiple modalities improves performance. It is useful for combating synthetic media and fake news and would therefore be a solid candidate for future content verification systems in platform moderation pipelines [24].

Table 1 presents an analytical comparison of previously conducted studies that address fake profile detection and the handling of misinformation within the context of social media. The techniques range from traditional machine learning to deep learning, blockchain integration, and multi-modal approaches, where data is combined using text, image, and behavioral data. All the studies offer particular contributions, with accuracy, interpretability, and real-time detection as their key strengths, and generalizability, computational cost, and adversarial robustness as commonly shared limitations. The insights will form the basis of stronger, more adaptive, and scalable AI-based social network security and user authentication frameworks.

Research Gap

Despite the advances in deep learning algorithms like RoBERTa, ConvNeXt, and Hetero-GAT, which demonstrate a significant trend in detecting fake profiles, some key research gaps remain to be filled. Among the most acute issues is the lack of flexibility in existing detection frameworks in response to the changing patterns of deceptive practices implemented by malicious users. These actors continually adapt their methods in response to new security measures, which means that the efficacy of static detection models in the long run is eroded. Therefore, the creation of adaptive systems to dynamically learn and react to new fraudulent patterns is an extremely urgent need.

In addition, although many current studies, including the proposed one, report high accuracy on benchmark data, they are frequently not validated across platforms. Data structure, data types, and user behavior patterns vary significantly across social media platforms, and detection models must adapt effectively to these diverse environments. Without extensive testing on varied real-world data, their feasibility in practical applications is limited.

Another major drawback is the performance trade-off associated with attention-based late fusion strategies. Despite their strong multi-modal integration ability and interpretability (which supports confidence in classifications), these mechanisms typically introduce latency and additional computing load on top of the underlying framework. This undermines the scalability of such models in large-scale, real-time operation on social networks.

Additionally, although ConvNeXt performs competitively on visual data analysis, its performance degrades on realistic synthetic media and deepfake profile content produced by generative AI. As synthetic media grows more sophisticated, existing visual modules may be insufficient to maintain detection accuracy.

Another research gap is linked to mistrust among end-users and moderators on the platform regarding explainability. Intuitive understanding and visual explanations of the reasoning underlying decisions are not readily available in most detection models, and their high-level utility is thus limited in content moderation teams and stakeholders who face the ultimate responsibility for making policy and user-trust decisions.

Lastly, adversarial robustness in multi-modal systems is insufficiently explored. The current frameworks are seldom tested in the context of coordinated attacks on textual, visual, and graph-based features in unison. These weaknesses have the potential to further impact precision under adversarial circumstances, particularly in high-risk information ecosystems.

To overcome such vulnerabilities, future work should develop an adaptive, interpretable, and adversarially robust AI architecture that generalizes across platforms and resists adversaries that change over time. Upcoming studies should prioritize scalable deployment, cross-domain testing, and real-time interpretability, so that fake profile detection systems can be integrated into social media settings in a practical, secure, and trusted way.

3. Methodology

Fake accounts on social networks have become a significant cybersecurity issue. They endanger user privacy and facilitate mass misinformation campaigns, and their sheer number strains existing security measures and necessitates AI-based defenses. Current rule-based and traditional machine learning systems lack the flexibility to identify evolving, deceptive profiles, which highlights the potential of novel AI methods to make systems more resilient. In this study, a novel three-component deep learning model integrating RoBERTa, ConvNeXt, and Hetero-GAT is proposed to detect fake profiles on industrial-scale social networks. The framework combines three modalities, namely textual content, visual content, and social graphs, thereby increasing detection fidelity as well as the interpretability and deployment readiness of AI-based cybersecurity infrastructure. The model offers early and late fusion mechanisms, which enable better performance than standard classification systems.
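The idea of attention-based late fusion can be illustrated with a minimal NumPy sketch. It assumes that each modality has already been encoded into a vector of the same dimension; the `query` vector, the toy dimension of 8, and the random stand-in embeddings are hypothetical and are not taken from the proposed model.

```python
import numpy as np

def softmax(x):
    """Numerically stable softmax over a 1-D array."""
    shifted = x - np.max(x)
    exp = np.exp(shifted)
    return exp / exp.sum()

def attention_late_fusion(embeddings, query):
    """Fuse per-modality embeddings with scaled dot-product attention.

    embeddings: array of shape (num_modalities, dim), one row per modality.
    query: array of shape (dim,), a hypothetical learned vector.
    Returns the fused vector (dim,) and the attention weights (num_modalities,).
    """
    dim = embeddings.shape[1]
    scores = embeddings @ query / np.sqrt(dim)   # one relevance score per modality
    weights = softmax(scores)                    # weights are positive and sum to 1
    fused = weights @ embeddings                 # weighted sum of modality vectors
    return fused, weights

rng = np.random.default_rng(0)
# Random stand-ins for the text, image, and graph encoder outputs.
modality_embeddings = rng.normal(size=(3, 8))
query = rng.normal(size=8)

fused, weights = attention_late_fusion(modality_embeddings, query)
print("attention weights:", np.round(weights, 3))
print("fused shape:", fused.shape)
```

Because the weights are explicit, they can be inspected per profile to see which modality drove a decision, which is one reason attention-based fusion is often described as interpretable.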

3.1. Dataset Description

The evaluation uses a benchmark multi-modal dataset consisting of 150,000 social media profiles (75,000 genuine and 75,000 fake), obtained from publicly available, anonymized repositories widely used in academic research, such as FakeNewsNet and Twitter Bot datasets. The dataset includes text, profile images, and graph-structured interactions, ensuring robustness in real-world operational environments. Textual information from user bios, posts, and comments is analyzed to detect patterns indicative of fake profiles. Profile picture data is examined to identify both artificially generated and computer-manipulated content. In this study, a profile is defined as a complete set of user-related information, covering textual data (name, bio, posts, and comments), visual data (profile and posted images), and social interaction data (connections, followers, likes, and comments). These varied data classes serve as multi-modal inputs to the detection model and collectively contribute to identifying fake profiles. Anomaly detection leverages social network connections and behavioral interactions, which are represented as graph data. Supervised learning models can be trained using the profile authenticity labels provided in the dataset.

Figure 1 shows the proposed fake profile detection framework.

3.2. Dataset Preprocessing

Improving model robustness requires several preprocessing methods, each tailored to a distinct type of data. Text data is tokenized and stop words are removed, after which the RoBERTa model generates embeddings that capture deep contextual semantics. Image preprocessing involves size normalization and enhancement through various augmentation methods, which improve the feature extraction capabilities of ConvNeXt.

In this study, a Gated Recurrent Unit (GRU) is integrated into the RoBERTa model to capture sequential dependencies within textual data, such as user posts and bios. The GRU’s role is to preserve contextual relationships between words across long sequences, enabling the model to better detect patterns of deception in user-generated content. By carrying this out, it enhances RoBERTa’s ability to recognize linguistic anomalies typical of fake profiles that exhibit unusual or inconsistent narrative patterns.
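The role of the GRU can be sketched as a recurrence that reads token vectors in order and compresses them into one summary state. The NumPy sketch below is illustrative only: the toy dimensions, the random weights, the omission of bias terms, and the function name `gru_final_state` are assumptions, and in the proposed model the inputs would be RoBERTa's contextual token embeddings rather than random vectors.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_final_state(token_embeddings, params):
    """Run a single-layer GRU (biases omitted) and return the last hidden state.

    token_embeddings: (seq_len, input_dim), e.g. contextual token vectors.
    params: dict with input weights W_* of shape (hidden, input)
            and recurrent weights U_* of shape (hidden, hidden).
    """
    hidden_dim = params["U_z"].shape[0]
    h = np.zeros(hidden_dim)
    for x in token_embeddings:
        z = sigmoid(params["W_z"] @ x + params["U_z"] @ h)   # update gate
        r = sigmoid(params["W_r"] @ x + params["U_r"] @ h)   # reset gate
        candidate = np.tanh(params["W_h"] @ x + params["U_h"] @ (r * h))
        h = (1.0 - z) * h + z * candidate                    # blend old state and candidate
    return h

rng = np.random.default_rng(0)
input_dim, hidden_dim, seq_len = 16, 8, 5   # toy sizes chosen for illustration
params = {}
for gate in ("z", "r", "h"):
    params[f"W_{gate}"] = rng.normal(scale=0.1, size=(hidden_dim, input_dim))
    params[f"U_{gate}"] = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim))

token_embeddings = rng.normal(size=(seq_len, input_dim))
summary = gru_final_state(token_embeddings, params)
print(summary.shape)
```

The update gate `z` controls how much of the earlier context is retained at each step, which is what lets the recurrence carry information across a long post or bio.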

The preprocessing pipeline constructs heterogeneous social graphs in which users and posts are represented as nodes connected by interaction edges, enabling the Hetero-GAT module to capture complex network patterns for enhanced security monitoring. Feature standardization keeps numerical data stable across modalities, which improves the generalization capability and effectiveness of the model.
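A heterogeneous graph of this kind can be sketched in plain Python by keeping node sets per node type and edge lists per (source type, relation, target type) triple. The record format `(user_id, relation, post_id)`, the relation names, and the function `build_hetero_graph` are hypothetical and serve only to illustrate the data structure.

```python
from collections import defaultdict

def build_hetero_graph(interactions):
    """Build typed node sets and typed edge lists from interaction records.

    interactions: iterable of (user_id, relation, post_id) tuples, where
    relation is an interaction type such as "likes", "comments", or "shares".
    """
    nodes = {"user": set(), "post": set()}
    edges = defaultdict(list)
    for user_id, relation, post_id in interactions:
        nodes["user"].add(user_id)
        nodes["post"].add(post_id)
        # The edge type is the triple (source type, relation, target type).
        edges[("user", relation, "post")].append((user_id, post_id))
    return nodes, dict(edges)

interactions = [
    ("u1", "likes", "p1"),
    ("u2", "comments", "p1"),
    ("u1", "shares", "p2"),
]
nodes, edges = build_hetero_graph(interactions)
print(sorted(nodes["user"]), sorted(nodes["post"]))
print(edges[("user", "likes", "post")])
```

Keeping a separate edge list per relation lets a heterogeneous attention layer learn different weights for, say, likes and shares instead of treating all interactions alike.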

3.3. RoBERTa

The proposed model incorporates RoBERTa as its core textual encoder, selected for its proven effectiveness in enterprise-level NLP tasks, which makes it suitable for industrial AI applications in social media analytics. RoBERTa is an optimized version of the widely used Bidirectional Encoder Representations from Transformers (BERT) model, and both share the same Transformer architecture. The Transformer is a model of choice for sequence-to-sequence tasks with long-range dependencies. It relies on self-attention rather than recurrence or convolution to identify relevant input–output connections. Self-attention assigns more weight to the most relevant positions in the sequence when computing the representation of each token. The original Transformer comprises an encoder and a decoder, each built from multiple layers: an encoder layer applies self-attention followed by a feed-forward network, while a decoder layer applies self-attention, encoder–decoder attention, and a feed-forward network. The encoder reads the input text, and the decoder generates predictions. Like BERT, RoBERTa uses only the encoder, which serves as the text encoding layer of the proposed model. BERT was designed to overcome the context limitations of unidirectional approaches. It was pretrained on two objectives, Masked Language Modeling and Next Sentence Prediction: it learns to predict masked tokens and to judge whether one text segment follows another.

RoBERTa outperforms BERT in several respects. Its byte-level Byte Pair Encoding tokenizer yields a vocabulary of moderate size that can encode any input text without unknown tokens, in contrast to BERT's character-level Byte Pair Encoding. BERT's data preprocessing uses static masking, in which masking occurs only once. In contrast, RoBERTa uses dynamic masking, generating a new masking pattern each time a sequence is fed to the model, so that the model sees differently masked versions of the same sequence. RoBERTa is also trained on more data, with larger batch sizes and longer input sequences, over a longer training duration. A total of four datasets were used for training RoBERTa: Book Corpus + English Wikipedia (16 GB), CC-News (76 GB), Open Web Text (38 GB), and Stories (31 GB).

In this study, the input to the RoBERTa model is prepared with the pretrained RoBERTa tokenizer. The tokenizer splits raw text into subword token sequences using the vocabulary learned from RoBERTa's large training corpus. This preserves textual semantics while reducing the impact of out-of-vocabulary items. Each resulting token is mapped to an input ID, which is its index in the RoBERTa vocabulary. Each sequence also receives an attention mask, which tells the model which positions hold real tokens and which hold padding that should be ignored. The input IDs and attention masks are passed through a stack of 12 Transformer layers with a hidden size of 768. Within these layers, self-attention lets the model weight the most informative tokens and extract information at different levels of abstraction. On top of RoBERTa, a lightweight GRU models sequential dependencies without adding complexity that would impede real-time inference, so that the model can scale within a cybersecurity pipeline. Adding the GRU helps the model exploit contextual information and multi-token dependencies, resulting in improved prediction outcomes.
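The relationship between input IDs and attention masks can be shown with a toy encoder written in plain Python. This is a sketch under stated assumptions: whitespace splitting stands in for byte-level BPE, `toy_vocab` and `encode_batch` are hypothetical, and the special-token IDs are illustrative defaults rather than values read from the pretrained tokenizer. In the common convention, the mask is 1 for real tokens and 0 for padding.

```python
def encode_batch(texts, vocab, max_len, bos_id=0, pad_id=1, eos_id=2, unk_id=3):
    """Map texts to fixed-length input IDs plus attention masks.

    Whitespace splitting is a stand-in for subword tokenization.
    The mask is 1 for real tokens and 0 for padding positions.
    """
    input_ids, attention_mask = [], []
    for text in texts:
        tokens = text.lower().split()[: max_len - 2]      # leave room for BOS and EOS
        ids = [bos_id] + [vocab.get(tok, unk_id) for tok in tokens] + [eos_id]
        n_pad = max_len - len(ids)
        input_ids.append(ids + [pad_id] * n_pad)
        attention_mask.append([1] * len(ids) + [0] * n_pad)
    return input_ids, attention_mask

toy_vocab = {"free": 4, "crypto": 5, "giveaway": 6, "hello": 7}
ids, mask = encode_batch(["free crypto giveaway", "hello world"], toy_vocab, max_len=6)
print(ids)   # [[0, 4, 5, 6, 2, 1], [0, 7, 3, 2, 1, 1]]
print(mask)  # [[1, 1, 1, 1, 1, 0], [1, 1, 1, 1, 0, 0]]
```

The word "world" is absent from the toy vocabulary, so it maps to the unknown-token ID; a byte-level subword tokenizer would instead split it into known pieces.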

3.4. ConvNeXt

ConvNeXt is employed to process and verify profile images as part of the multi-modal detection framework. Profile images, as well as user-uploaded content (posts, photos), are selected based on the presence of an image file linked to a user’s profile. These images are resized and normalized to a fixed size to maintain consistency across the dataset. The images are then passed through ConvNeXt’s feature extraction pipeline, where they undergo depth-wise separable convolutions to extract high-resolution visual features. For profiles without images, the system defaults to relying on textual and social interaction data from the profile to identify possible indicators of fakeness. This ensures that profiles with missing images are still analyzed effectively using other available modalities.
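One way to realize the fallback for profiles without images is to renormalize the fusion weights over the modalities that are present. The source does not specify the mechanism, so the sketch below is an assumption: the function `fuse_available`, the per-modality relevance scores, and the toy vectors are all hypothetical.

```python
import numpy as np

def fuse_available(embeddings, scores):
    """Fuse only the modalities that are present.

    embeddings: dict mapping modality name to a vector, or None if missing.
    scores: dict mapping modality name to a hypothetical relevance score.
    Returns the fused vector and the weights of the modalities actually used.
    """
    names = [name for name, emb in embeddings.items() if emb is not None]
    raw = np.array([scores[name] for name in names], dtype=float)
    weights = np.exp(raw - raw.max())
    weights /= weights.sum()                    # softmax over available modalities only
    fused = sum(w * embeddings[name] for w, name in zip(weights, names))
    return fused, dict(zip(names, weights))

profile = {
    "text": np.ones(4),
    "image": None,            # profile has no picture
    "graph": np.zeros(4),
}
scores = {"text": 0.0, "image": 0.0, "graph": 0.0}

fused, used = fuse_available(profile, scores)
print(sorted(used))   # ['graph', 'text']
print(fused)          # equal weights, so each entry is 0.5
```

Excluding the missing modality before the softmax keeps the remaining weights summing to one, so a profile without a picture is scored on the same scale as a complete one.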

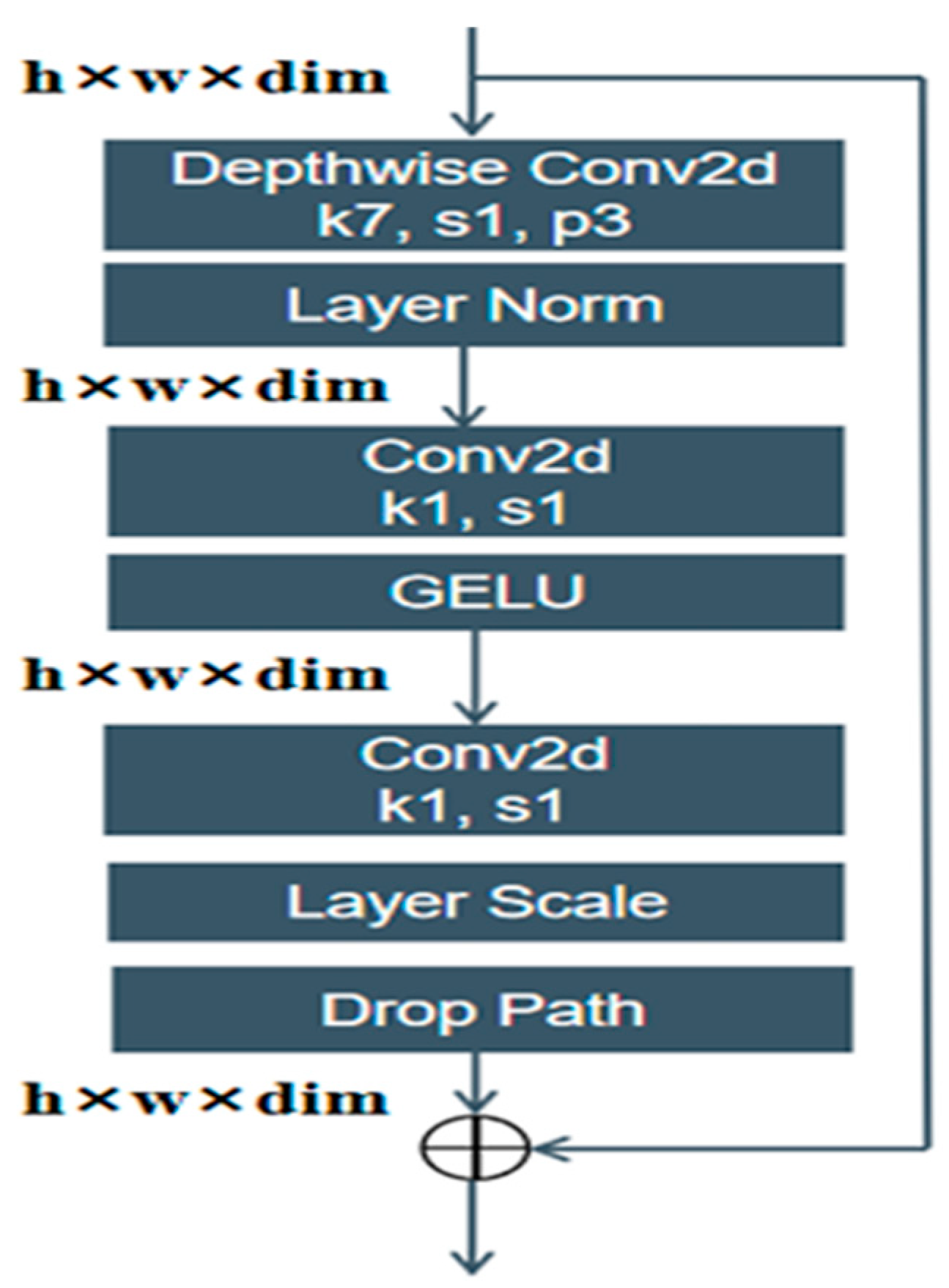

ConvNeXt uses a fully convolutional structure that modernizes standard CNN architectures by adding depth-wise convolutions, layer normalization (LN), and inverted bottlenecks, yielding effective high-resolution feature extraction. Traditional CNNs suffer from scalability issues because the number of parameters grows with depth, making training and inference increasingly expensive. Their fixed convolutional structure also limits how well they capture complex data patterns, so they do not exploit all the information available in the data. ConvNeXt utilizes depth-wise separable convolution blocks and inverted bottlenecks to deliver high-resolution image feature extraction with reduced latency, making it well suited to real-time detection in AI-powered security systems. The depth-wise separable convolution consists of a depth-wise convolution followed by a point-wise convolution, as shown in Figure 2.

Applying a separate convolution to each input channel collects spatial information without mixing channels. The subsequent 1 × 1 (point-wise) convolution integrates information across channels. The architecture of ConvNeXt stacks multiple ConvNeXt blocks, as shown in Figure 3.

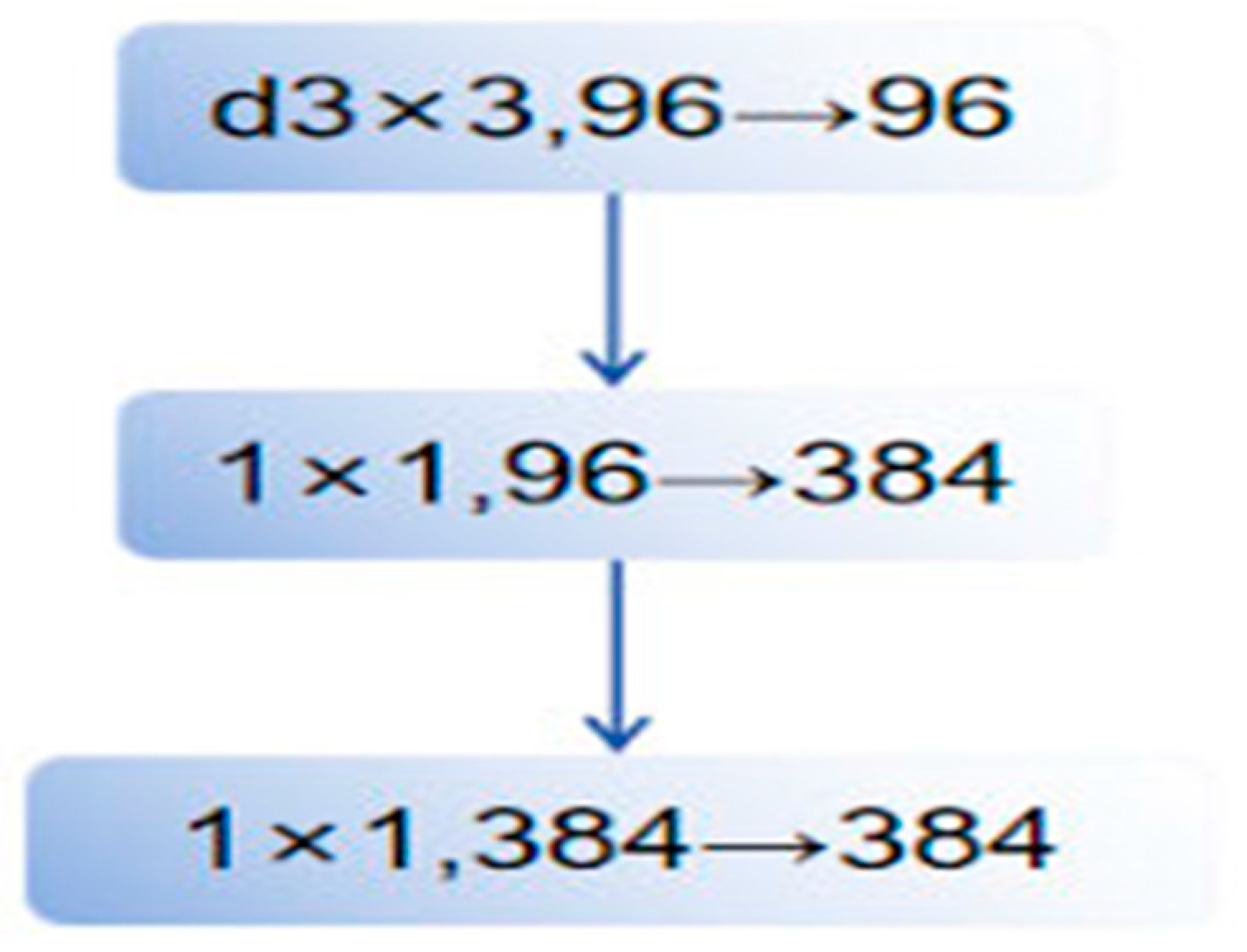
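The efficiency argument for depth-wise separable convolutions can be checked with simple parameter counting. The sketch below ignores bias terms, and the kernel size and channel counts are illustrative assumptions rather than values reported in the study.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Weights in a depth-wise k x k convolution followed by a 1 x 1 point-wise one."""
    depthwise = k * k * c_in      # one k x k filter per input channel
    pointwise = c_in * c_out      # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# Illustrative values (assumptions): 3 x 3 kernel, 96 input and output channels.
k, c_in, c_out = 3, 96, 96
standard = standard_conv_params(k, c_in, c_out)
separable = depthwise_separable_params(k, c_in, c_out)
reduction = standard / separable
```

For these values the separable form needs 10,080 weights instead of 82,944, roughly an eightfold reduction, which is the source of the lower latency.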

The ConvNeXt block incorporates drop path, an efficient regularizer against overfitting. During training, drop path randomly removes network path segments so that the model learns multiple diverse data representations, which enhances generalization. ConvNeXt also adopts an inverted bottleneck, which reverses the channel pattern of the ResNet bottleneck.

Figure 4 illustrates the structure, comprising three components: a 1 × 1 convolution kernel, a 3 × 3 convolution kernel, and another 1 × 1 convolution kernel. The output channels of these three convolutional layers are set according to the specification of each model variant. The inverted bottleneck first increases the channel count and later reduces it. This structure enhances the network’s ability to perform non-linear transformations while maintaining feature expression capabilities, resulting in improved performance.
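Drop path can be sketched in a few lines. This is a minimal illustration on plain Python lists, assuming the common formulation in which a kept residual branch is rescaled by the keep probability; the function names and the `drop_prob` value are hypothetical and not taken from the study.

```python
import random


def drop_path(branch_output, drop_prob, training, rng):
    """Randomly zero a whole residual branch during training (stochastic depth)."""
    if not training or drop_prob == 0.0:
        return list(branch_output)
    keep_prob = 1.0 - drop_prob
    if rng.random() < keep_prob:
        # Rescale so the expected value of the branch is unchanged.
        return [v / keep_prob for v in branch_output]
    return [0.0] * len(branch_output)


def residual_block(x, branch, drop_prob, training, rng):
    """y = x + drop_path(branch(x)); the skip connection is never dropped."""
    out = drop_path(branch(x), drop_prob, training, rng)
    return [xi + oi for xi, oi in zip(x, out)]


def half(x):
    """Hypothetical stand-in for the convolutional branch of a block."""
    return [0.5 * v for v in x]


rng = random.Random(0)
train_out = residual_block([1.0, 2.0], half, drop_prob=0.5, training=True, rng=rng)
eval_out = residual_block([1.0, 2.0], half, drop_prob=0.5, training=False, rng=rng)
```

At inference time the branch is always kept and no rescaling is applied, so the block behaves deterministically.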

3.4.1. Feature Extraction Pipeline

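Let an input profile image be represented as $X \in \mathbb{R}^{H \times W \times C}$, where $H$, $W$, and $C$ denote height, width, and the number of channels, respectively. The feature extraction process in ConvNeXt follows a hierarchical structure:

$$F_0 = \mathrm{Conv}_{P}(X)$$

where $\mathrm{Conv}_{P}$ is a convolutional layer with a stride of $P$.

$$F_{\mathrm{dw}} = \mathrm{DWConv}(F_0) = K * F_0$$

where $K$ is the convolution kernel, $*$ represents the convolution operation, and $\mathrm{DWConv}(\cdot)$ denotes the depth-wise convolution function.

$$\mathbf{f} = \frac{1}{H'W'} \sum_{i=1}^{H'} \sum_{j=1}^{W'} F_{i,j}$$

where $F_{i,j}$ is the feature map activations at spatial locations $(i, j)$ and $H' \times W'$ is the spatial size of the final feature map.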
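The two ends of this pipeline can be illustrated with a small sketch: how a stride-$P$ convolution shrinks the spatial grid, and how activations at all spatial locations are averaged into one feature vector. The averaging step is an assumption based on how ConvNeXt usually produces its final features, and the 224 × 224 input size and patch size of 4 are illustrative values, not settings reported in the study.

```python
def strided_output_size(height, width, patch):
    """Spatial size after a non-overlapping convolution with kernel = stride = patch."""
    return height // patch, width // patch


def global_average_pool(feature_map):
    """Average activations over all spatial locations.

    feature_map[i][j] is a list of C channel activations at location (i, j).
    """
    h, w = len(feature_map), len(feature_map[0])
    channels = len(feature_map[0][0])
    return [
        sum(feature_map[i][j][c] for i in range(h) for j in range(w)) / (h * w)
        for c in range(channels)
    ]


# Assumed example: a 224 x 224 image and a stride of 4.
out_h, out_w = strided_output_size(224, 224, 4)

# A tiny 2 x 2 feature map with 2 channels.
toy_map = [[[1.0, 2.0], [3.0, 4.0]],
           [[5.0, 6.0], [7.0, 8.0]]]
feature_vector = global_average_pool(toy_map)
```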

3.4.2. Fake Profile Image Classification

To classify images as authentic or fake, the current study introduces a fully connected classification head on top of ConvNeXt’s feature representations. Given the extracted feature vector $\mathbf{f}$, the final classification output $y$ is computed as

$$y = \mathrm{softmax}(W\mathbf{f} + b)$$

where $W$ and $b$ are learnable parameters, and the softmax activation function is defined as

$$\mathrm{softmax}(z)_k = \frac{e^{z_k}}{\sum_{j} e^{z_j}}$$
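A fully connected head followed by softmax can be sketched as follows. The weights, bias, and feature values are made up for illustration, and the two output classes are assumed to be ordered as (authentic, fake).

```python
import math


def softmax(logits):
    """Numerically stable softmax: subtract the maximum before exponentiating."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(features, weights, bias):
    """Linear layer (one weight row per class) followed by softmax."""
    logits = [
        sum(w * f for w, f in zip(row, features)) + b
        for row, b in zip(weights, bias)
    ]
    return softmax(logits)


# Hypothetical 2-dimensional feature vector and 2-class head.
probs = classify([1.0, 2.0], weights=[[1.0, 0.0], [0.0, 1.0]], bias=[0.0, 0.0])
predicted_class = probs.index(max(probs))
```

The outputs are non-negative and sum to one, so they can be read as class probabilities.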

3.5. Heterogeneous Graph Attention Network (Hetero-GAT)

Hetero-GAT is employed to model complex relationships within social network data, which includes multiple types of nodes such as users, posts, images, and interactions like follows, likes, and comments. Each node is embedded with relevant features (e.g., user activity, post content, image metadata), and the edges represent the relationships or interactions between these nodes. The attention mechanism in Hetero-GAT allows the model to dynamically adjust the importance of each relationship based on the context of the task. By analyzing these multi-modal connections, Hetero-GAT is able to capture the distinctive characteristics of fake accounts—such as abnormal engagement patterns, unusual user connections, or irregular content sharing behaviors—providing valuable insights into profile authenticity. This enables the detection of suspicious behavior, such as coordinated manipulation or bot-like activity, that might indicate the presence of fake profiles.

The Hetero-GAT model facilitates the detection of fake profiles by analyzing user connections, content engagement, and posting behavior, thereby capturing complex social interactions. The model represents a heterogeneous social network, where nodes correspond to users and posts, and edges encode interactions such as likes and reposts. Each node $v$ (user or post) is initialized with an embedding vector:

$$h_v^{(0)} = x_v$$

where $x_v$ represents the initial feature vector extracted from user metadata (e.g., activity patterns) and post embeddings.

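To model relationships in the network, Hetero-GAT applies an attention-based message-passing mechanism, computing attention coefficients for each edge type $(A, r, B)$, where nodes of type $A$ interact with nodes of type $B$ via relation $r$:

$$\alpha_{vu}^{r} = \frac{\exp\left(\mathrm{LeakyReLU}\left(a_r^{\top}\left[W_r h_v \,\Vert\, W_r h_u\right]\right)\right)}{\sum_{k \in \mathcal{N}_r(v)} \exp\left(\mathrm{LeakyReLU}\left(a_r^{\top}\left[W_r h_v \,\Vert\, W_r h_k\right]\right)\right)}$$

where $W_r$ is a learnable weight matrix, $a_r$ is an attention vector, $\Vert$ denotes concatenation, and $\mathcal{N}_r(v)$ is the set of neighbors under relation $r$. This mechanism enables the model to distinguish between different types of social interactions while learning the importance of each connection.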
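The attention step for a single relation can be sketched with plain Python lists. This follows the standard GAT formulation (a LeakyReLU score normalized with softmax over the neighbors) and, as a simplifying assumption, shares one projection matrix between the target node and its neighbors. All names and numbers are hypothetical.

```python
import math


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def attention_coefficients(h_target, neighbor_feats, w_r, a_r):
    """Softmax-normalized attention of one target node over its neighbors under relation r."""
    z_target = matvec(w_r, h_target)
    scores = []
    for h_n in neighbor_feats:
        concat = z_target + matvec(w_r, h_n)   # list concatenation [W h_v || W h_u]
        scores.append(leaky_relu(sum(a * c for a, c in zip(a_r, concat))))
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical example: identity projection, two neighbors of one user node.
w_r = [[1.0, 0.0], [0.0, 1.0]]
a_r = [0.0, 0.0, 1.0, 0.0]
alphas = attention_coefficients([1.0, 0.0], [[1.0, 0.0], [0.0, 0.0]], w_r, a_r)
```

A heterogeneous model keeps one such set of parameters per relation type, which is what lets it treat a "like" differently from a "repost".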

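To update node representations, the model aggregates attention-weighted messages across different relation types:

$$h_v^{(l+1)} = \phi\left(\sum_{r} \sum_{u \in \mathcal{N}_r(v)} \alpha_{vu}^{r} W_r h_u^{(l)}\right)$$

where $l$ represents the layer index and $\phi$ is a non-linear activation. The final user embeddings $h_v^{(L)}$ are passed through a fully connected layer to classify profiles as real or fake:

$$\hat{y}_v = \sigma\left(W_c h_v^{(L)} + b_c\right)$$

where $W_c$ and $b_c$ are learnable parameters, and $\sigma$ is a sigmoid activation function for binary classification. The model is optimized using BCE loss over the $N$ training profiles:

$$\mathcal{L}_{\mathrm{BCE}} = -\frac{1}{N}\sum_{i=1}^{N}\left[y_i \log \hat{y}_i + (1 - y_i)\log\left(1 - \hat{y}_i\right)\right]$$

where $y_i$ is the actual label (1 for fake, 0 for real) and $\hat{y}_i$ is the predicted probability.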
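Binary cross-entropy for the fake/real labels can be written in a few lines. The clipping constant `eps` is an implementation detail assumed here to avoid taking the logarithm of zero; the example labels and probabilities are made up.

```python
import math


def bce_loss(y_true, y_prob, eps=1e-12):
    """Mean binary cross-entropy; labels are 1 (fake) or 0 (real)."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)   # clip to keep log() finite
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


# Hypothetical batch: two fake and two real profiles.
labels = [1, 1, 0, 0]
confident = bce_loss(labels, [0.9, 0.8, 0.1, 0.2])
uncertain = bce_loss(labels, [0.5, 0.5, 0.5, 0.5])
```

An uninformative prediction of 0.5 for every profile gives a loss of ln 2, while confident and correct predictions drive the loss toward zero.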

This Hetero-GAT model is integrated into the RoBERTa-ConvNeXt-HeteroGAT framework, complementing RoBERTa for textual analysis and ConvNeXt for image-based analysis. The framework employs an attention-based late fusion mechanism to adaptively weight modality contributions, thereby improving detection accuracy, interpretability, and resilience against multi-modal adversarial attacks, which are key for operational security systems. By effectively modelling anomalous patterns in social networks, Hetero-GAT significantly improves fake profile identification, outperforming conventional classifiers. The modular architecture and efficient preprocessing make this framework well suited for real-time deployment in industrial social platforms, offering robust, explainable, and secure identity validation at scale.
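One way to picture attention-based late fusion is as a softmax-weighted average of the per-modality predictions. The sketch below is an assumption about the general shape of such a mechanism, not the study's implementation: in a trained model the relevance scores would be produced by learned parameters, whereas here they are fixed, hypothetical numbers.

```python
import math


def fuse(modality_probs, relevance_scores):
    """Combine per-modality fake probabilities with softmax attention weights."""
    m = max(relevance_scores.values())
    exps = {name: math.exp(score - m) for name, score in relevance_scores.items()}
    total = sum(exps.values())
    weights = {name: e / total for name, e in exps.items()}
    fused = sum(weights[name] * modality_probs[name] for name in modality_probs)
    return fused, weights


# Hypothetical per-modality outputs for a single profile.
probs = {"text": 0.9, "image": 0.6, "graph": 0.3}

# Equal relevance reduces the fusion to a plain average.
fused_equal, weights_equal = fuse(probs, {"text": 0.0, "image": 0.0, "graph": 0.0})

# Up-weighting the graph modality pulls the result toward its prediction.
fused_graph, weights_graph = fuse(probs, {"text": 0.0, "image": 0.0, "graph": 2.0})
```

Inspecting the weights for a given profile shows which modality drove the decision, which is one source of the interpretability mentioned above.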

5. Results

The proposed RoBERTa-ConvNeXt-HeteroGAT model shows a substantial improvement in fake profile detection compared to traditional machine learning and deep learning classification models. The multi-modal fusion strategy proved highly effective in jointly capturing text semantics, image authenticity, and complex social interactions, leading to superior results across all evaluation metrics. To evaluate the proposed model, a comparison was carried out against classic machine learning and deep learning baselines, including SVM, RF, LSTM, RoBERTa, ConvNeXt, and Hetero-GAT. The results show that the proposed architecture achieves state-of-the-art performance, surpassing all baselines on the essential metrics. The model achieves an accuracy of 98.9%, precision of 98.4%, recall of 98.8%, and F1-score of 98.6%. Its discriminative capability is further validated by an AUC-ROC of 99.2%. These findings affirm that multi-modal feature fusion enhances the robustness of detection. A more detailed comparison is presented in Table 4.

Figure 5 compares the proposed RoBERTa-ConvNeXt-HeteroGAT model with the baseline classifiers, i.e., SVM, RF, LSTM, RoBERTa, ConvNeXt, and Hetero-GAT, in terms of accuracy, precision, recall, F1-score, and AUC-ROC. The proposed model achieves the best results on every metric, with 98.9% accuracy, 98.4% precision, 98.8% recall, a 98.6% F1-score, and a 99.2% AUC-ROC, meaning it outperforms every other model. The findings validate the framework as effective and robust in accurately identifying fake accounts in social networks, making it a strong candidate for AI-driven cybersecurity solutions in online identity verification.

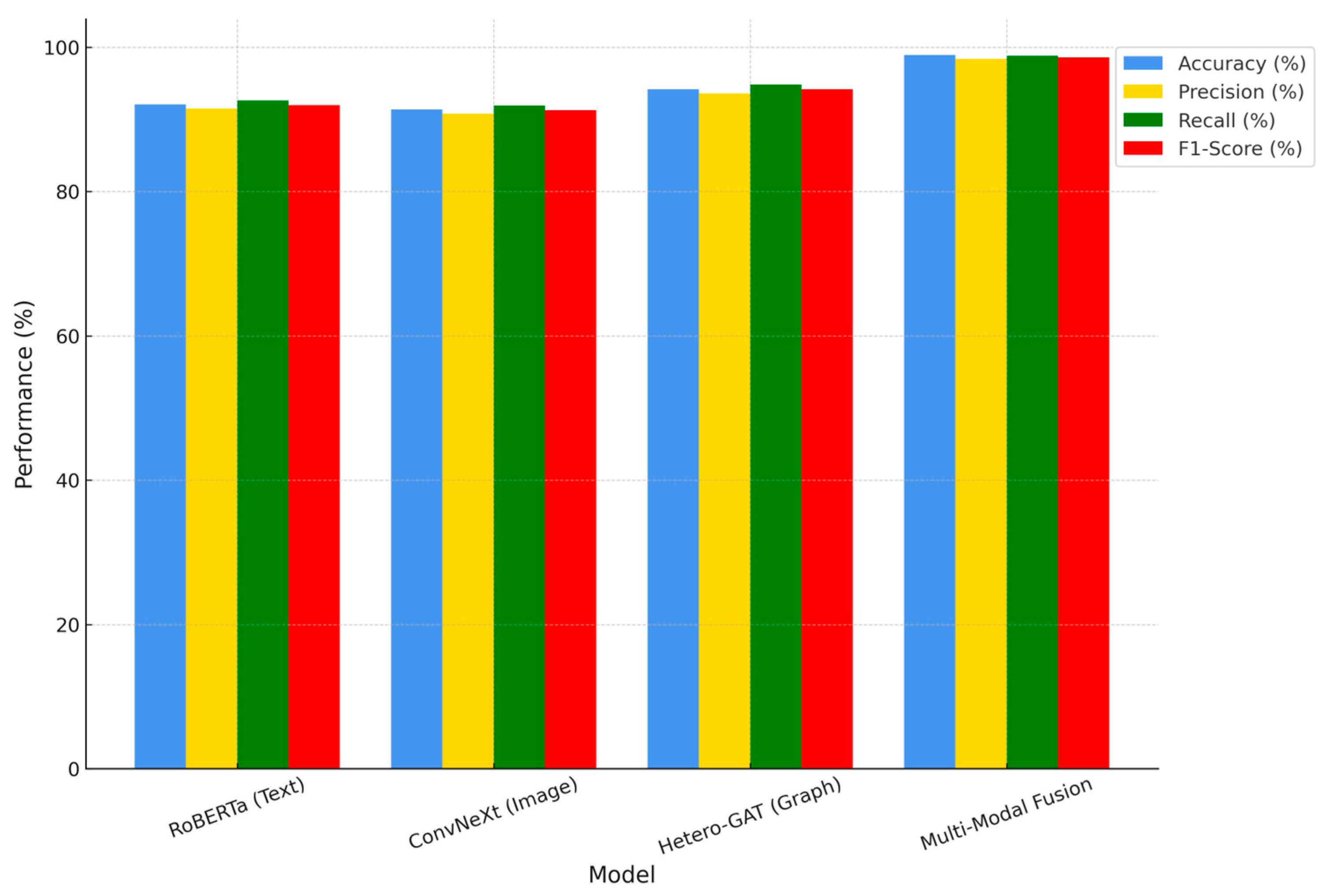
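The reported metrics are related by standard formulas, which can be used as a quick consistency check. In the sketch below the confusion-matrix counts are made up for illustration; only the precision and recall passed to `f1_from` are the values reported above.

```python
def classification_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall, and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return accuracy, precision, recall, f1


def f1_from(precision, recall):
    """F1 is the harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts, not taken from the study.
accuracy, precision, recall, f1 = classification_metrics(tp=90, fp=10, fn=5, tn=95)

# Reported precision (98.4%) and recall (98.8%) imply the reported F1-score.
implied_f1 = f1_from(0.984, 0.988)
```

Rounded to one decimal place, the implied F1-score is 98.6%, which agrees with the reported value.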

The individual contributions of the text-based (RoBERTa), image-based (ConvNeXt), and graph-based (Hetero-GAT) components were evaluated in an ablation study. As Table 5 shows, the individual modalities are competitive, with Hetero-GAT performing best as a single modality, but the multi-modal fusion approach outperforms all of them, achieving 98.9% accuracy, 98.4% precision, 98.8% recall, and a 98.6% F1-score. These results confirm the effectiveness of heterogeneous integration in detecting sophisticated fake profiles.

Figure 6 compares the individual modality-based models, namely RoBERTa (text), ConvNeXt (image), and Hetero-GAT (graph), with the multi-modal fusion framework. Each model performs well when evaluated separately, but its predictive ability is weaker than that of the integrated method. Multi-modal fusion is the best-performing model on all measures, achieving 98.9% accuracy, 98.4% precision, 98.8% recall, and a 98.6% F1-score. This demonstrates the effectiveness of a holistic approach to fake profile detection, which incorporates textual, visual, and social graph data. The results indicate that combining multiple modalities improves the strength and reliability of detecting cybersecurity threats in real-life scenarios.

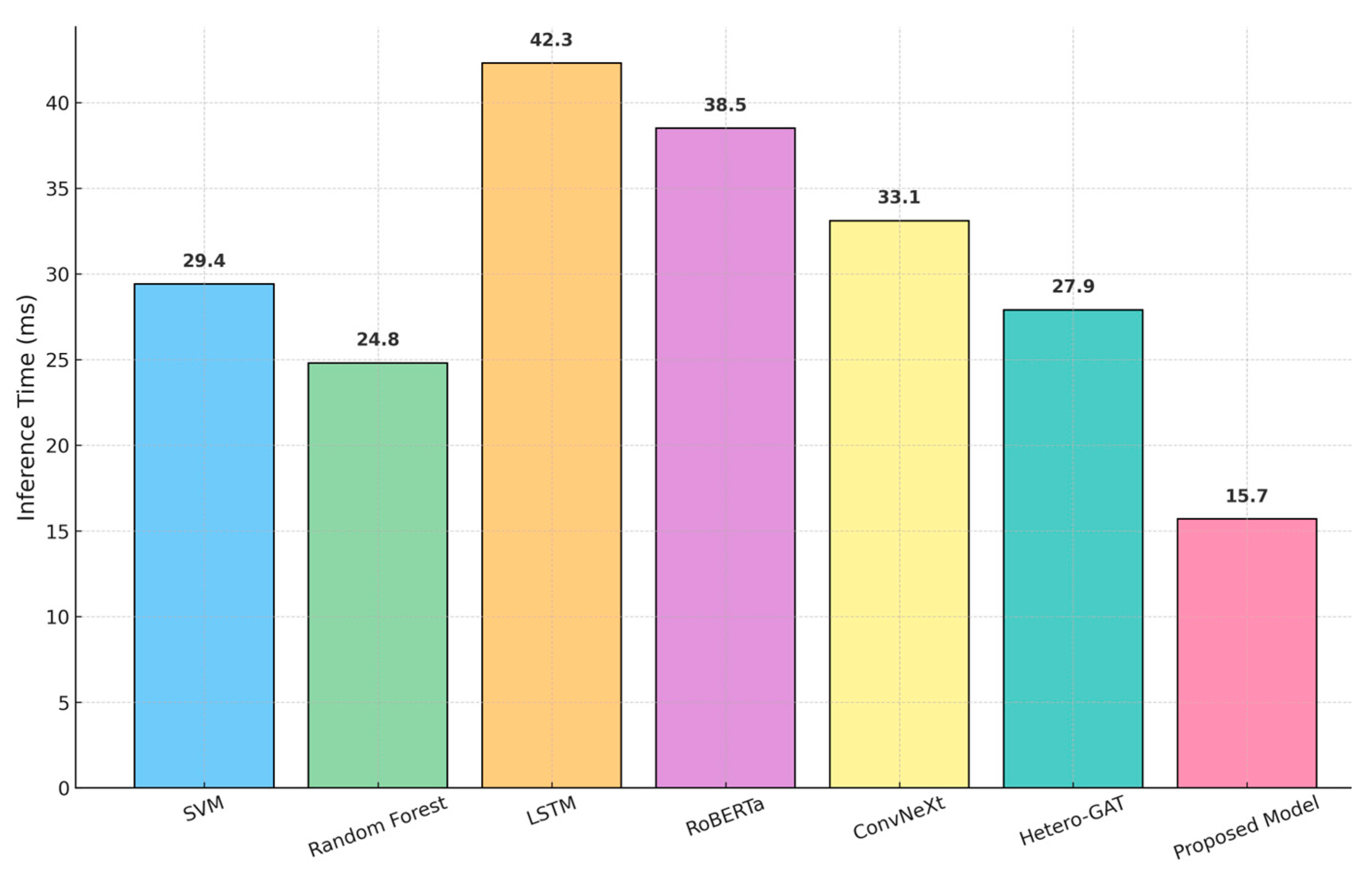

The RoBERTa-ConvNeXt-HeteroGAT model has outstanding real-time efficiency, processing each profile in 15.7 milliseconds. This inference time is lower than that of all baseline models, including SVM (29.4 ms), RF (24.8 ms), LSTM (42.3 ms), RoBERTa (38.5 ms), ConvNeXt (33.1 ms), and Hetero-GAT (27.9 ms). The comparison in Table 6 supports its applicability to large-scale social sites. The model operates with low latency, ensuring the timely identification of threats, which makes it a suitable tool for high-throughput, AI-based cyber environments that demand speed and precision in identifying fake profiles.
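The latency figures can be turned into throughput and relative speed-up with simple arithmetic. The sketch below uses only the per-profile times reported above and assumes a single worker that processes profiles one after another, with no batching or parallelism.

```python
# Per-profile inference times in milliseconds, as reported in the text.
LATENCY_MS = {
    "SVM": 29.4, "RF": 24.8, "LSTM": 42.3, "RoBERTa": 38.5,
    "ConvNeXt": 33.1, "Hetero-GAT": 27.9, "Proposed": 15.7,
}


def profiles_per_second(latency_ms):
    """Sequential throughput of one worker (assumption: no batching)."""
    return 1000.0 / latency_ms


def speedup_over(baseline, proposed="Proposed"):
    """How many times faster the proposed model is than a baseline."""
    return LATENCY_MS[baseline] / LATENCY_MS[proposed]


throughput = profiles_per_second(LATENCY_MS["Proposed"])
fastest_baseline = min(
    (name for name in LATENCY_MS if name != "Proposed"), key=LATENCY_MS.get
)
speedup_vs_fastest = speedup_over(fastest_baseline)
```

Under this assumption the proposed model handles about 64 profiles per second per worker and is roughly 1.6 times faster than the quickest baseline (RF).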

A comparison of the inference times of SVM, RF, LSTM, RoBERTa, ConvNeXt, Hetero-GAT, and the proposed RoBERTa-ConvNeXt-HeteroGAT framework is shown in Figure 7. The proposed model has the shortest processing time (15.7 milliseconds per profile), well below that of the other approaches. This indicates that it is well suited for real-time applications in which quick decision-making is essential. The proposed architecture offers lower-latency operation than standard machine learning and deep learning models. It can therefore be highly effective in large-scale, AI-powered cybersecurity systems that require fast and reliable detection of fake accounts.

The proposed RoBERTa-ConvNeXt-HeteroGAT model was evaluated against various adversarial attacks, including textual perturbations, image manipulations, and artificially designed engagement patterns. As Table 7 demonstrates, the model’s performance remains stable in all adversarial situations. The accuracy drop does not exceed 4.7%, observed for combined attacks, which is a sign of high resilience. In particular, accuracy declines by 1.3% under textual perturbations, 2.1% under image manipulations, and 3.4% under synthetic engagement. These findings confirm the model’s potential for secure deployment in real-world systems, demonstrating high detection accuracy even under adversarial conditions.

The scalability of the proposed framework was tested by training and testing it on three dataset sizes ranging from 10,000 to 150,000 social media profiles. As shown in Table 8, the performance improves as the dataset size increases. The model performs well at 10,000 profiles, achieving an accuracy of 96.1%. When the entire dataset of 150,000 profiles is used, the accuracy increases to 98.9%. The same tendency is observed for the precision, recall, and F1-score values. These findings demonstrate that the model generalizes well to large-scale social media settings, indicating that it is suitable for industrial use cases that require high-throughput and scalable AI-based detection of fake profiles.

Figure 8 presents a comprehensive assessment of the model’s robustness and scalability. The left panel illustrates the degradation in accuracy under various adversarial attack scenarios. The proposed RoBERTa-ConvNeXt-HeteroGAT model demonstrates strong resilience with minimal performance loss: 1.3% for textual perturbations, 2.1% for image manipulations, 3.4% for synthetic engagement, and 4.7% under combined attacks. The right panel illustrates the scalability of the model, where accuracy rises to 98.9% as the training set size increases from 10,000 to 150,000 profiles. These findings confirm the model’s ability to withstand adversarial attacks and scale to realistic, high-traffic production social media settings.

The approach proposed in this study, based on RoBERTa-ConvNeXt-HeteroGAT, is a highly precise, computationally efficient, and adversarially robust mechanism for identifying fake profiles in industrial social networks. The model outperforms other methods by combining multi-modal data, including textual content, profile images, and graph-based behaviors, as input. The model achieves state-of-the-art performance, and the performance gain is substantial compared to single-modality deep learning and conventional machine learning methods. This architecture not only improves detection accuracy but also makes the system robust to adversarial manipulation. This demonstrates the effectiveness of multi-modal AI measures in combating misinformation and online security attacks, while providing a secure and scalable framework for identity authentication across online platforms.

Beyond the model’s accuracy, it is essential to track metrics that reflect its business and marketing relevance. Key Performance Indicators (KPIs) should include the percentage reduction in fake follower counts for brands, increases in user trust scores (measured through surveys), and a decrease in misinformation spread across the platform. These outcomes are valuable in demonstrating the model’s impact on brand credibility and consumer trust. Such KPIs can be reported to stakeholders to highlight the platform’s commitment to security and authenticity. They can also be leveraged in brand communication strategies, emphasizing the platform’s role in fostering a trustworthy digital ecosystem.