Abstract

This study introduces a novel method that merges propagation-based transfer learning with word embeddings for rumor detection. This approach aims to use data from languages with abundant resources to enhance performance in languages with limited availability of annotated corpora in this task. Furthermore, we augment our rumor detection framework with two supplementary tasks—stance classification and bot detection—to reinforce the primary task of rumor detection. Utilizing our proposed multi-task system, which incorporates cascade learning models, we generate several pre-trained models that are subsequently fine-tuned for rumor detection in English and Spanish. The results show improvements over the baselines, thus empirically validating the efficacy of our proposed approach. A Macro-F1 of 0.783 is achieved for the Spanish language, and a Macro-F1 of 0.945 is achieved for the English language.

1. Introduction

For many years, widespread Internet connectivity and the rise of social networks have empowered people to publish and disseminate information in real time. However, despite the immense potential for social good, misinformation has become increasingly prominent in public awareness [1]. Misinformation can be strategically deployed to advance specific agendas, to the benefit of organizations that want to manipulate audiences [2]. The consequences of such misinformation are often underestimated, and they include undermining the trustworthiness of institutions and leaders [3].

Fact-checking initiatives aim to mitigate the impact of false information [4]. By employing hands-on methods to verify the sources, fact-checkers strive to establish the accuracy and truthfulness of the content. To achieve this, they identify the origin of the information and compare it with verified facts. This verification process can be time-consuming and labor-intensive, often requiring journalists to invest significant resources. Given the enormous amount of content that circulates on social networks and the rapid rate with which it spreads, fact-checkers face a constant race to provide accurate and timely verification [5].

To support the fact-checking process, various automated techniques have been developed to detect rumors [6,7,8,9,10]. These automated systems can complement existing fact-checking efforts by highlighting potential false claims, allowing fact-checkers to focus their resources on verifying these high-priority items [11].

Many techniques for automatically detecting rumors rely on machine learning models. These models not only analyze the original content, but also consider user reactions to it [6,7,9], which is why they are often referred to as “propagation-based models” [11]. Most of these techniques focus on Twitter (now X), given its importance as a news-centric social media platform that primarily deals with political and related issues.

Various studies have demonstrated a robust relation between the features associated with the spread of information and the accuracy of the information itself [12,13,14]. For example, Mendoza et al. [12] noted that false content is scrutinized and questioned more than factual content on social networks. Consequently, while some techniques treat the detection of rumors as a simple source categorization problem [15], the most effective methods incorporate the social context. For example, the CSI model [7] considers the user interaction structure to better represent how a rumor propagates. Similarly, Ma et al. [9,10] use neural networks to analyze the content and conversation dynamics surrounding a likely rumor, representing these interactions as a propagation tree.

Despite progress in this field, most developed models are primarily designed for English [11]. Research has shown that adapting these existing frameworks to languages with limited resources is challenging [16]. This difficulty stems mainly from the high costs of creating and annotating datasets suitable for each specific language [17]. To address this issue, we investigate the ability to transfer knowledge from English-based models to languages with fewer resources in these tasks. According to Shi et al. [18], cross-lingual and cross-domain rumor detection is an emerging task that involves adapting features of well-resourced data to identify rumors in low-resource languages and domains. This task is challenging due to the unique characteristics of each language and domain.

The rationale for tackling this challenge using propagation-based models is as follows. Although rumor detection models relying on source verification can be heavily influenced by cultural and news-related context [15], propagation-based models are expected to be more resilient in transfer learning scenarios. This is because there has been no evidence to suggest that the dynamics of rumor propagation differ between countries and cultures. Furthermore, recent studies have shown similarities in propagation dynamics and user interactions between English [13] and Spanish language settings [14].

This research outlines the creation and empirical assessment of an innovative technique to detect rumors. Using cross-lingual cross-domain learning methods, we employ tools and methodologies to generate word embeddings, specifically those based on the transformer encoder architecture [19] used to create BERT [20]. Our primary goal is to optimally leverage the limited resources available in less commonly spoken languages while benefiting from the extensive resources in widely spoken languages. As part of our ongoing study, we focus on Spanish as the target language and English as the resource-rich language from which we draw various insights.

The core concept of our proposal is to integrate resources for various tasks related to rumor detection within the framework of transfer learning. Working in a multi-task setting, we take advantage of the availability of multiple datasets in English to facilitate the transfer of learning to Spanish. Our research demonstrates that using multiple datasets in English for complementary tasks improves the performance of the rumor detection model in the target language. Specifically, our proposal defines a multi-task setting comprising bot detection, stance classification, and rumor detection modules. As discussed later, multi-task learning should not be confused with joint learning. Our work focuses on what is called cascade-based learning, which is a sequential approach.

To favor reproducibility, the source code for the models, training, and experimental setup is available online at https://github.com/eprovidel/rumor-detection (accessed on 22 June 2025).

This paper offers three contributions, as outlined below:

- To the best of our knowledge, we are the first to integrate multi-task and cross-lingual learning in the context of rumor detection. Related work has concentrated on traditional NLP tasks [21,22], or employed just one of these techniques, but not both [23,24].

- To the best of our knowledge, our research is the first to investigate the role of “bot detection” [25,26,27,28], within a multi-task architecture to improve rumor detection efforts. Previous studies have mainly focused on the use of “stance classification” for rumor detection [29,30,31].

- We introduce a methodology that effectively utilizes diverse datasets—varying in structure, language, and task—without requiring consolidation into a single, fully annotated dataset. Unlike previous work, our experimental approach leverages existing datasets in their current form. It employs a data transfer strategy from high-resource languages, particularly English, to Spanish.

The organization of this paper is as follows. Section 2 reviews related work, explicitly focusing on rumor detection (Section 2.1), multi-task learning (Section 2.2), and cross-lingual learning (Section 2.3). Next, Section 3 presents the core definitions necessary for the work and describes the proposed model, starting with a general overview followed by detailed descriptions of each stage. The experimental results are presented in Section 4, which describes the datasets used, the results obtained, and the comparison with the baseline models. In Section 5, we discuss the main findings and limitations of the study, a comparison of the results considering base models, a comparison using different metrics of the text, and an ablation study. Finally, Section 6 summarizes the impact, reach, and limitations of our research and identifies avenues for future research.

2. Related Work

2.1. Rumor Detection

Initially, models were based on the features of messages, including text-, user-, and network-based attributes [6,32,33,34]. Classic machine learning methods were predominant, such as SVM [32,35,36,37], Decision Trees [32], Bayesian networks [32], Logistic regression [35,37,38], and Random Forest [35,36,38]. Subsequently, patterns associated with the credibility of messages were identified [6], and the relationship between user stance and rumor veracity was explored [12,34,39].

Then, with the rise of neural networks, these began to be used for rumor detection. One of the pioneering works is that of Ma et al. [40], who used a tanh-Recurrent Neural Network (tanh-RNN), LSTM, and GRU. This was followed by works using CNN [41,42], LSTM [42,43], Adversarial Networks [44], Feedforward Networks [43], and Ensembles [45,46]. In the work of Providel and Mendoza [47], the performance of ten neural network models was compared, including LSTM, Stacked LSTM, GRU, Stacked GRU, Bidirectional LSTM, Bidirectional Stacked LSTM, Bidirectional GRU, Bidirectional Stacked GRU, CNN1D, and Recurrent CNN, for rumor detection in English.

Kwon et al. [48] studied the existence of patterns that incorporate message propagation and message replies over time. Zubiaga et al. [49] explored the use of contextual learning during an event, analyzing the dynamics of the messages considering the message to classify and previous messages. Following this line, Ma et al. [50] used the structure of the conversation, representing it as a tree, where the first message of the conversation corresponds to the root, and all the replies and reposts are represented by the nodes of the tree. This topic was also studied by Vosoughi et al. [13].

In the work of Li et al. [51], a model considers the network of friends, viewed as global information, and the structure of conversations on social networks, as local information, demonstrating that these two sources of information are useful for the rumor detection task. Similarly, Ma and Gao [52], in their work, capture contextual relationships and patterns of truthfulness in conversational structures. Along the same lines, Zhao et al. [53] proposed a web-based system that contains two modules: fact-checking and rumor detection, using a bidirectional convolutional network model based on bidirectional graphs.

A topic that is similar to rumor detection, but in the context of reviews on e-commerce and social media platforms, is the Fake Review Detection problem. Several techniques and methodologies have been proposed, such as graph-based neural networks [54]. A review on this topic is given by the work of Mohawesh et al. [55].

For a detailed study of the techniques of rumor detection, stance classification, bot detection, and the combinations thereof, we refer the reader to our previous review [16] that considered 97 articles and serves as the theoretical background for our current research.

2.2. Multi-Task Learning in Rumor Detection

Multi-task learning, first introduced by Caruana [56], involves cooperatively training models across multiple tasks, typically focusing on a primary task enhanced by auxiliary tasks. Incorporating auxiliary tasks can improve the performance on the main task by enabling the model to learn more robust and universal representations [29,57]. Although Caruana originally proposed joint learning, subsequent research introduced methods such as alternate training and cascade learning [58]. We therefore understand multi-task learning broadly as the improvement of a primary task through the support of auxiliary tasks.

Early multi-task approaches to rumor detection typically combined rumor identification with stance classification [30]. Note that [30] does not use bot detection as an auxiliary task. Controversial posts often elicit a range of responses, forming identifiable patterns that aid in rumor detection. Ma et al. [30] proposed two GRU-based architectures: the Uniform Shared-Layer Architecture (MT-US) and the Enhanced Shared-Layer Architecture (MT-ES), the latter employing task-specific layers.

Building on this idea, Kochkina et al. [29] distinguished between veracity classification and rumor detection, integrating rumor detection as the main task and veracity and stance classification as auxiliary tasks. Their model featured a shared layer followed by task-specific LSTM, dense, and softmax layers, and refined word embeddings with pre-trained word2vec [59]. They also evaluated a model named NileTRG* [60], employing a linear SVM based on the characteristics of the bag of words and the position.

Subsequent work further explored multi-task architectures. Li et al. [61] developed a model that uses a shared LSTM and task-specific layers, combining their outputs to improve accuracy prediction without requiring alternate training. Similarly, Islam et al. [62] introduced “RumorSleuth,” which leverages a Variational Autoencoder (VAE) [63] for modeling user characteristics and fusing task outputs.

Wu et al. [24] enhanced multi-task learning by combining word2vec and positional embeddings [20] and introducing an attention/gate mechanism to optimize cross-task learning. Wei et al. [64] proposed Hierarchical-PSV, integrating Conversational-GCN for stance and Stance-Aware RNN for veracity prediction.

More recent advances include a Coupled Transformer [65], leveraging user stance via BERT for thread-level interaction modeling. Along the same lines, Choudhry et al. [66] introduced emotion classification as an auxiliary task, based on the theories of Ekman [67] and Plutchik [68], which significantly improves disinformation detection.

Other notable models include Chen et al. [69]’s reinforcement-learning-based model for early rumor detection, Li et al. [70]’s hierarchical heterogeneous graph for cross-topic feature propagation, and Jiang et al. [71]’s Multi-task Multimodal Rumor Detection Framework (MMRDF), which integrates text and image classifiers.

Expanding task diversity, Wan et al. [72] developed SC-HA-MTL, combining content- and writing style-based rumor detection with hierarchical attention. Similarly, Shahriar et al. [73] generalized deception detection in news, tweets, and reviews using shared and domain-specific branches with LSTM and BERT.

Focusing on graph-based techniques, Abulaish et al. [74] used sBERT and Graph Attention Networks (GAT) to capture user stance and predict the veracity of rumors, while Liu et al. [75] integrated shared and task-specific graph channels for modeling structural and textual features. Chen et al. [76] modeled subthread and stance–rumor interactions to improve rumor detection performance.

Exploring transfer effects in multi-task settings, La-Gatta et al. [77] demonstrated that sentiment analysis, fake news detection, and topic detection benefited from multi-task learning, whereas stance detection faced negative transfer.

2.3. Cross-Lingual Learning

Cross-lingual learning has been applied to several NLP tasks, including misinformation detection for e-commerce [78], hate speech detection [79], and emotion detection [23]. For example, Lin et al. [21] adopted a multi-task learning strategy to minimize reliance on large annotated datasets, targeting POS tagging, NER, and language modeling. Their model employed shared neural layers between languages and tasks, using character-level convolutional embeddings (CharNN) and pre-trained embeddings for Spanish and English, with extensions to Russian, Dutch, and Chechen. Their alternating training strategy mirrored that of Ma et al. [30] and aligned with the objectives of our study, albeit focusing on general NLP rather than rumor detection.

Expanding on these ideas, Pikuliak et al. [22] proposed a zero-shot cross-lingual and multi-task framework. They introduced a tabular task–language association and the notion of “coupling models,” which enhanced the stability and performance during training. Similarly, Ahmad et al. [23] leveraged English resources to develop emotion detection models for Hindi, employing multilingual embeddings and applying layer freezing techniques to prevent catastrophic forgetting.

Focusing on hate speech detection, Rodriguez et al. [79] utilized LaBSE embeddings [80] for zero-shot learning, improving on the SemEval 2019 benchmarks. For rumor detection, Tian et al. [81] implemented a zero-shot learning framework based on MultiFit [82], using a self-training loop for the refinement and evaluation of English and Chinese datasets. Notice that even though this work has similar goals to ours, the authors worked exclusively on the cross-lingual and transfer learning features, but did not use any multi-task approach.

More recent work has advanced cross-lingual rumor detection. For example, Lin et al. [83] proposed ACLR-BiGCN, which combines adversarial contrastive learning with graph convolutional networks for detection based on conversations in datasets in English and Chinese. Similarly, Awal et al. [84] introduced MUSCAT, integrating hierarchical conversation representation, multi-head co-attention focused on root tweets, and multilingual pre-trained models, supplementing data through translation and creating the SEAR dataset.

The transfer of knowledge from English to Korean was tackled by Han [85] through a model comprising a news encoder, a language discriminator, and a fake news detector, utilizing adversarial learning and building new datasets for evaluation. Along the same lines, Shi et al. [18] proposed the DES framework for cross-lingual and cross-domain rumor detection, focusing on disentangling emotional and semantic features, adapting them across domains, and employing a joint self-training strategy, evaluated on datasets such as Weibo-COV19 and Twitter-COV19. Recently, Zhang et al. [86] introduced a model that utilizes conversation graph structures and an auxiliary self-supervised contrastive task to enhance rumor detection in low-resource and zero-shot scenarios, as tested in the same multilingual datasets.

After reviewing related studies, it was observed that the tasks of stance classification and rumor detection have been integrated under a multi-task learning approach. However, as far as is known, such an integration has not been applied to the task of bot detection. Regarding the architectures used, a consensus has been identified concerning the structure of the models, in which shared layers are included across tasks, allowing the learned knowledge to be transferred to support the learning of another task. Task-specific layers are also incorporated, where the learned knowledge is used exclusively for the corresponding task. In addition, a trend has been recognized toward the use of fine-tuning techniques on pre-trained models such as BERT or LaBSE. A recurring topic in several of the analyzed works is the use of cross-lingual models as a strategy to address the scarcity of resources in languages other than English, an aspect that is also addressed in the present study for the Spanish language.

3. Multi-Task Cross-Lingual Learning for Rumor Detection

3.1. Definitions

A scenario where someone posts a message on a social media platform is studied. This action can trigger various reactions, either replies to the original message or forwarded copies. Forwarding allows the message to be spread across the platform, potentially reaching a broad audience. In this context, we introduce the concept of “Propagation Trees” (PTs) [50]. A propagation tree is the structure of interactions triggered by a root message (the message that triggers the thread), denoted by $r$, that gives rise to multiple responses $m_1, m_2, \ldots, m_k$ from a set of users $U$.

To build a predictive model for rumor detection, we use a dataset comprising $n$ propagation trees, represented as $\mathcal{D} = \{PT_1, PT_2, \ldots, PT_n\}$. With these definitions in place, we can outline the specific tasks that our approach will focus on:

- Rumor detection task: This task aims to determine the veracity of the root message $r_i$ of a propagation tree $PT_i$. It has been shown that considering the complete propagation tree is helpful for this purpose; hence, $f(PT_i) \rightarrow y_i$. The labels used in $y$ vary between works, but a minimal definition is binary, such as $y_i \in \{\text{true}, \text{false}\}$.

- Stance classification task: This task focuses on classifying the position expressed by a user on the veracity of the root message. If $m_{ij}$ is the $j$-th response given in the propagation tree $PT_i$, then we can define $g(m_{ij}) \rightarrow z_{ij}$. The labels in $z$ are generally $z_{ij} \in \{S, D, Q, C\}$, with $S$ = support, $D$ = deny, $Q$ = query, and $C$ = comment, known as stance SDQC.

- Bot detection task: This task classifies whether a user that posts a message is a human or a bot. That is, $h(u) \rightarrow b$ with $u \in U$ and $b \in \{\text{human}, \text{bot}\}$.
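The definitions above can be made concrete with a small data structure. The following is only a minimal sketch: the class names, field names, and the example thread are hypothetical, and the binary veracity label set is an assumption chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Label sets for the three tasks. The names are illustrative assumptions.
VERACITY_LABELS = ("true", "false")
STANCE_LABELS = ("support", "deny", "query", "comment")  # SDQC
ACCOUNT_LABELS = ("human", "bot")


@dataclass
class Message:
    msg_id: str
    user_id: str
    text: str
    parent_id: Optional[str] = None  # None marks the root message
    stance: Optional[str] = None     # stance toward the root, if annotated


@dataclass
class PropagationTree:
    root: Message
    responses: List[Message] = field(default_factory=list)
    veracity: Optional[str] = None   # label attached to the root message

    def users(self):
        """Return the set of users taking part in the thread."""
        return {self.root.user_id} | {m.user_id for m in self.responses}

    def children_of(self, msg_id):
        """Return the direct replies to a given message."""
        return [m for m in self.responses if m.parent_id == msg_id]


# A toy thread: one root message and three responses.
tree = PropagationTree(
    root=Message("m0", "u1", "Breaking: bridge collapsed downtown"),
    responses=[
        Message("m1", "u2", "Is there a source for this?", "m0", "query"),
        Message("m2", "u3", "I was there, it is fine.", "m0", "deny"),
        Message("m3", "u2", "Thanks for checking.", "m2", "comment"),
    ],
    veracity="false",
)
```

In this representation, rumor detection assigns one label per tree, stance classification one label per response, and bot detection one label per user.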

3.2. Overall Description of the Proposal

Without loss of generality, we formulate the problem by focusing on Twitter’s interaction mechanisms. However, this methodology can be applied to any platform that supports a reply mechanism for preceding comments. This feature is sufficient to build a propagation tree starting from a root message. Other platforms where this methodology can be applied include TikTok, Facebook, YouTube, and Threads.

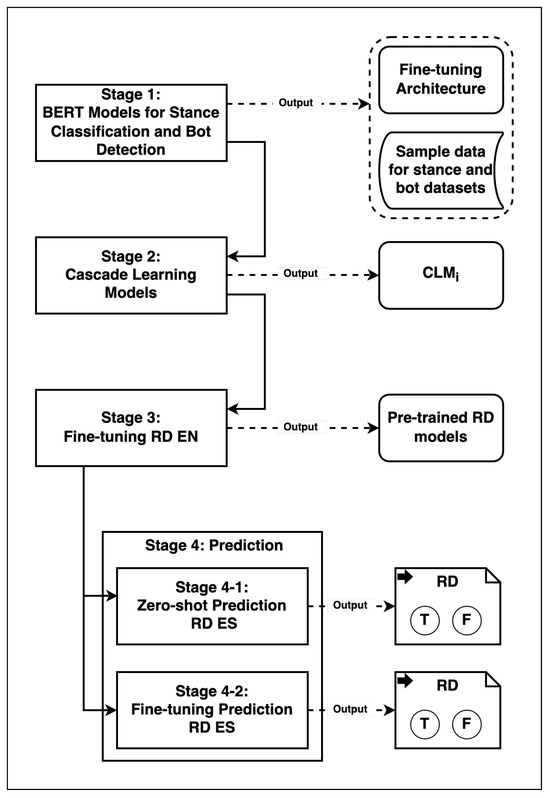

Our approach outlines four main stages, presented in Figure 1. The core concept of this methodology is to initially use individually trained models for specific auxiliary tasks. These models are then sequentially integrated to create a propagation-based model for rumor detection.

Figure 1. General architecture composed of four stages. Every stage generates an output and is used for the next stage, with the exception of the first stage.

Stage 1. We develop auxiliary task-specific models that are trained independently of the main model. This involves creating one model for stance classification and another for detecting bots. Both models employ BERT (specifically, BERT base-uncased) as their text encoder. For each task, the primary objective is to achieve results that are competitive with the state of the art, even though these implementations are not necessarily expected to produce the best outcomes for every task.

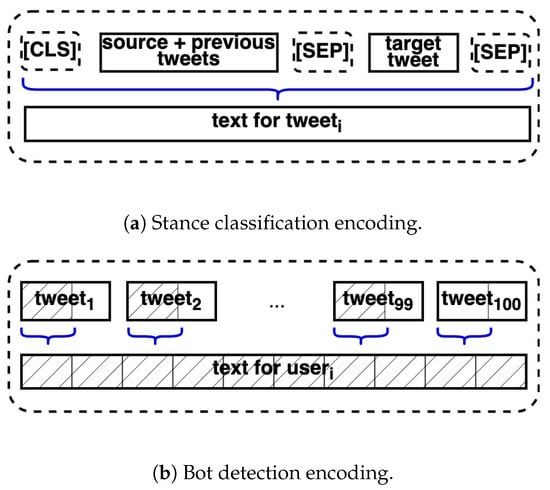

To develop the stance classification model, we adopt the BUT-FIT methodology, originally introduced by Fajcik et al. [87] in RumourEval 2019. This approach has proven to be the most effective BERT-based strategy in competitive settings. BUT-FIT capitalizes on the notion that the context of a specific response within a propagation tree can be accurately inferred from both the root message and the preceding message in the conversation. This method effectively circumvents the input size constraints of BERT, as it only requires considering three tweets at a time, as illustrated in Figure 2a. The context of the target tweet incorporates the root message and the preceding message. To encode these tweets, we concatenate the text of these messages and enclose them between the special CLS and SEP symbols of BERT. Then, the target message is encoded, and the instance is closed using the SEP symbol. We subsequently fine-tune BERT using this encoding scheme to produce the stance classification model.
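The encoding scheme described above can be sketched as plain string assembly. This is an illustration under assumptions: the function name is hypothetical, the special symbols are written out literally, and the case of a direct reply to the root (where there is no separate preceding message) is handled by passing an empty string.

```python
CLS, SEP = "[CLS]", "[SEP]"


def encode_stance_instance(root: str, previous: str, target: str) -> str:
    """Build one stance instance: context (root + previous) and target tweet.

    Layout: [CLS] root previous [SEP] target [SEP]
    """
    # The context is the root message followed by the preceding message.
    context = f"{root} {previous}".strip()
    return f"{CLS} {context} {SEP} {target} {SEP}"


example = encode_stance_instance(
    root="Bridge collapsed downtown",
    previous="Is there a source for this?",
    target="Local police deny it",
)
print(example)
```

In practice, a BERT tokenizer usually inserts these special symbols itself when it receives two text segments, so the context and the target would be passed as a pair rather than as a pre-assembled string.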

Figure 2. The encoding techniques employed for training two separate models: (a) the model for classifying stances, and (b) the model designed for detecting bots, using the 100 tweets per user available in the dataset.

Similarly to the efforts made in stance classification, we also aimed to identify a bot detection method that yields competitive results. To achieve this, the technique described by Polignano et al. [88] is adapted, leveraging BERT (BERT-base-uncased) to encode the text. We operated under the constraint that the only available data for training and evaluation consist of a sample of 100 tweets per user, as illustrated in Figure 2b. To avoid BERT’s input size limitations, a sampling strategy on the pool of 100 tweets is employed. This strategy is based on a study by Chi et al. [89], which suggests extracting a specific number of characters from the beginning, end, or middle of each sampled tweet. Our implementation allows for customization through parameters controlling the total number of sampled tweets and the number of tokens extracted from each tweet.
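The sampling strategy can be sketched as follows. This is a simplified illustration, not the implementation used in the study: the function names and default values are hypothetical, and whitespace splitting stands in for the WordPiece tokenization that BERT actually applies.

```python
import random


def take_tokens(tokens, n, where="head"):
    """Keep n tokens from the head, middle, or tail of a token list."""
    if n <= 0:
        return []
    if n >= len(tokens):
        return list(tokens)
    if where == "head":
        return tokens[:n]
    if where == "tail":
        return tokens[-n:]
    if where == "middle":
        start = (len(tokens) - n) // 2
        return tokens[start:start + n]
    raise ValueError(f"unknown position: {where}")


def build_user_input(tweets, num_tweets=10, tokens_per_tweet=20,
                     where="head", seed=0):
    """Sample tweets from a user's pool and truncate each one.

    num_tweets and tokens_per_tweet play the role of the two
    parameters mentioned in the text; their defaults are assumptions.
    """
    rng = random.Random(seed)  # fixed seed for reproducible sampling
    sampled = rng.sample(tweets, min(num_tweets, len(tweets)))
    pieces = [
        " ".join(take_tokens(tweet.split(), tokens_per_tweet, where))
        for tweet in sampled
    ]
    return " ".join(pieces)


# Toy pool of 100 tweets for one user.
pool = [f"tweet{i} some words about topic number {i}" for i in range(100)]
user_text = build_user_input(pool, num_tweets=5, tokens_per_tweet=3)
```

With the default values, the budget is at most 10 × 20 = 200 whitespace tokens per user, which leaves room under the 512-position limit of BERT even after subword splitting inflates the count.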

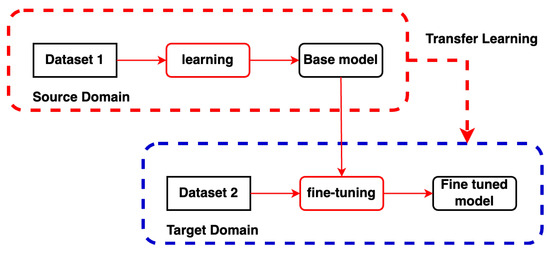

Stage 2. We use the concept of “cascade learning models” [90] (CLMs for short), which is based on the principle of sequential learning. A CLM is a sequence of models linked through pre-training and fine-tuning strategies. The first link in this chain is a pre-trained model tailored for a specific task in a source domain. If this pre-trained model is designed for text encoding, it can be adapted for a different task, or even the same task in other languages, through a fine-tuning process that uses data specific to the new task. This chaining mechanism outlines a transfer learning strategy from a source domain to a target domain, allowing the new model to benefit from the pre-trained model by adapting it to new data. Figure 3 illustrates this basic mechanism.

Figure 3. The chaining mechanism that defines cascade learning favors transfer learning across domains.

The depth of the chain, which is determined by the number of resources that define it, establishes the arity, i.e., the number of components that make up the cascade. Note that the arity of the chain is calculated as 1 (the base model) plus one for each fine-tuned model. Figure 3 illustrates a learning chain of arity 2 because it has the base model and the model obtained after fine-tuning on Dataset 2. We use this mechanism to build a collection of models from a limited set of resources. Our exploration strategy combines the available resources in every possible order to generate a cascading collection of models that will be useful for the primary task at hand. Without loss of generality, let us assume that we have three resources: a dataset for stance classification in English (Stance EN), another for bot detection in English (Bot EN), and a third for bot detection in Spanish (Bot ES). This transfer learning exploitation strategy produces a collection of models trained using a model chaining mechanism. With three resources, there are 3 ordered chains of one resource, 6 of two, and 6 of three; accordingly, Table 1 lists the fifteen base models produced by our transfer learning exploration strategy.
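The count of fifteen models follows from enumerating every ordered selection of the three resources. A minimal sketch of that enumeration is shown below; the function name is hypothetical, and the order in which chains are generated here is not claimed to match the CLM numbering of Table 1.

```python
from itertools import permutations

# The three resources named in the text.
RESOURCES = ("Stance EN", "Bot EN", "Bot ES")


def enumerate_chains(resources):
    """List every ordered chain of 1, 2, ..., len(resources) resources."""
    chains = []
    for size in range(1, len(resources) + 1):
        chains.extend(permutations(resources, size))
    return chains


chains = enumerate_chains(RESOURCES)
for chain in chains:
    print(" -> ".join(chain))
print(len(chains))  # 3 + 6 + 6 = 15
```

Because order matters in sequential fine-tuning, the chain that sees Bot EN before Stance EN is a different model from the one that sees them in the opposite order, which is why permutations rather than combinations are counted.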

Table 1. CLMs constructed by sequential fine-tuning of mBERT models, each with a specific task and dataset.

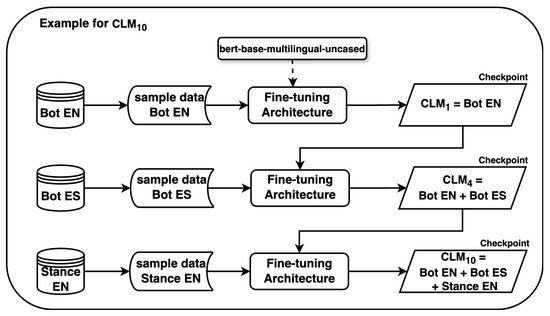

The strategy for exploiting transfers between domains generates a collection of base models. These models reuse previously trained models of lower arity. Figure 4 illustrates an example of model reuse within the chain, progressing from models with arity levels of 1 and 2 to ultimately obtain a model with an arity of 3. Because we construct all permutations of sizes 2 and 3, each chain can start from an already trained model of the previous level, which avoids retraining existing models. In this example, the text is encoded using mBERT (bert-base-multilingual-uncased, https://huggingface.co/bert-base-multilingual-uncased, accessed on 22 June 2025), which favors cross-lingual transfer. In addition, the last link of the cascade involves cross-domain transfer, from bot detection to stance classification. Transfer learning is feasible because both systems use the same text encoder, in this case, mBERT. This allows for seamless transfer across different languages and domains.

Figure 4. The chaining mechanism that defines cascade learning reuses models with arity levels of 1 and 2. In this example, CLM10 reuses CLM4, which is based on CLM1.
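The saving obtained from model reuse can be illustrated by caching each chain under its sequence of resources, so that a longer chain starts from the checkpoint of its prefix. This is a sketch under assumptions: the function name is hypothetical, and a string stands in for a fine-tuned checkpoint.

```python
from itertools import permutations

RESOURCES = ("Stance EN", "Bot EN", "Bot ES")


def build_chain(chain, cache, runs):
    """Return the model for a chain, fine-tuning only its last link.

    The model for chain[:-1] is looked up (or built once) and reused.
    Each entry appended to runs represents one fine-tuning run.
    """
    if chain in cache:
        return cache[chain]
    if len(chain) == 1:
        parent = "mBERT"  # every chain starts from the mBERT checkpoint
    else:
        parent = build_chain(chain[:-1], cache, runs)
    model = f"{parent} -> {chain[-1]}"
    runs.append(model)
    cache[chain] = model
    return model


chains = [c for size in (1, 2, 3) for c in permutations(RESOURCES, size)]
cache, runs = {}, []
for chain in chains:
    build_chain(chain, cache, runs)

# Without reuse, every chain would be fine-tuned from scratch, link by link.
runs_without_reuse = sum(len(chain) for chain in chains)
print(len(runs), runs_without_reuse)
```

With reuse, each of the fifteen models costs exactly one fine-tuning run, compared with 3 × 1 + 6 × 2 + 6 × 3 = 33 runs if every chain were rebuilt from the mBERT checkpoint.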

Stage 3. In the third stage, the focus is on the primary objective. This stage aims to transfer the knowledge gained from a pre-trained CLM to the main task. Given that these base models are built as learning chains that rely on cross-lingual and cross-domain transfer, the aim is to evaluate the impact of cross-lingual transfer learning across the scenarios represented by the different CLMs and to apply it to the main task.

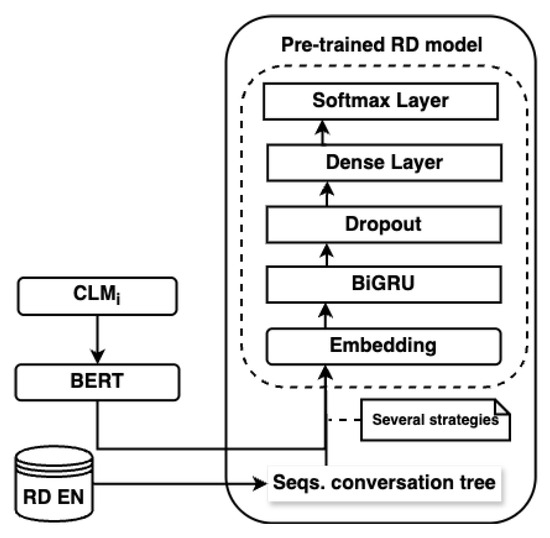

More specifically, to facilitate knowledge transfer from a base model to the rumor detection task, these base models are used to encode the text of each propagation tree. This methodology establishes an empirical framework that allows us to identify which base models create the most favorable conditions to tackle the main task. We employ each base model to encode the text fed into the primary task model. Given that each base model is a fine-tuned mBERT encoding model specialized for a particular sequence of auxiliary tasks, it aids in accurately encoding instances for rumor detection by transferring the knowledge acquired from auxiliary tasks through the textual representation. Figure 5 describes this approach.

Figure 5. To pre-train the main task model, we used the base models learned from the auxiliary tasks to encode the text. RD stands for rumor detection.

As illustrated in Figure 5, the primary task is addressed using a Bidirectional GRU model [91], i.e., a Gated Recurrent Unit (GRU) architecture designed to capture sequential dependencies in both directions (left to right and right to left) over the input sequence. The motivation for choosing this specific model is to provide contextual information for the classification. To feed the model, we input the sequence of tweets in chronological order, based on each tweet’s timestamp. To determine the encoding for each tweet, we tried the following strategies, each applied to a base model selected in Stage 2.

- mBERT-embedding: use the embedding layer of the base model.

- mBERT-pooler: use the pooler layer of the base model.

- mBERT-second-to-last: use the second-to-last layer of the base model.

- mBERT-sum-four-last: use the sum of the last four layers of the base model.

- sBERT-default: use the default constructor of embeddings defined for sentence BERT.
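Three of the layer-based strategies listed above can be sketched on a toy stack of hidden states. The sketch rests on assumptions that are not stated in the text: hidden states are given as a list of layers with the embedding layer first and the last encoder layer last, and token vectors are averaged to obtain a single tweet vector. The pooler and sentence BERT strategies are omitted because they depend on model-specific components.

```python
def mean_pool(token_vectors):
    """Average token vectors into one vector (an assumed pooling choice)."""
    n = len(token_vectors)
    return [sum(column) / n for column in zip(*token_vectors)]


def tweet_embedding(hidden_states, strategy):
    """Select layers from hidden_states[layer][token][dim] and pool them."""
    if strategy == "embedding":
        return mean_pool(hidden_states[0])
    if strategy == "second-to-last":
        return mean_pool(hidden_states[-2])
    if strategy == "sum-four-last":
        # For each token, add up its vectors from the last four layers.
        summed = [
            [sum(values) for values in zip(*per_layer_vectors)]
            for per_layer_vectors in zip(*hidden_states[-4:])
        ]
        return mean_pool(summed)
    raise ValueError(f"unknown strategy: {strategy}")


# Toy input: 5 layers (embedding + 4 encoder layers), 2 tokens, 2 dimensions.
hidden_states = [
    [[float(l), float(l) + 1.0], [float(l) + 2.0, float(l) + 3.0]]
    for l in range(5)
]

for name in ("embedding", "second-to-last", "sum-four-last"):
    print(name, tweet_embedding(hidden_states, name))
```

With a real encoder, the list of hidden states would typically hold thirteen entries for a 12-layer BERT model, and each tweet vector produced this way would become one step of the sequence fed to the Bidirectional GRU.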

In addition to our base CLMs, we incorporated a standard mBERT checkpoint for comparison under the name Base0. With 16 primary text encoding models (15 CLMs and Base0) and 5 different strategies to generate embeddings in the main task, we have 16 × 5 = 80 unique settings. This extensive experimental setup allows us to explore various settings for cross-domain and cross-lingual transfer learning in the main task. The primary task we focus on at this stage is the detection of rumors in English, as described in RD EN (Figure 5), which refers to the rumor dataset in English.
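The experimental grid is the Cartesian product of encoders and embedding strategies. A minimal sketch of the enumeration follows; the pairing of each setting with an index is an assumption made for illustration.

```python
from itertools import product

# 16 text encoders: the standard mBERT checkpoint plus the 15 CLMs.
ENCODERS = ["Base0"] + [f"CLM{i}" for i in range(1, 16)]

# The 5 embedding strategies listed in the text.
STRATEGIES = [
    "mBERT-embedding",
    "mBERT-pooler",
    "mBERT-second-to-last",
    "mBERT-sum-four-last",
    "sBERT-default",
]

# Every (encoder, strategy) pair is one experimental setting.
settings = list(product(ENCODERS, STRATEGIES))
for index, (encoder, strategy) in enumerate(settings[:3], start=1):
    print(index, encoder, strategy)
print(len(settings))
```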

Stage 4. The final stage of the methodology involves applying transfer learning from an English rumor detection model to a Spanish rumor detection model. We employ two strategies to assess the effectiveness of the proposed transfer learning approach. The first strategy involves zero-shot learning and the second utilizes a recently developed dataset for rumor detection in Spanish.

- Zero-shot evaluation. Up to this point, the pre-trained models have not been trained on any Spanish rumor detection data. This is why we first assess the zero-shot capabilities of the pre-trained models from Stage 3. We also compare their performance with a baseline mBERT model for reference.

- Fine-tuning for Rumor Detection in Spanish. Lastly, we evaluate the potential of the pre-trained models from Stage 3 for fine-tuning to improve their performance in detecting rumors in Spanish.
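Both evaluation strategies are compared through Macro-F1, the metric reported for this study. As a reminder of how that metric weighs the classes, the sketch below computes it from scratch; the function name and the toy labels are illustrative and do not correspond to any result of the paper.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        denominator = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never occurs.
        scores.append(2 * tp / denominator if denominator else 0.0)
    return sum(scores) / len(scores)


# Toy example with two classes.
gold = ["true", "true", "false", "false"]
pred = ["true", "false", "false", "false"]
print(round(macro_f1(gold, pred), 4))  # (2/3 + 4/5) / 2 = 0.7333
```

Because every class contributes equally to the average, Macro-F1 penalizes a model that performs well only on the majority class.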

4. Experiments

4.1. Computational Resources

All model training and tests were performed at different times, mostly using https://vast.ai (accessed on 22 June 2025) instances with NVIDIA GPUs that have at least 12 GB of VRAM. We used a variety of x64 processors and different GPU models. In general, model training and validation took between 6 and 20 h, depending on the task being performed. The most expensive task was the construction of embeddings from the datasets, followed by the construction of the cascade learning models (described in the following). In summary, we estimate that the total computation used in the study, including the trial and error phases, amounts to approximately 400 GPU hours.

4.2. Datasets

In this section, we outline the datasets used for the experimental assessment of our study. The focus is on analyzing conversations on microblogging platforms, particularly Twitter. Details of the datasets used for our study can be found in Table 2.

Table 2.

Basic statistics of the different datasets used in this work.

- Twitter16 [50] (https://www.dropbox.com/s/7ewzdrbelpmrnxu/rumdetect2017.zip?dl=0, accessed on 21 March 2021). This dataset is utilized for the detection of rumors in English. It contains 753 root messages with veracity annotations. Each of these messages comes with propagation trees that detail the replies for the primary message. The veracity of each message was determined by cross-referencing fact-checking websites such as Snopes (http://snopes.com, accessed on 22 June 2025). The messages have been categorized into four veracity labels: true, false, unverified, and non-rumor (abbreviated as TFUN) [50]. To align this dataset with the detection of rumors in Spanish, we exclusively used the categories of true and false rumors, which were also used as an annotation scheme in Spanish. Accordingly, veracity annotations correspond to the following:

- True rumor (true): It has been verified that the message corresponds to a rumor. There are 188 messages with this label.

- False rumor (false): The message indeed does not correspond to a rumor. There are 180 messages with this label.
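The label alignment described above amounts to a simple filter over the root-message annotations. The sketch below illustrates it on toy data; the label strings, the message identifiers, and the helper name `keep_binary` are assumptions made for the example, not part of the dataset's documented format.

```python
from collections import Counter

# Only these two veracity labels are kept for the binary task.
KEPT_LABELS = {"true", "false"}

def keep_binary(examples):
    """Keep only root messages annotated as true or false rumors."""
    return [(msg_id, label) for msg_id, label in examples if label in KEPT_LABELS]

# Toy annotations using the four TFUN labels (identifiers are made up).
toy = [
    ("t1", "true"),
    ("t2", "false"),
    ("t3", "unverified"),
    ("t4", "non-rumor"),
    ("t5", "true"),
]

binary = keep_binary(toy)
counts = Counter(label for _, label in binary)
print(counts)
```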

- RumourEval2019 [92] (https://figshare.com/articles/dataset/RumourEval_2019_data/8845580, accessed on 21 March 2021). This dataset was used in Task 7 of the RumourEval 2019 competition, which consisted of two subtasks. The first subtask involved determining a user’s stance regarding a specific message, while the second aimed to classify the truthfulness of that message. This dataset is exclusively in English. Although the competition provided data from both Twitter and Reddit, our study only incorporates Twitter data, which is detailed in Table 2. The dataset is organized around various significant events, including the Charlie Hebdo shooting in Paris, the Ferguson riots in the U.S., and the plane crash in the French Alps, to name a few. The original dataset is separated into training and testing sets. It comprises 325 propagation trees, totaling 5568 tweets. For rumor detection, this dataset employs three distinct labels: true, false, and unverified (TFU). We did not use the rumor detection partition of this dataset in our study, since our objective is to assess cross-domain transfer rather than transfer within the same domain, which in this case would be from one rumor detection task to another. The data for stance classification was labeled by crowdsourcing, adhering to the standard SDQC labels, which stand for support, deny, query, and comment. The distribution of messages per label is as follows: 1145 labeled as support, 507 as deny, 526 as query, and 4456 as comment.

- PAN2019 [27] (https://zenodo.org/record/3692340#.YzxAHexBz0p, accessed on 20 October 2022). This dataset was used for the “Author Profiling PAN (Plagiarism Analysis, Authorship Identification, and Near-Duplicate Detection)” competition at CLEF 2019. The dataset includes Spanish and English data, amalgamating pre-existing sources that had already classified accounts as ‘bot’ or ‘human’. The original dataset is separated into training and testing sets. In addition, the dataset contains gender labels for human accounts. However, this study focuses solely on distinguishing between bot and human accounts. The labeling process was manual, with each author contributing 100 tweets, which were also included in the dataset.

- Disinformation data [14] (https://github.com/marcelomendoza/disinformation-data, accessed on 12 January 2023). This dataset features Chilean news labeled by prominent fact-checking organizations, including fastCheck.cl (accessed on 22 June 2025), factChecking.cl (accessed on 22 June 2025), and decodificador (https://www.instagram.com/decodificadorcl/?hl=es, accessed on 22 June 2025). Covering the period from October 2019 to October 2021, it encompasses significant events such as the Chilean Social Outbreak, the COVID-19 pandemic, and the Chilean presidential elections of 2021. In line with the other datasets, it presents multiple propagation trees, each stemming from a primary message labeled for its truthfulness. The veracity labels are ‘true’, ‘false’, and ‘imprecise’ (TFI). The distribution of messages per label is 129 tweets labeled ‘true’, 113 ‘false’, and 65 ‘imprecise’. To align this dataset with the task of detecting rumors in English, we only used the true and false categories, which also form the annotation scheme used for English.

4.3. Performance on Auxiliary Tasks—Stage 1

This section describes the overall performance of the models on the auxiliary tasks, which serve as the basis for the CLMs used throughout this work. This initial validation was performed only in English, using bert-base-uncased as a baseline to evaluate performance. Recall that this validation aims to demonstrate competitive performance on the auxiliary tasks. The construction of CLMs uses bert-base-multilingual-uncased (https://huggingface.co/bert-base-multilingual-uncased, accessed on 22 June 2025) and the hyperparameters described below.

Stance classification. As in BUT-FIT’s implementation [87], a weighted loss function is used to address dataset imbalance. In practice, we experimented with variations such as gradient clipping, bootstrap resampling, and different learning rates. The weighted loss provided the best performance. The best results were obtained using dropout at , three epochs, a learning rate of 1 × 10⁻⁵, and a training and validation batch size of 2. Table 3 summarizes the results obtained, by seed, during training. The seeds were obtained by using a random number generator. The best model achieved 0.605 Macro-F1. This result is competitive with those reported by Fajcik et al. [87], although they report around 0.531 Macro-F1 when using both Reddit and Twitter data, whereas we achieve a score of around 0.6 using Twitter data only.
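To illustrate how a weighted loss counteracts the SDQC imbalance, the sketch below derives class weights from the label counts reported in Section 4.2. The inverse-frequency weighting scheme and the function names are assumptions for illustration; the text does not state which weights were actually used.

```python
import math

# Stance label counts reported for the Twitter portion of RumourEval2019.
counts = {"support": 1145, "deny": 507, "query": 526, "comment": 4456}

def inverse_frequency_weights(label_counts):
    """Weight each class by total / (num_classes * count) (assumed scheme)."""
    total = sum(label_counts.values())
    num_classes = len(label_counts)
    return {label: total / (num_classes * n) for label, n in label_counts.items()}

def weighted_cross_entropy(probs, gold, weights):
    """Weighted negative log-likelihood of the gold label for one example."""
    return -weights[gold] * math.log(probs[gold])

weights = inverse_frequency_weights(counts)

# The same predicted probability costs more on a rare class than on a frequent one.
probs = {"support": 0.25, "deny": 0.25, "query": 0.25, "comment": 0.25}
loss_deny = weighted_cross_entropy(probs, "deny", weights)
loss_comment = weighted_cross_entropy(probs, "comment", weights)
```

Under this scheme, an error on a deny message contributes several times more to the loss than an error on a comment message, which pushes the classifier away from always predicting the majority class.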

Table 3.

Stance classification performance, for English language.

Bot detection. After an initial exploration of the parameter space, the best results were obtained using dropout at , 5 epochs, a learning rate at 1 × , and training and validation batch size at 4. We explored the impact of the sampling strategies as well as the maximum number of tweets to be sampled. Hence, we considered the following options:

- ALEN, number of tweets per author: 5, 15, 25, 50, 75, and 100.

- Sampling strategies: tail, head, head+tail.

For the English language, we set the variable to 512 because it is the limit of tokens processable by mBERT. By combining these options, we obtained 18 settings, each evaluated with 3 random seeds after training for 5 epochs. In each setting, ALEN tweets were sampled without replacement from the available messages of an author, and then, for each tweet sampled in this way, characters were extracted. Table 4 (third column) shows the results of these experiments.
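The search space and sampling step described above can be sketched as follows. The text does not specify how the head, tail, and head+tail strategies are applied, so the `truncate` helper below assumes they select which end of an over-long sequence is kept; the function names and the even split used for head+tail are likewise assumptions.

```python
import random
from itertools import product

ALEN_VALUES = [5, 15, 25, 50, 75, 100]
STRATEGIES = ["tail", "head", "head+tail"]

# 6 values of ALEN times 3 strategies gives the 18 settings.
GRID = list(product(ALEN_VALUES, STRATEGIES))

def sample_tweets(tweets, alen, seed):
    """Draw up to `alen` tweets from one author without replacement."""
    rng = random.Random(seed)
    return rng.sample(tweets, min(alen, len(tweets)))

def truncate(units, limit, strategy):
    """Keep at most `limit` units from the head, the tail, or both ends."""
    if limit <= 0:
        return []
    if len(units) <= limit:
        return list(units)
    if strategy == "head":
        return list(units[:limit])
    if strategy == "tail":
        return list(units[-limit:])
    if strategy == "head+tail":
        head = limit // 2          # assumed even split between both ends
        tail = limit - head
        return list(units[:head]) + list(units[-tail:])
    raise ValueError(f"unknown strategy: {strategy}")
```

Using a seeded `random.Random` instance per run keeps the sampling reproducible across the three seeds evaluated for each setting.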

Table 4.

Bot detection performance for English and Spanish languages.

Taking into account the results presented in Table 4, we chose the configuration with the best result for the construction of the CLMs. This configuration reaches a Macro-F1 of 0.969 with ALEN = 25 and the tail sampling strategy.

In the context of bot detection in Spanish (see Table 4, fourth column), the same experimental setting was used for model selection. In general, the results are slightly lower than those achieved when detecting bots in English. The best-performing model, which was selected for transfer learning, achieved a Macro-F1 score of 0.933 with ALEN = 50 and the head sampling strategy. However, for simplicity, the same value of ALEN and the same sampling strategy were chosen for constructing the CLMs. Table 4 reports both Macro-F1 and accuracy; the two metrics take almost the same value because the human and bot classes are balanced.
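The remark that accuracy and Macro-F1 nearly coincide on balanced classes can be checked on a toy example. The sketch below implements both metrics from their standard definitions; the toy predictions are invented for illustration and are not taken from Table 4.

```python
def macro_f1(gold, pred):
    """Unweighted mean of the per-class F1 scores."""
    labels = sorted(set(gold) | set(pred))
    scores = []
    for label in labels:
        tp = sum(g == label and p == label for g, p in zip(gold, pred))
        fp = sum(g != label and p == label for g, p in zip(gold, pred))
        fn = sum(g == label and p != label for g, p in zip(gold, pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)

def accuracy(gold, pred):
    """Fraction of examples whose prediction matches the gold label."""
    return sum(g == p for g, p in zip(gold, pred)) / len(gold)

# Balanced toy data: 50 bots and 50 humans, 3 errors in each class.
balanced_gold = ["bot"] * 50 + ["human"] * 50
balanced_pred = ["bot"] * 47 + ["human"] * 3 + ["human"] * 47 + ["bot"] * 3

# Imbalanced toy data: a majority-class predictor looks good on accuracy only.
skewed_gold = ["human"] * 90 + ["bot"] * 10
skewed_pred = ["human"] * 100
```

On the balanced data both metrics agree, whereas on the skewed data accuracy stays high while Macro-F1 drops sharply, which is why Macro-F1 is the more informative metric for the imbalanced stance task.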

4.4. Results on Rumor Detection in English—Stage 2 and 3

Building on the models of the three auxiliary tasks presented earlier, we evaluated the 80 experimental settings outlined in Section 3 on the task of rumor detection in English. A standard training/evaluation loop was set up, with testing after each epoch. All models ran this loop for 1000 epochs. This number of epochs was chosen to be large enough that training has run its course and no further improvements are expected, so that the performance of each model can be adequately evaluated. In practical terms, this makes development simpler than implementing techniques such as early stopping. After training all CLMs, we studied their behavior and performance.

Regarding their behavior, we record whether a setting shows evidence of learning, which is assessed graphically from the score/loss curve of each setting. In addition, we record the epoch at which we consider the model to have stopped learning, alongside its Macro-F1 score at that point; this is not necessarily the highest score achieved during training, which we also report. All Macro-F1 scores refer to the test set, evaluated after every training epoch.
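The distinction between the best score and the score at the point where learning stops can be made concrete with a small sketch. The stopping point in the study was judged from the curves; the patience-based rule below is only a stand-in assumption, and the parameter names `patience` and `min_delta` are hypothetical.

```python
def best_epoch(scores):
    """Return (epoch, score) of the highest test Macro-F1; epochs start at 1."""
    index = max(range(len(scores)), key=lambda i: scores[i])
    return index + 1, scores[index]

def plateau_epoch(scores, patience=50, min_delta=1e-3):
    """Return (epoch, score) of the last gain above `min_delta` that is
    followed by `patience` epochs without further improvement (assumed rule)."""
    last = 0
    for i in range(1, len(scores)):
        if scores[i] > scores[last] + min_delta:
            last = i
        elif i - last >= patience:
            break
    return last + 1, scores[last]

# Toy per-epoch Macro-F1 curve: it flattens at epoch 3, then has a late bump.
curve = [0.5, 0.6, 0.7, 0.7, 0.7, 0.7, 0.72]
stopped = plateau_epoch(curve, patience=3)
best = best_epoch(curve)
```

In this toy curve the plateau rule reports epoch 3, while the best score is reached at epoch 7, mirroring the situation described above in which the two values differ.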

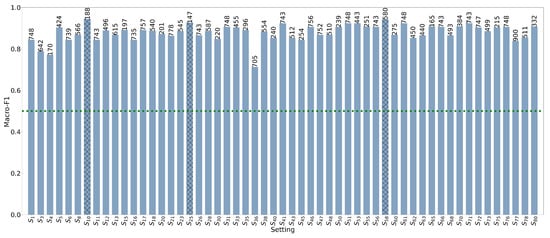

After evaluating all CLMs, the following conclusions were drawn: of 80 total settings, 55 showed evidence of learning, while 25 settings were excluded due to a lack of any evidence of learning. This initial assessment was based on the information loss curves described above. Figure 6 presents a general overview of all the models that learn (that is, the 55 settings, % of the 80 settings) and their performance. In the figure, the green line indicates the score to use as a reference value for the score of each setting. In addition, for the 55 settings that learn, all their results have a Macro-F1 score greater than 0.7. More specifically,

Figure 6.

Best Macro-F1 results on the test set for CLM models fine-tuned on the Twitter16 dataset, over a fixed amount of 1000 epochs. The x axis presents the number of settings , and the y axis shows the Macro-F1 scores. The horizontal bar delimits the barrier of the score. The number over each bar indicates in which epoch during training the best score is obtained for each setting. The hatched bars correspond to the three best results.

- A proportion of % (3 settings) obtain a score Macro-F1 in the interval .

- A proportion of % (30 settings) obtain a score Macro-F1 in the interval .

- A proportion of % (22 settings) obtain a score Macro-F1 over .
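The shares associated with the counts above can be recomputed directly. The sketch below does so under two possible readings, since the text does not state whether the proportions are relative to the 55 settings that learn or to all 80 settings; the band names simply follow the order of the list and are placeholders.

```python
learning, total = 55, 80

# Number of settings in each score band, in the order listed above.
bands = {"first": 3, "second": 30, "third": 22}

# Share of settings that show evidence of learning.
share_learning = 100 * learning / total

# Reading 1 (assumed): proportions relative to the 55 settings that learn.
shares_of_learning = {name: round(100 * n / learning, 2) for name, n in bands.items()}

# Reading 2 (assumed): proportions relative to all 80 settings.
shares_of_total = {name: round(100 * n / total, 2) for name, n in bands.items()}

print(share_learning, shares_of_learning, shares_of_total)
```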

The best-performing settings are detailed in Table 5.

Table 5.

CLMs that obtain the best results for the English language, sorted by setting number.

As Table 5 shows, the three best results have Macro-F1 scores greater than 0.9. We highlight the setting that uses all three auxiliary tasks. This suggests that the number of tasks used to train a CLM has a noticeable impact on its performance.

When considering the models in the best-fit CLM training schemes, with three models, we see that

- Bot detection appears in all the best results, as either the first or the second model. This suggests that this task is more valuable as a starting point for CLM training.

- More specifically, bot detection in English is the most commonly used first model or task.

- Regarding the third model/task, from the above analysis, it is clear that stance classification appears less frequently (only in one setting in the top three results).

4.5. Results on Rumor Detection in Spanish—Stage 4

Taking into account the 55 settings selected from the previous stage, we now evaluate them using the Disinformation dataset (described in Table 2). First, we assess the zero-shot performance as a general indicator of cross-lingual transfer learning from all the auxiliary tasks and rumor detection in English. Then, we evaluate the fine-tuning process for rumor detection in Spanish. This approach allows us to examine the multi-tasking cross-lingual transfer learning from English to Spanish.

4.5.1. Zero-Shot Evaluation

Since zero-shot evaluation does not allocate any data for training, the results were tested in a zero-shot setting across different dataset partitions. The dataset is chronologically ordered according to the publication date of the news articles, and on the basis of this sequence, test partitions of varying lengths were constructed. We experimented with different partitions of the dataset: 15%, 30%, 45%, 50%, and 100% of the data. It should be noted that at this stage, we use only a percentage of the data for the tests, and the remaining data are not used. In total, 4 out of the 55 settings achieved a score greater than , as shown in Table 6. The best performance is achieved with (45% data partitioning), obtaining a Macro-F1 score of 0.667. This corresponds to CLM12, a configuration that leverages all three tasks, reinforcing the idea that auxiliary tasks can effectively support the main task of rumor detection.
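The construction of chronologically ordered test partitions can be sketched as below. Whether each partition is taken from the start or the end of the timeline is not stated, so the sketch assumes the earliest items are kept; the helper name and the toy records are also illustrative.

```python
from datetime import date

# Test partition sizes used for the zero-shot evaluation.
FRACTIONS = [0.15, 0.30, 0.45, 0.50, 1.00]

def chronological_prefix(items, fraction, key):
    """Return the earliest `fraction` of the items after sorting by `key`."""
    ordered = sorted(items, key=key)
    size = round(len(ordered) * fraction)
    return ordered[:size]

# Toy propagation trees as (publication date, identifier), in reverse order.
toy = [(date(2020, 1, 20 - i), f"tree-{i}") for i in range(20)]

sizes = [
    len(chronological_prefix(toy, fraction, key=lambda item: item[0]))
    for fraction in FRACTIONS
]
smallest = chronological_prefix(toy, 0.15, key=lambda item: item[0])
```

Because no training takes place in the zero-shot setting, the items outside each partition are simply left unused rather than assigned to a training set.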

Table 6.

Best configurations for each data partition, using zero-shot strategy, for Spanish language.

As can be observed in Table 6, bot detection appears mainly as the first task, which is consistent with the results of the previous section.

4.5.2. Fine-Tuning for Rumor Detection in Spanish

We now evaluate the 55 settings selected in the previous stage as initial checkpoints for fine-tuning rumor detection in Spanish using the Disinformation dataset. We used a 70/30 training–test split for these experiments, preserving the temporal order for the partitions. As in the fine-tuning on Twitter16, we run each setting while measuring the accuracy and Macro-F1 score on the test set after each epoch.
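A temporal 70/30 split of this kind can be sketched as follows, so that every training item precedes every test item. The function name, the use of an integer percentage, and the toy records are assumptions made for the example.

```python
def temporal_split(items, key, train_percent=70):
    """Sort by `key` and put the earliest `train_percent`% in the training set."""
    ordered = sorted(items, key=key)
    cut = len(ordered) * train_percent // 100
    return ordered[:cut], ordered[cut:]

# Toy propagation trees as (day index, identifier), deliberately unordered.
toy = [(day, f"tree-{day}") for day in (5, 1, 9, 3, 7, 2, 10, 4, 8, 6)]

train, test = temporal_split(toy, key=lambda item: item[0])
```

Splitting by time rather than at random avoids training on conversations that were published after the ones used for testing.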

When fine-tuning is applied, it is observed that the results for rumor detection in Spanish significantly improve compared to those obtained through zero-shot learning. We present the best models identified by fine-tuning rumor detection in Spanish. These results are detailed in Table 7.

Table 7.

CLMs that obtained the best results in rumor detection in Spanish using fine-tuning.

Table 7 reveals that the best-performing model is based on the CLM14 scheme, a CLM with arity = 3 that uses the three models, starting with Stance EN, followed by Bot EN and, finally, Bot ES. Although this sequence differs from the one that gave the best results in English (Table 5), all three models again contribute to the best result. The results demonstrate the success of the transfer learning strategy in this scenario.

5. Discussion of Results

5.1. Main Findings of the Study

This study thoroughly analyzes various transfer learning strategies from English to Spanish for rumor detection. Specifically, we have shown that the use of multilingual embeddings, such as mBERT, enables the transfer of learning from English to Spanish. The results obtained from a baseline without auxiliary tasks are competitive, indicating that rumor detection, both in English and Spanish, based on the datasets used in this study, has high predictive capacity. In addition to this finding, we have shown that the use of transfer learning, specifically through CLMs, is beneficial for improving the results of a strong baseline. In the most complex case analyzed in this study, rumor detection in Spanish, improvements are achieved through a CLM of arity 3.

In general, using bot detection as an auxiliary task is relevant for rumor detection in English and for the zero-shot evaluation in Spanish, serving in both cases as the initial model. In the case of models fine-tuned for rumor detection in Spanish, stance classification is prioritized as the first task. For all three cases, the results for rumor detection are promising, supported by auxiliary tasks such as bot detection and stance classification. Finally, we highlight that most of the models, that is, the 55 fine-tuned for English and 54 of those fine-tuned for Spanish, reach metric values higher than 0.6, which can be attributed to the transfer learning applied from the tasks Bot EN, Bot ES, and Stance EN.

5.2. Limitations of the Study

Although our research demonstrates improvements in performance for the target task when using CLMs, the study has certain limitations. First, due to restricted access to private data from other studies, we were limited to a single dataset to classify stances in English. This hinders our ability to address the transfer of the stance classification task from Spanish to the main tasks. Another limitation lies in our focus on datasets based on microblogging content, specifically from Twitter. This focus is primarily because the vast majority of resources in this area are sourced from Twitter. Given the recent changes in the API terms of use of Twitter (now X), it is anticipated that resources from other social media platforms will become available, potentially enabling the training and evaluation of these methodologies on different platforms. Finally, we opted to standardize our approach using mBERT models for all tasks, simplifying the implementation of cascade-based transfer learning. Although this design decision improves performance on the main task, the results on auxiliary tasks do not compete well with state-of-the-art methods specifically designed for those tasks.

5.3. Comparison of Results

5.3.1. Comparison with Base Models

To the best of our knowledge, no previous research addresses the rumor detection task for the Spanish language using the two auxiliary tasks (bot detection and stance classification). Therefore, the best results obtained for the Spanish language are compared with those of models that do not take advantage of transfer learning from auxiliary tasks. For this, mBERT is used as the base model, with variations in the embedding strategy. A comparison is also provided with the BiGRU neural network, the same model used in Stage 3, which does not incorporate any auxiliary tasks. As can be seen in Table 8, the current proposal outperforms all other results, achieving an improvement of 2% over the result that immediately precedes it in the table and 15% over the first result in the table.

Table 8.

Comparison of the results of the best-performing model for Spanish data with baseline models, sorted by Macro-F1.

The embedding strategy does not appear to follow a consistent pattern. However, when the results are analyzed from lowest to highest, it can be observed that the embedding mBERT-sum-four-last appears twice among the lowest-performing entries, while mBERT-second-to-last is consistently present among the top-performing ones; this embedding also corresponds to the best result obtained using the Spanish Disinformation dataset.

5.3.2. Stylistic Metric Comparison

In order to present a descriptive analysis of linguistic metrics applied to true and false rumors in Spanish, using both fine-tuning and zero-shot approaches, four specific metrics are considered. Each metric is computed as follows: for every propagation tree, the metric is applied to the root message as well as to all replies within the conversation tree. Subsequently, the average value for each tree is calculated, resulting in a single representative metric per conversation or propagation tree. The metrics used are number of words, number of sentences, average token length, and perplexity. The label TP denotes a false rumor that has been correctly classified, while TN indicates a true rumor that has been correctly classified. The results for the fine-tuning setting are presented first, followed by those obtained using the zero-shot approach.
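The per-tree averaging described above can be sketched for the three surface metrics. Whitespace tokenization and punctuation-based sentence splitting are simplifying assumptions, the function names are illustrative, and perplexity is omitted because it requires a language model.

```python
import re
from statistics import mean

def message_metrics(text):
    """Word count, sentence count, and average token length of one message."""
    words = text.split()
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return {
        "words": len(words),
        "sentences": len(sentences),
        "token_length": mean(len(w) for w in words) if words else 0.0,
    }

def tree_metrics(messages):
    """Average each metric over the root message and all replies of one tree."""
    if not messages:
        return {}
    per_message = [message_metrics(m) for m in messages]
    return {name: mean(m[name] for m in per_message) for name in per_message[0]}

# Toy propagation tree: a root message followed by one reply.
toy_tree = ["Hola mundo. Es falso!", "No lo creo"]
result = tree_metrics(toy_tree)
```

Each propagation tree is thus reduced to one value per metric, which is the unit compared across the TP, TN, FP, and FN groups.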

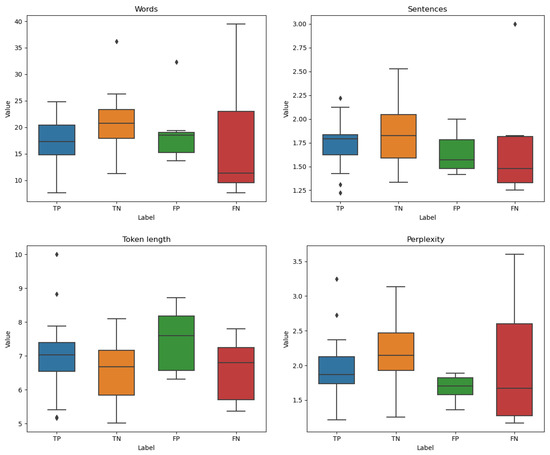

For the results obtained through fine-tuning, Figure 7 presents the different metrics across all trees, grouped by classification. From the figure, the following observations can be made:

Figure 7.

Stylistic metrics for Spanish using fine-tuning. In the case of words and perplexity, the differences are statistically significant (according to the Kruskal–Wallis test, with p-value < 0.05). However, this is not the case for sentences and token length, where the differences are not statistically significant.

- In terms of word count, the FN class shows substantial variability: while some texts are extremely short, others are significantly longer. This inconsistency may introduce confusion for the classifier. Additionally, the lower median word count observed in this class indicates that many instances consist of only a few words, which could further impair the accuracy of the classification. In contrast, the FP boxplot reveals that texts in this category tend to be more uniform in length. This regularity might serve as a cue that the classifier relies upon, potentially resulting in systematic misclassification.

- In the case of the number of sentences per message, misclassified instances (FP and FN) tend to include fewer sentences than correctly classified ones (TP and TN). This pattern suggests that shorter texts can hinder the performance of the classifier. Similar to the previous metric, the FN group shows a more noticeable reduction in the median number of sentences—remaining generally low—with the presence of an outlier that could contribute to the classifier’s confusion.

- On average, false positives (FPs) exhibit a greater token length, which may adversely affect the classifier’s performance. In contrast, true negatives (TNs) and false negatives (FNs) typically consist of shorter tokens, potentially reflecting simpler texts. Interestingly, true positives (TPs) include several outliers. Overall, the average token length seems to play a role in the misclassification of FPs compared to the other categories.

- According to the perplexity metric, false positives (FPs) tend to have low values, indicating that the model considered these instances predictable, even though it misclassified them. In contrast, false negatives (FNs) exhibit a wider distribution of perplexity scores, ranging from low to high, which suggests that the model fails on both straightforward and more complex texts. For true negatives (TNs) and true positives (TPs), correct classifications are generally associated with texts of moderate perplexity, reflecting a balanced level of complexity.
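The Kruskal–Wallis test used to compare the groups ranks all observations jointly and checks whether the rank sums differ across groups. The sketch below computes the H statistic from its textbook formula; it omits the tie correction and, instead of a p-value, compares H with standard chi-square critical values at the 0.05 level, which is only an approximation for small samples. The function names and the toy data are illustrative.

```python
def kruskal_wallis_h(*groups):
    """H statistic using average ranks for ties (no tie correction)."""
    pooled = sorted(value for group in groups for value in group)
    # Map each distinct value to the average of the ranks it occupies.
    ranks = {}
    i = 0
    while i < len(pooled):
        j = i
        while j < len(pooled) and pooled[j] == pooled[i]:
            j += 1
        ranks[pooled[i]] = (i + 1 + j) / 2
        i = j
    n = len(pooled)
    total = sum(sum(ranks[v] for v in g) ** 2 / len(g) for g in groups)
    return 12 / (n * (n + 1)) * total - 3 * (n + 1)

# Chi-square critical values at the 0.05 level, indexed by degrees of freedom.
CRITICAL_05 = {1: 3.841, 2: 5.991, 3: 7.815}

def is_significant(h, num_groups):
    """Compare H with the chi-square critical value for num_groups - 1 df."""
    return h > CRITICAL_05[num_groups - 1]

# Toy example with three clearly separated groups.
h = kruskal_wallis_h([1, 2, 3], [4, 5, 6], [7, 8, 9])
```

With the four groups TP, TN, FP, and FN, the statistic has three degrees of freedom. In practice, a library routine such as `scipy.stats.kruskal`, which applies the tie correction and returns a p-value, would be the usual choice.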

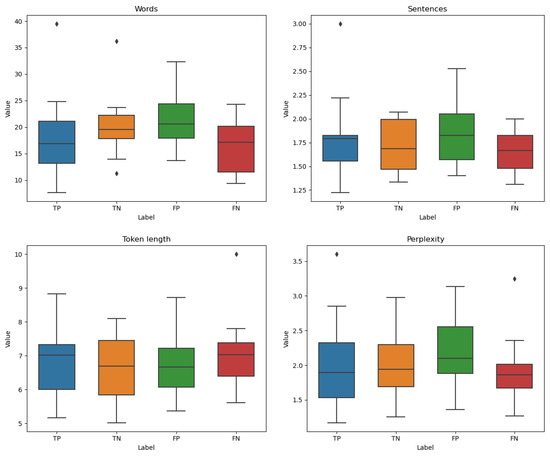

For the results obtained using the zero-shot approach, as shown in Figure 8, the following observations can be made:

Figure 8.

Stylistic metrics for Spanish using zero-shot methods. For all four metrics, there is insufficient evidence to support statistical significance, as determined by the Kruskal–Wallis test (p-value < 0.05).

- In the case of words, FP contains a larger number of words. When compared to the results of TP, this difference may contribute to classification errors.

- Regarding the sentence metrics, all groups exhibit similar distributions. In the case of FP, there is a higher number of sentences, indicating greater structural complexity, which could be a potential source of error. Nevertheless, the average number of sentences per tree does not appear to be a strong discriminative feature for classification.

- For the token length metric, as with the previous one, all groups exhibit similar distributions. In the case of the FN group, however, there is an outlier that corresponds to a particularly complex text. This increased complexity may explain the classification error, especially considering that such outliers are absent in the other groups.

- In the case of FP, the texts exhibit higher perplexity, suggesting they are more challenging to classify. This is further evidenced by some out-of-range failures, likely due to the presence of outliers. In contrast, TP and TN texts show lower perplexity, indicating that they are easier to classify.

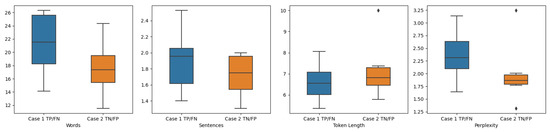

As previously noted, there are instances in which the model produces misclassifications. The following analysis provides a descriptive overview of such cases, specifically those where the zero-shot model yields an incorrect prediction, but the fine-tuned model successfully corrects it. In particular, Case 1—TP/FN—refers to situations where the zero-shot model produces a false negative (FN), while the fine-tuned model correctly identifies it as a true positive (TP). Conversely, Case 2—TN/FP—corresponds to cases where the zero-shot model yields a false positive (FP), and the fine-tuned model accurately classifies them as true negatives (TNs).

In Figure 9, the following observations can be made:

Figure 9.

Comparison between fine-tuning and zero-shot. The differences observed for words and perplexity are statistically significant (according to the Kruskal–Wallis test, with a p-value < 0.05). However, this is not the case for sentences and token length, where no significant differences were found.

- In terms of word count, messages that were misclassified as false negatives (FNs) are significantly longer than those misclassified as false positives (FPs). This indicates that texts correctly classified as true positives (TPs) tend to be longer. These findings suggest that the zero-shot classifier struggles to handle longer texts effectively.

- Regarding sentences, the situation is comparable: texts containing a greater number of sentences tended to be classified as false negatives (FNs) by the zero-shot model, which, in this context, correspond to true positives (TPs) under the fine-tuned model. This suggests that false rumors, in general, exhibit a higher structural complexity than true ones. Once again, the zero-shot classifier failed to account for this aspect.

- In the case of token length, the misclassified texts tended to contain, on average, longer tokens. This suggests that the zero-shot classifier may have mistaken lexical complexity for factual accuracy.

- On the perplexity metric, misclassified false negatives (FNs) exhibit significantly higher values than misclassified false positives (FPs). The zero-shot model appears overly confident in labeling simple texts as true rumors, while it struggles with more complex texts, often misclassifying them as false rumors.

In summary, while the zero-shot model may rely primarily on surface-level features—such as text length or linguistic complexity—for classification, the fine-tuned model has learned to identify deeper semantic and structural patterns within the texts, allowing it to effectively distinguish between true and false rumors.
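The two correction cases compared in Figure 9 can be stated precisely in code. The sketch below treats the false rumor as the positive class, following the TP/TN definitions given earlier; the label strings and function names are assumptions for illustration.

```python
def confusion_cell(gold, pred, positive="false"):
    """Map a (gold, predicted) pair to TP, FN, FP, or TN.
    The positive class is the false rumor, as in the text."""
    if gold == positive:
        return "TP" if pred == positive else "FN"
    return "FP" if pred == positive else "TN"

def correction_case(gold, zero_shot_pred, fine_tuned_pred):
    """Name the case when fine-tuning corrects a zero-shot error, else None."""
    zero_shot = confusion_cell(gold, zero_shot_pred)
    fine_tuned = confusion_cell(gold, fine_tuned_pred)
    if (zero_shot, fine_tuned) == ("FN", "TP"):
        return "Case 1 (TP/FN)"
    if (zero_shot, fine_tuned) == ("FP", "TN"):
        return "Case 2 (TN/FP)"
    return None

# A false rumor missed by the zero-shot model but caught after fine-tuning.
example = correction_case("false", "true", "false")
```

Trees for which both models agree, or for which fine-tuning introduces an error, return `None` and fall outside the comparison.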

5.3.3. Ablation Study

Given that a CLM consists of a sequence of models, where each model receives knowledge from its predecessor, and considering that the best performance was achieved with CLM14, we present the intermediate results to illustrate how information from auxiliary tasks was progressively integrated. Detailed results are provided in Table 9.

Table 9.

Intermediate results in the construction of the CLM14, for Spanish language.

As shown in Table 9, incorporating Bot EN as the second model results in a more effective transfer of learning compared to Bot ES, a finding that is further supported by the result of CLM15.
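The cascade structure behind these intermediate results can be sketched as a loop in which each step starts from the checkpoint produced by the previous one. The sketch is schematic: `fine_tune` stands for any training routine, and the toy version below merely records the task order rather than training a model.

```python
def build_cascade(base, tasks, fine_tune):
    """Fine-tune on each task in order, starting from the previous checkpoint.
    Returns the intermediate checkpoints so each stage can be evaluated."""
    checkpoints = []
    current = base
    for task in tasks:
        current = fine_tune(current, task)
        checkpoints.append((task, current))
    return checkpoints

def toy_fine_tune(checkpoint, task):
    """Stand-in for real training: a checkpoint is the list of tasks seen so far."""
    return checkpoint + [task]

# Task order of the CLM14 scheme as described in the text.
clm14 = build_cascade(["mBERT"], ["Stance EN", "Bot EN", "Bot ES"], toy_fine_tune)
```

Keeping every intermediate checkpoint is what makes an ablation of this kind possible, since each prefix of the cascade can be fine-tuned and scored on the target task separately.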

6. Conclusions

This study introduces an innovative method to utilize cross-lingual cross-domain transfer learning in a target task. We aim to implement contemporary machine learning models in Spanish, a language that lacks annotated data for the specific tasks addressed in this study. Our strategy combines cascade-based multi-task transfer learning with mBERT-based word embeddings. This allows us to construct multiple pre-trained models using the available data and then adapt them to the domain in the target task. Our methodology is structured in four stages and can be systematically applied depending on the availability of annotated data.

For practical application and validation of our method, we focus on rumor detection, using two auxiliary tasks: stance classification and bot detection. Our detailed experimental assessment explores 80 distinct configurations to discern the most effective way to utilize cross-lingual, cross-domain transfer learning across all tasks and datasets. The validation of our technique is supported by experimental results that demonstrate a marked enhancement in the target task. Impressively, our study reveals that the results for rumor detection in Spanish are promising, underscoring the benefits of using auxiliary tasks in English.

Looking ahead, our research agenda includes exploring the integration of additional tasks, such as hate speech detection. We also want to compare our approach with multi-task joint learning frameworks to highlight differences from current cascade-based strategies. Furthermore, it is essential to assess the effectiveness of these methods on various social media platforms, to reduce the dependence on data obtained solely from Twitter (now X). Lastly, we are intrigued by the potential of applying the techniques outlined in this study to languages other than Spanish.

Author Contributions

Conceptualization, M.M. and E.P.; methodology, E.P.; software, E.P.; validation, E.P. and M.M.; resources, M.S.; data curation, E.P.; writing—original draft preparation, E.P. and M.M.; writing—review and editing, M.M. and M.S.; project administration, M.S. All authors have read and agreed to the published version of the manuscript.

Funding

Marcelo Mendoza acknowledges funding support from the Millennium Institute for Foundational Research on Data (IMFD) (ANID—Millennium Science Initiative Program—Code ICN17_002) and the National Center of Artificial Intelligence (CENIA FB210017, Basal ANID). Marcelo Mendoza was also funded by the ANID grant FONDECYT 1241462. The funders played no role in the design of this study.

Institutional Review Board Statement

The study was reviewed by the Research Ethics and Safety Unit of the Pontificia Universidad Católica de Chile and was declared exempt from ethical and safety evaluation, since it did not investigate people or personal and/or sensitive data, nor did living beings or individuals participate in it. Materials, tangible or intangible, specially protected, will be used in scientific research and are not considered risky activities or agents that may put the subjects who participate, carry out, and/or intervene in the research and the environment at risk. The ethics committee certificate is included in the attached documents.

Informed Consent Statement

Not applicable.

Data Availability Statement

All the data used in the paper comes from open-access sources. Their URLs are included in the main body of the paper.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of the data; in the writing of the manuscript; or in the decision to publish the results.

References

- Shu, K.; Bhattacharjee, A.; Alatawi, F.; Nazer, T.H.; Ding, K.; Karami, M.; Liu, H. Combating disinformation in a social media age. WIREs Data Min. Knowl. Discov. 2020, 10, e1385.

- Allcott, H.; Gentzkow, M. Social Media and Fake News in the 2016 Election. J. Econ. Perspect. 2017, 31, 211–236.

- Allcott, H.; Gentzkow, M.; Yu, C. Trends in the diffusion of misinformation on social media. Res. Politics 2019, 6, 205316801984855.

- Graves, L.; Nyhan, B.; Reifler, J. Understanding Innovations in Journalistic Practice: A Field Experiment Examining Motivations for Fact-Checking. J. Commun. 2016, 66, 102–138.

- Brandtzaeg, P.B.; Følstad, A.; Chaparro, M. How Journalists and Social Media Users Perceive Online Fact-Checking and Verification Services. J. Pract. 2018, 12, 1109–1129.

- Castillo, C.; Mendoza, M.; Poblete, B. Predicting information credibility in time-sensitive social media. Internet Res. 2013, 23, 560–588.

- Ruchansky, N.; Seo, S.; Liu, Y. CSI: A hybrid deep model for fake news detection. In Proceedings of the 2017 ACM on Conference on Information and Knowledge Management, Singapore, 1–5 October 2017; Volume 1, pp. 797–806.

- Liu, Y.; Wu, Y.-F.B. Early detection of fake news on social media through propagation path classification with recurrent and convolutional networks. In Thirty-Second AAAI Conference on Artificial Intelligence; AAAI Press: Palo Alto, CA, USA, 2018; Volume 1.

- Ma, J.; Gao, W.; Wong, K. Rumor detection on Twitter with tree-structured recursive neural networks. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, ACL 2018, Melbourne, Australia, 15–20 July 2018; Volume 1, pp. 1980–1989.

- Ma, J.; Gao, W.; Joty, S.R.; Wong, K.-F. An Attention-based Rumor Detection Model with Tree-structured Recursive Neural Networks. ACM Trans. Intell. Syst. Technol. 2020, 11, 42:1–42:28.

- Sun, L.; Rao, Y.; Wu, L.; Zhang, X.; Lan, Y.; Nazir, A. Fighting False Information from Propagation Process: A Survey. ACM Comput. Surv. 2023, 55, 207:1–207:38.

- Mendoza, M.; Poblete, B.; Castillo, C. Twitter under crisis: Can we trust what we RT? In Proceedings of the 1st Workshop on Social Media Analysis, SOMA 2010, Washington, DC, USA, 25 July 2010; Volume 1, pp. 71–79.

- Vosoughi, S.; Roy, D.; Aral, S. The spread of true and false news online. Science 2018, 359, 1146–1151.

- Mendoza, M.; Valenzuela, S.; Núñez-Mussa, E.; Padilla, F.; Providel, E.; Campos, S.; Bassi, R.; Riquelme, A.; Aldana, V.; López, C. A study on information disorders on social networks during the Chilean social outbreak and COVID-19 pandemic. Appl. Sci. 2023, 13, 5347.

- Garg, S.; Sharma, D.K. New Politifact: A Dataset for Counterfeit News. In Proceedings of the 2020 9th International Conference System Modeling and Advancement in Research Trends (SMART), Bangalore, India, 17–19 December 2020; Volume 1, pp. 17–22.

- Providel, E.; Mendoza, M. Misleading information in Spanish: A survey. Soc. Netw. Anal. Min. 2021, 11, 36.

- De, A.; Bandyopadhyay, D.; Gain, B.; Ekbal, A. A Transformer-Based Approach to Multilingual Fake News Detection in Low-Resource Languages. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2021, 21, 9:1–9:20.

- Shi, Y.; Zhang, X.; Shang, Y.; Yu, N. Don’t Be Misled by Emotion! Disentangle Emotions and Semantics for Cross-Language and Cross-Domain Rumor Detection. IEEE Trans. Big Data 2024, 10, 249–259.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gómez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All you Need. In Proceedings of the Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems 2017, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT 2019, Minneapolis, MN, USA, 2–7 June 2019; Volume 1 (Long and Short Papers).

- Lin, Y.; Yang, S.; Stoyanov, V.; Ji, H. A Multi-lingual Multi-task Architecture for Low-resource Sequence Labeling. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Melbourne, Australia, 15–20 July 2018; pp. 799–809.

- Pikuliak, M.; Šimko, M. Combining Cross-lingual and Cross-task Supervision for Zero-Shot Learning. In Text, Speech, and Dialogue; Springer: Berlin/Heidelberg, Germany, 2020; pp. 162–170.

- Ahmad, Z.; Jindal, R.; Ekbal, A.; Bhattachharyya, P. Borrow from rich cousin: Transfer learning for emotion detection using cross lingual embedding. Expert Syst. Appl. 2020, 139, 112851.

- Wu, L.; Rao, Y.; Jin, H.; Nazir, A.; Sun, L. Different Absorption from the Same Sharing: Sifted Multi-task Learning for Fake News Detection. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 4644–4653.

- Chu, Z.; Gianvecchio, S.; Wang, H.; Jajodia, S. Who is Tweeting on Twitter: Human, Bot, or Cyborg? In Proceedings of the 26th Annual Computer Security Applications Conference, Tampa, FL, USA, 6–10 December 2010; pp. 21–30, ACSAC ’10.

- Khaund, T.; Al-khateeb, S.; Tokdemir, S.; Agarwal, N. Analyzing Social Bots and Their Coordination During Natural Disasters. In SBP-BRiMS; Springer: Cham, Switzerland, 2018.

- Rangel, F.; Rosso, P. Overview of the 7th author profiling task at Pan 2019: Bots and gender profiling in twitter. In Proceedings of the Conference of 20th Working Notes of Conference and Labs of the Evaluation Forum, CLEF, Padua, Italy, 9–11 September 2019; p. 2380.

- Castillo, S.; Allende-Cid, H.; Palma, W.; Alfaro, R.; Ramos, H.; Gonzalez, C.; Elortegui, C.; Santander, P. Detection of Bots and Cyborgs in Twitter: A Study on the Chilean Presidential Election in 2017. In Proceedings of the Conference of 11th International Conference on Social Computing and Social Media, SCSM 2019, Madrid, Spain, 9–11 September 2019; pp. 311–323.

- Kochkina, E.; Liakata, M.; Zubiaga, A. All-in-one: Multi-task Learning for Rumour Verification. In Proceedings of the 27th International Conference on Computational Linguistics, San Sebastián, Spain, 20–26 August 2018; pp. 3402–3413.

- Ma, J.; Gao, W.; Wong, K.-F. Detect Rumor and Stance Jointly by Neural Multi-Task Learning. In Proceedings of the Companion Proceedings of The Web Conference 2018, Lyon, France, 23–27 April 2018; pp. 585–593.

- Alsaif, H.F.; Aldossari, H.D. Review of stance detection for rumor verification in social media. Eng. Appl. Artif. Intell. 2023, 119, 105801.

- Castillo, C.; Mendoza, M.; Poblete, B. Information credibility on Twitter. In Proceedings of the 20th International Conference on World Wide Web, Hyderabad, India, 28 March–1 April 2011; pp. 675–684.

- Qazvinian, V.; Rosengren, E.; Radev, D.R.; Mei, Q. Rumor Has It: Identifying Misinformation in Microblogs. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP), Edinburgh, UK, 28–31 August 2011; pp. 1589–1599.

- Zhao, Z.; Resnick, P.; Mei, Q. Enquiring Minds: Early Detection of Rumors in Social Media from Enquiry Posts. In Proceedings of the 24th International Conference on World Wide Web (WWW), Florence, Italy, 18–22 May 2015; pp. 1395–1405.

- Posadas-Durán, J.; Gómez-Adorno, H.; Sidorov, G.; Moreno, J. Detection of Fake News in a New Corpus for the Spanish Language. J. Intell. Fuzzy Syst. 2019, 36, 4868–4876.

- Abonizio, H.; De Morais, J.; Tavares, G.; Barbon Junior, S. Language-Independent Fake News Detection: English, Portuguese, and Spanish Mutual Features. Future Internet 2020, 12, 87.

- Salazar, E.; Tenorio, G.; Naranjo, L. Evaluation of the Precision of the Binary Classification Models for the Identification of True or False News in Costa Rica. Rev. Ibérica Sist. Tecnol. Informação 2020, E38, 156–170.

- Boididou, C.; Papadopoulos, S.; Zampoglou, M.; Apostolidis, L.; Papadopoulou, O.; Kompatsiaris, Y. Detection and Visualization of Misleading Content on Twitter. Int. J. Multimed. Inf. Retr. 2018, 7, 71–86.

- Zubiaga, A.; Liakata, M.; Procter, R.; Wong Sak Hoi, G.; Tolmie, P. Analysing How People Orient to and Spread Rumours in Social Media by Looking at Conversational Threads. PLoS ONE 2016, 11, e0150989.

- Ma, J.; Gao, W.; Mitra, P.; Kwon, S.; Jansen, B.J.; Wong, K.F.; Cha, M. Detecting Rumors from Microblogs with Recurrent Neural Networks. In Proceedings of the Twenty-Fifth International Joint Conference on Artificial Intelligence, IJCAI 2016, New York, NY, USA, 9–15 July 2016; Volume 1, pp. 3818–3824.

- Yu, F.; Liu, Q.; Wu, S.; Wang, L.; Tan, T. A Convolutional Approach for Misinformation Identification. In Proceedings of the Twenty-Sixth International Joint Conference on Artificial Intelligence (IJCAI), Melbourne, Australia, 19–25 August 2017; pp. 3901–3907.

- Ajao, O.; Bhowmik, D.; Zargari, S. Fake News Identification on Twitter with Hybrid CNN and RNN Models. In Proceedings of the 9th International Conference on Social Media and Society (SMSociety), Vancouver, NA, Canada, 18–20 July 2018; pp. 226–230.

- Deepak, S.; Chitturi, B. Deep Neural Approach to Fake-News Identification. Procedia Comput. Sci. 2020, 167, 2236–2243.

- Wang, Y.; Ma, F.; Jin, Z.; Yuan, Y.; Xun, G.; Jha, K.; Su, L.; Gao, J. EANN: Event Adversarial Neural Networks for Multi-Modal Fake News Detection. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; pp. 849–857.

- Buda, J.; Bolonyai, F. An Ensemble Model Using N-grams and Statistical Features to Identify Fake News Spreaders on Twitter. In Proceedings of the Working Notes of CLEF 2020–Conference and Labs of the Evaluation Forum, Thessaloniki, Greece, 22–25 September 2020; Volume 2696.

- Zapata, A.; Providel, E.; Mendoza, M. Empirical Evaluation of Machine Learning Ensembles for Rumor Detection. In Proceedings of the Social Computing and Social Media: Design, User Experience and Impact—14th International Conference, SCSM 2022, San Diego, CA, USA, 7–10 August 2022.

- Providel, E.; Mendoza, M. Using Deep Learning to Detect Rumors in Twitter. In Proceedings of the Social Computing and Social Media. Design, Ethics, User Behavior, and Social Network Analysis–12th International Conference (SCSM), Madrid, Spain, 12–14 October 2020; pp. 321–334.

- Kwon, S.; Cha, M.; Jung, K. Rumor Detection over Varying Time Windows. PLoS ONE 2017, 12, e0168344.

- Zubiaga, A.; Liakata, M.; Procter, R. Exploiting Context for Rumour Detection in Social Media. In Social Informatics—9th International Conference, SocInfo, Oxford, UK, Proceedings, Part I; Springer: Berlin/Heidelberg, Germany, 2017.

- Ma, J.; Gao, W.; Wong, K.-F. Detect rumors in microblog posts using propagation structure via kernel learning. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, ACL 2017, Vancouver, Canada, 30 July–4 August 2017; Volume 1, pp. 708–717.

- Li, J.; Ni, S.; Kao, H.-Y. Birds of a Feather Rumor Together? Exploring Homogeneity and Conversation Structure in Social Media for Rumor Detection. IEEE Access 2020, 8, 212865–212875.

- Ma, J.; Gao, W. Debunking Rumors on Twitter with Tree Transformer. In Proceedings of the 28th International Conference on Computational Linguistics, Guadalajara, Mexico, 2–7 November 2020.

- Zhao, R.; Arana-Catania, M.; Zhu, L.; Kochkina, E.; Gui, L.; Zubiaga, A.; Procter, R.; Liakata, M.; He, Y. PANACEA: An Automated Misinformation Detection System on COVID-19. In Proceedings of the 17th Conference of the European Chapter of the Association for Computational Linguistics: System Demonstrations, Zagreb, Croatia, 10–14 April 2023.

- Yu, S.; Ren, J.; Li, S.; Naseriparsa, M.; Xia, F. Graph Learning for Fake Review Detection. Front. Artif. Intell. 2022, 5, 922589.

- Mohawesh, R.; Xu, S.; Tran, S.N.; Ollington, R.; Springer, M.; Jararweh, Y.; Maqsood, S. Fake Reviews Detection: A Survey. IEEE Access 2021, 9, 65771–65802.

- Caruana, R. Multitask Learning. Mach. Learn. 1997, 28, 41–75.

- Zhang, Y.; Yang, Q. A Survey on Multi-Task Learning. IEEE Trans. Knowl. Data Eng. 2022, 34, 5586–5609.

- Pikuliak, M.; Šimko, M.; Bieliková, M. Cross-lingual learning for text processing: A survey. Expert Syst. Appl. 2021, 165, 113765.

- Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.S.; Dean, J. Distributed Representations of Words and Phrases and Their Compositionality. In Proceedings of the 26th International Conference on Neural Information Processing Systems—Volume 2, NIPS’13, Vancouver, BC, Canada, 5–10 December 2013; pp. 3111–3119.

- Enayet, O.; El-Beltagy, S.R. NileTMRG at SemEval-2017 Task 8: Determining Rumour and Veracity Support for Rumours on Twitter. In Proceedings of the 11th International Workshop on Semantic Evaluation (SemEval-2017), Vancouver, BC, Canada, 30 July–4 August 2017; pp. 470–474.

- Li, Q.; Zhang, Q.; Si, L. Rumor Detection by Exploiting User Credibility Information, Attention and Multi-task Learning. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 1–6 July 2019; pp. 1173–1179.

- Islam, M.R.; Muthiah, S.; Ramakrishnan, N. RumorSleuth: Joint Detection of Rumor Veracity and User Stance. In Proceedings of the 2019 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining, ASONAM ’19, Barcelona, Spain, 26–29 August 2019; pp. 131–136.