1. Introduction

Remote Direct Memory Access (RDMA) has gained widespread adoption in data centers (DCs) due to its ability to significantly enhance performance by providing ultra-low latency, high throughput, and minimal CPU overhead. These advantages are achieved through techniques such as kernel bypass and zero memory copy, which allow for direct memory access between servers without involving the CPU. This capability is crucial for latency-sensitive and bandwidth-intensive applications, such as distributed machine learning [1] and cloud storage [2,3], where large volumes of data need to be transferred quickly and efficiently.

RDMA’s wide application in DCs has also motivated extensive research on improving RDMA transmission performance in DC networks (DCNs) [4]. These studies have significantly improved intra-DC RDMA transmission performance by optimizing congestion control and load balancing through new algorithms and specialized hardware upgrades. Concurrently, industry is actively pursuing optimizations for intra-DC RDMA, with companies such as Google and Amazon at the forefront. To improve key performance metrics such as scalability, latency, and bandwidth in large-scale DC operations, new transmission protocols such as UEC, SRD, Falcon, and TTPoE are being developed specifically for RDMA applications. These efforts have collectively advanced the application of RDMA within DCs.

In recent years, the scale of DC infrastructures has significantly expanded to support the needs of emerging data-intensive and computing-intensive services. Due to constraints such as land, energy, and connectivity [5,6,7], cloud service providers (CSPs) typically deploy multiple DCs in different regions to balance user demand and operational costs [8]. Meanwhile, applications relying on inter-DC networks are continuously evolving. Many data-intensive and computing-intensive applications, such as data analysis [9,10], machine learning [11,12], graph processing [13,14], and high-performance computing (HPC) [15,16], involve substantial amounts of data distributed across multiple DCs. In particular, with the rapid development of Artificial Intelligence (AI) applications, the explosive growth in the parameter counts of large language models (LLMs) has significantly increased the computing power required for LLM pre-training; consequently, leveraging the computing power of DCs in multiple regions to meet ultra-large-scale computing requirements has become a recent research focus [17,18].

To meet the demands for high-throughput, low-latency, and lossless data transmission across DCs, applying RDMA to inter-DC scenarios is a natural choice. This approach extends the performance and cost-efficiency advantages observed in intra-DC applications to inter-DC environments. However, deploying RDMA across DCs raises a series of new challenges, including congestion control, buffer pressure, and load balancing. For instance, existing RDMA congestion control algorithms are mainly designed for intra-DC networks, and their performance has been shown to be severely impaired in inter-DC networks. The long-haul, high-bandwidth link introduces a large round-trip time (RTT) and bandwidth-delay product (BDP) [19], which delays congestion signals and results in large queueing latency. To address these challenges, researchers have proposed various methods that focus on long-range congestion control or packet loss control to optimize the performance of RDMA in specific inter-DC application scenarios. However, these methods still exhibit certain limitations, and further research is required to make RDMA more effective in inter-DC applications.

As illustrated in Figure 1, the evolution of RDMA has progressed through three key stages: proposal, maturation, and modernization. Its application scope has also broadened, extending from intra-DC to inter-DC use cases. This article aligns its structure with this developmental trajectory. We first introduce the background of RDMA, including its working principles and related technologies applied in DCNs. Next, the requirements and challenges of inter-DC RDMA are analyzed. Subsequently, a survey of the existing inter-DC RDMA solutions and related research is conducted. Following this, several future research directions for inter-DC RDMA are examined. Finally, this article concludes with a summary of the findings.

3. Inter-DC RDMA Requirements and Challenges

The growing trend of DC interconnection (DCI) is driven by two key factors: the rise of inter-DC applications and resource constraints.

Emerging inter-DC applications: Many applications, such as cloud services, data analytics, machine learning, and graph processing, involve large datasets distributed across multiple DCs. These applications commonly adhere to the “moving compute to data” model, necessitating efficient data transfer and processing across DCs. For instance, scientific research such as the InfiniCloud project interconnects supercomputing centers around the nation to facilitate collaborative research efforts. In the past few years, federated cloud computing has been mooted as a means to tackle the demands of data privacy management and international digital sovereignty, which also requires data processing in geographically distributed DCs. In addition to the data requirements, the substantial computing power required for LLM training in recent years has led to widespread discussions on effectively utilizing the computing resources of multiple DCs to support large-scale LLM pre-training tasks.

Resource constraints: At the same time, the expansion of large DCs is limited by land, power, and connectivity constraints. To address this, large CSPs have adopted a strategy of using multiple interconnected DCs through dedicated fibers to serve a region rather than relying on a single mega-DC. This approach, known as DCI, involves fiber lengths ranging from tens of kilometers for city-wide interconnections to thousands of kilometers for nation-wide interconnections. For instance, China has been developing a national computing system that interconnects computing nodes and DC clusters across the country to balance resource distribution, called the China Computing Network (C2NET) [42,43]. This system leverages computing infrastructures located in the west, where land and electricity costs are lower, to meet the computing demands of the east.

For these reasons, interconnecting multiple DCs to support emerging applications is set to become an important trend. In recent years, the conditions for wide-area interconnection among DCs have gradually matured: some deployments rely on the existing wide area network (WAN) infrastructure, while others use dedicated optical fibers to provide high-speed interconnection [44,45,46]. Thus, for inter-DC applications, directly establishing RDMA connections over DCI is highly advisable. First, these services typically employ RDMA for communication within the DC due to stringent performance requirements. When they communicate across DCs, it is preferable to continue using existing RDMA technology to achieve high single-connection throughput over long-distance links. Second, using RDMA maintains API consistency and reduces the complexity of deploying applications across DCs. However, deploying current DCN-specific RDMA solutions across DCs presents several significant challenges, primarily because network conditions differ from those of intra-DC environments.

Congestion control: Existing intra-DC RDMA solutions (e.g., DCQCN [25], TIMELY [29], and IRN [28]) leverage congestion control algorithms to ensure a lossless network environment. However, these algorithms struggle with the unique conditions of inter-DC networks, leading to impaired performance. Inter-DC links have much longer propagation delays than intra-DC links, and inter-DC latency varies from 1 millisecond to several tens of milliseconds. For instance, city-wide interconnections within 100 km result in a latency of approximately 1 millisecond, whereas some nation-wide or continent-wide interconnections can exceed 1000 km, bringing latency on the order of 10 milliseconds [47]. This increased latency heavily affects the performance of existing RDMA congestion control algorithms such as DCQCN, TIMELY, and HPCC. These algorithms are designed for low-latency environments and adjust the sending rate according to ECN, RTT, or other timely feedback, so they struggle to adapt to the high-latency conditions of inter-DC networks. The long RTT slows down the convergence of these algorithms, leading to oscillating sending rates and queue lengths, which can impair throughput and cause queue starvation.

Buffer pressure: Lossless RDMA requires at least one bandwidth-delay product (BDP) of buffer space to avoid packet loss [19], and the switches in DCs usually have shallow buffers in order to ensure low queuing delay. However, the latency across DCs far exceeds that within a DC, and inter-DC link capacities are usually very large, resulting in heavy buffer pressure on the egress switches connected to long-haul dedicated links. Therefore, if intra-DC RDMA solutions are simply deployed in inter-DC scenarios with traditional switches, buffers can overflow and packets can be lost, which further complicates the retransmission process. Consequently, the packet loss caused by buffer pressure not only challenges packet loss recovery mechanisms, resulting in higher latency and lower bandwidth utilization, but also imposes stricter requirements on the design of switches for inter-DC interconnection scenarios.
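To make the buffer requirement concrete, the following minimal sketch computes the propagation-only RTT and the resulting BDP for a few link lengths. It assumes a fiber propagation delay of about 5 µs per kilometer and a 100 Gbps link; both figures and the function names are illustrative assumptions, not values taken from the cited works. Under these assumptions a 100 km link has an RTT of about 1 ms and a BDP of about 12.5 MB.

```python
# Assumption: light travels through optical fiber at roughly 200,000 km/s,
# i.e. about 5 microseconds of one-way delay per kilometer.
FIBER_DELAY_US_PER_KM = 5.0


def rtt_seconds(distance_km: float) -> float:
    """Propagation-only round-trip time over a fiber of the given length."""
    return 2 * distance_km * FIBER_DELAY_US_PER_KM * 1e-6


def bdp_bytes(link_gbps: float, distance_km: float) -> float:
    """Bandwidth-delay product in bytes: link rate multiplied by the RTT."""
    return link_gbps * 1e9 / 8 * rtt_seconds(distance_km)


if __name__ == "__main__":
    # 0.1 km stands in for an intra-DC hop; 100 km and 1000 km for DCI links.
    for km in (0.1, 100, 1000):
        rtt_ms = rtt_seconds(km) * 1e3
        bdp_mb = bdp_bytes(100, km) / 1e6
        print(f"{km:>7} km at 100 Gbps: RTT {rtt_ms:.3f} ms, BDP {bdp_mb:.3f} MB")
```

The sketch ignores switching and queuing delay, so real RTTs and buffer needs are higher than the values it prints.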

Packet loss recovery: When RDMA solutions that rely on lossless networks are deployed across DCs, RDMA’s congestion control mechanism may struggle to respond promptly to network state changes. RDMA typically uses a Go-Back-N (GBN) retransmission mechanism, where the sender retransmits all packets starting from the lost one. In high-latency environments, this can result in substantial retransmission overhead, reducing transmission efficiency. Sluggish congestion control therefore not only delays the detection of packet loss but also leads to higher packet loss rates and triggers more frequent retransmissions, exacerbating network congestion. For lossy RDMA solutions, the impact of inter-DC deployment on packet loss recovery is significant as well. Lossy solutions permit switches to drop packets, with the sender retransmitting only the lost packets when specific events are triggered, which is called selective retransmission. Typical examples of lossy solutions include IRN [28] for RoCEv2 and traditional TCP. Unlike PFC-based solutions, IRN sends all packets within a sliding window (i.e., a bitmap), and packets are dropped by switches when buffer overflow occurs. The fixed-size sliding window limits the number of inflight packets and affects data transmission performance. Selective retransmission can achieve good performance with short RTTs (i.e., in a DCN environment), but inter-DC long-haul transmission introduces far larger RTTs than intra-DC links. IRN’s static bitmap is too rigid to balance intra-DC and inter-DC traffic simultaneously. Furthermore, it is difficult for the sender to perceive packet loss in time when congestion occurs at downstream DCs, and packet retransmission then introduces high flow latency due to the large inter-DC RTT. Therefore, current lossy solutions cannot meet the requirements of high throughput and low latency for inter-DC applications.
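The difference in retransmission overhead between the two schemes can be illustrated with a simple single-round model. The sketch below assumes the sender keeps one BDP of packets in flight, uses a 4096-byte packet size, and considers only one retransmission round; the function names and parameters are hypothetical and chosen for illustration only. With a 100 Gbps link and a 1 ms RTT, roughly 3000 packets are in flight, so one early loss forces GBN to resend nearly the whole window, while selective retransmission resends a single packet.

```python
def inflight_packets(link_gbps: float, rtt_ms: float, mtu_bytes: int = 4096) -> int:
    """Packets in flight when the sender keeps the pipe full for one RTT."""
    bdp_bytes = link_gbps * 1e9 / 8 * rtt_ms * 1e-3
    return int(bdp_bytes // mtu_bytes)


def retransmitted(sent: int, lost: set, scheme: str) -> int:
    """Count packets resent after one window of back-to-back packets.

    sent:   number of packets sent (sequence numbers 0 .. sent - 1)
    lost:   set of sequence numbers that were dropped
    scheme: "gbn" resends everything from the first loss onward;
            "selective" resends only the lost packets
    """
    if not lost:
        return 0
    if scheme == "gbn":
        return sent - min(lost)
    if scheme == "selective":
        return len(lost)
    raise ValueError(f"unknown scheme: {scheme}")


if __name__ == "__main__":
    # Assumed RTTs: 0.01 ms for an intra-DC path, 1 ms and 10 ms for DCI links.
    for rtt_ms in (0.01, 1.0, 10.0):
        window = inflight_packets(100, rtt_ms)
        gbn = retransmitted(window, {0}, "gbn")
        sel = retransmitted(window, {0}, "selective")
        print(f"RTT {rtt_ms} ms: window {window}, GBN resends {gbn}, selective resends {sel}")
```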

Load balance: In DCNs, multiple equal-cost parallel paths are available between end-host pairs. Traditional RDMA uses single-path transmission, failing to fully utilize the abundant network capacity. To leverage multipath capabilities, researchers have proposed various multipath transmission and load-balancing mechanisms for intra-DC RDMA solutions. These solutions (e.g., MP-RDMA [38], PLB [39], and Proteus [40]) allow for multiple paths to balance total bandwidth, enhancing throughput and minimizing the flow completion time (FCT) by fully utilizing parallel paths. The multipath-enabled load-balancing process generally consists of two stages. First, the congestion status of candidate multipaths is obtained, and initial traffic distribution considers these statuses to evenly allocate data flows across paths. The second stage involves dynamic adjustment during flow transmission, where congested flows are rerouted to non-congested paths. These schemes significantly improve intra-DC RDMA performance, especially in scenarios with a high proportion of elephant flows. However, these load-balancing optimization schemes are challenging to deploy in inter-DC scenarios to enhance inter-DC RDMA performance. This is because in DCNs, multiple equivalent paths typically exist between computing nodes (servers), whereas inter-DC transmission is DC oriented, usually with only one dedicated path between DCs. Even if multiple paths are available, the high latency of long-distance transmission results in higher packet arrival time intervals, making out-of-order issues more problematic. Therefore, optimizing inter-DC RDMA performance through current intra-DC multipath-enabled, load-balancing methods is not feasible.

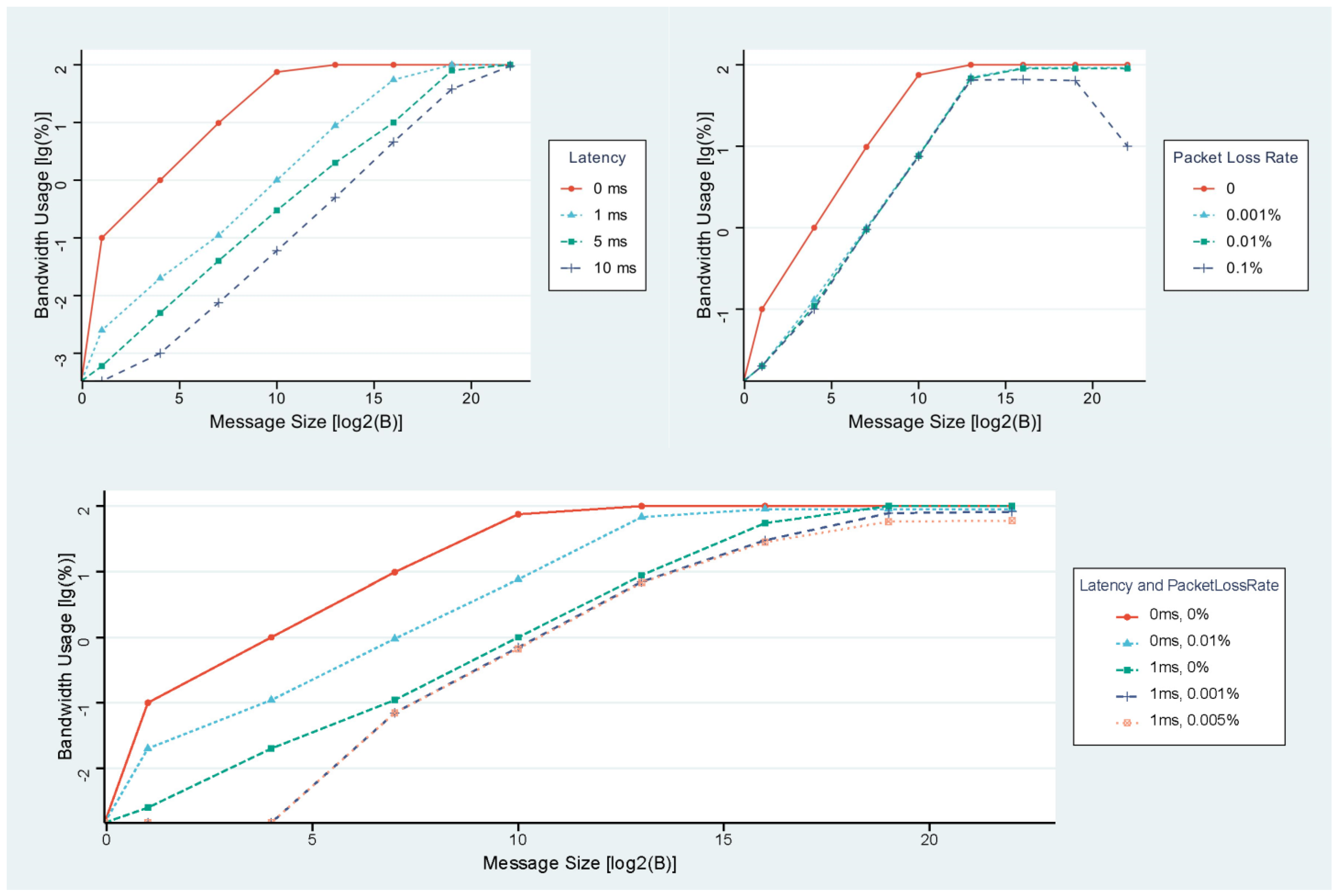

As analyzed above, deploying RDMA across DCs introduces significant challenges due to the long latency and low reliability of long-range connections. These challenges affect congestion control, packet loss recovery, load-balancing mechanisms, and network device design. Without appropriate optimizations, RDMA performance can degrade sharply. Numerous experiments have demonstrated that the high latency associated with inter-DC deployments brings a significant decline in RDMA performance, and that packet loss exacerbates this degradation. The worst case occurs when high latency and packet loss are present together.

Figure 2 illustrates the impact of latency and packet loss on RDMA performance according to [48]. The tests were conducted with 10 G throughput, examining the effects of varying latency, packet loss rates, and their combined impact on the performance of RDMA connections. It is evident that under low throughput like 10 G, the performance degradation caused by increased latency and packet loss can hardly be mitigated by increasing the message size. However, in real-world inter-DC scenarios, where throughput demands are significantly higher and network conditions are far more complex, the performance degradation of RDMA becomes unacceptably severe.

4. Existing Representative Works

To address the challenges of deploying RDMA across DCs, and to apply current RDMA solutions to long-distance scenarios while minimizing performance degradation, numerous researchers have pursued various strategies. A significant portion of these efforts centers on improving congestion management and packet loss control, aiming to preserve transmission efficiency under high latency.

4.1. SWING

SWING [

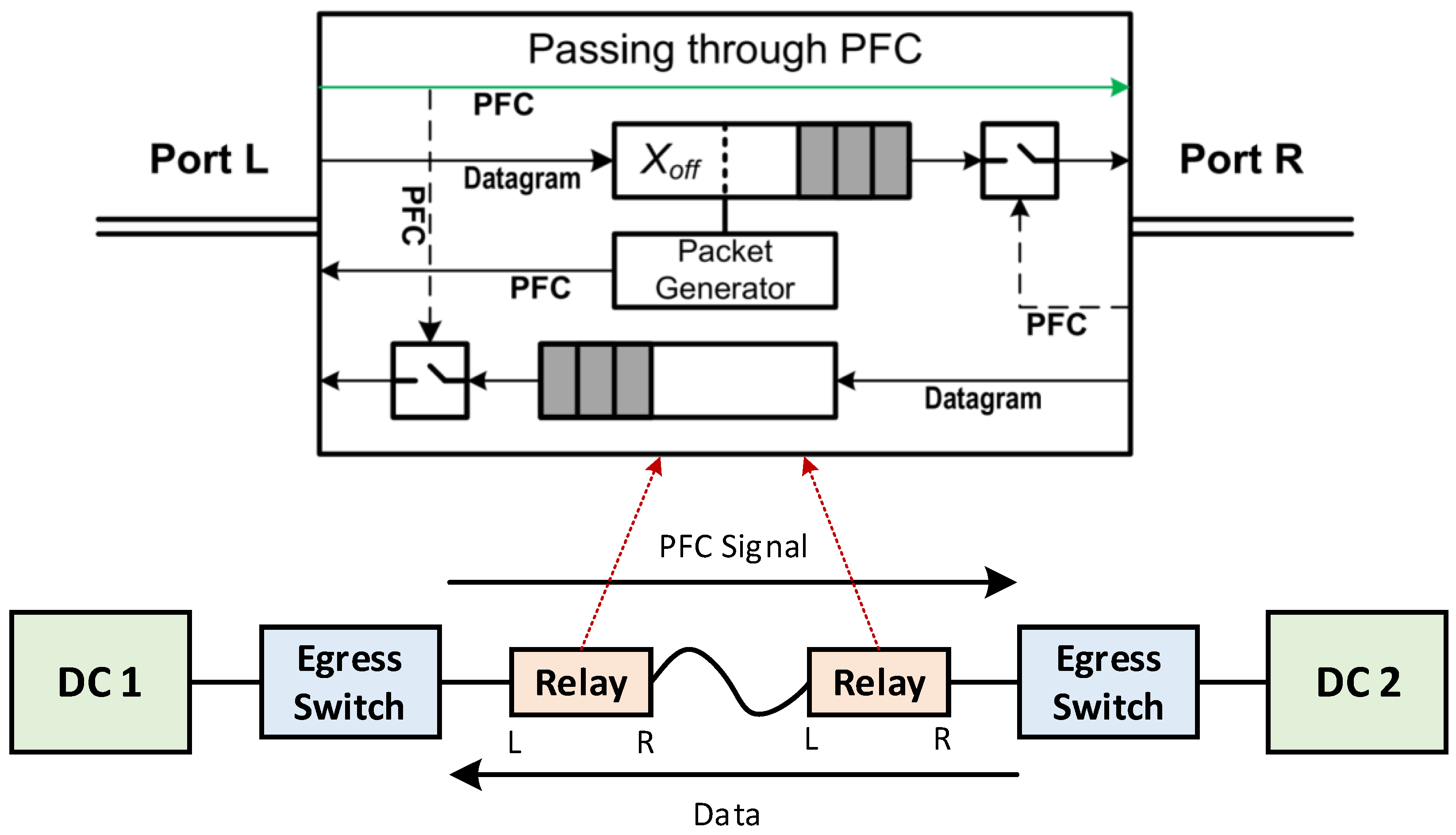

19] introduces a PFC-relay mechanism to enable efficient lossless RDMA across long-distance DCI, addressing the critical challenge of PFC-induced throughput loss and excessive buffer requirements in traditional solutions. The core innovation lies in deploying relay devices at both ends of the DCI link, which act as intermediaries to dynamically relay PFC messages between DCs. Unlike native PFC, where long propagation delays force switches to reserve buffers equivalent to 2 × BDP to avoid throughput loss, SWING’s relays establish a semi-duplex feedback loop: they mimic the local switch’s draining rate and propagate PFC signals in a time-synchronized manner, reducing the required buffer to just one BDP per direction. This mechanism ensures that upstream and downstream switches receive timely congestion feedback, eliminating the idle periods caused by delayed RESUME messages in traditional PFC.

As demonstrated in Figure 3, the relay device intercepts PFC signals from the local DC switch, forwards them across the long-distance link with minimal processing delay, and enforces a cyclic sending rate matching the remote switch’s capacity. This creates a “swing” effect where traffic is paced to the network’s draining rate, preventing buffer overflow while maintaining full link utilization. Critically, SWING achieves this without modifying intra-DC networks or RDMA protocols, retaining full compatibility with existing RoCEv2 infrastructure. The authors evaluate SWING in various traffic scenarios and compare it with the original PFC solution. The results show that SWING can reduce the average FCT by 14% to 66%, using half the buffer of traditional deep-buffer solutions while eliminating HoL blocking and PFC storm issues. By focusing on protocol-layer relay rather than hardware upgrades, SWING offers a pragmatic solution to extend the benefits of lossless RDMA to inter-DC links with minimal infrastructure changes.

4.2. Bifrost

Bifrost [23] introduces a downstream-driven predictive flow control to extend RoCEv2 for efficient long-distance inter-DC transmission, addressing the inefficiencies of PFC without additional hardware. Unlike SWING, which relies on external relay devices, Bifrost innovatively modifies switch chip logic to leverage virtual incoming packets, a novel concept where the downstream port uses historical pause frame records to predict the upper bound of in-flight packets for the next RTT. This allows proactive, fine-grained control over upstream sending rates, eliminating the need for deep buffers while maintaining Ethernet/IP compatibility.

As illustrated in Figure 4, the design centers on the downstream port maintaining a history of pause frames to infer incoming packet limits, termed “virtual incoming packets.” By combining this predictive upper bound with the real-time queue length, Bifrost dynamically adjusts the flow rates in microsecond-scale time slots, ensuring the upstream matches the downstream draining capacity with minimal delay. This approach reduces buffer requirements to one-hop BDP, half of PFC’s 2 × BDP requirement, while achieving zero packet loss.
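The idea of bounding in-flight traffic from a pause history can be sketched as follows. This is a simplified illustration, not Bifrost’s actual algorithm: it assumes the last RTT is divided into fixed time slots, that the upstream sends at line rate in every slot in which it was not paused, and that draining is ignored, which makes the decision conservative. The class and method names are hypothetical.

```python
from collections import deque


class VirtualIncomingEstimator:
    """Hypothetical downstream-port model; slotting and names are assumptions."""

    def __init__(self, slots_per_rtt: int, bytes_per_slot: int):
        # Bytes the upstream can send at line rate during one time slot.
        self.bytes_per_slot = bytes_per_slot
        # One entry per slot of the last RTT: True if the upstream was told
        # to pause in that slot. Initially nothing has been paused.
        self.history = deque([False] * slots_per_rtt, maxlen=slots_per_rtt)

    def record_slot(self, paused: bool) -> None:
        """Append the pause decision of the newest slot, dropping the oldest."""
        self.history.append(paused)

    def virtual_incoming_bytes(self) -> int:
        """Upper bound on bytes that may still arrive during the next RTT."""
        unpaused_slots = sum(1 for paused in self.history if not paused)
        return unpaused_slots * self.bytes_per_slot

    def should_pause(self, queue_bytes: int, buffer_bytes: int) -> bool:
        """Pause when the queue plus worst-case arrivals would overflow."""
        return queue_bytes + self.virtual_incoming_bytes() > buffer_bytes


if __name__ == "__main__":
    est = VirtualIncomingEstimator(slots_per_rtt=4, bytes_per_slot=1000)
    for slot in range(6):
        pause = est.should_pause(queue_bytes=500, buffer_bytes=3000)
        est.record_slot(pause)
        print(slot, "pause" if pause else "send", est.virtual_incoming_bytes())
```

Because the estimator tracks only what the upstream was permitted to send, the bound holds regardless of how much the upstream actually sent.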

Crucially, Bifrost reuses PFC frame formats and integrates with existing switch architectures, requiring only lightweight modifications to the hardware logic of egress switches (e.g., on-chip co-processor for virtual packet tracking). This avoids additional device deployment and ensures seamless compatibility with intra-DC RoCEv2 infrastructure. Real-world experiments demonstrate that Bifrost reduces average FCT by up to 22.5% and tail latency by 42% compared to PFC, using 37% less buffer space. By shifting congestion control intelligence to the downstream switch and enabling predictive signaling, Bifrost achieves a balance of low latency, high throughput, and minimal buffer usage, making it a pragmatic solution for extending lossless RDMA to inter-DC links without compromising protocol compatibility.

4.3. LSCC

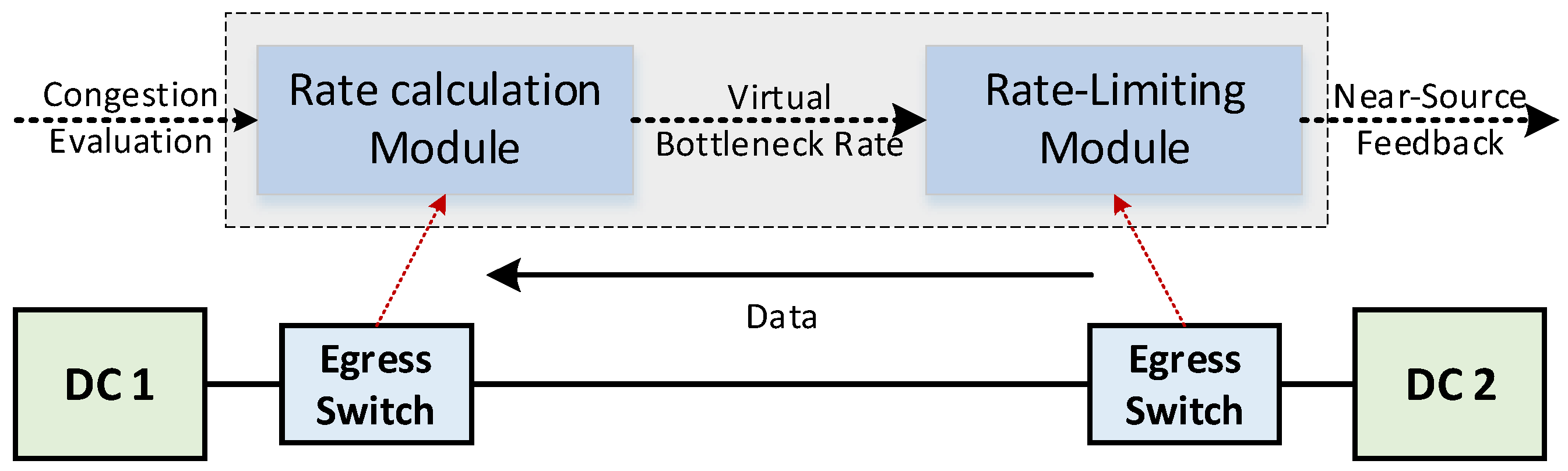

LSCC [49] breaks up long feedback loops and achieves timely adjustments at the traffic source to improve inter-DC RDMA congestion control. As shown in Figure 5, LSCC employs segmented link control to avoid delayed congestion control. A segmented feedback loop is established between egress switches connected to the long-haul link, providing timely congestion feedback and reducing buffer pressure on switches. The entire inter-DC transmission is divided into three segments: sender to local egress switch, local egress switch to remote egress switch, and remote egress switch to receiver.

By deploying the rate calculation and rate-limiting modules at the egress switches, LSCC establishes a feedback loop over the long-haul link. This loop transmits bottleneck information from the remote DC to the local egress switch, which then provides timely congestion signals to the traffic source. Additionally, the feedback loop offers reliable buffering for the remote DC, interrupting the propagation of PFC signals and ensuring optimal bandwidth utilization of the long-haul link.

Specifically, the rate calculation module and rate-limiting module are deployed at the ports of the egress switches connected to the long-haul link, and they collaborate to form the segmented feedback loop. The rate calculation module at the remote egress switch periodically assesses the congestion level within its local DC based on the received feedback signals and quantifies it into a virtual bottleneck rate, which is then fed back to the rate-limiting module located in the local egress switch. Upon receiving the feedback rate from the remote egress switch, the local egress switch immediately adjusts its rate to match the feedback rate. The rate-limiting module then sends direct feedback signals to sources within its local DC based on the received bottleneck rate, in step with the rate calculation module’s schedule.
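The interplay of the two modules can be sketched as a pair of small functions. How LSCC actually quantifies congestion into a virtual bottleneck rate is not described here, so the update rule below (a proportional decrease when congestion is reported, a fixed additive increase otherwise) is purely an illustrative assumption, as are the 100 Gbps line rate and all names.

```python
LINE_RATE_GBPS = 100.0  # assumed capacity of the long-haul link
MIN_RATE_GBPS = 1.0     # assumed floor so the rate never reaches zero


def virtual_bottleneck_rate(current_gbps: float,
                            congestion_fraction: float,
                            step_gbps: float = 5.0) -> float:
    """Remote egress switch: turn the observed congestion level into a rate.

    congestion_fraction is the assumed share (0..1) of feedback signals in
    the last period that reported congestion inside the remote DC.
    """
    if congestion_fraction > 0:
        rate = current_gbps * (1 - congestion_fraction / 2)
    else:
        rate = current_gbps + step_gbps
    return max(MIN_RATE_GBPS, min(LINE_RATE_GBPS, rate))


class LocalEgressLimiter:
    """Local egress switch: match the fed-back rate and signal local sources."""

    def __init__(self) -> None:
        self.rate_gbps = LINE_RATE_GBPS

    def on_feedback(self, bottleneck_gbps: float, offered_gbps: float) -> float:
        """Adopt the fed-back rate; return the fraction by which local
        sources should slow down (0.0 means no reduction is needed)."""
        self.rate_gbps = bottleneck_gbps
        if offered_gbps <= self.rate_gbps:
            return 0.0
        return 1 - self.rate_gbps / offered_gbps


if __name__ == "__main__":
    limiter = LocalEgressLimiter()
    rate = virtual_bottleneck_rate(LINE_RATE_GBPS, congestion_fraction=0.5)
    slowdown = limiter.on_feedback(rate, offered_gbps=100.0)
    print(f"bottleneck {rate} Gbps, sources slow down by {slowdown:.0%}")
```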

The authors conduct evaluations using DPDK implementation and large-scale simulations, and the results demonstrate that LSCC significantly reduces average FCT by 30–65% under realistic DC loads and 31–56% under inter-DC loads.

4.4. BiCC

BiCC [50] also breaks down the congestion control loop, in this case into a near-source control loop, a near-destination control loop, and an end-to-end control loop. It leverages the DCI switches on both sides to manage congestion at the sender and receiver sides, reducing the control loop to the scale of a single DC. This approach is illustrated in Figure 6.

The workflow of the near-source control loop is composed of three parts. First, the sender-side DCI switch detects congestion within the sender-side DC. Then, the near-source feedback (e.g., a Congestion Notification Packet) is generated and sent back to the sender. Lastly, the sender adjusts its sending rate based on the feedback to alleviate congestion promptly. The end-to-end control loop follows the same three steps of detection, feedback generation, and rate adjustment, but it applies to the receiver-side DC. Specifically, the receiver-side DCI switch detects congestion within the receiver-side DC and sends feedback to the sender, with the sending rate being adjusted by combining this feedback with the near-source feedback.

The near-destination control loop cooperates with the near-source control loop and end-to-end control loop, and it mainly focuses on alleviating congestion within the receiver-side DC and solving the HoL blocking issue. Specifically, the receiver-side DCI switch uses Virtual Output Queues (VOQs) to manage traffic and prevent HoL blocking. It dynamically allocates VOQs and schedules packet forwarding based on congestion information, ensuring smooth traffic flow.

The authors implement BiCC on commodity P4-based switches and evaluate its performance through testbed experiments and NS3 simulations, showing significant improvements in congestion avoidance and FCTs. Specifically, BiCC reduces the average FCT for intra-DC and inter-DC traffic by up to 53% and 51%, respectively. Additionally, since BiCC is designed to work as a building block with existing host-driven congestion control methods, it is well compatible with existing infrastructure.

4.5. LoWAR

Unlike the works discussed above, LoWAR [51] is designed for lossy networks and focuses on addressing the performance challenges of RDMA in lossy WANs. LoWAR inserts a Forward Error Correction (FEC) shim layer into the RDMA protocol stack. This layer contains both TX and RX paths with encoding and decoding controllers.

Figure 7 describes the design diagram of LoWAR. The sender encodes data packets into coding blocks and generates repair packets. When packets are sent, a repair header extension is used to negotiate FEC parameters between the sender and receiver. On the receiving side, the RX path decodes the incoming data packets and uses the repair packets to recover lost data. The decoding controller distinguishes between data and repair packets and stores the data packets in a decoding buffer. If packets are out of order, they are held in a reorder buffer. Once the bitmap confirms that the repair is complete, the packets are delivered to the upper RDMA stack in the correct order, ensuring reliable data transmission even in the presence of packet loss.
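The repair-packet idea can be illustrated with the simplest possible erasure code, a single XOR parity packet per coding block. The erasure code that LoWAR actually uses is not specified here, so the sketch below is only an assumption-laden illustration of the encode, lose, and recover cycle; all function names are hypothetical, and all data packets in a block are assumed to have equal length.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length packets."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_repair(block: list[bytes]) -> bytes:
    """Repair packet: XOR of all data packets in the coding block."""
    return reduce(xor_bytes, block)


def recover(received: dict[int, bytes], repair: bytes, block_size: int) -> list[bytes]:
    """Rebuild the block in order from the packets that arrived.

    received maps the position inside the block to the packet payload.
    A single parity packet can repair at most one missing data packet.
    """
    missing = [i for i in range(block_size) if i not in received]
    if len(missing) > 1:
        raise ValueError("single-parity code cannot repair more than one loss")
    if missing:
        received = dict(received)  # do not mutate the caller's dictionary
        # XOR of the repair packet with every received packet yields the lost one.
        received[missing[0]] = reduce(xor_bytes, received.values(), repair)
    return [received[i] for i in range(block_size)]


if __name__ == "__main__":
    block = [b"pkt0", b"pkt1", b"pkt2", b"pkt3"]
    repair = make_repair(block)
    arrived = {0: block[0], 1: block[1], 3: block[3]}  # packet 2 was dropped
    print(recover(arrived, repair, block_size=4))
```

With one repair packet per block, two losses in the same block are unrecoverable, which is why bursty losses force a higher repair-packet rate and therefore more bandwidth overhead.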

The authors implement a LoWAR prototype on FPGAs and set up the experiment in a lossy 10 GE WAN environment with RTTs of 40 ms and 80 ms and packet loss rates ranging from 0.001% to 0.1%. The results show that LoWAR significantly improves RDMA throughput. In the 40 ms RTT scenario with a 0.001~0.01% loss rate, the throughput is 2.05~5.01 times that of CX-5. With an 80 ms RTT, the improvement is even more pronounced, reaching 11.55~19.07 times.

However, the transmission frequency of repair packets, determined by real-time feedback such as the RTT and packet loss rate, cannot withstand bursty continuous packet losses. To address such bursts, the transmission frequency of repair packets must be increased, resulting in significant additional bandwidth overhead. Concurrently, LoWAR’s packet loss detection relies on bitmaps, and the generation of repair packets and loss packet recovery depends on the computing and storage resources of the hardware. In high-bandwidth scenarios, the resource consumption is exceedingly high. Thus, LoWAR is better suited for environments experiencing significant packet loss and limited bandwidth.

4.6. Others

In addition to the aforementioned optimization schemes for RDMA in inter-DC scenarios, some other optimization schemes targeting non-RDMA inter-DC data transmission have also been proposed. These methods can provide valuable references for the design of future inter-DC RDMA solutions.

GEMINI [52] is a congestion control algorithm that integrates both ECN and delay signals to handle the distinct characteristics of DCNs and WANs. GEMINI aims to achieve low latency and high throughput by modulating window dynamics and maintaining low buffer occupancy. GEMINI is designed based on the TCP protocol, being developed using the generic congestion control interface of the TCP kernel stack.

Compared to the reactive congestion control mechanism of GEMINI, FlashPass [53] proposes a proactive congestion control mechanism designed specifically for shallow-buffered WANs, aiming to avoid the high packet loss and degraded throughput in shallow-buffered WANs due to large bandwidth-delay product traffic. FlashPass employs a sender-driven emulation process with send time calibration to avoid data packet crashes and incorporates early data transmission and over-provisioning with selective dropping to efficiently manage credit allocation.

GTCP [54] introduces a hybrid congestion control mechanism to address the challenges in inter-DC networks by switching between sender-based and receiver-driven modes based on congestion detection. When it detects congestion within a DC, the inter-DC flow switches to the receiver-driven mode to avoid the impact on intra-DC flow, and inter-DC flow periodically probes the available bandwidth to switch back to sender-based mode when there is spare bandwidth, avoiding bandwidth wastage. This allows it to balance low latency for intra-DC flows and high throughput for inter-DC flows. Also, for intra-DC flows, GTCP uses a pausing mechanism to prevent queue build-up, ensuring low latency.

IDCC [55] is a delay-based congestion control scheme that uses delay measurements to handle congestion in both intra-DC and inter-DC networks, which also adopts a hybrid congestion control mechanism. IDCC leverages In-band Network Telemetry (INT) to measure intra-DC queuing delay and uses RTT to monitor WAN delay. By leveraging queue depth information from INT, IDCC dynamically adjusts inter-DC traffic behavior to minimize latency for intra-DC traffic. When INT is unavailable, RTT signals are used to adapt the congestion window, ensuring efficient utilization of WAN bandwidth. To enhance stability and convergence speed, IDCC employs a Proportional Integral Derivative (PID) controller for window adjustment. Furthermore, IDCC incorporates an active control mechanism to optimize the performance of short flows within the DC. It introduces a weight function based on sent bytes, prioritizing short flows during congestion window adjustments. This approach reduces the completion time of short flows while maintaining overall network efficiency.
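A PID-driven window update of the kind described above can be sketched in a few lines. The controller below is a generic textbook PID loop applied to a delay error; the gains, the normalization by the target delay, and the multiplicative window update are illustrative assumptions rather than IDCC’s actual parameters or formula.

```python
class PidWindowController:
    """Generic PID loop that steers a congestion window toward a target delay.

    All gains and the update rule are assumed values for illustration.
    """

    def __init__(self, target_delay_us: float, kp: float = 0.5,
                 ki: float = 0.1, kd: float = 0.2, min_cwnd: float = 1.0):
        self.target = target_delay_us
        self.kp, self.ki, self.kd = kp, ki, kd
        self.min_cwnd = min_cwnd
        self.integral = 0.0    # accumulated error (integral term)
        self.prev_error = 0.0  # last error (for the derivative term)

    def update(self, cwnd: float, measured_delay_us: float) -> float:
        """Return the new window given the latest delay measurement."""
        # Positive error: delay is below target, so the window may grow.
        error = (self.target - measured_delay_us) / self.target
        self.integral += error
        derivative = error - self.prev_error
        self.prev_error = error
        delta = self.kp * error + self.ki * self.integral + self.kd * derivative
        return max(self.min_cwnd, cwnd * (1 + delta))


if __name__ == "__main__":
    ctrl = PidWindowController(target_delay_us=100.0)
    cwnd = 10.0
    for delay in (50.0, 80.0, 120.0, 200.0, 100.0):
        cwnd = ctrl.update(cwnd, delay)
        print(f"delay {delay:6.1f} us -> cwnd {cwnd:.2f}")
```

The derivative term reacts to how quickly the delay is changing, which is what helps damp the oscillations that purely proportional adjustments tend to produce over long feedback loops.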

Similar to LSCC and BiCC, Annulus [56] employs a separate control loop to achieve better congestion control in inter-DC scenarios. Because WAN and DCN traffic have completely different characteristics and requirements, Annulus uses one loop to handle bottlenecks with only one type of traffic (WAN or DCN), while the other loop handles bottlenecks shared between WAN and DCN traffic near the traffic source, using direct feedback from the bottleneck. The near-source control loop relies on direct feedback from switches to quickly adjust the sending rate, reducing the feedback delay for WAN traffic and improving overall performance.

As illustrated in Table 1, existing optimization approaches for non-RDMA inter-DC data transmission primarily focus on congestion control, encompassing all three categories of congestion control methods: reactive, proactive, and hybrid.

4.7. Comparison

In this section, several recent RDMA optimization designs for inter-DC scenarios are analyzed and compared, together with representative optimization schemes for non-RDMA-based inter-DC data transmission. We mainly compare these schemes in terms of optimization method, network reliability, and implementation location. It is hoped that these analyses will provide valuable references for the design of future inter-DC RDMA solutions.

From an optimization perspective, most research efforts focus on congestion control as their primary domain of study. Congestion control algorithms are classified into three categories, including proactive congestion control, reactive congestion control, and hybrid congestion control [

57]. Proactive congestion control aims to prevent congestion before it occurs by managing link bandwidth allocation in advance; reactive congestion control adjusts the sending rate in response to congestion signals (e.g., packet loss, RTT, switch queue length, and ECN); hybrid congestion control combines the above two, expecting to integrate the advantages of both proactive and reactive congestion control. As clearly illustrated in

Table 2, most inter-DC RDMA optimization schemes use reactive [

19,

23] and hybrid [

49,

50] congestion control methods, while non-RDMA-specific congestion control schemes encompass reactive [

52], proactive [

53], and hybrid approaches [

54,

55,

56]. Additionally, the congestion control schemes typically employ two types of congestion control loops: those using a single end-to-end congestion control loop [

19,

23,

52] and those using multiple congestion control loops [

49,

50,

54,

55,

56]. Multiple control loops are typically composed of near-end control loops and end-to-end control loops. Near-end control loops can effectively supplement the slow response of end-to-end control loops. Both RDMA-specific and non-RDMA-specific congestion control optimization methods utilize these two types of congestion control loops. Schemes based on a single end-to-end congestion control loop require less hardware modifications during actual implementation, thereby ensuring better compatibility with existing infrastructure. Solutions designed with multiple control loops evidently provide better performance, as they can instantly detect congestion and quickly relay feedback to the source, allowing for timely rate adjustments; therefore, these methods tend to perform better as the distance of the DCI link increases. In addition to the above optimization methods for congestion control, there are also proactive error correction methods [

51] specifically designed for WANs with relatively low bandwidth and high packet loss rates. These methods use FEC to generate repair packets proactively, so that lost packets can be recovered at the receiver instead of being handled passively after the loss has been detected.
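The idea of proactive repair packets can be illustrated with the simplest possible code, a single XOR parity packet per group. This is only a minimal sketch under stated assumptions: the cited scheme's actual coding method is not described here, and the function names (`make_repair`, `recover`) and the group size are hypothetical.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_repair(packets: list[bytes]) -> bytes:
    """Build one XOR repair packet covering a group of equal-length packets."""
    return reduce(xor_bytes, packets)


def recover(received: dict[int, bytes], repair: bytes, group_size: int) -> dict[int, bytes]:
    """Rebuild at most one missing packet of the group from the repair packet."""
    missing = [i for i in range(group_size) if i not in received]
    if len(missing) != 1:
        # Nothing was lost, or more was lost than a single parity can repair.
        return dict(received)
    rebuilt = reduce(xor_bytes, received.values(), repair)
    return {**received, missing[0]: rebuilt}


# Illustrative payloads (assumption): four equal-length packets, packet 2 is lost.
group = [b"pkt0", b"pkt1", b"pkt2", b"pkt3"]
repair = make_repair(group)
arrived = {i: p for i, p in enumerate(group) if i != 2}
restored = recover(arrived, repair, len(group))
```

A single parity packet repairs only one loss per group; tolerating more losses requires a stronger code, at the cost of extra bandwidth for the repair packets.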

Table 3 presents a comparison of existing works in terms of network reliability requirements, where RDMA-specific solutions such as SWING [

19], BiFrost [

23], LSCC [

49], and BiCC [

50] are primarily designed for lossless networks. These solutions often incorporate reliability techniques like optimization based on PFC (for SWING, BiFrost, and LSCC) or collaboration with host-driven congestion control (for BiCC), yet they rarely include optimizations for packet retransmission mechanisms in lossy environments. Only one solution, LoWAR [

51], considers RDMA transmission in a lossy WAN environment, adopting proactive error correction to recover lost packets. In contrast, non-RDMA solutions do not restrict themselves to specific network reliability types. For instance, GEMINI [

52] and GTCP [

54] work under lossy environments and rely on TCP reliability mechanisms. FlashPass [

53] uses tail loss probing with credit-scheduled retransmission. However, solutions like IDCC [

55] and Annulus [

56] have no special optimizations for reliability, basically reusing the native reliability mechanisms of the transmission protocols they employ. Despite these varied approaches, there is a notable lack of discussion on handling packet loss in cross-DC scenarios across all these schemes, leaving a gap in addressing reliability challenges specific to such environments.

With regard to implementation methods and locations, RDMA-specific solutions exhibit a variety of implementation strategies. Some require the addition of dedicated devices to existing links [

19]; others necessitate modifications to the hardware logic of egress switches (i.e., DCI switches) [

23] or even require the design of specific hardware functions for DCI switches and NICs located in end hosts [

49,

50,

51]. In contrast, non-RDMA-specific solutions employ almost entirely software-based implementations. They are generally developed either based on the universal congestion control interface defined in the Linux kernel protocol stack [

52,

54,

55,

56] or by developing a dedicated module and installing it into the protocol stack [

53]. As can be seen in

Table 4, non-RDMA-specific solutions are much simpler to deploy than RDMA-specific solutions. However, RDMA-specific approaches, through redesigning or modifying existing hardware, introduce specialized hardware that better aligns with their methods, enabling them to achieve higher performance.

As summarized in

Table 5, RDMA-specific solutions primarily rely on reactive and hybrid congestion control methods, each tailored to improve response speed. Some solutions introduce dedicated devices to enhance feedback efficiency, while others use measurement techniques to monitor real-time link conditions, improving feedback accuracy. A few approaches break the congestion control loop by using near-end feedback to accelerate rate adjustments. These solutions are typically designed for lossless networks, often as enhancements to PFC-based schemes. Additionally, they frequently involve redesigning or modifying hardware processing logic to align with their proposed algorithms, resulting in superior performance. In contrast, non-RDMA-specific solutions employ a wider range of congestion control methods, including reactive, proactive, and hybrid approaches. They do not require high network reliability (i.e., lossless networks) and are generally compatible with lossy networks. Furthermore, their implementations are primarily software-based, making them simpler and more flexible.

5. Future Directions

From the analysis and comparison of existing works in the previous section, we can identify several promising research directions for inter-DC RDMA solutions. For instance, since current inter-DC RDMA solutions rely on reactive or hybrid congestion control, future research could investigate more varied approaches, such as proactive congestion control or new hybrid designs that exploit its benefits. Additionally, the majority of existing inter-DC RDMA solutions are designed for lossless networks, with little exploration into optimizing packet retransmission mechanisms for lossy networks. Moreover, current inter-DC RDMA solutions do not consider load-balancing optimization methods based on multipath routing. As the scale of multi-DC interconnection increases, with hundreds or thousands of computing nodes and DCs collaborating, this will become an important research direction. Furthermore, it is difficult for current switch devices to support novel congestion control and packet retransmission methods designed for inter-DC RDMA; therefore, it is necessary to design enhanced switch devices capable of supporting high-performance inter-DC RDMA solutions.

5.1. Congestion Control Mechanisms

Proactive congestion control methods often allocate bandwidth in advance by sensing and predicting the current network congestion state before transmission begins. Additionally, some of them maintain flow tables to track the status of data flows and use this information to make real-time adjustments to prevent congestion. Since proactive congestion control methods manage congestion before it occurs, they can respond more quickly to potential issues, especially in inter-DC scenarios where the distance between the receiver and sender is long. Hybrid congestion control methods try to combine the advantages of both reactive and proactive congestion control mechanisms, switching between them based on the current network state and the traffic characteristics of the application scenario. Therefore, researching hybrid congestion control methods for inter-DC RDMA is a promising direction. By incorporating proactive congestion control mechanisms, future inter-DC RDMA solutions can fully leverage their advantages in long-distance scenarios, achieving better performance.
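To make the notion of allocating bandwidth in advance concrete, the following sketch splits a link's capacity among flows before they start sending, using max-min fairness. It is an illustration under stated assumptions rather than the method of any surveyed scheme: the allocation policy, the function name `max_min_allocate`, and the demand figures are all hypothetical.

```python
def max_min_allocate(capacity: float, demands: dict[str, float]) -> dict[str, float]:
    """Split link capacity among flows before they send (max-min fairness)."""
    allocation: dict[str, float] = {}
    remaining = capacity
    pending = dict(demands)
    while pending:
        share = remaining / len(pending)
        # Flows asking for no more than the equal share are fully satisfied.
        satisfied = {f: d for f, d in pending.items() if d <= share}
        if not satisfied:
            # Every remaining flow wants more than the share: split what is left equally.
            for f in pending:
                allocation[f] = share
            break
        for f, d in satisfied.items():
            allocation[f] = d
            remaining -= d
            del pending[f]
    return allocation


# Hypothetical demands in Gbps on a 100 Gbps DCI link.
rates = max_min_allocate(100.0, {"a": 10.0, "b": 40.0, "c": 80.0})
print(rates)
```

Because every flow receives its rate before sending, the sum of the allocated rates never exceeds the link capacity, which is the property that lets a proactive scheme avoid queue build-up instead of reacting to it.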

5.2. Packet Retransmission

Based on our previous analysis, there is currently a lack of research on packet retransmission optimization for inter-DC RDMA transmission scenarios. Yet the GBN packet retransmission mechanism in RDMA not only severely delays FCT but also results in extremely low bandwidth utilization. This is why RDMA relies on the reliability of lossless networks when used within DCs, as its performance degrades significantly when packet loss occurs. When the application scenario extends to inter-DC, the longer packet loss feedback loop caused by long-distance interconnection further exacerbates the impact of packet loss on performance. Consequently, a more efficient packet retransmission mechanism for inter-DC RDMA is an important research direction for the future.
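A rough calculation shows why the feedback loop matters. The sketch below estimates the RTT and the bandwidth-delay product (BDP) of a link, assuming a propagation delay of about 5 µs per km of fiber and ignoring switching and queuing delay; the distances and the 100 Gbps rate are illustrative assumptions.

```python
FIBER_DELAY_US_PER_KM = 5.0  # assumption: roughly 5 microseconds per km of fiber


def rtt_seconds(distance_km: float) -> float:
    """Round-trip propagation delay over a fiber link of the given length."""
    return 2 * distance_km * FIBER_DELAY_US_PER_KM * 1e-6


def bdp_bytes(bandwidth_gbps: float, distance_km: float) -> float:
    """Bandwidth-delay product: bytes in flight when the link is kept full."""
    return bandwidth_gbps * 1e9 / 8 * rtt_seconds(distance_km)


# Illustrative distances: inside a DC, metro-scale, and long-haul.
for km in (0.1, 100, 1000):
    print(f"{km:>6} km: RTT = {rtt_seconds(km) * 1e3:.3f} ms, "
          f"BDP at 100 Gbps = {bdp_bytes(100, km) / 1e6:.3f} MB")
```

Under these assumptions, a 1000 km link at 100 Gbps carries on the order of 125 MB in flight, so a single loss detected one RTT later can force a GBN sender to resend a comparable amount of data.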

Currently, the commonly used packet retransmission schemes in intra-DC scenarios are generally divided into two types: GBN and Selective Repeat (SR). The primary advantage of the GBN scheme is its simplicity, as the logic for packet loss detection and handling is straightforward and does not rely on specialized network equipment. However, GBN results in a significant amount of redundant data transmission, leading to low bandwidth utilization and increased latency. In contrast, the SR scheme avoids unnecessary transmissions by retransmitting only the lost packets, thereby making more efficient use of bandwidth. However, the implementation of this scheme is more complex and resource-intensive due to the requirement for multiple timers and buffers to manage out-of-order packets.
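The bandwidth difference between the two schemes can be sketched by counting transmissions in a deliberately simplified model: packets are sent window by window, each packet in `lost_once` is dropped only on its first transmission, and acknowledgements are never lost. The function names and the example parameters are hypothetical.

```python
def gbn_transmissions(n: int, window: int, lost_once: set[int]) -> int:
    """Count packets sent by Go-Back-N: resend from the first gap onward."""
    pending_loss = set(lost_once)
    sent = 0
    base = 0
    while base < n:
        end = min(base + window, n)
        sent += end - base
        dropped = [s for s in range(base, end) if s in pending_loss]
        # Each packet is dropped only on its first transmission in this model.
        pending_loss -= set(dropped)
        if dropped:
            # The receiver discards everything after the first gap.
            base = dropped[0]
        else:
            base = end
    return sent


def sr_transmissions(n: int, lost_once: set[int]) -> int:
    """Count packets sent by Selective Repeat: resend only what was lost."""
    return n + len(lost_once & set(range(n)))


# Illustrative case: 10 packets, window of 5, packet 2 dropped once.
print(gbn_transmissions(10, 5, {2}), sr_transmissions(10, {2}))
```

In this model the GBN overhead grows with the window size, which in turn must grow with the BDP to keep a long link full; SR avoids the overhead but needs the receiver to buffer out-of-order packets.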

Regardless of which retransmission mechanism is used, when it is applied to long-distance inter-DC connections, packet loss detection and retransmission feedback are significantly delayed, leading to notable performance degradation. Therefore, in long-distance interconnection scenarios, it is advisable to consider an in-path or a near-end packet loss detection and retransmission mechanism. An in-path mechanism relies on intermediate nodes along the transmission path to detect and retransmit lost packets rather than relying solely on end-to-end detection and retransmission. However, this approach requires all intermediate nodes in the network to possess packet loss detection and retransmission capabilities, which poses compatibility challenges with existing infrastructure. In contrast, a near-end mechanism requires only the deployment of specialized network devices at the DC egress to run the designed packet loss detection and retransmission methods. Therefore, inter-DC RDMA solutions for lossy networks based on near-end packet loss detection and retransmission could be a highly significant research direction.

5.3. Network Device (Switch and NIC)

The existing egress devices for DCs are generally traditional switch devices, which excel at packet switching but lack support for novel inter-DC RDMA congestion control and packet retransmission mechanisms. Inter-DC RDMA often requires these DCI switches to run corresponding functions to better assist its congestion control algorithms and packet retransmission mechanisms, thereby offering superior data transmission performance. For instance, congestion notification can be performed in advance to significantly shorten congestion feedback time, prompting the sender to quickly converge to a reasonable sending rate. Alternatively, the state of each flow, including congestion level and the number of in-flight packets, can be detected and recorded, and the flow state can be used to calculate and notify the sender for appropriate rate adjustment. Similarly, commercial NICs often struggle to meet the precise rate calculation and rate-limiting functions required by new congestion control algorithms, and they cannot support the functions required by new retransmission mechanisms either, such as the packet reordering function needed by selective retransmission schemes.
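The per-flow state tracking described above can be sketched as a small table that counts in-flight packets and derives a rate hint for the sender. This is a hypothetical illustration, not the design of any existing switch: the class names, the equal-share rate rule, and the way data packets and acknowledgements are observed are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class FlowState:
    in_flight: int = 0  # data packets forwarded but not yet acknowledged


class FlowTable:
    """Hypothetical per-flow state kept by a DCI switch."""

    def __init__(self, link_capacity_gbps: float) -> None:
        self.link_capacity_gbps = link_capacity_gbps
        self.flows: dict[str, FlowState] = {}

    def on_data(self, flow_id: str) -> None:
        """Record a data packet forwarded toward the remote DC."""
        self.flows.setdefault(flow_id, FlowState()).in_flight += 1

    def on_ack(self, flow_id: str) -> None:
        """Record an acknowledgement seen on the return path."""
        state = self.flows.get(flow_id)
        if state is not None and state.in_flight > 0:
            state.in_flight -= 1

    def suggested_rate_gbps(self) -> float:
        """Equal share of the link among flows that still have packets in flight."""
        active = sum(1 for s in self.flows.values() if s.in_flight > 0)
        return self.link_capacity_gbps / max(active, 1)


table = FlowTable(link_capacity_gbps=100.0)
for _ in range(3):
    table.on_data("flow-a")
table.on_data("flow-b")
rate_shared = table.suggested_rate_gbps()  # two flows have packets in flight
table.on_ack("flow-b")
rate_alone = table.suggested_rate_gbps()   # only flow-a still has packets in flight
```

Keeping such state at line rate for many concurrent flows is precisely what traditional switches are not built for, which is why dedicated hardware support is discussed here.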

In summary, due to the limitations of existing network devices (i.e., traditional switches and NICs) in leveraging the advantages of new congestion control and packet retransmission methods for inter-DC RDMA, there is a clear need to design new network devices that offer these essential capabilities.

5.4. Multipath and Load Balance

Existing optimizations on load balancing for intra-DC RDMA schemes are generally designed based on network links. In the inter-DC RDMA application scenarios, there is typically one dedicated optical link between two DCs. Consequently, traditional multipath concepts do not apply to inter-DC RDMA. However, current optical transmission systems leverage spatial division multiplexing and wavelength division multiplexing to enhance transmission capacity. Therefore, when designing multipath schemes for inter-DC RDMA in the future, wavelengths and fiber cores can be considered as the rerouting granularities for multipath transmission. Additionally, as the scale of multi-DC interconnection networks increases, such as in the case of the C2NET, rerouting and replanning across DCs will be necessary. For instance, when a certain DC’s computing tasks are overloaded, redistribution will be required, and load balancing can be performed based on the current status of each DC during task planning. Therefore, inter-DC multipath RDMA and its load-balancing solutions must not only adapt existing intra-DC schemes to inter-DC environments but also drive innovation in new interdisciplinary research areas.
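As a minimal sketch of treating wavelengths as the load-balancing granularity, the code below greedily places each flow on the currently least-loaded wavelength, largest flows first. The policy, the function name `assign_to_wavelengths`, and the demand values are assumptions made for illustration; real optical systems impose constraints that are ignored here.

```python
def assign_to_wavelengths(flow_demands: dict[str, float], num_wavelengths: int) -> dict[str, int]:
    """Greedily map each flow to the least-loaded wavelength, largest flows first."""
    load = [0.0] * num_wavelengths
    assignment: dict[str, int] = {}
    ordered = sorted(flow_demands.items(), key=lambda kv: kv[1], reverse=True)
    for flow, demand in ordered:
        # Pick the wavelength with the smallest accumulated demand (ties: lowest index).
        lane = min(range(num_wavelengths), key=load.__getitem__)
        assignment[flow] = lane
        load[lane] += demand
    return assignment


# Hypothetical flow demands in Gbps, spread over two wavelengths.
placement = assign_to_wavelengths({"f1": 40.0, "f2": 30.0, "f3": 20.0, "f4": 10.0}, 2)
print(placement)
```

The same greedy structure would apply if fiber cores, or wavelength and core pairs, were used as the unit of placement instead.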

5.5. New Protocols

Currently, to meet the requirements of large-scale deployment of intra-DC services, such as AI/LLM training and HPC applications, major technology companies have designed and developed their own transport protocols (e.g., FALCON, SRD, and TTPoE). Based on these self-developed transport protocols, they have implemented and optimized RDMA, effectively enhancing its scalability, congestion control effectiveness, and other performance aspects in the intra-DC scenario. However, these solutions have not yet been adapted for long-range DCI scenarios. In the future, inter-DC-oriented features, such as tolerance for high latency and packet loss, could be integrated into these protocols. Alternatively, entirely new protocols could be developed to meet the specific demands of long-haul transmission.

6. Conclusions

This article explores the challenges, status, and future directions of inter-DC RDMA, a critical field driven by the growing number of cross-DC applications, such as large-scale LLM training. Among the critical challenges are the long RTT and large BDP that impair intra-DC congestion control, buffer pressure, and inefficient GBN retransmission in lossy networks. Notable advancements have been made through solutions like SWING (PFC-relay mechanism), Bifrost (downstream-driven predictive control), and LSCC (segmented feedback loops), which enhance performance by reducing buffer requirements or accelerating congestion response. However, these approaches often come with trade-offs in hardware dependency, deployment complexity, or bandwidth overhead, and they notably lack considerations for packet loss recovery and load balancing.

Future research should focus on sophisticated congestion control, efficient packet retransmission, specialized switches/NICs with inter-DC RDMA support mechanisms, and multipath optimization via optical multiplexing for large-scale DCI networks. As the first review article in this emerging domain, this work can serve as a foundational reference, guiding research toward robust, scalable inter-DC RDMA for future cross-DC applications.