Abstract

During the COVID-19 pandemic, social media platforms emerged as both vital information sources and conduits for the rapid spread of propaganda and misinformation. However, existing studies often rely on single-label classification, lack contextual sensitivity, or use models that struggle to capture nuanced propaganda cues across multiple categories. These limitations hinder the development of robust, generalizable detection systems in dynamic online environments. In this study, we propose a novel deep learning (DL) framework grounded in fine-tuning the RoBERTa model for a multi-label, multi-class (ML-MC) classification task. We selected RoBERTa for its strong contextual representation capabilities and demonstrated superiority in complex NLP tasks. Our approach is rigorously benchmarked against traditional and neural methods, including TF-IDF with n-grams, Conditional Random Fields (CRFs), and long short-term memory (LSTM) networks. While LSTM models show strong performance in capturing sequential patterns, our RoBERTa-based model achieves the highest overall accuracy (88%), outperforming state-of-the-art baselines. Framed within the diffusion of innovations theory, the proposed model offers clear relative advantages, including accuracy, scalability, and contextual adaptability, that support its early adoption by Information Systems researchers and practitioners. This study not only contributes a high-performing detection model but also delivers methodological and theoretical insights for combating propaganda in digital discourse, enhancing resilience in online information ecosystems.

1. Introduction

The proliferation of fake news, propaganda, and misinformation on social media has emerged as a significant societal challenge over the past decade [1]. This issue impacts critical domains such as broadcasting, the economy, politics, healthcare, societal stability, and climate change [2]. Propaganda, in particular, represents a strategic effort to influence individuals or groups by shaping their beliefs or actions to achieve specific objectives [3]. This differs from disinformation, which involves the intentional spread of false information, and misinformation, which refers to unintentional inaccuracies [4]. The COVID-19 pandemic highlighted the urgency of addressing these challenges, with a surge in propaganda and misinformation circulating in public social media discourse [5].

Detecting propaganda is an inherently complex natural language processing (NLP) task. Existing deep learning (DL)-based approaches have made significant progress, employing methods such as sentence-level classification for news articles, tweets, and public discussions [6,7,8]. However, the professional and convincing nature of propaganda posts presents ongoing challenges. Many current DL models struggle to accurately detect and interpret sophisticated propaganda designed to appear credible and persuasive [9].

Given these limitations, this research explores the use of fine-tuned DL models to address gaps in existing methods. Additionally, our study introduces a theoretical framework based on the diffusion of innovations theory [10] to enhance the adoption and application of these advanced detection models within the Information Systems (IS) community. By leveraging this framework, we aim to facilitate the dissemination of robust DL techniques for detecting propaganda, thus strengthening societal efforts to combat misinformation on social media.

1.1. Motivation of the Study

The importance of propaganda detection lies in its potential to mitigate the spread of misinformation and its associated societal harm. Despite its critical role, existing DL-based methods, including Bidirectional Encoder Representations from Transformers (BERT), Convolutional Neural Networks (CNNs), and Recurrent Neural Networks (RNNs), exhibit limitations [11,12]. These approaches often focus on local word associations and sequential patterns, failing to capture long-range and non-adjacent word dependencies critical for identifying nuanced propaganda techniques. Moreover, while fine-tuned models have demonstrated success in multi-label, multi-class (ML-MC) text classification tasks, their application to propaganda detection remains underexplored [13].

Existing methods also face challenges in detecting specific propaganda techniques such as logical fallacies, sowing doubt, and repetition [14,15,16]. These limitations are exacerbated by the weak annotations common in datasets like those derived from social media platforms. To address these issues, we propose a novel DL approach tailored to detect fine-grained propaganda techniques using advanced model architectures like RoBERTa, a robustly optimized BERT pre-training method. This model demonstrates state-of-the-art performance in ML-MC tasks, making it a promising candidate for improving propaganda detection.

The motivation for this study is further reinforced by the challenges posed during the COVID-19 pandemic. The rapid surge of information, coupled with misinformation and propaganda about the virus, created an urgent need for advanced detection methods to ensure accurate and timely information dissemination. By focusing on a curated dataset of COVID-19-related discussions, annotated with six distinct propaganda techniques and themes, our research addresses gaps in existing models and datasets.

Additionally, previous studies have highlighted the limitations of annotated datasets, such as the Propaganda Text Corpus (PTC) (https://alt.qcri.org/semeval2020/ (accessed on 2 February 2025)), which may not fully capture real-world propaganda techniques [11]. By creating a comprehensive and representative dataset and leveraging advanced DL models, this research aims to overcome these challenges. Our work also aligns with the diffusion of innovations theory, providing a pathway for integrating and scaling these advanced methodologies within the broader IS community. This theoretical underpinning is intended to ensure that the proposed methods are not only effective but also widely adopted to counteract the pervasive threat of propaganda on social media.

1.2. Research Questions and Objectives

This study aims to enhance the detection and classification of propaganda in COVID-19-related social media data, addressing critical gaps in existing methodologies and leveraging the diffusion of innovations theory to facilitate the adoption of advanced techniques. The research is guided by the following research questions (RQ):

- RQ1: How can fine-tuned DL models, such as RoBERTa, address the limitations of traditional methods like BERT, CNNs, and RNNs in detecting propaganda in COVID-19-related social media data?

- RQ2: What are the unique challenges of multi-label classification in propaganda detection, and how can novel DL-based approaches mitigate these challenges?

- RQ3: How do fine-tuned DL models compare with traditional NLP techniques in terms of accuracy, reliability, and robustness for propaganda detection?

- RQ4: What is the impact of addressing data uncertainty and incorporating augmentation techniques on the performance of ML-MC classification systems in COVID-19-related contexts?

The primary objective of this research is to design, implement, and evaluate fine-tuned DL models for propaganda detection in social media discussions. Specifically, this involves the following:

- Developing a novel ML-MC classification approach leveraging advanced DL architectures such as RoBERTa to improve accuracy and interpretability.

- Addressing challenges in multi-label classification by incorporating innovative data augmentation and optimization techniques.

- Conducting comparative analyses of traditional NLP methods and fine-tuned DL models to highlight the relative advantages of the latter.

- Investigating the effects of data uncertainty and augmentation on classification performance, thereby enhancing model robustness in real-world scenarios.

These objectives align with the principles of diffusion of innovations theory by focusing on developing solutions with demonstrable relative advantage, compatibility with existing methods, and reduced complexity, thus fostering their adoption within the IS community.

1.3. Contributions of the Study

This study makes several significant contributions to the field of propaganda detection and classification. First, it introduces an innovative methodological framework specifically designed for social media text corpora, utilizing fine-tuned RoBERTa models. By addressing the limitations of traditional methods such as long short-term memory (LSTM), CRF, n-gram, and TF-IDF, this study advances the state of the art in detecting nuanced propaganda techniques. Grounded in the diffusion of innovations theory, the research demonstrates how these advanced DL techniques offer relative advantages and compatibility with existing IS frameworks, promoting their broader adoption.

Second, this study develops a comprehensive dataset focused on COVID-19-related discussions. This dataset, curated from the ProText corpus and social media platforms such as Facebook and Twitter, includes rigorous labeling of six distinct propaganda techniques and six COVID-19 themes. The meticulous annotation process establishes a robust foundation for ML-MC classification tasks. By adhering to the principles of trialability and observability emphasized in the diffusion of innovations theory, the dataset enables researchers and practitioners to evaluate and adopt the proposed methodologies effectively.

Third, this study enhances the performance of DL models by optimizing network parameters through data augmentation and an analysis of information uncertainty. These optimizations significantly improve the accuracy and robustness of multi-label classification systems, emphasizing the practical applicability of the research. The insights derived from these advancements hold particular relevance for real-world scenarios, especially for health practitioners and policymakers tasked with monitoring and mitigating the spread of misinformation during crises.

Lastly, this research contributes strategically to the design and implementation of health information systems, particularly in the context of global health crises. By addressing the critical role of IS in combating propaganda and misinformation, this study highlights the importance of integrating advanced DL techniques into public health communication strategies. This alignment with the IS community’s core values of innovation, rigor, and real-world impact ensures that the proposed methodologies have both academic and practical significance, fostering their widespread adoption and utility.

2. Related Work

Propaganda detection has evolved significantly over the years. Earlier approaches largely relied on non-scientific methods for defining propaganda [17]. As research progressed, more sophisticated techniques such as Proppy and character n-grams were introduced, particularly for identifying propaganda in online news articles at the document level [7]. With the rise in social media usage during the COVID-19 pandemic, there was a notable shift towards analyzing user-generated content. Researchers explored the use of social media data for various applications, including predicting public opinion, sentiment analysis, misinformation spread, and public health monitoring [18,19,20].

Recent advances in DL have greatly enhanced propaganda detection capabilities. Studies employing BERT-based models have demonstrated superior performance by integrating multiple token features into hybrid models [12,21,22,23]. Embedding techniques like Word2Vec and FastText have been applied to benchmark CNN, RNN, and BERT models [1]. Notably, this study pioneers a novel method that examines both information features and propaganda themes, offering comprehensive insights into the dynamic interactions within DL models.

The increased use of platforms like Facebook and Twitter during the pandemic further underscores the relevance of social media in effective communication strategies. Research has highlighted the critical role of social media in promoting health policies and raising awareness [24,25,26,27,28,29]. These developments illustrate the rich opportunities and complex challenges in leveraging social media for propaganda detection.

2.1. Propaganda Detection at Document and Sentence Level

Historically, propaganda detection focused primarily on document-level analyses, often labeling entire news articles based on source credibility [17]. Maazallahi, et al. [30] introduced a neural network model with language infusion to distinguish verified versus uncertain tweets, highlighting the linguistic complexity of social media data. Similarly, Proppy and character n-gram models were early attempts to automate propaganda identification in online news [7]. However, relying solely on the source of publication has limitations, as even propagandistic outlets occasionally publish factual content, and credible outlets may occasionally disseminate biased information [11]. This realization spurred a shift toward finer-grained analysis.

Contemporary methods increasingly focus on sentence-level classification. Recent models, such as fine-tuned BERT variants, address these challenges by incorporating context-aware embeddings [23]. Dewantara, et al. [31] linked CNNs with GloVe embeddings to detect propaganda fragments, while Maazallahi, Asadpour, and Bazmi [30] proposed heterogeneous models integrating social media interactions with linguistic features. Moreover, residual bi-directional LSTM architectures combined with ELMo embeddings have demonstrated improvements in capturing contextual dependencies [32]. These innovations significantly advance the granularity and reliability of propaganda detection tasks. However, despite these advances, most current models are constrained by the training dataset's biases and fail to generalize effectively across domains. Furthermore, there is a lack of models that simultaneously exploit syntax, pragmatics, and semantics in a holistic manner, limiting their real-world applicability.

2.2. Transformer-Based Advances in Propaganda Detection

Transformer models like BERT and RoBERTa have revolutionized NLP, including propaganda detection. Recent studies have shown promising results using these models supplemented with engineered features. Da San Martino, et al. [33] demonstrated how transformers capture persistent word connections for nuanced propaganda detection.

Li and Xiao [22] enhanced BERT by integrating BiLSTM architectures and employing a joint loss function, effectively combining syntactic and pragmatic signals at multiple text levels. Paraschiv, et al. [34] incorporated Conditional Random Fields (CRFs) and a customized dense layer atop BERT for token-level propaganda identification. Additionally, hybrid approaches using Logistic Regression classifiers with RoBERTa tokenization have been explored for span identification tasks [35]. These efforts underline the power of transformer-based embeddings in capturing subtle linguistic cues.

However, technical deficiencies persist. Many models are highly dependent on domain-specific fine-tuning and struggle with cross-domain generalization. Moreover, while token-level classification improves granularity, it often sacrifices coherence at the document level.

2.3. Propaganda Detection at Multi-Class Multi-Label Level

As propaganda manifests in multiple techniques simultaneously, multi-label and multi-class learning frameworks have gained traction. Vosoughi, et al. [36] introduced the Multi-view Propaganda (M-Prop) model, employing transformer-based multi-view embeddings for textual data.

Similarly, Fang, et al. [37] proposed MPDES, leveraging emotion analysis and task-specific split-and-share modules. Feng, et al. [38] advanced the field by fine-tuning both textual and multimodal transformers to jointly model text and images.

Despite these innovations, multimodal propaganda detection faces several obstacles. Most models independently encode modalities, limiting complementary feature extraction [39]. Fusion mechanisms often lack coherent reasoning capabilities, hampering the modeling of latent semantic relationships [40]. Additionally, a scarcity of annotated multimodal datasets remains a significant barrier [41].

Currently, the majority of research relies heavily on the SemEval 2021 dataset [42], which constrains the models’ adaptability to varied real-world contexts. Consequently, text-only modalities continue to dominate propaganda detection efforts. A summary of multi-label methods on text classification is provided in Table 1.

Table 1.

Summary of the related studies that applied multi-label methods on text classification (slogan) using deep learning models.

2.4. Neural Approach for Detecting and Classifying Propaganda

Neural models have shown substantial progress in detecting and classifying propaganda. As an illustration, MPDES [37] is a model for detecting multimodal persuasive techniques, which incorporates emotion semantics through emotion analysis and examines detailed task-specific features by employing a split-and-share module. Similarly, Vijayaraghavan and Vosoughi [44] proposed a neural approach for detecting and classifying propaganda tweets across fine-grained categories by integrating multi-view contextual embeddings through pairwise cross-view transformers. Feng, Tang, Liu, Yin, Feng, Sun, and Chen [38] proposed two multimodal approaches: one augments text-based transformers with visual features, while the other fine-tunes multimodal transformers to jointly analyze text and images for propaganda classification.

However, several challenges persist in multi-label multi-class propaganda detection. First, because multimodal propaganda detection belongs to the vision-language domain, the field is limited by weak annotations and a lack of publicly available datasets [44]. Second, current models tend to encode information from different modalities independently, which limits their ability to extract high-order complementary information from the multimodal context [40]. Third, while many approaches focus on feature fusion, they often fail to establish coherent reasoning between modalities, making it difficult to capture latent semantic relationships [41]. Moreover, most existing studies rely exclusively on the SemEval 2021 dataset [42], which further constrains progress in this area. As a result, propaganda detection based solely on the textual modality remains the mainstream approach. Yet few studies critically assess these technical limitations or explain how they affect the overall effectiveness of propaganda detection. A detailed technical analysis of these shortcomings would not only highlight current research gaps but also provide a foundation for articulating the innovative contributions of new approaches.

This study adopts a fine-tuned RoBERTa model that combines contextual feature representation with a classifier for propaganda detection. This approach aims to improve the extraction of complementary information and the modeling of latent semantic relationships, thereby enhancing the overall accuracy and reliability of propaganda detection.

3. Material and Methods

To address the challenges of detecting propaganda in COVID-19-related social media discussions, we developed an innovative ML-MC classification approach, structured into three primary phases. These phases focus on the novel aspects of our methodology, emphasizing the contributions of our proposed model while minimizing the discussion of existing methods.

The first phase involves data collection, pre-processing, and annotation. We curated a comprehensive dataset from public discussions on social media platforms, including Facebook and Twitter. This dataset was manually annotated to ensure high-quality training data for our model. Pre-processing involved removing irrelevant content, such as common stopwords, and applying targeted filters to retain information-rich text regions. This step also included labeling specific propaganda techniques and COVID-19-related themes, as outlined in our proposed taxonomy. The meticulous annotation process, guided by domain experts in NLP, ensured robust and reliable input for subsequent analysis.

In the second phase, we focused on feature representation and DL-based text classification, leveraging advanced techniques to extract meaningful insights from the data. Instead of relying solely on traditional methods such as Term Frequency–Inverse Document Frequency (TF-IDF), we employed modern embedding techniques that integrate contextual word relationships. These embeddings were generated using state-of-the-art pre-trained models such as RoBERTa and BERT, which capture nuanced linguistic patterns essential for accurate propaganda detection. Our approach further incorporated task-specific tuning to enhance the effectiveness of these embeddings in addressing the unique challenges of multi-label classification.

The third phase emphasized the novel ML-MC classification framework and propaganda detection. We developed a multi-layered architecture that integrates embedding generation, task-specific classification layers, and a final softmax-based classifier. This framework was tailored to detect and classify intricate propaganda techniques at the sentence level. Our approach uniquely combines supervised learning for structured labeling with unsupervised learning to capture latent patterns in the data, enhancing both interpretability and predictive performance. The inclusion of advanced models such as RoBERTa and ALBERT, alongside domain-specific optimizations, underscores the originality and rigor of our methodology.

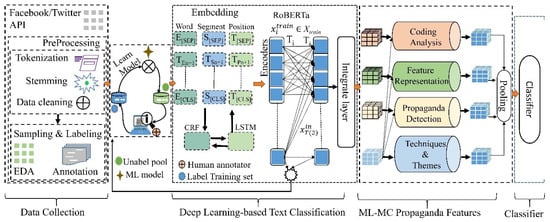

Figure 1 illustrates the complete propaganda detection pipeline, detailing each stage from data collection and pre-processing to classification and final integration. This pipeline represents a cohesive and systematic approach, showcasing the interplay between novel algorithmic designs, advanced embeddings, and robust classification strategies. By focusing on these three phases, our methodology demonstrates its innovative contributions to propaganda detection, emphasizing the integration of cutting-edge DL models, sophisticated data processing, and a well-structured classification framework. This approach directly addresses the limitations of existing methods, offering a significant advancement in the field.

Figure 1.

Propaganda detection pipeline with embedding, softmax, and final classifier layer.

3.1. Data Collection

The ProText dataset was constructed from publicly available social media content, collected manually from Facebook and through the Twitter standard search API. Both collection techniques were applied to social media discussions using specific search phrases combined with ProText [7,16,45]. Posts were selected by popularity, gauged through public engagement metrics such as the number of likes, shares, and discussions (or, for Twitter, popular tweets), spanning January 2020 to 2023.

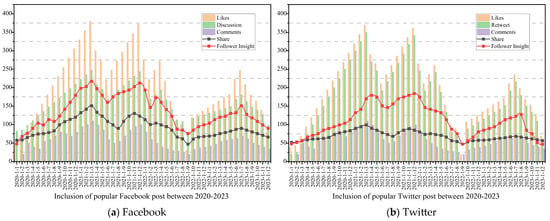

The ProText (https://github.com/Ahmadpir/ProText (accessed on 5 February 2025)) collection was motivated by the SemEval 2019 and 2020 datasets, Qprop [7], and PTC [11], which served as standards for annotating ProText. However, these datasets cover only general themes and topics, which limits their utility for topic-specific classification. In total, ProText comprises 7345 top-ranked Facebook posts and 5125 popular tweets (12,470 instances), which were divided into training, development, and testing sets with a split ratio of 0.7:0.1:0.2, as shown in Table 2. Sampling was stratified by the six propaganda techniques and themes. The training and development sets of the ProText dataset align with those described in previous studies [11]. Popular tweets and Facebook posts from 2020 to 2023 were selected based on likes, follower insights, shares, and discussions, as illustrated in Figure 2.

Table 2.

Propaganda contextual instances of datasets in a variety of circumstances.

Figure 2.

Sample of popular tweets and Facebook posts from 2020 to 2023.
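As an illustration of the stratified 0.7:0.1:0.2 split described above, the following is a minimal Python sketch, not the authors' code. The function name `stratified_split`, the fixed seed, and the use of a single primary label per instance as the stratification key are assumptions; stratifying jointly on all technique and theme labels would require an iterative multi-label stratification method instead.

```python
import random
from collections import defaultdict


def stratified_split(items, labels, ratios=(0.7, 0.1, 0.2), seed=42):
    """Split items into train/dev/test while preserving per-label proportions.

    labels[i] is one stratification key for items[i] (assumed here to be a
    hypothetical "primary technique" label).
    """
    if abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("ratios must sum to 1")
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for item, label in zip(items, labels):
        by_label[label].append(item)

    train, dev, test = [], [], []
    for label in sorted(by_label):
        group = by_label[label]
        rng.shuffle(group)  # random order within each label group
        n_train = round(len(group) * ratios[0])
        n_dev = round(len(group) * ratios[1])
        train += group[:n_train]
        dev += group[n_train:n_train + n_dev]
        test += group[n_train + n_dev:]  # remainder goes to the test split
    return train, dev, test
```

With 50 instances per label, each label contributes 35, 5, and 10 instances to the training, development, and test splits, respectively.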

3.1.1. Data Pre-Processing

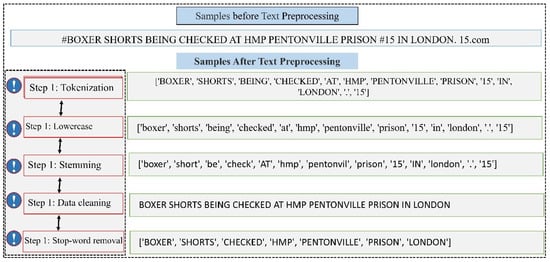

The prevalence of self-established or poorly reviewed news articles on social media often results in a lack of editorial oversight and fact-checking [46], leading to the introduction of typos and dialects that add noise to the data. This underscores the importance of optimizing pre-processing steps for effective propaganda detection. Given the noisy nature of social media text, this stage employs a series of operations including tokenization, data augmentation, stemming, cleaning, and stop-word removal to create structured and reliable inputs for the model. Furthermore, we employed a TF-IDF weighting scheme to identify the model’s features, representing each instance by a vector of term weights. The pre-processing phase encompassed several procedures (see Figure 3) to enhance classification accuracy, including text language and domain identification, dimension reduction, and more [47].

Figure 3.

Sample instances before and after pre-processing.
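The TF-IDF weighting scheme mentioned above can be sketched as follows. This minimal pure-Python example assumes already-tokenized instances, unigram and bigram terms, and the unsmoothed weighting tf × log(N/df); the function names are hypothetical. Library implementations (for example, scikit-learn's `TfidfVectorizer`) default to a smoothed idf and L2-normalized vectors, so their weights differ.

```python
import math
from collections import Counter


def ngrams(tokens, n_max=2):
    """Return all word n-grams of length 1..n_max, joined by spaces."""
    grams = []
    for n in range(1, n_max + 1):
        grams += [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
    return grams


def tfidf(docs, n_max=2):
    """Compute one {term: weight} dict per tokenized document.

    tf  = count of the term in the document / number of terms in the document
    idf = log(N / df), where df is the number of documents containing the term
    """
    grams_per_doc = [ngrams(doc, n_max) for doc in docs]
    df = Counter()
    for grams in grams_per_doc:
        df.update(set(grams))  # count each term once per document
    n_docs = len(docs)
    vectors = []
    for grams in grams_per_doc:
        counts = Counter(grams)
        total = len(grams)
        vectors.append({
            term: (c / total) * math.log(n_docs / df[term])
            for term, c in counts.items()
        })
    return vectors
```

A term that occurs in every instance (for example, "vaccine" in two posts that both mention it) receives zero weight, whereas instance-specific terms and bigrams are up-weighted.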

Step 1: Tokenization. Tokenization, or text segmentation, divides the text into tokens using delimiters such as commas, spaces, and periods to create distinct units for analysis.

Step 2: Data augmentation and lowercasing. During our experiments on the ProText dataset, we encountered an imbalance between non-propaganda and propaganda news data. To address this, we performed data augmentation to increase the size of the propaganda news data. This involved four operations: random insertion (RI), random deletion (RD), synonym substitution (SS), and random swap (RS) [48]. Table 3 presents examples of augmented sentences. The implementation details and parameter configurations are as follows:

Table 3.

Sentence developed utilizing data augmentation methodologies.

- Random Insertion (RI): A synonym of a randomly selected word in the sentence is inserted at a random position. For each sentence, up to two insertions were performed (insertion rate = 0.2).

- Random Deletion (RD): Each word in the sentence was randomly deleted with a probability of 0.1 (deletion rate = 0.1).

- Synonym Substitution (SS): A maximum of two words per sentence were replaced with their synonyms using WordNet-based similarity matching, ensuring semantic coherence (substitution rate = 0.2).

- Random Swap (RS): Two randomly selected words in a sentence were swapped. This was repeated up to two times per sentence (swap rate = 0.2).

Step 3: Stemming. Using NLTK, stemming was performed to reduce words to their root forms, which minimized morphological variations, reduced feature space, and enhanced classification performance [32,49].

Step 4: Data cleaning. The text data were cleaned by removing numbers, non-English letters, special characters (#, $, %, @, &), URL tags, punctuation marks, and other irrelevant data.

Step 5: Stop-word removal. Stop-words, which are common and insignificant words such as prepositions, conjunctions, and pronouns, were removed to improve classification performance.
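Steps 1 to 5 (apart from augmentation) can be combined into a single function. The sketch below is an illustration under assumptions rather than the study's pipeline: it cleans before tokenizing so that punctuation cannot leak into tokens, uses NLTK's `PorterStemmer` when NLTK is installed (the specific stemmer is not stated above), and substitutes a short hypothetical stop-word list for a full one.

```python
import re

try:
    from nltk.stem import PorterStemmer  # Step 3: NLTK stemming
    _stem = PorterStemmer().stem
except ImportError:
    # Fallback so the sketch still runs without NLTK: no stemming is applied.
    def _stem(word):
        return word

# Hypothetical, abbreviated stop-word list (prepositions, conjunctions, pronouns, etc.).
STOP_WORDS = {
    "a", "an", "the", "and", "or", "but", "of", "in", "on", "to", "for",
    "with", "is", "are", "was", "were", "it", "they", "we", "you", "he",
    "she", "this", "that",
}

URL_RE = re.compile(r"https?://\S+|www\.\S+")
NON_LETTER_RE = re.compile(r"[^a-z\s]")  # digits, #, $, %, @, &, punctuation, non-English letters


def preprocess(text):
    """Return cleaned, stop-word-free, stemmed tokens for one post."""
    text = text.lower()                   # lowercasing (Step 2)
    text = URL_RE.sub(" ", text)          # remove URLs (Step 4)
    text = NON_LETTER_RE.sub(" ", text)   # remove remaining non-letter characters (Step 4)
    tokens = text.split()                 # tokenization on whitespace (Step 1)
    tokens = [t for t in tokens if t not in STOP_WORDS]  # stop-word removal (Step 5)
    return [_stem(t) for t in tokens]     # stemming (Step 3)
```

For example, `preprocess("The vaccine is #poison!!! See https://example.com/x 2021")` keeps only three content tokens; the URL, hashtag symbol, punctuation, digits, and stop words are removed before stemming.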

Removing punctuation and special characters during pre-processing can potentially affect model performance, as it may alter the context and sentiment of the text. Special characters and emojis often carry additional meaning or convey emotions critical for understanding the underlying propaganda techniques or sentiments expressed in the text. To address this, we will explore the impact of punctuation and special character removal on model performance. This exploration will involve comparing the model’s performance with and without the removal of punctuation and special characters using appropriate evaluation metrics. Additionally, we will examine whether incorporating techniques such as encoding special characters or representing emojis as tokens can mitigate any negative effects on model performance. Through this analysis, we aim to provide insights into the trade-offs involved in pre-processing techniques for propaganda detection and ensure our approach maximizes the model’s effectiveness in capturing propaganda cues from social media content.
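One way to run the comparison described above is to replace special characters and emojis with placeholder tokens instead of deleting them. In this hypothetical sketch, the placeholder names (for example `<hashtag>`) are illustrative, emojis are detected through the Unicode general category "So" (Symbol, other), and the function would stand in for the character-removal part of Step 4 in the with-symbols condition of the ablation.

```python
import unicodedata

# Hypothetical placeholder tokens for common social-media symbols.
SYMBOL_TOKENS = {
    "#": " <hashtag> ", "@": " <mention> ", "$": " <dollar> ",
    "%": " <percent> ", "&": " <and> ", "!": " <exclaim> ",
}


def encode_symbols(text):
    """Replace special characters and emojis with placeholder tokens instead of deleting them."""
    out = []
    for ch in text:
        if ch in SYMBOL_TOKENS:
            out.append(SYMBOL_TOKENS[ch])
        elif unicodedata.category(ch) == "So":  # 'Symbol, other' covers most emojis
            name = unicodedata.name(ch, "symbol").lower().replace(" ", "_")
            out.append(f" <emoji_{name}> ")
        else:
            out.append(ch)
    return " ".join("".join(out).split())  # collapse repeated whitespace
```

Because the Step 4 cleaning would strip the angle brackets and underscores of these placeholders, the cleaning rules must preserve them in this condition. Emoji variation selectors (U+FE0F) are not in category "So" and therefore pass through unchanged.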

The final model architecture integrates BERT, RoBERTa, LSTM, and CRF components to form a robust multi-label, multi-class (ML-MC) propaganda detection framework. The process is structured as follows:

- Pre-trained Encoders: Both BERT and RoBERTa were employed as contextualized embedding layers. BERT was used to extract base-level semantic embeddings, while RoBERTa, with its more robust pre-training on longer sequences, provided complementary contextual embeddings. Outputs from both models were concatenated to capture diverse contextual representations.

- LSTM Layer: The concatenated embeddings were passed through a bi-directional LSTM layer (hidden size = 256) to capture temporal dependencies and long-range relationships in the sequence.

- CRF Layer: On top of the LSTM outputs, a Conditional Random Field (CRF) layer was used for structured sequence tagging. This layer effectively modeled label dependencies and improved the accuracy of multi-label classification, especially for overlapping propaganda techniques.

- Training and Optimization: The model was fine-tuned using the Adam optimizer (learning rate = 2 × 10⁻⁵, batch size = 16), with early stopping applied to avoid overfitting. The cross-entropy loss for multi-class tagging was combined with the CRF's negative log-likelihood.

This hybrid approach leverages the strengths of both transformer-based language models and sequence-based classifiers, enabling precise detection of nuanced propaganda features across texts, as detailed in Section 3.2.
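To make the CRF layer's role concrete, the sketch below computes the negative log-likelihood used during training (via the forward algorithm) and the Viterbi decoding used at inference, for a single sequence. It is a simplified pure-Python illustration under stated assumptions: the emission scores stand in for the BiLSTM outputs, there are no start or end transition scores, and there is no batching. Library CRF layers perform the same computations over tensors.

```python
import math


def _logsumexp(values):
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def sequence_score(emissions, transitions, tags):
    """Unnormalized score of `tags`: emission scores plus transition scores."""
    score = emissions[0][tags[0]]
    for t in range(1, len(tags)):
        score += transitions[tags[t - 1]][tags[t]] + emissions[t][tags[t]]
    return score


def crf_nll(emissions, transitions, tags):
    """Negative log-likelihood of `tags` under a linear-chain CRF.

    emissions[t][j]: score of tag j at position t (e.g., from the BiLSTM)
    transitions[i][j]: score of moving from tag i to tag j
    """
    n_tags = len(transitions)
    alpha = list(emissions[0])  # log-sum of scores of all prefixes ending in each tag
    for t in range(1, len(emissions)):
        alpha = [
            _logsumexp([alpha[i] + transitions[i][j] for i in range(n_tags)]) + emissions[t][j]
            for j in range(n_tags)
        ]
    log_partition = _logsumexp(alpha)  # log of the sum over all tag sequences
    return log_partition - sequence_score(emissions, transitions, tags)


def viterbi(emissions, transitions):
    """Return the highest-scoring tag sequence under the same CRF."""
    n_tags = len(transitions)
    scores = list(emissions[0])
    backpointers = []
    for t in range(1, len(emissions)):
        best_prev = [max(range(n_tags), key=lambda i: scores[i] + transitions[i][j])
                     for j in range(n_tags)]
        scores = [scores[best_prev[j]] + transitions[best_prev[j]][j] + emissions[t][j]
                  for j in range(n_tags)]
        backpointers.append(best_prev)
    path = [max(range(n_tags), key=lambda j: scores[j])]
    for best_prev in reversed(backpointers):
        path.append(best_prev[path[-1]])  # follow back-pointers to the start
    return path[::-1]
```

The NLL is what the training objective would add to the cross-entropy term; Viterbi is used only at prediction time.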

3.1.2. Data Sampling and Labeling

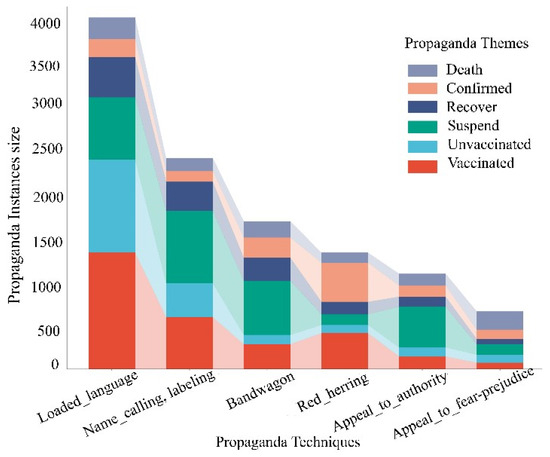

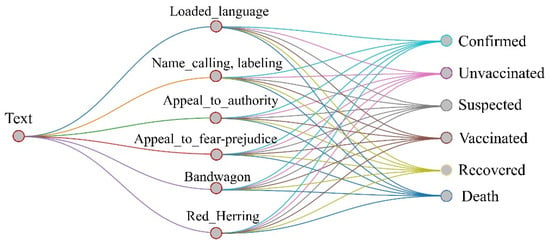

This stage focuses on curating a high-quality labeled dataset, which is essential for training and evaluating the propaganda detection model. During the annotation process, annotators identified example records with preconceived notions [50]. Consequently, pre-processing the annotated COVID-19 dataset was essential to ensure its high quality and suitability for developing innovative models. The propaganda-related data were categorized into six classes (Loaded_language, Name_calling/Labeling, Bandwagon, Red_herring, Appeal_to_authority, and Appeal_to_fear-prejudice) and six themes (suspected, confirmed, recovered, deceased, vaccinated, and unvaccinated), as illustrated in Figure 4.

Figure 4.

Sample propaganda data for the six classes and themes.
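Because each instance can carry several techniques and themes at once, the ML-MC targets can be represented as multi-hot vectors. The sketch below is illustrative only: it assumes that Name_calling and Labeling form a single combined class (giving six techniques), and the label strings and function names are hypothetical.

```python
TECHNIQUES = [
    "Loaded_language", "Name_calling/Labeling", "Bandwagon",
    "Red_herring", "Appeal_to_authority", "Appeal_to_fear-prejudice",
]
THEMES = ["suspected", "confirmed", "recovered", "deceased", "vaccinated", "unvaccinated"]


def multi_hot(labels, vocabulary):
    """Return a 0/1 vector marking which labels from `vocabulary` are present."""
    unknown = set(labels) - set(vocabulary)
    if unknown:
        raise ValueError(f"unknown labels: {sorted(unknown)}")
    present = set(labels)
    return [1 if name in present else 0 for name in vocabulary]


def encode_instance(techniques, themes):
    """Concatenate technique and theme indicators into one 12-dimensional target."""
    return multi_hot(techniques, TECHNIQUES) + multi_hot(themes, THEMES)
```

A post labeled with Bandwagon and Appeal_to_fear-prejudice under the vaccinated theme has three active positions in its target vector; a single-label softmax target could represent only one of them.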

The datasets derived from social media discussions on COVID-19 encompass these six distinct propaganda classes and themes. Traditionally, exploratory data analysis (EDA) employs simple comparative research techniques [51]. To mitigate overfitting and improve generalization, augmentation operations such as swapping, random replacement, deletion, and insertion are employed. Given the imbalance in propaganda techniques within the dataset, an under-sampling method was applied to balance the training data for the ML-MC task.
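The four augmentation operations from Step 2 (Section 3.1.1) can be sketched as follows, using the caps of two edits per sentence and the deletion probability of 0.1 given there. This is a minimal illustration, not the study's implementation: the small `SYNONYMS` table is a hypothetical stand-in for WordNet-based lookups, and the function names are assumptions.

```python
import random

# Hypothetical toy synonym table standing in for WordNet lookups.
SYNONYMS = {
    "vaccine": ["jab", "shot"],
    "dangerous": ["harmful", "unsafe"],
    "people": ["citizens", "public"],
}


def synonym_substitution(words, rng, n=2):
    """SS: replace up to n words that have synonyms."""
    out = list(words)
    candidates = [i for i, w in enumerate(out) if w in SYNONYMS]
    rng.shuffle(candidates)
    for i in candidates[:n]:
        out[i] = rng.choice(SYNONYMS[out[i]])
    return out


def random_insertion(words, rng, n=2):
    """RI: insert a synonym of a random word at a random position, up to n times."""
    out = list(words)
    for _ in range(n):
        candidates = [w for w in out if w in SYNONYMS]
        if not candidates:
            break
        synonym = rng.choice(SYNONYMS[rng.choice(candidates)])
        out.insert(rng.randint(0, len(out)), synonym)
    return out


def random_swap(words, rng, n=2):
    """RS: swap two randomly chosen positions, repeated n times."""
    out = list(words)
    if len(out) < 2:
        return out
    for _ in range(n):
        i, j = rng.sample(range(len(out)), 2)
        out[i], out[j] = out[j], out[i]
    return out


def random_deletion(words, rng, p=0.1):
    """RD: delete each word with probability p, keeping at least one word."""
    if not words:
        return []
    kept = [w for w in words if rng.random() > p]
    return kept if kept else [rng.choice(words)]
```

Each operation returns a new token list, so one source sentence can yield up to four augmented variants for the minority (propaganda) data.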

3.1.3. Data Imbalance Processing

Addressing data imbalance is critical for ensuring that minority propaganda classes are adequately learned by the model. In this stage, under-sampling was applied to balance the dataset, focusing on improving model performance without overfitting, given the rich feature representations generated during data augmentation.

To address data imbalance in the ProText dataset, where non-propaganda instances significantly outnumber propaganda examples, an under-sampling technique was applied to the majority class [52]. This approach was chosen over over-sampling to avoid potential overfitting and reduce training time, especially given the relatively high quality and semantic richness of the augmented propaganda samples. Under-sampling proved more effective in balancing the class distribution without duplicating data, supporting robust performance of the ML-MC model in detecting minority-class instances.
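Random under-sampling of the majority class can be sketched as dropping randomly chosen majority-class instances until every class matches the size of the smallest one. The function name, seed, and binary propaganda/non-propaganda key below are assumptions for illustration.

```python
import random
from collections import Counter


def undersample(examples, labels, seed=42):
    """Randomly drop examples from larger classes until every class matches the smallest one."""
    rng = random.Random(seed)
    target = min(Counter(labels).values())
    by_class = {}
    for x, y in zip(examples, labels):
        by_class.setdefault(y, []).append(x)
    out_x, out_y = [], []
    for y in sorted(by_class):
        kept = rng.sample(by_class[y], target)  # sampling without replacement: no duplicates
        out_x += kept
        out_y += [y] * target
    return out_x, out_y
```

Unlike over-sampling, no instance appears more than once in the balanced output, which matches the motivation given above.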

3.2. DL-Based Text Classification

The detection of propaganda in COVID-19-related public discussions presents unique challenges, particularly in ML-MC classification tasks. Traditional text classification methods, such as bag-of-words (BoW) approaches, lack the ability to capture contextual relationships between words, limiting their effectiveness. Our study addresses these limitations by employing an advanced transformer-based model tailored for propaganda detection in COVID-19-related discourse.

This study leverages the strengths of DL models to automatically extract context-sensitive features from raw text. These features allow for the identification of nuanced relationships and patterns within the data, which are crucial for classification. Specifically, we use a transformer model enhanced with TF-IDF and n-gram embeddings to improve feature representation. This approach enables our model to distinguish subtle propaganda techniques while maintaining scalability for large datasets. Key innovations of our DL-based classification approach include the following:

Active max pooling: This mechanism highlights the most relevant textual features, ensuring that critical information influencing classification is prioritized. This improves the model’s ability to detect fine-grained propaganda elements in text.

Two-fold cross-entropy loss function: Designed for multi-label classification, this loss function enhances the model’s performance by addressing overlapping labels and improving predictive accuracy across multiple classes.

Hidden bottleneck layers: By introducing these layers, our approach refines text representations, reduces model size, and optimizes computational efficiency without sacrificing performance.
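The three components above are described only at a high level, so the following is a hedged, dependency-free sketch of one plausible reading: element-wise max pooling over token vectors, a ReLU bottleneck layer that shrinks the representation, and a per-label sigmoid with binary cross-entropy. The binary cross-entropy is a standard multi-label loss used here as a stand-in, because the exact form of the two-fold cross-entropy loss is not specified; all weights are made-up toy values.

```python
import math

def max_pool(token_vectors):
    """Element-wise max over the token axis: [T x D] -> [D]."""
    return [max(col) for col in zip(*token_vectors)]

def linear(x, weights, bias):
    """y = W x + b, with W given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def relu(v):
    return [max(0.0, a) for a in v]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def multilabel_bce(logits, targets, eps=1e-12):
    """Binary cross-entropy averaged over labels; targets are 0/1 per label."""
    total = 0.0
    for z, y in zip(logits, targets):
        p = sigmoid(z)
        total += -(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))
    return total / len(logits)

# Toy forward pass: 3 tokens with 4-dim features -> 2-dim bottleneck -> 3 labels.
tokens = [[0.1, 0.5, -0.2, 0.0], [0.3, -0.1, 0.4, 0.2], [-0.5, 0.2, 0.1, 0.9]]
pooled = max_pool(tokens)                             # [0.3, 0.5, 0.4, 0.9]
W_bottleneck = [[0.5, -0.5, 0.5, 0.5], [0.1, 0.2, 0.3, 0.4]]  # hypothetical
hidden = relu(linear(pooled, W_bottleneck, [0.0, 0.0]))
W_out = [[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]]        # hypothetical
logits = linear(hidden, W_out, [0.0, 0.0, 0.0])
loss = multilabel_bce(logits, [1, 0, 1])              # two labels active at once
```

Because each label gets its own sigmoid, several techniques can be predicted for the same text, which is what the multi-label setting requires.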

In contrast to prior work, which often focuses on tasks like fake news detection or sentiment analysis [53], our approach specifically targets propaganda detection in the context of COVID-19. This focus fills a critical gap by providing tools for continuous monitoring and prevention of misinformation in public health discourse. Additionally, our model’s architecture is designed to handle the vast label and feature spaces inherent to multi-label classification, ensuring robustness and scalability.

This research underscores the importance of proactive propaganda detection, particularly for healthcare organizations and policymakers. By integrating advanced DL techniques into public health strategies, our methodology provides a practical and scalable solution for addressing misinformation during global health crises. The emphasis on innovative modeling techniques and their application to real-world challenges highlights the main contribution of this study, advancing the state of the art in ML-MC text classification.

3.2.1. Transformer-Based DL Architecture

Our approach leverages the RoBERTa model, a transformer-based DL architecture, as the core of our propaganda detection framework. RoBERTa refines BERT's pre-training methodology to address specific limitations. Unlike BERT, which includes a next-sentence prediction (NSP) task during pre-training, RoBERTa removes this task and instead uses dynamic masking, larger mini-batches, higher learning rates, and substantially more pre-training data. These modifications improve its ability to capture nuanced language patterns, making it particularly effective for tasks requiring deep contextual understanding.
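Dynamic masking, the main change from BERT's static masking, can be sketched with the standard 15% masked-language-model recipe (of the selected positions, 80% become the mask token, 10% a random token, and 10% stay unchanged), redrawn on every pass over the data. This is an illustrative sketch, not the actual pre-training code; the toy sentence and vocabulary are hypothetical.

```python
import random

MASK = "<mask>"  # RoBERTa's mask token string

def dynamic_mask(tokens, vocab, rng, mask_prob=0.15):
    """BERT-style MLM corruption, redrawn every time it is called.

    Returns (corrupted tokens, labels): labels hold the original token at
    selected positions and None elsewhere.
    """
    corrupted, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            labels.append(tok)
            r = rng.random()
            if r < 0.8:
                corrupted.append(MASK)             # 80%: mask token
            elif r < 0.9:
                corrupted.append(rng.choice(vocab))  # 10%: random token
            else:
                corrupted.append(tok)              # 10%: unchanged
        else:
            labels.append(None)
            corrupted.append(tok)
    return corrupted, labels

sentence = "masks do not stop the virus say experts".split()
vocab = sorted(set(sentence))
rng = random.Random(0)
# Static masking fixes one pattern before training; dynamic masking draws a
# fresh pattern for every epoch, so the model sees different targets.
epoch_views = [dynamic_mask(sentence, vocab, rng) for _ in range(3)]
```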

In this study, we select RoBERTa over other BERT variants due to its proven performance in addressing challenges similar to those presented by our dataset. While models such as Multi-Task Deep Neural Networks (MT-DNN) [54], ALBERT (A Lite BERT) [55], and the generalized autoregressive model (XLNET) [56] have shown promise in other domains, RoBERTa’s design aligns closely with the requirements of our ML-MC propaganda detection task. Its architecture is optimized for handling complex, context-sensitive text, which is critical for accurately identifying propaganda techniques in COVID-19-related public discussions.

By fine-tuning RoBERTa on our curated dataset, we capitalize on its ability to adapt pre-trained parameters to domain-specific challenges. This approach allows for superior classification performance, making it a central component of our proposed methodology. The emphasis on RoBERTa underscores our commitment to leveraging state-of-the-art tools to achieve high accuracy and robustness in detecting and classifying propaganda.

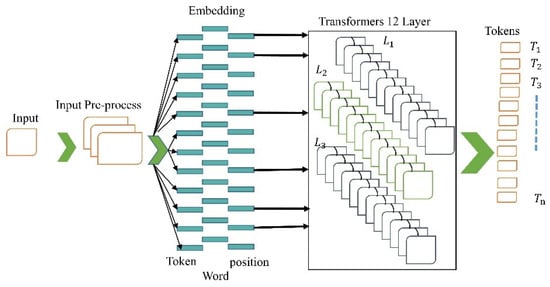

3.2.2. Embedding

The embedding stage is designed to transform textual inputs into dense, context-rich vector representations that preserve both syntactic and semantic information. In our proposed approach, we utilize advanced word embedding techniques to represent textual data in a manner that effectively captures semantic and syntactic relationships. Embeddings play a crucial role in DL-based NLP by transforming words and phrases into continuous vectors within a lower-dimensional space. For this study, we focus on embeddings derived from the 12-layer RoBERTa transformer model, which is central to our text classification framework.

Unlike static embeddings such as Word2Vec, or feature-based contextual embeddings such as ELMo that are usually kept fixed in downstream tasks, the embeddings generated by RoBERTa are updated during fine-tuning. This enables our model to capture deeper contextual nuances and relationships between words, making it highly effective for propaganda detection in complex multi-label classification tasks. The RoBERTa-based embeddings not only enhance feature representation but also contribute to improved classification accuracy by integrating context across longer text spans.

By focusing on RoBERTa embeddings, our approach emphasizes the advantages of transformer-based architectures in capturing rich text representations. This ensures that our propaganda detection framework is equipped to handle the intricate patterns and subtle signals present in COVID-19-related public discourse. Figure 5 illustrates the embedding process within the 12-layer RoBERTa model, showcasing how textual data are transformed into a structured representation optimized for classification tasks. This embedding strategy is tailored to align with the specific requirements of our study, underscoring the main contribution of leveraging state-of-the-art techniques to enhance propaganda detection and classification.

Figure 5.

Embedding (token/word/position) model and transformer 12-layer encoder.
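The input layer shown in Figure 5 adds a token embedding and a position embedding for every position before the encoder layers. The sketch below illustrates only that sum with tiny, randomly initialized tables (roberta-base uses 768 dimensions); it omits the token-type embedding, layer normalization, and dropout that the real model applies, and all table sizes are hypothetical.

```python
import random

def make_table(n_rows, dim, rng):
    """Randomly initialized lookup table (stand-in for learned weights)."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(n_rows)]

def embed(token_ids, token_table, position_table):
    """Input representation = token embedding + position embedding, per position."""
    return [
        [t + p for t, p in zip(token_table[tid], position_table[pos])]
        for pos, tid in enumerate(token_ids)
    ]

rng = random.Random(0)
DIM = 8                                                     # shrunk for illustration
token_table = make_table(n_rows=50, dim=DIM, rng=rng)       # hypothetical vocab of 50
position_table = make_table(n_rows=16, dim=DIM, rng=rng)    # hypothetical max length 16
x = embed([0, 7, 7, 2], token_table, position_table)
```

Token id 7 appears twice but receives two different input vectors, because the position embeddings differ; this is what lets the encoder distinguish word order.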

3.2.3. LSTM and CRF Model

This stage enhances sequence labeling and contextual dependency modeling by integrating LSTM networks with CRF layers. The goal is to effectively capture both local and global structures in the text, enabling precise identification of propaganda techniques at the token and sequence levels.

In our proposed framework, we integrate LSTM networks with CRF to enhance sequence labeling and contextual analysis in propaganda detection. This hybrid approach capitalizes on the strengths of both models, with CRF excelling in structured prediction tasks and LSTM networks providing robust context-aware representations. The CRF component operates as a global model for sequence labeling, efficiently handling flexible features and assigning optimal label sequences to input text. By leveraging probabilistic modeling, the CRF assigns weighted values to input sequences, optimizing the label predictions. This enables the CRF to capture relationships between labels and input features, which is particularly useful for identifying sentence-level propaganda techniques [57]. The ability of the CRF to model dependencies among labels ensures a coherent and accurate labeling process, which is critical in complex classification tasks.

Within this hybrid, the LSTM networks provide the deeper contextual analysis. LSTMs, with their ability to capture long-term dependencies, are well suited to processing sequential data such as text. The LSTM outputs are passed to the CRF layer placed on top of them (Section 4.3), which uses label dependencies to produce a coherent label sequence, enhancing the overall accuracy and interpretability of the classification results.

This integration of CRF and LSTM addresses key challenges in propaganda detection by combining the structured prediction capabilities of CRF with the context-aware processing power of LSTM networks. By focusing on the synergy between these models, our approach represents a significant advancement over traditional sequence labeling methods, ensuring both scalability and robustness in handling complex multi-label classification tasks. This hybrid model is a cornerstone of our methodology, emphasizing its role in advancing propaganda detection within COVID-19-related public discourse.
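The CRF side of this hybrid can be illustrated with a minimal linear-chain CRF over per-token emission scores, which in the full model would come from the BiLSTM. The sketch below shows Viterbi decoding and the negative log-likelihood used for training (Section 4.3); the two-tag set (0 = non-prop, 1 = prop), the scores, and the omission of start/end transitions are all simplifying assumptions.

```python
import math

def logsumexp(xs):
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))

def sequence_score(emissions, transitions, tags):
    """Unnormalized score of one tag sequence: emissions plus transitions."""
    score = emissions[0][tags[0]]
    for t in range(1, len(tags)):
        score += transitions[tags[t - 1]][tags[t]] + emissions[t][tags[t]]
    return score

def log_partition(emissions, transitions):
    """Forward algorithm: log of the summed exp-scores of all tag sequences."""
    n_tags = len(emissions[0])
    alpha = list(emissions[0])
    for t in range(1, len(emissions)):
        alpha = [
            logsumexp([alpha[i] + transitions[i][j] for i in range(n_tags)]) + emissions[t][j]
            for j in range(n_tags)
        ]
    return logsumexp(alpha)

def crf_nll(emissions, transitions, tags):
    """Negative log-likelihood of the gold sequence (the training loss)."""
    return log_partition(emissions, transitions) - sequence_score(emissions, transitions, tags)

def viterbi(emissions, transitions):
    """Highest-scoring tag sequence under the CRF."""
    n_tags = len(emissions[0])
    score = list(emissions[0])
    backpointers = []
    for t in range(1, len(emissions)):
        prev_best, new_score = [], []
        for j in range(n_tags):
            i_best = max(range(n_tags), key=lambda i: score[i] + transitions[i][j])
            prev_best.append(i_best)
            new_score.append(score[i_best] + transitions[i_best][j] + emissions[t][j])
        backpointers.append(prev_best)
        score = new_score
    path = [max(range(n_tags), key=lambda j: score[j])]
    for prev_best in reversed(backpointers):
        path.append(prev_best[path[-1]])
    return path[::-1]

emissions = [[2.0, 0.5], [0.4, 0.6], [1.5, 0.2]]  # token 2 weakly prefers "prop"
transitions = [[0.5, -1.0], [-1.0, 0.5]]          # switching tags is penalized
print(viterbi(emissions, transitions))            # [0, 0, 0]
```

Per-token argmax would give `[0, 1, 0]`; the transition scores make the CRF smooth out the isolated, weakly supported "prop" tag, which is the label-dependency effect described above.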

3.3. ML-MC Propaganda Features

This stage focuses on feature engineering and representation, with the objective of accurately encoding textual characteristics relevant to detecting multiple overlapping propaganda labels. Through structured coding analysis, TF-IDF transformations, and advanced segment tagging techniques, the features are optimized for input into the DL classification model.

3.3.1. Coding Analysis

To effectively train our propaganda detection model, we conducted a structured coding analysis to identify and label various propaganda techniques present on online social media platforms. This process was critical to creating a robust and high-quality dataset tailored to the study’s objectives. Unlike prior studies that broadly classified misinformation, fake news, and rumors [26], our analysis specifically focused on delineating propaganda techniques within the context of COVID-19-related discourse. This targeted approach enables our model to address a significant gap in the literature by advancing the detection of fine-grained propaganda.

The coding analysis followed a rigorous, multi-phase methodology adapted from established qualitative research frameworks [58]. The process consisted of six systematic steps: (a) initial examination and planning, (b) open and axial coding, (c) development of a preliminary codebook, (d) pilot testing of the codebook, (e) final coding procedures, and (f) iterative review to derive final themes. These steps were instrumental in ensuring the reliability and validity of the dataset used for model training.

During the preliminary stage, a random sampling algorithm was employed to select representative records from the complete dataset for analysis. Each sampled record was thoroughly examined and categorized based on a coding scheme designed to capture the nuances of propaganda techniques. The scheme was informed by prior research while being customized to meet the specific needs of this study. A detailed overview of the propaganda types, their definitions, and examples is provided in Table 4.

Table 4.

List of propaganda types and definitions with sample examples.

The second phase of coding involved collaboration with five expert annotators who were extensively trained in the coding scheme. A sample dataset of 12,470 records was annotated, with each record reviewed by three annotators to ensure consistency. Annotators were assigned 2494 records each, selected randomly to minimize bias. Training sessions provided comprehensive instructions and examples for each propaganda category, ensuring uniform interpretation and application of the coding scheme.

To assess the reliability of the annotations, we calculated inter-coder reliability using Krippendorff’s alpha, a robust statistical measure suited for datasets with multiple observers and categories [59]. This ensured a high degree of agreement among annotators, confirming the reliability of the coding process. Final labels were consolidated using a majority-vote mechanism, further enhancing the quality of the annotated dataset.
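A minimal sketch of the two steps in this paragraph, under stated assumptions: Krippendorff's alpha for nominal labels (for example, one technique label per annotator per record) computed from the coincidence matrix, and a per-technique majority vote over the label sets of the annotators who coded a record. The function names and toy data are hypothetical; this is not the study's annotation tooling.

```python
from collections import Counter
from itertools import permutations

def krippendorff_alpha_nominal(units):
    """Krippendorff's alpha for nominal labels.

    units: one list per record holding the labels given by the annotators who
    coded it (missing ratings are simply left out).
    """
    coincidence = Counter()
    for values in units:
        m = len(values)
        if m < 2:
            continue  # a record coded by fewer than two annotators is not pairable
        for a, b in permutations(values, 2):
            coincidence[(a, b)] += 1.0 / (m - 1)
    n_c = Counter()
    for (a, _), weight in coincidence.items():
        n_c[a] += weight
    n = sum(n_c.values())
    observed = sum(w for (a, b), w in coincidence.items() if a != b)
    expected = sum(n_c[a] * n_c[b] for a in n_c for b in n_c if a != b)
    if expected == 0:
        raise ValueError("alpha is undefined when only one category is used")
    return 1.0 - (n - 1) * observed / expected

def majority_labels(annotations):
    """Keep a technique if more than half of the annotators assigned it.

    annotations: one set of technique labels per annotator for a single record.
    """
    counts = Counter(label for labels in annotations for label in set(labels))
    return {label for label, c in counts.items() if c > len(annotations) / 2}

print(krippendorff_alpha_nominal([["a", "a"], ["a", "b"], ["b", "b"]]))  # about 0.444 (4/9)
```

Alpha equals 1 for perfect agreement and 0 when agreement is at chance level, which is why it suits the multiple-annotator, multiple-category setting described above.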

This rigorous coding process not only provided a foundational dataset for model training but also contributed to advancing the understanding of propaganda in online discourse. By focusing on fine-grained classification, our study lays the groundwork for developing sophisticated detection methods tailored to address real-world challenges in misinformation and propaganda.

3.3.2. Feature Representation

Common words often carry little relevant information, which motivates word-frequency-based feature extraction techniques such as TF-IDF [60] and LIWC [61]. To ensure a uniform vocabulary across classes and themes for DL at the outset of the experiment, the raw text was cleaned [62]. The bag-of-words (BoW) method converted text into numeric values based on word frequencies in specific propaganda instances and in common words. Additionally, we selected keywords related to COVID-19, with or without hashtags (such as corona and #2019-nCov), as well as epidemic-related terms such as lockdown, quarantine, and nCov. This approach allowed us to collect sufficient data to reflect public sentiment on social media. The instances were sourced from datasets that clearly labeled them with specific propaganda types. Machine learning methods can classify text only after converting it into numerical feature vectors; the TF-IDF weight of a word w in a document d is computed as follows:

$$\mathrm{TF\text{-}IDF}(w, d) = \mathrm{tf}(w, d) \times \log \frac{N}{\mathrm{df}(w)}$$

where tf(w, d) is the frequency of word w in an individual document d, df(w) is the number of documents in the collection D that contain w, and N is the total number of documents in D. Here, D is the multi-class document collection.
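The TF-IDF weighting with n-grams can be sketched in plain Python as raw-count tf multiplied by log(N / df). This is a minimal illustration with hypothetical toy documents; it differs from scikit-learn's `TfidfVectorizer` defaults, which smooth the idf term and L2-normalize each document vector.

```python
import math
from collections import Counter

def ngrams(tokens, n_max=2):
    """All contiguous n-grams for n = 1..n_max, joined with spaces."""
    return [" ".join(tokens[i:i + n]) for n in range(1, n_max + 1)
            for i in range(len(tokens) - n + 1)]

def tfidf(docs, n_max=2):
    """Per-document dict of n-gram -> tf(w, d) * log(N / df(w))."""
    grams = [Counter(ngrams(doc.lower().split(), n_max)) for doc in docs]
    N = len(docs)
    # Iterating a Counter yields each distinct n-gram once, so this counts documents.
    df = Counter(g for counts in grams for g in counts)
    return [{g: tf * math.log(N / df[g]) for g, tf in counts.items()} for counts in grams]

docs = ["stay home stop the virus", "the virus is a hoax", "stay home stay safe"]
weights = tfidf(docs)
# "stay" occurs twice in the third document and in 2 of 3 documents:
# weight = 2 * log(3 / 2); "safe" occurs once, in 1 document: log(3).
```

An n-gram present in every document gets weight 0, which is the down-weighting of common words that motivates TF-IDF in the paragraph above.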

3.3.3. Propaganda Detection

The propaganda detection problem is transformed into a multi-label multi-class classification (ML-MC) task by incorporating segment types with ‘invalid’ tokens ([CLS] and [SEP]), which are pruned from other sentences [12]. Thus, propagandist text documents are approached at the sentence level (SLP), focusing on two key components: information features and information themes. The primary objective is to detect six propaganda techniques, each associated with six themes within a given text sample [6,7].

In this approach, pre-processing starts from the Red_herring technique, which has the fewest instances [63]. The model then predicts the themes corresponding to each detected technique. RoBERTa is used to classify tokens, and its last hidden layer is passed to the LSTM model. As an additional step, a novel LSTM and CRF stage regulates the order of propaganda/non-propaganda (prop/non-prop) tags and filters out invalid tokens. Propaganda strategies such as Loaded Language, Name Calling, Labeling, and Bandwagon [12,21,22] are frequently encountered in news articles, although they often go unnoticed. Ultimately, M predictions are obtained from the token-based classifiers associated with the propaganda labels. Entire documents in the COVID-19 corpus are segmented with BIOE tags (B: begin, I: inside, O: outside, E: end) covering both propaganda and non-propaganda (prop and non-prop) labels, each assigned a single header entity-type tag. The relationship between techniques and themes is illustrated in Figure 6.

Figure 6.

Statistics of propaganda snippets showing the relationship between detected propaganda techniques and themes.
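The BIOE segmentation described in this subsection can be sketched as a conversion from labeled token spans to per-token tags and back. This is a minimal sketch with hypothetical spans. Single-token spans are written as `B-` only, because the text does not say how ProText encodes them (BIOES schemes would use a separate `S-` tag), and `O` marks tokens outside any annotated segment.

```python
def spans_to_bioe(n_tokens, spans):
    """spans: (start, end_exclusive, label) over token indices -> one tag per token."""
    tags = ["O"] * n_tokens
    for start, end, label in spans:
        tags[start] = f"B-{label}"
        if end - start > 1:
            for i in range(start + 1, end - 1):
                tags[i] = f"I-{label}"
            tags[end - 1] = f"E-{label}"
    return tags

def bioe_to_spans(tags):
    """Inverse of spans_to_bioe; tolerates spans that end without an E tag."""
    spans, start, label = [], None, None
    for i, tag in enumerate(tags):
        if tag == "O":
            if start is not None:           # open span closed by an O tag
                spans.append((start, i, label))
            start, label = None, None
            continue
        prefix, lab = tag.split("-", 1)     # "B-non-prop" -> ("B", "non-prop")
        if prefix == "B":
            if start is not None:           # open span closed by a new B tag
                spans.append((start, i, label))
            start, label = i, lab
        elif prefix == "E":
            spans.append((start if start is not None else i, i + 1, lab))
            start, label = None, None
        # an "I" tag simply continues the open span
    if start is not None:
        spans.append((start, len(tags), label))
    return spans

segments = [(0, 3, "prop"), (3, 7, "non-prop"), (8, 9, "prop")]
tags = spans_to_bioe(9, segments)
```

Round-tripping tags back to spans is a cheap consistency check on annotated segments before training the token classifiers.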

In the COVID-19 corpus (ProText), each document is segmented into prop and non-prop segments, each assigned a single header entity tag, as detailed in Table 5.

Table 5.

Two different segment patterns and a segment header.

Each input segment, whether prop or non-prop, is indexed by i. To address the imbalance created by the loss of unique text during pre-processing, over-sampling techniques were employed. Several advanced models that build on BERT, such as ALBERT, MT-DNN, and XLNET, have substantially improved on the original BERT [54,55,56]. Various embedding approaches, including BERT variants, word to vector (w2v), and FastText, were used to assess the performance of DL models, including CNN, RNN, and RoBERTa [1,64]. The results in Section 4 show that this classifier layer improves the text representations, leading to higher prediction accuracy.

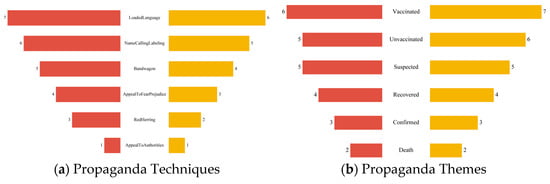

3.3.4. Propaganda Techniques and Themes

The news articles we read daily are often laden with subtle propaganda strategies, even if we are unaware of their presence. Failing to recognize propagandist content can be risky, because such content is crafted to reinforce predetermined beliefs or narratives and shapes our perspectives on global matters. Within ProText, the most prevalent propaganda technique is Loaded_language, which appears almost twice as frequently as Name_calling/Labeling, as detailed in Table 6. Other techniques, such as Appeal_to_authority and Appeal_to_fear-prejudice, appear less frequently but are expressed over more extended periods. The sample data illustrate the propaganda themes. Table 7 gives an overview of the themes after all articles were reviewed and their labels adjusted accordingly. Samples of popular tweets, Facebook comments (ProText), and other public discussions related to COVID-19 on social media platforms were collected to construct the training dataset.

Table 6.

Propaganda contextual instances of common words in a source dataset.

Table 7.

Propaganda themes discussed in COVID-19-related content.

The ProText dataset was meticulously annotated by five experts, each dedicating 800 working hours to classify instances according to propaganda techniques and themes. These experts, including professors, post-doctoral researchers, and scholars in data mining, DL, and computational linguistics, hailed from esteemed institutions. Due to the time-intensive nature of the annotation process, this study did not encompass other logical fallacies. The annotation process for test articles was further extended by adding one consolidation step.

Following in-depth discussions and consensus among at least two experts not involved in the initial annotations, a Kappa (γ) agreement measure of 0.63 was achieved, indicating moderate to substantial agreement among the annotators. This level of agreement is acceptable for complex tasks, including propaganda detection, as shown in Figure 7. The rationale behind this assessment is elaborated in detail in [11]. The annotation process was thoroughly evaluated in terms of γ agreement between annotators and the final gold labels [50]. This level of agreement signifies a considerable degree of consistency in the annotations, considering the complexity and subjectivity inherent in classifying instances according to propaganda techniques and themes.

Figure 7.

Kappa (γ) agreement measure for all techniques and themes.

Because a bidirectional LSTM is used, word dependencies are evaluated in both directions. Each LSTM unit has three gates (input, output, and forget), which, together with the memory cell, are computed as follows:

$$i_g = \sigma\left(W_i [h_{t-1}, x_t] + b_i\right)$$
$$o_g = \sigma\left(W_o [h_{t-1}, x_t] + b_o\right)$$
$$f_g = \sigma\left(W_f [h_{t-1}, x_t] + b_f\right)$$
$$c_t = f_g \odot c_{t-1} + i_g \odot \tanh\left(W_c [h_{t-1}, x_t] + b_c\right)$$
$$h_t = o_g \odot \tanh(c_t)$$

where x_t is the input, i_g is the input gate, o_g is the output gate, f_g is the forget gate, and h_t is the hidden state, connected to the context (memory cell) c_t. W_i and b_i are the weights and bias of the input gate, W_o and b_o those of the output gate, W_f and b_f those of the forget gate, and W_c and b_c those of the candidate cell state; σ is the logistic sigmoid and ⊙ denotes element-wise multiplication. This procedure is repeated for the final classifier to enhance the representation used for coding analysis and propaganda detection. Finally, mean pooling over the LSTM hidden states yields the final representation Hfinal used for propaganda detection.
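The LSTM step and the mean pooling that produces Hfinal can be sketched without any framework. This minimal version follows the standard LSTM gate equations (input, forget, and output gates plus the memory cell), runs a forward and a backward pass, concatenates the two directions per token, and averages over tokens. The dimensions and random weights are hypothetical; a real implementation would use a library LSTM layer.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def lstm_step(x_t, h_prev, c_prev, p):
    """One LSTM step; every gate sees the concatenation [h_{t-1}, x_t]."""
    z = h_prev + x_t  # list concatenation
    i_g = [sigmoid(a + b) for a, b in zip(matvec(p["W_i"], z), p["b_i"])]
    f_g = [sigmoid(a + b) for a, b in zip(matvec(p["W_f"], z), p["b_f"])]
    o_g = [sigmoid(a + b) for a, b in zip(matvec(p["W_o"], z), p["b_o"])]
    c_hat = [math.tanh(a + b) for a, b in zip(matvec(p["W_c"], z), p["b_c"])]
    c_t = [f * c + i * ch for f, c, i, ch in zip(f_g, c_prev, i_g, c_hat)]
    h_t = [o * math.tanh(c) for o, c in zip(o_g, c_t)]
    return h_t, c_t

def run_lstm(xs, p, hidden):
    h, c, outputs = [0.0] * hidden, [0.0] * hidden, []
    for x_t in xs:
        h, c = lstm_step(x_t, h, c, p)
        outputs.append(h)
    return outputs

def init_params(in_dim, hidden, rng):
    def mat():
        return [[rng.uniform(-0.5, 0.5) for _ in range(hidden + in_dim)] for _ in range(hidden)]
    return {**{f"W_{g}": mat() for g in "ifoc"}, **{f"b_{g}": [0.0] * hidden for g in "ifoc"}}

def bilstm_mean_pool(xs, p_fwd, p_bwd, hidden):
    fwd = run_lstm(xs, p_fwd, hidden)
    bwd = run_lstm(xs[::-1], p_bwd, hidden)[::-1]          # realign to original order
    states = [f + b for f, b in zip(fwd, bwd)]             # concatenate directions
    return [sum(col) / len(states) for col in zip(*states)]  # mean pooling -> Hfinal

rng = random.Random(0)
in_dim, hidden = 4, 3
xs = [[rng.uniform(-1, 1) for _ in range(in_dim)] for _ in range(5)]  # 5 toy tokens
H_final = bilstm_mean_pool(xs, init_params(in_dim, hidden, rng),
                           init_params(in_dim, hidden, rng), hidden)
```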

3.4. Final Classifier

The final classification stage combines all learned representations through a softmax-based output layer to predict the most probable propaganda technique and theme for each input instance. The final representations (Hfinal) are fed into a softmax layer, which predicts the label L of a news article. By computing a probability for each potential label, the softmax function assigns the most likely class to the article, as follows:

$$L = \mathrm{Softmax}\left(b + w \times H_{\text{final}}\right)$$

where b is the bias and w is the weight matrix of the output layer. We minimize the disparity between the true and predicted labels with the cross-entropy loss:

$$\mathcal{L}_{\mathrm{CE}} = -\sum_{i} y_i \log L_i$$

where y_i is the i-th component of the one-hot vector representing the true label L′, and L_i is the predicted probability of class i.
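The classifier L = Softmax(b + w × Hfinal) and its cross-entropy loss can be checked numerically with a small sketch. The 2-dimensional Hfinal, the weights, and the three classes are hypothetical toy values; for the multi-label case, a per-label sigmoid would replace the softmax.

```python
import math

def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]

def predict(h_final, w, b):
    """L = Softmax(b + w x Hfinal); w holds one row per class."""
    logits = [bk + sum(wk_j * h_j for wk_j, h_j in zip(wk, h_final)) for wk, bk in zip(w, b)]
    return softmax(logits)

def cross_entropy(y_onehot, probs, eps=1e-12):
    """-sum_i y_i log L_i; only the true class contributes for a one-hot target."""
    return -sum(y * math.log(p + eps) for y, p in zip(y_onehot, probs))

h_final = [1.0, -1.0]
w = [[2.0, 0.0], [0.0, 0.0], [0.0, 2.0]]
b = [0.0, 0.0, 0.0]
probs = predict(h_final, w, b)          # logits are [2, 0, -2]
loss = cross_entropy([1, 0, 0], probs)  # equals log(1 + e^-2 + e^-4)
```

With logits [2, 0, −2], the first class receives about 0.87 of the probability mass, so the loss for the correct first class is small (about 0.14).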

4. Experimental Setup and Results

This section outlines the experimental setup, the datasets used for evaluating the framework, and the performance metrics employed. Subsequently, it details the specific baseline methods used for comparison. Finally, we delve into our results, providing a comprehensive discussion and summarizing our findings.

4.1. Experimental Configuration

This section provides a comprehensive overview of the experiment, including the dataset, experimental environment setup, and results. The experimental setup involved training and testing models on NVIDIA GTX 1070Ti GPUs with 16 GB of DDR3 RAM, running on a 64-bit Windows 10 operating system. The study utilized an ensemble model implemented with the Hugging Face library. The coding environment was configured in Python 3.60, utilizing the PyTorch 2.7.0 framework, and employed the cross-entropy improver technique after pre-processing the data.

4.2. Performance Metrics

The effectiveness of the proposed models was evaluated using established criteria, including precision, recall, accuracy, and the weighted F1 score. Accuracy (Acc) is the proportion of correctly classified instances in the whole dataset and gives an overall assessment of propaganda detection and classification performance. The F1 score, the harmonic mean of precision and recall, balances these two metrics and is particularly informative for imbalanced or skewed data distributions. Recall (R) is the proportion of actual propaganda instances that the model correctly identifies, indicating its sensitivity to true positives. Precision (P) is the ratio of correctly identified propaganda instances to all instances predicted as propaganda, reflecting how well the model avoids false positives. The evaluation also included G-mean (GM), Normalized Mutual Information (NMI), and the Matthews Correlation Coefficient (MCC). GM and MCC are computed from the counts of true positives (Tp), true negatives (Tn), false positives (Fp), and false negatives (Fn), which also underlie precision and recall. The weighted F1 score is the average of the per-class F1 scores weighted by class size, which gives a fair evaluation in multi-class tasks where class sizes vary. Consequently, F1 is regarded as the primary metric, while Acc, P, and R are considered secondary metrics in determining the model's efficacy.
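The weighted averaging described above can be made concrete with a short sketch. It treats each instance as having one technique label (a single-label view of the predictions), computes per-class precision, recall, and F1, and weights each class by its support, which is the same convention as scikit-learn's `average="weighted"`. The labels are hypothetical toy data.

```python
from collections import Counter

def per_class_prf(y_true, y_pred, label):
    """Precision, recall, and F1 with `label` treated as the positive class."""
    tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
    fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
    fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def weighted_scores(y_true, y_pred):
    """Per-class P, R, F1 averaged with weights proportional to class support."""
    support = Counter(y_true)
    n = len(y_true)
    totals = [0.0, 0.0, 0.0]
    for label, count in support.items():
        for k, value in enumerate(per_class_prf(y_true, y_pred, label)):
            totals[k] += value * count / n
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / n
    return {"accuracy": accuracy, "precision": totals[0], "recall": totals[1], "f1": totals[2]}

y_true = ["Loaded_language"] * 3 + ["Name_calling"] * 2 + ["Red_herring"]
y_pred = ["Loaded_language", "Loaded_language", "Name_calling",
          "Name_calling", "Loaded_language", "Red_herring"]
scores = weighted_scores(y_true, y_pred)  # every score works out to 2/3 here
```

In this single-label view, weighted recall always equals accuracy, so the weighted F1 score carries the additional information about precision on each class.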

G-mean assesses a model's performance by considering both precision and recall; it is their geometric mean:

$$GM = \sqrt{P \times R}$$

MCC is a widely used indicator of a binary classifier's performance. It measures the correlation between predicted and true classes and is equivalent to the Pearson correlation coefficient computed on binary labels. An MCC of 1 signifies a perfect prediction, 0 indicates performance equivalent to random guessing, and −1 represents the poorest prediction (total disagreement):

$$MCC = \frac{T_p T_n - F_p F_n}{\sqrt{(T_p + F_p)(T_p + F_n)(T_n + F_p)(T_n + F_n)}}$$

Normalized mutual information (NMI) is the mutual information between two variables normalized by their entropies. It is frequently used to evaluate model performance in machine learning and information retrieval:

$$NMI(X, Y) = \frac{2\, I(X; Y)}{H(X) + H(Y)}$$

where I(X; Y) is the mutual information between variables X and Y, and H(X) and H(Y) denote the entropies of X and Y, respectively.
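The three auxiliary metrics can be computed directly from counts and label lists. This is a sketch under stated assumptions. `g_mean` follows the description in this section (the geometric mean of precision and recall); the G-mean common in imbalanced-learning work, such as imbalanced-learn's `geometric_mean_score`, is instead built from per-class recall (sensitivity and specificity in the binary case). `nmi` uses the arithmetic normalization 2·I(X;Y)/(H(X)+H(Y)), which is also the default of scikit-learn's `normalized_mutual_info_score`.

```python
import math
from collections import Counter

def g_mean(tp, fp, fn):
    """Geometric mean of precision and recall."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return math.sqrt(precision * recall)

def mcc(tp, tn, fp, fn):
    """Matthews correlation coefficient; 0.0 when a marginal count is zero."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0

def entropy(labels):
    n = len(labels)
    return -sum(c / n * math.log(c / n) for c in Counter(labels).values())

def mutual_information(x, y):
    n = len(x)
    pxy, px, py = Counter(zip(x, y)), Counter(x), Counter(y)
    return sum(c / n * math.log(c * n / (px[a] * py[b])) for (a, b), c in pxy.items())

def nmi(x, y):
    """2 I(X;Y) / (H(X) + H(Y)); returns 1.0 if both labelings are constant."""
    hx, hy = entropy(x), entropy(y)
    return 2 * mutual_information(x, y) / (hx + hy) if hx + hy else 1.0

print(mcc(tp=10, tn=10, fp=0, fn=0))  # 1.0
```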

4.3. Hyper-Parameters Tuning

In our experiments, the model architecture used hidden dimensions of 768 × 100, with RoBERTa as the pre-trained encoder that generates contextual embeddings. A bidirectional LSTM layer was then applied, with a hidden size ranging from 100 to 200, enabling the model to capture long-range dependencies in both directions. Next, a Conditional Random Field (CRF) layer was placed on top of the LSTM outputs. Although the CRF layer has no hidden units, it was optimized with a negative log-likelihood loss, allowing it to model label dependencies and improve sequence tagging for nuanced propaganda detection. To mitigate overfitting, a dropout value of 0.5 was chosen, following Probst et al. [65]. Additionally, the Hyperband algorithm, as described by Li et al. [66], was employed and compared against popular Bayesian optimization methods for hyper-parameter optimization in ML-MC problems. The ML-MC model, configured with 10 epochs and a batch size of 16, was trained with the Adam optimizer. The learning rate was set within the range of 2–5 × (with a maximum of 2 and a minimum of 5), and weight decay varied from 0.1 to 0.001 for other hyper-parameters. Table 8 lists the optimal hyper-parameter ranges for the ML-MC model.

Table 8.

Hyper-parameter tuning ranges and selected values for all datasets.
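The Hyperband search mentioned above can be sketched as a set of successive-halving brackets that trade the number of sampled configurations against the training budget each one receives. This is an illustrative sketch, not the study's tuning code. The search space uses only the weight-decay (0.001 to 0.1) and BiLSTM hidden-size (100 to 200) ranges stated in this section. `toy_validation_loss` is a made-up stand-in for "train for `budget` epochs and return the validation loss", and `max_budget=9` (a power of `eta`) approximates the 10 training epochs.

```python
import math
import random

def sample_config(rng):
    """Hypothetical search space built from the ranges reported in this section."""
    return {
        "weight_decay": 10 ** rng.uniform(-3, -1),  # 0.001 to 0.1, log-uniform
        "lstm_hidden": rng.randint(100, 200),       # BiLSTM hidden size
    }

def toy_validation_loss(config, budget):
    """Made-up smooth function standing in for real training, so the sketch runs."""
    return ((math.log10(config["weight_decay"]) + 2) ** 2
            + ((config["lstm_hidden"] - 150) / 50) ** 2
            + 1.0 / budget)

def hyperband(evaluate, sample, max_budget=9, eta=3, seed=0):
    """Run successive-halving brackets and return (best loss, best config)."""
    rng = random.Random(seed)
    s_max = int(math.log(max_budget, eta) + 1e-9)
    best_loss, best_config = float("inf"), None
    for s in range(s_max, -1, -1):
        n = math.ceil((s_max + 1) / (s + 1) * eta ** s)  # configs in this bracket
        configs = [sample(rng) for _ in range(n)]
        for i in range(s + 1):
            budget = max_budget / eta ** (s - i)         # grows as configs are culled
            scored = sorted((evaluate(c, budget), k) for k, c in enumerate(configs))
            if scored[0][0] < best_loss:
                best_loss, best_config = scored[0][0], configs[scored[0][1]]
            configs = [configs[k] for _, k in scored[: max(1, len(configs) // eta)]]
    return best_loss, best_config

best_loss, best_config = hyperband(toy_validation_loss, sample_config)
```

The aggressive brackets try many configurations with a 1-epoch budget, while the last bracket trains a few configurations for the full budget; Hyperband hedges between the two extremes instead of committing to one.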

4.4. Baselines

We selected a range of competitive state-of-the-art (SOTA) models as baselines and evaluated them on our benchmark dataset. Each experiment was repeated five times, and the average score is reported. For the propaganda classification task, we fine-tuned BERT [21] and RoBERTa [67] with a cross-entropy loss. To keep the comparison fair and robust, identical settings, such as maximum sequence length, initial learning rate, and optimizer, were used for the same (ProText) dataset and encoder. A threshold of 0.3 was set for the MT-DNN, XLNET, and ALBERT networks, and outputs below this value were rejected [54,55,56]. RoBERTa has demonstrated exceptional effectiveness in text classification, question answering, and natural language inference experiments [35].

- RoBERTa: Tokens in each sentence were randomly masked at a rate of 15%, so that the model learns to predict the masked words from their surrounding context [67].

- BERT: The BERT model was pre-trained on unlabeled data for various pre-training tasks. Fine-tuning involved initializing parameters with pre-trained values, which were then fine-tuned using labeled downstream data [21].

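- MT-DNN: Extends BERT with multi-task learning, sharing the BERT encoder across several natural language understanding tasks; it is among the improved BERT models that have emerged as SOTA methods [54].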

- XLNET: A generalized autoregressive model pre-trained with a permutation language modeling objective; it incorporates ideas from Transformer-XL, such as segment-level recurrence [56].

- ALBERT: ALBERT speeds up BERT training by reducing parameter count and memory consumption through factorized embedding parameterization and cross-layer parameter sharing [55].

- H-GIN (hierarchical graph-based integration network) (Ahmad et al. [52]): Constructs an inter- and intra-channel graph with residual-driven enhancement and processing and attention-driven feature fusion, enabling effective classification at two distinct levels.
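The 0.3 decision threshold used for the multi-label baselines can be sketched as follows. The label list and the scores are hypothetical, and the sketch keeps a label when its score is greater than or equal to the threshold; the text says only that outputs below 0.3 were rejected, so the handling of the boundary value is an assumption.

```python
LABELS = ["Loaded_language", "Name_calling/Labeling", "Bandwagon",
          "Red_herring", "Appeal_to_authority", "Appeal_to_fear-prejudice"]
THRESHOLD = 0.3

def apply_threshold(probs, labels=LABELS, threshold=THRESHOLD):
    """Multi-label decision: keep every label whose score reaches the threshold."""
    if len(probs) != len(labels):
        raise ValueError("one score per label is required")
    return {label for label, p in zip(labels, probs) if p >= threshold}

scores = [0.72, 0.31, 0.05, 0.29, 0.30, 0.10]  # hypothetical sigmoid outputs
print(sorted(apply_threshold(scores)))
# ['Appeal_to_authority', 'Loaded_language', 'Name_calling/Labeling']
```

A threshold below 0.5 trades some precision for recall, which helps when several techniques co-occur in a text and each receives only moderate confidence.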

4.5. Main Results Analysis

The COVID-19 pandemic has highlighted the importance of social media as a critical platform for information dissemination, including news, fake news, propaganda, and even self-reported symptoms. In this study, we meticulously analyzed public discussions related to COVID-19 on Facebook and Twitter, compiling the content into a unified repository named ProText. The primary objective was to discern various propaganda types within these public discussions on social media platforms, specifically focusing on the COVID-19 theme. In addition, our research involved conducting multi-label multi-class experiments using models such as RoBERTa and BERT, and their variants. These experiments were compared with results obtained from traditional algorithms. DL techniques were applied individually, incorporating a specific set of features to construct a self-learning corpus.

The study also compared optimization methods to identify the optimal parameters for the benchmark algorithms. Our ML-MC classification model outperformed the existing state-of-the-art techniques, achieving an accuracy of 88%. These insights are valuable for government and healthcare agencies, which can use them to detect and monitor propaganda on social media platforms and thereby strengthen their ability to combat misinformation during the ongoing COVID-19 crisis.

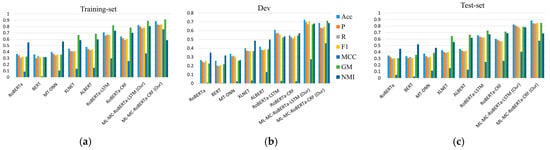

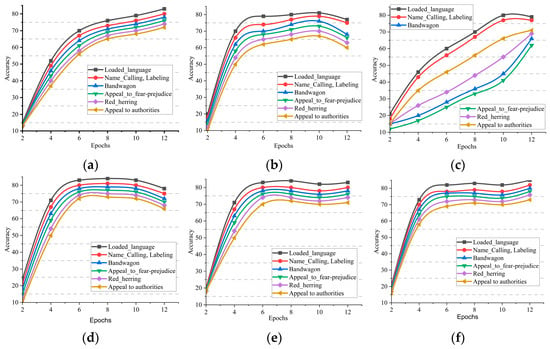

The BERT model utilized the pre-trained “bert-base-uncased” version, comprising 110 million parameters trained on lower-cased English text [21]. During prediction, label distributions in all reached leaves were aggregated for each test document. RoBERTa, BERT, MT-DNN [54], XLNET [56], and ALBERT [55] models were enhanced with NLP features, integrating LSTM and CRF networks [6,35]. These models were compared based on propaganda techniques using the ProText Dev, training, and test datasets, as illustrated in Figure 8.

Figure 8.

The performance of the proposed framework when evaluated with different models on the development set (a), training set (b), and testing set (c). The results highlight the effectiveness of the proposed framework across different datasets and demonstrate its robustness and versatility in various scenarios.

The proposed ML-MC approach using RoBERTa with CRF and LSTM handled these challenges and consistently achieved F1 scores of around 80% or higher. Notably, other methods ran into memory constraints, highlighting the scalability and efficiency of the RoBERTa-based approach. The fine-tuned ML-MC-RoBERTa-CRF model achieved an F1 score of 0.7861, a precision of 0.7992, and a recall of 0.7734 on the ProText dataset. The ML-MC-RoBERTa-LSTM model performed better still, with an F1 score of 0.8363, a precision of 0.8319, and a recall of 0.8275. These results show that the proposed propaganda detection models identify propaganda classes within each theme with substantial accuracy, as shown in Figure 9.

Figure 9.

Evaluation performance accuracy for each propaganda theme: (a) suspected, (b) confirmed, (c) deceased, (d) recovered, (e) vaccinated, and (f) unvaccinated, across propaganda classes using RoBERTa on ProText data.

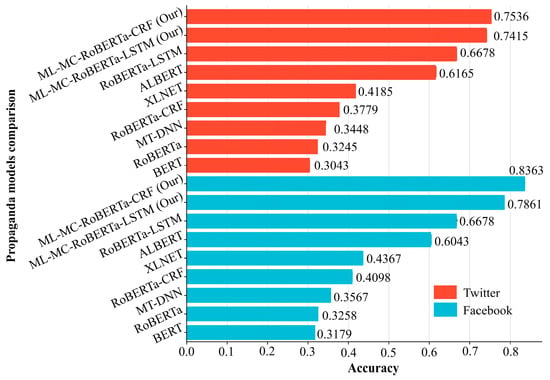

We also conducted robustness tests by introducing various categories and modifying hyper-parameters to assess the model’s resilience. Our proposed model demonstrated superior performance when evaluated against conventional propaganda themes. This outcome underscores the value of NLP features in propaganda prediction, particularly when employing ML-MC techniques on the ProText dataset. These NLP features, extracted from news articles, were instrumental in predicting and comparing accuracy across different instances of the ProText dataset, including data sourced from Facebook and Twitter, as illustrated in Figure 10.

Figure 10.

Accuracy (Acc) of the NLP-feature network model compared with traditional models on multiple datasets.

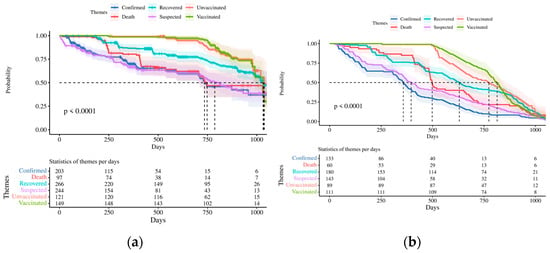

Transformer architectures proved to be the best choice for analyzing ProText data, owing to their ability to model contextual dependencies across the input sequence. Our proposed ML-MC model achieved an accuracy of 82% on the ProText test data. In summary, the fine-tuned RoBERTa model presented in this article has been integrated with NLP modules and applied to the large volume of public discussion on social media. We also estimated Kaplan–Meier curves for the time-to-event distribution, stratified by high and low theme scores, as shown in Figure 11.
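To make the survival analysis concrete, a minimal product-limit (Kaplan–Meier) estimator is sketched below. It assumes each sample has a duration until a progression event and a flag marking whether the event was observed (1) or censored (0); how the ML-MC model extracts these events is not specified here, and the function name, durations, and high/low split in the usage lines are hypothetical.

```python
from collections import Counter


def kaplan_meier(durations, events):
    """Kaplan-Meier product-limit estimate of the survival function.

    durations: time until the event or censoring for each sample.
    events: 1 if the event was observed, 0 if the sample was censored.
    Returns a list of (time, survival probability) at each observed event time.
    """
    deaths = Counter(t for t, e in zip(durations, events) if e)
    exits = Counter(durations)  # events plus censorings at each time
    n_at_risk = len(durations)
    survival = 1.0
    curve = []
    for t in sorted(exits):
        d = deaths[t]
        if d:
            # Samples censored at t still count as at risk at t.
            survival *= 1 - d / n_at_risk
            curve.append((t, survival))
        n_at_risk -= exits[t]
    return curve


# Hypothetical durations (e.g., days until a discussion event) per score group.
high_scores = kaplan_meier([1, 2, 2, 3, 4], [1, 1, 0, 1, 1])
low_scores = kaplan_meier([2, 4, 5, 6, 8], [1, 0, 1, 1, 0])
```

A curve that drops faster (here, the high-score group) corresponds to the shorter tail described for high theme scores.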

Figure 11.

Kaplan–Meier estimates stratified by propaganda theme score on ProText data: (a) Facebook and (b) Twitter.

The ML-MC-based NLP model was used to extract progression events, from which these curves were constructed algorithmically. Higher theme scores are associated with a shorter tail of the time-to-event distribution, indicating that discussions of the various topics (such as vaccination status, suspected cases, confirmed cases, deaths, and recoveries) occur more rapidly for samples with high theme scores. Statistical significance was assessed with p-values from a log-rank test. The same examination was applied to all models, and each led to consistent conclusions.
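For reference, the standard two-group log-rank test can be written from scratch as below. This is an illustration of the test, not the authors' code; it assumes samples are split into high and low theme-score groups, each with durations and event flags like those used for Kaplan–Meier curves, and the function name and usage data are hypothetical.

```python
import math


def logrank_test(durations_a, events_a, durations_b, events_b):
    """Two-group log-rank test; returns (chi-square statistic, p-value).

    Under the null hypothesis of equal survival curves, the statistic
    follows a chi-square distribution with 1 degree of freedom.
    """
    samples = [(t, e, 0) for t, e in zip(durations_a, events_a)]
    samples += [(t, e, 1) for t, e in zip(durations_b, events_b)]
    event_times = sorted({t for t, e, _ in samples if e})

    observed_minus_expected = 0.0
    variance = 0.0
    for t in event_times:
        at_risk = [g for ti, _, g in samples if ti >= t]
        n = len(at_risk)
        n_a = at_risk.count(0)  # group A samples still at risk at t
        d = sum(1 for ti, e, _ in samples if ti == t and e)
        d_a = sum(1 for ti, e, g in samples if ti == t and e and g == 0)
        # Observed minus expected events in group A at time t.
        observed_minus_expected += d_a - d * n_a / n
        if n > 1:
            # Hypergeometric variance, which accounts for tied event times.
            variance += d * (n_a / n) * (1 - n_a / n) * (n - d) / (n - 1)

    chi2 = observed_minus_expected ** 2 / variance if variance > 0 else 0.0
    # Survival function of chi-square with 1 df: P(X > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Hypothetical high- vs low-score groups (durations, event flags).
chi2, p = logrank_test([1, 2, 2, 3, 4], [1, 1, 0, 1, 1], [2, 4, 5, 6, 8], [1, 0, 1, 1, 0])
```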

4.5.1. Evaluation Performance Comparison

In this experimental setting, words outside the TF-IDF feature vocabulary were removed from the raw text so that the feature set (vocabulary) for each class and theme remained consistent, particularly in tests involving DL methods. Transformers were employed to explore how propaganda functions within COVID-19-related text corpora. Both models used dynamic masking alongside fixed batch sizes to keep them from memorizing the training corpus. The evaluation focused on the base-size ML-MC-RoBERTa model with CRF and LSTM networks, chosen for its lower computational complexity; larger versions were also tested as robustness checks, with no significant improvements observed (Table 9). The fine-tuned RoBERTa model combined with CRF and LSTM achieved the highest evaluation performance. The “Roberta-base” model [67], which follows the BERT-base architecture with about 125 million parameters and was pretrained on more extensive text corpora, underpins the effectiveness of our RoBERTa-based models.
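Dynamic masking, as used in RoBERTa, re-samples which tokens are masked every time a sequence is fed to the model, instead of fixing the mask once during preprocessing. The sketch below illustrates the idea with the BERT-style 15% selection and 80/10/10 replacement rule; the function name, token ids, and mask id are illustrative assumptions, and a real pipeline would use its tokenizer's values and exclude special tokens.

```python
import random

MASK_PROB = 0.15  # share of tokens selected for prediction (BERT/RoBERTa default)


def dynamic_mask(token_ids, mask_id, vocab_size, rng):
    """Draw a fresh masked-LM corruption for one sequence.

    Selected tokens follow the 80/10/10 rule. Calling this each time a
    sequence is batched, rather than once during preprocessing, is what
    makes the masking dynamic. Special tokens are not excluded here (a
    simplification). Returns (input_ids, labels); labels are -100 where no
    prediction is made, following the common PyTorch ignore-index convention.
    """
    input_ids = list(token_ids)
    labels = [-100] * len(token_ids)
    for i, tok in enumerate(token_ids):
        if rng.random() >= MASK_PROB:
            continue  # token not selected; no loss at this position
        labels[i] = tok  # the model must recover the original token here
        roll = rng.random()
        if roll < 0.8:
            input_ids[i] = mask_id  # 80%: replace with the mask token
        elif roll < 0.9:
            input_ids[i] = rng.randrange(vocab_size)  # 10%: random token
        # remaining 10%: keep the original token
    return input_ids, labels


# Illustrative values: hypothetical token ids and a RoBERTa-sized vocabulary.
rng = random.Random(0)
sequence = list(range(1000, 1200))
epoch1 = dynamic_mask(sequence, mask_id=50264, vocab_size=50265, rng=rng)
epoch2 = dynamic_mask(sequence, mask_id=50264, vocab_size=50265, rng=rng)
```

Because each call draws new random numbers, the two "epochs" see different masked positions for the same sentence, which is the property that discourages memorization.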

Table 9.

Propaganda detection evaluation performance on ProText dataset.

H-GIN enhances local word co-occurrence features by incorporating three channel layers (syntactic, semantic, and sequential), which improved effectiveness in experiments, reaching an accuracy of almost 87% [52].

4.5.2. Ablation Study

We performed an ablation analysis to investigate the contributions of individual components of our model. To assess the impact of each main part of ML-MC, we compare ML-MC configurations with different sentence lengths.

For this analysis, the ProText dataset was segmented into sentence-level units, with sentence length (Sen-Len) ranges tailored to each platform: 12–120 characters for Twitter and 80–320 characters for Facebook. Each document in the dataset is tied to a single class label, under the assumption that its content is topically focused. The proposed model was trained with ML-MC techniques and evaluated on a balanced dataset. Table 10 shows that the optimal sentence length varies by platform. For Facebook, a Sen-Len of 260 characters yielded the highest performance, with an F1 score of 0.8185 and an accuracy of 89.93%, suggesting that moderately long segments provide richer context without introducing excessive noise. In contrast, Twitter achieved its best results at a Sen-Len of 60 characters, with an F1 score of 0.7179 and an accuracy of 76.08%, likely because tweets are inherently concise, so shorter inputs reduce noise and misclassification. These findings underscore the importance of platform-specific sentence-length optimization for classification accuracy.
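The exact segmentation procedure is not described here, so the sketch below is only one plausible reading: it assumes a simple punctuation-based sentence splitter, drops units outside the reported per-platform character ranges, and treats the tuned Sen-Len (for example, 260 for Facebook or 60 for Twitter) as a truncation length. The function name, constant name, and sample post are hypothetical.

```python
import re

# Per-platform character-length ranges reported for ProText.
SEN_LEN_RANGES = {"twitter": (12, 120), "facebook": (80, 320)}


def sentence_units(text, platform, max_len=None):
    """Split a post into sentence-level units within the platform's range.

    Sentences are split on '.', '!' or '?' followed by whitespace (a
    simplification). Units outside the platform's (min, max) character range
    are dropped; max_len, if given, truncates each remaining unit.
    """
    lo, hi = SEN_LEN_RANGES[platform]
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    units = [s for s in sentences if lo <= len(s) <= hi]
    if max_len is not None:
        units = [s[:max_len] for s in units]
    return units


# Hypothetical post; "Ok!" is shorter than the 12-character Twitter minimum.
post = ("Vaccines are now available at local clinics for all adults. Ok! "
        "Stay safe and follow official guidance on boosters.")
tweet_units = sentence_units(post, "twitter", max_len=60)
```

Keeping the ranges in one table makes it straightforward to tune Sen-Len separately per platform, which is the comparison reported in Table 10.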

Table 10.

Task evaluation performance on test data with different sentence lengths.

The ablation experiment results indicate that all core components of the ML-MC model contribute significantly to its effectiveness, underscoring their importance in accurately detecting propaganda.

5. Discussion

The COVID-19 pandemic has underscored the critical role of social media in disseminating information, including news, fake news, propaganda, and self-reported symptoms. Our study analyzed COVID-19-related discussions on Facebook and Twitter and compiled the data into a unified repository named ProText. The primary objective was to identify various types of propaganda through multi-label multi-class (ML-MC) experiments with models such as RoBERTa and BERT, compared against traditional algorithms. We interpret the findings through the diffusion of innovations theory, with the aim of bridging the gap between the development of advanced methodologies and their practical application in combating the spread of misinformation on social media platforms.

5.1. Summary of the Findings

Optimization and performance: The analyses identified optimal parameters for the benchmark algorithms and showed that our ML-MC classification model outperformed state-of-the-art methods, with an accuracy of 88%. In the context of the diffusion of innovations theory [10], this result highlights the relative advantage and effectiveness of our models, which can support their rapid adoption. The model’s accuracy underscores its potential to help government and healthcare agencies combat misinformation and accelerate the diffusion of accurate information.

Feature extraction: The findings indicate that a consistent vocabulary across datasets reinforces the importance of reliable features in detecting propaganda. A consistent vocabulary and stable feature set are critical for the accuracy and effectiveness of NLP and text classification tasks [68]; through the lens of the diffusion of innovations theory, they also facilitate quicker and more efficient identification and dissemination of truthful information, thereby curbing the spread of propaganda by ensuring that key terms and phrases are consistently recognized and managed.

Transformer models and performance: The superior performance of the RoBERTa model, particularly when implemented with CRF and LSTM networks and dynamic masking, indicates the model’s robustness [69] in processing and filtering high volumes of information in NLP tasks [67]. From the perspective of the diffusion of innovations theory, such advanced models are critical for managing the rapid spread of both accurate and false information, enhancing the quality and trustworthiness of the information that is widely adopted.

Model comparison: Comparing different models (RoBERTa, BERT, MT-DNN, XLNet, and ALBERT) with integrated NLP features [55,56,67,69] highlighted the clear advantage of our ML-MC approach. Within the diffusion of innovations framework, demonstrating these models’ efficacy in ranking and disseminating information supports the notion that better tools significantly enhance the diffusion process by quickly distributing valuable, truthful information to a broader audience.

Robustness checks: Incorporating various categories and adjusting hyper-parameters to test the model’s resilience [25,65,66] confirmed its superior performance in propaganda prediction. The diffusion of innovations theory suggests that adaptable and robust tools are better suited to managing information in dynamic environments. This robustness indicates that our models can handle diverse scenarios effectively, improving the speed and accuracy with which important information diffuses; this aligns with crisis communication theory [70] and suggests that the model can be applied in multiple crisis scenarios.

Sentence length configuration: Optimizing sentence lengths separately for the Facebook and Twitter datasets improved accuracy on the NLP tasks [71]: a Sen-Len of 260 characters yielded 89.93% accuracy for Facebook, and a shorter Sen-Len of 60 characters yielded 76.08% accuracy for Twitter. This emphasizes the significance of sentence length and structure for the effectiveness of word representations and NLP models [72]. According to the diffusion of innovations theory, concise messaging can facilitate quicker adoption by reducing cognitive load and making it easier for users to process and share vital information. This, in turn, minimizes classification errors and enhances the overall effectiveness of information dissemination.

5.2. Theoretical Implications

This study makes significant theoretical contributions to the field of propaganda detection and classification, informed by the findings and the diffusion of innovations theory, which offers a robust framework for understanding the adoption and diffusion of advanced methodologies.

First, this research advances the theoretical understanding of propaganda detection by emphasizing the complexity of identifying subtle cues and concealed messages within textual data. Unlike previous studies that predominantly focused on document-level analysis [6,12,73], our work adopts a fine-grained ML-MC framework capable of detecting six distinct propaganda techniques and six COVID-19-related themes at the sentence level. This granularity allows for more nuanced filtering and classification, especially on social media platforms. The integration of the diffusion of innovations theory highlights the practical advantages of this approach, as it equips both IS researchers and general users to detect propaganda early, promoting the rapid dissemination of accurate and trustworthy information.

Second, this study introduces the ProText dataset, which addresses key gaps in prior datasets, such as PTC [11] and Proppy [7], that lacked comprehensive fact-checking and fine-grained labeling. ProText is curated at the sentence level and incorporates balanced annotations for both propaganda techniques and themes. This dataset not only serves as a valuable resource for IS researchers but also contributes to a deeper theoretical understanding of propaganda’s motivations, which extend beyond personal or organizational gains to societal impacts. Because it supports both traditional ML and advanced DL techniques, the dataset offers new opportunities for theoretical exploration within AI and IS research, while aligning with the diffusion of innovations theory to foster the rapid adoption of these methodologies in diverse domains.

Third, this study introduces a novel ML-MC model that bridges the theoretical gap between multi-label (ML) and multi-class (MC) tasks. This model provides a detailed framework for addressing challenges, such as media bias and fact-checking, while demonstrating clear advantages over traditional methods [74,75]. By unifying ML and MC tasks, this approach not only enhances the understanding of propaganda classification but also facilitates quicker adoption by showcasing its relative advantage, as emphasized in the diffusion of innovations theory. This contribution is especially relevant in crisis scenarios, such as natural disasters or political upheavals, where accurate and timely information dissemination is critical.

Finally, this research enhances the theoretical understanding of propaganda mechanisms, enabling IS researchers and practitioners to critically evaluate and counteract public opinion manipulation on social media platforms. By moving beyond traditional classification methods [57,64], the study underscores the importance of promoting user awareness and vigilance in digital environments. It introduces tools that enhance digital citizenship by safeguarding user privacy and fostering social equality while upholding freedom of speech. These advancements align with the diffusion of innovations theory by highlighting the innovation’s visibility and relative advantage, encouraging widespread adoption in the fight against misinformation.

These findings can help curb cybercrime and prevent fraud on digital platforms, and they support holding entities like Facebook and Twitter accountable for user privacy and digital integrity. They provide a robust reference point for future IS research, emphasizing the role of advanced DL techniques in safeguarding societal and digital ecosystems while fostering equitable and transparent communication channels.

5.3. Practical Implications

This study provides actionable insights and practical implications for various stakeholders, including social media platforms, policymakers, the general public, businesses, and IS researchers. These implications emphasize the utility of the proposed propaganda detection methodologies in combating misinformation and fostering a trustworthy digital environment.

First, social media platforms stand to gain significantly by adopting advanced propaganda detection techniques. Integrating the algorithms developed in this study can improve content quality and protect users from harmful propaganda, thereby enhancing user trust and engagement. This improved content integrity can bolster platform credibility, fostering sustainable growth and user loyalty. By employing robust detection filters, platforms such as Twitter and Facebook can effectively eliminate misleading content, delivering accurate and reliable information to their audiences. Such measures are critical in maintaining brand reputation and ensuring a positive user experience, ultimately supporting their long-term viability in a competitive digital ecosystem.

Second, policymakers can utilize these findings to establish and enforce regulatory frameworks requiring social media platforms to adopt effective propaganda detection mechanisms. By setting industry standards and accountability measures, policymakers can ensure the integrity of information shared online while safeguarding user privacy. This regulatory intervention can also curb cybercrime, fraud, and the dissemination of malicious content, thereby preserving the digital ecosystem’s integrity. Additionally, by promoting the implementation of advanced propaganda detection technologies, policymakers can contribute to the circulation of accurate and unbiased information, fostering public welfare, social equality, and freedom of speech.