A Systematic Review on the Combination of VR, IoT and AI Technologies, and Their Integration in Applications

Abstract

1. Introduction

- Identify the primary application domains of VR-AI-IoT integration.

- Identify and analyze methodologies, tools, and frameworks used for combining these technologies.

- Highlight unique advantages of their synergy.

- Discuss key limitations and challenges that hinder practical adoption.

- Offer directions for future research to advance interdisciplinary innovation.

2. Background

2.1. Virtual Reality

2.2. Internet of Things

2.3. Artificial Intelligence

2.4. Combined Technologies

3. Systematic Review Methodology

3.1. Research Questions

- RQ1: What are the primary fields of application where VR, AI, and IoT are used in combination?

- RQ2: How are the three core technologies studied (VR-AI-IoT) or their subsets currently being integrated in different application domains, and what unique advantages does their combined usage offer compared to isolated or paired applications?

- RQ3: What methodologies, tools, and architectures are commonly employed in studies combining VR, AI, and IoT?

- RQ4: What are the current limitations and challenges in combining VR, AI, and IoT, particularly regarding data collection, interoperability, and user experience?

- RQ5: Are there any emerging frameworks or models of the seamless combination of the three technologies, for specific application domains?

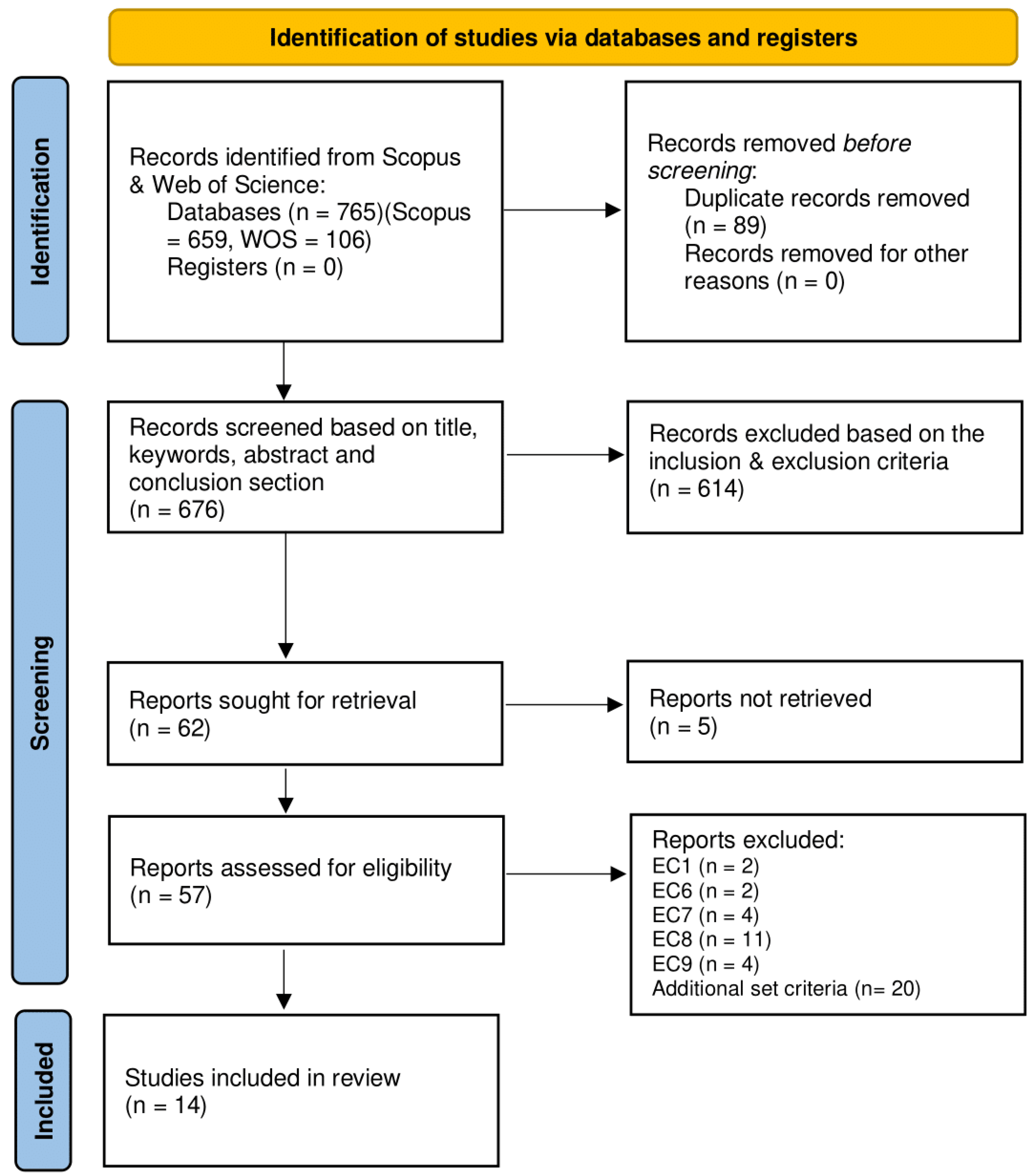

3.2. Eligibility Criteria

- Inclusion Criteria:
  - IC1: Studies published between 2020 and 2024
  - IC2: Peer-reviewed articles
  - IC3: Referring to at least two combined technologies
  - IC4: Full text available in English

- Exclusion Criteria:
  - EC1: Review papers, editorials, or opinion pieces
  - EC2: Grey literature (technical reports, white papers, blogs)
  - EC3: Retracted publications
  - EC4: Publications without a DOI
  - EC5: Duplicate publications of the same study
  - EC6: Papers presenting single-technology implementations
  - EC7: Papers presenting system architecture proposals without any prototype implementation
  - EC8: Content with missing or unclear methodology
  - EC9: Papers without adequate proof of a system's existence, or with inadequate testing of the proof-of-concept implementation. Simulations with fabricated data are not considered adequate, nor is testing that focuses mainly on a single part of the system (e.g., AI model improvements within IoT, or data transfer latency). Papers that neither show images nor mention a testbed or experiment are also excluded. Only implementations or experiments that use the proposed system in its entirety are considered, regardless of scale, as long as the system could be applied to real-life scenarios, even after further improvements.
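The eligibility criteria above amount to a screening filter applied to each candidate publication. The Python sketch below is illustrative only and is not the authors' screening tool: it assumes each record has already been coded by a reviewer into simple fields, and the `Record` fields, the publication-type labels, and the rule that a repeated DOI (compared case-insensitively) triggers EC5 are all hypothetical choices. Judgement-heavy criteria such as EC8 and EC9 are reduced to reviewer-set booleans. A record is kept only when it fails no IC or EC code, and the failed codes are retained for each exclusion, which is the kind of per-reason tally a PRISMA flow diagram reports.

```python
from dataclasses import dataclass
from typing import Optional

# The three core technologies; IC3/EC6 check how many of them a study combines.
CORE_TECHNOLOGIES = {"VR", "AI", "IoT"}
# Hypothetical publication-type labels used for EC1 and EC2.
EC1_TYPES = {"review", "editorial", "opinion"}
EC2_TYPES = {"technical report", "white paper", "blog"}


@dataclass
class Record:
    """One candidate publication, as a reviewer might code it during full-text screening."""
    title: str
    year: int
    peer_reviewed: bool
    technologies: set
    full_text_english: bool
    publication_type: str = "article"
    retracted: bool = False
    doi: Optional[str] = None
    has_prototype: bool = False      # EC7: more than an architecture proposal
    methodology_clear: bool = False  # EC8: reviewer judgement
    tested_end_to_end: bool = False  # EC9: whole system tested, no fabricated data


def criteria_failed(r: Record) -> list:
    """Return the code of every inclusion (IC) or exclusion (EC) criterion the record fails."""
    failed = []
    if not 2020 <= r.year <= 2024:
        failed.append("IC1")
    if not r.peer_reviewed:
        failed.append("IC2")
    if len(r.technologies & CORE_TECHNOLOGIES) < 2:
        failed += ["IC3", "EC6"]  # single-technology work fails both criteria
    if not r.full_text_english:
        failed.append("IC4")
    if r.publication_type in EC1_TYPES:
        failed.append("EC1")
    if r.publication_type in EC2_TYPES:
        failed.append("EC2")
    if r.retracted:
        failed.append("EC3")
    if not r.doi:
        failed.append("EC4")
    if not r.has_prototype:
        failed.append("EC7")
    if not r.methodology_clear:
        failed.append("EC8")
    if not r.tested_end_to_end:
        failed.append("EC9")
    return failed


def screen(records):
    """Split records into included ones and a {title: failed codes} map; repeated DOIs fail EC5."""
    included, excluded, seen_dois = [], {}, set()
    for r in records:
        failed = criteria_failed(r)
        doi_key = r.doi.lower() if r.doi else None
        if doi_key is not None and doi_key in seen_dois:
            failed.append("EC5")
        if failed:
            excluded[r.title] = failed
        else:
            included.append(r)
            seen_dois.add(doi_key)
    return included, excluded


# Usage with made-up records (titles and DOIs are placeholders, not real studies).
records = [
    Record("Study A", 2021, True, {"AI", "IoT", "VR"}, True, doi="10.0000/example-a",
           has_prototype=True, methodology_clear=True, tested_end_to_end=True),
    Record("Study A (second venue)", 2021, True, {"AI", "IoT", "VR"}, True,
           doi="10.0000/EXAMPLE-A", has_prototype=True, methodology_clear=True,
           tested_end_to_end=True),
    Record("Study B", 2019, True, {"VR"}, True, publication_type="review",
           doi="10.0000/example-b"),
]
included, excluded = screen(records)
print([r.title for r in included])  # ['Study A']
print(excluded)
```

Keeping the list of failed codes, rather than stopping at the first failure, makes it easy to report how many records each criterion removed.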

- Number of citations to the paper.

- Impact factor of the publishing journal.

- Clarity of the methodology followed (e.g., tables, graphs, step-by-step details).

3.3. Search Queries & Databases

3.4. PRISMA

4. Overview of Included Studies

| Work | Application Field | Technology Combination | Type of Implementation | Methodology, Models & Tools Used | Open Technology | Advantages | Limitations and Challenges | Key Results |
|---|---|---|---|---|---|---|---|---|
| [65] Zixuan Zhang et al. (2020) | Healthcare, Smart home, Entertainment | AI + IoT + VR | Real scenario in gait monitoring as well as other applications | T-TENGs, 1D CNN, Python with Keras and TensorFlow, Unity for VR | Not specified | Low-cost, Self-powered, High accuracy in gait identification (93.54%), Potential for real-time monitoring without privacy concerns, Additional applications in VR games and smart home systems | Limited accuracy with an increasing number of participants, Environmental sensitivity affecting sensor outputs, Lower accuracy when using a single sensor, Need for larger datasets for improved model performance | Introduced a complete, non-privacy-invasive wearable electronic system based on smart socks. Achieved 93.54% accuracy in gait identification among 13 participants and 96.67% accuracy in detecting five different human activities useful for healthcare. Demonstrated potential applications in VR fitness games and smart home systems |
| [66] Zhongda Sun et al. (2021) | Industry, Retail | AI + IoT + VR | Simulation with real items, proposed real-life scenario | T-TENG, L-TENG, PVDF, Python with Keras, 1D-CNN, SVM, DBN, Arduino Mega 2560, Oscilloscope | Not specified | Low-cost, High accuracy (97.14%), Works in low-light conditions, which is important for industrial applications, Immersive VR experience through DTs, Integrated temperature sensing adds new capabilities, Allows for a comprehensive understanding of the products handled in the VR world | Need for effective sensor fusion, Difficulty recognizing similarly shaped objects, Recognition accuracy influenced by grasping angle and, for the PVDF sensor, by contact pressure, Limited dataset potentially affecting generalizability, Possible noticeable time lag between the virtual and real worlds, Future efforts could enhance robustness in diverse settings and applications | Successfully developed a soft robotic manipulator that integrates multiple sensors for enhanced object recognition and user interaction in a virtual shopping environment. The system achieved high recognition accuracy with potential for real-time applications in unmanned working spaces. It also proposed a shop digital twin and a two-way communication concept |
| [67] Abdul Rehman Javed et al. (2023) | Healthcare | AI + IoT | Real small-scale testing | Smartphone sensors (Accelerometer, Gyroscope, Magnetometer, and GPS), Python for AI, Hoeffding Tree, Logistic Regression, Naive Bayes, k-Means | Not specified | Privacy-preserving solution, Real-time human activity recognition and assistance, Acceptable accuracy (90%), Easy access and relatively low cost given the use of smartphone sensors | No multi-resident home implementation, Need for optimal location clustering to improve activity recognition accuracy, as existing methods may not adequately differentiate between closely located activities, Collecting a more diverse dataset with varied activities and participants, along with real-world testing, could enhance performance | Successfully integrated AI and IoT to provide real-time support for the cognitively impaired, with potential for improved health assessments and personalized care plans. The findings underscore the importance of privacy preservation when handling sensitive user data. Accuracy of over 90% in recognizing daily life activities from sensor data |
| [68] Jun Zhang and Yonghang Tai (2022) | Healthcare | AI + IoT + VR | Real testing scenario | Unity for the DT, CNN for treatment prediction; for vulnerability detection: CodeBERT, Word2vec, GloVe, FastText; for the training simulator: Laptop, Undisclosed force feedback devices, Oculus VR headsets, Positioners, Cameras | Not specified | Immersive surgical training through real-time feedback and interaction, Proven to improve novice doctors' skills, Implementation of a new vulnerability tolerance scheme that enhances cyber resilience | Limited scope of clinical data, restricted to lung biopsy, System may not fully replicate real-world conditions, Relatively low accuracy of the proposed cybersecurity measure, Insights from healthcare professionals could be improved with better evaluation metrics, Scalability and cost implications not addressed | The proposed medical digital twin (MDT) significantly improved novice doctors’ surgical skills to near-expert levels, using a custom CNN for treatment suggestions. Mixed Reality (MR) training was highly effective, and CodeBERT enhanced the cyber security of the MDT by improving software vulnerability identification accuracy |
| [69] Lorenzo Stacchio et al. (2022) | Industry, Healthcare, Smart home and more | AI + IoT + VR | Real testing scenario, but an observational study regarding the manufacturing setting | Microsoft HoloLens 2 for AR, HTC VIVE for VR, Unity for VR, YOLOv5 for object detection, SIFT (Scale-Invariant Feature Transform) for image matching | Not specified | Real-time collaboration and knowledge sharing through human annotations, Enhanced learning experience and better communication in a variety of fields, Interconnects the physical and virtual worlds through parallel DTs, thus keeping the advantages of both | Need for improved annotation retrieval methods, Reliance on user engagement for annotations, Advanced technologies such as XR and crowd intelligence may introduce technical challenges, Scalability may be an issue due to potential information overload, Testing with a wider audience could be beneficial | A versatile DT annotation system: the HCLINT-DT framework supports knowledge preservation and sharing through annotations. It connects the real world with a virtual digital twin via AR and VR, fostering collaborative learning. An online survey of 30 participants found the AR interface easy to use, though some preferred traditional photo albums over augmented ones |
| [70] Sabryna V. Fernandes et al. (2022) | Industry | AI + IoT + VR | Real live laboratory scenario | Mobile Mapping Systems (MMS), Drones for aerial imaging, Thermal cameras for asset inspection, Ground Penetrating Radar (GPR) for underground mapping, LiDAR to identify object characteristics and for 3D image representation, SQL, Undisclosed AI algorithms/models | Not specified | Attempt to include legacy devices, Significant reduction in inspection working hours, Decrease in on-field time for various activities, Dynamic and real-time monitoring, Predictive maintenance, VR training without the risks of real-life tasks, Cost-effectiveness | Costly high-quality equipment, Complexity of integrating various data sources, Need to extend data integration to legacy devices, Could incorporate more sophisticated data analytics tools, elaborate on the additional information accessible to users through the DT, and develop a continuous user feedback mechanism, Information overload to be addressed | The digital twin for the electrical distribution system improved operational efficiency, using high-end tools, IoT sensors, and knowledge from previous studies. It reduced inspection hours by 90% and on-field time by 65%. DTs enhanced asset management and industrial collaboration through VR and AR, as well as service quality in smart cities |
| [71] Haymontee Khan et al. (2021) | Education | AI + VR | Real testing scenario | Smartphones, Google Cardboard, Decision Tree algorithm for learning assistance | Not specified | Teaching app designed to work on low-specification devices that incorporates AI, AR, and VR through low-cost tools | Ensuring access to affordable technology for all students, No cost-effectiveness analysis provided, Does not address long-term effectiveness, scalability, or potential technological barriers faced by students, Extremely limited testing user base (20 students), Limited AI capabilities without advanced methods such as deep learning and neural networks, No feedback mechanism, Content is very limited and not expandable by users, UI could be improved, Does not consider ways to complement traditional educational methods and support diverse learning styles | Proposed an AR-, VR-, and AI-enhanced educational system offering interactive material and assistance in quizzes. Testing showed that students who used it performed better than those who did not |
| [72] Jian Wang et al. | Education | AI + IoT + VR | Simulation experiments | Kinect, Undisclosed VR headsets, VR-ready computers, SVM and PSO, MySQL, Microsoft SQL Server 2018 and ASP.NET for web applications, MATLAB 2019 for simulations | No code or files provided, but the paper is very detailed and provides data openly, making it easier to replicate | Advanced AI capabilities with cutting-edge algorithms and optimization, Feedback mechanism, Expandable content, Takes conventional teaching methods into account, Provides a complete framework from activity evaluation to curriculum and instructional material building | Yet to be implemented in a real higher education classroom, Need for more robust data management, Could benefit from user experience feedback and long-term effectiveness testing, A cost-effectiveness analysis would also be useful | An immersive VR-based information management system was created to enhance college physical education (PE) by integrating AI algorithms such as SVM and PSO and providing real-time feedback using Kinect. The optimized model significantly improved performance metrics, showcasing the potential of this technology for physical education |
| [75] Kuan-Ting Lai et al. | Smart Cities | AI + IoT + VR | Real-world testing and simulations | ArduPilot autopilot, MAVLink protocol, Android device with Snapdragon CPU, Microsoft AirSim, TensorFlow Lite, MobileNet SSD for object detection, Elliptic-curve cryptography, TCP and WebRTC protocols, and the following drones: Bebop 2, F450, and X800 | Open source on GitHub | Low-cost conversion of standard drones into AIoT drones, Ability to run real-time AI models for tasks such as object detection, Secure cloud server for managing drone fleets, VR simulations for training and testing, Open-source approach | Drone autonomy and weight could be improved, Could add more VR environments and AI models, Could also showcase optimal route planning scenarios | The AI Wings system effectively commands and controls multiple AIoT drones, achieving real-time object detection at over 30 FPS using MobileNet SSD on a Snapdragon 855. An experimental medical drone service showcased the system's capability to deliver automated external defibrillators (AEDs) quickly, showing practical applications in emergency scenarios |
| [77] Qian Qu et al. (2024) | Smart Cities | AI + IoT + VR | Real testing scenario with a proof-of-concept prototype | Unreal Engine 5, YOLOv8 for object detection, Android device, Drones, Meta software, Meta Quest 3, RTSP protocol | Not specified | Real-time data processing and monitoring, Integration of physical and digital environments | No detection accuracy reported, Lack of a comprehensive security analysis of the implemented protocols, No user experience evaluation, Network slicing layer and microchained security networks yet to be implemented in real testing, Ethical considerations could also be discussed | Integration of AI, IoT, and VR in UAVs enabled real-time object detection and situational awareness with low latency. These findings validate the feasibility of the microverse concept, which enhances public safety and urban governance by providing dynamic digital representations of real-world scenarios |
| [78] Shun-Ren Yang (2023) | Smart Cities | AI + IoT | Real testbed scenarios | SIP, LSTM, CNN, Hybrid blockchain architecture for secure messaging | According to the article, the project was open and downloadable from GitHub, but it is no longer available (date checked: 1 January 2025) | Low latency and high quality of experience for messaging- and streaming-based AIoT applications through the more advanced SIP, Addresses the limitations of current IoT service platforms, which struggle to support diverse AIoT applications requiring multimedia capabilities | More details about the experiments are needed, such as where and in how many places the system was actually deployed, Audio recognition accuracy needs improvement, Does not address user experience | Introduced the low-latency AIoT platform AIoTtalk and showcased road traffic prediction and neighborhood violence detection applications |
| [73] Paolo Sernani (2020) | Art & Culture | AI + IoT | Laboratory simulations | Sewio UWB tags and antennas, Apache Cordova for app development, Google’s speech-to-text services for voice recognition, Redis for in-memory database management | Not specified | Enhances museum visitor engagement through personalized, voice-based interactions with artworks, Attempts to avoid distractions from digital displays, Allows the use of natural language, Detects the user's proximity to exhibits through tracking tags, Offers an experience that would not be possible with a traditional audio or human guide | Lack of multilingual support, Not tested in noisy environments, No formal evaluation methodology, No user study or quantitative data on system effectiveness and learning engagement, No discussion of scalability and concurrent users, No XR capabilities, No analysis of behavior patterns | Provided personalized museum visits through voice and text interactions, demonstrating the potential to enhance user engagement without overwhelming visitors with information. The system can effectively localize users and provide relevant information |
| [74] Yunqi Guo (2024) | Art & Culture | AI + IoT + VR | Real testing with 2 public datasets and 1 created by the authors | LangChain framework for AI agent construction, WebXR with three.js for AR rendering, GPT-3.5 and GPT-4 LLMs for scene description generation, DreamGaussian, MVDream and Genie for text-to-3D conversion, Blender for 3D rendering, AR goggles with attached camera, Meta Quest 3 | Not specified | Real-time sensor data visualization in AR with low latency, Dynamic scene adaptation, High fidelity in IoT data interpretation, Cross-platform compatibility, Enhanced user interaction that takes user feedback into account, Good interoperation of many tools and technologies | LLMs struggled to map sensor data accurately to real-world entities, Some generative models produced less detailed textures and object collisions, No real-world deployment, Limited evaluation of user feedback, Potential computational overhead for real-time scene generation on lower-end devices, Heavy reliance on predefined LLM prompts, limiting flexibility in unexpected scenarios | Proposed a framework that uses IoT sensor and spatial data to generate immersive, contextually relevant AR scenes, achieving high scores in fidelity and utilization. The system transforms intangible environmental data into contextual visual representations with low latency and high accuracy, enhancing users’ situational awareness and interactions by depicting the environment’s status in an artistic way |
| [79] Jae-won Lee et al. (2023) | Entertainment, Metaverse | AI + IoT + VR | Limited lab testing, but with a working proof | Webcam for image capture, PoseNet for pose estimation and a Teleport Information Extractor for posture classification, Raspberry Pi 4, Google Coral USB Accelerator, MCPI (Minecraft Pi Edition API), Mobius as the IoT server, MobileNet V1 as the backbone network, MQTT and HTTPS for data transfer | Not specified | Allows unrestricted content consumption without spatial constraints, Compatible with devices adhering to the oneM2M standard, Network-based rather than wired connections, enhancing user mobility and interaction in the metaverse | Very limited functionality and use case, Requires specific lighting conditions, No discussion of recognition latency and accuracy, No comparison to existing solutions, Not scalable, Seems impractical for actual applications, No consideration of industry standards beyond oneM2M, Limited testing, Application showcased only in the Minecraft video game environment | A system that estimates user postures using an AIoT device and teleports user avatars in a metaverse environment based on these postures. The experiments demonstrated effective communication between the AIoT device and the metaverse server, allowing real-time repositioning of avatars based on user movements |
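The technology-combination and application-field columns of the overview table can be summarized with a simple tally. The Python sketch below is a bookkeeping aid under stated assumptions, not the authors' analysis: it assumes the column values exactly as transcribed from the table rows, and it drops the unnamed "and more" fields of [69]. It counts studies per combination (with their share of the 14 included studies) and per application field.

```python
from collections import Counter

# Transcribed from the overview table: reference -> (technology combination, application fields).
# The unnamed "and more" fields of [69] are omitted.
STUDIES = {
    65: ("AI + IoT + VR", ["Healthcare", "Smart home", "Entertainment"]),
    66: ("AI + IoT + VR", ["Industry", "Retail"]),
    67: ("AI + IoT", ["Healthcare"]),
    68: ("AI + IoT + VR", ["Healthcare"]),
    69: ("AI + IoT + VR", ["Industry", "Healthcare", "Smart home"]),
    70: ("AI + IoT + VR", ["Industry"]),
    71: ("AI + VR", ["Education"]),
    72: ("AI + IoT + VR", ["Education"]),
    73: ("AI + IoT", ["Art & Culture"]),
    74: ("AI + IoT + VR", ["Art & Culture"]),
    75: ("AI + IoT + VR", ["Smart Cities"]),
    77: ("AI + IoT + VR", ["Smart Cities"]),
    78: ("AI + IoT", ["Smart Cities"]),
    79: ("AI + IoT + VR", ["Entertainment", "Metaverse"]),
}


def tally(studies):
    """Count studies per technology combination (with % share) and per application field."""
    combos = Counter(combo for combo, _ in studies.values())
    total = len(studies)
    combo_share = {c: (n, round(100 * n / total, 1)) for c, n in combos.most_common()}
    # A study may list several fields, so field counts can sum to more than the study count.
    fields = Counter(f for _, fs in studies.values() for f in fs)
    return combo_share, fields


combo_share, fields = tally(STUDIES)
for combo, (n, pct) in combo_share.items():
    print(f"{combo:<14}{n:>3} studies ({pct}%)")
print(fields.most_common(3))
```

With these transcriptions, 10 of the 14 studies (71.4%) combine all three technologies, 3 (21.4%) combine AI and IoT, and 1 (7.1%) combines AI and VR. Because fields are multi-label, the field counts should be read as frequencies of mention rather than as shares of the 14 studies.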

5. Addressing the Research Questions

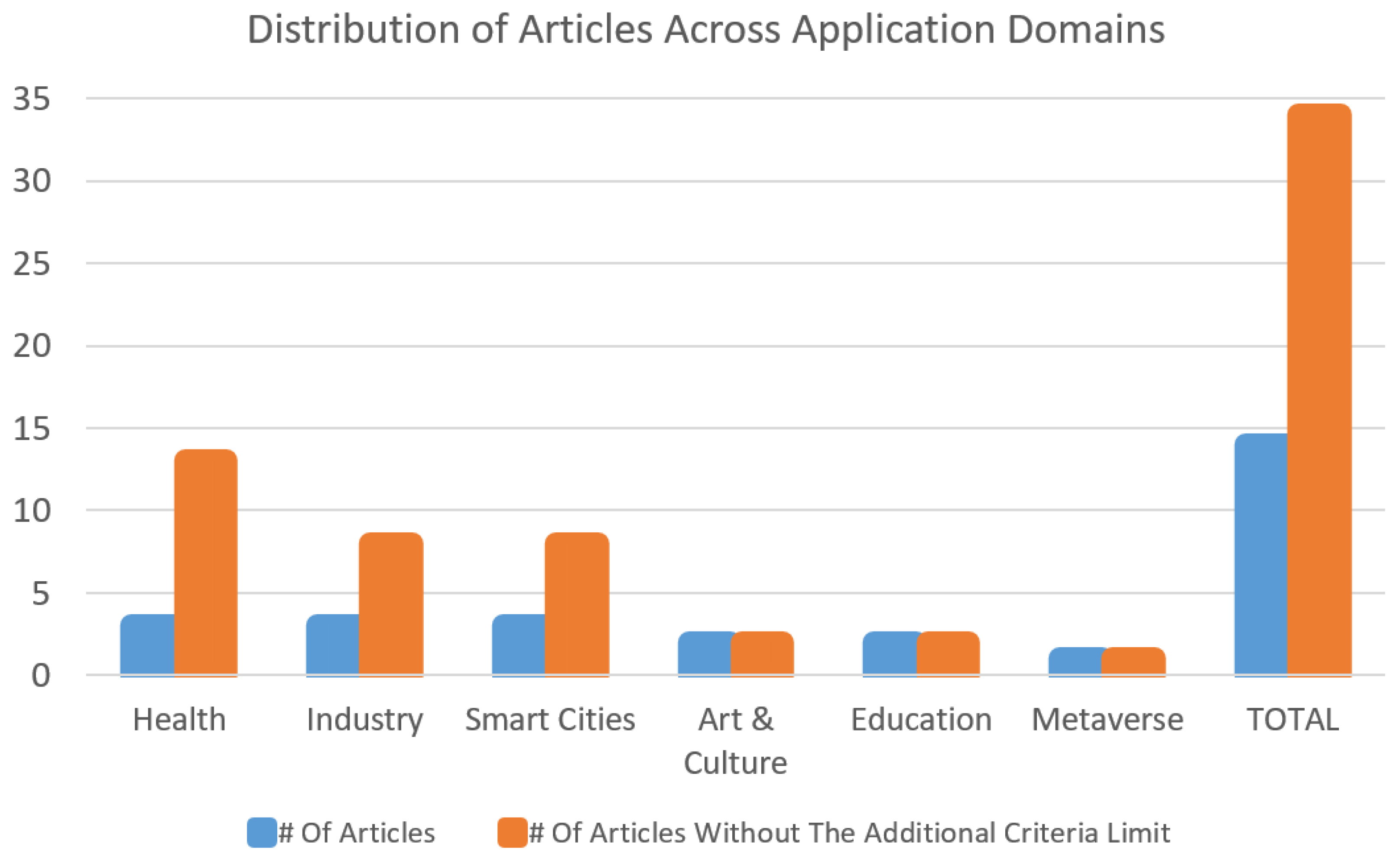

5.1. RQ1: What Are the Primary Fields of Application Where VR, AI, and IoT Are Used in Combination?

5.2. RQ2: How Are the Three Core Technologies Studied (VR-AI-IoT) or Their Subsets Currently Being Integrated in Different Application Domains, and What Unique Advantages Does Their Combined Usage Offer Compared to Isolated or Paired Applications?

5.2.1. Healthcare

5.2.2. Industry 4.0

5.2.3. Smart Cities

5.2.4. Education

5.2.5. Art & Culture

5.3. RQ3: What Methodologies, Tools and Architectures Are Commonly Employed in Studies Combining VR, AI, and IoT?

5.3.1. Digital Twins

5.3.2. Layered Architectures

5.3.3. Machine Learning Models

5.3.4. Communication Protocols

5.3.5. Tools and Platforms

5.4. RQ4: What Are the Current Limitations and Challenges in Combining VR, AI, and IoT, Particularly Regarding Data Collection, Interoperability, and User Experience?

5.5. RQ5: Are There Any Emerging Frameworks or Models of the Seamless Combination of the Three Technologies, for Specific Application Domains?

6. Future Research Directions

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition | Abbreviation | Definition |
|---|---|---|---|
| AI | Artificial Intelligence | NLP | Natural Language Processing |
| AIoT | Artificial Intelligence of Things | PET | Privacy-Enhancing Technologies |
| CNN | Convolutional Neural Network | PLC | Programmable Logic Controller |
| DBN | Deep Belief Network | PoC | Proof of Concept |
| DL | Deep Learning | PSO | Particle Swarm Optimization |
| DP | Differential Privacy | PVDF | PolyVinyliDene Fluoride |
| DT | Digital Twin | QoS | Quality of Service |
| EDT | Emerging Disrupted Technologies | R-CNN | Region-based Convolutional Neural Networks |
| ET | Emerging Technologies | RNN | Recurrent Neural Network |
| FL | Federated Learning | RSU | Roadside Unit |
| HCI | Human Computer Interaction | SIP | Session Initiation Protocol |
| HE | Homomorphic Encryption | SUS | System Usability Scale |
| HRC | Human-Robot Collaboration | SVM | Support Vector Machine |
| IoT | Internet of Things | TENG | TriboElectric NanoGenerators |
| IoV | Internet of Vehicles | T-TENG | Tactile TriboElectric NanoGenerators |
| LiDAR | Light Detection And Ranging | UAV | Unmanned Aerial Vehicle |
| LLM | Large Language Model | UWB | Ultra-WideBand |
| LSTM | Long Short-Term Memory | VR | Virtual Reality |
| L-TENG | Length TriboElectric NanoGenerators | WOS | Web of Science |
| MaaS | Mobility as a Service | XAI | eXplainable AI |
| MCU | Microcontroller Unit | XR | eXtended Reality |
| ML | Machine Learning | | |
| NDT | Network Digital Twin | | |

References

- Yang, U.; Son, H.; Han, K. Developing a Realistic VR Interface to Recreate a Full-body Immersive Fire Scene Experience. In Proceedings of the SIGGRAPH Asia 2023 Posters Conference, Sydney, NSW, Australia, 12–15 December 2023; Association for Computing Machinery: New York, NY, USA, 2023.

- Innocente, C.; Ulrich, L.; Moos, S.; Vezzetti, E. A framework study on the use of immersive XR technologies in the cultural heritage domain. J. Cult. Herit. 2023, 62, 268–283.

- Saeed, M.; Khan, A.; Khan, M.; Saad, M.; El Saddik, A.; Gueaieb, W. Gaming-Based Education System for Children on Road Safety in Metaverse Towards Smart Cities. In Proceedings of the 2023 IEEE International Smart Cities Conference (ISC2), Bucharest, Romania, 24–27 September 2023; pp. 1–5.

- Lai, Y.H.; Chen, S.Y.; Lai, C.F.; Chang, Y.C.; Su, Y.S. Study on enhancing AIoT computational thinking skills by plot image-based VR. Interact. Learn. Environ. 2021, 29, 482–495.

- Epp, R.; Lin, D.; Bezemer, C.P. An Empirical Study of Trends of Popular Virtual Reality Games and Their Complaints. IEEE Trans. Games 2021, 13, 275–286.

- Naranjo, J.E.; Sanchez, D.G.; Robalino-Lopez, A.; Robalino-Lopez, P.; Alarcon-Ortiz, A.; Garcia, M.V. A Scoping Review on Virtual Reality-Based Industrial Training. Appl. Sci. 2020, 10, 8224.

- Lopez, M.A.; Terrón, S.; Lombardo, J.M.; González-Crespo, R. Towards a solution to create, test and publish mixed reality experiences for occupational safety and health learning: Training-MR. Int. J. Interact. Multimed. Artif. Intell. 2021, 7, 212.

- Rizzo, A.; Goodwin, G.; De Vito, A.; Bell, J. Recent Advances in Virtual Reality and Psychology: Introduction to the Special Issue. Transl. Issues Psychol. Sci. 2021, 7, 213–217.

- Maslej, N.; Fattorini, L.; Perrault, R.; Parli, V.; Reuel, A.; Brynjolfsson, E.; Etchemendy, J.; Ligett, K.; Lyons, T.; Manyika, J.; et al. Artificial Intelligence Index Report 2024; Stanford Institute for Human-Centered Artificial Intelligence, 2024. Available online: https://hai-production.s3.amazonaws.com/files/hai_ai-index-report-2024-smaller2.pdf (accessed on 25 January 2025).

- Muralikrishna, V.; Vijayalakshmi, M. Autonomous Human Computer Interaction System in Windows Environment Using YOLO and LLM. In Proceedings of the 4th International Conference on Artificial Intelligence and Smart Energy; Manoharan, S., Tugui, A., Baig, Z., Eds.; Springer Nature: Cham, Switzerland, 2024; pp. 157–169.

- Akpan, I.J.; Kobara, Y.M.; Owolabi, J.; Akpan, A.A.; Offodile, O.F. Conversational and generative artificial intelligence and human–chatbot interaction in education and research. Int. Trans. Oper. Res. 2024, 32, 1251–1281.

- Kalla, D.; Smith, N.; Samaah, F.; Kuraku, S. Study and analysis of chat GPT and its impact on different fields of study. Int. J. Innov. Sci. Res. Technol. 2023, 8, 827–833.

- Al-Fuqaha, A.; Guizani, M.; Mohammadi, M.; Aledhari, M.; Ayyash, M. Internet of Things: A Survey on Enabling Technologies, Protocols, and Applications. IEEE Commun. Surv. Tutor. 2015, 17, 2347–2376.

- Reiners, D.; Davahli, M.R.; Karwowski, W.; Cruz-Neira, C. The Combination of Artificial Intelligence and Extended Reality: A Systematic Review. Front. Virtual Real. 2021, 2, 721933.

- Muhammed, D.; Ahvar, E.; Ahvar, S.; Trocan, M.; Montpetit, M.J.; Ehsani, R. Artificial Intelligence of Things (AIoT) for smart agriculture: A review of architectures, technologies and solutions. J. Netw. Comput. Appl. 2024, 228, 103905.

- Hu, M.; Luo, X.; Chen, J.; Lee, Y.C.; Zhou, Y.; Wu, D. Virtual reality: A survey of enabling technologies and its applications in IoT. J. Netw. Comput. Appl. 2021, 178, 102970.

- Ribeiro de Oliveira, T.; Biancardi Rodrigues, B.; Moura da Silva, M.; Spinassé, R.A.N.; Giesen Ludke, G.; Ruy Soares Gaudio, M.; Iglesias Rocha Gomes, G.; Guio Cotini, L.; da Silva Vargens, D.; Queiroz Schimidt, M.; et al. Virtual Reality Solutions Employing Artificial Intelligence Methods: A Systematic Literature Review. ACM Comput. Surv. 2023, 55, 1–29.

- Adli, H.K.; Remli, M.A.; Wan Salihin Wong, K.N.S.; Ismail, N.A.; González-Briones, A.; Corchado, J.M.; Mohamad, M.S. Recent Advancements and Challenges of AIoT Application in Smart Agriculture: A Review. Sensors 2023, 23, 3752.

- Chaturvedi, R.; Verma, S.; Ali, F.; Kumar, S. Reshaping Tourist Experience with AI-Enabled Technologies: A Comprehensive Review and Future Research Agenda. Int. J. Hum.–Comput. Interact. 2023, 40, 5517–5533.

- Pyun, K.R.; Rogers, J.A.; Ko, S.H. Materials and devices for immersive virtual reality. Nat. Rev. Mater. 2022, 7, 841–843.

- Baashar, Y.; Alkawsi, G.; Wan Ahmad, W.N.; Alomari, M.A.; Alhussian, H.; Tiong, S.K. Towards Wearable Augmented Reality in Healthcare: A Comparative Survey and Analysis of Head-Mounted Displays. Int. J. Environ. Res. Public Health 2023, 20, 3940.

- Liberatore, M.J.; Wagner, W.P. Virtual, mixed, and augmented reality: A systematic review for immersive systems research. Virtual Real. 2021, 25, 773–799.

- Al-Ansi, A.M.; Jaboob, M.; Garad, A.; Al-Ansi, A. Analyzing augmented reality (AR) and virtual reality (VR) recent development in education. Soc. Sci. Humanit. Open 2023, 8, 100532.

- Velazquez-Pimentel, D.; Hurkxkens, T.; Nehme, J. A Virtual Reality for the Digital Surgeon. In Digital Surgery; Springer International Publishing: Cham, Switzerland, 2020; pp. 183–201.

- Chheang, V.; Schott, D.; Saalfeld, P.; Vradelis, L.; Huber, T.; Huettl, F.; Lang, H.; Preim, B.; Hansen, C. Advanced liver surgery training in collaborative VR environments. Comput. Graph. 2024, 119, 103879.

- Marougkas, A.; Troussas, C.; Krouska, A.; Sgouropoulou, C. How personalized and effective is immersive virtual reality in education? A systematic literature review for the last decade. Multimed. Tools Appl. 2023, 83, 18185–18233.

- Thomas, A. Virtual Reality (VR)—Statistics & Facts. 2025. Available online: https://www.statista.com/topics/2532/virtual-reality-vr/ (accessed on 25 January 2025).

- Kari, T.; Kosa, M. Acceptance and use of virtual reality games: An extension of HMSAM. Virtual Real. 2023, 27, 1585–1605.

- Weinstein, L.; Chardonnet, J.R.; Merienne, F. Cybersickness and the perception of latency in immersive virtual reality. Front. Virtual Real. 2020, 1, 582204.

- Hatami, M.; Qu, Q.; Chen, Y.; Kholidy, H.; Blasch, E.; Ardiles-Cruz, E. A Survey of the Real-Time Metaverse: Challenges and Opportunities. Future Internet 2024, 16, 379.

- Hamad, A.; Jia, B. How Virtual Reality Technology Has Changed Our Lives: An Overview of the Current and Potential Applications and Limitations. Int. J. Environ. Res. Public Health 2022, 19, 11278.

- Meske, C.; Hermanns, T.; Jelonek, M.; Doganguen, A. Enabling Human Interaction in Virtual Reality: An Explorative Overview of Opportunities and Limitations of Current VR Technology. In HCI International 2022 – Late Breaking Papers: Interacting with eXtended Reality and Artificial Intelligence; Chen, J.Y.S., Fragomeni, G., Degen, H., Ntoa, S., Eds.; Springer Nature: Cham, Switzerland, 2022; pp. 114–131.

- Abbasi, M.; Váz, P.; Silva, J.; Martins, P. Enhancing Visual Perception in Immersive VR and AR Environments: AI-Driven Color and Clarity Adjustments Under Dynamic Lighting Conditions. Technologies 2024, 12, 216.

- Ashtari, N.; Bunt, A.; McGrenere, J.; Nebeling, M.; Chilana, P.K. Creating Augmented and Virtual Reality Applications: Current Practices, Challenges, and Opportunities. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (CHI ’20); Association for Computing Machinery: New York, NY, USA, 2020; pp. 1–13.

- Minerva, R.; Biru, A.; Rotondi, D. Towards a definition of the Internet of Things (IoT). In Proceedings of the Towards a Definition of the Internet of Things (IoT). IEEE; 2015. Available online: https://iot.ieee.org/images/files/pdf/IEEE_IoT_Towards_Definition_Internet_of_Things_Revision1_27MAY15.pdf (accessed on 25 January 2025).

- Shovic, J.C. Raspberry Pi IoT Projects: Prototyping Experiments for Makers; Apress: Berkley, CA, USA, 2021. [Google Scholar] [CrossRef]

- Pliatsios, A.; Goumopoulos, C.; Kotis, K. Interoperability in IoT: A Vital Key Factor to Create the “Social Network” of Things. In Proceedings of the 13hh International Conference on Mobile Ubiquitous Computing, Systems, Services and Technologies (UBICOMM 2019), Porto, Portugal, 22–26 September 2019. [Google Scholar]

- Gokhale, P.; Bhat, O.; Bhat, S. Introduction to IOT. Int. Adv. Res. J. Sci. Eng. Technol. 2018, 5, 41–44. [Google Scholar]

- Li, K.; Cui, Y.; Li, W.; Lv, T.; Yuan, X.; Li, S.; Ni, W.; Simsek, M.; Dressler, F. When Internet of Things meets Metaverse: Convergence of Physical and Cyber Worlds. arXiv 2022, arXiv:2208.13501. [Google Scholar] [CrossRef]

- Chen, J.; Shi, Y.; Yi, C.; Du, H.; Kang, J.; Niyato, D. Generative AI-Driven Human Digital Twin in IoT-Healthcare: A Comprehensive Survey. arXiv 2024, arXiv:2401.13699.

- Al-Nbhany, W.A.N.A.; Zahary, A.T.; Al-Shargabi, A.A. Blockchain-IoT Healthcare Applications and Trends: A Review. IEEE Access 2024, 12, 4178–4212.

- Omrany, H.; Al-Obaidi, K.M.; Hossain, M.; Alduais, N.A.M.; Al-Duais, H.S.; Ghaffarianhoseini, A. IoT-enabled smart cities: A hybrid systematic analysis of key research areas, challenges, and recommendations for future direction. Discov. Cities 2024, 1, 2.

- Elgazzar, K.; Khalil, H.; Alghamdi, T.; Badr, A.; Abdelkader, G.; Elewah, A.; Buyya, R. Revisiting the internet of things: New trends, opportunities and grand challenges. Front. Internet Things 2022, 1, 1073780.

- Kumar, S.; Tiwari, P.; Zymbler, M. Internet of Things is a revolutionary approach for future technology enhancement: A review. J. Big Data 2019, 6, 1–21.

- Khanna, A.; Kaur, S. Internet of Things (IoT), Applications and Challenges: A Comprehensive Review. Wirel. Pers. Commun. 2020, 114, 1687–1762.

- Malhotra, P.; Singh, Y.; Anand, P.; Bangotra, D.K.; Singh, P.K.; Hong, W.C. Internet of Things: Evolution, Concerns and Security Challenges. Sensors 2021, 21, 1809.

- European Parliament. What Is Artificial Intelligence and How Is It Used? Available online: https://www.europarl.europa.eu/topics/en/article/20200827STO85804/what-is-artificial-intelligence-and-how-is-it-used (accessed on 25 January 2025).

- IBM. AI vs. Machine Learning vs. Deep Learning vs. Neural Networks. Available online: https://www.ibm.com/think/topics/ai-vs-machine-learning-vs-deep-learning-vs-neural-networks (accessed on 25 January 2025).

- Bi, S.; Wang, C.; Zhang, J.; Huang, W.; Wu, B.; Gong, Y.; Ni, W. A Survey on Artificial Intelligence Aided Internet-of-Things Technologies in Emerging Smart Libraries. Sensors 2022, 22, 2991.

- Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8.

- Das, S.; Tariq, A.; Santos, T.; Kantareddy, S.S.; Banerjee, I. Recurrent Neural Networks (RNNs): Architectures, Training Tricks, and Introduction to Influential Research. In Machine Learning for Brain Disorders; Springer: New York, NY, USA, 2023; pp. 117–138.

- Mienye, I.D.; Swart, T.G. A Comprehensive Review of Deep Learning: Architectures, Recent Advances, and Applications. Information 2024, 15, 755.

- OpenAI. GPT-3 Applications. Available online: https://openai.com/index/gpt-3-apps/ (accessed on 25 January 2025).

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2019, arXiv:1810.04805.

- Bansal, G.; Chamola, V.; Hussain, A.; Guizani, M.; Niyato, D. Transforming Conversations with AI—A Comprehensive Study of ChatGPT. Cogn. Comput. 2024, 16, 2487–2510.

- Varitimiadis, S.; Kotis, K.; Pittou, D.; Konstantakis, G. Graph-Based Conversational AI: Towards a Distributed and Collaborative Multi-Chatbot Approach for Museums. Appl. Sci. 2021, 11, 9160.

- IBM. What Is AI Bias? Available online: https://www.ibm.com/think/topics/ai-bias (accessed on 25 January 2025).

- King, J.; Meinhardt, C. Privacy in an AI Era: How Do We Protect Our Personal Information? Available online: https://hai.stanford.edu/news/privacy-ai-era-how-do-we-protect-our-personal-information (accessed on 25 January 2025).

- Cheong, B.C. Transparency and accountability in AI systems: Safeguarding wellbeing in the age of algorithmic decision-making. Front. Hum. Dyn. 2024, 6, 1421273.

- McCoy, L.G.; Ci Ng, F.Y.; Sauer, C.M.; Yap Legaspi, K.E.; Jain, B.; Gallifant, J.; McClurkin, M.; Hammond, A.; Goode, D.; Gichoya, J.; et al. Understanding and training for the impact of large language models and artificial intelligence in healthcare practice: A narrative review. BMC Med. Educ. 2024, 24, 1096.

- Thakur, R.; Panse, P.; Bhanarkar, P.; Borkar, P. AIoT: Role of AI in IoT, Applications and Future Trends. Res. Trends Artif. Intell. Internet Things 2023, 42–53.

- DusunIoT. AIoT—Artificial Intelligence of Things. Available online: https://www.dusuniot.com/blog/aiot-artificial-intelligence-of-things/ (accessed on 25 January 2025).

- Zhang, Z.; Wen, F.; Sun, Z.; Guo, X.; He, T.; Lee, C. Artificial Intelligence-Enabled Sensing Technologies in the 5G/Internet of Things Era: From Virtual Reality/Augmented Reality to the Digital Twin. Adv. Intell. Syst. 2022, 4, 2100228.

- Devagiri, J.S.; Paheding, S.; Niyaz, Q.; Yang, X.; Smith, S. Augmented Reality and Artificial Intelligence in industry: Trends, tools, and future challenges. Expert Syst. Appl. 2022, 207, 118002.

- Zhang, Z.; He, T.; Zhu, M.; Sun, Z.; Shi, Q.; Zhu, J.; Dong, B.; Yuce, M.R.; Lee, C. Deep learning-enabled triboelectric smart socks for IoT-based gait analysis and VR applications. npj Flex. Electron. 2020, 4, 29.

- Sun, Z.; Zhu, M.; Zhang, Z.; Chen, Z.; Shi, Q.; Shan, X.; Yeow, R.C.H.; Lee, C. Artificial intelligence of things (AIoT) enabled virtual shop applications using self-powered sensor enhanced soft robotic manipulator. Adv. Sci. 2021, 8, e2100230.

- Javed, A.R.; Sarwar, M.U.; ur Rehman, S.; Khan, H.U.; Al-Otaibi, Y.D.; Alnumay, W.S. PP-SPA: Privacy preserved smartphone-based personal assistant to improve routine life functioning of cognitive impaired individuals. Neural Process. Lett. 2023, 55, 35–52.

- Zhang, J.; Tai, Y. Secure medical digital twin via human-centric interaction and cyber vulnerability resilience. Conn. Sci. 2022, 34, 895–910.

- Stacchio, L.; Angeli, A.; Marfia, G. Empowering digital twins with eXtended reality collaborations. Virtual Real. Intell. Hardw. 2022, 4, 487–505.

- Fernandes, S.V.; João, D.V.; Cardoso, B.B.; Martins, M.A.I.; Carvalho, E.G. Digital Twin Concept Developing on an Electrical Distribution System—An Application Case. Energies 2022, 15, 2836.

- Khan, H.; Soroni, F.; Sadek Mahmood, S.J.; Mannan, N.; Khan, M.M. Education System for Bangladesh Using Augmented Reality, Virtual Reality and Artificial Intelligence. In Proceedings of the 2021 IEEE World AI IoT Congress (AIIoT), Seattle, WA, USA, 10–13 May 2021; pp. 137–142.

- Wang, J.; Yang, Y.; Liu, H.; Jiang, L. Enhancing the college and university physical education teaching and learning experience using virtual reality and particle swarm optimization. Soft Comput. 2024, 28, 1277–1294.

- Sernani, P.; Vagni, S.; Falcionelli, N.; Mekuria, D.N.; Tomassini, S.; Dragoni, A.F. Voice Interaction with Artworks via Indoor Localization: A Vocal Museum. In Proceedings of the 7th International Conference on Augmented Reality, Virtual Reality, and Computer Graphics (AVR 2020), Lecce, Italy, 7–10 September 2020; De Paolis, L.T., Bourdot, P., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 66–78.

- Guo, Y.; Hou, K.; Yan, Z.; Chen, H.; Xing, G.; Jiang, X. Sensor2Scene: Foundation Model-Driven Interactive Realities. In Proceedings of the 2024 IEEE International Workshop on Foundation Models for Cyber-Physical Systems & Internet of Things (FMSys 2024), Hong Kong, China, 13–15 May 2024; pp. 13–19.

- Lai, K.T.; Chung, Y.T.; Su, J.J.; Lai, C.H.; Huang, Y.H. AI Wings: An AIoT Drone System for Commanding ArduPilot UAVs. IEEE Syst. J. 2023, 17, 2213–2224.

- Kuanting/aiwings. Available online: https://github.com/kuanting/aiwings (accessed on 25 January 2025).

- Qu, Q.; Hatami, M.; Xu, R.; Nagothu, D.; Chen, Y.; Li, X.; Blasch, E.; Ardiles-Cruz, E.; Chen, G. The microverse: A task-oriented edge-scale metaverse. Future Internet 2024, 16, 60.

- Yang, S.R.; Lin, Y.C.; Lin, P.; Fang, Y. AIoTtalk: A SIP-Based Service Platform for Heterogeneous Artificial Intelligence of Things Applications. IEEE Internet Things J. 2023, 10, 14167–14181.

- Lee, J.W.; Lee, Y.; Choi, H.B.; Son, S.W.; Leem, E.; Seo, J. A metaverse Avatar Teleport System Using an AIoT Pose Estimation Device. In Proceedings of the 2023 IEEE International Conference on Metaverse Computing, Networking and Applications (MetaCom 2023), Kyoto, Japan, 26–28 June 2023; pp. 698–703.

- Li, H.; Ma, W.; Wang, H.; Liu, G.; Wen, X.; Zhang, Y.; Yang, M.; Luo, G.; Xie, G.; Sun, C. A framework and method for Human-Robot cooperative safe control based on digital twin. Adv. Eng. Inform. 2022, 53, 101701.

- Zhang, D.; Xu, F.; Pun, C.M.; Yang, Y.; Lan, R.; Wang, L.; Li, Y.; Gao, H. Virtual Reality Aided High-Quality 3D Reconstruction by Remote Drones. ACM Trans. Internet Technol. 2021, 22, 1–20.

- Wu, H.T. The internet-of-vehicle traffic condition system developed by artificial intelligence of things. J. Supercomput. 2021, 78, 2665–2680.

- Miranda Calero, J.A.; Rituerto-Gonzalez, E.; Luis-Mingueza, C.; Canabal, M.F.; Barcenas, A.R.; Lanza-Gutierrez, J.M.; Pelaez-Moreno, C.; Lopez-Ongil, C. Bindi: Affective Internet of Things to Combat Gender-Based Violence. IEEE Internet Things J. 2022, 9, 21174–21193.

- Yu, F.; Yu, C.; Tian, Z.; Liu, X.; Cao, J.; Liu, L.; Du, C.; Jiang, M. Intelligent Wearable System With Motion and Emotion Recognition Based on Digital Twin Technology. IEEE Internet Things J. 2024, 11, 26314–26328.

- Joseph, S.; Priya S, B.; R, P.; M, S.K.; S, S.; V, J.; R, S.P. IoT Empowered AI: Transforming Object Recognition and NLP Summarization with Generative AI. In Proceedings of the 2023 IEEE International Conference on Computer Vision and Machine Intelligence (CVMI), Gwalior, India, 10–11 December 2023; pp. 1–6.

- Mihai, S.; Yaqoob, M.; Hung, D.V.; Davis, W.; Towakel, P.; Raza, M.; Karamanoglu, M.; Barn, B.; Shetve, D.; Prasad, R.V.; et al. Digital Twins: A Survey on Enabling Technologies, Challenges, Trends and Future Prospects. IEEE Commun. Surv. Tutor. 2022, 24, 2255–2291.

- Unity Technologies. Unity Real-Time Development Platform: 3D, 2D, VR & AR Engine. Available online: https://unity.com/ (accessed on 25 January 2025).

- Epic Games, Inc. The Most Powerful Real-Time 3D Creation Tool—Unreal Engine. Available online: https://www.unrealengine.com/ (accessed on 25 January 2025).

- Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; et al. TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems. 2015. Available online: https://www.tensorflow.org/ (accessed on 25 January 2025).

- Keras—Deep Learning for Humans. Available online: https://keras.io/ (accessed on 25 January 2025).

- Alkaeed, M.; Qayyum, A.; Qadir, J. Privacy preservation in Artificial Intelligence and Extended Reality (AI-XR) metaverses: A survey. J. Netw. Comput. Appl. 2024, 231, 103989.

- Khalid, N.; Qayyum, A.; Bilal, M.; Al-Fuqaha, A.; Qadir, J. Privacy-preserving artificial intelligence in healthcare: Techniques and applications. Comput. Biol. Med. 2023, 158, 106848.

- Lazaros, K.; Koumadorakis, D.E.; Vrahatis, A.G.; Kotsiantis, S. Federated Learning: Navigating the Landscape of Collaborative Intelligence. Electronics 2024, 13, 4744.

- Ferrara, E. Fairness and Bias in Artificial Intelligence: A Brief Survey of Sources, Impacts, and Mitigation Strategies. Sci 2023, 6, 3.

- Min, A. Artifical Intelligence and Bias: Challenges, Implications, and Remedies. J. Soc. Res. 2023, 2, 3808–3817.

- Hanna, M.G.; Pantanowitz, L.; Jackson, B.; Palmer, O.; Visweswaran, S.; Pantanowitz, J.; Deebajah, M.; Rashidi, H.H. Ethical and Bias Considerations in Artificial Intelligence/Machine Learning. Mod. Pathol. 2025, 38, 100686.

| Work | Application Field | Technology Combination | Type of Implementation | Methodology, Models & Tools Used | Open Technology | Advantages | Limitations and Challenges | Key Results |
|---|---|---|---|---|---|---|---|---|
| [80] Li et al. (2022) | Industry | AI + VR + IoT | Simulations and real testing scenarios | HMDs, Binocular cameras, CNNs for motion detection, Unity Engine, Algorithm for distance calculation, OPC Unified Architecture, UDP and TCP protocols for communication, Siemens S7-1200 PLC, ABB IRB 1600 industrial robotic arm | Not specified | Significantly improved the response time for collision avoidance in human-robot collaboration (HRC), achieving an average response time of 147.06 ms and enabling real-time monitoring. | Lack of consideration for other moving obstacles in the environment that may cause collisions, Limited dataset, Scalability of the system not discussed | Developed a digital twin (DT)-based safety control framework for HRC that maintains a safe distance between human and robot. The system achieved detection accuracies of 97.25% for label recognition and 97.00% for human key point detection, while effectively managing the safety distance between humans and robots during operation. |
| [81] Zhang et al. (2021) | Smart Cities | AI + VR + IoT | Simulations and real testing scenarios | Telexistence drone, NVIDIA Jetson TX2 for processing, MYNTAI D1000-50 stereo camera for image capture, VINS-Mono framework for visual-inertial odometry, Inertial Measurement Unit (IMU), Depth sensors, HMD (Oculus Rift), Wi-Fi, MAVLink protocol for UAV communication | Not specified | Produced more accurate and complete 3D models than traditional methods. The system provided real-time feedback while scanning outdoor urban environments, improving user interaction and navigation guidance. | Latency in data transmission, Computational overheads, UAV autonomy | The developed VR interface improved 3D reconstruction quality, achieving higher accuracy and completeness than traditional joystick controls. The real-time feedback mechanism allowed users to actively fill gaps during scanning, resulting in more complete models. |
| [82] Wu et al. (2021) | Mobility | AI + IoT | Real testing scenario | Faster Region-based Convolutional Neural Network (Faster R-CNN) for object recognition, Federated learning, 6G networks, Raspberry Pi, GPS, Cameras | Not specified | Automatically identifies road conditions and shares relevant multimedia information with nearby vehicles, reducing the need for extensive fixed infrastructure and improving data privacy through federated learning, while combining cutting-edge technologies such as 6G with simple hardware such as a common dashboard camera. | Dependence on 6G networks, Interoperability and standardization concerns, Legal and ethical concerns, Scalability and implementation costs, Lack of specific accuracy metrics, Need for a larger and more diverse dataset covering various cases and conditions | Demonstrated real-time recognition and sharing of road conditions, achieving a significant recognition rate for road obstacles and traffic conditions. Federated learning improved the accuracy of the object recognition model, contributing to driving safety and efficient traffic management while maintaining data security. |
| [83] Miranda Calero et al. (2022) | Health (specifically addressing gender-based violence) | AI + IoT + VR | Testing on real data | Physiological sensors, K-nearest neighbors (KNN) classifier, Neural network (NN) for speech data processing, Commercial off-the-shelf smart sensors and a smartphone application, ARM Cortex-M4, Python, MongoDB | No open technology is explicitly mentioned, except the freely available WEMAC dataset | Provides an autonomous, inconspicuous solution for detecting gender-based violence situations by automatically identifying fear-related emotions, so victims do not need to operate the system manually during critical moments. | Low classification accuracy (63.61%), Small sample size (47 participants), Computational constraints on the edge devices | Introduced the WEMAC dataset, integrated physiological and auditory data to detect potential gender-based violence situations, and achieved an overall fear classification accuracy of 63.61% using a subject-independent approach. |
| [84] Yu et al. (2024) | Health | AI + IoT + VR | Real testing scenario | TGAM module for electroencephalogram (EEG) signal acquisition, UWB positioning chip, Ten-axis accelerometer, Raspberry Pi, CNN, Bidirectional LSTM, TB-SFENet developed for motion and emotion recognition, Unreal Engine 5 | The authors expressed willingness to share their emotion classification dataset (TEEC) with institutions, but access would require participant consent due to privacy concerns. There is no indication of open code or tools being provided. | High accuracy in recognizing user states with real-time interaction and visualization, enhancing health monitoring by efficiently combining AI, IoT, and VR. | User comfort, Potential interference affecting UWB positioning accuracy, Scalability | Proposed an intelligent wearable system with high-precision motion and emotion recognition through the developed TB-SFENet model. The DT platform provided real-time interaction and visualization. Showed significant potential for applications in intelligent healthcare and safety monitoring. |
| [85] Joseph et al. (2023) | Education, Home Automation, Healthcare | AI + IoT + AR | Proof-of-concept working mechanism | Unity 3D, Vuforia Engine, YOLOv8, OpenCV, APIs for content retrieval, ESP32 microcontroller, Wi-Fi, Bluetooth | Not specified | Cost-effective and accessible, requiring only a smartphone camera, which makes AR and IoT technologies available to a wide audience. It enhances user convenience through intuitive hand gestures, promotes energy efficiency by tracking power consumption, and serves as an educational tool for interactive learning. | Need for increased object recognition accuracy, Lack of easily expandable educational content, Need for social features, Scalability concerns, Heavy reliance on smartphone resources, Interoperability with a variety of IoT devices, Lack of testing and user experience research, Lack of security measures | Created a multi-functional application that gives users real-time control over household appliances and access to educational content. It supports immersive learning experiences and efficient energy management, demonstrating significant potential for home automation and education. |
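Row [80] relies on a distance-calculation algorithm to keep a safe separation between the operator and the industrial robot arm. The sketch below illustrates one minimal way such a check could work; it is not the algorithm from [80]. It assumes that 3D human keypoints (e.g., from a CNN pose estimator) and sample points on the robot are already expressed in one shared frame in metres, and the two-level stop/slow policy, the `RobotCommand` enum, and the threshold values are hypothetical placeholders. `math.dist` requires Python 3.8 or later.

```python
import math
from enum import Enum
from typing import Iterable, Sequence

Point3D = Sequence[float]  # (x, y, z) in metres, in a shared world frame (assumption)


class RobotCommand(Enum):
    NORMAL = "normal"  # keep the programmed speed
    SLOW = "slow"      # reduce speed while a person is nearby
    STOP = "stop"      # protective stop


def min_separation(human_keypoints: Iterable[Point3D],
                   robot_points: Iterable[Point3D]) -> float:
    """Smallest Euclidean distance between any human keypoint and any robot point.

    Returns math.inf when either set is empty (e.g. no person detected).
    """
    robot = list(robot_points)  # materialize so it can be scanned once per keypoint
    return min((math.dist(h, r) for h in human_keypoints for r in robot),
               default=math.inf)


def safety_command(distance: float,
                   stop_threshold: float = 0.5,
                   slow_threshold: float = 1.2) -> RobotCommand:
    """Map a separation distance to a robot command (hypothetical thresholds)."""
    if distance < stop_threshold:
        return RobotCommand.STOP
    if distance < slow_threshold:
        return RobotCommand.SLOW
    return RobotCommand.NORMAL


if __name__ == "__main__":
    human = [(1.0, 0.0, 1.5), (1.1, 0.1, 1.0)]  # e.g. wrist and elbow keypoints
    robot = [(0.0, 0.0, 1.0), (0.5, 0.0, 1.2)]  # e.g. base and end-effector samples
    d = min_separation(human, robot)
    print(f"{d:.3f} m -> {safety_command(d).value}")
```

Running the example prints `0.583 m -> slow`. A brute-force minimum over all point pairs keeps the sketch short; a deployed system would also have to account for sensing latency (cf. the 147.06 ms response time reported in [80]) and the full geometry of the robot links.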

| Application Domain | Number of Articles | Number of Articles Without the Additional Criteria |
|---|---|---|
| Health | 3 | 13 |
| Industry | 3 | 8 |
| Smart Cities | 3 | 8 |
| Art & Culture | 2 | 2 |
| Education | 2 | 2 |
| Metaverse | 1 | 1 |
| Total | 14 | 34 |
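As a quick arithmetic check on the domain table, the short script below sums both columns and computes, per domain, the fraction of articles that remain once the additional criteria are applied. The counts are copied from the table; the helper names `column_totals` and `retention_rates` are illustrative only.

```python
from typing import Dict, Tuple

# Article counts per application domain, copied from the table:
# (with the additional criteria applied, without the additional criteria)
COUNTS: Dict[str, Tuple[int, int]] = {
    "Health": (3, 13),
    "Industry": (3, 8),
    "Smart Cities": (3, 8),
    "Art & Culture": (2, 2),
    "Education": (2, 2),
    "Metaverse": (1, 1),
}


def column_totals(counts: Dict[str, Tuple[int, int]]) -> Tuple[int, int]:
    """Sum each column, for comparison with the table's Total row."""
    return (sum(kept for kept, _ in counts.values()),
            sum(pool for _, pool in counts.values()))


def retention_rates(counts: Dict[str, Tuple[int, int]]) -> Dict[str, float]:
    """Fraction of articles per domain that remain once the additional criteria apply."""
    return {domain: kept / pool for domain, (kept, pool) in counts.items()}


if __name__ == "__main__":
    kept, pool = column_totals(COUNTS)
    print(kept, pool, f"{kept / pool:.0%}")
    for domain, rate in retention_rates(COUNTS).items():
        print(f"{domain}: {rate:.0%}")
```

The column sums match the Total row (14 and 34), so about 41% of the candidate articles were retained overall. Health shows the largest reduction, keeping 3 of 13 articles (about 23%).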

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kostadimas, D.; Kasapakis, V.; Kotis, K. A Systematic Review on the Combination of VR, IoT and AI Technologies, and Their Integration in Applications. Future Internet 2025, 17, 163. https://doi.org/10.3390/fi17040163

Kostadimas D, Kasapakis V, Kotis K. A Systematic Review on the Combination of VR, IoT and AI Technologies, and Their Integration in Applications. Future Internet. 2025; 17(4):163. https://doi.org/10.3390/fi17040163

Chicago/Turabian Style: Kostadimas, Dimitris, Vlasios Kasapakis, and Konstantinos Kotis. 2025. "A Systematic Review on the Combination of VR, IoT and AI Technologies, and Their Integration in Applications" Future Internet 17, no. 4: 163. https://doi.org/10.3390/fi17040163

APA Style: Kostadimas, D., Kasapakis, V., & Kotis, K. (2025). A Systematic Review on the Combination of VR, IoT and AI Technologies, and Their Integration in Applications. Future Internet, 17(4), 163. https://doi.org/10.3390/fi17040163