1. Introduction

The rapid growth of Internet of Things (IoT) devices has transformed various fields, including smart cities [1], healthcare [2], industrial automation [3], and transportation systems. These devices generate massive amounts of data that can support better decision-making. However, centralizing all these data for training machine learning models presents several challenges. First, privacy becomes a significant concern: people may be reluctant to share their data, fearing potential misuse or breaches. Second, the sheer volume of data can strain communication networks, since transmitting large datasets consumes substantial bandwidth and leads to inefficiencies and increased costs. As IoT networks continue to expand, these issues become more pronounced: the growing number of connected devices generates even more data, exacerbating the problems of privacy and communication overhead. Federated Learning (FL) offers a promising solution by allowing data to remain on the edge devices while still enabling collaborative model training. This approach reduces communication costs and keeps data more secure [4,5].

While FL seems like an ideal solution, it comes with its own set of challenges. One major hurdle is client mobility. As devices move around, their network connections can become unstable, leading to inconsistent participation in the training process. Furthermore, the varying capabilities of these devices, such as differences in computational power, energy availability, and bandwidth, can result in unbalanced contributions. Some devices may struggle to keep up with the demands of FL, causing delays and reducing overall efficiency. These challenges are particularly pronounced in dynamic IoT environments, where devices frequently change locations and conditions. Traditional FL methods often assume more stable and homogeneous settings, which do not always align with the realities of IoT networks. Therefore, addressing client mobility and heterogeneity is crucial for effective FL implementation in such environments.

Client mobility can severely disrupt FL. When devices are constantly on the move, connections become unstable, available computing power fluctuates, and clients join and leave unpredictably. These factors cause delays and incomplete model updates and slow down the whole training process. Traditional methods such as Federated Averaging (FedAvg) [6] assume that clients remain stationary and change little over time, an assumption that does not hold in IoT networks where devices are constantly moving. Device heterogeneity is a further issue. Devices differ in computing power, available energy, and connection quality, so some struggle to keep up with FL requirements [7,8]. Some approaches address this through resource-aware scheduling or by using reinforcement learning to adapt to changes, but they often fall short in highly mobile settings, where they may be unable to balance selecting diverse clients against ensuring stable participation [9,10].

Existing strategies that consider mobility also have limitations. For example, FedCS [11] selects clients based on their computing and communication abilities but assumes that clients will always be available, which is not true in high-mobility environments. Latency-Aware FL [9] focuses on selecting clients with low latency, but it does not predict future movements, so clients often drop out unexpectedly. Vehicular FL models [12] work well for vehicles following set routes but do not handle unpredictable movement in unstructured IoT scenarios. Reinforcement learning-based methods [13] can dynamically adjust client selection but require substantial computational power, which is not always feasible for small IoT devices.

These limitations motivated the development of Mobility-Aware Client Selection (MACS). MACS combines predictive modeling of client movement, real-time evaluation of client resources, and adaptive selection mechanisms. Rather than relying only on current conditions, it predicts future states to make better-informed choices. In this way, MACS aims to maintain stable and efficient participation in FL even when devices are constantly moving, which speeds up convergence and keeps the model more stable than previous methods.

While MACS was initially designed with IoT networks in mind, its applications extend further. It can be useful in any environment where client mobility plays a significant role and stable selection is crucial for FL. In smart cities, MACS can support traffic monitoring, environmental sensing, and public safety by selecting reliable, high-resource nodes so that models receive continuous updates, leading to better urban management. In healthcare, by focusing on stable wearable devices, MACS can improve remote patient monitoring and medical AI models, boosting diagnostic accuracy and minimizing disruptions in real-time health tracking. These examples illustrate the versatility of MACS in dynamic, data-driven environments where efficient and stable FL participation is key.

This paper introduces Mobility-Aware Client Selection (MACS), a strategy that predicts client movement in order to select clients that are likely to stay connected and perform consistently. This predictive capability helps strike a balance between choosing mobile clients that bring diverse data and stable clients that ensure reliable participation. As a result, MACS makes FL more efficient, speeds up model convergence, and reduces latency. MACS predicts and evaluates each client’s computational capabilities and network conditions before selecting it for a training round, so that only suitable candidates are chosen. It also includes a dynamic resource allocation framework that adjusts resources on the fly based on client feedback. This adaptability is especially valuable in IoT setups, where devices move around and have different capabilities. In short, MACS tackles the challenges of mobility and resource heterogeneity directly, making FL more effective in dynamic IoT environments.

The main contributions of this paper are as follows:

A mobility prediction model that forecasts clients’ future states, ensuring stable and efficient participation in FL rounds.

A resource-aware evaluation mechanism to select clients based on predicted computational and communication capacities, improving FL performance.

A dynamic resource allocation strategy to optimize real-time resource utilization, addressing variability in network conditions and client requirements.

A comprehensive evaluation of MACS through simulations, demonstrating its advantages in dynamic IoT environments, particularly in addressing mobility and resource constraints.

The rest of the paper is structured as follows. Section 2 reviews related work in FL, focusing on client selection strategies for dynamic IoT environments. Section 3 introduces the system models, including mobility-aware and resource-aware considerations, and formulates the client selection problem. Section 4 details the MACS algorithm, explaining its mobility prediction, resource-aware client evaluation, and dynamic resource allocation. Section 5 presents simulation results, comparing MACS with baseline methods. Finally, Section 6 concludes the paper and discusses future research directions.

2. Related Work

Federated Learning (FL) has been widely explored for training models across edge devices while keeping data private. However, handling mobile clients remains a major challenge. Traditional methods often assume that network conditions stay the same, which is not always true in real-world scenarios. FedAvg [4], for example, selects clients randomly or based on their computing power, assuming they will stay connected throughout the training process. In environments where devices are constantly moving or losing connection, this approach breaks down. FedCS [11] improved on this by considering communication limits, but it did not factor in client mobility, so devices that move out of range drop out frequently, disrupting the training process. Similarly, FedRDS [14] used regularization and data-sharing techniques to handle client drift and boost model accuracy. It did not address mobility, however, making it less effective in dynamic IoT settings. Latency-Aware FL [9] aimed to speed up training by choosing clients with fast connections. However, without mobility prediction, it struggled with unstable participation as client availability kept changing. Zafar et al. [15] studied FL in vehicular edge computing, developing ways to handle rapid client movement. These methods worked well in structured environments like vehicular networks, but they do not adapt as well to IoT applications, where device movement is often unpredictable. Existing methods have made progress in various areas, but they fall short when handling the unique challenges of dynamic IoT environments. Mobility-Aware Client Selection (MACS) is designed to address these issues, maintaining stable and efficient FL even when clients are on the move.

Some studies have employed reinforcement learning (RL) to optimize client selection dynamically. For example, Albelaihi et al. [13] applied RL in NOMA-enabled FL to improve client selection, adapting to changing network conditions. However, such methods require extensive computational resources, making them impractical for resource-constrained IoT devices. Yu et al. [16] introduced a latency-aware selection framework to minimize communication delays. While effective in controlled settings, the lack of mobility prediction limits its adaptability in highly dynamic environments. Recent advancements in RL-based client selection have shown promise in addressing these challenges. Rjoub et al. [17] proposed an RL-based approach using Deep Q-Learning (DQL) to optimize client selection in FL. Their method selects clients based on resource availability, data quality, and past contributions, balancing model convergence speed and fairness. Despite its effectiveness, this approach assumes relatively stable client availability and does not explicitly account for mobility-induced instability. Guan et al. [18] addressed the challenge of non-IID data in FL by using Proximal Policy Optimization (PPO) to select clients dynamically. Their RL agent learns to prioritize clients whose data distributions contribute most effectively to global model convergence. However, this method focuses on data heterogeneity and does not consider mobility-induced instability. Zhang et al. [19] explored Multi-Agent Reinforcement Learning (MARL) for client selection in FL. Each client is modeled as an agent, and the RL framework optimizes the selection process by considering local and global objectives. While MARL enhances scalability, it introduces additional computational overhead, which may not be feasible for resource-constrained IoT devices. Zhang et al. [20] introduced an adaptive client selection strategy using Deep Reinforcement Learning (DRL). Their approach dynamically selects clients by considering factors such as computational capabilities, network conditions, and data quality, so that the DRL agent learns over time which clients to include in each training round. However, the method requires substantial processing power, which is a serious limitation in IoT environments where devices often have limited resources. Although it makes well-informed decisions based on multiple factors, its high computational demands make it less practical for widespread use in IoT settings.

Recent studies have also examined hybrid approaches that combine Reinforcement Learning (RL) with other techniques to tackle specific challenges in FL. For example, Zhao et al. [21] devised an energy-efficient client selection method using RL. Their method selects clients based on energy consumption, data quality, and communication costs. This approach reduces energy use while keeping the model accurate, making it a good fit for mobile and edge computing. Wan et al. [22] took a different angle by introducing a fairness-aware RL mechanism in which the RL agent learns to balance model accuracy against fair representation of all clients. While this addresses fairness in client selection, it still struggles in environments where devices move unpredictably. Despite these advancements, many existing RL-based methods are computationally demanding, making them impractical for IoT devices that often have limited resources. Additionally, many of these methods do not explicitly account for the instability caused by device mobility, which is crucial in dynamic IoT settings. For instance, FedCS [11] and Latency-Aware FL [9] focus on communication quality and latency but do not predict mobility, leading to frequent dropouts when devices move out of range. Similarly, RL-based methods such as those proposed by Wang et al. [23] and Tariq et al. [24] introduce significant computational overhead, which is not ideal for IoT deployments with limited processing power.

The Mobility-Aware Client Selection (MACS) framework proposed in this work tackles these issues by integrating mobility prediction, resource-aware client evaluation, and dynamic selection mechanisms. Unlike FedCS, which assumes clients stay available throughout training, MACS predicts device movement to ensure stable participation. Compared to Latency-Aware FL, MACS does not rely only on real-time latency conditions; it uses future mobility estimation to minimize dropouts. Methods designed for vehicles, while effective for structured movement, do not work as well for general IoT applications, whereas MACS is built to handle both structured and unstructured mobility patterns. Moreover, MACS has a lower computational overhead than reinforcement learning-based approaches, which makes it more suitable for IoT devices with limited processing power.

Ensuring the trustworthiness of data in FL is another challenge, as unreliable clients can introduce biased updates or security risks. Iqbal et al. [25] suggested a feedback-based trust mechanism where clients assign trust scores based on observed data consistency. Marche et al. [26] used a machine learning model to detect anomalies in IoT data exchanges, enhancing reliability. Nevertheless, these methods require continuous monitoring and additional computational resources, which may not always be feasible in FL due to communication constraints. MACS inherently improves data reliability by selecting clients with stable mobility patterns and sufficient computational resources, reducing the likelihood of unreliable contributions. Several studies have explored various client selection strategies in FL, but many fall short in high-mobility IoT environments.

Table 1 provides a comparison of existing approaches. FedCS [11,27,28] selects clients based on communication quality but does not consider mobility, leading to unstable participation. Latency-Aware FL [9,29] focuses on low-latency clients but lacks mobility prediction, causing frequent dropouts when network conditions change. Vehicular FL [12,30] works well for structured mobility but is not as effective for general IoT applications with unpredictable movement. Reinforcement learning-based approaches [13,31,32] optimize selection over time but come with high computational overhead, making them impractical for resource-limited devices. MACS integrates predictive mobility modeling, real-time resource-aware selection, and adaptive mechanisms to enhance client participation in FL. Unlike FedCS and Latency-Aware FL, MACS proactively selects clients based on anticipated mobility, reducing instability in training. It also achieves lower computational complexity than reinforcement learning-based methods, which require significant processing power.

This work introduces the Mobility-Aware Client Selection (MACS) strategy to improve Federated Learning (FL) in dynamic environments. Unlike other methods, MACS uses a mobility prediction model that draws on past data and movement patterns to predict where clients will go next. MACS strikes a balance between selecting mobile clients that contribute diverse data and stable clients that ensure consistent participation. It includes a resource-aware evaluation mechanism that assesses each client’s computational power and network conditions, ensuring that only capable clients are chosen for training. This prevents delays caused by resource-limited devices. Additionally, MACS features a dynamic resource allocation mechanism that adjusts in real time to changing network conditions, maintaining training efficiency even as device capabilities fluctuate. These features enable MACS to handle dynamic environments more effectively than previous methods.

3. System Models and Problem Formulation

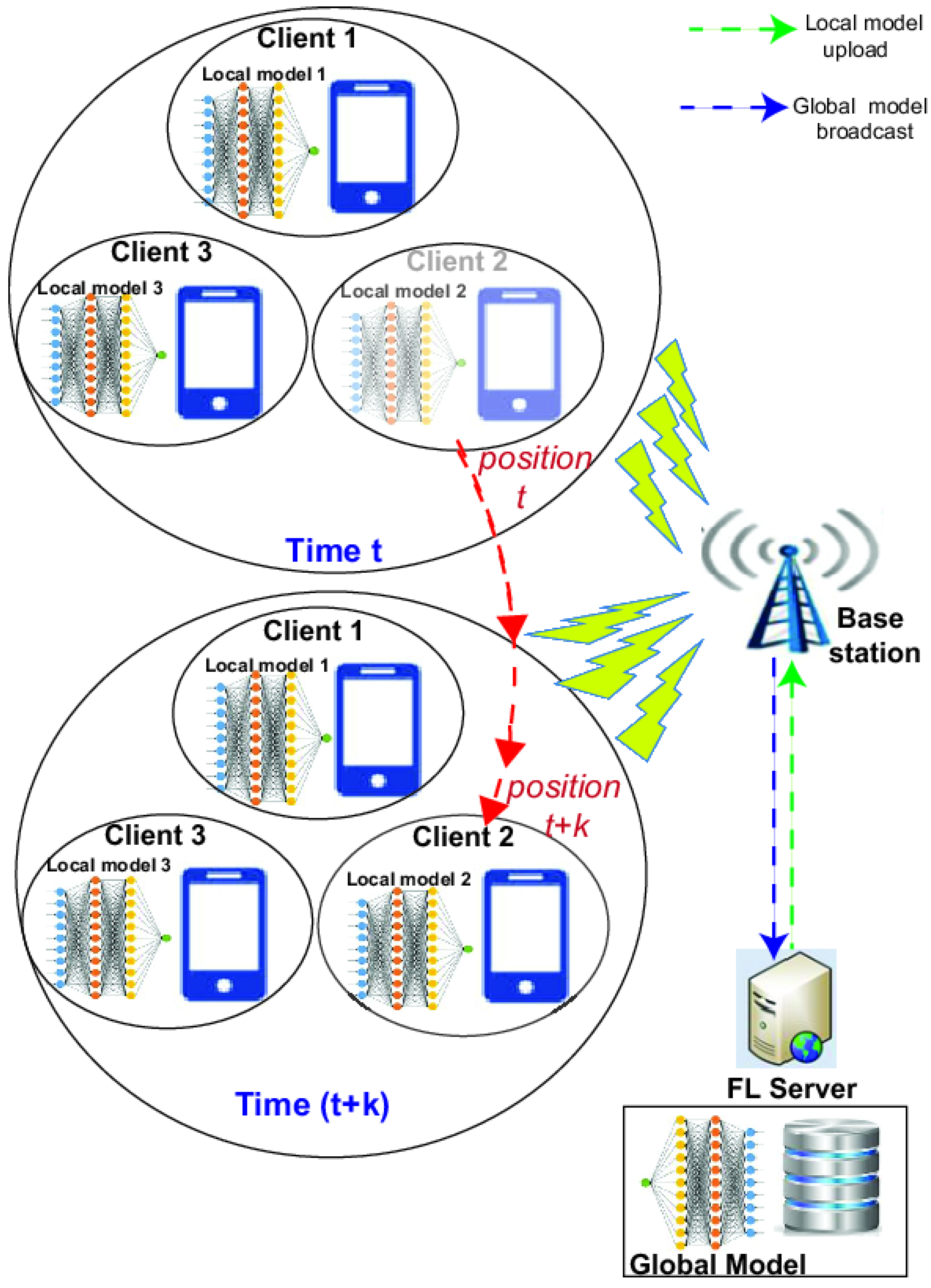

To address the challenges posed by client mobility and resource variability in federated learning (FL), this paper proposes the Mobility-Aware Client Selection (MACS) strategy. MACS ensures that selected clients maintain stable computational and communication capabilities while meeting latency constraints, improving the overall training process. Consider a federated learning system consisting of a base station (BS) that orchestrates the FL process and a set of distributed IoT clients, denoted as $\mathcal{U}$, located within the BS’s coverage area. Each client $u \in \mathcal{U}$ can either participate in the FL training process ($x_u = 1$) or not ($x_u = 0$), where $x_u$ is a binary variable indicating client selection. MACS evaluates all clients in $\mathcal{U}$ based on their predicted mobility, computational capacity, and communication quality, selecting a subset of clients for each training round.

Figure 1 illustrates the reference scenario of federated learning in a dynamic IoT environment. At time $t$, mobile clients such as smartphones and sensors participate in local training and send model updates to the FL server through a base station. As clients move (e.g., Client 2), connectivity may fluctuate, affecting their ability to contribute updates. By time $t + \Delta t$, client positions change, introducing challenges such as unstable participation, delayed transmissions, and potential connectivity loss. The FL server aggregates the received updates and broadcasts the global model for the next round. MACS addresses these challenges by predicting client mobility and selecting stable clients based on communication quality and computational resources, ensuring reliable participation and minimizing training delays.
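The aggregation step in this scenario can be illustrated with a short sketch. It assumes sample-weighted averaging in the style of FedAvg and represents a client that lost connectivity during the round by a missing update (`None`); the function name `aggregate` and the flat list-of-floats model representation are hypothetical choices made for illustration, not part of MACS.

```python
from typing import Dict, List, Optional


def aggregate(updates: Dict[int, Optional[List[float]]],
              num_samples: Dict[int, int]) -> List[float]:
    """Sample-weighted average of the updates that actually arrived.

    updates maps a client id to its model parameters, or to None when the
    client dropped out (for example, it moved out of coverage mid-round).
    """
    received = {cid: w for cid, w in updates.items() if w is not None}
    if not received:
        raise ValueError("no client update was received in this round")
    total = sum(num_samples[cid] for cid in received)
    dim = len(next(iter(received.values())))
    return [
        sum(num_samples[cid] * w[k] for cid, w in received.items()) / total
        for k in range(dim)
    ]


# Client 2 dropped out, so only clients 1 and 3 contribute to the global model.
global_model = aggregate(
    {1: [1.0, 2.0], 2: None, 3: [3.0, 4.0]},
    {1: 100, 2: 50, 3: 300},
)
print(global_model)
```

Every dropped client removes its data from the round, which is why selecting clients that are likely to stay connected matters for the quality of the aggregated model.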

Unlike Static or Random selection strategies, MACS improves client selection in FL through three key mechanisms. First, the mobility prediction component estimates whether a client will remain connected for the entire FL round based on historical movement data and real-time conditions. Second, the resource-aware selection mechanism filters clients based on computational power and network stability, preventing delays and failed updates. Finally, dynamic adaptation continuously adjusts client selection strategies to reduce dropout rates and ensure timely training completion. These mechanisms enable MACS to operate efficiently in IoT networks with high mobility and diverse resource constraints, improving convergence speed, reducing communication overhead, and enhancing the robustness of federated learning in dynamic environments.

3.1. Computational Latency with Mobility Awareness

Computational latency refers to the time required for a client to complete a local training iteration before transmitting updates to the server. It is influenced by factors such as the size of the dataset, model complexity, and the client’s processing capacity. For instance, an IoT device with limited computational resources will take longer to process updates compared to a more powerful device. MACS addresses this by selecting clients with sufficient processing capability to ensure timely updates, reducing overall training delays. The computational latency for each client, $t_u^{\mathrm{comp}}$, depends on the client’s computational resources and is estimated as follows:

$$t_u^{\mathrm{comp}} = v \log_2\!\left(\frac{1}{\epsilon}\right) \frac{C_u D_u}{f_u(t + \Delta t)},$$

where $f_u(t + \Delta t)$ is the predicted computational capacity of client $u$ at future time $t + \Delta t$, derived from the mobility prediction model that anticipates changes in client resources based on their historical movement patterns. $C_u$ is the average number of CPU cycles required for processing each data sample. $D_u$ is the number of data samples available to client $u$. $v \log_2(1/\epsilon)$ indicates the number of iterations needed to achieve the desired accuracy $\epsilon$, where $v$ is defined as follows:

$$v = \frac{2}{(2 - L\delta)\,\delta\,\gamma},$$

with $\delta$ being the learning rate, and $L$ and $\gamma$ depending on the eigenvalues of the Hessian matrix of the loss function.
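As a worked illustration of this latency model, the sketch below computes the local training time from the quantities defined above: CPU cycles per sample, number of samples, predicted capacity, and the number of local iterations. It assumes the iteration count takes the form $v \log_2(1/\epsilon)$, a form common in FL-over-wireless latency models; the function and parameter names are hypothetical, and $v$ is passed in directly rather than derived from the loss function.

```python
import math


def local_iterations(v: float, epsilon: float) -> float:
    """Assumed iteration count v * log2(1 / epsilon) for target accuracy epsilon."""
    if not 0.0 < epsilon < 1.0:
        raise ValueError("epsilon must lie strictly between 0 and 1")
    return v * math.log2(1.0 / epsilon)


def computational_latency(cycles_per_sample: float,
                          num_samples: int,
                          predicted_capacity_hz: float,
                          v: float,
                          epsilon: float) -> float:
    """Seconds needed for local training at the predicted CPU capacity."""
    if predicted_capacity_hz <= 0.0:
        return math.inf  # a client with no usable capacity never finishes
    cycles_per_iteration = cycles_per_sample * num_samples
    return local_iterations(v, epsilon) * cycles_per_iteration / predicted_capacity_hz


# 1e4 cycles/sample, 500 samples, 1 GHz predicted capacity, v = 2, epsilon = 0.25:
# 4 iterations * 5e6 cycles / 1e9 cycles per second = 0.02 s.
latency = computational_latency(1e4, 500, 1e9, v=2.0, epsilon=0.25)
print(latency)
```

Halving the predicted capacity doubles the latency, which is why a client whose resources are expected to degrade by the end of the round may miss the deadline even if it looks capable at selection time.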

3.2. Communication Latency Considering Mobility

The communication latency for each client to upload its local model update to the BS depends on its communication conditions and mobility. The achievable data rate for client $u$, denoted as $r_u$, is given by the following:

$$r_u = B \log_2\!\left(1 + \frac{p_u g_u}{N_0}\right),$$

where $B$ is the total available bandwidth allocated to all participating clients, $p_u$ is the transmission power of client $u$, $g_u$ is the channel gain, which varies based on the location and mobility of client $u$, and $N_0$ is the background noise power.

The uploading latency, $t_u^{\mathrm{up}}$, for client $u$ to transmit its local update to the BS is as follows:

$$t_u^{\mathrm{up}} = \frac{s}{r_u},$$

where $s$ is the size of the model update. Clients with higher data rates experience lower transmission delays, while those with weaker connections require more time to complete the upload or may fail. MACS selects clients with stable connections to ensure reliable participation and reduce delays in global model aggregation.
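The relationship between channel conditions and upload time can be made concrete with a small sketch. It assumes a Shannon-capacity data rate, bandwidth times $\log_2(1 + \text{SNR})$ with SNR equal to transmit power times channel gain divided by noise power, and an upload latency equal to update size divided by rate. The function names and the numeric values are hypothetical.

```python
import math


def data_rate(bandwidth_hz: float, tx_power_w: float,
              channel_gain: float, noise_power_w: float) -> float:
    """Achievable rate in bit/s under the assumed Shannon-capacity model."""
    snr = tx_power_w * channel_gain / noise_power_w
    return bandwidth_hz * math.log2(1.0 + snr)


def upload_latency(update_size_bits: float, rate_bps: float) -> float:
    """Seconds needed to upload one model update at the given rate."""
    if rate_bps <= 0.0:
        return math.inf  # no connectivity: the upload never completes
    return update_size_bits / rate_bps


# 1 MHz bandwidth, 0.1 W transmit power, gain 1.5e-7, noise 1e-9 W
# gives an SNR of about 15, so the rate is close to 4 Mbit/s.
rate = data_rate(1e6, 0.1, 1.5e-7, 1e-9)
print(rate, upload_latency(8e6, rate))
```

Because the channel gain falls as a client moves away from the base station, the same update can take several times longer to upload at the end of a round than at the start.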

3.3. Mobility Prediction Model

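The Mobility Prediction Model is a core component of MACS, enabling the prediction of each client’s future computational and communication state based on historical mobility data. This prediction allows MACS to evaluate whether the client will have sufficient resources at a future time point to contribute effectively to the training process. Specifically, the computational capacity and data rate at future time $t + \Delta t$ are predicted as follows:

$$f_u(t + \Delta t) = \mathcal{F}\big(M_u(t + \Delta t)\big), \qquad r_u(t + \Delta t) = \mathcal{R}\big(M_u(t + \Delta t)\big),$$

where $M_u(t + \Delta t)$ represents the predicted mobility state of client $u$ at future time $t + \Delta t$, and $\mathcal{F}$ and $\mathcal{R}$ denote the mappings from the mobility state to the computational capacity and the data rate, respectively.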
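The text does not specify the predictor itself, so the following is only one possible sketch of how a predicted mobility state could be obtained and turned into a communication quantity. It assumes that the mobility state is a 2D position, that positions are extrapolated at constant velocity from the last two samples, and that channel gain follows a log-distance path-loss law. All names, the path-loss exponent, and the reference gain are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def predict_position(history: Sequence[Point], dt: float,
                     sample_period: float) -> Point:
    """Constant-velocity extrapolation from the last two position samples."""
    if len(history) < 2:
        raise ValueError("need at least two position samples")
    (x0, y0), (x1, y1) = history[-2], history[-1]
    vx = (x1 - x0) / sample_period
    vy = (y1 - y0) / sample_period
    return (x1 + vx * dt, y1 + vy * dt)


def predicted_channel_gain(pos: Point, bs_pos: Point = (0.0, 0.0),
                           path_loss_exp: float = 3.0,
                           ref_gain: float = 1e-3) -> float:
    """Assumed log-distance path loss: ref_gain * d^(-path_loss_exp), d >= 1 m."""
    distance = max(math.dist(pos, bs_pos), 1.0)
    return ref_gain * distance ** (-path_loss_exp)


def stays_in_coverage(pos: Point, bs_pos: Point, radius: float) -> bool:
    """True if the predicted position is within the base station's coverage."""
    return math.dist(pos, bs_pos) <= radius


# A client moving 10 m per sample along the x-axis, predicted 2 s ahead.
future = predict_position([(0.0, 0.0), (10.0, 0.0)], dt=2.0, sample_period=1.0)
print(future, predicted_channel_gain(future), stays_in_coverage(future, (0.0, 0.0), 25.0))
```

The predicted gain can then be substituted into the data-rate expression to obtain the rate expected at the end of the round rather than the rate observed at selection time.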

3.4. Problem Formulation

The formulation of the client selection problem is as follows:

$$\begin{aligned}
\max_{\{x_u\}} \quad & \sum_{u \in \mathcal{U}} x_u \\
\text{s.t.} \quad & t_j^{\mathrm{comp}} + t_j^{\mathrm{up}} \le \tau, \\
& j = \arg\max_{u \in \mathcal{U},\, x_u = 1} \left( t_u^{\mathrm{comp}} + t_u^{\mathrm{up}} \right), \\
& x_u \in \{0, 1\}, \quad \forall u \in \mathcal{U}.
\end{aligned}$$

The objective is to maximize the number of selected clients in a global iteration. The first constraint ensures that the total latency (including computational and upload latency) for the last client $j$ in $\mathcal{U}$ is less than or equal to the predefined deadline, denoted as $\tau$. The computational capacity threshold in MACS ensures that clients can complete local training within the required time. It is determined based on the number of CPU cycles needed per data sample and the client’s available processing power. Clients below this threshold are excluded to avoid delays in training rounds. The threshold varies based on the use case and hardware. In resource-limited IoT environments, it is set lower to allow for wider participation, even for low-power devices. In high-performance edge computing, it is raised to prioritize clients with faster processing, improving training efficiency. Adjusting the threshold dynamically helps maintain a balance between computational capability and timely model updates. The second constraint defines the last client $j$ in $\mathcal{U}$, i.e., the selected client with the largest total latency. The predicted computational capacity $f_u(t + \Delta t)$ and data rate $r_u(t + \Delta t)$ depend on the predicted mobility state $M_u(t + \Delta t)$. The third constraint implies that $x_u$ is a binary variable, indicating whether a client is selected or not.
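The objective and constraints can be checked for a candidate selection with a few lines of code. The sketch below reads the "last client" as the selected client with the largest total latency, which is an interpretation and not something the text states explicitly; the function names and the latency values are hypothetical.

```python
from typing import Sequence


def num_selected(x: Sequence[int]) -> int:
    """Objective value: how many clients are selected."""
    return sum(x)


def is_feasible(x: Sequence[int],
                comp_latency: Sequence[float],
                upload_latency: Sequence[float],
                deadline: float) -> bool:
    """Check the binary constraint and the deadline for the slowest selected client."""
    if any(v not in (0, 1) for v in x):
        return False
    totals = [c + u for xi, c, u in zip(x, comp_latency, upload_latency) if xi == 1]
    # An empty selection is trivially feasible; otherwise the slowest client decides.
    return not totals or max(totals) <= deadline


comp = [1.0, 2.0, 9.0]   # predicted computational latency per client (s)
up = [1.0, 4.0, 4.0]     # predicted upload latency per client (s)

print(num_selected([1, 1, 0]), is_feasible([1, 1, 0], comp, up, deadline=6.0))
print(num_selected([1, 1, 1]), is_feasible([1, 1, 1], comp, up, deadline=6.0))
```

Selecting the third client raises the objective but violates the deadline, which is the trade-off the selection algorithm has to resolve.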

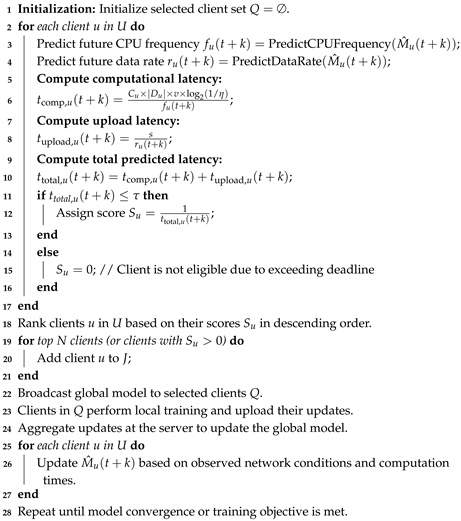

4. Mobility-Aware Client Selection (MACS) Algorithm

MACS applies a heuristic approach to maximize the number of selected clients while ensuring they meet the latency requirements: (1) All clients are initialized as unselected ($x_u = 0$). The set of selected clients ($\mathcal{S}$) is initially empty, and the iteration counter $t$ is set to zero. (2) Each client is evaluated based on its predicted latency, and scores are assigned accordingly. Clients with a positive score are ranked in descending order of score. (3) The top $N$ clients (or those whose scores meet the selection criterion) are selected to participate. During each iteration, clients are reassessed, and the resource allocation is adjusted to ensure the feasibility of participation. (4) A dynamic resource allocation strategy is employed, which ensures that the total allocated bandwidth for clients does not exceed the available bandwidth of the base station. The MACS algorithm iteratively updates client selection, ensuring the global model benefits from diverse and stable contributions while addressing both resource and mobility constraints. The MACS algorithm is summarized in Algorithm 1.

The MACS algorithm is composed of three core components:

Mobility Prediction Model: This module forecasts the future mobility states of clients based on historical data and trajectory patterns. It determines the likelihood of a client maintaining stable connectivity during training, enabling informed client selection.

Resource-Aware Client Evaluation: Clients are evaluated based on computational capacity, data rate, and predicted mobility states. This ensures that selected clients can contribute effectively without causing delays.

Dynamic Resource Allocation: Real-time feedback from participating clients is used to adjust computational resources and communication bandwidth dynamically. This adaptive allocation optimizes resource utilization while minimizing training delays.

Algorithm 1: Mobility-Aware Client Selection (MACS) [listing available only as an image]
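The selection steps and components described in this section can be sketched in code as follows. This is a minimal sketch under stated assumptions, not a reproduction of Algorithm 1: the score is taken to be the slack between the deadline and the predicted total latency, bandwidth is split equally among selected clients, and the lowest-ranked client is dropped until every remaining client meets the deadline. The `Client` fields, `macs_select`, and all numeric values are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Client:
    cid: int
    comp_latency: float  # predicted local-training time at t + dt (s)
    snr: float           # predicted linear SNR (p * g / N0) at t + dt


def upload_latency(size_bits: float, bandwidth_hz: float, snr: float) -> float:
    rate = bandwidth_hz * math.log2(1.0 + snr)
    return size_bits / rate if rate > 0.0 else math.inf


def macs_select(clients: List[Client], deadline: float, total_bw: float,
                size_bits: float, top_n: int) -> List[int]:
    # Steps 1-2: start with nobody selected, then score each client by its
    # slack against the deadline (assumed score), using the full bandwidth
    # as an optimistic estimate. Only positive scores are kept.
    scored = []
    for c in clients:
        total = c.comp_latency + upload_latency(size_bits, total_bw, c.snr)
        score = deadline - total
        if score > 0.0:
            scored.append((score, c))
    scored.sort(key=lambda item: item[0], reverse=True)

    # Step 3: take the top-N candidates in descending score order.
    selected = [c for _, c in scored[:top_n]]

    # Step 4: share the bandwidth equally (so the total never exceeds
    # total_bw) and drop the lowest-ranked client until all meet the deadline.
    while selected:
        share = total_bw / len(selected)
        if all(c.comp_latency + upload_latency(size_bits, share, c.snr) <= deadline
               for c in selected):
            break
        selected.pop()
    return [c.cid for c in selected]


clients = [Client(1, 1.0, 15.0), Client(2, 2.0, 3.0),
           Client(3, 3.0, 1.0), Client(4, 9.0, 1.0)]
print(macs_select(clients, deadline=10.0, total_bw=1e6, size_bits=4e6, top_n=3))
```

In this example, client 4 is rejected at the scoring stage, and client 3 is dropped once the bandwidth is shared, because its upload would no longer finish before the deadline. A greedy pass of this kind costs one sort plus at most N feasibility checks, which is consistent with the low overhead the section attributes to a heuristic approach.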

The MACS strategy addresses the challenges of federated learning (FL) in dynamic IoT environments characterized by client mobility and resource heterogeneity. By incorporating a mobility prediction model, resource-aware evaluation, and dynamic resource allocation, MACS optimizes the client selection process to ensure efficient training under stringent latency constraints. By dynamically predicting client states, MACS selects clients likely to maintain stable communication and computational capabilities throughout a training iteration. This approach enhances convergence rates and improves the overall efficiency of resource utilization in federated learning.

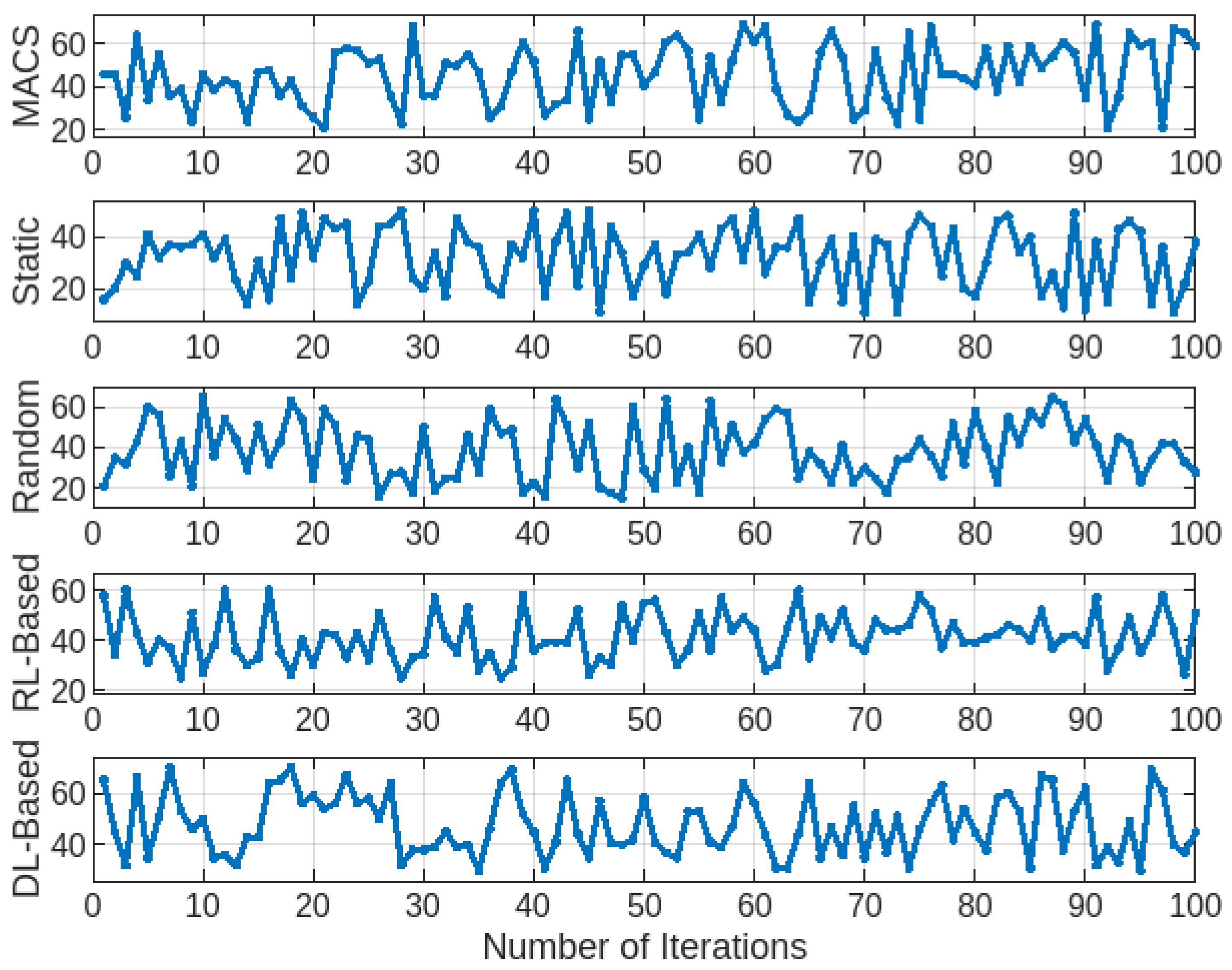

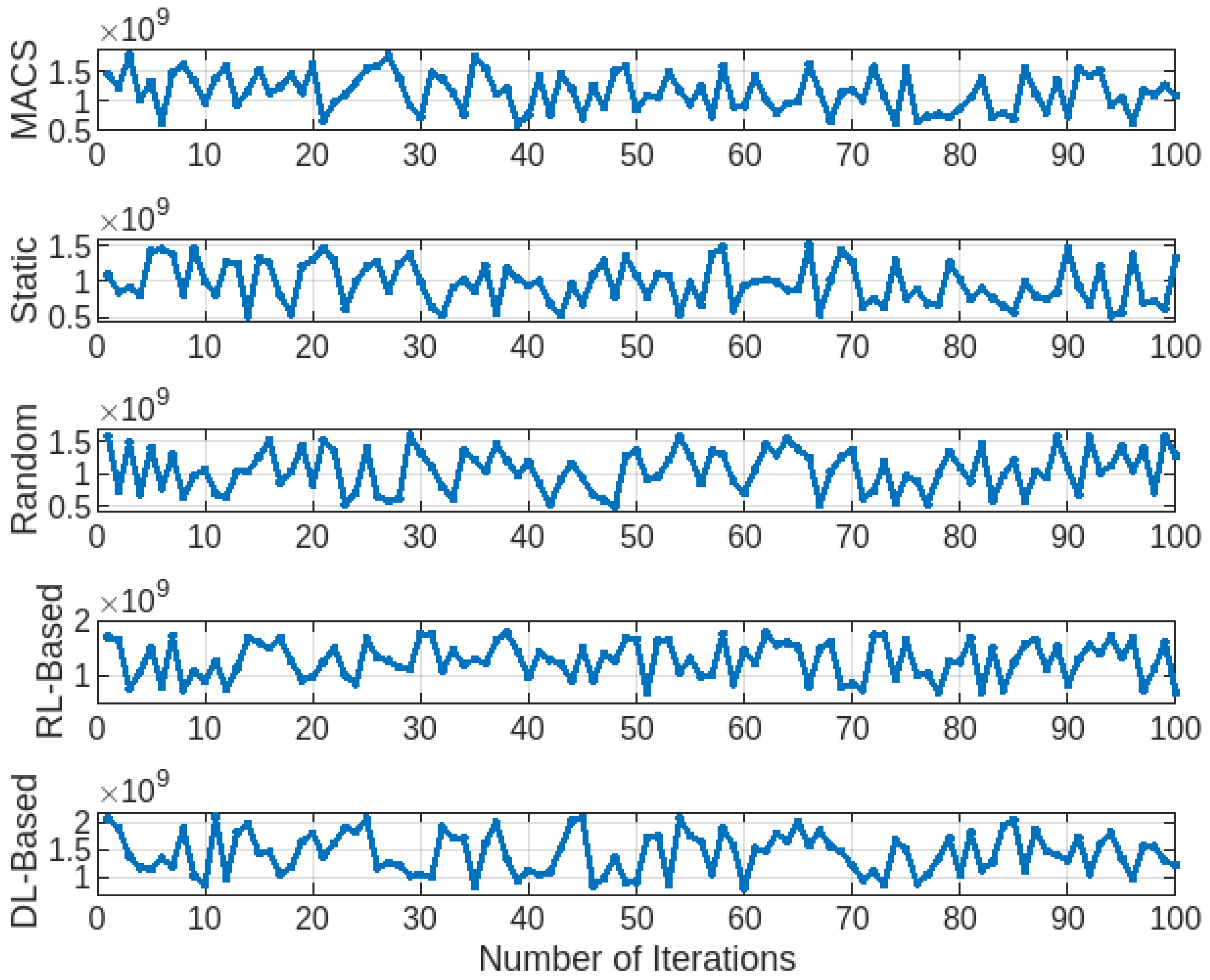

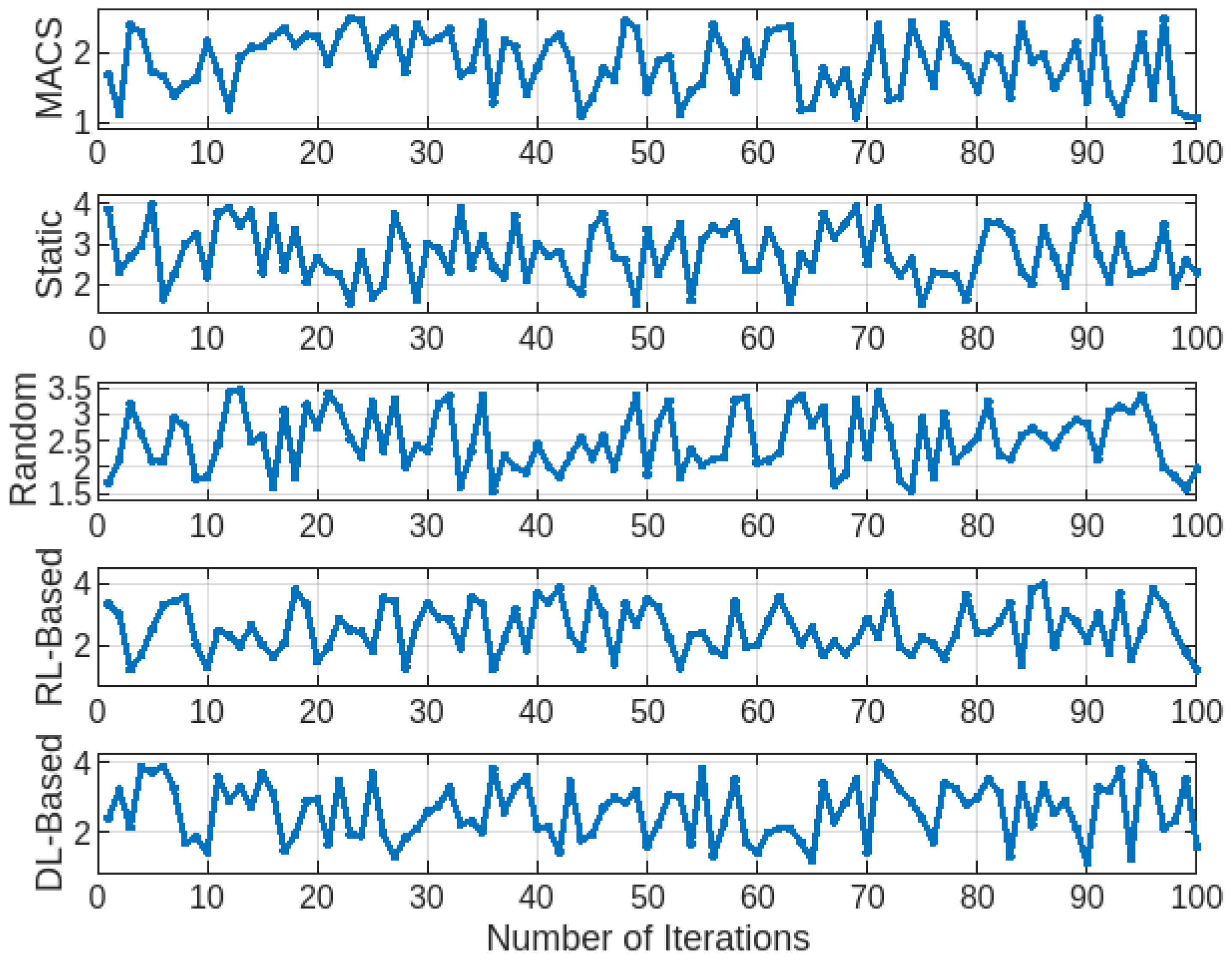

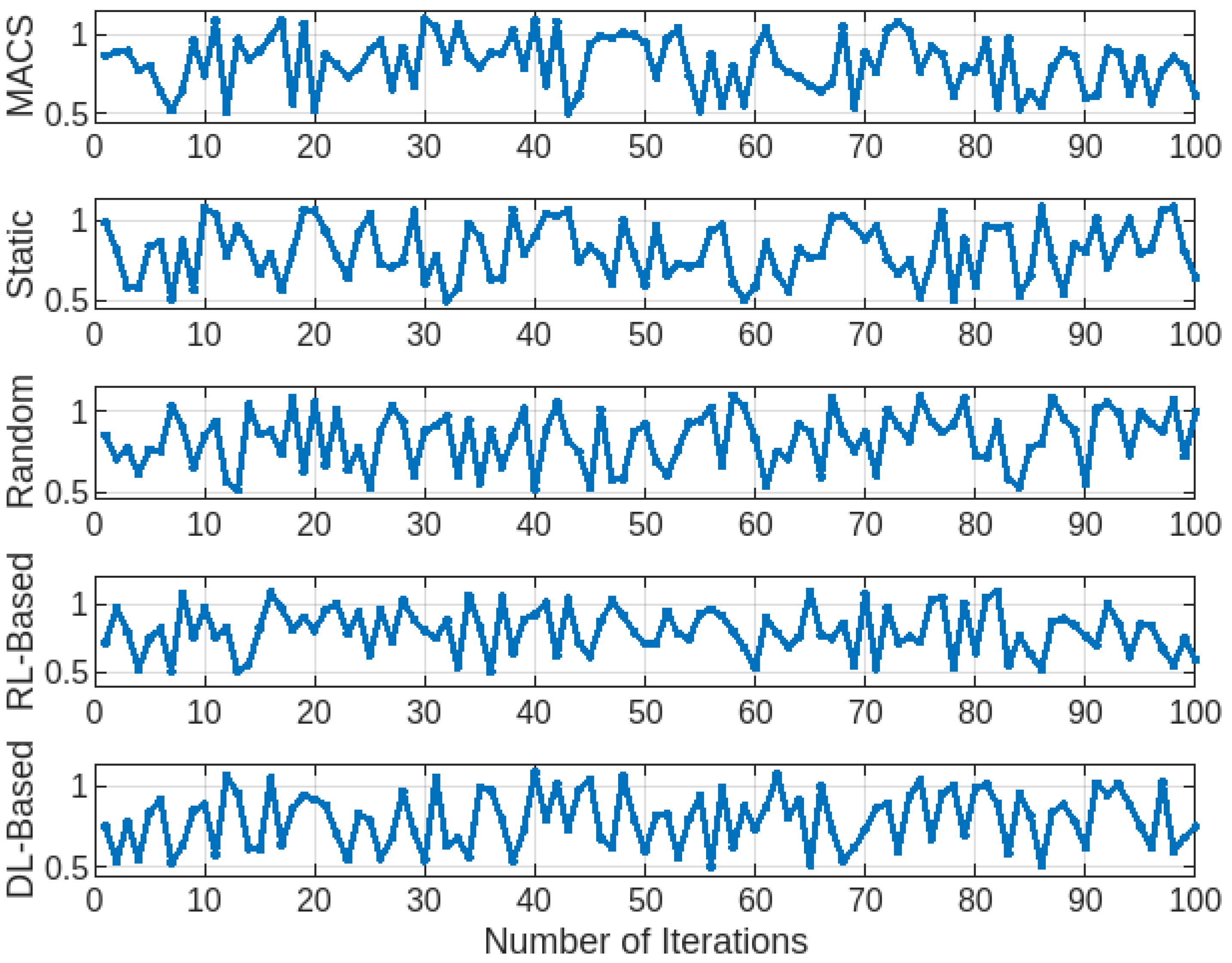

6. Conclusions

This paper presents the Mobility-Aware Client Selection (MACS) strategy to enhance federated learning (FL) in dynamic IoT environments. MACS improves client selection by integrating mobility prediction and resource-aware evaluation, ensuring stable participation under varying network and computational conditions. Simulation results demonstrate that MACS outperforms Random selection, Static selection, Reinforcement Learning-based FL (RL-based), and Deep Learning-based FL (DL-based) in key metrics such as the number of selected clients, network stability, and latency. While MACS significantly enhances selection efficiency, the introduction of mobility prediction adds some computational overhead, which may need optimization, especially for low-power IoT devices. Maintaining stable client participation remains particularly challenging in high-mobility environments like vehicular networks, where shorter prediction intervals or hierarchical selection strategies could improve performance. Future work will explore integrating deep learning models, including Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) networks, and Transformers, to refine mobility prediction by analyzing sequential patterns. Additionally, Federated Reinforcement Learning methods, such as Deep Q-Networks (DQN) and Actor–Critic models, could be considered to further refine client selection strategies based on real-time conditions. These advancements would enhance MACS’s adaptability while keeping its computational demands practical for IoT deployments. An interesting direction for future research is the integration of lightweight reinforcement learning techniques with MACS. Combining the strengths of both approaches could yield more efficient and adaptive client selection strategies, improving performance while keeping computational requirements manageable for resource-constrained IoT devices.

MACS offers a robust solution for improving federated learning in dynamic IoT environments. While challenges remain regarding computational overhead and high-mobility scenarios, the optimizations and enhancements outlined above could make MACS an even more effective tool for client selection in federated learning applications.