Adversarial Robustness Evaluation for Multi-View Deep Learning Cybersecurity Anomaly Detection

Abstract

1. Introduction

- (1) Development of a Multi-Dimensional, Multi-Level Adversarial Robustness Evaluation Framework: This study introduces an evaluation framework that systematically assesses the adversarial robustness of deep learning models for intrusion detection systems (IDSs). The framework varies two dimensions, perturbation strength ($\epsilon$ ranging from 0.01 to 0.2) and attack coverage (80% and 90% adversarial samples), using the TON_IoT and UNSW-NB15 datasets as benchmarks. Unlike conventional evaluations, which typically examine a single attack intensity or a fixed proportion of adversarial samples, this framework evaluates intrusion detection models across multiple levels of attack intensity and coverage, offering a more reliable foundation for practical IDS deployment and security assessment.

- (2) Multi-Level Validation of the Robustness of Multi-View DL Models: While prior studies have predominantly concentrated on enhancing the adversarial robustness of single-view models, this work applies a multi-view fusion strategy to validate the robustness advantages of multi-view architectures in adversarial settings. Experimental findings show that, by aggregating complementary information from multiple feature spaces, multi-view models withstand attacks that compromise single-view models. This integration strengthens the resistance of intrusion detection systems to adversarial threats and improves their stability and generalization across diverse threat scenarios.

- (3) Two-Dimensional Adversarial Training Strategy: This study presents a two-dimensional adversarial training framework that jointly modulates attack intensity (via progressive adjustment of $\epsilon$) and attack coverage (by varying the proportion of adversarial samples) during model training, thereby constructing a more challenging and diverse augmented training set. This strategy strengthens the model's resilience to known adversarial patterns. In contrast to conventional adversarial training, which typically targets a single perturbation strength, this method covers a broader and more representative set of adversarial examples, helping the model adapt to the complexity and diversity of real-world adversarial environments.

- (4) Dual Test for Performance and Robustness: In the testing phase, this study adopts a dual verification mechanism that assesses model performance on both clean and adversarial test sets. By analyzing both performance retention and adversarial robustness, the stability of multi-view models is examined across different evaluation environments. This approach addresses a limitation of conventional evaluation methods, which overlook adversarial scenarios, and provides a systematic methodology for validating reliable intrusion detection systems.

2. Related Work

3. Methodology

3.1. Overview

3.2. Model Design

- (1)

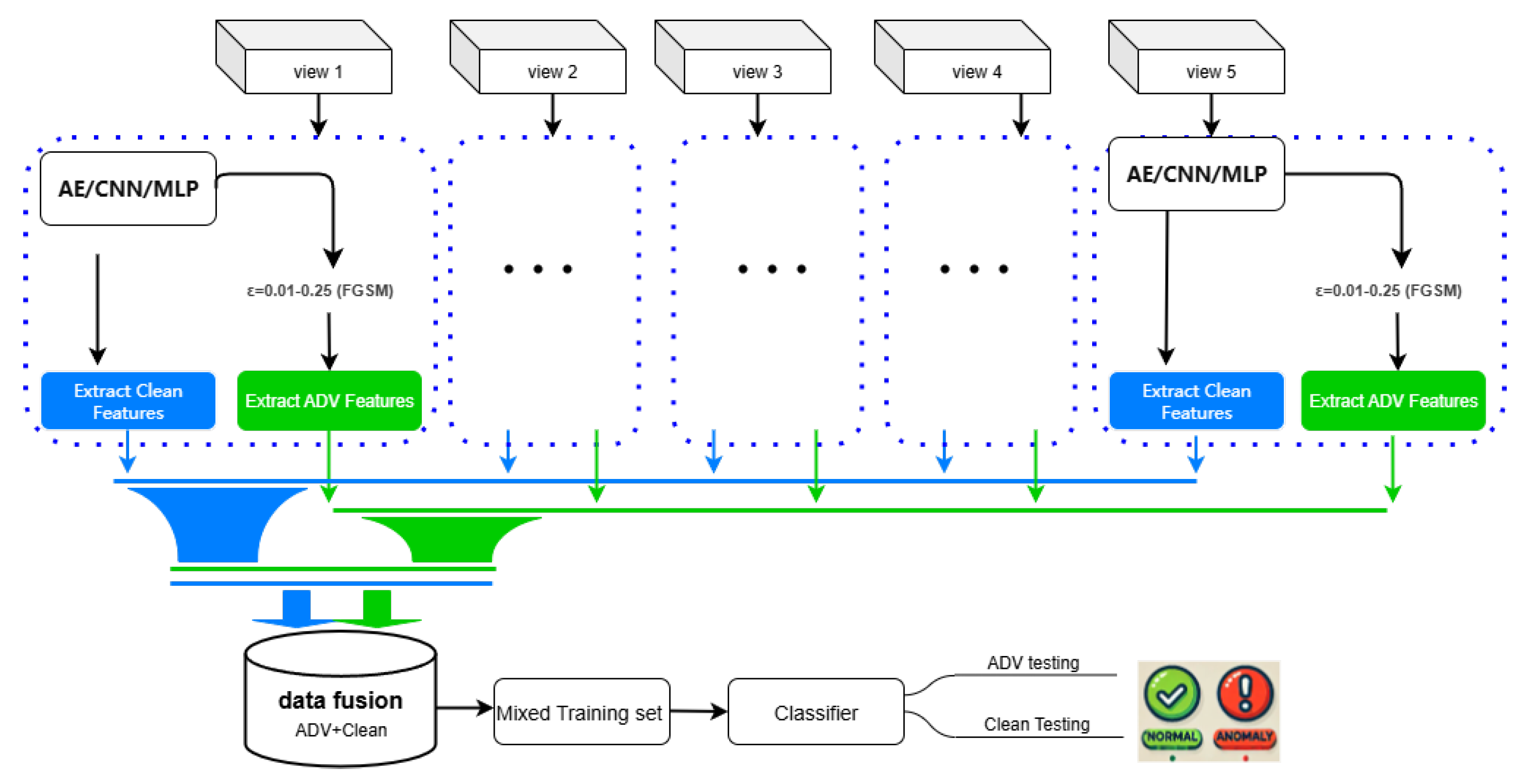

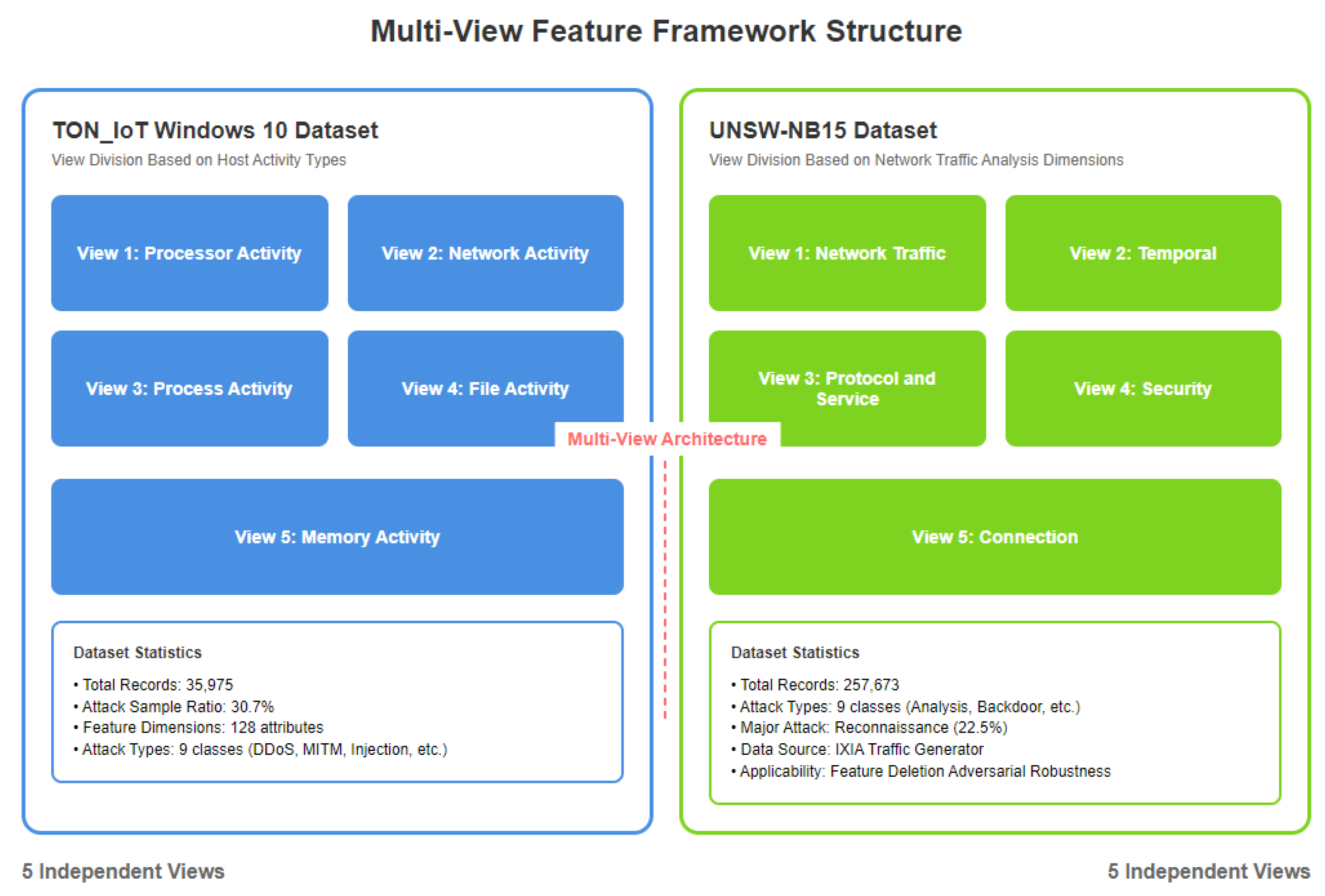

- View LayerThis layer consists of five distinct data views (View 1 to View 5), each representing a feature space. Each view independently processes the raw data using its feature extraction module—such as an AE, CNN, or multi-layer perceptron (MLP)—to extract both clean and adversarial features from the raw input. For single-view models, a single extraction module processes the entire dataset.

- (2)

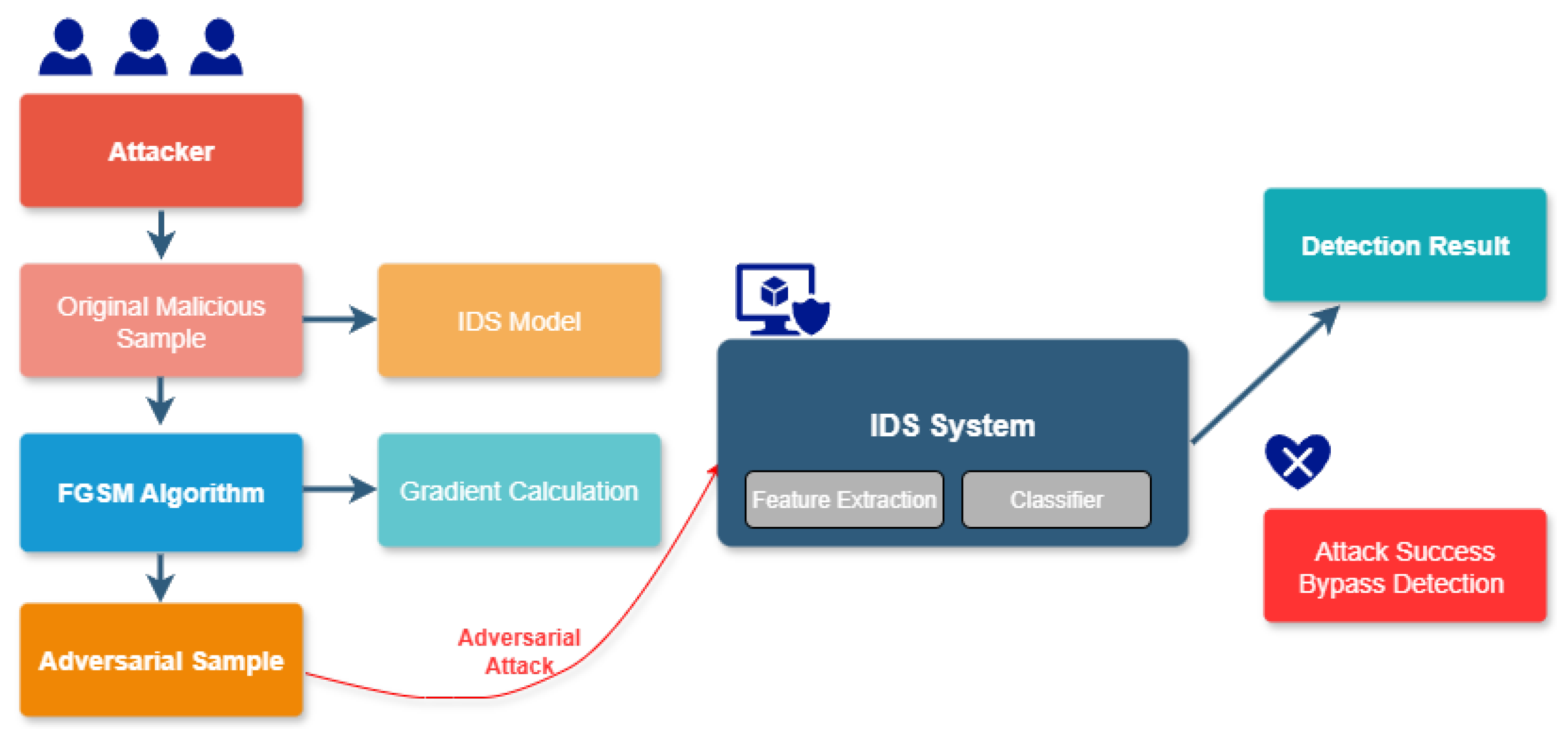

- Adversarial Sample Generation and Feature ExtractionPerturbation Application: During training, adversarial perturbations are introduced to the input data to create adversarial samples. The Fast Gradient Sign Method (FGSM) is adopted in this study as the adversarial attack approach. The perturbation is defined as follows [19]:where denotes the original input sample, y represents the corresponding label, J is the model loss function, and is the perturbation magnitude parameter that controls the deviation between the adversarial sample and the original input.Feature Extraction: For each view, both clean and adversarial data are processed by the corresponding AE, CNN, or MLP modules to generate clean features and adversarial features, respectively.

- (3) Feature Fusion Layer: In the multi-view model, clean and adversarial features from each view are combined by adjusting the proportion of adversarial examples in the training set (80% or 90%), thereby constructing training environments with different levels of attack coverage.

- (4) Classification Layer: The fused features are fed into a unified classifier to perform the intrusion detection task. The performance of single-view models (AE, CNN, MLP) and multi-view models (AE, CNN, DGCCA) is systematically compared to evaluate the benefits of multi-view fusion in terms of feature complementarity and adversarial robustness.
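The FGSM step described in the layer list above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: it assumes a hypothetical logistic-regression detector (weights `w`, bias `b`) so that the input gradient has a closed form, and it assumes features scaled to [0, 1], which is why the result is clipped to that range. All names (`fgsm`, `bce_loss`, `sigmoid`) are illustrative.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def bce_loss(w, b, X, y):
    """Mean binary cross-entropy of a logistic model (hypothetical stand-in detector)."""
    p = np.clip(sigmoid(X @ w + b), 1e-12, 1 - 1e-12)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))

def fgsm(w, b, X, y, eps, clip_range=(0.0, 1.0)):
    """x_adv = x + eps * sign(grad_x J(x, y)), clipped to the assumed feature range."""
    p = sigmoid(X @ w + b)
    # For logistic regression with BCE loss, dJ/dx = (p - y) * w per sample.
    grad_x = (p - y)[:, None] * w[None, :]
    X_adv = X + eps * np.sign(grad_x)
    return np.clip(X_adv, clip_range[0], clip_range[1])

# Synthetic data for illustration only (not from TON_IoT or UNSW-NB15).
rng = np.random.default_rng(0)
X = rng.uniform(0.0, 1.0, size=(200, 8))
w = rng.normal(size=8)
b = 0.1
y = (sigmoid(X @ w + b) > 0.5).astype(float)

X_adv = fgsm(w, b, X, y, eps=0.05)
print("clean loss:", bce_loss(w, b, X, y))
print("adversarial loss:", bce_loss(w, b, X_adv, y))
```

Each feature moves by at most `eps`, and every move is in the direction that increases the loss, which is what makes FGSM a cheap single-step attack. For the AE, CNN, and DGCCA models in this study the gradient would come from automatic differentiation rather than a closed form.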

Algorithm 1. Multi-view AE with FGSM-based Robust Training

Require: Preprocessed dataset D with label column label, subset sizes S, encoding dims E, FGSM perturbation strengths $\epsilon$

Ensure: Average F1 score, ROC-AUC, PR-AUC per $\epsilon$

1. Remove non-feature columns, impute missing values with the mean, and normalize with MinMaxScaler.
2. Stratified split of D into training/validation/test sets with ratio 8:1:1.
3. Partition features into 5 views according to a dataset-specific grouping rule.
4. For each view, train a shallow AutoEncoder and extract encoded features.
5. Generate adversarial samples using FGSM on each view: $x^{adv} = x + \epsilon \cdot \operatorname{sign}\left(\nabla_{x} J(x, y)\right)$.
6. Concatenate all view encodings into a clean fused feature set and an adversarial fused feature set.
7. Construct a mixed training set by replacing 80% of clean samples with adversarial ones.
8. Train an MLP classifier with 1 hidden layer on the fused features.
9. Tune the decision threshold on the validation set to maximize F1 score.
10. Evaluate the classifier on the test set using F1 score, ROC-AUC, and PR-AUC.
11. For each $\epsilon$, repeat steps 5–10 with 30 random seeds (30 runs) and report results as mean ± standard deviation.
12. Return average metrics (F1, ROC-AUC, PR-AUC) for each $\epsilon$.
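Step 7 of Algorithm 1 (replacing a fixed share of clean samples with their adversarial counterparts) might look like the following sketch. The function name `build_mixed_training_set`, the `seed` argument, and the returned `is_adv` mask are assumptions made for illustration; the source does not specify how the replaced rows are chosen, so uniform random selection without replacement is assumed here.

```python
import numpy as np

def build_mixed_training_set(X_clean, X_adv, y, adv_ratio, seed=0):
    """Replace a fraction `adv_ratio` of clean rows with their adversarial versions.

    Row i of X_adv is assumed to be the perturbed version of row i of X_clean,
    so labels are unchanged by the replacement.
    """
    if X_clean.shape != X_adv.shape:
        raise ValueError("clean and adversarial arrays must have the same shape")
    if not 0.0 <= adv_ratio <= 1.0:
        raise ValueError("adv_ratio must be in [0, 1]")
    rng = np.random.default_rng(seed)
    n = X_clean.shape[0]
    n_adv = int(round(adv_ratio * n))
    # Assumption: rows to replace are drawn uniformly at random.
    adv_idx = rng.choice(n, size=n_adv, replace=False)
    X_mixed = X_clean.copy()
    X_mixed[adv_idx] = X_adv[adv_idx]
    is_adv = np.zeros(n, dtype=bool)
    is_adv[adv_idx] = True
    return X_mixed, y.copy(), is_adv

# Toy arrays: clean rows are all zeros, adversarial rows all ones, so the mix is easy to inspect.
X_clean = np.zeros((10, 3))
X_adv = np.ones((10, 3))
y = np.arange(10) % 2
X_mixed, y_mixed, is_adv = build_mixed_training_set(X_clean, X_adv, y, adv_ratio=0.8)
print("adversarial rows:", int(is_adv.sum()), "of", len(is_adv))
```

Running the same function with `adv_ratio=0.9` gives the second attack-coverage level used in the study.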

Algorithm 2. Multi-view CNN with FGSM-Adversarial Training

Require: Preprocessed dataset D, subset sizes S, perturbations $\epsilon$, FGSM ratio

Ensure: F1 score, ROC-AUC, PR-AUC for each $\epsilon$

1. Clean missing values, normalize features, and split D into train/val/test sets.
2. Divide features into 5 subsets.
3. For each subset, compute the reshape shape used to convert feature vectors into 2D tensors.
4. For each $\epsilon$ and each run:
   - 4.1 For each view: reshape the view's inputs to the computed 2D shape; train a small CNN to perform binary classification; generate FGSM adversarial samples with the function generate_fgsm_samples, which takes the view model, X, y, and $\epsilon$, computes and applies the FGSM perturbation, and returns the clipped result; create a mixed training set with the given ratio of adversarial samples; store the reshaped and mixed inputs for the final model.
   - 4.2 Build a shared multi-input CNN model: each input → CNN branch → concatenated embedding, followed by fully connected layers for binary prediction.
   - 4.3 Train on multi-view inputs using binary cross-entropy loss.
   - 4.4 On the validation set, find the threshold that maximizes F1 score.
   - 4.5 Evaluate on the test set: compute F1, ROC-AUC, and PR-AUC.
5. Repeat steps 1–4 with 30 different random seeds (30 runs), and report results as mean ± standard deviation.
6. Return performance metrics per $\epsilon$.
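Step 3 of Algorithm 2 converts each view's feature vectors into 2D tensors, but the source does not state the reshape rule. The sketch below shows one plausible choice, assumed purely for illustration: zero-pad each vector to the next perfect square and reshape it to a square single-channel image. The name `to_2d_tensor` is hypothetical.

```python
import math
import numpy as np

def to_2d_tensor(X):
    """Zero-pad feature vectors to the next perfect square and reshape to (n, side, side, 1).

    Assumption: square, single-channel layout; the paper's actual rule may differ.
    """
    n_samples, n_features = X.shape
    side = math.isqrt(n_features)
    if side * side < n_features:
        side += 1
    padded = np.zeros((n_samples, side * side), dtype=X.dtype)
    padded[:, :n_features] = X
    return padded.reshape(n_samples, side, side, 1)

# A view with 10 features becomes a 4x4 image with 6 padded zeros per sample.
X_view = np.arange(20, dtype=np.float32).reshape(2, 10)
X_img = to_2d_tensor(X_view)
print(X_img.shape)
```

Padding with zeros keeps the original feature values untouched; the padded cells carry no information, so FGSM perturbations on those positions would need to be masked or clipped in a full pipeline.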

Algorithm 3. DGCCA with FGSM-Adversarial Training

Require: Multi-view data, label vectors Y, network config, $\epsilon$ values, adversarial ratio

Ensure: Performance metrics (F1, ROC-AUC, PR-AUC) for each $\epsilon$

1. Preprocess dataset: missing value imputation, normalization, stratified split.
2. Partition features into V views (5 views).
3. For each $\epsilon$, for each run (total r times):
   - Initialize a DGCCA model with V MLP subnets and CCA loss.
   - Generate FGSM adversarial views with the function fgsm_attack, which returns the perturbed input.
   - Select the adversarial ratio of samples and perturb each view with the FGSM gradient.
   - Combine clean and perturbed views as training input.
   - Train with adversarial data to minimize CCA loss.
   - If enabled, apply post-training linear GCCA to obtain projections.
   - On the validation set, evaluate using a majority vote of view outputs → predicted label, then compute F1 score, ROC-AUC, and PR-AUC.
   - Record scores.
4. Report metrics as mean ± standard deviation over 30 runs for each $\epsilon$.
5. Return: performance trends across $\epsilon$ values.
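The majority-vote step in Algorithm 3 can be sketched as follows. This is an illustrative reading, assuming each view emits a hard 0/1 label per sample; the helper name `majority_vote` is hypothetical, and with an odd number of views (5 here) no tie-breaking rule is needed.

```python
import numpy as np

def majority_vote(view_predictions):
    """Combine per-view binary predictions of shape (n_views, n_samples) into one label per sample."""
    votes = np.asarray(view_predictions)
    if votes.ndim != 2:
        raise ValueError("expected an array of shape (n_views, n_samples)")
    positive_votes = votes.sum(axis=0)
    # A sample is labeled 1 when strictly more than half of the views vote 1.
    return (2 * positive_votes > votes.shape[0]).astype(int)

# Five views, four samples (toy labels for illustration only).
view_predictions = [
    [1, 0, 1, 0],
    [1, 0, 0, 0],
    [0, 1, 1, 0],
    [1, 0, 1, 1],
    [0, 0, 1, 1],
]
fused = majority_vote(view_predictions)
print(fused)
```

Because a hard vote discards score magnitudes, ROC-AUC and PR-AUC would have to be computed from a continuous quantity such as the fraction of positive votes or averaged view scores; the source does not say which is used.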

3.3. Adversarial Training Pipeline

- (1)

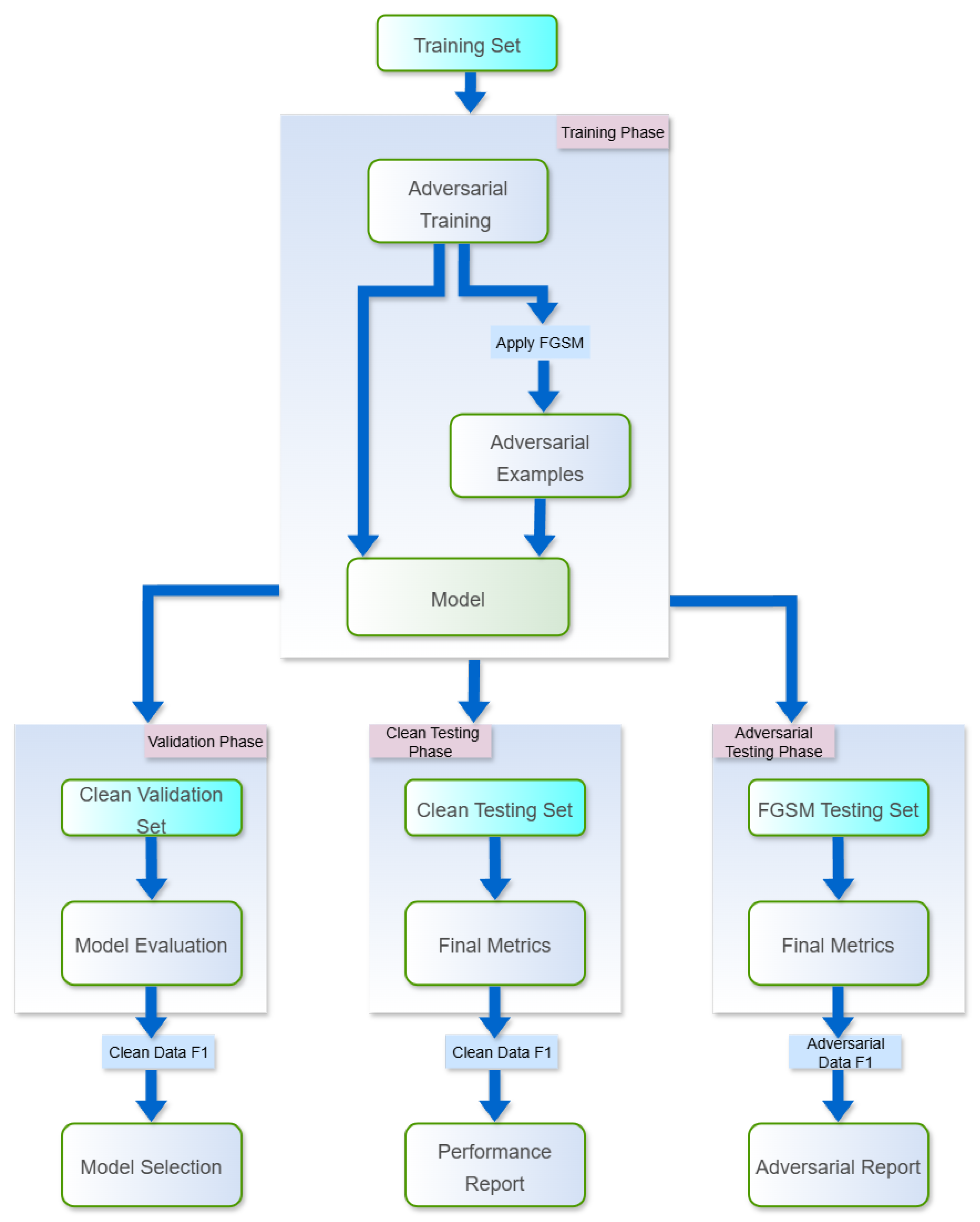

- Training PhaseAdversarial Sample Generation: The FGSM attack is applied to the original training set, with the perturbation intensity parameter () adjusted to generate adversarial samples at varying attack strengths.Construction of Mixed Training Set: Adversarial samples are combined with clean data at predefined ratios (e.g., 80%, 90%) to simulate environments with different levels of attack coverage, resulting in an augmented training set.

- (2) Validation Phase. The clean validation set is used to evaluate model performance (e.g., F1 score), and the candidate model with the best performance on benign data is selected. This ensures that the basic detection capability is maintained and not compromised by adversarial training.

- (3) Testing Phase. Clean Testing: The model's performance is evaluated on an unperturbed test set to assess its effectiveness and reliability in real-world scenarios. Adversarial Testing: The model's robustness is quantified using an adversarial test set generated by FGSM, with analyses conducted across varying perturbation strengths ($\epsilon$) and attack coverage levels to evaluate stability under adversarial conditions.
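The validation and dual-testing phases above can be sketched in a few lines. This is a simplified illustration, not the authors' code: it assumes the classifier outputs a score in [0, 1] per sample, tunes the decision threshold on the validation set over a hypothetical grid of candidates, and then reports F1 separately on clean and adversarial test scores. The names `f1_score_binary`, `tune_threshold`, and `dual_test` are illustrative, and the scores are toy values.

```python
import numpy as np

def f1_score_binary(y_true, y_pred):
    """F1 for the positive class; returns 0.0 when there are no positives at all."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    tp = int(np.sum((y_true == 1) & (y_pred == 1)))
    fp = int(np.sum((y_true == 0) & (y_pred == 1)))
    fn = int(np.sum((y_true == 1) & (y_pred == 0)))
    denom = 2 * tp + fp + fn
    return 0.0 if denom == 0 else 2 * tp / denom

def tune_threshold(y_val, scores_val, candidates=None):
    """Pick the candidate threshold with the highest validation F1 (first one on ties)."""
    if candidates is None:
        candidates = np.linspace(0.05, 0.95, 19)  # assumed grid
    scores_val = np.asarray(scores_val)
    f1s = [f1_score_binary(y_val, (scores_val >= t).astype(int)) for t in candidates]
    best = int(np.argmax(f1s))
    return float(candidates[best]), f1s[best]

def dual_test(y_test, scores_clean, scores_adv, threshold):
    """Report F1 on the clean and the adversarial test set with one fixed threshold."""
    clean_pred = (np.asarray(scores_clean) >= threshold).astype(int)
    adv_pred = (np.asarray(scores_adv) >= threshold).astype(int)
    return {
        "clean_f1": f1_score_binary(y_test, clean_pred),
        "adv_f1": f1_score_binary(y_test, adv_pred),
    }

# Toy scores for illustration only.
threshold, val_f1 = tune_threshold([0, 0, 1, 1, 1, 0], [0.12, 0.42, 0.37, 0.81, 0.93, 0.22])
report = dual_test([1, 0, 1, 0], [0.9, 0.1, 0.8, 0.2], [0.9, 0.6, 0.1, 0.2], threshold)
print(threshold, val_f1, report)
```

Fixing the threshold before either test set is scored keeps the clean and adversarial results directly comparable, which is the point of the dual verification.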

3.4. Adversarial Attacks and Sample Generation

4. Preparation for the Experiment

4.1. Data Set

4.2. Multi-View Framework Design

4.3. Data Preprocessing

5. Experiment and Results

5.1. Experiment Outline

5.1.1. Evaluation Under Different Adversarial Sample Ratios (80%, 90%)

5.1.2. Evaluation Based on Perturbation Intensity ($\epsilon$ from 0.01 to 0.2)

5.1.3. Dual Verification of Model Robustness and Stability

5.2. Experimental Deployment Based on the TON_IoT Dataset

5.3. Experimental Deployment Based on the UNSW-NB15 Dataset

6. Conclusions

6.1. Experimental Conclusion Analysis

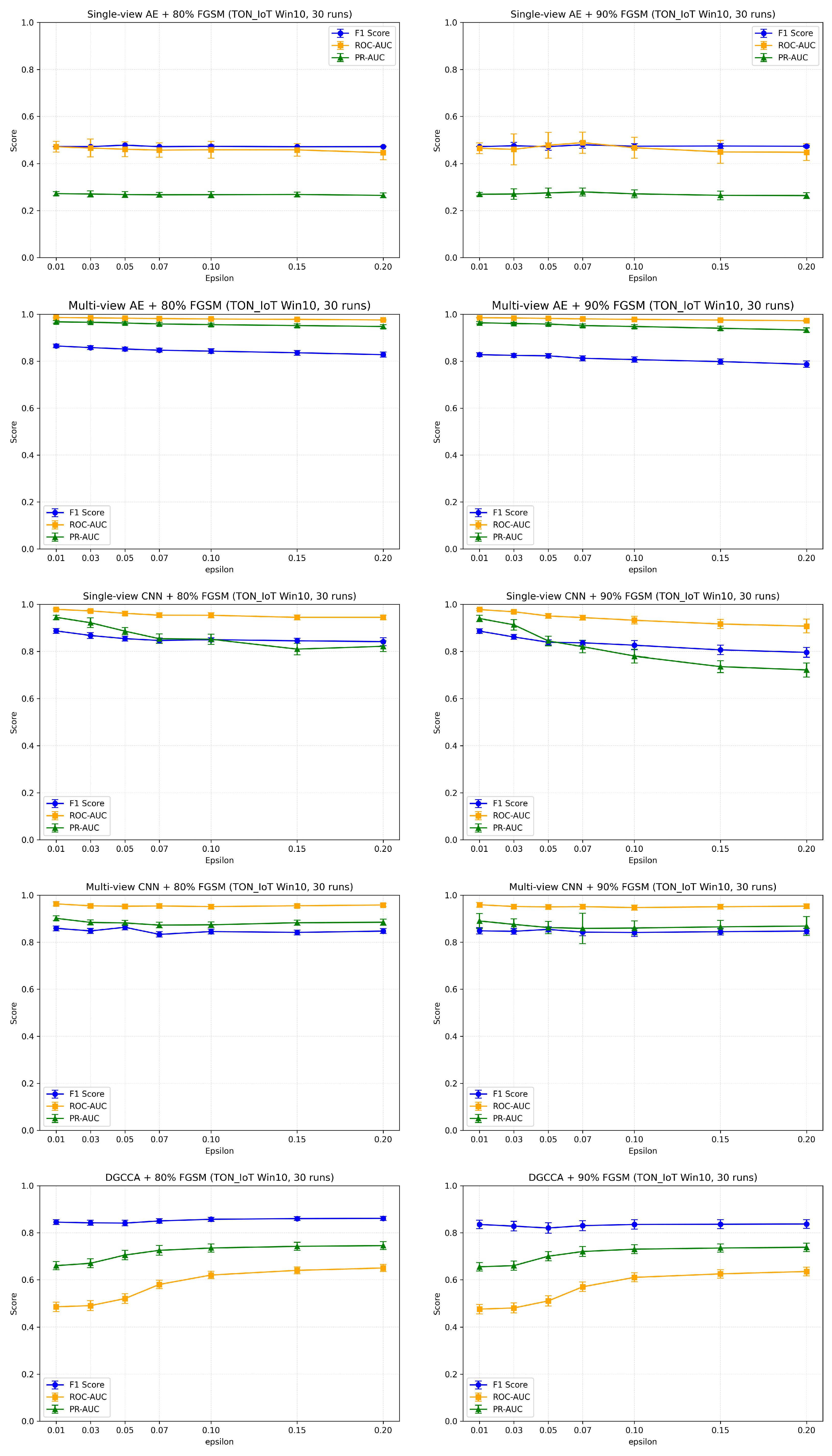

6.1.1. Analysis Based on the TON_IoT Dataset

- (1) After introducing perturbations and varying proportions of adversarial examples, all models exhibited some performance degradation on both the clean and adversarial test sets, reflecting the well-known robustness–accuracy trade-off in adversarial training. The degradation generally ranged between 3% and 7%, but some models, particularly the single-view AE, dropped far more sharply and came close to complete performance failure. A sharper decline indicates lower stability under adversarial conditions. In contrast, multi-view models demonstrated more moderate performance degradation, suggesting better adversarial robustness. Among them, the multi-view CNN achieved the most balanced results across both the clean and adversarial test sets, representing the best trade-off between performance and robustness among all evaluated models. These findings indicate that the multi-view fusion strategy, especially when combined with a well-structured CNN architecture, enhances robustness against adversarial attacks while maintaining better stability on clean distributions.

- (2) Under varying perturbation intensities, the F1 score of the multi-view CNN decreases by less than 0.04 across the evaluated range of $\epsilon$, maintaining a relatively stable trend. The multi-view AE similarly exhibits limited performance fluctuation, with a ΔF1 not exceeding 0.03. While the single-view CNN shows a downward trend, it still performs better than DGCCA. In contrast, the PR-AUC and ROC-AUC curves of the single-view AE and DGCCA fluctuate significantly under medium to high perturbation levels. Overall, the multi-view AE and multi-view CNN exhibit more stable performance across different perturbation intensities, with smoother metric curves and slower degradation, indicating stronger resistance to adversarial perturbations.

6.1.2. Analysis Based on the UNSW-NB15 Dataset

- (1) The trend is consistent with the TON_IoT results: after adversarial training, the F1 scores of all models decline to varying degrees. However, on both the clean and adversarial test sets, multi-view models consistently demonstrate stronger resistance to adversarial interference, confirming their robustness advantage.

- (2) Under different perturbation intensities, the F1 score of the multi-view CNN fluctuates by only about 0.02 across the evaluated range of $\epsilon$, maintaining the most stable performance. The multi-view AE also shows excellent stability. In contrast, the single-view CNN experiences a significant drop in performance. The single-view AE shows a highly unstable curve and is easily affected by perturbations. Although the DGCCA model remains relatively stable in F1, its overall performance is limited, and its ROC-AUC curve fluctuates considerably. These results further indicate that multi-view fusion models maintain superior stability and robustness under adversarial conditions.

6.2. Comprehensive Conclusion

- (1) The multi-view fusion strategy significantly outperforms the single-view baseline models. In particular, the multi-view CNN achieves the highest F1 scores across both datasets, multiple attack ratios, and various perturbation intensities.

- (2) The multi-view models exhibit superior resistance to adversarial perturbations. They demonstrate the smallest degradation in F1, ROC-AUC, and PR-AUC scores as $\epsilon$ increases, indicating good robustness under escalating attack strengths.

- (3) The multi-view models retain strong generalization ability. Compared to single-view models, they maintain comparable performance on both clean and adversarial test sets, suggesting that adversarial training does not substantially compromise their original detection capabilities.

- (4) DGCCA is a special case among multi-view models. Unlike other multi-view architectures that focus on feature extraction within each individual view followed by simple concatenation, DGCCA explicitly models inter-view correlations by projecting all views into a shared latent subspace. While this design captures structural dependencies between views, it may also amplify inter-view interference. As a result, DGCCA tends to exhibit weaker overall performance on both sets compared to some single-view baselines.

6.3. Theoretical Contributions and Practical Value

6.4. Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Aldhaheri, A.; Alwahedi, F.; Ferrag, M.A.; Battah, A. Deep learning for cyber threat detection in IoT networks: A review. Internet Things Cyber-Phys. Syst. 2024, 4, 110–128.

- Lin, Z.; Shi, Y.; Xue, Z. IDSGAN: Generative Adversarial Networks for Attack Generation against Intrusion Detection Systems. In Proceedings of the Advances in Knowledge Discovery and Data Mining: 26th Pacific-Asia Conference, Chengdu, China, 16–19 May 2022.

- Zhang, J.; Sun, H.; Wang, J. Malicious domain name detection model based on CNN-LSTM. In Proceedings of the Third International Conference on Computer Communication and Network Security (CCNS 2022), Hohhot, China, 15–17 July 2022.

- Bai, J.; Ju, L. Intrusion Detection Algorithm Based on Adversarial Autocoder. J. Southwest China Norm. Univ. Nat. Sci. Ed. 2021, 46, 77–83.

- Pelekis, S.; Koutroubas, T.; Blika, A.; Berdelis, A.; Karakolis, E.; Ntanos, C.; Spiliotis, E.; Askounis, D. Adversarial machine learning: A review of methods, tools, and critical industry sectors. Artif. Intell. Rev. 2025, 58, 226.

- Li, Y.; Xu, Y.; Liu, Z.; Hou, H.; Zheng, Y.; Xin, Y.; Zhao, Y.; Cui, L. Robust detection for network intrusion of industrial IoT based on multi-CNN fusion. Measurement 2020, 154, 107450.

- Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.; Fergus, R. Intriguing properties of neural networks. arXiv 2013, arXiv:1312.6199.

- Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and Harnessing Adversarial Examples. arXiv 2014, arXiv:1412.6572.

- Alotaibi, A.; Rassam, M.A. Adversarial Machine Learning Attacks against Intrusion Detection Systems: A Survey on Strategies and Defense. Future Internet 2023, 15, 62.

- Issa, M.; Aljanabi, M.; Muhialdeen, H. Systematic literature review on intrusion detection systems: Research trends, algorithms, methods, datasets, and limitations. J. Intell. Syst. 2024, 33, 20230248.

- Sajid, M.; Malik, K.R.; Almogren, A.; Malik, T.S.; Khan, A.H.; Tanveer, J.; Rehman, A.U. Enhancing intrusion detection: A hybrid machine and deep learning approach. J. Cloud Comput. 2024, 13, 123.

- Zhang, Y.; Muniyandi, R.C.; Qamar, F. A Review of Deep Learning Applications in Intrusion Detection Systems: Overcoming Challenges in Spatiotemporal Feature Extraction and Data Imbalance. Appl. Sci. 2025, 15, 1552.

- Barni, M.; Kallas, K.; Nowroozi, E.; Tondi, B. On the Transferability of Adversarial Examples against CNN-based Image Forensics. In Proceedings of the ICASSP 2019—IEEE International Conference on Acoustics, Speech and Signal Processing, Brighton, UK, 12–17 May 2019; pp. 8286–8290.

- Han, S.; Lin, C.; Shen, C.; Wang, Q.; Guan, X. Interpreting Adversarial Examples in Deep Learning: A Review. ACM Comput. Surv. 2023, 55, 328.

- Sauka, K.; Shin, G.-Y.; Kim, D.-W.; Han, M.-M. Adversarial Robust and Explainable Network Intrusion Detection Systems Based on Deep Learning. Appl. Sci. 2022, 12, 6451.

- Liu, H.; Lang, B. Machine Learning and Deep Learning Methods for Intrusion Detection Systems: A Survey. Appl. Sci. 2019, 9, 4396.

- Li, Y.; Li, L.; Wang, L.; Zhang, T.; Gong, B. NATTACK: Learning the Distributions of Adversarial Examples for an Improved Black-Box Attack on Deep Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019.

- Awad, Z.; Zakaria, M.; Hassan, R. An enhanced ensemble defense framework for boosting adversarial robustness of intrusion detection systems. Sci. Rep. 2025, 15, 14177.

- Chowdhury, K. Adversarial Machine Learning: Attacking and Safeguarding Image Datasets. In Proceedings of the 2024 4th International Conference on Ubiquitous Computing and Intelligent Information Systems (ICUIS), Gobichettipalayam, India, 12–13 December 2024; pp. 1360–1365.

- Halbouni, A.; Gunawan, T.S.; Habaebi, M.H.; Halbouni, M.; Kartiwi, M.; Ahmad, R. CNN-LSTM: Hybrid Deep Neural Network for Network Intrusion Detection System. IEEE Access 2022, 10, 99837–99849.

- Li, M.; Qiao, Y.; Lee, B. A Comparative Analysis of Single and Multi-View Deep Learning for Cybersecurity Anomaly Detection. IEEE Access 2025, 13, 83996–84012.

- Lella, E.; Macchiarulo, N.; Pazienza, A.; Lofù, D.; Abbatecola, A.; Noviello, P. Improving the Robustness of DNNs-based Network Intrusion Detection Systems through Adversarial Training. arXiv 2023, arXiv:10193009.

- Moustafa, N. TON_IoT Datasets. UNSW Canberra. 2022. Available online: https://research.unsw.edu.au/projects/toniot-datasets (accessed on 3 April 2022).

- Moustafa, N.; Slay, J. UNSW-NB15: A comprehensive data set for network intrusion detection systems. In Proceedings of the Military Communications and Information Systems Conference (MilCIS), Canberra, ACT, Australia, 10–12 November 2015.

- Moustafa, N.; Slay, J. The evaluation of Network Anomaly Detection Systems: Statistical analysis of the UNSW-NB15 dataset and the comparison with the KDD99 dataset. Inf. Secur. J. A Glob. Perspect. 2016, 25, 18–31.

| Models | F1 Score (0% adv) | F1 Score (80% adv) | F1 Score (90% adv) |
|---|---|---|---|
| Single-view AE | 0.72 | 0.4778 ± 0.0003 | 0.4718 ± 0.0162 |
| Single-view CNN | 0.892 | 0.8539 ± 0.0123 | 0.8386 ± 0.0130 |
| Multi-view AE | 0.871 | 0.8521 ± 0.0083 | 0.8231 ± 0.0091 |
| Multi-view CNN | 0.925 | 0.8631 ± 0.0104 | 0.8543 ± 0.0112 |
| DGCCA | 0.86 | 0.8418 ± 0.0004 | 0.8020 ± 0.0223 |
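The degradation implied by the table above can be worked out directly from the reported means. The short sketch below computes the absolute and relative F1 drop from the 0% column to the 80% and 90% columns; the helper name `f1_drop` is illustrative, and the standard deviations are omitted for brevity.

```python
# Mean F1 values copied from the table above: (0% adv, 80% adv, 90% adv).
f1_table = {
    "Single-view AE": (0.72, 0.4778, 0.4718),
    "Single-view CNN": (0.892, 0.8539, 0.8386),
    "Multi-view AE": (0.871, 0.8521, 0.8231),
    "Multi-view CNN": (0.925, 0.8631, 0.8543),
    "DGCCA": (0.86, 0.8418, 0.8020),
}

def f1_drop(baseline, attacked):
    """Return (absolute drop, relative drop in percent) from baseline to attacked F1."""
    absolute = baseline - attacked
    relative_pct = 100.0 * absolute / baseline
    return absolute, relative_pct

for model, (f0, f80, f90) in f1_table.items():
    abs80, rel80 = f1_drop(f0, f80)
    abs90, rel90 = f1_drop(f0, f90)
    print(f"{model:16s} 80%: -{abs80:.4f} ({rel80:.1f}%)   90%: -{abs90:.4f} ({rel90:.1f}%)")
```

Reading the drop in both absolute and relative terms matters here: the multi-view CNN keeps the highest F1 in every column even though its drop from its own baseline is not the smallest, because its baseline is the highest.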

| Models | F1 Score (0% adv) | F1 Score (80% adv) | F1 Score (90% adv) |
|---|---|---|---|
| Single-view AE | 0.72 | 0.4722 ± 0.0140 | 0.4719 ± 0.00172 |
| Single-view CNN | 0.892 | 0.6184 ± 0.0130 | 0.6010 ± 0.0128 |
| Multi-view AE | 0.871 | 0.7817 ± 0.0075 | 0.7362 ± 0.0079 |
| Multi-view CNN | 0.925 | 0.7823 ± 0.0024 | 0.7396 ± 0.0054 |
| DGCCA | 0.86 | 0.6433 ± 0.0120 | 0.5480 ± 0.0146 |

| Models | F1 Score (0% adv) | F1 Score (80% adv) | F1 Score (90% adv) |
|---|---|---|---|
| Single-view AE | 0.797 | 0.6121 ± 0.022 | 0.3629 ± 0.015 |
| Single-view CNN | 0.806 | 0.7641 ± 0.0156 | 0.7588 ± 0.0209 |
| Multi-view AE | 0.848 | 0.8243 ± 0.014 | 0.8162 ± 0.014 |
| Multi-view CNN | 0.856 | 0.8476 ± 0.012 | 0.8237 ± 0.014 |
| DGCCA | 0.8 | 0.7589 ± 0.015 | 0.7530 ± 0.0218 |

| Models | F1 Score (0% adv) | F1 Score (80% adv) | F1 Score (90% adv) |
|---|---|---|---|
| Single-view AE | 0.797 | 0.6232 ± 0.017 | 0.3514 ± 0.013 |
| Single-view CNN | 0.806 | 0.6481 ± 0.0096 | 0.6221 ± 0.0085 |
| Multi-view AE | 0.848 | 0.75128 ± 0.0054 | 0.7324 ± 0.0071 |
| Multi-view CNN | 0.856 | 0.7568 ± 0.0051 | 0.7344 ± 0.0060 |
| DGCCA | 0.8 | 0.6161 ± 0.13 | 0.4879 ± 0.14 |

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, M.; Qiao, Y.; Lee, B. Adversarial Robustness Evaluation for Multi-View Deep Learning Cybersecurity Anomaly Detection. Future Internet 2025, 17, 459. https://doi.org/10.3390/fi17100459
