Abstract

Object detection and human action recognition have great significance in many real-world applications. Understanding how a human being interacts with different objects, i.e., human–object interaction, is also crucial in this regard since it enables diverse applications related to security, surveillance, and immersive reality. Thus, this study explored the potential of using a wearable camera for object detection and human–object interaction recognition, which is a key technology for the future Internet and ubiquitous computing. We propose a system that uses an egocentric camera view to recognize objects and human–object interactions by analyzing the wearer’s hand pose. Our novel idea leverages the hand joint data of the user, which were extracted from the egocentric camera view, for recognizing different objects and related interactions. Traditional methods for human–object interaction rely on a third-person, i.e., exocentric, camera view by extracting morphological and color/texture-related features, and thus, often fall short when faced with occlusion, camera variations, and background clutter. Moreover, deep learning-based approaches in this regard necessitate substantial data for training, leading to a significant computational overhead. Our proposed approach capitalizes on hand joint data captured from an egocentric perspective, offering a robust solution to the limitations of traditional methods. We propose a machine learning-based innovative technique for feature extraction and description from 3D hand joint data by presenting two distinct approaches: object-dependent and object-independent interaction recognition. The proposed method offered advantages in computational efficiency compared with deep learning methods and was validated using the publicly available HOI4D dataset, where it achieved a best-case average F1-score of 74%. 
The proposed system paves the way for intuitive human–computer collaboration within the future Internet, enabling applications like seamless object manipulation and natural user interfaces for smart devices, human–robot interactions, virtual reality, and augmented reality.

1. Introduction

Computer vision is an important field of research with diverse applications; pervasive cameras connected through wireless networks can enable communication and improve human–computer interaction. One of the most crucial areas in this regard is the detection/recognition of human–object interactions from video cameras (particularly wearable cameras). Understanding human–object interaction (HOI) aims to interpret how human beings interact with objects around them by processing images, videos, or any other data [1]. In this regard, previous studies identified humans and objects within a scene using object detection algorithms. Researchers also analyze the spatial relationships and body poses to interpret interactions, like holding or using an object. For videos, they examine how these interactions change over time to capture the full dynamics of human actions. HOI recognition (HOIR) has significant applications in robotics and assistive technologies. However, it faces numerous challenges in its implementation. Most of the previous studies proposed HOI methods that rely on fixed/static cameras. These studies focused on extracting the texture information, color, and shape of the objects [1,2,3] for HOIR. Such methods may fail to perform efficiently in scenarios where objects exhibit variability in texture, color, or shape due to lighting changes, occlusions, and variations in object appearance. This variability can lead to ambiguity in recognition results and decrease the robustness of the model. Furthermore, deep learning-based HOIR approaches rely heavily on extensive datasets to model the complex variety of interactions, and collecting such datasets is both cost-intensive and laborious [4]. The ambiguity in interpreting interactions, such as distinguishing whether a person is simply holding or using an object, adds a layer of complexity that requires nuanced understanding [5]. 
The models often struggle to generalize across different contexts, which decreases their effectiveness in unfamiliar scenarios. Also, the use of deep learning for object detection and action recognition has a high computational cost [6,7]. These challenges emphasize the need to develop alternative approaches for the HOIR system, keeping in mind the computational cost and the efficacy of the output results. A few researchers utilized the egocentric camera view for HOIR as well [8]. The egocentric view offers unique advantages over traditional exocentric (i.e., third-person) camera views by capturing interactions from the user’s perspective, which is essential for understanding natural interactions. This perspective emphasizes only the relevant objects and areas, offering clear visibility and direct insight into user attention and intent.

Keeping in view the above discussion, hand pose information can be extracted from a wearable egocentric camera to implement HOIR. By utilizing detailed information from the hand pose, we can interpret interactions between humans and objects. Advances in computer vision and machine learning have enabled the precise tracking and analysis of hand joints [9], allowing for the identification of objects based on hand movements. Incorporating hand pose information into HOIR can enhance the detection of subtle differences in how objects are used, making it more robust against common real-world issues, like obscured object views, occlusion, and background clutter. The existing studies show promise in estimating hand pose data from images and videos, which can be crucial for HOIR. In a previous study, the authors proposed a method for estimating hand pose information from images/videos and they highlighted the significant improvements in the results [10]. Furthermore, in another study, the authors demonstrated how deep learning techniques are applied to extract hand pose information from images/videos [11]. Following this, we intended to use the hand pose information to make an HOIR model that emphasized the importance of hand movements for this task. Our idea was to leverage the hand joint information from images and videos generated from a wearable egocentric camera for the interpretation of HOI. Such an approach can address the issues related to the texture-, color-, and shape-based features of existing HOIR approaches, while also keeping the system computationally efficient.

This paper presents “HP4HOIR”, which is a novel framework that uses 3D hand pose data from a wearable egocentric camera for HOIR. The proposed framework emphasizes the dynamics of hand movements for understanding and interpreting interactions between humans and objects. In this work, we used two approaches for HOIR, i.e., object-dependent interaction recognition (ODIR) and object-independent interaction recognition (OIIR). The first approach, i.e., ODIR, starts by identifying the object held by the human being before determining the nature of the interaction in the next stage. In contrast, the OIIR approach simplifies the process by directly recognizing the HOI without prior identification of the object. We present the detailed results and analysis for both these approaches in the results section (i.e., Section 4). The results show that the proposed framework for HOIR produced effective results while balancing the trade-off between accuracy and computational complexity. Thus, the proposed framework paves the way for future innovations in interactive systems and assistive technologies that involve human–robot interactions. It is also beneficial for privacy-sensitive applications, as it relies only on hand pose data and discards other visual information.

List of Contributions

To the best of our knowledge, this is the first study in which hand pose information, i.e., hand joints, is explicitly used for HOIR. The major contributions of this paper are as follows:

- We propose a novel framework “HP4HOIR” that leverages hand pose information from an egocentric view for the recognition of HOIs.

- We propose a feature extraction and description method for the hand pose data, which captures unique attributes from the hand joints data and represents them as a feature vector for HOIR.

- We conducted a detailed analysis of ODIR and OIIR, which provided insights into the accuracy and computational cost of each approach in recognizing HOI.

2. Related Work

In the context of HOIR, the literature reveals a variety of models/methodologies that have significantly advanced the understanding and capability of such systems across various applications [12,13,14,15,16,17,18,19]. These studies offer a foundational backdrop against which the current research on HOIR stands.

One study proposed a caption generation model for recognizing the actions of sports players using an ontology-based identification scheme [20]. Researchers utilized the capabilities of graph convolutional neural networks and multiple multilayer perceptrons for the identification of HOI [21,22]. Similarly, another work used the hidden Markov model for the precise positioning of the target and recognition of human interaction [23]. Furthermore, a recent study used a hybrid model for the detection of objects while using the skeletal method [24].

The experimental results show that their proposed model had an average accuracy of 98.5% in the recognition of HOIs. A recent work stated the difficulty of organizing a dataset in which all the combinations of humans and objects are considered [25]. This process is very time consuming and requires a huge amount of computational resources. Therefore, the authors proposed an HOI detector for all new and unseen interactions. There are two types of encoder in their proposed model: one is for visual data and the other is used to detect and recognize text. Their proposed model efficiently detects HOIs while utilizing the capabilities of the layered architecture of an HOI vision transformer. Similarly, relation parsing neural network techniques are used for the detection of HOI. Two graphs are used in the proposed model to capture the relationship between objects and humans. The first graph is responsible for identifying the relationship between a person and body parts, while the second graph captures the relationship between the surrounding object and body part. Furthermore, an action-parsing machine is used for creating an association between both these graphs. The authors used the V-COCO dataset to evaluate the performance of the proposed model. Another work proposes a mechanism in which unseen data are utilized for the detection of objects [26]. The authors utilized the capabilities of an external knowledge graph for identifying the process of learning verb–noun pairs. Their proposed model not only beats state-of-the-art schemes in terms of recognition of unseen combinations but also shows promising ability in the detection of novel concepts. Moreover, researchers proposed a neural network-based mechanism that detects the motion of humans for leveraging neighboring frames, which ultimately helps in predicting occluded frames [27]. Furthermore, the authors also proposed a pose prediction network, in which object poses can be recovered even under heavy occlusions. 
In the literature, a study is found that used a scene graph that can recognize the semantic relationships presented in the image dataset, which ultimately helps in the identification of crucial and significant contextual information for human–object interaction inference [28].

The abovementioned literature reviews the models that use visual datasets of videos or images for HOIR. These models produce efficient recognition performance; however, they are computationally intensive. Moreover, these methods are designed to detect/recognize HOIs from images/videos generated by the standard (i.e., exocentric) camera view. However, for privacy-preserving applications, the egocentric camera view is crucial. A few studies proposed HOIR methods based on egocentric views [29,30], which are crucial for applications in virtual reality and human–robot collaboration [31,32]. Thus, this paper presents a method for HOIR based on the videos available from egocentric cameras using the HOI4D dataset. In this regard, we propose a framework that simplifies the process of HOI by using hand pose information. The proposed framework is based on the observation that many interactions between humans and objects involve the hands, which makes the hand pose a strong indicator of the nature and type of interaction. Our proposed model reduces the scope of detection by just focusing on the hand gesture. It does not use any complex deep learning algorithm, and thus, evades computational overhead. Thus, this research work contributes to the ongoing evolution of HOI detection technologies, promising enhanced capabilities in fields requiring detailed interaction analysis, such as virtual reality, robotics, and interactive systems.

3. Proposed System

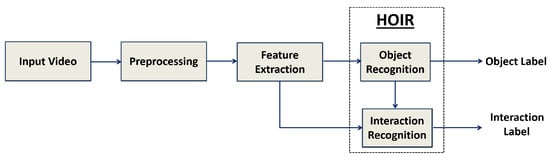

The block diagrams of the proposed framework “HP4HOIR” are shown in Figure 1 and Figure 2 using a step-wise approach. The details of each step are discussed in the following subsections.

Figure 1.

Object-dependent interaction recognition (ODIR).

Figure 2.

Object-independent interaction recognition (OIIR).

3.1. Data Acquisition

The proposed method was developed to work on an egocentric dataset, i.e., first-person view. Although many datasets are available for similar tasks with conventional cameras, i.e., third-person views, there is a lack of comprehensive egocentric datasets relevant to this study. Most of the relevant datasets (one significant example is [33]) are based on synthetic data, where 2D images are used to generate 3D synthetic images of the hand, and then further processing is performed on synthetic hands. The HOI4D dataset, which was developed by Liu et al. [34], is a good choice in this regard, as it provides hand annotations for real images. Furthermore, HOI4D contains a lot of variation, as it consists of videos from four different cameras that are independent of each other, with different indoor conditions. The dataset includes 2.4 million RGB egocentric video frames from 4000 video sequences. Nine individuals performed interactions with 800 object instances in the dataset across 16 categories in different indoor environments. Each single HOI was captured in a range of settings (differences in rooms, room layouts, users, cameras, backgrounds, simplicity and complexity of interactions, etc.). The dataset divides interactions into simple and complex scenarios, with the former offering clear, consistent views and the latter introducing more variability. For more specifics, Table 1 details the object categories and their interactions. To ensure the robustness of our proposed algorithm with respect to in-the-wild conditions, we performed extensive testing on the HOI4D dataset using class-wise cross-validation. This reduced the risk of biased results, as each data sample (whether simple or complex, from different persons, in varying room settings, and using different cameras) was used for both training and testing, but in different iterations.

Table 1.

List of objects and associated interactions in HOI4D dataset.

3.2. Segmentation and Hand Joint Extraction

Before recognizing HOI from the video sequences, we first performed the required preprocessing. In this regard, we first applied window-based segmentation over each video sequence and divided it into non-overlapping and fixed-size (i.e., 5 s) segments. This division was based on the findings that interactions are typically captured well within such a short span, allowing for the precise and efficient analysis of dynamic hand movements. We called each window segment a video snippet and labeled it as per the original video sequence. We then converted each video snippet into individual frames, where a frame was represented as a 2D RGB matrix. Each segment consisted of a series of 75 frames, which were processed individually one-by-one to capture frame-based hand joints. The hand pose information obtained from the set of 75 frames was then concatenated to form a complete representation of the window. This method ensured that interactions were consistently captured and facilitated accurate hand pose information extraction from each segment.
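The window-based segmentation described above can be sketched in a few lines. This is a minimal illustration rather than the authors' implementation: the function name `split_into_snippets` is hypothetical, frames are represented by placeholder integers, and dropping a trailing remainder shorter than one window is an assumption, since the text does not say how incomplete windows were handled.

```python
from typing import List, Sequence, Tuple


def split_into_snippets(
    frames: Sequence, label: str, window_len: int = 75
) -> List[Tuple[list, str]]:
    """Split one labeled video into non-overlapping, fixed-size snippets.

    window_len=75 matches the 75 frames per 5 s segment given in the text.
    Assumption: a trailing remainder shorter than window_len is discarded.
    """
    snippets = []
    for start in range(0, len(frames) - window_len + 1, window_len):
        window = list(frames[start:start + window_len])
        # Each snippet inherits the label of its source video sequence.
        snippets.append((window, label))
    return snippets


# Toy video of 200 placeholder "frames" (integers instead of RGB matrices).
video = list(range(200))
snippets = split_into_snippets(video, "Bottle: pick and place")
print(len(snippets), [len(window) for window, _ in snippets])
```

With 200 frames, two full windows fit and the last 50 frames are dropped under the stated assumption.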

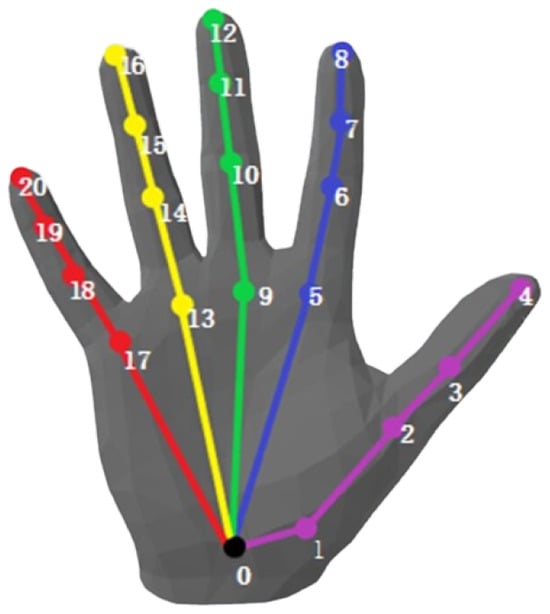

For each frame, we obtained a set of 21 key points, i.e., standard hand joints [34,35], from the user’s hand as 2D landmarks. These key points were further transformed into a 3D representation using the MANO parametric hand model [35]. The process started with 2D joint landmarks from hand images, which a pre-trained regressor used to estimate the initial shape and pose parameters. These parameters were optimized to minimize the reprojection error, and the refined parameters were applied to the MANO model to obtain consistent 3D coordinates of the hand joints. Thus, we finally obtained a 3D hand joint representation for each frame, which was recorded as a matrix known as the hand joint matrix (HJM). This matrix represented the hand movement information in the form of hand joints, where each hand joint was represented as a 3D point, i.e., spatial coordinates (x, y, z). Figure 3 provides a sample representation of the 3D hand joint model illustrated by MANO.

Figure 3.

Representation of the MANO 3D hand joint model.

3.3. Hand Joint Transformation: 3D to 7D Space

We further transformed the 3D hand joint matrix (HJM) into a seven-dimensional (7D) space by incorporating four metrics as additional dimensions: the Euclidean norm of each joint and three pair-wise planar distances, one for each pair of coordinate axes. Consequently, each hand joint matrix was described as a 21 × 7 matrix per frame (21 joints, each with seven values).

The inclusion of the Euclidean norm and pair-wise planar distances helped to capture the spatial relationships between the hand joints across different axes, and thus, provided a comprehensive view of the hand poses. This characteristic was crucial for accurately classifying the objects and interactions, as it minimized the variability introduced by different hand orientations. These metrics were chosen specifically for their invariance to rotation and ensured that the hand poses were represented consistently regardless of their orientation or position in 3D space. Thus, their addition produced a richer and more robust feature set that could further help with classifying different objects and interactions based on hand poses.

To further enhance the robustness and comparability of the feature set, we rescaled each column of the 7D matrix to the range from 0 to 1, and refer to the result as the normalized matrix. This normalization step ensured that all features contributed equally to the classification process, thus preventing any single feature from dominating due to scale differences.
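The 3D to 7D transformation and the column-wise rescaling can be sketched with NumPy as follows. The exact formulas are not recoverable from the text, so two assumptions are made and labeled in the code: each planar distance is taken as the norm of a joint's coordinates projected onto the xy, yz, or xz plane, and the rescaling is plain min-max scaling per column. The function names `to_7d` and `min_max_columns` are hypothetical.

```python
import numpy as np


def to_7d(hjm: np.ndarray) -> np.ndarray:
    """Map a (21, 3) hand joint matrix to a (21, 7) matrix.

    Columns: x, y, z, Euclidean norm, and three planar distances.
    Assumption: a planar distance is the norm of the joint projected onto
    one coordinate plane (xy, yz, xz); the paper's definition may differ.
    """
    x, y, z = hjm[:, 0], hjm[:, 1], hjm[:, 2]
    norm = np.sqrt(x**2 + y**2 + z**2)
    d_xy = np.sqrt(x**2 + y**2)
    d_yz = np.sqrt(y**2 + z**2)
    d_xz = np.sqrt(x**2 + z**2)
    return np.column_stack([x, y, z, norm, d_xy, d_yz, d_xz])


def min_max_columns(m: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Rescale every column to [0, 1] (assumed min-max scaling).

    Constant columns are mapped to 0 instead of dividing by zero.
    """
    lo = m.min(axis=0)
    span = m.max(axis=0) - lo
    return (m - lo) / np.where(span > eps, span, 1.0)


# Toy input: random coordinates standing in for 21 MANO joints.
rng = np.random.default_rng(0)
hjm = rng.normal(size=(21, 3))
normalized = min_max_columns(to_7d(hjm))
print(normalized.shape)
```

Scaling is applied per frame here because the text describes standardizing columns "within the matrix"; scaling over a whole snippet or dataset would be an equally plausible reading.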

3.4. Feature Extraction and Description

After preprocessing, we extracted 18 time-domain features, as outlined in Table 2, from each normalized matrix. These features represented the statistical attributes of the hand joint data and were useful for pattern recognition tasks that involve movement classification. We selected these features for HOIR because they have performed well on hand movement data from motion sensors. Also, these features entailed a low computational cost compared with deep learning-based feature extraction methods. Moreover, they captured signal traits that are significant for recognizing hand motion patterns.

Table 2.

List of statistical features extracted for HOIR.

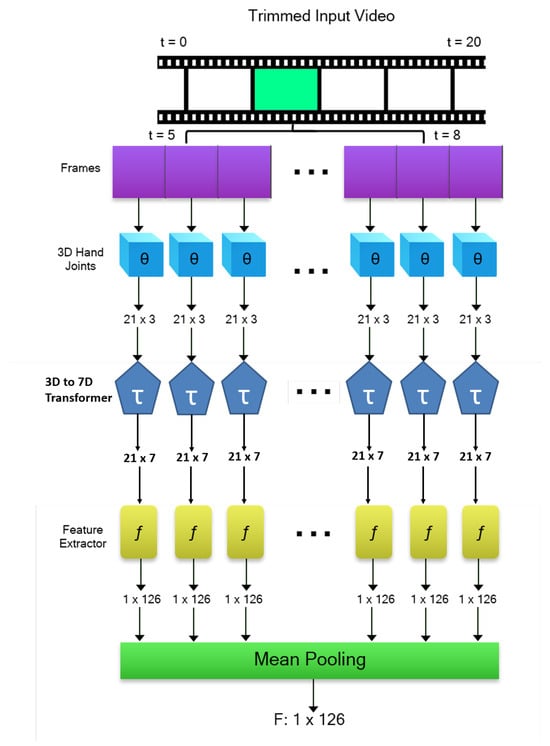

We extracted these features from each column of the matrix, which produced a feature vector of size 1 × 126 (18 features × 7 columns) per frame. We then applied mean pooling to aggregate these features over the entire video snippet, which resulted in a final feature vector of the same size, i.e., 1 × 126. Figure 4 illustrates the process of feature extraction and description.

Figure 4.

Feature extraction and description process for the proposed framework, showing the hand joint extraction, the 3D to 7D transformation, and the feature extraction (“f”) steps.
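The per-frame feature extraction and the mean pooling over a snippet can be sketched as below. Table 2 is not reproduced in this text, so the five statistics in `STATS` are placeholders chosen for illustration and are not the paper's 18 features; with 18 statistics the same code would yield 18 × 7 = 126 values. The function names are hypothetical.

```python
import numpy as np

# Placeholder statistics (assumption): stand-ins for the 18 features of Table 2.
STATS = (np.mean, np.std, np.min, np.max, np.median)


def frame_features(matrix: np.ndarray) -> np.ndarray:
    """Compute every statistic over each column of one (21, 7) matrix.

    Returns a vector of length len(STATS) * 7, grouped by statistic.
    """
    return np.concatenate([stat(matrix, axis=0) for stat in STATS])


def snippet_features(frames: np.ndarray) -> np.ndarray:
    """Mean-pool the per-frame feature vectors of one video snippet.

    frames has shape (n_frames, 21, 7); the result has the same length
    as a single per-frame feature vector.
    """
    per_frame = np.stack([frame_features(frame) for frame in frames])
    return per_frame.mean(axis=0)


# Toy snippet: 75 frames of random normalized 21 x 7 matrices.
snippet = np.random.default_rng(0).random((75, 21, 7))
vector = snippet_features(snippet)
print(vector.shape)
```

Mean pooling keeps the vector length fixed regardless of the number of frames, which is what lets a single classifier consume snippets of any duration.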

3.5. Classification

In the final step of the proposed framework, we performed classification for HOIR based on two different approaches, i.e., ODIR and OIIR. In the first approach, we employed the ODIR method, which consisted of two steps. In the first step, ODIR classified the object based on hand joint features. Then, in the next step, using the classified object label and hand joint features, ODIR subsequently recognized the interaction being performed with the object. For example, for ODIR, we aimed to detect an object first, e.g., “Bottle”, and then recognize its relevant interaction, such as “pick and place”. Thus, in this case, we had 16 different object classes in the first step, with varying numbers of classes in the second step (depending upon the number of interactions with each object).

The second approach, i.e., OIIR, omitted the object classification step and directly focused on the recognition of HOI as a whole, e.g., “Pick and Place Bottle”. Thus, we had 54 classes in this case based on the combination of all objects and their related interactions.

We chose the random forest (RF) classifier for the tasks involved in this step. For ODIR, we trained 17 RF classifiers separately (one for object classification and 16 for object-wise interaction recognition), whereas in the case of OIIR, we trained one RF classifier for recognition of the HOI as a whole, with 54 classes in total.
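The two classification schemes can be sketched with scikit-learn as follows. This is an illustrative reading of the description, not the authors' code: the `ODIR` class, `make_rf`, and `fit_oiir` are hypothetical names, the toy data and labels are synthetic, and routing each sample to the interaction classifier of its predicted object is one assumed way of "using the classified object label". The forest size and depth follow the values reported in Section 4.1; `random_state` is added only for reproducibility.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier


def make_rf() -> RandomForestClassifier:
    # 100 trees with maximum depth 25, as reported in Section 4.1.
    return RandomForestClassifier(n_estimators=100, max_depth=25, random_state=0)


class ODIR:
    """Two-stage sketch: one object classifier plus one interaction
    classifier per object (assumed routing by predicted object)."""

    def fit(self, X, objects, interactions):
        objects, interactions = np.asarray(objects), np.asarray(interactions)
        self.object_clf = make_rf().fit(X, objects)
        self.interaction_clfs = {}
        for obj in np.unique(objects):
            mask = objects == obj
            self.interaction_clfs[obj] = make_rf().fit(X[mask], interactions[mask])
        return self

    def predict(self, X):
        pred_objects = self.object_clf.predict(X)
        pred_interactions = np.empty(len(X), dtype=object)
        for obj in np.unique(pred_objects):
            mask = pred_objects == obj
            pred_interactions[mask] = self.interaction_clfs[obj].predict(X[mask])
        return pred_objects, pred_interactions


def fit_oiir(X, objects, interactions) -> RandomForestClassifier:
    """Single-stage sketch: one classifier over joint object-interaction labels."""
    hoi = np.array([f"{o}: {i}" for o, i in zip(objects, interactions)])
    return make_rf().fit(X, hoi)


# Synthetic, well-separated toy data: 4 HOI classes, 20 samples each.
rng = np.random.default_rng(0)
classes = [("Bottle", "pick and place"), ("Bottle", "pour"),
           ("Mug", "pick and place"), ("Mug", "fill")]
X = np.vstack([rng.normal(loc=3.0 * k, scale=0.1, size=(20, 126)) for k in range(4)])
objects = np.repeat([o for o, _ in classes], 20)
interactions = np.repeat([i for _, i in classes], 20)

odir = ODIR().fit(X, objects, interactions)
pred_objects, pred_interactions = odir.predict(X)
oiir = fit_oiir(X, objects, interactions)
pred_hoi = oiir.predict(X)
print(len(odir.interaction_clfs) + 1, "ODIR classifiers;", len(oiir.classes_), "OIIR classes")
```

In this sketch an object misclassification in the first stage propagates to the second stage, which is why the ODIR score depends on both steps.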

4. Experimental Results and Analysis

In this section, we evaluate the performance of the proposed framework “HP4HOIR” and provide a detailed analysis of the results, along with the implementation details and evaluation methods.

4.1. Implementation Details

As the proposed framework uses two different approaches for HOIR, i.e., ODIR and OIIR, the dataset was labeled in two different ways. For ODIR, we assigned each video snippet an object label and an interaction label. In the case of object recognition, all interactions relevant to an object were assigned the same object label, whereas for interaction recognition, we combined all instances of the interactions for each object and assigned the respective interaction label. The ground truths for these labels came from the corresponding video sequences in the HOI4D dataset. In the case of OIIR, we combined the object and the relevant interaction label to generate a single HOI label for each video snippet. Thus, overall, we had 54 different HOIs for OIIR, with 16 object categories: bowl, bottle, mug, toy car, bucket, knife, kettle, chair, storage furniture, pliers, laptop, lamp, safe, trash can, scissors, and stapler. In this study, we employed a random forest (RF) classifier implemented using scikit-learn. The RF classifier was configured with a maximum depth of 25 and it comprised 100 decision trees. A personal computer was used for the experiments in this study. The computer was equipped with an Intel(R) Core(TM) i5-8265U CPU that operated at 1.60 GHz (up to 1.80 GHz with turbo boost), with 8.00 GB of RAM.

4.2. Evaluation Metrics and Validation Method

For evaluating the proposed framework, we used accuracy (acc.), precision (pre.), recall (rec.), and F1-score (F1) as the performance measures. In addition, we measured the computational time to assess the computational overhead. For validation purposes, we adopted a fivefold cross-validation method by dividing our dataset into five equal parts. We used a class-wise approach to stratify these parts by ensuring that each part reflected the overall distribution of target classes found in the complete dataset. This means that the proportion of each class was consistent across all folds, thereby preserving the class balance of the dataset during both the training and testing phases. Furthermore, this validation approach ensured the use of the entire dataset for training and testing purposes, but in different iterations, which allowed us to train and test our model robustly, and thus, avoid any biases that might arise from how the data were split.
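The class-wise fold assignment and the averaged metrics can be sketched in plain Python as below. Two assumptions are made: folds are filled round-robin within each class without shuffling, and the reported averages are taken to be unweighted (macro) means over classes, which the text does not state explicitly. The function names are hypothetical.

```python
from collections import defaultdict


def stratified_folds(labels, k=5):
    """Assign sample indices to k folds so that every fold keeps roughly
    the same class proportions (round-robin within each class)."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        for position, index in enumerate(indices):
            folds[position % k].append(index)
    return folds


def macro_prf(y_true, y_pred):
    """Return macro-averaged (precision, recall, F1) over the true classes."""
    classes = sorted(set(y_true))
    p_sum = r_sum = f_sum = 0.0
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        p_sum, r_sum, f_sum = p_sum + precision, r_sum + recall, f_sum + f1
    n = len(classes)
    return p_sum / n, r_sum / n, f_sum / n


# Toy labels: 10 samples of class "a" and 5 of class "b".
labels = ["a"] * 10 + ["b"] * 5
folds = stratified_folds(labels, k=5)
print([sorted(labels[i] for i in fold) for fold in folds])

precision, recall, f1 = macro_prf(["a", "a", "b", "b"], ["a", "b", "b", "b"])
print(round(precision, 3), round(recall, 3), round(f1, 3))
```

In each iteration, one fold serves as the test set and the remaining four as the training set, so every sample is tested exactly once.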

4.3. Analysis of ODIR

The ODIR approach consisted of two steps, i.e., object recognition and object-wise interaction recognition, the results of which are described as follows.

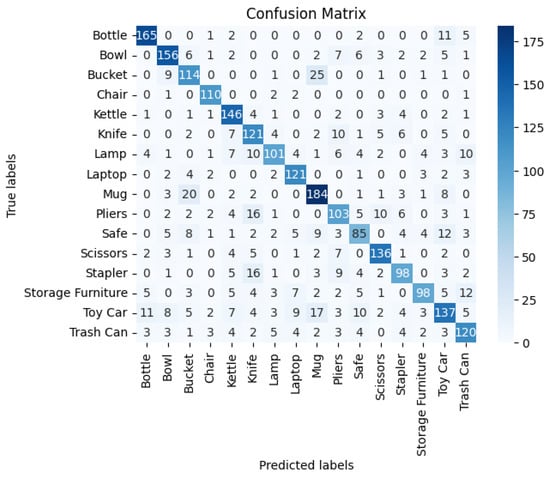

4.3.1. ODIR: Analysis of Object Recognition

For object recognition, we trained an RF classifier on the features extracted from the hand joint data to classify 16 objects. The numerical results obtained for object recognition using an RF classifier are shown in Table 3. The table presents the performance metrics obtained for each of the individual objects. It can be observed from these results that the recognition performances of “Bottle”, “Chair”, “Kettle”, “Laptop”, and “Scissors” were better compared with other objects. Each of these objects was recognized with an accuracy or F1-score of more than 80%. This indicates that it was easier to recognize these objects based on the hand joint information. More precisely, “Chair” was recognized with the highest accuracy of 91.2%. In contrast, objects such as “Safe”, “Toy Car”, “Storage Furniture”, and “Lamp” achieved a recognition performance of less than 70% (in terms of accuracy and F1-score). This suggests that these objects were difficult to classify based on the hand joint information.

Table 3.

ODIR numerical results for object classification.

This was because the interactions related to these objects were quite similar to each other in terms of the hand motion patterns, which caused these objects to be confused during the classification stage. The confusion matrix shown in Figure 5 revealed strengths in distinguishing items such as “Bottle” and “Mugs” with high accuracy, as indicated by the high values on the diagonal. It also uncovered specific confusions, like mixing up “Bowls” with “Buckets”, thus pointing to areas where the model misinterpreted similarities. The frequent mislabeling of “Toy Car” was a sign that this category needed particular attention for improvement. The overall average F1-score achieved for object recognition was 73%.

Figure 5.

Confusion matrix for object recognition.

4.3.2. ODIR: Analysis of Interaction Recognition

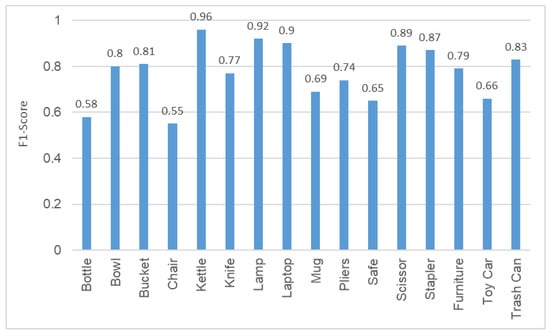

After the object recognition, ODIR entailed recognition of the interactions made with an object based on the same set of extracted features. The RF classifier took the feature vector and object label as inputs for classifying an interaction. Table 4 shows the detailed performance parameters for the object-wise interaction recognition. It can be seen that the interactions made with objects such as “Kettle”, “Laptop”, “Lamp”, “Trash Can”, “Scissors”, and “Stapler” were more accurately recognized. The results show a varied performance across different objects and tasks. For instance, the task of “Pick and place” showed a strong average performance (in terms of the F1-score) across most objects, like “Bowl”: 0.86, “Bottle”: 0.72, and “Mugs”: 0.76. However, complex tasks, such as “Pour all the water into a Mug with Bottle”, demonstrated a significantly lower F1-score of 0.37, suggesting challenges in their recognition. In particular, such interactions involved multiple steps and precise hand actions/movements (with subtle differences) for their completion, which must be recognized in a sequence. Misclassifying any step in such cases may lead to a drop in the overall interaction classification performance. Table 4 also shows the values of true positives, true negatives, false positives, and false negatives for each of the object interactions. These metrics gave us a granular look at the model behavior. Figure 6 shows the average F1-score obtained for all interactions as a whole within an object category, which can be observed for further analysis.

Table 4.

ODIR numerical results for interaction classification.

Figure 6.

Object-wise F1-score for interaction recognition.

4.4. Analysis of OIIR

This section evaluates and discusses the results for OIIR using an RF classifier. The second approach for HOIR was based on OIIR, which directly classified the HOIs without any prior object information. We trained an RF classifier in this regard to classify a set of 54 HOIs based on the features extracted from the hand joint data. Table 5 presents the experimental results obtained for the HOI classification using an RF. The maximum performance was observed for interactions such as “Bowl: Pick and Place” and “Kettle Pour Water into a Mug”, where they achieved F1-scores of 0.87 and 0.81, respectively. The overall average F1-score obtained for the HOI classification in this case was 60%. These results suggest that the OIIR approach was capable of handling tasks that involved direct and simple object interactions. However, there is room for improvement in the classification of complex HOIs, as depicted in Table 5. For example, such interactions that involved a drawer (like putting in or taking some object out of the drawer) were mostly confused with each other, and thus, need improvements. These interactions may have very similar movements and hand positions when interacting with the drawer, resulting in their lower recognition performance.

Table 5.

OIIR numerical results for HOI classification.

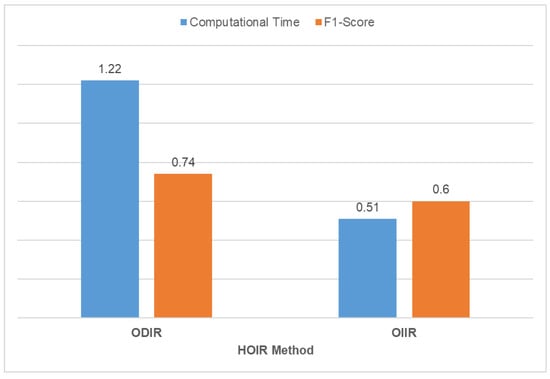

4.5. Time and Performance Analysis: ODIR vs. OIIR

This section provides a comparative analysis of ODIR and OIIR for HOIR, which highlights significant differences in the performance of these two approaches. The comparison is made in terms of the average F1-score and the mean computational time taken for classifying a single instance, as shown in Figure 7. ODIR, which is a two-stage method, first identified objects and then classified their relevant interactions, which resulted in an inference time of 1.22 milliseconds per sample. The overall average F1-score (based on object recognition and interaction classification results) obtained for ODIR was 74%. In contrast, OIIR employed a single-stage method that simultaneously predicted objects and their interactions as an HOI, and thus, reduced the inference time to 0.51 milliseconds per sample. The average F1-score obtained in this case was 60%, which was 14 percentage points lower than that achieved with ODIR. This was because OIIR classified a set of 54 classes as a whole, whereas each ODIR classifier handled a lower number of classes owing to the two-step classification. As a result, it was easier for ODIR to distinguish between different object categories and between the interactions within an object.

Figure 7.

Time and performance analysis: ODIR vs. OIIR.
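A mean per-sample inference time like the one compared above could be measured along the following lines. The measurement protocol is not described in the text, so this is only a sketch under assumptions: samples are classified one at a time, the loop is repeated a few times, and the average is reported in milliseconds. The two predictors are hypothetical stand-ins, not the trained RF models.

```python
import time


def mean_inference_ms(predict_one, samples, repeats=3):
    """Average wall-clock time, in milliseconds, to classify one sample."""
    total = 0.0
    for _ in range(repeats):
        start = time.perf_counter()
        for sample in samples:
            predict_one(sample)
        total += time.perf_counter() - start
    return 1000.0 * total / (repeats * len(samples))


# Hypothetical stand-in for a single-stage (OIIR-like) predictor.
def single_stage(sample):
    return sum(sample) > 0


# Hypothetical stand-in for a two-stage (ODIR-like) predictor:
# the second decision depends on the outcome of the first.
def two_stage(sample):
    first = sum(sample) > 0
    second = max(sample) > 0 if first else min(sample) > 0
    return first, second


samples = [[0.1 * i, -0.2 * i, 0.3] for i in range(1000)]
t_single = mean_inference_ms(single_stage, samples)
t_two = mean_inference_ms(two_stage, samples)
print(f"single-stage: {t_single:.5f} ms/sample, two-stage: {t_two:.5f} ms/sample")
```

Absolute timings depend on the hardware and on batching, so values measured this way are comparable only within the same setup.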

Generally, it was easier to train and classify the interactions within an object instead of recognizing the interactions globally. In the case of joint HOI labels, there was a high probability of inter-class similarity between different classes, which led to misclassification and reduced the system performance. The overall results achieved for the proposed scheme demonstrated the advantage of ODIR over OIIR in recognition performance, particularly in scenarios where the correctness of output labels was crucial. However, OIIR can be useful in scenarios that require quick decision making, and thus, is better suited for real-time applications, where speed is crucial. In general, there is a trade-off between the accuracy (or F1-score) and the time complexity of the decision making.

The proposed HOIR approach is based only on time-domain features; thus, it is computationally efficient compared with state-of-the-art deep learning models because its features are extracted with simple mathematical operations, resulting in linear time complexity. In contrast, deep learning involves complex operations, nonlinear activations, and repeated backpropagation during training, which result in extra computational overhead.
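The linear-time claim can be illustrated with a short Python sketch that computes a few common time-domain statistics in a single pass over each joint coordinate. The particular statistics (mean, standard deviation, minimum, maximum, and range) and the flat per-frame layout are assumptions made for this example; they are not necessarily the exact feature set used in this study.

```python
import math


def time_domain_features(signal):
    """Compute basic statistics of one coordinate channel in a single pass."""
    n = 0
    total = 0.0
    total_sq = 0.0
    lo = math.inf
    hi = -math.inf
    for x in signal:  # one pass: O(n) time, O(1) extra memory
        n += 1
        total += x
        total_sq += x * x
        lo = min(lo, x)
        hi = max(hi, x)
    if n == 0:
        raise ValueError("signal must contain at least one sample")
    mean = total / n
    # Population variance; clamped at zero to absorb rounding error.
    variance = max(total_sq / n - mean * mean, 0.0)
    return {"mean": mean, "std": math.sqrt(variance),
            "min": lo, "max": hi, "range": hi - lo}


def describe_window(frames):
    """Build one feature vector from a window of frames.

    Each frame is assumed to be a flat list [x0, y0, z0, x1, y1, z1, ...]
    of joint coordinates, with the same length in every frame.
    """
    vector = []
    for channel in zip(*frames):  # one channel per joint coordinate
        f = time_domain_features(channel)
        vector.extend([f["mean"], f["std"], f["min"], f["max"], f["range"]])
    return vector


# Three frames of two hypothetical joints (six coordinates per frame).
frames = [
    [0.0, 0.0, 0.0, 1.0, 2.0, 3.0],
    [0.1, 0.0, 0.2, 1.1, 2.0, 3.2],
    [0.2, 0.0, 0.4, 1.2, 2.0, 3.4],
]
vector = describe_window(frames)
print(len(vector))  # 30 features: 6 channels x 5 statistics
```

Each sample is visited once per channel, so the cost grows linearly with the number of frames and joint coordinates. For signals with very large magnitudes, a numerically more stable running-variance update would be preferable to the sum-of-squares form used here.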

5. Conclusions

This study demonstrated the potential of using 3D hand pose information for recognizing objects and interpreting HOIs. To this end, this paper presents a novel framework that takes a video sequence as input, estimates the hand joints frame by frame, and applies feature extraction and description to the hand joint data. These features are passed to a random forest classifier for training and for classifying the object and the human–object interaction. Our findings indicate that 3D hand joint data made it possible to discern subtle nuances in HOIs with satisfactory performance (a best-case average F1-score of 74%). The proposed method can be further enhanced by combining the hand pose data with visual object-related features (such as color, texture, and shape). Furthermore, deep learning-based models can be adapted and applied to the hand joint data for automatic feature extraction. In addition to the hand joints, the hand vertex information can also be used, which may further improve the accuracy of the system. Thus, the findings of this study can be used to develop a robust system for HOI recognition, particularly for immersive reality applications.

Author Contributions

Conceptualization, M.E.U.H. and M.A.A.; Methodology, D.H.; Software, D.H., A.Y. and F.M.; Formal analysis, F.M.; Resources, A.Y. and M.A.A.; Data curation, D.H.; Writing—original draft, D.H.; Writing—review & editing, M.A.A., D.H. and M.E.U.H.; Visualization, F.M.; Supervision, M.A.A.; Funding acquisition, M.A.A. All authors read and agreed to the published version of this manuscript.

Funding

This research work is supported by the School of Information Technology, Whitecliffe, Wellington, New Zealand, and Air University, Islamabad, Pakistan.

Data Availability Statement

The dataset used in this study is publicly available as the “HOI4D” dataset and is cited in the paper.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Gupta, S.; Malik, J. Visual semantic role labeling. arXiv 2015, arXiv:1505.04474.

- Hou, Z.; Yu, B.; Qiao, Y.; Peng, X.; Tao, D. Affordance transfer learning for human-object interaction detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 495–504.

- Li, Q.; Xie, X.; Zhang, J.; Shi, G. Few-shot human–object interaction video recognition with transformers. Neural Netw. 2023, 163, 1–9.

- Chao, Y.W.; Wang, Z.; He, Y.; Wang, J.; Deng, J. Hico: A benchmark for recognizing human-object interactions in images. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1017–1025.

- Sadhu, A.; Gupta, T.; Yatskar, M.; Nevatia, R.; Kembhavi, A. Visual semantic role labeling for video understanding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 5589–5600.

- Li, Y.; Ouyang, W.; Zhou, B.; Shi, J.; Zhang, C.; Wang, X. Factorizable net: An efficient subgraph-based framework for scene graph generation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 335–351.

- Zhou, T.; Wang, W.; Qi, S.; Ling, H.; Shen, J. Cascaded human-object interaction recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 4263–4272.

- Bansal, S.; Wray, M.; Damen, D. HOI-Ref: Hand-Object Interaction Referral in Egocentric Vision. arXiv 2024, arXiv:2404.09933.

- Cai, M.; Kitani, K.; Sato, Y. Understanding hand-object manipulation by modeling the contextual relationship between actions, grasp types and object attributes. arXiv 2018, arXiv:1807.08254.

- Chen, L.; Lin, S.Y.; Xie, Y.; Lin, Y.Y.; Xie, X. Mvhm: A large-scale multi-view hand mesh benchmark for accurate 3d hand pose estimation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual, 5–9 January 2021; pp. 836–845.

- Ge, L.; Ren, Z.; Yuan, J. Point-to-point regression pointnet for 3d hand pose estimation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 475–491.

- Wan, B.; Zhou, D.; Liu, Y.; Li, R.; He, X. Pose-aware multi-level feature network for human object interaction detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9469–9478.

- Chu, J.; Jin, L.; Xing, J.; Zhao, J. UniParser: Multi-Human Parsing with Unified Correlation Representation Learning. arXiv 2023, arXiv:2310.08984.

- Chu, J.; Jin, L.; Fan, X.; Teng, Y.; Wei, Y.; Fang, Y.; Xing, J.; Zhao, J. Single-Stage Multi-human Parsing via Point Sets and Center-based Offsets. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 1863–1873.

- Wang, T.; Yang, T.; Danelljan, M.; Khan, F.S.; Zhang, X.; Sun, J. Learning human-object interaction detection using interaction points. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 4116–4125.

- He, T.; Gao, L.; Song, J.; Li, Y.F. Exploiting scene graphs for human-object interaction detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 15984–15993.

- Nagarajan, T.; Feichtenhofer, C.; Grauman, K. Grounded human-object interaction hotspots from video. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8688–8697.

- Ehatisham-ul Haq, M.; Azam, M.A.; Loo, J.; Shuang, K.; Islam, S.; Naeem, U.; Amin, Y. Authentication of smartphone users based on activity recognition and mobile sensing. Sensors 2017, 17, 2043.

- Shoaib, M.; Bosch, S.; Incel, O.D.; Scholten, H.; Havinga, P.J. A survey of online activity recognition using mobile phones. Sensors 2015, 15, 2059–2085.

- Kanimozhi, S.; Raj Priya, B.; Sandhiya, K.; Sowmya, R.; Mala, T. Human Movement Analysis through Conceptual Human-Object Interaction in Sports Video. 2020. Available online: https://ssrn.com/abstract=4525389 (accessed on 21 July 2024).

- Ye, Q.; Xu, X.; Li, R. Human-object Behavior Analysis Based on Interaction Feature Generation Algorithm. Int. J. Adv. Comput. Sci. Appl. 2023, 14.

- Yang, N.; Zheng, Y.; Guo, X. Efficient transformer for human-object interaction detection. In Proceedings of the Sixth International Conference on Computer Information Science and Application Technology (CISAT 2023), SPIE, Hangzhou, China, 26–28 May 2023; Volume 12800, pp. 536–542.

- Zaib, M.H.; Khan, M.J. An HMM-Based Approach for Human Interaction Using Multiple Feature Descriptors. 2023. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4656240 (accessed on 21 July 2024).

- Ozaki, H.; Tran, D.T.; Lee, J.H. Effective human–object interaction recognition for edge devices in intelligent space. SICE J. Control. Meas. Syst. Integr. 2024, 17, 1–9.

- Gkioxari, G.; Girshick, R.; Dollár, P.; He, K. Detecting and recognizing human-object interactions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8359–8367.

- Zhou, P.; Chi, M. Relation parsing neural network for human-object interaction detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 843–851.

- Kato, K.; Li, Y.; Gupta, A. Compositional learning for human object interaction. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 234–251.

- Xie, X.; Bhatnagar, B.L.; Pons-Moll, G. Visibility aware human-object interaction tracking from single rgb camera. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 4757–4768.

- Purwanto, D.; Chen, Y.T.; Fang, W.H. First-person action recognition with temporal pooling and Hilbert–Huang transform. IEEE Trans. Multimed. 2019, 21, 3122–3135.

- Liu, T.; Zhao, R.; Jia, W.; Lam, K.M.; Kong, J. Holistic-guided disentangled learning with cross-video semantics mining for concurrent first-person and third-person activity recognition. IEEE Trans. Neural Netw. Learn. Syst. 2022, 35, 5211–5225.

- Yao, Y.; Xu, M.; Choi, C.; Crandall, D.J.; Atkins, E.M.; Dariush, B. Egocentric vision-based future vehicle localization for intelligent driving assistance systems. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; IEEE: New York, NY, USA, 2019; pp. 9711–9717.

- Liu, O.; Rakita, D.; Mutlu, B.; Gleicher, M. Understanding human-robot interaction in virtual reality. In Proceedings of the 2017 26th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Lisbon, Portugal, 28–31 August 2017; IEEE: New York, NY, USA, 2017; pp. 751–757.

- Leonardi, R.; Ragusa, F.; Furnari, A.; Farinella, G.M. Exploiting multimodal synthetic data for egocentric human-object interaction detection in an industrial scenario. Comput. Vis. Image Underst. 2024, 242, 103984.

- Liu, Y.; Liu, Y.; Jiang, C.; Lyu, K.; Wan, W.; Shen, H.; Liang, B.; Fu, Z.; Wang, H.; Yi, L. Hoi4d: A 4d egocentric dataset for category-level human-object interaction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 21013–21022.

- Romero, J.; Tzionas, D.; Black, M.J. Embodied hands: Modeling and capturing hands and bodies together. ACM Trans. Graph. 2017, 36, 245.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).