In this section, we describe the policy-dividing and combination mechanism, which aims to improve the efficiency of policy distribution, thereby mitigating the problem of unsynchronized policy updates across routers and maintaining network consistency. SR technology rests on two key points. The first is to segment the forwarding path, with each segment identified by a SID. The second is to order and combine SIDs at the start node into a SID list that determines the forwarding path of data packets. Data packets are then transmitted through the network along a forwarding path planned in advance. In SRv6, a SID is a 128-bit value in the form of an IPv6 address. Since an SRv6 policy entry is a sequence of multiple SIDs, we propose dividing each SRv6 policy into several SID blocks and transmitting these blocks by multicast to improve policy distribution efficiency.

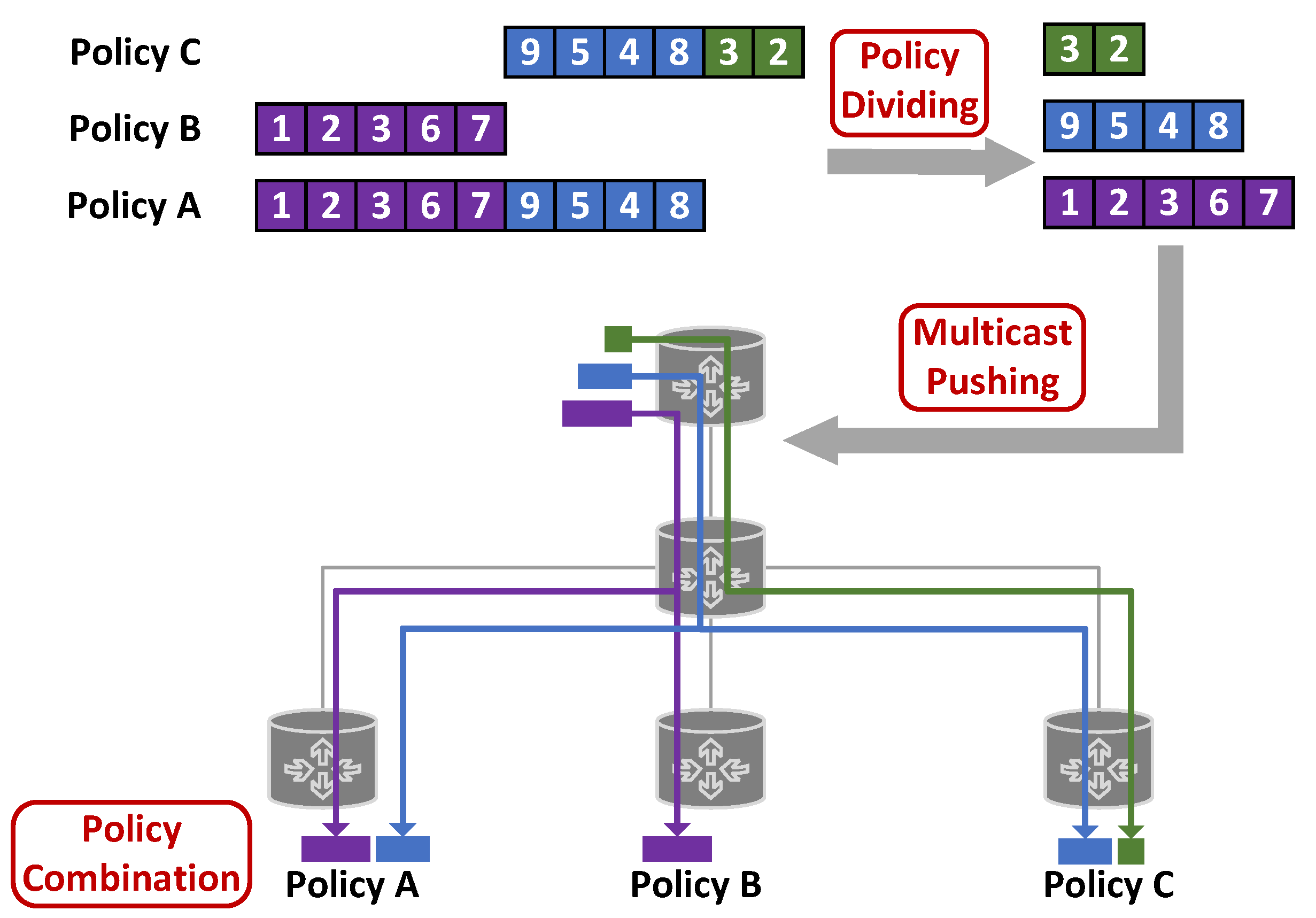

Based on these ideas, the mechanism consists of three functional modules: SRv6 policy dividing, SRv6 policy pushing, and SRv6 policy combination. First, the policy-dividing module divides the policy entries into multiple policy distribution SID blocks. Then, the policy-pushing module multicasts these SID blocks to the target nodes of the policies to which the blocks belong. Finally, the SRv6 policy combination module combines the SID blocks to generate complete SRv6 policies.

Figure 1 shows the architecture of SUDC.

3.1. SRv6 Policy Dividing

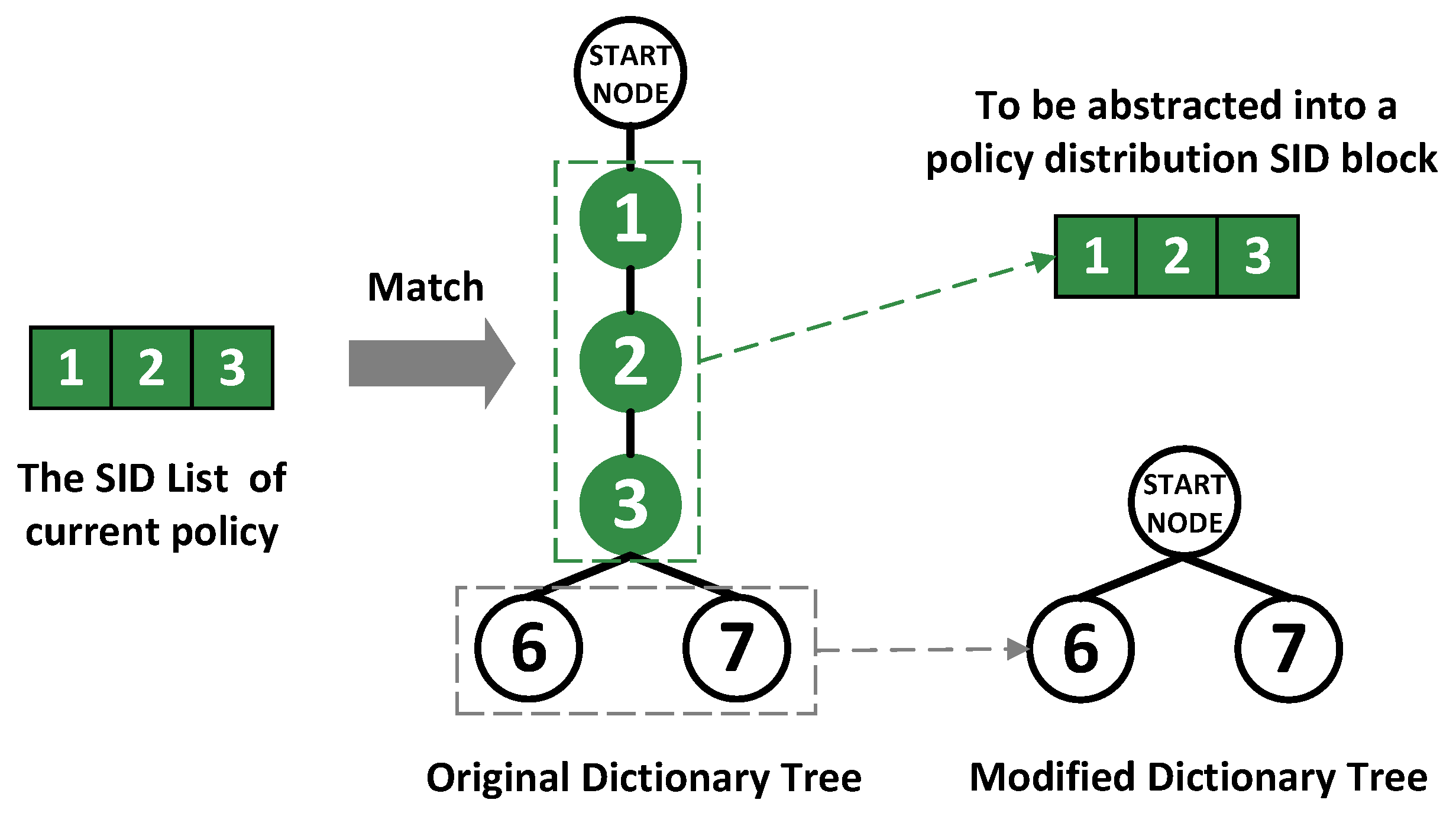

In the SRv6 policy-dividing module, the SID lists of policy entries to be distributed are divided into multiple parts, some of which are common to multiple policies and some of which are unique to a single policy. Each part is wrapped into a policy distribution SID block in order to facilitate policy pushing. This function is performed at the control plane.

The process of distributing all policy entries derived from one routing calculation by the controller is called one policy distribution. During one policy distribution, this module first analyzes all SRv6 policy entries to be distributed. Each SRv6 policy entry includes two parts: the policy target and the SID list. The policy target indicates the target router to which the SRv6 policy is published, and the SID list specifies the path of the policy entry. For simplicity, SIDs in this paper are denoted by router labels; for example, each number in the SID list of Policy A below represents one SID. Sequences of consecutive SIDs that appear in the same order in different SRv6 policy entries are extracted to form policy distribution SID blocks, and each remaining SID sequence is likewise wrapped into its own policy distribution SID block.

The policy distribution SID block is the structure that results from dividing the SID lists of these SRv6 policy entries, and it is the minimum unit of policy distribution in SUDC. To support policy pushing and policy combination, this unit must carry identifying numbers. We therefore stipulate that each policy distribution SID block contains a distribution serial number, a sequence number, and a SID list. The distribution serial number is the number assigned to the current policy distribution, the sequence number identifies the order of the SID block, and the SID list gives the SID sequence carried by the block.
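As a minimal illustration of these two structures, the Python sketch below models a policy entry and a policy distribution SID block as immutable records. The class and field names are hypothetical, and SIDs are kept as router labels (plain integers), as in the text, rather than as 128-bit IPv6 addresses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PolicyEntry:
    target: str            # policy target: router the policy is published to
    sids: tuple[int, ...]  # SID list (each SID written as a router label)


@dataclass(frozen=True)
class SidBlock:
    serial: int            # distribution serial number of this policy distribution
    seq: int               # sequence number: position of the block in the order
    sids: tuple[int, ...]  # SID sequence carried by the block


policy_a = PolicyEntry(target="a", sids=(1, 2, 3, 6, 7, 9, 5, 4, 8))
block_1 = SidBlock(serial=101, seq=1, sids=policy_a.sids[:5])
print(block_1)
# SidBlock(serial=101, seq=1, sids=(1, 2, 3, 6, 7))
```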

A practical example of SRv6 policy dividing follows.

Suppose there are three SRv6 policy entries to be distributed, and the distribution serial number is 101.

Policy A: The policy target is router a, and the SID list is 1->2->3->6->7->9->5->4->8.

Policy B: The policy target is router b, and the SID list is 1->2->3->6->7.

Policy C: The policy target is router c, and the SID list is 9->5->4->8->3->2.

Policy A and Policy B share the consecutive SID sequence 1->2->3->6->7 in the same order. Therefore, the first policy distribution SID block is as shown in the first row of Table 1.

After the policy distribution SID block 1->2->3->6->7 is extracted, the entire SID list of Policy B is covered. However, the remaining SID sequence of Policy A, 9->5->4->8, is not yet covered, so we continue with the remaining part of Policy A.

Policy C and the remaining part of Policy A share the consecutive SID sequence 9->5->4->8 in the same order. Therefore, the second policy distribution SID block is as shown in the second row of Table 1.

After the policy distribution SID block 9->5->4->8 is extracted, the entire SID list of Policy A is covered. However, the remaining SID sequence of Policy C, 3->2, is not yet covered, so we continue with the remaining part of Policy C.

The SID sequence 3->2 is unique to Policy C, so the third policy distribution SID block is planned for Policy C alone, as shown in the third row of Table 1.

After the SRv6 policy-dividing function is performed, the SRv6 policy entries derived from one routing calculation by the controller are decomposed into multiple policy distribution SID blocks.
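The text does not fix a particular procedure for finding shared SID sequences. The Python sketch below shows one hypothetical greedy strategy that reproduces the example: each policy's SID list is consumed from the front, policies whose remaining lists start with the same SID are grouped, the longest common prefix of the largest group becomes the next block, and sequence numbers are assigned in extraction order. The `targets` field is an addition of this sketch, kept for the later BitString mapping step; it is not one of the three stipulated block fields.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SidBlock:
    serial: int              # distribution serial number
    seq: int                 # sequence number
    sids: tuple[int, ...]    # SID list carried by the block
    targets: frozenset[str]  # sketch-only: policy targets that need this block


def common_prefix(lists):
    """Longest run of SIDs shared, in the same order, at the front of every list."""
    prefix = []
    for column in zip(*lists):
        if all(sid == column[0] for sid in column):
            prefix.append(column[0])
        else:
            break
    return prefix


def divide(policies: dict[str, list[int]], serial: int) -> list[SidBlock]:
    """Hypothetical greedy dividing: policies maps policy target -> SID list."""
    remaining = {target: list(sids) for target, sids in policies.items()}
    blocks = []
    seq = 1
    while any(remaining.values()):
        # Group policies whose not-yet-covered SIDs start with the same SID.
        groups: dict[int, list[str]] = {}
        for target, sids in remaining.items():
            if sids:
                groups.setdefault(sids[0], []).append(target)
        # Prefer the SID sequence shared by the most policies (first group wins ties).
        members = max(groups.values(), key=len)
        prefix = common_prefix([remaining[t] for t in members])
        blocks.append(SidBlock(serial, seq, tuple(prefix), frozenset(members)))
        for t in members:
            remaining[t] = remaining[t][len(prefix):]
        seq += 1
    return blocks


policies = {
    "a": [1, 2, 3, 6, 7, 9, 5, 4, 8],
    "b": [1, 2, 3, 6, 7],
    "c": [9, 5, 4, 8, 3, 2],
}
for block in divide(policies, serial=101):
    print(block.seq, block.sids, sorted(block.targets))
# 1 (1, 2, 3, 6, 7) ['a', 'b']
# 2 (9, 5, 4, 8) ['a', 'c']
# 3 (3, 2) ['c']
```

Because every policy is consumed from the front, each policy's blocks receive increasing sequence numbers in path order, which is what allows a target node to rebuild its SID list simply by sorting on sequence number. Other extraction rules are possible; this one is chosen only because it is short and matches Table 1.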

3.2. SRv6 Policy Pushing

The SRv6 policy-pushing module distributes the SID blocks produced by the SRv6 policy-dividing module to the target routers. This function is carried out on the control plane. The SRv6 policy-pushing module consists of four steps: push initiation, BitString mapping, BIERv6 multicast push, and push completion.

SRv6 policy pushing relies on BIERv6 [25], which enables the multicast source to specify the set of destination nodes. In BIERv6, the BitString is set at the source endpoint to specify the destination node set for multicast, and subsequent nodes replicate and forward the multicast packet based solely on the BitString. BIERv6 therefore avoids the resource and time overhead of building and maintaining multicast trees in traditional multicast approaches.
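To make "replicate and forward based solely on the BitString" concrete, the sketch below simulates the generic BIER forwarding procedure of RFC 8279 on a small topology, ignoring the IPv6 encapsulation that BIERv6 adds. The topology, node names (`S` as the multicast source, `R1`, `R2`), bit positions, and forwarding tables are all hypothetical; each table maps a bit position to a next hop and a forwarding bit mask (F-BM) covering the destinations reachable through that next hop. No multicast tree state is kept anywhere.

```python
# Hypothetical topology: S -> R1; R1 -> a and R2; R2 -> b and c.
BIT = {"a": 0, "b": 1, "c": 2}  # assumed bit position of each destination router

# Bit Index Forwarding Table per node: bit position -> (next hop, F-BM).
BIFT = {
    "S": {0: ("R1", 0b111), 1: ("R1", 0b111), 2: ("R1", 0b111)},
    "R1": {0: ("a", 0b001), 1: ("R2", 0b110), 2: ("R2", 0b110)},
    "R2": {1: ("b", 0b010), 2: ("c", 0b100)},
}


def bier_forward(node, bitstring, delivered):
    """Replicate a packet using only its BitString (simplified RFC 8279 procedure)."""
    own = BIT.get(node)
    if own is not None and bitstring & (1 << own):
        delivered.append(node)  # this node is a destination: deliver locally
        bitstring &= ~(1 << own)
    while bitstring:
        index = (bitstring & -bitstring).bit_length() - 1  # least significant set bit
        next_hop, fbm = BIFT[node][index]
        # The copy keeps only the bits of destinations reached via next_hop.
        bier_forward(next_hop, bitstring & fbm, delivered)
        bitstring &= ~fbm  # those destinations are now served


delivered = []
bier_forward("S", (1 << BIT["a"]) | (1 << BIT["b"]), delivered)
print(delivered)  # ['a', 'b']
```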

3.2.1. Push Initiation

Push initiation multicasts the policy push initiation signal, via BIERv6, to all target nodes involved in this policy distribution. The BitString of the push initiation signal is encoded so that the bits corresponding to all nodes that need to perform the policy update are set to 1. This scheme does not specify the concrete type of signal; it can be, for example, a specific multicast Internet Protocol (IP) address or a specific User Datagram Protocol (UDP) port.
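The initiation BitString is the bitwise OR of the bits of every node taking part in the distribution. A minimal sketch, assuming a hypothetical 0-based bit position per router:

```python
# Hypothetical mapping from router name to its BIER bit position (0-based).
BIT_POSITION = {"a": 0, "b": 1, "c": 2}


def all_targets_bitstring(targets):
    """BitString with a 1 for every node that must update policies in this distribution."""
    bitstring = 0
    for router in targets:
        bitstring |= 1 << BIT_POSITION[router]
    return bitstring


# Policies A, B and C target routers a, b and c.
init_bits = all_targets_bitstring(["a", "b", "c"])
print(f"{init_bits:03b}")  # 111
```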

3.2.2. BitString Mapping

BitString mapping determines the BitString encoding of each policy distribution SID block in ascending order of sequence number. First, the SRv6 policies to which the policy distribution SID block belongs are identified, which determines all target nodes to which the block must be multicast. Then, the BitString of the block is encoded so that the bits corresponding to all of these target nodes are set to 1 and the remaining bits are set to 0. After BitString mapping is complete, each policy distribution SID block has its own BitString that indicates its target node set.
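For the running example, the mapping step can be sketched as follows. The bit positions and the `POLICY_BLOCKS` table (which blocks each policy is built from, known once dividing is done) are assumptions of this sketch. Printed BitStrings show bit 2 (router c) on the left and bit 0 (router a) on the right.

```python
BIT_POSITION = {"a": 0, "b": 1, "c": 2}  # assumed bit positions

# Output of the dividing step for the running example: sequence number -> SID list.
BLOCKS = {1: (1, 2, 3, 6, 7), 2: (9, 5, 4, 8), 3: (3, 2)}
# Which blocks each policy (identified by its target router) is built from.
POLICY_BLOCKS = {"a": [1, 2], "b": [1], "c": [2, 3]}


def map_bitstrings(blocks, policy_blocks):
    """Return sequence number -> BitString covering every target that needs the block."""
    mapping = {}
    for seq in sorted(blocks):  # ascending order of sequence number
        bitstring = 0
        for target, seqs in policy_blocks.items():
            if seq in seqs:  # the block belongs to this target's policy
                bitstring |= 1 << BIT_POSITION[target]
        mapping[seq] = bitstring  # all other bits stay 0
    return mapping


for seq, bits in map_bitstrings(BLOCKS, POLICY_BLOCKS).items():
    print(seq, f"{bits:03b}")
# 1 011
# 2 101
# 3 100
```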

3.2.3. BIERv6 Multicast Push

BIERv6 multicast push distributes all policy distribution SID blocks via multicast in ascending order of sequence number. Multicast push uses BIERv6 or a BIERv6-derived technology, such as Multicast Source Routing over IPv6 Best Effort (MSR6 BE). The BitString encoding required for BIERv6 multicast push is determined in the BitString mapping step.

3.2.4. Push Completion

After all policy distribution SID blocks have been pushed, the control plane multicasts a push completion signal to all policy target nodes involved in this policy distribution. The BitString of the push completion signal is encoded so that the bits corresponding to all nodes that need to perform the policy update are set to 1. As with push initiation, this scheme does not specify the concrete type of signal, which can be a specific multicast IP address or a specific UDP port. As soon as the policy target nodes receive the push completion signal, they execute the SRv6 policy combination function.
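Putting the four steps together, the control-plane side of one policy distribution can be sketched as below. The message kind strings and the `send` callback are hypothetical placeholders, since the scheme leaves the signal format open. The initiation and completion BitStrings are taken here as the union of all block BitStrings, which covers every policy target because each target receives at least one block.

```python
from typing import Callable

# Hypothetical message kinds; the concrete signal format is left open by the scheme.
INIT, BLOCK, DONE = "push-initiation", "sid-block", "push-completion"


def push_distribution(serial, blocks, block_bits, send: Callable[[str, int, object], None]):
    """blocks: seq -> SID tuple; block_bits: seq -> BitString from BitString mapping."""
    all_targets = 0
    for bits in block_bits.values():
        all_targets |= bits  # every node taking part in this distribution
    send(INIT, all_targets, serial)  # push initiation
    for seq in sorted(blocks):  # BIERv6 multicast push, ascending sequence number
        send(BLOCK, block_bits[seq], (serial, seq, blocks[seq]))
    send(DONE, all_targets, serial)  # push completion


log = []
push_distribution(
    101,
    {1: (1, 2, 3, 6, 7), 2: (9, 5, 4, 8), 3: (3, 2)},
    {1: 0b011, 2: 0b101, 3: 0b100},
    lambda kind, bits, payload: log.append((kind, f"{bits:03b}", payload)),
)
for entry in log:
    print(entry)
```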

3.3. SRv6 Policy Combination

The SRv6 policy combination module combines policy distribution SID blocks to generate complete SRv6 policies. This function is executed at the data plane by the SRv6 nodes that are policy targets. Each such node combines the received policy distribution SID blocks in ascending order of sequence number to generate a complete SRv6 policy. The destination IPv6 address matched by the combined policy is the last address in its SID list. The node then writes the combined SRv6 policy to its SRv6 pipeline.
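On a policy target node, this behavior can be sketched as a small receiver that buffers SID blocks between the push initiation and push completion signals and then concatenates them in ascending order of sequence number. The class, its methods, and the check that each block carries the current distribution serial number are assumptions of this sketch; the list `pipeline` merely stands in for the node's SRv6 pipeline, and the destination is the last SID, here a router label rather than a real IPv6 address.

```python
class PolicyReceiver:
    """Hypothetical per-node state for receiving one policy distribution."""

    def __init__(self):
        self.serial = None
        self.blocks = {}    # sequence number -> SID tuple
        self.pipeline = []  # stand-in for the node's SRv6 pipeline

    def on_initiation(self, serial):
        # Push initiation: start collecting blocks for this distribution.
        self.serial = serial
        self.blocks = {}

    def on_block(self, serial, seq, sids):
        # Assumption: blocks belonging to other distributions are ignored.
        if serial == self.serial:
            self.blocks[seq] = tuple(sids)

    def on_completion(self, serial):
        # Push completion: combine blocks in ascending order of sequence number.
        if serial != self.serial or not self.blocks:
            return None
        sid_list = tuple(sid for seq in sorted(self.blocks) for sid in self.blocks[seq])
        policy = {"destination": sid_list[-1], "sid_list": sid_list}
        self.pipeline.append(policy)
        return policy


node_c = PolicyReceiver()
node_c.on_initiation(101)
node_c.on_block(101, 2, (9, 5, 4, 8))
node_c.on_block(101, 3, (3, 2))
print(node_c.on_completion(101))
# {'destination': 2, 'sid_list': (9, 5, 4, 8, 3, 2)}
```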

We continue to illustrate the process of the SRv6 policy combination using the example given in the SRv6 policy-dividing module.

After SRv6 policy pushing, node a receives two policy distribution SID blocks, shown in the first and second rows of Table 2. Node a combines them in ascending order of sequence number to generate the complete SRv6 policy 1->2->3->6->7->9->5->4->8. Since 8 is the last SID in this list, the destination IPv6 address matched by the combined policy is the IPv6 address of node 8. Node a then writes this policy to its SRv6 pipeline.

After SRv6 policy pushing, node b receives only one policy distribution SID block, shown in the first row of Table 2, so its complete SRv6 policy is simply 1->2->3->6->7. The destination IPv6 address matched by the combined policy is the IPv6 address of node 7. Node b then writes this policy to its SRv6 pipeline.

After SRv6 policy pushing, node c receives two policy distribution SID blocks, shown in the second and third rows of Table 2. Node c combines them in ascending order of sequence number to generate the complete SRv6 policy 9->5->4->8->3->2. The destination IPv6 address matched by the combined policy is the IPv6 address of node 2. Node c then writes this policy to its SRv6 pipeline.