Abstract

In this study, improvements are made to Inter-SubNet, a state-of-the-art speech enhancement method. Inter-SubNet is a single-channel speech enhancement framework that enhances the sub-band spectral model by integrating global spectral information, such as cross-band relationships and patterns. Despite the success of Inter-SubNet, one crucial aspect probably overlooked by Inter-SubNet is the unequal perceptual weighting of different spectral regions by the human ear, as it employs MSE as its loss function. In addition, MSE loss has a potential convergence concern for model learning due to gradient explosion. Hence, we propose further enhancing Inter-SubNet by either integrating perceptual loss with MSE loss or modifying MSE loss directly in the learning process. Among various types of perceptual loss, we adopt the temporal acoustic parameter (TAP) loss, which provides detailed estimation for low-level acoustic descriptors, thereby offering a comprehensive evaluation of speech signal distortion. In addition, we leverage Huber loss, a combination of L1 and L2 (MSE) loss, to avoid the potential convergence issue for the training of Inter-SubNet. By the evaluation conducted on the VoiceBank-DEMAND database and task, we see that Inter-SubNet with the modified loss function reveals improvements in speech enhancement performance. Specifically, replacing MSE loss with Huber loss results in increases of 0.057 and 0.38 in WB-PESQ and SI-SDR metrics, respectively. Additionally, integrating TAP loss with MSE loss yields improvements of 0.115 and 0.196 in WB-PESQ and CSIG metrics.

1. Introduction

Speech enhancement (SE), which improves the quality and intelligibility of speech signals in the presence of noise and other distortions, is a crucial research topic in signal processing. Traditional statistics-based SE methods such as spectral subtraction and Wiener filtering often struggle to give satisfactory performance in complicated acoustic environments. In contrast, advancements in deep learning, especially deep neural networks (DNNs), have revolutionized SE techniques [1,2,3,4,5,6].

Speech processing tasks have been successfully performed using DNNs, which can learn complicated representations from large datasets. DNNs in SE have enabled models that can learn to separate speech from noise more reliably than traditional methods. Here, we briefly describe these methodologies’ evolution, highlighting key contributions and advancements in the field.

DNNs were first used for SE in the early 2010s. For example, X. Lu and Y. Tsao et al. [7] pioneered a Deep Denoising Autoencoder structure for a speech enhancement task and showed it performed better than a classic minimum-mean-square-error (MMSE)-based algorithm [8]. Following that, the development of convolutional neural networks (CNNs) was a huge step forward in the practice of SE. Since CNNs are particularly good at capturing spatial hierarchies in data, they are appropriate for processing spectrograms of speech signals. The work in [9] utilized CNNs for SE, showing that their model outperformed traditional SE methods.

Recurrent neural networks (RNNs), particularly long short-term memory (LSTM) networks, have also been employed for SE due to their ability to model temporal dependencies in sequential data. For example, ref. [10] introduced a time-domain SE model based on LSTM, demonstrating superior performance in real-time applications. Furthermore, recent advancements have led to end-to-end SE systems that directly map noisy audio to enhanced audio without the need for intermediate representations. Ref. [11] presented an end-to-end deep learning framework that performs multichannel speech enhancement in the time domain. In addition, incorporating attention mechanisms into DNN architectures has further enhanced the performance of SE models. The work in [12] introduced an attention-based model that dynamically focuses on relevant parts of the input signal, leading to improved noise suppression and speech quality. Moreover, to consider the demand for real-time SE, research towards optimizing DNN architectures has also been developed for efficiency. For example, ref. [13] proposed a lightweight network that maintains high performance while significantly reducing computational complexity, making it suitable for real-time applications.

In addition to the network structure, an SE model can also be classified based on the following factors:

- The type of audio signal it operates on. For instance, time-domain methods [10,14,15] process the raw time-domain waveform of the noisy speech signal, while frequency-domain methods [16,17,18,19] transform the noisy time-domain signal to the frequency domain using a short-time Fourier transform (STFT) and then learn a mapping from the noisy STFT representation to an enhanced STFT.

- The target that operates on the noisy representation to produce a cleaner output. For example, masking-based methods [20,21,22] estimate a mask or weight for each time-frequency bin of noisy speech to indicate the likelihood that the signal is speech rather than noise and then apply the mask to suppress noise. Conversely, mapping-based methods [14,23,24] directly map the noisy speech to a clean speech signal, with the DNN learning a complex mapping function to transform the noisy speech into a more speech-like signal.

The performance of a deep neural network for SE is influenced by various factors, including the hyperparameters, architecture, initialization, training data, and loss function. By carefully optimizing these elements, researchers can improve existing deep-neural-network-based SE methods. While modifying the network’s architecture can be challenging, adjusting the loss function often yields more tangible results. Since the loss function is only utilized during the training phase and not during inference, this approach allows for performance enhancements without adding to the computational burden or time required for inference.

Among various state-of-the-art DNN-based SE models, Inter-SubNet [25] is a masking-based and frequency-domain method since it pursues a complex ideal ratio mask (cIRM) [22] that applies to the STFT of the input signal. Essentially, Inter-SubNet is a refined and streamlined version of FullSubNet [26] and FullSubNet+ [27], two highly effective SE models. While achieving superior results, Inter-SubNet boasts a significantly smaller model size, making it more computationally efficient. According to [25], Inter-SubNet was also rigorously tested on the DNS Challenge dataset and consistently outperformed some other leading and state-of-the-art methods, including DCCRN-E [14], Conv-TasNet [28], PoCoNet [29], DCCRN+ [30], TRU-Net [31], CTS-Net [32], FullSubNet [26], and FullSubNet+ [27].

Building upon the insights gained above, this study proposes a novel modification to Inter-SubNet, focusing on its loss function. By refining the loss function, we aim to enhance Inter-SubNet’s performance without compromising its computational efficiency. The original Inter-SubNet model employs the mean-squared error (MSE) of cIRM as its loss function, which, while effective in certain scenarios, can exhibit limitations. The following may be included in these limitations:

- MSE is sensitive to outliers and can suffer from gradient explosion issues, which can impede the training process.

- By only minimizing the estimation error of the cIRM, the MSE loss function overlooks the inherent variability in the importance of different time-frequency bins of a speech signal. This is important because human perception is not uniform across all frequency bands.

- In scenarios with low signal-to-noise ratios (SNRs), MSE can cause the model to excessively reduce certain frequency regions where noise dominates, negatively impacting the quality of the speech component.

- While human auditory perception operates on a non-linear scale, the cIRM is defined on a linear frequency scale. This discrepancy may further impede the efficacy of MSE in revising cIRM to capture perceptual details.

To address these limitations, we suggest integrating Huber loss [33] and temporal acoustic parameter (TAP) loss [34] into the training of Inter-SubNet. Huber loss, which combines L1 and L2 losses, is less sensitive to outliers than MSE and is a robust loss function. On the other hand, TAP loss provides a detailed estimation for 25 low-level acoustic descriptors, offering a comprehensive evaluation of speech signal distortion. These modifications to Inter-SubNet are expected to improve speech enhancement performance by addressing gradient explosion issues and considering perceptual characteristics. It is important to note that these modifications only impact the training process and do not increase computational complexity or latency during inference. The experiments conducted on the VoiceBank-DEMAND database exhibit the superiority of the presented modifications. Our enhanced Inter-SubNet consistently outperforms the original model across various speech enhancement metrics, highlighting the effectiveness of our approach.

The remainder of this study is organized as follows: An overview of the Inter-SubNet framework is provided in Section 2. Huber loss, TAP loss, and potential methods of integrating them with the initial MSE loss are introduced in Section 3. Section 4 provides the experimental setup, and Section 5 presents the experimental results and analyses. Lastly, Section 6 offers concluding remarks.

2. Inter-SubNet

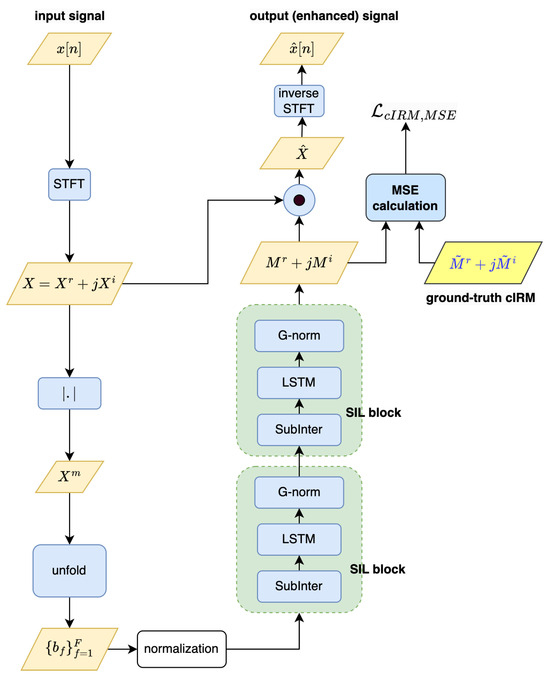

This section briefly introduces the SE framework, Inter-SubNet, which serves as the model for further improvement. The flowchart of the training of Inter-SubNet is shown in Figure 1, and is described as follows:

- Initially, the input signal is transformed into a complex-valued spectrogram using the short-time Fourier transform (STFT). Here, F and T represent the total number of frequency bins and frames, respectively. The magnitude part of the spectrogram, denoted as , is obtained by calculating the absolute value of each entry in the complex spectrogram. is then split into sub-band units using an “unfold” process. Each unit is generated by concatenating a frequency bin vector with its adjacent frequency bin vectors, extending N frequency bins on each side. That is,Note that circular Fourier frequencies are used for those frequency indices f for if or .

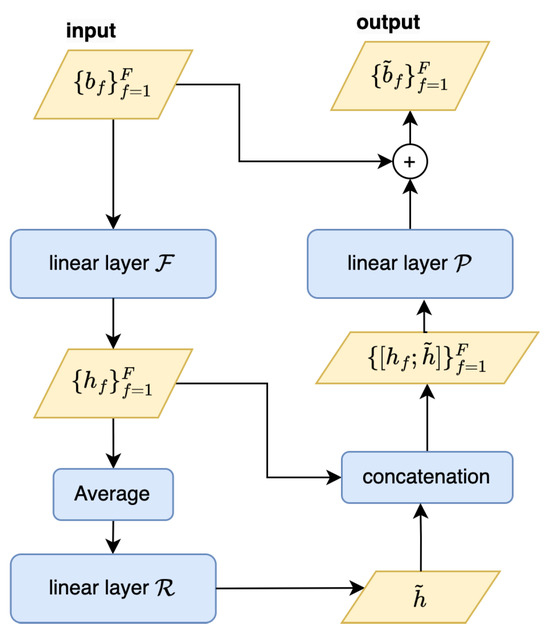

- The sub-band units pass through a stack of two SubInter-LSTM (SIL) blocks and a fully connected layer to produce the complex ideal ratio mask (cIRM), . Each SIL block comprises a SubInter module, an LSTM network, and a group normalization layer (G-Norm). The SubInter module plays a crucial role in capturing local and global spectral information, the flowchart of which is depicted in Figure 2. This module takes the sub-band units as input and outputs enhanced sub-bands through a series of procedures:

- (a)

- The dimension transformation (permutation) and a linear layer are applied to the input sub-band units:where the output is the first hidden representation that contains parallel sub-band information, and refers to a linear layer operation, and “” refers to the dimension transformation operation.

- (b)

- The first hidden representation is averaged across all sub-bands and then passed through another linear layer:where denotes a linear layer. The output , the second hidden representation, contains the global spectral information due to the average operation considering cross-band dependencies and refers to sub-band interaction.

- (c)

- Each of the sub-bands is concatenated with . Then, the set of all updated sub-bands undergoes the third linear layer and the dimension transformation (permutation). Additionally, a residual connection is implemented to add back the original units . Therefore, the ultimate output for this module is as follows:where denotes a linear layer. Obviously, the final enhanced sub-band features intertwine global and local (sub-band) spectral information.

Upon completion of the SubInter module, the LSTM network acquires the local and complementary global spectral information embedded within these sub-band features , which acts like the LSTM layers of the sub-band model in FullSubNet+ [27]. A G-norm layer is then used to normalize the LSTM outputs. - The output cIRM, , is element-wise multiplied with the input complex-valued spectrogram aswhere “⊙” is the element-wise multiplication operator. The resulting spectrogram is then converted back to a time-domain signal using the inverse STFT, providing an enhanced version of the input signal

The mean-squared error (MSE) between the estimated cIRM, , and the ground-truth cIRM (denoted by ) is employed as the loss function to train the Inter-SubNet framework. The MSE loss is expressed by

where is the Frobenius norm.

Figure 1.

The flowchart of Inter-SubNet (using the MSE of cIRM as the loss).

Figure 2.

The flowchart of the SubInter module in Inter-SubNet.

3. Inter-SubNet with New Loss Function

The primary objective of this study is to revise the training process of the Inter-SubNet architecture by restructuring its loss function. Our approach uses two different loss functions: Huber loss and temporal acoustic parameter (TAP) loss. In simple terms, Huber loss helps address potential convergence and outlier issues when training networks using MSE loss. On the other hand, TAP loss can enhance Inter-SubNet’s ability to emulate human sound production and perception.

3.1. Huber Loss

Huber loss [33], also termed smooth L1 loss, is a robust loss function used primarily in regression tasks, combining the properties of both L2 loss (mean squared error, MSE) and L1 loss (mean absolute error, MAE). It is expressed as follows:

where y and are the estimated and ground-truth values, respectively, and is a threshold parameter that determines the transition point between the quadratic and linear loss. For errors smaller than , Huber loss behaves like L2 loss, providing smoothness and differentiability, which is advantageous for optimization. For larger errors, it transitions to L1 loss, which is less sensitive to outliers.

One of the primary advantages of Huber loss over L2 (MSE) loss is its robustness to outliers, while MSE can disproportionately penalize large errors due to its quadratic nature, leading to skewed results in the presence of outliers, Huber loss mitigates this effect by treating large errors linearly beyond the threshold . As a result, Huber loss provides a balanced approach to error measurement, combining the strengths of both L1 and L2 losses while reducing the impact of outliers, making it a preferred choice in many regression tasks.

3.2. TAP Loss

Conventional loss functions such as MSE loss employed in the training of Inter-SubNet only take into account the differences between individual points of signals or signals’ masks in either the time domain or frequency domain. Consequently, they have low correlations with perceptual speech quality or they may overemphasize high-energy regions in signals. To solve this problem, we can further improve deep networks by taking into account acoustic characteristics. This helps improve the perceptual quality of enhanced speech in addition to noise reduction. The research in [34] focuses on analyzing 25 acoustic features at each time frame of an input speech, which are thus called temporal acoustic parameters (TAPs). A differentiable estimator was created for these TAPs. Leveraging the differentiability of the TAP estimator, the built TAP loss can be employed to learn any deep network for speech enhancement.

The four classes that cover the aforesaid 25 TAPs are as follows [34]

- Frequency-related: These parameters capture characteristics related to the frequency content of the speech signal.

- Energy- or amplitude-related: These parameters reflect the loudness and energy levels of the speech signal, critical for understanding the intensity and dynamics of speech.

- Spectral-balance-related: These parameters assess the energy distribution across different frequency bands, providing insights into the tonal balance and clarity of the speech.

- Other temporal parameters: These parameters capture the temporal dynamics of the speech signal, including variations over time that are important for conveying speech rhythm and flow.

Here, we introduce the derivation of TAP loss by referring to [34]. Let denote the TAPs of a time-domain utterance . The TAP estimator produces an estimate of as

where denotes the TAP estimator operation, which converts to its complex spectrogram and then passes to a recurrent neural network to output the estimate . In order to learn the network parameters of the TAP estimator, the MAE is employed:

where t and p are the time frame index and TAP index, respectively, and is the expectation operator over all time frames and TAPs.

Once the aforesaid TAP estimator is learned, it is frozen as a fixed processing component to build TAP loss between the enhanced signal and the ground-truth signal . Let be the short-time Fourier transform (STFT) of ; the frame-wise energy weights are calculated by

Then, the final TAP loss is defined as

where is the sigmoid function, and “⊙” is the operation of element-wise multiplication with broadcasting. From Equation (11), TAP loss is in fact the MAE between ground-truth (clean) and estimated TAPs with smoothed frame energy-weighting. The smoothed frame energy weights are used to highlight the TAPs of high-energy frames.

3.3. Discussion of Some Other Losses

As discussed in the previous two subsections, Huber loss serves as a robust loss function, while TAP loss particularly considers the perceptual quality of enhanced speech. Here, we introduce the related losses and provide a simple comparison of them with Huber loss and TAP loss.

3.3.1. Robust Loss

In addition to Huber loss, Charbonnier loss [35] is another robust loss function used in machine learning, particularly in regression tasks. Given y and as the estimated and ground-truth values, Charbonnier loss is expressed as follows:

where is a small constant. Compared to Huber loss, Charbonnier loss is fully differentiable and has a smoother transition from quadratic to linear. Charbonnier loss can be viewed as a differentiable approximation of the Huber loss. Additionally, Huber loss has an explicit parameter ( in Equation (7)) that controls the transition between quadratic and linear behavior, making it easier to adjust the sensitivity to outliers. In comparison, Charbonnier loss does not have a direct parameter for controlling this transition, which might make it less flexible in specific applications. Therefore, Huber loss is preferred over Charbonnier loss when there is a need for clear control over outlier sensitivity. Conversely, Charbonnier loss might be favored in tasks that require smoother gradients.

3.3.2. Perceptual Loss

In training SE networks, perceptual loss functions have been used to better align the model’s output with human auditory perception. The following presents some key perceptual loss functions from the recent literature:

- Perceptual Evaluation of Speech Quality (PESQ) [36,37]: PESQ is widely used for assessing speech quality. However, the original PESQ algorithm is non-differentiable, making it unsuitable as a direct loss function. To address this limitation, researchers have trained neural networks to mimic PESQ’s behavior, creating differentiable surrogate loss functions that enable gradient-based optimization.

- Short-Time Objective Intelligibility (STOI) [38]: STOI measures speech intelligibility and has been used as a metric to evaluate speech enhancement methods. Like PESQ, STOI’s non-differentiable nature poses challenges for direct optimization. To overcome this, researchers have explicitly developed differentiable versions or approximations of STOI for training deep-learning SE models.

- Phone-Fortified Perceptual Loss (PFPL) [39]: The PFPL integrates phonetic information through wav2vec, a self-supervised learning framework that captures rich phonetic information. It uses Wasserstein distance to quantify the distributional differences between the phonetic feature representations of clean and enhanced speech. This approach allows for assessing how well the enhanced speech preserves phonetic attributes compared to the original clean speech. PFPL has also shown a strong correlation with PESQ and STOI.

Similar to PESQ, STOI, and PFPL losses, the TAP loss employed in this study also considers various perceptual characteristics of enhanced signals. These perceptual losses are generally combined with conventional MSE loss related to estimated signals or features to further improve SE performance. As it integrates up to 25 temporal acoustic features, TAP loss is regarded as more comprehensive, offering a deeper insight into how enhancements affect speech compared to other perceptual losses. However, the effectiveness of each loss function ultimately depends on the specific applications.

3.4. Presented Method

The method presented here modifies Inter-SubNet by adjusting its loss function during training. Let us denote the estimated and ground-truth time signals as and . We provide three options for the loss function:

- The new loss is calculated using the mean Huber loss measured with the estimated and ground-truth cIRMs for and . It is expressed as follows:where is from Equation (7).

- The new loss is a combination of TAP loss with the MSE-based cIRM loss:

- The new loss is a combination of TAP loss with the mean Huber-based cIRM loss:

In Equations (14) and (15), , , and are from Equations (6), (11), and (13), and , and are the weight hyper-parameters for TAP loss.

The gradient of the employed loss is backpropagated to update both the complex ideal ratio mask (cIRM) and the other parameters in Inter-SubNet during training. The following is a detailed explanation of how this process works:

- Forward Pass: In the forward pass, the network processes the noisy speech signal to generate the complex ideal ratio mask (cIRM). This mask is then applied to the time-frequency representation of the noisy signal, resulting in enhanced speech output.

- Loss Calculation: The estimated cIRM mask is evaluated against the ground-truth mask using MSE or Huber loss. Simultaneously, we assess the enhanced speech against the clean target speech using TAP loss. This loss function specifically measures how effectively the enhanced speech retains crucial temporal acoustic parameters.

- Backward Pass: The total loss, which combines both MSE/Huber loss and TAP loss, is utilized to compute the gradient with respect to all network parameters by applying the chain rule of calculus through each layer. Compared to MSE loss for the cIRM, TAP loss additionally incorporates layers that perform the inverse STFT and those responsible for estimating TAPs.

- Updating Network Parameters: The computed gradients are used to update the parameters of the Inter-SubNet framework with an optimizer.

This process is repeated for multiple epochs over the training dataset, allowing Inter-SubNet to learn and adjust its parameters based on the feedback from the employed loss.

4. Experimental Setup

In order to evaluate the efficacy of the presented method, we employed the VoiceBank-DEMAND database and task [40,41], which combine the VoiceBank speech dataset with noise sources from the DEMAND database. The training dataset consisted of 11,572 utterances collected from 28 speakers. As for the test dataset, it included 824 utterances spoken by only 2 speakers. In the training set, the utterances underwent corruption by ten types of noise from the DEMAND database. The entire DEMAND dataset included a variety of noise types categorized into six main groups (Residential, Nature, Office, Public, Street, and Transportation). Each category consisted of three specific scenes, totaling 18 distinct noise scenes, providing recordings that reflect various real-world acoustic environments.

These noises were introduced to the training set at four distinct signal-to-noise ratios (SNRs): 0, 5, 10, and 15 in dB. Conversely, the test set contained utterances affected by five different types of DEMAND noise, present at four different SNRs: 2.5, 7.5, 12.5, and 17.5 in dB. Additionally, we reserved around 200 utterances from the training set for validation purposes.

For the training of the Inter-SubNet framework, we referred to [34] and employed the following parameter settings:

- A Hanning window with 32ms frame size and 16ms frame shift was applied to input signals to obtain the spectrogram.

- The sub-band unit was set to have frequency bins, and the number of frames T for the input-target sequence pairs during training was set to 192.

- For the first SIL block, the SubInter module and the LSTM contained 102 hidden units and 384 hidden units, respectively. For the second SIL block, the SubInter module and the LSTM contained 307 hidden units and 384 hidden units, respectively.

- The batch size was reduced from the initial value of 20 in the script to 4 to accommodate the GPU constraints of the servers in our laboratory.

Following [34], the TAP estimator used in TAP loss was set to be a 3-layer bi-LSTM with 256 hidden units, and it was trained on the DNS 2020 clean dataset [42]. As for the employed Huber loss, the hyperparameter in Equation (7) was set to 1. According to [34], the FullSubNet framework trained with a loss function that includes cIRM-wise MSE loss and a 0.03-weighted TAP loss yielded the best results. Therefore, we varied the weights of the TAP loss, in Equation (14) and in Equation (15), within the range of in increments of to evaluate the performance of the trained Inter-SubNet. The experiments were conducted using the PyTorch programming language on a desktop computer featuring an AMD Ryzen 5 3550X 6-Core Processor (Santa Clara, CA, USA) and an NVIDIA GP102 GPU (Santa Clara, CA, USA).

To evaluate Inter-SubNet with different loss function settings in SE performance, we employed several objective metrics:

- Wideband Perceptual Evaluation of Speech Quality (WB-PESQ) [36]: This metric ranks the level of enhancement for wideband speech signals, typically sampled at frequencies of 16 kHz or higher. WB-PESQ indicates the quality difference between the enhanced and clean speech signals, and it ranges from to 4.5.

- Narrowband Perceptual Evaluation of Speech Quality (NB-PESQ) [37]: Similar to WB-PESQ, NB-PESQ evaluates the quality of speech signals but is limited to narrowband ones, typically sampled at 8 kHz. WB-PESQ involves high-fidelity speech processing, whereas NB-PESQ is more suited for traditional telephony.

- Short-Time Objective Intelligibility (STOI) [38]: This metric measures the objective intelligibility for short-time time-frequency (TF) regions of an utterance with discrete Fourier transform (DFT). STOI ranges from 0 to 1; a higher STOI score corresponds to better intelligibility.

- Scale-Invariant Signal-to-Distortion Ratio (SI-SNR) [43]: This metric usually reflects the degree of artifact distortion between the processed utterance and the clean counterpart , which is formulated bywherewith being the inner product of and .

- Composite Measure for Overall Quality (COVL) [44]: This metric is for the Mean Opinion Score (MOS) prediction of the overall quality of the enhanced speech. It reflects how listeners perceive the overall quality of the speech signal. COVL scores range from 0 to 5, where a higher score indicates better overall speech quality. COVL is commonly used in the development of SE systems to quantify improvements in speech quality as perceived by human listeners.

- Composite Measure for Signal Distortion (CSIG): [44]: This metric refers to the MOS prediction of signal distortion, which ranges from 0 to 5, with higher scores indicating lower distortion and better quality of the speech signal. It evaluates how much distortion has been introduced during the enhancement process. CSIG is useful for assessing the effectiveness of SE algorithms in preserving the integrity of the original speech while reducing noise and other distortions.

5. Experimental Results and Discussion

In Table 1, we can observe the SE metric scores of Inter-SubNet using three different loss functions. The results show that replacing MSE loss with Huber loss for cIRM estimation leads to a significant improvement in all six metrics, demonstrating the effectiveness of Huber loss in enhancing the Inter-SubNet performance. On the other hand, replacing MSE loss (for cIRM) with TAP loss (for time-domain signals) improves COVL and CSIG scores but worsens the remaining SE metrics (WB-PESQ, NB-PESQ, STOI, and SI-SDR). This suggests that solely minimizing TAP loss does not effectively optimize the cIRM used in Inter-SubNet, a masking-based SE framework.

Next, we incorporated TAP loss into the original MSE loss of Inter-SubNet. The corresponding results are displayed in Table 2. From the table, the following observations can be made:

- TAP loss fine-tunes Inter-SubNet to enhance its performance. The combination of MSE and TAP losses leads to better results in almost all SE metrics (except SI-SDR) compared to when individual losses are used alone.

- When the weight for TAP loss is set within the range of , similar good performance is achieved. However, increasing to 0.04 degrades some metrics.

- The introduction of TAP loss does not lead to an improvement in the SI-SDR metric. This may be due to the fact that SI-SDR is more concerned with physical distortion rather than the perceptual interference that TAP loss addresses.

Table 2.

The various SE performance scores of Inter-SubNet trained with different loss functions: as in Equation (6), as in Equation (11), and as in Equation (14) with several assignments of .

| Loss | WB-PESQ | NB-PESQ | STOI | SI-SDR | COVL | CSIG | |

|---|---|---|---|---|---|---|---|

| (baseline) | 2.843 | 3.588 | 0.943 | 18.427 | 3.419 | 4.000 | |

| 2.746 | 3.478 | 0.939 | 17.338 | 3.442 | 4.127 | ||

| 2.958 | 3.622 | 0.946 | 18.279 | 3.587 | 4.196 | ||

| 2.915 | 3.591 | 0.946 | 18.307 | 3.564 | 4.193 | ||

| 2.913 | 3.605 | 0.946 | 18.407 | 3.562 | 4.191 | ||

| 2.923 | 3.587 | 0.945 | 18.236 | 3.557 | 4.172 | ||

In addition, Table 3 lists the results for Inter-SubNet using the fusion of Huber loss and TAP loss. We find that using TAP loss in addition to Huber loss results in better scores for the WB-PESQ, COVL, and CSIG metrics. When we set to 0.02, the CSIG score reaches , which is the best result among all the cases discussed in this section.

Table 4 summarizes the results from the previous three tables as part of an ablation study. Our findings confirm that the combination of Huber and TAP loss outperforms each loss component. Specifically, substituting MSE loss with Huber loss proves advantageous, while TAP loss alone yields poorer performance than MSE loss (baseline).

Table 4.

The ablation study, summarizing the influence of Huber loss and TAP loss on the performance of Inter-SubNet.

In addition, we compared the revised Inter-SubNet (incorporating Huber and TAP losses) with several prominent speech enhancement frameworks, SEGAN [24], CMGAN [45], and MP-SENet [46], which primarily utilize generative adversarial networks (GANs), along with MANNER [47] and SE-Conformer [48], which mainly employ attention mechanisms. This evaluation, detailed in Table 5, is based on their experimental results for the NB-PESQ and STOI metrics obtained from the VoiceBank-DEMAND dataset, as reported in the literature. From the table, it is evident that the revised Inter-SubNet achieves a superior NB-PESQ score compared to all other methods evaluated. In contrast, nearly all methods (with the exception of SEGAN) yield very similar STOI scores. These findings confirm that the proposed method performs better than or at least on par with state-of-the-art speech enhancement techniques that rely on GAN- or attention-based mechanisms. Given its impressive performance and compact model size, we believe that the revised Inter-SubNet is highly suitable for real-world applications.

Table 5.

The NB-PESQ and STOI performance scores (rounded to the nearest two decimal places) of the revised Inter-SubNet (with Huber and TAP losses), SEGAN [24], CMGAN [45], MP-SENet [46], MANNER [47], and SE-Conformer [48].

Finally, Table 6 details the memory usage, training time, inference time, and the number of model parameters for Inter-SubNet trained with various loss functions. The experiments were conducted using the PyTorch programming language on a desktop computer featuring an AMD Ryzen 5 3550X 6-Core Processor and an NVIDIA GP102 GPU. The data indicate that modifying the loss function primarily influences the training time of Inter-SubNet and the model complexity when considering the TAP estimator in the count of model parameters. However, the choice of loss function does not affect the inference time of Inter-SubNet; thus, all versions presented here demonstrate very similar inference time.

Table 6.

The memory usage, training time, inference time, and model size of Inter-SubNet trained using different loss functions. The programming language used was PyTorch, and the desktop computer conducting the experiments was equipped with an AMD Ryzen 5 3550X 6-Core Processor and an NVIDIA GP102 GPU. Note: The TAP estimator used in TAP loss contains 5.2106 M parameters.

6. Conclusions

This study aims to enhance Inter-SubNet, a state-of-the-art speech enhancement framework, by incorporating Huber loss and temporal acoustic parameter (TAP) loss. Our experiments on the VoiceBank-DEMAND dataset demonstrate that both replacing MSE loss with Huber loss and integrating TAP loss with MSE loss improve Inter-SubNet’s performance across various metrics. Importantly, these modifications do not increase the model’s complexity for inference, making them practical for real-world applications. As for the future research direction, we plan to apply Huber and TAP losses to the other state-of-the-art speech enhancement frameworks and other speech processing tasks (such as voice activity detection, speech separation, and speaker diarization) to evaluate their effectiveness. In addition, we will explore novel loss functions that benefit the training of neural networks.

Author Contributions

Methodology, J.-W.H., P.-C.H. and L.-Y.L.; Software, P.-C.H. and L.-Y.L.; Validation, J.-W.H., P.-C.H. and L.-Y.L.; Formal analysis, J.-W.H. and P.-C.H.; Investigation, J.-W.H. and P.-C.H.; Resources, J.-W.H.; Data curation, J.-W.H.; Writing—original draft, J.-W.H., P.-C.H. and L.-Y.L.; Writing—review & editing, J.-W.H.; Visualization, J.-W.H.; Supervision, J.-W.H.; Project administration, J.-W.H.; Funding acquisition, J.-W.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in the study are included in the article, further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Ochieng, P. Deep neural network techniques for monaural speech enhancement: State of the art analysis. arXiv 2023, arXiv:2212.00369. [Google Scholar] [CrossRef]

- Xu, L.; Zhang, T. Fractional feature-based speech enhancement with deep neural network. Speech Commun. 2023, 153, 102971. [Google Scholar] [CrossRef]

- Hao, X.; Xu, C.; Xie, L. Neural speech enhancement with unsupervised pre-training and mixture training. Neural Netw. 2023, 158, 216–227. [Google Scholar] [CrossRef] [PubMed]

- Wang, Y.; Han, J.; Zhang, T.; Qing, D. Speech Enhancement from Fused Features Based on Deep Neural Network and Gated Recurrent Unit Network. EURASIP J. Adv. Signal Process. 2021. [Google Scholar] [CrossRef]

- Skariah, D.; Thomas, J. Review of Speech Enhancement Methods using Generative Adversarial Networks. In Proceedings of the 2023 International Conference on Control, Communication and Computing (ICCC), Thiruvananthapuram, India, 19–21 May 2023; pp. 1–4. [Google Scholar] [CrossRef]

- Karjol, P.; Ajay Kumar, M.; Ghosh, P.K. Speech Enhancement Using Multiple Deep Neural Networks. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 5049–5052. [Google Scholar] [CrossRef]

- Lu, X.; Tsao, Y.; Matsuda, S.; Hori, C. Speech enhancement based on deep denoising autoencoder. In Proceedings of the Interspeech 2013, Lyon, France, 25–29 August 2013; pp. 436–440. [Google Scholar] [CrossRef]

- Cohen, I. Noise spectrum estimation in adverse environments: Improved minima controlled recursive averaging. IEEE Trans. Speech Audio Process. 2003, 11, 466–475. [Google Scholar] [CrossRef]

- Fu, S.W.; Tsao, Y.; Lu, X. SNR-Aware Convolutional Neural Network Modeling for Speech Enhancement. In Proceedings of the Interspeech 2016, San Francisco, CA, USA, 8–12 September 2016; pp. 3768–3772. [Google Scholar] [CrossRef]

- Luo, Y.; Mesgarani, N. TaSNet: Time-Domain Audio Separation Network for Real-Time, Single-Channel Speech Separation. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 696–700. [Google Scholar] [CrossRef]

- Pang, J.; Li, H.; Jiang, T.; Wang, H.; Liao, X.; Luo, L.; Liu, H. A Dual-Channel End-to-End Speech Enhancement Method Using Complex Operations in the Time Domain. Appl. Sci. 2023, 13, 7698. [Google Scholar] [CrossRef]

- Fan, J.; Yang, J.; Zhang, X.; Yao, Y. Real-time single-channel speech enhancement based on causal attention mechanism. Appl. Acoust. 2022, 201, 109084. [Google Scholar] [CrossRef]

- Yang, L.; Liu, W.; Meng, R.; Lee, G.; Baek, S.; Moon, H.G. Fspen: An Ultra-Lightweight Network for Real Time Speech Enahncment. In Proceedings of the ICASSP 2024—2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 10671–10675. [Google Scholar] [CrossRef]

- Hu, Y.; Liu, Y.; Lv, S.; Zhang, S.; Wu, J.; Zhang, B.; Xie, L. DCCRN: Deep Complex Convolution Recurrent Network for Phase-Aware Speech Enhancement. In Proceedings of the Interspeech 2020, Shanghai, China, 25–29 October 2020; pp. 2472–2476. [Google Scholar] [CrossRef]

- Koh, H.I.; Na, S.; Kim, M.N. Speech Perception Improvement Algorithm Based on a Dual-Path Long Short-Term Memory Network. Bioengineering 2023, 10, 1325. [Google Scholar] [CrossRef] [PubMed]

- Nossier, S.A.; Wall, J.; Moniri, M.; Glackin, C.; Cannings, N. A Comparative Study of Time and Frequency Domain Approaches to Deep Learning based Speech Enhancement. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–8. [Google Scholar] [CrossRef]

- Zhang, Z.; Li, X.; Li, Y.; Dong, Y.; Wang, D.; Xiong, S. Neural Noise Embedding for End-To-End Speech Enhancement with Conditional Layer Normalization. In Proceedings of the ICASSP 2021—2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 7113–7117. [Google Scholar] [CrossRef]

- Yin, D.; Luo, C.; Xiong, Z.; Zeng, W. PHASEN: A Phase-and-Harmonics-Aware Speech Enhancement Network. Proc. Aaai Conf. Artif. Intell. 2020, 34, 9458–9465. [Google Scholar] [CrossRef]

- Zhao, H.; Zarar, S.; Tashev, I.; Lee, C.H. Convolutional-Recurrent Neural Networks for Speech Enhancement. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 2401–2405. [Google Scholar] [CrossRef]

- Graetzer, S.; Hopkins, C. Comparison of ideal mask-based speech enhancement algorithms for speech mixed with white noise at low mixture signal-to-noise ratios. J. Acoust. Soc. Am. 2022, 152, 3458–3470. [Google Scholar] [CrossRef] [PubMed]

- Routray, S.; Mao, Q. Phase sensitive masking-based single channel speech enhancement using conditional generative adversarial network. Comput. Speech Lang. 2022, 71, 101270. [Google Scholar] [CrossRef]

- Williamson, D.S.; Wang, Y.; Wang, D. Complex Ratio Masking for Monaural Speech Separation. IEEE/ACM Trans. Audio Speech Lang. Process. 2016, 24, 483492. [Google Scholar] [CrossRef] [PubMed]

- Tan, K.; Wang, D. A Convolutional Recurrent Neural Network for Real-Time Speech Enhancement. In Proceedings of the Interspeech 2018, Hyderabad, India, 2–6 September 2018; pp. 3229–3233. [Google Scholar] [CrossRef]

- Pascual, S.; Bonafonte, A.; Serrà, J. SEGAN: Speech Enhancement Generative Adversarial Network. In Proceedings of the Interspeech 2017, Stockholm, Sweden, 20–24 August 2017; pp. 3642–3646. [Google Scholar] [CrossRef]

- Chen, J.; Rao, W.; Wang, Z.; Lin, J.; Wu, Z.; Wang, Y.; Shang, S.; Meng, H. Inter-Subnet: Speech Enhancement with Subband Interaction. In Proceedings of the ICASSP 2023—2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5. [Google Scholar] [CrossRef]

- Hao, X.; Su, X.; Horaud, R.; Li, X. Fullsubnet: A Full-Band and Sub-Band Fusion Model for Real-Time Single-Channel Speech Enhancement. In Proceedings of the ICASSP 2021—2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 6633–6637. [Google Scholar] [CrossRef]

- Chen, J.; Wang, Z.; Tuo, D.; Wu, Z.; Kang, S.; Meng, H. FullSubNet+: Channel Attention Fullsubnet with Complex Spectrograms for Speech Enhancement. In Proceedings of the ICASSP 2022—2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; pp. 7857–7861. [Google Scholar] [CrossRef]

- Luo, Y.; Mesgarani, N. Conv-TasNet: Surpassing Ideal Time–Frequency Magnitude Masking for Speech Separation. IEEE/ACM Trans. Audio, Speech, Lang. Process. 2019, 27, 1256–1266. [Google Scholar] [CrossRef] [PubMed]

- Isik, U.; Giri, R.; Phansalkar, N.; Valin, J.M.; Helwani, K.; Krishnaswamy, A. PoCoNet: Better Speech Enhancement with Frequency-Positional Embeddings, Semi-Supervised Conversational Data, and Biased Loss. In Proceedings of the Interspeech 2020, Shanghai, China, 25–29 October 2020; pp. 2487–2491. [Google Scholar]

- Lv, S.; Hu, Y.; Wu, J.; Xie, L. DCCRN+: Channel-wise Subband DCCRN with SNR Estimation for Speech Enhancement. In Proceedings of the Interspeech 2021, Brno, Czech Republic, 30 August–3 September 2021; pp. 2816–2820. [Google Scholar]

- Choi, H.S.; Park, S.; Lee, J.H.; Heo, H.; Jeon, D.; Lee, K. Real-Time Denoising and Dereverberation wtih Tiny Recurrent U-Net. In Proceedings of the ICASSP 2021—2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 5789–5793. [Google Scholar] [CrossRef]

- Li, A.; Liu, W.; Zheng, C.; Fan, C.; Li, X. Two Heads are Better Than One: A Two-Stage Complex Spectral Mapping Approach for Monaural Speech Enhancement. IEEE/ACM Trans. Audio, Speech, Lang. Process. 2021, 29, 1829–1843. [Google Scholar] [CrossRef]

- Huber, P.J. Robust Estimation of a Location Parameter. Ann. Math. Stat. 1964, 35, 73–101. [Google Scholar] [CrossRef]

- Zeng, Y.; Konan, J.; Han, S.; Bick, D.; Yang, M.; Kumar, A.; Watanabe, S.; Raj, B. TAPLoss: A Temporal Acoustic Parameter Loss for Speech Enhancement. In Proceedings of the ICASSP 2023—2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5. [Google Scholar] [CrossRef]

- Charbonnier, P.; Blanc-Feraud, L.; Aubert, G.; Barlaud, M. Two deterministic half-quadratic regularization algorithms for computed imaging. In Proceedings of the International Conference on Image Processing, Austin, TX, USA, 13–16 November 1994; Volume 2, pp. 168–172. [Google Scholar] [CrossRef]

- ITU-T. Wideband Extension to Recommendation P.862 for the Assessment of Wideband Telephone Networks and Speech Codecs; Technical Report P.862.2; International Telecommunication Union: Geneva, Switzerland, 2005. [Google Scholar]

- ITU-T. Perceptual Evaluation of Speech Quality (PESQ), an Objective Method for End-to-End Speech Quality Assessment of Narrowband Telephone Networks and Speech Codecs; Technical Report P.862; International Telecommunication Union: Geneva, Switzerland, 2001. [Google Scholar]

- Taal, C.H.; Hendriks, R.C.; Heusdens, R.; Jensen, J. An Algorithm for Intelligibility Prediction of Time–Frequency Weighted Noisy Speech. IEEE Trans. Audio Speech Lang. Process. 2011, 19, 2125–2136. [Google Scholar] [CrossRef]

- Hsieh, T.A.; Yu, C.; Fu, S.W.; Lu, X.; Tsao, Y. Improving Perceptual Quality by Phone-Fortified Perceptual Loss Using Wasserstein Distance for Speech Enhancement. In Proceedings of the Interspeech 2021, Brno, Czech Republic, 30 August–3 September 2021; pp. 196–200. [Google Scholar] [CrossRef]

- Valentini-Botinhao, C.; Wang, X.; Takaki, S.; Yamagishi, J. Investigating RNN-based speech enhancement methods for noise-robust Text-to-Speech. In Proceedings of the 9th ISCA Workshop on Speech Synthesis Workshop (SSW 9), Sunnyvale, CA, USA, 13–15 September 2016; pp. 146–152. [Google Scholar] [CrossRef]

- Thiemann, J.; Ito, N.; Vincent, E. The Diverse Environments Multi-channel Acoustic Noise Database (DEMAND): A database of multichannel environmental noise recordings. In Proceedings of the 21st International Congress on Acoustics, Montreal, QC, Canada, 2–7 June 2013; pp. 1–6. [Google Scholar]

- Panayotov, V.; Chen, G.; Povey, D.; Khudanpur, S. Librispeech: An ASR corpus based on public domain audio books. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, QLD, Australia, 19–24 April 2015; pp. 5206–5210. [Google Scholar] [CrossRef]

- Roux, J.L.; Wisdom, S.; Erdogan, H.; Hershey, J.R. SDR—Half-baked or Well Done? In Proceedings of the ICASSP 2019—2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 626–630. [Google Scholar] [CrossRef]

- Hu, Y.; Loizou, P.C. Evaluation of Objective Quality Measures for Speech Enhancement. IEEE Trans. Audio Speech Lang. Process. 2008, 16, 229–238. [Google Scholar] [CrossRef]

- Cao, R.; Abdulatif, S.; Yang, B. CMGAN: Conformer-based Metric GAN for Speech Enhancement. In Proceedings of the Interspeech 2022, Incheon, Republic of Korea, 18–22 September 2022; pp. 936–940. [Google Scholar] [CrossRef]

- Lu, Y.X.; Ai, Y.; Ling, Z.H. MP-SENet: A Speech Enhancement Model with Parallel Denoising of Magnitude and Phase Spectra. In Proceedings of the Interspeech 2023, Dublin, Ireland, 20–24 August 2023; pp. 3834–3838. [Google Scholar] [CrossRef]

- Park, H.J.; Kang, B.H.; Shin, W.; Kim, J.S.; Han, S.W. MANNER: Multi-View Attention Network For Noise Erasure. In Proceedings of the ICASSP 2022—2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; pp. 7842–7846. [Google Scholar] [CrossRef]

- Kim, E.; Seo, H. SE-Conformer: Time-Domain Speech Enhancement Using Conformer. In Proceedings of the Interspeech 2021, Brno, Czech Republic, 30 August–3 September 2021; pp. 2736–2740. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).