Abstract

Massive open online courses (MOOCs) have exploded in popularity; course reviews are important sources for exploring learners’ perceptions about different factors associated with course design and implementation. This study aims to investigate the possibility of automatic classification for the semantic content of MOOC course reviews to understand factors that can predict learners’ satisfaction and their perceptions of these factors. To do this, this study employs a quantitative research methodology based on sentiment analysis and deep learning. Learners’ review data from Class Central are analyzed to automatically identify the key factors related to course design and implementation and the learners’ perceptions of these factors. A total of 186,738 review sentences associated with 13 subject areas are analyzed, and consequently, seven course factors that learners frequently mentioned are found. These factors include: “Platforms and tools”, “Course quality”, “Learning resources”, “Instructor”, “Relationship”, “Process”, and “Assessment”. Subsequently, each factor is assigned a sentimental value using lexicon-driven methodologies, and the topics that can influence learners’ learning experiences the most are decided. In addition, learners’ perceptions across different topics and subjects are explored and discussed. The findings of this study contribute to helping MOOC instructors in tailoring course design and implementation to bring more satisfactory learning experiences for learners.

1. Introduction

Massive open online courses (MOOCs) have received intensive attention since their first appearance in 2007 [1]. The popularity of MOOCs is promoted by several factors. First, the broad accessibility of the Internet makes MOOCs available for global learners [2]. Second, MOOCs are cost-efficient for everyone, particularly learners in developing countries and/or regions [3]. In addition, the diversity of MOOC resources means that there are courses to suit the taste and needs of different learners [4]. The popularity of MOOCs thus prompts many educational institutions to produce MOOCs.

However, the development and implementation of a MOOC are not cheap; thus, there is a need to justify the benefits [5]. As a result, researchers and instructors have gone to great effort to understand MOOC success and the factors that contribute to their success [6].

Learners’ perceptions of and satisfaction with a MOOC are factors that are increasingly adopted for measuring MOOC success. Traditional ways of understanding learners’ perceptions of a MOOC may use questionnaire survey data (e.g., [7,8,9]). However, this approach yields only limited information, and the analysis results depend heavily on the questionnaire design. Additionally, it usually takes a long time to collect all necessary data, and thus a timely and dynamic analysis is impossible.

Nowadays, many MOOC providers and platforms have integrated interactive technologies to allow learners to freely express their perceptions of and satisfaction with different aspects concerning MOOC design and implementation. This source of data is essential for tracking MOOCs’ performance; thus, it is crucial to exploit rich information to allow a timely, dynamic, and automatic understanding of a MOOC’s performance in satisfying learners [10].

Previous studies on MOOC data analysis for understanding learner satisfaction focus mainly on learners’ demographics, personal characteristics, and disposition (e.g., [11,12,13]). Research on course review data analysis is primarily conducted based on qualitative analysis methodologies. Owing to the continually growing number of learner-generated reviews, it would be very time-consuming and labor-intensive to detect topics from learner-produced review data through manual evaluation [14]. Thus, alternative analysis methodologies based on natural language processing (NLP) and machine learning should be considered. Although there are studies that have touched upon topic mining, learner sentiment detection, and topic classification in the context of MOOC review analysis (e.g., [15,16,17,18,19,20]), there is a lack of comprehensive and automated course review data analyses from topical and sentimental perspectives, and especially a lack of studies combining deep learning and sentiment analysis methodologies. Additionally, since learners’ satisfaction can vary across subject areas due to differences in study objectives, different modes of assessment, etc. [21], a comparison of learner satisfaction across different subjects is needed.

To that end, the present study aims to understand learner satisfaction with MOOCs based on course review data analysis using sentiment analysis and deep neural networks, with a particular focus on the factors concerning MOOC design and implementation that can lead to learner satisfaction. More specifically, the factors that concern learners and the sentiment scores on course quality, learning resources, instructors, relationship, assessment, process, and platforms and tools are investigated. We also examine the differences in learners’ satisfaction with the identified factors across different subject areas. The present study is conducted to answer the following three research questions (RQs):

RQ1: Can deep learning automatically identify factors that can predict learner satisfaction in MOOCs?

RQ2: What factors are frequently mentioned by learners?

RQ3: How do learners’ perceptions of the identified factors differ across subjects?

The findings of this study are helpful for MOOC educators and instructors during their design and implementation of a MOOC with a particular focus on improving learners’ satisfaction. With a better understanding of learners’ perceptions of different factors, instructors can tailor their course designs to produce MOOCs that can bring more satisfactory learning experiences for learners.

2. Literature Review

2.1. MOOCs

By providing free online courses, MOOCs offer an openness that enables higher education to be highly accessible worldwide [22,23]. MOOCs are an essential channel to promote the practices of ubiquitous and blended learning that have been popularly adopted in higher education settings (e.g., [24,25]). Despite the constantly growing number of MOOC learners, there is a low retention rate in MOOCs; thus, more research into the factors that can contribute to MOOC success is needed [23,26,27,28]. Based on the analysis of a Stanford MOOC dataset, Hewawalpita et al. [29] found that many MOOC learners did not complete all course learning activities. Watted and Barak [30] found that personal interest, eagerness for self-promotion, and gamification features contributed to learners’ intention to complete a MOOC.

According to Milligan [31], “understanding the nature of learners and their engagement is critical to the success of any online education provision, especially those MOOCs where there is an expectation that the learners should self-motivate and self-direct their learning” (p. 1882). Similarly, Hone and El Said [32] indicated that more studies should be conducted to exploit successful MOOC design and implementation to ensure a high level of course completion.

2.2. Understanding Learners’ Satisfaction with MOOCs

Satisfaction, which shows learners’ perceptions of their learning experiences, is a crucial psychological factor that affects learners’ learning [33]. According to Hew et al. [34], satisfaction is significantly associated with the perceived quality of instruction in conventional face-to-face classroom learning and online education [35,36,37]. In recent years, the significance of learner satisfaction for measuring MOOC success has been increasingly recognized by educators and researchers. For example, Rabin et al. [38] suggest that learner satisfaction is a more appropriate measure of MOOC success, as it primarily focuses on learners’ perceptions of learning experiences. Rabin et al. also claimed that because of different learning goals held by learners, MOOC success ought to be assessed by student-oriented indicators such as satisfaction, rather than outdated indicators such as dropout rates. In other words, when a learner does not intend to complete a MOOC, the completion rate as a success measure seems inappropriate. In addition, when more learners are satisfied with MOOCs, more newcomers will enroll and participate in MOOCs.

2.3. Research on MOOC Learner Satisfaction Based on Course Review Data Analysis

In analyzing the course review data regarding learners’ satisfaction/dissatisfaction with their enrolled course [39], most studies have adopted qualitative manual coding methodologies (e.g., [40,41]). For instance, by qualitatively analyzing 4466 course reviews, ref. [41] recognized seven factors that contributed to learner engagement, including “problem-centric learning, active learning supported by timely feedback, course resources that cater to participants’ learning needs or preferences, and instructor attributes such as enthusiasm or humor” (p. 1). However, the reliability of the results derived from qualitative analysis methodologies depends heavily on analyst expertise. Furthermore, as manual data coding is labor-intensive, only a small dataset can be investigated, making it difficult to deal with the constantly increasing number of course reviews.

With the increasing availability of “big data” in MOOCs alongside the recent trend of applying machine learning and NLP techniques for educational purposes, there has been a rapid growth in studies that adopt text mining and machine learning to gain insight into the determinants of learner satisfaction based on course review data [42,43]. For instance, ref. [34] utilized five supervised machine learning techniques to classify a random sample of 8274 MOOC review sentences into six major topical categories (i.e., structure, video, instructors, content and resources, interaction, and assessment) and found that the gradient boosting trees model achieved excellent classification performance. By training machine learning classifiers based on K-nearest neighbors, gradient boosting trees, support vector machines, logistic regression, and naive Bayes for the analysis of 24,000 reflective sentences produced by 6000 MOOC learners, ref. [44] found that gradient boosting trees performed satisfactorily in understanding learners’ perceptions. However, refs. [34,44] adopted only conventional machine learning; deep neural networks, which are widely accepted as preferred solutions for various NLP tasks, were not considered. In addition, a comprehensive analysis of course review data from the perspectives of both topics and sentiments in an automated manner is lacking.

To capitalize on the advantages of NLP-oriented text-mining methodologies, the present study incorporates different analysis methods such as TextRCNN and sentiment analysis to conduct a more thorough analysis of the textual content of learner review data. By uncovering learners’ focal points and sentiments based on course review data, we aim to obtain an in-depth understanding of learners’ perceptions of their learning experiences in MOOCs.

3. Methods

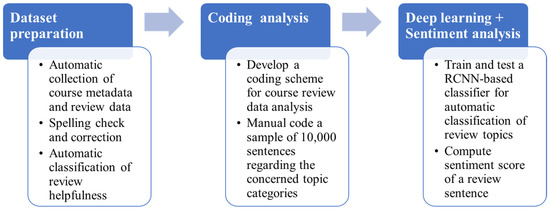

Figure 1 displays the architectural schema of data collection and analysis methodologies. A more detailed description of each major step (dataset preparation, coding scheme development, data coding procedure, review topic classification, and review sentiment analysis) is given in the following sections.

Figure 1.

Architectural schema of data collection and analysis methodologies.

3.1. Dataset Preparation

Course metadata and review data from Class Central were crawled for further processing. After excluding duplicated MOOCs, MOOCs with fewer than 20 review comments (https://www.classcentral.com/help/highest-rated-online-courses accessed on 21 June 2022), and reviews not written in English, 102,184 reviews remained for spell check and correction using TextBlob (https://textblob.readthedocs.io/en/dev/ accessed on 21 June 2022). According to Park and Nicolau [45], online reviews can be divided into helpful and unhelpful reviews. Unhelpful reviews contain limited helpful information, thus contributing little to our understanding of customer satisfaction. Thus, there is a need to distinguish helpful reviews from unhelpful ones before conducting a formal analysis [46]. This study thus treated the top 80% of reviews ranked by helpful votes as helpful reviews. Then, naive Bayes was adopted as a classification model following the suggestion of Lubis et al. [47,48], with TF-IDF (term frequency–inverse document frequency) vectors constructed based on terms in the texts being used as inputs for classifier training and testing. A total of 99,779 helpful reviews were identified and used for further analysis.
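The helpfulness filter described above can be illustrated with a minimal sketch. It is not the study's implementation: the tokenizer, the smoothed IDF formula, the Laplace smoothing constant `alpha`, and the four toy reviews are all assumptions made for illustration. In practice a library such as scikit-learn (`TfidfVectorizer`, `MultinomialNB`) would typically be used instead of hand-written code.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase and split on whitespace (a deliberately simple tokenizer)."""
    return text.lower().split()


def fit_idf(docs):
    """Smoothed inverse document frequency for every term in the corpus."""
    n_docs = len(docs)
    doc_freq = Counter(term for doc in docs for term in set(tokenize(doc)))
    return {t: math.log((1 + n_docs) / (1 + df)) + 1 for t, df in doc_freq.items()}


def tfidf(doc, idf):
    """TF-IDF weights for one document; terms unseen in training are ignored."""
    counts = Counter(t for t in tokenize(doc) if t in idf)
    return {t: c * idf[t] for t, c in counts.items()}


def train_nb(docs, labels, idf, alpha=1.0):
    """Multinomial naive Bayes on TF-IDF weights with Laplace smoothing."""
    class_docs = Counter(labels)
    weight = defaultdict(lambda: defaultdict(float))
    for doc, label in zip(docs, labels):
        for term, w in tfidf(doc, idf).items():
            weight[label][term] += w
    model = {}
    for label, n in class_docs.items():
        total = sum(weight[label].values()) + alpha * len(idf)
        log_like = {t: math.log((weight[label][t] + alpha) / total) for t in idf}
        model[label] = (math.log(n / len(docs)), log_like)
    return model


def predict(doc, model, idf):
    """Return the label with the highest posterior log-probability."""
    feats = tfidf(doc, idf)

    def score(label):
        log_prior, log_like = model[label]
        return log_prior + sum(w * log_like[t] for t, w in feats.items())

    return max(model, key=score)


# Toy, made-up reviews; the labels stand in for vote-based helpfulness labels.
train_docs = [
    "the lectures explain recursion clearly and the quizzes test each concept",
    "assignments were graded quickly and the instructor answered forum questions",
    "good",
    "nice course",
]
train_labels = ["helpful", "helpful", "unhelpful", "unhelpful"]

idf = fit_idf(train_docs)
model = train_nb(train_docs, train_labels, idf)
print(predict("the instructor explained the assignments clearly", model, idf))
print(predict("nice", model, idf))
```

With these toy data, the longer, content-bearing review is assigned to the helpful class and the one-word review to the unhelpful class.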

3.2. Coding Scheme

This study develops a coding scheme for course review data analysis based on Moore’s theory of transactional distance [49]. For example, Hew et al. [34] used six variables espoused in transactional distance theory to facilitate the understanding of learners’ satisfaction with MOOCs, including course structure, videos, instructors, course content and learning resources, interaction, and assessment. By taking into consideration the factors in previous literature, this study designs a coding scheme with categories “Platforms and tools”, “Course quality”, “Learning resources”, “Instructor”, “Relationship”, “Process”, and “Assessment”. The specific descriptions and examples of different categories are given in Table 1.

Table 1.

Coding scheme for course review data.

3.3. Coding Procedure

This study used sentences as the unit of analysis. Prior to training and testing the automatic classifier, there was a need to produce an “instructional” dataset. Thus, a researcher manually coded a sample of 10,000 sentences based on the seven categories. For example, the sentence “the lecture material aligns well with the textbook he has written for the course, as well as the think python textbook” was coded as “Learning resources” because it relates mainly to lecture materials and textbooks. Another sentence, “the instructor was more involved than I have experienced in many MOOCs, and this was much appreciated and enhanced the learning experience”, was coded as “Instructor” since it emphasizes instructor involvement. A third sentence, “the assignment was quite difficult so we could maintain the level”, was coded as “Assessment” because it focuses on course assignment. To ensure coding reliability, 200 out of the 10,000 sentences were coded by another researcher. As the agreement between the two researchers reached above 90%, only the codes showing inconsistencies were revised after discussion.
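The agreement check between the two coders can be sketched as follows. Percent agreement is the quantity reported above; Cohen's kappa is added here only as a common chance-corrected companion and is not reported in the study. The ten example codes are hypothetical.

```python
from collections import Counter


def percent_agreement(codes_a, codes_b):
    """Share of items that both coders assigned to the same category."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("code lists must be non-empty and of equal length")
    matches = sum(a == b for a, b in zip(codes_a, codes_b))
    return matches / len(codes_a)


def cohens_kappa(codes_a, codes_b):
    """Observed agreement corrected for the agreement expected by chance."""
    n = len(codes_a)
    observed = percent_agreement(codes_a, codes_b)
    freq_a, freq_b = Counter(codes_a), Counter(codes_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    return (observed - expected) / (1 - expected)


# Hypothetical codes for ten sentences (the study double-coded 200).
coder_1 = ["Instructor", "Assessment", "Course quality", "Course quality",
           "Learning resources", "Instructor", "Process", "Course quality",
           "Assessment", "Relationship"]
coder_2 = ["Instructor", "Assessment", "Course quality", "Course quality",
           "Learning resources", "Instructor", "Course quality", "Course quality",
           "Assessment", "Relationship"]

print(f"percent agreement: {percent_agreement(coder_1, coder_2):.2f}")
print(f"Cohen's kappa:     {cohens_kappa(coder_1, coder_2):.2f}")
```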

3.4. Automatic Classification of Review Data

The training and testing of the classifier included the following steps. First, each sentence was retrieved from the textual review content as the model input alongside the topical category it referred to. Second, sentences were split into tokens, with punctuation and stop words removed. Subsequently, the corpus was randomly split into training, validation, and test datasets.
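The preprocessing and splitting steps above can be sketched as follows. The stop-word list, the 80/10/10 split ratio, and the random seed are assumptions for illustration; the source does not state which values were used.

```python
import random
import re

# A tiny illustrative stop-word list; a real pipeline would use a fuller one.
STOP_WORDS = {"a", "an", "and", "the", "is", "was", "were", "of", "to", "in", "it", "this"}


def preprocess(sentence):
    """Lowercase, strip punctuation, tokenize, and drop stop words."""
    tokens = re.findall(r"[a-z0-9']+", sentence.lower())
    return [t for t in tokens if t not in STOP_WORDS]


def split_corpus(examples, train=0.8, val=0.1, seed=42):
    """Shuffle once, then cut into training, validation, and test partitions."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * val)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])


# Ten placeholder (tokens, label) pairs, used only to show the split sizes.
labelled = [(preprocess(f"Sentence number {i} was great!"), "Course quality")
            for i in range(10)]
train_set, val_set, test_set = split_corpus(labelled)

print(preprocess("The instructor was more involved than I have experienced in many MOOCs."))
print(len(train_set), len(val_set), len(test_set))
```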

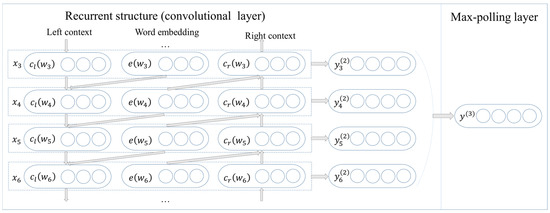

The present study adopted TextRCNN (recurrent convolutional neural network) for classifier training and testing. TextRCNN was developed by Lai et al. [50] as a deep neural model to capture text semantics. RCNN exploits the capability of a recurrent structure to capture contextual information and learn text feature representations. RCNN’s structure is presented in Figure 2. Following the notation of Lai et al. [50], RCNN defines $w_{i-1}$ and $w_{i+1}$ as the previous and next words of $w_i$. The subscript of $c$ is $l$ or $r$ to represent left or right, and the word index is $i-1$, $i$, $i+1$, and so on. $c_l(w_i)$ or $c_r(w_i)$ represents the left or right context of the word $w_i$ as a dense vector with real-valued elements. $f$ denotes a non-linear activation function. $W^{(l)}$ or $W^{(r)}$ represents a matrix for transforming a hidden layer into the subsequent one. $W^{(sl)}$ or $W^{(sr)}$ represents a matrix for combining the semantics of the present word with the subsequent word’s left or right context. $e(w_i)$ denotes the word embedding of $w_i$ as a dense vector with real-valued elements. By using Equations (1) and (2), the left- and right-side context vectors $c_l(w_i)$ and $c_r(w_i)$ of the word $w_i$ can be obtained. By using Equation (3), the representation $x_i$ of the word $w_i$ becomes the concatenation of the left context vector, the word embedding, and the right context vector. The forward and backward recurrent neural networks (RNNs) are adopted for obtaining the representations of individual words’ forward and backward contexts. In Equation (4), $b^{(2)}$ denotes the bias vector. A linear transformation and a $\tanh$ activation function are applied to $x_i$ together. The result $y_i^{(2)}$ is then obtained and sent to the following layer. In the max pooling layer, a single representation is obtained from the representations of all words by using Equation (5), where $\max$ represents an element-wise function.

Figure 2.

The main structure of RCNN.
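Equations (1)–(5) are not reproduced in this text. The following is a reconstruction based on the formulation of Lai et al. [50], offered as a sketch rather than the exact typesetting of the original: $c_l(w_i)$ and $c_r(w_i)$ are the left and right context vectors of word $w_i$, $e(w_i)$ is its embedding, $f$ is a non-linear activation function, and $n$ (the number of words in the text) is a symbol assumed here.

```latex
\begin{align}
c_l(w_i) &= f\left(W^{(l)} c_l(w_{i-1}) + W^{(sl)} e(w_{i-1})\right) \tag{1}\\
c_r(w_i) &= f\left(W^{(r)} c_r(w_{i+1}) + W^{(sr)} e(w_{i+1})\right) \tag{2}\\
x_i &= \left[c_l(w_i);\, e(w_i);\, c_r(w_i)\right] \tag{3}\\
y_i^{(2)} &= \tanh\left(W^{(2)} x_i + b^{(2)}\right) \tag{4}\\
y^{(3)} &= \max_{i=1}^{n} y_i^{(2)} \tag{5}
\end{align}
```

In Equation (5), the maximum is taken element by element over all word positions, so $y^{(3)}$ has the same dimension as each $y_i^{(2)}$ regardless of the sentence length.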

To measure how the trained classifier performs, precision, recall, and F1 scores were adopted [51], as shown in Equations (6)–(8). The classifier was trained for 100 epochs with a batch size of 64; categorical cross-entropy was utilized for loss computation.
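Equations (6)–(8) are not reproduced in this text, so the standard definitions are assumed in the sketch below: precision is TP / (TP + FP), recall is TP / (TP + FN), and F1 is the harmonic mean of the two, where TP, FP, and FN count true positives, false positives, and false negatives for one category. The label lists are made up.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def precision_recall_f1(true_labels, predicted_labels, category):
    """Per-category precision, recall, and F1 from two parallel label lists."""
    pairs = list(zip(true_labels, predicted_labels))
    tp = sum(t == category and p == category for t, p in pairs)
    fp = sum(t != category and p == category for t, p in pairs)
    fn = sum(t == category and p != category for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, f1_score(precision, recall)


# Made-up labels for six sentences, purely to exercise the functions.
gold = ["Instructor", "Instructor", "Assessment", "Process", "Instructor", "Assessment"]
pred = ["Instructor", "Assessment", "Assessment", "Instructor", "Instructor", "Assessment"]
print(precision_recall_f1(gold, pred, "Instructor"))

# Consistency check against the values reported for "Course quality".
print(round(100 * f1_score(0.7646, 0.7541), 2))
```

Applied to the precision and recall reported for “Course quality” in Section 4.1 (76.46% and 75.41%), `f1_score` gives 75.93%, which matches the reported F1 score.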

3.5. Sentiment Analysis of Review Data

Sentiment analysis is a “field of study that analyzes people’s opinions, sentiments, evaluations, appraisals, attitudes, and emotions towards entities such as products, services, organizations, individuals, issues, events, topics, and their attributes” (p. 7) [52]. In the context of teaching and learning, the understanding of MOOC learners’ positive and negative sentiments helps instructors better understand learners’ needs and satisfaction. The present study calculated learners’ sentimental values for each sentence using the syuzhet package, with four sentiment lexicons being considered, including “syuzhet”, “afinn”, “bing”, and “nrc”. To be specific, the “syuzhet” lexicon is composed of 10,748 words in relation to a sentimental value ranging between −1 (negative) and 1 (positive), where 7161 negative words dominate the whole corpus. The “afinn” lexicon consists of a list of 2477 Internet slang and obscene words in English to indicate semantic orientation ranging from −5 (negative) to 5 (positive). The “bing” lexicon includes 2006 and 4783 positive and negative words. Table 2 shows the details of the “syuzhet”, “afinn”, and “bing” lexicons. The “nrc” dictionary proposed by Mohammad and Turney [53] differs from the above three lexicons because it detects eight types of emotions and the associated valences instead of just reporting positive or negative words. The “nrc” lexicon comprises 13,889 words distributed among the different categories, as shown in Table 3.

Table 2.

Details about the “syuzhet”, “afinn”, and “bing” lexicons.

Table 3.

Words in the “nrc” lexicon.

Considering the differences between the four lexicons, we also considered the average value of the sentiment scores calculated based on the four lexicons. A positive sentiment about the instructor could be “the teachers are wonderful and are extremely suppurative”. A negative sentiment about instructors is “the problem is that the professors seem to talk to themselves”. A positive sentiment about assessment could be “the exercise is useful, and the code has some real-world applications, but I would have gotten more out of it if I was able to write some of the segments myself”. A negative sentiment about assessment is “you can’t submit quires to see if you got them right and all your coming problems are marked wrong”.
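The lexicon-driven scoring and the averaging across lexicons can be sketched as follows. The study used the syuzhet package in R; the Python below is an illustration only. The lexicon entries are invented, and summing word values per sentence is an assumption about how the scores are formed.

```python
import re

# Toy lexicons with invented entries; the real "syuzhet", "afinn", "bing",
# and "nrc" lexicons are far larger and ship with the R syuzhet package.
TOY_LEXICONS = {
    "syuzhet": {"wonderful": 1.0, "useful": 0.5, "problem": -0.75, "wrong": -0.75},
    "afinn": {"wonderful": 4, "useful": 2, "problem": -2, "wrong": -2},
    "bing": {"wonderful": 1, "useful": 1, "problem": -1, "wrong": -1},
    "nrc": {"wonderful": 1, "useful": 1, "problem": -1, "wrong": -1},
}


def sentence_score(sentence, lexicon):
    """Sum the lexicon values of the words in a sentence (unknown words count 0)."""
    words = re.findall(r"[a-z']+", sentence.lower())
    return sum(lexicon.get(word, 0) for word in words)


def all_scores(sentence, lexicons=TOY_LEXICONS):
    """Score one sentence under every lexicon and add the mean across lexicons."""
    scores = {name: sentence_score(sentence, lex) for name, lex in lexicons.items()}
    scores["average"] = sum(scores.values()) / len(lexicons)
    return scores


print(all_scores("the teachers are wonderful"))
print(all_scores("the problem is that the professors seem to talk to themselves"))
```

Because “afinn” spans −5 to 5 while “syuzhet” spans −1 to 1, an unweighted mean is dominated by the wider scale. Rescaling each lexicon before averaging is one option, although the source does not say whether this was done.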

4. Results

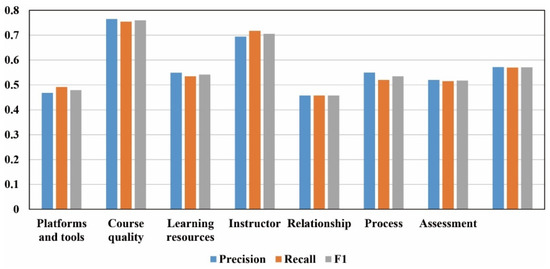

4.1. Performance of the Classification Model

The RCNN classifier’s performance across different categories is presented in Figure 3. The precision values for “Course quality”, “Instructor”, and “Process” were 76.46%, 69.37%, and 54.93%, respectively. Regarding recall, the top three categories were “Course quality”, “Instructor”, and “Learning resources”, with values of 75.41%, 71.73%, and 53.45%, respectively. Regarding the F1 score, “Course quality”, “Instructor”, and “Learning resources” achieved the highest scores of 75.93%, 70.53%, and 54.15%, respectively. For “Course quality” and “Instructor”, 75.41% and 71.73% of records, respectively, were classified accurately. To sum up, the RCNN classifier could identify the topical categories of course reviews for categories such as “Course quality” and “Instructor”. However, it performed relatively poorly in classifying course reviews associated with categories such as “Platforms and tools” and “Relationship”.

Figure 3.

Performance of RCNN based on precision, recall, and F1-score.

The trained RCNN model was used to predict labels for unannotated sentences. Examples of this automatic prediction process are as follows. When the sentence “the professors made the lessons lighthearted and understandable” was input into the RCNN classifier, the classifier analyzed its semantic content and predicted confidence levels for all categories. After the analysis, the label with the highest confidence was identified as the predicted category of the input sentence. In this case, the sentence was assigned to the category “Instructor”, which achieved a probability of 1.0. Similarly, the prediction result for the sentence “a lot of the exercises in this course are about mathematical induction, which is an extremely important skill in university mathematics” indicates its relevance to the category “Assessment” with a probability of 1.0. The prediction result for another sentence, “the only thing that I could not use was the voice recorder”, shows its relevance to the category “Platforms and tools” with a probability of 1.0. By using the RCNN classifier, a final data corpus with complete labels for each sentence was obtained. The distribution of different topics in the data corpus is presented in Table 4. The prevalence of different topics varied considerably. For example, the category “Course quality” had the most sentences (i.e., 105,130, with a proportion of 56.30%), whereas “Process” had the fewest (i.e., 3165, 1.69%).

Table 4.

Distribution of different categories.
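The prediction step, in which the label with the highest confidence is selected, can be sketched as follows. A softmax output layer is assumed here (the source only states that categorical cross-entropy was used), and the logits are hypothetical values rather than outputs of the trained model.

```python
import math

CATEGORIES = ["Platforms and tools", "Course quality", "Learning resources",
              "Instructor", "Relationship", "Process", "Assessment"]


def softmax(logits):
    """Convert raw scores to probabilities; subtracting the max avoids overflow."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_category(logits, categories=CATEGORIES):
    """Return the most probable category and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return categories[best], probs[best]


# Hypothetical logits for one sentence; a trained network would produce these.
label, confidence = predict_category([-4.0, 1.5, -2.0, 14.0, -3.0, -5.0, 0.5])
print(label, round(confidence, 2))
```

Under this assumption, a reported probability of 1.0 is a rounded value: the softmax output approaches 1 when one logit is much larger than the others, but it never reaches it exactly.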

4.2. Learners’ Perceptions of Different Factors

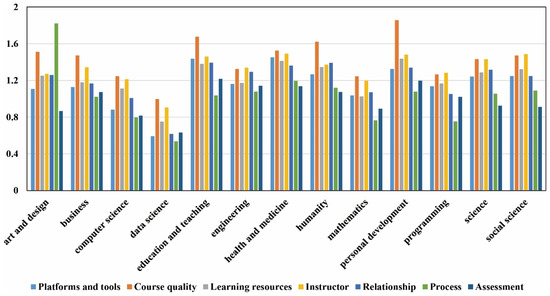

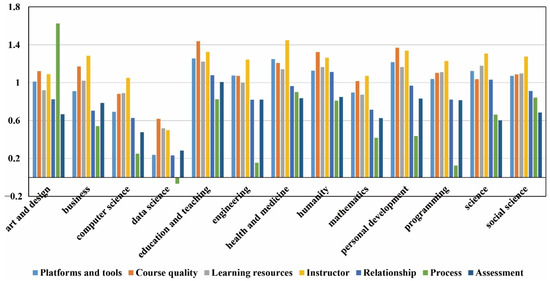

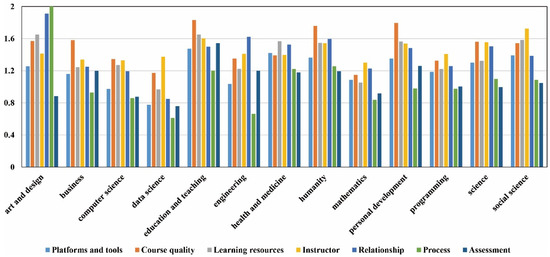

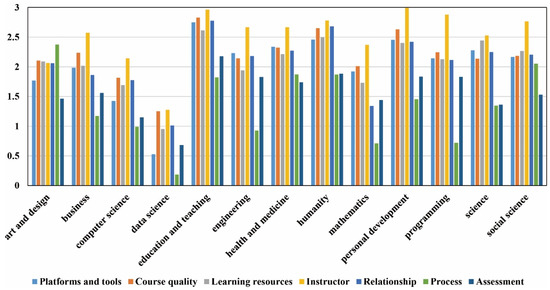

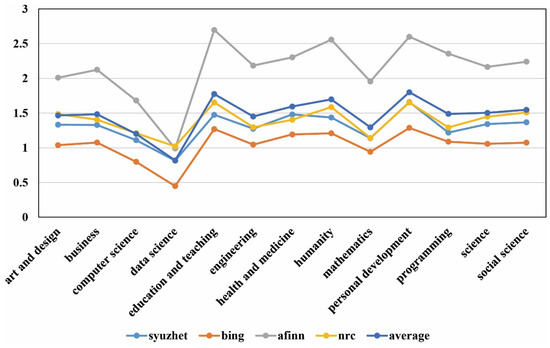

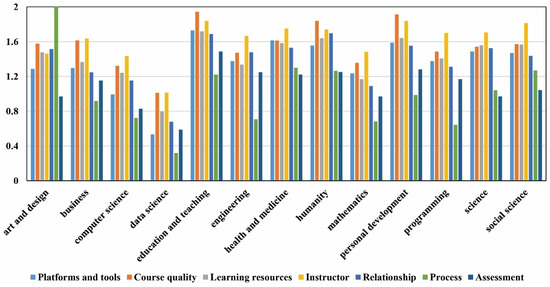

Learners’ satisfaction with different factors across different subjects is determined based on sentiment scores obtained via sentiment analysis. Figure 4 shows the averaged sentiment scores calculated using “syuzhet” for each topic in different subjects. Figure 5 shows the averaged sentiment scores computed using “bing” for each topic in different subjects. Figure 6 shows the averaged sentiment scores computed using “afinn” for each topic in different subjects. Figure 7 shows the averaged sentiment scores computed using “nrc” for each topic in different subjects. From the results, we can see that the distribution patterns for the four figures were similar. For example, for the four types of calculation methods, learners tended to show the lowest level of satisfaction towards almost all of the different factors in their learning of MOOCs related to Data Science. Additionally, for MOOCs related to the fields of Humanities and Social Sciences (for example, Education and Teaching, Humanities, Personal Development, and Social Science), learners tended to show a higher level of satisfaction towards the different factors in comparison to MOOCs related to the fields of Science and Technology (for example, Programming, Mathematics, and Data Science). Such a finding is validated by Figure 8, which shows the averaged overall sentiment scores calculated based on different methodologies for each subject. For example, regarding the subject of Data Science, the overall sentiment scores seem to be low based on different calculation methods. In contrast, for subjects such as Education and Teaching, Humanities, and Personal Development, the overall sentiment scores seem to be high based on the different calculation methods.

Figure 4.

Averaged sentiment scores for each topic in different subjects (calculated based on “syuzhet”).

Figure 5.

Averaged sentiment scores for each topic in different subjects (calculated based on “bing”).

Figure 6.

Averaged sentiment scores for each topic in different subjects (calculated based on “afinn”).

Figure 7.

Averaged sentiment scores for each topic in different subjects (calculated based on “nrc”).

Figure 8.

Averaged overall sentiment scores for each subject (calculated based on different methodologies).
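The averaged scores plotted in Figures 4–8 amount to a grouped mean over sentence-level sentiment scores. A minimal sketch of that aggregation follows; the records are invented and serve only to show the computation.

```python
from collections import defaultdict
from statistics import mean


def mean_scores(records):
    """Average sentiment per (subject, topic) pair.

    Each record is a (subject, topic, score) tuple for one review sentence.
    """
    grouped = defaultdict(list)
    for subject, topic, score in records:
        grouped[(subject, topic)].append(score)
    return {key: mean(values) for key, values in grouped.items()}


# Invented sentence-level scores, used only to show the aggregation.
records = [
    ("Data Science", "Process", -0.5),
    ("Data Science", "Process", 0.0),
    ("Data Science", "Instructor", 0.75),
    ("Humanities", "Instructor", 1.0),
    ("Humanities", "Instructor", 0.5),
]

for (subject, topic), value in sorted(mean_scores(records).items()):
    print(f"{subject:<14}{topic:<12}{value:+.2f}")
```

Grouping by subject alone, or by topic alone, gives the overall scores per subject and per topic in the same way.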

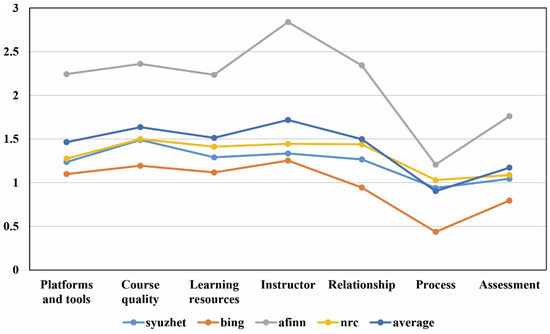

We also obtained the averaged sentiment scores for different subjects, which were calculated based on the averaged sentiment scores obtained based on “syuzhet”, “bing”, “afinn”, and “nrc”. The results are presented in Figure 9, and do not vary much from the previous analysis. When looking at individual factors, there are some interesting results worth noting. For example, in terms of “Process”, learners in almost all subjects showed a low level of satisfaction, especially for learners participating in courses related to Data Science. The low satisfaction towards “Process” is validated by Figure 10, where the overall sentiment scores for “Process” seem to be low based on the different calculation methods. Regarding “Instructor”, learners in almost all subjects showed a high level of satisfaction. The high satisfaction towards “Instructor” is validated by Figure 10, where the overall sentiment scores for “Instructor” seem to be high based on different calculation methods.

Figure 9.

Averaged scores calculated based on averaged sentiment scores of “syuzhet”, “bing”, “afinn”, and “nrc”.

Figure 10.

Averaged overall sentiment scores for each topic (calculated based on different methodologies).

5. Discussion

5.1. Can Deep Learning Automatically Identify Factors That Can Predict Learner Satisfaction in MOOCs?

To answer RQ1, this study investigates the potential of context classification of course review data using deep neural networks. To achieve this, it is essential that the classifier is capable of understanding semantic content. As the semantics of natural language are complicated, alongside the high dimensionality of text representations, conventional machine learning approaches usually fail to learn deep semantic information. Thus, the present study adopts RCNN to combine the unique capabilities of the convolutional neural network (CNN) and the RNN to capture and learn the deep relationships within 99,779 helpful MOOC reviews. Before classifier training, 10,000 randomly selected sentences from these reviews were coded manually regarding the referred topics according to a coding scheme with seven categories (“Platforms and tools”, “Course quality”, “Learning resources”, “Instructor”, “Relationship”, “Process”, and “Assessment”) being considered. The classification performance of the RCNN classifier indicates its capability to classify the referred topical categories mentioned by MOOC learners during their evaluation of the attended courses regarding instructional design and implementation.

As for the RCNN classifier’s performance across different categories, variations were found. For example, the RCNN achieved a classification accuracy of higher than 70% for categories such as “Instructor” and “Course quality”. In contrast, it showed a classification accuracy of lower than 50% for categories such as “Relationship” and “Platforms and tools”. The sample sizes may explain the differences in classification performance across different categories. More specifically, the categories with higher classification performance tended to have a larger sample size than those with a lower performance. This means that it was difficult for the deep neural network-based classifier to capture the deep relationships from the course review content for categories with small sample sizes. Another explanation lies in the ease of topic interpretation. Take the category “Instructor” as an example. It is relatively easy for the classifier to discriminate because learners commonly use terms such as professor, instructor, and teacher when expressing their perception of or satisfaction towards instructors. Thus, the classifier can readily associate a sentence with “Instructor” when these identifiable terms appear in it. On the contrary, it is much more difficult for the classifier to detect categories such as “Relationship” and “Process”, as they are less likely to be associated with identifiable terms.

5.2. What Factors Are Frequently Mentioned by Learners?

The classification results provide answers to RQ2, showing that learners more frequently mentioned issues regarding categories such as “Course quality”, “Instructor”, and “Assessment”, as compared to categories such as “Relationship” and “Process”. Firstly, course quality is the most frequently discussed topic among MOOC learners. Course quality’s significance is also reported in prior research. For instance, according to Albelbisi and Yusop [54], content quality and course materials that are easily understandable are essential for encouraging self-learning in MOOCs. Similarly, our results indicate that the success of MOOCs is positively associated with the high quality of course design, course content, and ease of content understanding. This finding is also highlighted by [55,56], who reported course quality’s direct effect on learners’ success in online learning. As Yousef et al. [57] suggest, the quality of course content is essential in promoting global learners’ motivation to enroll and participate in MOOCs. A MOOC with first-rate design enables learners to organize and plan their learning independently, promotes their motivation to set goals, identifies efficient learning methodologies, and attains learning success. Lin et al. [58] also verified that learners’ perceptions about the MOOC quality could significantly affect their acceptance of knowledge. Considering the importance of course quality, we suggest that designers and instructors of MOOCs ought to guarantee that the course materials are easy to understand with high-quality content to provide learners with a genuine chance to develop responsibility for their learning.

“Instructor” was the second most frequently mentioned issue among MOOC learners. This is consistent with prior research [27,28]. According to Hew et al. [34], knowledgeable, enthusiastic, and humorous instructors can satisfy learners more easily. Watson et al. [59] also suggest that instructors should show specialization in the subjects they teach and deliver the content clearly and concisely by using case studies and examples.

Another topic frequently mentioned by MOOC learners is “Assessment”, which is consistent with Jordan [60]. This suggests that designers and instructors of MOOCs ought to pay more attention to the potential of assessments to promote learner satisfaction because this allows learners to verify and assess their learning against their goals. The significance of assessment in MOOCs has been highlighted by researchers. According to Bali [61], assessment tasks in MOOCs ought to offer chances for learners to apply their learned knowledge rather than being used to merely recall knowledge. Similarly, Hew [62] highlighted the necessity of active learning and knowledge application to improve MOOC learners’ engagement. According to Hew et al.’s [34] analyses of learners’ course review data, assessments that could promote learner satisfaction should be clearly stated, explicitly associated with lecture content, and capable of allowing learners to apply knowledge learned in practice.

Comparatively, learners tended to mention the “Relationship” and “Process” factors infrequently. This suggests that interaction or instructor feedback has little impact on their perceptions of learning in MOOCs. One reason is that most learners understand that MOOC instructors have little time to spend supporting individuals in a large class. As a result, learners commonly have low expectations about interaction or instructor feedback. As learners in MOOCs are mainly motivated to broaden their horizons and enhance their expertise [63], they care mainly about whether they can achieve useful learning (e.g., their expected skills or knowledge) via MOOCs rather than about how the course is taught or whether there is rich interaction.

5.3. How Do Learners’ Perceptions of the Identified Factors Differ across Subjects?

The investigation into learners’ perceptions of the identified factors across different subjects provides answers to RQ3, indicating that learners’ experiences in MOOCs are associated with subject differences. Such a finding is also confirmed by Li et al. [18], who considered MOOCs related to Arts and Humanities, Business, and Social Science as knowledge-seeking courses with an emphasis on learning concepts or principles, checking knowledge with quizzes and assignments, and enhancing decision-making in practice. In contrast, MOOCs in Computer Science, Data Science, and Information Technology are mainly skill-seeking-driven and require learning through sample problem-solving, laboratory tasks, projects, and assignments to promote new skill acquisition. Thus, in designing and implementing MOOCs related to different domains, designers and instructors should consider learners’ different concerns and needs. For example, to satisfy learners who enroll in skill-seeking courses, more attention should be paid to the design of problem-solving tasks, projects, and assignments so that learners can demonstrate their acquired skills.

We also found that learners in almost all subjects showed a low level of satisfaction towards topics such as “Process”. In contrast, they tended to show high satisfaction towards topics such as “Instructor”. One reason for the low satisfaction with “Process” lies in the difficulty of providing inquiry support to individuals, because instructors are overwhelmed with the workload of dealing with large classes [64]. Another challenge for MOOC instructors is providing feedback on assignments that is in line with individual solutions [65]. Some providers have integrated interactive technologies and modules (e.g., discussion forums and live chats) into MOOC platforms to support problem-solving and knowledge inquiry; however, it takes considerable time and effort to run these interactive modules [66]. Therefore, alternative tools such as video feedback can be integrated to “help MOOC instructors scale up the provision of perceived personal attention to students” (p. 15) [65]. Additionally, MOOC designers and instructors ought to pay more attention to problem-solving during instruction rather than merely focusing on information delivery [41].
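
The comparison across subjects and factors described above can be illustrated with a minimal sketch. It assumes each review sentence has already been assigned a subject, a factor, and a sentence-level sentiment score. The rows below and the function name `mean_sentiment` are invented for illustration; they are not the study's data or code.

```python
from collections import defaultdict

# Hypothetical rows: (subject, factor, sentence-level sentiment score).
# These values are invented for illustration and are not taken from the study.
rows = [
    ("Computer Science", "Instructor", 2),
    ("Computer Science", "Process", -1),
    ("Business", "Instructor", 1),
    ("Business", "Process", -2),
    ("Computer Science", "Instructor", 1),
]


def mean_sentiment(rows):
    """Return the mean sentiment score for every (subject, factor) pair."""
    totals = defaultdict(lambda: [0, 0])  # key -> [sum of scores, sentence count]
    for subject, factor, score in rows:
        cell = totals[(subject, factor)]
        cell[0] += score
        cell[1] += 1
    return {key: total / count for key, (total, count) in totals.items()}


table = mean_sentiment(rows)
for (subject, factor), mean in sorted(table.items()):
    print(f"{subject:18s} {factor:12s} {mean:+.2f}")
```

Averaging per pair, rather than summing, keeps frequently mentioned factors from dominating the comparison simply because they have more sentences.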

6. Conclusions

This study adopts deep learning and sentiment analysis to explore learners’ perceptions of different factors regarding MOOC design and implementation, and to understand factors that can impact learners’ satisfaction with their learning in MOOCs. The contributions of this study include: (1) providing a quantitative analysis of 102,184 records of learners’ reviews on MOOCs; (2) proposing a novel deep learning and sentiment analysis methodological framework for the examination of large-scale learner-produced review content; and (3) identifying essential factors that are frequently mentioned by learners who have attended MOOCs.

This study has limitations. We only used data collected from Class Central; thus, other MOOC websites such as edX, Coursera, and FutureLearn should be considered to validate the results and findings. Another limitation lies in the use of sentences as the unit for calculating sentiment scores. This can be error-prone, since learners sometimes show contradictory attitudes towards different issues within one sentence. Thus, future work should focus on proposing fine-grained sentiment analysis models that detect learners’ perceptions of a specific topic accurately based on the associated context. In addition, the RCNN classifier’s performance was especially low for some categories (e.g., “Relationship” and “Platforms and tools”) compared to others. Future work should consider revising the topic categories and seek ways to improve the classification performance.
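
The sentence-level limitation can be made concrete with a small sketch. It uses a toy lexicon whose words and polarities are invented for illustration (it is not the lexicon used in this study), and the helper names `sentence_score` and `clause_scores` are hypothetical. Splitting on “but” is only a crude stand-in for the fine-grained models suggested above.

```python
import re

# Toy polarity lexicon: hypothetical entries, not the lexicon used in the study.
TOY_LEXICON = {
    "great": 1, "clear": 1, "helpful": 1,
    "confusing": -1, "unfair": -1, "boring": -1,
}


def sentence_score(text, lexicon=TOY_LEXICON):
    """Sum the polarity of every lexicon word that appears in the text."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return sum(lexicon.get(token, 0) for token in tokens)


def clause_scores(sentence):
    """Score each clause separately, splitting naively on the word 'but'."""
    clauses = re.split(r"\bbut\b", sentence, flags=re.IGNORECASE)
    return [sentence_score(clause) for clause in clauses]


mixed = "The instructor was great, but the quizzes were unfair."
print(sentence_score(mixed))  # 0: the two opposing attitudes cancel out
print(clause_scores(mixed))   # [1, -1]: one score per clause
```

At the sentence level, the positive view of the instructor and the negative view of the quizzes cancel to a neutral score, which is the kind of error the limitation describes.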

Author Contributions

Conceptualization, X.C. and F.L.W.; methodology, G.C.; software, M.-K.C.; validation, F.L.W. and H.X.; formal analysis, X.C.; investigation, F.L.W.; resources, H.X.; data curation, G.C.; writing—original draft preparation, X.C.; writing—review and editing, M.-K.C.; visualization, G.C.; supervision, F.L.W.; project administration, H.X.; funding acquisition, F.L.W., G.C. and H.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Research Grants Council of the Hong Kong Special Administrative Region, China (UGC/FDS16/E01/19), The One-off Special Fund from Central and Faculty Fund in Support of Research from 2019/20 to 2021/22 (MIT02/19-20), The Interdisciplinary Research Scheme of Dean’s Research Fund 2021/22 (FLASS/DRF/IDS-3) of The Education University of Hong Kong, and The Faculty Research Grants (DB22B4), Lingnan University.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Liu, C.; Zou, D.; Chen, X.; Xie, H.; Chan, W.H. A bibliometric review on latent topics and trends of the empirical MOOC literature (2008–2019). Asia Pac. Educ. Rev. 2021, 22, 515–534.

2. Barclay, C.; Logan, D. Towards an understanding of the implementation & adoption of massive online open courses (MOOCs) in a developing economy context. In Proceedings of the Annual Workshop of the AIS Special Interest Group for ICT in Global Development, Milano, Italy, 14 December 2013; pp. 1–14.

3. Kennedy, J. Characteristics of massive open online courses (MOOCs): A research review, 2009–2012. J. Interact. Online Learn. 2014, 13, 1–15.

4. Means, B.; Toyama, Y.; Murphy, R.; Bakia, M.; Jones, K. Evaluation of Evidence-Based Practices in Online Learning: A Meta-Analysis and Review of Online Learning Studies; Center for Technology in Learning, U.S. Department of Education: Washington, DC, USA, 2009.

5. Hollands, F.M.; Tirthali, D. MOOCs: Expectations and Reality; Center for Benefit-Cost Studies of Education, Teachers College, Columbia University: New York, NY, USA, 2014; Volume 138.

6. Henderikx, M.A.; Kreijns, K.; Kalz, M. Refining success and dropout in massive open online courses based on the intention–behavior gap. Distance Educ. 2017, 38, 353–368.

7. Ma, L.; Lee, C.S. Investigating the adoption of MOOCs: A technology–user–environment perspective. J. Comput. Assist. Learn. 2019, 35, 89–98.

8. Zhang, M.; Yin, S.; Luo, M.; Yan, W. Learner control, user characteristics, platform difference, and their role in adoption intention for MOOC learning in China. Australas. J. Educ. Technol. 2017, 33.

9. Teo, T.; Dai, H.M. The role of time in the acceptance of MOOCs among Chinese university students. Interact. Learn. Environ. 2019, 30, 651–664.

10. Gupta, K.P. Investigating the adoption of MOOCs in a developing country: Application of technology-user-environment framework and self-determination theory. Interact. Technol. Smart Educ. 2019, 17, 355–375.

11. Rizvi, S.; Rienties, B.; Khoja, S.A. The role of demographics in online learning; A decision tree based approach. Comput. Educ. 2019, 137, 32–47.

12. Montoya, M.S.R.; Martínez-Pérez, S.; Rodríguez-Abitia, G.; Lopez-Caudana, E. Digital accreditations in MOOC-based training on sustainability: Factors that influence terminal efficiency. Australas. J. Educ. Technol. 2021, 38, 164–182.

13. Hong, J.-C.; Lee, Y.-F.; Ye, J.-H. Procrastination predicts online self-regulated learning and online learning ineffectiveness during the coronavirus lockdown. Personal. Individ. Differ. 2021, 174, 110673.

14. El-Halees, A. Mining opinions in user-generated contents to improve course evaluation. In Proceedings of the International Conference on Software Engineering and Computer Systems, Pahang, Malaysia, 27–29 June 2011; pp. 107–115.

15. Chen, X.; Zou, D.; Cheng, G.; Xie, H. Detecting latent topics and trends in educational technologies over four decades using structural topic modeling: A retrospective of all volumes of Computers & Education. Comput. Educ. 2020, 151, 103855.

16. Chen, X.; Zou, D.; Xie, H. Fifty years of British Journal of Educational Technology: A topic modeling based bibliometric perspective. Br. J. Educ. Technol. 2020, 51, 692–708.

17. Lundqvist, K.; Liyanagunawardena, T.; Starkey, L. Evaluation of student feedback within a MOOC using sentiment analysis and target groups. Int. Rev. Res. Open Distrib. Learn. 2020, 21, 140–156.

18. Li, L.; Johnson, J.; Aarhus, W.; Shah, D. Key factors in MOOC pedagogy based on NLP sentiment analysis of learner reviews: What makes a hit. Comput. Educ. 2022, 176, 104354.

19. Burstein, J.; Marcu, D.; Andreyev, S.; Chodorow, M. Towards automatic classification of discourse elements in essays. In Proceedings of the 39th annual meeting of the Association for Computational Linguistics, Toulouse, France, 9–11 July 2001; pp. 98–105.

20. Satu, M.S.; Khan, M.I.; Mahmud, M.; Uddin, S.; Summers, M.A.; Quinn, J.M.; Moni, M.A. TClustVID: A novel machine learning classification model to investigate topics and sentiment in COVID-19 tweets. Knowl.-Based Syst. 2021, 226, 107126.

21. Laird, T.F.N.; Shoup, R.; Kuh, G.D.; Schwarz, M.J. The effects of discipline on deep approaches to student learning and college outcomes. Res. High. Educ. 2008, 49, 469–494.

22. Geng, S.; Niu, B.; Feng, Y.; Huang, M. Understanding the focal points and sentiment of learners in MOOC reviews: A machine learning and SC-LIWC-based approach. Br. J. Educ. Technol. 2020, 51, 1785–1803.

23. Aparicio, M.; Oliveira, T.; Bacao, F.; Painho, M. Gamification: A key determinant of massive open online course (MOOC) success. Inf. Manag. 2019, 56, 39–54.

24. Limone, P. Towards a hybrid ecosystem of blended learning within university contexts. In Proceedings of the Technology Enhanced Learning Environments for Blended Education—The Italian e-Learning Conference 2021, Foggia, Italy, 5–6 October 2021; pp. 1–7. Available online: https://sourceforge.net/projects/openccg/ (accessed on 21 June 2022).

25. Di Fuccio, R.; Ferrara, F.; Siano, G.; Di, A. ALEAS: An interactive application for ubiquitous learning in higher education in statistics. In Proceedings of the Stat. Edu’21-New Perspectives in Statistics Education, Vieste, Italy, 10–11 June 2022; pp. 77–82.

26. Rai, L.; Chunrao, D. Influencing factors of success and failure in MOOC and general analysis of learner behavior. Int. J. Inf. Educ. Technol. 2016, 6, 262.

27. Chen, X.; Cheng, G.; Xie, H.; Chen, G.; Zou, D. Understanding MOOC Reviews: Text Mining using Structural Topic Model. Hum.-Cent. Intell. Syst. 2021, 1, 55–65.

28. Chen, X.; Zou, D.; Xie, H.; Cheng, G. What Are MOOCs Learners’ Concerns? Text Analysis of Reviews for Computer Science Courses. In Proceedings of the International Conference on Database Systems for Advanced Applications, Jeju, Korea, 24–27 September 2020; pp. 73–79.

29. Hewawalpita, S.; Herath, S.; Perera, I.; Meedeniya, D. Effective Learning Content Offering in MOOCs with Virtual Reality-An Exploratory Study on Learner Experience. J. Univers. Comput. Sci. 2018, 24, 129–148.

30. Watted, A.; Barak, M. Motivating factors of MOOC completers: Comparing between university-affiliated students and general participants. Internet High. Educ. 2018, 37, 11–20.

31. Phan, T.; McNeil, S.G.; Robin, B.R. Students’ patterns of engagement and course performance in a Massive Open Online Course. Comput. Educ. 2016, 95, 36–44.

32. Hone, S.; El Said, G.R. Exploring the factors affecting MOOC retention: A survey study. Comput. Educ. 2016, 98, 157–168.

33. Kuo, Y.-C.; Walker, A.E.; Schroder, K.E.; Belland, B.R. Interaction, Internet self-efficacy, and self-regulated learning as predictors of student satisfaction in online education courses. Internet High. Educ. 2014, 20, 35–50.

34. Hew, K.F.; Hu, X.; Qiao, C.; Tang, Y. What predicts student satisfaction with MOOCs: A gradient boosting trees supervised machine learning and sentiment analysis approach. Comput. Educ. 2020, 145, 103724.

35. Jiang, H.; Islam, A.; Gu, X.; Spector, J.M. Online learning satisfaction in higher education during the COVID-19 pandemic: A regional comparison between Eastern and Western Chinese universities. Educ. Inf. Technol. 2021, 26, 6747–6769.

36. Sutherland, D.; Warwick, P.; Anderson, J.; Learmonth, M. How do quality of teaching, assessment, and feedback drive undergraduate course satisfaction in UK Business Schools? A comparative analysis with nonbusiness school courses using the UK National Student Survey. J. Manag. Educ. 2018, 42, 618–649.

37. Elia, G.; Solazzo, G.; Lorenzo, G.; Passiante, G. Assessing learners’ satisfaction in collaborative online courses through a big data approach. Comput. Hum. Behav. 2019, 92, 589–599.

38. Rabin, E.; Kalman, Y.M.; Kalz, M. An empirical investigation of the antecedents of learner-centered outcome measures in MOOCs. Int. J. Educ. Technol. High. Educ. 2019, 16, 14.

39. Ramesh, A.; Goldwasser, D.; Huang, B.; Daumé, H., III; Getoor, L. Modeling learner engagement in MOOCs using probabilistic soft logic. In Proceedings of the NIPS Workshop on Data Driven Education, Lake Tahoe, NV, USA, 9 December 2013; pp. 62–69.

40. Nanda, G.; Douglas, K.A.; Waller, D.R.; Merzdorf, H.E.; Goldwasser, D. Analyzing large collections of open-ended feedback from MOOC learners using LDA topic modeling and qualitative analysis. IEEE Trans. Learn. Technol. 2021, 14, 146–160.

41. Foon Hew, K. Unpacking the strategies of ten highly rated MOOCs: Implications for engaging students in large online courses. Teach. Coll. Rec. 2018, 120, 1–40.

42. Powell, S.; MacNeill, S. Institutional readiness for analytics. JISC CETIS Anal. Ser. 2012, 1, 1–10.

43. Mak, S.; Williams, R.; Mackness, J. Blogs and forums as communication and learning tools in a MOOC. In Proceedings of the 7th International Conference on Networked Learning 2010, Aalborg, Denmark, 3–4 May 2010; pp. 275–285.

44. Hew, K.F.; Qiao, C.; Tang, Y. Understanding student engagement in large-scale open online courses: A machine learning facilitated analysis of student’s reflections in 18 highly rated MOOCs. Int. Rev. Res. Open Distrib. Learn. 2018, 19, 69–93.

45. Park, S.; Nicolau, J.L. Asymmetric effects of online consumer reviews. Ann. Tour. Res. 2015, 50, 67–83.

46. O’Mahony, M.P.; Smyth, B. A classification-based review recommender. In Research and Development in Intelligent Systems XXVI; Springer: Berlin/Heidelberg, Germany, 2010; pp. 49–62.

47. Lubis, F.F.; Rosmansyah, Y.; Supangkat, S.H. Topic discovery of online course reviews using LDA with leveraging reviews helpfulness. Int. J. Electr. Comput. Eng. 2019, 9, 426–438.

48. Lubis, F.F.; Rosmansyah, Y.; Supangkat, S.H. Improving course review helpfulness prediction through sentiment analysis. In Proceedings of the 2017 International Conference on ICT For Smart Society (ICISS), Tangerang, Indonesia, 18–19 September 2017; pp. 1–5.

49. Moore, M. Theory of transactional distance. In Theoretical Principles of Distance Education; Keegan, D., Ed.; Routledge: New York, NY, USA, 1993; pp. 22–38.

50. Lai, S.; Xu, L.; Liu, K.; Zhao, J. Recurrent convolutional neural networks for text classification. In Proceedings of the Twenty-Ninth AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015; pp. 2267–2273.

51. Sokolova, M.; Lapalme, G. A systematic analysis of performance measures for classification tasks. Inf. Process. Manag. 2009, 45, 427–437.

52. Liu, B. Sentiment analysis and opinion mining. Synth. Lect. Hum. Lang. Technol. 2012, 5, 1–167.

53. Mohammad, S.; Turney, P. Emotions evoked by common words and phrases: Using mechanical turk to create an emotion lexicon. In Proceedings of the NAACL HLT 2010 Workshop on Computational Approaches to Analysis and Generation of Emotion in Text, Los Angeles, CA, USA, 5 June 2010; pp. 26–34.

54. Albelbisi, N.A.; Yusop, F.D. Factors influencing learners’ self–regulated learning skills in a massive open online course (MOOC) environment. Turk. Online J. Distance Educ. 2019, 20, 1–16.

55. Mohammadi, H. Investigating users’ perspectives on e-learning: An integration of TAM and IS success model. Comput. Hum. Behav. 2015, 45, 359–374.

56. Cidral, W.A.; Oliveira, T.; Di Felice, M.; Aparicio, M. E-learning success determinants: Brazilian empirical study. Comput. Educ. 2018, 122, 273–290.

57. Yousef, A.M.F.; Chatti, M.A.; Schroeder, U.; Wosnitza, M. What drives a successful MOOC? An empirical examination of criteria to assure design quality of MOOCs. In Proceedings of the 2014 IEEE 14th International Conference on Advanced Learning Technologies, Athens, Greece, 7–10 July 2014; pp. 44–48.

58. Lin, Y.-L.; Lin, H.-W.; Hung, T.-T. Value hierarchy for massive open online courses. Comput. Hum. Behav. 2015, 53, 408–418.

59. Watson, S.L.; Watson, W.R.; Janakiraman, S.; Richardson, J. A team of instructors’ use of social presence, teaching presence, and attitudinal dissonance strategies: An animal behaviour and welfare MOOC. Int. Rev. Res. Open Distrib. Learn. 2017, 18, 68–91.

60. Jordan, K. Massive open online course completion rates revisited: Assessment, length and attrition. Int. Rev. Res. Open Distrib. Learn. 2015, 16, 341–358.

61. Bali, M. MOOC pedagogy: Gleaning good practice from existing MOOCs. J. Online Learn. Teach. 2014, 10, 44–55.

62. Hew, K.F. Promoting engagement in online courses: What strategies can we learn from three highly rated MOOCS. Br. J. Educ. Technol. 2016, 47, 320–341.

63. Milligan, C.; Littlejohn, A. Why study on a MOOC? The motives of students and professionals. Int. Rev. Res. Open Distrib. Learn. 2017, 18, 92–102.

64. Zheng, S.; Wisniewski, P.; Rosson, M.B.; Carroll, J.M. Ask the instructors: Motivations and challenges of teaching massive open online courses. In Proceedings of the 19th ACM Conference on Computer-Supported Cooperative Work & Social Computing, San Francisco, CA, USA, 27 February–2 March 2016; pp. 206–221.

65. Yu, H.; Miao, C.; Leung, C.; White, T.J. Towards AI-powered personalization in MOOC learning. Npj Sci. Learn. 2017, 2, 15.

66. Yousef, A.M.F.; Sunar, A.S. Opportunities and challenges in personalized MOOC experience. In Proceedings of the ACM WEB Science Conference 2015, Web Science Education Workshop, Oxford, UK, 29 July 2015.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).