Deep Learning for Vulnerability and Attack Detection on Web Applications: A Systematic Literature Review

Abstract

1. Introduction and Background

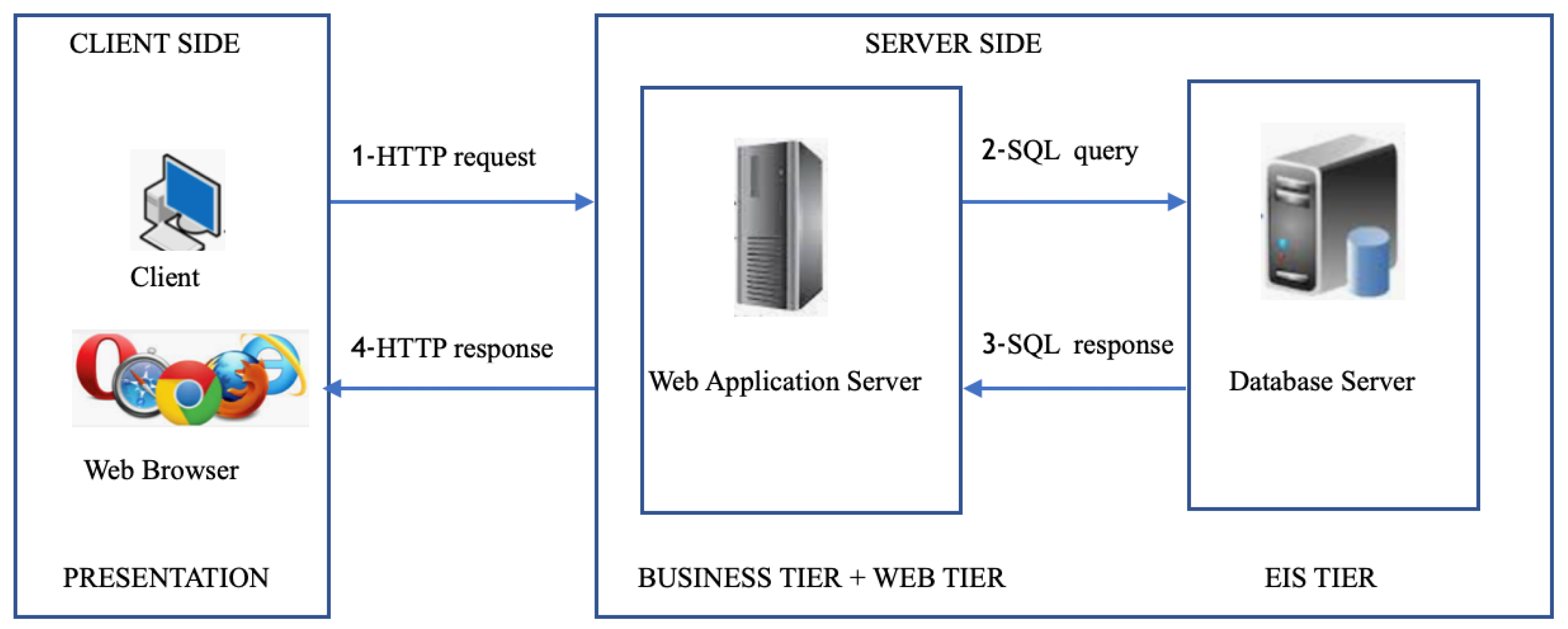

1.1. Web Applications Architecture

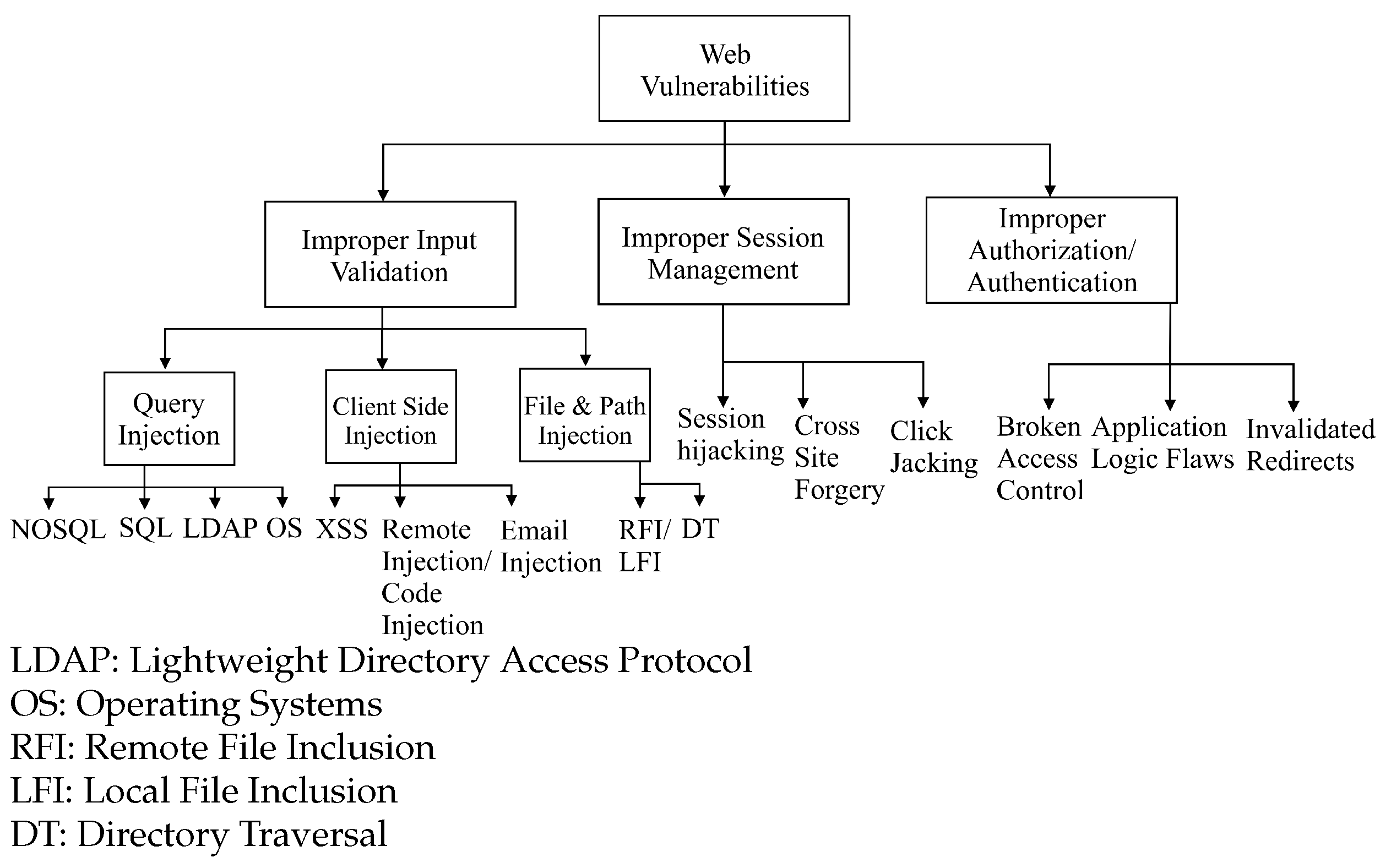

1.2. Web Vulnerabilities

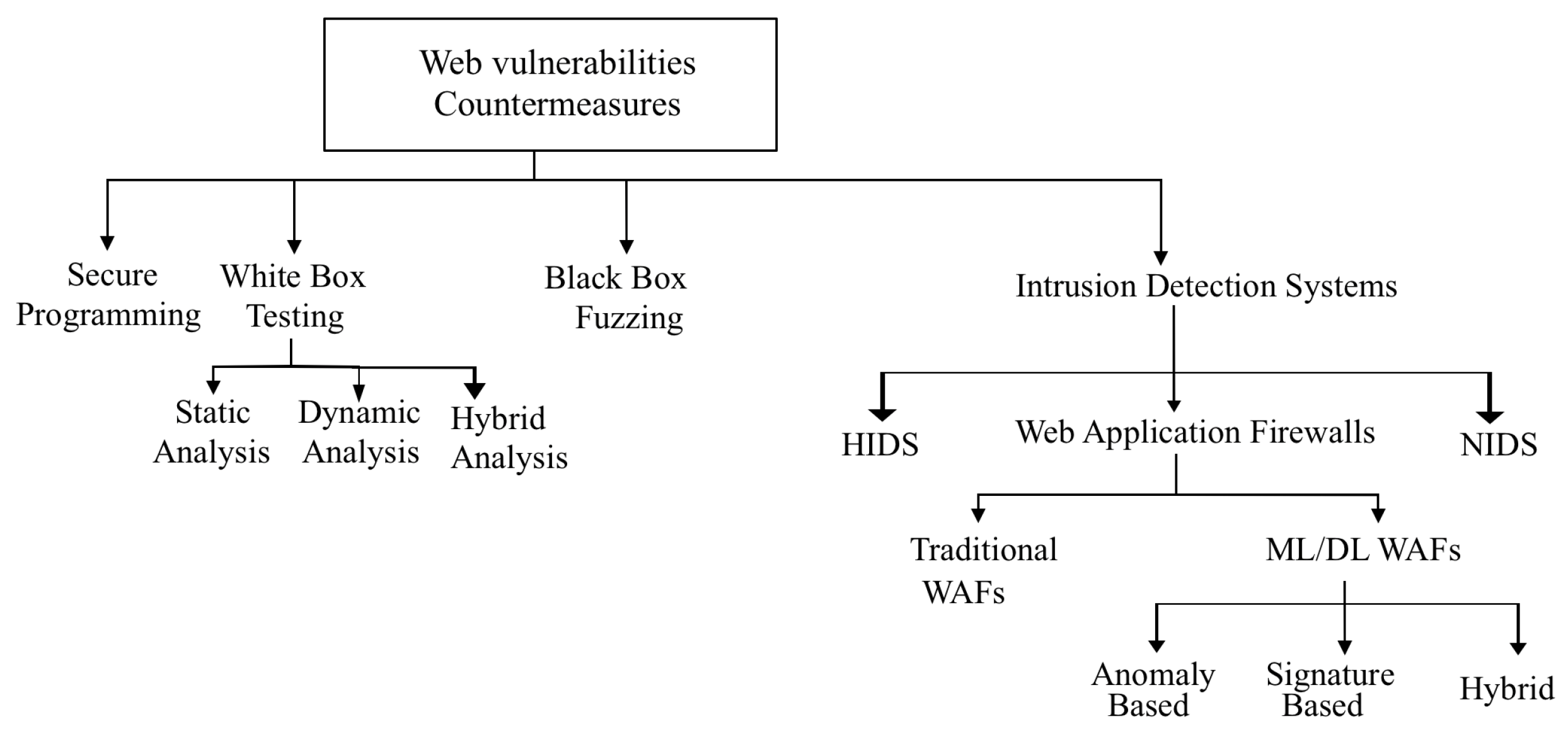

1.3. Web Vulnerabilities Countermeasures

1.3.1. Secure Programming

1.3.2. Static Analysis

1.3.3. Dynamic Analysis

1.3.4. Black-Box Fuzzing

1.3.5. Intrusion Detection Systems (IDS)

- Identifying the Primary Studies (PS) related to DL-based web attacks detection and extracting insights from them.

- Performing a quality analysis on the PS.

- Presenting the results of the investigation including publication information, datasets, detection models, detection performance, research focus, and limitations.

- Summarizing the findings and identifying some interesting opportunities for future work in the domain of DL-based web attacks detection.

2. Related Work

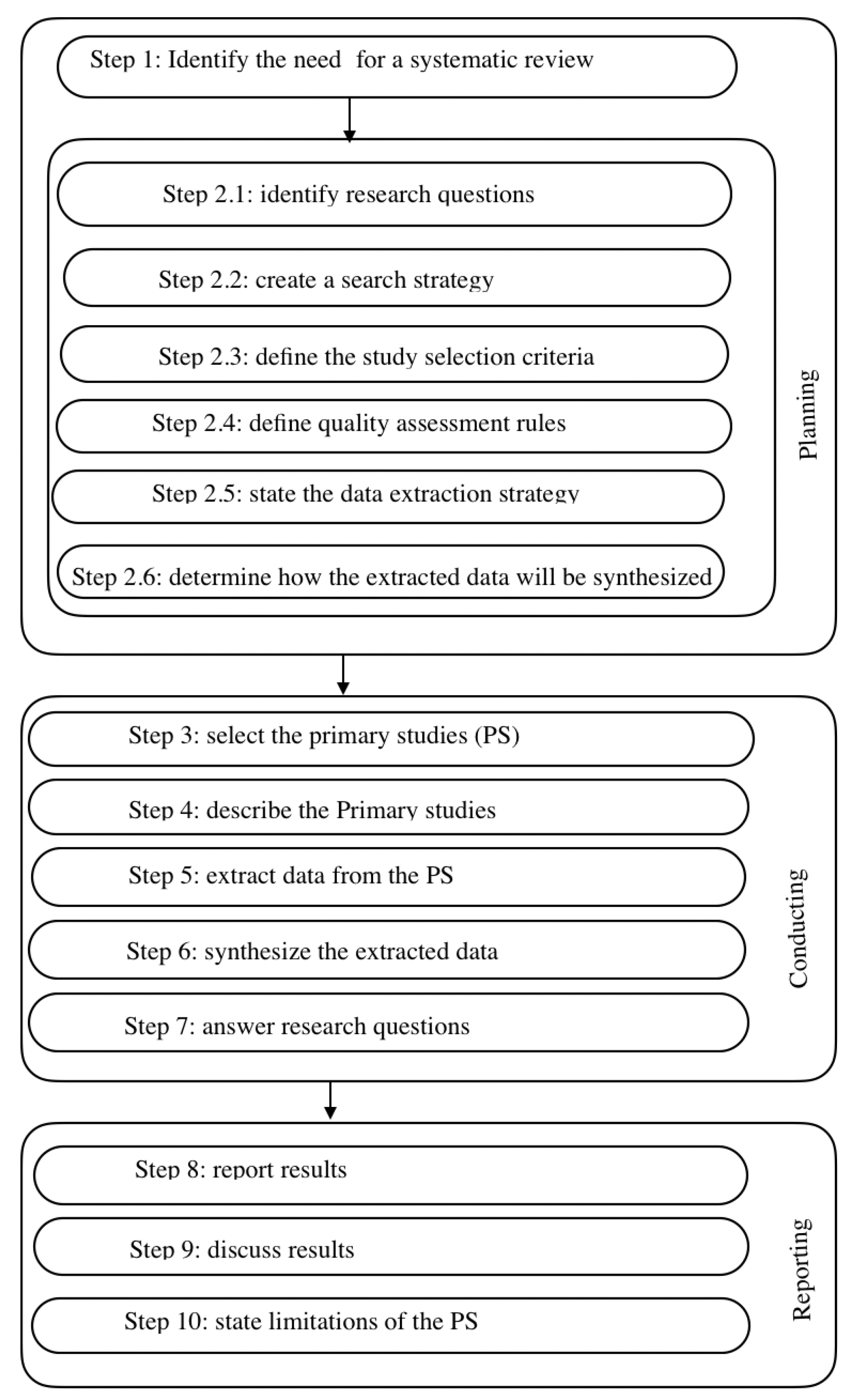

3. Review Methodology

3.1. Research Questions

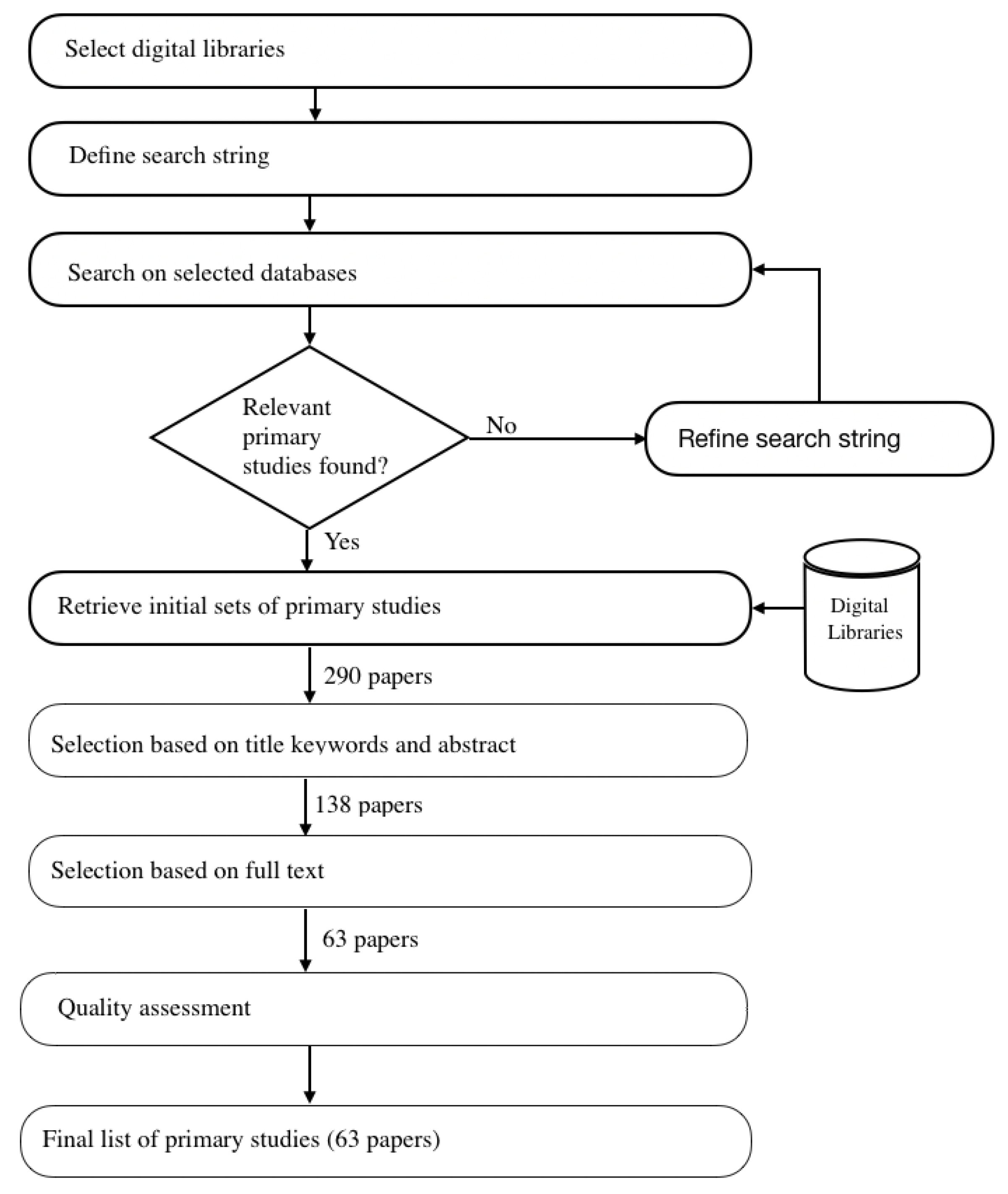

3.2. Search Strategy

- (“deep learning” OR “neural networks”) AND (“web attacks” OR “web security” OR “web application security” OR “web vulnerabilities”)
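As a rough illustration, the boolean search string above can be applied to a record's title and abstract using case-insensitive phrase matching. The function name `matches_search_string` and the plain-text inputs are hypothetical. Each digital library applies its own query syntax, stemming, and field rules, so this sketch only mirrors the AND/OR structure of the query.

```python
# Hypothetical sketch of the review's boolean query:
# ("deep learning" OR "neural networks") AND
# ("web attacks" OR "web security" OR "web application security" OR "web vulnerabilities")

DL_TERMS = ("deep learning", "neural networks")
WEB_TERMS = (
    "web attacks",
    "web security",
    "web application security",
    "web vulnerabilities",
)


def matches_search_string(title: str, abstract: str) -> bool:
    """Return True if title + abstract satisfy (any DL term) AND (any web term).

    Matching is an exact, case-insensitive phrase test (an assumption);
    it does not stem words, so "web attack" does not match "web attacks".
    """
    text = f"{title} {abstract}".lower()
    has_dl_term = any(term in text for term in DL_TERMS)
    has_web_term = any(term in text for term in WEB_TERMS)
    return has_dl_term and has_web_term


# Toy records (invented strings, not real studies).
print(matches_search_string("Detecting SQL injection with deep learning",
                            "We study web application security."))
print(matches_search_string("Deep learning for malware triage",
                            "Neural networks classify binaries."))
```

The first toy record matches because it contains both a DL term and a web term. The second does not, because it has no web term.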

3.3. Study Selection

- Removing duplicate PS.

- Applying inclusion and exclusion criteria to determine the relevant PS.

- Performing a quality assessment of selected PS.

Inclusion criteria:

- Research works that include web attacks and Deep Learning terms in their title or abstract.

- Research works whose main topic is detecting web attacks using Deep Learning.

- Research works published between 2010 and September 2021.

- Research works that contain a quantitative evaluation of the proposed solutions.

Exclusion criteria:

- Studies that involve detecting web attacks using methods other than Deep Learning.

- Studies that involve the development of Deep Learning models for the detection of Malware, Spam, Network intrusions, or Phishing attacks.

- Studies not published as journal papers or conference proceedings.

- Studies written in a language other than English.
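The de-duplication step and the inclusion and exclusion criteria above can be sketched as a simple filter over bibliographic records. The `Record` fields, the title normalization used to detect duplicates (the first occurrence is kept), and the exact date bounds are assumptions for illustration. Criteria such as "main topic is detecting web attacks using Deep Learning" still require manual reading, so they appear here as reviewer-supplied flags. The quality assessment step is not modeled.

```python
import re
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Record:
    """Hypothetical bibliographic record exported from a digital library."""
    title: str
    published: date
    language: str       # ISO code, e.g. "en"
    venue_type: str     # "journal", "conference", "preprint", ...
    dl_web_topic: bool  # reviewer judgment: main topic is DL-based web attacks detection
    quantitative: bool  # reviewer judgment: includes a quantitative evaluation


def normalize_title(title: str) -> str:
    # Lowercase and collapse punctuation/whitespace so near-identical titles collide.
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def select_primary_studies(records, start=date(2010, 1, 1), end=date(2021, 9, 30)):
    """Drop duplicates, then keep records that meet every inclusion criterion
    and no exclusion criterion, as approximated by the fields above."""
    seen = set()
    selected = []
    for rec in records:
        key = normalize_title(rec.title)
        if key in seen:  # step 1: duplicate PS
            continue
        seen.add(key)
        included = rec.dl_web_topic and rec.quantitative and start <= rec.published <= end
        excluded = rec.language != "en" or rec.venue_type not in ("journal", "conference")
        if included and not excluded:  # step 2: inclusion/exclusion criteria
            selected.append(rec)
    return selected
```

Keeping the criteria as explicit boolean expressions makes each decision easy to audit, which matters when several reviewers apply the same protocol.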

3.4. Quality Assessment Criteria

3.5. Data Extraction

3.6. Data Synthesis

4. Results and Discussion

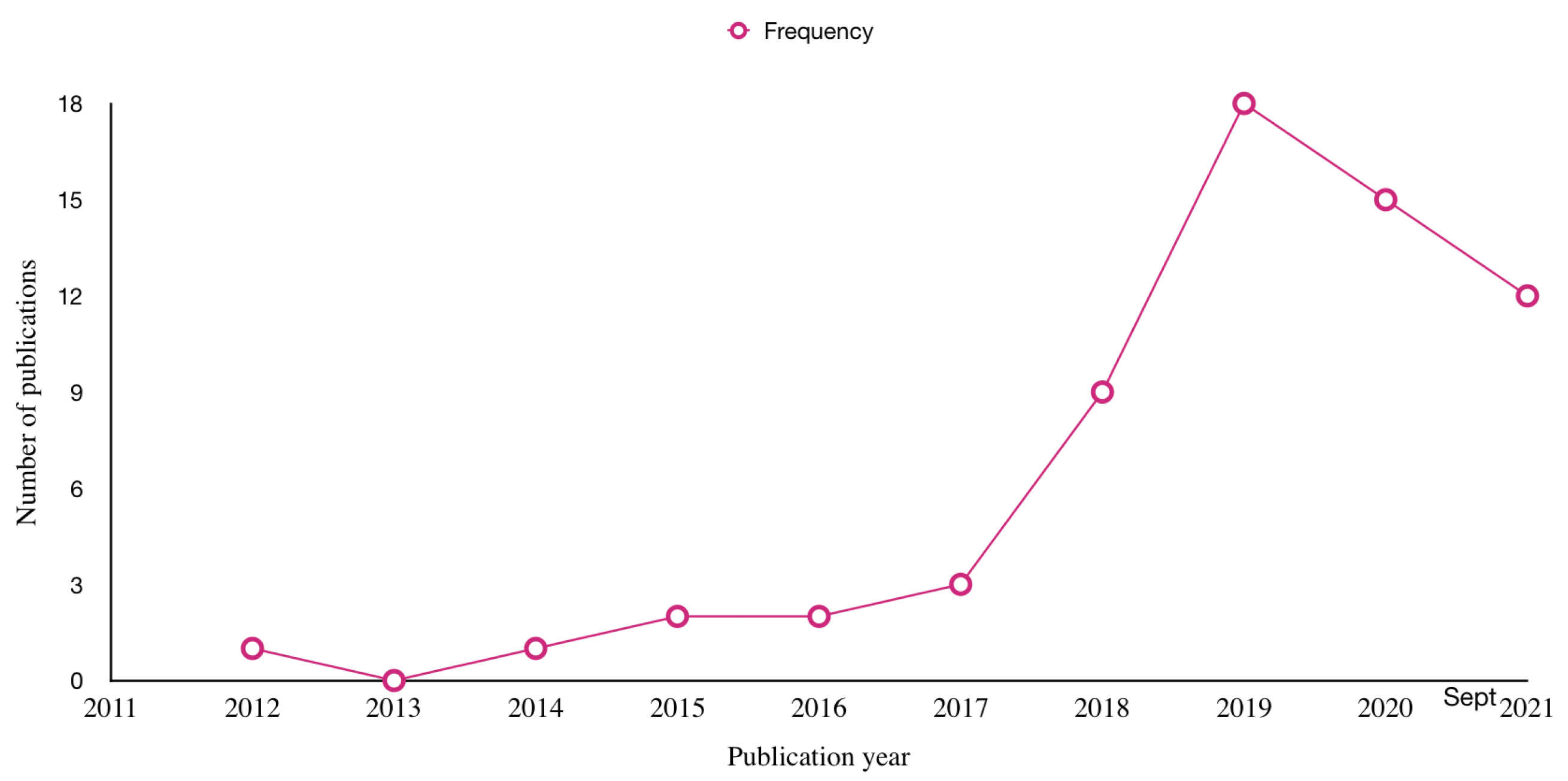

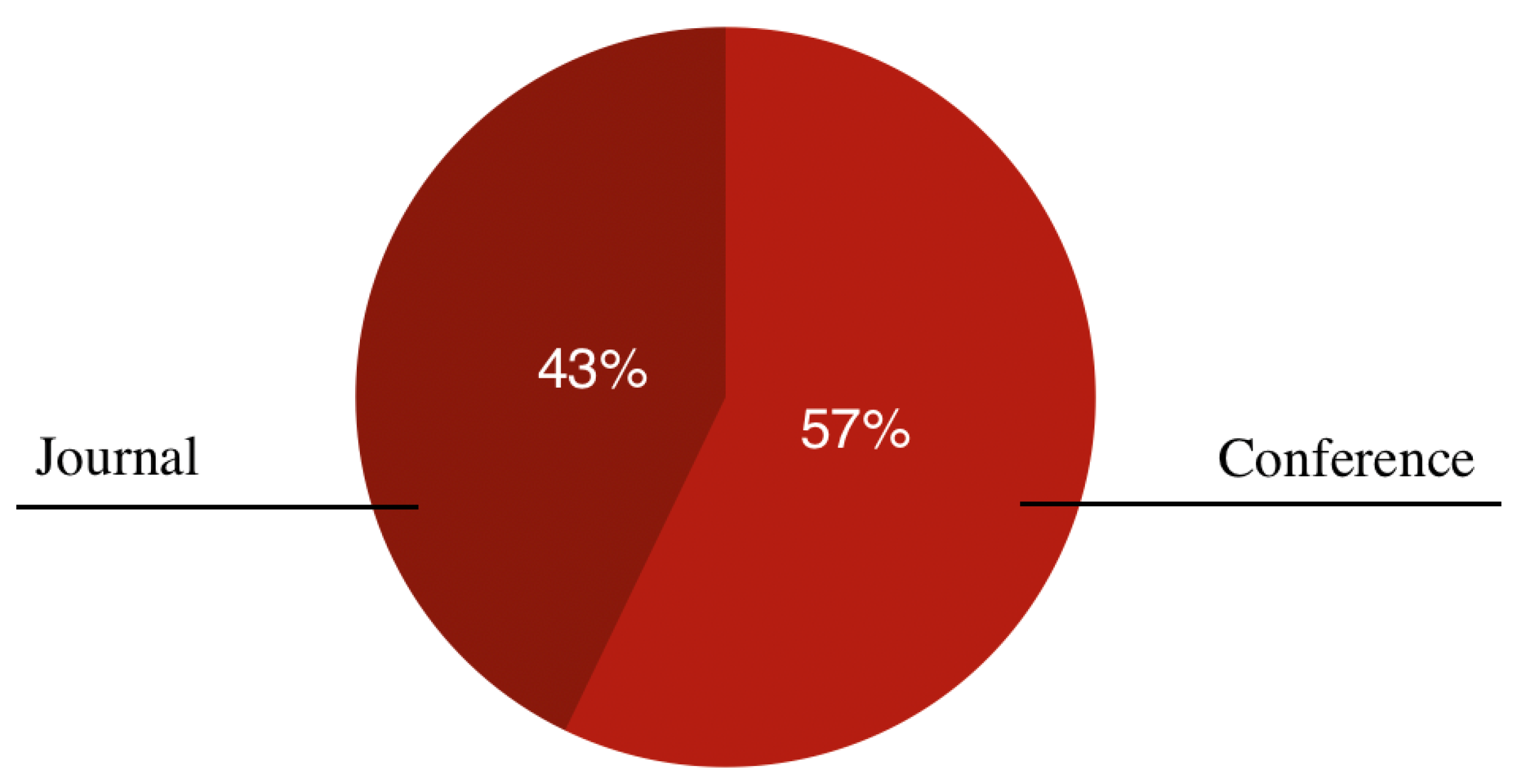

4.1. RQ1: What Are the Trend and Types of Studies on DL-Based Web Attacks Detection?

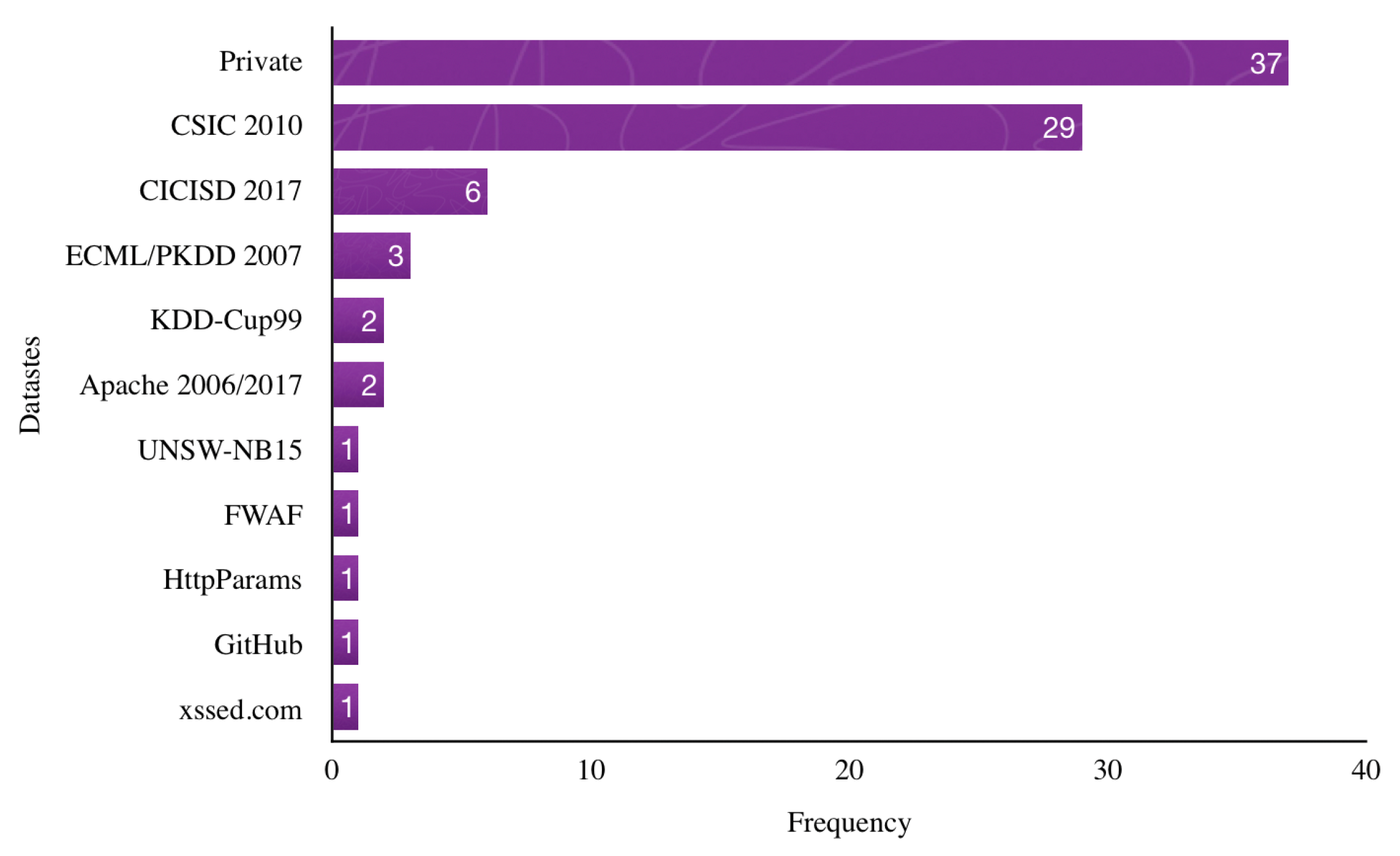

4.2. RQ2: What Datasets Are Used to Evaluate the Proposed Approaches for DL-Based Web Attacks Detection?

- KDD-Cup99: The dataset contains 41 features. It is available in three versions: (i) the complete training set, (ii) 10% of the training set, and (iii) the testing set. It is mainly used for building network intrusion detection models.

- UNSW-NB15: The dataset combines real modern normal activities with synthetic contemporary attack behaviors. It has nine types of attacks, which are mostly related to network intrusion. The training set includes 175,341 instances, whereas the testing set includes 82,332 instances. It is also mostly used for network intrusion detection.

- CICIDS-2017: The Canadian Institute for Cybersecurity created this dataset. It has 2,830,540 distinct instances and 83 features, with 15 class labels (1 normal + 14 attack labels). The dataset contains only 2180 web attack instances, which makes it insufficient for evaluating a web attacks detection model.

- CSIC-2010: The dataset contains automatically generated traffic targeted at an e-commerce web application. It comprises 36,000 normal requests and more than 25,000 anomalous requests (i.e., web attacks).

- ECML/PKDD 2007: The dataset is part of the ECML and PKDD conferences on Machine Learning. It contains 35,006 normal and 15,110 malicious web requests. The dataset was built by collecting real traffic, which was then processed to mask parameter names and values by replacing them with random values.
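The counts quoted above can be used to compute the share of attack instances in each dataset, and therefore the accuracy that a trivial "everything is normal" classifier would reach. This is a small worked sketch. For CSIC-2010 the text gives "more than 25,000" anomalous requests, so 25,000 is used as a lower bound. For CICIDS-2017 only the 2180 web attack instances are treated as positives and all other instances as negatives; this framing is an assumption that mirrors the web attacks detection use case.

```python
# (normal, attack) counts taken from the dataset descriptions above.
# The CSIC-2010 attack count is a lower bound ("more than 25,000").
DATASETS = {
    "CICIDS-2017 (web attacks only)": (2_830_540 - 2_180, 2_180),
    "CSIC-2010": (36_000, 25_000),
    "ECML/PKDD 2007": (35_006, 15_110),
}


def attack_share(normal: int, attack: int) -> float:
    """Fraction of instances that are attacks."""
    return attack / (normal + attack)


for name, (normal, attack) in DATASETS.items():
    share = attack_share(normal, attack)
    # A classifier that labels every instance as normal is right on all normal
    # instances, so its accuracy equals 1 - share.
    print(f"{name}: attack share {share:.2%}, all-normal accuracy {1 - share:.2%}")
```

For CICIDS-2017 the attack share is below 0.1%, so a detector that never raises an alarm already exceeds 99.9% accuracy. This is why accuracy alone says little about web attacks detection on such data.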

4.3. RQ3: What Frameworks and Platforms Are Used to Implement the Proposed Solutions for DL-Based Web Attacks Detection?

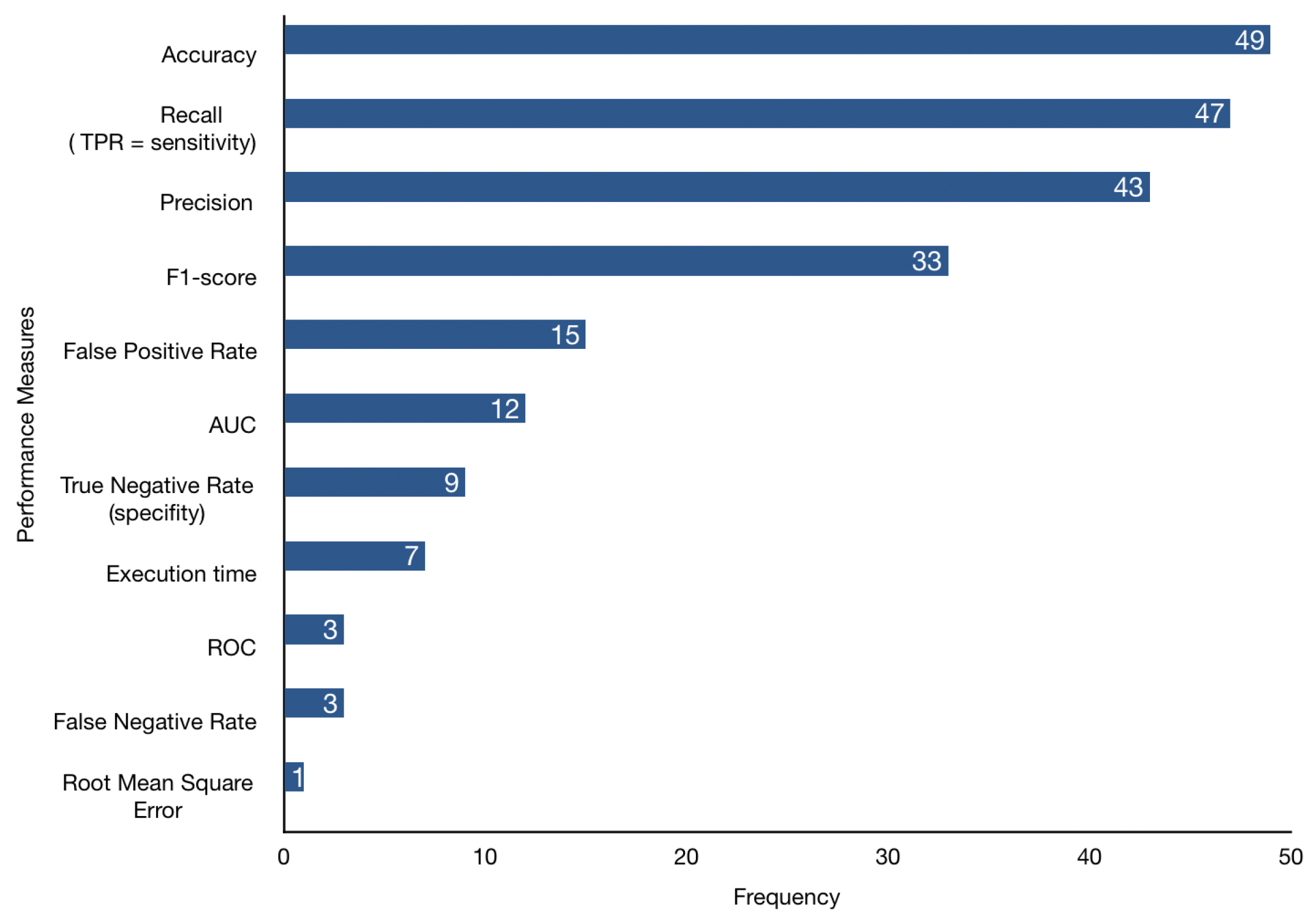

4.4. RQ4: What Performance Metrics Are Used in DL-Based Web Attacks Detection Literature?

- Accuracy: measures the ratio of the number of correctly classified samples to the total number of samples, $\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$, where TP, TN, FP, and FN denote true positives, true negatives, false positives, and false negatives, with malicious requests as the positive class. Accuracy is not useful when the classes are unbalanced (i.e., when there are significantly more examples of one class than of another), but it does provide valuable insight when the classes are balanced. It is therefore usually recommended to report recall and precision along with accuracy.

- Recall or Sensitivity or True Positive Rate (TPR) or Detection Rate: measures the proportion of actual positives that are correctly identified, $TPR = \frac{TP}{TP + FN}$. A higher sensitivity means more true positives and fewer false negatives; a lower sensitivity means fewer true positives and more false negatives.

- Precision: measures the proportion of positive class predictions that actually belong to the positive class, $P = \frac{TP}{TP + FP}$. Precision does not quantify how many real positive examples were predicted as belonging to the negative class (false negatives), which is why it should be reported together with recall.

- F1-score: the harmonic mean of precision (P) and recall (R). It is recommended to use the F1-score rather than accuracy when a balance between precision and recall is needed and the class distribution is uneven. The F1-score is a special case of the weighted $F_\beta$ score, $F_\beta = \frac{(1 + \beta^2)\, P \cdot R}{\beta^2 P + R}$, where $\beta$ is chosen such that recall is considered $\beta$ times as important as precision. If $\beta = 1$, precision and recall are given equal importance and $F_1 = \frac{2 P R}{P + R}$. The choice of $\beta$, and thus the trade-off between precision and recall, depends on the classification problem.

- False Positive Rate (FPR): the proportion of benign samples incorrectly classified as malicious, $FPR = \frac{FP}{FP + TN}$. Together with the TPR, it is used to plot the ROC curve. In intrusion detection systems, it is important to have a low FPR; otherwise, the detection system is considered unreliable.

- Area Under the Curve (AUC): measures the two-dimensional area under the ROC curve. It ranges from 0 to 1 and indicates a model’s ability to distinguish between classes; models with a high AUC are said to have good skill. The ROC (Receiver Operating Characteristic) curve plots the TPR (y-axis) against the FPR (x-axis).

- True Negative Rate (TNR) or Specificity: measures the proportion of actual negatives that are correctly identified, $TNR = \frac{TN}{TN + FP} = 1 - FPR$. A higher specificity means more true negatives and a lower false positive rate; a lower specificity means fewer true negatives and more false positives.
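The confusion-matrix metrics listed above can be computed directly from the four counts. This is a minimal sketch that assumes binary classification with malicious requests as the positive class. The `ConfusionMatrix` type and the `metrics` function are hypothetical names. Returning 0.0 for an undefined ratio (for example, precision when nothing is flagged) is a convention chosen here, not a standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionMatrix:
    """Binary confusion matrix with 'malicious' as the positive class."""
    tp: int  # malicious requests flagged as malicious
    fp: int  # benign requests flagged as malicious (false alarms)
    tn: int  # benign requests accepted as benign
    fn: int  # malicious requests missed


def _ratio(num: int, den: int) -> float:
    # Return 0.0 instead of raising when the denominator is empty.
    return num / den if den else 0.0


def metrics(cm: ConfusionMatrix, beta: float = 1.0) -> dict:
    """Accuracy, recall (TPR), precision, F-beta, FPR and TNR from one matrix."""
    accuracy = _ratio(cm.tp + cm.tn, cm.tp + cm.tn + cm.fp + cm.fn)
    recall = _ratio(cm.tp, cm.tp + cm.fn)     # TPR / detection rate
    precision = _ratio(cm.tp, cm.tp + cm.fp)
    fpr = _ratio(cm.fp, cm.fp + cm.tn)
    tnr = _ratio(cm.tn, cm.tn + cm.fp)        # specificity = 1 - FPR
    b2 = beta * beta
    denom = b2 * precision + recall
    f_beta = (1 + b2) * precision * recall / denom if denom else 0.0
    return {
        "accuracy": accuracy,
        "recall": recall,
        "precision": precision,
        "f_beta": f_beta,
        "fpr": fpr,
        "tnr": tnr,
    }
```

For example, `metrics(ConfusionMatrix(tp=90, fp=10, tn=890, fn=10))` gives an accuracy of 0.98 and a recall, precision, and F1 of 0.9. By contrast, a model that labels every request as benign always has a recall of 0, however high its accuracy is on an imbalanced dataset.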

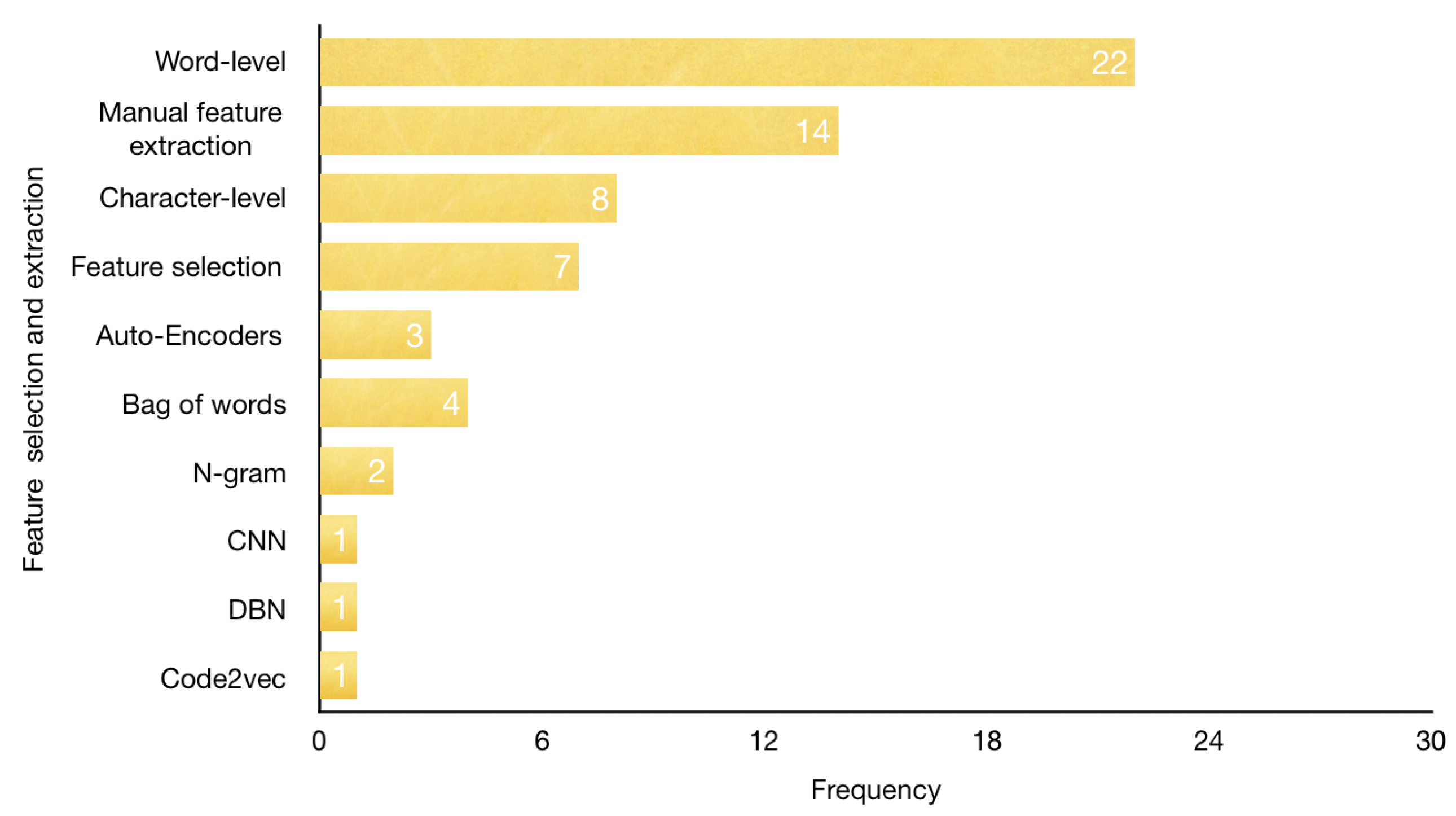
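The ROC curve and its AUC, as described in the metrics list above, can be computed without any library. The idea is to sweep the decision threshold from the highest detector score downwards and then integrate the resulting curve with the trapezoidal rule. This is an illustrative sketch that assumes binary labels (1 = malicious) and real-valued scores. The function names are hypothetical. Samples with equal scores are grouped into a single ROC step so that ties are handled consistently.

```python
def roc_points(labels, scores):
    """Return (FPR, TPR) points, lowering the threshold over each distinct score."""
    n_pos = sum(1 for y in labels if y == 1)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("ROC needs at least one malicious and one benign sample")
    # Highest score first; each group of equal scores is consumed as a whole below,
    # so the order of labels within a tie does not affect the points.
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / n_neg, tp / n_pos))
    return points


def auc_trapezoid(points):
    """Area under a piecewise-linear curve given as (x, y) points sorted by x."""
    return sum(
        (x1 - x0) * (y0 + y1) / 2.0
        for (x0, y0), (x1, y1) in zip(points, points[1:])
    )
```

For the toy scores `[0.1, 0.4, 0.35, 0.8]` with labels `[0, 0, 1, 1]`, `auc_trapezoid(roc_points(labels, scores))` returns 0.75. A detector that gives every sample the same score yields the diagonal and an AUC of 0.5.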

4.5. RQ5: What Are the Feature Selection and Extraction Approaches Used in DL-Based Web Attacks Detection Literature?

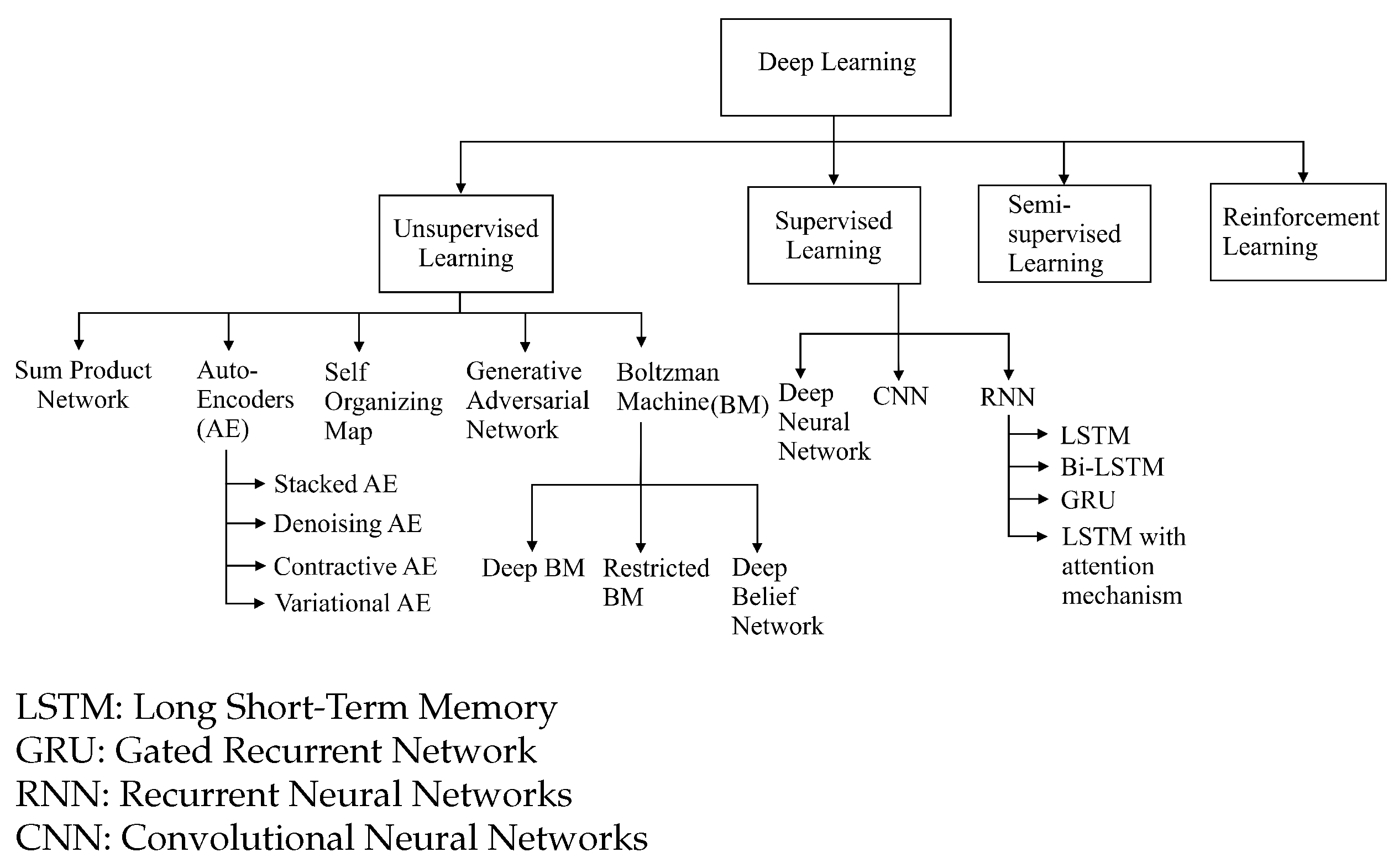

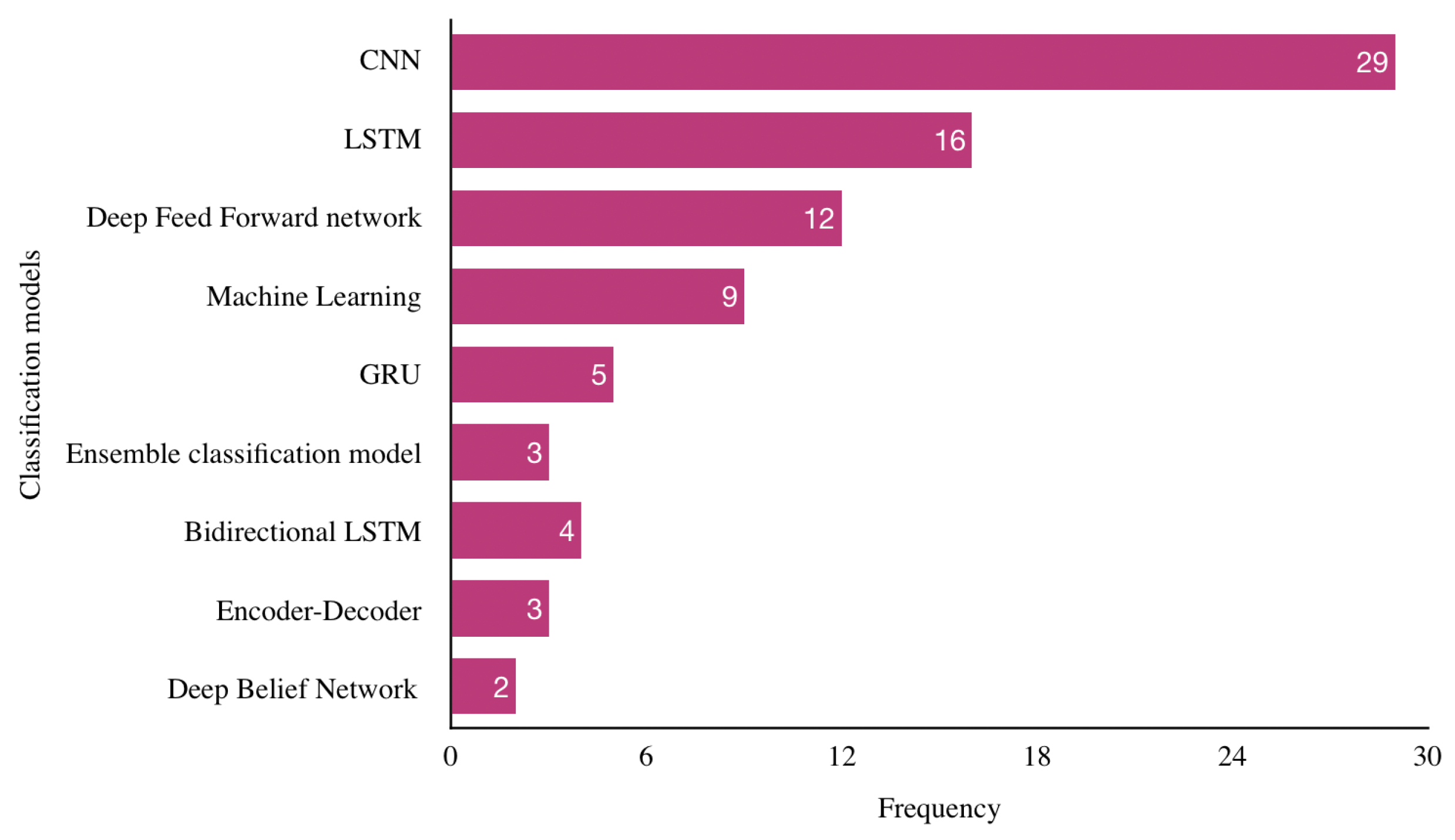

4.6. RQ6: What Classification Models Are Used to Detect Web Vulnerabilities?

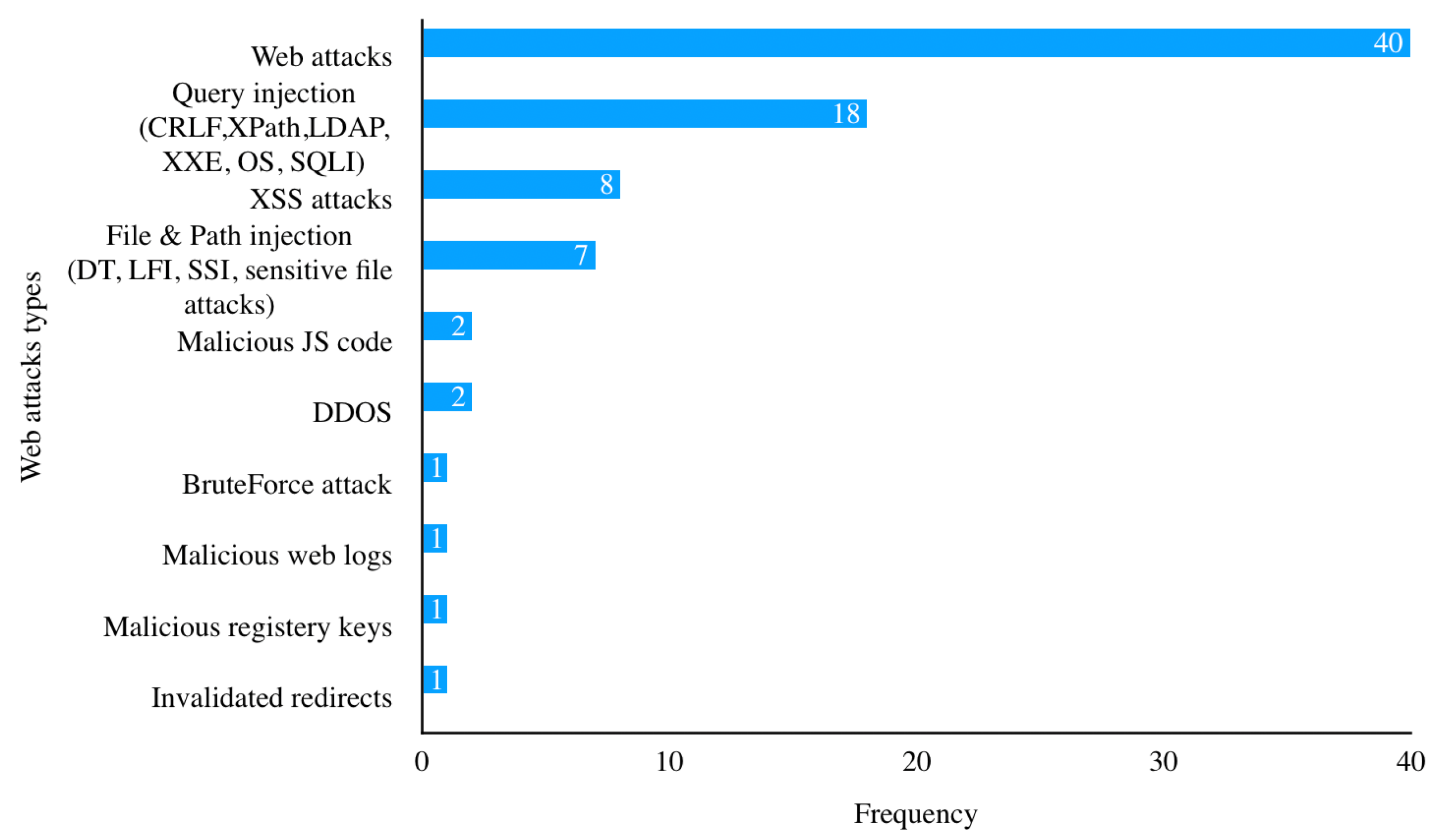

4.7. RQ7: What Types of Web Attacks Do the Proposed Approaches Detect?

4.8. RQ8: What Is the Performance of DL-Based Web Attacks Detection Models?

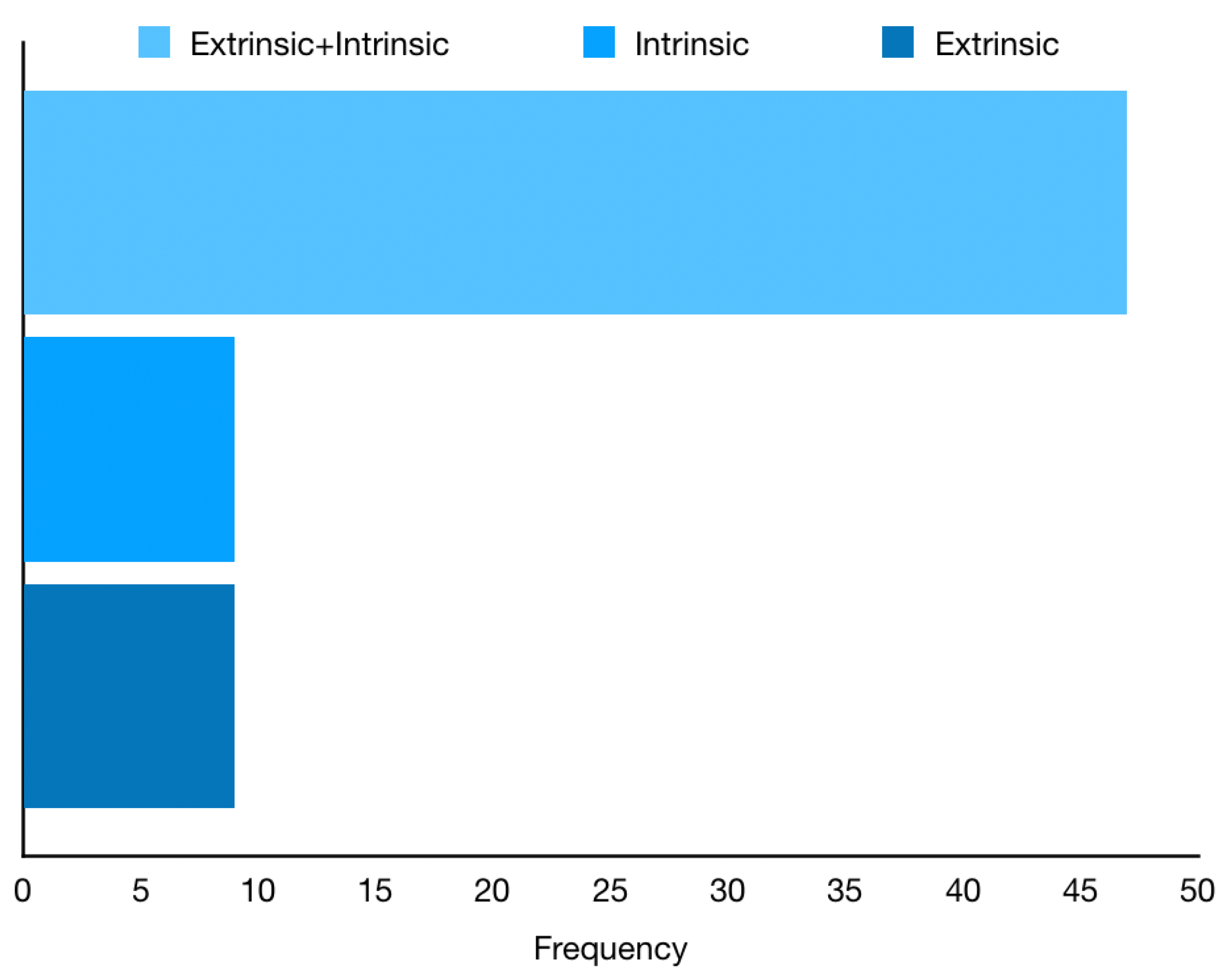

4.9. RQ9 and RQ10: What Is the Research Focus and Limitations According to the PS?

4.9.1. CNN or CNN Combined with LSTM or GRU

4.9.2. Recurrent Neural Networks

4.9.3. Encoder–Decoder Models

4.9.4. Deep Belief Networks

4.9.5. Ensemble Classification Models

4.9.6. Deep Feed Forward Networks

4.9.7. Deep Learning-Based Feature Extraction

5. Limitations

6. Conclusions

- Generate standard public real-world datasets: it is important to generate web attacks detection datasets that resolve the issues of current datasets. Indeed, most researchers used private datasets. Additionally, public datasets do not reflect the complexity of real-world web applications and do not include newly discovered web attacks. Moreover, when the same public dataset is adopted, different portions are used for training and testing, which complicates the comparison between approaches. Therefore, there is a need for standard, realistic public datasets that allow the research community to contribute efficiently to this field, facilitate comparative analyses between research works, and make the proposed models applicable to real-world web applications.

- Consider the standard classification performance metrics: some studies used a single performance metric (e.g., Accuracy) to evaluate their detection model, which is not sufficient in the case of imbalanced datasets. Moreover, most reviewed studies do not consider computational overhead as an evaluation metric, although it is a critical issue in real-world web applications. Few researchers provided a detailed report of false alarms (FPR), which is important because such a report helps in model retraining while saving analysts’ time. In addition, although most studies reported their results, none of them outlined the threats to the validity of their experiments. Therefore, it is important to identify standard performance metrics that researchers should consider in order to ensure that the proposed models are accurate, cost-effective, reliable, and reproducible.

- Explore advanced DL models in the field of web attacks detection: the existing DL-based web attacks detection literature lacks some advanced DL models. In particular, applying Generative Adversarial Networks (GAN) and Encoders–Decoders to web attacks detection is interesting because they have been successfully exploited in a similar domain, namely Network Intrusion Detection (e.g., Refs. [92,93,94,95]).

- Bridge the expertise in web application security and expertise in Machine Learning in order to build theoretical Machine Learning models tailored for web attacks detection: most existing DL models were designed with other applications in mind. For instance, Convolutional Neural Networks and Recurrent Neural Networks were originally developed to answer the specific requirements of image processing and Natural Language Processing problems, respectively. Additionally, because each web application has its own business logic, it would be valuable to have a theoretical Deep Learning model that detects web attacks without needing to learn each web application separately.

- Support secure learning: Machine Learning models are prone to adversarial attacks, in which attackers evade intrusion detection systems by exploiting the underlying Machine Learning model. For instance, an adversarial attack can disrupt model training by contaminating the training data with malicious data (data poisoning), thereby tricking the detection model into misclassifying malicious web requests as benign.

- Support online learning: almost all reviewed studies adopted offline learning to build their detection models. In both offline and online learning, the model is trained using batch algorithms (i.e., the cost function is computed over a group of instances). However, whereas in offline learning the model is also tested and validated using batch algorithms, in online learning the model is tested on real-time data, and the cost function is re-evaluated over a single data instance at a time. In industry, models trained online are preferred over models trained offline because the latter are generally evaluated against outdated and simplistic datasets. Therefore, researchers should give more consideration to online learning in order to reduce the gap between research and industry in the DL-based web attacks detection area.

- Support adaptive incremental learning: according to a recent study conducted in 2020 [97], 42% of attacks are zero-day attacks (i.e., new or unknown attacks), while 58% are based on known vulnerabilities. As with traditional detection systems, Machine Learning-based approaches struggle to detect zero-day attacks because they rely on past, known attacks. It is therefore fundamental to retrain ML models regularly to account for these attacks. However, retraining models from scratch is time-consuming and computationally intensive, so incremental learning, which updates already-trained models as new data is generated, is essential.

- Generate a corpus for web attacks detection: although DL-based web attacks detection problems are similar to Natural Language Processing (NLP) problems, no corpus exists yet to help develop NLP-like models for web attacks detection.

- Define a standard and transparent research methodology: it is important to define a transparent research protocol that researchers should follow when proposing a DL-based method for the detection of web attacks. Such a methodology will improve the quality of research works and facilitate their comparison.

- Develop a common framework for comparing DL-based web attacks detection models: there is a large diversity in performance measures, datasets, and platforms used in reviewed studies, which makes a comparative analysis between research works difficult if not impossible. Therefore, it is fundamental to provide a standardization of datasets, performance metrics, environments, as well as a transparent research methodology that allows comparing the different approaches and evaluating the models’ suitability for real-world web applications.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Technologies, P. Web Applications Vulnerabilities and Threats: Statistics for 2019. 2020. Available online: https://www.ptsecurity.com/ww-en/analytics/web-vulnerabilities-2020/ (accessed on 20 February 2022).

- Noman, M.; Iqbal, M.; Manzoor, A. A Survey on Detection and Prevention of Web Vulnerabilities. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 521–540.

- ASVS. Application Security Verification Standard. Available online: https://www.owasp.org/index.php/ASVS (accessed on 20 February 2022).

- SAMMS. OWASP Software Assurance Maturity Model. Available online: https://www.owasp.org/index.php/SAMM (accessed on 20 February 2022).

- Jovanovic, N.; Kruegel, C.; Kirda, E. Pixy: A static analysis tool for detecting web application vulnerabilities. In Proceedings of the 2006 IEEE Symposium on Security and Privacy (S&P’06), Berkeley/Oakland, CA, USA, 21–24 May 2006.

- Medeiros, I.; Neves, N.; Correia, M. Detecting and removing web application vulnerabilities with static analysis and data mining. IEEE Trans. Reliab. 2015, 65, 54–69.

- Sun, F.; Xu, L.; Su, Z. Static Detection of Access Control Vulnerabilities in Web Applications. Available online: https://www.usenix.org/event/sec11/tech/full_papers/Sun.pdf (accessed on 20 February 2022).

- Medeiros, I.; Neves, N.; Correia, M. DEKANT: A static analysis tool that learns to detect web application vulnerabilities. In Proceedings of the 25th International Symposium on Software Testing and Analysis, Saarbrücken, Germany, 18–22 July 2016; pp. 1–11.

- Agosta, G.; Barenghi, A.; Parata, A.; Pelosi, G. Automated security analysis of dynamic web applications through symbolic code execution. In Proceedings of the 2012 Ninth International Conference on Information Technology-New Generations, Las Vegas, NV, USA, 16–18 April 2012; pp. 189–194.

- Falana, O.J.; Ebo, I.O.; Tinubu, C.O.; Adejimi, O.A.; Ntuk, A. Detection of Cross-Site Scripting Attacks using Dynamic Analysis and Fuzzy Inference System. In Proceedings of the 2020 International Conference in Mathematics, Computer Engineering and Computer Science (ICMCECS), Ayobo, Nigeria, 18–21 March 2020; pp. 1–6.

- Wang, R.; Xu, G.; Zeng, X.; Li, X.; Feng, Z. TT-XSS: A novel taint tracking based dynamic detection framework for DOM Cross-Site Scripting. J. Parallel Distrib. Comput. 2018, 118, 100–106.

- Weissbacher, M.; Robertson, W.; Kirda, E.; Kruegel, C.; Vigna, G. Zigzag: Automatically Hardening Web Applications against Client-Side Validation Vulnerabilities. Available online: https://www.usenix.org/system/files/conference/usenixsecurity15/sec15-paper-weissbacher.pdf (accessed on 20 February 2022).

- Ruse, M.E.; Basu, S. Detecting cross-site scripting vulnerability using concolic testing. In Proceedings of the 2013 10th International Conference on Information Technology: New Generations, Las Vegas, NV, USA, 15–17 April 2013; pp. 633–638.

- Mouzarani, M.; Sadeghiyan, B.; Zolfaghari, M. Detecting injection vulnerabilities in executable codes with concolic execution. In Proceedings of the 2017 8th IEEE International Conference on Software Engineering and Service Science (ICSESS), Beijing, China, 24–26 November 2017; pp. 50–57.

- Duchene, F.; Rawat, S.; Richier, J.L.; Groz, R. KameleonFuzz: Evolutionary fuzzing for black-box XSS detection. In Proceedings of the 4th ACM Conference on Data and Application Security and Privacy, San Antonio, TX, USA, 3–5 March 2014; pp. 37–48.

- Deepa, G.; Thilagam, P.S.; Khan, F.A.; Praseed, A.; Pais, A.R.; Palsetia, N. Black-box detection of XQuery injection and parameter tampering vulnerabilities in web applications. Int. J. Inf. Secur. 2018, 17, 105–120.

- Pellegrino, G.; Balzarotti, D. Toward Black-Box Detection of Logic Flaws in Web Applications. Available online: https://s3.eurecom.fr/docs/ndss14_pellegrino.pdf (accessed on 20 February 2022).

- Duchene, F.; Groz, R.; Rawat, S.; Richier, J.L. XSS vulnerability detection using model inference assisted evolutionary fuzzing. In Proceedings of the 2012 IEEE Fifth International Conference on Software Testing, Verification and Validation, Montreal, QC, Canada, 17–21 April 2012; pp. 815–817.

- Khalid, M.N.; Farooq, H.; Iqbal, M.; Alam, M.T.; Rasheed, K. Predicting web vulnerabilities in web applications based on machine learning. In Proceedings of the International Conference on Intelligent Technologies and Applications, Bahawalpur, Pakistan, 23–25 October 2018.

- Anbiya, D.R.; Purwarianti, A.; Asnar, Y. Vulnerability Detection in PHP Web Application Using Lexical Analysis Approach with Machine Learning. In Proceedings of the 2018 5th International Conference on Data and Software Engineering (ICoDSE), Mataram, Indonesia, 7–8 November 2018; pp. 1–6.

- Abunadi, I.; Alenezi, M. An Empirical Investigation of Security Vulnerabilities within Web Applications. J. Univers. Comput. Sci. 2016, 22, 537–551.

- Berman, D.S.; Buczak, A.L.; Chavis, J.S.; Corbett, C.L. A survey of deep learning methods for cyber security. Information 2019, 10, 122.

- Torres, J.M.; Comesaña, C.I.; Garcia-Nieto, P.J. Machine learning techniques applied to cybersecurity. Int. J. Mach. Learn. Cybern. 2019, 10, 2823–2836.

- Sharma, A.; Singh, A.; Sharma, N.; Kaushik, I.; Bhushan, B. Security countermeasures in web based application. In Proceedings of the 2019 2nd International Conference on Intelligent Computing, Instrumentation and Control Technologies (ICICICT), Kannur, India, 5–6 July 2019; Volume 1, pp. 1236–1241.

- Fredj, O.B.; Cheikhrouhou, O.; Krichen, M.; Hamam, H.; Derhab, A. An OWASP top ten driven survey on web application protection methods. In Proceedings of the International Conference on Risks and Security of Internet and Systems, Paris, France, 4–6 November 2020.

- Mouli, V.R.; Jevitha, K. Web services attacks and security-a systematic literature review. Procedia Comput. Sci. 2016, 93, 870–877.

- Kaur, J.; Garg, U. A Detailed Survey on Recent XSS Web-Attacks Machine Learning Detection Techniques. In Proceedings of the 2021 2nd Global Conference for Advancement in Technology (GCAT), Bangalore, India, 1–3 October 2021; pp. 1–6.

- Kitchenham, B.; Charters, S.M. Guidelines for Performing Systematic Literature Reviews in Software Engineering. Available online: https://www.researchgate.net/profile/Barbara-Kitchenham/publication/302924724_Guidelines_for_performing_Systematic_Literature_Reviews_in_Software_Engineering/links/61712932766c4a211c03a6f7/Guidelines-for-performing-Systematic-Literature-Reviews-in-Software-Engineering.pdf (accessed on 20 February 2022).

- Kitchenham, B. Procedures for performing systematic reviews. Keele UK Keele Univ. 2004, 33, 1–26.

- Luo, A.; Huang, W.; Fan, W. A CNN-based Approach to the Detection of SQL Injection Attacks. In Proceedings of the 2019 IEEE/ACIS 18th International Conference on Computer and Information Science (ICIS), Beijing, China, 17–19 June 2019; pp. 320–324.

- Yadav, S.; Subramanian, S. Detection of Application Layer DDoS attack by feature learning using Stacked AutoEncoder. In Proceedings of the 2019 IEEE/ACIS 18th International Conference on Computer and Information Science (ICIS), Beijing, China, 17–19 June 2019; pp. 361–366.

- Luo, C.; Su, S.; Sun, Y.; Tan, Q.; Han, M.; Tian, Z. A convolution-based system for malicious URLS detection. Comput. Mater. Contin. 2020, 62, 399–411.

- Tang, P.; Qiu, W.; Huang, Z.; Lian, H.; Liu, G. Detection of SQL injection based on artificial neural network. Knowl.-Based Syst. 2020, 190, 105528.

- Wang, Y.; Cai, W.D.; Wei, P.C. A deep learning approach for detecting malicious JavaScript code. Secur. Commun. Netw. 2016, 9, 1520–1534.

- Sheykhkanloo, N. Employing Neural Networks for the detection of SQL injection attack. In Proceedings of the 7th International Conference on Security of Information and Networks, Glasgow, Scotland, UK, 9–11 September 2014; pp. 318–323.

- Saxe, J.; Harang, R.; Wild, C.; Sanders, H. A deep learning approach to fast, format-agnostic detection of malicious web content. In Proceedings of the 2018 IEEE Security and Privacy Workshops (SPW), San Francisco, CA, USA, 24 May 2018; pp. 8–14.

- Gong, X.; Zhou, Y.; Bi, Y.; He, M.; Sheng, S.; Qiu, H.; He, R.; Lu, J. Estimating Web Attack Detection via Model Uncertainty from Inaccurate Annotation. In Proceedings of the 2019 6th IEEE International Conference on Cyber Security and Cloud Computing (CSCloud)/2019 5th IEEE International Conference on Edge Computing and Scalable Cloud (EdgeCom), Paris, France, 21–23 June 2019; pp. 53–58.

- Zhang, M.; Xu, B.; Bai, S.; Lu, S.; Lin, Z. A Deep Learning Method to Detect Web Attacks Using a Specially Designed CNN. In Proceedings of the 24th International Conference on Neural Information Processing (ICONIP), Guangzhou, China, 14–18 November 2017; pp. 828–836.

- Wang, J.; Zhou, Z.; Chen, J. Evaluating CNN and LSTM for web attack detection. In Proceedings of the 10th International Conference on Machine Learning and Computing, Macau, China, 26–28 February 2018; pp. 283–287.

- Tian, Z.; Luo, C.; Qiu, J.; Du, X.; Guizani, M. A Distributed Deep Learning System for Web Attack Detection on Edge Devices. IEEE Trans. Ind. Inform. 2020, 16, 1963–1971.

- Saxe, J.; Berlin, K. eXpose: A character-level convolutional neural network with embeddings for detecting malicious URLs, file paths and registry keys. arXiv 2017, arXiv:1702.08568.

- Niu, Q.; Li, X. A High-performance Web Attack Detection Method based on CNN-GRU Model. In Proceedings of the 2020 IEEE 4th Information Technology, Networking, Electronic and Automation Control Conference (ITNEC), Chongqing, China, 12–14 June 2020; pp. 804–808.

- Kaur, S.; Singh, M. Hybrid intrusion detection and signature generation using Deep Recurrent Neural Networks. Neural Comput. Appl. 2020, 32, 7859–7877.

- Kadhim, R.; Gaata, M. A hybrid of CNN and LSTM methods for securing web application against cross-site scripting attack. Indones. J. Electr. Eng. Comput. Sci. 2020, 21, 1022–1029.

- Manimurugan, S.; Manimegalai, P.; Valsalan, P.; Krishnadas, J.; Narmatha, C. Intrusion detection in cloud environment using hybrid genetic algorithm and back propagation neural network. Int. J. Commun. Syst. 2020.

- Smitha, R.; Hareesha, K.; Kundapur, P. A machine learning approach for web intrusion detection: MAMLS perspective. Adv. Intell. Syst. Comput. 2019, 900, 119–133.

- Moradi Vartouni, A.; Teshnehlab, M.; Sedighian Kashi, S. Leveraging deep neural networks for anomaly-based web application firewall. IET Inf. Secur. 2019, 13, 352–361.

- Zhang, K. A machine learning based approach to identify SQL injection vulnerabilities. In Proceedings of the 2019 34th IEEE/ACM International Conference on Automated Software Engineering (ASE), San Diego, CA, USA, 11–15 November 2019; pp. 1286–1288.

- Liu, T.; Qi, Y.; Shi, L.; Yan, J. Locate-then-DetecT: Real-time web attack detection via attention-based deep neural networks. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence (IJCAI-19), Macao, China, 10–16 August 2019; pp. 4725–4731.

- Tekerek, A. A novel architecture for web-based attack detection using convolutional neural network. Comput. Secur. 2021, 100, 102096.

- Song, X.; Chen, C.; Cui, B.; Fu, J. Malicious javascript detection based on bidirectional LSTM model. Appl. Sci. 2020, 10, 3440.

- Arshad, M.; Hussain, M. A real-time LAN/WAN and web attack prediction framework using hybrid machine learning model. Int. J. Eng. Technol. (UAE) 2018, 7, 1128–1136.

- Rong, W.; Zhang, B.; Lv, X. Malicious Web Request Detection Using Character-Level CNN. In Machine Learning for Cyber Security; Springer International Publishing: Berlin/Heidelberg, Germany, 2019; pp. 6–16.

- Nguyen, H.; Franke, K. Adaptive Intrusion Detection System via online machine learning. In Proceedings of the 2012 12th International Conference on Hybrid Intelligent Systems (HIS), Pune, India, 4–7 December 2012; pp. 271–277.

- Mokbal, F.; Dan, W.; Imran, A.; Jiuchuan, L.; Akhtar, F.; Xiaoxi, W. MLPXSS: An Integrated XSS-Based Attack Detection Scheme in Web Applications Using Multilayer Perceptron Technique. IEEE Access 2019, 7, 100567–100580.

- Kim, A.; Park, M.; Lee, D.H. AI-IDS: Application of Deep Learning to Real-Time Web Intrusion Detection. IEEE Access 2020, 8, 70245–70261.

- Gong, X.; Lu, J.; Zhou, Y.; Qiu, H.; He, R. Model Uncertainty Based Annotation Error Fixing for Web Attack Detection. J. Signal Process. Syst. 2020.

- Vartouni, A.; Kashi, S.; Teshnehlab, M. An anomaly detection method to detect web attacks using Stacked Auto-Encoder. In Proceedings of the 2018 6th Iranian Joint Congress on Fuzzy and Intelligent Systems (CFIS), Kerman, Iran, 28 February–2 March 2018; pp. 131–134.

- Deshpande, G.; Kulkarni, S. Modeling and Mitigation of XPath Injection Attacks for Web Services Using Modular Neural Networks. In Proceedings of the 5th International Conference on Advanced Computing, Networking, and Informatics (ICACNI), Goa, India, 1–3 June 2017.

- Liang, J.; Zhao, W.; Ye, W. Anomaly-based web attack detection: A deep learning approach. In Proceedings of the 2017 VI International Conference on Network, Communication and Computing, Kunming, China, 8–10 December 2017; pp. 80–85.

- Jin, X.; Cui, B.; Yang, J.; Cheng, Z. Payload-Based Web Attack Detection Using Deep Neural Network. In Proceedings of the 12th IEEE International Conference on Broadband Wireless Computing, Communication and Applications (BWCCA), Barcelona, Spain, 8–10 November 2017.

- Althubiti, S.; Nick, W.; Mason, J.; Yuan, X.; Esterline, A. Applying Long Short-Term Memory Recurrent Neural Network for Intrusion Detection. S. Afr. Comput. J. 2018, 56, 136–154.

- Zhang, H.; Zhao, B.; Yuan, H.; Zhao, J.; Yan, X.; Li, F. SQL injection detection based on deep belief network. In Proceedings of the 3rd International Conference on Computer Science and Application Engineering, Sanya, China, 22–24 October 2019; pp. 1–6.

- Jana, I.; Oprea, A. AppMine: Behavioral analytics for web application vulnerability detection. In Proceedings of the 2019 ACM SIGSAC Conference on Cloud Computing Security Workshop, London, UK, 11 November 2019; pp. 69–80.

- Xie, X.; Ren, C.; Fu, Y.; Xu, J.; Guo, J. SQL Injection Detection for Web Applications Based on Elastic-Pooling CNN. IEEE Access 2019, 7, 151475–151481.

- Qin, Z.Q.; Ma, X.K.; Wang, Y.J. Attentional Payload Anomaly Detector for Web Applications. In Neural Information Processing; Springer International Publishing: Berlin/Heidelberg, Germany, 2018; pp. 588–599.

- Sheykhkanloo, N. SQL-IDS: Evaluation of SQLi attack detection and classification based on machine learning techniques. In Proceedings of the 8th International Conference on Security of Information and Networks, Sochi, Russia, 8–10 September 2015.

- Hao, S.; Long, J.; Yang, Y. BL-IDS: Detecting Web Attacks Using Bi-LSTM Model Based on Deep Learning. In Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering; Springer International Publishing: Berlin/Heidelberg, Germany, 2019; pp. 551–563.

- Fidalgo, A.; Medeiros, I.; Antunes, P.; Neves, N. Towards a Deep Learning Model for Vulnerability Detection on Web Application Variants. In Proceedings of the 2020 IEEE International Conference on Software Testing, Verification and Validation Workshops (ICSTW), Porto, Portugal, 24–28 October 2020; pp. 465–476.

- Gong, X.; Lu, J.; Wang, Y.; Qiu, H.; He, R.; Qiu, M. CECoR-Net: A Character-Level Neural Network Model for Web Attack Detection. In Proceedings of the 2019 IEEE International Conference on Smart Cloud (SmartCloud), Tokyo, Japan, 10–12 December 2019; pp. 98–103.

- Ito, M.; Iyatomi, H. Web application firewall using character-level convolutional neural network. In Proceedings of the 2018 IEEE 14th International Colloquium on Signal Processing & Its Applications (CSPA), Penang, Malaysia, 9–10 March 2018; pp. 103–106.

- Zhao, J.; Wang, N.; Ma, Q.; Cheng, Z. Classifying Malicious URLs Using Gated Recurrent Neural Networks. In Proceedings of the 12th International Conference on Innovative Mobile and Internet Services in Ubiquitous Computing (IMIS), Abertay University, Matsue, Japan, 4–6 July 2018. [Google Scholar] [CrossRef]

- Tang, R.; Yang, Z.; Li, Z.; Meng, W.; Wang, H.; Li, Q.; Sun, Y.; Pei, D.; Wei, T.; Xu, Y.; et al. ZeroWall: Detecting Zero-Day Web Attacks through Encoder-Decoder Recurrent Neural Networks. In Proceedings of the IEEE INFOCOM 2020—IEEE Conference on Computer Communications, Toronto, ON, Canada, 6–9 July 2020; pp. 2479–2488. [Google Scholar] [CrossRef]

- Kuang, X.; Zhang, M.; Li, H.; Zhao, G.; Cao, H.; Wu, Z.; Wang, X. DeepWAF: Detecting Web Attacks Based on CNN and LSTM Models. In Proceedings of the International Symposium on Cyberspace Safety and Security, Guangzhou, China, 1–3 December 2019; pp. 121–136.

- Luo, C.; Tan, Z.; Min, G.; Gan, J.; Shi, W.; Tian, Z. A Novel Web Attack Detection System for Internet of Things via Ensemble Classification. IEEE Trans. Ind. Inform. 2021, 17, 5810–5818.

- Fang, Y.; Li, Y.; Liu, L.; Huang, C. DeepXSS: Cross Site Scripting Detection Based on Deep Learning. In Proceedings of the 2018 International Conference on Computing and Artificial Intelligence, Sanya, China, 21–23 December 2018; pp. 47–51.

- Mendonca, R.; Teodoro, A.; Rosa, R.; Saadi, M.; Melgarejo, D.; Nardelli, P.; Rodriguez, D. Intrusion Detection System Based on Fast Hierarchical Deep Convolutional Neural Network. IEEE Access 2021, 9, 61024–61034.

- Yang, W.; Zuo, W.; Cui, B. Detecting Malicious URLs via a Keyword-Based Convolutional Gated-Recurrent-Unit Neural Network. IEEE Access 2019, 7, 29891–29900.

- Jemal, I.; Haddar, M.; Cheikhrouhou, O.; Mahfoudhi, A. Malicious Http Request Detection Using Code-Level Convolutional Neural Network. In Proceedings of the International Conference on Risks and Security of Internet and Systems, Paris, France, 4–6 November 2020; Volume 12528, pp. 317–324.

- Yu, L.; Chen, L.; Dong, J.; Li, M.; Liu, L.; Zhao, B.; Zhang, C. Detecting Malicious Web Requests Using an Enhanced TextCNN. In Proceedings of the 2020 IEEE 44th Annual Computers, Software, and Applications Conference (COMPSAC), Madrid, Spain, 13–17 July 2020; pp. 768–777.

- Jemal, I.; Haddar, M.A.; Cheikhrouhou, O.; Mahfoudhi, A. Performance evaluation of Convolutional Neural Network for web security. Comput. Commun. 2021, 175, 58–67.

- Tripathy, D.; Gohil, R.; Halabi, T. Detecting SQL Injection Attacks in Cloud SaaS using Machine Learning. In Proceedings of the 2020 IEEE 6th Intl Conference on Big Data Security on Cloud (BigDataSecurity), IEEE Intl Conference on High Performance and Smart Computing (HPSC), and IEEE Intl Conference on Intelligent Data and Security (IDS), Baltimore, MD, USA, 25–27 May 2020; pp. 145–150.

- Melicher, W.; Fung, C.; Bauer, L.; Jia, L. Towards a Lightweight, Hybrid Approach for Detecting DOM XSS Vulnerabilities with Machine Learning. In Proceedings of the Web Conference 2021, Ljubljana, Slovenia, 19–23 April 2021; pp. 2684–2695.

- Pan, Y.; Sun, F.; Teng, Z.; White, J.; Schmidt, D.; Staples, J.; Krause, L. Detecting web attacks with end-to-end deep learning. J. Internet Serv. Appl. 2019, 10, 16.

- Manimurugan, S.; Al-Mutairi, S.; Aborokbah, M.M.; Chilamkurti, N.; Ganesan, S.; Patan, R. Effective attack detection in internet of medical things smart environment using a deep belief neural network. IEEE Access 2020, 8, 77396–77404.

- Stephan, J.J.; Mohammed, S.D.; Abbas, M.K. Neural network approach to web application protection. Int. J. Inf. Educ. Technol. 2015, 5, 150.

- Jemal, I.; Haddar, M.; Cheikhrouhou, O.; Mahfoudhi, A. ASCII Embedding: An Efficient Deep Learning Method for Web Attacks Detection. Commun. Comput. Inf. Sci. 2021, 1322, 286–297.

- Maurel, H.; Vidal, S.; Rezk, T. Statically Identifying XSS using Deep Learning. In Proceedings of SECRYPT 2021, the 18th International Conference on Security and Cryptography, Online Streaming, 6–8 July 2021.

- Karacan, H.; Sevri, M. A Novel Data Augmentation Technique and Deep Learning Model for Web Application Security. IEEE Access 2021, 9, 150781–150797.

- Chen, T.; Chen, Y.; Lv, M.; He, G.; Zhu, T.; Wang, T.; Weng, Z. A Payload Based Malicious HTTP Traffic Detection Method Using Transfer Semi-Supervised Learning. Appl. Sci. 2021, 11, 7188.

- Shahid, W.B.; Aslam, B.; Abbas, H.; Khalid, S.B.; Afzal, H. An enhanced deep learning based framework for web attacks detection, mitigation and attacker profiling. J. Netw. Comput. Appl. 2022, 198, 103270.

- Lin, Z.; Shi, Y.; Xue, Z. IDSGAN: Generative adversarial networks for attack generation against intrusion detection. arXiv 2018, arXiv:1809.02077.

- Shahriar, M.H.; Haque, N.I.; Rahman, M.A.; Alonso, M. G-IDS: Generative adversarial networks assisted intrusion detection system. In Proceedings of the 2020 IEEE 44th Annual Computers, Software, and Applications Conference (COMPSAC), Madrid, Spain, 13–17 July 2020; pp. 376–385.

- Farahnakian, F.; Heikkonen, J. A deep auto-encoder based approach for intrusion detection system. In Proceedings of the 2018 20th International Conference on Advanced Communication Technology (ICACT), Chuncheon, Korea, 11–14 February 2018; pp. 178–183.

- Gharib, M.; Mohammadi, B.; Dastgerdi, S.H.; Sabokrou, M. AutoIDS: Auto-encoder based method for intrusion detection system. arXiv 2019, arXiv:1911.03306.

- Alon, U.; Zilberstein, M.; Levy, O.; Yahav, E. code2vec: Learning distributed representations of code. Proc. ACM Program. Lang. 2019, 3, 1–29.

- Institute, P. Zero Day Attacks. Available online: https://cutt.ly/rYhswNo (accessed on 20 February 2022).

| RQ No. | Question | Motivation |
|---|---|---|
| RQ1-1 | What is the annual number of studies on DL-based web attacks detection? | Estimate the published articles per year on the DL-based web attacks detection. |
| RQ1-2 | What is the percentage of studies published in journals and conferences? | Compare the percentage of studies on the DL-based web attacks detection published in journals and conferences. |
| RQ2 | What datasets are used to evaluate the proposed approaches for DL-based web attacks detection? | Identify the datasets that are commonly used in the evaluation of prediction models. |
| RQ3 | What frameworks and platforms are used to implement the proposed solutions for DL-based web attacks detection? | Give an overview of the available frameworks and platforms used for developing DL-based web attacks detection models. |
| RQ4 | What performance metrics are used in DL-based web attacks detection literature? | Enumerate the most commonly used performance metrics in web intrusion detection systems. |
| RQ5 | What are the feature selection and extraction approaches used in DL-based web attacks detection literature? | Identify the feature extraction and selection approaches used for DL-based web attacks detection models. |
| RQ6 | What classification models are used to detect web vulnerabilities? | Identify the classification models that are commonly used for detecting web attacks. |
| RQ7 | What types of web attacks do the proposed approaches detect? | Identify whether the proposed solutions for DL-based web attacks detection have a general purpose or target a specific type of web attacks. |
| RQ8 | What is the performance of DL-based web attacks detection models? | Report the experimentation details of the proposed DL-based web attacks detection models. |
| RQ9 | What is the research focus of the PS? | Identify the main objective of the PS. |
| RQ10 | What limitations do the proposed solutions for DL-based web attacks detection have according to the authors? | Identify the limitations of the studies as stated by their authors. |
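RQ4 concerns the performance metrics reported by the PS. Most of the metrics that recur in the summary of results (Accuracy, Precision, F1-score, TPR, TNR, FPR) are simple ratios over the four cells of a binary confusion matrix, with the attack class taken as positive. The Python sketch below spells out those standard definitions. It is an illustration, not code from any reviewed study. Returning 0.0 on a zero denominator in `safe_div` is an assumed convention. AUC is omitted because it needs ranked classifier scores rather than counts.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    """Binary confusion-matrix counts, with 'attack' as the positive class."""
    tp: int  # attacks correctly flagged
    fp: int  # benign requests wrongly flagged
    tn: int  # benign requests correctly passed
    fn: int  # attacks missed


def safe_div(num: float, den: float) -> float:
    """Return 0.0 rather than raising on a zero denominator (an assumed convention)."""
    return num / den if den else 0.0


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (TPR)."""
    return safe_div(2 * precision * recall, precision + recall)


def detection_metrics(cm: ConfusionMatrix) -> dict[str, float]:
    """Compute the count-based metrics that recur across the primary studies."""
    total = cm.tp + cm.fp + cm.tn + cm.fn
    precision = safe_div(cm.tp, cm.tp + cm.fp)
    tpr = safe_div(cm.tp, cm.tp + cm.fn)  # recall, also called detection rate
    tnr = safe_div(cm.tn, cm.tn + cm.fp)  # specificity
    fpr = safe_div(cm.fp, cm.fp + cm.tn)  # false alarm rate, 1 - TNR when benign samples exist
    return {
        "accuracy": safe_div(cm.tp + cm.tn, total),
        "precision": precision,
        "tpr": tpr,
        "tnr": tnr,
        "fpr": fpr,
        "f1": f1_score(precision, tpr),
    }


if __name__ == "__main__":
    # Toy counts: 110 attacks and 90 benign requests.
    print(detection_metrics(ConfusionMatrix(tp=90, fp=10, tn=80, fn=20)))
```

F1 is the harmonic mean of precision and TPR, so a reported triple can be sanity-checked. For example, PS1 reports a precision of 0.9898 and a TPR of 1. `f1_score(0.9898, 1.0)` gives about 0.995, which agrees with PS1's reported F1-score of 0.99.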

| QQ No. | Question |
|---|---|
| QQ1 | Is the objective of the study clear? |
| QQ2 | Is the data collection procedure clearly defined? |
| QQ3 | Does the study provide any tool or source online? |
| QQ4 | Is there a comparison among techniques? |
| QQ5 | Does the author provide sufficient details about the experiment? |
| QQ6 | Are problems of validity or trust of the results obtained adequately discussed? |
| QQ7 | Does the study clearly define the performance parameters used? |
| QQ8 | Is there a clearly defined relationship between objectives, data obtained, interpretation, and conclusions? |

| ID | Ref | Score | ID | Ref | Score |
|---|---|---|---|---|---|
| PS1 | [30] | 6.5 | PS29 | [31] | 6 |
| PS2 | [32] | 5.5 | PS30 | [33] | 6 |
| PS3 | [34] | 6 | PS31 | [35] | 4 |
| PS4 | [36] | 6 | PS32 | [37] | 4.5 |
| PS5 | [38] | 4 | PS33 | [39] | 4.5 |
| PS6 | [40] | 5.5 | PS34 | [41] | 6 |
| PS7 | [42] | 5 | PS35 | [43] | 4.5 |
| PS8 | [44] | 5 | PS36 | [45] | 4.25 |
| PS9 | [46] | 5 | PS37 | [47] | 5 |
| PS10 | [48] | 5.5 | PS38 | [49] | 5.5 |
| PS11 | [50] | 5 | PS39 | [51] | 6 |
| PS12 | [52] | 5 | PS40 | [53] | 5.5 |
| PS13 | [54] | 5 | PS41 | [55] | 5.75 |
| PS14 | [56] | 6.5 | PS42 | [57] | 6 |
| PS15 | [58] | 4.5 | PS43 | [59] | 4.5 |
| PS16 | [60] | 5.5 | PS44 | [61] | 3.5 |
| PS17 | [62] | 5 | PS45 | [63] | 6.5 |
| PS18 | [64] | 7 | PS46 | [65] | 4 |
| PS19 | [66] | 5 | PS47 | [67] | 5 |
| PS20 | [68] | 5 | PS48 | [69] | 4.5 |
| PS21 | [70] | 6 | PS49 | [71] | 6 |
| PS22 | [72] | 5 | PS50 | [73] | 4.5 |
| PS23 | [74] | 4.75 | PS51 | [75] | 5.5 |
| PS24 | [76] | 6 | PS52 | [77] | 5.5 |
| PS25 | [78] | 5.5 | PS53 | [79] | 5 |
| PS26 | [80] | 5 | PS54 | [81] | 5 |
| PS27 | [82] | 5.5 | PS55 | [83] | 8 |
| PS28 | [84] | 6 | PS56 | [85] | 5 |
| PS57 | [86] | 5 | | | |
| PS58 | [87] | 4.5 | PS59 | [88] | 7 |
| PS60 | [50] | 5 | PS61 | [89] | 6.5 |
| PS62 | [90] | 6 | PS63 | [91] | 6 |
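The quality scores in the table above can be summarized in a few lines of Python. This sketch transcribes the 63 scores in PS order. It reports their range, mean and median, plus the studies that reach a given cut-off. The cut-off values are arbitrary, chosen only for illustration. They are not thresholds used by this review.

```python
import statistics

# Quality scores transcribed from the table above, in order PS1 ... PS63.
QUALITY_SCORES = [
    6.5, 5.5, 6, 6, 4, 5.5, 5, 5, 5, 5.5,       # PS1-PS10
    5, 5, 5, 6.5, 4.5, 5.5, 5, 7, 5, 5,         # PS11-PS20
    6, 5, 4.75, 6, 5.5, 5, 5.5, 6, 6, 6,        # PS21-PS30
    4, 4.5, 4.5, 6, 4.5, 4.25, 5, 5.5, 6, 5.5,  # PS31-PS40
    5.75, 6, 4.5, 3.5, 6.5, 4, 5, 4.5, 6, 4.5,  # PS41-PS50
    5.5, 5.5, 5, 5, 8, 5, 5, 4.5, 7, 5,         # PS51-PS60
    6.5, 6, 6,                                  # PS61-PS63
]


def summarize(scores: list[float], cutoff: float) -> dict[str, float]:
    """Range, central tendency, and number of studies scoring at least `cutoff`."""
    return {
        "n": len(scores),
        "min": min(scores),
        "max": max(scores),
        "mean": statistics.mean(scores),
        "median": statistics.median(scores),
        "at_or_above": sum(1 for s in scores if s >= cutoff),
    }


def studies_at_or_above(scores: list[float], cutoff: float) -> list[str]:
    """Identifiers (PS1, PS2, ...) of the studies whose score reaches `cutoff`."""
    return [f"PS{i}" for i, s in enumerate(scores, start=1) if s >= cutoff]


if __name__ == "__main__":
    print(summarize(QUALITY_SCORES, cutoff=6))
    print(studies_at_or_above(QUALITY_SCORES, cutoff=7))  # -> ['PS18', 'PS55', 'PS59']
```

The scores span 3.5 to 8, with a mean of about 5.35 and a median of 5. With a cut-off of 6, 20 of the 63 studies qualify.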

| No | Attribute Name | Research Question |
|---|---|---|
| 01. | Study identifier | |
| 02. | Year of publication | RQ1-1 |
| 03. | Type of study | RQ1-2 |
| 04. | Datasets | RQ2 |
| 05. | Frameworks | RQ3 |
| 06. | Performance measures | RQ4 |
| 07. | Feature extraction | RQ5 |
| 08. | Classification models | RQ6 |
| 09. | Type of web attacks | RQ7 |
| 10. | Experimental performance of proposed models | RQ8 |
| 11. | Limitations | RQ10 |
| 12. | Main objective | RQ9 |

| ID | Paper | Targeted Web Attacks | Classification Model | Dataset | Classification Type | Performance Metrics | Limitations |
|---|---|---|---|---|---|---|---|
| PS1 | [30] | SQLI | CNN | Combination of CSIC 2010, KDD-Cup99, UNSW-NB15, and private dataset | binary | -Accuracy: 0.9950 -Precision: 0.9898 -F1-score: 0.99 -TPR: 1 | -Restricted datasets -Limited to one type of attack |
| PS2 | [32] | Web attacks injected in HTTP requests | CNN | CSIC 2010 | binary | -Accuracy: 0.9820 -TPR: 0.98 -TNR: 0.97 | -Online learning -Adversarial attacks |
| PS3 | [34] | JavaScript attacks | Logistic regression | Private dataset | binary | -Accuracy: 0.9482 -Precision: 0.949 -TPR: 0.94 | -Minification and obfuscation of JS code -Long training time |
| PS4 | [36] | Malicious HTML pages | DFFN | Private dataset | binary | -ROC: 0.975 | -Establishing the ground truth |
| PS5 | [38] | Web attacks injected in HTTP requests | CNN | CSIC 2010 | binary | -Accuracy: 0.9649 -TPR: 0.934 -FPR: 0.13 | -Consider the whole HTTP request message in the detection system |
| PS6 | [40] | Web attacks injected in HTTP requests | Weighted average ensemble of ResNet | CSIC 2010 | binary | -Accuracy: 0.9941 -TNR: 0.99 | -Evaluate other ensemble models and experiment with other feature representation techniques |
| PS7 | [42] | Web attacks injected in HTTP requests | CNN + GRU | CSIC 2010 | binary | -Accuracy: 0.99 -Precision: 0.9982 -F1-score: 0.987 -TPR: 0.97 | Not Available |
| PS8 | [44] | XSS attacks | CNN + LSTM | xssed.com | binary | -Accuracy: 0.993 -Precision: 0.999 -F1-score: 0.995 -TPR: 0.99 -AUC: 0.95 | -Scarcity of datasets in the field of web security |
| PS9 | [46] | Web attacks injected in HTTP requests | Neural Network | CSIC 2010 | binary | -Accuracy: 0.84 -Precision: 0.83 -F1-score: 0.79 -TPR: 0.82 -AUC: 0.86 | Not Available |
| PS10 | [48] | SQLI | CNN | Private dataset | binary | -Accuracy: 0.953 -Precision: 0.954 -F1-score: 0.734 -TPR: 0.59 | -Collect a dataset for SQL injection attack detection -Build a word2vec model for PHP source code -Use Control Flow Graph attributes |
| | | | MLP | Private dataset | binary | -Accuracy: 0.953 -Precision: 0.91 -F1-score: 0.746 -TPR: 0.637 | |
| PS11 | [50] | Web attacks injected in HTTP requests | CNN | CSIC 2010 | binary | -Accuracy: 0.9797 -Precision: 0.9743 -F1-score: 0.975 -TPR: 0.97 -FPR: 0.03 -TNR: 0.96 -AUC: 0.96 | -Datasets available for evaluating DL-based web attacks detection models are limited -Consider multi-class classification instead of binary classification |
| PS12 | [52] | SQLI and DDoS | Neural Network | Private dataset | multi-class | -Accuracy: 0.97 | -Variable size network -Memory and time constraints |
| PS13 | [54] | Web attacks injected in HTTP requests | Ensemble classification of Neural Network and ML algorithms | CSIC 2010 | binary | -Accuracy: 0.9098 | -Compare the proposed method with other ensemble classification models -Apply the proposed method to malware detection |
| | | | | ECML-PKDD | binary | -Accuracy: 0.9056 | |
| PS14 | [56] | -Password guessing and authentication -SQLI -Application vulnerability attack | CNN + LSTM | Private dataset | multi-class | -Accuracy: 0.9807 -Precision: 0.9706 -F1-score: 0.981 -TPR: 0.99 -TNR: 0.99 | -Re-validation and retraining of DL-based misuse intrusion detection tools -Combine misuse detection tools and signature-based tools |
| PS15 | [58] | Web attacks injected in HTTP requests | Isolation Forest | CSIC 2010 | binary | -Accuracy: 0.8832 -Precision: 0.8029 -F1-score: 0.841 -TPR: 0.88 -TNR: 0.88 | -Use other deep learning techniques |
| PS16 | [60] | Web attacks injected in GET HTTP requests | LSTM + Multi-Layer Perceptron (MLP) | CSIC 2010 | binary | -Accuracy: 0.9842 -TPR: 0.97 -TNR: 0.99 | -Long URLs are not handled very well -The proposed model cannot dynamically trade off true positives against false positives |
| | | | GRU + MLP | Private dataset | binary | -Accuracy: 0.9856 -TPR: 0.98 -TNR: 0.98 | |
| PS17 | [62] | Web attacks injected in HTTP requests | LSTM | CSIC 2010 | binary | -Accuracy: 0.9997 -Precision: 0.995 -TPR: 0.995 | Not Available |
| PS18 | [64] | Web attacks injected in system calls | LSTM | Private dataset | binary | -AUC: 0.96 | -Certain classes of attacks could be missed -Privacy leakage -Retraining ML models for each newly considered web application -Adversarial attacks |
| PS19 | [66] | -SQLI -XSS -Brute-Force | GRU-based Encoder-Decoder with attention mechanism | CSIC 2010 + CICIDS 2017 | multi-class | -TPR: 0.94 -FPR: 0.003 | Not Available |
| PS20 | [68] | Web attacks injected in HTTP requests | Bi-directional LSTM | CSIC 2010 | binary | -Accuracy: 0.9835 -Precision: 0.99 -F1-score: 0.985 -TPR: 0.98 -FPR: 0.014 | Not Available |
| PS21 | [70] | Web attacks injected in HTTP requests | CNN + LSTM | CSIC 2010 | binary | -Accuracy: 0.9779 -Precision: 0.9854 -F1-score: 0.9872 -TPR: 0.9604 | -Include more web attacks in the dataset -Consider scenario-based attacks (i.e., correlated requests) -Deploy the model in a practical web service |
| PS22 | [72] | Web attacks hidden in HTTP requests | GRU | Private dataset | binary | -Accuracy: 0.985 | Not Available |
| PS23 | [74] | Web attacks hidden in HTTP requests | CNN | CSIC 2010 | binary | -Accuracy: 0.9726 -Precision: 0.9771 -F1-score: 0.965 -TPR: 0.95 -FPR: 0.01 | -HTTP requests are misclassified if they contain strings that never appear in the training set |
| | | | LSTM | CSIC 2010 | binary | -Accuracy: 0.9699 -Precision: 0.9882 -F1-score: 0.962 -TPR: 0.937 -FPR: 0.007 | -The way in which the request is split into a sequence of words influences the occurrence of false positives |
| | | | CNN-LSTM | CSIC 2010 | binary | -Accuracy: 0.9550 -Precision: 0.9463 -F1-score: 0.944 -TPR: 0.94 -FPR: 0.03 | -Inspect all the fields of the HTTP request |
| | | | LSTM-CNN | CSIC 2010 | binary | -Accuracy: 0.9602 -Precision: 0.9652 -F1-score: 0.950 -TPR: 0.935 -FPR: 0.02 | -Use more sophisticated models |
| PS24 | [76] | XSS attacks | LSTM | Private dataset | binary | -Precision: 0.995 -F1-score: 0.987 -TPR: 0.979 -AUC: 0.98 | -Collect more XSS attacks |
| PS25 | [78] | Web attacks hidden in HTTP requests | CNN-GRU | Private dataset | binary | -Accuracy: 0.9961 -Precision: 0.9963 -F1-score: 0.9961 -TPR: 0.9958 | -Reduce memory consumption -Online update of the trained model |
| PS26 | [80] | Web attacks hidden in HTTP requests | SVM | CSIC 2010 | binary | -Accuracy: 0.9897 -Precision: 0.9970 -TPR: 0.986 | Not Available |
| PS27 | [82] | SQLI | Random Forest | SQL injection datasets published on GitHub | binary | -Accuracy: 0.998 -Precision: 0.999 -F1-score: 0.999 -TPR: 0.986 -TNR: 0.999 -AUC: 0.999 | -Non-malicious data is biased toward domain-specific traffic |
| | | | DFFN | SQL injection datasets published on GitHub | binary | -Accuracy: 0.984 -Precision: 0.934 -F1-score: 0.873 -TPR: 0.820 -TNR: 0.995 -AUC: 0.992 | |
| PS28 | [84] | -SQLI -XSS -Object de-serialization | Stacked Denoising Auto-Encoder | Private dataset | multi-class | -Precision: 0.906 -F1-score: 0.918 -TPR: 0.928 | -Investigate more complex neural networks -Detect zero-day attacks -Online updating of trained models -Distributed machine learning analysis |
| PS29 | [31] | DDoS | Stacked Auto-Encoder | Private dataset | multi-class | -TPR: 0.98 -FPR: 0.012 | Not Available |
| PS30 | [33] | SQLI | LSTM | Private dataset | binary | -Accuracy: 0.9917 -Precision: 0.9110 -TPR: 0.99 -FPR: 0.90 | Not Available |
| | | | MLP | Private dataset | binary | -Accuracy: 0.9975 -Precision: 0.9727 -TPR: 0.99 -FPR: 0.26 | |
| PS31 | [35] | SQLI | MLP | Private dataset | binary | -Accuracy: 1 -TPR: 1 -TNR: 1 | Not Available |
| PS32 | [37] | Web attacks hidden in web logs | DFFN | Apache 2006 | binary | -Accuracy: 0.9973 -Precision: 0.9976 -F1-score: 0.995 -TPR: 0.99 | Not Available |
| | | | DFFN | Apache 2017 | binary | -Accuracy: 0.9838 -Precision: 0.9984 -F1-score: 0.972 -TPR: 0.94 | |
| PS33 | [39] | Web attacks hidden in HTTP requests | LSTM | CSIC 2010 | binary | -Precision: 0.977 -F1-score: 0.978 -TPR: 0.979 | Not Available |
| | | | CNN | CSIC 2010 | binary | -Precision: 0.986 -F1-score: 0.985 -TPR: 0.983 | |
| | | | LSTM + CNN | CSIC 2010 | binary | -Precision: 0.989 -F1-score: 0.989 -TPR: 0.988 | |
| PS34 | [41] | -Malicious URLs -Malicious registry keys -Malicious file paths | CNN | Private dataset | multi-class | -TNR: 0.993 (URLs detection) -TNR: 0.978 (file paths detection) -TNR: 0.992 (registry keys detection) | -Poor detection performance for some web attacks -Long URLs induce computational overhead |
| PS35 | [43] | Web attacks hidden in network traffic | LSTM | CICIDS 2017 | binary | -Accuracy: 0.9908 -TPR: 0.987 -TNR: 0.992 | -Available datasets for web attacks detection are restricted |
| | | | | NSL-KDD | binary | -Accuracy: 0.9914 -TPR: 0.995 -TNR: 0.996 | |
| | | | | CICIDS 2017 | multi-class | -Accuracy: 0.9910 -TPR: 0.994 -TNR: 0.993 | -Reduce detection time by using GPUs |
| | | | | NSL-KDD | multi-class | -Accuracy: 0.994 -TPR: 0.985 -TNR: 0.992 | |
| PS36 | [45] | Web attacks hidden in network traffic | Genetic algorithm and Shallow Neural Network (SNN) | CICIDS 2017 | multi-class | -Accuracy: 0.9790 (web attacks detection) 0.9676 (web attacks detection) -TPR: 0.97 (web attacks detection) | Not Available |
| | | | | CICIDS 2017 | multi-class | -Accuracy: 0.9758 (normal traffic) -Precision: 0.9540 (normal traffic) -TPR: 0.96 (normal traffic) | |
| PS37 | [47] | Web attacks hidden in HTTP requests | Stacked-AE + Isolation Forest (IF) | CSIC 2010 | binary | -Accuracy: 0.8924 -Precision: 0.8158 -F1-score: 0.853 -TPR: 0.894 -TNR: 0.8911 -AUC: 0.96 | -Extract effective features |
| | | | Deep Belief Network (DBN) + IF | CSIC 2010 | binary | -Accuracy: 0.8687 -Precision: 0.8109 -F1-score: 0.813 -TPR: 0.815 -TNR: 0.89 -AUC: 0.94 | -Port the WAF to a cloud service |
| | | | Stacked-AE + Elliptic Envelope | ECML-PKDD | binary | -Accuracy: 0.8378 -Precision: 0.8240 -F1-score: 0.842 -TPR: 0.863 -TNR: 0.8117 -AUC: 0.92 | -Extend the proposed method to big data environments and data streams |
| | | | DBN + Elliptic Envelope | ECML-PKDD | binary | -Accuracy: 0.8413 -Precision: 0.8086 -F1-score: 0.849 -TPR: 0.895 -TNR: 0.78 -AUC: 0.94 | |
| PS38 | [49] | -SQLI -XSS attacks | CNN | CSIC 2010 | multi-class | -Accuracy: 0.998 -Precision: 1 -F1-score: 0.991 -TPR: 0.982 -FPR: 0 | -The proposed system can only deal with SQLI and XSS attacks |
| | | | CNN | Private dataset | multi-class | -Accuracy: 0.999 -Precision: 1 -F1-score: 0.999 -TPR: 0.986 -FPR: 0 | |
| PS39 | [51] | Malicious JS code | Bi-directional LSTM | Private dataset | binary | -Accuracy: 0.9771 -Precision: 0.9868 -F1-score: 0.9829 -TPR: 0.979 | -Static analysis cannot detect malicious JS code generated dynamically -Experiment with more complex neural networks |
| PS40 | [53] | -SQLI -RFI -XSS -DT | CNN | Private dataset | multi-class | -Precision: 1 (benign) -TPR: 0.99 (benign) -FPR: 0.02 (benign) -Precision: 1 (Directory Traversal (DT)) -TPR: 1 (DT) -Precision: 0.9983 (Remote File Inclusion (RFI)) -TPR: 1 (RFI) -Precision: 0.9979 (SQLI) -TPR: 1 (SQLI) -Precision: 1 (XSS) -TPR: 1 (XSS) | -Test the model performance in more practical applications |
| PS41 | [55] | XSS | DFFN | Private dataset | binary | -Accuracy: 0.9932 -Precision: 0.9921 -F1-score: 0.987 -TPR: 0.98 -FPR: 0.31 -AUC: 0.99 | -Deploy the proposed model in a real-time detection system |
| PS42 | [57] | Web attacks injected in web logs and HTTP requests | CNN | Apache 2006 | binary | -Accuracy: 0.9971 -Precision: 0.9964 -F1-score: 0.9921 -TPR: 0.9879 | -Exploit model uncertainty in other security scenarios |
| | | | | CSIC 2010 | binary | -Accuracy: 0.9581 -Precision: 0.8612 -F1-score: 0.987 -TPR: 0.9291 | -Combine the softmax output and the model uncertainty into a unified standard for evaluating prediction confidence |
| | | | | Apache 2007 | binary | -Accuracy: 0.9959 -Precision: 0.9878 -F1-score: 0.9931 -TPR: 0.9984 | |
| PS43 | [59] | XPath injection | LSTM | Private dataset | binary | -TPR: 0.84 -FPR: 0.16 -TNR: 0.83 | -Use other techniques and algorithms to enhance accuracy while accounting for the important factor of response time |
| PS44 | [61] | Web attacks hidden in URLs | SAE | Private dataset | binary | -Accuracy: 0.99 | -Identify other types of web attacks that appear in user agent strings and cookies |
| | | | RNN | Private dataset | binary | -Accuracy: 0.93 | |
| PS45 | [63] | SQLI | CNN | Private dataset | binary | -Accuracy: 0.9993 -Precision: 0.9996 -F1-score: 0.99 -TPR: 0.99 -TNR: 0.99 | -Implement a multi-class classification model that is not limited to SQL injection attacks detection |
| PS46 | [65] | SQLI | DFFN | Private dataset | binary | -Accuracy: 0.968 -TPR: 0.032 | Not Available |
| PS47 | [67] | SQLI | LSTM | Private dataset | binary | -Accuracy: 0.9535 -Precision: 0.9651 -TPR: 0.96 | -Use real PHP applications to generate datasets |
| PS48 | [69] | Web attacks hidden in HTTP requests | CNN | CSIC 2010 | binary | -Accuracy: 0.988 | Not Available |
| PS49 | [71] | Web attacks hidden in HTTP requests | Encoder-Decoder | Private dataset | binary | -Precision: 0.9937 (average value) -F1-score: 0.993 (average value) -TPR: 0.99 (average value) | -Class imbalance problem -Poisoning attack |
| PS50 | [73] | SQLI | DBN | Private dataset | binary | -Accuracy: 0.96 | Not Available |
| PS51 | [75] | -XSS -SQLI | MLP network as an ensemble classifier of CNN, LSTM, and a variation of Residual Networks (ResNet) | CSIC 2010 | multi-class | -Accuracy: 0.9947 -Precision: 0.9970 -TPR: 0.99 -FPR: 0.00 | |
| | | | | Private dataset | multi-class | -Accuracy: 0.9998 -Precision: 0.9997 -TPR: 1 -FPR: 0.04 | -Explore other DL models |
| | | | | Private dataset | multi-class | -Accuracy: 0.9917 -Precision: 0.9917 -TPR: 0.99 -FPR: 0.00 | -Detect other web attacks |
| PS52 | [77] | Web attacks hidden in network traffic | Tree CNN with Soft-Root-Sign (SRS) activation function | CICIDS 2017 | multi-class | -Accuracy: 0.99 | -Test other activation functions |
| | | | Tree CNN with SRS activation function | Private dataset | multi-class | -Accuracy: 0.98 -Precision: 0.95 -F1-score: 0.97 -TPR: 0.97 | -Existing datasets do not have the characteristics of IoT devices |
| | | | Tree CNN with SRS activation function | Private dataset | multi-class | -Accuracy: 0.99 -Precision: 0.98 -F1-score: 0.98 -TPR: 0.98 | |
| PS53 | [79] | Web attacks hidden in HTTP requests | CNN | CSIC 2010 | binary | -Accuracy: 0.981 -Precision: 0.977 -F1-score: 0.962 -TPR: 0.97 | -Time overhead in the training and testing phases |
| PS54 | [81] | Web attacks hidden in HTTP requests | CNN with word-level embedding | CSIC 2010 | binary | -Accuracy: 0.976 | -Investigate new embedding approaches |
| | | | CNN with character-level embedding | CSIC 2010 | binary | -Accuracy: 0.961 | |
| PS55 | [83] | DOM-XSS attack | DFFN | Private dataset (unconfirmed vulnerabilities) | binary | -Precision: 0.267 -TPR: 0.95 | -Browser-specific vulnerabilities |
| PS56 | [85] | Web attacks hidden in IoT networks | DBN | CICIDS 2017 | multi-class | -Accuracy: 0.987 -Precision: 0.972 -F1-score: 0.97 -TPR: 0.98 | -Detect other attacks against IoT devices -Evaluate the model against other intrusion detection datasets |
| PS57 | [86] | Web attacks | DFFN (8 input neurons) | Private dataset | binary | -TPR: 0.92 -FPR: 0.07 | -Consider every parameter in web pages -Achieve more coverage of user behavior |
| | | | DFFN (7 input neurons) | Private dataset | binary | -TPR: 0.95 -FPR: 0.04 | |
| PS58 | [87] | Web attacks hidden in HTTP requests | CNN | CSIC 2010 (train/test split) | binary | -Accuracy: 0.9812 -Precision: 0.9483 -F1-score: 0.962 -TPR: 0.97 | -ASCII-code-based conversion causes a time overhead in the training and testing phases |
| | | | | CSIC 2010 (5-fold cross-validation) | binary | -Accuracy: 0.9824 -AUC: 0.97 | |
| | | | | CSIC 2010 (10-fold cross-validation) | binary | -Accuracy: 0.9820 -AUC: 0.97 | |
| PS59 | [88] | XSS hidden in PHP and JS code | Path Attention [96] (DFFN-based network with attention mechanism) | Private D1 (PHP included as code), word2vec used for vectorization | binary | -Accuracy: 0.733 -Precision: 0.68 -F1-score: 0.70 -TPR: 0.735 | -Created datasets consider synthetic data only |
| | | | | Private D2 (PHP included as text), word2vec used for vectorization | binary | -Accuracy: 0.707 -Precision: 0.724 -F1-score: 0.615 -TPR: 0.534 | -Current code representation techniques do not take into account invocations between different files: they only analyze single files |
| | | | | Private D1 (JS included as code), word2vec used for vectorization | binary | -Accuracy: 0.720 -Precision: 0.728 -F1-score: 0.676 -TPR: 0.631 | |
| | | | | Private D2 (PHP included as code), code2vec used for vectorization | binary | -Accuracy: 0.9538 -Precision: 0.9538 -F1-score: 0.918 -TPR: 0.999 | -Current code representation techniques do not scale to large source code files |
| | | | | Private D1 (JS included as code), code2vec used for vectorization | binary | -Accuracy: 0.797 -Precision: 0.894 -F1-score: 0.740 -TPR: 0.632 | |
| PS60 | [50] | Web attacks hidden in HTTP requests | CNN | CSIC 2010 | binary | -Accuracy: 0.9684 -Precision: 0.9743 -F1-score: 0.9751 -TPR: 0.9759 -FPR: 0.0368 -TNR: 0.9631 -AUC: 0.9696 | -Consider a multi-class classification technique using the proposed model |
| PS61 | [89] | -Different web attacks injected in HTTP requests | Bi-LSTM | Private dataset | binary | -Accuracy: 0.975 -Precision: 0.976 -F1-score: 0.975 -TPR: 0.975 | -Training Bi-LSTM models consumes time and computational resources |
| | | | | ECML-PKDD | binary | -Accuracy: 0.995 -Precision: 0.994 -F1-score: 0.996 -TPR: 0.998 | -The DA-SANA method does not consider files uploaded as part of the HTTP request length |
| | | | | ECML-PKDD | binary | -Accuracy: 0.927 -Precision: 0.926 -F1-score: 0.923 -TPR: 0.927 | |
| | | | | CSIC 2010 | multi-class | -Accuracy: 0.986 -Precision: 0.982 -F1-score: 0.981 -TPR: 0.984 | |
| PS62 | [90] | -Malicious HTTP requests -XSS -SQLI -DT | CNN | Private dataset | binary and multi-class | -Precision: 0.9333 -F1-score: 0.9340 -TPR: 0.9348 | -Consider complex web attacks such as webshells -Detect encrypted malicious HTTP requests -Consider datasets with only normal samples (i.e., anomaly-based detection) -Consider non-textual elements of the HTTP request |
| PS63 | [91] | Web attacks | CNN | Private dataset | binary | -Accuracy: 0.9994 -Precision: 0.9995 -F1-score: 0.9993 -TPR: 0.992 | -Implement a deception mechanism that analyzes the characteristics of detected web attacks |
| | | | | CSIC 2010 | binary | -Accuracy: 0.987 -Precision: 0.994 -F1-score: 0.991 -TPR: 0.988 | |
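Several PS feed the raw request text to the network. Some do this character by character, as in PS54's character-level embedding and PS58's ASCII-code-based conversion. Others work word by word, as in PS54's word-level embedding. PS23 notes that the way a request is split into words affects false positives, and that strings unseen during training cause misclassifications. The Python sketch below shows both input encodings in their simplest form. It is not the encoding used by any specific PS. The fixed length, the padding, unknown-token and non-ASCII indices, and the delimiter set are all assumptions chosen for illustration. In a real model, the resulting integer sequences would typically feed an embedding layer placed ahead of the CNN or LSTM.

```python
import re

PAD = 0    # padding index (coincides with NUL, assumed absent from requests)
UNK = 1    # word-level index for tokens unseen during training
OOV = 128  # character-level index for any non-ASCII character (assumption)

# Hypothetical delimiter set: path separators, query punctuation, whitespace.
DELIMITERS = re.compile(r"[/?&=\s]+")


def ascii_encode(request: str, max_len: int) -> list[int]:
    """Map each character to its ASCII code, truncating or zero-padding to max_len."""
    codes = [ord(ch) if ord(ch) < 128 else OOV for ch in request[:max_len]]
    return codes + [PAD] * (max_len - len(codes))


def word_tokens(request: str) -> list[str]:
    """Split a request line into word tokens on the delimiters, dropping empty pieces."""
    return [tok for tok in DELIMITERS.split(request) if tok]


def build_vocab(token_lists: list[list[str]]) -> dict[str, int]:
    """Assign ids from 2 upwards in first-seen order (0 = PAD, 1 = UNK)."""
    vocab: dict[str, int] = {}
    for tokens in token_lists:
        for tok in tokens:
            if tok not in vocab:
                vocab[tok] = len(vocab) + 2
    return vocab


def word_encode(tokens: list[str], vocab: dict[str, int], max_len: int) -> list[int]:
    """Look up each token (unknown ones become UNK), then truncate or pad to max_len."""
    ids = [vocab.get(tok, UNK) for tok in tokens[:max_len]]
    return ids + [PAD] * (max_len - len(ids))


if __name__ == "__main__":
    vocab = build_vocab([word_tokens("GET /shop/item.php?id=2")])
    print(ascii_encode("GET /a", 8))  # -> [71, 69, 84, 32, 47, 97, 0, 0]
    payload = word_tokens("GET /shop/item.php?id=2 UNION SELECT")
    print(word_encode(payload, vocab, 8))  # -> [2, 3, 4, 5, 6, 1, 1, 0]
```

In the word-level example, the injected keywords never appeared during training, so both collapse to the single UNK index. This is one plausible mechanism behind the PS23 observation about strings absent from the training set. The character-level encoding avoids unknown tokens altogether, at the cost of much longer input sequences.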

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alaoui, R.L.; Nfaoui, E.H. Deep Learning for Vulnerability and Attack Detection on Web Applications: A Systematic Literature Review. Future Internet 2022, 14, 118. https://doi.org/10.3390/fi14040118
