Analysis and Prediction of the IPv6 Traffic over Campus Networks in Shanghai

Abstract

1. Introduction

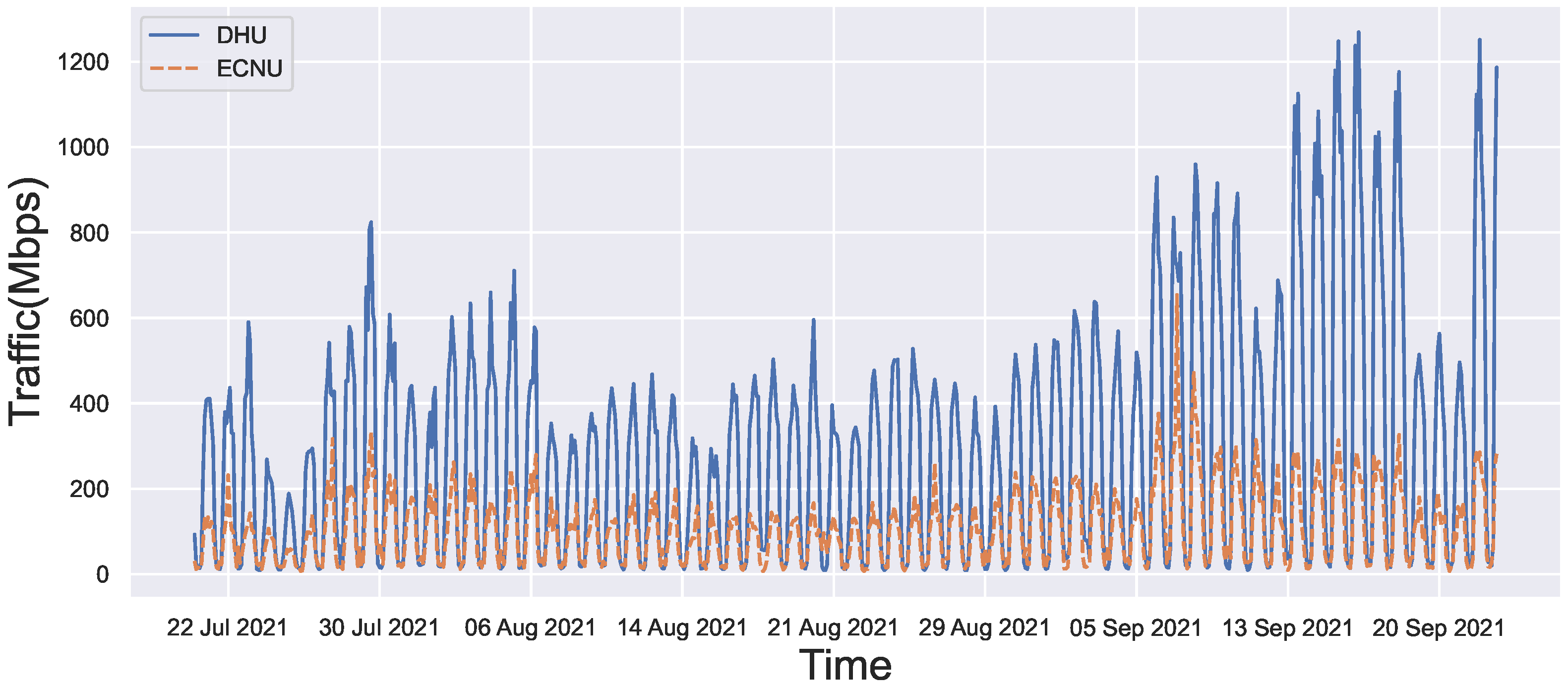

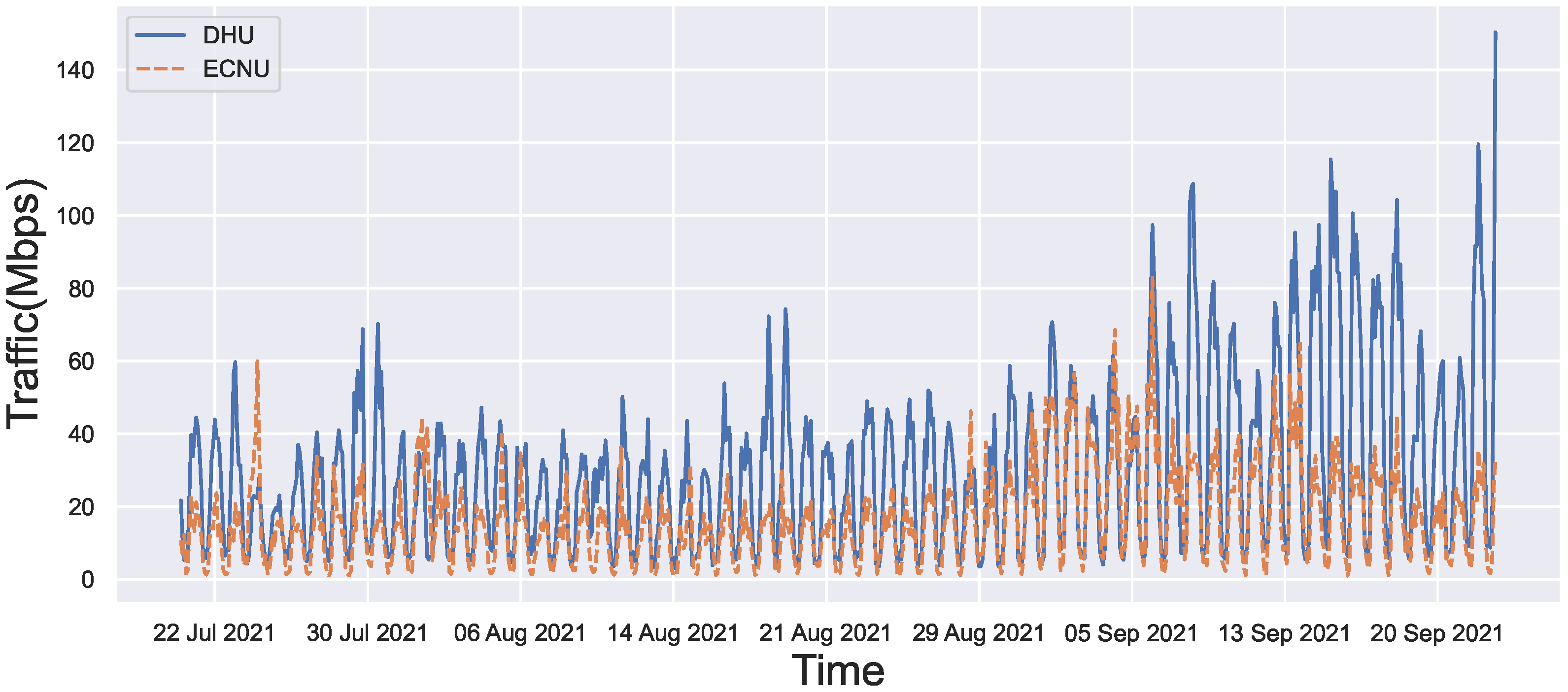

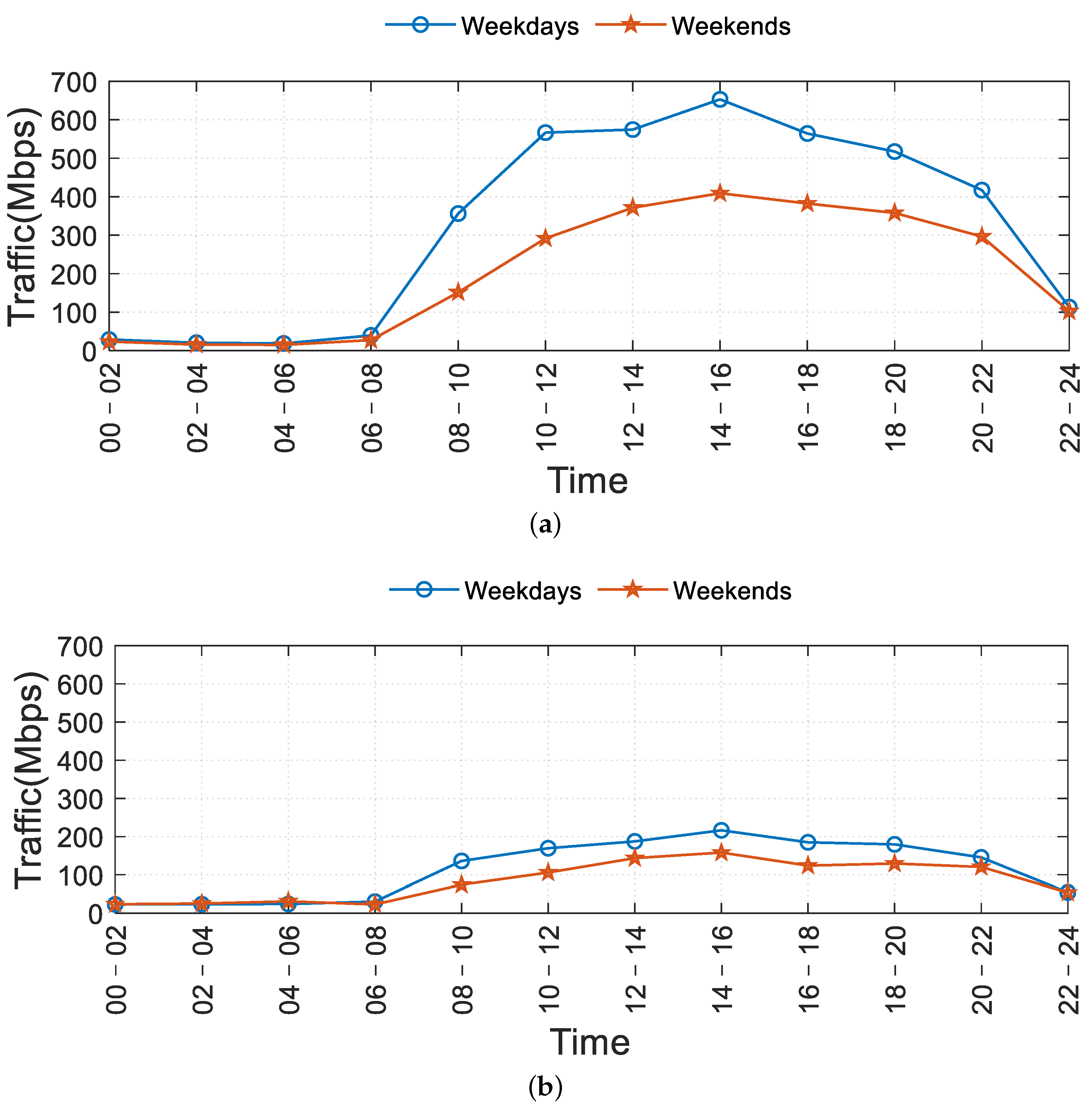

- This paper begins by analyzing the IPv6 traffic characteristics of two universities in Shanghai, i.e., Donghua University (DHU) and East China Normal University (ECNU). For each university, we show the weekday and weekend usage patterns and the self-similarity of the IPv6 traffic, and we evaluate the correlation between IPv4 traffic and IPv6 traffic.

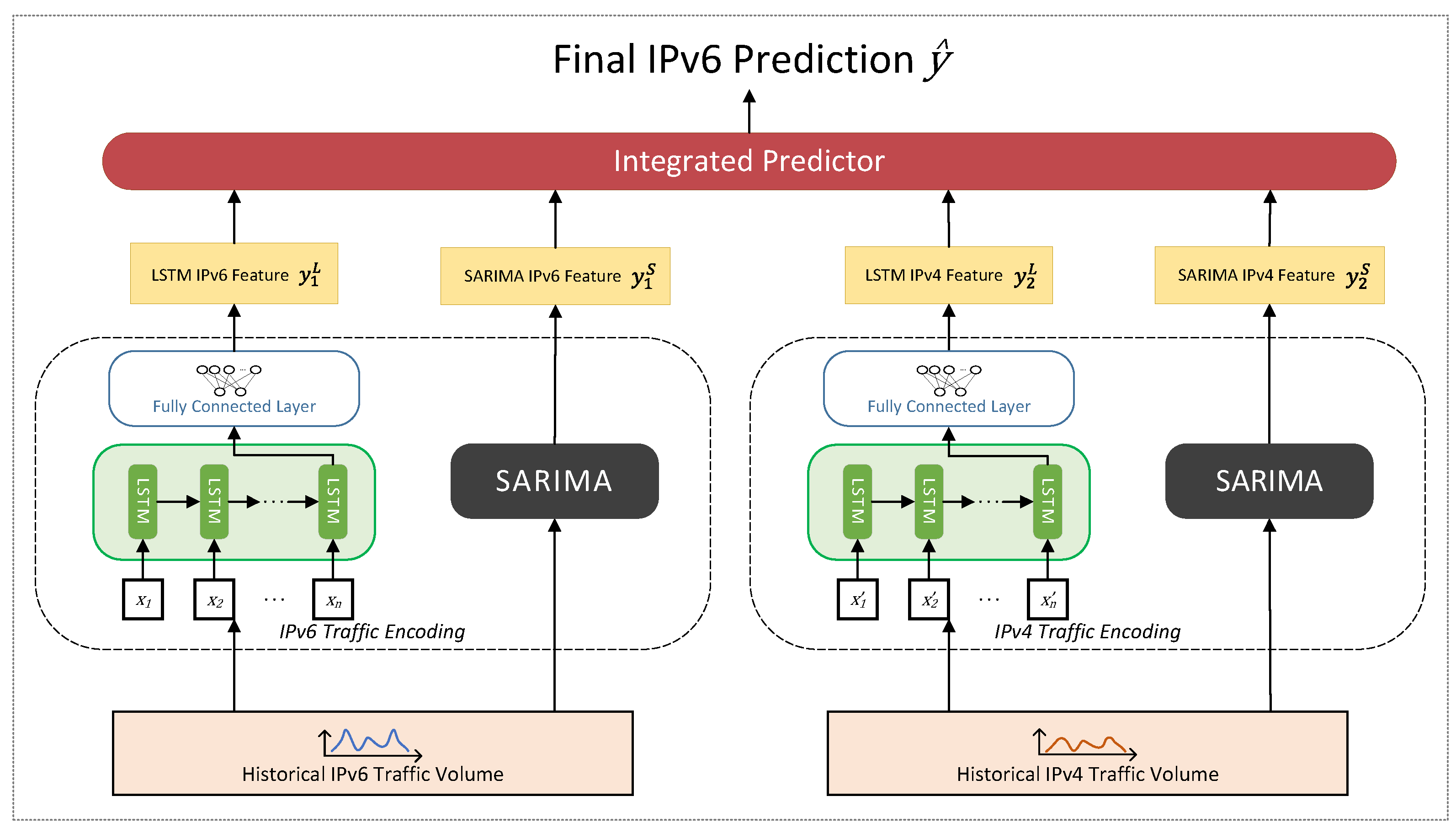

- In addition, we investigate the problem of IPv6 traffic prediction. A new model named LSTM with seasonal ARIMA for IPv6 (LS6) is proposed to predict IPv6 network traffic with high accuracy. Considering the correlation between IPv6 and IPv4 network traffic, LS6 uses both IPv4 and IPv6 historical traffic data as the model input and leverages the advantages of both statistical and deep learning methods.

- To validate the effectiveness of our LS6 model, we conduct a series of experiments on two real-world traffic datasets. The results show that LS6 outperforms several baselines, including support vector machine (SVM), LSTM, Bi-LSTM, and phased LSTM (PLSTM).

2. Related Work

2.1. Analysis of Network Traffic

2.2. Prediction of Network Traffic

3. Dataset and Traffic Usage Features

3.1. Traffic Patterns of Weekdays and Weekends

3.2. Self-Similarity Analysis

- The aggregate variance method plots the sample variance versus the block size of each aggregation level on a log-log plot. If the series is self-similar, the plot will be a line with slope $\beta$ greater than $-1$. $H$ is estimated by $H = 1 + \beta/2$.

- The R/S method uses the rescaled range statistic (R/S statistic). The R/S statistic is the range of the cumulative deviations of a time series from its mean, divided by its standard deviation. The method plots the R/S statistic versus the number of points of the aggregated series on a log-log plot. The plot should be linear, and its slope is the estimate of the Hurst exponent.

- The periodogram method plots the spectral density of a time series versus frequency on a log-log plot. The slope of the plot yields the estimate of $H$ (near the origin, the slope is approximately $1 - 2H$).
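
As a rough illustration of the aggregate variance method listed above, the following Python sketch estimates $H$ from the least-squares slope of log variance against log block size. The function name `aggregate_variance_hurst`, the chosen block sizes, and the synthetic white-noise input are assumptions made for this example and are not taken from the paper. White noise is used only because its Hurst exponent is expected to be close to 0.5.

```python
import math
import random


def aggregate_variance_hurst(series, block_sizes):
    """Return (H, slope) from the aggregate variance method.

    For each block size m, the series is split into non-overlapping blocks,
    each block is averaged, and the sample variance of the block means is
    computed. H is taken as 1 + slope / 2, where slope is the least-squares
    slope of log(variance) against log(m).
    """
    xs, ys = [], []
    for m in block_sizes:
        n_blocks = len(series) // m
        if n_blocks < 2:
            continue  # a variance needs at least two block means
        means = [sum(series[i * m:(i + 1) * m]) / m for i in range(n_blocks)]
        mu = sum(means) / n_blocks
        var = sum((v - mu) ** 2 for v in means) / (n_blocks - 1)
        if var > 0:
            xs.append(math.log(m))
            ys.append(math.log(var))
    if len(xs) < 2:
        raise ValueError("need at least two usable block sizes")
    x_bar = sum(xs) / len(xs)
    y_bar = sum(ys) / len(ys)
    slope = (sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
             / sum((x - x_bar) ** 2 for x in xs))
    return 1.0 + slope / 2.0, slope


# Hypothetical input: uncorrelated Gaussian noise (not campus traffic data).
random.seed(0)
noise = [random.gauss(0.0, 1.0) for _ in range(8192)]
h_est, slope = aggregate_variance_hurst(noise, [1, 2, 4, 8, 16, 32, 64, 128])
print(f"slope = {slope:.3f}, estimated H = {h_est:.3f}")
```

For a self-similar traffic series, the same function would be expected to return a slope above $-1$ and therefore an estimate of $H$ above 0.5.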

3.3. Correlation Analysis

4. IPv6 Traffic Prediction Model

4.1. Problem Formulation

4.2. The LS6 Model

4.2.1. Model Overview

4.2.2. Traffic Encoding

4.2.3. Integrated Predictor

4.3. Learning and Prediction

4.4. Summary

5. Evaluation

5.1. Datasets

5.2. Experimental Setup

- Naive-2h: Naive-2h uses the IPv6 traffic volume of the previous time slot as the predicted value. We use Naive-2h to show the traffic difference between adjacent time slots.

- Naive-24h: Naive-24h uses the IPv6 traffic volume 24 h ago, in other words, the traffic value of the corresponding time slot of the previous day, as the predicted value. We use Naive-24h to show the traffic difference between adjacent days.

- ARIMA: We fit an ARIMA model using only the historical IPv6 traffic data and then use it to predict the IPv6 traffic volume at the next time slot.

- SARIMA: We fit a SARIMA model, the same kind of model used as a part of traffic encoding in LS6, using only the historical IPv6 traffic data and then use it to predict the IPv6 traffic volume at the next time slot.

- SVM: SVM is a classic supervised machine learning algorithm which can be used for regression. We only use the IPv6 traffic data to train an SVM and use the output of the SVM as the predicted IPv6 traffic volume.

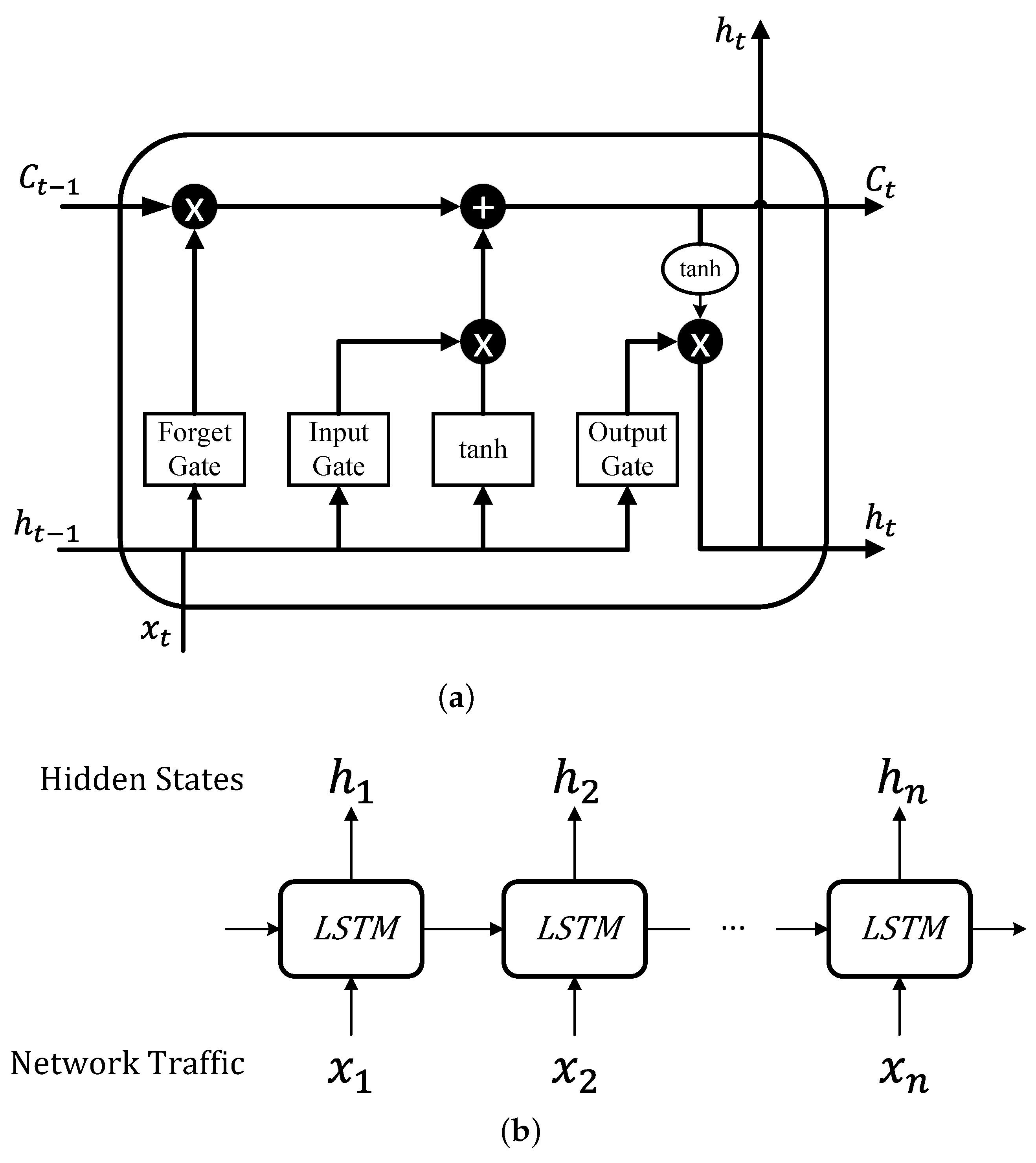

- LSTM: We train an LSTM network, the same kind of network used as a part of traffic encoding in LS6, using only the IPv6 traffic data. The output of the LSTM network is fed to a fully connected layer to get the predicted IPv6 traffic volume.

- Bi-LSTM [54]: Bidirectional LSTM is a variant of LSTM composed of a forward LSTM and a backward LSTM, which can retain information from both the past and the future. We train it in the same way as the LSTM network. The output of the Bi-LSTM network is fed to a fully connected layer to get the predicted IPv6 traffic volume.

- PLSTM [55]: Phased LSTM (PLSTM) is a variant of LSTM that extends the LSTM model by adding a new time gate, which achieves faster convergence than the vanilla LSTM on long-sequence tasks. It has also been applied in time series prediction [56,57,58,59]. We train it in the same way as the LSTM network. The output of the PLSTM network is fed to a fully connected layer to get the predicted IPv6 traffic volume.
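
The two naive baselines in the list above are simple enough to sketch directly. The following Python example is a minimal illustration, assuming that each time slot covers 2 h (so that 24 h corresponds to 12 slots) and that MAPE is reported as a fraction rather than a percentage. The helper names `naive_forecast` and `mape`, the handling of zero actual values, and the toy traffic series are hypothetical and not taken from the paper.

```python
SLOTS_PER_DAY = 12  # assumption: 2-hour time slots, so 24 h = 12 slots


def naive_forecast(series, lag):
    """Predict series[t] with series[t - lag]; return (actual, predicted)."""
    return series[lag:], series[:-lag]


def mape(actual, predicted):
    """Mean absolute percentage error as a fraction.

    Slots with an actual value of zero are skipped (an assumption of this
    sketch) to avoid division by zero.
    """
    pairs = [(a, p) for a, p in zip(actual, predicted) if a != 0]
    return sum(abs(a - p) / abs(a) for a, p in pairs) / len(pairs)


# Hypothetical toy series: three identical "days" of 12 two-hour slots.
day = [10, 8, 6, 5, 9, 20, 35, 40, 38, 30, 22, 15]
traffic = day * 3

actual_2h, pred_2h = naive_forecast(traffic, 1)                # Naive-2h
actual_24h, pred_24h = naive_forecast(traffic, SLOTS_PER_DAY)  # Naive-24h

mape_2h = mape(actual_2h, pred_2h)
mape_24h = mape(actual_24h, pred_24h)
print(f"Naive-2h MAPE:  {mape_2h:.4f}")
print(f"Naive-24h MAPE: {mape_24h:.4f}")
```

Because the toy series repeats exactly every day, Naive-24h is perfect here while Naive-2h is not. Real traffic is only approximately periodic, so both baselines would have nonzero error.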

5.3. Result and Analysis

5.4. Ablation Study

6. Discussion

6.1. Training Using Both Datasets

6.2. The Influence of the 24 h Period

6.3. Limitation

7. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Li, F.; Freeman, D. Towards A User-Level Understanding of IPv6 Behavior. In Proceedings of the 2020 ACM Internet Measurement Conference (IMC), Virtual Event, USA, 27–29 October 2020; pp. 428–442.

2. Google IPv6 Statistics. Available online: https://www.google.com/intl/en/ipv6/statistics.html (accessed on 13 February 2022).

3. Rye, E.C.; Beverly, R.; Claffy, K.C. Follow the scent: Defeating IPv6 prefix rotation privacy. In Proceedings of the 2021 ACM Internet Measurement Conference (IMC), Virtual Event, USA, 2–4 November 2021; pp. 739–752.

4. Hermann, S.; Fabian, B. A Comparison of Internet Protocol (IPv6) Security Guidelines. Future Internet 2014, 6, 1–60.

5. Cui, Y.; Dong, J.; Wu, P.; Wu, J.; Metz, C.; Lee, Y.L.; Durand, A. Tunnel-Based IPv6 Transition. IEEE Internet Comput. 2012, 17, 62–68.

6. Fang, R.; Han, G.; Wang, X.; Bao, C.; Li, X.; Chen, Y. Speeding up IPv4 connections via IPv6 infrastructure. In Proceedings of the SIGCOMM’21 Poster and Demo Sessions, Virtual Event, USA, 23–27 August 2021; pp. 76–78.

7. Joshi, M.; Hadi, T.H. A Review of Network Traffic Analysis and Prediction Techniques. arXiv 2015, arXiv:1507.05722.

8. Ramakrishnan, N.; Soni, T. Network traffic prediction using recurrent neural networks. In Proceedings of the 17th IEEE International Conference on Machine Learning and Applications (ICMLA), Orlando, FL, USA, 17–20 December 2018; pp. 187–193.

9. Li, Q.; Qin, T.; Guan, X.; Zheng, Q. Empirical analysis and comparison of IPv4-IPv6 traffic: A case study on the campus network. In Proceedings of the 18th IEEE International Conference on Networks (ICON), Singapore, 12–14 December 2012; pp. 395–399.

10. Han, C.; Li, Z.; Xie, G.; Uhlig, S.; Wu, Y.; Li, L.; Ge, J.; Liu, Y. Insights into the issue in IPv6 adoption: A view from the Chinese IPv6 Application mix. Concurr. Comput. Pract. Exp. 2016, 28, 616–630.

11. Strowes, S.D. Diurnal and Weekly Cycles in IPv6 Traffic. In Proceedings of the 2016 Applied Networking Research Workshop (ANRW), Berlin, Germany, 16 July 2016; pp. 65–67.

12. Urushidani, S.; Fukuda, K.; Koibuchi, M.; Nakamura, M.; Abe, S.; Ji, Y.; Aoki, M.; Yamada, S. Dynamic Resource Allocation and QoS Control Capabilities of the Japanese Academic Backbone Network. Future Internet 2010, 2, 295–307.

13. Valipour, M. Long-term runoff study using SARIMA and ARIMA models in the United States. Meteorol. Appl. 2015, 22, 592–598.

14. Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

15. Jiang, W. Internet traffic prediction with deep neural networks. Internet Technol. Lett. 2022, 5, e314.

16. Jaffry, S.; Hasan, S.F. Cellular Traffic Prediction using Recurrent Neural Networks. In Proceedings of the 5th IEEE International Symposium on Telecommunication Technologies (ISTT), Shah Alam, Malaysia, 9–11 November 2020; pp. 94–98.

17. Katris, C.; Daskalaki, S. Dynamic Bandwidth Allocation for Video Traffic Using FARIMA-Based Forecasting Models. J. Netw. Syst. Manag. 2019, 27, 39–65.

18. Abbasi, M.; Shahraki, A.; Taherkordi, A. Deep Learning for Network Traffic Monitoring and Analysis (NTMA): A Survey. Comput. Commun. 2021, 170, 19–41.

19. Lutu, A.; Perino, D.; Bagnulo, M.; Frias-Martinez, E.; Khangosstar, J. A Characterization of the COVID-19 Pandemic Impact on a Mobile Network Operator Traffic. In Proceedings of the 2020 ACM Internet Measurement Conference (IMC), Virtual Event, USA, 27–29 October 2020; pp. 19–33.

20. Wang, Z.; Li, Z.; Liu, G.; Chen, Y.; Wu, Q.; Cheng, G. Examination of WAN traffic characteristics in a large-scale data center network. In Proceedings of the 2021 ACM Internet Measurement Conference (IMC), Virtual Event, USA, 2–4 November 2021; pp. 1–14.

21. Sarrar, N.; Maier, G.; Ager, B.; Sommer, R.; Uhlig, S. Investigating IPv6 Traffic - What Happened at the World IPv6 Day? In Proceedings of the 13th International Conference on Passive and Active Network Measurement (PAM), Vienna, Austria, 12–14 March 2012; pp. 11–20.

22. Li, F.; An, C.; Yang, J.; Wu, J.; Zhang, H. A study of traffic from the perspective of a large pure IPv6 ISP. Comput. Commun. 2014, 37, 40–52.

23. Cao, J.; Li, Z.; Li, J. Financial time series forecasting model based on CEEMDAN and LSTM. Phys. A Stat. Mech. Its Appl. 2019, 519, 127–139.

24. Karevan, Z.; Suykens, J.A. Transductive LSTM for time-series prediction: An application to weather forecasting. Neural Netw. 2020, 125, 1–9.

25. Xie, Q.; Guo, T.; Chen, Y.; Xiao, Y.; Wang, X.; Zhao, B.Y. Deep Graph Convolutional Networks for Incident-Driven Traffic Speed Prediction. In Proceedings of the 29th ACM International Conference on Information & Knowledge Management (CIKM), Virtual Event, Ireland, 19–23 October 2020; pp. 1665–1674.

26. Madan, R.; Mangipudi, P.S. Predicting computer network traffic: A time series forecasting approach using DWT, ARIMA and RNN. In Proceedings of the 2018 Eleventh International Conference on Contemporary Computing (IC3), Noida, India, 2–4 August 2018; pp. 1–5.

27. Nassar, S.; Schwarz, K.P.; El-Sheimy, N.; Noureldin, A. Modeling inertial sensor errors using autoregressive (AR) models. Navigation 2004, 51, 259–268.

28. Winters, P.R. Forecasting sales by exponentially weighted moving averages. Manag. Sci. 1960, 6, 324–342.

29. Harvey, A.C.; Shephard, N. 10 Structural time series models. In Econometrics; Handbook of Statistics; Elsevier: Amsterdam, The Netherlands, 1993; Volume 11, pp. 261–302.

30. Lara-Benítez, P.; Carranza-García, M.; Riquelme, J.C. An Experimental Review on Deep Learning Architectures for Time Series Forecasting. Int. J. Neural Syst. 2021, 31, 2130001.

31. Abdellah, A.R.; Mahmood, O.A.; Kirichek, R.; Paramonov, A.; Koucheryavy, A. Machine Learning Algorithm for Delay Prediction in IoT and Tactile Internet. Future Internet 2021, 13, 304.

32. Alzahrani, A.O.; Alenazi, M.J. Designing a Network Intrusion Detection System Based on Machine Learning for Software Defined Networks. Future Internet 2021, 13, 111.

33. Ghazal, T.M.; Hasan, M.K.; Alshurideh, M.T.; Alzoubi, H.M.; Ahmad, M.; Akbar, S.S.; Al Kurdi, B.; Akour, I.A. IoT for Smart Cities: Machine Learning Approaches in Smart Healthcare—A Review. Future Internet 2021, 13, 218.

34. Thakur, N.; Han, C.Y. A study of fall detection in assisted living: Identifying and improving the optimal machine learning method. J. Sens. Actuator Netw. 2021, 10, 39.

35. Vukovic, D.B.; Romanyuk, K.; Ivashchenko, S.; Grigorieva, E.M. Are CDS spreads predictable during the Covid-19 pandemic? Forecasting based on SVM, GMDH, LSTM and Markov switching autoregression. Expert Syst. Appl. 2022, 194, 116553.

36. Cai, M.; Pipattanasomporn, M.; Rahman, S. Day-ahead building-level load forecasts using deep learning vs. traditional time-series techniques. Appl. Energy 2019, 236, 1078–1088.

37. Sagheer, A.; Kotb, M. Time Series Forecasting of Petroleum Production Using Deep LSTM Recurrent Networks. Neurocomputing 2019, 323, 203–213.

38. Lim, B.; Zohren, S. Time-series forecasting with deep learning: A survey. Philos. Trans. R. Soc. A 2021, 379, 20200209.

39. Lim, B.; Zohren, S.; Roberts, S. Enhancing time-series momentum strategies using deep neural networks. J. Financ. Data Sci. 2019, 1, 19–38.

40. Grover, A.; Kapoor, A.; Horvitz, E. A Deep Hybrid Model for Weather Forecasting. In Proceedings of the 21th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD), Sydney, NSW, Australia, 10–13 August 2015; pp. 379–386.

41. Whata, A.; Chimedza, C. A Machine Learning Evaluation of the Effects of South Africa’s COVID-19 Lockdown Measures on Population Mobility. Mach. Learn. Knowl. Extr. 2021, 3, 25.

42. Paxson, V.; Floyd, S. Wide area traffic: The failure of Poisson modeling. IEEE/ACM Trans. Netw. 1995, 3, 226–244.

43. Willinger, W.; Taqqu, M.S.; Sherman, R.; Wilson, D.V. Self-Similarity Through High-Variability: Statistical Analysis of Ethernet LAN Traffic at the Source Level. In Proceedings of the 1995 ACM SIGCOMM, Cambridge, MA, USA, 28 August–1 September 1995; pp. 100–113.

44. Karagiannis, T.; Faloutsos, M. SELFIS: A Tool For Self-Similarity and Long-Range Dependence Analysis. In Proceedings of the 1st Workshop on Fractals and Self-Similarity in Data Mining: Issues and Approaches (in KDD), Edmonton, AB, Canada, 23 July 2002; Volume 19.

45. Benesty, J.; Chen, J.; Huang, Y.; Cohen, I. Pearson correlation coefficient. In Noise Reduction in Speech Processing; Springer: Berlin/Heidelberg, Germany, 2009; pp. 1–4.

46. Myers, L.; Sirois, M.J. Spearman Correlation Coefficients, Differences between. In Encyclopedia of Statistical Sciences; John Wiley & Sons: Hoboken, NJ, USA, 2006; Available online: http://onlinelibrary.wiley.com/doi/10.1002/0471667196.ess5050.pub2/abstract (accessed on 18 October 2022).

47. Abdi, H. The Kendall rank correlation coefficient. In Encyclopedia of Measurement and Statistics; SAGE: Thousand Oaks, CA, USA, 2007; pp. 508–510.

48. Pugach, I.Z.; Pugach, S. Strong correlation between prevalence of severe vitamin D deficiency and population mortality rate from COVID-19 in Europe. Wien. Klin. Wochenschr. 2021, 133, 403–405.

49. Tang, K.; Chin, B. Correlations between Control of COVID-19 Transmission and Influenza Occurrences in Malaysia. Public Health 2021, 198, 96–101.

50. Qiao, C.; Wang, J.; Wang, Y.; Liu, Y.; Tuo, H. Understanding and Improving User Engagement in Adaptive Video Streaming. In Proceedings of the 2021 IEEE/ACM 29th International Symposium on Quality of Service (IWQoS), Tokyo, Japan, 25–28 June 2021; pp. 1–10.

51. Gong, Q.; Chen, Y.; He, X.; Zhuang, Z.; Wang, T.; Huang, H.; Wang, X.; Fu, X. DeepScan: Exploiting Deep Learning for Malicious Account Detection in Location-Based Social Networks. IEEE Commun. Mag. 2018, 56, 21–27.

52. Ferretti, M.; Fiore, U.; Perla, F.; Risitano, M.; Scognamiglio, S. Deep Learning Forecasting for Supporting Terminal Operators in Port Business Development. Future Internet 2022, 14, 221.

53. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

54. Graves, A.; Schmidhuber, J. Framewise phoneme classification with bidirectional LSTM and other neural network architectures. Neural Netw. 2005, 18, 602–610.

55. Neil, D.; Pfeiffer, M.; Liu, S. Phased LSTM: Accelerating Recurrent Network Training for Long or Event-based Sequences. In Proceedings of the 2016 Advances in Neural Information Processing Systems (NIPS), Barcelona, Spain, 5–10 December 2016; pp. 3882–3890.

56. Gong, Q.; Zhang, J.; Chen, Y.; Li, Q.; Xiao, Y.; Wang, X.; Hui, P. Detecting Malicious Accounts in Online Developer Communities Using Deep Learning. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management (CIKM), Beijing, China, 3–7 November 2019; pp. 1251–1260.

57. Gong, Q.; Chen, Y.; He, X.; Xiao, Y.; Hui, P.; Wang, X.; Fu, X. Cross-site Prediction on Social Influence for Cold-start Users in Online Social Networks. ACM Trans. Web (TWEB) 2021, 15, 1–23.

58. Zhan, G.; Xu, J.; Huang, Z.; Zhang, Q.; Xu, M.; Zheng, N. A Semantic Sequential Correlation Based LSTM Model for Next POI Recommendation. In Proceedings of the 20th IEEE International Conference on Mobile Data Management (MDM), Beijing, China, 10–13 June 2019; pp. 128–137.

59. Donoso-Oliva, C.; Cabrera-Vives, G.; Protopapas, P.; Carrasco-Davis, R.; Estevez, P.A. The effect of phased recurrent units in the classification of multiple catalogues of astronomical light curves. Mon. Not. R. Astron. Soc. 2021, 505, 6069–6084.

60. Sepasgozar, S.S.; Pierre, S. Network Traffic Prediction Model Considering Road Traffic Parameters Using Artificial Intelligence Methods in VANET. IEEE Access 2022, 10, 8227–8242.

61. Zhang, A.; Liu, Q.; Zhang, T. Spatial-temporal attention fusion for traffic speed prediction. Soft Comput. 2022, 26, 695–707.

62. Vivas, E.; Allende-Cid, H.; Salas, R. A Systematic Review of Statistical and Machine Learning Methods for Electrical Power Forecasting with Reported MAPE Score. Entropy 2020, 22, 1412.

63. Mao, W.; He, J.; Sun, B.; Wang, L. Prediction of Bearings Remaining Useful Life Across Working Conditions Based on Transfer Learning and Time Series Clustering. IEEE Access 2021, 9, 135285–135303.

| Dataset | Method | IPv4 Downstream | IPv6 Downstream | IPv4 Upstream | IPv6 Upstream |
|---|---|---|---|---|---|
| DHU | R/S | 0.6703 | 0.6609 | 0.6962 | 0.6957 |
| DHU | A/V | 0.6936 | 0.6671 | 0.6355 | 0.6984 |
| DHU | P | 0.5936 | 0.6315 | 0.5503 | 0.6285 |
| ECNU | R/S | 0.7159 | 0.6990 | 0.8104 | 0.8090 |
| ECNU | A/V | 0.6652 | 0.6698 | 0.8253 | 0.7843 |
| ECNU | P | 0.5476 | 0.6740 | 0.6771 | 0.7349 |

| Dataset | Method | Downstream | Upstream |
|---|---|---|---|
| DHU | Pearson | 0.934 | 0.842 |
| DHU | Spearman | 0.948 | 0.887 |
| DHU | Kendall | 0.804 | 0.699 |
| ECNU | Pearson | 0.907 | 0.779 |
| ECNU | Spearman | 0.915 | 0.844 |
| ECNU | Kendall | 0.740 | 0.660 |
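
To make the three correlation measures in the table above concrete, the following Python sketch computes the Pearson, Spearman, and Kendall coefficients for a pair of series. It is a minimal illustration only: the function names, the use of the tau-b variant of Kendall's coefficient, and the toy `ipv4` and `ipv6` values are assumptions of this example and are not the authors' code or data.

```python
import math


def pearson(x, y):
    """Pearson correlation: covariance over the product of deviations."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def average_ranks(values):
    """Rank values from 1, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman correlation: Pearson correlation of the rank vectors."""
    return pearson(average_ranks(x), average_ranks(y))


def kendall_tau_b(x, y):
    """Kendall tau-b from concordant and discordant pairs (O(n^2))."""
    concordant = discordant = ties_x = ties_y = 0
    n = len(x)
    for i in range(n):
        for j in range(i + 1, n):
            dx, dy = x[i] - x[j], y[i] - y[j]
            if dx == 0 and dy == 0:
                continue  # tied in both series: contributes to no term
            if dx == 0:
                ties_x += 1
            elif dy == 0:
                ties_y += 1
            elif dx * dy > 0:
                concordant += 1
            else:
                discordant += 1
    denom = math.sqrt((concordant + discordant + ties_x)
                      * (concordant + discordant + ties_y))
    return (concordant - discordant) / denom


# Hypothetical toy values: monotonically related but not linear.
ipv4 = [1, 2, 3, 4, 5]
ipv6 = [1, 4, 9, 16, 30]
print(pearson(ipv4, ipv6), spearman(ipv4, ipv6), kendall_tau_b(ipv4, ipv6))
```

On this toy input the two rank-based coefficients reach 1 because the relation is strictly increasing, while the Pearson coefficient stays below 1 because the relation is not linear.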

| Dataset | Model | MAPE |
|---|---|---|
| DHU | Naive-2h | 0.7664 |
| DHU | Naive-24h | 0.2878 |
| DHU | ARIMA | 0.7556 |
| DHU | SARIMA | 0.2675 |
| DHU | SVM | 0.7471 |
| DHU | LSTM | 0.4557 |
| DHU | Bi-LSTM | 0.3678 |
| DHU | PLSTM | 0.7975 |
| DHU | LS6 | 0.2410 |
| ECNU | Naive-2h | 0.6062 |
| ECNU | Naive-24h | 0.3546 |
| ECNU | ARIMA | 0.7418 |
| ECNU | SARIMA | 0.3175 |
| ECNU | SVM | 0.6998 |
| ECNU | LSTM | 0.3367 |
| ECNU | Bi-LSTM | 0.5479 |
| ECNU | PLSTM | 0.4509 |
| ECNU | LS6 | 0.3146 |

| Model | MAPE |
|---|---|
| LS6 (w/o SARIMA_v6) | 0.4882 |
| LS6 (w/o SARIMA_v4) | 0.4108 |
| LS6 (w/o LSTM_v6) | 1.2277 |
| LS6 (w/o LSTM_v4) | 0.4045 |
| LS6 (w/o IPv4) | 0.3569 |
| LS6 (w/o SARIMA) | 0.3776 |
| LS6 (w/o LSTM) | 0.7950 |
| LS6 | 0.3146 |

| Dataset | Model | MAPE |
|---|---|---|
| DHU | LS6 | 0.2410 |
| DHU | LS6 (combine) | 0.2317 |
| ECNU | LS6 | 0.3146 |
| ECNU | LS6 (combine) | 0.2953 |

| Dataset | Model | MAPE (24 h) | MAPE (Directly) |
|---|---|---|---|
| DHU | SVM | 0.6432 | 0.7471 |
| DHU | LSTM | 0.4143 | 0.4557 |
| DHU | Bi-LSTM | 0.4529 | 0.3678 |
| DHU | PLSTM | 0.3215 | 0.7975 |
| DHU | LS6 | 0.3998 | 0.2410 |
| ECNU | SVM | 0.3992 | 0.6998 |
| ECNU | LSTM | 0.3287 | 0.3367 |
| ECNU | Bi-LSTM | 0.3263 | 0.5479 |
| ECNU | PLSTM | 0.3453 | 0.4509 |
| ECNU | LS6 | 0.3428 | 0.3146 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sun, Z.; Ruan, H.; Cao, Y.; Chen, Y.; Wang, X. Analysis and Prediction of the IPv6 Traffic over Campus Networks in Shanghai. Future Internet 2022, 14, 353. https://doi.org/10.3390/fi14120353