Computer Vision for Fire Detection on UAVs—From Software to Hardware

Abstract

1. Introduction

2. Fire Detection Using Computer Vision on UAVs

2.1. Related Work

2.2. Fire Detection Framework

3. Literature Review

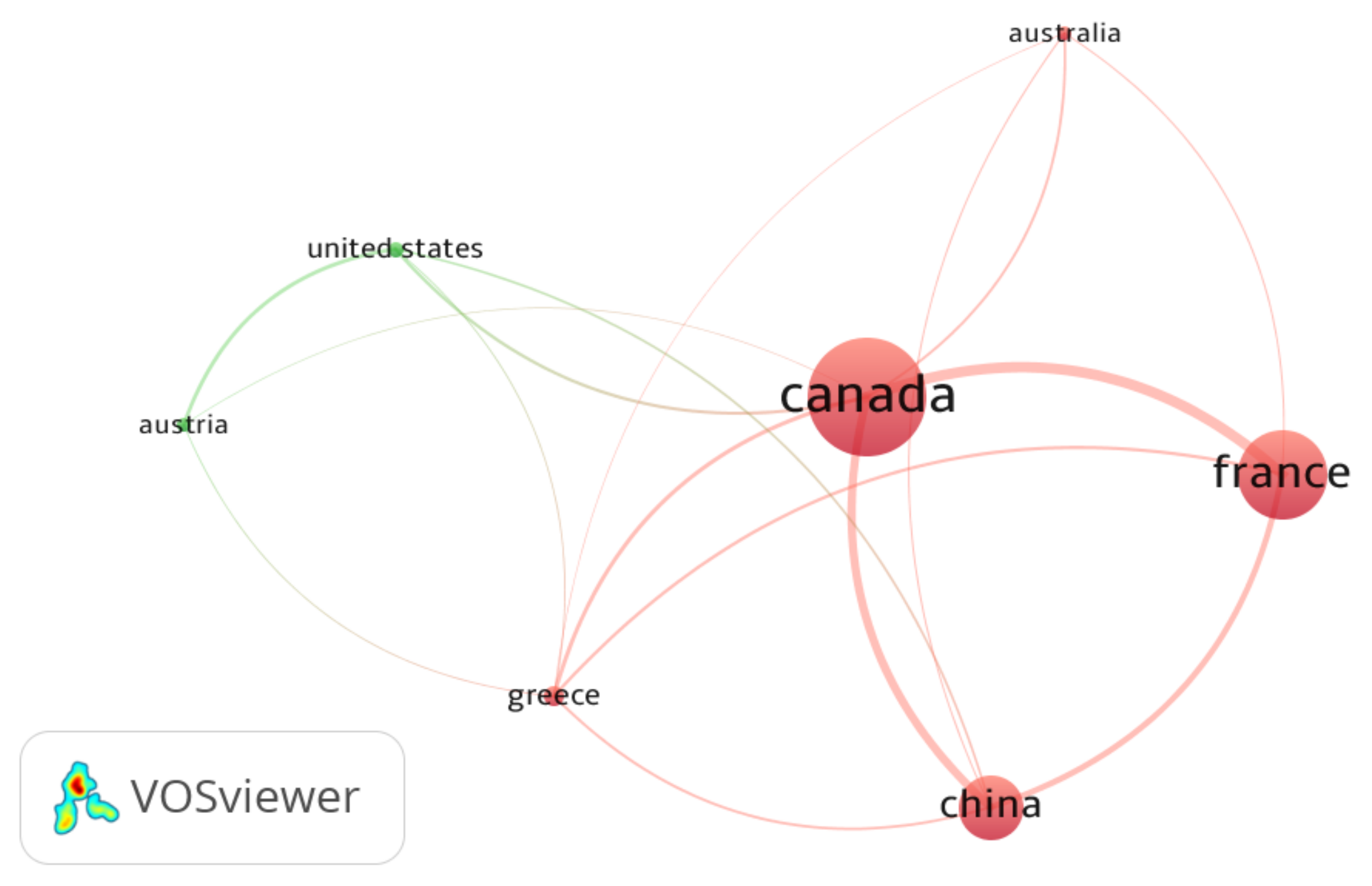

3.1. Research Execution

3.1.1. Research Questions

3.1.2. Research Database

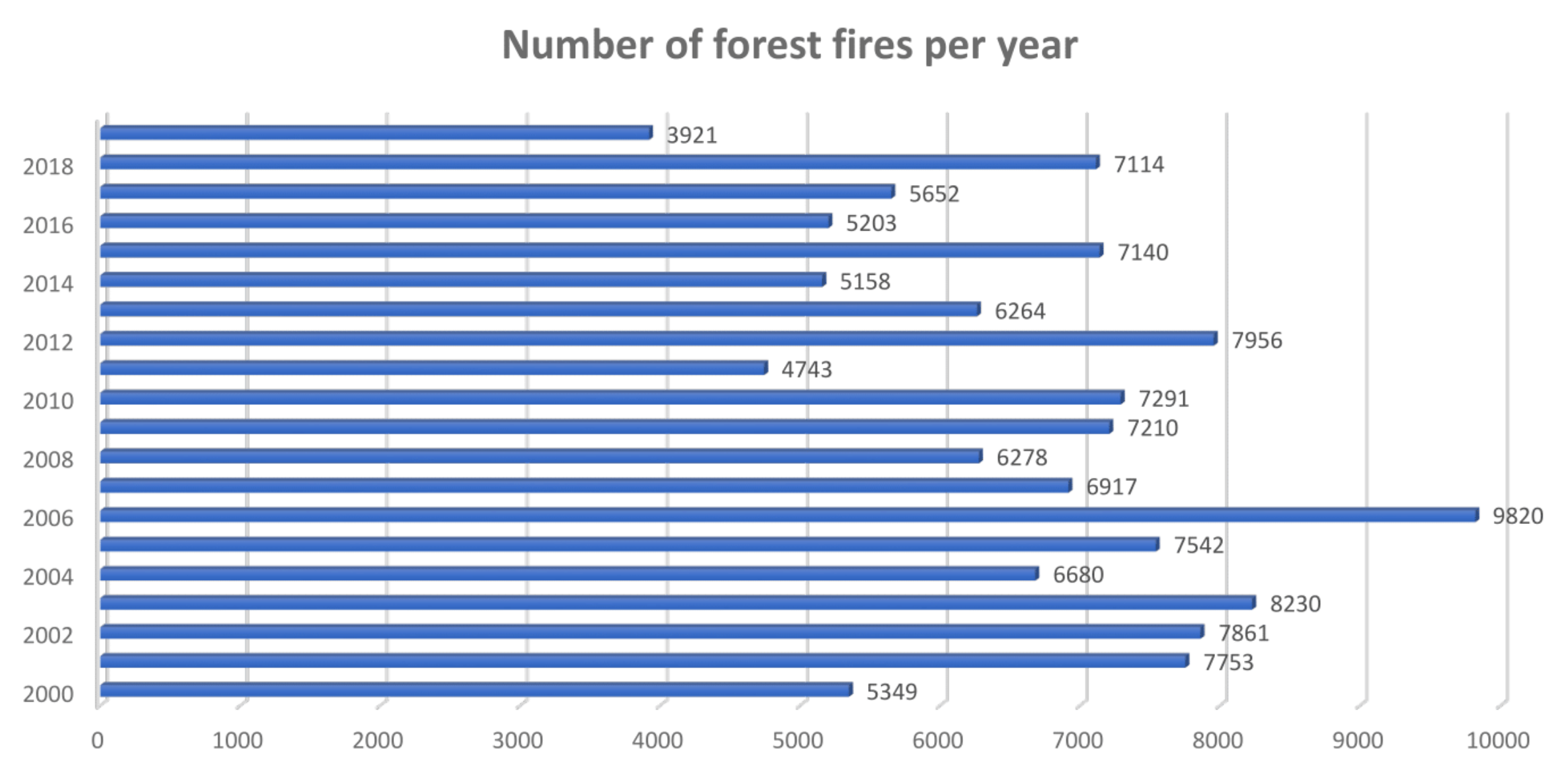

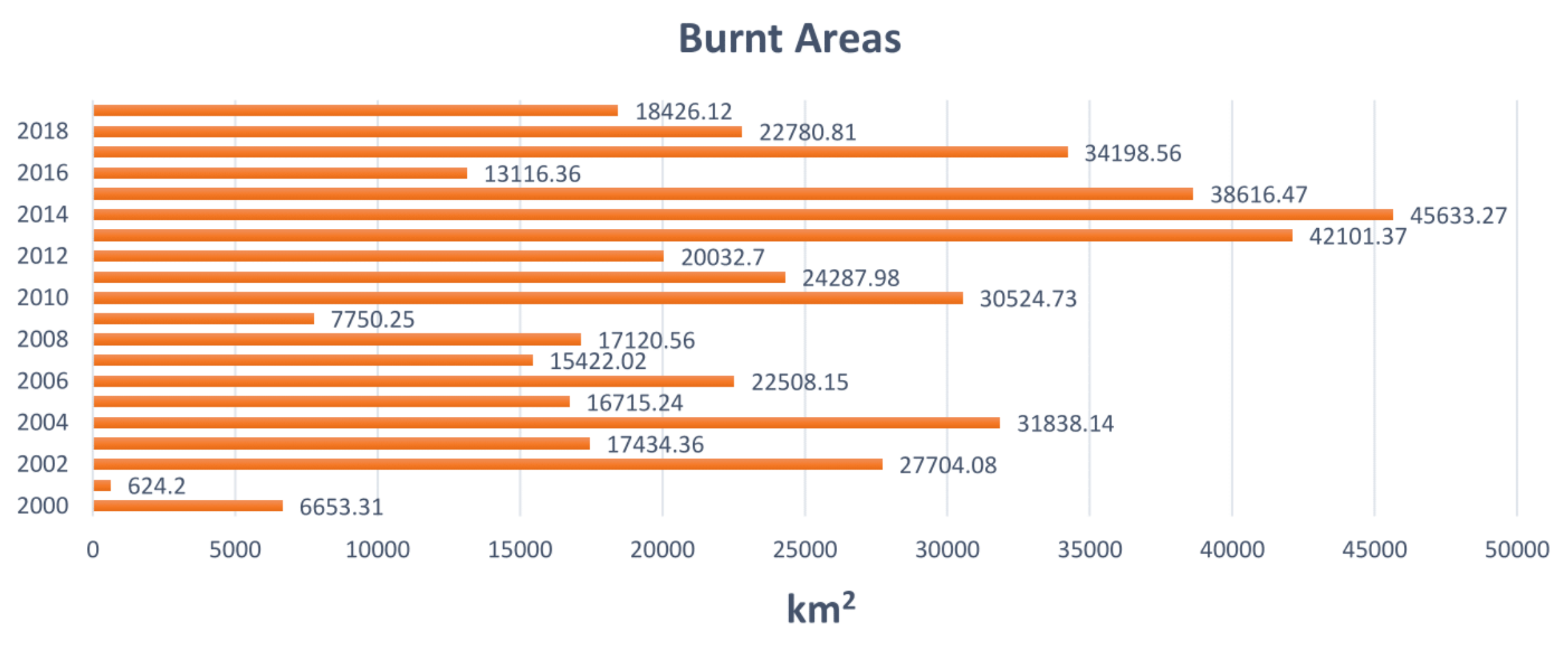

3.2. Research Early Statistics

4. Taxonomy

4.1. Hardware

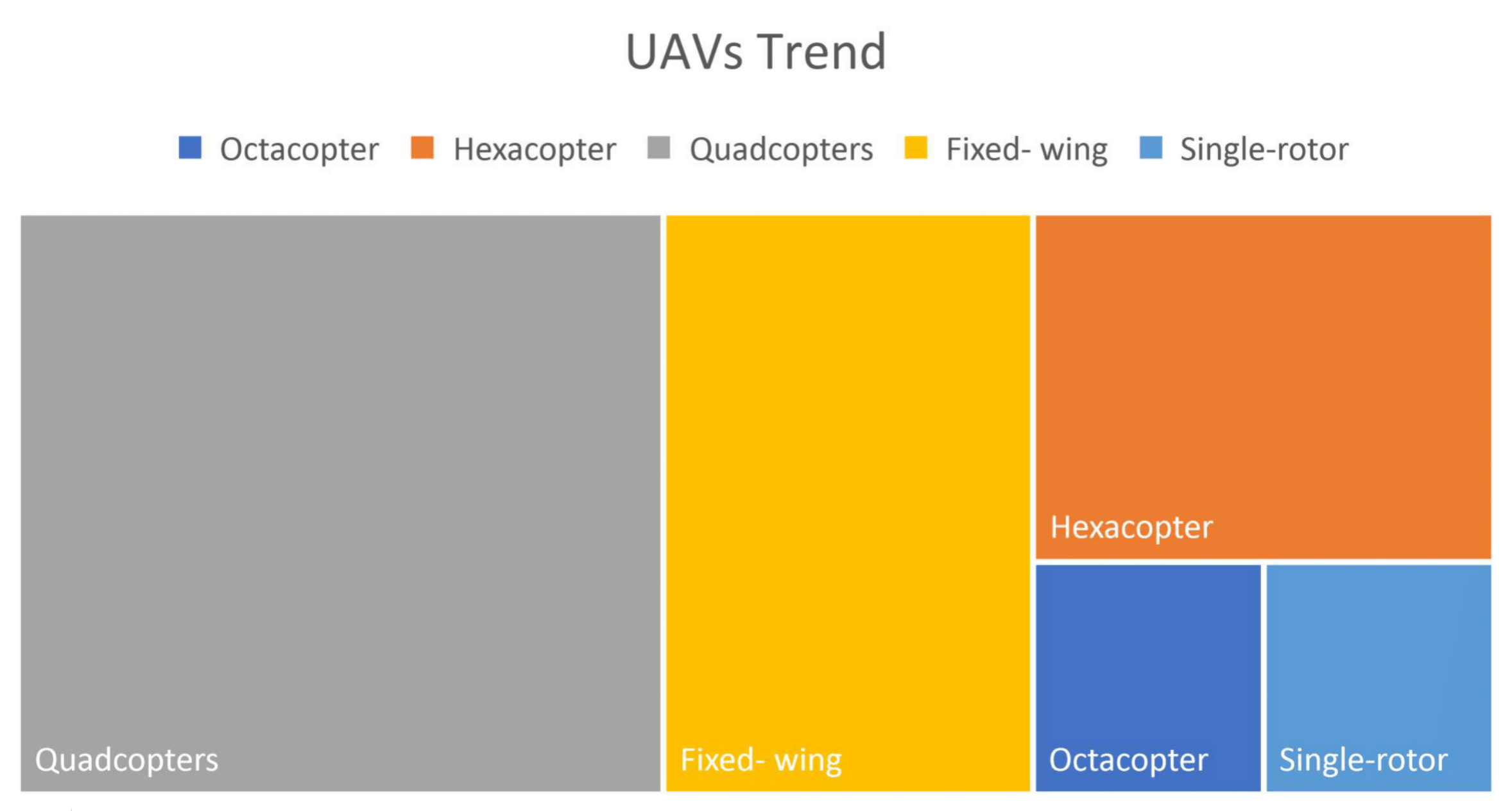

4.1.1. UAVs

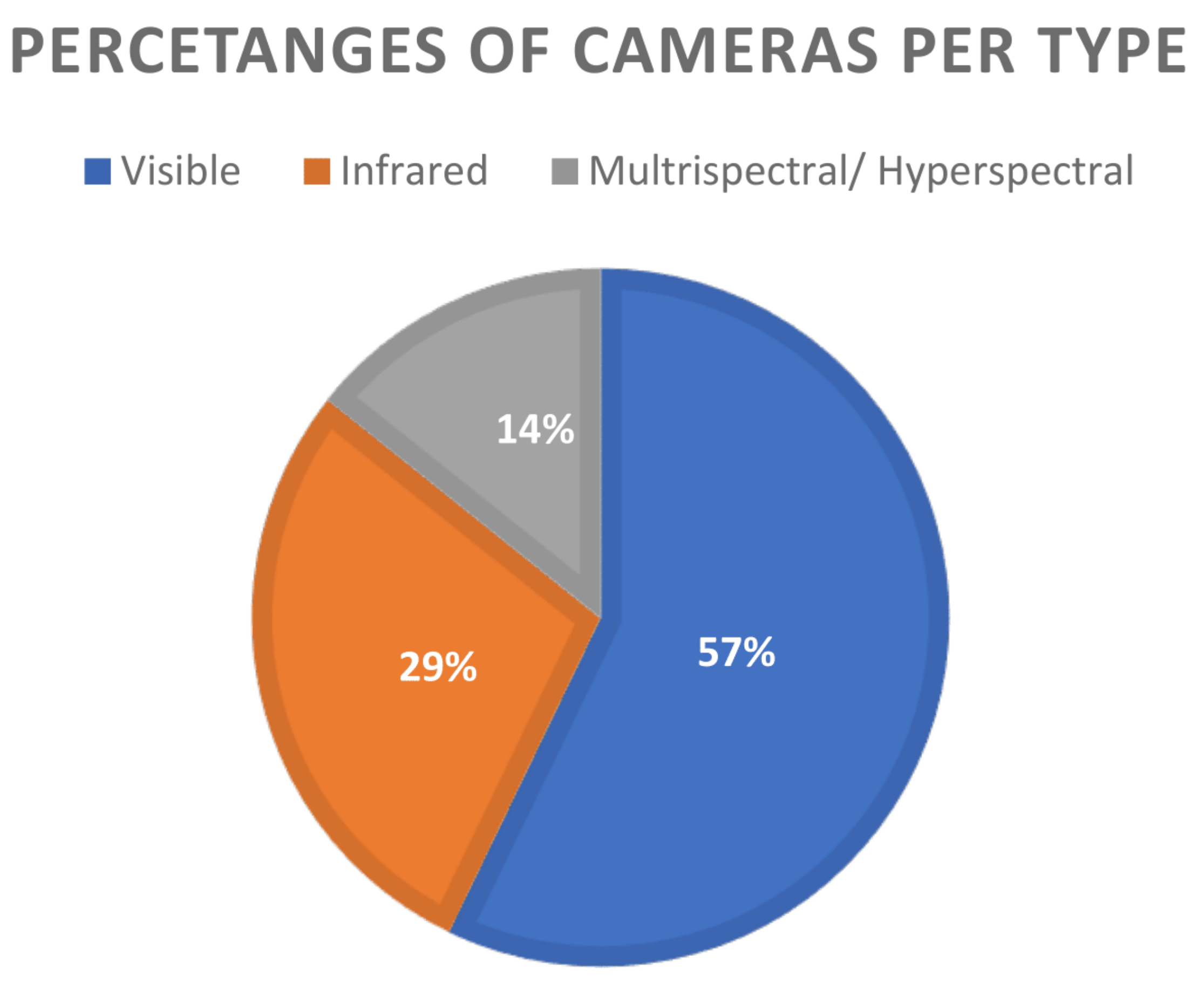

4.1.2. Cameras

4.2. Software/Method

4.3. Datasets

5. Discussion

6. Conclusions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CCD | Charge-Coupled Device |
| CNN | Convolutional Neural Network |
| FFDI | Forest Fire Detection Index |
| FPGA | Field-Programmable Gate Array |
| GNSS | Global Navigation Satellite System |
| GPS | Global Positioning System |
| HD | High Definition |
| LBP | Local Binary Pattern |
| RGB | Red-Green-Blue |
| ROS | Robot Operating System |
| SVM | Support Vector Machine |
| SVS | Synthetic Vision System |
| UAS | Unmanned Aerial Systems |
| UAV | Unmanned Aerial Vehicle |
| UCAV | Unmanned Combat Aerial Vehicle |
| UV | Ultraviolet |
| YOLO | You Only Look Once |

| Type | Weight |
|---|---|
| Super Heavy | W > 2000 kg |
| Heavy | 200 kg < W ≤ 2000 kg |
| Medium | 50 kg < W ≤ 200 kg |
| Light | 5 kg < W ≤ 50 kg |
| Micro | W ≤ 5 kg |
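
The weight classes in the table above amount to a chain of threshold checks. The following is a minimal sketch of that lookup in Python. The function name `classify_uav_by_weight` is hypothetical, and rejecting non-positive weights is an added assumption, not something the table states.

```python
def classify_uav_by_weight(weight_kg: float) -> str:
    """Map a UAV weight in kilograms to the weight class from the table.

    Boundaries follow the table: each upper bound is inclusive
    (for example, exactly 5 kg is still "Micro").
    """
    # Assumption: a non-positive weight is treated as invalid input.
    if weight_kg <= 0:
        raise ValueError("weight_kg must be positive")
    if weight_kg > 2000:
        return "Super Heavy"
    if weight_kg > 200:
        return "Heavy"
    if weight_kg > 50:
        return "Medium"
    if weight_kg > 5:
        return "Light"
    return "Micro"
```

Checking from the heaviest class downward keeps each branch to a single comparison, because every earlier check has already excluded the heavier ranges.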

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Moumgiakmas, S.S.; Samatas, G.G.; Papakostas, G.A. Computer Vision for Fire Detection on UAVs—From Software to Hardware. Future Internet 2021, 13, 200. https://doi.org/10.3390/fi13080200
