1. Introduction

In classical mass society, political reality was shaped by traditional media owned by government institutions and political elites. The situation has changed, and more than once. Political reality itself, which used to be unified for the majority of audiences united by the mass media, has now disintegrated into a number of local areas. A plural network political reality is created by horizontal communities based on their hierarchies. Local realities are constructed in “bubbles”—closed communities with their own hierarchy, distribution of social roles, leadership, etc. Partial identities are formed in them, and partial models of political reality are constructed. Moreover, such models can radically differ from community to community.

A fragmented and disconnected set of networked realities has become a breeding ground for new approaches to the manipulation of public opinion, mass consciousness and finally, electoral choice. This eventually created ground for so-called “special operations in social networks”. This refers to the covert influence on audiences for political purposes.

There are quite a number of studies and papers devoted to the detection of bots during election campaigns [

1,

2,

3,

4], models [

5,

6], algorithms and techniques for detecting artificial activity [

7] and ways to detect information dissemination paths [

8]. However, in all these works there is a wide variety of terms and basic concepts for similar objects or operations. Therefore, it is first necessary to define the basic concepts, select the related concepts, and outline the framework.

It can be said that attempts are being made to manipulate public opinion as part of election campaigns. By manipulating public opinion, we, following Forell, Howard, Monroy-Hernandez, Savage and Mexico, understand the deliberate hidden influence on public opinion in order to form positions and assessments beneficial to the manipulator [

9,

10]. From our point of view, within the framework of general theoretical approaches to the analysis of the processes of manipulation of public opinion in the digital environment, it is necessary to expand and clarify the terminological apparatus. In particular, we propose to use a subjective approach and introduce the concept of a special information operation implemented in social networks (Social Media Special Operations). To solve this problem, we borrow the concept of special operations from the theory of wars and the art of war. There, special operations are defined as “special missions to achieve strategic goals in which the use of conventional armed forces would create unacceptable risks. Overcoming these risks requires special operations forces...” [

11]. In line with this approach, we further suggest that Social Media Special Operations could be defined as a set of coordinated technological solutions implemented by software and means of social engineering that covertly manage the co-consciousness and behavior (both online and offline) of social media users for the benefit of political actors. Among such special operations we suggest to include operations on giving the users an impression or image of large public network support for certain political projects. This illusion creates a high online reputation for these projects and psychologically encourages those who have not yet formed their attitudes to join the majority and support these projects. We propose that special network operations of this type be labelled as operations to generate the illusion of public support or operations to generate induced support for a political project. This interpretation of Social Media Special Operations makes them one of the technologies used for manipulating public opinion in the digital environment.

We propose the term Network Combatants (NC or Combatants). These are active and specifically engaged participants in conflicts or special operations in social media. They could be bots as well as real people who deliberately carry out their activities, in some cases for compensation. The use of the term stems from a basic understanding of Combatants as persons taking a direct part in the combat operations of armed forces during an armed conflict [

12,

13]. In the case of international conflicts, the Geneva Convention is known to give combatants a special status [

14]. In the case of information confrontations on social media, NC confront the so-called “online citizenry”.

Induced support for a project is further understood to mean the network social capital artificially generated for the project through the network activities of the actors (Network Combatants) who create traffic of induced content beneficial to the project in the interested communities. Such engaged actors, or in other words agents of induced activity, can be paid agents from the real world as well as virtual agents or bots.

There are many terms for Network Combatant accounts in the academic literature: (1) Sybils are automatic/semi-automatic profiles designed to mimic human profiles [

15]; (2) Imposters—fake accounts [

16]; (3) False identity—a made-up identity with a stolen photo and/or false information [

17]; (4) A fake-account is an active user account created and monitored by an intruder for illegal purposes, including the collection of personal data [

18]; (5) Social bots—computer-based, robotic programs that pretend to be people [

19]. There are now many publications devoted to the search for traces of artificial activity in political election campaigns. As [

20] points out, the possibility of fake/fake personalities in the political arena certainly existed before. However, the development of digital reality and social networks has made it possible to use induced activity more widely. Bots, trolls, fake or stolen accounts, etc., are all in the election campaign. All this has created additional opportunities for special operations in which the impact is directed at network horizontal communities with their own hierarchies.

When we talk about the dynamics of political consciousness and political behavior of social network users in a specific situation such as election campaign, then we are talking about the fact that these dynamics can unfold in two modes. We denote them as spontaneous (natural) and induced (artificial). In the first mode, competing political actors campaign in all forms allowed by law, including social media. The voter perceives this campaign information and forms his electoral preferences. There is what we call a natural (spontaneous) process of electoral preference formation. However, if any of the competing political actors resort to a special operation to create imaginary support during an election campaign, and these artificial activities are effective, we see an induced dynamic of electoral preference formation. To emphasize an important point once again, these transactions are generally outside the scope of electoral legislation.

This article focuses on two aspects, firstly, on the methodology of identifying induced activity in social media during project implementation, and secondly, on the phenomenon of induced activity itself during political campaigns. In our work we aim not to show that artificial activity in social networks takes place, but rather to focus the attention of both the professional community and the wider public on the fact that it is an extremely common practice and, in our view, it is important to raise the need for such techniques and practices that can conditionally “distinguish” such activity from the general population for the voter. That is, models, techniques and algorithms are needed to ensure that individuals are protected from the effects of special operations.

This paper proposes a model, algorithm and technique for monitoring and diagnosing the activities of combatants that differs from existing approaches and solutions in that it provides detection of special operations Network Combatants during the implementation of a political project.

The paper is structured as follows: the second section presents an overview of relevant works. The third section contains a model of induced activity and methods of monitoring and detection of induced activity. The empirical basis is the results of case studies on the use of induced activity during election campaigns in a major Russian region in 2019. The fourth section contains the results of the experiment and the fifth section, the conclusion and discussion, concludes the paper.

2. Related Works

Since the advent of social media, the research community has been puzzled by the problem of reliably identifying social media fake accounts. Research can be divided into two parts: (1) social and political sciences (who and what a bot is; its scope; social and political functions; case studies devoted to analyzing specific examples of induced activity); (2) technical sciences (methods for detecting bots and fake activity or features of spreading false information). According to the main directions of research, the review of relevant works is divided into two parts: the first part is dedicated to a group of social and political studies, the second part contains a description of models and algorithms of detection of bots or information dissemination.

The first strand of research, in our view, focuses more on the socio-political effects and regulatory aspects of the use of NC.

All terminology is in one way or another based on the functional characteristics of the accounts and is mostly related to information security in the information space. Thus, [

16,

17] states that the main purpose of creating fake accounts involves malicious activities related to extremism, fraud, identity theft, bullying and violence. As highlighted by the researchers in [

17], robot accounts—bots—are most commonly used for malicious activity in an automated mode. In this case, a social media bot is akin to a chatbot in an app; it works according to a predetermined algorithm for human-defined purposes. The difference between a chatbot in apps and a malicious bot is that a “chat-bot” is easily identifiable by its association with a social networking project or channel (community). At the same time there are those fake social media accounts that tend to mimic a real person as much as possible. Detecting them is the main problem. Detecting a bot whose behavior is automated is much easier.

It should be noted that if we characterize a fake account as a social network user who has some inaccurate information on his page, then theoretically all social network accounts can be classified as fake accounts. Because human beings can be inaccurate, they can make mistakes, they have a right to misrepresent themselves on social media. They can put on masks and play games. In our opinion, the problem of distinguishing real accounts from fake ones seems to be more complex, which also lies in the field of communication deontology. This begs the question: where is the line between freedom of expression, role-playing and malicious misleading of other network users?

After all, fake accounts are very often used both for commercial and political purposes. We agree with the researchers who argue that fake accounts are neither good nor bad, rather it is the intention behind them that matters [

21]. Let us consider, for example, the field of commercial applications. This is where fake accounts are used to increase the number of contributors, views, and likes, and there are plenty of exchanges where you can order everything from likes to comments, see for example Amazon Mechanical Turk [

22], qcomment [

23], freelancer [

24], zbj [

25] and buyaccs [

26]. The scientific community uses a special term for such exchanges—crowdturfing [

17,

27,

28,

29,

30]. On these stock exchanges, anyone can buy the services of robotic accounts.

In parallel to the commercial sphere, fake accounts have become more widespread in the field of political communication. Research to identify fake activity is ongoing around the world, with local, regional, and federal elections coming under the scrutiny of researchers. For example, Ferrara et al. analyzed the presence of artificial activity during Trump’s 2016 US election, Macron’s 2017 election in France [

1], Keller and Klinger focused on elections in Germany [

2], Borge-Holthoefer et al. analyzed the presence of artificial activity during the 15 May movement in Spain [

3], Ruediger et al. conducted an extensive study from the 2014 presidential election in Brazil to the referendum vote in 2017 [

4]. It is not just elections that have come to their attention, but also local conflict situations, such as the strike in 2017 or the US impeachment debate and the Catalan referendum. The increasing popularity of the use of fake accounts in political campaigns is clearly demonstrated in the work of Pastor-Galindo and colleagues [

31]. Whereas in 2014 they noted artificial activity in two countries, in 2019 they noted 13 countries where fake Twitter activity was used during election campaigns. The strength of the research to detect such activity is undoubtedly to capture the fact and to draw attention to the problem, the weakness is that researchers focus on the social network Twitter, whereas there are other networks such as Facebook, Instagram, TikTok, YouTube, etc. and that often this research is focused on finding bot farms from robotic accounts.

Summarizing the review of relevant works from the social and political sciences, it should be noted that the heterogeneity of concepts and terms for the same objects leads to collisions. As a consequence, the task of developing an algorithm for detecting induced activity, NC, and signs of special network operations becomes more complicated. It is therefore necessary to classify fake accounts.

The second line of study focuses more on the techno-mathematical realm. Here the category of Influence is put in the center of discussion. Influence in these approaches is interpreted as the process and result of a subject changing the behavior of another subject (individual or collective object of influence). Influence can be seen from two perspectives: (1) on the side of the recipient, (2) on the side of the disseminator of information.

Studies aimed at finding purposeful Influence are divided into those in which the authors look for communities or major nodes in communities. In such a case, the aim is to detect and assess the disseminator and the way the information is disseminated (diffusion). Studies are also divided into those in which the authors assess from the recipient’s reaction or the audience’s response to the Influence.

Let us consider the models and algorithms that we propose to place in the first group (recipient side):

In 1962, a penetration of innovation model was proposed by E. Rogers. This model is still used in research aimed at detecting the management or manipulation of users in social networks [

32]. Algorithms based on this model find innovators and then their contributors in social media.

Frank Bass’ multiple penetration of innovation model: According to Bass’s model [

7] agents in a network can be either active or inactive, i.e., agents have a binary state. Bass distinguishes two types of agents: (1) innovators; (2) imitators. In [

8], the authors propose a new model, an improved version of Bass’s model, which allows identifying influential channels on YouTube.

The spiral of silence is a theory proposed by Noelle-Neumann in 1974 [

33,

34]. His theory claims that people, driven by a fear of isolation, constantly monitor their opinions to assess whether they conform or contradict the majority opinion [

35]. As social networks become more popular for co-social interaction and political participation, the spiral theory of silence in social networks has become a popular subject of research [

34,

36] and can be used to detect Influence in social networks.

Models and algorithms that we propose to classify into the second group (the disseminator side) are, as follows:

Independent cascade models: Such models describe an information cascade, i.e., the dissemination of information through neighbors. To block propagation of the cascade between communities, the maximum value for the link fraction is calculated [

5,

6]. In a cascade, a central node is singled out, and it is basically a disseminator of information. Algorithms based on a cascade model allow for the detection of the most influential agents through their connection to multiple recipients. In [

6], algorithms based on independent information, cascade models are proposed.

Granovetter threshold model (threshold model) [

37]: In [

38], the authors show how the linear threshold model GLT (an evolution of the Granovetter model) can more accurately simulate the dissemination of information in social media and detect disseminators.

Graph models: Social network graph models are used to analyze information dissemination processes, finding communities and related subgroups into which the entire social network can be broken down. In [

39], the authors suggest an approach to detecting bots by visualizing the graph of communication between social network users. In [

40], the authors presented an approach for detecting fake accounts based on their online interactions with other users.

Summarizing the review of relevant models and algorithms for detecting signs of processes and results of a subject changing the behavior of another subject, i.e., the Influence on public opinion, we can say that the most effective would be to measure user activity within a political project. This corresponds, for instance, to Everett Rogers’ penetration of innovation model, Spiral of silence, and the Granovetter model. However, relevant studies have not considered the task of identifying NC (innovators, initiators, agitators) and there is no methodology for detecting induced activity in the known studies.

Summing up the first and second part of the review, we note that a review of relevant works suggests that there are a sufficient number of tools, approaches that can be adapted to the task of detecting induced activity, NC or features of network operations. However, it is necessary to select and combine their strengths to detect induced activity during the implementation of a political project. Our aim in this paper is to propose a methodology for detecting and diagnosing induced support for a political project in social media.

3. Methodology for Identifying and Diagnosing Induced Support for a Political Project

According to most models and algorithms that analyze the reactions of recipients, the entire audience is divided into quiet observers and activists. Thus, the most important difference between Influence actors (Network Combatants) and the civilian population in a political project would be the level of activity: the number of messages, the number of likes, etc. A methodology is needed to separate the civilian population from the combatants in the social network.

The proposed methodology includes an induced activity model, an algorithm for segmenting the audience of a political project by activity level, and a technique for detecting induced activity. Thus, the methodology provides the identification and diagnosis of induced support for a political project.

3.1. Model of Induced Activity in Social Networks

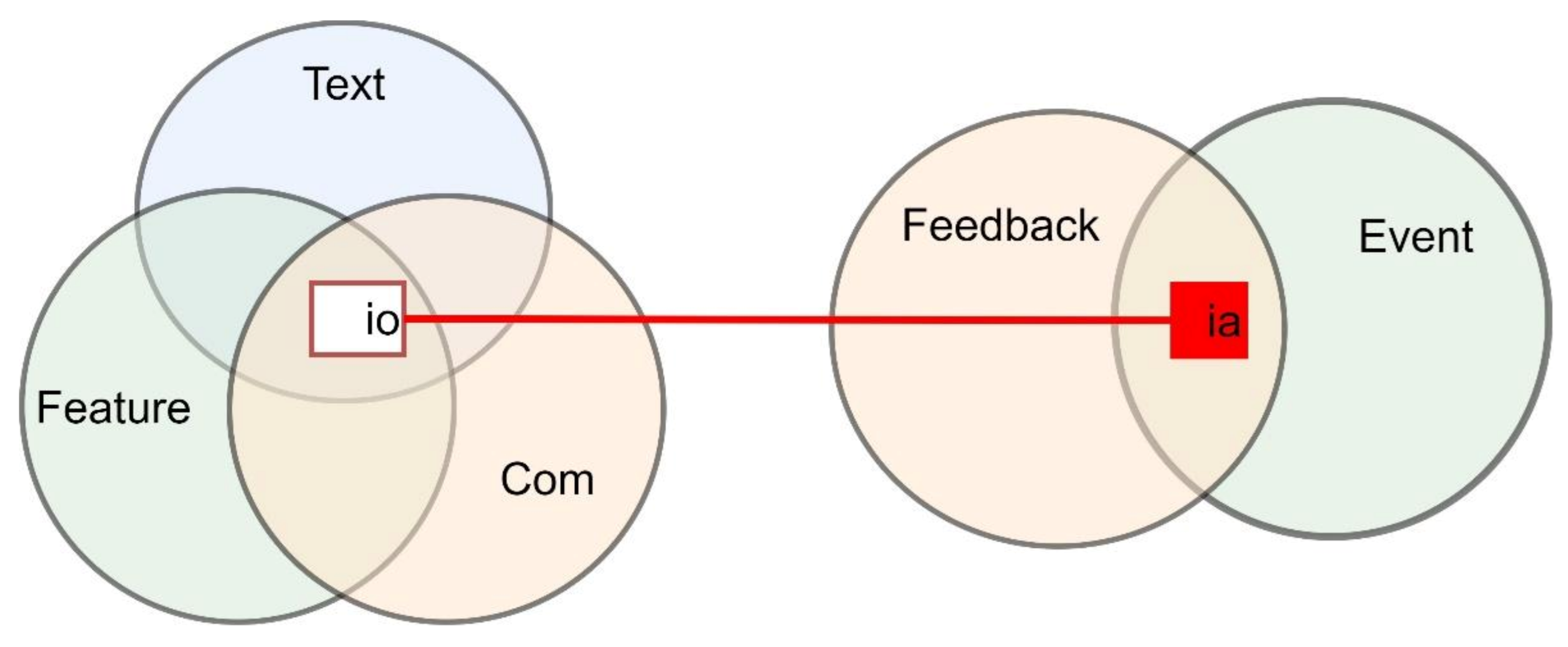

The set-theoretic model of induced activity in a social network includes such basic elements as:

IO—set of information objects;

IA—set of induced activity;

Text—a set of textual attributes of the information object;

Feature—the set of discrete characteristics of the information object;

Com—the set of links between objects in the social network;

Feedback—many signs of feedback from the audience;

Event—event, political project.

The model can be represented as follows (

Figure 1)

Let the information object in social networks have a number of properties:

Let us suppose the following:

It is possible to identify information objects that deviate from the norm, i.e., have anomalous characteristics by textual characteristics, connections, or by discrete features;

It is possible to identify feedback characteristics for information objects that will differ from the norm;

Then:

In accordance with the proposed model, we will formulate the concept of induced activity:

An induced activity is a set of information objects united by textual features, discrete characteristics, and connections with another information objects around one event, activity, or theme, designed to increase the level of feedback from the audience and form a necessary point of view.

The proposed model of induced activity differs from the existing ones in that it takes into account the level of audience feedback in the social network of the political project, connection between informational objects, as well as their textual and discrete characteristics. At the same time, the model allows to form the requirements for the algorithm of segmentation of the audience of the political project.

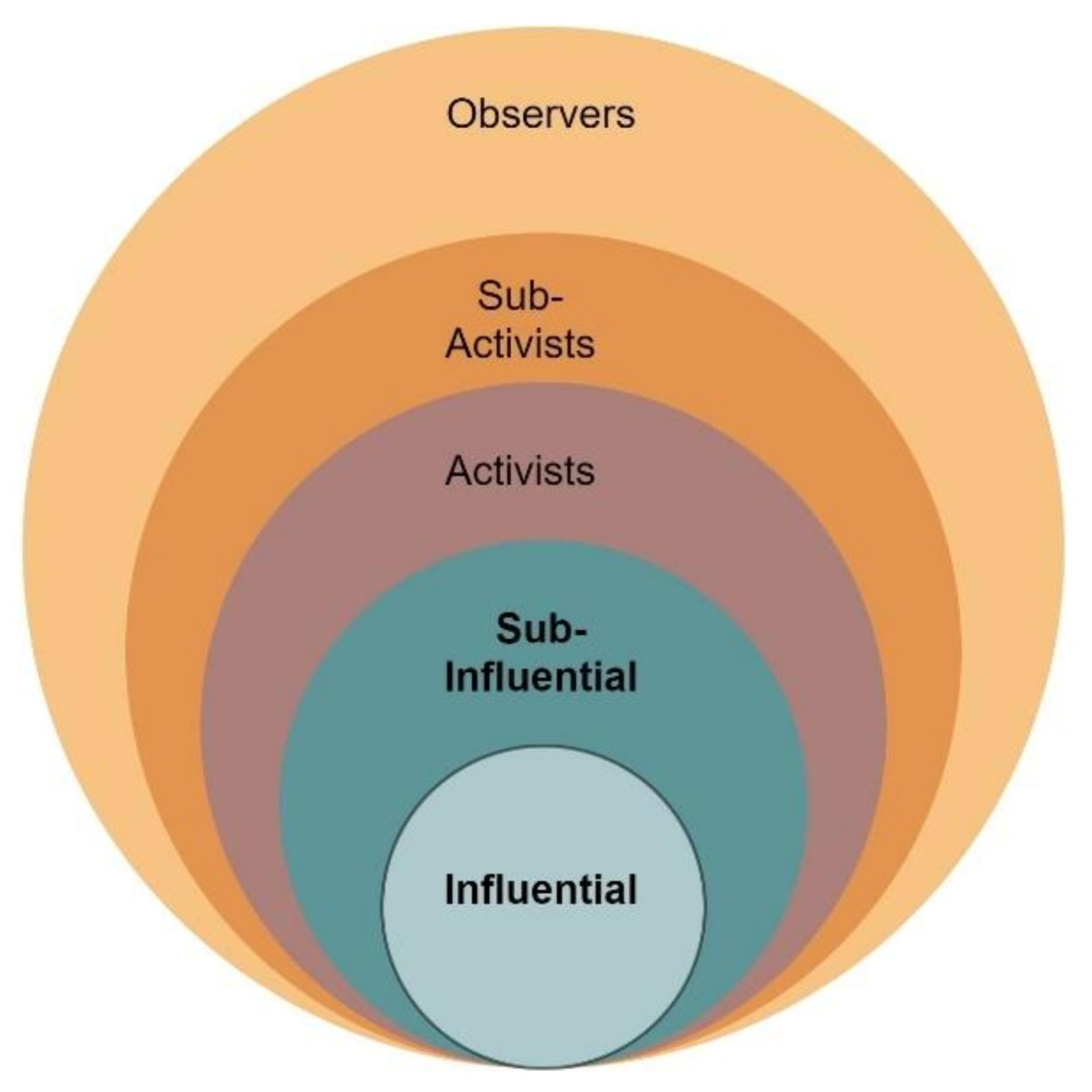

3.2. The Algorithm of Segmentation of the Audience of a Political Project

Let us consider an algorithm that assesses the audience of a political project by their activity on the social network. By activity we mean the number of comments and/or “likes” left by the social network user.

At the first step, the “Passive” segment is distinguished from the whole audience of the political project, which includes users who have viewed the posts, comments, but have never shown communicative activity. Users who are not in the “Passive” segment will be referred to as communicative core (

Figure 2).

The second step in the communication core summarizes the activity of each social network user and then calculates the average of the number of messages left and “likes”. All communicators with activity below the average are classified as “Observer”.

The third step follows the same steps as the second step for users who are not in the “Passive” and “Observer” segments. Users with below average activity are placed in the “Sub-Activist” segment.

The fourth step follows the same steps as the third step for users who are not in the “Passive”, “Observer”, and “Sub-Activist” segments. Users with below average activity will be placed in the “Activist” segment.

The fifth step proceeds in the same way as the fourth step for users who are not in the “Passive”, “Observer”, “Sub-Activist”, and “Activist” segments. Users with below average activity are placed in the “Sub-Influential” segment and above average users are placed in the “Influential” segment.

The algorithm results in six user segments. The segmentation significantly reduces the number of profiles for analysis and comparison when identifying NC. The algorithm identifies the most active group of users who form the online social capital artificially created for a political project. They create induced content traffic and thereby influence public opinion.

The proposed algorithm is an elaboration of the authors’ previous studies, which presented the results of an analysis of information dissemination sources in social networks [

41,

42].

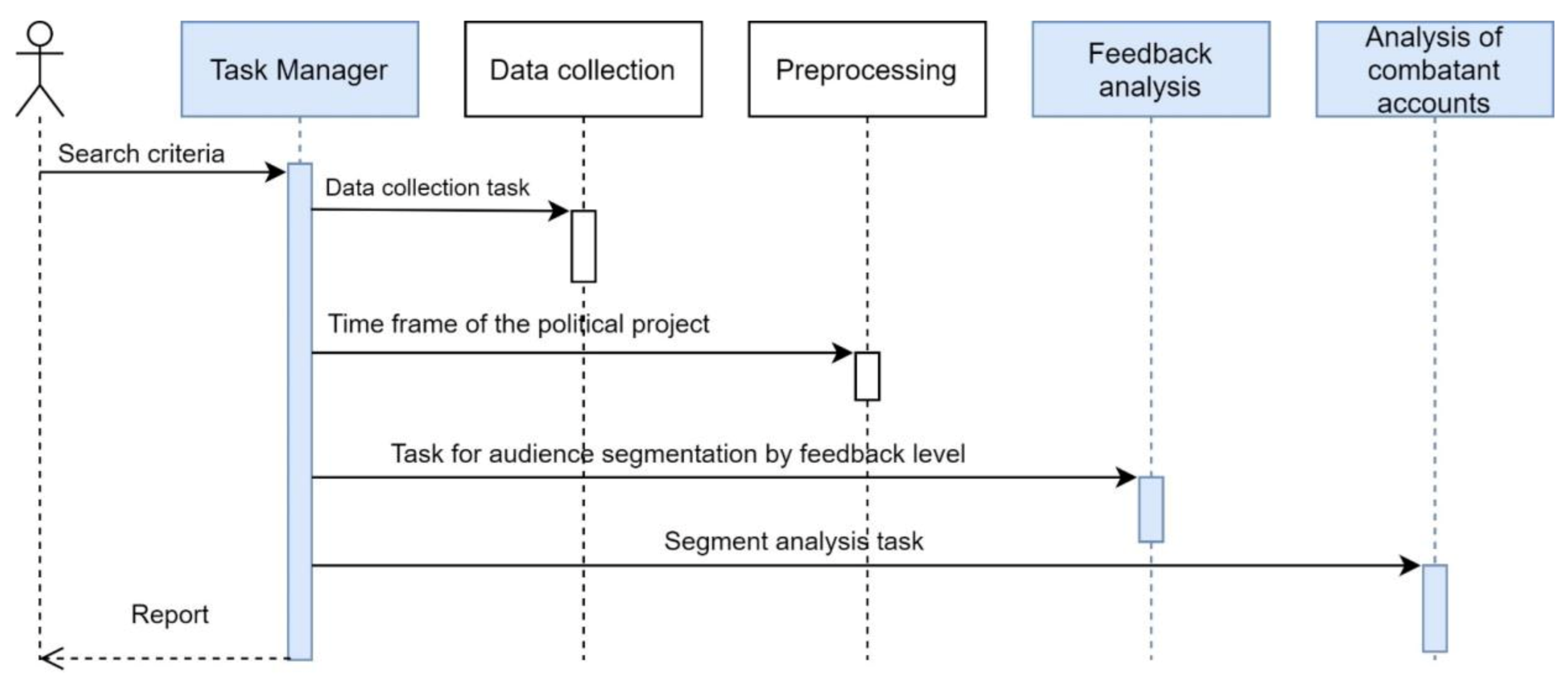

3.3. Technique for Identifying and Diagnosing Induced Support

According to the induced activity model and algorithm of segmentation, the technique for identifying and diagnosing induced support for a political project can be presented as follows (

Figure 3).

According to the proposed technique, to detect induced activity in social networks, five tasks need to be carried out:

To select “Search criteria”—event, activity, topic, and keywords to be searched for on the social network;

To collect data from the social network;

To identify the time frame of the event;

To analyze the level of feedback from the audience;

To analyze of the network combatant accounts

According to the proposed technique, only step 1 is carried out manually or in automatic mode. The remaining four steps can be carried out in automatic or automated mode. The components “data collection” and “data pre-processing” are not special. It is assumed that any available tools, algorithms, commercial designs, etc., can be used for data collection and preprocessing. The proposed technique supports the process of induced activity detection.

4. Experiment

To conduct the experiment, the study received confirmation from experts about the presence of artificial induced activity on the page of the candidate for the position of the head of the city administration (hereinafter referred to as the political project). The task was to detect the actors of induced activity, including combatants, to pass the list to the experts and to obtain confirmation or denial for each actor detected.

4.1. Description of the Stand and the Original Data

A stand with the following characteristics was prepared for the experimental evaluation:

Server supermicro: Intel Xeon CPU E5-2630/6 cores per processor, 12 virtual threads per processor (2 virtual threads per core), 1200–3100 MHz CPU frequency/128GB/HDD4+1Tb. Operating system: Debian Jessie 8.11;

A framework for collecting data from the VKontakte social network, which was developed as part of the grant of RSF #18-71-10094-P in SPC RAS;

Desktop computer: Intel (R) Core (TM) i5-9600CPU 3.10 GHz / DDR16.00G / 128SSD/ 1000HDD; Operating system: “Windows 10 Education Version 20H2”. Microsoft Office Standard 2019.

The data sets contain the following fields:

Project (social network page of a political leader);

Author (identifier of the author of the message);

Name_author (name of the author of the message);

Date (date of message);

Text (message);

Like (the number of “likes” to the message);

Comm (the number of comments on the message);

Repost (the number of “repost” messages);

View (the number of views of the message)

Type (message type: post on page, comment).

All the fields in the dataset are connected to each other, e.g., the “like” field contains information about the author who left it on the page of the political project. It should be noted that all author IDs and names were anonymized.

The data collection was conducted in three iterations, allowing us to examine updates on the political leader’s page, to see traces of moderation (deleted posts) and to further compare the ambivalent history of active participants during and after the election campaign. The data collection period was from 1 March 2019 to 31 December 2019. A total of 222 posts were published on the political leader’s page. More than 130,000 comments and more than 300,000 likes to the posts and comments on the political project page were collected in the study.

4.2. Analysis of Induced Support for a Political Project

The experiment used the algorithm of segmentation of the audience of a political project according to their level of feedback. According to the algorithm, of all the many feedback signs, only comments were taken into account (see

Table 1) [

43].

In total, 21,983 participants showed some activity on the page of the political project. According to the algorithm of segmentation, the following segments and characteristics of the communicative core of a political project were obtained using the comments analysis.

Only five users from the Influential segment are subscribed to the political project page. The numbers of comments left only by the first three Influential users are the following: 7863, 2400, and 2292. Together, 20 users of the Influential segment wrote 20,600 comments.

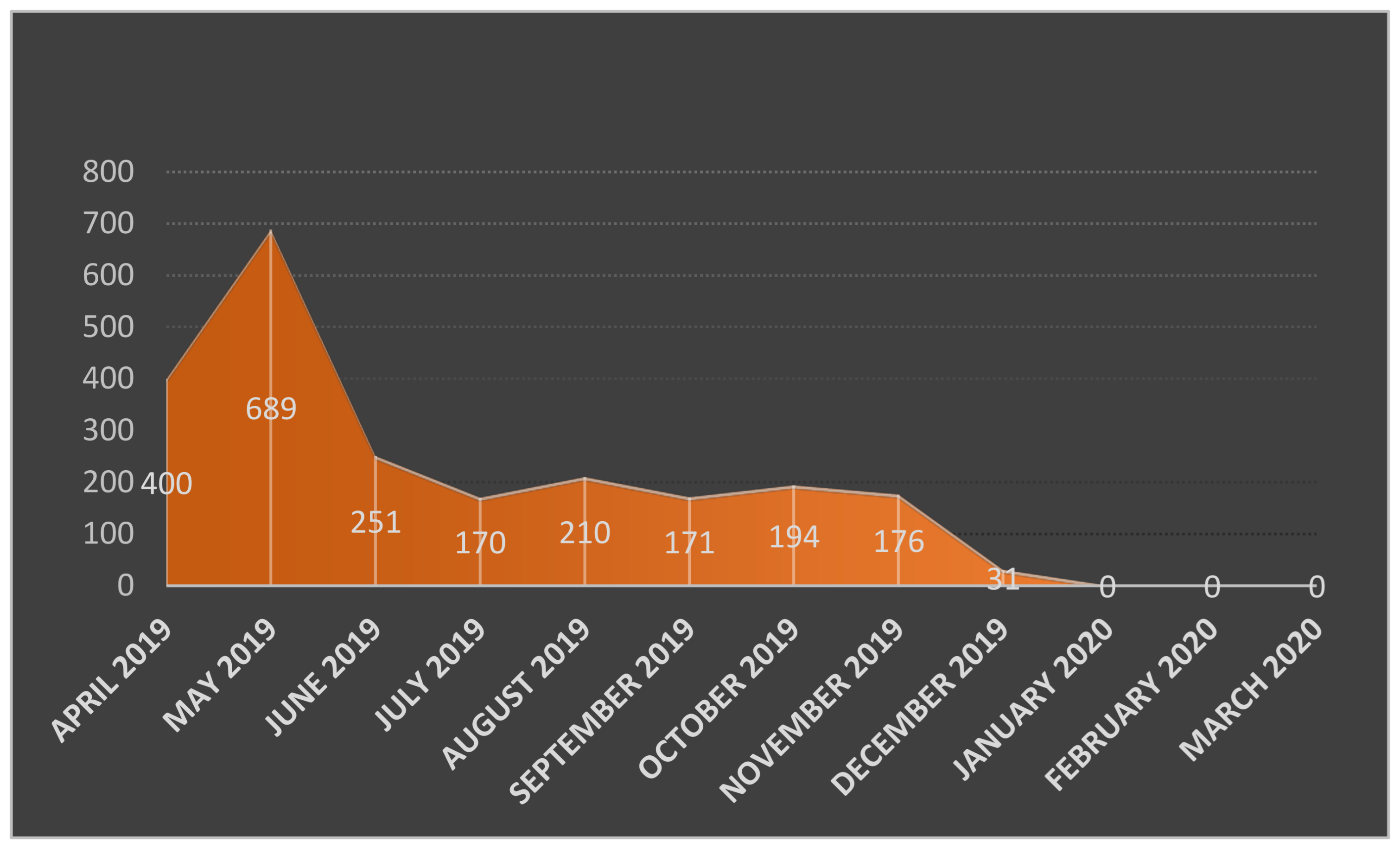

A detailed study was conducted for users who made up the Influential segment. An additional analysis of the profiles of such authors in the social network showed that at the time of their previous activity in the social network, they had other nicknames (for example, Ivan Ivanov was previously called “the Government”). Or they had other avatars or other posts on the wall. After the end of the election campaign, all information from the pages was deleted. Separately, the dynamics of network combatant activity was analyzed by the number of comments. An example of the results of such an analysis on the example of the author “Ivan Ivanov” (

Figure 4).

It is important to note that all 20 Influential essentially acted as Network Combatants (agents of induced activism). They wrote messages only in support of the candidate, and acted either as official representatives of regional government agencies or as extremely active supporters of the candidate. However, it should be stressed that the candidate served as the head of the regional government during the election period.

Content analysis of comments from NC (Influential) revealed that the user “Prist Sergeev” (old username [id58…|Ivan Sor…mov]) wrote messages agitating in support of the subject of the information operation. In addition, co-messaging in support of the candidate during the campaign were actors with the nicknames “Ekaterina G…ova”, “Nina Ko….va”, and “Vyacheslav La…ov”. A retrospective analysis revealed that these users had worked directly in support of the gubernatorial candidate as part of their previous activities on the social network. Thus, all of them were found to have previously participated in processing negative information on the candidate’s page. All of these users wrote their last comments in September 2019, 2–3 days after the end of the elections.

Among the 100 Sub-Influential, 26 users participated in commenting on the candidate’s page for 10 months and ceased their activity immediately after the end of the campaign.

Comments and “like” marks on the page of a candidate on a social network were collected by connecting to the social network API in different sessions, once every two days, during the entire election campaign. Often the comments were deleted by the moderators of the page, but due to the different sessions, the comments were kept in the database for research.

Remarkably, the second and third most popular regions, as reported by social network users, were Uzbekistan and, surprising as it may seem, Barbados. Both extremely far from the region where the campaign took place.

An analysis of user registration dates revealed a sign of induced activity such as a spike in the number of users registered in 2019 in February and March. This was the period when the election campaign kicked off.

The experiment resulted in a list of alleged NC, actors of artificial induced activity on the candidate’s page. This list was presented to the experts engaged for the study, which included professional campaign managers, journalists, and political communication experts. When reviewing the material provided, all experts confirmed that the induced support agents were correctly identified. Thus, the experiment confirms the effectiveness of the methodology.

5. Discussion

In the broader context of the use of the findings, it should be noted that, to date, the electoral laws of most states do not regulate the use of social bots or, more generally, the use of ad hoc social media operations during election campaigns. Meanwhile, research, including that described in this article, shows that this tool is increasingly being used in the arsenal of applied political technology. Moreover, this tool can influence the consciousness and behavior of large groups of voters, especially those who have not yet made a definitive choice and are still in search of a candidate or party to vote for. The bandwagon effect, repeatedly described in electoral technology theory, is that undecided voters join the vote for a potential winner, in favor of whom there appears to be a so-called “climate of opinion” [

44,

45].

In our view, there are to be suggested some useful innovations in the electoral legislation of democratic states to regulate the use of those voter influencing techniques that we discussed in this article. As a first step, we would propose the introduction of mandatory marking of candidate pages with signs of induced activity. This would give voters the right to know if a particular politician or candidate’s page shows features of a specific operation, in particular the presence of bots that create additional induced activity. This gives voters the false impression that the candidate has widespread support on the social network. By doing so, they give the false impression to voters that the candidate has widespread support on social media. The informed voter is then already aware that there is a special online operation in favor of that candidate and he or she, the voter, is able to make a more informed responsible electoral decision.

In addition, candidates who use social bots in their campaigns to generate induced online support could also be encouraged to voluntarily inform voters on their social media pages.

Anyway, from our point of view, the proposed algorithmic approach and related legislative solutions will be able to ensure higher awareness of voters and protect them from the use of shady special network technologies. Thereby, this would improve the quality of electoral democracy and protect individual rights in social networks. Therefore, in the future, the study will be continued and the number of experimental tests for the methodology on other political projects will be increased. We are also going to expand the methodology to investigate special network operations in the field of business projects and in social media market.