Hot Topic Community Discovery on Cross Social Networks

Abstract

1. Introduction

- We conduct hot topic discovery on cross social networks rather than on a single social network.

- We propose a new topic model, LB-LDA, which can alleviate the sparsity of the topic distribution.

2. Related Work

2.1. Existing Research on Cross Social Networks

2.2. Existing Research on Topic Models

2.3. Existing Research on Topic Discovery

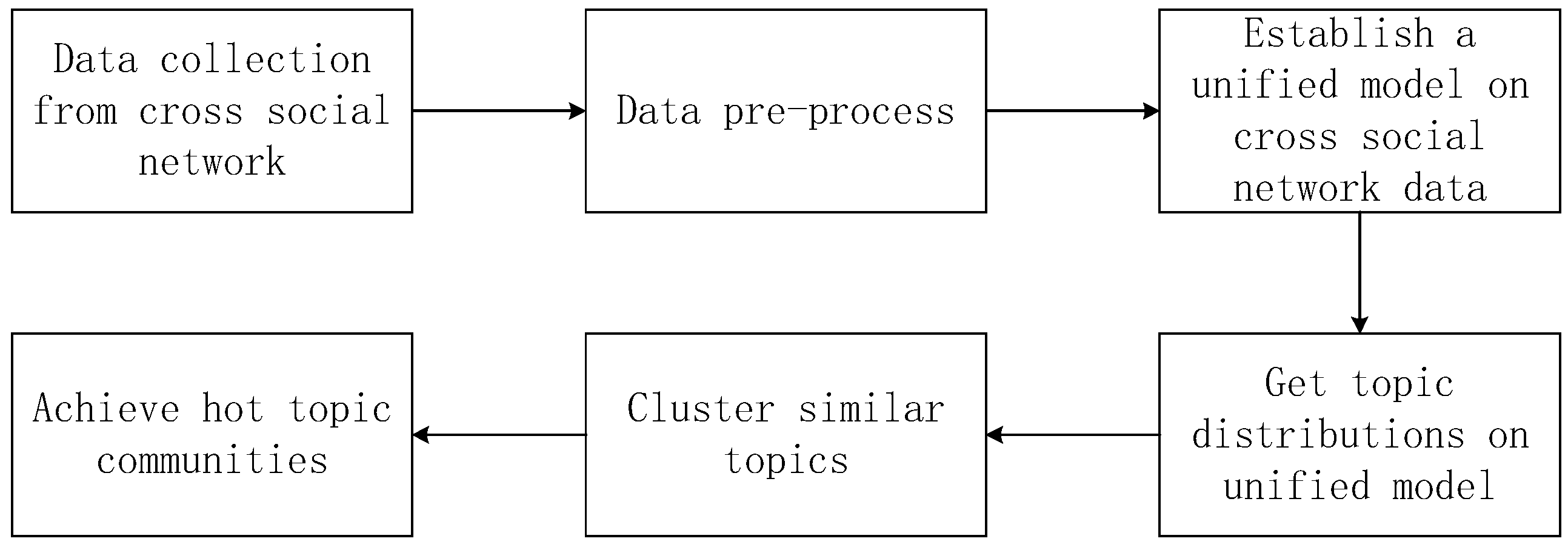

3. Hot Topic Community Discovery Model

3.1. Unified Model Establishment Method

3.1.1. Introduction to Cross Social Networks

3.1.2. Unified Model Establishment Method

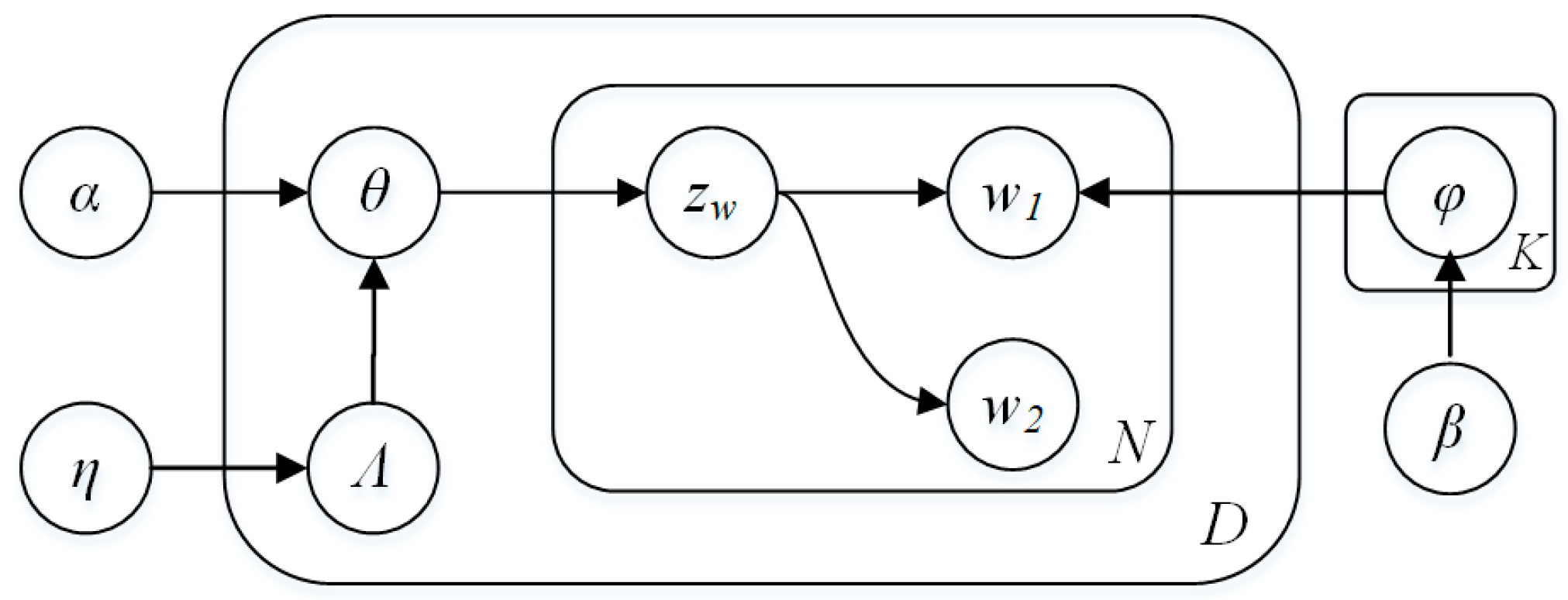

3.2. LB-LDA Model

3.2.1. Definition of Biterm
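The following is a minimal Python sketch of biterm extraction, assuming the standard biterm topic model (BTM) notion of a biterm: an unordered pair of words that co-occur in the same short text. The function name `extract_biterms` and the optional `window` parameter (which only pairs words fewer than `window` positions apart, a possible choice for longer news articles) are illustrative assumptions, not taken from the paper.

```python
from itertools import combinations
from typing import List, Optional, Tuple


def extract_biterms(tokens: List[str], window: Optional[int] = None) -> List[Tuple[str, str]]:
    """Return the biterms (unordered co-occurring word pairs) of one text.

    window: hypothetical option; when set, only words fewer than `window`
    positions apart are paired. None pairs every two words in the text.
    """
    biterms = []
    for i, j in combinations(range(len(tokens)), 2):
        if window is not None and j - i >= window:
            continue
        # Sort each pair so that (w1, w2) and (w2, w1) denote the same biterm.
        biterms.append(tuple(sorted((tokens[i], tokens[j]))))
    return biterms
```

Without a window, a text of n tokens yields n(n - 1)/2 biterms, so a three-word post such as ["earthquake", "japan", "casualties"] produces three biterms.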

3.2.2. LB-LDA Model Description

3.2.3. LB-LDA Model Inference

| Algorithm 1 | Gibbs Sampling Process |
|---|---|
| Input | Enlarged corpus C’, topic label set T, hyper-parameters α and β, number of iterations iter |
| Output | Document-topic distribution θ, topic-word distribution φ |
| 1 | Randomly initialize the topic label of each biterm |
| 2 | For iter_times = 1 to iter do |
| 3 | For each document d’ in corpus C’ do |
| 4 | For each biterm b in document d’ do |
| 5 | Calculate the probability of each topic label by Equation (1) |
| 6 | Sample the topic label of b based on the result of step 5 |
| 7 | Calculate θ and φ based on Equations (2) and (3) |
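Algorithm 1 can be sketched in Python as below. Equation (1) is not reproduced in this text, so the sampler assumes a BTM-style conditional restricted, as in Labeled LDA, to the labels attached to each document: P(z_b = k) ∝ (n_{d,k,-b} + α)(n_{k,w1,-b} + β)(n_{k,w2,-b} + β) / (n_{k,-b} + Vβ)² for each label k of the document, where V is the vocabulary size. The estimates of θ and φ likewise assume the usual smoothed-count forms for Equations (2) and (3). The function `gibbs_lb_lda`, its parameters, and the integer word-id encoding are hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

Biterm = Tuple[int, int]


def gibbs_lb_lda(
    docs: List[List[Biterm]],
    labels: List[List[int]],
    vocab_size: int,
    alpha: float = 0.1,
    beta: float = 0.01,
    iters: int = 100,
    seed: int = 0,
):
    """Hypothetical sketch of Algorithm 1 (assumed form of Equations (1)-(3)).

    docs[d]   : biterms (w1, w2) of document d', as integer word ids < vocab_size
    labels[d] : topic labels allowed for document d' (non-empty, no duplicates)
    """
    rng = random.Random(seed)
    n_dk = [defaultdict(int) for _ in docs]  # biterms in doc d with label k
    n_kw: Dict[Tuple[int, int], int] = defaultdict(int)  # occurrences of word w with label k
    n_k: Dict[int, int] = defaultdict(int)  # words in the corpus with label k

    def update(d: int, b: Biterm, k: int, delta: int) -> None:
        w1, w2 = b
        n_dk[d][k] += delta
        n_kw[k, w1] += delta
        n_kw[k, w2] += delta
        n_k[k] += 2 * delta  # a biterm contributes two words to label k

    # Step 1: randomly initialize each biterm's label from its document's labels.
    z: List[List[int]] = []
    for d, doc in enumerate(docs):
        z.append([])
        for b in doc:
            k = rng.choice(labels[d])
            update(d, b, k, +1)
            z[d].append(k)

    # Steps 2-6: resample every biterm's label, excluding the biterm's own counts.
    for _ in range(iters):
        for d, doc in enumerate(docs):
            for i, (w1, w2) in enumerate(doc):
                update(d, (w1, w2), z[d][i], -1)
                weights = [
                    (n_dk[d][k] + alpha)
                    * (n_kw[k, w1] + beta)
                    * (n_kw[k, w2] + beta)
                    / (n_k[k] + vocab_size * beta) ** 2
                    for k in labels[d]
                ]
                z[d][i] = rng.choices(labels[d], weights=weights)[0]
                update(d, (w1, w2), z[d][i], +1)

    # Step 7: smoothed estimates of theta (document-topic) and phi (topic-word).
    theta = [
        {k: (n_dk[d][k] + alpha) / (len(doc) + len(labels[d]) * alpha) for k in labels[d]}
        for d, doc in enumerate(docs)
    ]
    topics = sorted({k for ls in labels for k in ls})
    phi = {
        k: [(n_kw[k, w] + beta) / (n_k[k] + vocab_size * beta) for w in range(vocab_size)]
        for k in topics
    }
    return theta, phi, z
```

Because each document samples only from its own labels, a document with a single label keeps all its biterms on that label. The document-length denominator of the first factor is omitted during sampling because it does not depend on k.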

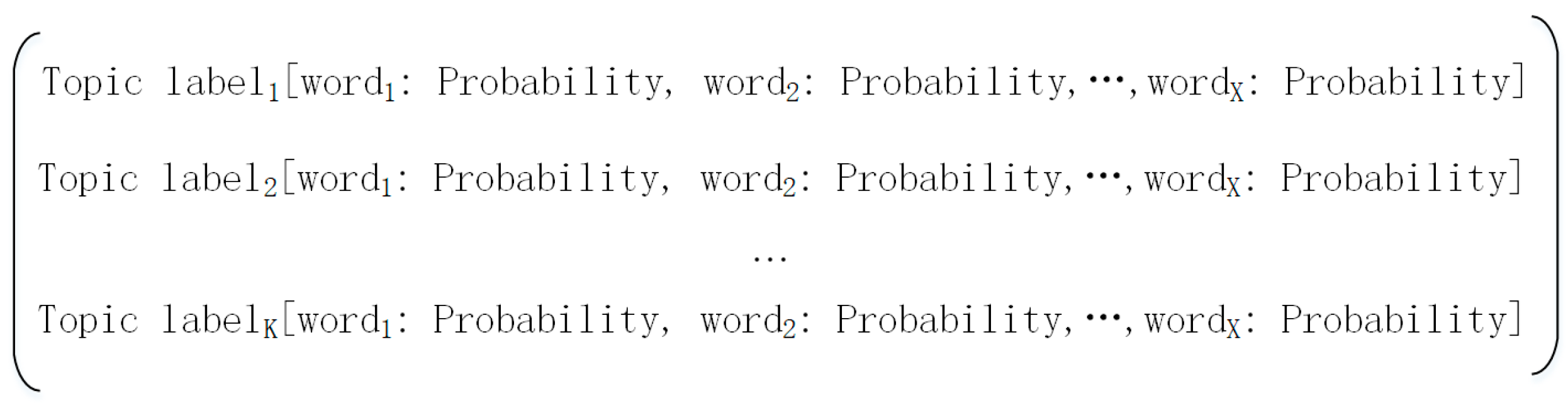

3.3. Topic Similarity Calculation Method on Cross Social Networks

3.3.1. Topic-Word Distribution Dimension Reduction Strategy

3.3.2. Topic Similarity Calculation Method
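As one possible way to compare topics discovered on different social networks, the sketch below scores two topic-word distributions with the Jensen-Shannon divergence after aligning them on the union of their vocabularies. The choice of Jensen-Shannon divergence, the base-2 logarithm that bounds it in [0, 1], the similarity definition 1 - JS, and the name `js_similarity` are assumptions for illustration; this text does not state which measure is used to compare LB-LDA topics.

```python
import math
from typing import Dict


def js_similarity(p: Dict[str, float], q: Dict[str, float]) -> float:
    """Similarity 1 - JS(p || q) of two topic-word distributions (assumed measure).

    Both inputs map words to probabilities and should each sum to 1. Words
    missing from one topic are treated as having probability 0 there.
    """
    vocab = set(p) | set(q)  # align both topics on a shared vocabulary
    pa = {w: p.get(w, 0.0) for w in vocab}
    qa = {w: q.get(w, 0.0) for w in vocab}
    m = {w: 0.5 * (pa[w] + qa[w]) for w in vocab}  # mixture distribution

    def kl(a: Dict[str, float]) -> float:
        # KL(a || m) in bits; words with a[w] == 0 contribute nothing.
        return sum(a[w] * math.log2(a[w] / m[w]) for w in vocab if a[w] > 0)

    js = 0.5 * kl(pa) + 0.5 * kl(qa)  # bounded in [0, 1] with log base 2
    return 1.0 - js
```

Identical topics score 1, topics with disjoint vocabularies score 0, and two topics that share one of two equally weighted words (for example "earthquake") score 0.5.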

3.4. Hot Topic Community Discovery Method

3.4.1. Topic Clustering Method

3.4.2. Hot Topic Community Calculation Method

4. Experiment and Results

4.1. Data Collection

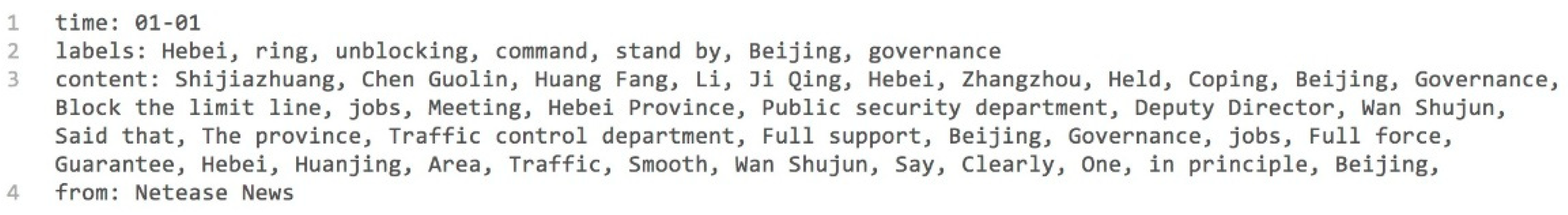

4.1.1. Cross Social Network Dataset

4.1.2. Unified Model Building

4.2. Topic Discovery Experiment Method

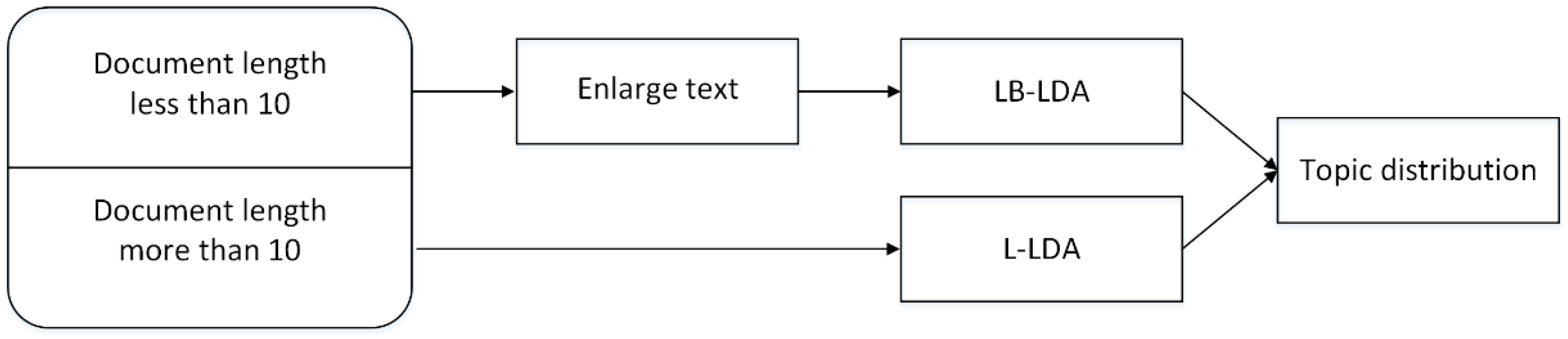

4.2.1. Text Expansion Method

4.2.2. Topic Distribution Calculation Method

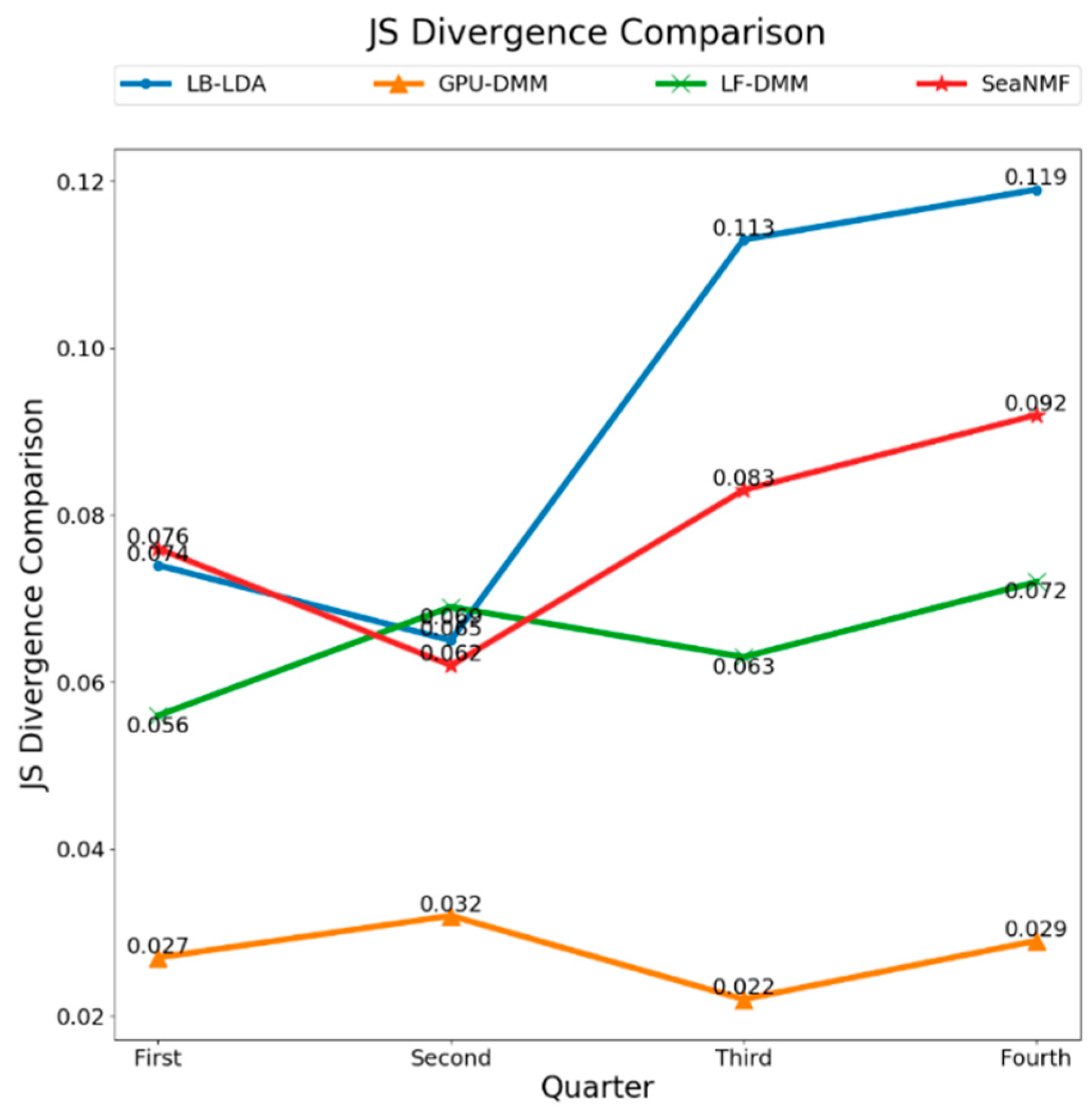

4.2.3. Comparisons with Other Topic Models

4.2.4. Topic Distance Calculation Method

4.3. Hot Topic Community Discovery

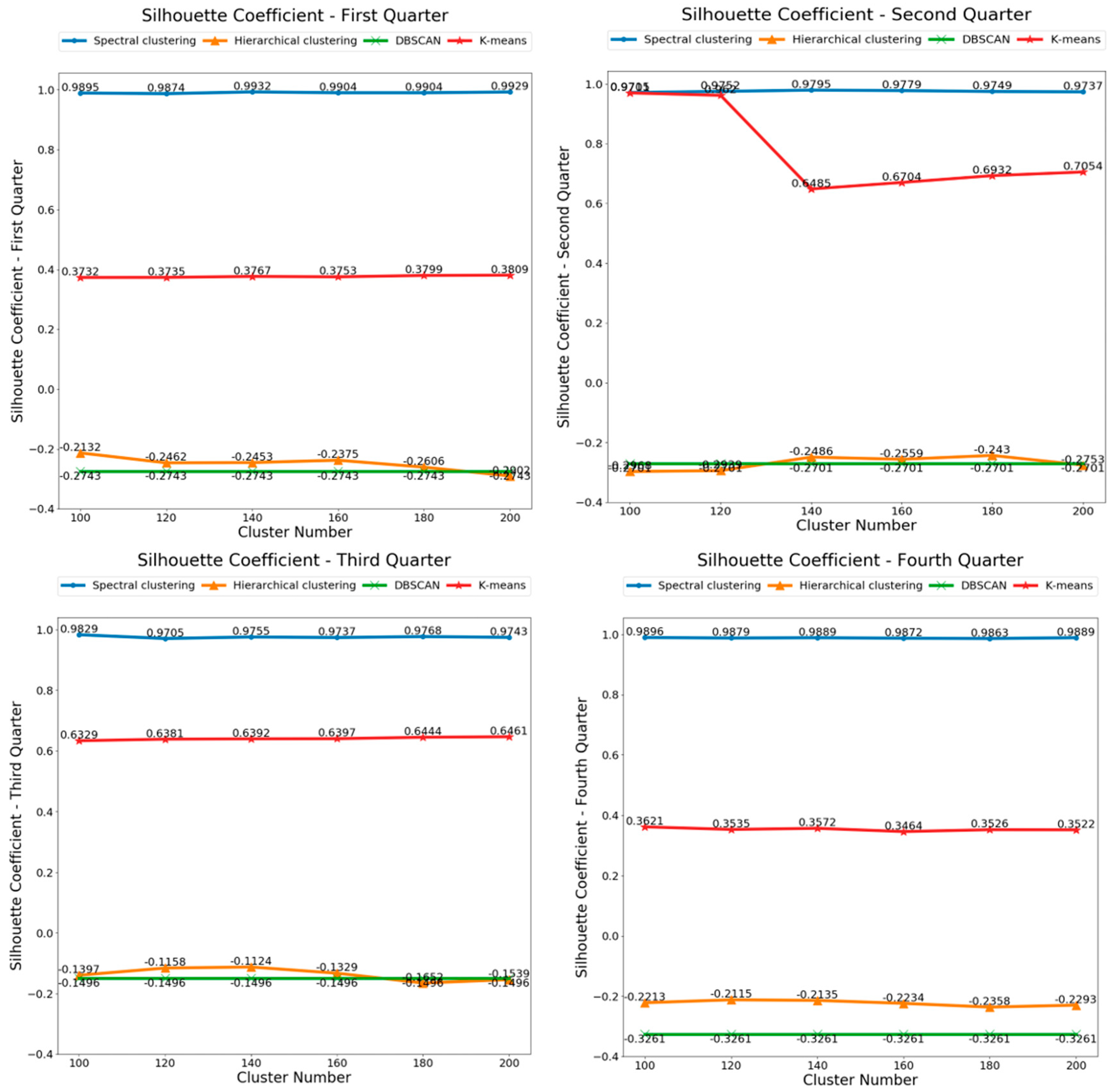

4.3.1. Clustering Method

4.3.2. Evaluation Standard

4.3.3. Comparison of Different Clustering Methods

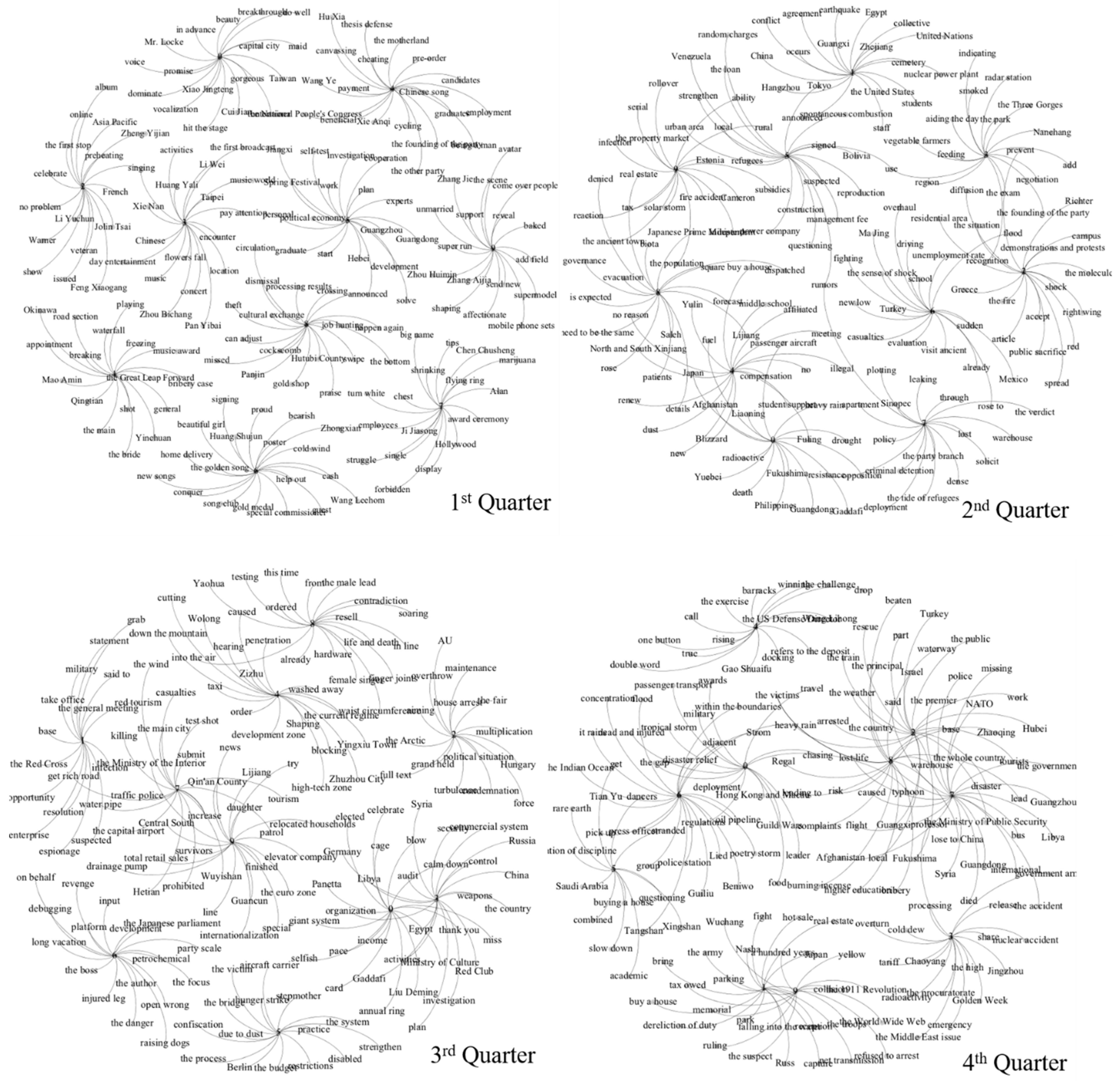

4.3.4. Hot Topic Community Results and Analysis

5. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest


| Element | Meaning |
|---|---|
| | The number of biterms in document d, excluding biterm i |
| | The number of biterms in document d, for which topic label is k, excluding biterm i |
| | The number of words in the corpus for which topic label is k, excluding this word |
| | The number of word in the corpus for which topic label is k, excluding this word |
| | The number of word in the corpus for which topic label is k, excluding this word |

| Time | Documents | Labels | Min Length | Max Length | Average Length | QQ Zone Documents | Sina Weibo Documents | Netease News Documents |
|---|---|---|---|---|---|---|---|---|
| 1st Quarter | 3708 | 7676 | 5 | 1162 | 40.22 | 1105 | 1271 | 1332 |
| 2nd Quarter | 3338 | 9397 | 3 | 1486 | 34.13 | 1206 | 1057 | 1075 |
| 3rd Quarter | 4057 | 9348 | 6 | 2360 | 48.47 | 1368 | 1197 | 1492 |
| 4th Quarter | 3590 | 7648 | 5 | 1711 | 49.69 | 1127 | 1075 | 1388 |

| Time | Frequently Occurring Topic Labels (Excerpt) |
|---|---|
| 1st Quarter | singing party, new song, Chinese pop, Hollywood, music scene, ceremony, cold wind, poster, earn money, cooperation, cultural exchange, spring festival, commerce |
| 2nd Quarter | Japan, radioactivity, casualties, earthquake, refugee, foreboding, resignation, opposition, snow, demonstration, biota, fuel, fire accident, indiscriminate charging |
| 3rd Quarter | Libyan, weapon, Gaddafi, kill, Red Cross, director, military, base interest, country, Ministry of Culture, condemn, money, celebrate, central weather station |
| 4th Quarter | 1911 Revolution, 100 years, Wuchang, army, challenge, commemorate, government, Prime Minister, rain, Guangdong, tropical storm, risk, disaster relief |

| National News | International News |
|---|---|
| Drunk driving is punishable | Earthquake happens in Japan |
| The Ningbo-Wenzhou railway traffic accident | Gaddafi captured and killed |
| The celebration of 1911 Revolution | NATO bombs Libya |
| The most restrictive property market in history | The death of Steve Jobs |
| Tiangong-1 successfully launched | US Army kills Bin Laden |

| Social Network | Hot Topics |
|---|---|
| QQ Zone | song, music, food, QQ farm, QQ ranch, study, Shanghai, Alipay, Friday, children, graduation, school, go to work, help, student, overtime, shopping, classmates, earthquake, job, books, money |
| Sina Weibo | Test, holiday, sunny, Japan, earthquake, snow, share, People’s Daily, music, game, Test, government, panda, rain, Red Cross, military, weapon, army, 1911 Revolution, nuclear power plant |
| Netease News | News, economic, China, South, fire, Hollywood, casualties, Japan, earthquake, Red Cross, Syria, 1911 Revolution, railway accident |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, X.; Zhang, B.; Chang, F. Hot Topic Community Discovery on Cross Social Networks. Future Internet 2019, 11, 60. https://doi.org/10.3390/fi11030060

Wang X, Zhang B, Chang F. Hot Topic Community Discovery on Cross Social Networks. Future Internet. 2019; 11(3):60. https://doi.org/10.3390/fi11030060

Chicago/Turabian Style: Wang, Xuan, Bofeng Zhang, and Furong Chang. 2019. "Hot Topic Community Discovery on Cross Social Networks" Future Internet 11, no. 3: 60. https://doi.org/10.3390/fi11030060

APA Style: Wang, X., Zhang, B., & Chang, F. (2019). Hot Topic Community Discovery on Cross Social Networks. Future Internet, 11(3), 60. https://doi.org/10.3390/fi11030060