High-Level Smart Decision Making of a Robot Based on Ontology in a Search and Rescue Scenario

Abstract

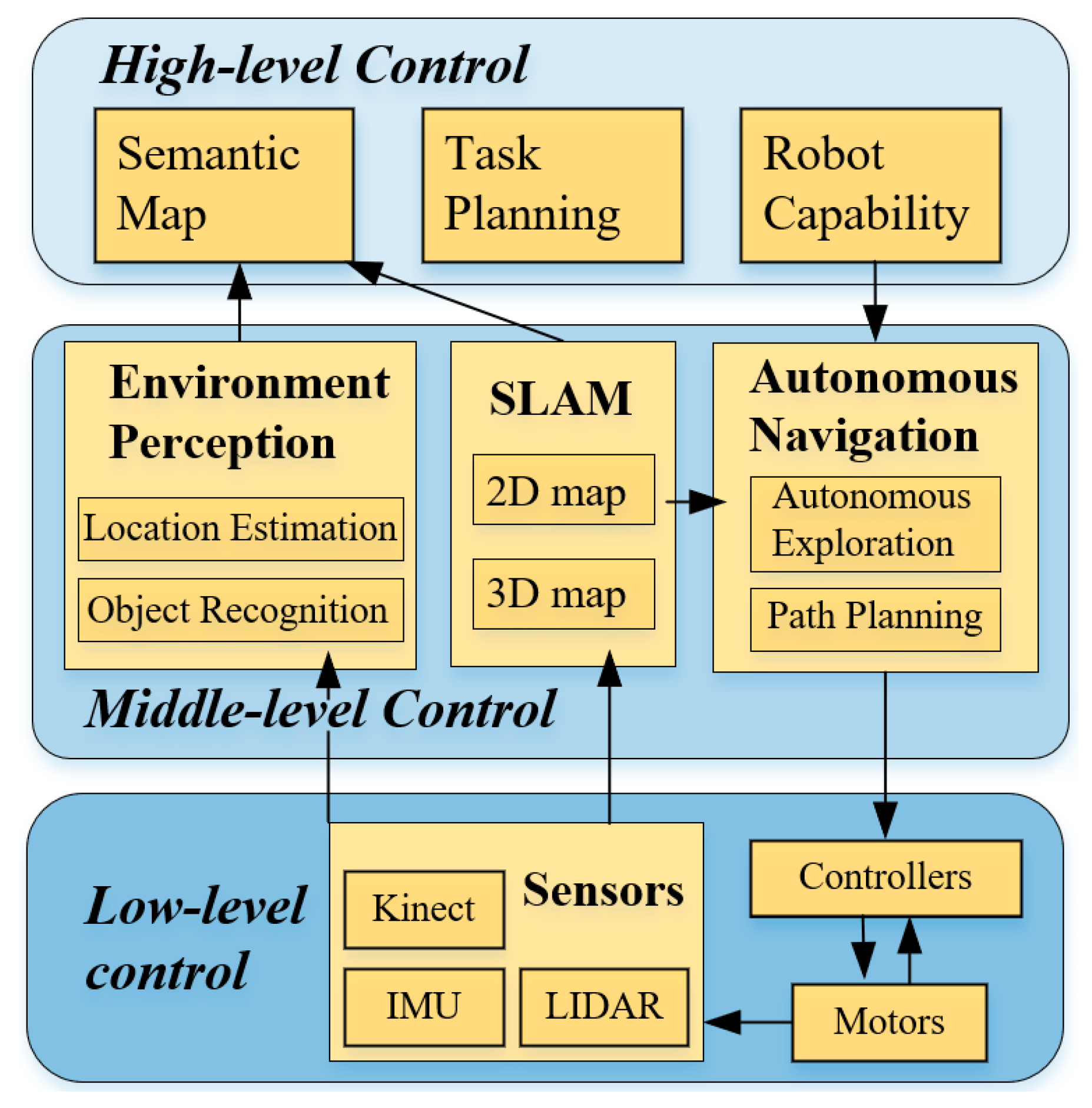

1. Introduction

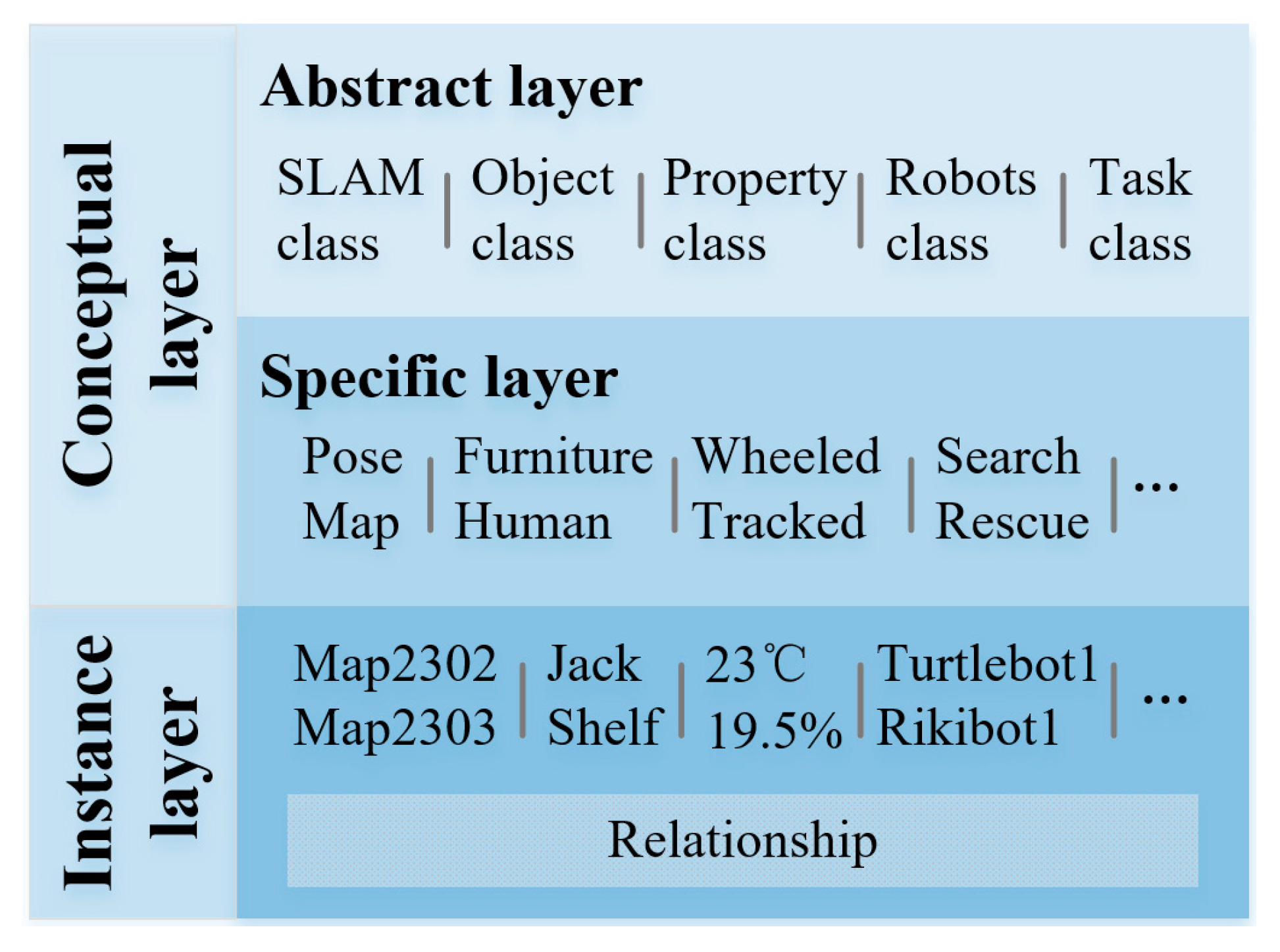

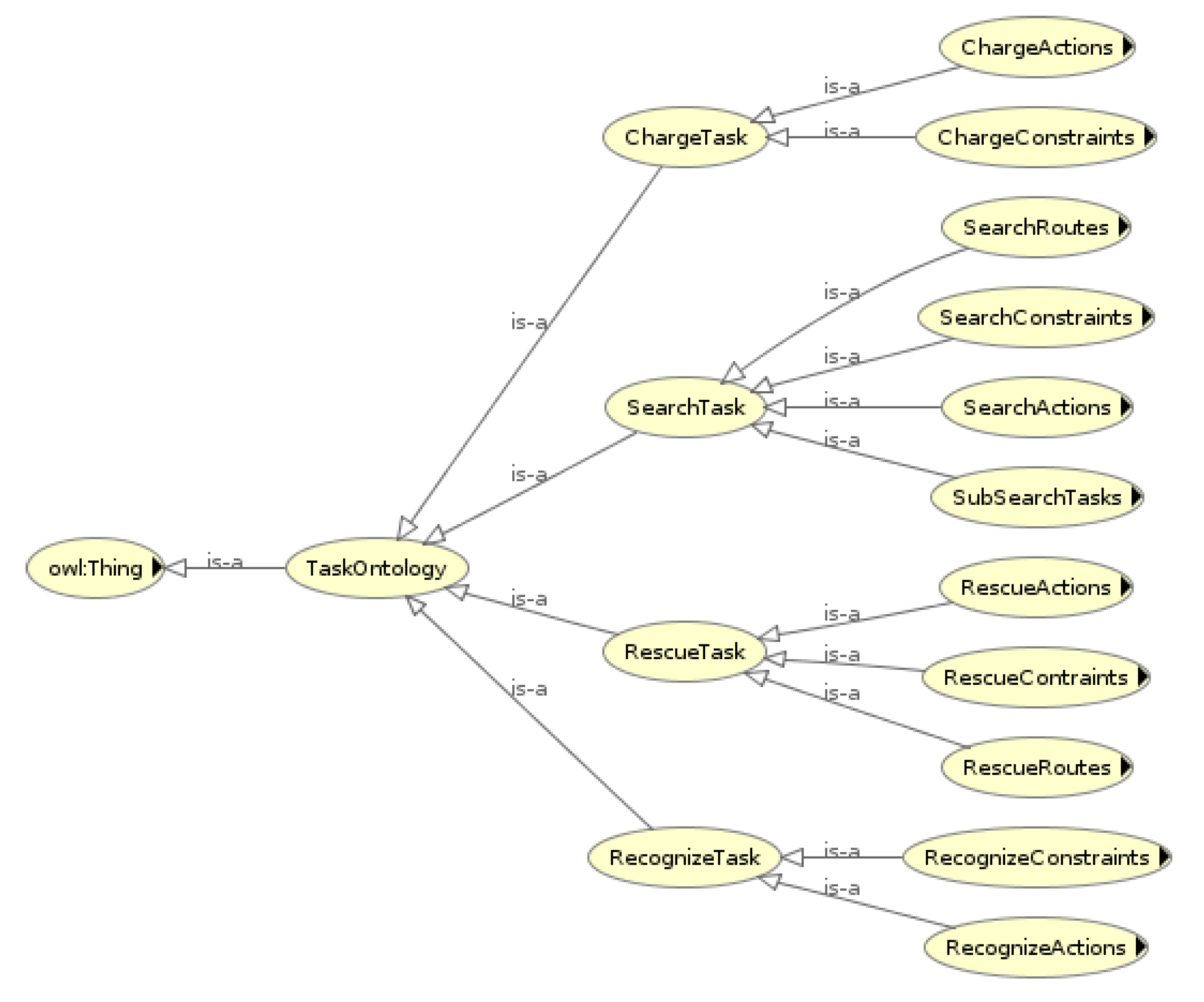

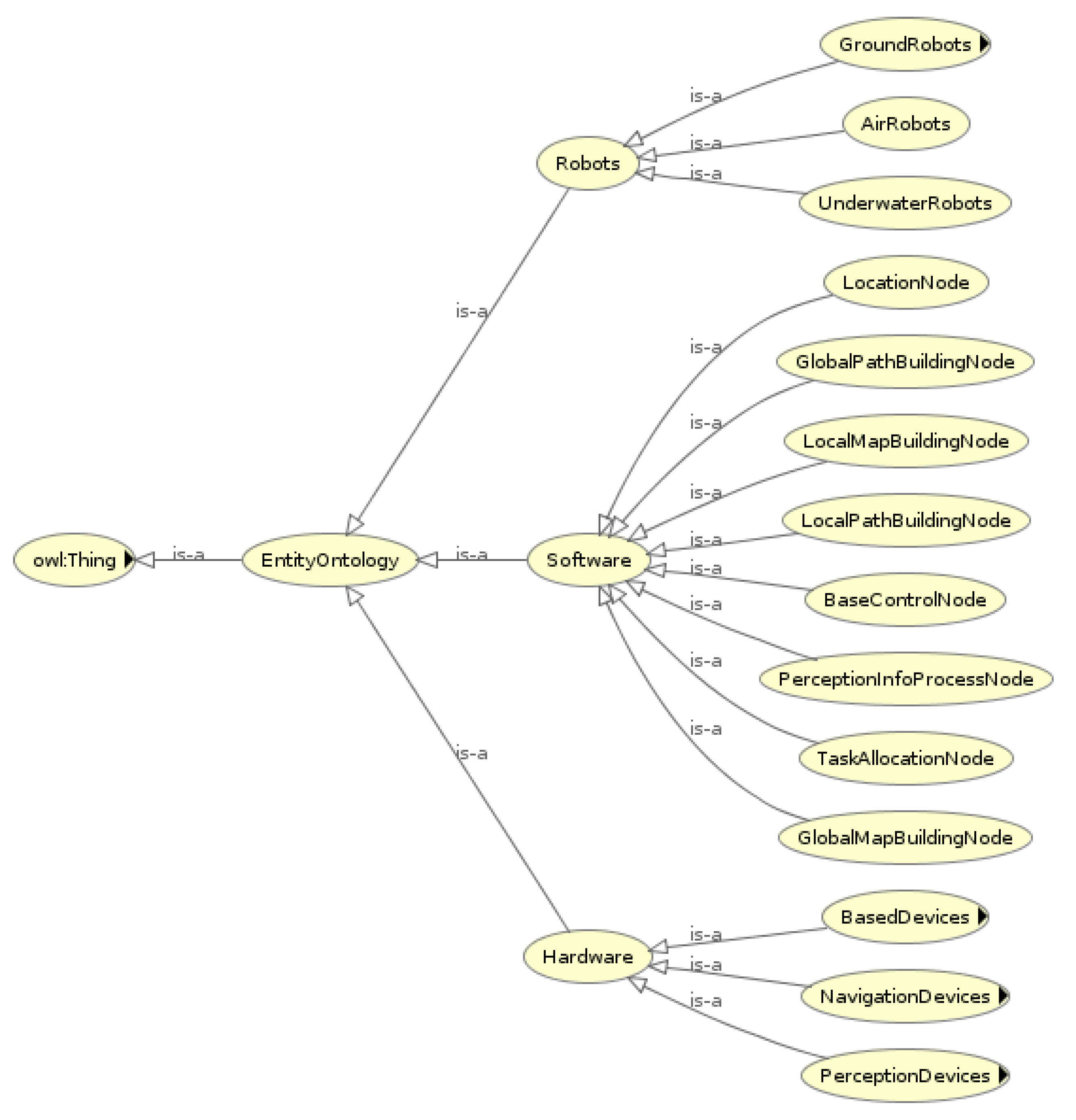

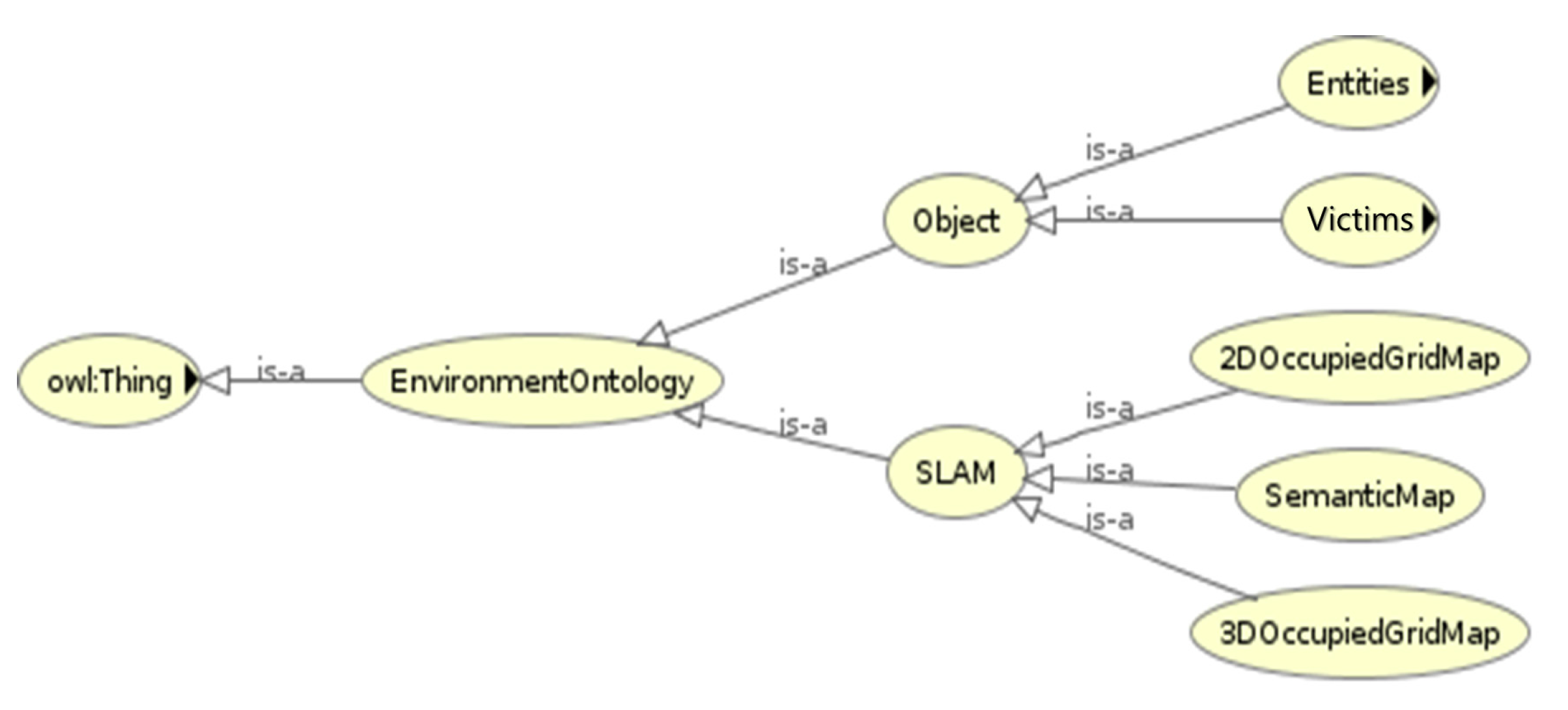

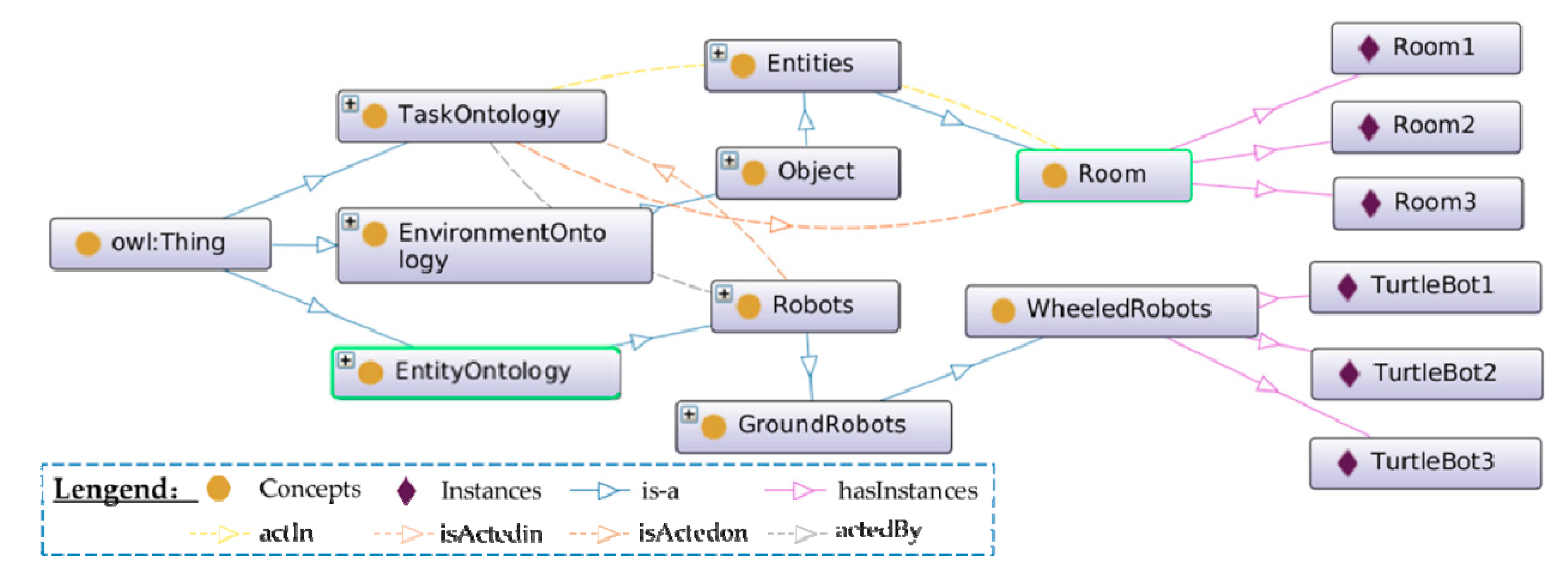

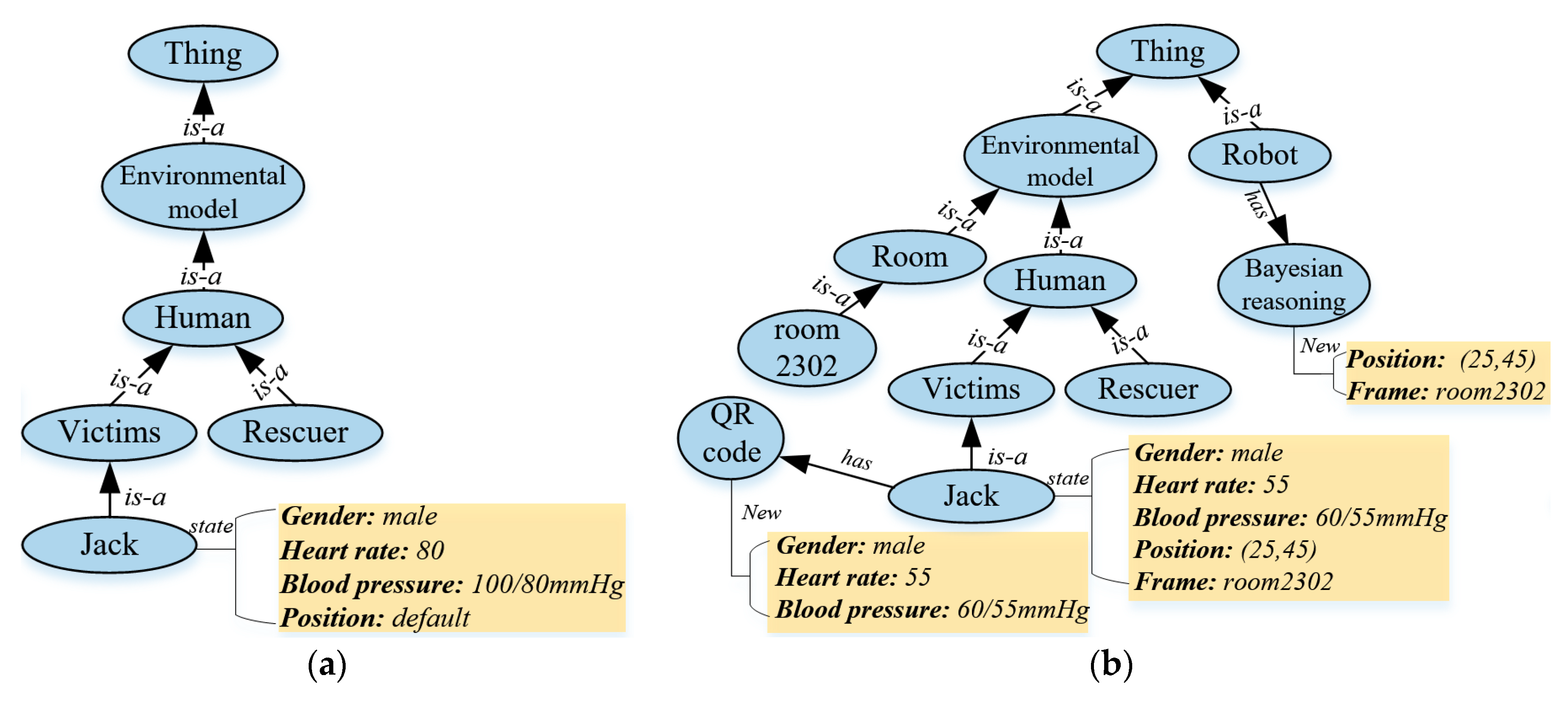

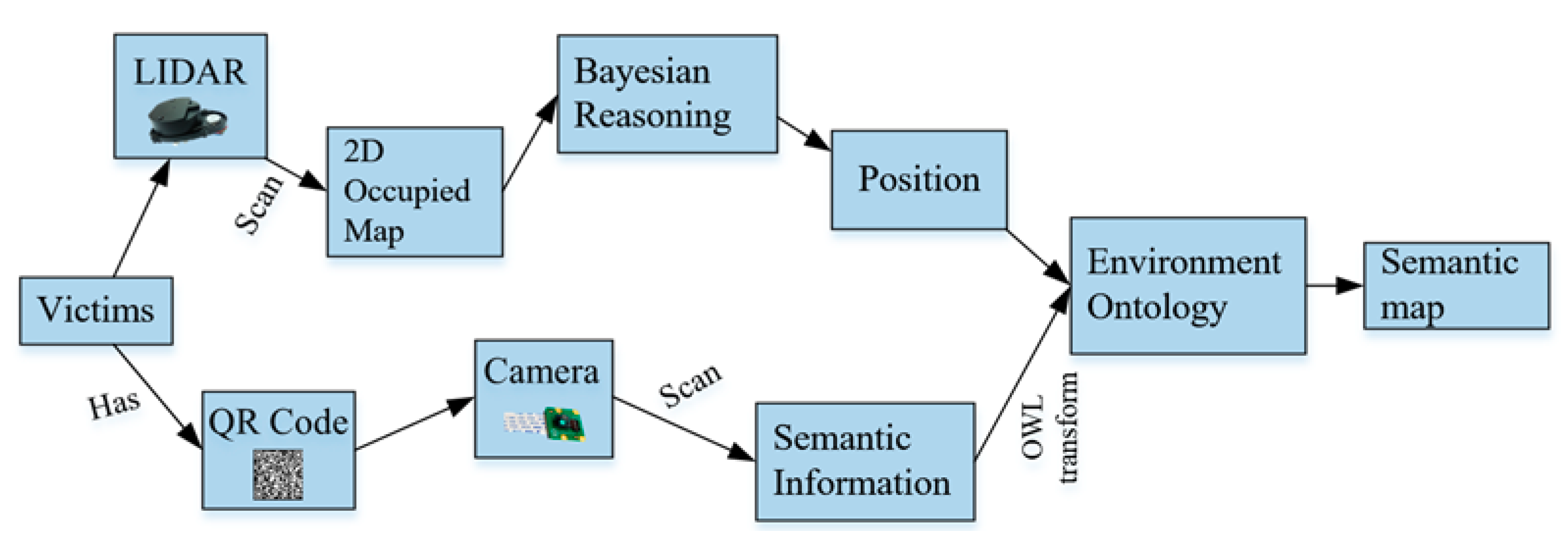

2. Ontology in SAR Scenario

2.1. The Definition

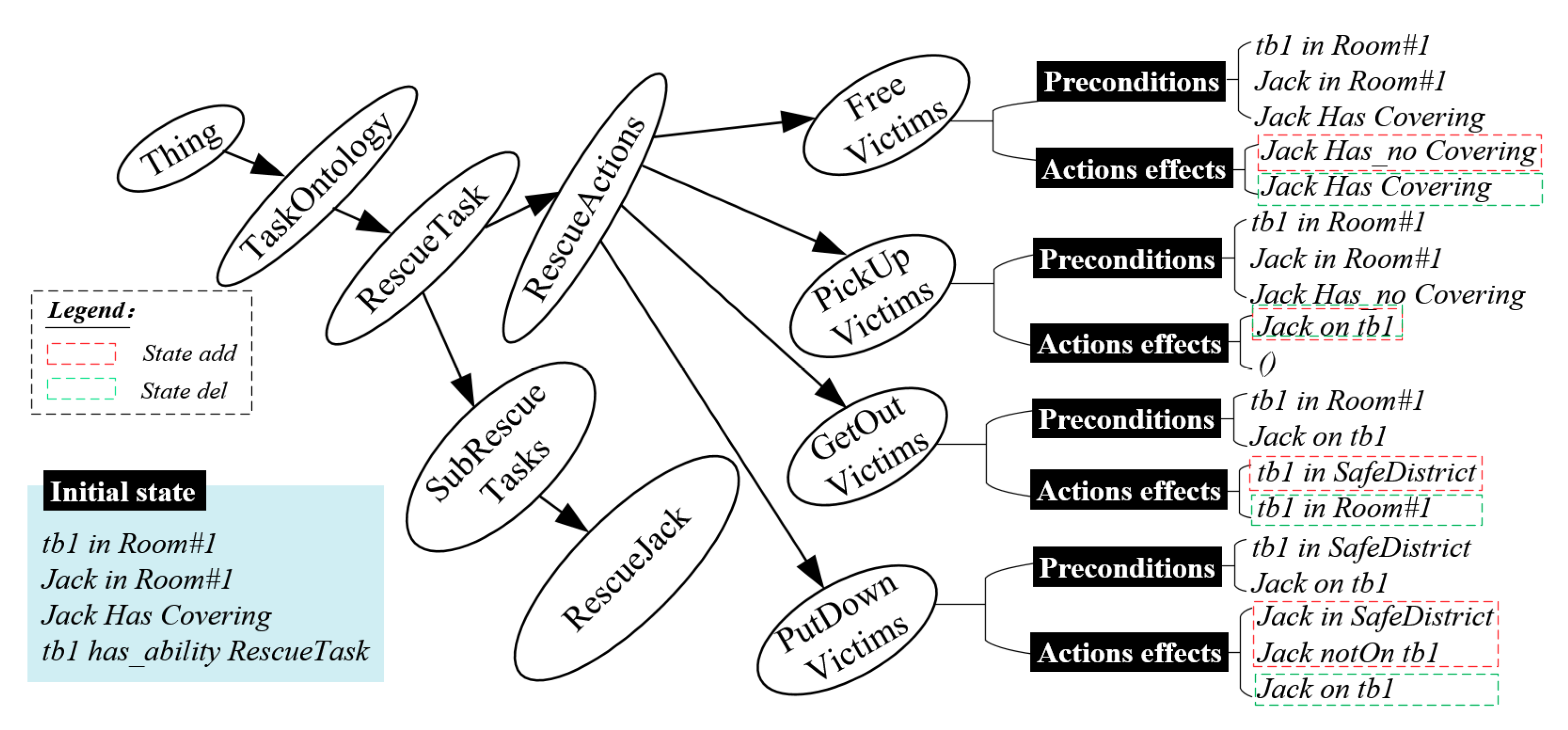

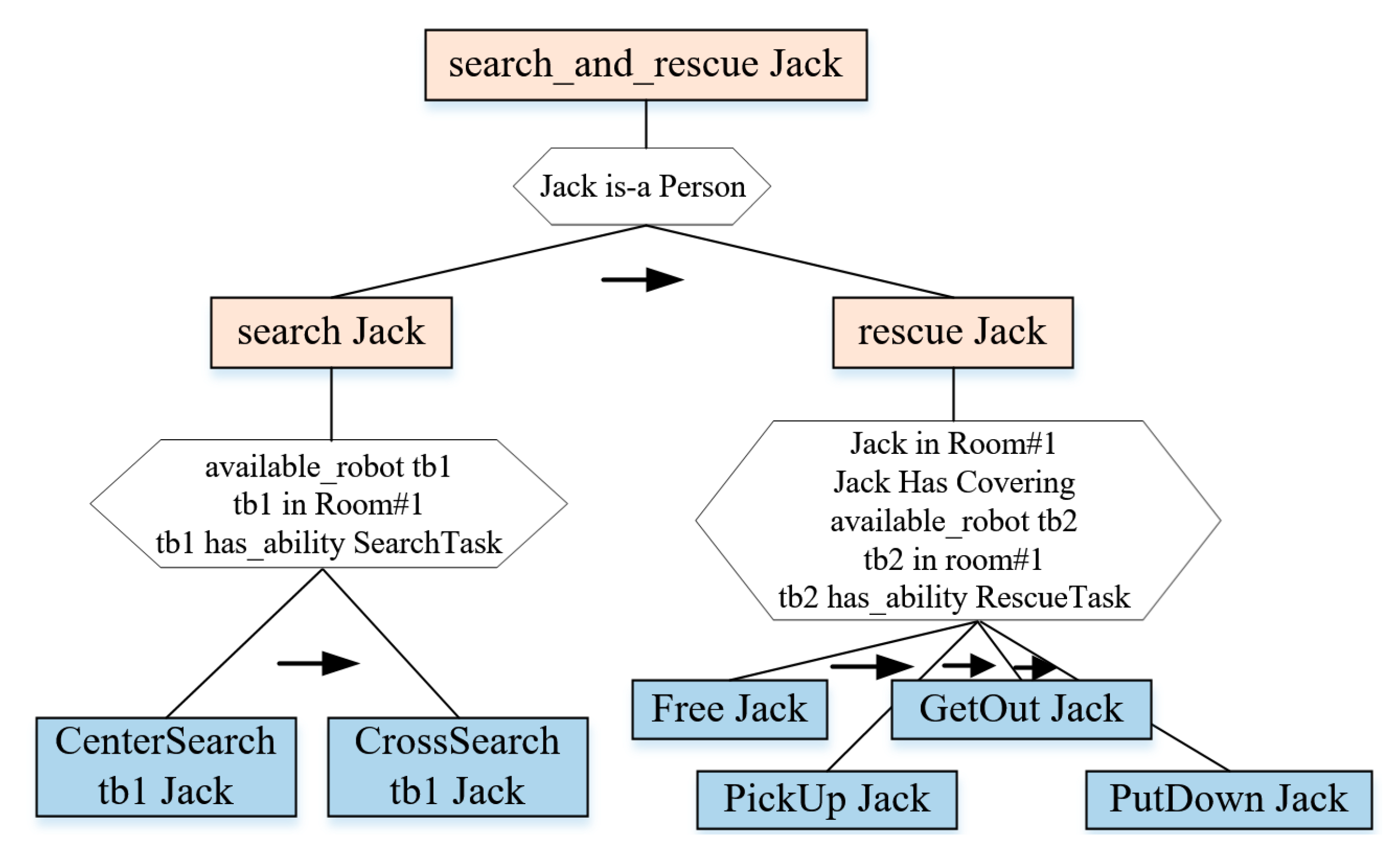

2.2. The Ontology in SAR

- Class: RescueJack
  - SubClassOf:
    - SubRescueTasks
    - (subAction some FreeVictim)
    - and (subAction some PickUpVictim)
    - and (subAction some GetOutVictim)
    - and (subAction some PutDownVictim)

- Class: RescueJack
  - SubClassOf:
    - SubRescueTasks
    - (subAction some FreeVictim)
    - and (subAction some PickUpVictim)
    - and (subAction some GetOutVictim)
    - and (subAction some PutDownVictim)
    - and (orderingConstraints value RescueActions12)
    - and (orderingConstraints value RescueActions13)
    - and (orderingConstraints value RescueActions14)
    - and (orderingConstraints value RescueActions23)
    - and (orderingConstraints value RescueActions24)
    - and (orderingConstraints value RescueActions34)

- Individuals: RescueActions12
  - Types:
    - PartialOrdering-Strict
  - Annotations:
    - occursAfterInOrdering PickUpVictim
    - occursBeforeInOrdering FreeVictim
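The way such pairwise ordering constraints determine an action sequence can be sketched with a topological sort. This is a minimal illustration in plain Python, not the authors' implementation. Only `RescueActions12` is spelled out in the listing above; the other five pairs are an assumption based on the naming pattern (`RescueActionsIJ` is read as "sub-action I occurs before sub-action J", with sub-actions numbered in the order they are listed). The function name `order_sub_actions` is hypothetical.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Sub-actions of RescueJack, in the order listed in the class definition.
SUB_ACTIONS = ["FreeVictim", "PickUpVictim", "GetOutVictim", "PutDownVictim"]

# (occursBeforeInOrdering, occursAfterInOrdering) pairs.
# Only RescueActions12 appears in the text; the rest are ASSUMED from the
# IJ naming convention.
ORDERING_CONSTRAINTS = {
    "RescueActions12": ("FreeVictim", "PickUpVictim"),
    "RescueActions13": ("FreeVictim", "GetOutVictim"),
    "RescueActions14": ("FreeVictim", "PutDownVictim"),
    "RescueActions23": ("PickUpVictim", "GetOutVictim"),
    "RescueActions24": ("PickUpVictim", "PutDownVictim"),
    "RescueActions34": ("GetOutVictim", "PutDownVictim"),
}


def order_sub_actions(actions, constraints):
    """Return the actions in an order that satisfies every constraint.

    Raises graphlib.CycleError if the constraints contradict each other.
    """
    sorter = TopologicalSorter()
    for action in actions:
        sorter.add(action)  # register every action, even unconstrained ones
    for before, after in constraints.values():
        sorter.add(after, before)  # 'before' must precede 'after'
    return list(sorter.static_order())


sequence = order_sub_actions(SUB_ACTIONS, ORDERING_CONSTRAINTS)
print(sequence)
```

Because the six pairs relate every two sub-actions, they fix a single total order; with fewer constraints, several valid orders could exist and the sort would return one of them.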

3. Methods

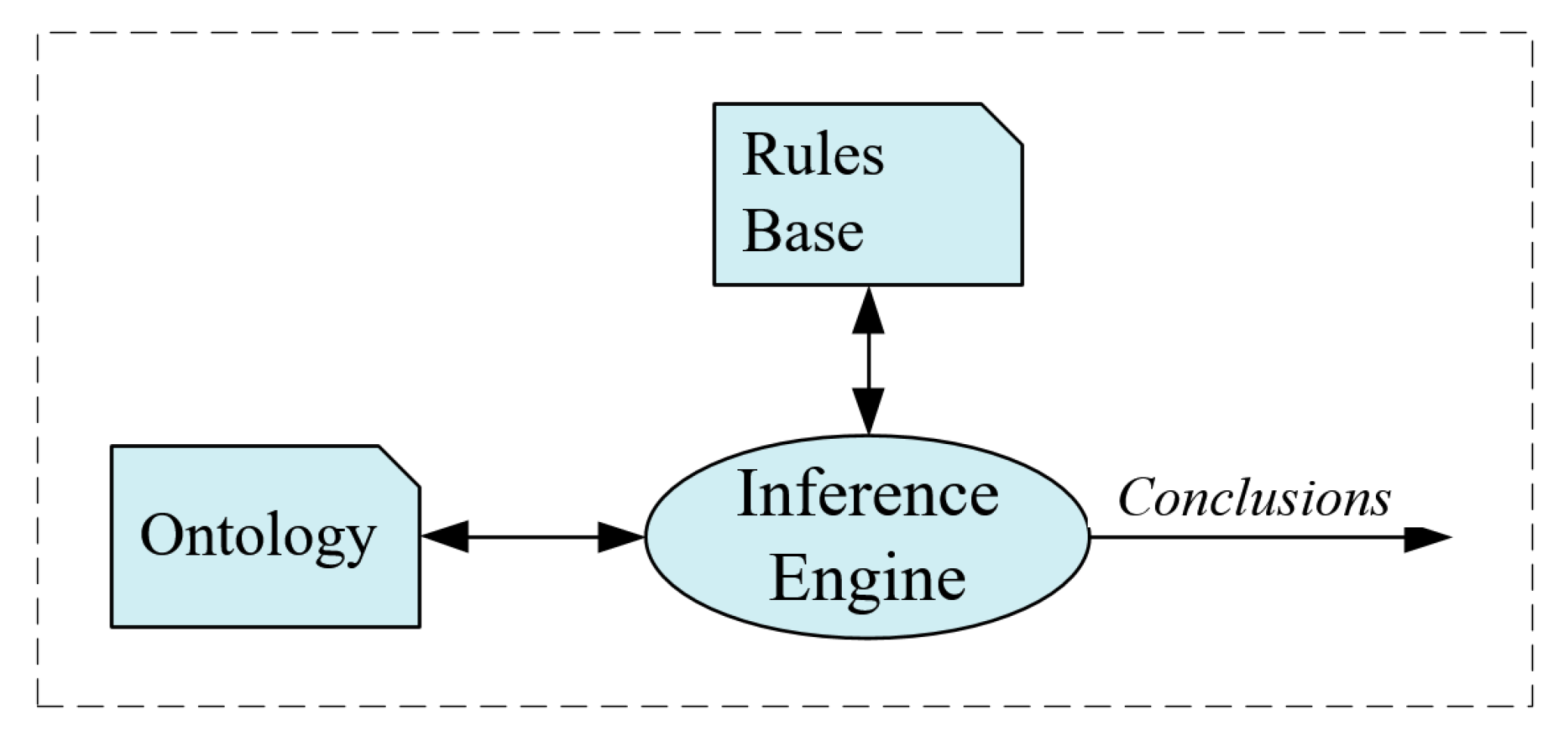

3.1. Reasoning Based on SWRL Rules

3.1.1. Structure and Syntax of SWRL Rules

3.1.2. The Constructions of SWRL Rules

- Extract the key rule knowledge for autonomous robot search and rescue from relevant books, literature, and manuals, and express it in natural language;

- Declare this rule knowledge in a formal description language and specify the precondition of each SWRL rule;

- Determine the types and instances of the concepts involved in the rule knowledge.

- Rule_1: If the initial position of the SAR robot is the center of the SAR map, the robot performs the center search route.

- Rule_2: If the initial position of the SAR robot is a corridor of the SAR map, the robot performs the cross search route.

- Rule_3: If the initial position of the SAR robot is a room of the SAR map, the robot performs the square search route.

- Rule_1: WheeledRobots(?WR) ^ hasAbility(?A, ?WR) ^ Center(?ER) ^ hasPosition(?WR, ?ER) ^ SearchTask(?ST) ^ CenterSearch(?SR) -> ChooseSearchRoute(?SR, ?ST)

- Rule_2: WheeledRobots(?WR) ^ hasAbility(?A, ?WR) ^ Corridor(?ER) ^ hasPosition(?WR, ?ER) ^ SearchTask(?ST) ^ CrossSearch(?SR) -> ChooseSearchRoute(?SR, ?ST)

- Rule_3: WheeledRobots(?WR) ^ hasAbility(?A, ?WR) ^ Room(?ER) ^ hasPosition(?WR, ?ER) ^ SearchTask(?ST) ^ SquareSearch(?SR) -> ChooseSearchRoute(?SR, ?ST)
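The effect of the three rules above can be approximated in a few lines of plain Python. This is only a sketch of the rule logic, not SWRL and not the JESS engine: the `Robot` dataclass, its field names, and the function `choose_search_route` are hypothetical, and the class and route names are taken from the rule atoms.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Area class of the robot's position -> search route class (Rule_1..Rule_3).
ROUTE_BY_AREA = {
    "Center": "CenterSearch",
    "Corridor": "CrossSearch",
    "Room": "SquareSearch",
}


@dataclass(frozen=True)
class Robot:
    """Hypothetical stand-in for the ontology individuals bound by the rules."""
    name: str
    robot_class: str            # e.g. "WheeledRobots"
    position_class: str         # class of the area given by hasPosition
    abilities: Tuple[str, ...]  # abilities related to the robot by hasAbility


def choose_search_route(robot: Robot) -> Optional[str]:
    """Return the search route a rule would select, or None if no rule fires."""
    # Antecedents shared by all three rules: a wheeled robot with some ability.
    if robot.robot_class != "WheeledRobots" or not robot.abilities:
        return None
    # The remaining antecedent is the class of the robot's position.
    return ROUTE_BY_AREA.get(robot.position_class)


robot = Robot("robot_1", "WheeledRobots", "Corridor", ("Move",))
print(choose_search_route(robot))
```

A rule engine differs from this lookup in that it matches the rules against all facts in the knowledge base and asserts the conclusion as a new fact, but the condition-to-conclusion mapping is the same.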

3.1.3. Robot Task Reasoning Based on JESS

3.2. Task Planning Algorithm Based on Ontology

| Algorithm 1 | An ontology-based task planning algorithm |
|---|---|
| Input: | s: the initial state; t: the task inferred by JESS; O: the ontology knowledge |
| Output: | P: a plan for accomplishing t from the initial state |
| 1: | procedure generate a plan for accomplishing t |
| 2: | . |
| 3: | function task_planning(t) |
| 4: | if t is a primitive task then |
| 5: | modify s by deleting del(t) and adding add(t) |
| 6: | append t to P |
| 7: | else |
| 8: | for all subtask in subtasks(t) do |
| 9: | if preconditions(subtask) match s then |
| 10: | task_planning(subtask) |
| 11: | return P |
| 12: | end procedure |
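The recursion in Algorithm 1 can be sketched in Python as follows. This is an illustrative reading of the pseudocode, not the authors' code. It assumes that the plan P starts empty, that the state s is a set of facts, and that the task decomposition normally read from the ontology O is given directly as `Task` objects. The `Task` dataclass and all fact names (`victim_trapped`, `victim_free`, and so on) are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set, Tuple


@dataclass(frozen=True)
class Task:
    """A task; it is primitive when it has no subtasks."""
    name: str
    preconditions: FrozenSet[str] = frozenset()
    add: FrozenSet[str] = frozenset()     # facts added to the state, add(t)
    delete: FrozenSet[str] = frozenset()  # facts removed from the state, del(t)
    subtasks: Tuple["Task", ...] = ()


def task_planning(task: Task, state: Set[str], plan: List[str]) -> List[str]:
    """Expand `task` into primitive tasks, updating `state` and `plan` in place."""
    if not task.subtasks:                       # t is a primitive task
        state.difference_update(task.delete)    # delete del(t)
        state.update(task.add)                  # add add(t)
        plan.append(task.name)                  # append t to P
    else:
        for subtask in task.subtasks:
            if subtask.preconditions <= state:  # preconditions match s
                task_planning(subtask, state, plan)
    return plan


# Hypothetical decomposition of RescueJack with made-up facts.
free = Task("FreeVictim", frozenset({"victim_trapped"}),
            add=frozenset({"victim_free"}), delete=frozenset({"victim_trapped"}))
pick_up = Task("PickUpVictim", frozenset({"victim_free"}),
               add=frozenset({"victim_held"}), delete=frozenset({"victim_free"}))
get_out = Task("GetOutVictim", frozenset({"victim_held"}),
               add=frozenset({"outside"}))
put_down = Task("PutDownVictim", frozenset({"victim_held", "outside"}),
                add=frozenset({"victim_safe"}), delete=frozenset({"victim_held"}))
rescue_jack = Task("RescueJack", subtasks=(free, pick_up, get_out, put_down))

state = {"victim_trapped"}
plan = task_planning(rescue_jack, state, [])  # P is assumed to start empty
print(plan)
```

As in the pseudocode, a subtask whose preconditions do not match the current state is skipped rather than retried, so the order in which subtasks are listed matters.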

3.3. The Update of Ontology

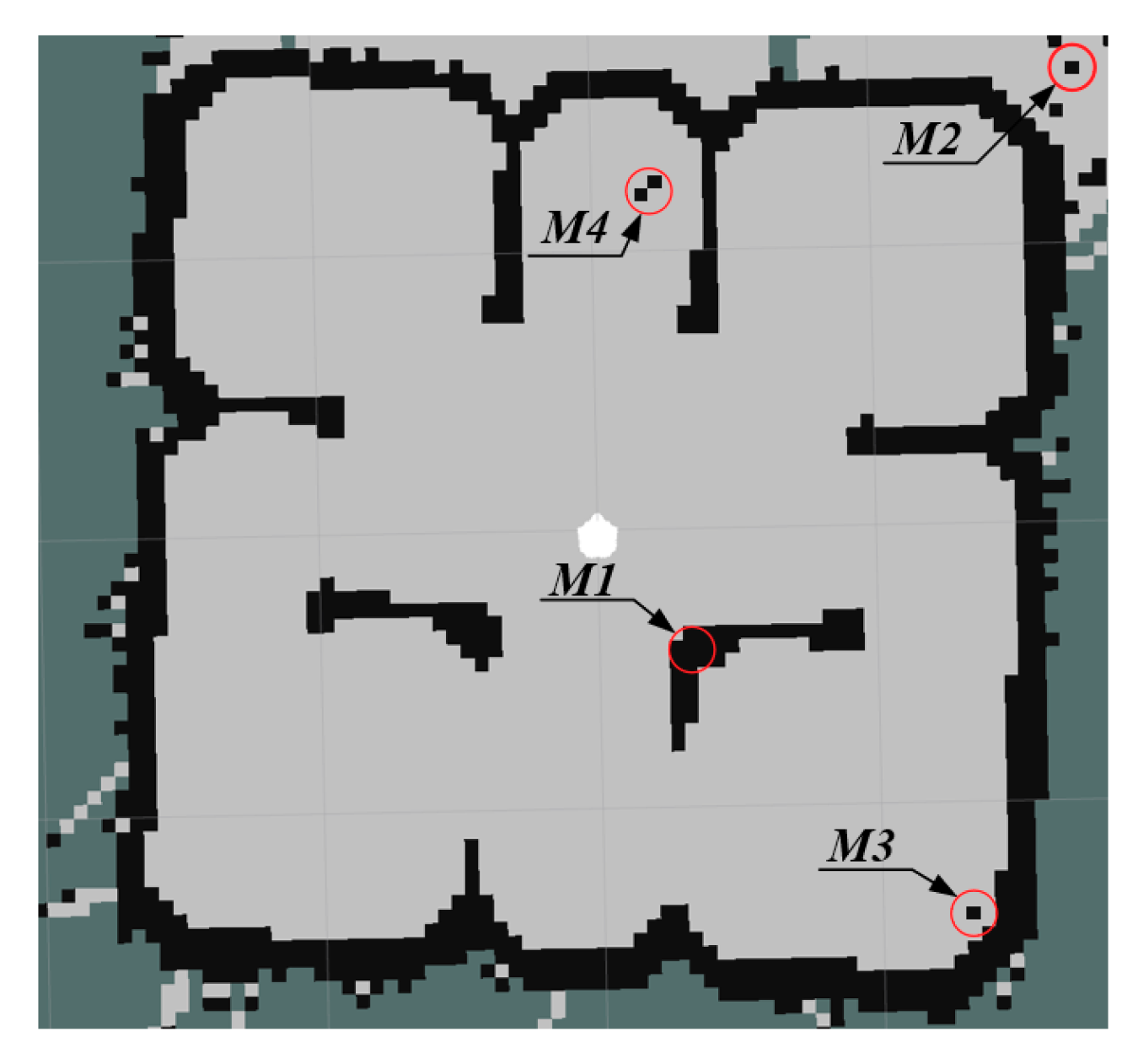

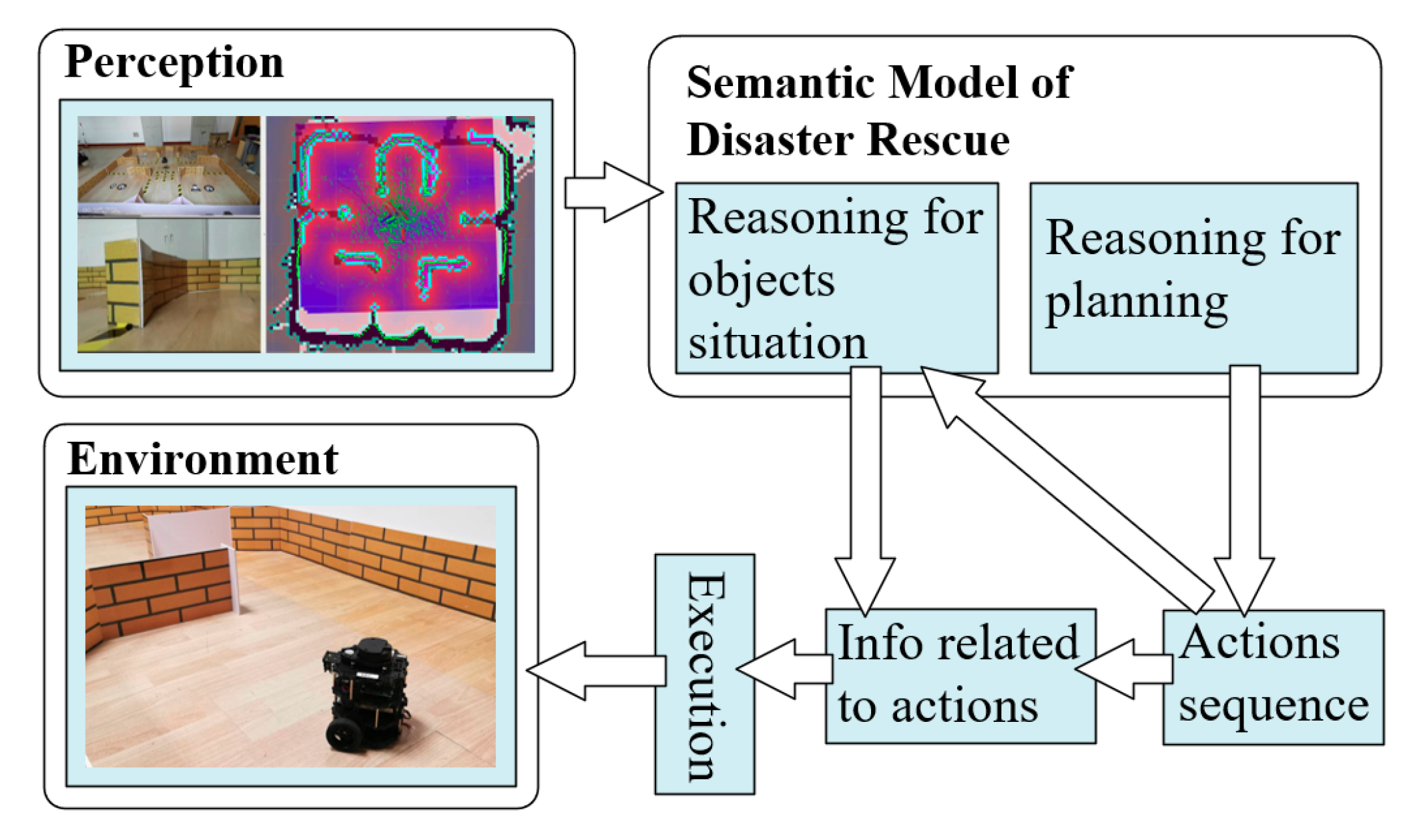

4. A Study Case Based on the Semantic Model

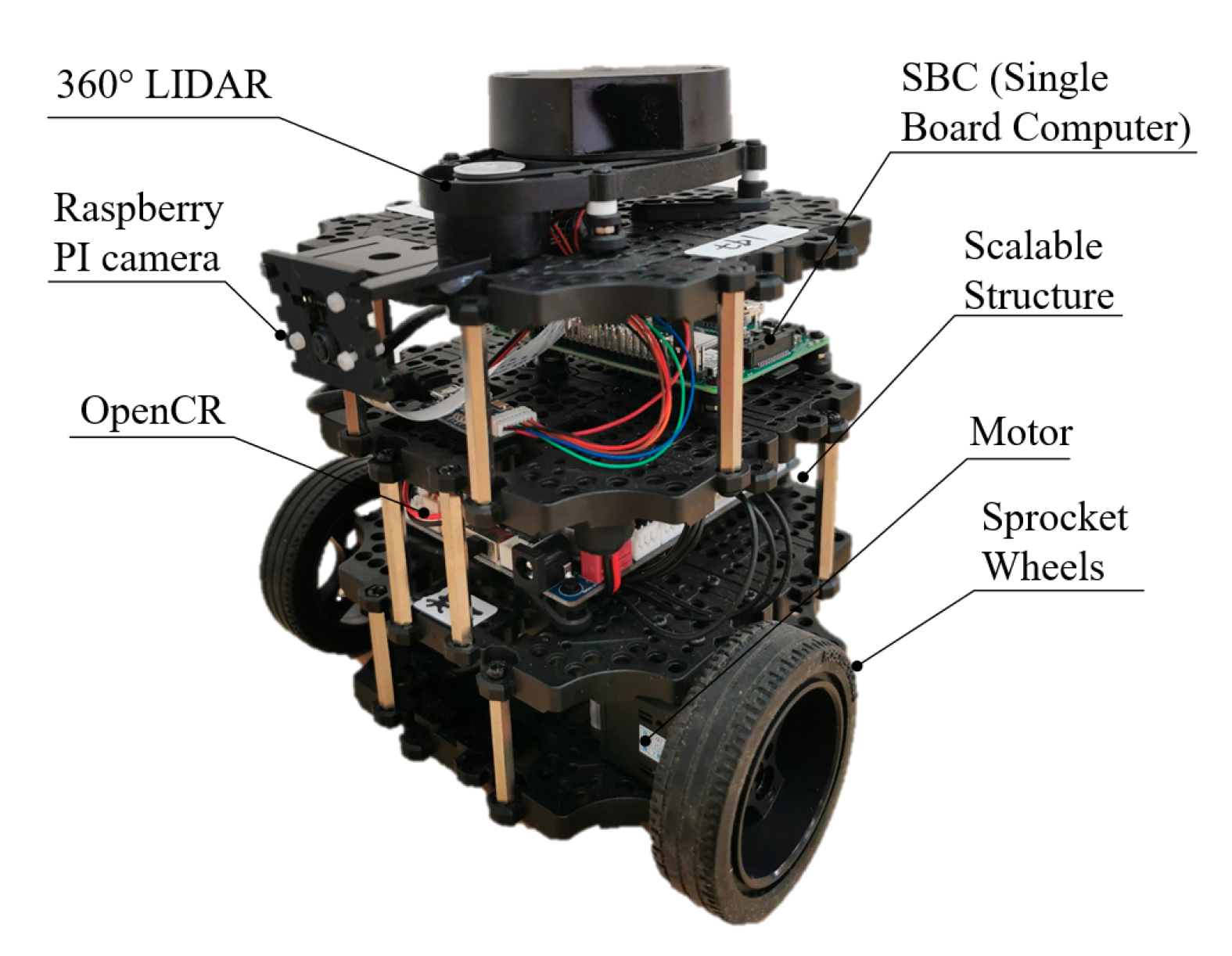

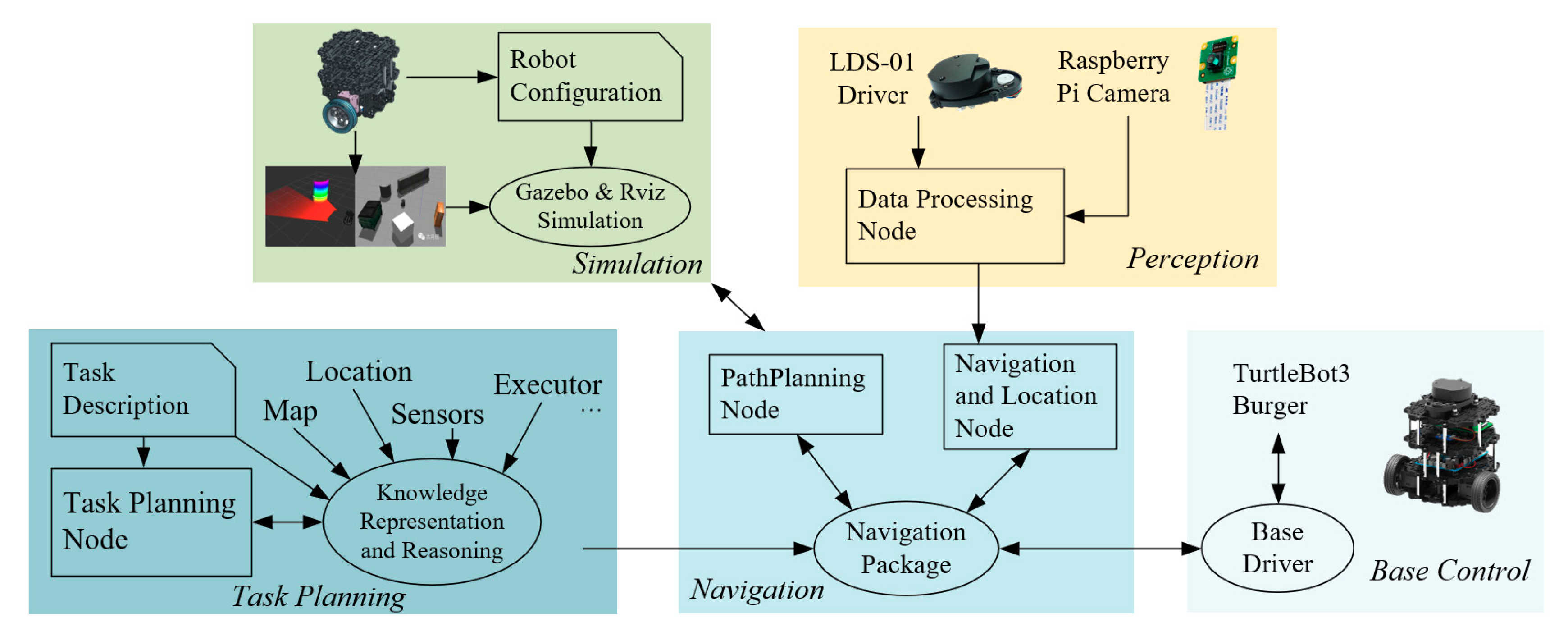

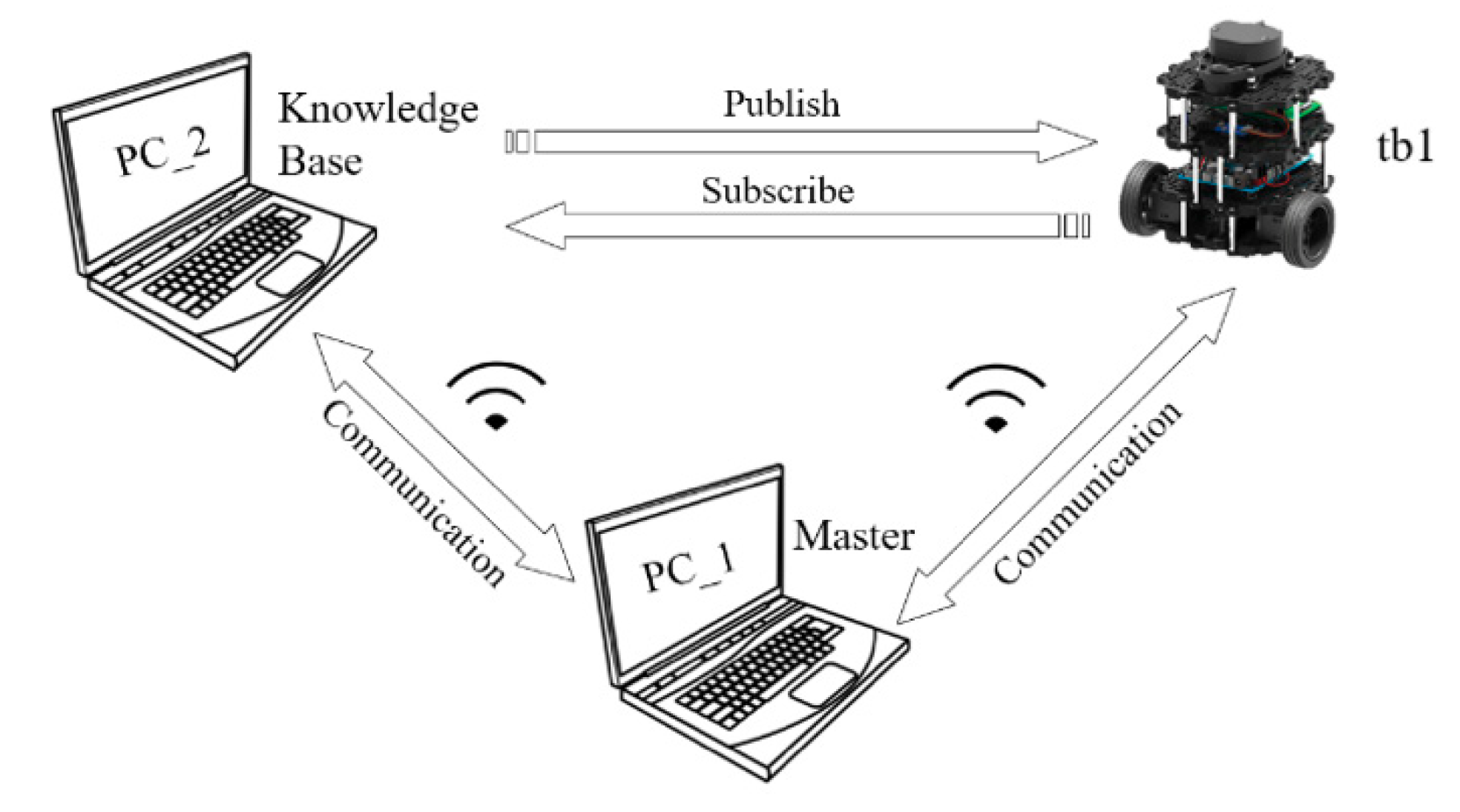

4.1. Hardware and Software

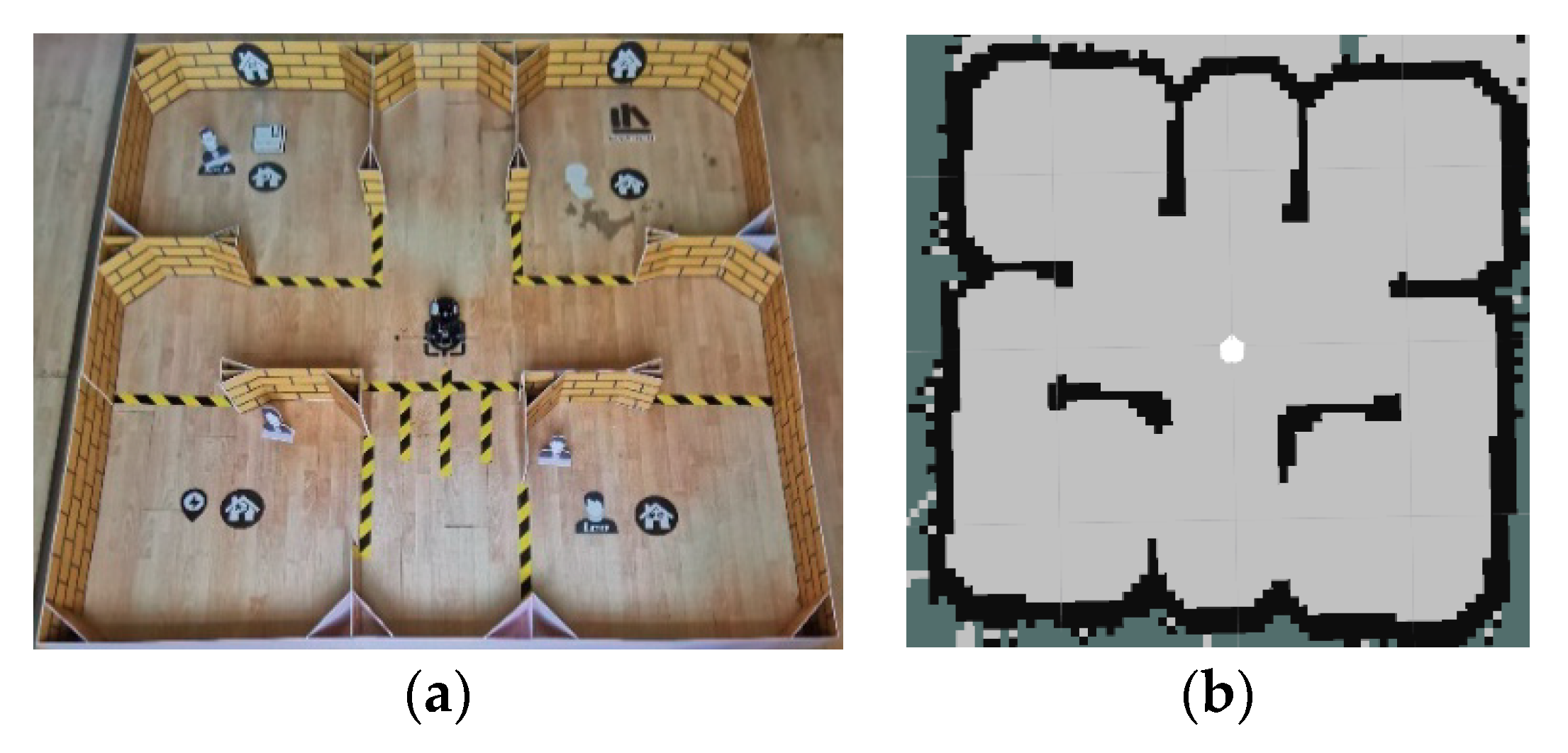

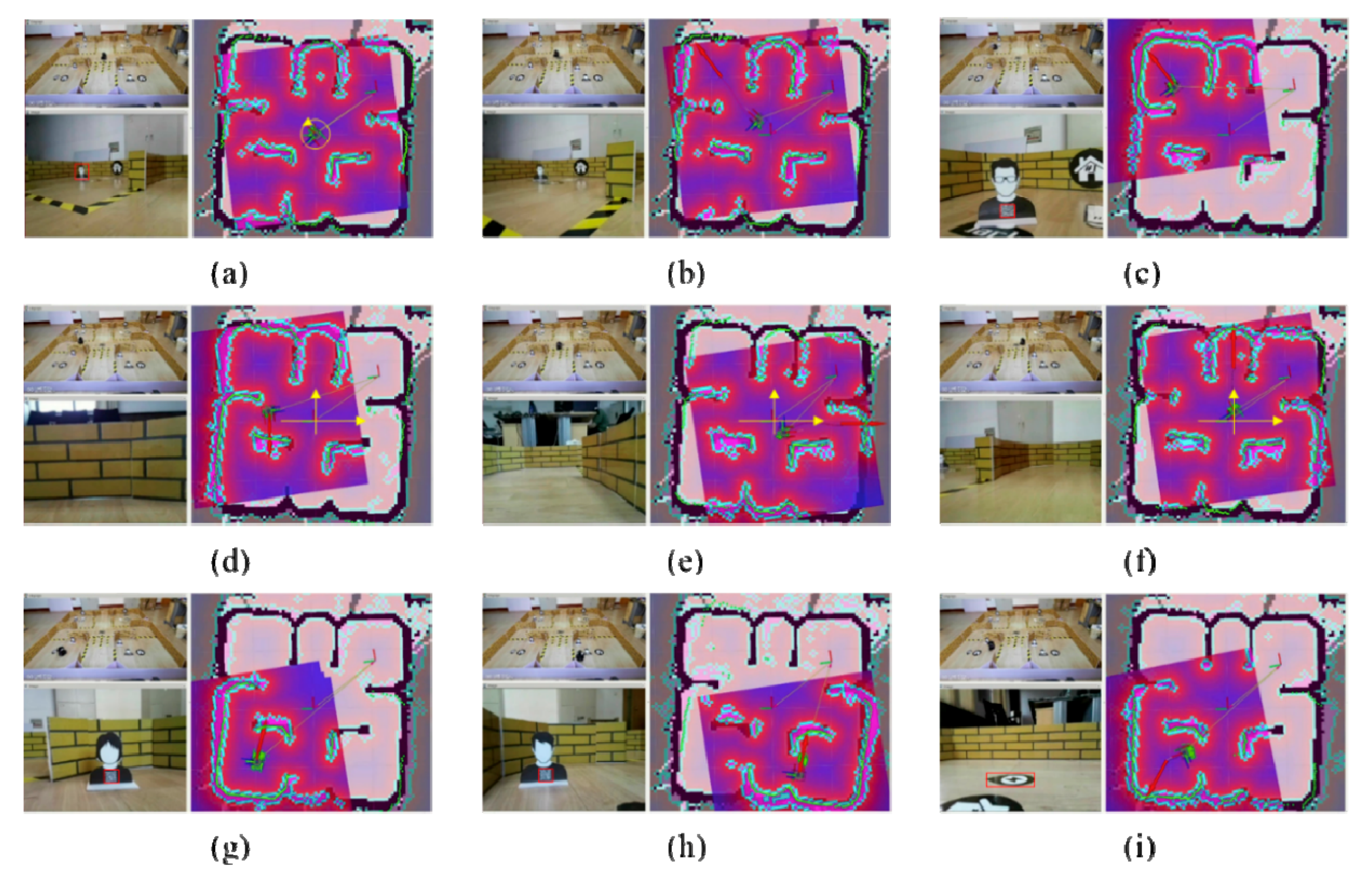

4.2. A Study Case

5. Results

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Atom | Description |

|---|---|

| hasAbility(?A, ?WR) | WR has the A ability |

| hasAction(?ST, ?WR) | WR performs the ST task |

| hasPosition(?WR, ?ER) | WR is located in ER |

| WheeledRobots(?WR) | WR is a wheeled robot |

| ChooseSearchRoute(?SR, ?ST) | the ST task chooses the SR search route |

| Victims(?V) | V is a victim |

| Center(?ER) | ER is the center of SAR map |

| Corridor(?ER) | ER is the corridor of SAR map |

| Room(?ER) | ER is the room of SAR map |

| SquareSearch(?SR) | SR is the square search route |

| CrossSearch(?SR) | SR is the cross search route |

| CenterSearch(?SR) | SR is the center search route |

| SearchTask(?ST) | ST is the search task |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Sun, X.; Zhang, Y.; Chen, J. High-Level Smart Decision Making of a Robot Based on Ontology in a Search and Rescue Scenario. Future Internet 2019, 11, 230. https://doi.org/10.3390/fi11110230