Identification of Larch Caterpillar Infestation Severity Based on Unmanned Aerial Vehicle Multispectral and LiDAR Features

Abstract

1. Introduction

- Identify the feature sets (multispectral vegetation indices and LiDAR features) most sensitive to larch caterpillar infestation;

- Construct a high-precision model for recognizing the severity of larch caterpillar infestation;

- Map the severity of larch caterpillar infestation at the single-plant scale in the experimental area and characterize its spatial distribution in relation to topographic features.

2. Materials and Methods

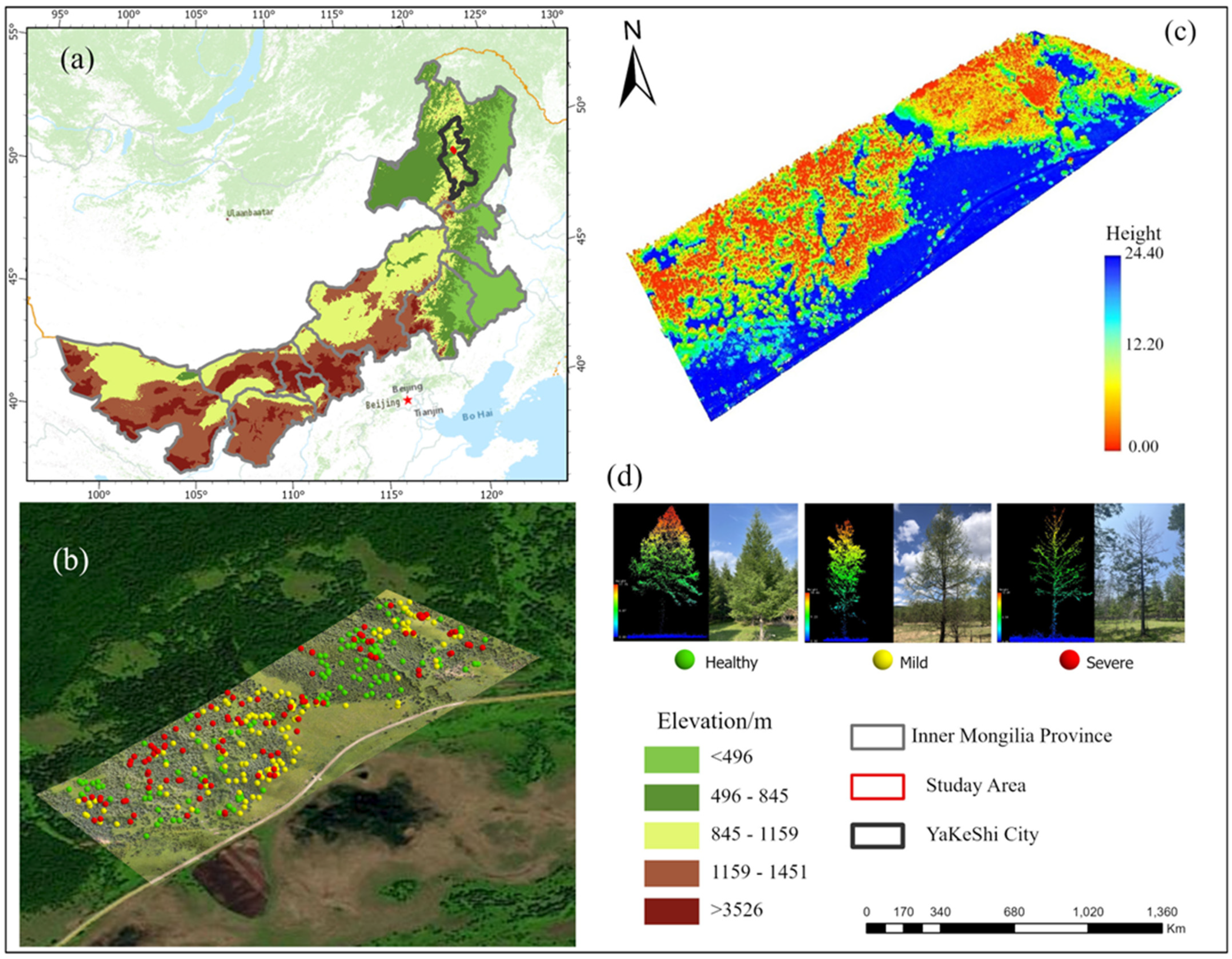

2.1. Study Area

2.2. Data Acquisition

2.2.1. Ground Survey Data

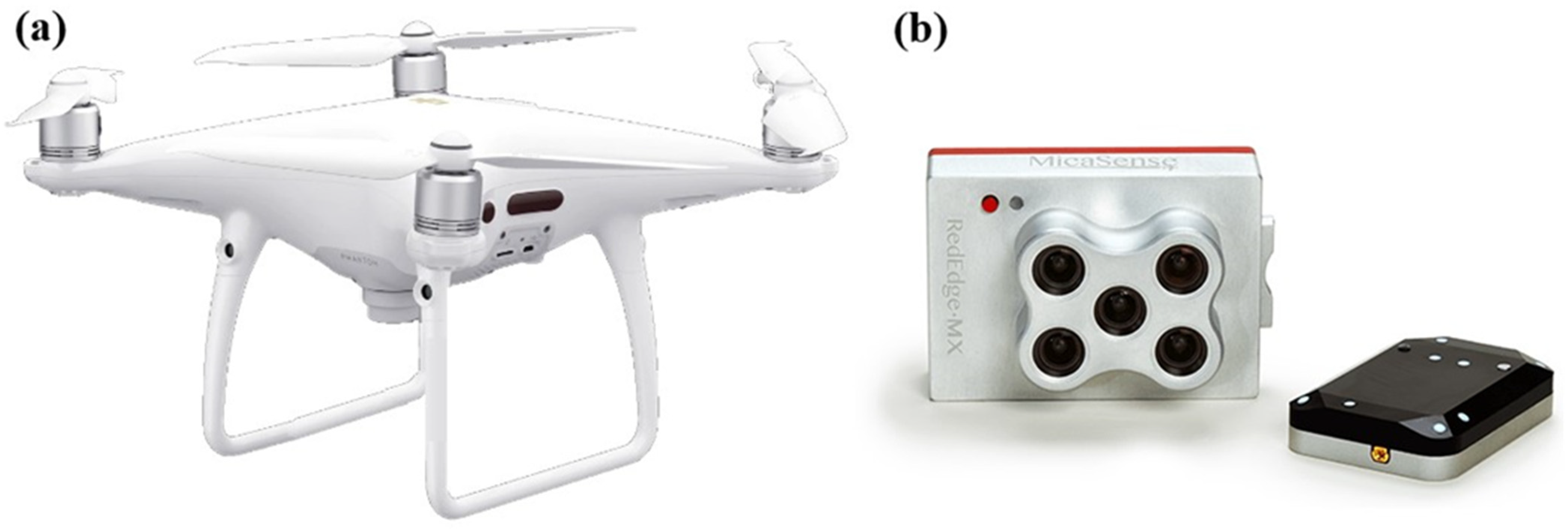

2.2.2. UAV Multispectral Data

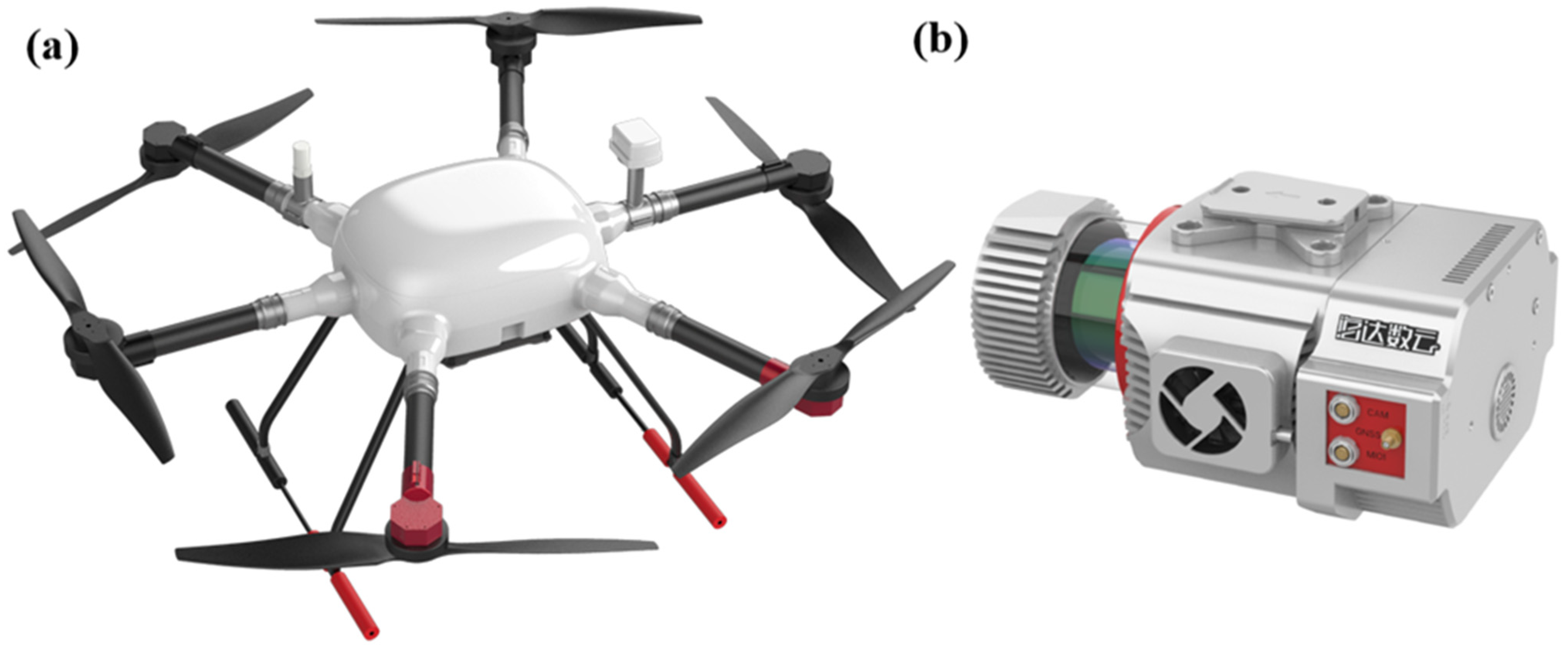

2.2.3. UAV LiDAR Data

2.3. Research Methods

2.3.1. Multispectral Vegetation Index Calculation

2.3.2. LiDAR Feature Calculation

2.3.3. Sensitive Feature Selection Method

2.3.4. Recognition Model

2.3.5. Evaluation of Model Accuracy

3. Results

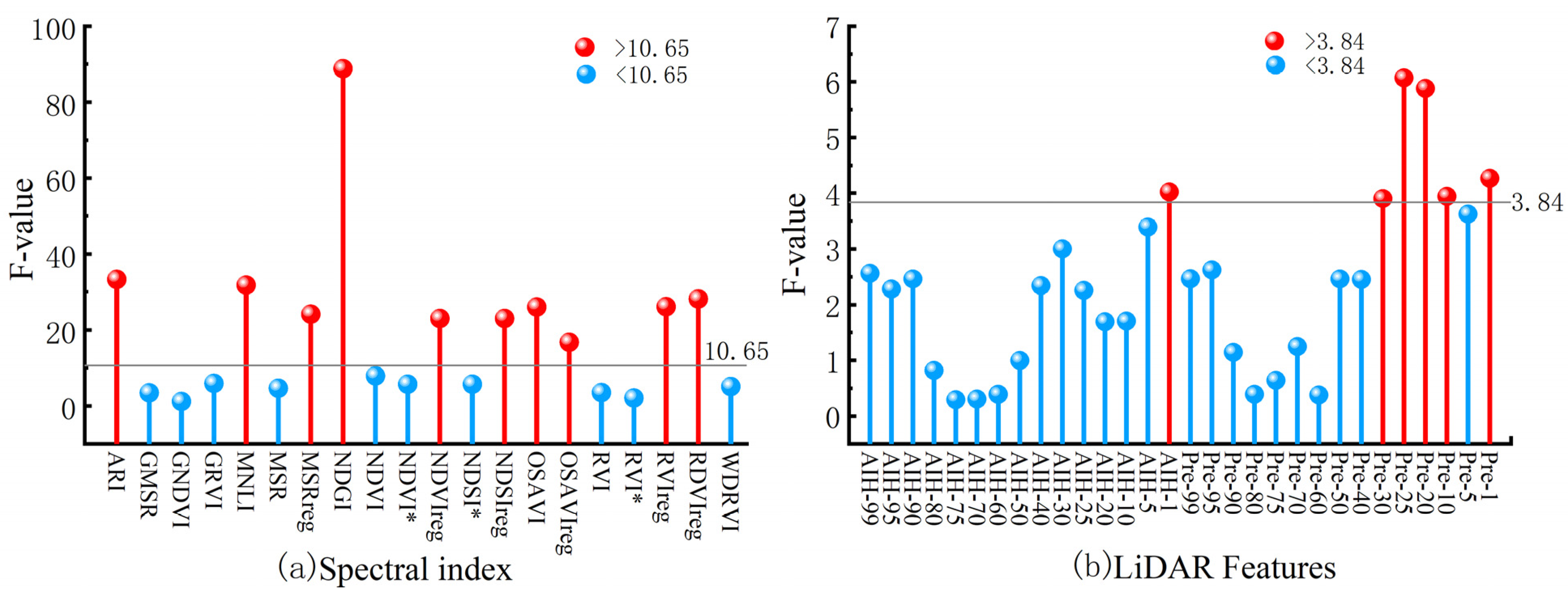

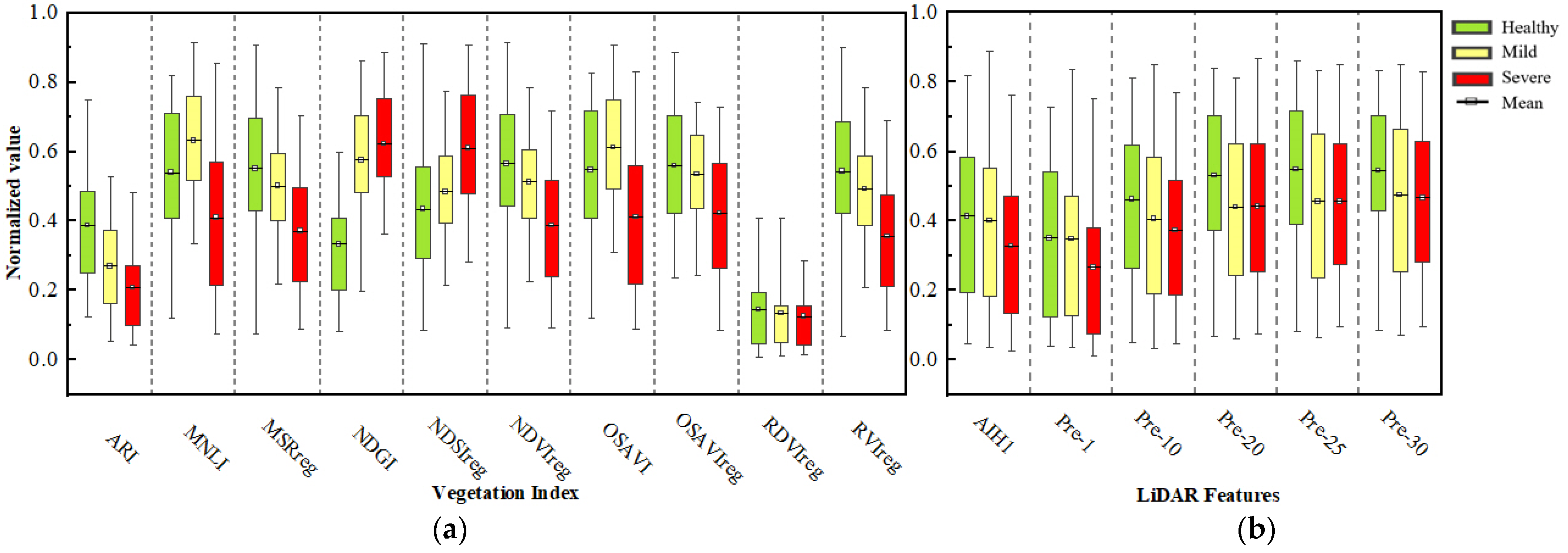

3.1. Sensitivity Analysis of Multispectral Vegetation Indices and LiDAR Features

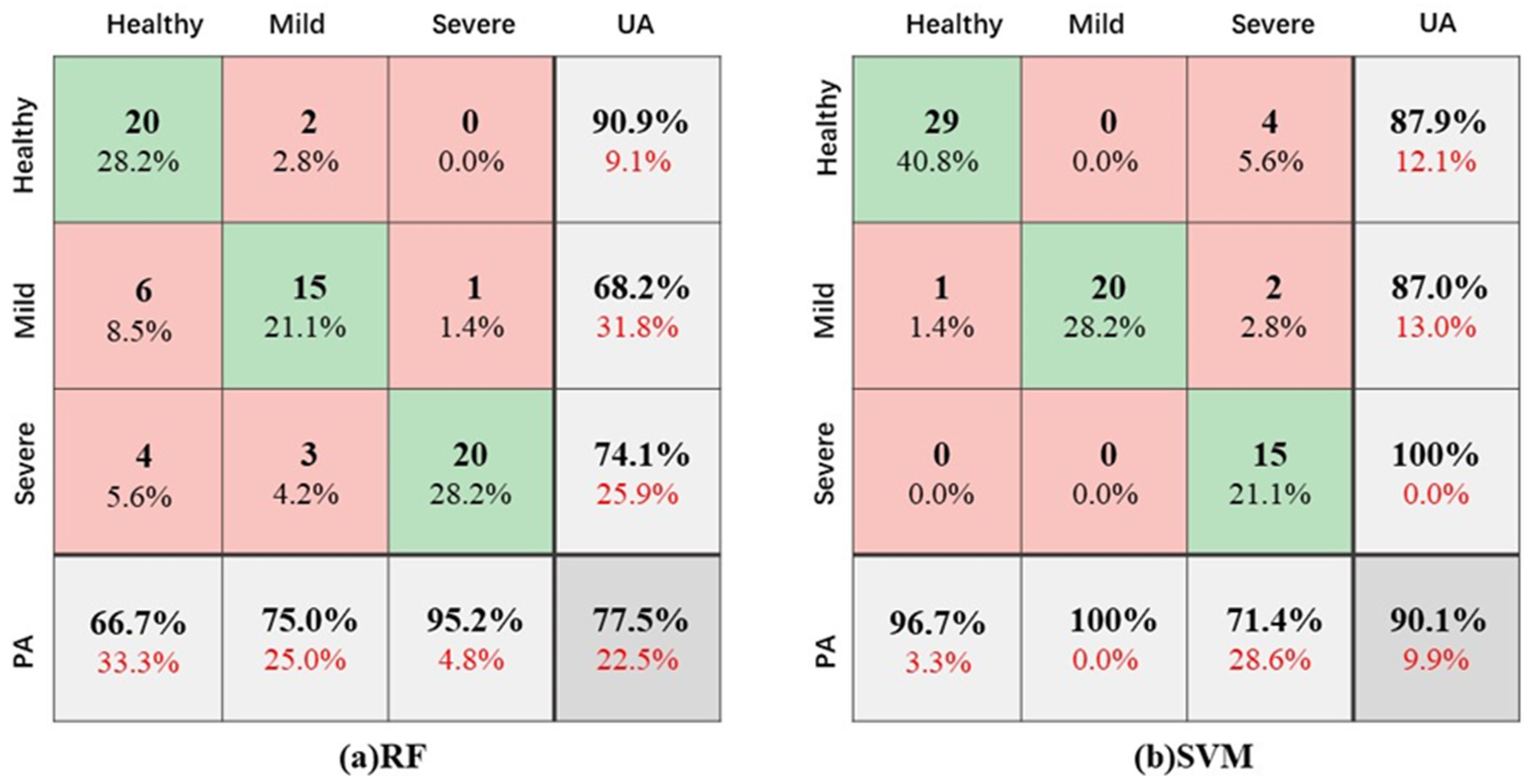

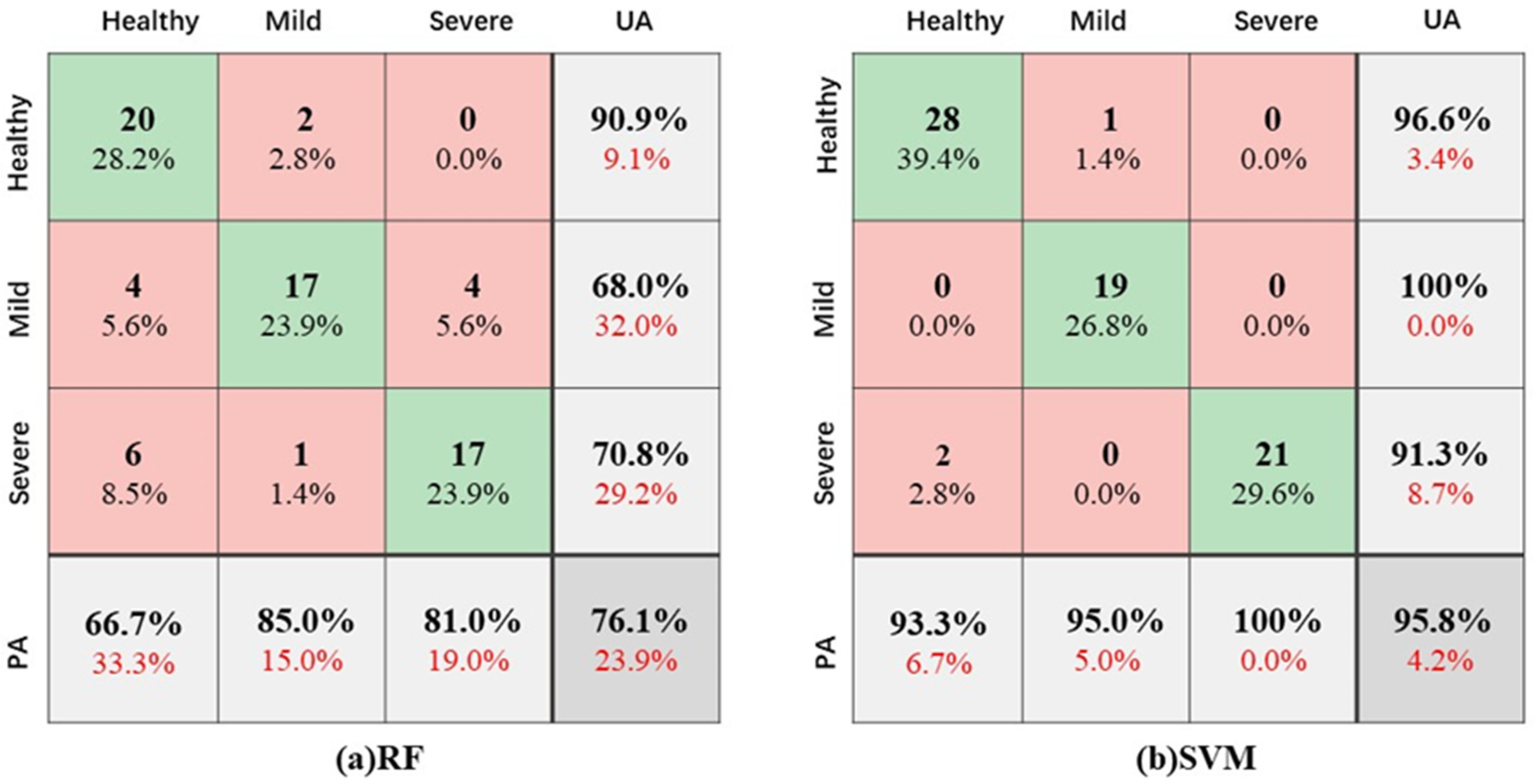

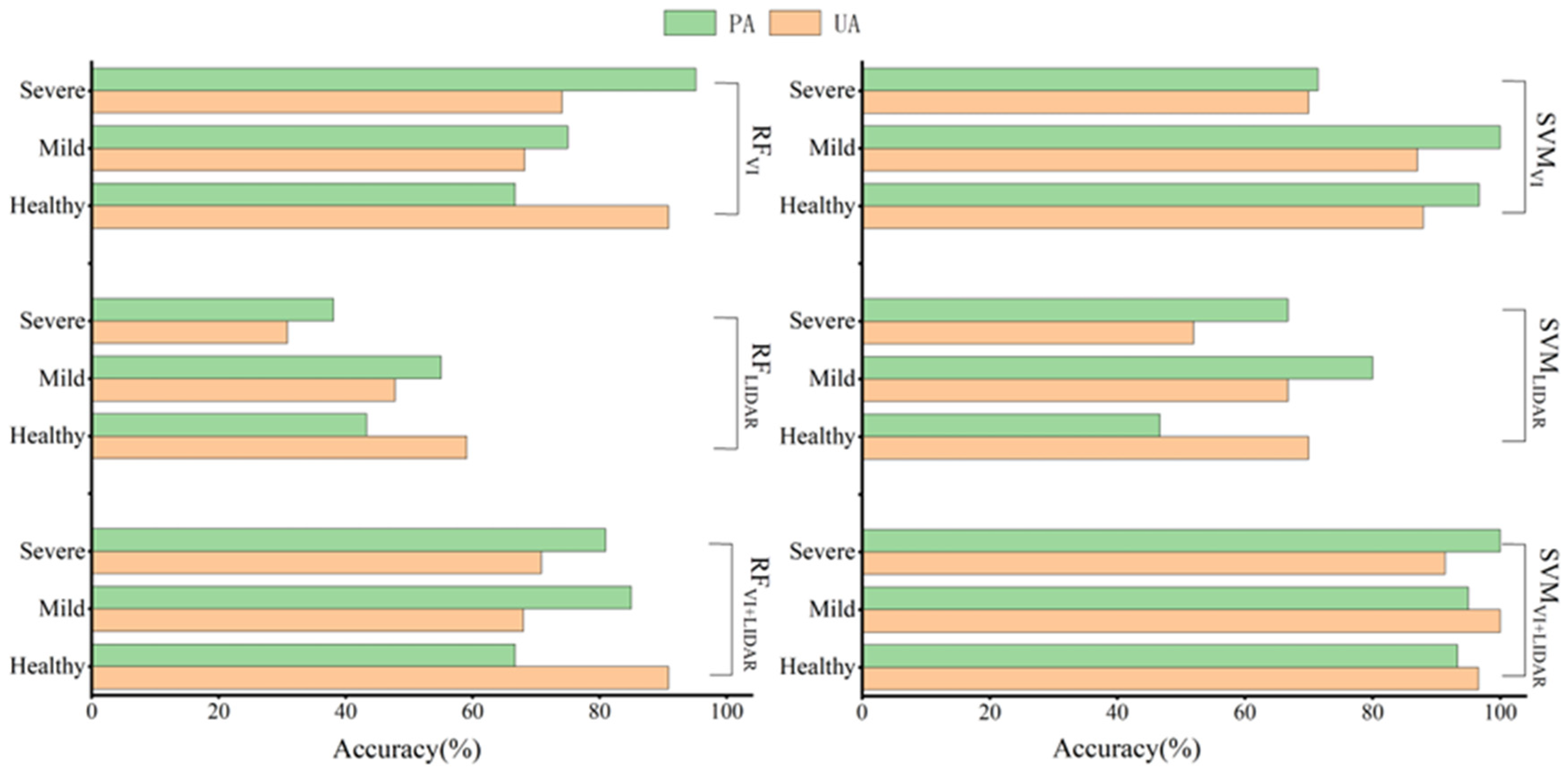

3.2. Larch Caterpillar Infestation Severity Recognition Model Results

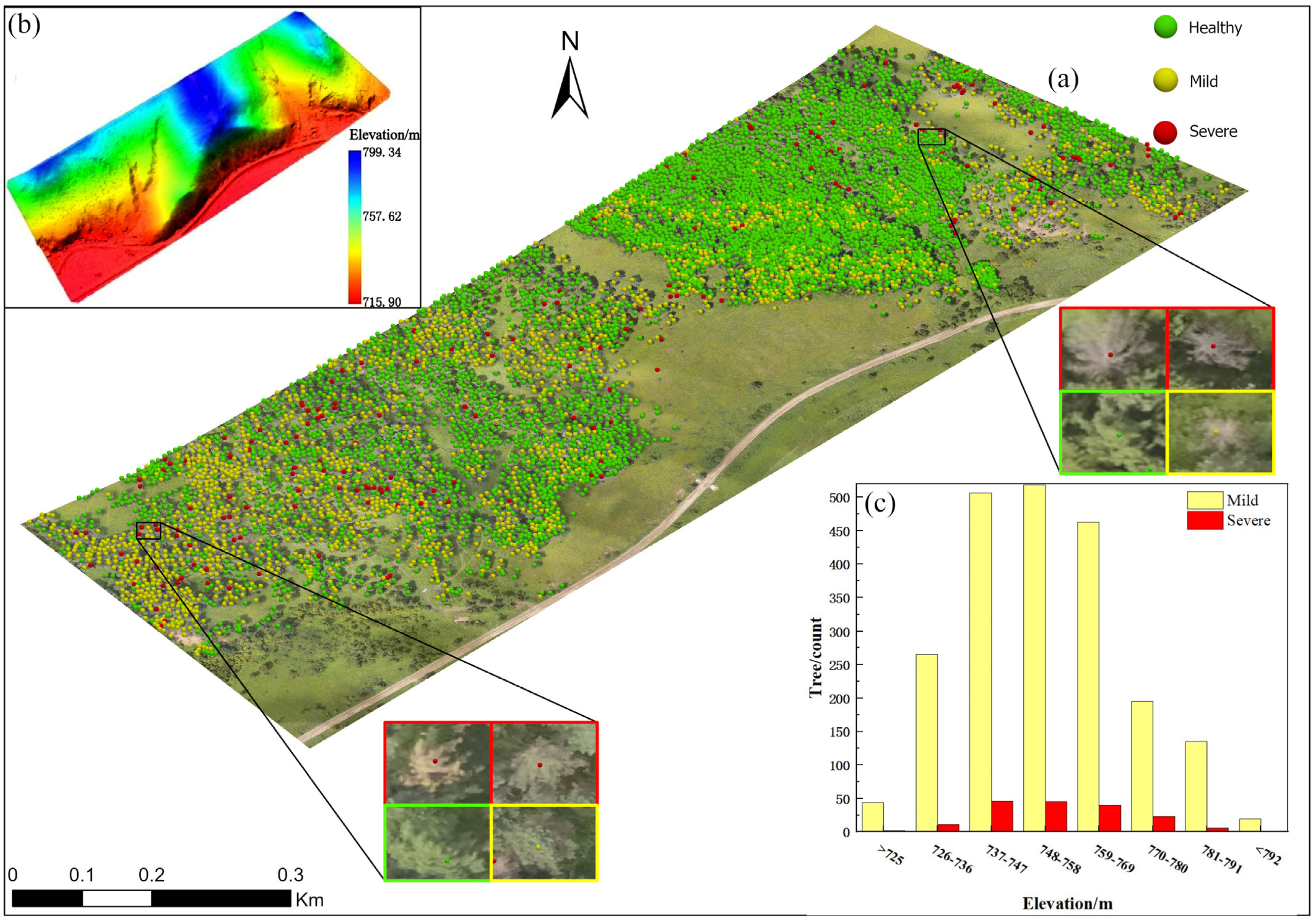

3.3. Distribution of Larch Caterpillar Infestation Severity Based on the Single-Plant Scale

4. Discussion

4.1. Sensitivity of Multispectral Vegetation Indices and LiDAR Features

4.2. Accuracy of Larch Caterpillar Pest Severity Monitoring Models

5. Conclusions

- (1) Ten multispectral vegetation indices and six LiDAR features were selected by the ANOVA test, with NDGI and Pre_25% showing the strongest sensitivity. Indices calculated from the NIR and red-edge bands made up the largest share of the selected vegetation indices (6/10). The strong sensitivity of the 25% height variable was associated with the date on which the experimental data were collected and with the growth cycle of the larch caterpillar;

- (2) Among the six monitoring models built from different feature sets, the SVMVI+LIDAR model achieved the best overall performance, with OA, Rmacro, and F1macro above 0.95 and a Kappa coefficient of 0.9384. Its overall accuracy was 5.63 and 33.77 percentage points higher than that of SVMVI and SVMLIDAR, respectively. This indicates that combining multisource remote sensing data is an important way to improve the accuracy of pest recognition;

- (3) The high-precision SVMVI+LIDAR model was used to map the severity of larch caterpillar infestation in the study area at the single-plant scale, and infestation trends were analyzed in relation to topographic characteristics. Infestation severity tended to increase with decreasing elevation; control should therefore first target mildly and severely damaged canopies at low elevations in order to protect healthy canopies at high elevations.
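Conclusion (1) reports that the candidate features were screened with an ANOVA test. As an illustration only: a one-way ANOVA F statistic for a single feature compares the spread of the severity-class means with the spread of values inside each class, so a larger F marks a feature that separates the classes more strongly. The plain-Python sketch below assumes each feature is supplied as one list of per-tree values per severity class; the function names `one_way_anova_f` and `rank_features` and the example values are hypothetical and are not the authors' code or data. In practice, `scipy.stats.f_oneway` returns both F and the p-value that a significance threshold would be applied to.

```python
from statistics import mean


def one_way_anova_f(groups: list[list[float]]) -> float:
    """One-way ANOVA F statistic for one feature split into severity classes."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    group_means = [mean(g) for g in groups]
    k, n = len(groups), len(all_values)

    # Between-class sum of squares: class means around the grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-class sum of squares: samples around their own class mean
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)

    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n - k)
    return ms_between / ms_within


def rank_features(features: dict[str, list[list[float]]]) -> list[tuple[str, float]]:
    """Sort features by F statistic, most class-separating first."""
    scores = {name: one_way_anova_f(groups) for name, groups in features.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical per-class values for two features (not study data)
    features = {
        "feature_a": [[1, 2, 3], [4, 5, 6], [7, 8, 9]],
        "feature_b": [[1, 5, 9], [2, 5, 8], [3, 5, 7]],
    }
    for name, f in rank_features(features):
        print(f"{name}: F = {f:.2f}")
```

Here `feature_a` has well-separated class means and a small within-class spread, so it ranks first; `feature_b` has identical class means and therefore F = 0.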

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Chen, H.; Hu, Y.; Chang, Y.; Ren, C.; Liu, M.; Liu, N. Advances in the researches of Dendrolimus superans of Daxing’an Mountain of China. J. Northwest For. Univ. 2011, 26, 119–127.

2. Huang, X.-J. Remote Sensing Identification and Monitoring of Larch Needle Pests Based on Ground Hyperspectral Data. Ph.D. Thesis, Lanzhou University, Lanzhou, China, 2019.

3. Sun, H.; Zhao, M.L.; Wu, L.Q.; Zhang, Y.W.; Deng, T.; Zhang, N.; Zhou, Y.; Wen, S. Effects of different ratio structures of mixed forests on the occurrence number, probability and distribution of Dendrolimus superans Butler. J. Shanxi Agric. Univ. (Nat. Sci. Ed.) 2022, 42, 106–113.

4. Chen, K.Y.; Wang, J.J.; He, Y.J.; Zhang, L.W. Assessment of forest carbon storage and carbon sequestration potential in key state-owned forest areas of the Great Khingan Mountains, Heilongjiang Province. J. Ecol. Environ. 2022, 31, 1725–1734.

5. Pan, Z.; Zhang, L.J.; Sun, J.B.; Wang, X.L.; Pan, Z.C.; Zhao, Y.S.; Yu, W.X. Thinking after the survey of Greater Khingan Mountains Forest Area. J. Northeast. For. Univ. 2004, 32, 101–102.

6. Liu, Y.; Li, C.X.; Wang, Z.C.; Zhao, Y.Z.; Li, Y.X. Stand Spatial Structure Characteristics of Two Main Natural Secondary Forests in Daxing’an Mountains. J. Northeast. For. Univ. 2020, 48, 128–134.

7. Center for Biological Hazard Prevention and Control, State Forestry and Grassland Administration, China (Ed.) Forestry Pest Monitoring and Forecasting 2022; China Forestry Press: Beijing, China, 2023; p. 264.

8. Wu, Y. Research on the Estimation of Forest Parameters Based on Remote Sensing Image Data: A Case Study of Chun’an County, Zhejiang Province. Master’s Thesis, Zhejiang University, Hangzhou, China, 2021.

9. Tang, L.; Shao, G. Drone remote sensing for forestry research and practices. J. For. Res. 2015, 26, 791–797.

10. Torresan, C.; Berton, A.; Carotenuto, F.; Di Gennaro, S.F.; Gioli, B.; Matese, A.; Miglietta, F.; Vagnoli, C.; Zaldei, A.; Wallace, L. Forestry applications of UAVs in Europe: A review. Int. J. Remote Sens. 2017, 38, 2427–2447.

11. Guo, A.; Ye, H.C.; Huang, W.J.; Qian, B.X.; Wang, J.J.; Lan, Y.B.; Wang, S.Z. Inversion of maize leaf area index from UAV hyperspectral and multispectral imagery. Comput. Electron. Agric. 2023, 212, 108020.

12. Bai, L.-G.; Huang, X.-J.; Dashzebeg, G.; Ariunaa, M.; Nanzad, T.; Dorjsuren, A.; Bao, G.; Tong, S.Q.; Bao, Y.H.; Yin, S.; et al. Estimation of the population density of Erannis jacobsoni (Lepidoptera Geometridae) based on hyperspectral features. Acta Entomol. Sin. 2021, 64, 711–721.

13. Huang, X.-J.; Xie, Y.-W.; Bao, Y.-H.; Bao, G.; Qing, S.; Bao, Y.-L. Estimation of Leaf Loss Rate in Larch Infested with Erannis jacobsoni Djak Based on Differential Spectral Continuous Wavelet Coefficient. Spectrosc. Spectr. Anal. 2019, 39, 2732–2738.

14. Yu, R.; Luo, Y.; Li, H.; Yang, H.; Huang, H.; Yu, L.; Ren, L. Three-Dimensional Convolutional Neural Network Model for Early Detection of Pine Wilt Disease Using UAV-Based Hyperspectral Images. Remote Sens. 2021, 13, 4065.

15. Huo, L.; Lindberg, E.; Bohlin, J.; Persson, H.J. Assessing the detectability of European spruce bark beetle green attack in multispectral drone images with high spatial- and temporal resolutions. Remote Sens. Environ. 2023, 287, 113484.

16. Lei, S.; Luo, J.; Tao, X.; Qiu, Z. Remote Sensing Detecting of Yellow Leaf Disease of Arecanut Based on UAV Multisource Sensors. Remote Sens. 2021, 13, 4562.

17. Zhang, N.; Chai, X.; Li, N.; Zhang, J.; Sun, T. Applicability of UAV-based optical imagery and classification algorithms for detecting pine wilt disease at different infection stages. GISci. Remote Sens. 2023, 60, 2170479.

18. Lin, Q.; Huang, H.; Wang, J.; Huang, K.; Liu, Y. Detection of Pine Shoot Beetle (PSB) Stress on Pine Forests at Individual Tree Level using UAV-Based Hyperspectral Imagery and Lidar. Remote Sens. 2019, 11, 2540.

19. Zhao, X.; Qi, J.; Xu, H.; Yu, Z.; Yuan, L.; Chen, Y.; Huang, H. Evaluating the potential of airborne hyperspectral LiDAR for assessing forest insects and diseases with 3D Radiative Transfer Modeling. Remote Sens. Environ. 2023, 297, 113759.

20. Dash, J.P.; Watt, M.S.; Paul, T.S.H.; Morgenroth, J.; Pearse, G.D. Early Detection of Invasive Exotic Trees Using UAV and Manned Aircraft Multispectral and LiDAR Data. Remote Sens. 2019, 11, 1812.

21. Niu, Y.; Li, X.; Zhang, J.; Ma, D.; Ji, J.; Xuan, K.; Jiang, Y.; Wang, C.; Deng, H.; Liu, J. Inversion of Leaf Area Index in Winter Wheat by Merging UAV LiDAR with Multispectral Remote Sensing Data. Acta Pedol. Sin. 2022, 59, 161–171.

22. Wu, P.Q.; Ren, G.B.; Zhang, C.F.; Wang, H.; Liu, S.W.; Ma, Y. Fine identification and biomass estimation of mangroves based on UAV multispectral and LiDAR. Natl. Remote Sens. Bull. 2022, 26, 1169–1181.

23. Zhang, X.; Liu, Y.; Yu, S.; Su, R.; A, R. Research of Extraction Method of Forest Tree Height Based on Unmanned Aerial Vehicle LiDAR and Multispectral Data. For. Eng. 2023, 39, 29–36.

24. Yao, Y.; Qin, H.M.; Zhang, Z.M.; Wang, W.M.; Zhou, W.Q. The classification of subtropical forest tree species based on UAV multispectral remote sensing data. Acta Ecol. Sin. 2022, 42, 3666–3677.

25. Su, J.; Liu, C.; Hu, X.; Xu, X.; Guo, L.; Chen, W.H. Spatio-temporal monitoring of wheat yellow rust using UAV multispectral imagery. Comput. Electron. Agric. 2019, 167, 105035.

26. Severtson, D.; Callow, N.; Flower, K.; Neuhaus, A.; Olejnik, M.; Nansen, C. Unmanned aerial vehicle canopy reflectance data detects potassium deficiency and green peach aphid susceptibility in canola. Precis. Agric. 2016, 17, 659–677.

27. Ye, H.; Huang, W.; Huang, S.; Gui, B.; Dong, Y.; Guo, A.; Ren, Y.; Jin, Y. Recognition of Banana Fusarium Wilt Based on UAV Remote Sensing. Remote Sens. 2020, 12, 938.

28. Zolkos, S.G.; Goetz, S.J.; Dubayah, R. A meta-analysis of terrestrial aboveground biomass estimation using LiDAR remote sensing. Remote Sens. Environ. 2013, 128, 289–298.

29. Gitelson, A.A.; Chivkunova, O.B.; Merzlyak, M.N. Nondestructive estimation of anthocyanins and chlorophylls in anthocyanic leaves. Am. J. Bot. 2009, 96, 1861–1868.

30. Knyazikhin, Y.; Martonchik, J.V.; Myneni, R.B.; Diner, D.J.; Running, S.W. Synergistic algorithm for estimating vegetation canopy leaf area index and fraction of absorbed photosynthetically active radiation from MODIS and MISR data. J. Geophys. Res. Atmos. 1998, 103, 32257–32275.

31. Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.

32. Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87.

33. Peng, G.; Ruiliang, P.; Biging, G.S.; Larrieu, R. Estimation of forest leaf area index using vegetation indices derived from Hyperion hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1355–1362.

34. Slater, P.N.; Jackson, R.D. Atmospheric effects on radiation reflected from soil and vegetation as measured by orbital sensors using various scanning directions. Appl. Opt. 1982, 21, 3923–3931.

35. Chen, J.M. Evaluation of Vegetation Indices and a Modified Simple Ratio for Boreal Applications. Can. J. Remote Sens. 1996, 22, 229–242.

36. Mirik, M.; Ansley, R.J.; Michels, G.J.; Elliott, N.C. Spectral vegetation indices selected for quantifying Russian wheat aphid (Diuraphis noxia) feeding damage in wheat (Triticum aestivum L.). Precis. Agric. 2012, 13, 501–516.

37. Richardson, A.D.; Duigan, S.P.; Berlyn, G.P. An evaluation of noninvasive methods to estimate foliar chlorophyll content. New Phytol. 2002, 153, 185–194.

38. Rondeaux, C.; Steven, M.D.; Baret, F. Optimization of Soil-Adjusted Vegetation Indices. Remote Sens. Environ. 1996, 55, 95–107.

39. Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring vegetation systems in the great plains with ERTS. In Proceedings of the Third ERTS Symposium, NASA SP-351, NASA, Washington, DC, USA, 10–14 December 1973; Volume 1, pp. 309–317.

40. Gitelson, A.; Merzlyak, M.N. Quantitative estimation of chlorophyll-a using reflectance spectra: Experiments with autumn chestnut and maple leaves. J. Photochem. Photobiol. B Biol. 1994, 22, 247–252.

41. Ortiz, S.M.; Breidenbach, J.; Kändler, G. Early Detection of Bark Beetle Green Attack Using TerraSAR-X and RapidEye Data. Remote Sens. 2013, 5, 1912–1931.

42. Jordan, C.F. Derivation of leaf-area index from quality of radiation on the forest floor. Ecology 1969, 50, 663–666.

43. Major, D.J.; Baret, F.; Guyot, G. A ratio vegetation index adjusted for soil brightness. Int. J. Remote Sens. 1990, 11, 727–740.

44. Yang, N.; Cui, W.; Zhang, Z.; Zhang, J.; Chen, J.; Du, R.; Lao, C.; Zhou, Y. Soil salinity inversion at different depths using improved spectral index with UAV multispectral remote sensing. Trans. Chin. Soc. Agric. Eng. (Trans. CSAE) 2020, 36, 13–21. (In Chinese with English Abstract)

45. Broge, N.H.; Leblanc, E. Comparing prediction power and stability of broadband and hyperspectral vegetation indices for estimation of green leaf area index and canopy chlorophyll density. Remote Sens. Environ. 2001, 76, 156–172.

46. Gitelson, A.A. Wide Dynamic Range Vegetation Index for Remote Quantification of Biophysical Characteristics of Vegetation. J. Plant Physiol. 2004, 161, 165–173.

47. Varin, M.; Chalghaf, B.; Joanisse, G. Object-Based Approach Using Very High Spatial Resolution 16-Band WorldView-3 and LiDAR Data for Tree Species Classification in a Broadleaf Forest in Quebec, Canada. Remote Sens. 2020, 12, 3092.

48. Hsu, C.W.; Chang, C.C.; Lin, C.J. A Practical Guide to Support Vector Classification; Department of Computer Science and Information Engineering, National Taiwan University: Taipei, Taiwan, 2008.

49. Zhou, K.-J.; Zhang, S.-R. Support vector machines based classification algorithm. Comput. Eng. Appl. 2009, 45, 159–162.

50. Kharuk, V.I.; Ranson, K.J.; Fedotova, E.V. Spatial pattern of Siberian silkmoth outbreak and taiga mortality. Scand. J. For. Res. 2007, 22, 531–536.

51. Wang, L.; Luo, Y.Q.; Zhang, X.L.; Ma, Q.Y.; Heliovaara, K.; Jia, L.Q. Application development of remote sensing technology in the assessment of forest pest disaster. World For. Res. 2008, 21, 37–43.

52. Bhanu, B.; Lin, Y. Genetic algorithm based feature selection for target detection in SAR images. Image Vis. Comput. 2003, 21, 591–608.

53. Jones, H.G.; Vaughan, R.A. Remote Sensing of Vegetation: Principles, Techniques, and Applications; Oxford University Press: Oxford, UK, 2010; p. 353.

54. Tian, Q.-J.; Min, X.-J. Advances in study on vegetation indices. Adv. Earth Sci. 1998, 13, 327–333.

55. Jełowicki, Ł.; Sosnowicz, K.; Ostrowski, W.; Osińska-Skotak, K.; Bakuła, K. Evaluation of Rapeseed Winter Crop Damage Using UAV-Based Multispectral Imagery. Remote Sens. 2020, 12, 2618.

56. Feng, W.; Wu, Y.; He, L.; Ren, X.; Wang, Y.; Hou, G.; Wang, Y.; Liu, W.; Guo, T. An optimized non-linear vegetation index for estimating leaf area index in winter wheat. Precis. Agric. 2019, 20, 1157–1176.

57. Sytar, O.; Bruckova, K.; Kovar, M.; Zivcak, M.; Hemmerich, L.; Brestic, M. Nondestructive detection and biochemical quantification of buckwheat leaves using visible (VIS) and near-infrared (NIR) hyperspectral reflectance imaging. J. Cent. Eur. Agric. 2017, 18, 864–878.

58. Song, B.; Park, K. Detection of Aquatic Plants Using Multispectral UAV Imagery and Vegetation Index. Remote Sens. 2020, 12, 387.

59. Burns, B.W.; Green, V.S.; Hashem, A.A.; Massey, J.H.; Shew, A.M.; Adviento-Borbe, M.A.; Milad, M. Determining nitrogen deficiencies for maize using various remote sensing indices. Precis. Agric. 2022, 23, 791–811.

60. Fan, Y.; Feng, H.; Jin, X.; Yue, J.; Liu, Y.; Li, Z.; Feng, Z.; Song, X.; Yang, G. Estimation of the nitrogen content of potato plants based on morphological parameters and visible light vegetation indices. Front. Plant Sci. 2022, 13, 1012070.

61. Park, T. Potential Lidar Height, Intensity, and Ratio Parameters for Plot Dominant Species Discrimination and Volume Estimation. Remote Sens. 2020, 12, 3266.

62. Wang, P.; Zhang, J. Calendar of Control of Major Forest Pests in Daxing’anling, Inner Mongolia; Northeast Forestry University Press: Harbin, China, 2017; p. 166.

63. Barnes, C.; Balzter, H.; Barrett, K.; Eddy, J.; Milner, S.; Suarez, J.C. Airborne laser scanning and tree crown fragmentation metrics for the assessment of Phytophthora ramorum infected larch forest stands. For. Ecol. Manag. 2017, 404, 294–305.

64. Voulodimos, A.; Doulamis, N.; Doulamis, A.; Protopapadakis, E. Deep Learning for Computer Vision: A Brief Review. Comput. Intell. Neurosci. 2018, 2018, 7068349.

65. Zhao, J.; Jin, Y.; Ye, H.; Huang, W.; Dong, Y.; Fan, L.; Ma, H.; Jiang, J. Remote sensing monitoring of areca yellow leaf disease based on UAV multispectral images. Trans. CSAE 2020, 36, 54–61.

66. Yang, G.; He, Y.; Feng, X.; Li, X.; Zhang, J.; Yu, Z. Methods and new research progress of remote sensing monitoring of crop disease and pest stress using unmanned aerial vehicle. Smart Agric. 2022, 4, 1–16.

67. Mäyrä, J.; Keski-Saari, S.; Kivinen, S.; Tanhuanpää, T.; Hurskainen, P.; Kullberg, P.; Poikolainen, L.; Viinikka, A.; Tuominen, S.; Kumpula, T.; et al. Tree species classification from airborne hyperspectral and LiDAR data using 3D convolutional neural networks. Remote Sens. Environ. 2021, 256, 112322.

68. Song, Y.; Chen, B.; Wang, Q.; Wang, G.; Wang, J.; Liu, H.; Zheng, D.; Li, J.; Chen, Z.; Sun, L. Monitoring of cotton Verticillium wilt based on unmanned aerial vehicle multispectral images. Cotton Sci. 2023, 35, 87–100.

69. Lin, X.; Sun, J.; Yang, L.; Liu, H.; Wang, T.; Zhao, H. Application of UAV Multispectral Remote Sensing to Monitor Damage Level of Leaf-feeding Insect Pests of Oak. J. Northeast. For. Univ. 2023, 51, 138–144.

70. Yang, L.; Huang, X.J.; Bao, Y.H.; Bao, G.; Tong, S.Q.; Sudubilig. Effects of UAV flight altitude on the accuracy of monitoring Dendrolimus superans pests by remote sensing. J. Nanjing For. Univ. (Nat. Sci. Ed.) 2023, 47, 13–22.

71. Zheng, Q.; Huang, W.; Cui, X.; Shi, Y.; Liu, L. New spectral index for detecting wheat yellow rust using Sentinel-2 multispectral imagery. Sensors 2018, 18, 868.

72. Bergmüller, K.O.; Vanderwel, M.C. Predicting Tree Mortality Using Spectral Indices Derived from Multispectral UAV Imagery. Remote Sens. 2022, 14, 2195.

| Component | Parameter | Value |
|---|---|---|
| System performance | Absolute precision | ±5 cm |
| System performance | Weight | 4.5 kg |
| Laser scanning unit | Laser safety class | Class 1 |
| Laser scanning unit | Maximum field of view | ±330° |
| Laser scanning unit | Angular resolution | 0.001° |
| Laser scanning unit | Data update rate | 200 Hz |
| Laser scanning unit | Maximum pulse repetition rate | 750 kHz |
| Laser scanning unit | Multi-echo capability | 15 echoes |
| Laser scanning unit | Maximum range | 1350 m @ 60% reflectivity |

| No. | Vegetation Index | Formula | Reference |
|---|---|---|---|
| 1 | Anthocyanin Reflectance Index (ARI) | 1/B2 − 1/B4 | Gitelson A A 2009 [29] |
| 2 | Green Modified Simple Ratio (GMSR) | (B5/B2 − 1)/(B5/B2 + 1)^0.5 | Knyazikhin Y 1998 [30] |
| 3 | Green Normalized Difference Vegetation Index (GNDVI) | (B5 − B2)/(B5 + B2) | Gitelson A A 1996 [31] |
| 4 | Green Ratio Vegetation Index (GRVI) | B5/B2 | Gitelson A A 2002 [32] |
| 5 | Modified Nonlinear Vegetation Index (MNLI) | 1.5(B5^0.5 − B3)/(B5^0.5 + B3 + 0.5) | Peng G 2003 [33] |
| 6 | Modified Simple Ratio (MSR) | (B5/B3 − 1)/(B5/B3 + 1)^0.5 | Slater P N 1982 [34] |
| 7 | Modified Simple Ratio–Red Edge (MSRreg) | (B5/B4 − 1)/(B5/B4 + 1)^0.5 | Chen J M 1996 [35] |
| 8 | Normalized Difference Green Index (NDGI) | (B2 − B3)/(B2 + B3) | Mirik M 2012 [36] |
| 9 | Normalized Difference Salinity Index* (NDSI*) | (B3 − B4)/(B3 + B4) | Richardson A D 2002 [37] |
| 10 | Normalized Difference Salinity Index–Red Edge (NDSIreg) | (B4 − B5)/(B4 + B5) | Rondeaux C 1996 [38] |
| 11 | Normalized Difference Vegetation Index (NDVI) | (B5 − B3)/(B5 + B3) | Rouse J W 1974 [39] |
| 12 | Normalized Difference Vegetation Index* (NDVI*) | (B4 − B3)/(B4 + B3) | Gitelson A 1994 [40] |
| 13 | Normalized Difference Vegetation Index–Red Edge (NDVIreg) | (B5 − B4)/(B5 + B4) | Ortiz S M 2013 [41] |
| 14 | Optimized Soil-Adjusted Vegetation Index (OSAVI) | (B5 − B3)/(B5 + B3 + 0.16) | Rondeaux C 1996 [38] |
| 15 | Optimized Soil-Adjusted Vegetation Index–Red Edge (OSAVIreg) | (B5 − B4)/(B5 + B4 + 0.16) | Rondeaux C 1996 [38] |
| 16 | Ratio Vegetation Index (RVI) | B5/B3 | Jordan C F 1969 [42] |
| 17 | Ratio Vegetation Index* (RVI*) | B4/B3 | Major D J 1990 [43] |
| 18 | Ratio Vegetation Index–Red Edge (RVIreg) | B5/B4 | Yang N 2020 [44] |
| 19 | Renormalized Difference Vegetation Index–Red Edge (RDVIreg) | (B5 − B4)/(B5 + B4)^0.5 | Broge N H 2001 [45] |
| 20 | Wide Dynamic Range Vegetation Index (WDRVI) | (0.1B5 − B3)/(0.1B5 + B3) | Gitelson A A 2004 [46] |
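The formulas in the vegetation index table can be evaluated per pixel or per crown once band reflectances are available. The sketch below computes a subset of them in plain Python. It assumes reflectances in the range 0 to 1 and reads B2, B3, B4 and B5 as green, red, red edge and near-infrared, which is what the NDVI and GNDVI rows imply; the function name `vegetation_indices` and the example reflectances are hypothetical, and this is not the authors' processing code.

```python
import math


def vegetation_indices(b2: float, b3: float, b4: float, b5: float) -> dict[str, float]:
    """Evaluate a subset of the tabulated vegetation indices for one pixel or crown.

    Assumed band mapping (inferred from the NDVI and GNDVI rows):
    b2 = green, b3 = red, b4 = red edge, b5 = near-infrared reflectance.
    """

    def nd(a: float, b: float) -> float:
        # Normalized difference (a - b) / (a + b), shared by NDVI, GNDVI, NDGI, NDVIreg
        return (a - b) / (a + b)

    def msr(ratio: float) -> float:
        # Modified simple ratio (r - 1) / (r + 1)^0.5, shared by MSR and MSRreg
        return (ratio - 1) / math.sqrt(ratio + 1)

    return {
        "ARI": 1 / b2 - 1 / b4,
        "GNDVI": nd(b5, b2),
        "MSR": msr(b5 / b3),
        "MSRreg": msr(b5 / b4),
        "NDGI": nd(b2, b3),
        "NDVI": nd(b5, b3),
        "NDVIreg": nd(b5, b4),
        "OSAVI": (b5 - b3) / (b5 + b3 + 0.16),
        "RVI": b5 / b3,
        "WDRVI": (0.1 * b5 - b3) / (0.1 * b5 + b3),
    }


if __name__ == "__main__":
    # Illustrative reflectances only, not values measured in the study
    for name, value in vegetation_indices(0.08, 0.04, 0.20, 0.40).items():
        print(f"{name}: {value:.4f}")
```

Several indices are undefined where a denominator reaches zero (for example RVI when the red reflectance is 0), so shadowed or masked pixels would need to be excluded before this step.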

| Indicator | RFVI | SVMVI | RFLIDAR | SVMLIDAR | RFVI+LIDAR | SVMVI+LIDAR |
|---|---|---|---|---|---|---|
| OA | 0.7746 | 0.9014 | 0.4510 | 0.6200 | 0.7606 | 0.9577 |
| Kappa | 0.7150 | 0.8610 | 0.3748 | 0.5509 | 0.7012 | 0.9384 |
| Rmacro | 0.7772 | 0.9161 | 0.4590 | 0.6284 | 0.7658 | 0.9595 |
| F1macro | 0.7723 | 0.8947 | 0.4507 | 0.6235 | 0.7601 | 0.9594 |
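All four indicators in the model comparison table can be derived from a confusion matrix of reference versus predicted severity classes. The plain-Python sketch below assumes rows are reference classes and columns are predicted classes, and uses the common macro definitions (unweighted mean over classes of per-class recall and F1); the exact averaging used in the study is not stated here, and the function name `accuracy_metrics` and the example matrix are hypothetical.

```python
from typing import Sequence


def accuracy_metrics(cm: Sequence[Sequence[int]]) -> dict[str, float]:
    """OA, Cohen's kappa, macro recall and macro F1 from a square confusion matrix.

    Assumes cm[i][j] counts samples of reference class i predicted as class j.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    diag = sum(cm[i][i] for i in range(k))
    row_sums = [sum(cm[i]) for i in range(k)]                       # reference totals
    col_sums = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # predicted totals

    oa = diag / total
    # Expected chance agreement from the marginal totals
    pe = sum(row_sums[i] * col_sums[i] for i in range(k)) / total**2
    kappa = (oa - pe) / (1 - pe)

    recalls, f1s = [], []
    for i in range(k):
        recall = cm[i][i] / row_sums[i] if row_sums[i] else 0.0
        precision = cm[i][i] / col_sums[i] if col_sums[i] else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        recalls.append(recall)
        f1s.append(f1)

    return {
        "OA": oa,
        "Kappa": kappa,
        "Rmacro": sum(recalls) / k,
        "F1macro": sum(f1s) / k,
    }


if __name__ == "__main__":
    # Hypothetical 3-class confusion matrix, not study data
    example = [[10, 0, 0],
               [1, 8, 1],
               [0, 2, 8]]
    for name, value in accuracy_metrics(example).items():
        print(f"{name}: {value:.4f}")
```

For the example matrix, OA = 26/30 ≈ 0.8667 and Kappa = 0.8. The same OA row also explains the gains quoted in Conclusion (2): 0.9577 − 0.9014 = 0.0563 and 0.9577 − 0.6200 = 0.3377, i.e. differences of 5.63 and 33.77 percentage points.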


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

He-Ya, S.; Huang, X.; Zhou, D.; Zhang, J.; Bao, G.; Tong, S.; Bao, Y.; Ganbat, D.; Tsagaantsooj, N.; Altanchimeg, D.; et al. Identification of Larch Caterpillar Infestation Severity Based on Unmanned Aerial Vehicle Multispectral and LiDAR Features. Forests 2024, 15, 191. https://doi.org/10.3390/f15010191

He-Ya S, Huang X, Zhou D, Zhang J, Bao G, Tong S, Bao Y, Ganbat D, Tsagaantsooj N, Altanchimeg D, et al. Identification of Larch Caterpillar Infestation Severity Based on Unmanned Aerial Vehicle Multispectral and LiDAR Features. Forests. 2024; 15(1):191. https://doi.org/10.3390/f15010191

Chicago/Turabian Style: He-Ya, Sa, Xiaojun Huang, Debao Zhou, Junsheng Zhang, Gang Bao, Siqin Tong, Yuhai Bao, Dashzebeg Ganbat, Nanzad Tsagaantsooj, Dorjsuren Altanchimeg, et al. 2024. "Identification of Larch Caterpillar Infestation Severity Based on Unmanned Aerial Vehicle Multispectral and LiDAR Features" Forests 15, no. 1: 191. https://doi.org/10.3390/f15010191

APA Style: He-Ya, S., Huang, X., Zhou, D., Zhang, J., Bao, G., Tong, S., Bao, Y., Ganbat, D., Tsagaantsooj, N., Altanchimeg, D., Enkhnasan, D., Ariunaa, M., & Guo, J. (2024). Identification of Larch Caterpillar Infestation Severity Based on Unmanned Aerial Vehicle Multispectral and LiDAR Features. Forests, 15(1), 191. https://doi.org/10.3390/f15010191