A Marker-Controlled Watershed Algorithm for the Intelligent Picking of Long Jujubes in Trees

Abstract

1. Introduction

- An algorithm that reduces the influence of illumination is proposed. For images taken in a natural environment, a secondary mapping is applied to reduce the effect of illumination on the image.

- A marker-controlled watershed algorithm is proposed that focuses on the selection of the marker image and the mask image to suppress over-segmentation. An energy-driven approach is introduced to select an appropriate mask image, obtain stable and effective gradient information, and reduce the impact of environmental change.

- A target segmentation algorithm for intelligent picking is provided. It offers a theoretical basis for the vision systems of intelligent picking robots and supports the development of the forest and fruit economy.
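The marker-controlled flooding named in the second contribution can be illustrated with a minimal priority-flood sketch. This is a generic textbook form of marker-controlled watershed, not the authors' implementation: the function name `marker_watershed`, the 4-neighbour connectivity, and the toy `gradient` and `markers` inputs are assumptions for illustration, and the energy-driven mask selection described above is not reproduced.

```python
import heapq


def marker_watershed(gradient, markers):
    """Flood a gradient (mask) image outward from labelled marker pixels.

    gradient: 2D list of floats; low values are flooded first.
    markers:  2D list of ints; 0 means unlabelled, >0 is a seed label.
    Returns a 2D list in which every reachable pixel carries a seed label.
    """
    rows, cols = len(gradient), len(gradient[0])
    labels = [row[:] for row in markers]
    heap = []
    order = 0  # insertion counter: ties at equal height are served first-in, first-out

    # Seed the queue with every marker pixel.
    for r in range(rows):
        for c in range(cols):
            if labels[r][c] != 0:
                heapq.heappush(heap, (gradient[r][c], order, r, c))
                order += 1

    # Repeatedly grow from the lowest pixel on the current flood front.
    while heap:
        _, _, r, c = heapq.heappop(heap)
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and labels[nr][nc] == 0:
                labels[nr][nc] = labels[r][c]
                heapq.heappush(heap, (gradient[nr][nc], order, nr, nc))
                order += 1
    return labels


# Toy example: two basins separated by a high-gradient ridge in the middle.
toy_gradient = [[0, 1, 2, 9, 2, 1, 0]]
toy_markers = [[1, 0, 0, 0, 0, 0, 2]]
toy_labels = marker_watershed(toy_gradient, toy_markers)
```

Because flooding starts only from the supplied markers, the number of output regions equals the number of marker labels, which is why marker selection controls over-segmentation.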

2. Materials and Methods

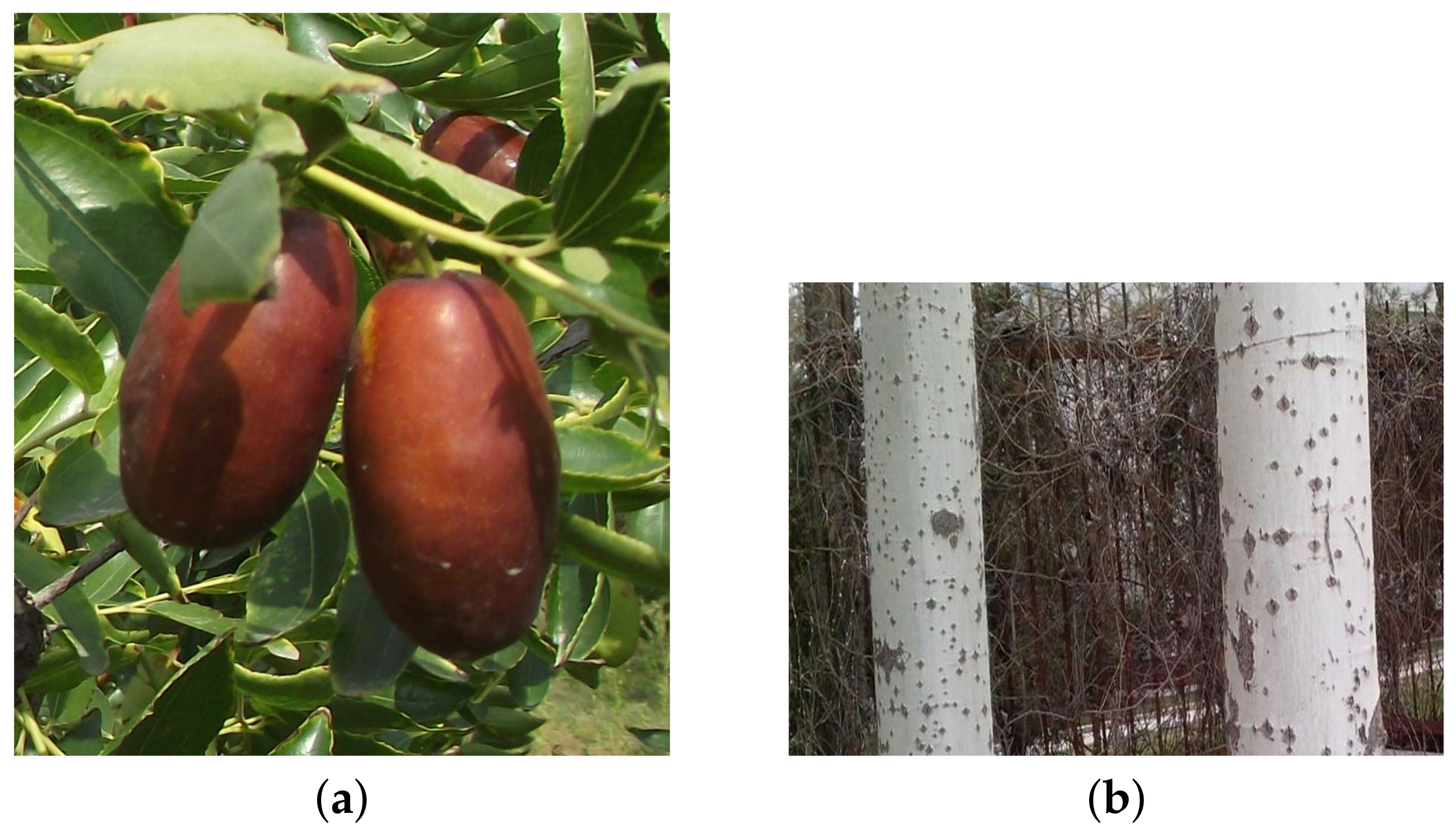

2.1. Experimental Materials

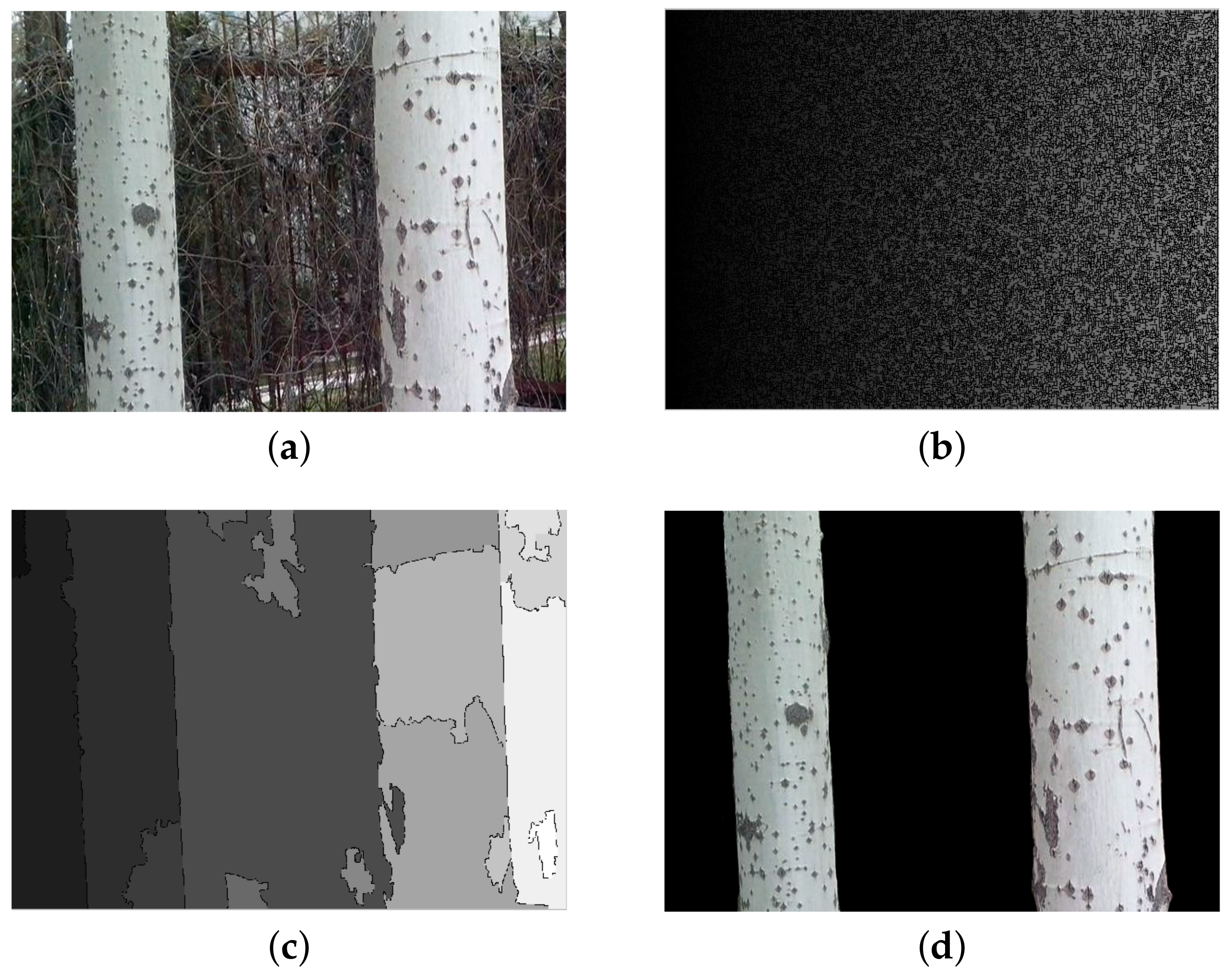

2.2. Image Pre-Processing

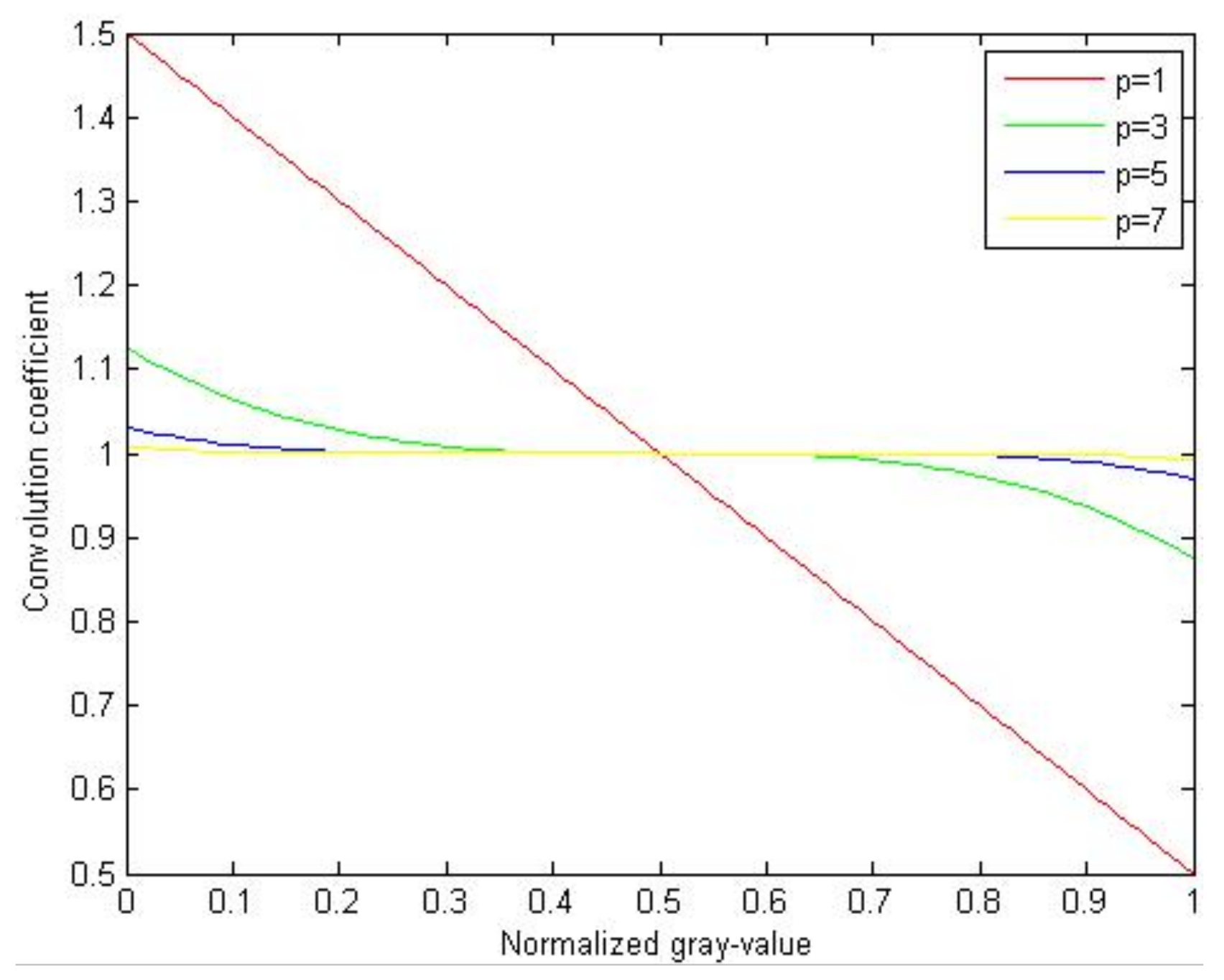

2.2.1. Convolution Coefficient Function

- the brightness value is lower than 0.5, which requires a mapping coefficient greater than 1;

- the brightness value is greater than 0.5, which requires a mapping coefficient less than 1;

- the brightness value is close to 0.5, which requires a mapping coefficient close to 1. In this situation, the lighting has no influence on the brightness value.
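A minimal sketch of a coefficient function with these properties follows, assuming the intent is to brighten dark pixels and attenuate bright ones around the mid-level 0.5. The exponential form, the function names `mapping_coefficient` and `remap`, and the `strength` parameter are hypothetical; the function and parameters actually used in this work are not reproduced here.

```python
import math


def mapping_coefficient(brightness, strength=1.0):
    """Hypothetical coefficient for a brightness value in [0, 1].

    Greater than 1 below 0.5, less than 1 above 0.5, exactly 1 at 0.5.
    `strength` (an assumed parameter) controls how strongly values are
    pulled toward the mid-level.
    """
    return math.exp(strength * (0.5 - brightness))


def remap(brightness, strength=1.0):
    """Apply the coefficient and clip the result back to [0, 1]."""
    mapped = brightness * mapping_coefficient(brightness, strength)
    return min(1.0, max(0.0, mapped))


dark, mid, bright = 0.2, 0.5, 0.8
coefficients = [mapping_coefficient(v) for v in (dark, mid, bright)]
remapped = [remap(v) for v in (dark, mid, bright)]
```

Any smooth, monotonically decreasing function that equals 1 at 0.5 would satisfy the three conditions equally well; the exponential is used only because it is compact.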

2.2.2. Determination of Parameters

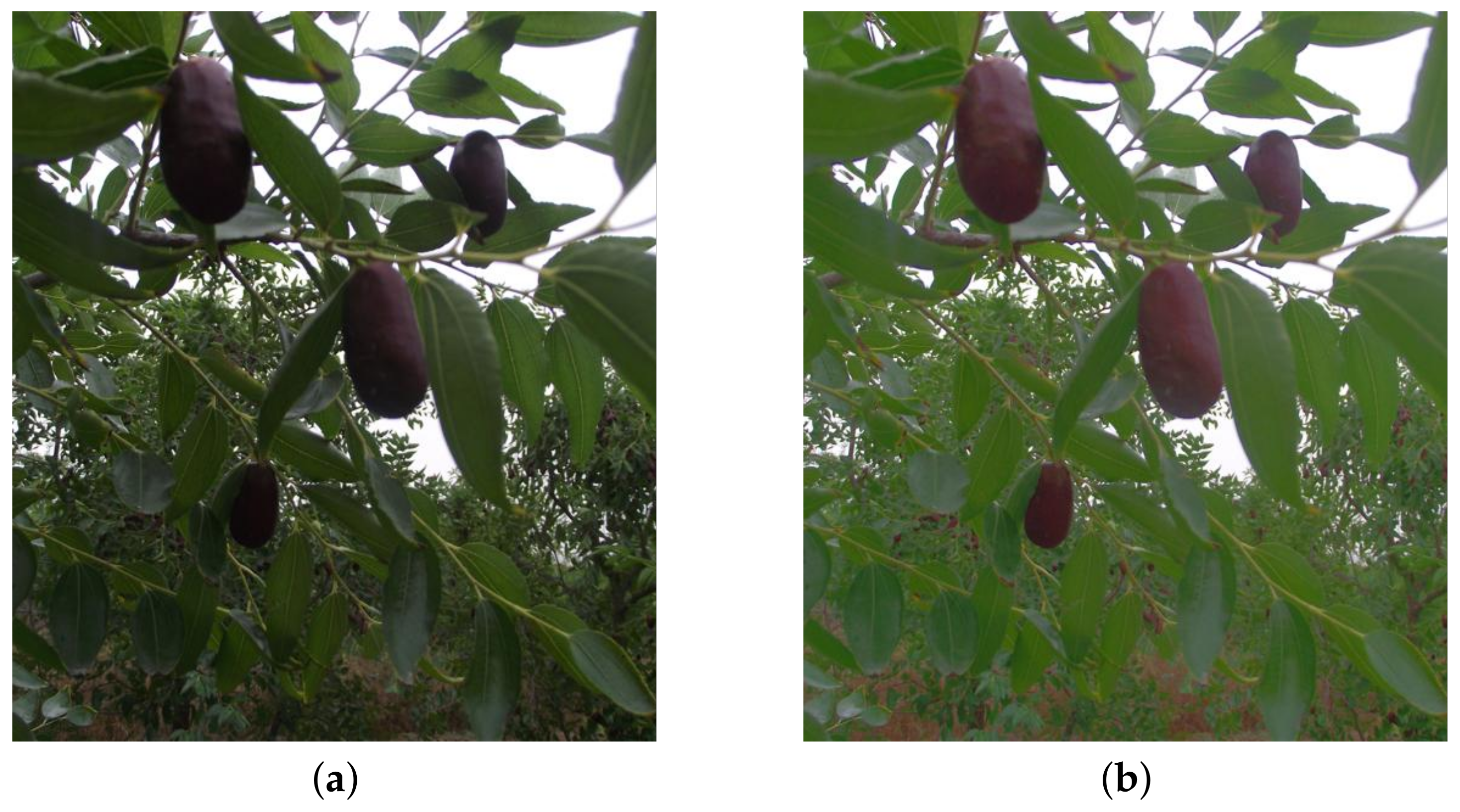

2.2.3. Mapping Result

2.3. Experimental Method

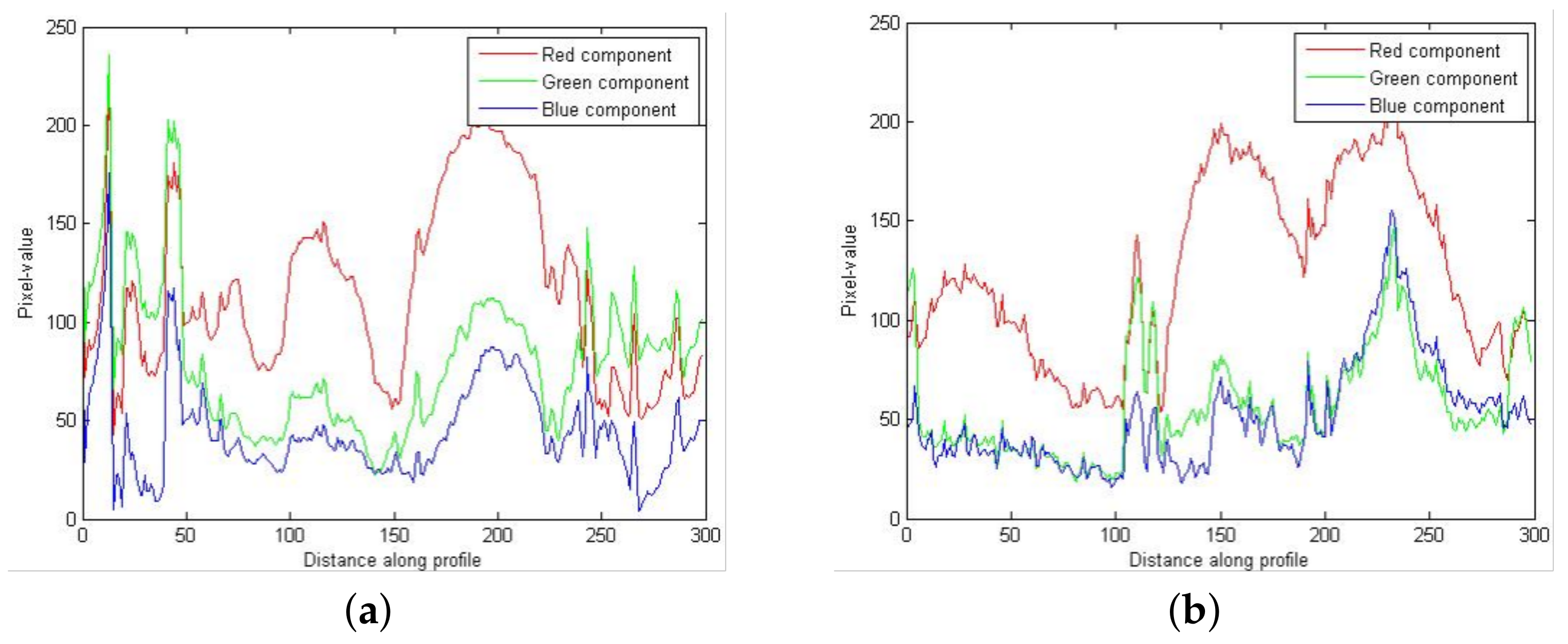

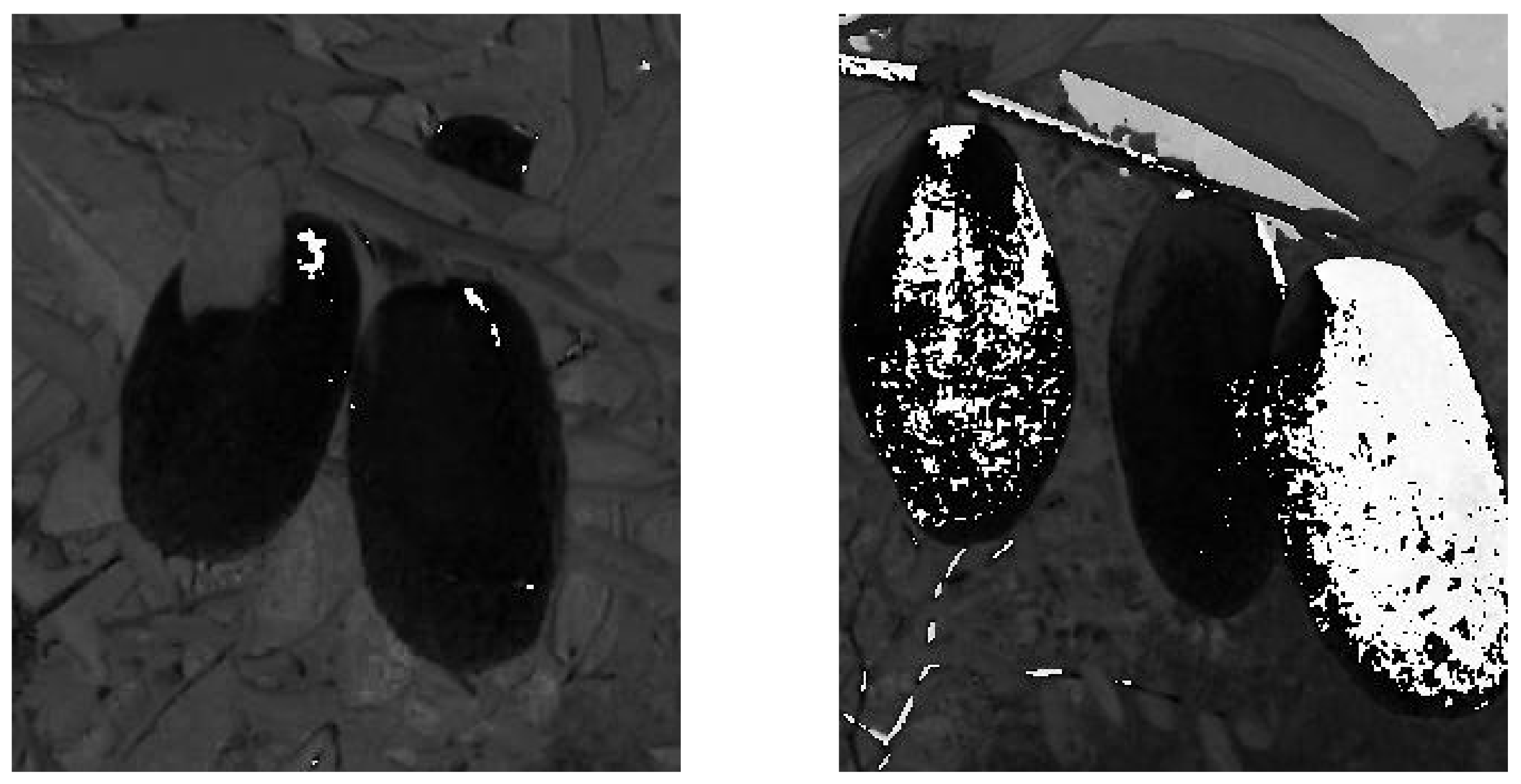

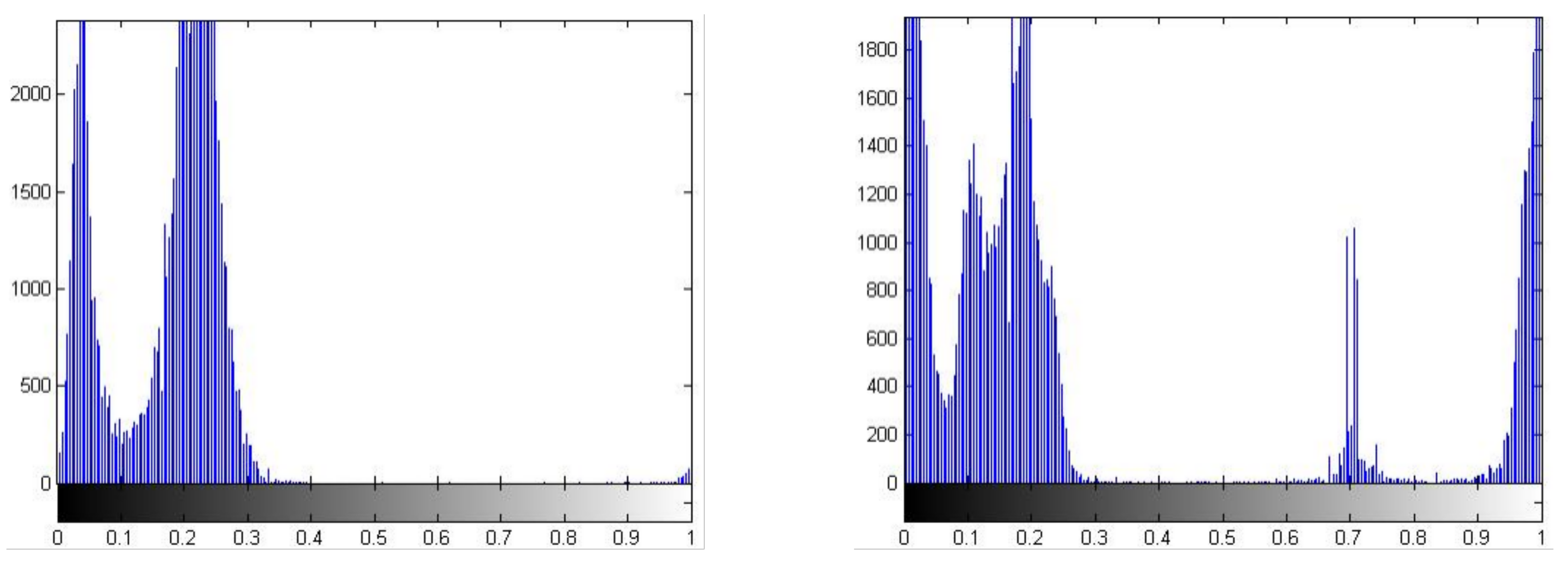

2.3.1. Characteristic Analysis

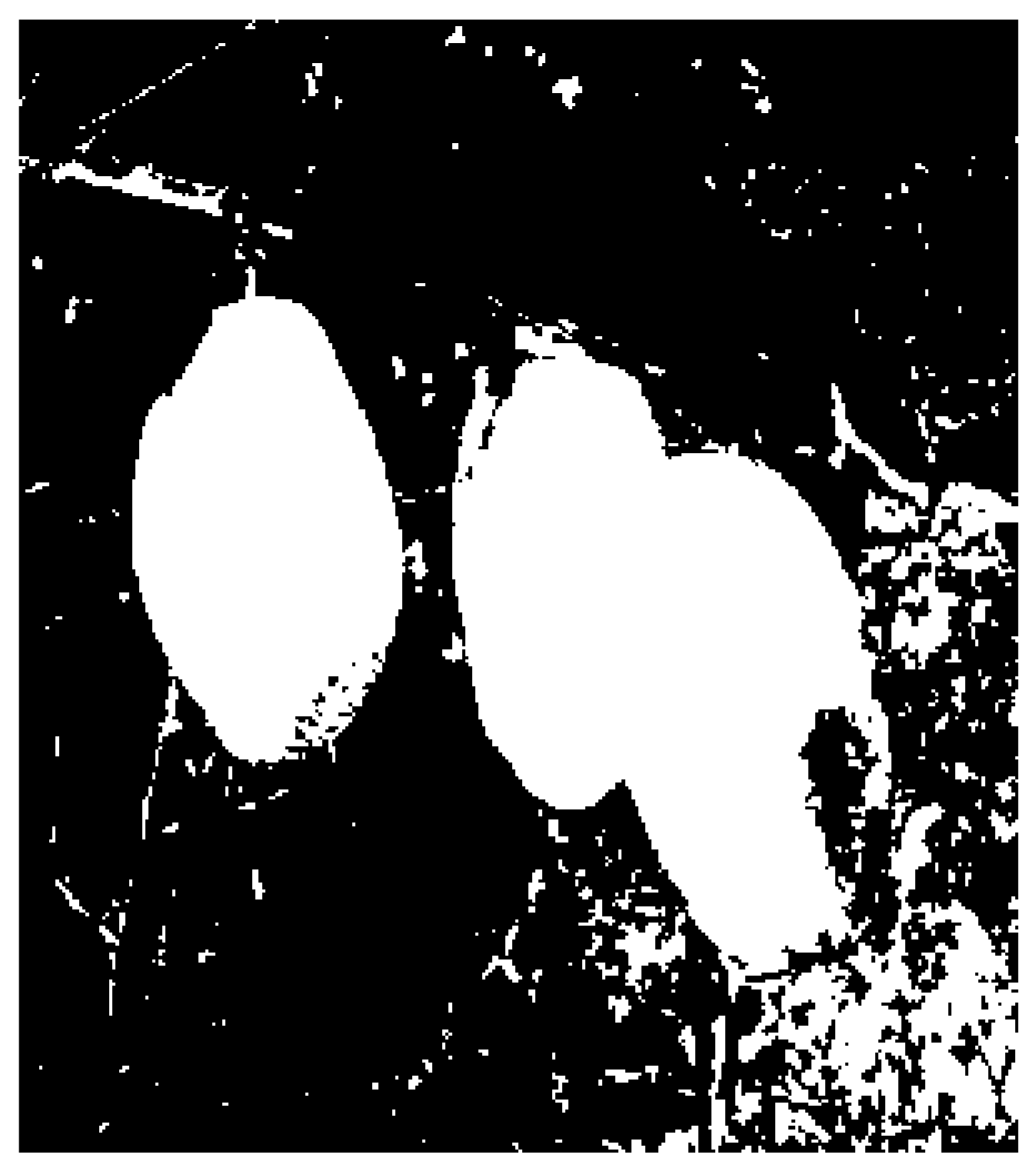

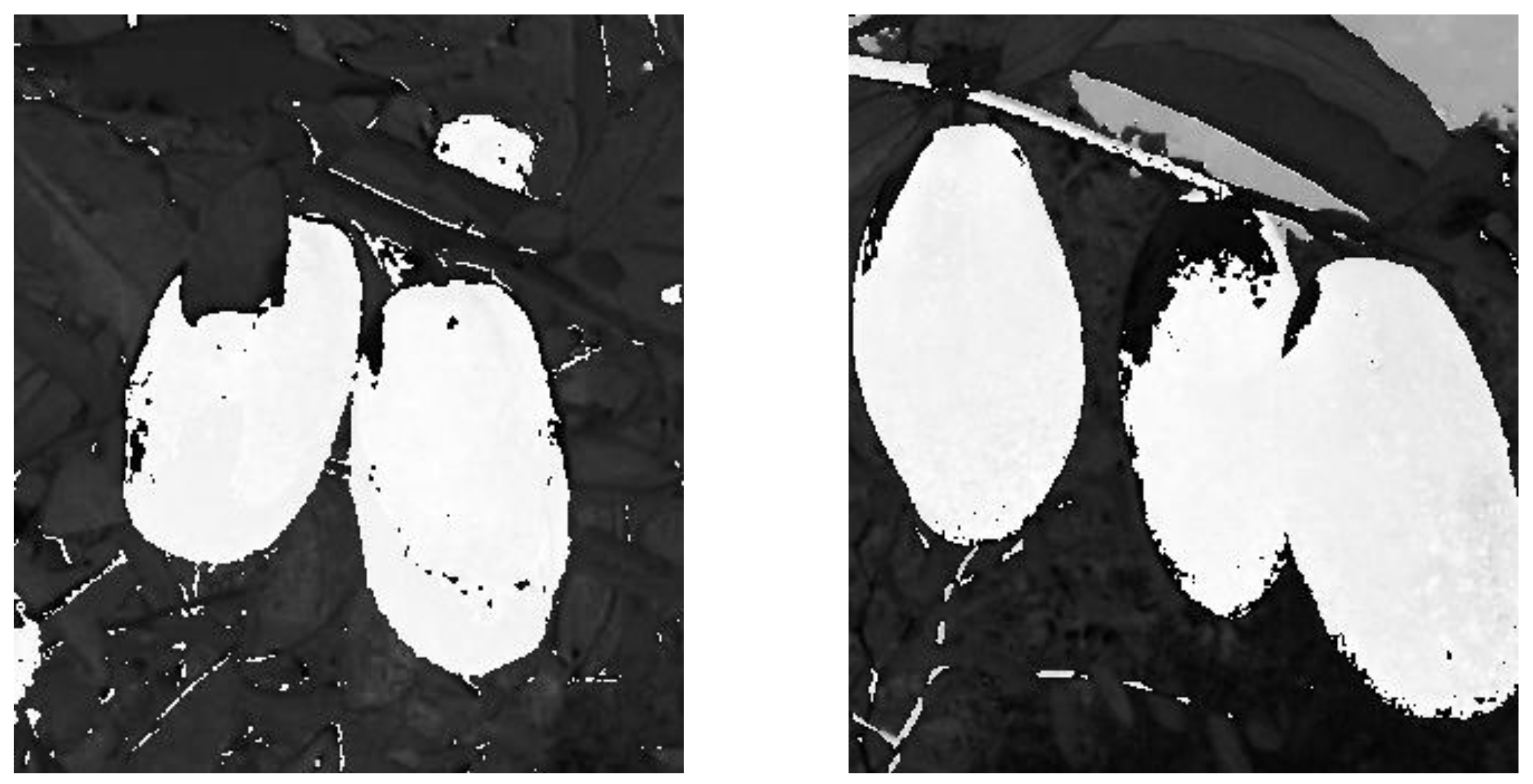

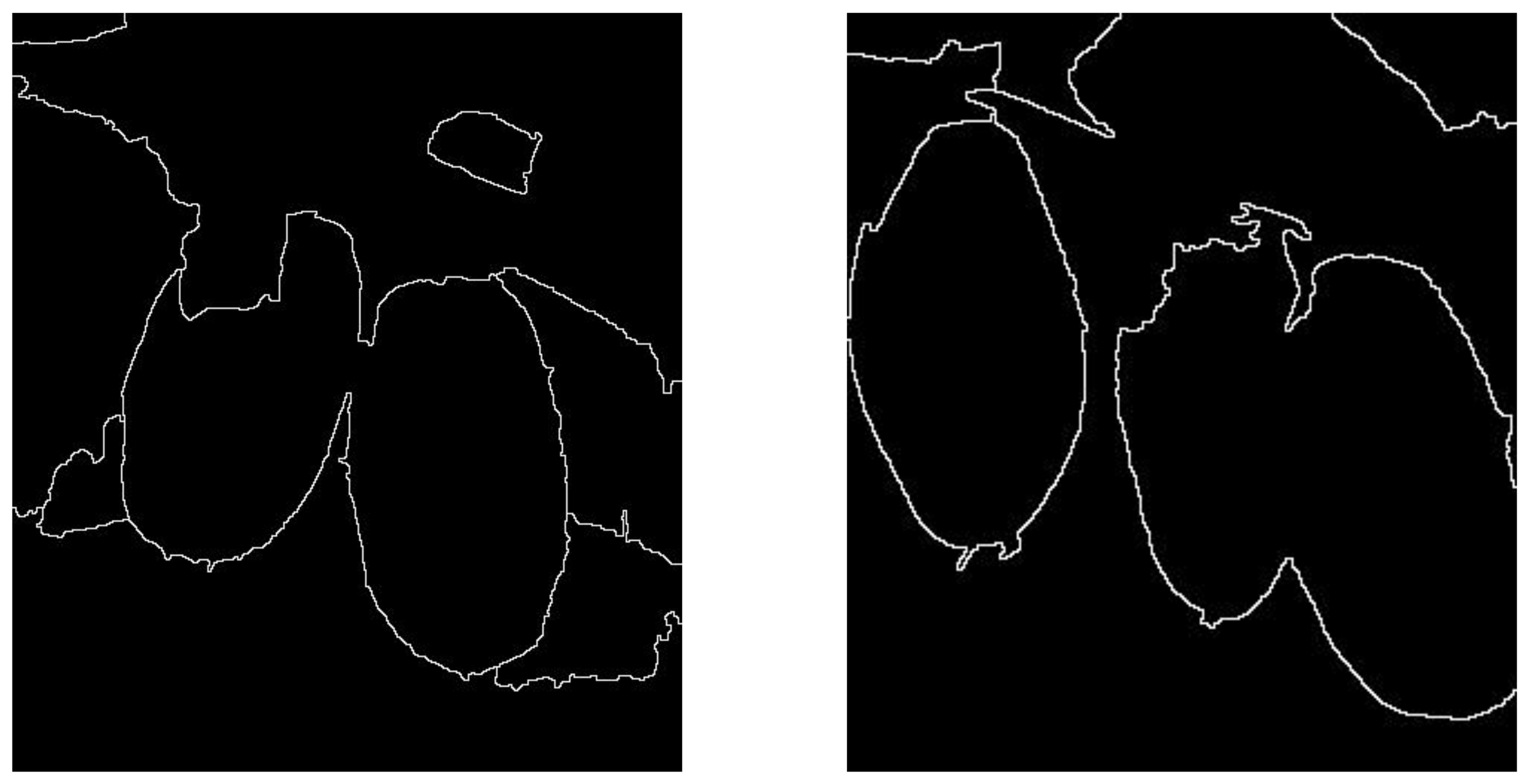

2.3.2. The Marker Image

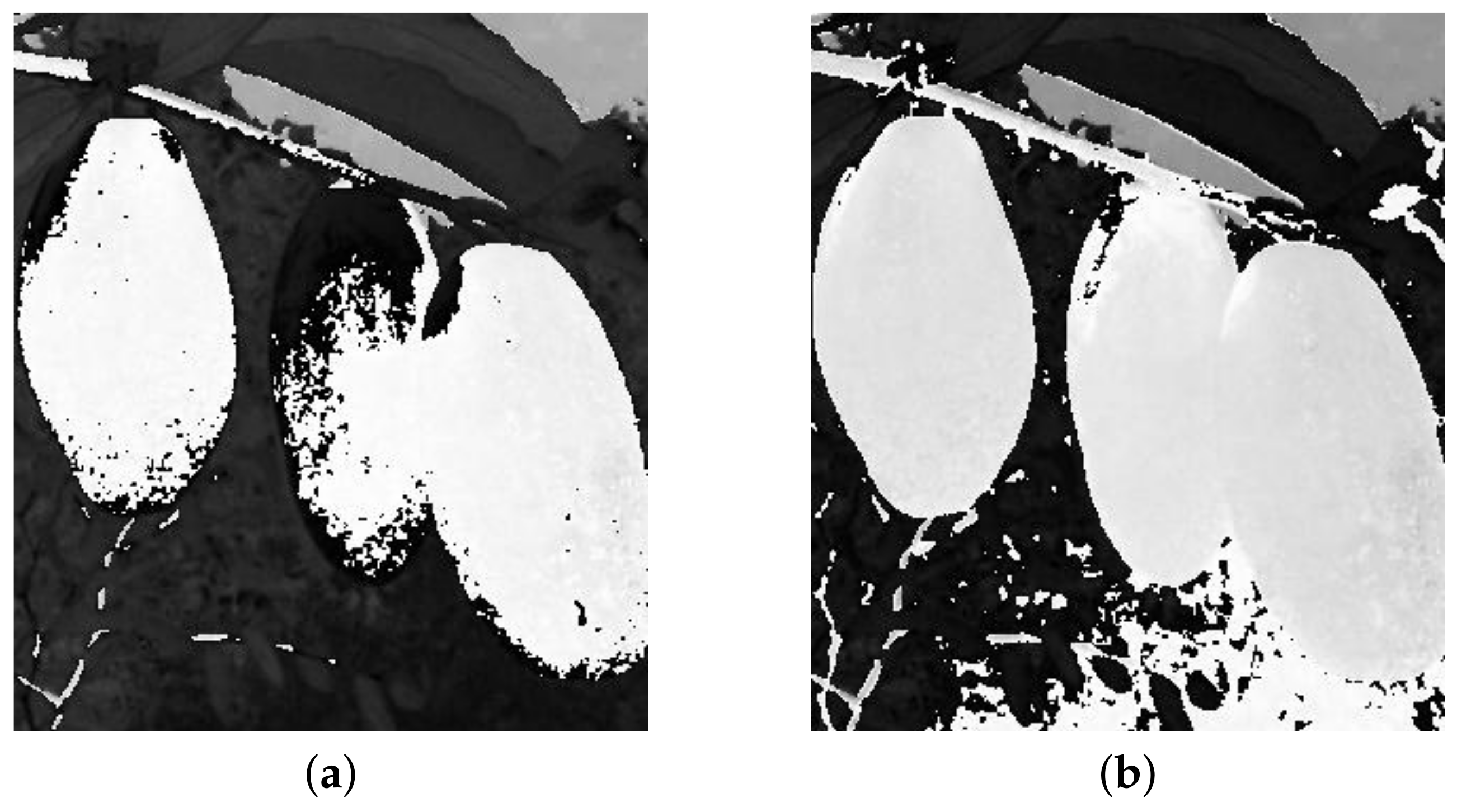

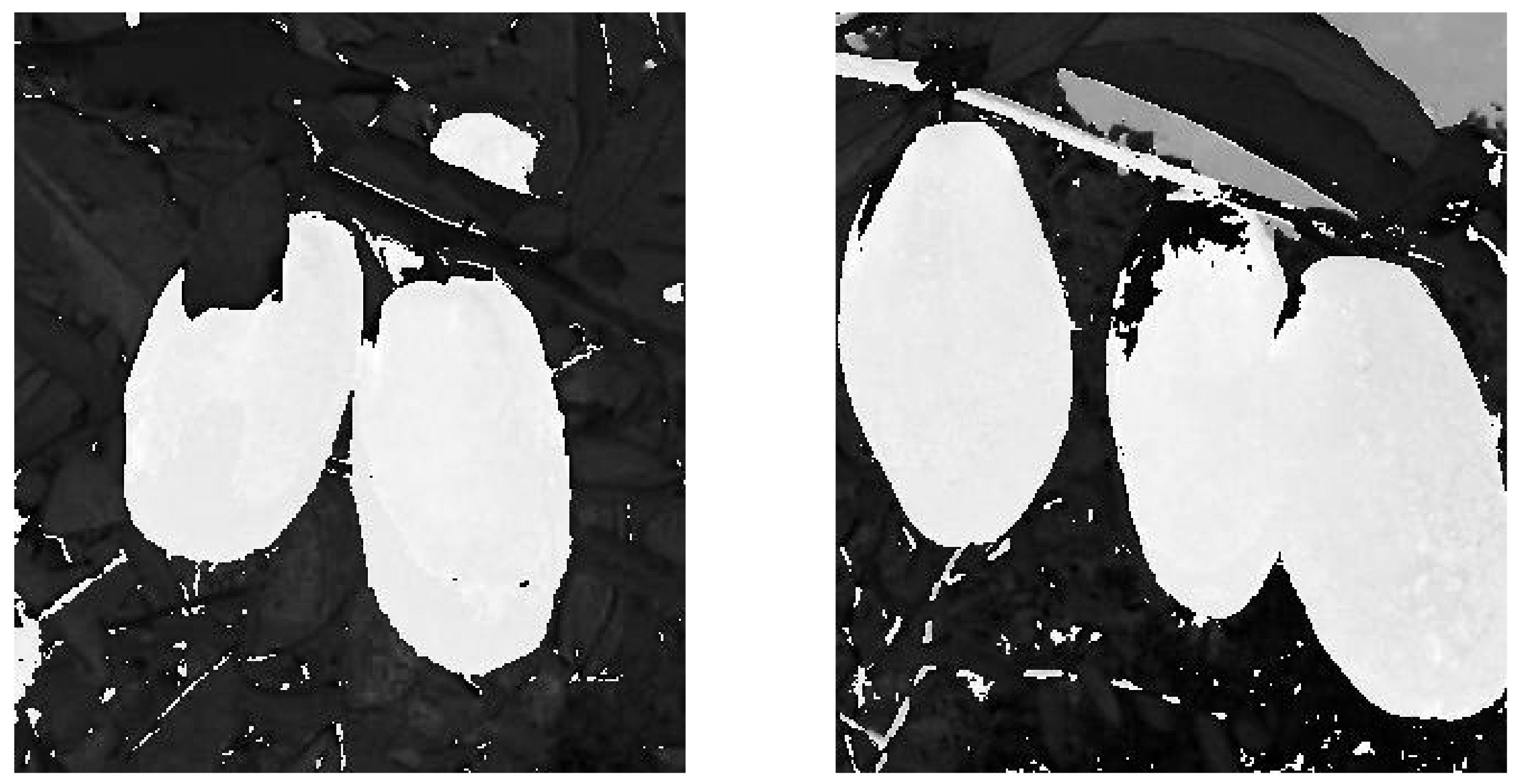

2.3.3. The Mask Image

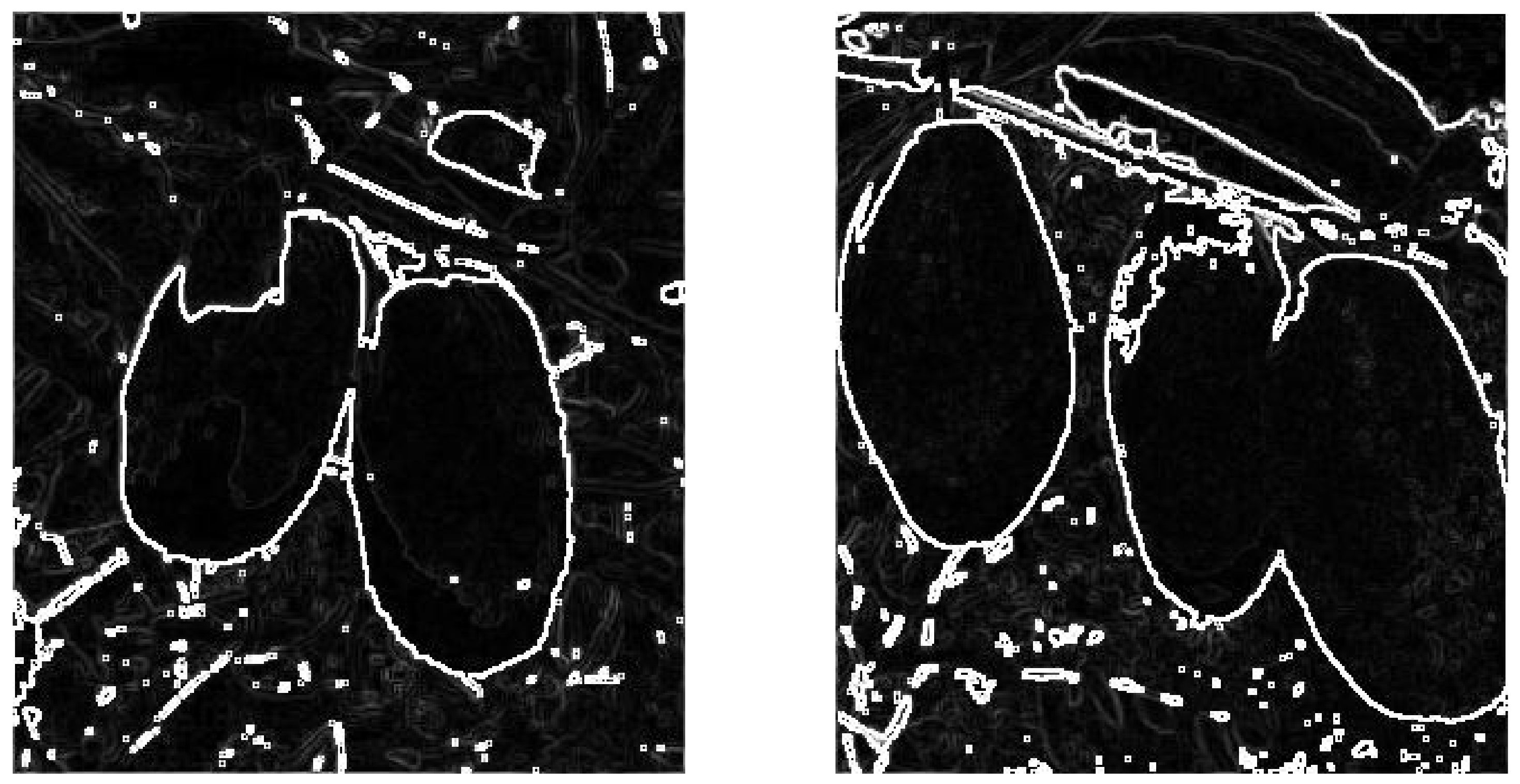

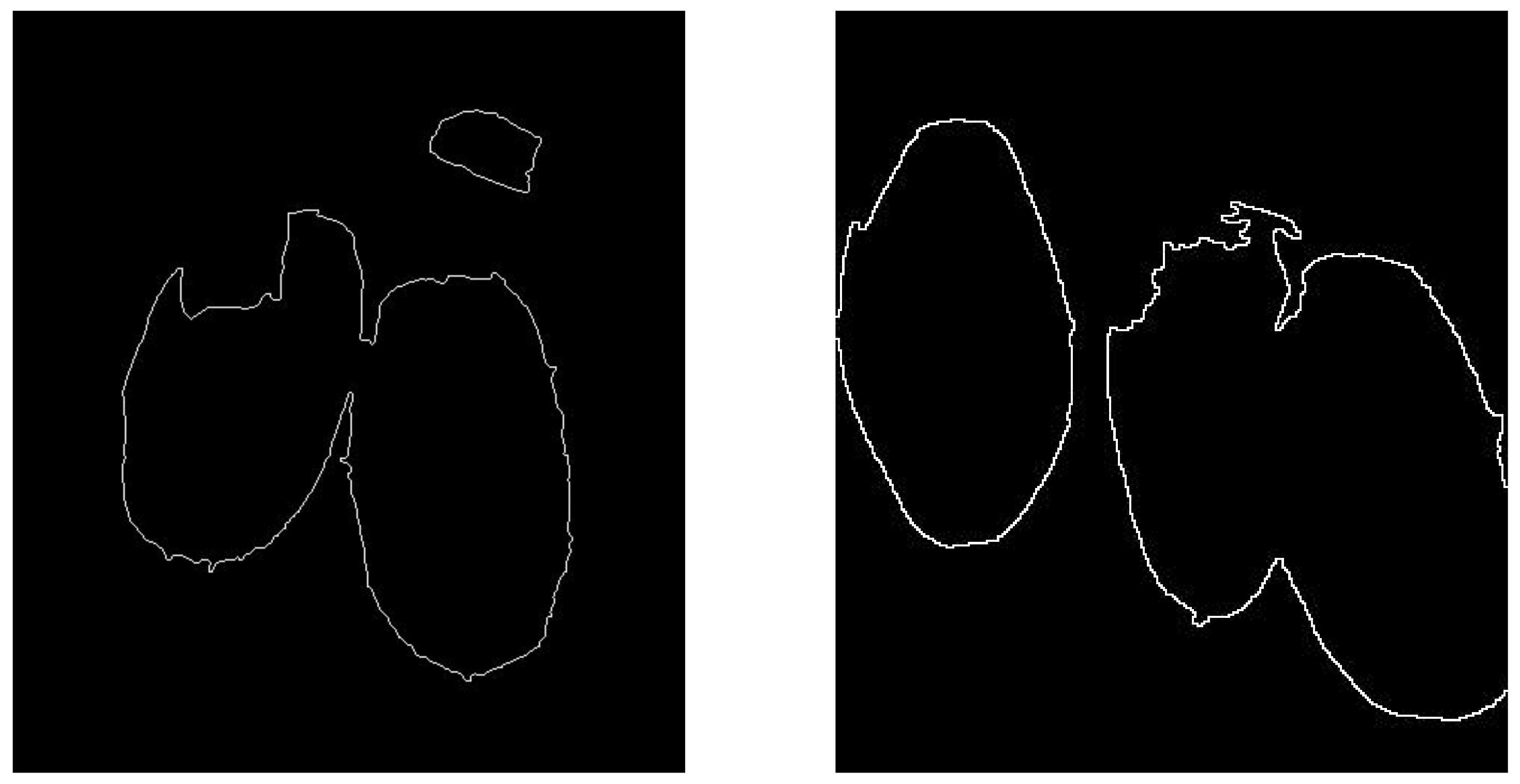

2.3.4. Segmentation by the Marker-Controlled Watershed Algorithm

3. Results

3.1. Segmentation Results of the Long Jujubes

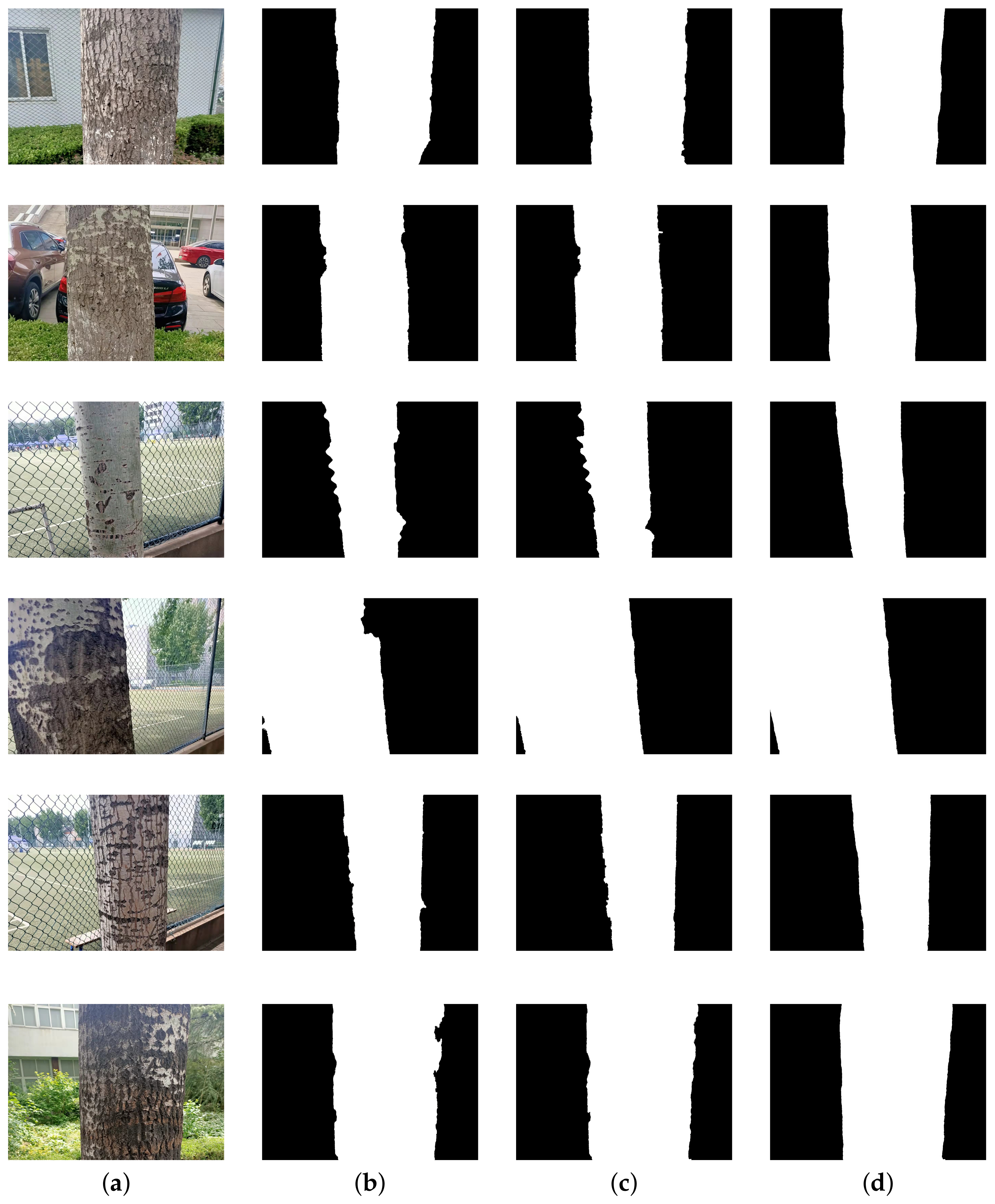

3.2. Segmentation Results of Trees

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wang, L.; Liu, Q.Y. The methods summary of optimal segmentation scale selection in high-resolution remote sensing images multi-scale segmentation. Geomat. Spat. Inf. Technol. 2015, 38, 166–169.

- Sezgin, M.; Sankur, B. Survey over image thresholding techniques and quantitative performance evaluation. J. Electron. Imaging 2004, 13, 146–165.

- Liao, P.S.; Chen, T.S.; Chuang, P.C. A Fast Algorithm for Multilevel Thresholding. J. Inf. Sci. Eng. 2001, 17, 713–727.

- Wang, Y.T.; Dai, Y.P.; Xue, J.R.; Liu, B.H.; Ma, C.H.; Gao, Y.Y. Research of segmentation method on color image of Lingwu long jujubes based on the maximum entropy. Eurasip J. Image Video Process. 2017, 34, 34.

- Bao, S.; Chuang, C.S. Feature Sensitive Label Fusion With Random Walker for Atlas-Based Image Segmentation. IEEE Trans. Image Process. 2017, 26, 2797–2810.

- Zhao, Q.H.; Wang, Y.; Li, Y. Voronoi tessellation-based regionalised segmentation for colour texture image. IET Comput. Vis. 2016, 10, 613–622.

- Chen, Y.J.; Li, J.; Zhang, H. Non-local-based spatially constrained hierarchical fuzzy C-means method for brain magnetic resonance imaging segmentation. IET Image Process. 2016, 10, 865–876.

- Pereyra, M.; McLaughlin, S. Fast Unsupervised Bayesian Image Segmentation With Adaptive Spatial Regularisation. IEEE Trans. Image Process. 2017, 26, 2577–2587.

- Caldairou, B.; Rousseau, F.; Passat, N. A non-local fuzzy segmentation method: Application to brain MRI. Pattern Recognit. 2011, 44, 1916–1927.

- Peng, H.X.; Zou, X.J.; Chen, L.J. Fast Recognition of Multiple Color Target of Litchi Image in Field Environment Based on Double Otsu Algorithm. Trans. Chin. Soc. Agric. Eng. 2014, 45, 61–68.

- Chen, Z.B.; Qiu, T.S.; Ruan, S.U. FCM and Level Set Based Segmentation Method for Brain MR Images. Acta Electron. Sin. 2008, 36, 1733–1736.

- Du, Z.W.; Wu, T.; Peng, X. A Novel Recognition Approach for Mobile Image Fusing Inertial Sensors. Acta Electron. Sin. 2015, 41, 1394–1404.

- Kang, X.D.; Wang, H.; Guo, J.; Yu, W.Y. Unsupervised deep learning method for color image recognition. J. Comput. Appl. 2015, 35, 2636–2639.

- Zhao, X.M.; Li, Y.; Zhao, Q.H. Self-adaptive FLICM algorithm for gray image segmentation with unknown number of clusters. Control Decis. 2017, 32, 262–268.

- Shen, X.J.; Zhang, H.; Chen, H.P. Fast recursive multi-thresholding algorithm. J. Jilin Univ. (Eng. Technol. Ed.) 2016, 46, 528–534.

- Wang, Y.T.; Li, W.B.; Pang, S.; Kan, J.M. Segmentation method of lingwu long jujubes based on L*a*b color space. Telkomnika-Indones. J. Electr. Eng. 2013, 11, 5344–5351.

- Wang, Y.T.; Kan, J.M.; Li, W.B. Image segmentation and maturity recognition algorithm based on color features of Lingwu long jujube. Adv. J. Food Sci. Technol. 2013, 5, 1625–1631.

- Guo, J.B.; Zhou, J.L.; Han, H.Z. Shadow suppression method for background subtraction. J. Liaoning Tech. Univ. 2005, 24, 104–106.

- Hu, Y.; Zhao, X. Shadow Detection and Removal in Single Outdoor Nature Scenes. J. Nanjing Univ. Sci. Technol. (Nat. Sci.) 2011, 35, 1–5.

- Min, H.Q.; Lv, J.M.; Luo, R.H. Adaptive Shadow Detection Based on GMM and MRF. J. South China Univ. Technol. (Nat. Sci. Ed.) 2011, 39, 115–120.

- Song, H.B.; Zhang, W.Y.; Zhang, X.X.; Zou, R.Z. Shadow removal method of apples based on fuzzy set theory. Trans. Chin. Soc. Agric. Eng. 2014, 31, 135–141.

- Xu, L.M.; Lv, J.D. Bayberry image segmentation based on homomorphic filtering and K-means clustering algorithm. Trans. Chin. Soc. Agric. Eng. 2015, 31, 202–208.

- Xiong, J.T.; Zou, X.J.; Wang, H.J. Recognition of ripe litchi in different illumination condition based on Retinex image enhancement. Trans. Chin. Soc. Agric. Eng. 2013, 29, 170–178.

- Qian, J.P.; Yang, X.T.; Wu, X.M. Mature apple recognition based on hybrid color space in natural scene. Trans. Chin. Soc. Agric. Eng. 2012, 28, 137–142.

- Dai, Y.P.; Wang, Y.T.; Xue, J.R.; Gao, Y.Y.; Liu, B.H.; Ma, C.H. Research on the Method of Segmentation On Color Image of Standing Trees. Comput. Appl. Softw. 2017, 34, 224–227.

- Dai, Y.P.; Wang, Y.T.; Xue, J.R.; Gao, Y.Y.; Liu, B.H.; Ma, C.H. Research of Segmentation Method on Image of Lingwu Long Jujubes Based on a New Extraction Model of Hue. IEEE Sens. J. 2017, 17, 6029–6036.

| No. | Statistical Region | Minimum | Maximum |
|---|---|---|---|
| 1 | target | >0.05 (98.65%) | 0.4235 |
|  | background | 0 | 0.2039 |
| 2 | target | >0.05 (97.45%) | 0.5176 |
|  | background | 0 | 0.3255 |
| 3 | target | >0.05 (98.78%) | 0.4235 |
|  | background | 0 | 0.2039 |
| 4 | target | >0.05 (96.79%) | 0.4824 |
|  | background | 0 | 0.3214 |
| 5 | target | >0.05 (98.28%) | 0.4667 |
|  | background | 0 | 0.3029 |
| 6 | target | >0.05 (97.10%) | 0.4118 |
|  | background | 0 | 0.3078 |
| 7 | target | >0.05 (94.38%) | 0.4510 |
|  | background | 0 | 0.2510 |
| 8 | target | >0.05 (96.34%) | 0.4980 |
|  | background | 0 | 0.3343 |
| 9 | target | >0.05 (97.48%) | 0.4431 |
|  | background | 0 | 0.3098 |
| 10 | target | >0.05 (98.52%) | 0.5333 |
|  | background | 0 | 0.3020 |

| No. | Number of Artificial Segments | Number of Missing Segments | Number of Erroneous Segments | Rate of Missing Segmentation | Rate of Error Segmentation |
|---|---|---|---|---|---|
| 1 | 11,680 | 352 | 34 | 3.014% | 0.291% |
| 2 | 28,144 | 872 | 154 | 3.098% | 0.547% |
| 3 | 25,170 | 1328 | 1362 | 5.276% | 5.407% |
| 4 | 34,558 | 2871 | 87 | 8.308% | 0.252% |
| 5 | 6338 | 273 | 29 | 4.307% | 0.458% |
| 6 | 8536 | 310 | 46 | 3.632% | 0.539% |
| 7 | 20,409 | 1422 | 115 | 6.968% | 0.563% |
| 8 | 23,113 | 5429 | 14 | 23.489% | 0.061% |
| 9 | 25,368 | 1399 | 154 | 5.515% | 0.607% |
| 10 | 44,769 | 2419 | 0 | 5.403% | 0% |
| 11 | 25,066 | 881 | 54 | 3.515% | 0.215% |
| 12 | 17,599 | 826 | 16 | 4.693% | 0.091% |
| 13 | 15,283 | 1012 | 31 | 6.622% | 0.203% |
| 14 | 17,937 | 621 | 0 | 3.462% | 0% |
| 15 | 14,903 | 267 | 63 | 1.791% | 0.423% |
| 16 | 6881 | 202 | 0 | 2.936% | 0% |
| 17 | 11,904 | 2419 | 31 | 20.321% | 0.260% |
| 18 | 9798 | 215 | 17 | 2.194% | 0.174% |
| 19 | 22,903 | 591 | 51 | 2.580% | 0.223% |
| 20 | 24,041 | 1783 | 79 | 7.416% | 0.329% |
| 21 | 14,828 | 518 | 25 | 3.493% | 0.169% |
| 22 | 24,187 | 837 | 89 | 3.461% | 0.368% |
| 23 | 13,207 | 296 | 1051 | 2.241% | 7.958% |
| 24 | 12,611 | 2103 | 62 | 16.676% | 0.492% |
| 25 | 9121 | 1043 | 126 | 11.435% | 1.381% |
| 26 | 27,862 | 847 | 69 | 3.040% | 0.248% |
| 27 | 10,865 | 588 | 9 | 5.412% | 0.083% |
| 28 | 22,448 | 591 | 145 | 2.632% | 0.646% |
| 29 | 16,013 | 629 | 68 | 3.928% | 0.425% |
| 30 | 15,735 | 638 | 22 | 4.052% | 0.140% |
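The two rate columns in the table above appear to be the missing and erroneous counts divided by the number of artificial (manually produced) segments. This relation is inferred from the tabulated numbers rather than stated as a definition in the text, and the function name `segmentation_rates` is hypothetical. A short sketch of the arithmetic, using the counts from row 1:

```python
def segmentation_rates(artificial, missing, erroneous):
    """Return (missing rate, error rate) in percent, rounded to 3 decimals.

    Assumes both rates are normalised by the artificial (reference) count,
    which is an inference from the table, not a stated definition.
    """
    if artificial <= 0:
        raise ValueError("artificial segment count must be positive")
    missing_rate = round(100.0 * missing / artificial, 3)
    error_rate = round(100.0 * erroneous / artificial, 3)
    return missing_rate, error_rate


# Row 1 of the table: 11,680 artificial, 352 missing, 34 erroneous.
row1_rates = segmentation_rates(11680, 352, 34)
print(row1_rates)  # (3.014, 0.291)
```

Recomputing the rates this way is a quick consistency check between the count columns and the percentage columns.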

| Model | Segmentation Accuracy |
|---|---|
| Otsu | 81.91% |
| Maximum entropy | 82.14% |
| Watershed algorithm [25] | 80.54% |
| Improved maximum entropy [4] | 89.60% |
| Algorithm based on hue model [26] | 92.69% |
| Marker-controlled watershed algorithm (ours) | 94.70% |

| Model | Segmentation Accuracy |
|---|---|
| Otsu | 74.19% |
| Maximum entropy | 75.21% |
| Improved watershed algorithm [25] | 88.42% |
| Marker-controlled watershed algorithm (ours) | 93.20% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dai, Y.; Meng, L.; Wang, S.; Sun, F. A Marker-Controlled Watershed Algorithm for the Intelligent Picking of Long Jujubes in Trees. Forests 2022, 13, 1063. https://doi.org/10.3390/f13071063
