Exploring the Potential of Unmanned Aerial Vehicle (UAV) Remote Sensing for Mapping Plucking Area of Tea Plantations

Abstract

1. Introduction

2. Materials and Methods

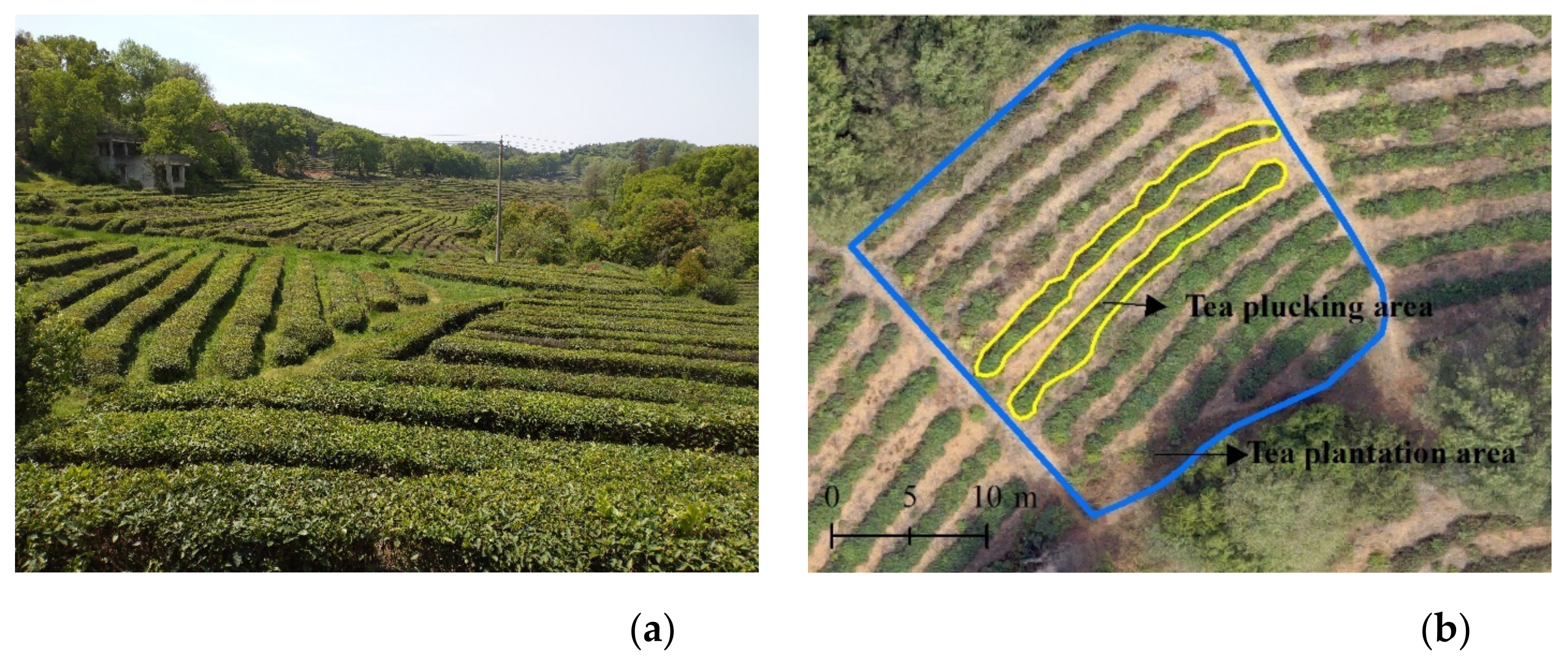

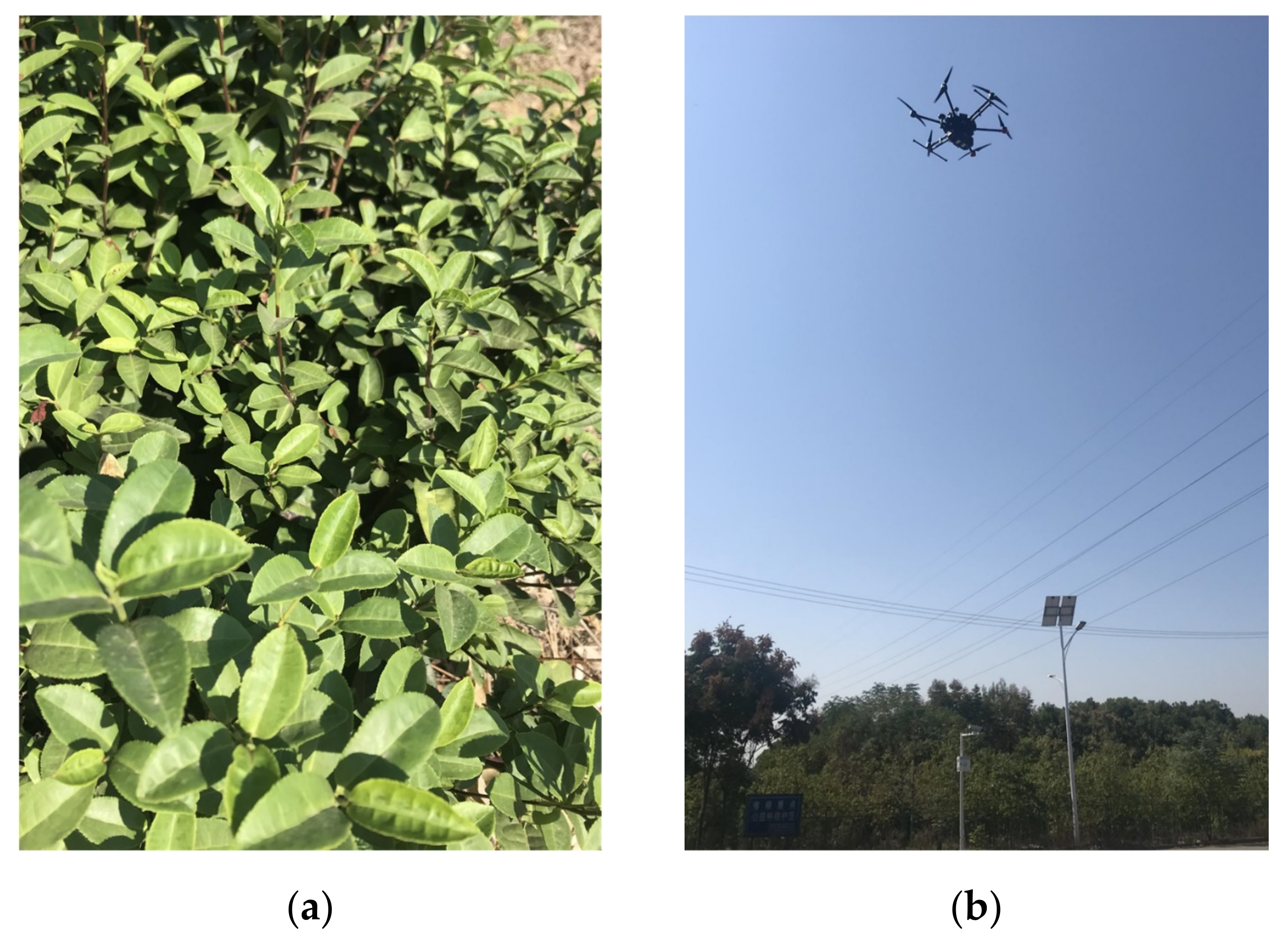

2.1. Study Area

2.2. UAV Data and Feature Extraction

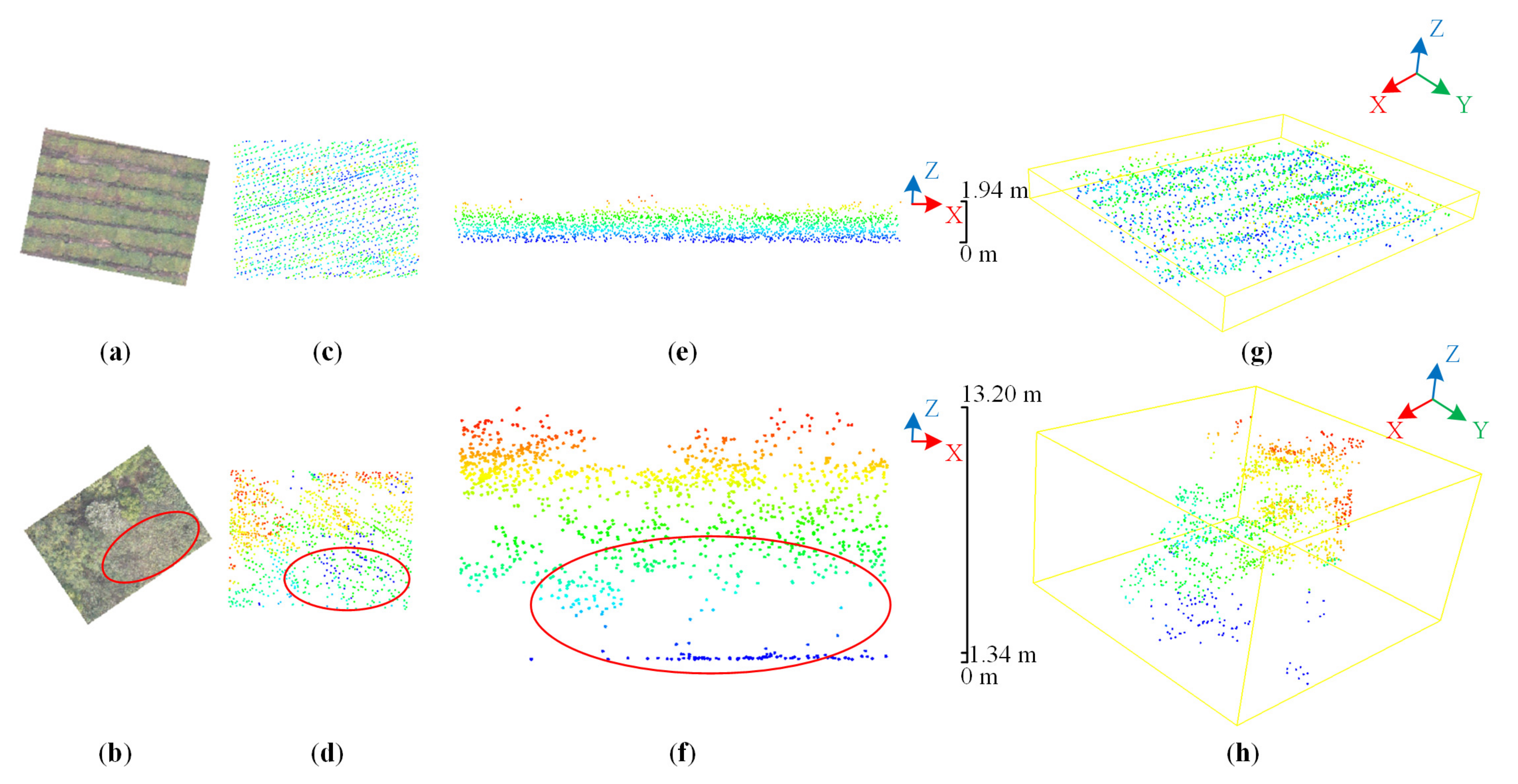

2.2.1. Lidar Point Clouds

2.2.2. Optical Imagery and Photogrammetric Point Clouds

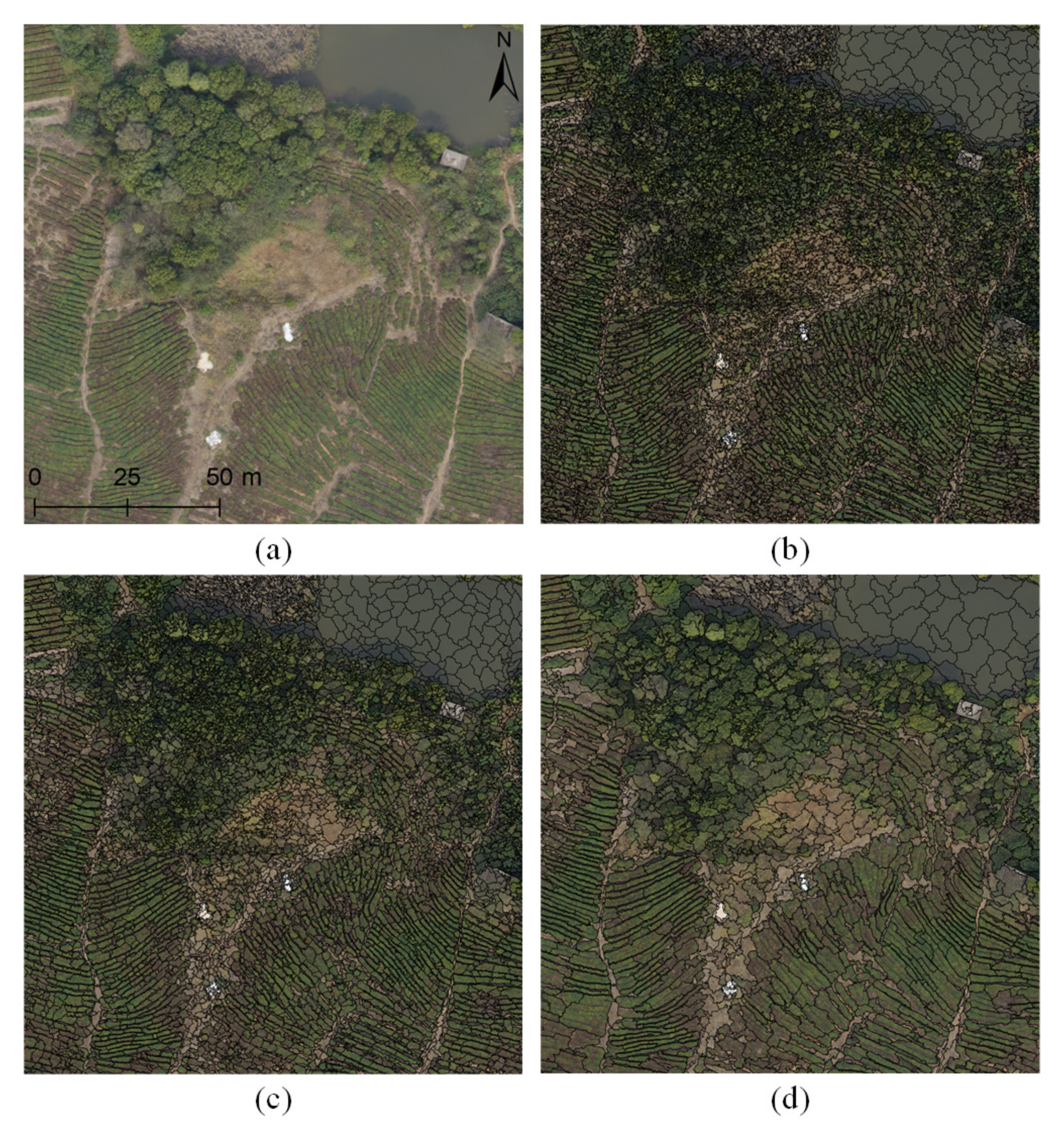

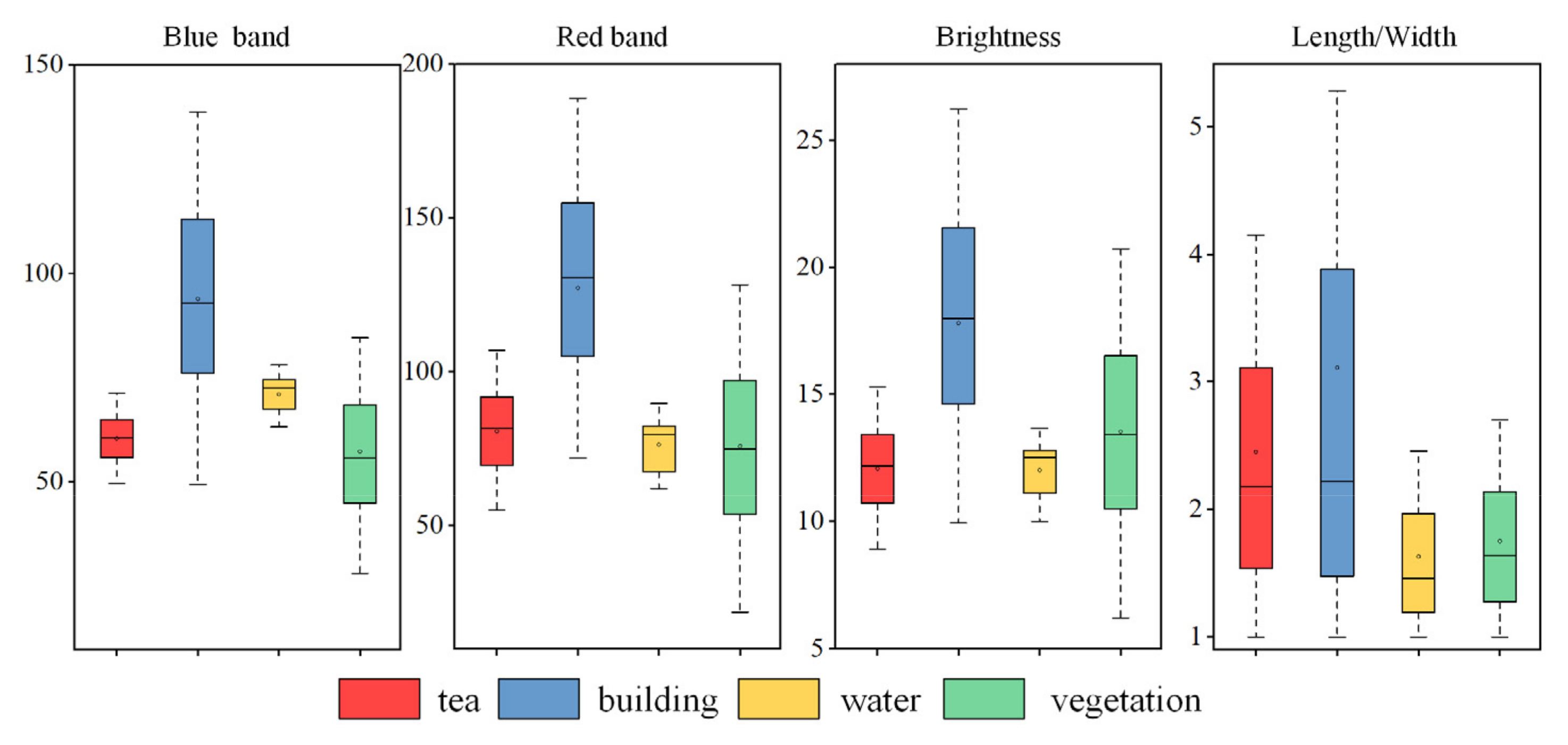

2.2.3. Feature Selection

2.3. Classification Models

2.4. Classification Algorithms

2.5. Accuracy Assessment

3. Results

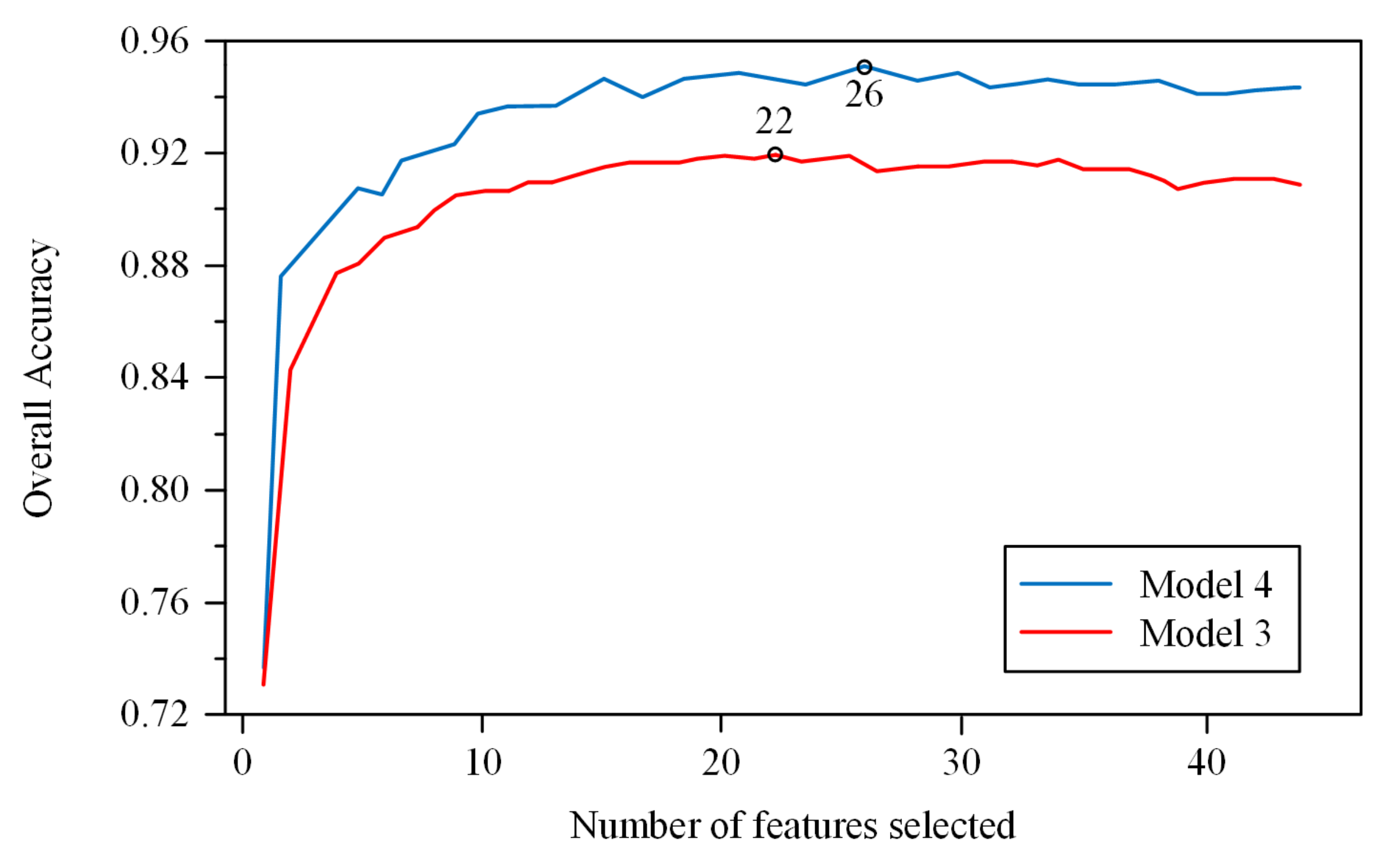

3.1. Feature Selection

3.2. Accuracy Assessment

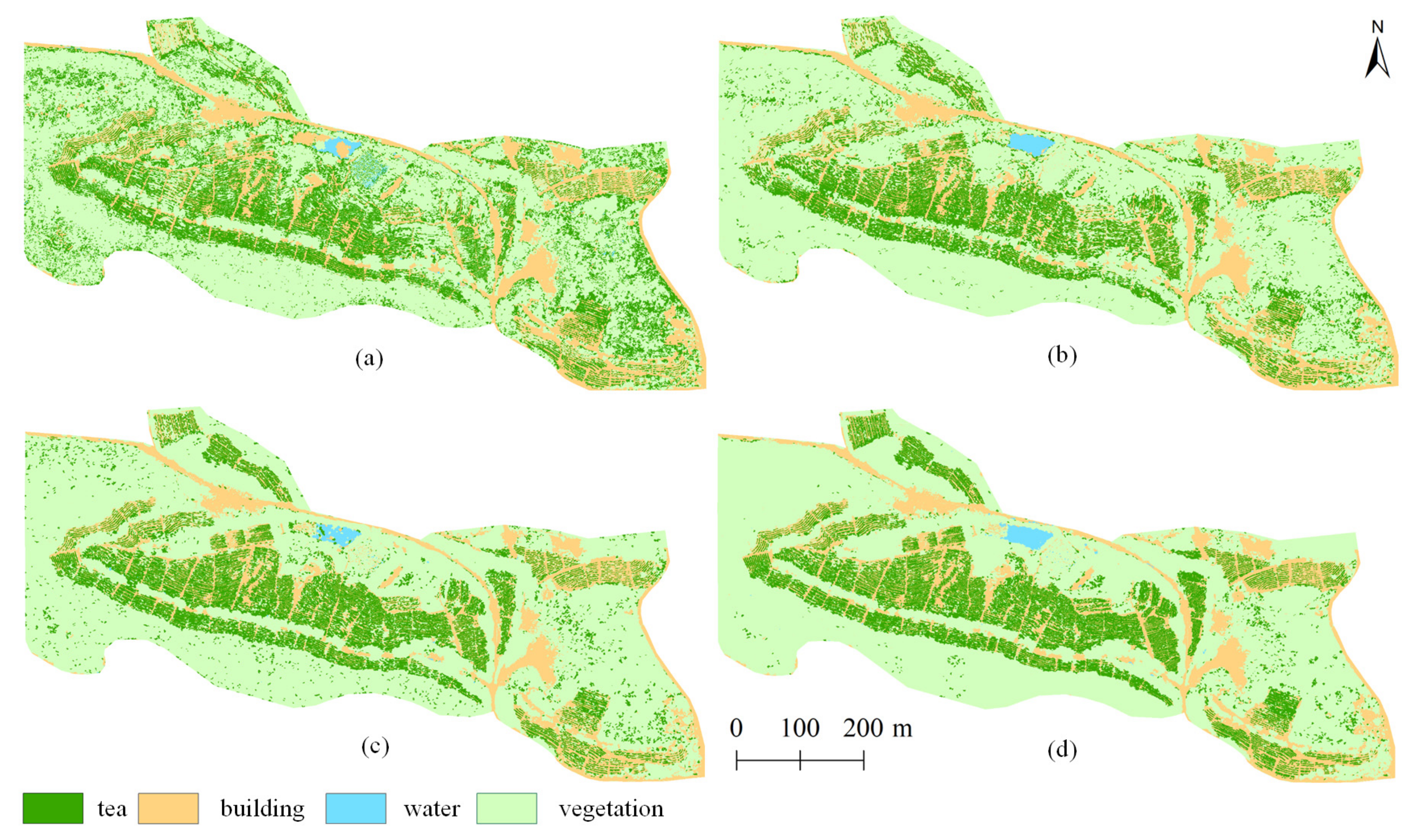

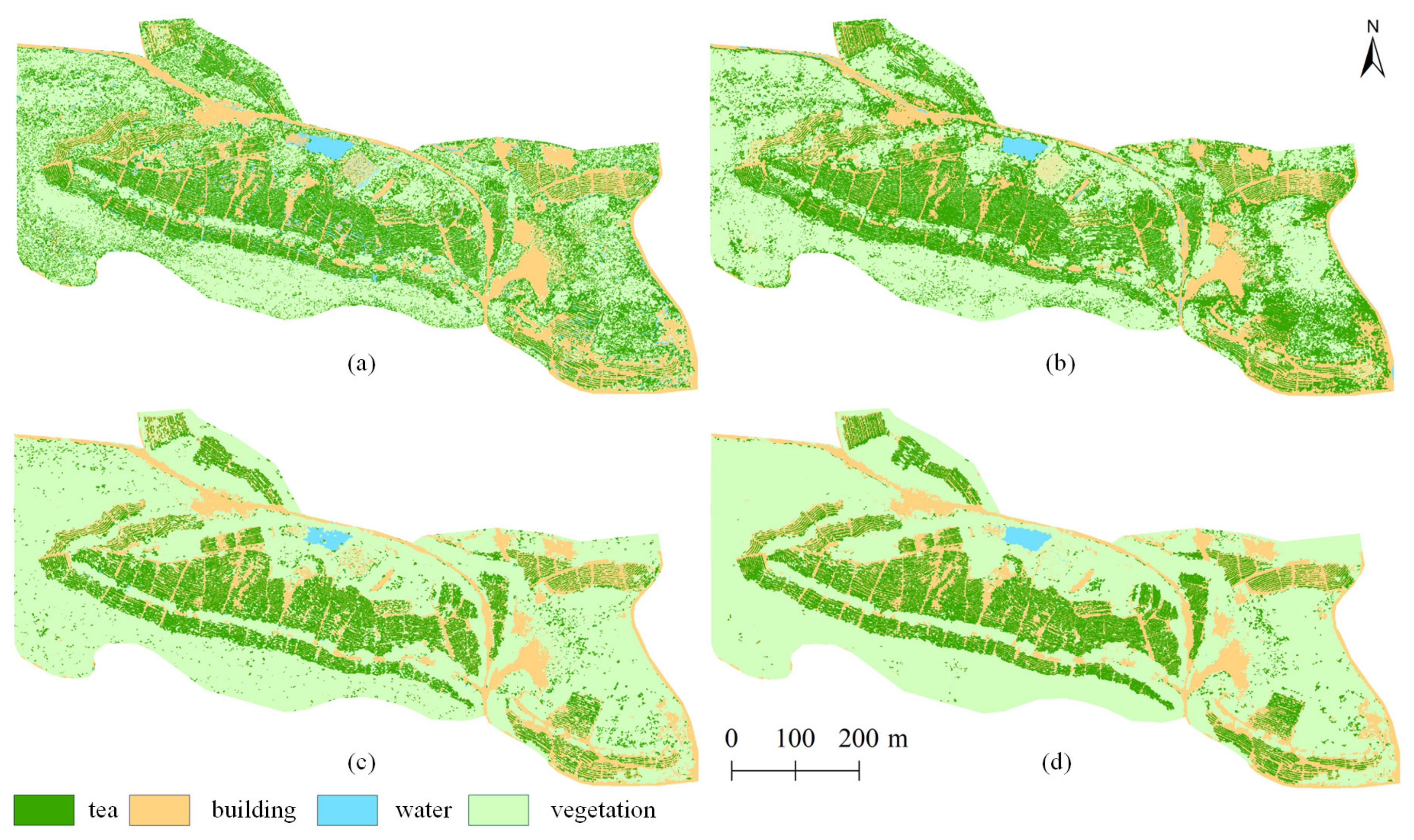

3.3. Visual Assessment

3.3.1. Global Assessment

3.3.2. Local Assessment

3.4. Feature Importance

3.5. Cost–Benefit Analysis

4. Discussion

4.1. Model and Classification Algorithm Analyses

4.2. UAV Remote Sensing for Mapping the Plucking Area of Tea Plantations

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Dutta, R.; Stein, A.; Bhagat, R.M. Integrating satellite images and spectroscopy to measuring green and black tea quality. Food Chem. 2011, 127, 866–874.

- Xiang, J.; Zhi, X. Spatial structure and evolution of tea trade in the world from 1946 to 2016. Econ. Geogr. 2020, 40, 123–130.

- Wang, B.; Li, J.; Jin, X.; Xiao, H. Mapping tea plantations from multi-seasonal Landsat-8 OLI imageries using a random forest classifier. J. Indian Soc. Remote Sens. 2019, 47, 1315–1329.

- Snapir, B.; Waine, T.W.; Corstanje, R.; Redfern, S.; De Silva, J.; Kirui, C. Harvest monitoring of Kenyan Tea Plantations with X-band SAR. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2018, 11, 930–938.

- Bian, M.; Skidmore, A.K.; Schlerf, M.; Wang, T.; Liu, Y.; Zeng, R.; Fei, T. Predicting foliar biochemistry of tea (Camellia sinensis) using reflectance spectra measured at powder, leaf and canopy levels. ISPRS-J. Photogramm. Remote Sens. 2013, 78, 148–156.

- Alvarez-Taboada, F.; Paredes, C.; Julián-Pelaz, J. Mapping of the invasive species Hakea sericea using unmanned aerial vehicle (UAV) and WorldView-2 imagery and an object-oriented approach. Remote Sens. 2017, 9, 913.

- Guo, Q.; Su, Y.; Hu, T.; Zhao, X.; Wu, F.; Li, Y.; Liu, J.; Chen, L.; Xu, G.; Lin, G.; et al. An integrated UAV-borne lidar system for 3D habitat mapping in three forest ecosystems across China. Int. J. Remote Sens. 2017, 38, 2954–2972.

- Wang, D.; Wan, B.; Liu, J.; Su, Y.; Guo, Q.; Qiu, P.; Wu, X. Estimating aboveground biomass of the mangrove forests on northeast Hainan Island in China using an upscaling method from field plots, UAV-LiDAR data and Sentinel-2 imagery. Int. J. Appl. Earth Obs. Geoinf. 2020, 85, 101986.

- Navarro, A.; Young, M.; Allan, B.; Carnell, P.; Macreadie, P.; Ierodiaconou, D. The application of unmanned aerial vehicles (UAVs) to estimate above-ground biomass of mangrove ecosystems. Remote Sens. Environ. 2020, 242, 111747.

- Shao, Z.; Zhang, L.; Wang, L. Stacked sparse autoencoder modeling using the synergy of airborne LiDAR and satellite optical and SAR data to map forest above-ground biomass. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2017, 10, 5569–5582.

- Husson, E.; Lindgren, F.; Ecke, F. Assessing biomass and metal contents in riparian vegetation along a pollution gradient using an unmanned aircraft system. Water Air Soil Pollut. 2014, 225, 1957.

- Senthilnath, J.; Kandukuri, M.; Dokania, A.; Ramesh, K.N. Application of UAV imaging platform for vegetation analysis based on spectral-spatial methods. Comput. Electron. Agric. 2017, 140, 8–24.

- Wang, D.; Wan, B.; Qiu, P.; Zuo, Z.; Wang, R.; Wu, X. Mapping height and aboveground biomass of mangrove forests on Hainan Island using UAV-LiDAR sampling. Remote Sens. 2019, 11, 2156.

- Pourshamsi, M.; Garcia, M.; Lavalle, M.; Balzter, H. A machine-learning approach to PolInSAR and LiDAR data fusion for improved tropical forest canopy height estimation using NASA AfriSAR Campaign data. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2018, 11, 3453–3463.

- Akar, Ö.; Güngör, O. Integrating multiple texture methods and NDVI to the random forest classification algorithm to detect tea and hazelnut plantation areas in northeast Turkey. Int. J. Remote Sens. 2015, 36, 442–464.

- Dihkan, M.; Guneroglu, N.; Karsli, F.; Guneroglu, A. Remote sensing of tea plantations using an SVM classifier and pattern-based accuracy assessment technique. Int. J. Remote Sens. 2013, 34, 8549–8565.

- Zhu, J.; Pan, Z.; Wang, H.; Huang, P.; Sun, J.; Qin, F.; Liu, Z. An improved multi-temporal and multi-feature tea plantation identification method using Sentinel-2 imagery. Sensors 2019, 19, 2087.

- Chuang, Y.-C.M.; Shiu, Y.-S. A comparative analysis of machine learning with WorldView-2 Pan-Sharpened imagery for tea crop mapping. Sensors 2016, 16, 594.

- Zhao, X.; Guo, Q.; Su, Y.; Xue, B. Improved progressive TIN densification filtering algorithm for airborne LiDAR data in forested areas. ISPRS-J. Photogramm. Remote Sens. 2016, 117, 79–91.

- Kim, S.; McGaughey, R.J.; Andersen, H.-E.; Schreuder, G. Tree species differentiation using intensity data derived from leaf-on and leaf-off airborne laser scanner data. Remote Sens. Environ. 2009, 113, 1575–1586.

- Ritchie, J.C.; Evans, D.L.; Jacobs, D.; Everitt, J.H.; Weltz, M.A. Measuring canopy structure with an airborne laser altimeter. Trans. ASAE 1993, 36, 1235–1238.

- Qiu, P.; Wang, D.; Zou, X.; Yang, X.; Xie, G.; Xu, S.; Zhong, Z. Finer resolution estimation and mapping of mangrove biomass using UAV LiDAR and WorldView-2 data. Forests 2019, 10, 871.

- Shi, Y.; Wang, T.; Skidmore, A.K.; Heurich, M. Important LiDAR metrics for discriminating forest tree species in Central Europe. ISPRS-J. Photogramm. Remote Sens. 2018, 137, 163–174.

- Richardson, J.J.; Moskal, L.M.; Kim, S.-H. Modeling approaches to estimate effective leaf area index from aerial discrete-return LIDAR. Agric. For. Meteorol. 2009, 149, 1152–1160.

- Haralick, R.M. Statistical and structural approaches to texture. Proc. IEEE 1979, 67, 786–804.

- Castilla, G.; Hay, G.J. Image objects and geographic objects. In Object-Based Image Analysis: Spatial Concepts for Knowledge-Driven Remote Sensing Applications; Blaschke, T., Lang, S., Hay, G.J., Eds.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 91–110.

- Duro, D.C.; Franklin, S.E.; Dubé, M.G. A comparison of pixel-based and object-based image analysis with selected machine learning algorithms for the classification of agricultural landscapes using SPOT-5 HRG imagery. Remote Sens. Environ. 2012, 118, 259–272.

- White, J.C.; Tompalski, P.; Coops, N.C.; Wulder, M.A. Comparison of airborne laser scanning and digital stereo imagery for characterizing forest canopy gaps in coastal temperate rainforests. Remote Sens. Environ. 2018, 208, 1–14.

- Wang, D.; Wan, B.; Qiu, P.; Su, Y.; Guo, Q.; Wang, R.; Sun, F.; Wu, X. Evaluating the performance of Sentinel-2, Landsat 8 and Pléiades-1 in mapping mangrove extent and species. Remote Sens. 2018, 10, 1468.

- Pham, L.T.H.; Brabyn, L. Monitoring mangrove biomass change in Vietnam using SPOT images and an object-based approach combined with machine learning algorithms. ISPRS-J. Photogramm. Remote Sens. 2017, 128, 86–97.

- Granitto, P.M.; Furlanello, C.; Biasioli, F.; Gasperi, F. Recursive feature elimination with random forest for PTR-MS analysis of agroindustrial products. Chemom. Intell. Lab. Syst. 2006, 83, 83–90.

- Chadwick, J. Integrated LiDAR and IKONOS multispectral imagery for mapping mangrove distribution and physical properties. Int. J. Remote Sens. 2011, 32, 6765–6781.

- Zhang, Z.; Liu, X. WorldView-2 satellite imagery and airborne LiDAR data for object-based forest species classification in a cool temperate rainforest environment. In Developments in Multidimensional Spatial Data Models; Abdul Rahman, A., Boguslawski, P., Gold, C., Said, M.N., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; pp. 103–122.

- Candare, R.J.; Japitana, M.; Cubillas, J.E.; Ramirez, C.B. Mapping of high value crops through an object-based SVM model using lidar data and orthophoto in Agusan del Norte Philippines. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, III-7, 165–172.

- Raczko, E.; Zagajewski, B. Comparison of support vector machine, random forest and neural network classifiers for tree species classification on airborne hyperspectral APEX images. Eur. J. Remote Sens. 2017, 50, 144–154.

- Mountrakis, G.; Im, J.; Ogole, C. Support vector machines in remote sensing: A review. ISPRS-J. Photogramm. Remote Sens. 2011, 66, 247–259.

- Yu, H. Support vector machine. In Encyclopedia of Database Systems; Liu, L., Özsu, M.T., Eds.; Springer: Boston, MA, USA, 2009; pp. 2890–2892.

- Ahmed, O.S.; Franklin, S.E.; Wulder, M.A.; White, J.C. Characterizing stand-level forest canopy cover and height using Landsat time series, samples of airborne LiDAR, and the random forest algorithm. ISPRS-J. Photogramm. Remote Sens. 2015, 101, 89–101.

- Raschka, S.; Patterson, J.; Nolet, C. Machine learning in Python: Main developments and technology trends in data science, machine learning, and artificial intelligence. Information 2020, 11, 193.

- Ghosh, A.; Joshi, P.K. A comparison of selected classification algorithms for mapping bamboo patches in lower Gangetic plains using very high resolution WorldView 2 imagery. Int. J. Appl. Earth Obs. Geoinf. 2014, 26, 298–311.

- Jones, T.G.; Coops, N.C.; Sharma, T. Assessing the utility of airborne hyperspectral and LiDAR data for species distribution mapping in the coastal Pacific Northwest, Canada. Remote Sens. Environ. 2010, 114, 2841–2852.

- Liu, L.; Coops, N.C.; Aven, N.W.; Pang, Y. Mapping urban tree species using integrated airborne hyperspectral and LiDAR remote sensing data. Remote Sens. Environ. 2017, 200, 170–182.

- Li, Q.; Wong, F.K.K.; Fung, T. Classification of mangrove species using combined WordView-3 and LiDAR data in Mai Po Nature Reserve, Hong Kong. Remote Sens. 2019, 11, 2114.

- Pitt, D.G.; Woods, M.; Penner, M. A comparison of point clouds derived from stereo imagery and airborne laser scanning for the area-based estimation of forest inventory attributes in boreal Ontario. Can. J. Remote Sens. 2014, 40, 214–232.

- Goodbody, T.R.H.; Coops, N.C.; Tompalski, P.; Crawford, P.; Day, K.J.K. Updating residual stem volume estimates using ALS- and UAV-acquired stereo-photogrammetric point clouds. Int. J. Remote Sens. 2017, 38, 2938–2953.

- Filippelli, S.K.; Lefsky, M.A.; Rocca, M.E. Comparison and integration of lidar and photogrammetric point clouds for mapping pre-fire forest structure. Remote Sens. Environ. 2019, 224, 154–166.

- White, J.C.; Coops, N.C.; Wulder, M.A.; Vastaranta, M.; Hilker, T.; Tompalski, P. Remote sensing technologies for enhancing forest inventories: A review. Can. J. Remote Sens. 2016, 42, 619–641.

- Li, N.; Zhang, D.; Li, L.; Zhang, Y. Mapping the spatial distribution of tea plantations using high-spatiotemporal-resolution imagery in northern Zhejiang, China. Forests 2019, 10, 856.

- Kulawardhana, R.W.; Popescu, S.C.; Feagin, R.A. Fusion of lidar and multispectral data to quantify salt marsh carbon stocks. Remote Sens. Environ. 2014, 154, 345–357.

| Lidar Metrics | | Implication |
|---|---|---|
| Height metrics | HMean | Mean of heights |
| | HSD, HVAR | Standard deviation of heights, variance of heights |
| | HAAD | Average absolute deviation of heights |
| | HIQ | Interquartile distance of percentile height, H75th–H25th |
| | Percentile heights (H1, H5, H10, H20, H25, H30, H40, H50, H60, H70, H75, H80, H90, H95, H99) | Height percentiles. Point clouds are sorted by elevation; the 15 height percentile metrics range from the 1% to the 99% height |
| | Canopy height model value | Value of the CHM |
| Canopy volume metrics | CC0.2m | Canopy cover above 0.2 m |
| | Gap | Canopy volume-related metric |
| | Leaf area index | Dimensionless quantity that characterizes plant canopies |
| Density metrics | Canopy return density (D0, D1, D2, D3, D4, D5, D6, D7, D8, D9) | The proportion of points above the quantiles to the total number of points |
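
As a rough illustration of the height metrics listed above, the following sketch computes them from a list of ground-normalized return heights in metres. The function names, the use of population (rather than sample) statistics, the linear-interpolation percentile rule, and the treatment of CC0.2m as the fraction of all returns above 0.2 m are assumptions made for this example; the table does not specify them.

```python
from statistics import fmean, pstdev, pvariance

# The 15 percentile heights named in the table.
PERCENTILES = (1, 5, 10, 20, 25, 30, 40, 50, 60, 70, 75, 80, 90, 95, 99)


def percentile(sorted_vals, p):
    """Percentile p (0-100) of a pre-sorted list, using linear interpolation."""
    k = (len(sorted_vals) - 1) * p / 100.0
    lo = int(k)
    hi = min(lo + 1, len(sorted_vals) - 1)
    return sorted_vals[lo] + (sorted_vals[hi] - sorted_vals[lo]) * (k - lo)


def height_metrics(heights, cover_threshold=0.2):
    """Height metrics for one cell or object (hypothetical helper).

    `heights` are return heights above ground in metres.
    """
    if not heights:
        raise ValueError("at least one return height is required")
    hs = sorted(heights)
    mean = fmean(hs)
    metrics = {
        "HMean": mean,
        "HSD": pstdev(hs),        # population standard deviation (assumption)
        "HVAR": pvariance(hs),    # population variance (assumption)
        "HAAD": fmean(abs(h - mean) for h in hs),
        "HIQ": percentile(hs, 75) - percentile(hs, 25),
        # Simplification: share of all returns above the threshold.
        "CC0.2m": sum(h > cover_threshold for h in hs) / len(hs),
    }
    for p in PERCENTILES:
        metrics[f"H{p}"] = percentile(hs, p)
    return metrics


# Made-up heights, only to show the call.
sample_heights = [0.0, 0.1, 0.3, 0.5, 0.7, 0.9, 1.1, 1.3]
sample_metrics = height_metrics(sample_heights)
```

Production lidar toolkits may define canopy cover from first returns only and may use a different percentile rule, so values from this sketch should not be expected to match such software exactly.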

| Scale | Shape/Color | Compactness/Smoothness | Number of Objects |
|---|---|---|---|
| 20 | 0.3/0.7 | 0.5/0.5 | 145,945 |
| 20 | 0.2/0.8 | 0.5/0.5 | 153,366 |
| 20 | 0.1/0.9 | 0.5/0.5 | 149,075 |
| 30 | 0.3/0.7 | 0.5/0.5 | 63,705 |
| 30 | 0.2/0.8 | 0.5/0.5 | 67,822 |
| 30 | 0.1/0.9 | 0.5/0.5 | 67,152 |
| 40 | 0.3/0.7 | 0.5/0.5 | 35,660 |
| 40 | 0.2/0.8 | 0.5/0.5 | 38,463 |
| 40 | 0.1/0.9 | 0.5/0.5 | 38,418 |

| Feature | Implication |
|---|---|
| Spectral mean values (RGB) | The average of the spectral luminance values of all pixels in a wavelength band within an image object. |
| Brightness | Reflects the total spectral luminance difference among image objects. |
| Length/width | Length-to-width ratio of the image object, represented by its minimum bounding rectangle. |
| Shape index | Used to reflect the smoothness of image object boundaries. |
| Textural feature | Entropy, contrast, homogeneity, and correlation, calculated through the gray-level co-occurrence matrix (GLCM) with a distance of 1 [29]. The GLCM counts how often pairs of gray levels co-occur between two pixels at a given spacing and orientation in an image. |
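
To make the GLCM description concrete, here is a minimal pure-Python sketch that builds a symmetric, normalized co-occurrence matrix at a pixel distance of 1 and derives the four texture measures named in the table. The horizontal offset, the natural-log entropy, the `1 / (1 + (i - j)^2)` homogeneity weighting, and all function names are assumptions for illustration; the source states only the distance.

```python
import math


def glcm(image, levels, dx=1, dy=0):
    """Symmetric, normalized GLCM of a 2D list of integer gray levels."""
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = image[r][c], image[r2][c2]
                counts[i][j] += 1  # count the pair in both directions
                counts[j][i] += 1  # so the matrix is symmetric
    total = sum(map(sum, counts))
    if total == 0:
        raise ValueError("image too small for the chosen offset")
    return [[v / total for v in row] for row in counts]


def texture_features(p):
    """Entropy, contrast, homogeneity, and correlation of a normalized GLCM."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    mu_i = sum(i * v for i, _, v in cells)
    mu_j = sum(j * v for _, j, v in cells)
    var_i = sum((i - mu_i) ** 2 * v for i, _, v in cells)
    var_j = sum((j - mu_j) ** 2 * v for _, j, v in cells)
    denom = math.sqrt(var_i * var_j)
    cov = sum((i - mu_i) * (j - mu_j) * v for i, j, v in cells)
    return {
        "entropy": -sum(v * math.log(v) for _, _, v in cells if v > 0),
        "contrast": sum((i - j) ** 2 * v for i, j, v in cells),
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        # A constant image has zero variance; report 1.0 by convention here.
        "correlation": cov / denom if denom > 0 else 1.0,
    }


# Two tiny made-up images: a checkerboard and a flat patch.
checker = texture_features(glcm([[0, 1], [1, 0]], levels=2))
flat = texture_features(glcm([[1, 1], [1, 1]], levels=2))
```

In an object-based workflow these measures would be computed per image object, often averaged over several orientations, rather than over a whole rectangular image as in this sketch.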

| Model 1 SVM | Building | Tea | Vegetation | Water | UA |
|---|---|---|---|---|---|
| Building | 762 | 1 | 58 | 15 | 91.15% |
| Tea | 42 | 867 | 485 | 2 | 62.10% |
| Vegetation | 132 | 496 | 2164 | 4 | 77.40% |
| Water | 6 | 0 | 4 | 38 | 79.17% |
| PA | 80.90% | 63.56% | 79.82% | 64.40% | |
| Kappa: 0.59; OA: 75.47% | | | | | |

| Model 2 SVM | Building | Tea | Vegetation | Water | UA |
|---|---|---|---|---|---|
| Building | 786 | 3 | 48 | 6 | 93.24% |
| Tea | 45 | 1062 | 308 | 0 | 75.05% |
| Vegetation | 111 | 299 | 2355 | 2 | 85.11% |
| Water | 0 | 0 | 0 | 51 | 100% |
| PA | 83.44% | 77.86% | 86.87% | 86.44% | |
| Kappa: 0.73; OA: 83.80% | | | | | |

| Model 3 SVM | Building | Tea | Vegetation | Water | UA |
|---|---|---|---|---|---|
| Building | 886 | 35 | 51 | 0 | 91.15% |
| Tea | 32 | 1142 | 97 | 0 | 89.85% |
| Vegetation | 22 | 192 | 2570 | 6 | 92.11% |
| Water | 3 | 1 | 3 | 53 | 88.33% |
| PA | 93.96% | 83.36% | 94.45% | 89.83% | |
| Kappa: 0.86; OA: 91.32% | | | | | |

| Model 4 SVM | Building | Tea | Vegetation | Water | UA |
|---|---|---|---|---|---|
| Building | 874 | 24 | 35 | 4 | 93.28% |
| Tea | 37 | 1143 | 103 | 0 | 89.09% |
| Vegetation | 131 | 197 | 2573 | 4 | 91.73% |
| Water | 0 | 0 | 0 | 51 | 100% |
| PA | 92.78% | 83.80% | 94.90% | 86.30% | |
| Kappa: 0.86; OA: 91.43% | | | | | |

| Model 1 RF | Building | Tea | Vegetation | Water | UA |
|---|---|---|---|---|---|
| Building | 690 | 4 | 151 | 0 | 81.66% |
| Tea | 109 | 1061 | 705 | 1 | 56.56% |
| Vegetation | 126 | 299 | 1852 | 4 | 81.20% |
| Water | 17 | 0 | 3 | 54 | 72.97% |
| PA | 73.25% | 77.79% | 68.31% | 91.53% | |
| Kappa: 0.56; OA: 72.04% | | | | | |

| Model 2 RF | Building | Tea | Vegetation | Water | UA |
|---|---|---|---|---|---|
| Building | 799 | 15 | 157 | 0 | 82.29% |
| Tea | 95 | 1101 | 326 | 1 | 72.30% |
| Vegetation | 245 | 245 | 2222 | 5 | 88.80% |
| Water | 18 | 3 | 6 | 53 | 66.25% |
| PA | 84.82% | 80.72% | 81.96% | 100% | |
| Kappa: 0.71; OA: 82.25% | | | | | |

| Model 3 RF | Building | Tea | Vegetation | Water | UA |
|---|---|---|---|---|---|
| Building | 844 | 29 | 37 | 2 | 93.57% |
| Tea | 42 | 1209 | 122 | 3 | 87.86% |
| Vegetation | 55 | 142 | 2562 | 5 | 92.70% |
| Water | 3 | 0 | 0 | 49 | 96.08% |
| PA | 89.50% | 88.25% | 94.16% | 83.05% | |
| Kappa: 0.86; OA: 91.58% | | | | | |

| Model 4 RF | Building | Tea | Vegetation | Water | UA |
|---|---|---|---|---|---|
| Building | 335 | 21 | 36 | 0 | 93.76% |
| Tea | 2 | 1281 | 78 | 0 | 91.90% |
| Vegetation | 1 | 62 | 2597 | 3 | 96.00% |
| Water | 4 | 0 | 0 | 56 | 88.89% |
| PA | 97.95% | 93.91% | 95.80% | 94.92% | |
| Kappa: 0.91; OA: 94.39% | | | | | |
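
The accuracy figures in these confusion matrices can be reproduced with a few lines of arithmetic. The sketch below recomputes overall accuracy (OA), the kappa coefficient, user's accuracy (UA), and producer's accuracy (PA) for the Model 1 SVM matrix. It assumes that rows hold the classified labels and columns the reference labels, which is consistent with UA appearing in the last column and PA in the last row; the function name is hypothetical.

```python
def accuracy_metrics(matrix):
    """OA, kappa, UA, and PA from a square confusion matrix.

    Assumes rows are classified labels and columns are reference labels.
    """
    k = len(matrix)
    n = sum(map(sum, matrix))
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(k)) for j in range(k)]
    diagonal = [matrix[i][i] for i in range(k)]

    oa = sum(diagonal) / n
    # Agreement expected by chance, from the row and column marginals.
    pe = sum(r * c for r, c in zip(row_totals, col_totals)) / n ** 2
    kappa = (oa - pe) / (1 - pe)
    ua = [d / r if r else float("nan") for d, r in zip(diagonal, row_totals)]
    pa = [d / c if c else float("nan") for d, c in zip(diagonal, col_totals)]
    return {"OA": oa, "kappa": kappa, "UA": ua, "PA": pa}


# Model 1 SVM; class order: building, tea, vegetation, water.
model1_svm = [
    [762, 1, 58, 15],
    [42, 867, 485, 2],
    [132, 496, 2164, 4],
    [6, 0, 4, 38],
]
result = accuracy_metrics(model1_svm)
```

For this matrix the sketch gives an OA of about 75.47% and a kappa of about 0.59, matching the reported values. Some PA entries differ from the table in the last digit, which suggests that the published percentages were truncated rather than rounded.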

| Component | UAV Images | UAV Images and Lidar | On-Ground Survey Method |
|---|---|---|---|
| Equipment | UAV: 5384 | UAV: 5384 | Tape measures: 100 |
| | Camera: 3053 | Lidar: 5000 | Rangefinder: 600 |
| | RTK: 3000 | Camera: 3053 | RTK: 3000 |
| | | RTK: 3000 | |
| Data collection | Staff salaries: 2560 | Staff salaries: 17,024 | Staff salaries: 48,000 |
| | Vehicle hire cost: 400 | Vehicle hire cost: 2000 | Vehicle hire cost: 10,000 |
| Time consumed | 128 person-hours | 448 person-hours | 1600 person-hours |
| Total | 14,397 | 35,461 | 61,700 |
| Total (per km²) | 360 | 886 | 1543 |
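
The totals in the cost table follow directly from the itemized entries, as the short sketch below shows. The survey area is not stated in the table; the value of 40 km² used here is an assumption inferred from the ratio of each total to its per-km² figure, and the currency is likewise left unspecified. The dictionary keys and variable names are illustrative.

```python
# Itemized costs copied from the table (currency not stated in the table).
costs = {
    "UAV images": {
        "UAV": 5384, "Camera": 3053, "RTK": 3000,
        "Staff salaries": 2560, "Vehicle hire": 400,
    },
    "UAV images and lidar": {
        "UAV": 5384, "Lidar": 5000, "Camera": 3053, "RTK": 3000,
        "Staff salaries": 17024, "Vehicle hire": 2000,
    },
    "On-ground survey": {
        "Tape measures": 100, "Rangefinder": 600, "RTK": 3000,
        "Staff salaries": 48000, "Vehicle hire": 10000,
    },
}

# ASSUMPTION: roughly 40 km², inferred from total / per-km² in the table.
ASSUMED_AREA_KM2 = 40.0

totals = {method: sum(items.values()) for method, items in costs.items()}
per_km2 = {method: total / ASSUMED_AREA_KM2 for method, total in totals.items()}

for method in costs:
    print(f"{method}: total {totals[method]}, about {per_km2[method]:.1f} per km²")
```

The recomputed totals match the table exactly. The per-km² values agree with the table to within one unit, with the small differences attributable to rounding or to the true area differing slightly from the assumed 40 km².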

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, Q.; Wan, B.; Cao, Z.; Zhang, Q.; Wang, D. Exploring the Potential of Unmanned Aerial Vehicle (UAV) Remote Sensing for Mapping Plucking Area of Tea Plantations. Forests 2021, 12, 1214. https://doi.org/10.3390/f12091214
