2.1. Mean Reversion of Profitability

As enterprises operate in a competitive environment, economic theory predicts that their profitability rates converge, reverting to the economy-wide mean. Although businesses strive to maintain any competitive advantage and avoid mean reversion (Porter 1985), they are often governed by the economic laws of competition (Aghion et al. 2001; Aghion 2002). New entrants compete with higher-performing firms, thereby cutting future rents. Similarly, underperforming enterprises either survive by increasing their profitability or fail, so profitability reverts to the mean. An extensive body of accounting research shows that accounting rates of return exhibit mean reversion.

Beaver (1970) found that firms with high ROEs face a subsequent fall in ROE, whereas firms with low ROEs experience subsequent growth, albeit at a slower rate.

Penman (1991) found that although ROA reverts to the mean, it also has a persistent component that enables enterprises with a high ROA to continue to outperform in the future.

The key finding of prior research is that profitability and growth revert towards the economy-wide mean (Freeman et al. 1982; Nissim and Penman 2001). However, how best to model such mean reversion remains an open question. Most of the research assumes that all enterprises in the economy exhibit the same rate of mean reversion and revert to a common benchmark.
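To make the common-rate assumption concrete, the following is a minimal sketch of a first-order mean-reverting process in which every firm shares one persistence parameter and one economy-wide mean. It is an illustration only, not the specification used in the studies cited above; the function names, the 10% mean, the 0.6 persistence, and the simulated data are all hypothetical.

```python
import random

def simulate_profitability(start, mean, persistence, periods, noise_sd, rng):
    """Simulate one firm's profitability path: next = mean + persistence * (current - mean) + shock."""
    path = [start]
    for _ in range(periods):
        shock = rng.gauss(0.0, noise_sd)
        path.append(mean + persistence * (path[-1] - mean) + shock)
    return path

def estimate_persistence(paths, mean):
    """Pooled OLS slope (no intercept) of next-period deviation on current deviation from the mean."""
    numerator = 0.0
    denominator = 0.0
    for path in paths:
        for current, nxt in zip(path[:-1], path[1:]):
            numerator += (current - mean) * (nxt - mean)
            denominator += (current - mean) ** 2
    return numerator / denominator

rng = random.Random(0)
ECONOMY_MEAN = 0.10      # hypothetical economy-wide mean profitability
TRUE_PERSISTENCE = 0.6   # hypothetical common persistence (1 - speed of reversion)

# 500 simulated firms, each starting above or below the mean and observed for 20 periods.
paths = [
    simulate_profitability(rng.uniform(-0.2, 0.4), ECONOMY_MEAN, TRUE_PERSISTENCE, 20, 0.02, rng)
    for _ in range(500)
]
rho_hat = estimate_persistence(paths, ECONOMY_MEAN)
print(f"estimated persistence: {rho_hat:.3f}")
```

A persistence close to one means slow reversion, and a value close to zero means fast reversion. Allowing the persistence or the mean to differ by industry or country is the kind of relaxation that the later studies in this section examine.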

Numerous subsequent studies have sought to understand the drivers of the time-series properties of business profitability; see Kothari (2001) for a review. According to these studies, the mean reversion of accounting returns is influenced by both firm- and industry-level variables. Firm-level drivers include firm size (Lev 1983), future investment opportunities (Nissim and Penman 2001), and measurement errors in accounting (Penman and Zhang 2002).

Given the significance of industry membership, Fairfield et al. (2009) investigated whether a mean-reverting model at the industry level enhances the precision of profitability and growth estimates. They found that industry-level analyses are incrementally informative for forecasting growth but do not improve forecasts of profitability.

Healy et al. (2014) investigated the effect of cross-country differences in product, capital, and labor market competition, as well as earnings management, on the mean reversion of accounting return on assets. Using a sample of 48,465 unique enterprises from 49 nations from 1997 to 2008, they found, as predicted by economic theory, that accounting returns revert to the mean faster in countries with higher product and capital market competition. In contrast, countries with more competitive labor markets experience slower mean reversion. The relationship between country-level labor market competition, earnings management, and mean reversion in accounting returns varies with business performance. When unexpected returns are positive, country labor competition speeds up mean reversion, but when unexpected returns are negative, it slows it down. Accounting returns in nations with higher mean earnings management revert more slowly for profitable organizations and more quickly for loss-making businesses. Thus, incentives to use earnings management to slow or speed up the mean reversion of accounting returns are amplified in nations with a high earnings management tendency. Overall, these results indicate that national factors explain the mean reversion of accounting returns and are, therefore, relevant to firm valuation.

2.2. Machine Learning

In the three decades since Ou and Penman (1989) reported their findings, computing power and machine learning techniques have advanced dramatically, allowing researchers to examine whether additional independent variables and more computationally intensive methodologies are useful for predicting future earnings. Over the past two decades, statisticians have developed dozens of forecasting methods, each with its own advantages and disadvantages. These methods include the conventional stepwise logistic model (Ou and Penman 1989), the elastic net (Zou and Hastie 2005), and the random forest model (Breiman 2001).

The evolution of profit forecasting reflects not only the growth of accounting studies but also the development of statistics and computer science. Early studies predicted future earnings using random walk and time-series models (Ball and Watts 1972). Later studies included more fundamental data in linear regression (Deschamps and Mehta 1980) or logistic regression-based prediction models (Ou and Penman 1989).

Numerous studies, in light of the rapid growth of computer science, have revealed the remarkable potential of machine learning models, which might significantly improve the accuracy of a firm’s earnings forecasts and subsequently generate abnormal profits.

The forecasting performance of regression models is contingent upon several factors, including the functional form, the choice of predictors, the choice of estimator, and the behavior of the error term. Some of these limiting factors may explain the inferior performance of regression models compared to the random walk model in out-of-sample predictions. As for financial analysts, a vast body of research, summarized by Ramnath et al. (2008), demonstrates that analysts are systematically optimistic in their projections, either due to incentive-driven strategic reporting or innate cognitive bias.

Machine learning (ML) approaches are more flexible than regression methods because they do not rely on limiting statistical and economic assumptions and are not influenced by cognitive biases. Instead, they utilize past data patterns and trends to generate forecasts. ML focuses on maximizing the accuracy of prediction (Mullainathan and Spiess 2017). Although the term machine learning is used broadly, several machine learning methods have proven more adept than regression-based methods at handling econometric difficulties in the data, such as multicollinearity and nonlinearity. In addition, many ML approaches do not require the user to specify a functional form beforehand, which gives additional flexibility to discover the functional form that best matches the data. The tradeoff is that many ML approaches are more opaque than conventional regression. Thus, a researcher who selects ML over regression often improves the accuracy of out-of-sample forecasts but sacrifices interpretability. Given the issue at hand, which is enhancing the accuracy of out-of-sample profit estimates, we believe this is a worthwhile tradeoff.

Recent applications of random forests and stochastic gradient boosting have shown surprising results (Zhou 2012; Mullainathan and Spiess 2017). Both methods, which are based on ensemble learning, combine a large number of decision tree estimators. A key aspect of these methods, as opposed to regressions, is their capacity to estimate models with a greater number of predictors than observations. In addition, the theoretical literature provides little direction for the selection of crucial financial variables and functional forms in financial statement analysis. High-dimensional predictor sets may enter in a nonlinear manner with several interactions.

In contrast to regressions, machine learning algorithms are specifically built to tolerate complicated relationships and cast a wide net in their specification search. They achieve strong out-of-sample predictive performance by employing “regularization” (e.g., tuning a parameter such as the number of decision trees in random forests) for model selection and overfitting mitigation.

Decision trees are a prominent method for incorporating nonlinearities and interactions in statistical learning. Unlike regressions, trees are constructed nonparametrically and are designed to group observations with similar predictor values. The forecast is the mean of the outcome variable within each group.
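The grouping idea can be sketched with the smallest possible tree, a single split on one predictor. The example below is illustrative only and assumes a squared-error splitting criterion; the function names and the toy values (lagged and next-period ROA) are hypothetical and are not taken from any of the cited studies.

```python
def mean(values):
    return sum(values) / len(values)

def sum_squared_error(values):
    """Squared deviations of the values around their own mean."""
    center = mean(values)
    return sum((v - center) ** 2 for v in values)

def fit_stump(x, y):
    """Find the single threshold on x that minimizes the within-group squared error of y."""
    pairs = sorted(zip(x, y))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold can separate identical predictor values
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [outcome for _, outcome in pairs[:i]]
        right = [outcome for _, outcome in pairs[i:]]
        loss = sum_squared_error(left) + sum_squared_error(right)
        if best is None or loss < best[0]:
            best = (loss, threshold, mean(left), mean(right))
    if best is None:
        raise ValueError("x has no variation, so no split is possible")
    return best[1:]

def predict_stump(stump, value):
    """The forecast is the mean outcome of the group the observation falls into."""
    threshold, left_mean, right_mean = stump
    return left_mean if value <= threshold else right_mean

# Hypothetical toy data: lagged ROA (predictor) and next-period ROA (outcome).
lagged_roa = [0.01, 0.02, 0.03, 0.10, 0.12, 0.15]
next_roa = [0.02, 0.02, 0.03, 0.09, 0.10, 0.11]

stump = fit_stump(lagged_roa, next_roa)
print(stump)                       # (threshold, low-group mean, high-group mean)
print(predict_stump(stump, 0.11))  # forecast for a firm with lagged ROA of 0.11
```

A full tree repeats this split recursively within each group and across all predictors, which is how interactions and nonlinearities enter without a prespecified functional form.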

Two approaches are used to regularize decision trees in random forests. First, in the bootstrap aggregation technique, also known as “bagging” (Breiman 2001), a tree is constructed on each of n distinct bootstrap samples of the data. There are n predictions for a particular observation, and the final forecast is the simple average of these n predictions. Averaging matters because individual trees tend to overfit their bootstrap samples, which renders single-tree forecasts inefficient.
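The bagging step can be sketched as follows. This is a simplified illustration, assuming one-split trees as the base learner and a squared-error criterion; it omits the other ingredients of a full random forest, and the function names, seed, and toy data are hypothetical.

```python
import random

def fit_stump(x, y):
    """Fit a one-split tree; fall back to a constant forecast if x has no variation."""
    pairs = sorted(zip(x, y))
    overall = sum(y) / len(y)
    best = (float("inf"), float("inf"), overall, overall)  # (loss, threshold, left mean, right mean)
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue
        left = [v for _, v in pairs[:i]]
        right = [v for _, v in pairs[i:]]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        loss = sum((v - left_mean) ** 2 for v in left) + sum((v - right_mean) ** 2 for v in right)
        if loss < best[0]:
            best = (loss, (pairs[i - 1][0] + pairs[i][0]) / 2, left_mean, right_mean)
    return best[1:]

def predict_stump(stump, value):
    threshold, left_mean, right_mean = stump
    return left_mean if value <= threshold else right_mean

def bagged_forecast(x, y, query, n_trees, rng):
    """Fit one tree per bootstrap sample and average the n_trees predictions for `query`."""
    predictions = []
    for _ in range(n_trees):
        # Bootstrap sample: draw len(x) observations with replacement.
        indices = [rng.randrange(len(x)) for _ in range(len(x))]
        sample_x = [x[i] for i in indices]
        sample_y = [y[i] for i in indices]
        predictions.append(predict_stump(fit_stump(sample_x, sample_y), query))
    return sum(predictions) / len(predictions), predictions

# Hypothetical toy data: lagged ROA (predictor) and next-period ROA (outcome).
lagged_roa = [0.01, 0.02, 0.03, 0.10, 0.12, 0.15]
next_roa = [0.02, 0.02, 0.03, 0.09, 0.10, 0.11]

forecast, tree_predictions = bagged_forecast(lagged_roa, next_roa, 0.11, 25, random.Random(42))
print(f"bagged forecast: {forecast:.4f} from {len(tree_predictions)} trees")
```

Individual tree predictions vary from one bootstrap sample to the next, and the average is more stable than any single one of them.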

Anand et al. (2019) investigated whether classification trees, as a machine learning technique, can produce out-of-sample profitability estimates that are superior to random walk forecasts. Out-of-sample forecasts of directional changes (either increases or decreases) are generated for the following five profitability measures: return on equity (ROE), return on assets (ROA), return on net operating assets (RNOA), cash flow from operations (CFO), and free cash flow (FCF). Based on a minimal set of independent variables, their methodology obtains classification accuracies ranging from 57 to 64% as opposed to 50% for the random walk, and the proportional differences between ML and random walk are highly significant. In addition, they found that the predictive ability of their ML approach is unaffected by a five-year forecast horizon. Furthermore, they found that the two cash-flow measures (CFO and FCF), particularly when accruals are included in the prediction of cash flows, have a greater classification accuracy than the three earnings-based measures of profitability (ROE, ROA, and RNOA). Overall, their findings suggest that ML approaches have the potential to be utilized for predicting profitability.

Xinyue et al. (2020) exhaustively evaluated the feasibility and suitability of adopting machine learning models for forecasting company earnings and compared their results with analysts’ consensus estimates and with traditional statistical models based on logistic regression. They found that their approach outperforms logistic regression methods but does not outperform analysts.

Kureljusic and Reisch (2022) used publicly available information for European enterprises and new machine-learning algorithms to estimate future revenues in an IFRS environment, investigating the advantages of predictive analytics for both the preparers and users of financial predictions. Their empirical findings, based on 3000 firm-year observations from 2010 to 2019, show that machine learning gives revenue estimates that are as accurate as or more accurate than those of financial experts. However, their sample is too small to employ machine-learning algorithms reliably and to generalize their results.

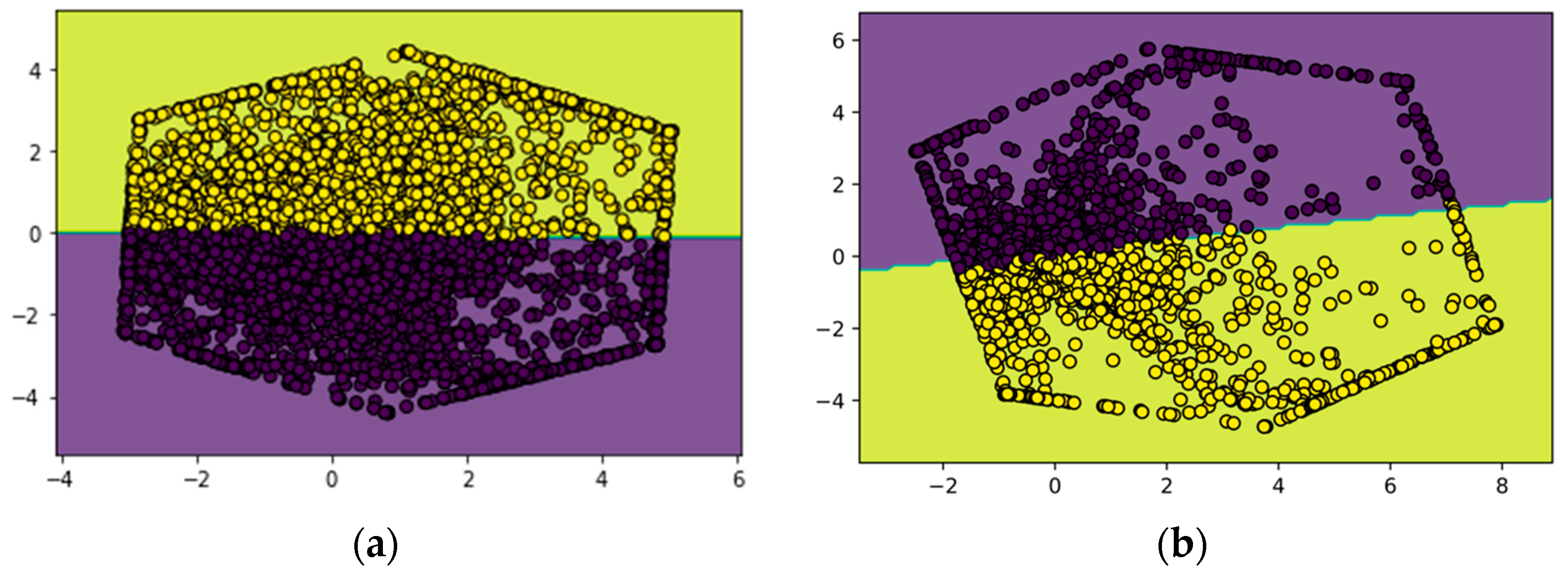

We build upon the existing literature in several ways, motivated by the growing literature on machine learning and earnings prediction. We introduce the concept of nested cross-validation of algorithms to this literature, in which we identified the following gaps. Anand et al. (2019), Bao et al. (2020), and Chen et al. (2022) made arbitrary splits into training and test samples. These splits affect the reported performance of their algorithms (Rácz et al. 2021). With nested cross-validation, we address this drawback and report more robust results. In addition, relevant studies use the “random walk”¹ as a benchmark; this comparison is “unfair”, as all complex machine learning algorithms can beat the random walk. We instead create a benchmark from a simple algorithm and then employ more complex algorithms to examine whether we can beat this benchmark. Furthermore, we extend the study of Anand et al. (2019) by examining the mean reversion of earnings not only within industries but also within countries and the entire sample of firms.
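The general logic of nested cross-validation can be sketched as follows. This is an illustrative sketch only, not our actual pipeline: it assumes a k-nearest-neighbor forecaster as a stand-in model, mean squared error as the loss, and simulated data, and every function name and parameter value is hypothetical. The inner loop selects the hyperparameter using the outer-training data alone, and the outer loop scores the selected model on data that played no role in that selection.

```python
import random

def k_fold_indices(n, n_folds, rng):
    """Shuffle the indices 0..n-1 and deal them into n_folds disjoint folds."""
    indices = list(range(n))
    rng.shuffle(indices)
    return [indices[i::n_folds] for i in range(n_folds)]

def split(data, held_out):
    """Return (training rows, held-out rows) for one fold."""
    held = set(held_out)
    train = [row for i, row in enumerate(data) if i not in held]
    test = [data[i] for i in held_out]
    return train, test

def knn_predict(train, query_x, k):
    """Average the outcomes of the k training points closest to query_x."""
    nearest = sorted(train, key=lambda row: abs(row[0] - query_x))[:k]
    return sum(y for _, y in nearest) / len(nearest)

def mse(train, test, k):
    return sum((knn_predict(train, x, k) - y) ** 2 for x, y in test) / len(test)

def inner_cv_score(data, k, folds):
    """Mean validation error of hyperparameter k across the inner folds."""
    return sum(mse(*split(data, fold), k) for fold in folds) / len(folds)

def nested_cv(data, grid, outer_folds, inner_folds, rng):
    results = []
    for held_out in k_fold_indices(len(data), outer_folds, rng):
        outer_train, outer_test = split(data, held_out)
        # Inner loop: tune on the outer-training data only, using the same folds for every candidate.
        folds = k_fold_indices(len(outer_train), inner_folds, rng)
        best_k = min(grid, key=lambda k: inner_cv_score(outer_train, k, folds))
        # Outer loop: score the tuned model on data it has never seen.
        results.append((best_k, mse(outer_train, outer_test, best_k)))
    return results

rng = random.Random(1)
# Simulated data: one predictor and a noisy linear outcome (hypothetical).
data = [(x, 0.5 * x + rng.gauss(0.0, 0.1)) for x in (rng.uniform(-1.0, 1.0) for _ in range(60))]
GRID = [1, 5, 15]  # hypothetical candidate numbers of neighbors

results = nested_cv(data, GRID, outer_folds=5, inner_folds=3, rng=rng)
average_mse = sum(score for _, score in results) / len(results)
print(results)
print(f"average outer-fold MSE: {average_mse:.4f}")
```

Because every observation serves as outer test data exactly once, the reported error does not hinge on a single arbitrary training and test split.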