The Sequence of Steps: A Key Concept Missing in Surgical Training—A Systematic Review and Recommendations to Include It

Abstract

1. Introduction

2. Methods

2.1. Research Questions

2.2. Protocol

2.3. Eligibility Criteria

2.4. Search Strategy

2.5. Study Selection

2.6. Data Collection

2.7. Validity of Assessment Instruments

2.8. Quality Assessment

3. Results

3.1. Study Selection

3.2. Characteristics of Included Articles

3.3. Strategies to Teach the Standard Sequence of Steps

3.4. Strategies to Assess the Learning of the Sequence of Steps

3.5. Instruments to Assess the Learning of the Sequence of Steps

3.6. Outcomes to Assess the Learning of the Sequence of Steps

3.7. Validity of Instruments to Assess the Sequence of Steps

3.8. Effectiveness of Strategies to Teach the Standard Sequence of Steps

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

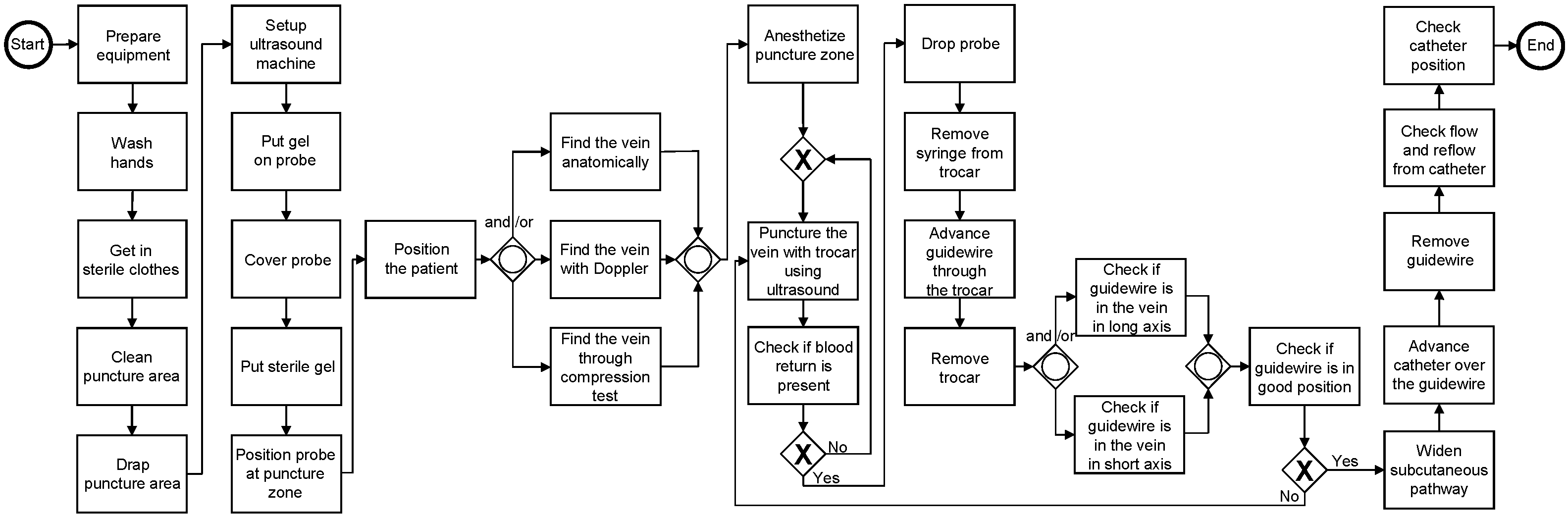

| Abbreviation | Definition |
|---|---|
| BPMN | Business Process Model and Notation |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses statement |
| EMBASE | Excerpta Medica dataBASE |
| CINAHL | Cumulative Index to Nursing and Allied Health Literature |
| MERSQI | Medical Education Research Study Quality Instrument |
| SPM | Surgical Process Model |

References

1. Bloom, B.S.; Krathwohl, D.R. Taxonomy of Educational Objectives: The Classification of Educational Goals by a committee of college and university examiners. In Handbook I: Cognitive Domain; Longman: New York, NY, USA, 1956.
2. Menix, K.D. Domains of learning: Interdependent components of achievable learning outcomes. J. Contin. Educ. Nurs. 1996, 27, 200–208.
3. Shaker, D. Cognitivism and psychomotor skills in surgical training: From theory to practice. Int. J. Med. Educ. 2018, 9, 253–254.
4. Oermann, M.H. Psychomotor skill development. J. Contin. Educ. Nurs. 1990, 21, 202–204.
5. da Costa, G.O.F.; Rocha, H.A.L.; de Moura Júnior, L.G.; Medeiros, F.d.C. Taxonomy of educational objectives and learning theories in the training of laparoscopic surgical techniques in a simulation environment. Rev. Col. Bras. Cir. 2018, 45, e1954.
6. Apramian, T.; Cristancho, S.; Watling, C.; Ott, M.; Lingard, L. Thresholds of Principle and Preference: Exploring Procedural Variation in Postgraduate Surgical Education. Acad. Med. 2015, 90, S70–S76.
7. Apramian, T.; Cristancho, S.; Watling, C.; Ott, M.; Lingard, L. “They Have to Adapt to Learn”: Surgeons’ Perspectives on the Role of Procedural Variation in Surgical Education. J. Surg. Educ. 2016, 73, 339–347.
8. Golden, A.; Alaska, Y.; Levinson, A.T.; Davignon, K.R.; Lueckel, S.N.; Lynch, K.A.J.; Jay, G.; Kobayashi, L. Simulation-Based Examination of Arterial Line Insertion Method Reveals Interdisciplinary Practice Differences. Simul. Healthc. 2020, 15, 89–97.
9. de la Fuente, R.; Fuentes, R.; Munoz-Gama, J.; Riquelme, A.; Altermatt, F.R.; Pedemonte, J.; Corvetto, M.; Sepúlveda, M. Control-flow analysis of procedural skills competencies in medical training through process mining. Postgrad. Med. J. 2020, 96, 250–256.
10. Neumuth, T. Surgical process modeling. Innov. Surg. Sci. 2017, 2, 123–137.
11. Neumuth, T.; Jannin, P.; Strauss, G.; Meixensberger, J.; Burgert, O. Validation of knowledge acquisition for surgical process models. J. Am. Med. Inform. Assoc. 2009, 16, 72–80.
12. Rojas, E.; Munoz-Gama, J.; Sepúlveda, M.; Capurro, D. Process mining in healthcare: A literature review. J. Biomed. Inform. 2016, 61, 224–236.
13. Légaré, F.; Freitas, A.; Thompson-Leduc, P.; Borduas, F.; Luconi, F.; Boucher, A.; Witteman, H.O.; Jacques, A. The Majority of Accredited Continuing Professional Development Activities Do Not Target Clinical Behavior Change. Acad. Med. 2015, 90, 197–202.
14. Fecso, A.B.; Szasz, P.; Kerezov, G.; Grantcharov, T.P. The Effect of Technical Performance on Patient Outcomes in Surgery. Ann. Surg. 2017, 265, 492–501.
15. Leotsakos, A.; Zheng, H.; Croteau, R.; Loeb, J.M.; Sherman, H.; Hoffman, C.; Morganstein, L.; O’Leary, D.; Bruneau, C.; Lee, P.; et al. Standardization in patient safety: The WHO High 5s project. Int. J. Qual. Health Care 2014, 26, 109–116.
16. Skjold-Ødegaard, B.; Søreide, K. Standardization in surgery: Friend or foe? Br. J. Surg. 2020, 107, 1094–1096.
17. de la Fuente, R.; Fuentes, R.; Munoz-Gama, J.; Dagnino, J.; Sepúlveda, M. Delphi Method to Achieve Clinical Consensus for a BPMN Representation of the Central Venous Access Placement for Training Purposes. Int. J. Environ. Res. Public Health 2020, 17, 3889.
18. Frank, J.R.; Snell, L.S.; Cate, O.T.; Holmboe, E.S.; Carraccio, C.; Swing, S.R.; Harris, P.; Glasgow, N.J.; Campbell, C.; Dath, D.; et al. Competency-based medical education: Theory to practice. Med. Teach. 2010, 32, 638–645.
19. McKinley, R.K.; Strand, J.; Ward, L.; Gray, T.; Alun-Jones, T.; Miller, H. Checklists for assessment and certification of clinical procedural skills omit essential competencies: A systematic review. Med. Educ. 2008, 42, 338–349.
20. Lalys, F.; Jannin, P. Surgical process modelling: A review. Int. J. Comput. Assist. Radiol. Surg. 2014, 9, 495–511.
21. Cook, D.A.; Hamstra, S.J.; Brydges, R.; Zendejas, B.; Szostek, J.H.; Wang, A.T.; Erwin, P.J.; Hatala, R. Comparative effectiveness of instructional design features in simulation-based education: Systematic review and meta-analysis. Med. Teach. 2013, 35, e867–e898.
22. Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. BMJ 2009, 339, b2535.
23. Bramer, W.M.; Rethlefsen, M.L.; Kleijnen, J.; Franco, O.H. Optimal database combinations for literature searches in systematic reviews: A prospective exploratory study. Syst. Rev. 2017, 6, 245.
24. Borgersen, N.J.; Naur, T.M.H.; Sørensen, S.M.D.; Bjerrum, F.; Konge, L.; Subhi, Y.; Thomsen, A.S.S. Gathering Validity Evidence for Surgical Simulation. Ann. Surg. 2018, 267, 1063–1068.
25. Cook, D.A.; Reed, D.A. Appraising the quality of medical education research methods: The Medical Education Research Study Quality Instrument and the Newcastle-Ottawa Scale-Education. Acad. Med. 2015, 90, 1067–1076.
26. Reed, D.A.; Cook, D.A.; Beckman, T.J.; Levine, R.B.; Kern, D.E.; Wright, S.M. Association Between Funding and Quality of Published Medical Education Research. JAMA 2007, 298, 1002–1009.
27. Chapman, D.M.; Marx, J.A.; Honigman, B.; Rosen, P.; Cavanaugh, S.H. Emergency Thoracotomy: Comparison of Medical Student, Resident, and Faculty Performances on Written, Computer, and Animal-model Assessments. Acad. Emerg. Med. 1994, 1, 373–381.
28. Lammers, R.L. Learning and Retention Rates after Training in Posterior Epistaxis Management. Acad. Emerg. Med. 2008, 15, 1181–1189.
29. Lehmann, R.; Seitz, A.; Bosse, H.M.; Lutz, T.; Huwendiek, S. Student perceptions of a video-based blended learning approach for improving pediatric physical examination skills. Ann. Anat.-Anat. Anz. 2016, 208, 179–182.
30. Lehmann, R.; Thiessen, C.; Frick, B.; Bosse, H.M.; Nikendei, C.; Hoffmann, G.F.; Tönshoff, B.; Huwendiek, S. Improving Pediatric Basic Life Support Performance Through Blended Learning With Web-Based Virtual Patients: Randomized Controlled Trial. J. Med. Internet Res. 2015, 17, e162.
31. Balayla, J.; Bergman, S.; Ghitulescu, G.; Feldman, L.S.; Fraser, S.A. Knowing the operative game plan: A novel tool for the assessment of surgical procedural knowledge. Can. J. Anesth. Can. D’anesthésie 2012, 55, S158–S162.
32. Guerlain, S.; Green, K.B.; LaFollette, M.; Mersch, T.C.; Mitchell, B.A.; Poole, G.R.; Calland, J.F.; Lv, J.; Chekan, E.G. Improving surgical pattern recognition through repetitive viewing of video clips. IEEE Trans. Syst. Man Cybern. Part A Syst. Humans 2004, 34, 699–707.
33. Brenner, M.; Hoehn, M.; Pasley, J.; Dubose, J.; Stein, D.; Scalea, T. Basic endovascular skills for trauma course. J. Trauma Acute Care Surg. 2014, 77, 286–291.
34. Cheung, J.J.H.; Kulasegaram, K.M.; Woods, N.N.; Moulton, C.a.; Ringsted, C.V.; Brydges, R. Knowing How and Knowing Why: Testing the effect of instruction designed for cognitive integration on procedural skills transfer. Adv. Health Sci. Educ. 2018, 23, 61–74.
35. Aragon, C.E.; Zibrowski, E.M. Does Exposure to a Procedural Video Enhance Preclinical Dental Student Performance in Fixed Prosthodontics? J. Dent. Educ. 2008, 72, 67–71.
36. Zendejas, B.; Wang, A.T.; Brydges, R.; Hamstra, S.J.; Cook, D.A. Cost: The missing outcome in simulation-based medical education research: A systematic review. Surgery 2013, 153, 160–176.
37. Nicholls, D.; Sweet, L.; Muller, A.; Hyett, J. Teaching psychomotor skills in the twenty-first century: Revisiting and reviewing instructional approaches through the lens of contemporary literature. Med. Teach. 2016, 38, 1056–1063.
38. Anderson, L.W.; Krathwohl, D.R.; Airasian, P.W.; Cruikshank, K.A.; Mayer, R.E.; Pintrich, P.R.; Raths, J.; Wittrock, M.C. (Eds.) A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom’s Taxonomy of Educational Objectives; Longman: New York, NY, USA, 2001.
39. Gilbert, F.W.; Harkins, J.; Agrrawal, P.; Ashley, T. Internships as clinical rotations in business: Enhancing access and options. Int. J. Bus. Educ. 2021, 162, 126–140.
40. Sullivan, M.E.; Yates, K.A.; Inaba, K.; Lam, L.; Clark, R.E. The Use of Cognitive Task Analysis to Reveal the Instructional Limitations of Experts in the Teaching of Procedural Skills. Acad. Med. 2014, 89, 811–816.
41. Vedula, S.S.; Hager, G.D. Surgical data science: The new knowledge domain. Innov. Surg. Sci. 2017, 2, 109–121.
42. Skiena, S.S. The Data Science Design Manual; Springer: Cham, Switzerland, 2017.
43. Bowen, D.K.; Song, L.; Tasian, G.E. Patterns of CT and US utilization for nephrolithiasis in the emergency department. J. Urol. 2019, 201, e98–e99.
44. Rao, A.; Tait, I.; Alijani, A. Systematic review and meta-analysis of the role of mental training in the acquisition of technical skills in surgery. Am. J. Surg. 2015, 210, 545–553.
45. Wallace, L.; Raison, N.; Ghumman, F.; Moran, A.; Dasgupta, P.; Ahmed, K. Cognitive training: How can it be adapted for surgical education? Surgeon 2017, 15, 231–239.
46. Ellaway, R.H.; Pusic, M.V.; Galbraith, R.M.; Cameron, T. Developing the role of big data and analytics in health professional education. Med. Teach. 2014, 36, 216–222.
47. Munoz-Gama, J.; Galvez, V.; de la Fuente, R.; Sepúlveda, M.; Fuentes, R. Interactive Process Mining for Medical Training. In Interactive Process Mining in Healthcare; Fernandez-Llatas, C., Ed.; Springer: Cham, Switzerland, 2021; pp. 233–242.
48. van der Aalst, W. Process Mining: Data Science in Action; Springer: Berlin/Heidelberg, Germany, 2016.
49. Crebbin, W.; Beasley, S.W.; Watters, D.A.K. Clinical decision making: How surgeons do it. ANZ J. Surg. 2013, 83, 422–428.
50. Lateef, F. Clinical Reasoning: The Core of Medical Education and Practice. Int. J. Intern. Emerg. Med. 2018, 1, 1015.
51. Osterweil, L.J.; Conboy, H.M.; Clarke, L.A.; Avrunin, G.S. Process-Model-Driven Guidance to Reduce Surgical Procedure Errors: An Expert Opinion. Semin. Thorac. Cardiovasc. Surg. 2019, 31, 453–457.

| Authors | Instructional Strategy | Outcome | Effectiveness |
|---|---|---|---|
| Aragon and Zibrowski [35] | Video demonstration | Grades obtained in the practical exam (evaluators rated students using a 28-item instrument). | Effective for only one of the three procedures analyzed. * |
| Guerlain et al. [32] | Video demonstration | Adherence to the standard sequence of steps (participants answered questions about the sequence of steps). | Effective: it improved performance on procedural questions. * |
| Lammers [28] | Informing subjects of all performance and sequence errors immediately | Adherence to the standard sequence of steps (evaluators counted the number of sequence errors). | No significant differences between the control and experimental groups. |
| Lehmann et al. [29] | Video demonstration | Benefits for learning (participants answered an open question). | Participants reported that the videos showed a concrete and standard sequence of steps. |

| Authors | Strategy | Outcome | Validity of Instrument |
|---|---|---|---|
| Balayla et al. [31] | Participants say the steps aloud. | Omissions (evaluators counted these errors and deducted them from the checklist’s total score). | Content, response, and consequential validity. |
| Brenner et al. [33] | Participants perform the procedure in a virtual simulator. | Adherence to the standard sequence of steps (evaluators used a 5-point Likert scale). | None. |
| Chapman et al. [27] | Students write the steps; perform the procedure in a computer simulation; perform the procedure on an animal model while saying the steps aloud. | Adherence to the standard sequence of steps and omissions (both outcomes were evaluated by assigning a score to each step using a rating scale). | Content validity for all assessment strategies. Internal structure validity for the animal and computer assessments. |
| Cheung et al. [34] | Participants write the steps in the proper sequence. | Adherence to the standard sequence of steps (evaluators assigned points to each participant). | None. |
| Guerlain et al. [32] | Students answer a test with questions about the sequence of steps. | Adherence to the standard sequence of steps. | None. |
| Lammers [28] | Participants perform the procedure on a model. | Adherence to the standard sequence of steps (evaluators counted the number of sequence errors). | Response validity. |
| Lehmann et al. [30] | Participants perform the procedure on a mannequin. | Adherence to the standard sequence of steps (evaluators rated each step on a rating scale, considering whether the step was omitted or done in the correct or incorrect position). | Content and response validity. |
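The two outcomes that recur in the table above, omissions and sequence errors, can be illustrated with a minimal Python sketch. This is not the scoring instrument of any included study: the function name `score_sequence`, the step labels, and the choice to count a sequence error as a step that falls outside the longest run of steps performed in the reference order are all assumptions made for illustration. The sketch also assumes each step appears only once in the standard sequence.

```python
from bisect import bisect_left
from typing import Dict, List


def score_sequence(standard: List[str], performed: List[str]) -> Dict[str, object]:
    """Compare a performed list of steps against a reference sequence.

    Hypothetical scoring rule (an assumption, not taken from the review):
    - omissions: reference steps that never appear in the performance;
    - sequence_errors: recognised steps that lie outside the longest
      subsequence performed in the reference order.
    """
    # Position of each step in the reference sequence (steps assumed unique).
    rank = {step: i for i, step in enumerate(standard)}

    # Keep the first occurrence of each recognised step, as reference positions.
    seen = set()
    observed: List[int] = []
    for step in performed:
        if step in rank and step not in seen:
            seen.add(step)
            observed.append(rank[step])

    omissions = [step for step in standard if step not in seen]

    # Length of the longest increasing subsequence of reference positions.
    tails: List[int] = []
    for r in observed:
        pos = bisect_left(tails, r)
        if pos == len(tails):
            tails.append(r)
        else:
            tails[pos] = r

    return {"omissions": omissions, "sequence_errors": len(observed) - len(tails)}


# Hypothetical five-step procedure; the trainee swaps two steps and skips one.
standard_steps = ["step_a", "step_b", "step_c", "step_d", "step_e"]
trainee_steps = ["step_a", "step_c", "step_b", "step_e"]
print(score_sequence(standard_steps, trainee_steps))
```

Other counting rules, such as scoring each step as omitted, correctly positioned, or incorrectly positioned, would give different totals for the same performance, so any such rule would need to be stated alongside the reported outcome.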

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Galvez-Yanjari, V.; de la Fuente, R.; Munoz-Gama, J.; Sepúlveda, M. The Sequence of Steps: A Key Concept Missing in Surgical Training—A Systematic Review and Recommendations to Include It. Int. J. Environ. Res. Public Health 2023, 20, 1436. https://doi.org/10.3390/ijerph20021436