Public Mental Health Approaches to Online Radicalisation: An Empty Systematic Review

Abstract

1. Introduction

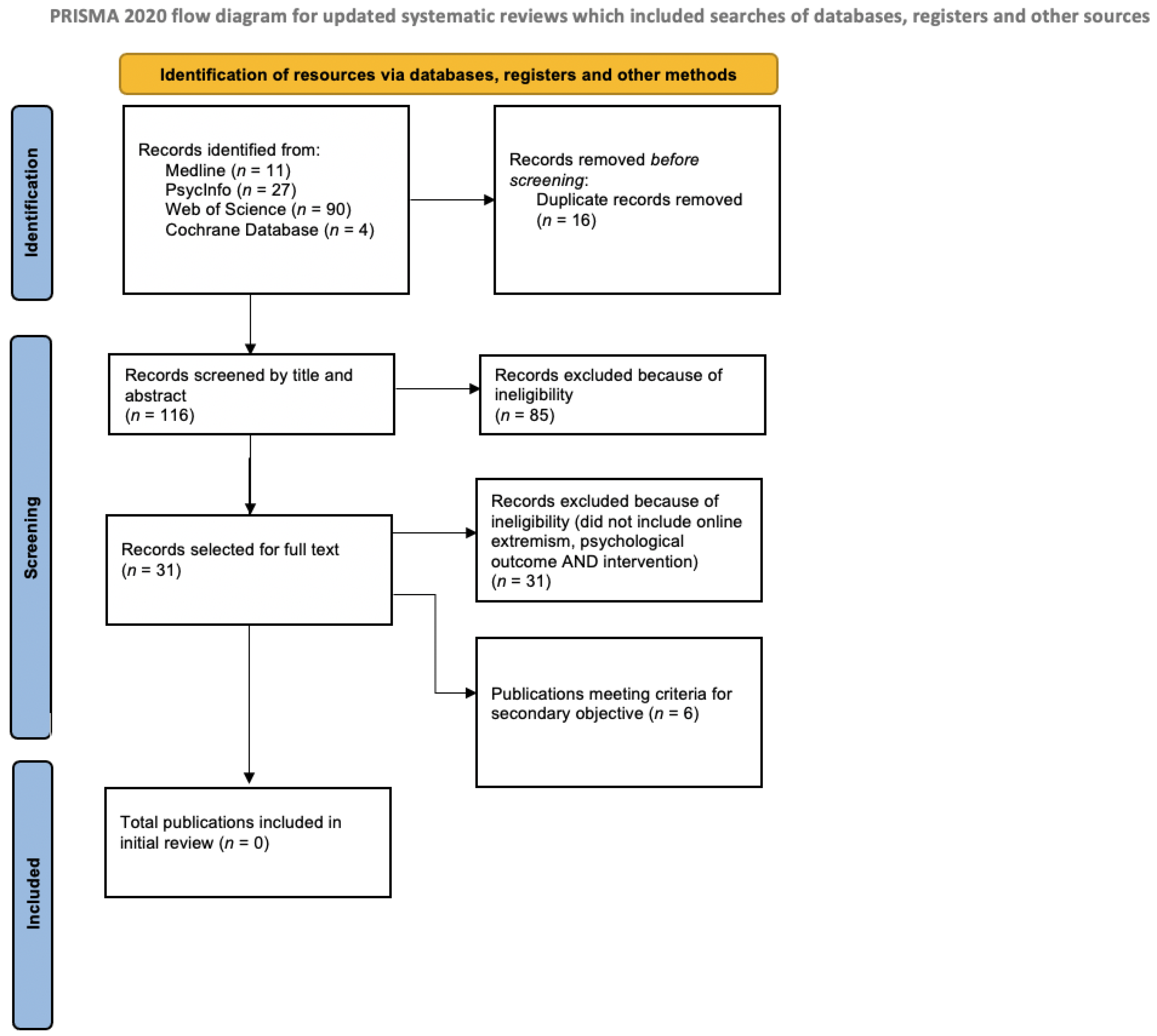

2. Materials and Methods

2.1. Eligibility Criteria

2.2. Population, Intervention, Control, Outcome (PICO)

3. Results

3.1. Data Extraction and Analysis

3.2. Quality Assessment

| Name | Country/ Milieu | Type of Publication | Summary | Methodology | Central Finding/Argument | Reasons for Primary Objective Exclusion/ Secondary Objective Relevance/ Quality Assessment (QA; see Appendix A) |
|---|---|---|---|---|---|---|
| Schmitt et al., 2018 [38] | Germany/ Islamist, USA/ Far-right | Information network analysis; peer-reviewed journal article | An online information network analysis of the links between online extremist content and counter-extremist messages, given that extremist messages vastly outnumber counter messages, both use similar keywords, and automated algorithms may bundle the two types of message together; counter messages were found to link closely or even directly to extremist content. | The authors used an online information network analysis to explore what might hinder a successful intervention addressing online radicalisation. Videos from each campaign (8 from counter Islamist, 4 from counter far-right) were listed and treated as “seeds” for data collection using the online tool YTDT Video Network. For each list of “seeds”, related videos and metadata, including view count and rating, were retrieved with the crawl depth set to 2. To reduce bias arising from the researchers’ own search history, browser history and cookies were deleted before retrieving the data. | Extremist messages were only two clicks away from counter messaging. Aiming to integrate the role of recommendation algorithms into the “selective exposure” paradigm, the authors suggest that algorithmic filtering and gatekeeping functions that direct content and users toward one another (including user amplification through sharing and “likes”), together with the far larger volume of online extremist content compared with counter-extremist content, pose almost insurmountable challenges to online interventions targeting or countering extremist content. | Excluded because the testing of an intervention was not reported. Relevance: the article addressed online extremist content, its relationship with user behaviour and attitudinal shift, and analyses of interventions used. Public mental health approaches might utilise online counter messages as part of an intervention, and several obstacles to counter messaging efficacy were identified. QA: all components were present. |
| Rusnalasari et al., 2018 [39] | Indonesia/ Islamist | Cross-sectional analysis; peer-reviewed conference paper | An analysis of the relationships amongst literacy and belief in and practice of the Indonesian national ideology of Pancasila and literacy in extremist ideological language, with a view to demonstrating that belief and literacy correlate with reduced vulnerability to online radicalising content; belief and literacy were negatively correlated with vulnerability. | The authors developed and reliability tested two new measures and then used them to explore whether high literacy in a national ideology, coupled with literacy regarding the language used in extreme ideologies, decreased vulnerability to (or offered protection against) online radicalisation. | High levels of literacy in national ideology and extremist ideologies were not found to reduce vulnerability to or offer protection against online radicalisation. | Excluded because the testing of an intervention was not reported. Relevance: the paper addressed online extremist content, its relationship with language outcomes in the cognitive domain and theorised the type of intervention that may be useful within education settings. QA: some components were present. |
| Bouzar and Laurent, 2019 [40] | France/ Islamist | Retrospective single-case study analysis; peer-reviewed journal article | A qualitative interdisciplinary analysis of the radicalisation of and disengagement intervention with “Hamza”, a 15-year-old French citizen who attempted several times to leave the country to prepare an attack on France; the analysis concluded that Hamza’s life course and related trauma experiences led to radicalisation through the interaction of 3 cumulative processes: emotional, relational and cognitive–ideological. | The authors retrospectively reported on the use of interdisciplinary (psychological, social, political and religious) thematic analyses of semi-directive interviews and online communications with extremist recruiters to identify the radicalisation stages that led to a young person’s attempts to commit extremist violence and the conditions necessary for a successful intervention. | Based on the successful outcome of this case study, the authors argued for the efficacy of a multidisciplinary intervention that analyses an individual’s life trajectory (rather than only one or two time points) informed by two first steps: (i) thematic analyses of semi-structured interviews with parents and the radicalised individual; (ii) when permission is granted and access is legal, thematic analyses of mobile phone and computer records revealing the frequency, content and patterns of engagement between the individual and the extremist recruiters. | Excluded because the intervention methodology was not described in detail beyond the initial steps. Relevance: the article addressed online extremist content, its relationship with several psychological domains including affect-related trauma and outlined the outcome of the first steps of an intervention. QA: several components were present implicitly and a few were absent. |
| Siegel et al., 2019 [41] | Global/ Several | Narrative review; book chapter; chapter proposal and subsequent drafts were reviewed by three editors who had to agree that a draft met quality and relevance criteria for the book | A book chapter reviewing pathways to and risk factors for radicalisation, theoretical explanations as to why youth may become radicalised and recommended intervention approaches and examples in six overlapping arenas (family, school, prison, community, internet and government); the review concludes that trauma-informed approaches across the six interacting systems are required. | The authors offered a chapter-length overview of reducing terrorism and preventing radicalisation in six overlapping arenas: family, school, prison, community, internet and government (the latter referring to diverse services at the international, national and local levels, depending on the country and region, e.g., resource provision to schools, prisoner aftercare, public–private partnerships, financial support services, internet monitoring and law enforcement). | Identified five arenas overlapping with the digital arena in which interventions should be located (family, school, prison, community and government) and argued that two needed approaches are largely absent: trauma informed and resilience promotion. | Excluded because the testing of an intervention was not reported; specific interventions were simply mentioned as examples. Relevance: the chapter addressed online extremist content, its relationship to trauma and theoretical areas where interventions may take place. QA: not applicable. |
| Tremblay, 2020 [42] | Global/ Extreme right-wing, far-right | Narrative review; non-peer-reviewed editorial | An editorial focussing on the alt-right movement, using the terrorist attacks in Christchurch in 2019 as an example: the attack was “A sign of our digital era and social-mediatized gaze”, having been live streamed on Facebook and widely shared across the virtual community. The development of inclusive habitats, governance, systems and processes were identified as significant goals for health promotion to foster “peaceful, just and inclusive societies which are free from fear, racism, violation and other violence”. | The author provided a very brief high-level analysis focusing on the intersectionality of discrimination and oppression with radicalisation in the digital, political and social spheres. | Argued for multisector partnerships with public mental health promotion approaches to reduce discrimination, oppression and radicalisation in the digital, political and social spheres. | Excluded because the testing of the relevant interventions was not reported. Relevance: the editorial addressed online extremist content and areas within public mental health promotion where interventions may take place. QA: not applicable. |
| Schmitt et al., 2021 [43] | Germany/ Anti-refugee | Between-subjects design; peer-reviewed journal article | The first study examining the effects of two different narrative structures, one-sided (counter only) or two-sided (extremist and counter), using the persuasion technique of narrative involvement operationalised as two different types of protagonists (approachable or distant/neutral). The narrative focused on a controversial topic (how to deal with the number of refugees in Germany) and the effect of each narrative structure on attitude change was measured; participants who read the two-sided narrative showed less reactance; the smaller the reactance, the more they felt involved in the narrative which, in turn, led to more positive attitudes towards refugees; variations in the protagonist failed to show an effect. | The authors drew on findings from the earlier Schmitt et al. (2018) [38] study, theoretical concepts and studies around one-sided versus two-sided narratives, and narrative involvement, to examine the factors involved in persuasiveness. The study measured the manipulations (one- and two-sided narratives; identification with protagonist), attitude change, freedom threat and narrative involvement. There were no control groups, follow-up or behavioural change measures. | Reported less reactance from a two-sided versus one-sided narrative, that is, from a narrative that included an extremist as well as a counter message. Less reactance was accompanied by increasing narrative involvement (measured as transportation into the narrative and identification with the main character) and self-reported positive attitudinal change toward refugees. | Excluded because the testing of an intervention was not reported. Relevance: the article reported a study that could inform an intervention design using counter messaging. It addressed online extremist content, its relationship with user behaviour and attitudinal shift and analyses of the psychological mechanisms involved in mediating the effects of different narrative structures. QA: most of the components were present. |

3.3. Range of Online Content

Type of Online Content across Publications

3.4. Who Accesses Online Content

Target Populations across the Publications

3.5. Online Radicalisation and System-Wide Frameworks

Interactions between Extremist Content and the Online/Offline Space

4. Discussion

4.1. Spatial Formations

4.2. Identity Politics

4.3. Intergenerational Change and Continuity

4.4. Reciprocal Radicalisation

4.5. An Argument for the Development of the Public Mental Health Approach

4.6. Potential Biases and Errors in the Review Process

Statement of Bias and Reflexivity

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Quality Assurance: Qualitative Data (Bouzar and Laurent [40]) | |
|---|---|
| 1. Epistemological position. Did the authors provide philosophical grounding for what has been done and why? e.g., post positivist; realist; interpretative; and constructivist. | Implicitly, through the insistence on a multidisciplinary approach, which inherently involved different epistemologies, i.e., social, psychological, psychoanalytical, geopolitical and religious. |
| 2. Was bias addressed, if so, how? | Implicitly, through the need to have social, psychological, psychoanalytical, geopolitical and religious analyses (i.e., a multidisciplinary analysis) to understand the radicalisation stages that lead to a commitment to extremist violence. |
| 3. Was it ensured that “valid” and “credible” accounts were given that reflect an accurate portrayal of the social reality? How? | Yes, with quotes from the semi-structured interviews. |
| 4. Was there a quality assurance checklist? | Not stated. |
| 5. Was there ongoing quality assurance, e.g., fieldnotes and field diaries? | Not stated. |
| 6. Reflexivity of researcher’s position: was there transparency in the decisions made and the assumptions held? | Implied through the multidisciplinarity but not stated. |
| 7. Comprehensiveness of approach: was the study systematically designed, conducted and analysed? | NA: retrospective case study. |

| Quality Assurance: Quantitative Data | Schmitt et al. [38] | Rusnalasari et al. [39] | Schmitt et al. [43] |
|---|---|---|---|
| Why was the study conducted? (Is it a clearly focused question that addresses population, intervention and outcomes?) | Yes | Yes | Yes |
| What type of study? (Does the study design match the question asked? Intervention questions are best answered with randomised controlled trials. Is it an RCT?) | Yes | Yes | Yes |
| What are the study characteristics? (Can this be answered by PICO: Population, Intervention, Control, Outcome?) | Yes | Yes | Yes |
| Was bias addressed? What was done to address bias? | Yes | No | No |
| Are the results valid? (i.e., treatment effect, p-value) | Yes | No | Yes |
| Can clear conclusions be made? (Can the results be generalised?) | Within stated limitations | Cannot tell | Within stated limitations |

References

1. Gill, P.; Clemmow, C.; Hetzel, F.; Rottweiler, B.; Salman, N.; Van Der Vegt, I.; Corner, E. Systematic review of mental health problems and violent extremism. J. Forensic Psychiatry Psychol. 2021, 32, 51–78.

2. Pathé, M.T.; Haworth, D.J.; Goodwin, T.A.; Holman, A.G.; Amos, S.J.; Winterbourne, P.; Day, L. Establishing a joint agency response to the threat of lone-actor grievance-fuelled violence. J. Forensic Psychiatry Psychol. 2018, 29, 37–52.

3. Augestad Knudsen, R. Measuring radicalisation: Risk assessment conceptualisations and practice in England and Wales. Behav. Sci. Terror. Political Aggress. 2020, 12, 37–54.

4. Bhui, K. Flash, the emperor and policies without evidence: Counter-terrorism measures destined for failure and societally divisive. BJPsych Bull. 2016, 40, 82–84.

5. Summerfield, D. Mandating doctors to attend counter-terrorism workshops is medically unethical. BJPsych Bull. 2016, 40, 87–88.

6. Hurlow, J.; Wilson, S.; James, D.V. Protesting loudly about Prevent is popular but is it informed and sensible? BJPsych Bull. 2016, 40, 162–163.

7. Koehler, D. Family counselling, de-radicalization and counter-terrorism: The Danish and German programs in context. In Countering Violent Extremism: Developing an Evidence-Base for Policy and Practice; Hedaya and Curtin University: Perth, West Australia, 2015; pp. 129–138.

8. NIHR Conceptual framework for Public Mental Health. Available online: https://www.publicmentalhealth.co.uk (accessed on 13 January 2023).

9. DeMarinis, V. Public Mental Health Promotion in a Public Health Paradigm as a Framework for Countering Violent Extremism; Cambridge Scholars Publishing: Newcastle upon Tyne, UK, 2018.

10. Hogg, M.A. Uncertain self in a changing world: A foundation for radicalisation, populism, and autocratic leadership. Eur. Rev. Soc. Psychol. 2021, 32, 235–268.

11. Bakir, V. Psychological operations in digital political campaigns: Assessing Cambridge Analytica’s psychographic profiling and targeting. Front. Commun. 2020, 5, 67.

12. Bello, W.F. Counterrevolution: The Global Rise of the Far Right; Fernwood Publishing: Halifax, NS, Canada, 2019.

13. Moskalenko, S.; González, J.F.G.; Kates, N.; Morton, J. Incel ideology, radicalization and mental health: A survey study. J. Intell. Confl. Warf. 2022, 4, 1–29.

14. Marwick, A.; Clancy, B.; Furl, K. Far-Right Online Radicalization: A Review of the Literature. Bull. Technol. Public Life 2022.

15. Nilan, P. Young People and the Far Right; Palgrave Macmillan: Singapore, 2021.

16. Pohl, E.; Riesmeyer, C. See No Evil, Fear No Evil: Adolescents’ Extremism-related Media Literacies of Islamist Propaganda on Instagram. J. Deradicalization 2023, 34, 50–84.

17. Boucher, V. Down the TikTok Rabbit Hole: Testing the TikTok Algorithm’s Contribution to Right Wing Extremist Radicalization. Ph.D. Thesis, Queen’s University, Kingston, ON, Canada, 2022.

18. Isaak, J.; Hanna, M.J. User data privacy: Facebook, Cambridge Analytica, and privacy protection. Computer 2018, 51, 56–59.

19. Bhui, K.S.; Hicks, M.H.; Lashley, M.; Jones, E. A public health approach to understanding and preventing violent radicalization. BMC Med. 2012, 10, 16.

20. Rousseau, C.; Aggarwal, N.K.; Kirmayer, L.J. Radicalization to violence: A view from cultural psychiatry. Transcult. Psychiatry 2021, 58, 603–615.

21. Aggarwal, N.K. Questioning the current public health approach to countering violent extremism. Glob. Public Health 2019, 14, 309–317.

22. Derfoufi, Z. Radicalization’s Core. Terror. Political Violence 2022, 34, 1185–1206.

23. Fledderus, M.; Bohlmeijer, E.T.; Smit, F.; Westerhof, G.J. Mental health promotion as a new goal in public mental health care: A randomized controlled trial of an intervention enhancing psychological flexibility. Am. J. Public Health 2010, 100, 2372.

24. Boyd-McMillan, E.; DeMarinis, V. Learning Passport: Curriculum Framework (IC-ADAPT SEL High Level Programme Design); Cambridge University Press & Cambridge Assessment: Cambridge, UK, 2020.

25. Bailey, G.M.; Edwards, P. Rethinking ‘radicalisation’: Microradicalisations and reciprocal radicalisation as an intertwined process. J. Deradicalization 2017, 10, 255–281.

26. Gianmarco, T.; Nardi, A. Vaccine hesitancy in the era of COVID-19. Public Health 2021, 194, 245–251.

27. Roose, J.M.; Cook, J. Supreme men, subjected women: Gender inequality and violence in jihadist, far right and male supremacist ideologies. Stud. Confl. Terror. 2022, 1–29.

28. Kruglanski, A.W.; Gunaratna, R.; Ellenberg, M.; Speckhard, A. Terrorism in time of the pandemic: Exploiting mayhem. Glob. Secur. Health Sci. Policy 2020, 5, 121–132.

29. Ackerman, G.; Peterson, H. Terrorism and COVID-19. Perspect. Terror. 2020, 14, 59–73.

30. Collins, J. Mobilising Extremism in Times of Change: Analysing the UK’s Far-Right Online Content During the Pandemic. Eur. J. Crim. Policy Res. 2023, 1–23.

31. Bramer, W.M.; Rethlefsen, M.L.; Kleijnen, J.; Franco, O.H. Optimal database combinations for literature searches in systematic reviews: A prospective exploratory study. Syst. Rev. 2017, 6, 245.

32. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. Int. J. Surg. 2021, 88, 105906.

33. Aggarwal, R.; Ranganathan, P. Study designs: Part 4–interventional studies. Perspect. Clin. Res. 2019, 10, 137.

34. Yaffe, J.; Montgomery, P.; Hopewell, S.; Shepard, L.D. Empty reviews: A description and consideration of Cochrane systematic reviews with no included studies. PLoS ONE 2012, 7, e36626.

35. Reynolds, J.; Kizito, J.; Ezumah, N.; Mangesho, P.; Allen, E.; Chandler, C. Quality assurance of qualitative research: A review of the discourse. Health Res. Policy Syst. 2011, 9, 43.

36. Oliveras, I.; Losilla, J.M.; Vives, J. Methodological quality is underrated in systematic reviews and meta-analyses in health psychology. J. Clin. Epidemiol. 2017, 86, 59–70.

37. Stroup, D.F.; Berlin, J.A.; Morton, S.C.; Olkin, I.; Williamson, G.D.; Rennie, D.; Moher, D.; Becker, B.J.; Sipe, T.A.; Thacker, S.B. Meta-analysis of observational studies in epidemiology: A proposal for reporting. JAMA 2000, 283, 2008–2012.

38. Schmitt, J.B.; Rieger, D.; Rutkowski, O.; Ernst, J. Counter-messages as prevention or promotion of extremism?! The potential role of YouTube: Recommendation algorithms. J. Commun. 2018, 68, 780–808.

39. Rusnalasari, Z.D.; Algristian, H.; Alfath, T.P.; Arumsari, A.D.; Inayati, I. Students vulnerability and literacy analysis terrorism ideology prevention. In Journal of Physics: Conference Series; IOP Publishing: Bristol, UK, 2018; Volume 1028, No. 1; p. 012089.

40. Bouzar, D.; Laurent, G. The importance of interdisciplinarity to deal with the complexity of the radicalization of a young person. In Annales Medico-Psychologiques; Elsevier: Paris, France, 2019; Volume 177, No. 7; pp. 663–674.

41. Siegel, A.; Brickman, S.; Goldberg, Z.; Pat-Horenczyk, R. Preventing future terrorism: Intervening on youth radicalization. In An International Perspective on Disasters and Children’s Mental Health; Springer: Geneva, Switzerland, 2019; pp. 391–418.

42. Tremblay, M.C. The wicked interplay of hate rhetoric, politics and the internet: What can health promotion do to counter right-wing extremism? Health Promot. Int. 2020, 35, 1–4.

43. Schmitt, J.B.; Caspari, C.; Wulf, T.; Bloch, C.; Rieger, D. Two sides of the same coin? The persuasiveness of one-sided vs. two-sided narratives in the context of radicalization prevention. SCM Stud. Commun. Media 2021, 10, 48–71.

44. Krieger, N. Discrimination and health inequities. Int. J. Health Serv. Plan. Adm. Eval. 2014, 44, 643–710.

45. WHO Commission on Social Determinants of Health; World Health Organization. Closing the Gap in a Generation: Health Equity through Action on the Social Determinants of Health: Commission on Social Determinants of Health Final Report; World Health Organization: Geneva, Switzerland, 2008.

46. Wilkinson, R.; Pickett, K. The spirit level. In Why Equality Is Better for Everyone; Penguin: London, UK, 2010.

47. Allen, C.E. Threat of Islamic Radicalization to the Homeland, Testimony before the US Senate Committee on Homeland Security and Government Affairs; Senate Committee on Homeland Security and Governmental Affairs: Washington, DC, USA, 2007.

48. United Nations General Assembly. 59th Session, A/59/565. Available online: http://hrlibrary.umn.edu/instree/report.pdf (accessed on 19 March 2023).

49. Braddock, K.; Dillard, J.P. Meta-analytic evidence for the persuasive effect of narratives on beliefs, attitudes, intentions, and behavior. Commun. Monogr. 2016, 83, 446–467.

50. Cohen, J. Defining identification: A theoretical look at the identification of audiences with media characters. Mass Commun. Soc. 2001, 4, 245–264.

51. Moyer-Gusé, E.; Jain, P.; Chung, A.H. Reinforcement or reactance? Examining the effect of an explicit persuasive appeal following an entertainment-education narrative. J. Commun. 2013, 62, 1010–1027.

52. Jonathan, C. Audience identification with media characters. In Psychology of Entertainment; Routledge: Milton, UK, 2013; pp. 183–197.

53. Green, M.C.; Brock, T.C. The role of transportation in the persuasiveness of public narratives. J. Personal. Soc. Psychol. 2000, 79, 701–721.

54. Allen, M. Meta-analysis comparing the persuasiveness of one-sided and two-sided messages. West. J. Speech Commun. 1991, 55, 390–404.

55. Hovland, C.I.; Lumsdaine, A.A.; Sheffield, F.D. Experiments on Mass Communication (Studies in Social Psychology in World War II, Vol. 3.); Princeton University Press: Princeton, NJ, USA, 1948.

56. Gregory, A.L.; Piff, P.K. Finding uncommon ground: Extremist online forum engagement predicts integrative complexity. PLoS ONE 2021, 16, e0245651.

57. Robinson, N.; Whittaker, J. Playing for Hate? Extremism, terrorism, and videogames. Stud. Confl. Terror. 2020, 1–36.

58. Zhao, N.; Zhou, G. Social media use and mental health during the COVID-19 pandemic: Moderator role of disaster stressor and mediator role of negative affect. Appl. Psychol. Health Well-Being 2020, 12, 1019–1038.

59. Leong, L.Y.; Hew, T.S.; Ooi, K.B.; Lee, V.H.; Hew, J.J. A hybrid SEM-neural network analysis of social media addiction. Expert Syst. Appl. 2019, 133, 296–316.

60. Tutgun-Ünal, A.; Deniz, L. Development of the social media addiction scale. AJIT-E Bilişim Teknol. Online Derg. 2015, 6, 51–70.

61. Garcia, M.B.; Juanatas, I.C.; Juanatas, R.A. TikTok as a Knowledge Source for Programming Learners: A New Form of Nanolearning? In Proceedings of the 2022 10th International Conference on Information and Education Technology (ICIET), Matsue, Japan, 9–11 April 2022; IEEE: Danvers, MA, USA, 2022; pp. 219–223.

62. Duflos, M.; Giraudeau, C. Using the intergenerational solidarity framework to understand the grandparent–grandchild relationship: A scoping review. Eur. J. Ageing 2021, 19, 233–262.

63. Cetrez, Ö.; DeMarinis, V.; Pettersson, J.; Shakra, M. Integration: Policies, Practices, and Experiences, Sweden Country Report. Working papers Global Migration: Consequences and Responses; EU Horizon 2020 Country Report for RESPOND project; Uppsala University: Uppsala, Sweden, 2020.

64. Whiting, P.; Savović, J.; Higgins, J.P.; Caldwell, D.M.; Reeves, B.C.; Shea, B.; Davies, P.; Kleijnen, J.; Churchill, R.; ROBIS Group. ROBIS: A new tool to assess risk of bias in systematic reviews was developed. J. Clin. Epidemiol. 2016, 69, 225–234.

65. Wren-Lewis, S.; Alexandrova, A. Mental health without well-being. In The Journal of Medicine and Philosophy: A Forum for Bioethics and Philosophy of Medicine; Oxford University Press: New York, NY, USA, 2021; Volume 46, No. 6; pp. 684–703.

66. Westerhof, G.J.; Keyes, C.L.M. Mental Illness and Mental Health: The Two Continua Model Across the Lifespan. J. Adult Dev. 2010, 17, 110–119.

| Database | Extremism Keywords | Online Keywords | Intervention Keywords | n |
|---|---|---|---|---|
| Medline via OVID | (“Radical Islam*” OR “Islamic Extrem*” OR Radicali* OR “Homegrown Terror*” OR “Homegrown Threat*” OR “Violent Extrem*” OR Jihad* OR Indoctrinat* OR Terrori* OR “White Supremacis* †” OR Neo-Nazi OR “Right-wing Extrem*” OR “Left-wing Extrem*” OR “Religious Extrem*” OR Fundamentalis* OR Anti-Semitis* OR Nativis* OR Islamophob* OR Eco-terror* OR “Al Qaida-inspired” OR “ISIS-inspired” OR Anti-Capitalis*).ti,ab ‡. OR terrorism/ | (“CYBERSPACE” OR “TELECOMMUNICATION systems” OR “INFORMATION technology “ OR “INTERNET” OR “VIRTUAL communit*” OR “ELECTRONIC discussion group*” OR “social media” OR “social networking” OR online OR bebo OR facebook OR nstagram OR linkedin OR meetup OR pinterest OR reddit OR snapchat OR tumblr OR xing OR twitter OR yelp OR youtube OR TikTok OR gab OR odysee OR telegram OR clubhouse OR BeReal OR Twitter OR WhatsApp OR WeChat OR “Sina Weibo” OR 4Chan).ti,ab. OR internet/ OR social media/ OR online social networking/ | (“Public mental health” OR “care in the community” OR “mental health service*” OR “educational service*” OR “social service*” OR “public service partnership*” OR “primary care referral” OR “referral pathways” OR “clinical program*” OR “health promotion” OR prevention).ti,ab. OR community mental health services/ OR health promotion/ | 11 |
| PsycInfo via Ebscohost | TI (“Radical Islam*” OR “Islamic Extrem*” OR Radicali* OR “Homegrown Terror*” OR “Homegrown Threat*” OR “Violent Extrem*” OR Jihad* OR Indoctrinat* OR Terrori* OR “White Supremacis*” OR Neo-Nazi OR “Right-wing Extrem*” OR “Left-wing Extrem*” OR “Religious Extrem*” OR Fundamentalis* OR Anti-Semitis* OR Nativis* OR Islamophob* OR Eco-terror* OR “Al Qaida-inspired” OR “ISIS-inspired” OR Anti-Capitalis*) OR AB (“Radical Islam*” OR “Islamic Extrem*” OR Radicali* OR “Homegrown Terror*” OR “Homegrown Threat*” OR “Violent Extrem*” OR Jihad* OR Indoctrinat* OR Terrori* OR “White Supremacis*” OR Neo-Nazi OR “Right-wing Extrem*” OR “Left-wing Extrem*” OR “Religious Extrem*” OR Fundamentalis* OR Anti-Semitis* OR Nativis* OR Islamophob* OR Eco-terror* OR “Al Qaida-inspired” OR “ISIS-inspired” OR Anti-Capitalis*) OR (DE “Terrorism”) OR (DE “Extremism”) | TI (“CYBERSPACE” OR “TELECOMMUNICATION systems” OR “INFORMATION technology “ OR “INTERNET” OR “VIRTUAL communit*” OR “ELECTRONIC discussion group*” OR “social media” OR “social networking” OR online OR bebo OR facebook OR nstagram OR linkedin OR meetup OR pinterest OR reddit OR snapchat OR tumblr OR xing OR twitter OR yelp OR youtube OR TikTok OR gab OR odysee OR telegram OR clubhouse OR BeReal OR Twitter OR WhatsApp OR WeChat OR “Sina Weibo” OR 4Chan) OR AB (“CYBERSPACE” OR “TELECOMMUNICATION systems” OR “INFORMATION technology “ OR “INTERNET” OR “VIRTUAL communit*” OR “ELECTRONIC discussion group*” OR “social media” OR “social networking” OR online OR bebo OR facebook OR nstagram OR linkedin OR meetup OR pinterest OR reddit OR snapchat OR tumblr OR xing OR twitter OR yelp OR youtube OR TikTok OR gab OR odysee OR telegram OR clubhouse OR BeReal OR Twitter OR WhatsApp OR WeChat OR “Sina Weibo” OR 4Chan) OR (DE “Internet”) OR (DE “Social Media”) OR (DE “Online Social Networks”) | TI (“Public mental health” OR “care in the community” OR “mental health service*” OR “educational service*” OR “social service*” OR “public service partnership*” OR “primary care referral” OR “referral pathways” OR “clinical program*” OR “health promotion” OR prevention) OR AB (“Public mental health” OR “care in the community” OR “mental health service*” OR “educational service*” OR “social service*” OR “public service partnership*” OR “primary care referral” OR “referral pathways” OR “clinical program*” OR “health promotion” OR prevention) OR DE “Public Mental Health” OR DE “Mental Health Services” OR DE “Social Services” OR DE “Health Promotion” AND DE “Prevention” OR DE “Preventive Health Services” OR DE “Preventive Mental Health Services” | 27 |
| Web of Science (Core Collection) | TS = (“Radical Islam*” OR “Islamic Extrem*” OR Radicali* OR “Homegrown Terror*” OR “Homegrown Threat*” OR “Violent Extrem*” OR Jihad* OR Indoctrinat* OR Terrori* OR “White Supremacis*” OR Neo-Nazi OR “Right-wing Extrem*” OR “Left-wing Extrem*” OR “Religious Extrem*” OR Fundamentalis* OR Anti-Semitis* OR Nativis* OR Islamophob* OR Eco-terror* OR “Al Qaida-inspired” OR “ISIS-inspired” OR Anti-Capitalis*) | TS = (“CYBERSPACE” OR “TELECOMMUNICATION systems” OR “INFORMATION technology” OR “INTERNET” OR “VIRTUAL communit*” OR “ELECTRONIC discussion group*” OR “social media” OR “social networking” OR online OR bebo OR facebook OR nstagram OR linkedin OR meetup OR pinterest OR reddit OR snapchat OR tumblr OR xing OR twitter OR yelp OR youtube OR TikTok OR gab OR odysee OR telegram OR clubhouse OR BeReal OR Twitter OR WhatsApp OR WeChat OR “Sina Weibo” OR 4Chan) | TS = (“Public mental health” OR “care in the community” OR “mental health service*” OR “educational service*” OR “social service*” OR “public service partnership*” OR “primary care referral” OR “referral pathways” OR “clinical program*” OR “health promotion” OR prevention) | 90 |
| Cochrane Library | Radical*, Extrem*, Terrorism, Neo Nazi, terror*, homegrown, jihad, indoctrin*, supremacis*, right wing, left wing, religious, fundamentalis*, anti-semeti*, nativis*, Islam*, Al-Qaida, ISIS, Anti-capitalis* | | | 4 |
| Total | | | | 132 |

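The search strategy table groups keywords into three concept blocks (extremism, online, intervention) and reports a hit count per database. A minimal Python sketch of how such blocks might be assembled into one Boolean string is given below. It is illustrative only: the function names `or_block` and `build_query` are hypothetical, the shortened term lists are a small subset of the published strings, and joining the three blocks with AND is an assumption (common practice for multi-concept searches, but not stated in the table). The hit counts are copied from the table.

```python
from typing import Iterable


def or_block(terms: Iterable[str]) -> str:
    """Join the terms of one concept with OR, quoting multi-word phrases."""
    parts = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(parts) + ")"


def build_query(*blocks: Iterable[str]) -> str:
    """Combine concept blocks with AND (assumed, not stated in the source)."""
    return " AND ".join(or_block(b) for b in blocks)


# Shortened, illustrative subsets of the three keyword columns.
extremism = ["Radicali*", "Violent Extrem*", "Terrori*"]
online = ["social media", "online", "youtube"]
intervention = ["Public mental health", "health promotion", "prevention"]

query = build_query(extremism, online, intervention)
print(query)

# Hit counts per database, as reported in the table.
hits = {
    "Medline via OVID": 11,
    "PsycInfo via Ebscohost": 27,
    "Web of Science (Core Collection)": 90,
    "Cochrane Library": 4,
}
total = sum(hits.values())
print(total)  # 132, matching the Total row
```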
Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mughal, R.; DeMarinis, V.; Nordendahl, M.; Lone, H.; Phillips, V.; Boyd-MacMillan, E. Public Mental Health Approaches to Online Radicalisation: An Empty Systematic Review. Int. J. Environ. Res. Public Health 2023, 20, 6586. https://doi.org/10.3390/ijerph20166586
