The Eyewitness Community Survey: An Engaging Citizen Science Tool to Capture Reliable Data while Improving Community Participants’ Environmental Health Knowledge and Attitudes

Abstract

1. Introduction

2. Materials and Methods

2.1. Overall Design

2.2. Creation of and Training to Use the ECS

2.3. Observations Made with the ECS

2.4. ECS and Focus Group Participants

2.5. Statistical Analysis

3. Results

3.1. Study Participants

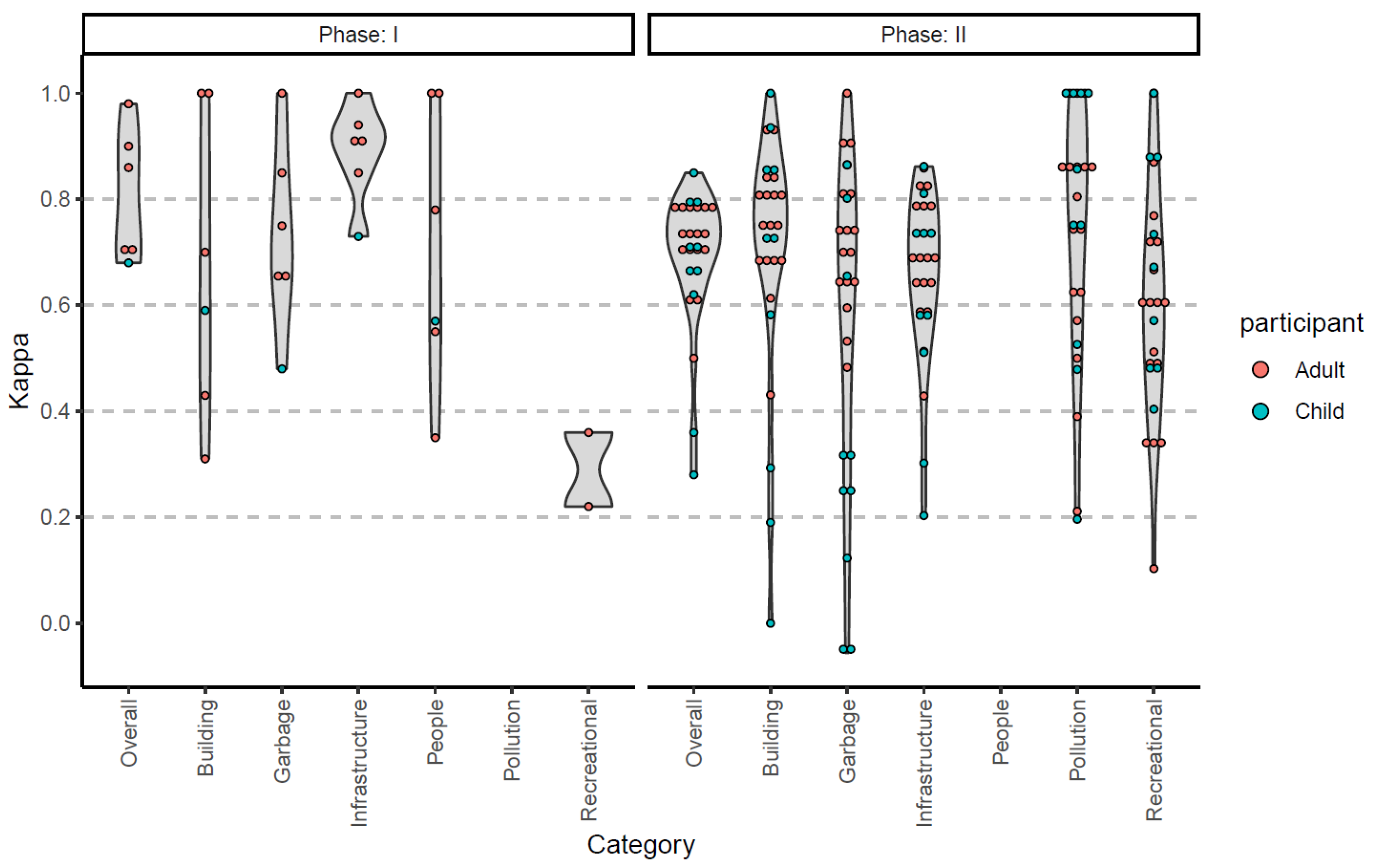

3.2. Intra-Rater Reliability

3.3. Inter-Rater Reliability

3.4. Impact on Participants’ Knowledge and Attitudes

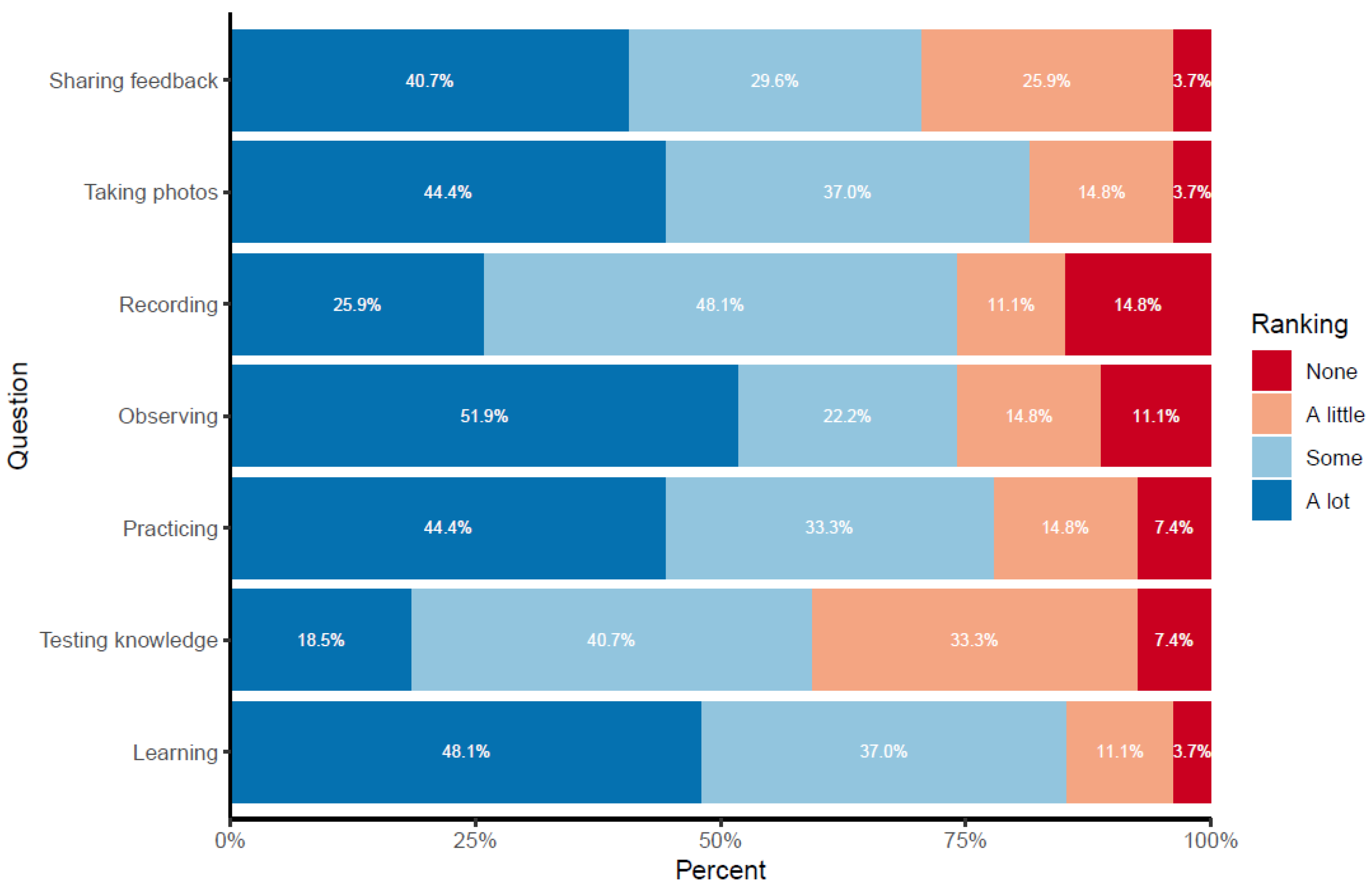

3.5. Phase II Participants’ Perceptions about the ECS

“Another great thing about citizen science is that you don’t have to have a degree or have to come from a certain background, just be a member of the community and you have to take interest and try to improve your own health and the health of the community, gives you an opportunity just to be more engaged.”

“… because you can use the same skills and methods to help your own community and yourself. Like by doing this citizen science you can like doesn’t have to be exact project we did but you could still use those skills in helping your community.”

“The video stories were fun to watch but we basically learnt the most from the practice surveys [modules] in the beginning.”

“In the surveys, there are a lot of like options while you’re answering the questions to click on pictures [for help in how to interpret the question] and that was really helpful to me.”

“The first time I did the ECS I was by myself and like by the 4th and 5th observation, I was kind of tired of it. But when I did it this time with my partner, cause we were like we were talking and you know, hanging out in between the ECS as we walked to a different locations and I didn’t feel the same fatigue with it. I actually enjoyed it.”

“For me, in the program kind of my personal goals were to like focus more on like looking at my community, like different parts of it, like the good and the bad of it.”

“The reason I did it was because when I started doing it, I was in quarantine from school and so I was pretty bored and so gave me a nice thing to do.”

“I’m personally interested in medicine…, and I can see myself using those insights to think about like if someone comes into a doctor’s office, not just what information is immediately available. Like if they come in with complaints about asthma, and you are treating them, there might be more to think about in terms of where they live and what their community is like. Those insights might be useful to me.”

“I’m a first year college student so I came kind of undecided my first semester and now I’m applying to the environmental public health major. It has influenced the way that I want to pursue a career in health care, but also, knowing how the environment affects our health. I think from doing these, being a part of this project, it has helped me see how the community effects our health and that is something very important to take into account when seeing a patient or someone who goes to seek care.”

“I kind of have a new appreciation for planning and like urban planning for communities. Like I never knew the amount of trash cans directly affect someone’s health so if there’s not enough trash cans and people start littering, that can go in the water which can affect people’s health like I just thought it was super interesting how it all connects one way or another to one person’s health.”

“I definitely took notice of all the privileges that I have in my own community because I live in a place where there’s like stores and parks and all of that stuff and I know that some people don’t have that.”

4. Discussion

4.1. Limitations

4.2. Considerations for Future Use of the ECS

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Pruss-Ustun, A.; Wolf, J.; Corvalan, C.; Neville, T.; Bos, R.; Neira, M. Diseases due to unhealthy environments: An updated estimate of the global burden of disease attributable to environmental determinants of health. J. Public Health 2017, 39, 464–475.

- Crowley, R.; Mathew, S.; Hilden, D.; Health and Public Policy Committee of the American College of Physicians. Environmental Health: A Position Paper From the American College of Physicians. Ann. Intern. Med. 2022, 175, 1591–1593.

- Gura, T. Citizen science: Amateur experts. Nature 2013, 496, 259–261.

- Albagli, S.; Iwama, A. Citizen science and the right to research: Building local knowledge of climate change impacts. Humanit. Soc. Sci. Commun. 2022, 9, 39.

- Aylward, B.L.; Milford, K.M.; Storey, K.E.; Nykiforuk, C.I.J.; Raine, K.D. Citizen science in monitoring food environments: A qualitative collective case study of stakeholders’ experiences during the Local Environment Action on Food project in Alberta, Canada. BMC Public Health 2022, 22, 665.

- Asingizwe, D.; Poortvliet, P.M.; van Vliet, A.J.H.; Koenraadt, C.J.M.; Ingabire, C.M.; Mutesa, L.; Leeuwis, C. What do people benefit from a citizen science programme? Evidence from a Rwandan citizen science programme on malaria control. Malar. J. 2020, 19, 283.

- Receveur, A.; Poulet, L.; Dalmas, B.; Gonçalves, B.; Vernay, A. Citizen science: How to extend reciprocal benefits from the project community to the broader socio-ecological system. Quant. Plant Biol. 2022, 3, e20.

- Mori, A.S.; Suzuki, K.F.; Hori, M.; Kadoya, T.; Okano, K.; Uraguchi, A.; Muraoka, H.; Sato, T.; Shibata, H.; Suzuki-Ohno, Y.; et al. Perspective: Sustainability challenges, opportunities and solutions for long-term ecosystem observations. Philos. Trans. R. Soc. Lond. B Biol. Sci. 2023, 378, 20220192.

- Marks, L.; Laird, Y.; Trevena, H.; Smith, B.J.; Rowbotham, S. A Scoping Review of Citizen Science Approaches in Chronic Disease Prevention. Front. Public Health 2022, 10, 743348.

- Eisen, L.; Eisen, R.J. Benefits and Drawbacks of Citizen Science to Complement Traditional Data Gathering Approaches for Medically Important Hard Ticks (Acari: Ixodidae) in the United States. J. Med. Entomol. 2021, 58, 1–9.

- English, P.B.; Richardson, M.J.; Garzón-Galvis, C. From Crowdsourcing to Extreme Citizen Science: Participatory Research for Environmental Health. Annu. Rev. Public Health 2018, 39, 335–350.

- Bonney, R.; Phillips, T.B.; Ballard, H.L.; Enck, J.W. Can citizen science enhance public understanding of science? Public Underst. Sci. 2016, 25, 2–16.

- Carson, S.; Rock, J.; Smith, J. Sediments and Seashores—A Case Study of Local Citizen Science Contributing to Student Learning and Environmental Citizenship. Front. Educ. 2021, 6, 674883.

- Peter, M.; Diekotter, T.; Kremer, K.; Hoffler, T. Citizen science project characteristics: Connection to participants’ gains in knowledge and skills. PLoS ONE 2021, 16, e0253692.

- Liñán, S.; Salvador, X.; Álvarez, A.; Comaposada, A.; Sanchez, L.; Aparicio, N.; Rodero, I.; Piera, J. A new theoretical engagement framework for citizen science projects: Using a multi-temporal approach to address long-term public engagement challenges. Environ. Res. Lett. 2022, 17, 105006.

- Walker, D.W.; Smigaj, M.; Tani, M. The benefits and negative impacts of citizen science applications to water as experienced by participants and communities. WIREs Water 2021, 8, e1488.

- Land-Zandstra, A.; Agnello, G.; Gültekin, Y.S. Participants in Citizen Science. In The Science of Citizen Science; Vohland, K., Land-Zandstra, A., Ceccaroni, L., Lemmens, R., Perelló, J., Ponti, M., Samson, R., Wagenknecht, K., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 243–259.

- Martin, L.J.; Hill, V.; Maples, C.; Baker, T.; Elshaer, S.; Butsch Kovacic, M. Shared Purpose: Leveraging a Community Academic Partnership to Increase Local Environmental Health Awareness via Community Science. J. Particip. Res. Methods 2022, 3, 38475.

- We Engage 4 Health—Environmental Community Survey. Available online: https://weengage4health.life/ecs/ (accessed on 3 January 2023).

- Butsch Kovacic, M.; Gertz, S.E. Leveraging Stories to Promote Health and Prevent Cancer. Cancer Prev. Res. 2022, 15, 419–422.

- Harris, P.A.; Taylor, R.; Minor, B.L.; Elliott, V.; Fernandez, M.; O’Neal, L.; McLeod, L.; Delacqua, G.; Delacqua, F.; Kirby, J.; et al. The REDCap consortium: Building an international community of software platform partners. J. Biomed. Inform. 2019, 95, 103208.

- Harris, P.A.; Taylor, R.; Thielke, R.; Payne, J.; Gonzalez, N.; Conde, J.G. Research electronic data capture (REDCap)--a metadata-driven methodology and workflow process for providing translational research informatics support. J. Biomed. Inform. 2009, 42, 377–381.

- We Engage 4 Health—Citizen Science RAP. Available online: https://weengage4health.life/program/citizen-science-rap-sessions-cs-rap/ (accessed on 3 January 2023).

- McHugh, M.L. Interrater reliability: The kappa statistic. Biochem. Med. 2012, 22, 276–282.

- Bonney, R.; Shirk, J.L.; Phillips, T.B.; Wiggins, A.; Ballard, H.L.; Miller-Rushing, A.J.; Parrish, J.K. Citizen science. Next steps for citizen science. Science 2014, 343, 1436–1437.

- Jackel, D.; Mortega, K.G.; Darwin, S.; Brockmeyer, U.; Sturm, U.; Lasseck, M.; Moczek, N.; Lehmann, G.U.C.; Voigt-Heucke, S.L. Community engagement and data quality: Best practices and lessons learned from a citizen science project on birdsong. J. Ornithol. 2023, 164, 233–244.

- Danielsen, F.; Jensen, P.M.; Burgess, N.D.; Altamirano, R.; Alviola, P.A.; Andrianandrasana, H.; Brashares, J.S.; Burton, A.C.; Coronado, I.; Corpuz, N.; et al. A Multicountry Assessment of Tropical Resource Monitoring by Local Communities. BioScience 2014, 64, 236–251.

- Collins, S.A.; Sullivan, M.; Bray, H.J. Exploring scientists’ perceptions of citizen science for public engagement with science. J. Sci. Commun. 2022, 7, 21070201.

- Kosmala, M.; Wiggins, A.; Swanson, A.; Simmons, B. Assessing data quality in citizen science. Front. Ecol. Environ. 2016, 14, 551–560.

- Ozana, S.; Burda, M.; Hykel, M.; Malina, M.; Prasek, M.; Barta, D.; Dolny, A. Dragonfly Hunter CZ: Mobile application for biological species recognition in citizen science. PLoS ONE 2019, 14, e0210370.

- Garbarino, J.; Mason, C.E. The Power of Engaging Citizen Scientists for Scientific Progress. J. Microbiol. Biol. Educ. 2016, 17, 7–12.

- Makuch, K.E.; Aczel, M.R. Children and citizen science. In Citizen Science; Hecker, S., Haklay, M., Bowser, A., Makuch, Z., Vogel, J., Bonn, A., Eds.; UCL Press: London, UK, 2018; pp. 391–409.

- Rosas, L.G.; Rodriguez Espinosa, P.; Montes Jimenez, F.; King, A.C. The Role of Citizen Science in Promoting Health Equity. Annu. Rev. Public Health 2022, 43, 215–234.

- Varaden, D.; Leidland, E.; Lim, S.; Barratt, B. “I am an air quality scientist”—Using citizen science to characterise school children’s exposure to air pollution. Environ. Res. 2021, 201, 111536.

- Grootjans, S.J.M.; Stijnen, M.M.N.; Kroese, M.; Ruwaard, D.; Jansen, I. Citizen science in the community: Gaining insight in community and participant health in four deprived neighbourhoods in the Netherlands. Health Place 2022, 75, 102798.

- Allf, B.C.; Cooper, C.B.; Larson, L.R.; Dunn, R.R.; Futch, S.E.; Sharova, M.; Cavalier, D. Citizen Science as an Ecosystem of Engagement: Implications for Learning and Broadening Participation. Bioscience 2022, 72, 651–663.

- Appenfeller, L.R.; Lloyd, S.; Szendrei, Z. Citizen science improves our understanding of the impact of soil management on wild pollinator abundance in agroecosystems. PLoS ONE 2020, 15, e0230007.

- Cohn, N. Your Brain on Comics: A Cognitive Model of Visual Narrative Comprehension. Top. Cogn. Sci. 2020, 12, 352–386.

- Roche, J.; Bell, L.; Galvao, C.; Golumbic, Y.N.; Kloetzer, L.; Knoben, N.; Laakso, M.; Lorke, J.; Mannion, G.; Massetti, L.; et al. Citizen Science, Education, and Learning: Challenges and Opportunities. Front. Sociol. 2020, 5, 613814.

- Sandhaus, S.; Kaufmann, D.; Ramirez-Andreotta, M. Public Participation, Trust and Data Sharing: Gardens as Hubs for Citizen Science and Environmental Health Literacy Efforts. Int. J. Sci. Educ. B Commun. Public Engagem. 2019, 9, 54–71.

- Brickle, M.B.; Evans-Agnew, R. Photovoice and Youth Empowerment in Environmental Justice Research: A Pilot Study Examining Woodsmoke Pollution in a Pacific Northwest Community. J. Community Health Nurs. 2017, 34, 89–101.

- Grant, T.; Croce, E.; Matsui, E.C. Asthma and the social determinants of health. Ann. Allergy Asthma Immunol. 2022, 128, 5–11.

- Javed, Z.; Haisum Maqsood, M.; Yahya, T.; Amin, Z.; Acquah, I.; Valero-Elizondo, J.; Andrieni, J.; Dubey, P.; Jackson, R.K.; Daffin, M.A.; et al. Race, Racism, and Cardiovascular Health: Applying a Social Determinants of Health Framework to Racial/Ethnic Disparities in Cardiovascular Disease. Circ. Cardiovasc. Qual. Outcomes 2022, 15, e007917.

- Lebow-Skelley, E.; Scott Tomlinson, M.; Charles, S.; Fuller, C.; Ames, B.; Pearson, M.A. A Collaborative Approach to Address Racism in a Community-Academic Partnership. Prev. Chronic Dis. 2023, 20, E47.

- Wilkins, D.; Schulz, A.J. Antiracist Research and Practice for Environmental Health: Implications for Community Engagement. Environ. Health Perspect. 2023, 131, 55002.

- MacLeod, C.J.; Scott, K. Mechanisms for enhancing public engagement with citizen science results. People Nat. 2021, 3, 32–50.

- Herodotou, C.; Aristeidou, M.; Sharples, M.; Scanlon, E. Designing citizen science tools for learning: Lessons learnt from the iterative development of nQuire. Res. Pract. Technol. Enhanc. Learn. 2018, 13, 4.

- Kreitmair, K.V.; Magnus, D.C. Citizen Science and Gamification. Hastings Cent. Rep. 2019, 49, 40–46.

- Pazzaglia, C.; Camedda, C.; Ugenti, N.V.; Trentin, A.; Scalorbi, S.; Longobucco, Y. Community Health Assessment Tools Adoptable in Nursing Practice: A Scoping Review. Int. J. Environ. Res. Public Health 2023, 20, 1667.

| Characteristic | Phase I | Phase II |
|---|---|---|
| N | 7 | 27 |
| Age, years (mean ± SD) | 17.3 ± 3.3 | 16.8 ± 3.1 |
| Gender, n (% female) | 5 (71.4) | 16 (59.3) |
| Race, n (%) | | |
| Asian | 0 (0) | 7 (25.9) |
| White | 7 (100) | 20 (74.1) |
| Ethnicity, n (% Latino) | 2 (28.6) | 3 (11.1) |
| Education level, n (%) | | |
| 4th–12th grade | 2 (28.6) | 18 (66.7) |
| High School | 4 (57.1) | 6 (22.2) |
| College | 1 (14.3) | 3 (11.1) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Butsch Kovacic, M.; Elshaer, S.; Baker, T.A.; Hill, V.; Morris, E.; Mabisi, K.; Snider, I.; Gertz, S.; Hershberger, S.; Martin, L.J. The Eyewitness Community Survey: An Engaging Citizen Science Tool to Capture Reliable Data while Improving Community Participants’ Environmental Health Knowledge and Attitudes. Int. J. Environ. Res. Public Health 2023, 20, 6374. https://doi.org/10.3390/ijerph20146374
