Abstract

Artificial intelligence (AI) has the potential to either reduce or exacerbate occupational safety and health (OSH) inequities in the workplace, and its impact will be mediated by numerous factors. This paper anticipates challenges to ensuring that the OSH benefits of technological advances are equitably distributed among social groups, industries, job arrangements, and geographical regions. A scoping review was completed to summarize the recent literature on AI’s role in promoting OSH equity. The scoping review was designed around three concepts: artificial intelligence, OSH, and health equity. The search yielded 113 articles relevant for inclusion. The ways in which AI presents barriers and facilitators to OSH equity are outlined along with priority focus areas and best practices in reducing OSH disparities and knowledge gaps. In conclusion, AI’s role in OSH equity is vastly understudied. An urgent need exists for multidisciplinary research that addresses where and how AI is being adopted and evaluated and how its use is affecting OSH across industries, wage categories, and sociodemographic groups. OSH professionals can play a significant role in identifying strategies that ensure the benefits of AI in promoting workforce health and wellbeing are equitably distributed.

1. Introduction

Artificial intelligence (AI) is at the core of the development of “Cyber-Physical Systems” that characterize the current paradigm shift in the world of work known as the Fourth Industrial Revolution [1]. As such, understanding the impact of AI on the health, safety, and wellbeing of workers is essential to the field of occupational safety and health [2]. While not uniformly defined, AI refers to systems built to execute human intellectual processes such as reasoning, identifying meaning, generalizing information, or learning from experience. Employers use numerous applications of AI to streamline business processes and increase worker productivity and safety. Algorithms that make recruiting and hiring decisions are an increasingly common way to assess the fit of job candidates. These algorithms use natural language processing to extract information from resumes to create a database of potential hires [3,4]. Algorithms that rely on facial recognition are used to screen video interviews for a job candidate’s body language, speech cadence, and communication skills [5]. Human resource conversational bots and smart assistants manage onboarding and respond to employee questions, thereby reducing the workload for strained human resource professionals [6]. AI allows for real-time data collection to monitor workers and identify exposure risk [7]. Environmental sensors and biosensors collect data on biological, physical, or chemical changes and convert them into measurable signals that flag early warning signs of occupational disease or distress [8]. Robotics in manufacturing, healthcare, transportation, and logistics, among other industries, rely on AI and sensor technologies to remove human contact from dangerous or risky work tasks [9].
While there is evidence describing the potential of AI to increase productivity and protect the health and safety of workers in different industry settings [10], the ways in which AI either reduces or exacerbates occupational health inequities in the workplace must be explored.

Occupational safety and health (OSH) inequities are avoidable differences in work-related injury and illness incidence, morbidity, and mortality that are closely linked with social, economic, and environmental disadvantage resulting from structural and historical discrimination or exclusion [11]. Social and economic structures can lead to occupational health inequities in a variety of ways, including the overrepresentation of workers from certain social groups in dangerous occupations [12,13], language barriers [14], differential treatment on the job [15], and limited access to resources, including technologies, that help protect workers on the job [16,17]. For example, research suggests that wages, education, race, and place of birth are all associated with OSH outcomes such as employment in high injury/illness occupations, mortality [10], and job insecurity [13]. In addition, U.S.-based studies suggest that workers who are from certain racialized ethnic minority groups, such as Black, American Indian/Alaskan Native, and Hispanic, have a high school degree or less, or are foreign-born are at disproportionate risk for negative OSH outcomes [10,18].

The Artificial Intelligence and Occupational Safety and Health Equity Research Gap

The same social structures that contribute to occupational health inequities influence the development, distribution, and integration of new technologies at work [14]. Understanding and accounting for the influence these exclusionary social structures play in the development and implementation of new technologies is essential in order to reduce their likelihood of aggravating existing occupational health inequities and unlock their potential to mitigate them [14]. The growing presence of AI at work, along with changing workforce demographics, shifting work arrangements, the digital skills gap, and increasing technological job displacement, makes understanding the relationship between AI and occupational health inequities a central question in Future of Work (FoW) research [19]. The FoW initiative was established by the U.S. National Institute for Occupational Safety and Health (NIOSH) in 2019 to prepare OSH professionals to address future workplace exposures and hazards. A research agenda for the FoW initiative was published in 2021, identifying a need to further explore the equitable distribution of technology-related OSH risks and benefits (Goal 7, Objectives 1–2) [20]. This study begins to address the research gap by exploring the role of AI in reducing or exacerbating occupational health inequities within the context of the FoW. A scoping review of the literature, thematic representation of the findings, and discussion of considerations for OSH professionals to address AI and OSH equity are included in this review.

2. Materials and Methods

In this study, a scoping literature review was completed to summarize the recent literature on AI’s role in OSH equity. A scoping literature review aims to synthesize the research in a topic area in order to serve as a starting point for future research [21]. Scoping provides more flexibility than a systematic review or meta-analysis, as it accounts for more diverse and relevant literature (e.g., gray literature) [22]. This literature review was guided by two a priori assumptions: (1) AI likely contributes to OSH inequities; and (2) OSH professionals may not be fully prepared to address the health and wellbeing impacts of AI in workplaces. The following research questions (RQs) guided the analysis of full-text literature.

- RQ 1: How can AI be used to promote OSH equity?

- RQ 2: How does AI present barriers or challenges to OSH equity?

- RQ 3: What are best practices for addressing emerging OSH equity challenges related to AI?

- What are gaps in addressing AI and OSH equity?

- What are key issues for OSH/Industrial Hygiene professionals to understand around AI?

The delimiting of a literature search topic ensures that the search remains manageable. At the onset of the literature search, keywords and concepts were identified as they relate to an AI system’s ability to promote health equity [23,24]. The keywords and concepts related to AI, OSH, and equity presented in Table 1 were identified to guide the search frame.

Table 1.

Literature search keywords and concepts.

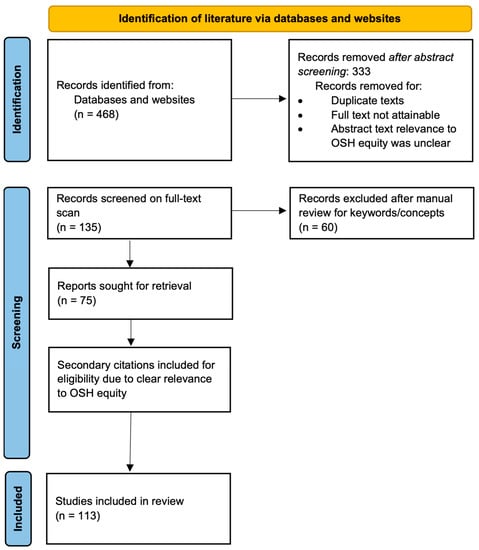

The scoping review search strategy is demonstrated in Figure 1 using an adapted PRISMA methodology [28]. This strategy was used to retrieve peer-reviewed, white and gray literature, industry-specific papers, think tank reports, and other related publications. Publications used in the scoping literature review were assessed for eligibility and inclusion. The search strategy was designed around the aforementioned keywords/concepts.

Figure 1.

This adapted version of the PRISMA guidelines demonstrates the scoping review search strategy used in this review in order to illustrate the process used and the resulting articles.

Through an iterative process and as themes were identified in the literature, the initial search terms were amended and additional relevant literature was included [23]. An iterative process was used to ensure that we captured as much of the relevant literature as possible during the identification step of our search. Keywords describing these concepts were searched for in online databases and on the websites of the Top 50 think tanks as represented by the University of Pennsylvania Think Tank Index Report [29]. The Think Tank Index Report is compiled through an international survey of approximately 2000 scholars, funders, policy makers, and journalists, who rank over 6500 think tanks using evidence-informed criteria developed by the University of Pennsylvania Think Tanks and Civil Societies Program. Direct searches on the websites of U.S.-based government agencies were completed. Government agencies were identified by two study team members based on existing records of AI use and/or research related to the NIOSH FoW initiative. Accordingly, governmental literature reflects the U.S. landscape. All search sources are listed in Table 2. When available, controlled vocabulary (e.g., Medical Subject Headings) was employed. Search terms (Table 3) were used to search through article content for inclusion. Key events were searched for upon identification in the literature. An example of a key event is the failure of the Maneuvering Characteristics Augmentation System (MCAS) of Boeing 737 MAX airplanes, resulting in two crashes. The MCAS AI system was designed to activate and assist a pilot under certain circumstances; however, technical issues resulted in fatal accidents [30]. Our inclusion criteria required publication during 2005–2021 to account for advances in AI and in AI’s role in the workplace. Publication type (i.e., empirical, commentaries, etc.) was not limited in the scoping review. Peer-reviewed, white, and gray literature publications were translated into English. Duplicate publications were excluded.

Table 2.

Search sources.

Table 3.

Scoping review search terms.

The initial search returned 468 articles, 135 of which passed abstract screening. Full-text screening covered these 135 articles: 79 peer-reviewed journal articles, 32 pieces of white literature, and 24 pieces of gray literature. Full-text screening identified 75 articles for inclusion: 31 peer-reviewed journal articles, 29 pieces of white literature, and 15 pieces of gray literature. Secondary citations were searched based on in-text references to the previously identified keywords/concepts. The resulting 38 secondary citations were included in the scoping review and did not need to meet all inclusion criteria (e.g., date of publication). In total, 113 articles were analyzed: 49 peer-reviewed journal articles, 39 from the white literature, and 25 from the gray literature. A single reviewer identified the articles for inclusion, and the study team was consulted at each stage of the search. If a keyword and/or concept was referenced at least one time in the article, its use was recorded in an Excel spreadsheet. Author, year, title, source type (e.g., peer review), knowledge type (e.g., empirical), methods, key points, and pertinent quotes were collected, and the ways in which the article answered the RQs were recorded. Appendix A includes a table of the 113 articles included in the scoping review analysis.
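The screening counts reported above can be reconciled with a short arithmetic check. The sketch below is illustrative only: the variable names are assumptions introduced here, and the per-source breakdown of the 38 secondary citations is not reported in the text but derived as the difference between the final sample and the primary inclusions.

```python
# Counts taken from the paragraph above; names are illustrative assumptions.
full_text_screened = {"peer_reviewed": 79, "white": 32, "gray": 24}
included_primary = {"peer_reviewed": 31, "white": 29, "gray": 15}
final_sample = {"peer_reviewed": 49, "white": 39, "gray": 25}


def total(counts):
    """Sum article counts across source types."""
    return sum(counts.values())


# Derived, not reported: secondary citations per source type.
secondary_by_type = {
    source: final_sample[source] - included_primary[source]
    for source in final_sample
}

print("Full-text screened:", total(full_text_screened))
print("Included after full-text screening:", total(included_primary))
print("Secondary citations (derived):", secondary_by_type)
print("Final sample:", total(final_sample))
```

Under these figures, the derived secondary citations sum to the 38 reported, and the final sample matches the 113 articles analyzed.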

3. Results

Of the 468 articles initially identified in the scoping review, 75 articles were selected for inclusion. Through full-text screening of the 75 articles, an additional 38 articles were identified as secondary citations. The 38 secondary citations were included in the analysis for a total sample of 113 articles (see Appendix A). The 113 articles were analyzed for reference to the keywords and concepts used to guide the search (see Table 1). The keyword and concept most frequently referenced in the reviewed literature was job security (n = 58), while confidentiality (n = 39) was the least frequently mentioned. The frequency of each keyword/concept is presented in Table 4. Human-centered system design (n = 48) is essential to building trusted systems that will not create or exacerbate OSH concerns caused by AI, and may even ameliorate them; this includes creating explainable AI. Explainable AI, where users are able to understand the operations, the outputs of the system, and how they are used, can improve trust and efficiency. This reduces the “black box” problem, which obscures the inner workings of AI systems, causing distrust [31,32,33]. The concept of bias was mentioned in 35% (n = 40) of the screened full-text articles. AI systems, notably algorithms, collect, maintain, analyze, and share protected worker information.

Table 4.

Frequency of Keywords/Concepts by Source Type.

Job security was added as a fifth conceptual domain prior to full-text screening because it was frequently noted in the literature. The literature suggests that AI affects job security because it may be an existential threat to segments of the workforce, possibly resulting in mass unemployment in certain industries and occupations [34,35,36,37]. In spite of this, research suggests that the advent of new technologies may merely transition occupations and create new jobs, with only temporary job insecurity [38,39,40,41,42,43]. Privacy (n = 43) remains a critical issue in AI implementation and use, and is discussed further below [44,45,46,47,48,49].

The reviewed literature consistently indicates that AI has a significant role in the FoW. However, the role of AI is divergent, as it can both facilitate OSH equity and create barriers to it. For example, AI used in factories and warehouses may use “machine vision” to reduce the risk of robot–human collision; however, these AI systems often fail to recognize darker skin tones, which increases injury/fatality risk for workers who are Black, Indigenous, or people of color (BIPOC) [43,50].

In the course of our review, three significant themes emerged around AI’s ability to promote and present barriers to OSH equity (RQs 1 and 2): (1) AI’s Impact on High-Risk Industries; (2) Data Use and Algorithmic Integrity; and (3) Societal Shifts. Best practices to address emerging challenges in OSH equity (RQ 3) are described as well. A summative interpretation of the literature, recommendations for future research, and considerations for OSH professionals are described in the discussion section.

3.1. Promoting OSH Equity: AI’s Impact on High-Risk Industries and Precarious Work

In response to RQ 1, AI may be both a barrier and a facilitator to OSH equity. AI has significant potential to improve OSH equity, particularly in high-risk industries such as construction, manufacturing, mining, and oil and gas transportation. AI may reduce the need for workers to engage in dirty, dangerous, or monotonous work, or to work in extreme conditions such as poor weather and emergency or disaster situations [31,51,52,53]. These jobs are more likely to be occupied by workers who are from racialized ethnic minority groups, have a high school degree or less, are foreign-born, and receive low wages [13]. Traumatic occupational injuries are geographically clustered; clusters of occupational injuries are correlated directly with immigrant communities and urban poverty in the U.S. [54]. Indicative of health inequities and OSH disparities, certain communities take on a higher burden of dangerous work and traumatic injuries. By reducing exposure to hazardous conditions in these industries, AI has the potential to reduce occupational health inequities for workers from these communities.

AI technologies may enhance OSH for workers in high-risk industries through various safety optimization systems. AI’s ability to collect real-time exposure data may improve exposure estimates, predict adverse events, and reduce the impact of hazards [31,38,55,56,57,58]. Examples of such AI technologies include operator alert systems, remote imaging technology, and use of environmental sensors and biosensors to measure exposure levels [59,60,61]. However, reliance on AI systems presents concerns for workers as well. Certain AI applications, such as productivity trackers that monitor activity, increase surveillance and control of worker populations already experiencing lower socioeconomic status (SES) [62,63]. In the FoW, the potential misuse of workforce data is likely to increase as AI integration becomes ubiquitous [64,65]. For example, jobs that require GPS tracking (e.g., platform-based rideshare drivers) may reveal protected personal information about an employee (e.g., sexuality or religion) that can be used to discriminate against them [66]. Low-wage workers, workers without collective bargaining units, temporary workers, and workers in precarious jobs may be more likely to work in industries that use wearable data collection devices, which may track workflow efficiency or be used to monitor wellness initiatives [67]. Thus, due to the nature of their work arrangements, these workers may be more likely to have their data exposed and may be susceptible to security violations. This requires algorithmic integrity in the form of proper systems to curb the mishandling and misuse of received data in order to reduce bias [48,68].

3.2. Barriers and Facilitators to OSH Equity: Data Use and Algorithmic Integrity

Scheduling and hiring AI systems are notably discussed in the literature as ways to potentially reduce gender, racial, ability, and age bias in the workplace. However, previous research has demonstrated that AI hiring tools are more susceptible to inherent bias and discrimination than anticipated [32,69,70]. AI has been shown to automatically discover hidden patterns in natural language datasets, leading systems to capture patterns that reflect human biases such as racism, sexism, ageism, and ableism [71]. To the degree that AI reduces the consideration of these patterns in the decision-making process, it could help reduce bias and inequities in hiring and scheduling at work. Alternatively, recognition of these patterns could reinforce or amplify existing biases present in society if these patterns influence the AI decision-making process. While the effects of algorithmic bias are not yet delineated in the literature, there is reason for concern. The ability to recognize patterns in human diversity is one way AI can reinforce existing bias and occupational health inequities. For example, speech recognition AI, which has been used in hiring, has demonstrated clear biases against African Americans and groups with dialectal and regional speech variations [44].

Conversely, machine learning algorithms that do not account for human diversity can reinforce existing bias and occupational health inequities as well. For example, AI facial analysis has shown clear disparities across skin color, failing to detect darker skin tones, and is highly concerning for people with disabilities due to failure to recognize craniofacial differences or mobility devices [8,43,72]. Certain algorithms have misidentified darker-skinned women as often as 35% of the time and darker-skinned men 12% of the time, which is much higher than the same rates for Caucasians [73,74]. As such, any benefits from this AI facial analysis would disproportionally favor able-bodied individuals with lighter skin tones, thereby reinforcing existing social inequities.
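Disparities of this kind are typically surfaced by comparing error rates across groups. The following is a minimal sketch of such a comparison, not a method taken from the reviewed literature: the function names, group labels, and the toy audit log are all hypothetical and serve only to illustrate the calculation.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Return the share of misidentified cases per group.

    `records` is an iterable of (group_label, was_misidentified) pairs.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, was_misidentified in records:
        totals[group] += 1
        errors[group] += int(was_misidentified)
    return {group: errors[group] / totals[group] for group in totals}


def max_disparity(rates):
    """Largest absolute gap in error rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical toy audit log (NOT data from any cited study).
toy_log = (
    [("group_a", True)] * 6 + [("group_a", False)] * 14
    + [("group_b", True)] * 1 + [("group_b", False)] * 19
)

rates = error_rate_by_group(toy_log)
print(rates)
print("Largest gap between groups:", round(max_disparity(rates), 2))
```

A gap near zero would suggest similar performance across groups on the audited sample; a large gap flags a system whose benefits and risks are unevenly distributed.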

3.3. Barriers and Facilitators to OSH Equity: Societal Shifts

AI implementation at work will result in societal shifts related to job security that will be influenced by existing social inequalities related to unemployment, digital divides, and skill gaps. These societal shifts are likely to disproportionally impact workers with lower SES, thereby creating additional barriers to OSH equity and aggravating existing social inequalities that are detrimental to health. Early in the scoping review search process, job security emerged as a conceptual domain due to its recurrence in the literature.

Workers are at risk of being outperformed by AI [75]. AI has the potential to cause the loss of jobs in certain industries. As much as 40%–50% of the workforce, or more in developing countries, is vulnerable to technological job displacement [8,76]. In care roles, for example, research suggests that the elderly may prefer robots to humans for certain tasks [77,78,79].

Numerous predictions have been offered on how AI will impact unemployment, with the most frequently noted being that AI may cause short-term instability and job losses in certain sectors while creating jobs in others [33,36,80,81,82]. Others predict that AI will affect both lower-skilled workers (e.g., drivers, security, and cleaners) and highly skilled workers (e.g., lawyers, physicians, analysts, and managers) [67,83]. Certain sectors, such as trucking, will be particularly hard hit; other such sectors include the service industry and healthcare. However, AI’s impact on unemployment may be exaggerated, and the successful retooling and reskilling of labor may mitigate job loss [37,39]. AI’s use across industries, workplaces, and society requires consideration of which social groups may have limited opportunities to build the skills necessary to succeed in the FoW.

While automation may increase productivity and create new jobs, these new jobs might not be equitably distributed across racial groups, genders, age groups, U.S. geographical regions, or skill levels [32,40,84,85]. Those with higher education or better access to job training and workforce development programs are more likely to succeed in the FoW [83,86]. Employers are likely to experience immediate skills gaps, and highly skilled workers with knowledge of AI will be more valued and employable than those without AI skills [86,87].

The digital divide will likely increase existing education, skill [34,88,89,90,91], OSH, and income inequities [45,73,82,92]. The digital divide and technological displacement may be most apparent in historically marginalized communities, for example, in rural and low-income communities. These communities, the same American communities most negatively impacted by IT-era changes, will experience the most significant disruptions to job security [84,93]. Digitally oriented metropolitan areas with large, better-educated populations will experience less disruption from AI integration. Employees of small businesses (i.e., businesses employing fewer than eleven individuals) may be less likely to have their OSH concerns reported to state and federal agencies [94]; therefore, the impact of AI on these workers is likely to be understudied. Existing AI systems have only been evaluated at a small number of worksites with limited geographic diversity within the U.S., which reduces generalizability and lessons learned for use in rural, low-income communities, small businesses, or low-resourced organizations [95,96,97].

The digital divide is compounded in historically marginalized communities by existing differences in school resources that correlate with residential and income segregation in low-income communities. High-resourced schools are better poised to prepare students for changes to the labor market and provide them with the skills necessary to successfully adapt to technological advances at work such as the growing reliance on AI [89]. Wealth inequality may escalate as AI investors and workers from high-resourced communities capture the majority share of income growth [98]. Conversely, technological job displacement, at least initially, will disproportionally impact workers from low-resourced communities, leading to increased job insecurity. Perceived job insecurity and anxiety over new skills have been likened to a public health crisis [99]. Increases in depression, suicide, and alcohol and drug abuse, including opioid-related death, may occur, especially among individuals from low-resourced communities that already experience health inequities [31,100,101,102].

3.4. Best Practices for Emerging Challenges

The literature included in the scoping review often provided recommendations for successful AI implementation, which are summarized below as best practices for emerging challenges related to AI. Companies that develop and use AI must be aware of the ethical, legal, and societal impacts of AI integration in the workplace [41,98,103]. The literature suggests that it is possible to design AI in a way that promotes equity and reduces biases using an iterative, risk-informed approach to research, design, and implementation [104]. Human-centered systems consider how humans will interface with the AI, which reduces biases and places realistic demands on workers. Consequently, health and safety concerns are less likely to arise because the AI is developed with consideration of specific cultural, economic, and social environments [92].

An ethical code or framework for justice in AI development and implementation was frequently recommended in the literature, and would facilitate OSH equity [74,105]. Ethicists [43,69] or third-party auditors have been recommended to ensure appropriate use of AI [36,42,106]. Algorithmic audits assess a system for bias, accuracy, robustness, interpretability, privacy characteristics, and other unintended consequences [34]. To reduce bias, it is fundamental to diversify algorithm training data and to ensure that the training data mirror the demographic diversity of the population on which the algorithm is used [46,65,107]. There is an existing need to diversify the AI workforce (i.e., developers, coders/programmers) and provide equitable opportunities for employment to ensure more representative AI [108]. Providing education on AI development to BIPOC while engaging workers in the development of AI through participatory approaches to AI design and implementation may facilitate the use of more diverse algorithmic training data.
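One simple way to check whether training data mirror a target population is to compare each group's share of the training set with its share of the population. The sketch below is a hypothetical illustration rather than a procedure described in the reviewed literature: the function names, the 5-percentage-point tolerance, and the toy figures are all assumptions.

```python
from collections import Counter


def representation_gaps(training_labels, population_shares):
    """Training-set share minus population share, per group.

    Negative values mean the group is underrepresented in the training data.
    """
    if not training_labels:
        raise ValueError("training_labels must not be empty")
    counts = Counter(training_labels)
    n = len(training_labels)
    return {
        group: counts.get(group, 0) / n - share
        for group, share in population_shares.items()
    }


def underrepresented(gaps, tolerance=0.05):
    """Groups whose training share falls short by more than `tolerance`."""
    return sorted(group for group, gap in gaps.items() if gap < -tolerance)


# Hypothetical toy data: group labels attached to 100 training examples,
# and assumed shares of each group in the workforce the system will serve.
toy_training = ["a"] * 67 + ["b"] * 28 + ["c"] * 5
toy_population = {"a": 0.50, "b": 0.30, "c": 0.20}

gaps = representation_gaps(toy_training, toy_population)
print(gaps)
print("Underrepresented groups:", underrepresented(gaps))
```

In this toy case, group "c" makes up 5% of the training examples but an assumed 20% of the population, so it would be flagged for additional data collection before deployment. Matching shares is only one facet of an audit and does not by itself establish that a system is unbiased.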

In light of the impending skills gap, opportunities for AI skills training [105,109], retooling of labor [34,87], and continuing educational opportunities that are accessible to all workers [83,110], especially those from historically underserved communities, are recommended to promote OSH equity. “Future-proofing” workers includes training that builds soft skills, foundational skills, and technical/occupational skills related to the FoW [84]. Support in finding roles that complement AI systems or enable transitioning to new jobs as needed may assist those subject to job displacement [90,111]. Pathways to emerging skills, such as computer operation technology, software development technology, mechanical manufacturing, automatic control, and intelligent control, can be developed; apprenticeships and alternative learning models may be especially effective for adult learners [112,113]. Education systems can be adapted to ensure that all communities are prepared for the future of work and that all students have the digital skills necessary to succeed in the workforce. Inequitable opportunities for education as a result of racial or gender bias reinforce and perpetuate health inequities by limiting access to job opportunities and healthcare [89,114].

Certain communities, particularly those that are smaller, low-income, rural, or historically underserved, will need support in order to adjust to the potential negative impacts of AI [85]. Serious economic and labor market disruption can be mitigated by intentional community reinforcements. Universal basic income [34,45,47] and increased social safety nets are considered viable options for reducing income inequality or the impacts of job displacement [112,115]. Universal internet access can increase equity; it has been suggested that telecommunication firms, internet providers, and satellite companies be incentivized in order to expand and improve their networks in underserved communities [74,88,116].

4. Discussion

This scoping review has identified ways in which AI promotes OSH equity. AI tools such as biosensors [8,10,31,72] and wearable technologies [48,73,102] can reduce the impact of workplace hazards through continuous monitoring of workers’ chemical, physical, biological, and ergonomic risk while on the job. AI integration in the workplace can improve OSH outcomes, particularly in high-risk industries [14,87,92]. Algorithmic recruiting, hiring, and scheduling all have the potential to reduce historical or systemic biases, although AI hiring tools are more susceptible to inherent bias and discrimination than anticipated [44,67,107]. It is essential to incorporate inclusive research and design practices when developing and implementing AI applications for the workplace [117]. Additional research on how human-centered systems design is operationalized to improve worker safety may further justify its use in the development of AI.

Facilitators of OSH equity include efforts to involve employees in decision-making around AI [118], hiring corporate ethicists or conducting audits of AI systems [37,43,46,96], and using representative algorithm training data [106,107]. Social safety nets such as universal basic income [45,47] and universal internet access [92,113] may improve equity in communities that experience negative impacts of AI integration.

One barrier to OSH equity is that reliance on certain AI systems creates privacy and confidentiality concerns and increased worker surveillance that can disadvantage workers if safeguards on use of the data generated by these systems are not implemented [62,63]. Privacy and confidentiality concerns can be mitigated by increased transparency of power structures, algorithmic audits, and multidisciplinary participatory approaches to AI design, implementation, maintenance, and evaluation.

Concerns around job security [34,75], the inequitable distribution of new jobs [45,83], and increased income disparities [88,89,92] associated with AI are well-founded and associated with negative health effects [100,101]. The use of AI in the workplace may further disadvantage populations of the American workforce that already experience structural vulnerabilities, such as women, BIPOC persons, rural workers, and those experiencing job precarity or displacement [14,116].

As technology advances, there is a risk that the OSH benefits will not be equitably distributed, which may aggravate existing occupational health inequities or create new ones. OSH professionals must understand and account for the societal shifts caused by AI in the workplace as they develop programs to promote the safety, health, and wellbeing of their workers. AI may cause short-term instability and job losses in certain industry sectors and demographic groups while creating jobs in others [33,80,81]. Robust and applied measures of the current and future effects of AI job displacement and job creation are currently in development [31]. Economists assert that new technologies enable labor to be more productive if technology implementation is gradual [87]. Mechanisms to facilitate the rapid retooling of labor in pace with AI development and expansion are necessary in order to reduce OSH inequities.

4.1. Recommendations for Future Research

This paper begins to identify knowledge gaps on AI and OSH equity that can inform FoW research. Knowledge gaps include limited research on the practical effects of existing AI used in workplaces. This includes algorithmic data representation to reduce bias as well as how AI systems are evaluated for their impact on the safety, health, and wellbeing of workers [43,44,75]. The methods used to evaluate and explain the accuracy of AI, along with the metrics, standards, and guidelines for the ethical use of AI, are lacking as well. Future research is needed to explore issues relating to technological job displacement and mitigating the digital divide. In addition, research is needed that considers where AI is being adopted and how its use is affecting workers across industries, wage categories, and sociodemographic groups. Relatedly, research on the trajectory of AI’s use and impact in different industrial sectors may be useful in the FoW initiative, prioritizing the development of future research, OSH, and skills-building initiatives.

Current and future efforts to understand the impact of AI on equity outcomes are further complicated by the limited equity-related variables in current OSH data collection systems [119]. For example, Occupational Safety and Health Administration illness and injury logs, the Bureau of Labor Statistics’ Survey of Occupational Injuries and Illnesses, and workers’ compensation data systems do not collect race/ethnicity data, and other equity variables (e.g., education, income level, and geographic location) may be limited [120]. Several ways have been proposed to address this, including: (1) collecting race/ethnicity data in these systems; (2) linking OSH data to systems that do include race/ethnicity; and (3) using algorithms to predict race/ethnicity from other available data points, such as name and address [121]. As with any data related to demographic characteristics, privacy and potential misuse based on social bias are of concern. Conversely, AI proved useful during the COVID-19 pandemic in overcoming limitations of public health data collection systems with respect to workers from historically underrepresented groups. Specifically, a recent study used artificial intelligence to analyze news reports of COVID-19 workplace outbreaks to identify social factors that are often not fully captured in public health data systems (such as race, ethnicity, and nativity) and that could potentially have affected disease transmission [122].

4.2. Considerations for OSH Professionals

While AI’s use in the workplace is inescapable, OSH professionals must consider how the development and use of these tools impact equity. Accordingly, assembling a diverse and multidisciplinary team of collaborators and including workers in the process may promote OSH equity in the development and implementation of AI tools in the workplace. A social approach to addressing the equity impacts of AI requires a paradigm shift for OSH professionals [117]. Understanding how social structures circumscribe the development, implementation, and impact of AI in the workplace is an essential first step to ensuring equitable distribution of the benefits of AI in the workforce and realizing its potential to reduce OSH inequities. Understanding the complexities of AI will assist OSH professionals in the protection of worker wellbeing and the promotion of health equity. This may require the training of OSH professionals to be better prepared to support workers in the FoW.

An OSH approach that anticipates trends using strategic foresight or proactive intervention can help to mitigate the negative effects of AI on OSH equity [38,45,60,84]. OSH professionals might consider the effects of AI integration on workers’ lived experiences and on low-resourced communities and organizations, including an understanding of how asymmetrical power relationships along axes such as race, ethnicity, sex, gender, nativity, and class impact the distribution of work-related benefits and risks of AI.

4.3. Limitations

This study was a scoping review; therefore, the focus was on the breadth rather than the depth of information. As a result, an assessment of the methodological limitations and risk of bias in the evidence was not performed. Inclusion criteria around date of publication (i.e., 2005–2021) may have limited our search results. The sample largely consisted of U.S.-based literature, limiting our ability to perform a comparison with the international context of AI and OSH equity. International comparison of OSH equity is, however, quite difficult due to the diversity of labor markets, employment, and working conditions globally [123,124].

There is limited research on AI that has explicitly studied or mentioned issues of health equity. Although our search uncovered three case studies, the articles included in the review were largely theoretical or conceptual as opposed to applied research [97,125,126]. The empirical studies relied on large datasets or policy analysis to make claims about AI, OSH, and equity in the FoW. This lack of applied research limits our understanding of the practical outcomes and limitations of AI. Relatedly, qualitative research was significantly lacking, which further limits our understanding of workers’ lived experience.

5. Conclusions

AI implementation is already pervasive in many industries, and its use is rapidly expanding [127,128]. AI systems are tools used by employers primarily to make workplaces more efficient and effective. For example, in healthcare, AI is used to improve diagnoses and treatment precision [96], while in business, AI tools track productivity to maximize profit [56]. While research is limited, the literature confirmed the study team’s assumptions that AI is a potential contributor to health disparities (e.g., through unemployment and wages).

As with any tool, AI’s impact depends on how it is used, and whether it reduces or exacerbates inequities is determined by its development, application, and evaluation. While AI may lead to safer workplaces through the use of assistive technology, concerns around bias in AI programming, recruitment and hiring, job insecurity and unemployment, and personal data use demonstrate a need to further explore the intermediate influence mechanisms of AI on workforce health and equity. To that end, this scoping literature review recognizes that significant changes in the FoW are inevitable, and as such begins to elucidate barriers and facilitators of AI in promoting OSH equity. AI’s role in OSH equity is vastly understudied. There is an urgent need for multidisciplinary research that addresses where and how AI is being adopted and evaluated and how its use is affecting OSH across industries, wage categories, and sociodemographic groups. OSH professionals can play a significant role in identifying strategies to ensure the benefits of AI in promoting workforce health and wellbeing are equitably distributed.

The findings and conclusions in this report are those of the authors, and do not necessarily represent the official position of the National Institute for Occupational Safety and Health or Centers for Disease Control and Prevention.

Author Contributions

Conceptualization, E.F., M.A.F., P.P., and J.A.V.; writing—original draft preparation, E.F.; writing—review and editing, M.A.F., P.P., and J.A.V.; supervision, J.A.V. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing not applicable.

Acknowledgments

The authors would like to thank Stephen Bertke, Andrea Steege, and Steven Wurzelbacher of NIOSH, who provided helpful comments on earlier drafts of the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

The scoping review included 113 articles, all of which were reviewed (N = 113). Table A1 details the initial 75 lead articles identified via full-text screening. Secondary citations (n = 38) are included as further evidence and did not need to meet all inclusion criteria (i.e., date of publication). Use of keywords and concepts in each article is recorded below.

Table A1. Literature included in scoping review.

| Authors | Article Title | Year | Lead Article vs. Secondary Citation | Keywords and Concepts |
|---|---|---|---|---|
| Acemoglu, D., Restrepo, P. | The Wrong Kind of AI? Artificial Intelligence and the Future of Labor Demand [80] | 2019 | Lead | Bias, security, privacy, confidentiality |
| Administrative Conference of the United States | Symposium on Artificial Intelligence in Federal Agencies [46] | 2020 | Lead | Human-centered system design, privacy, confidentiality, bias |
| Agrawal, A., Gans, J.S., Goldfarb, A. | Artificial Intelligence: The Ambiguous Labor Market Impact of Automating Prediction [111] | 2019 | Lead | Privacy, confidentiality, bias |
| Agrawal, A., Gans, J.S., Goldfarb, A. | The Economics of Artificial Intelligence: An Agenda [129] | 2019 | Lead | Job security, human-centered systems design |
| Agrawal, A., Gans, J.S., Goldfarb, A. | Economic Policy for Artificial Intelligence [82] | 2018 | Lead | Bias |
| Ajunwa, I., Crawford, K., Schultz, J. | Limitless Worker Surveillance [66] | 2017 | Lead | Privacy, confidentiality, bias |
| Alexander-Kearns, M., Peterson, M., Cassady, A. | The Impact of Vehicle Automation on Carbon Emissions [130] | 2016 | Lead | Privacy |
| Amann, J., et al. | Explainability for Artificial Intelligence in Healthcare: A Multidisciplinary Perspective [131] | 2020 | Secondary | Human-centered systems design |
| Ambegaokar, S., Podesfinski, R., Wagner, J. | Improving Customer Service in Health and Human Services Through Technology [113] | 2018 | Lead | Privacy |
| Autor, D., Goldin, C., Katz, L. | Extending the Race between Education and Technology [132] | 2020 | Secondary | Bias |
| Baldassarre, A., et al. | Biosensors in Occupational Safety and Health Management: A Narrative Review [8] | 2020 | Lead | Human-centered systems design |
| Biggers, B.E.A. | Curbing Widespread Discrimination by Artificial Intelligence Hiring Tools: An Ex Ante Solution [67] | 2020 | Lead | Privacy, confidentiality, bias, human-centered system design |
| Boden, L., Spieler, E., Wagner, G. | The Changing Structure of Work: Implications for Workplace Health and Safety in the US [133] | 2016 | Secondary | Job security |
| Bollier, D. | Artificial Intelligence Comes of Age [47] | 2017 | Lead | Privacy, confidentiality, bias, job security |
| Brynjolfsson, E., Mitchell, T. | What Can Machine Learning Do? Workforce Implications [125] | 2017 | Lead | Job security |
| Buczak, A., et al. | Genetic Algorithm Convergence Study for Sensor Network Optimization [55] | 2001 | Secondary | N.A. 1 |
| Caliskan, A. | Detecting and Mitigating Bias in Natural Language Processing [69] | 2021 | Lead | Privacy, confidentiality, bias, human-centered system design |
| Caliskan, A., Bryson, J., Narayanan, A. | Semantics Derived Automatically from Language Corpora Contain Human-Like Biases [68] | 2017 | Secondary | Bias, job security, human-centered systems design |
| Carter, R.A. | Digital Initiatives Connect Safety Challenges and Solutions [73] | 2018 | Lead | Privacy, confidentiality |
| Chakkravarthy, R. | Artificial Intelligence for Construction Safety [58] | 2019 | Lead | Privacy, confidentiality, bias |
| Chapman, L., Brustein, J. | A.I. Has a Race Problem [71] | 2018 | Secondary | Bias |
| Chin, C. | Assessing Employer Intent When AI Hiring Tools are Biased [106] | 2019 | Lead | Privacy, confidentiality, bias, job security |
| Chopra, A., Gurwitz, E. | Modernizing America’s Workforce Data Architecture [134] | 2017 | Lead | Human-centered system design, job security, privacy, confidentiality |
| Colvin, G. | Humans Are Underrated: What High Achievers Know That Brilliant Machines Never Will [70] | 2016 | Secondary | Job security |
| Congressional Research Service | Overview of Artificial Intelligence [135] | 2017 | Lead | Privacy, human-centered systems design |
| Congressional Research Service | Science and Technology Issues in the 116th Congress [79] | 2019 | Lead | Confidentiality, bias |
| Congressional Research Service | Artificial Intelligence: Background, Selected Issues, and Policy Considerations [32] | 2021 | Lead | Privacy, bias, confidentiality, job security, human-centered systems design |
| CyberCorps | Scholarship for Service [136] | n.d. | Secondary | Job security |
| Darvish, B., et al. | Safe Machine Learning and Defeating Adversarial Attacks [137] | 2019 | Secondary | Human-centered systems design |
| Davis, S., Von Wachter, T. | Recessions and the Costs of Job Loss [127] | 2011 | Secondary | Job security |
| DeCanio, S. | Robots and Humans—Complements or Substitutes? [138] | 2016 | Lead | Job security |
| Deloitte | Investing in Trustworthy AI [33] | 2021 | Lead | Privacy, confidentiality, bias, job security |
| Deming, D. | The Growing Importance of Social Skills in the Labor Market [139] | 2017 | Secondary | Job security, bias |
| Engler, A. | Auditing Employment Algorithms for Discrimination [44] | 2021 | Lead | Privacy, confidentiality, bias |
| European Agency for Safety and Health at Work | OSH and the Future of Work: Benefits and Risks of Artificial Intelligence Tools in Workplaces [126] | 2019 | Secondary | Bias, privacy, confidentiality, job security, human-centered systems design |
| Felknor, S., et al. | How Will the Future of Work Shape the OSH Professional of the Future? A Workshop Summary [24] | 2020 | Lead | Human-centered systems design |
| Frey, C., Osborne, M. | The Future of Employment: How Susceptible are Jobs to Computerisation? [75] | 2017 | Secondary | Job security, bias |
| Gibbons, E. | Toward a More Equal World: The Human Rights Approach to Extending the Benefits of Artificial Intelligence [92] | 2021 | Lead | Human-centered systems design |
| Gibney, E. | The Battle for Ethical AI at the World’s Biggest Machine-Learning Conference [140] | 2020 | Secondary | Bias, human-centered systems design |
| Government Accountability Office | Artificial Intelligence: An Accountability Framework for Federal Agencies and Other Entities [105] | 2021 | Lead | Human-centered systems design, collective engagement, bias, privacy, confidentiality |
| Guan, C., et al. | Taking an (Embodied) Cue from Community Health: Designing Dementia Caregiver Support Technology to Advance Health [141] | 2021 | Lead | Job security |
| Heerink, M., et al. | Assessing Acceptance of Assistive Social Agent Technology by Older Adults: the Almere Model [77] | 2010 | Secondary | Privacy, confidentiality |
| Hemphill, T. | Human Compatible: Artificial Intelligence and the Problem of Control by Stuart Russell [142] | 2020 | Lead | Human-centered systems design |
| Hemphill, T. | Responding to Fears of AI [74] | 2021 | Lead | Privacy, confidentiality, human-centered systems design |
| Hendrickson, C., Muro, M., Galston, W. | Countering the Geography of Discontent: Strategies for Left-Behind Places [93] | 2018 | Secondary | Job security |
| Herzenberg, S. | Towards an AI Economy That Works for All [87] | 2019 | Secondary | Job security, human-centered systems design |
| Hollingsworth, A., Ruhm, C., Simon, K. | Macroeconomic Conditions and Opioid Abuse [100] | 2017 | Secondary | Job security |
| Holzer, H.J. | Will Robots Make Job Training (and Workers) Obsolete? Workforce Development in an Automating Labor Market [34] | 2017 | Lead | Job security |
| Howard, J. | Artificial Intelligence: Implications for the Future of Work [31] | 2019 | Lead | Human-centered systems design, privacy, confidentiality, bias |
| Howard, J., et al. | Industrial Exoskeletons: Need for Intervention Effectiveness Research [52] | 2020 | Secondary | Human-centered systems design |
| Howard, J., Murashov, V., Branche, C. | Unmanned Aerial Vehicles in Construction and Worker Safety [51] | 2018 | Secondary | Confidentiality |
| Hubbs, C. | The Dangers of Government-Funded Artificial Intelligence [143] | 2019 | Lead | Confidentiality, human-centered systems design |
| Ilic-Godfrey, S. | Artificial Intelligence: Taking on a Bigger Role in our Future Security [56] | 2021 | Lead | Job security |
| Information Technology Industry Council; Internet Association; U.S. Chamber Technology Engagement Center | Coalition Letter on the National Artificial Intelligence Initiative Act of 2020 [144] | 2021 | Lead | Human-centered systems design, job security |
| International Labour Organization | Safety and Health at the Heart of the Future of Work: Building on 100 Years of Experience [40] | 2019 | Secondary | Job security, privacy, confidentiality |
| Kaivo Oja, J., Roth, S., Westerlund, L. | Futures of Robotics [42] | 2016 | Lead | Human-centered systems design |
| Kaushal, A., Altman, R., Langlotz, K. | Geographic Distribution of US Cohorts Used to Train Deep Learning Algorithms [95] | 2020 | Lead | Human-centered systems design, bias |
| Keystone Research Center | Towards an AI Economy that Works for All [87] | 2019 | Lead | Bias, human-centered systems design |
| Klamer, T., Allouch, S. | Acceptance and Use of a Social Robot by Elderly Users in a Domestic Environment [78] | 2010 | Secondary | N.A. |
| Kleinberg, J., Ludwig, J., Mullainathan, S., Sunstein, C.R. | Discrimination in the Age of Algorithms [62] | 2019 | Lead | Job security, privacy, confidentiality, bias |
| Korinek, A., Stiglitz, J.E. | Artificial Intelligence and Its Implications for Income Distribution and Unemployment [35] | 2017 | Lead | Job security |
| Landsbergis, P. | Work Organization, Job Insecurity, and Occupational Health Disparities [19] | 2013 | Secondary | Job security |
| Landsbergis, P. | Assessing the Contribution of Working Conditions to Socioeconomic Disparities in Health: A Commentary [18] | 2010 | Secondary | Job security |
| Lee, C., Huang, G.-H., Ashford, S.J. | Job Insecurity and the Changing Workplace: Recent Developments and the Future Trends in Job Insecurity Research [81] | 2017 | Lead | Job security |
| Letourneau-Guillon, L., Camiranda, D., Guilbert, F., Forghani, R. | Artificial Intelligence Applications for Workflow, Process Optimization and Predictive Analytics [145] | 2020 | Lead | Confidentiality |
| Levesque, E.M. | The Role of AI in Education and the Changing US Workforce [88] | 2018 | Lead | Job security, human-centered systems design |
| Litan, R. | How to Adjust to Automation [86] | 2018 | Lead | Job security |
| Manyika, J., Bughin, J. | The Promise and Challenge of the Age of Artificial Intelligence [41] | 2018 | Secondary | Bias, privacy, confidentiality, job security, human-centered systems design |
| Marlar, J. | Assessing the Impact of New Technologies on the Labor Market: Key Constructs, Gaps, and Data Collection Strategies for the Bureau of Labor Statistics [39] | 2020 | Lead | Privacy, confidentiality, bias, job security |
| Matheny, M., et al. | Artificial Intelligence in Health Care: The Hope, the Hype, the Promise, the Peril [146] | 2019 | Secondary | Bias, privacy, confidentiality, job security, human-centered systems design |
| McGee, R., Thompson, N. | Unemployment and Depression Among Emerging Adults in 12 States [101] | 2015 | Secondary | Job security |
| McKay, C., Pollack, E., Fitzpayne, A. | Automation and a Changing Economy [89] | 2019 | Lead | Job security, privacy, bias, human-centered systems design |
| Miller, K. | Tech that Adapts to People: A Tug in the Right Direction [147] | 2020 | Lead | Privacy |
| Mishel, L., Bivens, J. | Identifying the Policy Levers Generating Wage Suppression and Wage Inequality [112] | 2021 | Lead | Job security |
| Murashov, V., Hearl, F., Howard, J. | Working Safely with Robot Workers: Recommendations for the New Workplace [53] | 2016 | Secondary | Human-centered systems design |
| Muro, M. | Countering the Geographical Impacts of Automation: Computers, AI, and Place Disparities [84] | 2019 | Lead | Job security |
| Muro, M., Whiton, J., Maxim, R. | What Jobs are Affected by AI? Better-paid, Better-educated Workers Face the Most Exposure [91] | 2019 | Lead | Job security |
| Nag, A., Mukhopadhyay, S.C., Kosel, J. | Wearable Flexible Sensors: A Review [48] | 2017 | Lead | Privacy |
| NORA Traumatic Injury Prevention Cross-Sector Council | National Occupational Research Agenda for Traumatic Injury Prevention [59] | 2018 | Lead | Human-centered systems design, bias, privacy, confidentiality |
| Osoba, O.A., Welser IV, W. | The Risks of Artificial Intelligence to Security and the Future of Work [45] | 2017 | Lead | Human-centered systems design, job security |
| Papst, J. | How to Maximize People Power with Digital Capabilities [148] | 2020 | Lead | Privacy, confidentiality, job security |
| Parker, A.G. | Achieving Health Equity: The Power & Pitfalls of Intelligent Interfaces [149] | 2021 | Lead | Job security |
| Persons, T.M. | Artificial Intelligence: Emerging Opportunities, Challenges, and Implications for Policy and Research [104] | 2018 | Lead | Collective engagement |
| Pishgar, M., et al. | Redeca: A Novel Framework to Review Artificial Intelligence and its Applications in Occupational Safety and Health [10] | 2021 | Lead | Bias, job security |
| Pishgar, M., et al. | Pathological Voice Classification Using Mel-Cepstrum Vectors and Support Vector Machine [57] | 2018 | Secondary | N.A. |
| Pratt, D. | Occupational Health and the Rural Worker [94] | 1990 | Secondary | N.A. |
| Quaadman, T. | Four Policies that Government Can Pursue to Advance Trustworthy AI [150] | 2021 | Lead | Human-centered systems design |
| Ren, X., Spina, G., Faber, B., Geraedts, A. | Engaging Stakeholders to Design an Intelligent Decision Support Tool in the Occupational Health Context [118] | 2020 | Lead | Human-centered systems design |
| Rogers, R., Bryant, D., Howard, A. | Robot Gendering: Influences on Trust, Occupational Competency, and Preference of Robot Over Human [76] | 2020 | Lead | Job security, privacy |
| Saraçyakupoğlu, T. | The Adverse Effects of Implementation of the Novel Systems in the Aviation Industry in Pursuit of Maneuvering Characteristics Augmentation System [30] | 2020 | Secondary | Human-centered systems design |
| Savela, N., Turja, T., Oksanen, A. | Social Acceptance of Robots in Different Occupational Fields: A Systematic Literature Review [151] | 2018 | Secondary | Privacy |
| Schulte, P., Howard, J. | The Impact of Technology on Work and the Workforce [98] | 2019 | Secondary | Job security |
| Schulte, P.A., Delclos, G., Felknor, S.A., Chosewood, L.C. | Toward an Expanded Focus for Occupational Safety and Health: A Commentary [108] | 2019 | Lead | Job security, human-centered systems design |
| Sedo, S.A., Belzer, M. | Why do Long Distance Truck Drivers Work Extremely Long Hours? [152] | 2018 | Secondary | Job security |
| Shaw, J., Rudzicz, F., Jamieson, T., Goldfarb, A. | Artificial Intelligence and the Implementation Challenge [117] | 2019 | Lead | Privacy, confidentiality, bias |
| Sheffey, A. | Elon Musk says we need universal basic income because ‘in the future, physical work will be a choice’ [36] | 2021 | Secondary | Job security |
| Smarr, C., et al. | Older Adults’ Preferences for and Acceptance of Robot Assistance for Everyday Living Tasks [153] | 2012 | Secondary | Job security |
| Sorenson, G., et al. | The Future of Research on Work, Safety, Health and Wellbeing: A Guiding Conceptual Framework [83] | 2021 | Lead | Bias, privacy, job security |
| Sterenberg, M. | Can New Technology Incentivize Farmers to Capture Carbon in Their Soil? [60] | 2021 | Lead | Job security, human-centered systems design |
| Tai, M.C.-T. | The Impact of Artificial Intelligence on Human Society and Bioethics [97] | 2020 | Lead | Human-centered systems design, bias, privacy, confidentiality |
| Tamers, S.L., et al. | Envisioning the Future of Work to Safeguard the Safety, Health, and Well-being of the Workforce: A Perspective from the CDC’s National Institute for Occupational Safety and Health [72] | 2020 | Lead | Human-centered systems design, job security |
| Todolí-Signes, A. | Making Algorithms Safe for Workers: Occupational Risks Associated with Work Managed by Artificial Intelligence [102] | 2021 | Lead | Privacy, confidentiality, bias, human-centered systems design |
| US Chamber of Commerce | U.S. Chamber Principles on Artificial Intelligence [49] | 2019 | Lead | Privacy, confidentiality, job security, human-centered systems design |
| US Department of Defense | Summary of the Department of Defense Artificial Intelligence Strategy [103] | 2018 | Lead | Job security, human-centered systems design |
| Varian, H. | Artificial Intelligence, Economics, and Industrial Organization [90] | 2018 | Lead | Human-centered systems design |
| Vietas, J. | The Role of Artificial Intelligence in the Future of Work [154] | 2021 | Lead | Privacy, confidentiality, bias |
| Wang, F., Hu, M., Zhu, M. | Threat or Opportunity—Analysis of the Impact of Artificial Intelligence on Future Employment [110] | 2020 | Lead | Job security |
| West, D. | The Role of Corporations in Addressing AI’s Ethical Dilemmas [43] | 2018 | Lead | Privacy, confidentiality, bias, human-centered systems design |
| White House, The | The Biden Administration Launches the National Artificial Intelligence Research Resource Task Force [155] | 2021 | Secondary | Privacy, job security |
| Wolfe, J., Engdahl, L., Shierholz, H. | Domestic Workers Chartbook: A Comprehensive Look at the Demographics, Wages, Benefits, and Poverty Rates of the Professionals Who Care for Our Family Members and Clean Our Homes [156] | 2020 | Secondary | Job security |
| World Health Organization | Ethics and Governance of Artificial Intelligence for Health [37] | n.d. | Lead | Job security, confidentiality, bias, human-centered systems design |
| Wu, E., et al. | How Medical AI Devices are Evaluated: Limitations and Recommendations from an Analysis of FDA Approvals [96] | 2021 | Lead | Bias, confidentiality, human-centered systems design |
| Zhang, D., et al. | Artificial Intelligence Index Report [107] | 2021 | Lead | Human-centered systems design, privacy, confidentiality |

1 Secondary citations included in the scoping review were not required to meet all inclusion criteria.

References

- Bloem, J.; van Doorn, M.; Duivestein, S.; Excoffier, D.; Maas, R.; van Ommeren, E. The Fourth Industrial Revolution; Sogeti: Dayton, OH, USA, 2014. Available online: https://www.sogeti.com/globalassets/global/special/sogeti-things3en.pdf (accessed on 12 December 2022).

- Min, J.; Kim, Y.; Lee, S.; Jang, T.-W.; Kim, I.; Song, J. The Fourth Industrial Revolution and Its Impact on Occupational Health and Safety, Worker’s Compensation and Labor Conditions. Saf. Health Work 2019, 10, 400–408.

- Zhang, L.; Yencha, C. Examining Perceptions towards Hiring Algorithms. Technol. Soc. 2022, 68, 101848.

- Sinha, A.K.; Amir Khusru Akhtar, M.; Kumar, A. Resume Screening Using Natural Language Processing and Machine Learning: A Systematic Review. In Machine Learning and Information Processing; Swain, D., Pattnaik, P.K., Athawale, T., Eds.; Springer: Singapore, 2021; pp. 207–214.

- Suen, H.-Y.; Hung, K.-E.; Lin, C.-L. Intelligent Video Interview Agent Used to Predict Communication Skill and Perceived Personality Traits. Hum. Cent. Comput. Inf. Sci. 2020, 10, 3.

- Majumder, S.; Mondal, A. Are Chatbots Really Useful for Human Resource Management? Int. J. Speech Technol. 2021, 24, 969–977.

- Ramos, D.; Cotrim, T.; Arezes, P.; Baptista, J.; Rodrigues, M.; Leitão, J. Frontiers in Occupational Health and Safety Management. Int. J. Environ. Res. Public Health 2022, 19, 10759.

- Baldassarre, A.; Mucci, N.; Lecca, L.I.; Tomasini, E.; Parcias-do-Rosario, M.J.; Pereira, C.T.; Arcangeli, G.; Oliveira, P.A.B. Biosensors in Occupational Safety and Health Management: A Narrative Review. Int. J. Environ. Res. Public Health 2020, 17, e2461.

- Arana-Landín, G.; Laskurain-Iturbe, I.; Iturrate, M.; Landeta-Manzano, B. Assessing the Influence of Industry 4.0 Technologies on Occupational Health and Safety. Heliyon 2023, 9, e13720.

- Pishgar, M.; Issa, S.F.; Sietsema, M.; Pratap, P.; Darabi, H. Redeca: A Novel Framework to Review Artificial Intelligence and Its Applications in Occupational Safety and Health. Int. J. Environ. Res. Public Health 2021, 18, 6705.

- NIOSH. Occupational Health Equity. Available online: https://www.cdc.gov/niosh/programs/ohe/default.html (accessed on 6 December 2021).

- Orrenius, P.; Zavodny, M. Do Immigrants Work In Riskier Jobs? Demography 2009, 46, 535–551.

- Steege, A.L.; Baron, S.L.; Marsh, S.M.; Menéndez, C.C.; Myers, J.R. Examining Occupational Health and Safety Disparities Using National Data: A Cause for Continuing Concern. Am. J. Ind. Med. 2014, 57, 527–538.

- Flynn, M.A.; Check, P.; Steege, A.L.; Sivén, J.M.; Syron, L.N. Health Equity and a Paradigm Shift in Occupational Safety and Health. Int. J. Environ. Res. Public Health 2021, 19, 349.

- Okechukwu, C.A.; Souza, K.; Davis, K.D.; de Castro, A.B. Discrimination, Harassment, Abuse, and Bullying in the Workplace: Contribution of Workplace Injustice to Occupational Health Disparities. Am. J. Ind. Med. 2014, 57, 573–586.

- Cunningham, T.R.; Guerin, R.J.; Keller, B.M.; Flynn, M.A.; Salgado, C.; Hudson, D. Differences in Safety Training among Smaller and Larger Construction Firms with Non-Native Workers: Evidence of Overlapping Vulnerabilities. Saf. Sci. 2018, 103, 62–69.

- O’Connor, T.; Flynn, M.A.; Weinstock, D.; Zanoni, J. Education and Training for Underserved Populations. In Proceedings of the Eliminating Health and Safety Disparities at Work Conference, Chicago, IL, USA, 14–15 September 2011; Association of Occupational and Environmental Clinics: Chicago, IL, USA, 2011.

- Landsbergis, P.A. Assessing the Contribution of Working Conditions to Socioeconomic Disparities in Health: A Commentary. Am. J. Ind. Med. 2010, 53, 95–103.

- Landsbergis, P.A.; Grzywacz, J.G.; LaMontagne, A. Work Organization, Job Insecurity, and Occupational Health Disparities. Am. J. Ind. Med. 2012, 57, 495–515.

- Tamers, S.L.; Pana-Cryan, R.; Ruff, T.; Streit, J.M.K.; Flynn, M.A.; Childress, A.; Chang, C.-C.; Novicki, E.; Ray, T.; Fosbroke, D.E.; et al. The NIOSH Future of Work Initiative Research Agenda; U.S. Department of Health and Human Services; Public Health Service, Centers for Disease Control and Prevention; National Institute for Occupational Safety and Health: Cincinnati, OH, USA, 2021.

- Peterson, J.; Pearce, P.F.; Ferguson, L.A.; Langford, C.A. Understanding Scoping Reviews: Definition, Purpose, and Process. J. Am. Assoc. Nurse Pract. 2017, 29, 12–16.

- Arksey, H.; O’Malley, L. Scoping Studies: Towards a Methodological Framework. Int. J. Soc. Res. Methodol. Theory Pract. 2005, 8, 19–32.

- Buis, J.J.W.; Post, G.; Visser, V.R. Academic Skills for Interdisciplinary Studies; Amsterdam University Press: Amsterdam, The Netherlands, 2016; ISBN 978-90-485-3394-7.

- Felknor, S.A.; Streit, J.M.K.; Chosewood, L.C.; McDaniel, M.; Schulte, P.A.; Delclos, G.L.; On Behalf of The Workshop Presenters And Participants. How Will the Future of Work Shape the OSH Professional of the Future? A Workshop Summary. Int. J. Environ. Res. Public Health 2020, 17, e7154.

- McDermott, P.; Dominguez, C.; Kasdaglis, M.R.; Trahan, I. Human-Machine Teaming Systems Engineering Guide; MITRE: Bedford, MA, USA, 2018.

- National Academies of Sciences, Engineering, and Medicine. Human-AI Teaming: State-of-the-Art and Research Needs; The National Academies Press: Washington, DC, USA, 2022; ISBN 978-0-309-27017-5.

- International Organization for Standardization. ISO 3534-1:2006 Statistics—Vocabulary and Symbols—Part 1: General Statistical Terms and Terms Used in Probability. Available online: https://www.iso.org/obp/ui/#iso:std:iso:3534:-1:ed-2:v2:en (accessed on 16 January 2023).

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. BMJ 2021, 372, n71.

- Holub, J. Guides: Public Policy Research Think Tanks 2019: Top Think Tanks US. Available online: https://guides.library.upenn.edu/c.php?g=1035991&p=7509974 (accessed on 22 November 2021).

- Saraçyakupoğlu, T. The Adverse Effects of Implementation of the Novel Systems in the Aviation Industry in Pursuit of Maneuvering Characteristics Augmentation System (MCAS). Available online: http://95.183.203.22/xmlui/handle/11363/2336 (accessed on 20 November 2021).

- Howard, J. Artificial Intelligence: Implications for the Future of Work. Am. J. Ind. Med. 2019, 62, 917–926.

- Harris, L.A. Artificial Intelligence: Background, Selected Issues, and Policy Considerations; Congressional Research Service: Washington, DC, USA, 2021; p. 47.

- Deloitte AI Institute. Investing in Trustworthy AI. Available online: https://www2.deloitte.com/us/en/pages/consulting/articles/investing-in-ai-trust.html (accessed on 21 November 2021).

- Holzer, H. Will Robots Make Job Training (and Workers) Obsolete? Workforce Development in an Automating Labor Market. Available online: https://www.brookings.edu/research/will-robots-make-job-training-and-workers-obsolete-workforce-development-in-an-automating-labor-market/ (accessed on 21 November 2021).

- Korinek, A.; Stiglitz, J.E. Artificial Intelligence and Its Implications for Income Distribution and Unemployment. In The Economics of Artificial Intelligence: An Agenda; University of Chicago Press: Chicago, IL, USA, 2018; pp. 349–390. ISBN 978-0-226-61347-5.

- Sheffey, A. Elon Musk Says We Need Universal Basic Income Because “in the Future, Physical Work Will Be a Choice”. Available online: https://www.businessinsider.com/elon-musk-universal-basic-income-physical-work-choice-2021-8 (accessed on 21 November 2021).

- World Health Organization. Ethics and Governance of Artificial Intelligence for Health. Available online: https://www.who.int/publications-detail-redirect/9789240029200 (accessed on 1 November 2021).

- CDC. Social Determinants of Health. Available online: https://www.cdc.gov/socialdeterminants/index.htm (accessed on 6 December 2021).

- Marlar, J. Assessing the Impact of New Technologies on the Labor Market: Key Constructs, Gaps, and Data Collection Strategies for the Bureau of Labor Statistics. Available online: https://www.bls.gov/bls/congressional-reports/assessing-the-impact-of-new-technologies-on-the-labor-market.htm (accessed on 21 November 2021).

- International Labour Organization. Safety and Health at the Heart of the Future of Work: Building on 100 Years of Experience; International Labour Organization (ILO): Geneva, Switzerland, 2019.

- Manyika, J.; Bughin, J. AI Problems and Promises. Available online: https://www.mckinsey.com/featured-insights/artificial-intelligence/the-promise-and-challenge-of-the-age-of-artificial-intelligence (accessed on 21 November 2021).

- Kaivo-Oja, J.; Roth, S.; Westerlund, L. Futures of Robotics. Human Work in Digital Transformation. Int. J. Technol. Manag. 2017, 73, 176–205.

- West, D.M. The Role of Corporations in Addressing AI’s Ethical Dilemmas; A Blueprint for the Future of AI: 2018–2019; Brookings Institution: Washington, DC, USA, 2018.

- Engler, A. Auditing Employment Algorithms for Discrimination; Brookings Institution: Washington, DC, USA, 2021.

- Osoba, O.A.; Welser, W.I., IV. The Risks of Artificial Intelligence to Security and the Future of Work; RAND Corporation: Santa Monica, CA, USA, 2017.

- Administrative Conference of the United States. Symposium on Artificial Intelligence in Federal Agencies. Available online: https://www.acus.gov/meetings-and-events/event/symposium-artificial-intelligence-federal-agencies (accessed on 21 November 2021).

- Bollier, D. Artificial Intelligence Comes of Age: The Promise and Challenge of Integrating AI into Cars, Healthcare and Journalism; Communications and Society; The Aspen Institute: Washington, DC, USA, 2017; pp. 1–65.

- Nag, A.; Mukhopadhyay, S.C.; Kosel, J. Wearable Flexible Sensors: A Review. IEEE Sens. J. 2017, 17, 3949–3960.

- US Chamber of Commerce: Principles on Artificial Intelligence. Available online: https://iapp.org/resources/article/u-s-chamber-of-commerce-principles-on-artificial-intelligence/ (accessed on 21 November 2021).

- Moore, P.V. OSH and the Future of Work: Benefits and Risks of Artificial Intelligence Tools in Workplaces. In Digital Human Modeling and Applications in Health, Safety, Ergonomics and Risk Management. Human Body and Motion; Duffy, V.G., Ed.; Springer International Publishing: Cham, Switzerland, 2019; pp. 292–315.

- Howard, J.; Murashov, V.; Branche, C.M. Unmanned Aerial Vehicles in Construction and Worker Safety. Am. J. Ind. Med. 2018, 61, 3–10.

- Howard, J.; Murashov, V.V.; Lowe, B.D.; Lu, M.-L. Industrial Exoskeletons: Need for Intervention Effectiveness Research. Am. J. Ind. Med. 2020, 63, 201–208.

- Murashov, V.; Hearl, F.; Howard, J. Working Safely with Robot Workers: Recommendations for the New Workplace. J. Occup. Environ. Hyg. 2016, 13, D61–D71.

- Forst, L.; Friedman, L.; Chin, B.; Madigan, D. Spatial Clustering of Occupational Injuries in Communities. Am. J. Public Health 2015, 105 (Suppl. S3), S526–S533.

- Buczak, A.L.; Wang, H.; Darabi, H.; Jafari, M.A. Genetic Algorithm Convergence Study for Sensor Network Optimization. Inf. Sci. 2001, 133, 267–282.

- Ilic-Godfrey, S. Artificial Intelligence: Taking on a Bigger Role in Our Future Security. Beyond the Numbers; U.S. Bureau of Labor Statistics. Available online: https://www.bls.gov/opub/btn/volume-10/investigation-and-security-services.htm (accessed on 21 November 2021).

- Pishgar, M.; Karim, F.; Majumdar, S.; Darabi, H. Pathological Voice Classification Using Mel-Cepstrum Vectors and Support Vector Machine. In Proceedings of the 2018 IEEE International Conference on Big Data, Seattle, WA, USA, 10–13 December 2018; pp. 5267–5271.

- Chakkravarthy, R. Artificial Intelligence for Construction Safety. Prof. Saf. 2019, 64, 46.

- CDC. National Occupational Research Agenda Traumatic Injury Prevention; National Occupational Research Agenda Traumatic Injury Prevention Cross-Sector Council: Cincinnati, OH, USA, 2018; pp. 1–16.

- Sternberg, M. Can New Technology Incentivize Farmers to Capture Carbon in Their Soil? Available online: https://news.climate.columbia.edu/2021/07/21/can-new-technology-incentivize-farmers-to-capture-carbon-in-their-soil/ (accessed on 21 November 2021).

- Autor, D.; Salomons, A. Is Automation Labor-Displacing? Productivity Growth, Employment, and the Labor Share; National Bureau of Economic Research: Cambridge, MA, USA, 2018; p. w24871.

- Kleinberg, J.; Ludwig, J.; Mullainathan, S.; Sunstein, C.R. Discrimination in the Age of Algorithms; Working Paper Series; National Bureau of Economic Research: Cambridge, MA, USA, 2019.

- Zheng, Y.-L.; Ding, X.-R.; Poon, C.C.Y.; Lo, B.P.L.; Zhang, H.; Zhou, X.-L.; Yang, G.-Z.; Zhao, N.; Zhang, Y.-T. Unobtrusive Sensing and Wearable Devices for Health Informatics. IEEE Trans. Biomed. Eng. 2014, 61, 1538–1554.

- Tindale, L.C.; Chiu, D.; Minielly, N.; Hrincu, V.; Talhouk, A.; Illes, J. Wearable Biosensors in the Workplace: Perceptions and Perspectives. Front. Digit. Health 2022, 4, 2–8.

- Bernhardt, A.; Suleiman, R.; Kresge, L. Data and Algorithms at Work: The Case for Worker Technology Rights; UC Berkeley Labor Center: Berkeley, CA, USA, 2021.

- Ajunwa, I.; Crawford, K.; Schultz, J. Limitless Worker Surveillance. Calif. Law Rev. 2017, 105, 735.

- Biggers, B.E.A. Curbing Widespread Discrimination by Artificial Intelligence Hiring Tools: An Ex-Ante Solution. Boston Coll. Intellect. Prop. Technol. Forum J. 2020, 2020, 10.

- Caliskan, A.; Bryson, J.J.; Narayanan, A. Semantics Derived Automatically from Language Corpora Contain Human-like Biases. Science 2017, 356, 183–186.

- Caliskan, A. Detecting and Mitigating Bias in Natural Language Processing; AI and Bias; Brookings Institution: Washington, DC, USA, 2021.

- Colvin, G. Humans Are Underrated: What High Achievers Know That Brilliant Machines Never Will; Penguin: New York, NY, USA, 2016; ISBN 978-0-14-310837-5.

- Chapman, L.; Brustein, J. A.I. Has a Race Problem. Bloomberg. Available online: https://www.bloomberg.com/news/articles/2018-06-26/ai-has-a-race-problem (accessed on 21 November 2021).

- Tamers, S.L.; Streit, J.; Pana-Cryan, R.; Ray, T.; Syron, L.; Flynn, M.A.; Castillo, D.; Roth, G.; Geraci, C.; Guerin, R.; et al. Envisioning the Future of Work to Safeguard the Safety, Health, and Well-Being of the Workforce: A Perspective from the CDC’s National Institute for Occupational Safety and Health. Am. J. Ind. Med. 2020, 63, 1065–1084.

- Carter, R. Digital Initiatives Connect Safety Challenges and Solutions. Available online: https://www.e-mj.com/features/digital-initiatives-connect-safety-challenges-and-solutions/ (accessed on 21 November 2021).

- Hemphill, T. Responding to Fears of AI. Available online: https://www.cato.org/regulation/summer-2021/responding-fears-ai (accessed on 21 November 2021).

- Frey, C.B.; Osborne, M.A. The Future of Employment: How Susceptible Are Jobs to Computerisation? Technol. Forecast. Soc. Change 2017, 114, 254–280.

- Rogers, K.; Bryant, D.; Howard, A. Robot Gendering: Influences on Trust, Occupational Competency, and Preference of Robot Over Human. Ext. Abstr. Hum. Factors Computing Syst. 2020, 2020, 1–7.

- Heerink, M.; Krose, B.; Evers, V.; Wielinga, B. Assessing Acceptance of Assistive Social Agent Technology by Older Adults: The Almere Model. Int. J. Soc. Robot. 2010, 2, 361–375.

- Klamer, T.; Allouch, S.B. Acceptance and Use of a Social Robot by Elderly Users in a Domestic Environment. In Proceedings of the 4th International Conference on Pervasive Computing Technologies for Healthcare, Munich, Germany, 22 March 2010; pp. 1–6.

- Gottron, F.; Angadjivand, S.; Bracmort, K.; Carter, N.T.; Comay, L.B.; Cowan, T.; Dabrowska, A.; Figliola, P.M.; Finklea, K.; Fischer, E.A.; et al. Science and Technology Issues in the 116th Congress; Congressional Research Service: Washington, DC, USA, 2019; p. 53.

- Acemoglu, D.; Restrepo, P. Artificial Intelligence, Automation, and Work. In The Economics of Artificial Intelligence: An Agenda; University of Chicago Press: Chicago, IL, USA, 2018; pp. 197–236.

- Lee, C.; Huang, G.-H.; Ashford, S.J. Job Insecurity and the Changing Workplace: Recent Developments and the Future Trends in Job Insecurity Research. Annu. Rev. Organ. Psychol. Organ. Behav. 2018, 5, 335–359.

- Agrawal, A.K.; Gans, J.S.; Goldfarb, A. Economic Policy for Artificial Intelligence; Working Paper Series; National Bureau of Economic Research: Cambridge, MA, USA, 2018.

- Sorensen, G.; Dennerlein, J.T.; Peters, S.E.; Sabbath, E.L.; Kelly, E.L.; Wagner, G.R. The Future of Research on Work, Safety, Health and Wellbeing: A Guiding Conceptual Framework. Soc. Sci. Med. 2021, 269, 113593.

- Muro, M. Countering the Geographical Impacts of Automation: Computers, AI, and Place Disparities; A Blueprint for the Future of AI; Brookings Institution: Washington, DC, USA, 2019.

- Parker, C.B. Artificial Intelligence in the Workplace; Stanford News, Stanford University: Stanford, CA, USA, 2018.

- Litan, R.E. How to Adjust to Automation; A Blueprint for the Future of AI; Brookings Institution: Washington, DC, USA, 2018.

- Herzenberg, S.; Alic, J. Towards an AI Economy That Works for All. A Report of the Keystone Research Center Future of Work Project; The Keystone Research Center: Harrisburg, PA, USA, 2019.

- Levesque, E.M. The Role of AI in Education and the Changing US Workforce; A Blueprint for the Future of AI; Brookings Institution: Washington, DC, USA, 2018.

- McKay, C.; Pollack, E.; Fitzpayne, A. Automation and a Changing Economy; Future of Work Initiative; Aspen Institute: Washington, DC, USA, 2019; p. 41.

- Varian, H. Artificial Intelligence, Economics, and Industrial Organization; National Bureau of Economic Research: Cambridge, MA, USA, 2018; p. 24.

- Muro, M.; Whiton, J.; Maxim, R. What Jobs Are Affected by AI? Better-Paid, Better-Educated Workers Face the Most Exposure; Brookings Institution: Washington, DC, USA, 2019; p. 46.

- Gibbons, E.D. Toward a More Equal World: The Human Rights Approach to Extending the Benefits of Artificial Intelligence. IEEE Technol. Soc. Mag. 2021, 40, 25–30.