The Effect of Surgical Masks on the Featural and Configural Processing of Emotions

Abstract

1. Introduction

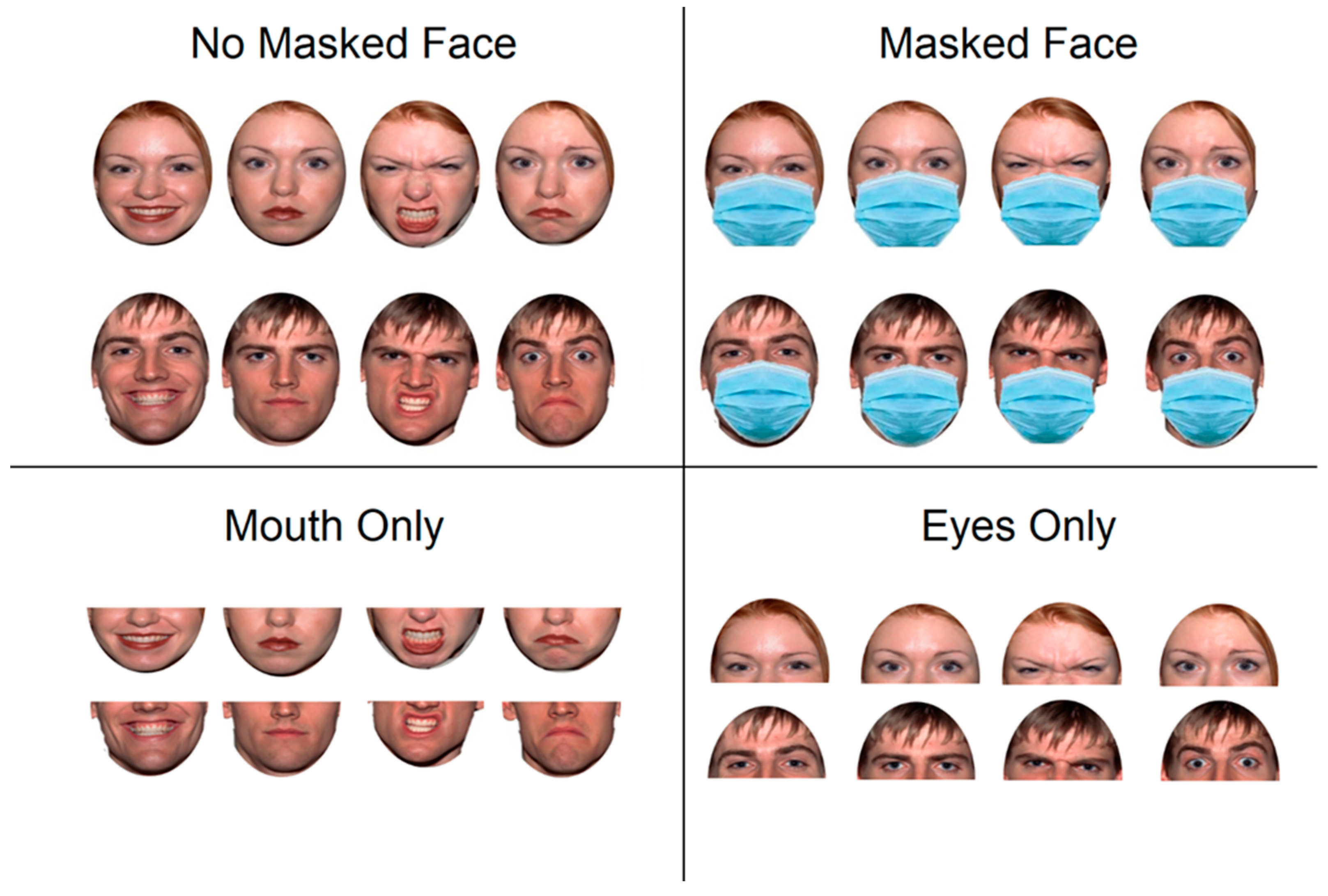

2. Materials and Methods

2.1. Participants

2.2. Procedure

2.3. Statistical Analyses

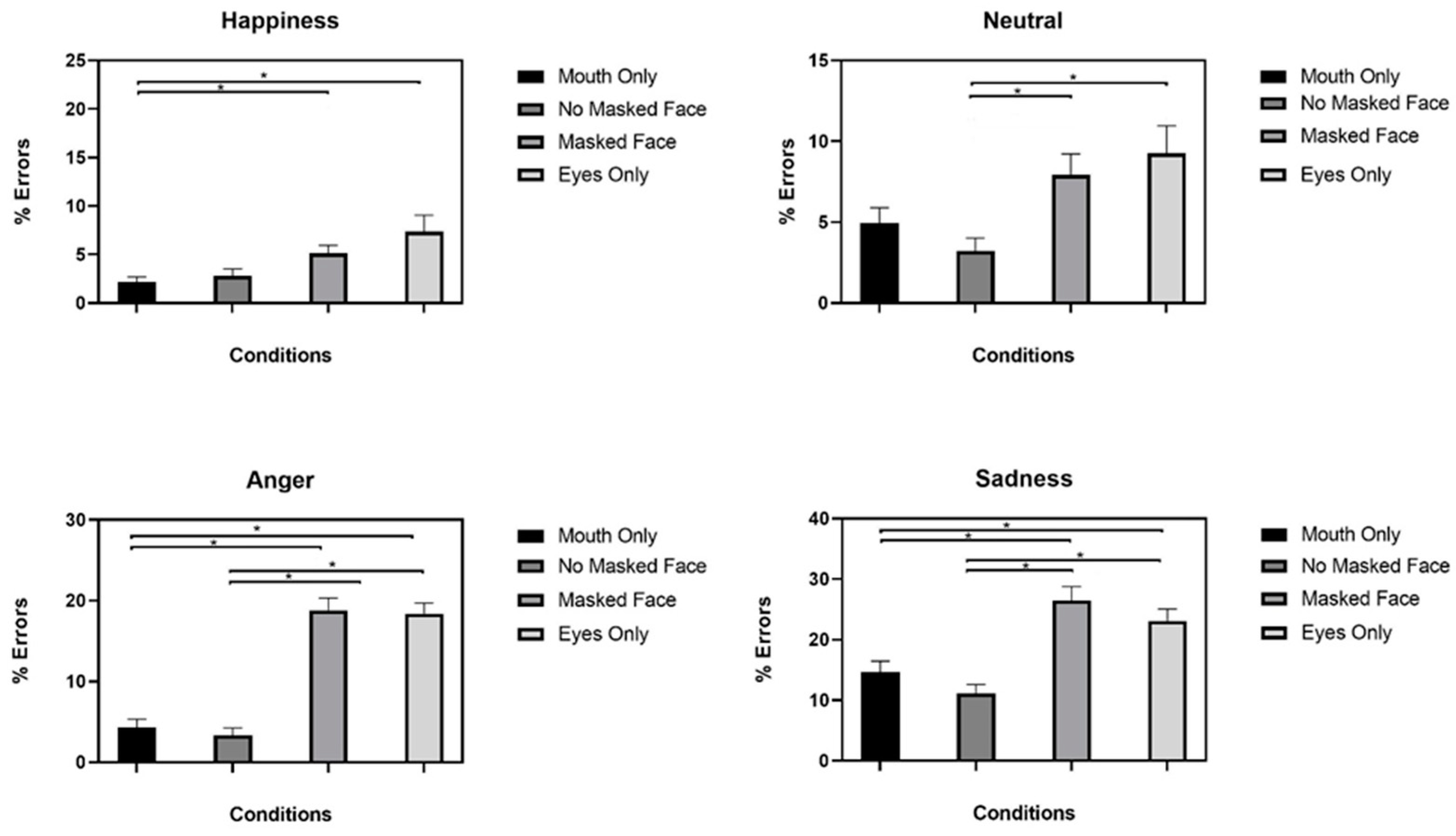

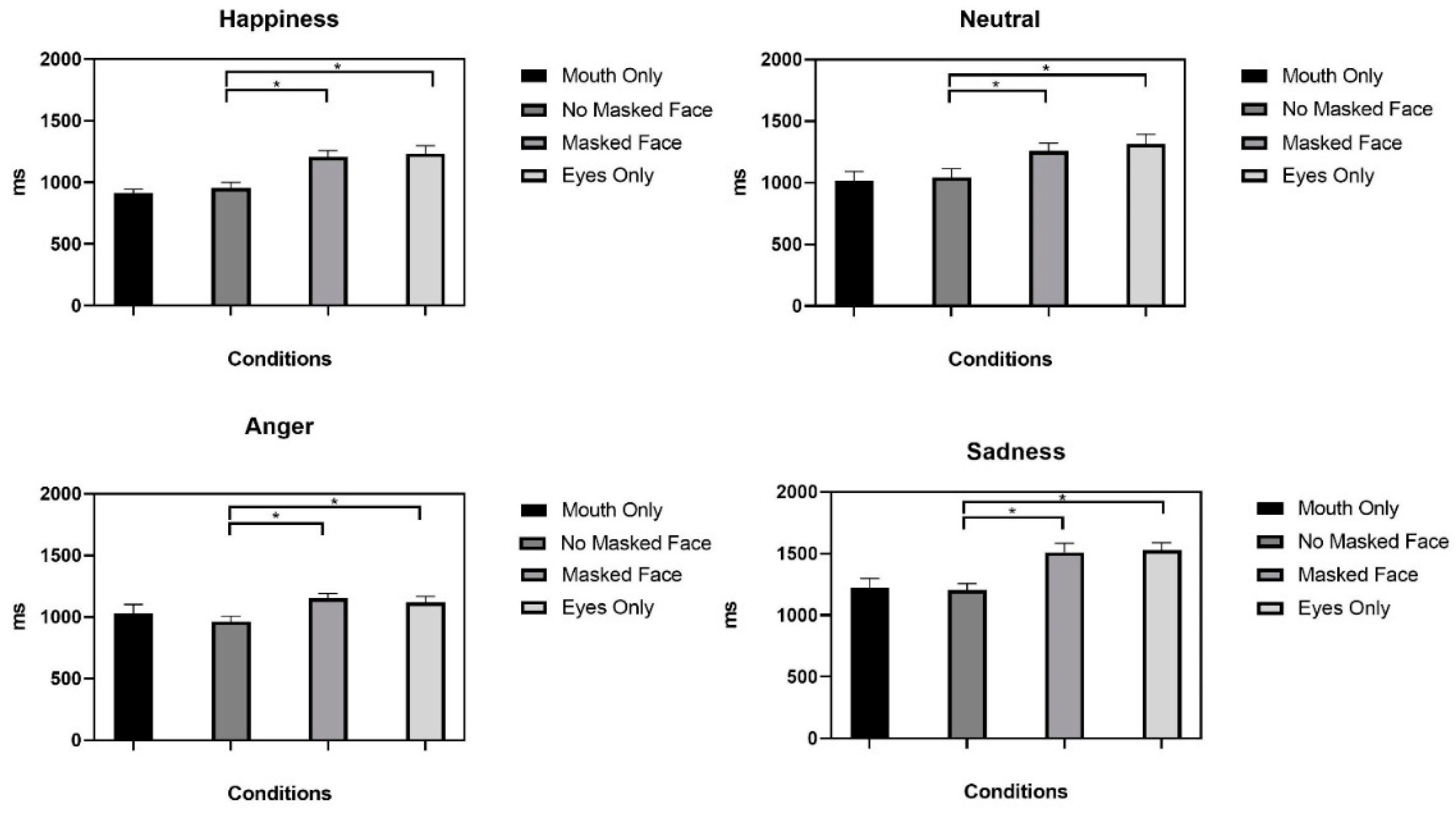

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Demographic Variable | Data |
|---|---|
| Sample Size | 31 (16 M) |
| Age (Years; Mean ± SD) | 32 ± 11 |
| Education (Years; Mean ± SD) | 17 ± 4 |
| TAS-20 Score (Mean ± SD) | 39.5 ± 9.37 |

| Emotion | Condition 1 (Mean ± SD) | Condition 2 (Mean ± SD) | Mean Difference | SD | t | df | Sig. |
|---|---|---|---|---|---|---|---|
| Happiness | NM 2.82 ± 3.94 | MO 2.15 ± 3.01 | 0.67 | 5.05 | 0.74 | 30 | 0.465 |
| Happiness | NM 2.82 ± 3.94 | M 5.10 ± 4.65 | −2.28 | 5.14 | −2.47 | 30 | 0.019 |
| Happiness | NM 2.82 ± 3.94 | EO 7.39 ± 9.36 | −4.56 | 10.50 | −2.42 | 30 | 0.022 |
| Neutral | NM 3.22 ± 4.39 | MO 4.97 ± 5.09 | −1.74 | 6.86 | −1.42 | 30 | 0.167 |
| Neutral | NM 3.22 ± 4.39 | M 7.93 ± 7.16 | −4.70 | 8.17 | −3.20 | 30 | 0.003 * |
| Neutral | NM 3.22 ± 4.39 | EO 9.27 ± 9.36 | −6.04 | 9.18 | −3.67 | 30 | 0.001 * |
| Anger | NM 3.36 ± 4.86 | MO 4.30 ± 5.74 | −0.94 | 6.15 | −0.85 | 30 | 0.401 |
| Anger | NM 3.36 ± 4.86 | M 18.81 ± 8.32 | −15.45 | 8.41 | −10.23 | 30 | <0.001 * |
| Anger | NM 3.36 ± 4.86 | EO 18.41 ± 7.27 | −15.05 | 7.19 | −11.66 | 30 | <0.001 * |
| Sadness | NM 11.15 ± 8.22 | MO 14.65 ± 10.08 | −3.49 | 9.19 | −2.12 | 30 | 0.043 |
| Sadness | NM 11.15 ± 8.22 | M 26.48 ± 12.57 | −15.32 | 15.86 | −5.38 | 30 | <0.001 * |
| Sadness | NM 11.15 ± 8.22 | EO 23.11 ± 10.90 | −11.96 | 14.01 | −4.75 | 30 | <0.001 * |
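
The t values in the table above are consistent with a paired-samples t-test (df = 30 for 31 participants), in which t is the mean difference divided by its standard error. As a minimal sketch under that assumption, the Python snippet below recomputes t for the Happiness NM vs. M row from the reported mean difference and SD. The function name `paired_t_from_summary` is hypothetical and is not taken from the original analysis.

```python
import math

def paired_t_from_summary(mean_diff, sd_diff, n):
    """Paired-samples t statistic from summary values (hypothetical helper).

    mean_diff: mean of the within-participant differences
    sd_diff:   standard deviation of those differences
    n:         number of participants (pairs)
    """
    standard_error = sd_diff / math.sqrt(n)
    return mean_diff / standard_error, n - 1

# Happiness, NM vs. M: mean difference and SD as reported in the table.
t_value, df = paired_t_from_summary(-2.28, 5.14, 31)
print(f"t({df}) = {t_value:.2f}")
```

Because the inputs are rounded to two decimals, recomputed values for other rows may differ from the reported ones in the last digit.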

| Emotion | Condition 1 (ms; Mean ± SD) | Condition 2 (ms; Mean ± SD) | Mean Difference | SD | t | df | Sig. |
|---|---|---|---|---|---|---|---|
| Happiness | NM 957.33 ± 243.10 | MO 916.41 ± 167.18 | 40.92 | 188.19 | 1.21 | 30 | 0.235 |
| Happiness | NM 957.33 ± 243.10 | M 1209.19 ± 281.68 | −251.86 | 243.84 | −5.75 | 30 | <0.001 * |
| Happiness | NM 957.33 ± 243.10 | EO 1233.80 ± 367.66 | −276.46 | 364.50 | −4.23 | 30 | <0.001 * |
| Neutral | NM 1042.88 ± 385.10 | MO 1016.86 ± 419.07 | 26.02 | 196.80 | 0.74 | 30 | 0.467 |
| Neutral | NM 1042.88 ± 385.10 | M 1258.00 ± 351.75 | −215.12 | 298.40 | −4.01 | 30 | <0.001 * |
| Neutral | NM 1042.88 ± 385.10 | EO 1313.94 ± 444.31 | −271.06 | 317.21 | −4.76 | 30 | <0.001 * |
| Anger | NM 964.94 ± 223.95 | MO 1031.59 ± 390.74 | −66.64 | 256.85 | −1.45 | 30 | 0.159 |
| Anger | NM 964.94 ± 223.95 | M 1151.74 ± 219.49 | −186.80 | 178.61 | −5.82 | 30 | <0.001 * |
| Anger | NM 964.94 ± 223.95 | EO 1118.97 ± 266.11 | −154.03 | 193.98 | −4.42 | 30 | <0.001 * |
| Sadness | NM 1203.64 ± 304.12 | MO 1223.72 ± 419.99 | −20.07 | 249.88 | −0.45 | 30 | 0.658 |
| Sadness | NM 1203.64 ± 304.12 | M 1511.57 ± 400.47 | −307.92 | 385.49 | −4.45 | 30 | <0.001 * |
| Sadness | NM 1203.64 ± 304.12 | EO 1527.12 ± 346.59 | −323.47 | 366.80 | −4.91 | 30 | <0.001 * |

| Face Manipulation Conditions | Statistic | Difficulty Describing Feelings | Difficulty Identifying Feelings | Externally-Oriented Thinking | TAS-20 Total |
|---|---|---|---|---|---|
| MO | Pearson r | 0.49 ** | 0.38 * | 0.16 | 0.48 ** |
| | p-value | 0.005 | 0.034 | 0.383 | 0.006 |
| EO | Pearson r | 0.24 | 0.35 | 0.18 | 0.37 * |
| | p-value | 0.199 | 0.056 | 0.326 | 0.038 |
| NM | Pearson r | 0.53 ** | 0.36 * | 0.09 | 0.48 ** |
| | p-value | 0.002 | 0.050 | 0.608 | 0.007 |
| MF | Pearson r | 0.07 | 0.07 | 0.08 | 0.08 |
| | p-value | 0.705 | 0.726 | 0.661 | 0.656 |
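
The p-values in the correlation table above can be approximately recovered from r and the sample size. The sketch below assumes a two-tailed test with n = 31 (the sample size given in the demographic table) and uses the standard conversion t = r·sqrt((n − 2) / (1 − r²)) with n − 2 degrees of freedom. The tail area is obtained by numerically integrating the t density with Simpson's rule, so only the standard library is needed. The helper names `t_pdf` and `pearson_p_value` are hypothetical, and this is an illustration rather than the authors' procedure.

```python
import math

def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)

def pearson_p_value(r, n, steps=2000):
    """Two-tailed p-value for a Pearson r from n pairs (hypothetical helper).

    steps must be even because Simpson's rule works on pairs of intervals.
    """
    df = n - 2
    t = r * math.sqrt(df / (1 - r * r))
    # Simpson's rule for the area under the t density between 0 and |t|.
    upper = abs(t)
    h = upper / steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3
    # The density is symmetric, so each tail holds 0.5 minus that area.
    return t, 2 * (0.5 - area)

# MO condition vs. Difficulty Describing Feelings: r = 0.49 as reported.
t_stat, p = pearson_p_value(0.49, 31)
print(f"t = {t_stat:.2f}, p = {p:.3f}")
```

Since the reported r is rounded to two decimals, the recomputed p-value should land near, but not necessarily exactly on, the tabulated 0.005.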

| Measure | Mean | S.D. | Range |
|---|---|---|---|
| TAS-20 | 39.5 | 9.4 | 26–67 |
| Difficulty Describing Feelings | 11.1 | 3.8 | 5–23 |
| Difficulty Identifying Feelings | 13.5 | 5.0 | 7–25 |
| Externally-Oriented Thinking | 16.4 | 3.0 | 13–27 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Maiorana, N.; Dini, M.; Poletti, B.; Tagini, S.; Rita Reitano, M.; Pravettoni, G.; Priori, A.; Ferrucci, R. The Effect of Surgical Masks on the Featural and Configural Processing of Emotions. Int. J. Environ. Res. Public Health 2022, 19, 2420. https://doi.org/10.3390/ijerph19042420
