Promoting Healthcare Workers’ Adoption Intention of Artificial-Intelligence-Assisted Diagnosis and Treatment: The Chain Mediation of Social Influence and Human–Computer Trust

Abstract

1. Introduction

2. Theoretical Background and Research Hypotheses

2.1. Theoretical Background

2.1.1. The Unified Theory of Acceptance and Use of Technology

2.1.2. Human–Computer Trust Theory

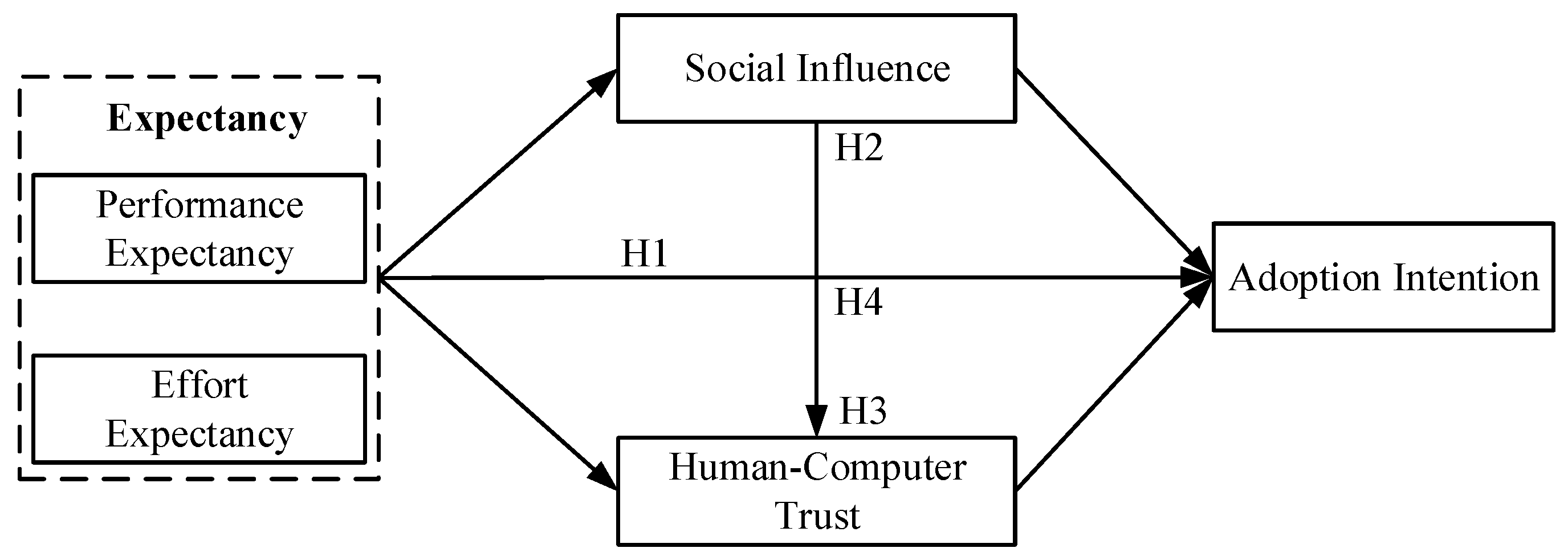

2.2. Research Hypotheses

2.2.1. Expectancy and Adoption Intention

2.2.2. The Mediating Role of Social Influence

2.2.3. The Mediating Role of Human–Computer Trust

2.2.4. The Chain Mediation Model of Social Influence and HCT

3. Materials and Methods

3.1. Participants and Data Collection

3.2. Measures

3.3. Data Analysis

4. Results

4.1. Descriptive Statistics

4.2. Confirmatory Factor Analysis

4.3. Structural Model Testing

5. Discussion

5.1. Theoretical Implications

5.2. Practical Implications

5.3. Limitations and Future Research

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Constructs | Variables | Measurement Items |
|---|---|---|
| Performance Expectancy (PE) | PE1 | AI-assisted diagnosis and treatment will enhance the efficiency of my medical consultation process. |
| | PE2 | AI-assisted diagnosis and treatment will make my work more efficient. |
| | PE3 | AI-assisted diagnosis and treatment will provide me with new abilities that I did not have before. |
| | PE4 | AI-assisted diagnosis and treatment will expand my existing knowledge base and provide new ideas. |
| Effort Expectancy (EE) | EE1 | I think the openness of AI-assisted diagnosis and treatment is clear and unambiguous. |
| | EE2 | I can skillfully use AI-assisted diagnosis and treatment. |
| | EE3 | I think getting the information I need through AI-assisted diagnosis and treatment is easy for me. |
| | EE4 | AI-assisted diagnosis and treatment doesn’t take much of my energy. |
| Social Influence (SI) | SI1 | People around me use AI-assisted diagnosis and treatment. |
| | SI2 | People who are important to me think that I should use AI-assisted diagnosis and treatment. |
| | SI3 | My professional interaction with my peers requires knowledge of AI-assisted diagnosis and treatment. |
| Human–Computer Trust (HCT) | HCT1 | I believe AI-assisted diagnosis and treatment will help me do my job. |
| | HCT2 | I trust AI-assisted diagnosis and treatment to understand my work needs and preferences. |
| | HCT3 | I believe AI-assisted diagnosis and treatment is an effective tool. |
| | HCT4 | I think AI-assisted diagnosis and treatment works well for diagnostic and treatment purposes. |
| | HCT5 | I believe AI-assisted diagnosis and treatment has all the functions I expect in a medical procedure. |
| | HCT6 | I can always rely on AI-assisted diagnosis and treatment. |
| | HCT7 | I can trust the reference information provided by AI-assisted diagnosis and treatment. |
| Adoption Intention (ADI) | ADI1 | I am willing to learn and use AI-assisted diagnosis and treatment. |
| | ADI2 | I intend to use AI-assisted diagnosis and treatment in the future. |
| | ADI3 | I would advise people around me to use AI-assisted diagnosis and treatment. |

References

- Malik, P.; Pathania, M.; Rathaur, V.K. Overview of artificial intelligence in medicine. J. Fam. Med. Prim. Care 2019, 8, 2328–2331.
- Brynjolfsson, E.; McAfee, A. What’s driving the machine learning explosion? Harv. Bus. Rev. 2017, 1, 1–31.
- He, J.; Baxter, S.L.; Xu, J.; Xu, J.M.; Zhou, X.; Zhang, K. The practical implementation of artificial intelligence technologies in medicine. Nat. Med. 2019, 25, 30–36.
- Huo, W.; Zheng, G.; Yan, J.; Sun, L.; Han, L. Interacting with medical artificial intelligence: Integrating self-responsibility attribution, human-computer trust, and personality. Comput. Hum. Behav. 2022, 132, 107253.
- Vinod, D.N.; Prabaharan, S.R.S. Data science and the role of Artificial Intelligence in achieving the fast diagnosis of COVID-19. Chaos Solitons Fractals 2020, 140, 110182.
- Chen, Y.W.; Stanley, K.; Att, W. Artificial intelligence in dentistry: Current applications and future perspectives. Quintessence Int. 2020, 51, 248–257.
- Hung, K.; Montalvao, C.; Tanaka, R.; Kawai, T.; Bornstein, M.M. The use and performance of artificial intelligence applications in dental and maxillofacial radiology: A systematic review. Dentomaxillofac. Radiol. 2020, 49, 20190107.
- Lee, J.H.; Kim, D.H.; Jeong, S.N.; Choi, S.H. Detection and diagnosis of dental caries using a deep learning-based convolutional neural network algorithm. J. Dent. 2018, 77, 106–111.
- Casalegno, F.; Newton, T.; Daher, R.; Abdelaziz, M.; Lodi-Rizzini, A.; Schürmann, F.; Krejci, I.; Markram, H. Caries detection with near-infrared transillumination using deep learning. J. Dent. Res. 2019, 98, 1227–1233.
- Holsinger, F.C. A flexible, single-arm robotic surgical system for transoral resection of the tonsil and lateral pharyngeal wall: Next-generation robotic head and neck surgery. Laryngoscope 2016, 126, 864–869.
- Genden, E.M.; Desai, S.; Sung, C.K. Transoral robotic surgery for the management of head and neck cancer: A preliminary experience. Head Neck 2009, 31, 283–289.
- Shademan, A.; Decker, R.S.; Opfermann, J.D.; Leonard, S.; Krieger, A.; Kim, P.C. Supervised autonomous robotic soft tissue surgery. Sci. Transl. Med. 2016, 8, 337ra64.
- Yu, K.H.; Beam, A.L.; Kohane, I.S. Artificial intelligence in healthcare. Nat. Biomed. Eng. 2018, 2, 719–731.
- Qin, Y.; Zhou, R.; Wu, Q.; Huang, X.; Chen, X.; Wang, W.; Wang, X.; Xu, H.; Zheng, J.; Qian, S.; et al. The effect of nursing participation in the design of a critical care information system: A case study in a Chinese hospital. BMC Med. Inform. Decis. Mak. 2017, 17, 1–12.
- Vockley, M. Game-changing technologies: 10 promising innovations for healthcare. Biomed. Instrum. Technol. 2017, 51, 96–108.
- Vanman, E.J.; Kappas, A. “Danger, Will Robinson!” The challenges of social robots for intergroup relations. Soc. Personal. Psychol. Compass 2019, 13, e12489.
- Robin, A.L.; Muir, K.W. Medication adherence in patients with ocular hypertension or glaucoma. Expert Rev. Ophthalmol. 2019, 14, 199–210.
- Lötsch, J.; Kringel, D.; Ultsch, A. Explainable artificial intelligence (XAI) in biomedicine: Making AI decisions trustworthy for physicians and patients. BioMedInformatics 2021, 2, 1.
- Dietvorst, B.J.; Simmons, J.P.; Massey, C. Algorithm aversion: People erroneously avoid algorithms after seeing them err. J. Exp. Psychol. Gen. 2014, 144, 114–126.
- Wang, H.; Tao, D.; Yu, N.; Qu, X. Understanding consumer acceptance of healthcare wearable devices: An integrated model of UTAUT and TTF. Int. J. Med. Inform. 2020, 139, 104156.
- Dhagarra, D.; Goswami, M.; Kumar, G. Impact of trust and privacy concerns on technology acceptance in healthcare: An Indian perspective. Int. J. Med. Inform. 2020, 141, 104164.
- Fernandes, T.; Oliveira, E. Understanding consumers’ acceptance of automated technologies in service encounters: Drivers of digital voice assistants adoption. J. Bus. Res. 2021, 122, 180–191.
- Alsyouf, A.; Ishak, A.K.; Lutfi, A.; Alhazmi, F.N.; Al-Okaily, M. The role of personality and top management support in continuance intention to use electronic health record systems among nurses. Int. J. Environ. Res. Public Health 2022, 19, 11125.
- Pikkemaat, M.; Thulesius, H.; Nymberg, V.M. Swedish primary care physicians’ intentions to use telemedicine: A survey using a new questionnaire–physician attitudes and intentions to use telemedicine (PAIT). Int. J. Gen. Med. 2021, 14, 3445–3455.
- Hossain, A.; Quaresma, R.; Rahman, H. Investigating factors influencing the physicians’ adoption of electronic health record (EHR) in healthcare system of Bangladesh: An empirical study. Int. J. Inf. Manag. 2019, 44, 76–87.
- Alsyouf, A.; Ishak, A.K. Understanding EHRs continuance intention to use from the perspectives of UTAUT: Practice environment moderating effect and top management support as predictor variables. Int. J. Electron. Healthc. 2018, 10, 24–59.
- Fan, W.; Liu, J.; Zhu, S.; Pardalos, P.M. Investigating the impacting factors for the healthcare professionals to adopt artificial intelligence-based medical diagnosis support system (AIMDSS). Ann. Oper. Res. 2020, 294, 567–592.
- Bawack, R.E.; Kamdjoug, J.R.K. Adequacy of UTAUT in clinician adoption of health information systems in developing countries: The case of Cameroon. Int. J. Med. Inform. 2018, 109, 15–22.
- Adenuga, K.I.; Iahad, N.A.; Miskon, S. Towards reinforcing telemedicine adoption amongst clinicians in Nigeria. Int. J. Med. Inform. 2017, 104, 84–96.
- Liu, C.F.; Cheng, T.J. Exploring critical factors influencing physicians’ acceptance of mobile electronic medical records based on the dual-factor model: A validation in Taiwan. BMC Med. Inform. Decis. Mak. 2015, 15, 1–12.
- Hsieh, P.J. Healthcare professionals’ use of health clouds: Integrating technology acceptance and status quo bias perspectives. Int. J. Med. Inform. 2015, 84, 512–523.
- Wu, L.; Li, J.Y.; Fu, C.Y. The adoption of mobile healthcare by hospital’s professionals: An integrative perspective. Decis. Support Syst. 2011, 51, 587–596.
- Egea, J.M.O.; González, M.V.R. Explaining physicians’ acceptance of EHCR systems: An extension of TAM with trust and risk factors. Comput. Hum. Behav. 2011, 27, 319–332.
- Davis, F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989, 13, 319–340.
- Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User acceptance of information technology: Toward a unified view. MIS Q. 2003, 27, 425–478.
- Huang, C.Y.; Yang, M.C. Empirical investigation of factors influencing consumer intention to use an artificial intelligence-powered mobile application for weight loss and health management. Telemed. e-Health 2020, 26, 1240–1251.
- Gerli, P.; Clement, J.; Esposito, G.; Mora, L.; Crutzen, N. The hidden power of emotions: How psychological factors influence skill development in smart technology adoption. Technol. Forecast. Soc. Chang. 2022, 180, 121721.
- Fernández-Llamas, C.; Conde, M.A.; Rodríguez-Lera, F.J.; Rodríguez-Sedano, F.J.; García, F. May I teach you? Students’ behavior when lectured by robotic vs. human teachers. Comput. Hum. Behav. 2018, 80, 460–469.
- Hancock, P.A.; Billings, D.R.; Schaefer, K.E.; Chen, J.Y.; De Visser, E.J.; Parasuraman, R. A meta-analysis of factors affecting trust in human-robot interaction. Hum. Factors 2011, 53, 517–527.
- Glikson, E.; Woolley, A.W. Human trust in artificial intelligence: Review of empirical research. Acad. Manag. Ann. 2020, 14, 627–660.
- Choi, J.K.; Ji, Y.G. Investigating the importance of trust on adopting an autonomous vehicle. Int. J. Hum.-Comput. Int. 2015, 31, 692–702.
- Madsen, M.; Gregor, S. Measuring human-computer trust. In Proceedings of the 11th Australasian Conference on Information Systems, Brisbane, Australia, 6–8 December 2000; Volume 53, pp. 6–8.
- Van Lange, P.A. Generalized trust: Four lessons from genetics and culture. Curr. Dir. Psychol. Sci. 2015, 24, 71–76.
- Zhang, T.; Tao, D.; Qu, X.; Zhang, X.; Zeng, J.; Zhu, H.; Zhu, H. Automated vehicle acceptance in China: Social influence and initial trust are key determinants. Transp. Res. Pt. C-Emerg. Technol. 2020, 112, 220–233.
- Fishbein, M.; Ajzen, I. Belief, Attitude, Intention, and Behavior: An Introduction to Theory and Research; Addison-Wesley: Reading, MA, USA, 1975.
- Ajzen, I. Perceived behavioral control, self-efficacy, locus of control and the theory of planned behavior. J. Appl. Soc. Psychol. 2002, 32, 665–683.
- Hardjono, T.; Deegan, P.; Clippinger, J.H. On the design of trustworthy compute frameworks for self-organizing digital institutions. In Proceedings of the International Conference on Social Computing and Social Media, Crete, Greece, 22–27 June 2014; pp. 342–353.
- Huijts, N.M.; Molin, E.J.; Steg, L. Psychological factors influencing sustainable energy technology acceptance: A review-based comprehensive framework. Renew. Sust. Energ. Rev. 2012, 16, 525–531.
- Liu, P.; Yang, R.; Xu, Z. Public acceptance of fully automated driving: Effects of social trust and risk/benefit perceptions. Risk Anal. 2019, 39, 326–341.
- Robinette, P.; Howard, A.M.; Wagner, A.R. Effect of robot performance on human-robot trust in time-critical situations. IEEE Trans. Hum.-Mach. Syst. 2017, 47, 425–436.
- Johnson, P. Human Computer Interaction: Psychology, Task Analysis and Software Engineering; McGraw-Hill: London, UK, 1992.
- Williams, A.; Sherman, I.; Smarr, S.; Posadas, B.; Gilbert, J.E. Human trust factors in image analysis. In Proceedings of the International Conference on Applied Human Factors and Ergonomics, San Diego, CA, USA, 20–24 July 2018; pp. 3–12.
- Nordheim, C.B.; Følstad, A.; Bjørkli, C.A. An initial model of trust in chatbots for customer service-findings from a questionnaire study. Interact. Comput. 2019, 31, 317–335.
- Li, X.; Hess, T.J.; Valacich, J.S. Using attitude and social influence to develop an extended trust model for information systems. ACM SIGMIS Database: Database Adv. Inf. Syst. 2006, 37, 108–124.
- Li, W.; Mao, Y.; Zhou, L. The impact of interactivity on user satisfaction in digital social reading: Social presence as a mediator. Int. J. Hum.-Comput. Int. 2021, 37, 1636–1647.
- Ifinedo, P. Applying uses and gratifications theory and social influence processes to understand students’ pervasive adoption of social networking sites: Perspectives from the Americas. Int. J. Inf. Manag. 2016, 36, 192–206.
- Shiferaw, K.B.; Mehari, E.A. Modeling predictors of acceptance and use of electronic medical record system in a resource limited setting: Using modified UTAUT model. Inform. Med. Unlocked 2019, 17, 100182.
- Oldeweme, A.; Märtins, J.; Westmattelmann, D.; Schewe, G. The role of transparency, trust, and social influence on uncertainty reduction in times of pandemics: Empirical study on the adoption of COVID-19 tracing apps. J. Med. Internet Res. 2021, 23, e25893.
- Alsyouf, A.; Lutfi, A.; Al-Bsheish, M.; Jarrar, M.T.; Al-Mugheed, K.; Almaiah, M.A.; Alhazmi, F.N.; Masa’deh, R.E.; Anshasi, R.J.; Ashour, A. Exposure Detection Applications Acceptance: The Case of COVID-19. Int. J. Environ. Res. Public Health 2022, 19, 7307.
- Allam, H.; Qusa, H.; Alameer, O.; Ahmad, J.; Shoib, E.; Tammi, H. Theoretical perspective of technology acceptance models: Towards a unified model for social media applications. In Proceedings of the 2019 Sixth HCT Information Technology Trends (ITT), Ras Al Khaimah, United Arab Emirates, 20–21 November 2019; pp. 154–159.
- Hengstler, M.; Enkel, E.; Duelli, S. Applied artificial intelligence and trust-The case of autonomous vehicles and medical assistance devices. Technol. Forecast. Soc. Chang. 2016, 105, 105–120.
- Choung, H.; David, P.; Ross, A. Trust in AI and its role in the acceptance of AI technologies. Int. J. Hum.-Comput. Int. 2022, 1–13.
- De Angelis, F.; Pranno, N.; Franchina, A.; Di Carlo, S.; Brauner, E.; Ferri, A.; Pellegrino, G.; Grecchi, E.; Goker, F.; Stefanelli, L.V. Artificial intelligence: A new diagnostic software in dentistry: A preliminary performance diagnostic study. Int. J. Environ. Res. Public Health 2022, 19, 1728.
- Shin, D. How do people judge the credibility of algorithmic sources? AI Soc. 2022, 37, 81–96.
- Hoff, K.A.; Bashir, M. Trust in automation: Integrating empirical evidence on factors that influence trust. Hum. Factors 2015, 57, 407–434.
- Liu, L.L.; He, Y.M.; Liu, X.D. Investigation on patients’ cognition and trust in artificial intelligence medicine. Chin. Med. Ethics 2019, 32, 986–990.
- Yang, C.C.; Li, C.L.; Yeh, T.F.; Chang, Y.C. Assessing older adults’ intentions to use a smartphone: Using the meta-unified theory of the acceptance and use of technology. Int. J. Environ. Res. Public Health 2022, 19, 5403.
- Prakash, A.V.; Das, S. Medical practitioner’s adoption of intelligent clinical diagnostic decision support systems: A mixed-methods study. Inf. Manag. 2021, 58, 103524.
- Siau, K.; Wang, W. Building trust in artificial intelligence, machine learning, and robotics. Cutter Bus. Technol. J. 2018, 31, 47–53.
- Walczak, R.; Kludacz-Alessandri, M.; Hawrysz, L. Use of telemedicine technology among general practitioners during COVID-19: A modified technology acceptance model study in Poland. Int. J. Environ. Res. Public Health 2022, 19, 10937.
- Du, C.Z.; Gu, J. Adoptability and limitation of cancer treatment guidelines: A Chinese oncologist’s perspective. Chin. Med. J. 2012, 125, 725–727.
- Kijsanayotin, B.; Pannarunothai, S.; Speedie, S.M. Factors influencing health information technology adoption in Thailand’s community health centers: Applying the UTAUT model. Int. J. Med. Inform. 2009, 78, 404–416.
- Huqh, M.Z.U.; Abdullah, J.Y.; Wong, L.S.; Jamayet, N.B.; Alam, M.K.; Rashid, Q.F.; Husein, A.; Ahmad, W.M.A.W.; Eusufzai, S.Z.; Prasadh, S.; et al. Clinical Applications of Artificial Intelligence and Machine Learning in Children with Cleft Lip and Palate-A Systematic Review. Int. J. Environ. Res. Public Health 2022, 19, 10860.
- Damoah, I.S.; Ayakwah, A.; Tingbani, I. Artificial intelligence (AI)-enhanced medical drones in the healthcare supply chain (HSC) for sustainability development: A case study. J. Clean Prod. 2021, 328, 129598.
- Li, X.; Cheng, M.; Xu, J. Leaders’ innovation expectation and nurses’ innovation behaviour in conjunction with artificial intelligence: The chain mediation of job control and creative self-efficacy. J. Nurs. Manag. 2022.
- Martikainen, S.; Kaipio, J.; Lääveri, T. End-user participation in health information systems (HIS) development: Physicians’ and nurses’ experiences. Int. J. Med. Inform. 2020, 137, 104117.
- Brislin, R.W. Translation and content analysis of oral and written material. In Handbook of Cross-Cultural Psychology: Methodology; Triandis, H.C., Berry, J.W., Eds.; Allyn and Bacon: Boston, MA, USA, 1980; pp. 389–444.
- Fornell, C.; Larcker, D.F. Structural equation models with unobservable variables and measurement error: Algebra and statistics. J. Mark. Res. 1981, 18, 382–388.
- Gulati, S.; Sousa, S.; Lamas, D. Modelling trust in human-like technologies. In Proceedings of the 9th Indian Conference on Human Computer Interaction, Allahabad, India, 16–18 December 2018; pp. 1–10.
- Zhou, H.; Long, L. Statistical remedies for common method biases. Adv. Psychol. Sci. 2004, 12, 942–950.
- Meade, A.W.; Watson, A.M.; Kroustalis, C.M. Assessing common methods bias in organizational research. In Proceedings of the 22nd Annual Meeting of the Society for Industrial and Organizational Psychology, New York, NY, USA, 27 April 2007; pp. 1–10.
- Aguirre-Urreta, M.I.; Hu, J. Detecting common method bias: Performance of the Harman’s single-factor test. ACM SIGMIS Database: Database Adv. Inf. Syst. 2019, 50, 45–70.
- Podsakoff, P.M.; MacKenzie, S.B.; Lee, J.Y.; Podsakoff, N.P. Common method biases in behavioral research: A critical review of the literature and recommended remedies. J. Appl. Psychol. 2003, 88, 879–903.
- Hayes, A.F. Introduction to Mediation, Moderation, and Conditional Process Analysis: A Regression-Based Approach; Guilford Publications: New York, NY, USA, 2013.
- Byrne, B.M. Structural Equation Modeling with AMOS: Basic Concepts, Applications, and Programming, 3rd ed.; Routledge: New York, NY, USA, 2016.
- Fornell, C.; Larcker, D.F. Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 1981, 18, 39–50.
- Chin, W.W.; Newsted, P.R. Structural equation modeling analysis with small samples using partial least squares. In Statistical Strategies for Small Sample Research; Hoyle, R.H., Ed.; Sage Publications: Thousand Oaks, CA, USA, 1999; Volume 1, pp. 307–341.
- Hayes, A.F.; Preacher, K.J. Statistical mediation analysis with a multi-categorical independent variable. Br. J. Math. Stat. Psychol. 2014, 67, 451–470.
- Wu, B.; Chen, X. Continuance intention to use MOOCs: Integrating the technology acceptance model (TAM) and task technology fit (TTF) model. Comput. Hum. Behav. 2017, 67, 221–232.
- Lin, Y.H.; Huang, G.S.; Ho, Y.L.; Lou, M.F. Patient willingness to undergo a two-week free trial of a telemedicine service for coronary artery disease after coronary intervention: A mixed-methods study. J. Nurs. Manag. 2020, 28, 407–416.
- Raymond, L.; Castonguay, A.; Doyon, O.; Paré, G. Nurse practitioners’ involvement and experience with AI-based health technologies: A systematic review. Appl. Nurs. Res. 2022, 151604.
- Chuah, S.H.W.; Rauschnabel, P.A.; Krey, N.; Nguyen, B.; Ramayah, T.; Lade, S. Wearable technologies: The role of usefulness and visibility in smartwatch adoption. Comput. Hum. Behav. 2016, 65, 276–284.
- Lysaght, T.; Lim, H.Y.; Xafis, V.; Ngiam, K.Y. AI-assisted decision-making in healthcare: The application of an ethics framework for big data in health and research. Asian Bioeth. Rev. 2019, 11, 299–314.
- Im, I.; Hong, S.; Kang, M.S. An international comparison of technology adoption: Testing the UTAUT model. Inf. Manag. 2011, 48, 1–8.
- Li, J.; Ma, Q.; Chan, A.H.; Man, S. Health monitoring through wearable technologies for older adults: Smart wearables acceptance model. Appl. Ergon. 2019, 75, 162–169.
- Webb, T.L.; Sheeran, P. Does changing behavioral intentions engender behavior change? A meta-analysis of the experimental evidence. Psychol. Bull. 2006, 132, 249–268.

| Authors | Context | Theoretical Basis | Region | Key Findings |
|---|---|---|---|---|
| Alsyouf et al. (2022) [23] | Nurses’ continuance intention of EHR | UTAUT, ECT, FFM | Jordan | Performance expectancy mediates the relationships between the different personality dimensions and continuance intention, with conscientiousness acting as a moderator. |
| Pikkemaat et al. (2021) [24] | Physicians’ adoption intention of telemedicine | TPB | Sweden | Attitudes and perceived behavioral control are significant predictors of physicians’ intention to use telemedicine. |
| Hossain et al. (2019) [25] | Physicians’ adoption intention of EHR | Extended UTAUT | Bangladesh | Social influence, facilitating conditions, and personal innovativeness in information technology significantly influence physicians’ intention to adopt the EHR system. |
| Alsyouf and Ishak (2018) [26] | Nurses’ continuance intention to use EHR | UTAUT and TMS | Jordan | Effort expectancy, performance expectancy, and facilitating conditions positively influence nurses’ continuance intention to use EHR, whereas top management support is significantly and negatively related to it. |
| Fan et al. (2018) [27] | Healthcare workers’ adoption intention of AIMDSS | UTAUT, TTF, trust theory | China | Initial trust mediates the relationship between UTAUT factors and behavioral intentions. |
| Bawack and Kamdjoug (2018) [28] | Clinicians’ adoption intention of HIS | Extended UTAUT | Cameroon | Performance expectancy, effort expectancy, social influence, and facilitating conditions have a positive direct effect on clinicians’ adoption intention of HIS. |
| Adenuga et al. (2017) [29] | Clinicians’ adoption intention of telemedicine | UTAUT | Nigeria | Performance expectancy, effort expectancy, facilitating conditions, and reinforcement factor have significant effects on clinicians’ adoption intention of telemedicine. |
| Liu and Cheng (2015) [30] | Physicians’ adoption intention of MEMR | The dual-factor model | Taiwan | Physicians’ intention to use MEMRs is significantly and directly related to perceived ease of use and perceived usefulness, whereas perceived threat has a negative influence on their adoption intention. |
| Hsieh (2015) [31] | Healthcare professionals’ adoption intention of health clouds | TPB and status quo bias theory | Taiwan | Attitude, subjective norm, and perceived behavioral control have positive and direct effects on healthcare professionals’ intention to use the health cloud. |
| Wu et al. (2011) [32] | Healthcare professionals’ adoption intention of mobile healthcare | TAM and TPB | Taiwan | Perceived usefulness, attitude, perceived behavioral control, and subjective norm have a positive effect on healthcare professionals’ adoption intention of mobile healthcare. |
| Egea and González (2011) [33] | Physicians’ acceptance of EHCR | Extended TAM | Southern Spain | Trust fully mediated the influences of perceived risk and information integrity perceptions on physicians’ acceptance of EHCR systems. |

| Characteristics | Frequency (f) | Percentage (%) |
|---|---|---|
| Gender | | |
| Male | 99 | 28.9 |
| Female | 244 | 71.1 |
| Age (years) | | |
| ≤20 | 37 | 10.8 |
| 21–30 | 105 | 30.6 |
| 31–40 | 122 | 35.6 |
| 41–50 | 57 | 16.6 |
| ≥51 | 22 | 6.4 |
| Marital status | | |
| Single | 118 | 34.4 |
| Married | 163 | 47.5 |
| Divorced | 62 | 18.1 |
| Education | | |
| High school | 27 | 7.9 |
| Junior college | 66 | 19.2 |
| University | 169 | 49.3 |
| Master and above | 81 | 23.6 |
| Clinical experience (years) | | |
| <1 | 23 | 6.7 |
| 1–5 | 133 | 38.8 |
| 6–10 | 86 | 25.1 |
| 11–15 | 54 | 15.7 |
| 16–20 | 32 | 9.3 |
| >20 | 15 | 4.4 |
| Position | | |
| Doctor | 152 | 44.3 |
| Nurse | 124 | 36.2 |
| Medical technician | 67 | 19.5 |
| Type of hospital | | |
| Tertiary | 198 | 57.7 |
| Secondary | 145 | 42.3 |

| Variable | M | SD | AVE | PE | EE | SI | HCT | ADI |
|---|---|---|---|---|---|---|---|---|
| PE | 3.96 | 0.75 | 0.697 | 0.835 | | | | |
| EE | 3.11 | 0.97 | 0.779 | 0.276 ** | 0.883 | | | |
| SI | 3.53 | 0.75 | 0.800 | 0.583 ** | 0.391 ** | 0.894 | | |
| HCT | 3.44 | 0.72 | 0.623 | 0.559 ** | 0.558 ** | 0.451 ** | 0.789 | |
| ADI | 3.70 | 0.72 | 0.650 | 0.441 ** | 0.261 ** | 0.511 ** | 0.604 ** | 0.806 |
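
The diagonal entries of the correlation table match the square roots of the AVE values, which is the layout typically used for a Fornell–Larcker discriminant validity check. As a minimal sketch, assuming that criterion is what the table is meant to support, the Python snippet below recomputes the diagonal and looks for any inter-construct correlation that reaches the smaller of the two square-root AVE values. The function names `sqrt_ave` and `fornell_larcker_violations` are illustrative only, and the numbers are transcribed from the table.

```python
import math

# AVE values and inter-construct correlations transcribed from the table.
ave = {"PE": 0.697, "EE": 0.779, "SI": 0.800, "HCT": 0.623, "ADI": 0.650}
corr = {
    ("PE", "EE"): 0.276,
    ("PE", "SI"): 0.583,
    ("EE", "SI"): 0.391,
    ("PE", "HCT"): 0.559,
    ("EE", "HCT"): 0.558,
    ("SI", "HCT"): 0.451,
    ("PE", "ADI"): 0.441,
    ("EE", "ADI"): 0.261,
    ("SI", "ADI"): 0.511,
    ("HCT", "ADI"): 0.604,
}


def sqrt_ave(values):
    """Return the square root of each AVE, rounded to three decimals."""
    return {name: round(math.sqrt(v), 3) for name, v in values.items()}


def fornell_larcker_violations(values, correlations):
    """List construct pairs whose correlation is not below both sqrt(AVE) values."""
    roots = {name: math.sqrt(v) for name, v in values.items()}
    return [
        (a, b)
        for (a, b), r in correlations.items()
        if abs(r) >= min(roots[a], roots[b])
    ]


diagonal = sqrt_ave(ave)
violations = fornell_larcker_violations(ave, corr)
print(diagonal)
print("violations:", violations)
```

An empty `violations` list would indicate that every construct shares more variance with its own items than with any other construct, under the assumed criterion.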

| Model | Variables | χ²/df | GFI | NFI | RFI | CFI | RMSEA |
|---|---|---|---|---|---|---|---|
| Five-factor model | PE, EE, SI, HCT, ADI | 2.213 | 0.901 | 0.937 | 0.915 | 0.964 | 0.068 |
| Four-factor model | PE + EE, SI, HCT, ADI | 3.021 | 0.851 | 0.903 | 0.884 | 0.933 | 0.088 |
| Three-factor model | PE + EE, SI + HCT, ADI | 4.676 | 0.763 | 0.845 | 0.821 | 0.873 | 0.118 |
| Two-factor model | PE + EE + SI + HCT, ADI | 6.469 | 0.648 | 0.778 | 0.752 | 0.805 | 0.144 |
| One-factor model | PE + EE + SI + HCT + ADI | 10.870 | 0.481 | 0.621 | 0.583 | 0.642 | 0.194 |
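
To make the model comparison concrete, the following Python sketch screens each competing model against commonly cited rule-of-thumb cutoffs (χ²/df below 3, GFI, NFI, RFI, and CFI above 0.90, RMSEA below 0.08). These cutoffs are an assumption for illustration and are not taken from this article; the fit values are transcribed from the table, and the names `CUTOFFS` and `passes_cutoffs` are hypothetical.

```python
# Fit indices transcribed from the model comparison table.
models = {
    "Five-factor": {"chi2_df": 2.213, "GFI": 0.901, "NFI": 0.937, "RFI": 0.915, "CFI": 0.964, "RMSEA": 0.068},
    "Four-factor": {"chi2_df": 3.021, "GFI": 0.851, "NFI": 0.903, "RFI": 0.884, "CFI": 0.933, "RMSEA": 0.088},
    "Three-factor": {"chi2_df": 4.676, "GFI": 0.763, "NFI": 0.845, "RFI": 0.821, "CFI": 0.873, "RMSEA": 0.118},
    "Two-factor": {"chi2_df": 6.469, "GFI": 0.648, "NFI": 0.778, "RFI": 0.752, "CFI": 0.805, "RMSEA": 0.144},
    "One-factor": {"chi2_df": 10.870, "GFI": 0.481, "NFI": 0.621, "RFI": 0.583, "CFI": 0.642, "RMSEA": 0.194},
}

# Assumed rule-of-thumb cutoffs: ("max", x) means the index must be below x,
# ("min", x) means it must be above x.
CUTOFFS = {
    "chi2_df": ("max", 3.0),
    "GFI": ("min", 0.90),
    "NFI": ("min", 0.90),
    "RFI": ("min", 0.90),
    "CFI": ("min", 0.90),
    "RMSEA": ("max", 0.08),
}


def passes_cutoffs(fit):
    """Return True only if every index satisfies its assumed cutoff."""
    for index, (kind, bound) in CUTOFFS.items():
        value = fit[index]
        if kind == "max" and not value < bound:
            return False
        if kind == "min" and not value > bound:
            return False
    return True


acceptable = [name for name, fit in models.items() if passes_cutoffs(fit)]
print(acceptable)
```

Under these assumed cutoffs, only models that satisfy all six indices are listed, so the comparison can be read at a glance.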

| Effect | Point Estimate (X = PE) | Boot SE (X = PE) | 95% CI (X = PE) | Point Estimate (X = EE) | Boot SE (X = EE) | 95% CI (X = EE) |
|---|---|---|---|---|---|---|
| Total indirect effect of X on ADI | 0.349 | 0.064 | [0.230, 0.481] | 0.463 | 0.064 | [0.332, 0.585] |
| Indirect 1: X → SI → ADI | 0.261 | 0.061 | [0.147, 0.386] | 0.335 | 0.061 | [0.213, 0.456] |
| Indirect 2: X → HCT → ADI | 0.043 | 0.020 | [0.010, 0.088] | 0.088 | 0.033 | [0.027, 0.157] |
| Indirect 3: X → SI → HCT → ADI | 0.045 | 0.020 | [0.011, 0.090] | 0.040 | 0.017 | [0.012, 0.077] |
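
In a chain (serial) mediation model with two mediators, the total indirect effect is the sum of the three specific indirect paths, and a bootstrap confidence interval that excludes zero is the usual evidence for a path. The Python sketch below checks both properties against the values transcribed from the table. It only re-reads the reported numbers and does not rerun the bootstrap; the helper names `paths_sum_to_total` and `ci_excludes_zero` are illustrative.

```python
# (point estimate, CI lower, CI upper) transcribed from the mediation table.
effects = {
    "PE": {
        "total": (0.349, 0.230, 0.481),
        "paths": {
            "X->SI->ADI": (0.261, 0.147, 0.386),
            "X->HCT->ADI": (0.043, 0.010, 0.088),
            "X->SI->HCT->ADI": (0.045, 0.011, 0.090),
        },
    },
    "EE": {
        "total": (0.463, 0.332, 0.585),
        "paths": {
            "X->SI->ADI": (0.335, 0.213, 0.456),
            "X->HCT->ADI": (0.088, 0.027, 0.157),
            "X->SI->HCT->ADI": (0.040, 0.012, 0.077),
        },
    },
}


def paths_sum_to_total(entry, digits=3):
    """Check that the specific indirect effects add up to the reported total."""
    summed = sum(estimate for estimate, _, _ in entry["paths"].values())
    return round(summed, digits) == round(entry["total"][0], digits)


def ci_excludes_zero(interval):
    """A path is supported when its confidence interval does not contain zero."""
    _, lower, upper = interval
    return lower > 0 or upper < 0


consistent = {x: paths_sum_to_total(entry) for x, entry in effects.items()}
supported = {
    x: [name for name, ci in entry["paths"].items() if ci_excludes_zero(ci)]
    for x, entry in effects.items()
}
print(consistent)
print(supported)
```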

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cheng, M.; Li, X.; Xu, J. Promoting Healthcare Workers’ Adoption Intention of Artificial-Intelligence-Assisted Diagnosis and Treatment: The Chain Mediation of Social Influence and Human–Computer Trust. Int. J. Environ. Res. Public Health 2022, 19, 13311. https://doi.org/10.3390/ijerph192013311
