Applying a User Centred Design Approach to Optimise a Workplace Initiative for Wide-Scale Implementation

Abstract

1. Introduction

2. Methods

2.1. Methodological Approach and Participants

2.2. Redesign Process and Data Collection

2.2.1. Workplace Users

2.2.2. Practice and Policy Partners

2.2.3. Core Expert Team

2.3. Analysis

3. Results

3.1. Optimising Program Reach

“...make it more fun and interactive” Champion 112

3.2. Measuring Program Effectiveness

“People did not complete [the] survey because they feared what would happen to their data” Champion 121

“Maybe a little summary at the beginning of the survey on what the survey was aiming to achieve and goals at the end of the survey” Champion 126

“…need for feedback on the activities that were put in place…we did not get outcomes” Champion 102

3.3. Enhancing and Tracking Adoption

“need to be able to promote it more…to get management on board” Champion 102

3.4. Supporting Champion Implementation

“I have been using many of the posters available and think some more would be good, as I am rotating them around so they don’t become part of the furniture. I think it’s more useful for the posters to have a tip (e.g., walk over rather than sending an email) instead of a general statement (e.g., take a stand for your health)” Champion 168

“We are a small dynamic team, in a small office and already had all the equipment necessary. We could have jumped a few of the steps in the program.” Champion 422

3.5. Measuring Program Maintenance

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Stakeholder Group | Who They Were | How They Contributed |
|---|---|---|
| Core expert team | | Led redesign and integration of changes within online toolkit |
| Policy and practice partners | | Identified priority needs of organisations and provided formal and informal feedback through stakeholder meetings and emails and telephone calls with core team |
| Workplace end users | | Provided formal and informal feedback via online survey data, email and phone feedback, direct discovery interviews |

| Term * | Example(s) of What Was Done |

|---|---|

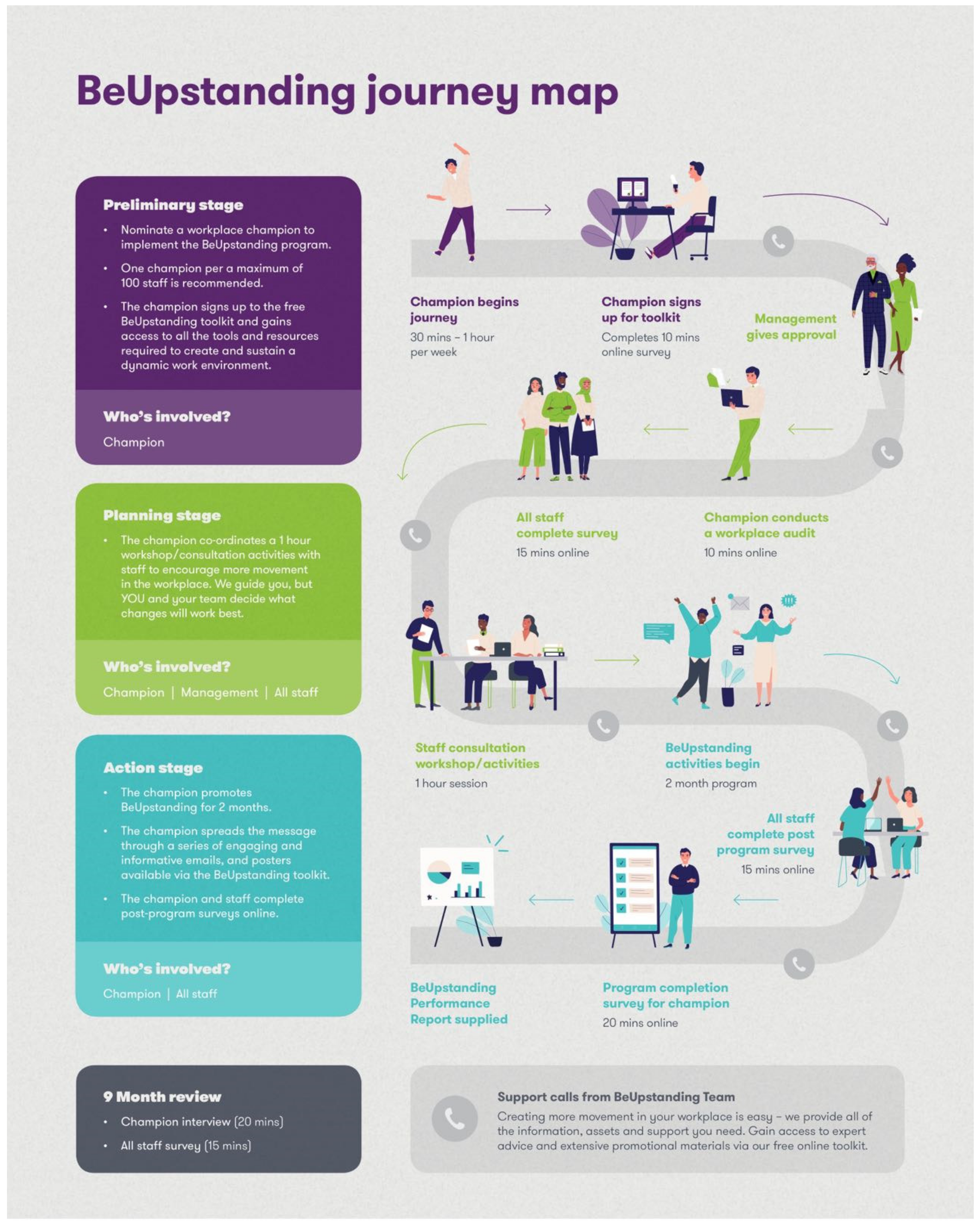

| Apply process maps to system-level behaviour | Mapped all champion interactions that occur with the toolkit (e.g., journey map: when and how champion interacts with toolkit guide and resources), and staff interations with the program |

| Apply task analysis to user behaviour | Ideated and defined engagement strategies to be built into the toolkit for champions (e.g., incentives for completing tasks including customised champion certificate) |

| Collect qualitative survey data on users | Champion and staff surveys from soft launch included open text data collection around what worked well and barriers to implementation |

| Conduct co-creation sessions | Researchers and interaction designer mocked up prototypes of intervention elements (e.g., data reports) and sought feedback from users |

| Conduct design charette sessions with stakeholders | Members of the core team participated in intensive workshops to redesign program and toolkit elements |

| Conduct competitive user experience research | Business product development expert and researchers asked workplaces about other health and wellbeing programs they used or were aware of during discovery interviews |

| Conduct focus groups about user perspectives | Obtained management, champion and staff perspectives through discovery interviews |

| Conduct heuristic evaluation | Engaged design thinking and user-centred design expert to redesign the intervention toolkit and associated collateral (e.g., downloaded reports, information and tip sheets) |

| Conduct interpretation sessions with stakeholders | Discussion held at regular partner meetings concerning any conflicting perspectives of workplaces vs. partner/funders on desired look and feel and features of the toolkit and program |

| Conduct interviews about user perspectives | Obtained management, champion and staff perspectives on features |

| Conduct observational field visits | Observed workplaces through direct discovery interviews and field visits |

| Define target users and their needs | Core team identified and spoke directly with various stakeholders/users to redesign elements of the program and product based on problems they identified |

| Define work flows | Defined the process by which a champion takes up the program, enlists their team and delivers and evaluates it |

| Design in teams | Included interaction designer, software developer, business and product development expert and behaviour science experts in core team |

| Develop a user research plan | The research team planned this phase of work from the inception of the project with corresponding data collection methods, tools and personnel identified |

| Develop experience models | Profiles of workplaces were created (e.g., small with one team, large with multiple teams taking part in BeUpstanding) |

| Develop personas and scenarios | Profiles of the main users were created (i.e., researchers, overseers/management, champions, staff) |

| Engage in cycles of rapid prototyping | Mock-ups of the toolkit elements and collateral were created by the interaction designer and feedback sought |

| Engage in iterative development | Revised toolkit dashboard elements and collateral based on feedback and tested the generalisability of improvements/changes by asking different stakeholders to review |

| Examine automatically generated data | Objective user data (e.g., tasks completed by user champions) was collected automatically by the implementation platform |

| Prepare and present user research reports | Findings about the needs of each of the users were presented to the partners during regular stakeholder meetings and via emailed reports |

| Recruit potential users | Engaged users in different types of user research (via discovery interviews, workshops etc)to understand their needs, preferences and ideas for solutions |

| Stakeholder | Priorities for Redesign |
|---|---|
| Researchers | |
| Policy and practice partners | |
| Users of the toolkit (i.e., those delivering and evaluating the program) | |
| Users of the program (i.e., staff taking part in BeUpstanding) | |

| RE-AIM Dimension | Early Adopter Version Challenges | Improvements for Optimising the Program | Personnel Support Required to Make Enhancements |
|---|---|---|---|
| Reach | Lack of engaging materials to support champions to recruit and encourage staff | Revision of online and printed support materials to help champions invite and engage staff in the program (e.g., emails, posters) | Research team; interaction and graphic designer |
| | Inaccurate assessment of team numbers (a key denominator) | Champions were given the ability to adjust and correct their initial data entry (provided in the champion profile survey) on their team numbers. Team numbers were visible in the survey portal and used to inform response rates. | Software developer |
| Effectiveness | Non-optimal staff survey response rate | Additional online content provided in the toolkit around the importance of evaluation. Desired response rates added to staff survey portal. | Research team; software developer |
| | Data feedback did not match expectations of champion/management end-user | Increased real-time feedback provided through staff survey portal. Incentive provided through bespoke reports for the workplace audit and following completion of the program completion survey. | Research team identified data points; graphic designer designed report; software developer integrated report |
| Adoption | Limited business case for program | The free resources on the boarding page were refined and added to, including an animation of the program able to be shared with management | Graphic designer, videographer, business consultants, research team |
| | Confusing champion journey | One-page and two-page infographics developed to capture the key actions and time commitment required from champions | Graphic designer, research team and business developer |
| | Multi-stage onboarding process | Onboarding streamlined and simplified | Business developer, interaction designer, software developer |
| | Minimal recruitment channels with teams purposely approached and chosen by research staff | Development of a communications strategy (including recruitment goals, suggested target groups and recruitment avenues) and a communications package (including key content and graphics) for core partners to promote the program nationally to champions and worksites through existing networks and channels | Business consultants developed the comms and marketing strategy and package after consultation with each of the partners |
| Implementation | Program requirements and core steps not explicit | Development of champion journey infographics; dashboard redesigned to include more signposting and visual cues; collateral organised in weekly guide | Interaction and graphic designer; business consultants; research team |
| | Implementation data poorly captured | Addition of new survey for champions and new hard-coded data entry with incentive (i.e., poster) to capture strategies and staff engagement in the workshop | Research team; software developer |
| Maintenance | No data captured | Staff survey portal (accessible by champions) expanded to include sustainability surveys. Sustainability audit (and report) added. Design features (e.g., lock and fade) to help avoid incorrectly timed data completion incorporated into dashboard. | Research team developed content; graphic designer developed report; software developer integrated into toolkit |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Goode, A.D.; Frith, M.; Hyne, S.A.; Burzic, J.; Healy, G.N. Applying a User Centred Design Approach to Optimise a Workplace Initiative for Wide-Scale Implementation. Int. J. Environ. Res. Public Health 2022, 19, 8096. https://doi.org/10.3390/ijerph19138096
