Abstract

CDC’s National Environmental Public Health Tracking Program (Tracking Program) receives administrative data annually from 25–30 states to track potential environmental exposures and to make data available for public access. In 2019, the CDC Tracking Program conducted a cross-sectional survey among principal investigators or program managers of the 26 funded programs to improve access to timely, accurate, and local data. All 26 funding recipients reported having access to hospital inpatient data, and most states (69.2%) regularly update data user agreements to receive the data. Among the respondents, 15 receive record-level data with protected health information (PHI) and seven receive record-level data without PHI. Regarding geospatial resolution, approximately 50.0% of recipients have access to the street address or census tract information, 34.6% have access to ZIP code, and 11.5% have other sub-county geographies (e.g., town). Only three states receive administrative data for their residents from all border states. The survey results will help the Tracking Program to identify knowledge gaps and perceived barriers to the use and accessibility of administrative data for the CDC Tracking Program. The information collected will inform the development of resources that can provide solutions for more efficient and timely data exchange.

1. Introduction

The Centers for Disease Control and Prevention (CDC) National Environmental Public Health Tracking Program (Tracking Program) collects, analyzes, and distributes standardized data on many environmental health topics from a variety of national, state, and local partners [1,2]. The Tracking Program drives public health actions that protect people from harm resulting from exposure to environmental contaminants [3,4]. Nearly all states collect inpatient discharge and emergency department (ED) visit data from every licensed healthcare facility within their state. Inpatient and ED data are a major source of health outcomes data for the program’s National Environmental Public Health Tracking Network, a web-based system of environmental health data and information. The data from states are essential for filling the “environmental health data gap” to document potential links between environmental hazards and illness and disease [5].

The Tracking Program annually receives inpatient and ED data from 25–30 states. Health outcomes are reported for patients treated for asthma, chronic obstructive pulmonary disease, carbon monoxide poisoning, heart attack, and heat stress illness. Inpatient and ED data, along with some other types, such as data on ambulatory surgery visits, are often referred to collectively as administrative data [6], hospital data, or hospital discharge data, and are an important source of information on morbidities. The Tracking Program uses the data to inform policy and practice decisions and enhance the local analytical workforce and information infrastructure. Administrative data can also play a critical role in environmental public health surveillance, strategic planning, and public health response [7].

The National Association of Health Data Organizations (NAHDO) has been a Tracking Program partner since 2007. NAHDO has worked toward improving access to hospital data sources for measuring health outcomes [8]. The quality of hospital data affects the usefulness of the data [9,10]. Improving the timeliness and validity of existing hospital data sources is a mutual goal of the Tracking Program and its partners to increase the capacity to produce standardized and actionable data and measures [9,10,11,12].

The goal of this survey was to understand the knowledge gaps and perceived barriers to use and accessibility of hospital data for the CDC Tracking Program. The survey results will be used to evaluate the Tracking Program’s ongoing data call process and routine data validation and data sharing practices. The results will also help the Tracking Program improve standardized guidance and evaluation activities to support recipients in submitting data to the program.

2. Materials and Methods

2.1. Survey Design

The CDC Tracking Program conducted a cross-sectional survey among principal investigators or program managers of the 26 funded programs to improve access to timely, accurate, and local data. The Tracking Program and NAHDO collaboratively designed a survey instrument to assess common and unique barriers to the exchange and use of the administrative data used by the Tracking Program. The 38-question questionnaire was designed to assess six aspects of administrative data: (1) data source (2 questions), (2) data providers and user agreement (11 questions), (3) acquired data attributes (6 questions), (4) data from bordering states (7 questions), (5) data quality and validation (9 questions), and (6) partnership with data agency or organization (3 questions).

2.2. Data Collection

The draft survey questionnaire was pilot tested with three volunteer states (Connecticut, Massachusetts, and Michigan). The final 46-question questionnaire was completed based on feedback from those states on survey content, logic, clarity, structure, and response time. All feedback from the pilot test is shown in Supplementary Table S1.

A pre-notice was sent by email to all 26 recipients one week before launch to announce the 2019 survey. Participating states included: Arizona, California, Colorado, Connecticut, Florida, Iowa, Kansas, Kentucky, Louisiana, Maine, Maryland, Massachusetts, Michigan, Minnesota, Missouri, New Hampshire, New Jersey, New Mexico, New York City, New York State, Oregon, Rhode Island, Utah, Vermont, Washington, and Wisconsin. The survey pre-notice asked that each recipient identify the principal investigator or program manager with the most knowledge about their administrative data. The pre-notice explained the purpose of the survey and how to respond.

Survey questions were emailed to respondents before launch so they could gather the needed information. At launch a week later, a survey invitation was emailed to recipients; it included a hyperlink to an electronic version of the survey, and participants could complete either the electronic or the paper version. Nonresponsive recipients received a reminder email in each of the two weeks after launch. A survey closing note was sent to all recipients three weeks after launch.

2.3. Statistical Methods

Data were analyzed using descriptive statistics and summarized by the following data characteristics: type, provider, schedule, protected health information (PHI) inclusion, spatial resolution, elements to identify transfers, duplicate removal, how data problems are corrected, exclusions, and use for the Tracking Program. Data agreement types, time lag in the data, and information sharing with border states were also described.
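The descriptive statistics reported in the Results are counts and percentages over the 26 responding programs. As a minimal sketch of that tabulation, the Python snippet below (the function name `summarize` and the category labels are illustrative, not the program's actual analysis code) reproduces the agreement-type percentages reported for Figure 1 from their counts.

```python
from collections import Counter


def summarize(responses):
    """Return {category: (count, percent)} for a list of categorical answers.

    Percentages use the number of responses as the denominator and are
    rounded to one decimal place, matching how results are reported.
    """
    total = len(responses)
    counts = Counter(responses)
    return {cat: (n, round(100 * n / total, 1)) for cat, n in counts.most_common()}


# Agreement types for the 26 programs, using the counts reported for Figure 1.
agreements = (
    ["DUA or DSA only"] * 13
    + ["DUA plus other agreements"] * 5
    + ["Other agreement types"] * 5
    + ["No agreement"] * 3
)

for category, (n, pct) in summarize(agreements).items():
    print(f"{category}: {n} ({pct}%)")
```

Using the full respondent count as the denominator keeps percentages comparable across questions; questions answered by a subset of programs would need their own denominator.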

3. Results

A total of 26 professionals from 26 tracking programs responded to the survey (100% response rate). Among those respondents, 16 (61.5%) had more than 4 years of professional experience with tracking programs, 5 (19.2%) had more than 13 years of experience, and 5 (19.2%) had less than 3 years of experience.

All 26 state programs (25 states and New York City) currently receive or access inpatient discharge data, but four states do not have access to ED visit data. In addition to the required inpatient or ED data, some states have access to observation stay data (eight states) or an all-payer claims database (six states). Eight states receive data from their hospital association; the other states receive data from their state health department, agency, commission, or board (Table 1). Among respondents, 15 (57.7%) received record-level data with PHI, 7 (26.9%) received record-level data without PHI, and 2 (7.7%) received data with aggregated PHI. Survey results also showed that 8 (30.8%) respondents have access to street addresses, 3 (11.5%) have access to census tract information, and 14 (53.8%) have access to ZIP code or other sub-county geographies such as a town (Table 1).

Table 1.

Characteristics of 26 participating U.S. states *—Centers for Disease Control and Prevention (CDC) Public Health Tracking Program, 2019.

Transfer cases are identifiable by all but one state. Sixteen states (61.5%) use a combination of variables, such as age, date of birth, and date of admission, to identify transfer cases. Six states (23.1%) receive a patient hospital identification (ID), and three states (11.5%) receive transfer flags from data providers. Among respondents, 16 strongly emphasized the need for additional data elements (e.g., patient ID, full residential address, and geocoded data) to process data efficiently and identify transfer cases or sub-county geographies. Data providers (n = 12, 46.2%) or state programs (n = 9, 34.6%) remove duplicate data as a validation procedure. About one third of the programs (n = 9, 34.6%) ask data providers to clean the data by correcting errors and resubmitting after validation (Table 1).
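For states without a patient ID, identifying transfers by a combination of variables amounts to record linkage on a composite key. The sketch below assumes a hypothetical record layout (`Discharge`) and a hypothetical key of birth date, sex, and ZIP code; the survey does not specify which variables each state combines, and the one-day gap rule is also an assumption.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Discharge:
    """One inpatient discharge record (hypothetical layout for illustration)."""
    hospital: str
    birth_date: date
    sex: str
    zip_code: str
    admit_date: date
    discharge_date: date


def linkage_key(rec):
    # Composite key standing in for a patient ID; the fields are an assumption.
    return (rec.birth_date, rec.sex, rec.zip_code)


def find_transfers(records, max_gap_days=1):
    """Return (from_record, to_record) pairs that look like inter-hospital transfers.

    Two stays are paired when they share a linkage key, occur at different
    hospitals, and the second admission starts 0 to max_gap_days after the
    first discharge.
    """
    by_key = {}
    for rec in records:
        by_key.setdefault(linkage_key(rec), []).append(rec)

    transfers = []
    max_gap = timedelta(days=max_gap_days)
    for stays in by_key.values():
        stays.sort(key=lambda r: r.admit_date)
        # Compare each stay with the next one for the same apparent patient.
        for first, second in zip(stays, stays[1:]):
            gap = second.admit_date - first.discharge_date
            if first.hospital != second.hospital and timedelta(0) <= gap <= max_gap:
                transfers.append((first, second))
    return transfers


records = [
    Discharge("Hospital A", date(1950, 3, 1), "F", "12345", date(2017, 1, 1), date(2017, 1, 3)),
    Discharge("Hospital B", date(1950, 3, 1), "F", "12345", date(2017, 1, 3), date(2017, 1, 9)),
    Discharge("Hospital C", date(1980, 7, 4), "M", "12345", date(2017, 2, 1), date(2017, 2, 2)),
]
transfers = find_transfers(records)
print(len(transfers))  # 1: Hospital A -> Hospital B
```

A composite key can link two different people who happen to share the same values, which is one reason respondents asked for a patient ID or a provider-supplied transfer flag.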

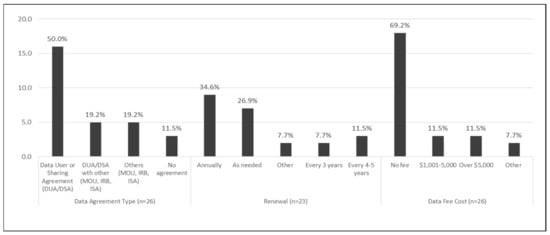

Most states (88.5%) establish and maintain at least one data user agreement (DUA) or a combination of agreement types: 13 states (50.0%) have only a DUA or data sharing agreement (DSA) in place, 5 states (19.2%) maintain a DUA combined with other agreements (e.g., memorandum of understanding, interdepartmental service agreement, or institutional review board exemption), 5 states (19.2%) have other types of agreements, and 3 states (11.5%) have no agreement (Figure 1).

Figure 1.

Data Sharing Agreement, 26 participating U.S. states *—CDC Public Health Tracking Program, 2019. * Twenty-five states and New York City.

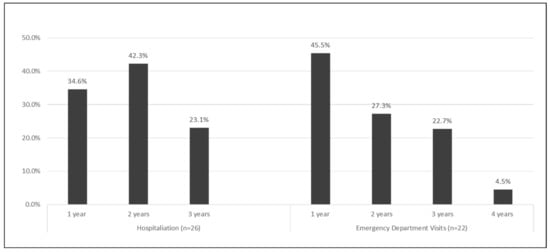

Most states receive data from their suppliers annually (61.5%) or quarterly (30.8%). Most states do not pay a fee to access the data, but six states pay more than $1000 for data access (Figure 1). The most recent year of data a program had received ranged from 2015 to 2019. For the 2019 Tracking Program data call, 20 states (76.9%) were able to submit hospitalization data through 2017, and 72.8% were able to submit ED visit data through 2017 (Figure 2).
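The lag shown in Figure 2 can be read as the difference between the data call year and the most recent data year a program can submit. The sketch below illustrates that arithmetic and the share of programs meeting a target year; the program names and years are hypothetical placeholders, not survey results, and the function names are illustrative.

```python
from collections import Counter


def data_lag(call_year, latest_data_year):
    """Years between a data call and the most recent data year a program can submit."""
    return call_year - latest_data_year


def share_meeting_target(latest_years, target_year):
    """Percent of programs whose most recent data reach at least target_year."""
    met = sum(1 for year in latest_years.values() if year >= target_year)
    return round(100 * met / len(latest_years), 1)


# Hypothetical latest data years for five programs (not survey results).
latest_years = {"State 1": 2017, "State 2": 2017, "State 3": 2016, "State 4": 2018, "State 5": 2015}

lag_counts = Counter(data_lag(2019, year) for year in latest_years.values())
print(sorted(lag_counts.items()))                 # [(1, 1), (2, 2), (3, 1), (4, 1)]
print(share_meeting_target(latest_years, 2017))   # 60.0
```

Tracking the lag distribution per data call, rather than only the share meeting a target, makes it easier to see whether a few programs with long lags drive the overall picture.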

Figure 2.

Data Lag Period, 26 participating U.S. states * —CDC Public Health Tracking Program, 2019. * Twenty-five states and New York City.

Only three states (11.5%) receive data from all their bordering states, and six states (23.1%) receive data from some bordering states (Table 2). Six states (Florida, Maine, Maryland, Massachusetts, New York State, and Oregon) have attempted to obtain border data but still do not have it. Data from bordering states typically lag 1–2 years behind in-state data collection. Seventeen recipients (65.4%) do not receive any hospital or ED data when their residents are admitted to hospitals or EDs in bordering states. The survey found that some states pay to obtain data on residents discharged from facilities in bordering states. Ten states and one city (Arizona, California, Colorado, Connecticut, Iowa, Kentucky, Louisiana, New Jersey, New York City, Rhode Island, and Utah) have never attempted to obtain border data.
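The three-way grouping above (data from all, some, or none of the bordering states) can be expressed as a set comparison. The sketch below is illustrative only: the program names and neighbor sets are hypothetical, and `border_status` is not a Tracking Program function.

```python
from collections import Counter


def border_status(bordering, received_from):
    """Classify border-data coverage as 'all', 'some', or 'none'.

    bordering: states that share a border with the program's state.
    received_from: states that send the program data on its residents.
    """
    bordering = set(bordering)
    covered = bordering & set(received_from)
    if not covered:
        return "none"
    return "all" if covered == bordering else "some"


# Hypothetical programs and neighbors, for illustration only.
programs = {
    "State A": ({"B", "C"}, {"B", "C"}),
    "State B": ({"A", "C", "D"}, {"A"}),
    "State C": ({"A", "B"}, set()),
}
tally = Counter(border_status(nb, rx) for nb, rx in programs.values())
print(dict(tally))  # {'all': 1, 'some': 1, 'none': 1}
```

Note that a jurisdiction with no bordering states would fall into "none" under this rule, so such cases would need separate handling in a real tally.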

Table 2.

Exchange of health tracking data from bordering states—Centers for Disease Control and Prevention (CDC) Public Health Tracking Program, 2019.

4. Discussion

This survey revealed common and unique barriers to the exchange of administrative data in the Environmental Public Health Tracking Program. Challenges that recipients encounter in exchanging data with the Tracking Program include (1) timeliness, (2) data granularity, (3) data acquisition from bordering states, and (4) data cleaning.

4.1. Timeliness

Along with comparability, completeness, and validity, timeliness is one of the most important data quality indicators [8,9]. Rapid reporting is a vital priority in cancer registry, injury prevention, birth defect registry, and immunization surveillance programs [11,12,13,14,15]. Real-time epidemic predictive modeling relies on timely data to forecast geographic disease spread and obtain case counts to inform better public health interventions, and is especially valuable during disasters and outbreaks [16,17].

The survey showed that challenges with receiving data from the provider, complex internal data processes, and incomplete data contribute to data lag. To reduce lag time and the variation introduced by differing internal data processes across state programs, a standardized DUA or DSA with a shared time frame is highly recommended, whether partnering intra-agency, inter-agency, or externally [18]. A national standard could include requirements for data layout and format, data quality benchmarks, a defined process for making corrections (especially for critical elements), and methods and timelines for exchanging data. State partners benefit from establishing working relationships and formal agreements with their hospital data suppliers. Data stewards might benefit from similar defined processes within their state data acquisition requirements and contracts.
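A national standard with data layout requirements and quality benchmarks could be checked automatically at submission. The sketch below assumes a hypothetical layout (the field names in `REQUIRED_FIELDS`), a simplified ICD-10-CM shape check, and an assumed 1% error-rate benchmark; none of these are published Tracking Program specifications.

```python
import re
from datetime import datetime

# Hypothetical layout standard; field names and rules are illustrative only.
REQUIRED_FIELDS = ("record_id", "admit_date", "principal_dx", "county_fips")
# Simplified ICD-10-CM shape: letter, digit, alphanumeric, optional dot, 1-4 more characters.
ICD10_SHAPE = re.compile(r"[A-Z][0-9][0-9A-Z](\.?[0-9A-Z]{1,4})?")


def record_errors(rec):
    """Return a list of layout problems found in one record (a dict of strings)."""
    errors = [f"missing {field}" for field in REQUIRED_FIELDS if not rec.get(field)]
    if rec.get("admit_date"):
        try:
            datetime.strptime(rec["admit_date"], "%Y-%m-%d")
        except ValueError:
            errors.append("admit_date not YYYY-MM-DD")
    if rec.get("principal_dx") and not ICD10_SHAPE.fullmatch(rec["principal_dx"]):
        errors.append("principal_dx not ICD-10-CM shaped")
    if rec.get("county_fips") and not re.fullmatch(r"\d{5}", rec["county_fips"]):
        errors.append("county_fips not 5 digits")
    return errors


def passes_benchmark(records, max_error_rate=0.01):
    """True if the share of records with any error is at or below max_error_rate."""
    bad = sum(1 for rec in records if record_errors(rec))
    return bad / len(records) <= max_error_rate


good = {"record_id": "1", "admit_date": "2017-01-05", "principal_dx": "J45.909", "county_fips": "04013"}
bad = {"record_id": "2", "admit_date": "01/05/2017", "principal_dx": "J45.909", "county_fips": "4013"}
print(record_errors(bad))  # ['admit_date not YYYY-MM-DD', 'county_fips not 5 digits']
print(passes_benchmark([good] * 99 + [bad]))  # True (1% of records fail)
```

Returning a list of named problems per record, rather than a single pass/fail, supports the defined correction process described above: providers can be told exactly which elements to fix.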

Several studies have demonstrated the feasibility of chronic disease surveillance using data from electronic health records (EHR) [19,20]. EHR data tend to be more timely than data from traditional public health surveys or systems and can be available at a finer geographic level [20]. A recent study provided evidence of nationwide surveillance of asthma prevalence and asthma ED visits using EHR data from a multisite collaboration across the US [21,22,23]. Asthma prevalence estimates produced using EHR data were comparable to those produced using data from established national and state-level surveys [21]. Timely health outcome data can support responses to public health incidents such as wildfires, extreme heat, or other extreme weather events [23], and Tracking Program partners could explore obtaining EHR data in collaboration with their state recipients for more timely administrative data at a finer geographic level than county.

4.2. Data Granularity

State programs have attempted to receive data elements such as patient ID, Social Security Number, and full residential address or geocoded information. The survey showed that 16 (61.5%) of the state programs have successfully obtained the record-level identifiable data set with PHI or health outcomes at a finer geographic level (e.g., census tract, ZIP code). However, restrictions on the use of identifiable or sub-county level data vary by state, data source, or data supplier. Patient identifiers can be an important element for spotting duplicate records and out-of-state hospitalizations or transfer cases across hospitals or states.

A growing need to better understand the relationships among environment, behavior, and health has led to increased demand for small-area data [24,25]. Many state programs have traditionally tracked aggregated hospital data by county but have recently begun providing additional support for small-area data sharing [26]. Despite current limitations, small geographic area data that can be easily accessed and updated have become essential for targeting public health programs and services.

To access critical data elements, state programs need effective communication with their data suppliers (i.e., hospital associations and state agencies). A first step might be to determine whether the data steward has sufficiently granular data to support more detailed analysis. If so, programs could pursue process improvement by establishing or updating contractual requirements, preferably using a standardized data agreement. If granular data are not available, one course of action is to partner with the data steward to suggest and implement process improvement plans. Standard guidance and an analysis plan for data granularity should be shared with tracking programs and partners to protect patient confidentiality and privacy, in accordance with relevant federal and state laws and regulations and department policies [24,27]. Data stewards and users should consider the challenges of obtaining health-related data for small geographic areas and balance the need for data sharing against the need for privacy [27,28].
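One common way to balance small-area data sharing against privacy is small-cell suppression before release. The sketch below assumes a suppression threshold of 6 purely for illustration; actual thresholds, and whether zeros are suppressed, vary by state and data supplier, and `suppress_small_cells` is a hypothetical helper.

```python
def suppress_small_cells(counts, threshold=6, suppress_zeros=False):
    """Return a copy of counts with small nonzero cells replaced by None.

    counts: mapping of area (e.g., census tract) to case count.
    threshold: counts from 1 to threshold - 1 are suppressed (an assumed rule).
    suppress_zeros: whether zero counts are also suppressed.
    """
    result = {}
    for area, n in counts.items():
        small = (0 < n < threshold) or (suppress_zeros and n == 0)
        result[area] = None if small else n
    return result


tract_counts = {"Tract 1": 12, "Tract 2": 3, "Tract 3": 0}
print(suppress_small_cells(tract_counts))
# {'Tract 1': 12, 'Tract 2': None, 'Tract 3': 0}
```

This is primary suppression only. If a county total is also published, a single suppressed tract can be recovered by subtraction, so a real release policy would typically add complementary suppression as well.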

4.3. Acquiring Data from Border States

States often do not receive data for residents who were hospitalized or visited an ED in a bordering state. Timely and complete data for all residents are important for calculating annual trends. The survey showed that cross-border data exchange is complicated and varies by state, making it difficult to summarize (Table 2). Only three states receive data from all of their bordering states, and 11 programs have never attempted to access data from bordering states. Complete data are important for environmental studies seeking to understand proximity to health care services. In addition to socioeconomic status and patient-level factors such as race/ethnicity, travel distance to the treating facility is likely to affect the course of diagnosis, subsequent treatment, and disease outcomes [29]. Acquiring all cross-jurisdictional data is essential to estimate health outcomes and to capture all exposures related to environmental hazards.

The cross-border exchange of health data involves ethical, regulatory, and organizational issues converging on technical aspects such as interoperability and cybersecurity [30]. The State and Territorial Exchange of Vital Events (STEVE) system, for example, quickly provides vital records data to other jurisdictions and authorized public health and administrative programs, while also ensuring the security and privacy of the data during transmission [31]. Surveillance data exchange between the public health departments of the District of Columbia, Maryland, and Virginia reduced both the number of cases misclassified as District of Columbia residents and the number of duplicate cases [32]. CDC’s Tracking Program and NAHDO can help state health departments communicate with each other and develop an internal data user agreement process to enable data exchange with bordering states. NAHDO also can assist with process development, process improvement, and collaboration among interested data stewards and hospital associations.

4.4. Data Cleaning

Data validation is critical for ensuring valid analytic results in any project that uses health records and environmental monitoring data [33,34]. Challenges can include initial delays from responders, staff turnover, high caseloads, lack of resources, and competing priorities within the health department [34]. It is important to determine whether data cleaning and duplicate resolution processes are inconsistent across the state tracking programs. Only 46.2% of state programs performed data cleaning before submitting data. The Tracking Program has provided tools, documents, and technical support for data cleaning. The program also encourages recipients to use generic SAS software scripts that the Tracking Program developed, along with a validation protocol, for basic data cleaning and standardized data preparation.
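Duplicate resolution is one of the basic cleaning steps mentioned above. The Tracking Program's own scripts are written in SAS; the Python sketch below is only an illustration of the idea, and the key fields in `KEY` are a hypothetical choice rather than the program's actual duplicate definition.

```python
def remove_duplicates(records, key_fields):
    """Keep the first record for each combination of key_fields.

    Returns (kept_records, number_dropped).
    """
    seen = set()
    kept = []
    for rec in records:
        key = tuple(rec.get(field) for field in key_fields)
        if key in seen:
            continue  # same key as an earlier record: treat as a duplicate
        seen.add(key)
        kept.append(rec)
    return kept, len(records) - len(kept)


# Hypothetical key: facility, admission and discharge dates, birth date, sex, diagnosis.
KEY = ("facility_id", "admit_date", "discharge_date", "birth_date", "sex", "principal_dx")
rows = [
    {"facility_id": "F1", "admit_date": "2017-03-01", "discharge_date": "2017-03-04",
     "birth_date": "1962-05-10", "sex": "M", "principal_dx": "I21.4"},
    {"facility_id": "F1", "admit_date": "2017-03-01", "discharge_date": "2017-03-04",
     "birth_date": "1962-05-10", "sex": "M", "principal_dx": "I21.4"},
    {"facility_id": "F2", "admit_date": "2017-03-02", "discharge_date": "2017-03-02",
     "birth_date": "1990-11-23", "sex": "F", "principal_dx": "J45.901"},
]
kept, dropped = remove_duplicates(rows, KEY)
print(len(kept), dropped)  # 2 1
```

Reporting the number dropped alongside the cleaned file lets data providers and programs compare duplicate rates across submissions, which supports the consistency question raised above.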

After state programs submit their annual administrative data, the Tracking Program performs multiple layers of data validation to improve data quality. These validation processes include a simple check for duplicates, descriptive statistics, and trend analysis that compares current data with archived data. In 2018, state programs resubmitted 1–2% of 217 total data feeds because the data failed validation checks.
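The trend analysis step can be pictured as comparing each new count with a baseline built from archived submissions. The sketch below assumes the baseline is the mean of archived years and that a relative change above 20% triggers review; both the baseline choice and the `tolerance` value are assumptions, and the measure names and counts are made up.

```python
def flag_trend_breaks(archived, current, tolerance=0.20):
    """Flag measures whose current count departs from the archived mean.

    archived: measure -> list of counts from earlier submissions.
    current: measure -> count in the new submission.
    tolerance: allowed relative change (an assumed threshold, not a program rule).
    Returns measure -> relative change, rounded to 3 decimals, for flagged measures.
    """
    flags = {}
    for measure, history in archived.items():
        if measure not in current or not history:
            continue  # nothing to compare; a real check might flag this separately
        baseline = sum(history) / len(history)
        if baseline == 0:
            continue  # relative change is undefined for a zero baseline
        change = (current[measure] - baseline) / baseline
        if abs(change) > tolerance:
            flags[measure] = round(change, 3)
    return flags


archived = {"asthma_ed_visits": [1000, 1040, 980], "co_poisoning_hosp": [50, 55, 45]}
current = {"asthma_ed_visits": 1010, "co_poisoning_hosp": 80}
print(flag_trend_breaks(archived, current))  # {'co_poisoning_hosp': 0.6}
```

A flag here is a prompt for review, not proof of an error: rare outcomes such as carbon monoxide poisoning can swing widely from year to year, so a fixed tolerance may need to vary by measure.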

As part of its approach to data cleaning and routine analysis, the Tracking Program initiated a journal club to share and develop a framework for routine analysis, from data validation to analyses. State programs voluntarily present their work with data and receive feedback from peer data scientists and a CDC senior statistician. Tracking Program recipients and partners also participate in various hospitalization content workgroups to share data needs and expertise across the collaborative network.

The tracking program’s data provide valuable information for environmental health response, understanding disease related to environmental hazards, and taking actions to prevent or control diseases [4]. The program provides recommendations on best practices for data exchange and how to address barriers and gaps it identifies. However, states greatly vary in the effort they put into setting up new data user agreements or in amending established agreements. Coordinated efforts are needed to assess variation in data use agreements between states, and significant effort is needed to standardize such agreements.

Current data exchange methods prompt important questions about how to securely share more complete, timely, and accurate de-identified and linkable data with the tracking program. Tracking programs can gain a baseline understanding of state administrative data by accessing NAHDO’s state data agency profiles (an inventory of agency governance, laws, policies, and data scope) [8].

5. Conclusions

Results from this survey will help the tracking program understand perceived barriers to use and accessibility of administrative data for the CDC tracking program. The state profiles and information collected will inform the development of resources that can provide solutions for more efficient and timely data exchange. The collected information will be used to improve the ongoing data call process, including routine data validation and data sharing practices. The information can also enhance standardized guidance and evaluation activities to support recipients in submitting data to the tracking program.

Supplementary Materials

The following are available online at https://www.mdpi.com/article/10.3390/ijerph18084356/s1, Table S1. Feedback from the Pilot.

Author Contributions

M.S. had primary responsibility for drafting of the manuscript, conducting data analysis, and interpretation of the results. C.H. and H.S. contributed to the discussion. M.S. and H.S. developed survey questionnaires and helped communicate with NAHDO and 26 recipient programs. All authors have read and agreed to the published version of the manuscript.

Funding

Funding for this manuscript was made possible (in part) by (CDC-RFA-EH18-1802) from the Centers for Disease Control and Prevention (CDC). The views expressed in written publications do not necessarily reflect the official policies of the Department of Health and Human Services, nor does mention of trade names, commercial practices, or organizations imply endorsement by the U.S. Government.

Institutional Review Board Statement

This survey was determined to be a routine public health activity for public health surveillance by CDC’s Human Subject Research Office. This project was approved as a new generic information clearance collection (GenIC) request titled, “Formative research to identify common and unique barriers to the exchange of hospital inpatient and ED data” under Office of Management and Budget Control No. 0920-1154.

Informed Consent Statement

Not applicable.

Acknowledgments

The authors acknowledge Denise Love and Emily Sullivan, former NAHDO director and project director, respectively, for their expertise on administrative data and their work on the survey questionnaire. The authors also thank the program investigators and program managers in the 26 Tracking programs (25 states and one city) for answering the survey questions in a timely and collaborative manner.

Conflicts of Interest

The authors declare no conflict of interest.

References

- McGeehin, M.A.; Qualters, J.R.; Niskar, A.S. National environmental public health tracking program: Bridging the information gap. Environ. Health Perspect. 2004, 112, 1409–1413.

- Better Information for Better Health. Available online: https://www.cdc.gov/nceh/tracking/BetterInfoBetterHealth.html (accessed on 7 July 2020).

- Eatman, S.; Strosnider, H.M. CDC’s National Environmental Public Health Tracking Program in Action: Case Studies From State and Local Health Departments. J. Public Health Manag. Pract. 2017, 23 (Suppl. 5), S9–S17.

- Qualters, J.R.; Strosnider, H.M.; Bell, R. Data to action: Using environmental public health tracking to inform decision making. J. Public Health Manag. Pract. 2015, 21 (Suppl. 2), S12–S22.

- Charleston, A.E.; Wall, P.; Kassinger, C.; Edwards, P.O. Implementing the environmental public health tracking network: Accomplishments, challenges, and directions. J. Public Health Manag. Pract. 2008, 14, 507–514.

- Healthcare Cost and Utilization Project (HCUP). Available online: https://www.ahrq.gov/data/hcup/index.html (see "Overview of HCUP") (accessed on 1 March 2021).

- Love, D.; Rudolph, B.; Shah, G.H. Lessons learned in using hospital discharge data for state and national public health surveillance: Implications for Centers for Disease Control and prevention tracking program. J. Public Health Manag. Pract. 2008, 14, 533–542.

- NAHDO Partner Agencies. Available online: https://www.nahdo.org/ (accessed on 7 July 2020).

- Sadoughi, F.; Mahmoudzadeh-Sagheb, Z.; Ahmadi, M. Strategies for improving the data quality in national hospital discharge data system: A delphi study. Acta Inform. Med. 2013, 21, 261–265.

- Huser, V.; Kahn, M.G.; Brown, J.S.; Gouripeddi, R. Methods for examining data quality in healthcare integrated data repositories. Pac. Symp. Biocomput. 2018, 23, 628–633.

- Bray, F.; Parkin, D.M. Evaluation of data quality in the cancer registry: Principles and methods. Part I: Comparability, validity and timeliness. Eur. J. Cancer 2009, 45, 747–755.

- Silva, G.; Bartholomay, P.; Cruz, O.G.; Garcia, L.P. Evaluation of data quality, timeliness and acceptability of the tuberculosis surveillance system in Brazil’s micro-regions. Cienc. Saude Coletiva 2017, 22, 3307–3319.

- Engstrom, K.; Sill, D.N.; Schauer, S.; Malinowski, M.; Martin, E.; Hayney, M.S. Timeliness of data entry in Wisconsin Immunization Registry by Wisconsin pharmacies. J. Am. Pharm. Assoc. 2020, 60, 618–623.

- Bennett, S.; Grenier, D.; Medaglia, A. The Canadian Paediatric Surveillance Program: A framework for the timely data collection on head injury secondary to suspected child maltreatment. Am. J. Prev. Med. 2008, 34 (Suppl. 4), S140–S142.

- Hook, E.B. Timely monthly surveillance of birth prevalence rates of congenital malformations and genetic disorders ascertained by registries or other systematic data bases. Teratology 1990, 41, 177–184.

- Desai, A.N.; Kraemer, M.U.G.; Bhatia, S.; Cori, A.; Nouvellet, P.; Herringer, M.; Cohn, E.L.; Carrion, M.; Brownstein, J.S.; Madoff, L.C.; et al. Real-time Epidemic Forecasting: Challenges and Opportunities. Health Secur. 2019, 17, 268–275.

- Chaix, B. Mobile Sensing in Environmental Health and Neighborhood Research. Annu. Rev. Public Health 2018, 39, 367–384.

- Lawpoolsri, S.; Kaewkungwal, J.; Khamsiriwatchara, A.; Sovann, L.; Sreng, B.; Phommasack, B.; Kitthiphong, V.; Lwin Nyein, S.; Win Myint, N.; Dang Vung, N.; et al. Data quality and timeliness of outbreak reporting system among countries in Greater Mekong subregion: Challenges for international data sharing. PLoS Negl. Trop. Dis. 2018, 12, e0006425.

- Klompas, M.; Cocoros, N.M.; Menchaca, J.T.; Erani, D.; Hafer, E.; Herrick, B.; Josephson, M.; Lee, M.; Payne Weiss, M.D.; Zambarano, B.; et al. State and Local Chronic Disease Surveillance Using Electronic Health Record Systems. Am. J. Public Health 2017, 107, 1406–1412.

- Perlman, S.E.; McVeigh, K.H.; Thorpe, L.E.; Jacobson, L.; Greene, C.M.; Gwynn, R.C. Innovations in Population Health Surveillance: Using Electronic Health Records for Chronic Disease Surveillance. Am. J. Public Health 2017, 107, 853–857.

- Tarabichi, Y.; Goyden, J.; Liu, R.; Lewis, S.; Sudano, J.; Kaelber, D.C. A step closer to nationwide electronic health record-based chronic disease surveillance: Characterizing asthma prevalence and emergency department utilization from 100 million patient records through a novel multisite collaboration. J. Am. Med. Inform. Assoc. 2020, 27, 127–135.

- Classen, D.; Li, M.; Miller, S.; Ladner, D. An Electronic Health Record-Based Real-Time Analytics Program for Patient Safety Surveillance And Improvement. Health Aff. 2018, 37, 1805–1812.

- Namulanda, G.; Qualters, J.; Vaidyanathan, A.; Roberts, E.; Richardson, M.; Fraser, A.; McVeigh, K.H.; Patterson, S. Electronic health record case studies to advance environmental public health tracking. J. Biomed. Inform. 2018, 79, 98–104.

- Hindin, R.; Buchanan, D.R.; Bosompra, K.; Robinson, F. Data for empowerment! The application of small area analysis in community health education and evaluation. Int. Q. Community Health Educ. 1997, 17, 5–23.

- Saunders, P.; Campbell, P.; Webster, M.; Thawe, M. Analysis of Small Area Environmental, Socioeconomic and Health Data in Collaboration with Local Communities to Target and Evaluate ‘Triple Win’ Interventions in a Deprived Community in Birmingham UK. Int. J. Environ. Res. Public Health 2019, 16, 4331.

- Werner, A.K.; Strosnider, H.; Kassinger, C.; Shin, M.; Sub-County Data Project, W. Lessons Learned From the Environmental Public Health Tracking Sub-County Data Pilot Project. J. Public Health Manag. Pract. 2018, 24, E20–E27.

- Hole, D.J.; Lamont, D.W. Problems in the interpretation of small area analysis of epidemiological data: The case of cancer incidence in the West of Scotland. J. Epidemiol. Community Health 1992, 46, 305–310.

- Holmes, J.H.; Soualmia, L.F.; Seroussi, B. A 21st Century Embarrassment of Riches: The Balance Between Health Data Access, Usage, and Sharing. Yearb. Med. Inform. 2018, 27, 5–6.

- Vetterlein, M.W.; Loppenberg, B.; Karabon, P.; Dalela, D.; Jindal, T.; Sood, A.; Chun, F.K.; Trinh, Q.D.; Menon, M.; Abdollah, F. Impact of travel distance to the treatment facility on overall mortality in US patients with prostate cancer. Cancer 2017, 123, 3241–3252.

- Nalin, M.; Baroni, I.; Faiella, G.; Romano, M.; Matrisciano, F.; Gelenbe, E.; Martinez, D.M.; Dumortier, J.; Natsiavas, P.; Votis, K.; et al. The European cross-border health data exchange roadmap: Case study in the Italian setting. J. Biomed. Inform. 2019, 94, 103183.

- State and Territorial Exchange of Vital Events (STEVE). Available online: https://www.naphsis.org/steve (accessed on 1 March 2021).

- Hamp, A.D.; Doshi, R.K.; Lum, G.R.; Allston, A. Cross-Jurisdictional Data Exchange Impact on the Estimation of the HIV Population Living in the District of Columbia: Evaluation Study. JMIR Public Health Surveill. 2018, 4, e62.

- Shamsipour, M.; Farzadfar, F.; Gohari, K.; Parsaeian, M.; Amini, H.; Rabiei, K.; Hassanvand, M.S.; Navidi, I.; Fotouhi, A.; Naddafi, K.; et al. A framework for exploration and cleaning of environmental data--Tehran air quality data experience. Arch. Iran. Med. 2014, 17, 821–829.

- Welch, G.; von Recklinghausen, F.; Taenzer, A.; Savitz, L.; Weiss, L. Data Cleaning in the Evaluation of a Multi-Site Intervention Project. eGEMs 2017, 5, 4.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).