Abstract

Joining worldwide efforts to understand the relationship between driving emotion and behavior, the current study examined the influence of emotions on driving intention transition. In Study 1, taking a car-following scene as an example, we designed driving experiments to obtain driving data in drivers’ natural states, and constructed a driving intention prediction model based on the hidden Markov model (HMM). Then, we analyzed the probability distribution and transition probability of driving intentions. In Study 2, we designed a series of emotion-induction experiments for eight typical driving emotions, and drivers with induced emotions participated in driving experiments similar to those in Study 1. We thereby obtained driving data for drivers in eight typical emotional states, and constructed a separate HMM-based driving intention prediction model for each emotional state. Finally, we analyzed the probabilistic differences in driving intention between drivers’ natural states and the different emotional states; the findings showed how the probability distribution and transition probability of driving intentions change as emotion evolves. The findings of this study can promote the development of driving behavior prediction technology and active safety early warning systems.

1. Introduction

Traffic safety continues to come into focus with the ever-increasing use of cars in modern society, and traffic accidents are becoming a major factor causing human injuries [1]. Although the human, social and economic cost of traffic accidents is largely preventable, there has been insufficient action to combat this global challenge [2]. It has been proven that human factors predict a greater amount of variance in road accidents than vehicle and road factors do [3]. More concretely, research showed that 90% of rear-end collisions and 60% of frontal collisions would be avoided if the driver realized the danger and took effective measures one second in advance [4]. However, in car driving activities, human drivers have inherent limitations in perception, decision-making, and behavior execution. Even experienced drivers may ignore important information in the driving environment and make wrong judgments about traffic safety situation, and then adopt inappropriate or unsafe driving behaviors [5]. Therefore, it is very important to monitor and predict driving behavior through the on-board intelligence system, and to evaluate whether the driving behavior can keep the car in a safe state in a specific environment [6]. Many researchers in the field of automotive active safety have begun to build driving behavior identification and prediction models [7,8,9]. However, due to the complexity of the human behavior mechanism, there is still a lack of driving behavior prediction algorithms that can be applied to vehicle intelligence systems. In recent years, researchers argued that driver’s affective factors have close associations with road accidents [10,11]. Psychologists now widely accept that it is impossible for people to think or perform an action without engaging their emotional system, at least unconsciously [12,13]. 
Considering that previous driving behavior prediction models often ignore emotion, a key factor affecting driving behavior, practical studies on the influence of emotion on driving behavior and related applications for driving behavior prediction are urgently needed [14,15]. The purpose of this study was to reveal the differences in driving intention transitions caused by the evolution of a driver’s emotions, i.e., to study the differences in the generation and transition of driving intentions when a driver is faced with the same or similar driving environments but in different emotional states. Intention is the psychological variable that has the closest relationship with real behavior [16] and is a highly accurate measurement index for human behavior prediction [17]. We expected that the research results could provide theoretical support for the construction of driving behavior prediction models that consider emotional factors.

In this study, driving intention prediction models for the driver’s natural state and for eight typical driving emotion states were established and trained based on the hidden Markov model (HMM). In each intention prediction model, we used a hidden Markov chain to describe the random process of a driver’s intentions changing with time, and took the vehicle driving data corresponding to the driver’s different intention transitions as the observed values of the hidden Markov chain. After verifying model accuracy, we extracted from the HMMs the probability distribution of the different driving intentions, the transition probability matrix between driving intentions, and the probability distribution of driving intentions corresponding to different observation values (the emission probabilities of the HMM). For the driver’s natural state and the different emotional states, comparative analysis yielded the overall differences in driving intention probability distribution and transition probability. The Bayesian formula was then used to transform the probability distribution of driving intention corresponding to different observed values into the probability distribution of driving intention under different driving environments (observation values), i.e., the probability distribution of the driving intentions that drivers generate under different environmental states. Finally, we compared the probability distributions of driving intention under the same driving environment parameters but different emotional states and obtained the driving intention transition law caused by emotional evolution.
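The Bayesian transformation described above can be illustrated with a minimal sketch. It assumes that the quantity extracted from the HMM is the emission probability P(observation | intention) and that the intention probabilities act as the prior. The prior uses the natural-state intention probabilities reported for Study 1; the `emission` matrix and its two coarse observation classes are hypothetical placeholders, not values from the trained models.

```python
def intention_given_observation(prior, emission, obs_index):
    """Invert P(observation | intention) into P(intention | observation) via Bayes' rule."""
    # Joint probability P(intention = i, observation = k) for every intention i.
    joint = [p_i * row[obs_index] for p_i, row in zip(prior, emission)]
    evidence = sum(joint)  # P(observation = k), the normalizing constant
    if evidence == 0:
        raise ValueError("observation has zero probability under the model")
    return [j / evidence for j in joint]


# Probabilities of deceleration, keeping speed, acceleration (Study 1, natural state).
prior = [0.247, 0.468, 0.285]
# HYPOTHETICAL emission rows: P(observation k | intention i) for two observation classes.
emission = [
    [0.8, 0.2],  # deceleration
    [0.5, 0.5],  # keeping speed
    [0.1, 0.9],  # acceleration
]

posterior = intention_given_observation(prior, emission, obs_index=0)
print([round(p, 3) for p in posterior])
```

Repeating the call for each observation class and each emotion-specific model would give the per-environment intention distributions that the comparison relies on.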

2. Study 1: A Driving Intention Prediction Model Based on HMM

2.1. Materials and Methods

2.1.1. Experiment Design

- Participants

Sixty-two drivers (33 males and 29 females) aged from 20 to 48 (M = 28.61, SD = 6.83) participated in this study. The participants recruited were undergraduate students, urban residents and taxi drivers in Zibo City, China. All of the participants were licensed drivers and their driving experience ranged from 1 to 16 years (M = 4.47, SD = 2.98).

- Measurement of Driver’s Expected Speed and Distance

The expected speed and expected distance are important parameters in driving behavior studies [18]. Expected speed is the maximum safe driving speed that the driver wants to achieve when the vehicle is free from the constraints of other vehicles [19]. Expected distance is a concept in car-following theory [20,21,22,23]. In this study, the expected distance was the distance from the head of the following car to the rear of the leading car in the car-following scene. We used the virtual driving platform (Figure 1b) to measure the participants’ expected speed and distance. To test the expected speed, we constructed a free driving scene in which no traffic flow or intersection signals were set and the speed limit was 60 km/h. We asked the participants to drive at their own speed on the virtual road while obeying traffic rules and ensuring driving safety. The driving time was 5 min, and we took the average driving speed over the middle 3 min as the expected speed of each participant. To test the expected distance, we constructed a car-following scene, again without traffic flow or intersection signals. In this scenario, a virtual vehicle drove at a constant speed of 60 km/h, and participants were required to follow it. While obeying basic traffic rules and ensuring driving safety, the participants could freely choose their driving speed and following distance. The driving time was 5 min, and we took the average distance between the two cars over the middle 3 min as the expected distance of each participant.
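As an illustration of the averaging step, the sketch below computes the mean of the samples recorded during the middle 3 min of a 5-min run. It assumes that "the middle 3 min" means the interval from 60 s to 240 s and that samples arrive at a fixed rate; the function name, the sampling rate, and the speed trace are hypothetical.

```python
def middle_window_mean(samples, sample_rate_hz, start_s=60.0, end_s=240.0):
    """Average the samples recorded between start_s and end_s of a run."""
    first = int(start_s * sample_rate_hz)
    last = int(end_s * sample_rate_hz)
    window = samples[first:last]
    if not window:
        raise ValueError("recording is shorter than the requested window")
    return sum(window) / len(window)


# HYPOTHETICAL 5-min speed trace at 1 Hz: ramp-up, steady cruising, slow-down.
speeds_kmh = [30.0] * 60 + [55.0] * 180 + [40.0] * 60
expected_speed = middle_window_mean(speeds_kmh, sample_rate_hz=1)
print(expected_speed)
```

The same function applies to the expected distance if the input is the recorded gap between the two cars instead of the speed.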

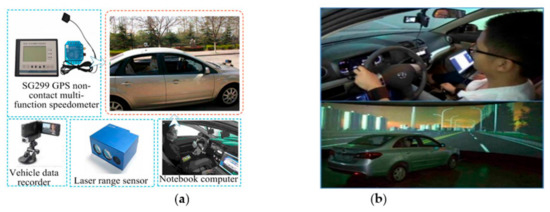

Figure 1.

Experiment equipment. (a) Actual experiment vehicle; (b) Virtual driving platform.

- Driving Experiment

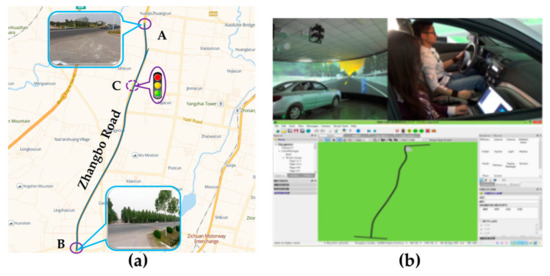

The driving experiments included an actual driving experiment and a virtual driving experiment. For the actual driving experiment, we used two experiment vehicles equipped with a multi-function speed-measuring instrument, a laser range sensor, a video capture system, and a notebook computer (Figure 1a); for the virtual driving experiment, we used a virtual driving platform (Figure 1b). In the actual driving experiment, the two vehicles simulated the car-following scene: one vehicle, as the front vehicle, ran normally along the experiment route at 60 ± 5 km/h, and a participant drove the following vehicle. Urban roads, which are full of intersections (signalized and unsignalized) and corners, were not an ideal experimental environment. Considering the high safety risks involved in driving experiments, an enclosed expressway was not selected either. We therefore chose a semi-enclosed section of Zhangbo Road (Figure 2a), located far outside the city and connecting the Zhangdian and Boshan Districts, as the actual driving experiment route. The route was about 9.8 km long (between points A and B in Figure 2a) and had a 70 km/h speed limit. The experiment vehicles were essentially unaffected by crossing or merging vehicles from other roads, except at one signalized intersection, noted as point C in Figure 2a (owing to regional conditions, signalized intersections could be minimized but not entirely avoided). To avoid the mandatory effect of the signalized intersection on driving intention, we did not use the data collected within 100 m before and after the intersection. We organized the actual driving experiments in off-peak periods under good weather and road conditions. We used the virtual driving platform (Figure 1b) to carry out the virtual driving experiment. The virtual road section (Figure 2b) was constructed in the platform according to the basic road properties and traffic volume of the actual experiment route. We created the car-following scene and collected the driving data in the platform.

Figure 2.

Experiment route. (a) Actual driving route; (b) Virtual road section.

- Data Pre-Processing

Each participant took part in a 10-min actual driving experiment and a 10-min virtual driving experiment. Table 1 shows the data obtained through the experiments. To describe the relationship between the vehicle state and the driver’s psychological expectation, the vehicle speed and the driver’s expected speed were coded as a single variable, defined as the expected speed deviator; likewise, the following distance and the driver’s expected distance were coded as a single variable, defined as the expected distance deviator (symbols as in Table 1). We used the acceleration to represent the execution result of intention. The time window for a single state was set to 10 s. An F-test comparing the model parameters obtained with time windows of 5, 10 and 15 s found no significant difference; the test results are shown in Supplementary Materials 3. Table 2 shows the rules for data discretization. Finally, we obtained 3039 and 3534 data sets from the actual and virtual driving experiments, respectively.

Table 1.

Variables and symbols.

Table 2.

Rules for data discretization.

2.1.2. Model Construction

- Hidden Markov Model (HMM)

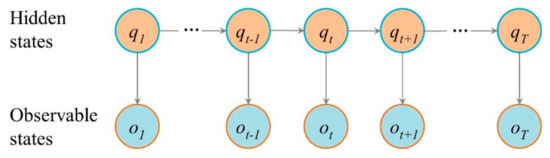

The HMM is a statistical model that can be represented by a set of hidden states $S = \{s_1, s_2, \ldots, s_N\}$, a set of observable states $V = \{v_1, v_2, \ldots, v_M\}$, the initial state probability matrix $\pi$, the transition probability matrix $A$ and the emission probability matrix $B$ [24,25,26], where $N$ is the number of hidden states and $M$ is the number of observable states. $q_t$ is the hidden state and $o_t$ is the observable state at time $t$. $\pi_i$ represents the probability of $s_i$ at $t = 1$. $a_{ij}$ represents the transition probability between the states, indicating the probability that the state $s_j$ is true at $t + 1$ when the state $s_i$ is true at $t$. $b_j(k)$ represents the probability that the observation $v_k$ is true when the state $s_j$ is true at $t$. We represented the HMM as $\lambda = (\pi, A, B)$. We assumed that the hidden state depends only on the hidden state at the previous moment, and that the observable state depends only on the current hidden state of the Markov chain [27]. An HMM can be represented as a probabilistic network (Figure 3).

Figure 3.

An HMM represented as a probabilistic network.

- Set of Variables

In this study, we took the driving intention at a given time step as the hidden variable and the running data of the vehicle at that time step as the observation variables. We assumed the driving behavior at the following time step to be the driving intention at the current step. Accordingly, the driving intention of the following driver was taken to be deceleration, keeping speed, or acceleration depending on whether the acceleration at the following time step fell in the deceleration, constant-speed, or acceleration range. The recorded vehicle running data formed the initial observed variables. We applied the factor analysis method in SPSS 24 to reduce the dimension of the original observation variables [28]. Table 3 shows the common factor variance of the initial observed variables. The extracted common factor variances of the parameters were very close, which indicated that the factor analysis method was practicable. Table 4 shows the total variance explained after factor rotation. As can be seen, the first three factors accounted for 93.86% of the total information.

Table 3.

Common factor variance.

Table 4.

Interpretation of total variance.

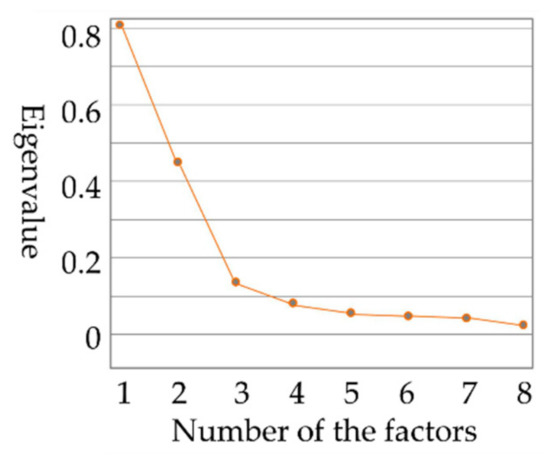

Figure 4 shows the information content of each factor. The scree plot also shows that the information contained in the first three common factors meets the modeling requirements. The next step was to find the three parameters that best represent the first three common factors.

Figure 4.

Scree plot of the information content of each factor.

Table 5 shows the rotated factor loading matrix. One variable had the maximum loading (95.2%) on factor 1, a second had the maximum loading (89.6%) on factor 2, and a third had the maximum loading (87.1%) on factor 3. In summary, these three variables constituted the observation variables (factor set) of the driver’s intentions.

Table 5.

Rotated factor matrix.

- Model Training

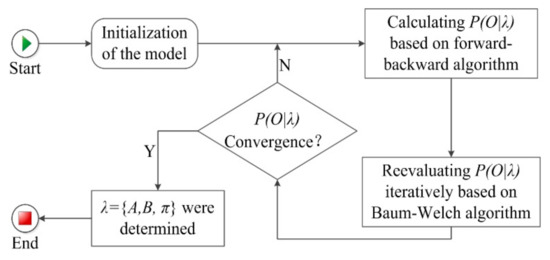

Model training determined the values of the initial state probabilities $\pi$, the transition probability matrix $A$ and the emission probability matrix $B$ of the HMM. First, we initialized the model parameters, given the observation sequence, the state sequence, the set of all possible observations, and the set of all possible hidden states. Second, we computed the probability of the observation sequence with the forward–backward algorithm [29]. Finally, we checked convergence and determined the model parameters with the Baum–Welch algorithm [30]. Figure 5 shows the model training process. We describe the key steps of model training in Supplementary Materials 1. We used 68.5% of the original data to train the model.

Figure 5.

Model training process.
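To make the second training step concrete, the sketch below shows only the forward pass of the forward–backward algorithm, which computes the probability of an observation sequence under a discrete HMM. It is not the authors' implementation. The names `pi`, `A` and `B` stand for the initial state probabilities, transition matrix and emission matrix (standard notation, assumed here), and all numeric values are hypothetical.

```python
def forward_likelihood(pi, A, B, observations):
    """Return P(observations | model) for a discrete HMM given (pi, A, B)."""
    if not observations:
        raise ValueError("observation sequence must not be empty")
    n_states = len(pi)
    # Initialization: alpha_1(i) = pi_i * b_i(o_1)
    alpha = [pi[i] * B[i][observations[0]] for i in range(n_states)]
    # Induction: alpha_{t+1}(j) = (sum_i alpha_t(i) * a_ij) * b_j(o_{t+1})
    for obs in observations[1:]:
        alpha = [
            sum(alpha[i] * A[i][j] for i in range(n_states)) * B[j][obs]
            for j in range(n_states)
        ]
    # Termination: sum the forward variables over the final hidden state.
    return sum(alpha)


# HYPOTHETICAL parameters for three intentions (decelerate, keep speed, accelerate)
# and three discretized observation symbols; every row sums to 1.
pi = [0.25, 0.50, 0.25]
A = [[0.6, 0.3, 0.1],
     [0.2, 0.6, 0.2],
     [0.1, 0.3, 0.6]]
B = [[0.7, 0.2, 0.1],
     [0.2, 0.6, 0.2],
     [0.1, 0.2, 0.7]]

likelihood = forward_likelihood(pi, A, B, [0, 1, 1, 2])
print(likelihood)
```

Baum–Welch re-estimation would repeatedly combine these forward variables with the corresponding backward variables to update the three parameter sets until the likelihood stops improving; for long sequences a scaled or log-space variant is usually preferred to avoid numerical underflow.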

2.2. Results

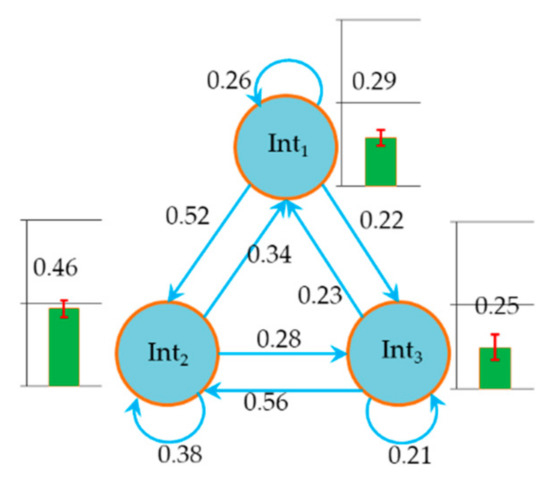

We obtained the probabilities of the intentions by model training. The probabilities of deceleration (Int1), keeping speed (Int2) and acceleration (Int3) were 0.247, 0.468, and 0.285, respectively. Figure 6 shows the state transition probabilities. As can be seen from the intention probabilities and the transition probabilities, the probability of Int2 was significantly higher than that of acceleration or deceleration. This indicated that the results of model training were in line with reality, because a driver tends to drive in a stable state until the driving environment changes. The fact that the probabilities of Int1 and Int3 transferring to Int2 were larger than the other transition probabilities also supported this. We show the probabilities of the observation states in Supplementary Materials 2 and discuss how they change in the discussion section.

Figure 6.

Transition probability between driver’s intention states in the HMM.

2.3. Model Verification

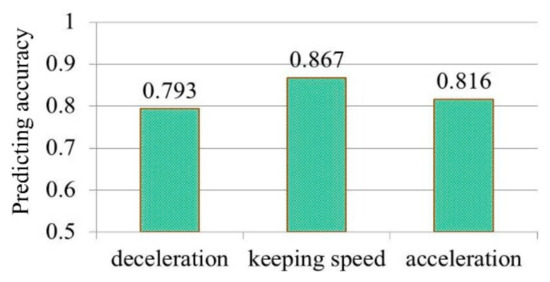

We used the HMM to predict the driving intention. That is, the driving intention at a given time step (i.e., the driving behavior at the following step) could be predicted when the observation variables and the driving intention (acceleration) at the preceding step were known. We applied the remaining data sets in the original database, which were not used in model training, to verify the model accuracy. The model accuracy in predicting the driving intention was obtained by comparing the predicted value and the actual value of the vehicle acceleration at the corresponding time step. Figure 7 shows the model accuracy in predicting driving intentions. We used the normal approximation method to calculate the confidence intervals of the prediction accuracy [31], and the 95% confidence intervals of the recognition accuracy for Int1, Int2 and Int3 were (0.761, 0.825), (0.845, 0.889) and (0.783, 0.849), respectively. The result showed that the accuracy of the proposed driving intention prediction model was acceptable compared with many existing driving intention prediction algorithms, whose prediction accuracy is generally between 75% and 90% [32,33,34].

Figure 7.

Accuracy in predicting driving intentions of proposed HMM.
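For readers who want to reproduce this kind of interval, the sketch below computes a normal-approximation (Wald) confidence interval for a prediction accuracy. It assumes this is the "approximate normal distribution method" referred to above; the function name and the counts are hypothetical and are not the study's data.

```python
import math


def accuracy_confidence_interval(n_correct, n_total, z=1.96):
    """Normal-approximation interval for a proportion; z = 1.96 gives about 95%."""
    if n_total <= 0:
        raise ValueError("n_total must be positive")
    p = n_correct / n_total
    half_width = z * math.sqrt(p * (1.0 - p) / n_total)
    # Clip to [0, 1] because the approximation can overshoot near the bounds.
    return max(0.0, p - half_width), min(1.0, p + half_width)


# HYPOTHETICAL counts, chosen only for illustration.
low, high = accuracy_confidence_interval(n_correct=50, n_total=100)
print(round(low, 3), round(high, 3))
```

The approximation is reasonable when both the number of correct and incorrect predictions are large; for small validation sets, an exact or Wilson interval would be a safer choice.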

3. Study 2: Driving Intention Prediction Models Adapting to Multi-Mode Emotions

3.1. Materials and Methods

3.1.1. Experiment Design

The purpose of the experiments in this study was to collect the driving data of drivers in different emotional states. The driving-related experimental procedure was consistent with Study 1; the difference was that the participants were in different emotional states.

- Participants

The same experimental sample of 62 adult drivers volunteered to participate in this study.

- Driving Emotion Classification and Selection

Psychologists classified emotions into different categories according to different criteria and theoretical constructions [35,36]. Among the many models of emotional structure, a revised edition of the Geneva Emotion Wheel was most suitable for the driving context [37]. This edition derived from the original wheel. Back-and-forth translation and the exchange of emotional qualities made the tool more suitable for traffic settings [38,39]. According to [40], pleasure was also a high-frequency driving emotion. We summarized the 18 emotions involved in [37,38,39,40] into a scale. The participants were asked to give a score from 0 to 5 for each emotion according to their experience during driving. Finally, 8 emotions with higher scores were selected, namely anger (Em1), surprise (Em2), fear (Em3), anxiety (Em4), helplessness (Em5), contempt (Em6), relief (Em7), and pleasure (Em8).

- Experiment Materials

Aside from the driving experiment equipment mentioned in Study 1, the experiment materials in this study also included emotion induction materials. These comprised pictures and movie clips drawn from the Internet, the International Affective Picture System (IAPS) and the Chinese Affective Picture System (CAPS). Figure 8 shows some of the emotion induction materials and Table 6 lists the movie clips applied in this study.

Figure 8.

Some emotion induction materials. (a) Pictures; (b) Movie clips.

Table 6.

Movie clips for emotion induction.

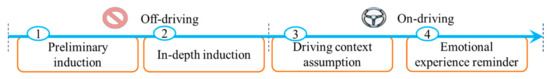

- Emotion Induction

It is difficult to induce an individual’s emotions effectively and to measure the level of emotional arousal accurately [40]. The methods most used in emotion induction research are movie clips [41], personalized recall [42], picture viewing [43], acoustic material [44] and standardized imagery [45]. Analyses have shown that music, movies and imagery are relatively ideal methods for emotion induction, with success rates of more than 75% [46,47]. However, a driver generally needs to devote most of his or her attention to driving, which makes it even harder to induce the specific emotions needed. To induce and maintain the driver’s emotions effectively while driving, and to minimize the impact on driving tasks, we designed a new emotion induction process with four steps (shown in Figure 9). The preliminary induction and in-depth induction were carried out before the driving experiments, and the driving context assumption and emotional experience reminders were implemented while the participants drove the car.

Figure 9.

Emotion induction process.

In the preliminary induction of anger, surprise, fear, and anxiety, we presented pictures with specific emotional overtones and described the content of each picture to the participants. In the preliminary induction of helplessness and relief, we applied the personalized recall method to evoke the participants’ helplessness and relief. For helplessness, we guided the participants to recall experiences such as failing an examination or being unable to repay a large housing or car loan. For relief, we guided the participants to describe their own experiences, such as passing the driving skill test. For contempt induction, a driving competition was set up on the driving simulator in the virtual driving experiment. First, we constructed a number of driving routes in the simulated driving scene and set up obstacles, such as construction areas, along the routes. Second, we had five experiment organizers and one participant drive a virtual vehicle along the routes in sequence. Third, the simulator controller manipulated the driving competition so that the participant always reached the end of the experiment routes in the shortest time. Fourth, we announced the driving competition results to the participant and praised his or her driving skill to produce a strong sense of superiority. In the actual driving experiments, we also used the personalized recall method to preliminarily induce contempt: the participants were guided to recall experiences of feeling very superior, such as passing a course test with a higher score than their classmates or receiving more appreciation or attention than others. For pleasure, we informed the participants that they would receive prizes if they finished the experiment. The in-depth induction of each emotion was carried out as soon as possible after the preliminary induction.
The induction materials for each emotion contained two or three movie clips, one of which we picked at random to play to the participants. To avoid weakening the induction effect through prior familiarity with a movie, the movie clips used in the actual and virtual driving experiments for the same participant were never repeated. The driving experiment started immediately after the above two steps. The attributes of the driving task have an important impact on the driver’s mood [48,49]. For example, in commuter driving, drivers are very likely to be anxious about traffic congestion. Therefore, we assigned each driving experiment a hypothetical driving situation or task attribute to maintain the induced driving emotion. Table 7 shows the hypothetical driving task attributes for each emotion. During driving, we reminded the participants of their induced emotions through voice guidance, psychological suggestion or music (music only for relief).

Table 7.

Hypothetical driving task attributes.

- Driving Experiment

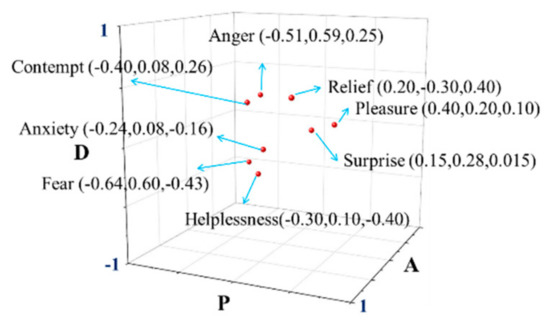

The equipment and procedures of the driving experiments were the same as in Study 1. Each participant took part in eight actual driving experiments and eight virtual driving experiments, one for each emotional state. Unlike Study 1, an important task while driving was to measure the driver’s emotional state. As an important branch of affective computing, emotion recognition based on electroencephalogram (EEG) [50], electrocardiogram (ECG) [51], galvanic skin response (GSR) [52], respiration (RSP) [53], facial expression [54], and speech [55] has become a popular approach to human emotion recognition. However, we investigated eight kinds of driving emotions in this paper, and it seems impossible to find a single measurement among these methods that works effectively for all of them. In view of this, we used the PAD emotion scale and model to measure the participants’ emotional states in the driving experiments. The PAD model was proposed by A. Mehrabian and J. Russell [56,57]. According to the model, emotions are composed of Pleasure–displeasure (P), Arousal–nonarousal (A), and Dominance–submissiveness (D). The values in each dimension range from −1 to +1 and can be used to represent specific emotions, which provides a workable framework for affective computing. Previous research has shown that the three dimensions can effectively explain human emotions; they are not limited to describing the subjective experience of emotions but also map well onto the external expression and physiological arousal of emotions [57]. Since the PAD model was proposed, many scholars have worked on building the mapping between emotions and the three-dimensional PAD space. Figure 10 summarizes the PAD space distributions of the eight emotions in this paper, based on previous studies [58,59,60,61].

Figure 10.

Distribution of the eight emotions in PAD space.

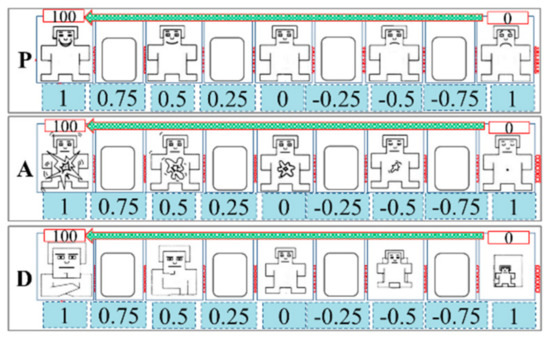

The PAD measurement tool, which is in line with psychological measurement theory [28], is the PAD emotion scale (shown in Figure 11). As can be seen, each dimension is divided into nine states, five of which are represented by cartoon figures with different shapes and sizes; there is a blank space between two adjacent cartoon figures in the same dimension. For the dimension “P”, the facial expressions of the cartoon figures range from extreme unhappiness on the far right (value −1), through neutrality in the middle (value 0), to extreme happiness on the far left (value 1). For the dimension “A”, the size and irregularity of the image in the cartoon bodies and the size of the cartoon figures’ eyes represent states from sleepy and indifferent on the far right (value −1), through relatively calm in the middle (value 0), to very excited on the far left (value 1). For the dimension “D”, the cartoon figures’ body size and eye size represent the degree of influence and control, from being heavily influenced on the far right (value −1), through the normal state in the middle (value 0), to being able to control the situation well on the far left (value 1).

Figure 11.

PAD emotion scale.

For ease of use in the experiment and to facilitate the subsequent calculation, each dimension was rescaled to range from 0 to 100 according to its strength; Table 8 shows the converted coordinates of each emotion. Before the emotion induction and driving experiments, we asked the participants to memorize the meanings of P, A and D and made sure that they could accurately express their status in all dimensions without having to see the scale. The participants were required to report, every minute, the PAD values (between 0 and 100 for each of P, A, and D) that matched their psychological state. In this way, the participants could intuitively select a graphical representation of their current psychological state, and the subjective and fuzzy descriptions of one’s own emotional state that are common in emotional self-reports were avoided. In the driving experiments, the participants also heard voice cues for emotion recall to maintain their induced emotions. We recorded the driving processes in real time with the video detection system.

Table 8.

Converted coordinates of each emotion.

Each participant received eight kinds of induction. For each participant, the interval between the eight induction experiments had to be long enough to ensure that his or her eight emotional experiences did not interfere with each other. We numbered the 62 participants and had them complete one kind of emotion induction and the corresponding driving data acquisition in sequence according to the numbering order. The induction experiment for another emotion began only after all participants had completed a given emotion induction and the corresponding driving data collection. Across the eight emotion-specific inductions, the order of the 62 participants was fixed. According to the experiment records, the shortest time taken to complete the emotion induction and corresponding driving data collection for all participants was 11 days, in the relief virtual-driving experiment. This meant that the interval between two emotion induction experiments for the same participant was at least 11 days, so the eight emotional experiences did not interfere with each other. In addition, each emotion induction involved four steps. In practice, we kept the time intervals between steps as short as possible to ensure that the emotional stimulation of each step had a positive impact on that of the next step.

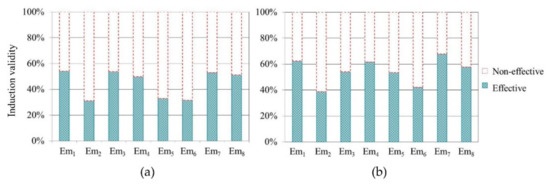

- Effect Testing of Emotion Induction

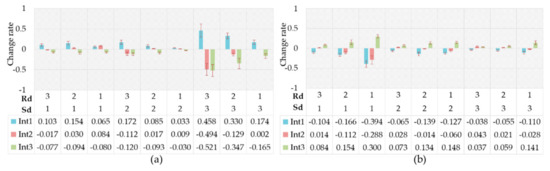

To ensure that the data were valid, we tested the effectiveness of emotion induction. In the driving experiments, drivers reported PAD values for their emotional state every minute. Therefore, for each emotional state, we obtained 620 emotion indicator results in the actual driving experiments and 620 in the virtual driving experiments. Each set of emotion indicator data represented the driver’s emotional state within one minute. We took the Euclidean distance in the three-dimensional PAD space between the reported coordinates (reported by the participants in the driving experiments) and the converted coordinates of the emotion as the measurable criterion of emotion activation [62]. The smaller the distance, the higher the degree of emotion activation. In the PAD measurement tool, adjacent states in each dimension differ by 0.25. Therefore, a Euclidean distance of less than 0.25 from the reported PAD point to the corresponding emotional coordinate point in the original three-dimensional PAD space indicated that the emotional state was effectively induced. According to this criterion, we obtained the percentage of effective emotional data in the total data for each emotional state (Figure 12) and deleted the non-effective emotional data.

Figure 12.

Emotion measurement results. (a) Actual driving experiments; (b) Virtual driving experiments.
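The induction criterion can be sketched in a few lines. The example assumes that the 0 to 100 self-report scale is a linear rescaling of the original PAD range from −1 to +1, which the text does not state explicitly. The target coordinate is a hypothetical placeholder and not one of the study's converted coordinates; the function names are illustrative.

```python
import math


def to_pad_unit(score_0_100):
    """Map a 0-100 self-report back to the original PAD range [-1, 1] (assumed linear)."""
    return score_0_100 / 50.0 - 1.0


def is_emotion_induced(reported_0_100, target_pad, threshold=0.25):
    """True when the report lies within `threshold` of the target in original PAD space."""
    reported = [to_pad_unit(v) for v in reported_0_100]
    return math.dist(reported, target_pad) < threshold


# HYPOTHETICAL target coordinate (P, A, D) for one emotion.
target = (-0.5, 0.6, 0.3)

print(is_emotion_induced((25, 80, 65), target))  # report maps onto the target itself
print(is_emotion_induced((25, 80, 90), target))  # D maps to 0.8, i.e. 0.5 away from the target
```

Applying the check to every one-minute report and dividing the number of accepted reports by the total gives the percentage of effective emotional data for an emotion.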

3.1.2. Model Construction

Driving intention prediction models adapted to the eight kinds of emotions were established. For each model, the data processing and model training were consistent with Study 1. We used 70% of the experimental data for each emotional state for model training, and applied the remaining data to verify the corresponding model.

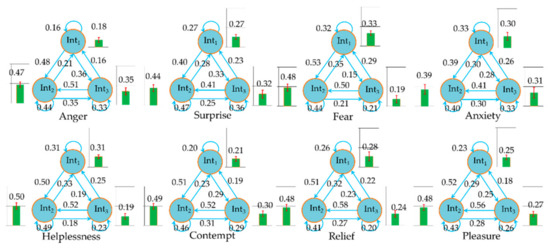

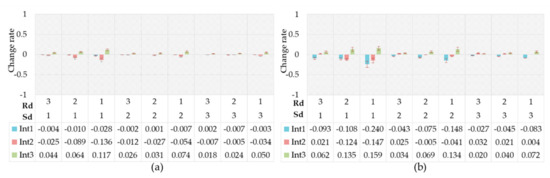

3.2. Results

We obtained the probability distributions of the three driving intentions in different emotional states and the transition probabilities between the three intentions in the proposed HMMs by model training. Figure 13 shows the training results. Compared to the natural state, there was no significant change in the probability distribution of Int2 under the other emotional states, except for anxiety. In the state of anxiety, the probability of the driver keeping speed decreased significantly, while the probabilities of accelerating and decelerating both increased, with the probability of acceleration increasing dramatically. The same pattern of probability increase and decrease also applied in the state of surprise, but the change in probability was less obvious than in anxiety. In the states of anger and contempt, the probability of the intention to decelerate decreased significantly, while the probability of the intention to accelerate increased noticeably and the probability of keeping speed increased slightly. In fear and helplessness, the probability of the intention to accelerate decreased significantly, and the probability of maintaining speed increased to a certain extent. The probability of the intention to decelerate increased significantly in fear, but decreased significantly in helplessness. Aside from a significant increase in the probability of Int1 in the pleasure state, the probability distributions of the driving intentions did not change significantly in the relief and pleasure states compared with the natural state. Corresponding to the change in the probability distribution, if the probability of one driving intention increased, the transition probabilities from the other two driving intentions to it also increased. Conversely, if the probability of one driving intention decreased, the transition probabilities from the other two intentions to it decreased. The probabilities of the observation states for different emotions are given in Supplementary Materials 2, and the changes in the observation sequence are discussed in the Discussion section.
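One way to see why the probability of an intention and the transition probabilities into it move together is to look at the long-run (stationary) distribution of a three-state Markov chain. The sketch below is illustrative only: the two transition matrices are invented numbers, not values from Figure 13, and treating the intention probabilities as a stationary distribution is an assumption made for this example.

```python
def stationary_distribution(A, iterations=500):
    """Approximate the stationary distribution of a row-stochastic matrix
    by repeatedly applying pi <- pi * A (power iteration)."""
    n = len(A)
    pi = [1.0 / n] * n
    for _ in range(iterations):
        pi = [sum(pi[i] * A[i][j] for i in range(n)) for j in range(n)]
    return pi


# Hypothetical baseline chain over three intention states (0, 1, 2).
baseline = [
    [0.6, 0.3, 0.1],
    [0.2, 0.6, 0.2],
    [0.1, 0.3, 0.6],
]

# Same chain, but states 0 and 1 now move to state 2 more often.
shifted = [
    [0.6, 0.2, 0.2],
    [0.2, 0.5, 0.3],
    [0.1, 0.3, 0.6],
]

pi_baseline = stationary_distribution(baseline)
pi_shifted = stationary_distribution(shifted)
```

In this toy case, raising the transition probabilities from the other two states into state 2 raises the long-run share of state 2, which mirrors the co-movement reported above.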

Figure 13. Transition probability between driver’s intention states in the different HMMs.

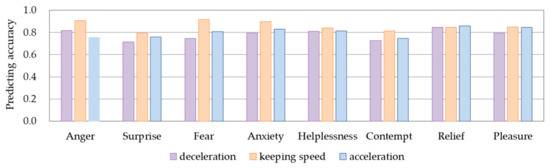

3.3. Model Verification

We used the HMMs proposed in this study to predict the driving intention under the driver’s different emotional states. We applied the remaining data sets, which were not used in model training, to verify the accuracy of the proposed models. We obtained the accuracy of the models in predicting the driving intention by comparing the predicted and actual values of the vehicle acceleration. Figure 14 shows the accuracy of the HMMs in predicting driving intentions for different emotions. The results showed that the prediction accuracy for the deceleration, keeping speed, and acceleration intentions reached a similarly acceptable level across the different models.
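The verification step can be illustrated with a small per-intention accuracy calculation. This is a hedged sketch: the rule that converts a measured acceleration into an intention label, including the threshold of 0.1, is a hypothetical stand-in, since the labeling rule is not restated in this section.

```python
def intention_from_acceleration(accel, threshold=0.1):
    """Map a measured acceleration to an intention label.
    The threshold is a hypothetical value chosen for illustration."""
    if accel > threshold:
        return "accelerate"
    if accel < -threshold:
        return "decelerate"
    return "keep"


def per_intention_accuracy(predicted, actual_accels, threshold=0.1):
    """Fraction of correctly predicted samples for each actual intention."""
    hits, totals = {}, {}
    for pred, accel in zip(predicted, actual_accels):
        truth = intention_from_acceleration(accel, threshold)
        totals[truth] = totals.get(truth, 0) + 1
        hits[truth] = hits.get(truth, 0) + int(pred == truth)
    return {label: hits[label] / totals[label] for label in totals}


# Hypothetical held-out samples: model predictions and measured accelerations.
predicted = ["accelerate", "keep", "decelerate", "keep"]
measured = [0.5, 0.0, -0.4, 0.3]
accuracy = per_intention_accuracy(predicted, measured)
```

Reporting accuracy separately for each intention, as Figure 14 does, avoids a frequent intention masking poor performance on a rarer one.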

Figure 14. Accuracy in predicting driving intentions of HMMs for different emotions.

4. Discussion

In Studies 1 and 2, we obtained and analyzed the probability distribution and transition probability of the three driving intentions in drivers’ natural states and different emotional states.

In order to examine whether emotions had significant influences on the probability distribution of the three intentions, we used the t-test [31] to compare the probability of each driving intention in the non-emotional state (Ne) with that in each of the eight emotional states. In this process, we merged the actual and virtual driving data of each participant into one data sample. Table 9 shows the t-test results. As can be seen from Table 9, the influences of anxiety and relief on the probability of Int1 were not significant, whereas the effects of the other emotions on the probability of Int1 were significant. The influences of anger, surprise, fear, and relief on the probability of Int2 were not significant, whereas the effects of the other emotions on the probability of Int2 were significant. Except for pleasure and relief, the effects of the emotions on the probability of Int3 were significant.
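The comparison between the non-emotional state and one emotional state can be sketched as a t statistic computed over per-participant intention probabilities. The text does not state which t-test variant was used, so the paired form below is an assumption, and the sample values are invented for illustration.

```python
import math
import statistics


def paired_t_statistic(baseline, treatment):
    """Return (t, degrees_of_freedom) for a paired t-test on two
    equally long lists of per-participant values."""
    if len(baseline) != len(treatment) or len(baseline) < 2:
        raise ValueError("need two equally long samples with at least 2 values")
    diffs = [t - b for b, t in zip(baseline, treatment)]
    sd_diff = statistics.stdev(diffs)
    if sd_diff == 0:
        raise ValueError("differences have zero variance")
    t_stat = statistics.mean(diffs) / (sd_diff / math.sqrt(len(diffs)))
    return t_stat, len(diffs) - 1


# Hypothetical probabilities of one intention for four participants.
p_ne = [0.30, 0.28, 0.35, 0.31]      # non-emotional state (Ne)
p_anger = [0.42, 0.40, 0.41, 0.45]   # anger state
t_stat, dof = paired_t_statistic(p_ne, p_anger)
```

The resulting statistic would then be compared with the critical value of the t distribution for the given degrees of freedom and the chosen significance level.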

Table 9. t-test for probability distribution of driving intention.

Analogously, we used the t-test to examine the significance of emotional influence on the transition probability of the three intentions; the test results are shown in Table 10. Except that relief had no significant effect on the transition of Int3, the different emotions had significant impacts on the transition of driving intention (in whole or in part).

Table 10. t-test for transition probability of driving intention.

Furthermore, we applied the F-test [31] to verify whether the eight emotions differed in their impact on the transition probability of driving intentions. The test results are shown in Supplementary Materials 4 and support the conclusion that there were significant differences in the influence of the eight emotions on the driving intention transition probability. We also used the q-test [31] to compare the effects of any two emotions on the transition probability of intention. The test results are shown in Supplementary Materials 5.
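The F-test across emotions can be illustrated with a one-way analysis of variance, in which each group holds the transition probabilities observed under one emotion. This is a sketch under the assumption that a one-way design was used; the group values are invented, and only three groups are shown for brevity.

```python
def one_way_f(groups):
    """Return the one-way ANOVA F statistic for a list of samples."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n

    ss_between = 0.0
    ss_within = 0.0
    for g in groups:
        group_mean = sum(g) / len(g)
        ss_between += len(g) * (group_mean - grand_mean) ** 2
        ss_within += sum((x - group_mean) ** 2 for x in g)

    ms_between = ss_between / (k - 1)   # between-group mean square
    ms_within = ss_within / (n - k)     # within-group mean square
    return ms_between / ms_within


# Hypothetical transition probabilities under three emotions.
groups = [
    [0.20, 0.22, 0.19, 0.21],
    [0.30, 0.33, 0.29, 0.31],
    [0.25, 0.24, 0.27, 0.26],
]
f_stat = one_way_f(groups)
```

A large F value relative to the critical value of the F distribution indicates that at least one emotion differs from the others; pairwise follow-up comparisons, such as the q-test mentioned above, then identify which pairs differ.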

Finally, we sought to obtain the changes in driving intention produced by a change in the emotional variable under the condition that the other variables were unchanged or nearly unchanged. From the intention identification model constructed above, we obtained the probability distribution of the driving environment parameters under the different intention states. We used the Bayesian formula (shown in (1)) to solve for the probability distribution of driving intention under different driving environment parameters.
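The Bayesian inversion described here, from the probability of an observation given an intention to the probability of an intention given an observation, can be sketched as below. The prior and likelihood values, the intention labels, and the observation name "o1" are hypothetical; in the study these quantities would come from the trained HMM of each emotional state.

```python
def posterior_over_intentions(prior, likelihood, observation):
    """Apply Bayes' rule: P(intention | obs) is proportional to
    P(obs | intention) * P(intention)."""
    joint = {
        intention: prior[intention] * likelihood[intention].get(observation, 0.0)
        for intention in prior
    }
    evidence = sum(joint.values())
    if evidence == 0:
        raise ValueError("observation has zero probability under every intention")
    return {intention: value / evidence for intention, value in joint.items()}


# Hypothetical intention probabilities and observation probabilities.
prior = {"decelerate": 0.2, "keep": 0.5, "accelerate": 0.3}
likelihood = {
    "decelerate": {"o1": 0.1},
    "keep": {"o1": 0.2},
    "accelerate": {"o1": 0.5},
}
posterior = posterior_over_intentions(prior, likelihood, "o1")
```

Evaluating the posterior for the same observation under the models of two different emotional states isolates the effect of emotion while the driving environment is held fixed.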

In this study, the observation variable contained three parameters. One represented the difference between the actual following distance and the driver’s expected distance (the distance difference), and another represented the difference between the actual vehicle speed and the driver’s expected speed (the speed difference). In the process of driving, drivers often express their psychological needs through speed and distance, i.e., the pursuit of higher speed and shorter distance [23,63]. The speed difference took three states: the driver’s speed need was not being met; the current vehicle speed was just enough to meet the driver’s speed need; or the speed of the vehicle was too great for the driver’s speed need. Similarly, the distance difference took three states: the driver’s distance need was not being met; the current following distance was just enough to meet the driver’s distance need; or the following distance was too short for the driver’s distance need. Considering the basic definitions of these two parameters [64,65], their combination was defined as the satisfaction degree. The component of the satisfaction degree in the speed dimension was assigned 0, 1, and 2 for these three speed states, respectively, and the component in the distance dimension was assigned 0, 1, and 2 for these three distance states, respectively. When the sum of the two components was 0 or 1, the satisfaction degree was assigned 1, indicating that the driver’s demand satisfaction was low. When the sum was 2, the satisfaction degree was assigned 2, indicating that the driver’s demand satisfaction was good. When the sum was 3 or 4, the satisfaction degree was assigned 3, indicating that the driver’s demand was over-met. In car-following theory, the distance between vehicles is often regarded as an index of safety risk [66]. In this study, the distance difference was defined as the risk degree, which was assigned 3, 2, and 1 according to the state of the distance difference. The greater the value of the risk degree, the greater the driving safety risk in the distance dimension. In order to show more intuitively the change in driving intention probability caused by the evolution of driving emotion, (2) calculates the change rates of driving intention probability under different emotions.

where, is the change rate of driving intention probability. is the driving intention. denotes the combinatorial states of , and . is the emotional state.
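The satisfaction degree rule and the change rate can be sketched in a few lines. The satisfaction mapping follows the sums stated in the text (0 or 1, 2, 3 or 4). The change rate formula is an assumption: because the expression of (2) is not reproduced here, the sketch uses the relative change of the intention probability with respect to the natural state. All names and sample values are hypothetical.

```python
def satisfaction_degree(speed_component, distance_component):
    """Combine the speed and distance components (each 0, 1, or 2)
    into a satisfaction degree of 1 (low), 2 (good), or 3 (over-met)."""
    for component in (speed_component, distance_component):
        if component not in (0, 1, 2):
            raise ValueError("components must be 0, 1, or 2")
    total = speed_component + distance_component
    if total <= 1:
        return 1
    if total == 2:
        return 2
    return 3


def change_rate(p_emotion, p_natural):
    """Assumed form: relative change of an intention probability under an
    emotion with respect to the natural state, for the same observation."""
    if p_natural == 0:
        raise ValueError("natural-state probability must be non-zero")
    return (p_emotion - p_natural) / p_natural


# Hypothetical probabilities of one intention in the same combinatorial state.
rate = change_rate(p_emotion=0.45, p_natural=0.30)
```

Under this assumed form, a positive rate means the emotion makes the intention more likely than in the natural state, and a negative rate means it makes the intention less likely.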

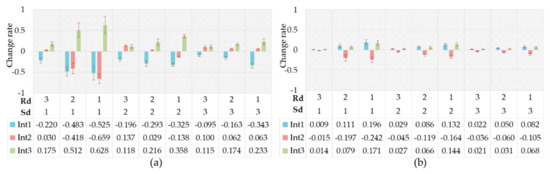

Through the above processing and calculation, we obtained the change rates of the driving intention probability between the driver’s anger and natural states. As can be seen from Figure 15a, compared to the natural state, the probability of acceleration significantly increased while the probability of deceleration significantly decreased in the anger state. Additionally, the degree of the above changes decreased with the increase in or . Many previous studies have shown that angry drivers prefer aggressive driving behavior [10,67,68]. The results of this study showed that drivers were more inclined to accelerate when they were angry, i.e., they preferred to seek a higher driving speed and a shorter following distance. Figure 15b shows the change rates of the driving intention probability between the driver’s surprise and natural states. No remarkable change in driving intention probability took place when the was at a high level. In the moderate or low , the probability of keeping speed decreased significantly and the probability of the other two intentions increased noticeably when the was moderate or low. Surprise promotes the cognitive control of events and the ability to adapt to sudden environmental changes [69,70]. Therefore, surprise led to an increase in the sensitivity of drivers to external environmental changes and made drivers’ attention easily drawn to other environmental factors. The increased cognitive load could confuse the driver’s vehicle control strategy, thus making the driver change speed frequently within a certain range.

Figure 15. Change rates of driving intention probability. (a) Caused by anger; (b) Caused by surprise.

Figure 16a shows the change rates of the driving intention probability between the fear and natural states. It is evident that the probability of the intention to decelerate increased while the probability of the intention to accelerate decreased. When was moderate, the change was obvious only when the was high. The changes were more conspicuous when the was high and low, and the change rates increased with the increase in . The change rates in fear followed the opposite pattern to those in anger. The driver was more likely to decelerate, i.e., drivers preferred conservative driving behaviors. Similar results can be found in many previous studies [71,72]. It is generally accepted that fear increases the driver’s risk perception [73] and that such drivers prefer driving patterns with low risk [74]. Figure 16b shows the change rates of the driving intention probability between the anxiety and natural states. As can be seen from Figure 16b, the probabilities of the intentions to accelerate and decelerate both markedly increased, while the probability of keeping speed significantly decreased. Many previous studies have shown that anxiety increases driving risks [75,76,77], and our findings support this, since constant acceleration and deceleration clearly increase driving safety risk.

Figure 16. Change rates of driving intention probability. (a) Caused by fear; (b) Caused by anxiety.

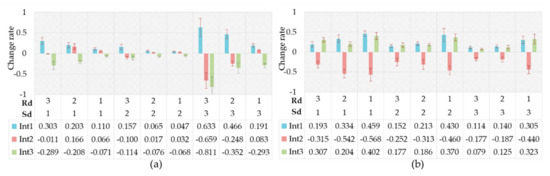

Figure 17a shows the change rates of the intention probability between the driver’s helplessness and the natural state. A probabilistic variation, similar to that of fear, appeared in helplessness, while the intention probability distribution changed to a lower degree under the helplessness emotion. Helplessness was proven to be an emotion similar to fear [73]. Figure 17b shows the change rates of the driving intention probability between the driver’s contempt emotional state and the natural state. Few previous studies have focused on driver contempt in the field of driving behavior, while the results of this paper have shown that the probabilistic change in driving intentions was similar to anger but less variable. The probability of the driver’s intention to accelerate significantly increased, while the probability of the driver’s intention to decelerate significantly decreased. Additionally, the degree of the above changes decreased with the increase in or .

Figure 17. Change rates of driving intention probability. (a) Caused by helplessness; (b) Caused by contempt.

Figure 18a shows the change rates of the driving intention probability between the driver’s relief emotional state and the natural state. As can be seen, the change of driving intention probability caused by relief was insignificant. This seemed to suggest that a driver made rational driving decisions in a relaxed emotional state. However, some researchers pointed out that relief increased driving risk by reducing driver’s awareness of risk [78]. Figure 18b shows the change rates of the driving intention probability between the driver’s pleasure emotion state and the natural state. The probabilistic variation of driving intention in the pleasant emotional state was similar to that of relief, while the change degree in the pleasant emotional state increased slightly.

Figure 18. Change rates of driving intention probability. (a) Caused by relief; (b) Caused by pleasure.

5. Conclusions

This study focused on the influence of the driver’s different emotional states on the generation and transition of driving intention states. By constructing driving intention prediction models suited to the driver’s natural state and to eight typical emotional states, we obtained the probability distribution and transition probability of three driving intentions in the driver’s different states. By controlling a single variable, we obtained the generation probability of driving intention under the condition that the emotional state changed while the driving environment parameters remained unchanged. Finally, we compared the driving intention generation probabilities in different emotional states with those in the natural state and obtained the differences in driving intention transitions caused by the driver’s emotion evolution.

The basic idea of most previous driving behavior prediction models is to predict the driver’s behavior based on the current running state of the vehicle and its time–space relationship with other vehicles in the vehicle aggregation situation (relative speed, relative distance, etc.). The premise of this kind of behavior prediction is that drivers can always effectively perceive the driving environment information and rationally analyze the traffic safety situation when making driving behavior decisions. In this case, the driving behavior prediction model is likely to make wrong predictions because it ignores the driver’s current mental state. For example, when a driver is angry, he or she is very likely to perceive the interaction between vehicles unreasonably (taking other drivers’ normal overtaking behavior as a provocation, etc.) and then adopt aggressive driving behavior. If the behavior prediction model still follows the conventional approach, it will inevitably produce results that are inconsistent with the actual situation. In contrast, if the driver’s current state of anger is fully considered in the driving behavior prediction, so that the model recognizes that the probability of the driver adopting aggressive driving behavior is greatly increased, the accuracy of behavior prediction will be greatly improved. It should be pointed out that the premise of constructing a driving behavior prediction model that accounts for emotion is that the on-board intelligence system can accurately recognize the emotional state of the driver.

Finally, yet importantly, it is necessary to point out some limitations of this study. The first concerns the hypotheses about driving intention and emotion. In constructing the intention prediction model based on the hidden Markov model, we assumed that the transfer of driving intention is a Markov chain. In the induction and measurement of participants’ emotions, we assumed that the PAD accurately depicted human emotions, and we deemed the eight emotions in this study to have mapping relationships with the three dimensions of PAD. In addition, as an exploratory study, only a single car-following driving scene was selected, so the results of this paper may not be applicable to more complex driving environments.

Supplementary Materials

The following are available online at https://www.mdpi.com/1660-4601/17/19/6962/s1, Table S1: Probabilities of observation states in different driving intention states, Table S2: Probabilities of observation states in different HMMs, Table S3: F-test for probability of driving intentions under different time windows, Table S4: F-test for transition probability of driving intentions under different time windows, Table S5: F-test for probability of observation states under different time windows, Table S6: F-test results for the transition probability of driving intention under different emotions, Table S7: Probability order of eight emotions in different states of intention transfer, Table S8: q-test results for transition probability of driving intention.

Author Contributions

Both of the authors contributed substantially to the conception and design of the study. Funding acquisition, X.W.; Methodology, Y.L. and X.W.; Project administration, X.W.; Supervision, X.W.; Data curation, Y.L.; Formal analysis, Y.L.; Investigation, Y.L.; Software, Y.L. and X.W.; Validation, X.W.; Writing—original draft, Y.L.; Writing—review and editing, Y.L. and X.W. All authors have read and agreed to the published version of the manuscript.

Funding

This study was funded by the Ministry of Science and Technology of China, grant number 2018YFB1601500; the Qingdao Top Talent Program of Entrepreneurship and Innovation, grant number 19-3-2-11-zhc; the Joint Laboratory for Internet of Vehicles, Ministry of Education-China Mobile Communications Corporation, grant number ICV-KF2018-03; the National Natural Science Foundation of China, grant numbers 61074140 and 71901134.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Song, K.; Kim, H.; Seo, J.; Kim, K.; Chung, W. Clinical outcomes according to Modic changes of lumbar sprain due to traffic accidents following treatment with Korean Medicine. Eur. J. Integr. Med. 2019, 31, 100981.

- Onozuka, D.; Nishimura, K.; Hagihara, A. Full moon and traffic accident-related emergency ambulance transport: A nationwide case-crossover study. Sci. Total Environ. 2018, 644, 801–805.

- Mao, X.H.; Yuan, C.W.; Gan, J.H.; Zhang, S.Q. Risk factors affecting traffic accidents at urban weaving sections: Evidence from China. Int. J. Environ. Res. Public Health 2019, 16, 1542.

- Huh, K.; Seo, C.; Kim, J.; Hong, D. An experimental investigation of a CW/CA system for automobiles using hardware-in-the-loop simulations. In Proceedings of the American Control Conference, San Diego, CA, USA, 2–4 June 1999; pp. 724–728.

- Bromberg, S.; Oron-Gilad, T.; Ronen, A.; Borowsky, A.; Parmet, Y. The perception of pedestrians from the perspective of elderly experienced and experienced drivers. Accid. Anal. Prev. 2012, 44, 48–55.

- Zahid, M.; Chen, Y.; Khan, S.; Jamal, A.; Ijaz, M.; Ahmed, T. Predicting risky and aggressive driving behavior among taxi drivers: Do spatio-temporal attributes matter? Int. J. Environ. Res. Public Health 2020, 17, 3937.

- Ma, Y.; Tang, K.; Chen, S.; Aemal, J.K.; Pan, Y. On-line aggressive driving identification based on in-vehicle kinematic parameters under naturalistic driving conditions. Transp. Res. C Emer. 2020, 114, 554–571.

- Corneliu, E.H.; Cornelia, M.; Simona, A.P. Workplace stress as predictor of risky driving behavior among taxi drivers. The role of job-related affective state and taxi driving experience. Saf. Sci. 2019, 111, 264–270.

- Raffaella, N.; Massimiliano, P.; Alessia, B.; Anna, M.G.; Laura, P. The specific role of spatial orientation skills in predicting driving behavior. Transp. Res. F Traffic 2020, 71, 259–271.

- Bogdan, S.R.; Măirean, C.; Havârneanu, C.E. A meta-analysis of the association between anger and aggressive driving. Transp. Res. F Traffic 2016, 42, 350–364.

- Sullman, M.; Paxion, J.; Stephens, A. Gender roles, sex and the expression of driving anger. Accid. Anal. Prev. 2017, 106, 23–30.

- Ford, J.B.; Merchant, A. Nostalgia drives donations: The power of charitable appeals based on emotions and intentions. J. Advert. Res. 2010, 50, 450–459.

- Jeon, M.; Walker, B.N.; Yim, J.B. Effects of specific emotions on subjective judgment, driving performance, and perceived workload. Transp. Res. F-Traffic 2014, 24, 197–209.

- Sullman, M.; Gras, M.; Cunill, M.; Planes, M.; Font, M. Driving anger in Spain. Pers. Individ. Differ. 2007, 42, 701–713.

- Scott-Parker, B. Emotions, behaviour, and the adolescent driver: A literature review. Transp. Res. F Traffic 2017, 50, 1–37.

- Folkes, V. Recent attribution research in consumer behavior: A review and new directions. J. Consum. Res. 1988, 14, 548–565.

- Peter, J.; Olson, J.; Grunert, K. Consumer Behavior and Marketing Strategy; McGraw-Hill: London, UK, 1999.

- Wang, X.; Liu, Y.; Wang, J.; Zhang, J. Study on influencing factors selection of driver’s propensity. Transp. Res. D Transp. Environ. 2019, 66, 35–48.

- Ma, L.; Chen, B.; Wang, X.; Zhu, Z.; Wang, R.; Qiu, X. The analysis on the desired speed in social force model using a data driven approach. Physica A 2019, 525, 894–911.

- Gipps, P. A behavioural car-following model for computer simulation. Transp. Res. B Meth. 1981, 15, 105–111.

- Yang, D.; Zhu, L.; Liu, Y.; Wu, D.; Ran, B. A novel car-following control model combining machine learning and kinematics models for automated vehicles. IEEE Trans. Intell. Transp. 2018, 20, 1991–2000.

- Abbink, D.; Mulder, M.; Van, d.H.; Mulder, M.; Boer, E. Measuring neuromuscular control dynamics during car following with continuous haptic feedback. IEEE Trans. Syst. Man Cybern. Part B 2011, 41, 1239–1249.

- Chen, D.; Laval, J.; Zheng, Z.; Ahn, S. A behavioral car-following model that captures traffic oscillations. Transp. Res. B Methodol. 2012, 46, 744–761.

- Young, S.J. The HTK Hidden Markov Model Toolkit: Design and Philosophy; CUED Technical Report F_INFENG/TR152; Engineering Department, Cambridge University: London, UK, 1993; Volume 2, pp. 2–44.

- Li, J.; He, Q.L.; Zhou, H.; Guan, Y.L.; Dai, W. Modeling driver behavior near intersections in hidden markov model. Int. J. Environ. Res. Public Health 2016, 13, 1265.

- Han, Y.; Cui, S.; Geng, Z.; Chu, C.; Chen, K.; Wang, Y. Food quality and safety risk assessment using a novel HMM method based on GRA. Food Control 2019, 105, 180–189.

- Ghassempour, S.; Girosi, F.; Maeder, A. Clustering multivariate time series using Hidden Markov Models. Int. J. Environ. Res. Public Health 2014, 11, 2741–2763.

- Wang, X.; Liu, Y.; Wang, F.; Wang, J.; Liu, L.; Wang, J. Feature extraction and dynamic identification of drivers’ emotions. Transp. Res. F Traffic 2019, 62, 175–191.

- Liu, Y.; Liu, Q.; Nie, Z. Reducing the number of elements in the synthesis of shaped-beam patterns by the forward-backward matrix pencil method. IEEE Trans. Antennas Propag. 2009, 58, 604–608.

- Lindberg, D.V.; More, H. Inference of the transition matrix in convolved hidden markov models and the generalized Baum–Welch algorithm. IEEE Trans. Geosci. Remote 2015, 53, 6443–6456.

- Gui, W. Probability and Statistics; Tsinghua University Press: Beijing, China, 2018.

- Ren, Y.; Xu, H.; Li, X.; Wang, W.; Xu, J. Research on module of driver’s steering reaction in simulative tail-crashing environment. J. Transp. Syst. Eng. Inf. Technol. 2007, 7, 94–99.

- Xing, Y.; Lv, C.; Wang, H.; Cao, D.; Velenis, E. An ensemble deep learning approach for driver lane change intention inference. Transp. Res. C Emerg. Technol. 2020, 115, 102615.

- Liu, S.; Zheng, K.; Zhao, L.; Fan, P. A driving intention prediction method based on hidden Markov model for autonomous driving. Comput. Commun. 2020, 157, 143–149.

- Xu, G.X.; Li, W.F.; Liu, J. A social emotion classification approach using multi-model fusion. Future Gener. Comput. Syst. 2020, 102, 347–356.

- Feng, X.; Wei, Y.J.; Pan, X.L.; Qiu, L.H.; Ma, Y.M. Academic emotion classification and recognition method for large-scale online learning environment—Based on A-CNN and LSTM-ATT deep learning pipeline method. Int. J. Environ. Res. Public Health 2020, 17, 1941.

- Bänziger, T.; Tran, V.; Scherer, K.R. The Geneva emotion wheel: A tool for the verbal report of emotional reactions. In Proceedings of the Conference of the International Society of Research on Emotion, Bari, Italy, 1 January 2005; Volume 149, pp. 271–294.

- Roidl, E.; Frehse, B.; Oehl, M.; Höger, R. The emotional spectrum in traffic situations: Results of two online-studies. Transp. Res. F Traffic 2013, 18, 168–188.

- Roidl, E.; Frehse, B.; Höger, R. Emotional states of drivers and the impact on speed, acceleration and traffic violations—A simulator study. Accid. Anal. Prev. 2014, 70, 282–292.

- Mcginley, J.J.; Friedman, B.H. Autonomic specificity in emotion: The induction method matters. Int. J. Psychophysiol. 2017, 118, 48–57.

- Gross, J.J.; Levenson, R.W. Emotion elicitation using films. Cogn. Emot. 2007, 9, 9–28.

- Rainville, P.; Bechara, A.; Naqvi, N.; Damasio, A.R. Basic emotions are associated with distinct patterns of cardiorespiratory activity. Int. J. Psychophysiol. 2006, 61, 5–18.

- Choi, K.; Kim, J.; Kwon, O.; Kim, J.; Ryu, Y.; Park, J. Is heart rate variability (HRV) an adequate tool for evaluating human emotions?—A focus on the use of the International Affective Picture System (IAPS). Psychiatry Res. 2017, 251, 192–196.

- Fiorito, E.R.; Simons, R.F. Emotional imagery and physical anhedonia. Psychophysiology 1994, 31, 513–521.

- Zhang, J.; Huang, X.; Yang, L.; Nie, L. Bridge the semantic gap between pop music acoustic feature and emotion: Build an interpretable model. Neurocomputing 2016, 208, 333–341.

- Clark, D.M. On the induction of depressed mood in the laboratory: Evaluation and comparison of the Velten and musical procedures. Accid. Anal. Prev. 1983, 5, 27–49.

- Martin, M. On the induction of mood. Clin. Psychol. Rev. 1990, 10, 669–697.

- Cai, H.; Lin, Y. Modeling of operators’ emotion and task performance in a virtual driving environment. Int. J. Hum. Comput. Stud. 2011, 69, 571–586.

- Zhou, P.; Hossain, M.; Zong, X.; Muhammad, G.; Amin, S.; Humar, I. Multi-task emotion communication system with dynamic resource allocations. Inf. Fusion 2019, 52, 167–174.

- Liang, Z.; Oba, S.; Ishii, S. An unsupervised EEG decoding system for human emotion recognition. Neural Netw. 2019, 116, 257–268.

- Katsigiannis, S.; Ramzan, N. DREAMER: A database for emotion recognition through EEG and ECG signals from wireless low-cost off-the-shelf devices. IEEE J. Biomed. Health 2017, 22, 98–107.

- Goshvarpour, A.; Abbasi, A.; Goshvarpour, A. An accurate emotion recognition system using ECG and GSR signals and matching pursuit method. Biomed. J. 2017, 40, 355–368.

- Philippot, P.; Chapelle, G.; Blairy, S. Respiratory feedback in the generation of emotion. Cogn. Emot. 2002, 16, 605–627.

- Shojaeilangari, S.; Yau, W.Y.; Nandakumar, K.; Li, J.; Teoh, E.K. Robust representation and recognition of facial emotions using extreme sparse learning. IEEE Trans. Image Process. 2015, 24, 2140–2152.

- Wu, C.H.; Liang, W.B. Emotion recognition of affective speech based on multiple classifiers using acoustic-prosodic information and semantic labels. IEEE Trans. Affect. Comput. 2010, 2, 10–21.

- Mehrabian, A.; Epstein, N. A measure of emotional empathy. J. Res. Pers. 1972, 40, 525–543.

- Russell, J.A.; Mehrabian, A. Evidence for a three-factor theory of emotions. J. Res. Pers. 1977, 11, 273–294.

- Mehrabian, A. Pleasure-arousal-dominance: A general framework for describing and measuring individual differences in Temperament. Curr. Psychol. 1996, 14, 261–292.

- Mehrabian, A. Comparison of the PAD and PANAS as models for describing emotions and for differentiating anxiety from depression. J. Psychopathol. Behav. Assess. 1997, 19, 331–357.

- Li, X.; Zhou, H.; Song, S.; Ran, T.; Fu, X. The reliability and validity of the Chinese version of abbreviated PAD emotion scales. Lect. Notes Comput. Sci. 2005, 3784, 513–518.

- Liu, Y.; Tao, L.; Fu, X. The analysis of PAD emotional state model based on emotion pictures. J. Image Graph. 2009, 14, 753–758.

- Ma, Y.; Hu, B.; Chan, C.; Qi, S.; Fan, L. Distractions intervention strategies for in-vehicle secondary tasks: An on-road test assessment of driving task demand based on real-time traffic environment. Transp. Res. D Transp. Environ. 2018, 63, 747–754.

- Muhrer, E.; Vollrath, M. The effect of visual and cognitive distraction on driver’s anticipation in a simulated car following scenario. Transp. Res. F Traffic 2011, 14, 555–566.

- Saifuzzaman, M.; Zheng, Z. Incorporating human-factors in car-following models: A review of recent developments and research needs. Transp. Res. C Emerg. 2014, 48, 379–403.

- Brackstone, M.; Mcdonald, M. Car-following: A historical review. Transp. Res. F Traffic 1999, 2, 181–196.

- Wan, P.; Wu, C.; Lin, Y.; Ma, X. On-road experimental study on driving anger identification model based on physiological features by ROC curve analysis. IET Intell. Transp. Syst. 2017, 11, 290–298.

- Bumgarner, D.; Webb, J.; Dula, C. Forgiveness and adverse driving outcomes within the past five years: Driving anger, driving anger expression, and aggressive driving behaviors as mediators. Transp. Res. F Traffic 2016, 42, 317–331.

- Reisenzein, R. Exploring the strength of association between the components of emotion syndromes: The case of surprise. Cogn. Emot. 2000, 14, 1–38.

- Gerten, J.; Topolinski, S. Shades of surprise: Assessing surprise as a function of degree of deviance and expectation constraints. Cognition 2019, 192, 103986.

- Taylor, J.; Deane, F.; Podd, J. Driving fear and driving skills: Comparison between fearful and control samples using standardised on-road assessment. Behav. Res. Ther. 2007, 45, 805–818.

- Fikretoglu, D.; Brunet, A.; Best, S.R.; Metzler, T.J.; Delucchi, K.; Weiss, D.S.; Marmar, C. Peritraumatic fear, helplessness and horror, and peritraumatic dissociation: Do physical and cognitive symptoms of panic mediate the relationship between the two? Pers. Individ. Differ. 2007, 45, 39–47.

- Lu, J.; Xie, X.; Zhang, R. Focusing on appraisals: How and why anger and fear influence driving risk perception. J. Saf. Res. 2013, 45, 65–73.

- Schmidt-Daffy, M. Fear and anxiety while driving: Differential impact of task demands, speed and motivation. Transp. Res. F Traffic 2013, 16, 14–28.

- Dula, C.S.; Adams, C.L.; Miesner, M.T.; Leonard, R.L. Examining relationships between anxiety and dangerous driving. Accid. Anal. Prev. 2010, 42, 2050–2056.

- Huang, Y.; Lin, P.; Wang, J. The influence of bus and taxi drivers’ public self-consciousness and social anxiety on aberrant driving behaviors. Accid. Anal. Prev. 2018, 117, 145–153.

- Clapp, J.; Olsen, S.; Danoff, B.; Hagewood, J.; Hickling, E.; Hwang, V.; Beck, J. Factors contributing to anxious driving behavior: The role of stress history and accident severity. J. Anxiety Disord. 2011, 25, 592–598.

- Liu, X.H.; Peng, D.H.; Qiu, M.H.; Shen, T.; Zhang, C.; Shi, F. Altered brain network modules induce helplessness in major depressive disorder. J. Affect. Disords. 2014, 168, 21–29.

- Dolinski, D.; Odachowska, E. Beware when danger on the road has passed. The state of relief impairs a driver’s ability to avoid accidents. Accid. Anal. Prev. 2018, 115, 73–78.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).