10 September 2025

Peer Review Week 2025: "Rethinking Peer Review in the AI Era"

Peer Review Week (PRW) is a global event to celebrate the vital role peer review plays in ensuring the quality and integrity of research. Each year, individuals and institutions come together through events, webinars, and social media campaigns to highlight the importance of rigorous and effective review in scholarly communication.

This year’s theme, "Rethinking Peer Review in the AI Era" (15–19 September 2025), invites the academic community to reflect on how artificial intelligence is reshaping research and publishing, and what that means for the future of peer review.

As part of this year’s theme, we spoke with Roohi Ghosh, a Co-Chair of Peer Review Week 2025. In our interview, she shares her insights on how AI is already affecting the peer review process and what changes we might expect in the near future. Roohi emphasizes the need for transparent guidelines on AI use for both authors and reviewers, and reflects on the irreplaceable human element at the heart of scientific discovery.

Responsible and Thoughtful AI Use

We believe AI holds great potential to enhance the peer review experience for editors, reviewers, and authors. From checking images for manipulation and streamlining workflows before peer review to assisting with grammar and quality checks, AI can help reviewers focus more directly on research quality. However, these benefits also come with challenges, and they require clear guidelines on acceptable use.

At MDPI, we have started to responsibly incorporate AI tools into our editorial workflows. MDPI’s Reviewer Finder helps our internal editors identify expert reviewers efficiently by matching reviewers’ publication histories against a submitted paper’s title and abstract. This improves both the precision and the speed of reviewer selection. The tool also broadens our reviewer pool by identifying qualified researchers beyond our existing database, which helps reduce reviewer fatigue and distribute the workload more evenly across the community.

Read our blog article: AI Tools to Support Innovating Peer Review

Protecting Scientific Integrity

Advanced AI tools are increasingly capable of producing well-written manuscripts that may lack original insight or genuine scholarly contribution. In more serious cases, bad actors, including paper mills, are using AI to generate fraudulent submissions that can bypass traditional checks. That’s why publishers are adopting proactive measures and technologies to ensure the integrity and credibility of scholarly publishing.

Initiatives such as the STM Integrity Hub have working groups focused on common challenges, including the detection of image manipulation. Image manipulation poses a serious risk to research integrity, and detecting it by eye can be difficult and time-consuming. To improve detection, we have implemented Proofig AI, an AI-powered image-proofing tool that assists in reviewing scientific publications to safeguard research integrity.

Read our blog article: Proofig AI: Ensuring Research Integrity with AI-Powered Image Proofing

There are also legal and ethical aspects to consider. Peer review depends on confidentiality, and unpublished manuscripts must not be processed using proprietary large language models (LLMs). Like many publishers, MDPI prohibits the use of such tools in the peer review process to prevent breaches of confidentiality and to protect the integrity of the review system.

Read our blog article: Ethical Responsibilities of Peer Reviewers

On the author side, we require authors of MDPI articles to disclose whether AI tools were used in preparing their manuscripts and, if so, to specify how. This transparency is essential to help editors and reviewers assess submissions fairly.

Researcher Feedback for Trust in Peer Review

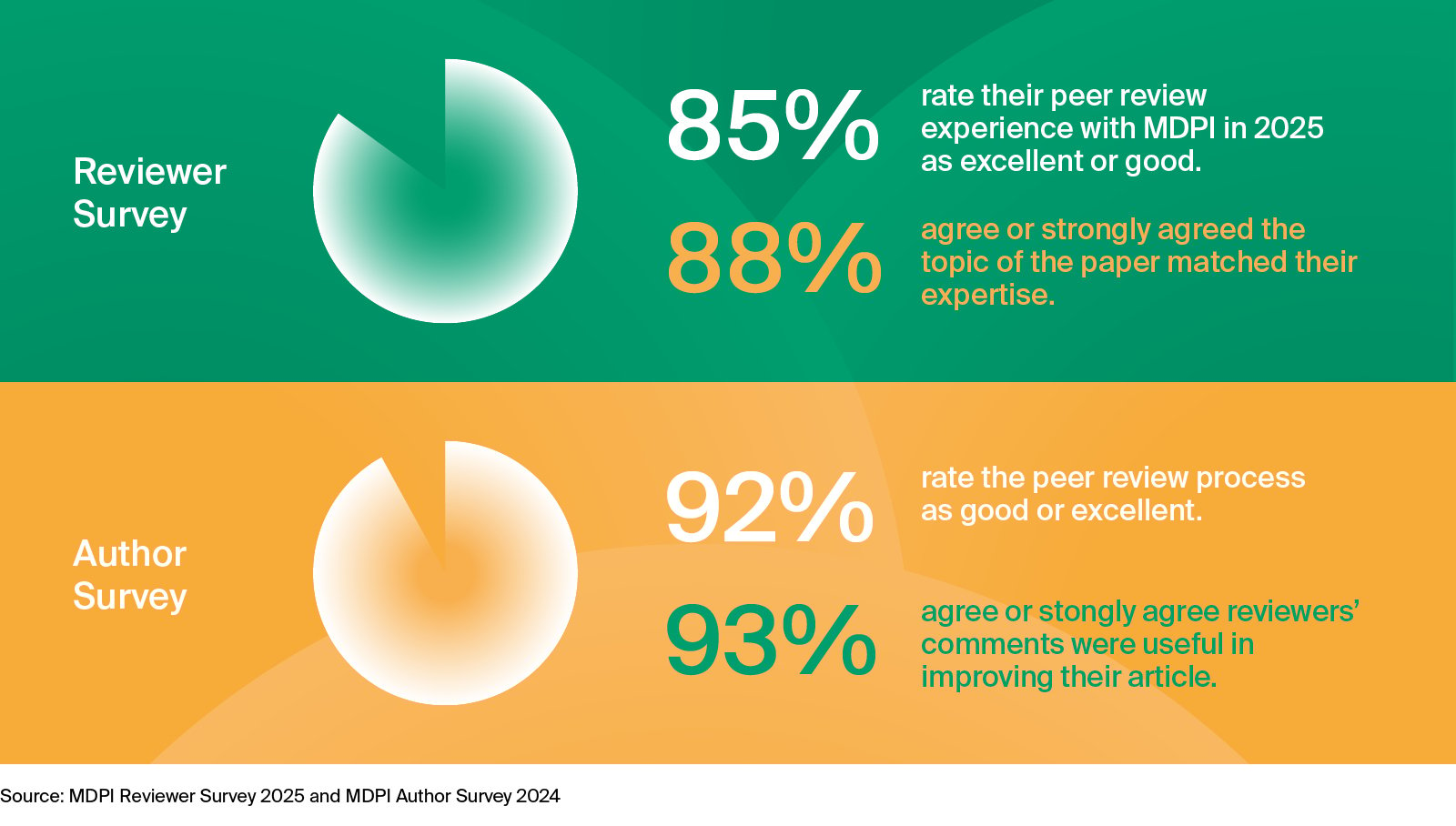

MDPI ensures a reliable and robust editorial process by combining detailed manual checks by professionally trained staff with tools that flag inconsistencies for our editors. AI tools can ease workloads and boost efficiency to some extent, but human involvement remains essential for maintaining a high-quality process. Each research field follows its own established standards, which makes expert judgment and contextual understanding as important as ever. Survey insights help highlight what authors and reviewers prioritize and how they assess MDPI’s peer review and editorial practices.

JAMS Webinar: "Can AI Be an Editor’s Best Ally? Rethinking Peer Review Today"

As part of Peer Review Week 2025, MDPI and JAMS are pleased to host a webinar on the evolving role of AI in academic publishing and peer review. The session will highlight the opportunities AI offers for improving efficiency and quality, while also examining the challenges and unresolved questions it raises for the scientific community. Participants are encouraged to join the conversation by submitting their questions during the event.

The Role of AI in Science: Aid or Collaborator?

As large language models (LLMs) grow more capable, their role in science itself is being reconsidered, not only in publishing but in the very production of knowledge. Two perspectives are emerging: AI as a powerful aid to researchers today, and AI as a potential collaborator in the future.

The first view reflects present reality: AI as a powerful tool that assists researchers without replacing human expertise. LLMs can summarize vast literatures, generate code, and suggest directions during brainstorming, yet they remain subordinate to human judgment. As Roohi Ghosh observed in our interview on AI use in peer review: "The human is irreplaceable at least for now, and I feel even as AI advances and tools become mature, the expertise, the empathy, and the ability to draw connections and interpret—these are purely human characteristics that AI cannot replace."

From this perspective, scientific insight and creativity remain distinctly human traits. Notably, LLM output is rarely reproducible: the same or similar prompts issued at different times can produce substantially different results, which underscores the limited reliability of LLMs as sources of scientific knowledge. For now, the "AI as an Aid" view remains dominant.

The second, more speculative view looks ahead to a time when AI could act as a true collaborator in science, potentially even deserving recognition as a co-author. Advocates suggest that if LLMs one day achieve artificial general intelligence (AGI), they could contribute by proposing novel hypotheses, uncovering hidden patterns, or even generating new theoretical frameworks, contributions that could rival those of human researchers.

This debate is no longer theoretical. It carries important implications for peer review, authorship, and academic integrity. If AI moves from assisting to co-creating, questions of authorship, responsibility, and intellectual credit will become increasingly complex. As AI advances, our understanding of what it means to "do science" must evolve with it, including who, or what, can be said to participate in that process and hold responsibility for it.

Innovation to Sustain Integrity

As academic publishing navigates the AI era, maintaining accountability and trust will demand innovation as well as caution. Ethical frameworks, technical infrastructure, and review practices must evolve in step with the technology. Peer Review Week 2025 is a timely opportunity to confront these questions directly and to chart a future in which innovation strengthens, rather than undermines, scientific integrity.