Abstract

This paper proposes new ANOVA-based approximations of functions and emulators of high-dimensional models using either available derivatives or local stochastic evaluations of such models. Our approach makes use of sensitivity indices to design adequate structures of emulators. For high-dimensional models with available derivatives, our derivative-based emulators reach dimension-free mean squared errors (MSEs) and the parametric rate of convergence (i.e., $O(N^{-1})$ for a sample of size $N$). This approach is extended to cope with every model (without available derivatives) by deriving global emulators that account for the local properties of models or simulators. Such generic emulators enjoy dimension-free biases, parametric rates of convergence, and MSEs that depend on the dimensionality. Dimension-free MSEs are obtained for high-dimensional models with particular distributions of the inputs. Our emulators are also competitive in dealing with different distributions of the input variables and in selecting inputs and interactions. Simulations show the efficiency of our approach.

Keywords:

derivative-based ANOVA; emulators; high-dimensional models; independent input variables; optimal estimators

PACS:

62J10; 62L20; 62Fxx; 49Q12; 26D10

1. Introduction

Derivatives are sometimes available in modeling either according to the nature of observations of the phenomena of interest ([1,2] and the references therein) or low-cost evaluations of exact derivatives for some classes of PDE/ODE-based models, thanks to adjoint methods [3,4,5,6,7,8]. Some models are themselves defined via their rates of change with respect to their inputs; implicit functions (defined via their derivatives) are instances. Additionally, and particularly for complex models or simulators, efficient estimators of gradients and second-order derivatives using stochastic approximations are provided in refs. [9,10,11,12,13]. Being able to reconstruct functions using such derivatives is worth investigating [14], as having a practical, fast-evaluated model that links stochastic parameters and/or stochastic initial conditions to the output of interest of PDE/ODE models remains a challenge due to numerous uncertain inputs [15].

Moreover, the first-order derivatives of models are used to quickly select non-relevant input variables of simulators or functions, leading to effective screening measures. Efficient variance-based screening methods rely on the upper bounds of generalized sensitivity indices, including Sobol’s indices (see refs. [16,17,18,19,20,21,22] for independent inputs and [23] for non-independent variables).

For high-dimensional simulators, dimension reductions via screening input variables are often performed before building their emulators using Gaussian processes (kriging models) [24,25,26,27,28], polynomial chaos expansions [29,30,31], SS-ANOVA [32,33], or other machine learning approaches [34,35]. Indeed, such emulators rely on nonparametric or semi-parametric regressions and struggle to reconstruct simulators for a moderate to large number of inputs (e.g., [35]). Often, nonparametric rates of convergence are achieved by such emulators (see ref. [33] for SS-ANOVA and [36,37,38] for polynomial chaos expansions). Regarding the stability and accuracy of polynomial chaos expansions, the number of model runs needed is first estimated at the square of the dimension of the basis used [36] and then reduced at that dimension up to a logarithm factor [37,38]. Note that for d inputs, such a dimension is about for the tensor-product basis, including, at most, the monomial of degree w.

For models with a moderate to large number of relevant inputs, Bayesian additive regression trees have been used for building emulators of such models using only the input and output observations (see ref. [35] and the references therein). Such approaches rely, to some extent, on rule-ensemble learning approaches by constructing homogeneous or local base learners (see ref. [34] and references therein). Combining the model outputs and model derivatives can help to build emulators that account for both local and global properties of simulators. For instance, including derivatives in the Gaussian processes (considered in ref. [2]) allows for improving such emulators. Emulators based on Taylor series (see ref. [14]) combine both the model outputs and derivative outputs with interesting theoretical results, such as dimension-free rates of convergence. However, concrete constructions of such emulators are not provided in that paper.

Note that the aforementioned emulators are part of the class of global approximations of functions. While global emulators can be used to approximate models at any point in the entire domain, local or point-based emulators require building different emulators for the same model. Conceptually, the main issues related to such practical emulators are the truncation errors and the biases due to epistemic uncertainty. Indeed, none of the above emulators rely on exact and finite expansions of functions in general. Thus, additional assumptions about the order of such errors are necessary to derive the rates of convergence of emulators. For instance, decreasing eigenvalues of kernels are assumed in kernel-based learning approaches (see, e.g., ref. [39]).

So far, the recent derivative-based (Db) ANOVA provides exact expansions of smooth functions with different types of distributions of inputs using the model outputs together with the first-order and second-order derivatives, up to the $d$th-order cross-partial derivatives [22]. It was used in ref. [13] to derive the plug-in and crude emulators of models by replacing the unknown derivatives with their estimates. However, convergence analysis of such emulators is not known, and derivative-free methods are much more convenient for applications in which the computations of cross-partial derivatives are too expensive or impossible [9,10,11].

Therefore, for high-dimensional simulators, whether or not all the inputs are important, it is worth investigating the development of their emulators based directly on available derivatives or derivative-free methods. The contribution of this paper is threefold:

- We designed adequate structures of emulators based on information gathered from global and derivative-based sensitivity analysis, such as unbiased orders of truncations and the selection of relevant ANOVA components (inputs and interactions);

- We constructed derivative-based or derivative-free global emulators that are easy to fit and compute and can cope with every distribution of continuous input variables;

- We examined the convergence of our emulators, with a particular focus on (i) the dimension-free upper bounds of the biases and MSEs; (ii) the parametric rates of convergence (i.e., $O(N^{-1})$); and (iii) the number of model runs needed to obtain the stability and accuracy of our estimations.

In this paper, flexible emulators of complex models or simulators and approximations of functions are proposed using exact and finite expansions of functions involving cross-partial derivatives, known as Db-ANOVA. Section 2 first deals with the general formulation of Db-ANOVA using different continuous distribution functions and emulators of models with available derivatives. Such emulators reach the parametric rates of convergence, and their MSEs do not depend on dimensionality (i.e., d). Second, adequate structures of emulators are investigated using Sobol’s indices and their upper bounds, as the components of such emulators are interpretable as the main effects and interactions of a given order. The orders of unbiased truncations have been derived, leading to the possibility of selecting the ANOVA components that were included in our emulators.

For non-highly smooth models and for high-dimensional simulators for which the computations of derivatives are too expensive or impossible, new and efficient emulators of simulators are derived in Section 3, including their statistical properties. First, we provide such emulators under the assumption of quasi-uniform distributions of inputs so as to (i) obtain practical conditions for using such emulators and (ii) derive emulators that enjoy dimension-free MSEs for particular distributions of inputs. Second, such an assumption is removed to cope with every distribution of inputs. The proposed emulators have dimension-free biases and reach the parametric rate of convergence as well. Numerical illustrations (see Section 4) and an application to a heat diffusion PDE model (see Section 5) have been considered to show the efficiency of our estimators, and we conclude this work in Section 6.

General Notation

For an integer, , denote with a random vector of d independent and continuous variables with marginal cumulative distribution functions (CDFs), , and probability density functions (PDFs), .

For a non-empty subset, , stands for its cardinality, and . Additionally, denotes a subset of such variables, and the partition holds. Finally, we use for the Euclidean norm, for the expectation operator, and for the variance operator.

2. New Insight into Derivative-Based ANOVA and Emulators

Given an integer, , and an open set, , consider a weak partial differentiable function, [40,41], and a subset, , with . Denote with the weak cross-partial derivatives of each component of f with respect to each with and , e.g., the Hilbert space of functions. Consider the following Hilbert–Sobolev space:

In what follows, assume the following:

Assumption A1.

is a random vector of independent and continuous variables, supported on an open domain, Ω.

Assumption A2.

is a deterministic function with .

2.1. Full Derivative-Based Emulators

Under Assumption 2, every sufficiently smooth function, , admits the derivative-based ANOVA (Db-ANOVA) expansion (see refs. [13,22]), that is, ,

where is a random vector of independent variables, having the CDFs and the PDFs . By evaluating at the random vector, , and taking , yields the unique and orthogonal Db-ANOVA decomposition of , that is,

When analytical cross-partial derivatives are available, or the derivative datasets are observed (see refs. [1,2] and the references therein), we are able to derive emulators of complex models that are time-demanding, bearing in mind the method of moments. Indeed, given a sample, , from , and the associated sample of the (analytical or observed) derivatives’ outputs, that is,

the consistent (full) emulator or estimator of f at any sample point, , of is

with and for every real by convention. We can check that is an unbiased estimator and that it reaches the parametric mean squared error (MSE) rate of convergence, that is, . This rate is dimension-free, provided that .
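To make the method-of-moments construction concrete, the following is a minimal Python sketch and not the implementation used in this paper. It assumes independent inputs that are uniform on $(0, 1)$, so that $F_k(t) = t$ and $\rho_k(t) = 1$, and it assumes that each non-constant term of the expansion has the form $\mathbb{E}\left[ D^{|u|} f(X') \prod_{k \in u} \frac{F_k(X'_k) - 1_{X'_k \geq x_k}}{\rho_k(X'_k)} \right]$, an identity that can be checked by integration by parts. The names `db_anova_emulator`, `toy_model`, and `toy_partials` are hypothetical.

```python
import itertools
import random


def db_anova_emulator(f, cross_partials, sample, x):
    """Method-of-moments estimate of f(x) from model and derivative outputs.

    Assumes independent U(0, 1) inputs, so F_k(t) = t and rho_k(t) = 1.
    cross_partials maps each non-empty tuple of input indices u to a function
    returning the cross-partial derivative of f with respect to x_u.
    Components missing from cross_partials are simply left out.
    """
    d = len(x)
    n = len(sample)
    estimate = sum(f(xi) for xi in sample) / n  # estimate of E[f(X')]
    for order in range(1, d + 1):
        for u in itertools.combinations(range(d), order):
            deriv = cross_partials.get(u)
            if deriv is None:
                continue
            total = 0.0
            for xi in sample:
                weight = 1.0
                for k in u:
                    # (F_k(X'_k) - 1{X'_k >= x_k}) / rho_k(X'_k) for U(0, 1)
                    weight *= xi[k] - (1.0 if xi[k] >= x[k] else 0.0)
                total += deriv(xi) * weight
            estimate += total / n
    return estimate


def toy_model(x):
    """Illustrative smooth model with one interaction (an assumption)."""
    return x[0] * x[1] + x[0] ** 2


# Analytical cross-partial derivatives of toy_model.
toy_partials = {
    (0,): lambda x: x[1] + 2.0 * x[0],
    (1,): lambda x: x[0],
    (0, 1): lambda x: 1.0,
}

rng = random.Random(0)
design = [(rng.random(), rng.random()) for _ in range(20000)]
point = (0.3, 0.8)
prediction = db_anova_emulator(toy_model, toy_partials, design, point)
print(prediction, toy_model(point))
```

Because every term is a sample mean, the prediction is unbiased for this toy model, and its error shrinks as the number of sample points grows.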

For complex models without cross-partial derivatives, optimal estimators of such derivatives (i.e., ) have been used to construct the plug-in consistent emulator of f [13]. Such an emulator is given by

While the estimator, , provided in ref. [13] has a dimension-free upper bound for the bias and reaches the parametric rate of convergence, its MSE increases with , showing the necessity of using the number of model runs, , to expect a significant reduction in the MSE for higher-order cross-partial derivatives.

2.2. Adequate Structures of Emulators and Truncations

In high-dimensional settings, it is common to expect a reduction in the dimensionality before building emulators. The use of truncations is common practice in the polynomial approximation of functions [29,32,33,37] and in ANOVA-practicing communities [17,42,43,44], leading to truncation errors. When using Db-ANOVA, controlling such errors is made possible according to the information gathered from global sensitivity analysis [13,17]. Indeed, the variances of the terms in Db-ANOVA expansions of functions are exactly the main-effect and interaction Sobol’s indices, up to a normalizing constant, when . Thus, we are able to avoid non-relevant terms in our emulators according to the values of Sobol’s indices, suggesting that adequate truncations will not have any impact on the MSEs and the above parametric rate of convergence. For the sake of simplicity, is considered in what follows.

Definition 1.

Consider an integer, , and the full Db-ANOVA given by (2). The truncated Db-ANOVA of f (in the superposition sense [42,43,44,45]) of order is given by

While is an approximation of f in general, the equality holds for some functions. Given an integer, , consider the space of functions:

We can see that is a class of functions having, at most, -order interactions. Moreover, contains the class of functions given by

It is clear, then, that we have when .

Definition 2.

A truncation of f given by is said to be an unbiased one whenever .

Thus, is the class of unbiased truncations of f up to the order of .
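As a rough illustration of what a truncation in the superposition sense saves, the short Python sketch below counts the non-constant ANOVA components that involve at most a given number of inputs. The function name `n_components` and the values of the dimension and of the order are hypothetical choices made for this example only.

```python
from math import comb


def n_components(d, r):
    """Number of non-constant ANOVA components with interaction order <= r."""
    return sum(comb(d, k) for k in range(1, r + 1))


main_only = n_components(10, 1)    # main effects only
up_to_pairs = n_components(10, 2)  # main effects and pairwise interactions
full = n_components(10, 10)        # full expansion, i.e., 2**10 - 1 components
print(main_only, up_to_pairs, full)
```

For ten inputs, keeping the interactions of order two at most retains 55 components out of 1023, which is why identifying an unbiased truncation order matters in practice.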

Formally, based on available derivatives, we are able to derive unbiased truncations for some classes of functions. Consider the following Db expressions of Sobol’s indices of the input variables and their upper bounds (see ref. [22]):

and

Note that the computations of and are straightforward, using given first-order derivatives (see ref. [13]), whereas those of require using (i) nonparametric methods for given derivatives or (ii) derivatives of specific input values. Using such indices, adequate structures of can be constructed. For instance, it is known that when , f is an additive function of the form , with being a real-valued function. Thus, a truncation (in the superposition sense) of order is an unbiased truncation. Other values of are given in Proposition 1.

Proposition 1.

Consider the main and total indices given by with . Then,

- implies that using leads to unbiased truncations;

- implies that using leads to unbiased truncations;

- If there exists an integer, α, such that and , then leads to unbiased truncations.

Proof.

See Appendix A. □

In general, if , then leads to unbiased truncations. Often, low-order derivatives are available or can be efficiently computed using fewer model runs, leading to truncated emulators in the superposition sense. It is worth noting that our emulators still enjoy the above parametric rate of convergence for unbiased truncations. In the presence of truncation errors, it is usually difficult to derive the rates of convergence without additional assumptions about the order of such errors.

In addition to such truncations in the superposition sense, screening measures allow for quickly identifying non-relevant inputs (i.e., ), leading to possible dimension reductions. For instance, we can see the following:

- or implies removing all cross-partial derivatives or interactions involving ;

- and suggest removing the first-order terms, corresponding to ;

- or, equivalently, and implies keeping only and .

For non-highly smooth functions and the models for which the computations of derivatives are too expensive or impossible, derivative-free methods combined with unbiased truncations remain an interesting framework.

3. Derivative-Free Emulators of Models

This section covers the development of the model emulators, even when all the inputs are important according to screening measures.

3.1. Stochastic Surrogates of Functions Using Db-ANOVA

Consider integers ; with ; , and denote with a d-dimensional random vector of independent variables satisfying: ,

Any random variable that is symmetric about zero is an instance of s. Additionally, denote . For concise reporting of the results, elementary symmetric polynomials (ESPs) are used (see, e.g., [46,47]).

Definition 3.

Given with and , the ESP of is defined as follows:

Note that , . In addition, given , define

Without loss of generality, we are going to focus on the modified output, that is, . Based on the above framework, Theorem 1 provides a new approximation of every function or surrogate of a deterministic simulator.
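Returning to Definition 3, the elementary symmetric polynomial of a given degree is the sum of all products of that many distinct arguments, with the degree-zero polynomial equal to one by convention. The Python sketch below evaluates it with the usual one-pass recurrence. It illustrates the standard definition only, and the function name `elementary_symmetric` is hypothetical.

```python
def elementary_symmetric(values, k):
    """Return e_k(values): the sum of all products of k distinct entries."""
    # e[j] holds e_j of the entries processed so far; e_0 = 1 by convention.
    e = [1] + [0] * k
    for v in values:
        # Update from high to low degree so each entry is used at most once.
        for j in range(k, 0, -1):
            e[j] += v * e[j - 1]
    return e[k]


print([elementary_symmetric([1, 2, 3], k) for k in range(4)])
```

The recurrence costs a number of operations proportional to the number of arguments times the degree, instead of enumerating every subset.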

Theorem 1.

Consider distinct s. If f is smooth enough and Assumption 1 holds, then there exists and real coefficients such that

Proof.

See Appendix B. □

The setting with provides an approximation of order . Equivalently, the same order is obtained using the constraints:

with and . In the same sense, taking with yields an approximation of order . Such constraints implicitly define the coefficients , and they rely on the (generalized) Vandermonde matrices. Distinct values of s (i.e., ) ensure the existence and uniqueness of such coefficients, as such matrices are invertible (see refs. [47,48]).
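To illustrate why distinct nodes give unique coefficients, the Python sketch below solves a small Vandermonde system exactly by Gauss-Jordan elimination on rational numbers. The right-hand side used here (zero for every power except the first) is an illustrative assumption and not necessarily one of the constraints of this paper. The function name `solve_vandermonde` and the nodes are hypothetical.

```python
from fractions import Fraction


def solve_vandermonde(nodes, rhs):
    """Solve sum_l c_l * nodes[l]**r = rhs[r] for r = 0, ..., L-1 exactly."""
    size = len(nodes)
    if len(set(nodes)) != size:
        raise ValueError("nodes must be distinct for a unique solution")
    # Augmented matrix [V | rhs] with V[r][l] = nodes[l]**r.
    a = [[Fraction(b) ** r for b in nodes] + [Fraction(rhs[r])]
         for r in range(size)]
    for col in range(size):
        # A non-zero pivot always exists because V is invertible.
        pivot = next(i for i in range(col, size) if a[i][col] != 0)
        a[col], a[pivot] = a[pivot], a[col]
        a[col] = [v / a[col][col] for v in a[col]]
        for i in range(size):
            if i != col and a[i][col] != 0:
                factor = a[i][col]
                a[i] = [vi - factor * vc for vi, vc in zip(a[i], a[col])]
    return [a[i][size] for i in range(size)]


coeffs = solve_vandermonde([-1, 0, 1], [0, 1, 0])
print(coeffs)
```

With the nodes −1, 0, and 1, the solution is the familiar central-difference weights, and repeated nodes are rejected because the matrix would be singular.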

To improve the approximations of lower-order terms (i.e., lower values of p) in Equation (4), we are given an integer with and consider the following constraints:

where stands for the largest integer that is less than . The above choice of coefficients requires , and is the minimum number of model runs used for deriving surrogates of functions. Such coefficients are more suitable for truncated surrogates. For instance, the truncated surrogate of order (in the superposition sense) is given by

Note that in this case, one must require , and we will see that is the best choice to improve the MSEs.

Likewise, when with is the vector of the most influential input variables according to variance-based sensitivity analysis (see Section 2.2), the following truncated surrogate should be considered:

Based on Equation (4), the method of moments allows for deriving the emulator of any simulator or the estimator of any function. To that end, we are given two independent samples of size N, that is, from and from . The full and consistent emulator is given by

The derivations of the truncated emulators (i.e., and ) are straightforward. All these emulators rely on model runs with the possibility . This property is useful for high-dimensional simulators.

3.2. Statistical Properties of Our Emulators

While the emulator does not rely on the model derivatives, structural and technical assumptions about f are needed to derive the biases of this emulator, such as the Hölder space of functions. Given , denote , , and . Given , the Hölder space of -smooth functions is given by ,

with , and is a (weak) cross-partial derivative.

Moreover, given and CDFs s, define the following space of functions:

We can see that contains constants; is a class of functions having, at most, -order of interactions, and is a class of all smooth functions, as . Lemma 1 provides the links between both spaces. To that end, consider for all with being the infinity norm.

Assumption A3.

for any .

Assumption 3 aims to cover the class of quasi-uniform distributions and other distributions for which the event occurs with a high probability. It is the case for most unbounded distributions.

Lemma 1.

Consider , and assume that with and Assumptions 1 and 3 hold. Then, there exists such that , with

Proof.

See Appendix C. □

Note that Lemma 1 also provides the upper bound of the remaining terms when approximating using the truncated function

When , is equivalent to

3.2.1. Biases of the Proposed Emulators

To derive the bias of (i.e., an estimator of ) in Theorem 2 using the aforementioned spaces of functions, denote with a d-dimensional random vector of independent variables that are centered about zero and standardized (i.e., , ), and denotes the set of such random vectors. For any and , define

Theorem 2.

Assume with and Assumptions 1 and 3 hold. Then, we have

Moreover, if with and , then

Proof.

See Appendix D. □

Using the fact , the results provided in Theorem 2 have simple upper bounds (see Corollary 1). To provide such results, consider

Corollary 1.

Assume with and Assumptions 1 and 3 hold. If , then

Moreover, if with and , then

Proof.

See Appendix E. □

Using the above results, the bias of the full emulator of is straightforward when taking and knowing that . Moreover, Corollary 2 provides the bias of such an emulator under different structural assumptions about f so as to cope with many functions. To that end, define

Corollary 2.

Let ; and . Assume with and Assumptions 1 and 3 hold. If , then

Moreover, if with and , then

Proof.

See Appendix F. □

In view of Corollaries 1 and 2, Equation (12) can lead to a dimension-free upper bound of the bias. Indeed, using the uniform bandwidth and

we can see that the upper bound of the bias is . Furthermore, it is worth noting that for any function .

3.2.2. Mean Squared Errors

We start this section with the variance of the proposed emulators, followed by their mean squared errors and different rates of convergence. For the variance, define

Theorem 3.

Consider the coefficients given by Equation (5), and assume with and Assumptions 1–3 hold. Then,

Moreover, if , and , then

Proof.

See Appendix G. □

It turns out that the upper bounds of the variance in Theorem 3 do not depend on the uniform bandwidths when , leading to the derivations of the parametric MSEs of and . To that end, consider the upper bound of the above variance, that is,

Remark 1.

Based on the expression of , the random variable or having the smallest value of fourth moments or kurtosis should be used. Under the additional condition , we can check that (see Appendix G)

with .

In what follows, we are going to use for the MSE of and

for the expected or integrated MSE (IMSEs) of .

Corollary 3.

Given (5), , assume with ; and Assumptions 1–3 hold. Then, the MSE and IMSE share the same upper bound, given as follows:

Moreover, if with , then the IMSE is given by

Proof.

See Appendix H. □

The presence of in Corollary 3 is going to decrease the rates of convergence of our estimators without additional assumptions about . Corollary 4 starts providing such conditions and the associated MSEs and IMSEs.

Corollary 4.

Under the conditions of Corollary 3, assume that . Then, the upper bound of the IMSE and MSE is given by

Moreover, if with and , then this bound becomes

Proof.

Using Corollary 1, the results are straightforward. □

Based on the upper bounds of Corollary 4, interesting choices of or on one hand and and h on the other hand help in obtaining the parametric rates of convergence due to the fact that does not depend on h.

Corollary 5.

Let , . Assume with ; and Assumptions 1–3 hold. If with and , then the upper bound of MSE and IMSE is

provided that .

Moreover, if with the real , then

Proof.

See Appendix I. □

It is worth noting that the parametric rates of convergence are reached for any function that belongs to (see Corollary 5). Moreover, taking leads to the dimension-free MSEs, which hold for particular distributions of the inputs given by

For uniform distributions, , we must have . Obviously, such conditions are a bit strong, as a few distributions are covered. In the same sense, using as an emulator of for any sample point, , of requires choosing such that its support contains that of , . Thus, Assumption 3, given by , implicitly depends on the distribution of . For instance, given the bounded support of , that is, , we must have , with being the support of , limiting our ability to deploy as a dimension-free, global emulator for some distributions of inputs.

Nevertheless, the assumption is always satisfied for the finite dimensionality, d, if we are only interested in estimating for a given point , leading to local emulators. Indeed, letting depend on the point at which f must be evaluated allows for enjoying the parametric rate of convergence and dimension-free MSEs for sample points falling in a neighborhood of . An example of such a choice is

However, different emulators are going to be built in order to estimate for any value, , of . Constructions of balls of given nodes and the radius are an interesting perspective.

Remark 2.

When , in-depth structural assumptions about f that should allow for enjoying the above MSEs concern the truncation error, resulting from keeping only all the interactions or cross-partial derivatives with . One way to handle it consists of choosing such that the residual bias is less than (i.e., ), thanks to sensitivity indices.

While truncations are sometimes necessary in higher dimensions, it is interesting to have the rates of convergence without any truncation to cover lower or moderate dimensional functions, for instance.

Corollary 6.

Let ; , and . If with , , and Assumptions 1–3 hold, then the upper bound of MSE and IMSE is

Moreover, if with and , then

Proof.

See Appendix J. □

In the case of the full emulator of , remark that

Based on Corollary 6, different rates of convergence can be obtained depending on the support of the input variables via the choice of .

Corollary 7.

with when .

Let and . Assume with ; ; with and Assumptions 1–3 hold. Then, the (MSE and IMSE) rates of convergence are given as follows:

- (i) If , then .

- (ii) If with , then

Proof.

It is straightforward bearing in mind Corollaries 5 and 6. □

Again, the assumptions in Points (i)–(ii) are satisfied for fewer distributions, but they are always satisfied if we are only interested in estimating for a given point , leading to building different local emulators.

3.2.3. Mean Squared Errors for Every Distribution of Inputs

In this section, we are going to remove the assumption about the quasi-uniform distribution of inputs (Assumption 3) so as to cover any probability distribution of inputs. Note that Assumption 3 is used to derive and . Such an assumption can be avoided by using the following inequalities:

with

and

with

Using such inequalities, the following results are straightforward keeping in mind Corollaries 5–7.

Corollary 8.

Let and . Assume with ; and Assumptions 1 and 2 hold. If with and , then we have

provided that .

Corollary 9.

Let and . Assume with ; ; with ; and Assumptions 1 and 2 hold. Then,

Regarding the choice of , from the expression

an interesting choice of must satisfy in order to reduce the value of . The following proposition gives interesting choices of s. Recall that and are supported on , respectively, with .

Proposition 2.

Consider a PDF, , supported on and . The distribution, , defined as a mixture of and , that is,

allows for reducing , provided that , .

Proof.

We can check that . □

In what follows, we are going to consider (i.e., ) and with being the uniform distribution.

4. Illustrations

In this section, we deploy our derivative-free emulators to approximate analytical functions. For the setting of different parameters needed, we rely on the results of Corollary 5. Indeed, we use with ; for the identified . For each function, we use runs of the model to construct our emulators, corresponding to with the following:

- ;

- if L is odd and ; otherwise, .

Then, we predict the output for sample points, that is, . Finally, sample values are generated using Sobol’s sequence, and we compare the predictions associated with the initial distributions, that is, , with a mixture distribution of (i.e., ) (see Proposition 2).

4.1. Test Functions

4.1.1. Ishigami’s Function ()

The Ishigami function is given by

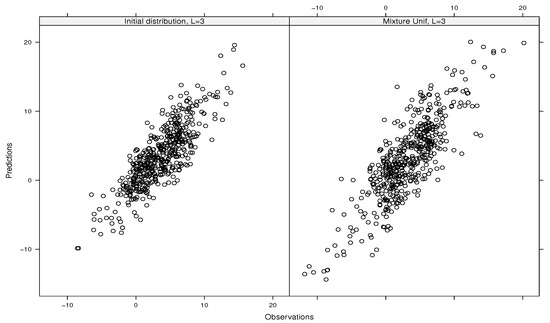

with , . The sensitivity indices are , , , , , and . Thus, we have because (see Proposition 1). Moreover, we are going to remove (in our emulator) the main effect term corresponding to , as . Figure 1 depicts the predictions versus observations (i.e., simulated outputs) for 500 sample points. We can see that our predictions are in line with the simulated values of the output for both distributions used.

Figure 1.

Predictions versus simulated outputs (observations) for Ishigami’s function.
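For readers who wish to reproduce the structure discussed above, the Python sketch below evaluates the Ishigami function and its closed-form Sobol’s indices. It assumes the commonly used parametrization $f(x) = \sin(x_1) + a \sin^2(x_2) + b\, x_3^4 \sin(x_1)$ with $a = 7$, $b = 0.1$, and independent inputs that are uniform on $(-\pi, \pi)$. These values and the function names are assumptions of this example.

```python
import math


def ishigami(x, a=7.0, b=0.1):
    """Ishigami function in its common parametrization (assumed here)."""
    x1, x2, x3 = x
    return math.sin(x1) + a * math.sin(x2) ** 2 + b * x3 ** 4 * math.sin(x1)


def ishigami_indices(a=7.0, b=0.1):
    """Closed-form main and total Sobol indices for inputs U(-pi, pi)."""
    pi4, pi8 = math.pi ** 4, math.pi ** 8
    v1 = 0.5 * (1.0 + b * pi4 / 5.0) ** 2           # main effect of x1
    v2 = a ** 2 / 8.0                               # main effect of x2
    v13 = b ** 2 * pi8 * (1.0 / 18.0 - 1.0 / 50.0)  # x1-x3 interaction
    total_var = v1 + v2 + v13                       # x3 has no main effect
    main = [v1 / total_var, v2 / total_var, 0.0]
    total = [(v1 + v13) / total_var, v2 / total_var, v13 / total_var]
    return main, total


main_idx, total_idx = ishigami_indices()
print(main_idx, total_idx)
```

Under these assumptions, the third input has a zero main index but a non-zero total index, so it acts only through its interaction with the first input, in line with removing its main-effect term from the emulator.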

4.1.2. Sobol’s g-Function ()

The g-function [49] is defined as follows:

with . This function is differentiable almost everywhere and has different properties according to the values of [17]. Indeed, the following applies:

- If (i.e., type A), we have , , , , and , . We have , as . Moreover, we have , suggesting that we should only include and in our emulator;

- If (i.e., type B), we have and , leading to ;

- If (i.e., type C), we have and , , and we can check that and that all the inputs are important. Thus, we have to include a lot of ANOVA components in our emulator with small effective effects since the variance of that function is fixed. More information is needed to design the structure of this function better.
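The cases above can be explored numerically with the following Python sketch, which assumes the usual form of the g-function, $f(x) = \prod_{j=1}^{d} \frac{|4 x_j - 2| + a_j}{1 + a_j}$, with independent inputs that are uniform on $(0, 1)$ and the classical closed-form indices. The coefficient values defining types A to C are not reproduced here. The coefficients in the usage lines and the function names are hypothetical.

```python
def g_function(x, a):
    """Sobol g-function for inputs in (0, 1) and non-negative coefficients a."""
    prod = 1.0
    for xj, aj in zip(x, a):
        prod *= (abs(4.0 * xj - 2.0) + aj) / (1.0 + aj)
    return prod


def g_function_indices(a):
    """Closed-form main and total Sobol indices of the g-function."""
    partial = [1.0 / (3.0 * (1.0 + aj) ** 2) for aj in a]
    prod_all = 1.0
    for vj in partial:
        prod_all *= 1.0 + vj
    total_var = prod_all - 1.0
    main = [vj / total_var for vj in partial]
    # Total index of input j: V_j * prod_{k != j} (1 + V_k) / Var(f).
    total = [vj * prod_all / (1.0 + vj) / total_var for vj in partial]
    return main, total


main_g, total_g = g_function_indices([0.0, 0.0])
print(main_g, total_g)
print(g_function([0.5, 0.5], [0.0, 0.0]))
```

Small coefficients make an input influential and large ones make it nearly inert, and the function vanishes when an input with a zero coefficient equals one half, which corresponds to the small and null outputs that are harder to predict.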

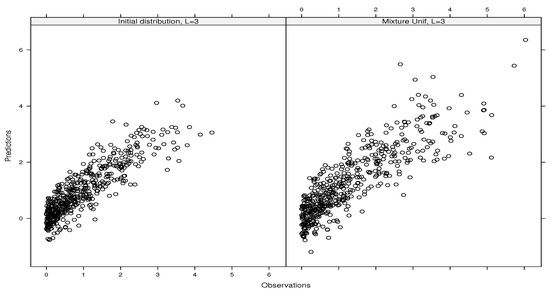

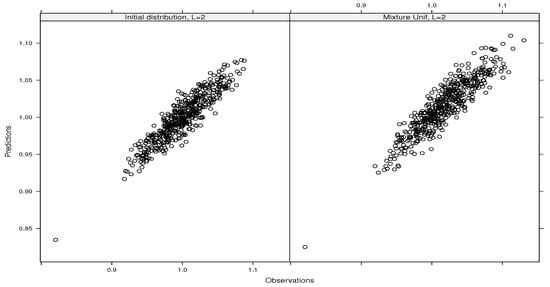

Figure 2 and Figure 3 depict the predictions versus the simulated outputs (i.e., observations) of the g-function of type A and type B, respectively, for 500 sample points. Even though we obtain quasi-perfect predictions in the case of the g-function of type B, those of type A face some difficulties in predicting small and null values.

Figure 2.

Predictions versus simulated outputs (observations) for the g-function of type A.

Figure 3.

Predictions versus simulated outputs (observations) for the g-function of type B.

5. Application: Heat Diffusion Models with Stochastic Initial Conditions

We deployed our emulators to approximate a high-dimensional model defined by the one-dimensional (1-D) diffusion PDE with stochastic initial conditions, that is,

where represents the diffusion coefficient. The quantity of interest (QoI) is given by . The spatial discretization consists of subdividing the spatial domain, , into d equally-sized cells, leading to d initial conditions or inputs, that is, with . We assume that the inputs are independent, and . A time step of was considered, starting from 0 up to .

It is known [12] that the exact gradient can be computed as follows: , where stands for the adjoint model of f evaluated at . Note that only one evaluation of such a function is needed to obtain the gradient of the QoI. The adjoint model is given by (see ref. [12])

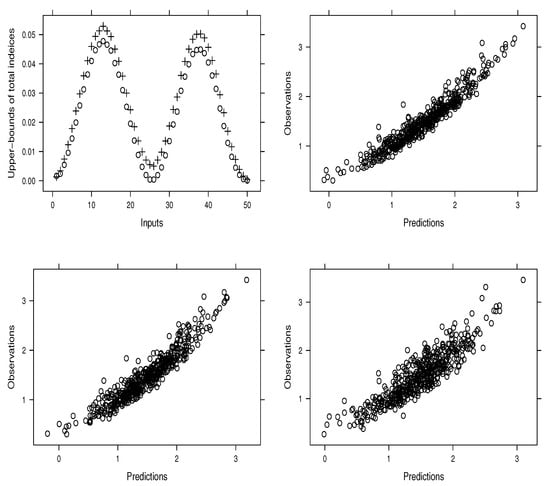
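The idea that a single adjoint evaluation yields the whole gradient can be sketched on a discretized problem. The Python example below is an illustration under loudly stated assumptions and is not the model of this section: it uses an explicit Euler finite-difference scheme, zero Dirichlet boundary conditions, and a hypothetical quadratic quantity of interest. All function names and parameter values are hypothetical.

```python
def step(u, r):
    """One explicit Euler step of u_t = D u_xx with zero Dirichlet boundaries.

    r = D * dt / dx**2 should not exceed 0.5 for stability.
    """
    d = len(u)
    new = []
    for i in range(d):
        left = u[i - 1] if i > 0 else 0.0
        right = u[i + 1] if i < d - 1 else 0.0
        new.append(u[i] + r * (left - 2.0 * u[i] + right))
    return new


def qoi(u0, r, n_steps, weight):
    """Hypothetical QoI: 0.5 * weight * sum over steps and cells of u**2."""
    u, total = list(u0), 0.0
    for _ in range(n_steps):
        u = step(u, r)
        total += 0.5 * weight * sum(v * v for v in u)
    return total


def qoi_gradient(u0, r, n_steps, weight):
    """Gradient of qoi in u0 from one forward sweep and one adjoint sweep."""
    states, u = [], list(u0)
    for _ in range(n_steps):
        u = step(u, r)
        states.append(u)
    lam = [0.0] * len(u0)
    for u_n in reversed(states):
        # The step operator is symmetric, so the adjoint step reuses `step`.
        lam = step([l + weight * v for l, v in zip(lam, u_n)], r)
    return lam


initial = [0.1, 0.5, -0.3, 0.8, 0.2]
gradient = qoi_gradient(initial, 0.25, 20, 0.01)
print(gradient)
```

The backward sweep costs about as much as one forward run, whatever the number of initial conditions, whereas finite differences would need two model runs per input.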

The values of the hyper-parameters derived in this paper (considered at the beginning of Section 4) were used to compute the results below. Using the exact values of the gradient (i.e., ), we computed the main indices (s) and the upper bounds of the total indices (s) (see Figure 4, top-left panel). It appears that the upper bounds are almost equal to the main indices, showing the absence of interactions. This information is confirmed by the fact that , leading to . Based on this information, Figure 4 (top-right panel) depicts the predictions versus the observations (simulated outputs) using the derivative-based emulator with all the first-order partial derivatives. In the same sense, Figure 4 (bottom-left panel) depicts the observations versus predictions by including only the ANOVA components for which , that is, 37 components. Both results are close together and are in line with the observations. Finally, Figure 4 (bottom-right panel) shows the observations versus predictions for derivative-free emulators using only the components for which . It turns out that our emulators provide reliable estimations. As expected (see MSEs), the derivative-based emulator using exact values of derivatives performs better.

Figure 4.

Main indices (∘) and upper bounds of total indices (+) of inputs (top-left panel); observations versus predictions using either derivative-based emulators (see the top-right panel when including all components and the bottom-left panel for all other cases) or derivative-free emulators (bottom-right panel).

6. Conclusions

In this paper, we have proposed simple, practical, and sound emulators of simulators or estimators of functions using either the available derivatives and distribution functions of inputs or derivative-free methods, such as stochastic approximations. Since our emulators or estimators rely exactly on Db-ANOVA, Sobol’s indices and their upper bounds were used to derive the appropriate structures of such emulators so as to reduce epistemic uncertainty. The derivative-based and derivative-free emulators reach the parametric rate of convergence (i.e., $O(N^{-1})$) and have dimension-free biases. Moreover, the former emulators enjoy dimension-free MSEs when all cross-partial derivatives are available and, therefore, can cope with higher-dimensional models. However, the MSEs of the derivative-free estimators depend on dimensionality, and we have shown that the stability and accuracy of such emulators require about model runs for full emulators and about runs for unbiased, truncated emulators of order .

To be able to deploy our emulators in practice, we have provided the best known values for the hyper-parameters needed. The numerical results have revealed that our emulators provide efficient predictions of models once the adequate structures of such models are used. While such results are promising, further improvements will be investigated in future work by (i) considering distributions of s that may help in reducing the dimensionality in MSEs, (ii) taking into account the discrepancy errors by using the output observations (rather than their mean only), and (iii) considering local emulators. It is also worth investigating adaptations of such methods in the presence of empirical data.

Funding

This research received no external funding, except the APC funded by stats-MDPI.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No empirical datasets are used in this paper. All simulated data are already in the paper.

Acknowledgments

We would like to thank the three reviewers for their suggestions and comments that have helped improve our manuscript.

Conflicts of Interest

The author has no conflicts of interest to declare regarding the content of this paper.

Appendix A. Derivations of Unbiased Truncations (Proposition 1)

Keeping in mind the Sobol indices, it is known that , which comes down to for functions of the form . Thus, we have

Taking the difference yields

which implies that .

Additionally, taking yields

which implies .

Appendix B. Proof of Theorem 1

Denote , , , , and . The Taylor expansion of about of order is given by

For any with the cardinality ; using for the indicator function and lead to . Additionally, using implies that , .

First, by evaluating the above expansion at and taking the expectation with respect to , can be written as

We can see that iff . Equation implies if ; otherwise, . The last quantity is equivalent to when with , and it leads to , , which also implies that .

When , we have ; and , by independence. Thus, we have

using the change of variable . At this point, the setting and gives the approximation of of order for all .

Second, taking the expectation with respect to and the sum over , we obtain the result, that is,

bearing in mind Equation (1).

Third, we can increase this order up to by using the constraints to eliminate some higher-order terms. Thus, the order is reached. Other constraints can lead to the order with .
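The idea of imposing linear constraints on the weights so that higher-order Taylor terms cancel can be illustrated in a simplified, deterministic, one-dimensional setting. The sketch below is a generic illustration and does not reproduce the specific constraints of Theorem 1: it assumes weights C_ℓ and nodes β_ℓ satisfying Σ_ℓ C_ℓ β_ℓ^r = 1{r = 1} for r = 0, …, L − 1, which leads to a Vandermonde system (see refs. [47,48] for dedicated inversion algorithms). The function names `cancellation_weights` and `directional_derivative`, the nodes, and the step size are hypothetical.

```python
import numpy as np

def cancellation_weights(betas):
    """Solve sum_l C_l * beta_l**r = 1{r == 1} for r = 0, ..., L-1."""
    betas = np.asarray(betas, dtype=float)
    L = betas.size
    # A[r, l] = beta_l**r: transpose of the increasing Vandermonde matrix.
    A = np.vander(betas, N=L, increasing=True).T
    rhs = np.zeros(L)
    rhs[1] = 1.0          # keep the first-order term, cancel all the others
    return np.linalg.solve(A, rhs)

def directional_derivative(f, x, h, betas):
    """Approximate f'(x) by sum_l C_l * f(x + h * beta_l) / h."""
    C = cancellation_weights(betas)
    return sum(c * f(x + h * b) for c, b in zip(C, betas)) / h

betas = [-2.0, -1.0, 1.0, 2.0]
C = cancellation_weights(betas)
estimate = directional_derivative(np.exp, 0.0, 0.1, betas)

print("weights:", C)
print("estimate of exp'(0):", estimate)
```

With these four symmetric nodes, the solution coincides with the classical fourth-order central difference weights (1/12, −2/3, 2/3, −1/12), so the Taylor terms of orders 0, 2, 3, and 4 vanish and only the first derivative and a remainder of higher order are left.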

Appendix C. Proof of Lemma 1

Recall that . By using the definition of the absolute value and the fact that , we can check that . Additionally, using the following inequality (see Lemma 1 in ref. [10])

for a given , we can write

and the result holds.

Appendix D. Proof of Theorem 2

Recall that with , . Using

the bias becomes

Note that the quantity has been investigated in ref. [13]. To make use of such results in our context given by Equation (5), let . Thus, we have

When with and , then

Using Equation (A1); and the fact that , we can write

where . The results hold by using Lemma 1.

Appendix E. Proof of Corollary 1

First, keeping in mind Theorem 2, we can see that the smallest value of is , which is reached when . Thus, the bias verifies

Second, using , we can write

because and .

Finally, if with and , the following bias is used to derive the result:

Appendix F. Proof of Corollary 2

For , we can see that , and the order of approximation in Corollary 1 becomes or because and . When , the smallest order is obtained thanks to ref. [13], Corollary 2.

Appendix G. Proof of Theorem 3

For the variance of our emulator, we can write

Using , and keeping in mind Equation (5), we can write

because . Keeping in mind that and using , the variance becomes

because , and when ,

For the second result, by expanding and knowing that , we can see that . Additionally, using and the fact that and , we have

Finally, when and knowing that , we can write

Additionally, note that .

Appendix H. Proof of Corollary 3

Since and , we have . The first result is obvious using the variance of the emulator provided in Theorem 3 and the bias from Corollary 1.

The second result is due to the fact that when , the terms in the Db expansion of f are -orthogonal.

Appendix I. Proof of Corollary 5

As , then and . Thus, the first result holds.

For the second result, taking with yields

Appendix J. Proof of Corollary 6

Thus, the results hold using Corollaries 2 and 3.

References

- Morris, M.D.; Mitchell, T.J.; Ylvisaker, D. Bayesian Design and Analysis of Computer Experiments: Use of Derivatives in Surface Prediction. Technometrics 1993, 35, 243–255.

- Solak, E.; Murray-Smith, R.; Leithead, W.; Leith, D.; Rasmussen, C. Derivative observations in Gaussian process models of dynamic systems. Adv. Neural Inf. Process. Syst. 2002, 15, 1–8.

- Le Dimet, F.X.; Talagrand, O. Variational algorithms for analysis and assimilation of meteorological observations: Theoretical aspects. Tellus A Dyn. Meteorol. Oceanogr. 1986, 38, 97–110.

- Le Dimet, F.X.; Ngodock, H.E.; Luong, B.; Verron, J. Sensitivity analysis in variational data assimilation. J. Meteorol. Soc. Jpn. 1997, 75, 245–255.

- Cacuci, D.G. Sensitivity and Uncertainty Analysis—Theory; Chapman & Hall/CRC: Boca Raton, FL, USA, 2005.

- Gunzburger, M.D. Perspectives in Flow Control and Optimization; SIAM: Philadelphia, PA, USA, 2003.

- Borzi, A.; Schulz, V. Computational Optimization of Systems Governed by Partial Differential Equations; SIAM: Philadelphia, PA, USA, 2012.

- Ghanem, R.; Higdon, D.; Owhadi, H. Handbook of Uncertainty Quantification; Springer International Publishing: Berlin/Heidelberg, Germany, 2017.

- Agarwal, A.; Dekel, O.; Xiao, L. Optimal Algorithms for Online Convex Optimization with Multi-Point Bandit Feedback. In Proceedings of the COLT, Haifa, Israel, 27–29 June 2010; Citeseer: Princeton, NJ, USA, 2010; pp. 28–40.

- Bach, F.; Perchet, V. Highly-Smooth Zero-th Order Online Optimization. In Proceedings of the 29th Annual Conference on Learning Theory, New York, NY, USA, 23–26 June 2016; Volume 49, pp. 257–283.

- Akhavan, A.; Pontil, M.; Tsybakov, A.B. Exploiting higher order smoothness in derivative-free optimization and continuous bandits. In Proceedings of the 34th International Conference on Neural Information Processing Systems (NIPS ’20), Vancouver, BC, Canada, 6–12 December 2020.

- Lamboni, M. Optimal and Efficient Approximations of Gradients of Functions with Nonindependent Variables. Axioms 2024, 13, 426.

- Lamboni, M. Optimal Estimators of Cross-Partial Derivatives and Surrogates of Functions. Stats 2024, 7, 1–22.

- Chkifa, A.; Cohen, A.; DeVore, R.; Schwab, C. Sparse adaptive Taylor approximation algorithms for parametric and stochastic elliptic PDEs. ESAIM Math. Model. Numer. Anal. 2013, 47, 253–280.

- Patil, P.; Babaee, H. Reduced-Order Modeling with Time-Dependent Bases for PDEs with Stochastic Boundary Conditions. SIAM/ASA J. Uncertain. Quantif. 2023, 11, 727–756.

- Sobol, I.M.; Kucherenko, S. Derivative based global sensitivity measures and the link with global sensitivity indices. Math. Comput. Simul. 2009, 79, 3009–3017.

- Kucherenko, S.; Rodriguez-Fernandez, M.; Pantelides, C.; Shah, N. Monte Carlo evaluation of derivative-based global sensitivity measures. Reliab. Eng. Syst. Saf. 2009, 94, 1135–1148.

- Lamboni, M.; Iooss, B.; Popelin, A.L.; Gamboa, F. Derivative-based global sensitivity measures: General links with Sobol’ indices and numerical tests. Math. Comput. Simul. 2013, 87, 45–54.

- Roustant, O.; Fruth, J.; Iooss, B.; Kuhnt, S. Crossed-derivative based sensitivity measures for interaction screening. Math. Comput. Simul. 2014, 105, 105–118.

- Roustant, O.; Barthe, F.; Iooss, B. Poincaré inequalities on intervals—Application to sensitivity analysis. Electron. J. Statist. 2017, 11, 3081–3119.

- Lamboni, M. Derivative-based integral equalities and inequality: A proxy-measure for sensitivity analysis. Math. Comput. Simul. 2021, 179, 137–161.

- Lamboni, M. Weak derivative-based expansion of functions: ANOVA and some inequalities. Math. Comput. Simul. 2022, 194, 691–718.

- Lamboni, M.; Kucherenko, S. Multivariate sensitivity analysis and derivative-based global sensitivity measures with dependent variables. Reliab. Eng. Syst. Saf. 2021, 212, 107519.

- Krige, D.G. A Statistical Approach to Some Basic Mine Valuation Problems on the Witwatersrand. J. Chem. Metall. Soc. S. Afr. 1951, 52, 119–139.

- Currin, C.; Mitchell, T.; Morris, M.; Ylvisaker, D. Bayesian Prediction of Deterministic Functions, with Applications to the Design and Analysis of Computer Experiments. J. Am. Stat. Assoc. 1991, 86, 953–963.

- Oakley, J.E.; O’Hagan, A. Probabilistic sensitivity analysis of complex models: A Bayesian approach. J. R. Stat. Soc. Ser. B Stat. Methodol. 2004, 66, 751–769.

- Conti, S.; O’Hagan, A. Bayesian emulation of complex multi-output and dynamic computer models. J. Stat. Plan. Inference 2010, 140, 640–651.

- Kennedy, M.C.; O’Hagan, A. Bayesian calibration of computer models. J. R. Stat. Soc. Ser. B Stat. Methodol. 2001, 63, 425–464.

- Xiu, D.; Karniadakis, G. The Wiener-Askey polynomial chaos for stochastic differential equations. SIAM J. Sci. Comput. 2002, 24, 619–644.

- Ghanem, R.G.; Spanos, P.D. Stochastic Finite Elements: A Spectral Approach; Springer: New York, NY, USA, 1991; pp. 1–214.

- Sudret, B. Global sensitivity analysis using polynomial chaos expansions. Reliab. Eng. Syst. Saf. 2008, 93, 964–979.

- Wahba, G. Spline Models for Observational Data; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 1990.

- Wong, R.K.W.; Storlie, C.B.; Lee, T.C.M. A Frequentist Approach to Computer Model Calibration. J. R. Stat. Soc. Ser. B Stat. Methodol. 2016, 79, 635–648.

- Friedman, J.H.; Popescu, B.E. Predictive learning via rule ensembles. Ann. Appl. Stat. 2008, 2, 916–954.

- Horiguchi, A.; Pratola, M.T. Estimating Shapley Effects in Big-Data Emulation and Regression Settings using Bayesian Additive Regression Trees. arXiv 2024, arXiv:2304.03809.

- Migliorati, G.; Nobile, F.; Schwerin, E.; Tempone, R. Analysis of Discrete L2 Projection on Polynomial Spaces with Random Evaluations. Found. Comput. Math. 2014, 14, 419–456.

- Hampton, J.; Doostan, A. Coherence motivated sampling and convergence analysis of least squares polynomial Chaos regression. Comput. Methods Appl. Mech. Eng. 2015, 290, 73–97.

- Cohen, A.; Davenport, M.A.; Leviatan, D. On the stability and accuracy of least squares approximations. arXiv 2018, arXiv:math.NA/1111.4422.

- Tsybakov, A. Introduction to Nonparametric Estimation; Springer: New York, NY, USA, 2009.

- Zemanian, A. Distribution Theory and Transform Analysis: An Introduction to Generalized Functions, with Applications; Dover Books on Advanced Mathematics; Dover Publications: Garden City, NY, USA, 1987.

- Strichartz, R. A Guide to Distribution Theory and Fourier Transforms; Studies in advanced mathematics; CRC Press: Boca Raton, FL, USA, 1994.

- Caflisch, R.E.; Morokoff, W.J.; Owen, A.B. Valuation of mortgage-backed securities using Brownian bridges to reduce effective dimension. J. Comput. Financ. 1997, 1, 27–46.

- Owen, A. The dimension distribution and quadrature test functions. Stat. Sin. 2003, 13, 1–17.

- Rabitz, H. General foundations of high dimensional model representations. J. Math. Chem. 1999, 25, 197–233.

- Kuo, F.; Sloan, I.; Wasilkowski, G.; Woźniakowski, H. On decompositions of multivariate functions. Math. Comput. 2010, 79, 953–966.

- Alatawi, M.S.; Martinucci, B. On the Elementary Symmetric Polynomials and the Zeros of Legendre Polynomials. J. Math. 2022, 1–9.

- Arafat, A.; El-Mikkawy, M. A Fast Novel Recursive Algorithm for Computing the Inverse of a Generalized Vandermonde Matrix. Axioms 2023, 12, 27.

- Rawashdeh, E. A simple method for finding the inverse matrix of Vandermonde matrix. Matematički Vesnik 2019, 71, 207–213.

- Homma, T.; Saltelli, A. Importance measures in global sensitivity analysis of nonlinear models. Reliab. Eng. Syst. Saf. 1996, 52, 1–17.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).