2.2. Method

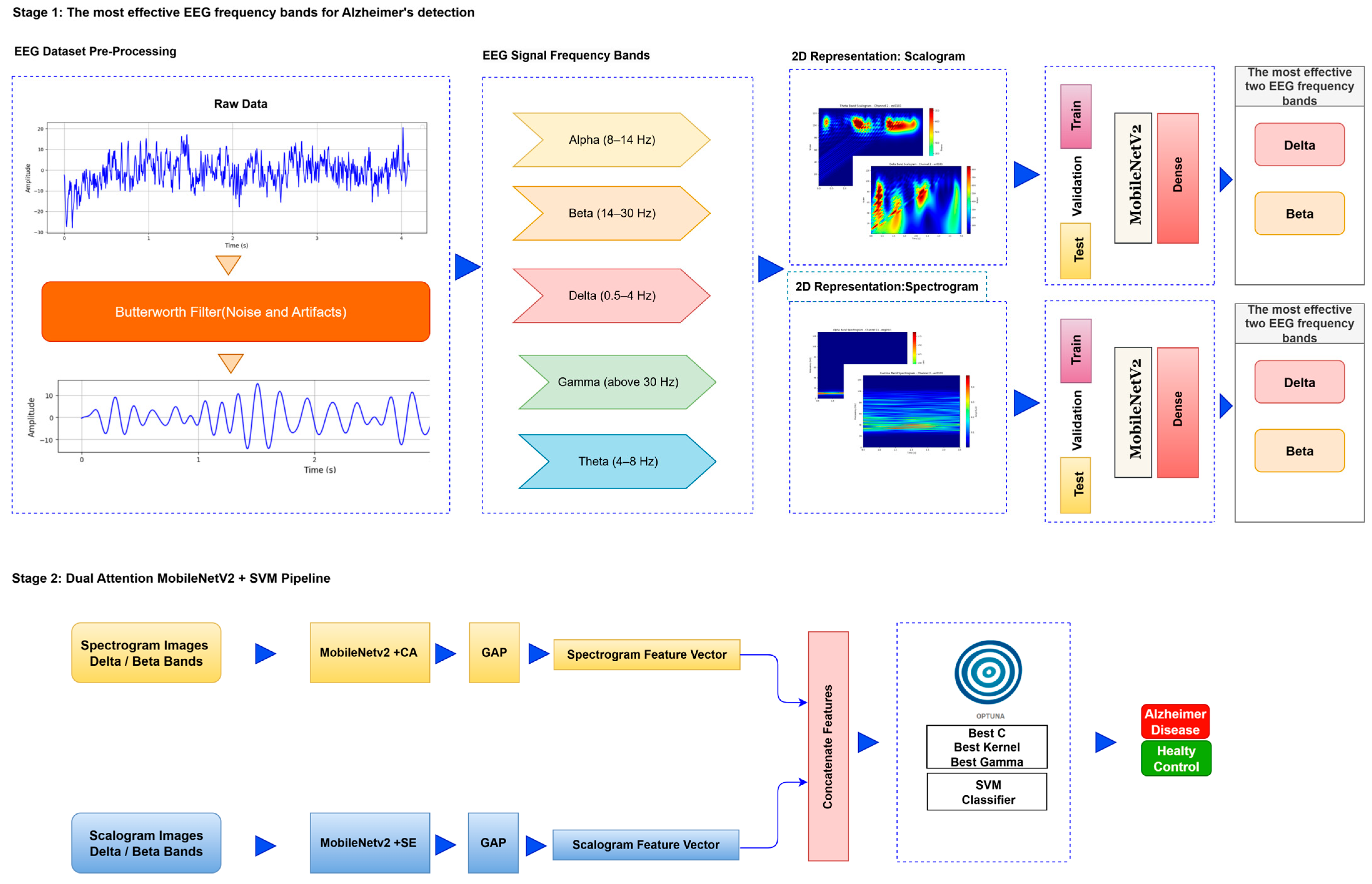

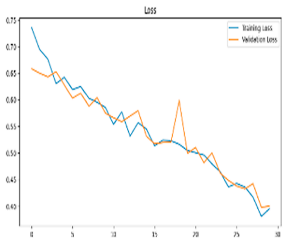

The proposed deep learning approach for image-based analysis of Alzheimer’s disease consists of a series of systematic stages. First, preprocessing operations such as denoising and artifact removal are applied to the EEG signals to bring the raw data into a form suitable for analysis. The signals are then transformed into time–frequency representations, which reveal their dynamic properties by combining the time and frequency dimensions. This transformation is carried out with methods such as the Fourier transform (FT) and the continuous wavelet transform (CWT). The resulting time–frequency representations are divided into training, validation, and test sets and provided as input to a lightweight deep learning model. The model itself operates in two stages. In the first stage, the EEG frequency bands most relevant to Alzheimer’s diagnosis are identified, so that the analysis focuses on the most informative components rather than on the entire dataset. In the second stage, features are extracted from the selected bands using a lightweight, frozen neural network integrated with attention mechanisms and are then classified using an optimized SVM. The flow chart of the proposed model is presented in Figure 1.

Step 1: Preprocessing: EEG recordings are prone to artifacts such as eye movements, muscle activity, and power-line noise [21]. This study applied a 4th-order band-pass Butterworth filter (0.5–45 Hz) to remove the noise caused by direct current (DC) drift from the EEG signals and to obtain the relevant sub-bands. The upper cutoff of the same filter also suppressed unwanted high-frequency noise.
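The filtering step can be sketched as follows. This is a minimal illustration, not the authors' implementation: the sampling rate `FS`, the helper name `bandpass_eeg`, and the use of zero-phase filtering (`sosfiltfilt`) are assumptions, since this section does not state them. Note that SciPy's `butter` with `N=4` and `btype="bandpass"` produces an 8th-order filter overall, and forward-backward filtering sharpens the effective response further.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 500.0  # assumed sampling rate in Hz (not given in this section)

def bandpass_eeg(x, fs=FS, low=0.5, high=45.0, order=4):
    """Zero-phase Butterworth band-pass filter applied along the last axis."""
    # Second-order sections are numerically more robust than (b, a) coefficients
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, x, axis=-1)

# Synthetic signal: DC offset + 10 Hz rhythm + 100 Hz interference
t = np.arange(int(10 * FS)) / FS
raw = 5.0 + np.sin(2 * np.pi * 10 * t) + 0.5 * np.sin(2 * np.pi * 100 * t)
clean = bandpass_eeg(raw)
```

In this synthetic case the DC offset and the 100 Hz component are strongly attenuated, while the 10 Hz component passes almost unchanged.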

Step 2: Segmentation into Frequency Bands: To observe how the EEG sub-bands change in Alzheimer’s disease and to determine which frequency band is most effective for early diagnosis, the EEG signal of each channel was separated into specific frequency bands using wavelet-based algorithms.

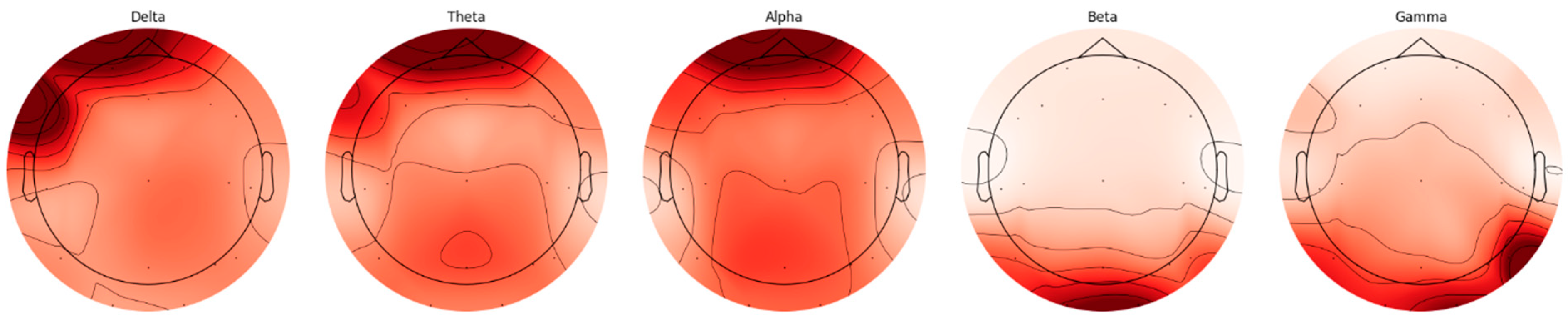

Figure 2 shows the spectral distribution of frequency bands obtained from a relevant channel.

EEG signals are divided into five basic frequency bands: Delta, Theta, Alpha, Beta, and Gamma [22]. The Delta band covers frequencies below 4 Hz and represents deep sleep and slow-wave activity. The Theta band lies in the range of 4–8 Hz and is associated with light sleep and relaxation states. The Alpha band (8–14 Hz) reflects states of wakefulness, relaxation, and concentration. The Beta band (14–30 Hz) is associated with active thinking, cognitive processes, and motor activities, while the Gamma band covers frequencies above 30 Hz and represents higher cognitive processes. The scalp distributions of the frequency bands were evaluated separately for AD and HC, and topographic maps were created using the Welch method. The Welch method estimates the power spectral density (PSD) of each channel in the specified frequency band; the topographic map is then drawn from the average PSD value of each band and the channel positions. These maps visualize the activity intensity of the different frequency bands across the brain and are presented in Figure 2 and Figure 3.
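The per-channel band power that underlies such maps can be sketched with SciPy's `welch`. This is an illustrative sketch only: the outer limits 0.5 Hz and 45 Hz are assumed from the preprocessing filter, the segment length of 2 s is an assumption, and `band_powers` is a hypothetical helper name. Drawing the topographic map itself additionally requires the electrode positions and is not shown.

```python
import numpy as np
from scipy.signal import welch

# Band edges follow the text; the 0.5 Hz and 45 Hz outer limits are assumed
# from the band-pass range used in preprocessing.
BANDS = {"delta": (0.5, 4), "theta": (4, 8), "alpha": (8, 14),
         "beta": (14, 30), "gamma": (30, 45)}

def band_powers(eeg, fs, nperseg=None):
    """Mean Welch PSD per band and channel. eeg has shape (n_channels, n_samples)."""
    nperseg = nperseg or int(2 * fs)  # assumed 2 s segments
    freqs, psd = welch(eeg, fs=fs, nperseg=nperseg, axis=-1)
    out = {}
    for name, (lo, hi) in BANDS.items():
        mask = (freqs >= lo) & (freqs < hi)
        out[name] = psd[:, mask].mean(axis=1)  # one value per channel
    return out

# Synthetic 19-channel recording dominated by a 10 Hz (Alpha) rhythm
rng = np.random.default_rng(0)
fs = 250.0
t = np.arange(int(20 * fs)) / fs
eeg = np.sin(2 * np.pi * 10 * t) + 0.1 * rng.standard_normal((19, t.size))
powers = band_powers(eeg, fs)
```

Each entry of `powers` is a vector with one value per channel, which is the quantity a topographic plot would interpolate over the scalp.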

When the topographic maps are examined, a significant increase in the low-frequency bands (Delta and Theta) and a significant decrease in the high-frequency bands (Beta and Gamma) are observed in individuals with AD. In contrast, HC individuals exhibit a more homogeneous distribution across the frequency bands.

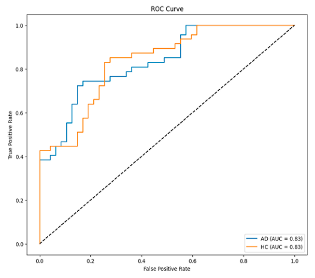

Step 3: Time–Frequency Representation: In the proposed method, the model is evaluated using both scalogram and spectrogram images, which capture time and frequency domain information from the EEG data. Scalograms are obtained using the wavelet transform. The wavelet transform provides an optimal time–frequency representation for non-stationary signals by using a wide window for low frequencies and a narrow window for high frequencies [23]. The scalograms of the EEG data are created using the Morlet wavelet, which is known for its ability to capture time–frequency features effectively.
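A Morlet scalogram can be sketched in plain NumPy as below. This is a simplified illustration under stated assumptions: the centre-frequency parameter `w0 = 6`, the frequency grid, and the function name `morlet_scalogram` are not taken from the study, and dedicated libraries (for example the `cwt` function in PyWavelets) would normally be used instead. The wavelet support grows as the frequency decreases, which is the wide-window/narrow-window behaviour described above.

```python
import numpy as np

def morlet_scalogram(x, fs, freqs, w0=6.0):
    """Return |CWT|^2 with shape (len(freqs), len(x)) using a complex Morlet wavelet."""
    x = np.asarray(x, dtype=float)
    power = np.empty((len(freqs), x.size))
    for i, f in enumerate(freqs):
        scale = w0 / (2 * np.pi * f)         # scale (s) whose centre frequency is f
        half = int(np.ceil(4 * scale * fs))  # support of +/- 4 Gaussian widths
        tau = np.arange(-half, half + 1) / fs / scale
        wavelet = np.pi ** -0.25 * np.exp(1j * w0 * tau) * np.exp(-0.5 * tau ** 2)
        wavelet /= np.sqrt(scale)            # energy normalization
        # Correlation with the wavelet written as a convolution
        kernel = np.conj(wavelet)[::-1]
        full = np.convolve(x, kernel) / fs
        coef = full[half:half + x.size]      # keep the part aligned with x
        power[i] = np.abs(coef) ** 2
    return power

# Example: a 10 Hz sinusoid should concentrate energy near 10 Hz
fs = 250.0
t = np.arange(1000) / fs
x = np.sin(2 * np.pi * 10 * t)
freqs = np.arange(1, 46)
power = morlet_scalogram(x, fs, freqs)
```

Plotting `power` as an image with time on the horizontal axis and `freqs` on the vertical axis gives the scalogram that would be fed to the network.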

The spectrogram is a visual representation of the power density of a signal over time and allows the frequency components of the signal to be examined as they evolve [24]. The Fourier Transform is used as the basis for creating spectrograms. In this study, the signal was divided into short segments before the Fourier Transform was applied. Each segment was windowed to minimize losses and ensure continuity, and consecutive windows were overlapped to increase the accuracy of the analysis. The frequency spectrum of each window was calculated, and the resulting spectral density values were expressed in decibels and presented as a spectrum map in the time–frequency plane.
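The segment, window, overlap, and decibel steps described above can be sketched with SciPy's `spectrogram`. The Hann window, the 1 s segment length, and the 50% overlap are assumptions chosen for illustration; the section does not specify the values used in the study, and `spectrogram_db` is a hypothetical helper name.

```python
import numpy as np
from scipy.signal import spectrogram

def spectrogram_db(x, fs, win_sec=1.0, overlap=0.5):
    """Short-time Fourier power spectrogram in decibels."""
    nperseg = int(win_sec * fs)          # samples per windowed segment
    noverlap = int(overlap * nperseg)    # samples shared by adjacent segments
    freqs, times, sxx = spectrogram(
        x, fs=fs, window="hann", nperseg=nperseg, noverlap=noverlap
    )
    sxx_db = 10 * np.log10(sxx + 1e-12)  # small offset avoids log(0)
    return freqs, times, sxx_db

# Example: a 20 Hz sinusoid produces a horizontal ridge at 20 Hz
fs = 250.0
t = np.arange(int(8 * fs)) / fs
x = np.sin(2 * np.pi * 20 * t)
freqs, times, sxx_db = spectrogram_db(x, fs)
```

Longer windows improve frequency resolution at the cost of time resolution, which is the fixed trade-off that distinguishes the spectrogram from the scalogram.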

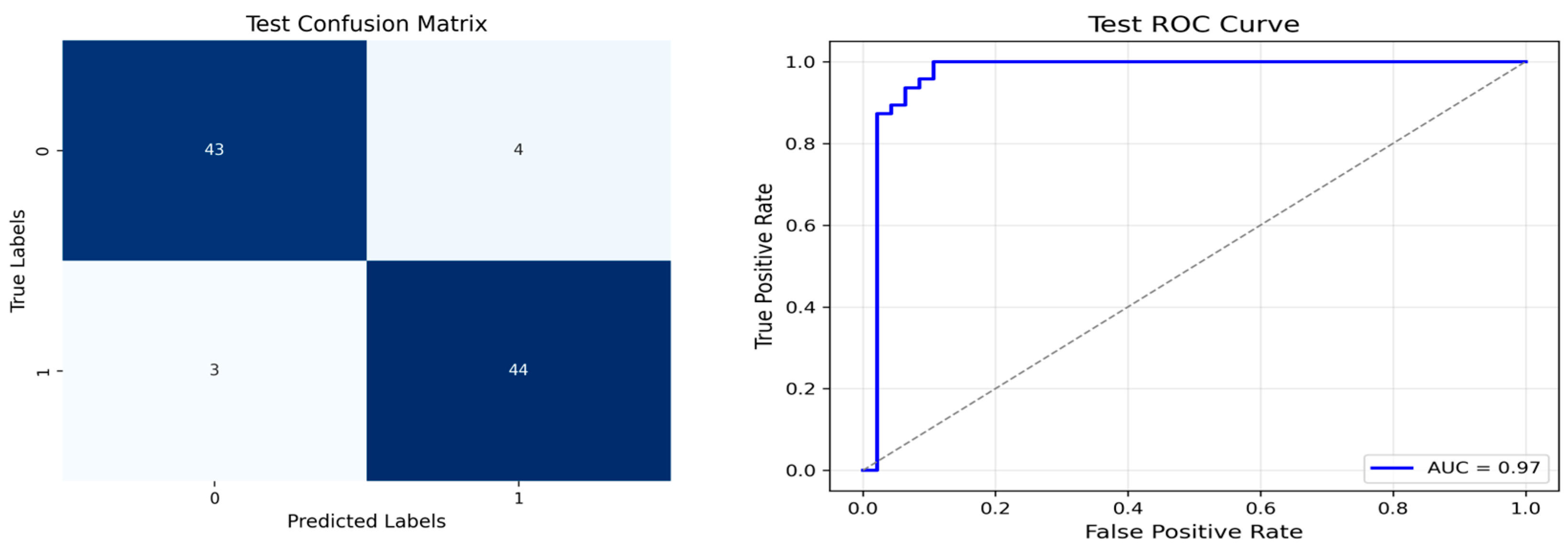

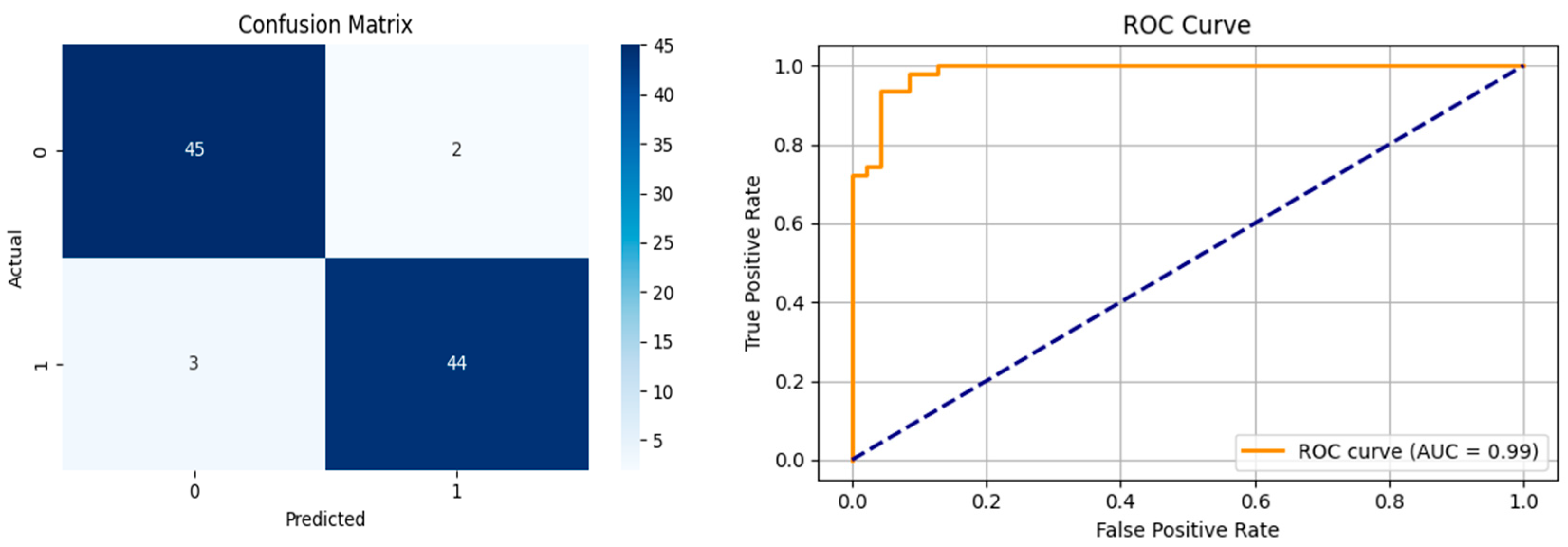

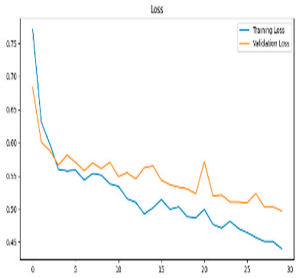

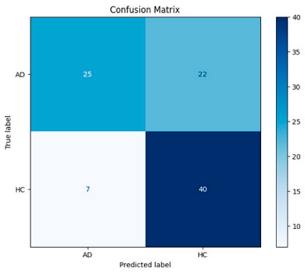

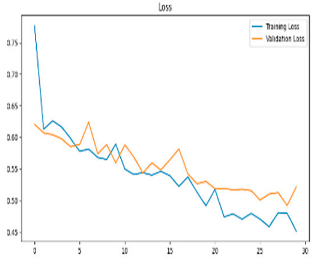

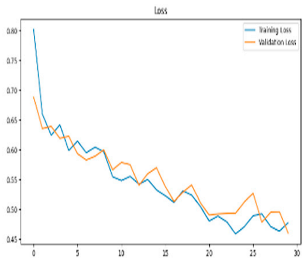

After the image representations were created, 456 AD images and 456 HC images (19 channels × 24 individuals per group) were obtained for each frequency band group (Alpha, Beta, Delta, Gamma, Theta). For each frequency band group, these images were split into 80% training, 10% validation, and 10% test sets and fed to the model. The split was performed on a subject-independent basis, and the same partition was used for both the MobileNetV2-based feature extraction and the SVM classification stages, so the separation was strictly preserved throughout all stages of the pipeline. Consequently, no subject contributed images to both the training and test sets, eliminating this source of data leakage.
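A subject-independent split can be sketched by partitioning subject identifiers rather than individual images. The helper `split_subjects`, the random seed, and the rounding rule are assumptions for illustration; with 24 subjects per group, an exact 80/10/10 division of subjects is not possible, so the resulting image proportions are only approximate.

```python
import numpy as np

def split_subjects(subject_ids, fractions=(0.8, 0.1, 0.1), seed=0):
    """Partition unique subject IDs into train/validation/test groups."""
    rng = np.random.default_rng(seed)
    ids = rng.permutation(np.unique(subject_ids))
    n_train = int(round(fractions[0] * len(ids)))
    n_val = int(round(fractions[1] * len(ids)))
    return ids[:n_train], ids[n_train:n_train + n_val], ids[n_train + n_val:]

# One class: 24 subjects x 19 channels = 456 images
subject_of_image = np.repeat(np.arange(24), 19)
train_ids, val_ids, test_ids = split_subjects(subject_of_image)

# Every image follows its subject, so no subject appears in two subsets
train_mask = np.isin(subject_of_image, train_ids)
val_mask = np.isin(subject_of_image, val_ids)
test_mask = np.isin(subject_of_image, test_ids)
```

Because the masks are derived from subject IDs, all 19 channel images of a given individual always end up in the same subset.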

Step 4: Feature Extraction-Integrated Attention for MobileNetV2: The MobileNetV2 model has fewer parameters than other CNN architectures, making it more efficient to deploy on low-power devices [25]. The MobileNetV2 [26] transfer learning model was used for training and feature extraction. MobileNetV2 is a lightweight Convolutional Neural Network architecture specifically designed to operate with high efficiency on mobile devices and embedded systems with limited computational resources. This architecture employs depthwise separable convolutions, which decompose standard convolution operations into computationally less intensive sub-operations. As a result, it significantly reduces the number of parameters and computational cost without compromising classification accuracy [27]. Furthermore, MobileNetV2 incorporates inverted residual blocks with linear bottlenecks, enabling the efficient learning of complex feature representations [28]. Due to these architectural advantages, MobileNetV2 was selected in this study for the purpose of feature extraction. Moreover, effective model training in EEG signal analysis typically requires large-scale labeled datasets. However, the non-stationary and stochastic nature of EEG signals poses significant challenges to the development of such datasets. Additionally, inter-subject variability further limits the reusability and generalizability of trained models across different individuals. To mitigate these limitations, transfer learning has been employed, enabling knowledge acquired from one task to be transferred to a related task. This approach facilitates model adaptation with limited data while preserving robustness to individual differences [29]. In particular, the integration of transfer learning strategies within CNNs has proven beneficial for the diagnosis and classification of different stages of Alzheimer’s disease [30].
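The parameter saving from depthwise separable convolutions can be illustrated with a short calculation. The layer sizes below (3 × 3 kernel, 32 input channels, 64 output channels) are arbitrary example values, not layers of the model used in the study, and bias terms are ignored.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a standard k x k convolution."""
    return k * k * c_in * c_out

def separable_conv_params(k, c_in, c_out):
    """Weights in a depthwise (k x k per channel) plus pointwise (1 x 1) convolution."""
    return k * k * c_in + c_in * c_out

std = standard_conv_params(3, 32, 64)   # 9 * 32 * 64 = 18432
sep = separable_conv_params(3, 32, 64)  # 9 * 32 + 32 * 64 = 2336
ratio = std / sep                       # roughly 7.9x fewer weights
```

For this example layer the separable form needs about one eighth of the weights of the standard convolution.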

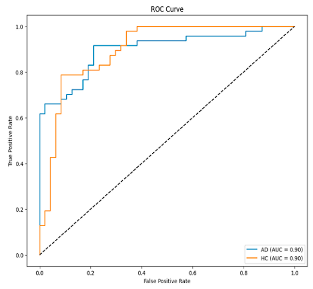

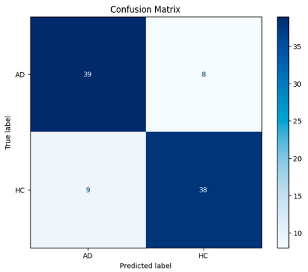

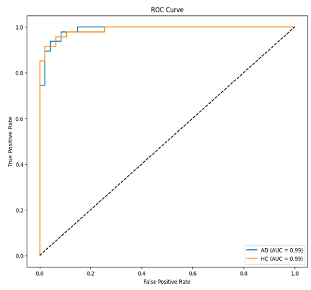

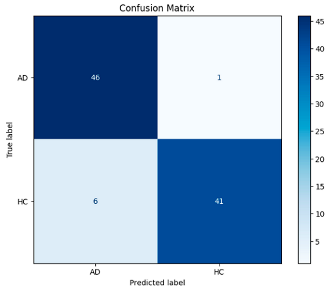

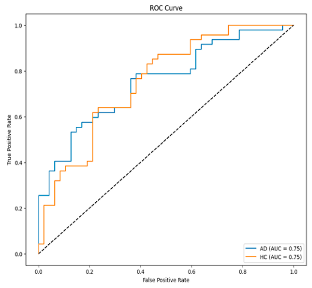

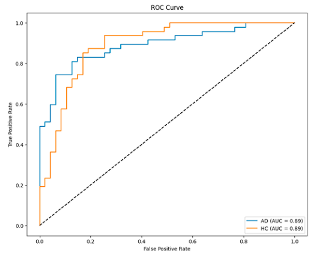

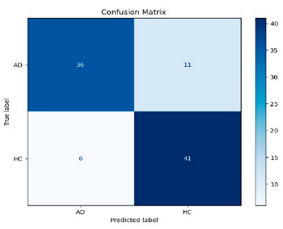

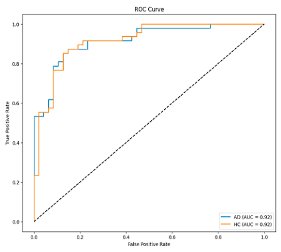

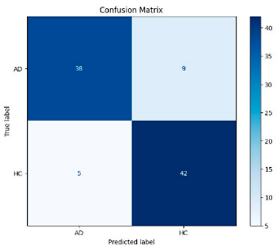

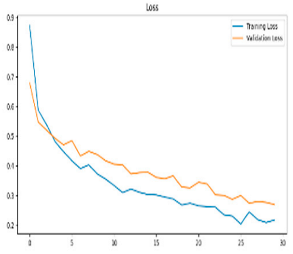

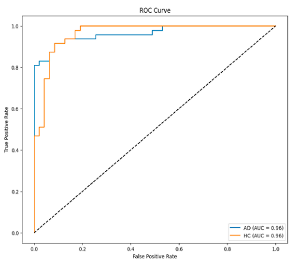

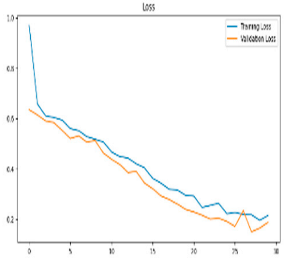

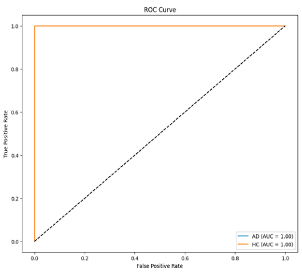

The Alpha, Beta, Delta, Gamma, and Theta frequency bands were converted to scalogram and spectrogram images and classified one by one. The ablation studies showed that the Delta and Beta frequency bands play a decisive role in Alzheimer’s diagnosis. Accordingly, feature extraction was performed on the time–frequency images obtained from the Delta and Beta bands, using MobileNetV2 models integrated with different attention mechanisms. Although both spectrograms and scalograms are time–frequency images, they emphasize different information, so MobileNetV2 integrated with the SE attention mechanism was used for scalograms and MobileNetV2 with the Channel Attention Mechanism was used for spectrograms. The choice of mechanism for each representation depends primarily on which dimension of information, frequency or time, is presented more dominantly and distinctively. Spectrograms, generated via the FT, typically emphasize frequency resolution. The FT performs fixed-frequency analyses within time windows, which facilitates the capture of inter-channel frequency patterns. Therefore, Channel Attention is well suited to highlighting discriminative frequency-based information across different EEG channels.

In contrast, scalograms, derived from the CWT, capture more localized and fine-grained temporal variations through scale-adapted wavelets. This characteristic enables the extraction of time-sensitive discriminative features. Consequently, SE attention is more appropriate for emphasizing critical activations along the temporal dimension.

In recent years, attention mechanisms have been reported to be an effective means of increasing model performance in the field of computer vision [31]. The primary function of attention mechanisms is to emphasize meaningful information and suppress unimportant information. These mechanisms are generally implemented as spatial attention, Channel Attention, or a combination of both. In this study, SE [32] and Channel Attention (CA) were integrated into the MobileNetV2 model and used for feature extraction. In its squeeze (compression) step, the SE attention mechanism converts the input feature maps into a single vector via global average pooling. It then maps this vector to a smaller size via a fully connected layer [33]. In the standard SE design, a second fully connected layer followed by a sigmoid restores the channel dimension and yields per-channel weights that rescale the feature maps.
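The forward pass of an SE block can be sketched in NumPy as follows. This follows the standard squeeze-and-excitation design (global average pooling, a reducing fully connected layer with ReLU, an expanding fully connected layer with sigmoid, then channel-wise rescaling). The reduction ratio `r = 4`, the random weights, and the function name `se_block` are illustrative assumptions; the configuration used inside MobileNetV2 in this study is not specified here.

```python
import numpy as np

def se_block(features, w1, w2):
    """Squeeze-and-excitation on one feature map.

    features: (C, H, W); w1: (C // r, C) reduction weights; w2: (C, C // r) expansion weights.
    """
    squeezed = features.mean(axis=(1, 2))           # squeeze: global average pooling -> (C,)
    hidden = np.maximum(w1 @ squeezed, 0.0)         # reduce to C // r, then ReLU
    weights = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))  # expand to C, then sigmoid
    return features * weights[:, None, None], weights

rng = np.random.default_rng(0)
C, r = 16, 4                                        # assumed channel count and reduction ratio
features = rng.standard_normal((C, 7, 7))
w1 = 0.1 * rng.standard_normal((C // r, C))
w2 = 0.1 * rng.standard_normal((C, C // r))
scaled, weights = se_block(features, w1, w2)
```

Each entry of `weights` lies between 0 and 1 and scales one channel of the feature map, so informative channels are kept while others are attenuated.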

The Channel Attention Mechanism aims to learn the importance of each channel in an input feature map and integrates this information into the model’s weighting. This process enables the model to learn the essential features and optimize its performance via the attention mechanism.
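One common formulation of channel attention, the CBAM-style variant, can be sketched as below. Whether the study uses this exact variant is not stated in this section, so the design (average- and max-pooled descriptors passed through a shared two-layer MLP), the reduction ratio, and the name `channel_attention` should all be read as assumptions.

```python
import numpy as np

def channel_attention(features, w1, w2):
    """CBAM-style channel attention (assumed variant).

    features: (C, H, W); w1: (C // r, C); w2: (C, C // r), shared by both descriptors.
    """
    avg_desc = features.mean(axis=(1, 2))  # average-pooled channel descriptor
    max_desc = features.max(axis=(1, 2))   # max-pooled channel descriptor

    def shared_mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)

    logits = shared_mlp(avg_desc) + shared_mlp(max_desc)
    weights = 1.0 / (1.0 + np.exp(-logits))  # per-channel importance in (0, 1)
    return features * weights[:, None, None], weights

rng = np.random.default_rng(1)
C, r = 16, 4                                 # assumed channel count and reduction ratio
fmap = rng.standard_normal((C, 7, 7))
w1 = 0.1 * rng.standard_normal((C // r, C))
w2 = 0.1 * rng.standard_normal((C, C // r))
attended, ca_weights = channel_attention(fmap, w1, w2)
```

In a trained network `w1` and `w2` are learned, so the importance assigned to each channel adapts to the data.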

Step 5: Classification: In the final step, an SVM is used for classification. The SVM hyperparameters are determined using Optuna [34]. Optuna searches for the best values by running trials with different hyperparameter settings. It has been reported to provide better results than traditional methods such as Grid Search [29] or Random Search [35, 36].

The Optuna framework is used to address the problems of parameter tuning difficulty, lack of self-learning ability, and the limited generalizability of models obtained with traditional methods when faced with multiple parameter inputs [37].