Object Tracking with LiDAR: Monitoring Taxiing and Landing Aircraft

Abstract

Featured Application


1. Introduction

Background

2. Prototype System and Methods

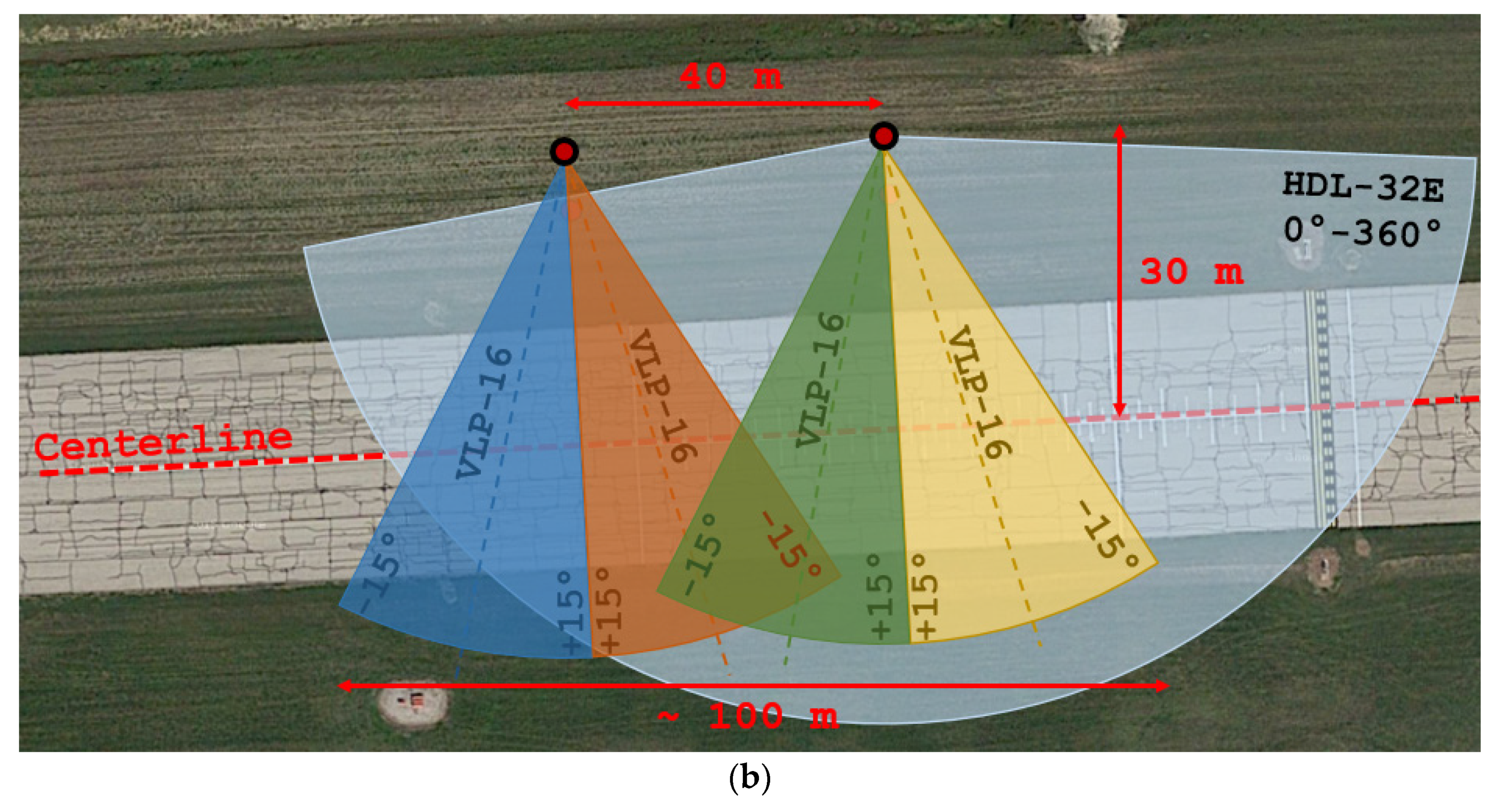

2.1. System Overview

2.2. Sensor Georeferencing

2.3. Aircraft Segmentation from LiDAR Scans

2.4. Motion Models

2.5. Object Space Reconstruction with Various Motion Models

2.6. Estimating the Motion Model with Volume Minimization

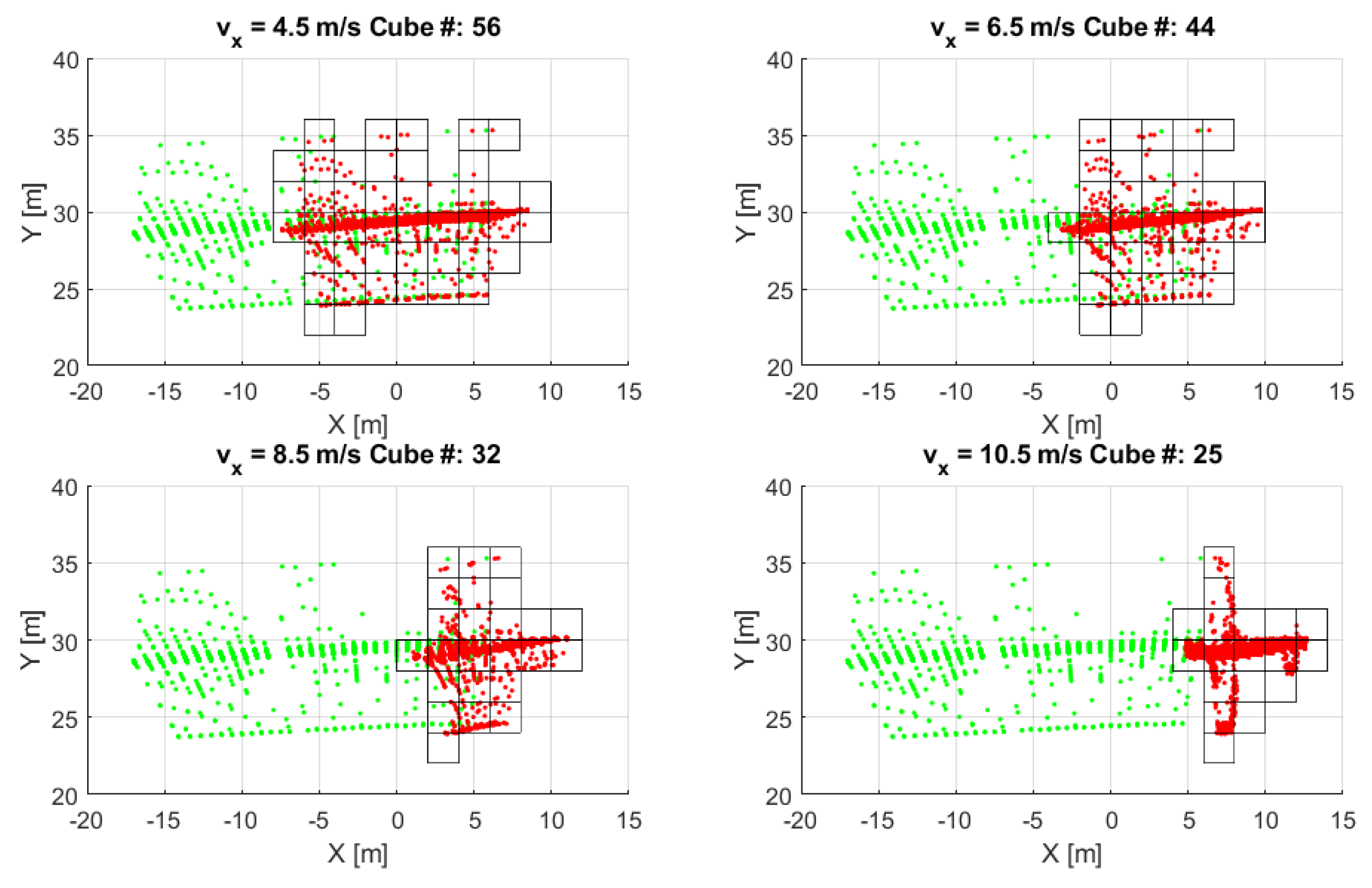

2.7. Refinement of the Volume Minimization: Motion Estimation with Cube Trajectories

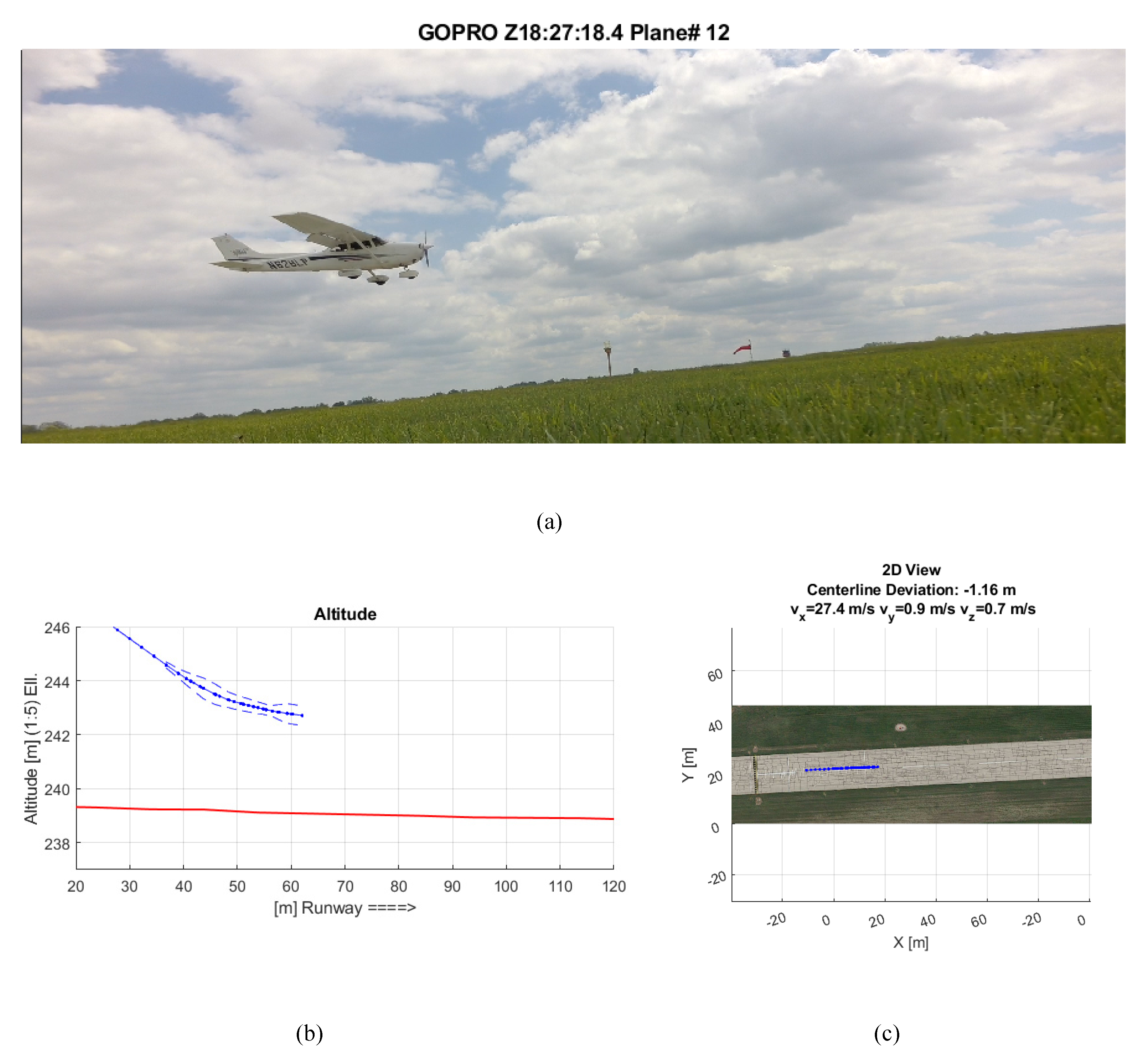

3. Field Tests

4. Results

5. Discussion

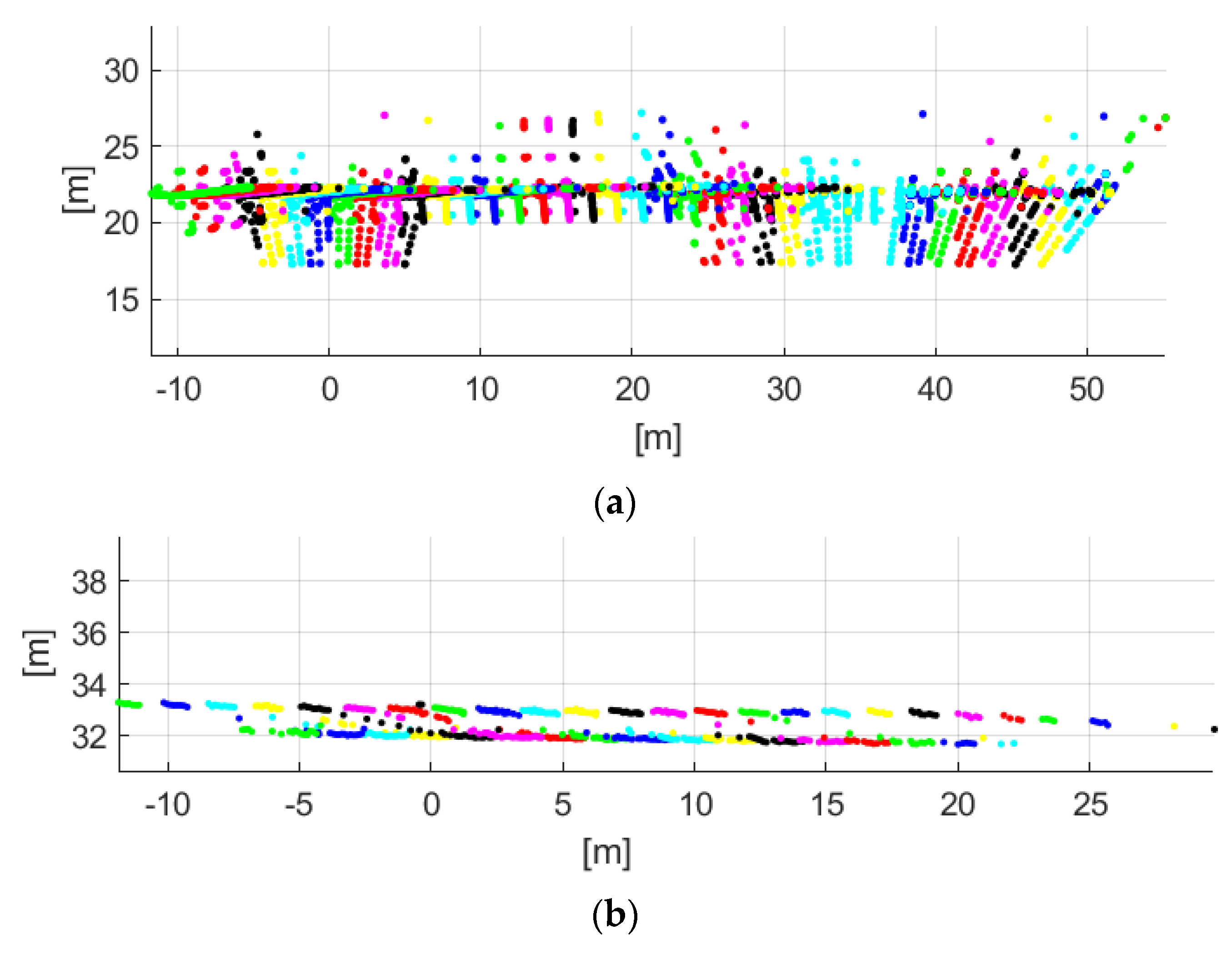

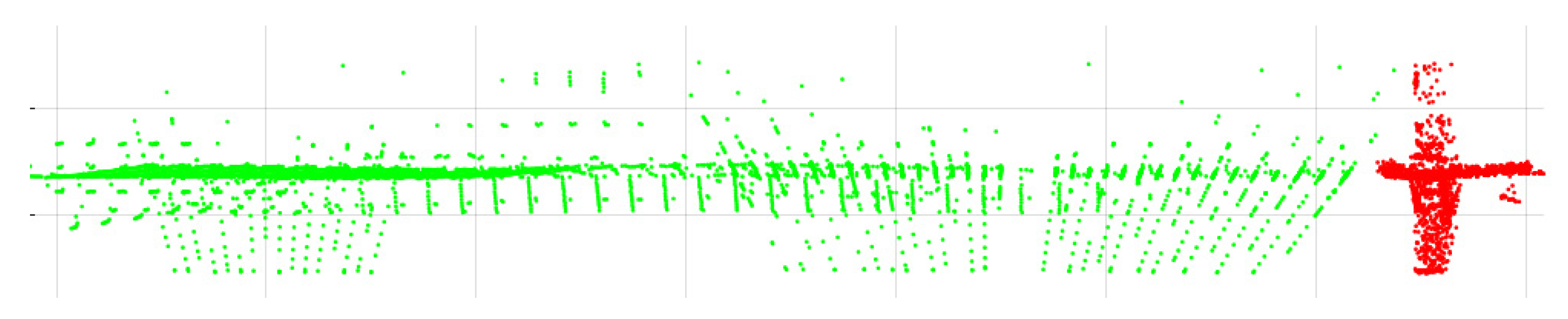

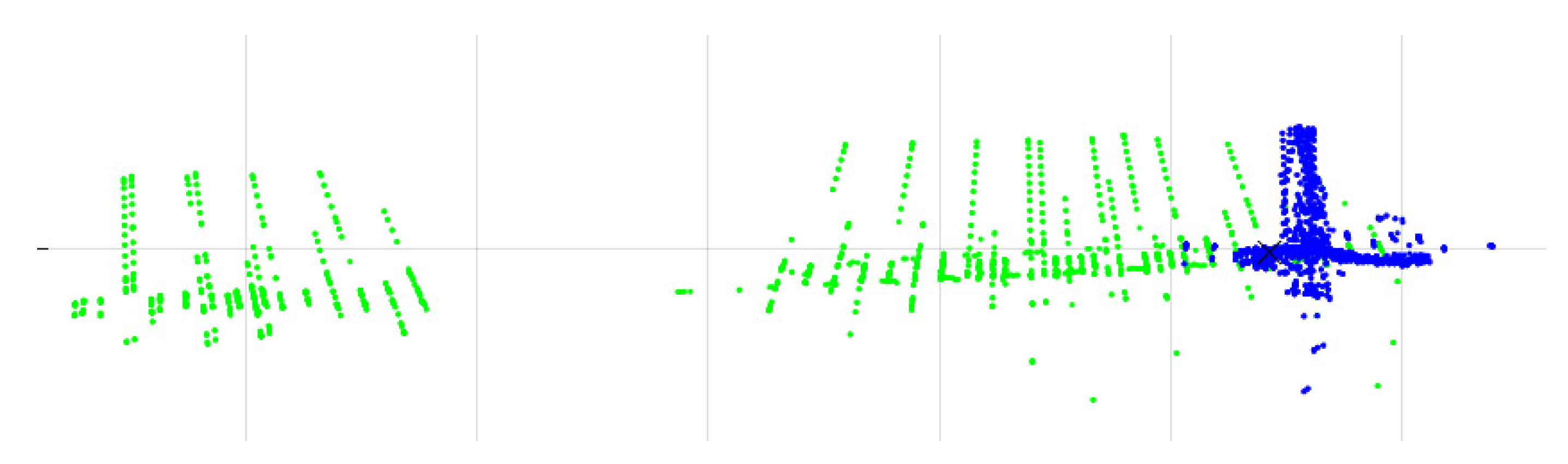

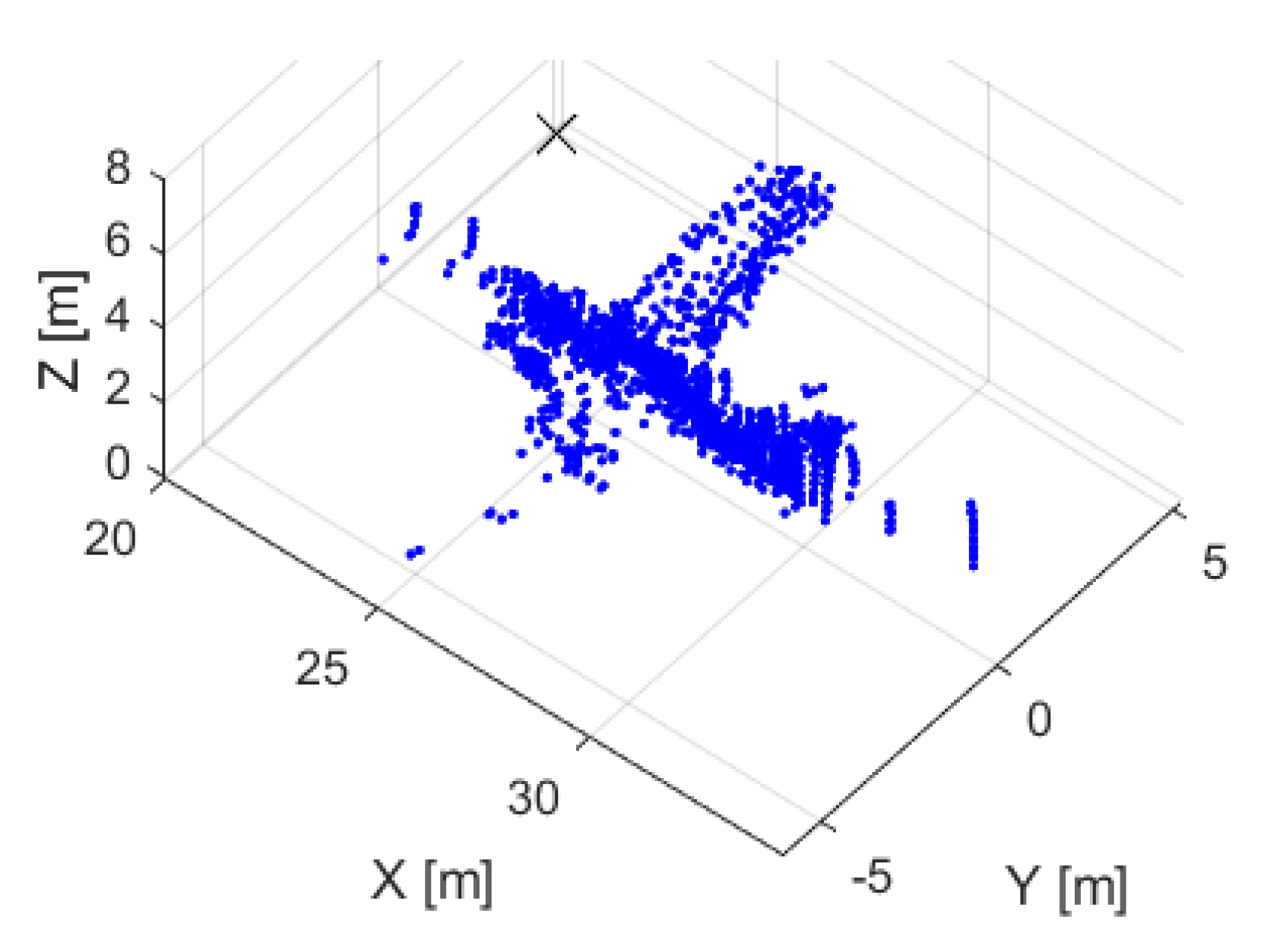

- The reconstruction quality is one indicator that can be used to compare the various algorithms and to assess their overall performance. If the reconstruction is good, the aircraft and its details are recognizable, indicating that the derived motion pattern is also close to the actual trajectory; a fuzzy, blurry reconstruction indicates that the estimated motion is not adequate. The reconstructions of the COG, VM-CA, and CT algorithms in Figure 15 show that VM-CA and CT outperform COG, and that CT provides a better reconstruction than VM-CA: the VM-CA reconstruction is fuzzy compared to the one provided by the CT algorithm. The COG method underperforms because different body points are backscattered at different epochs; consequently, the COG of the captured points does not accurately represent the center of gravity of the airplane body. COG tends to fail when the scans contain a small number of points from the tracked object. This is also the case for Test #2, where the object–sensor distance is around 33 m, and thus not enough points are captured from the Cessna-size airplanes. In addition, the sensor network is installed on one side of the runway, so the sensors capture points from only one side of the airplane, which pulls the COG toward the sensors. In contrast to COG, CT provides a fairly good quality reconstruction (see Figure 15), indicating that the algorithm performs well even if the scans contain a small number of points.
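The one-sided bias described above can be illustrated with a minimal sketch. It uses a hypothetical elliptical outline (10 m × 3 m) as a stand-in for the airplane body and assumes the sensors see only the half of the outline facing them (negative y); the helper names are illustrative and not taken from the paper.

```python
import math


def centroid(points):
    """Centre of gravity (arithmetic mean) of a list of (x, y) points."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def ellipse_outline(a, b, n):
    """n points on an ellipse centred at the origin (semi-axes a along x, b along y)."""
    return [(a * math.cos(2 * math.pi * k / n), b * math.sin(2 * math.pi * k / n))
            for k in range(n)]


# Hypothetical 10 m x 3 m outline centred at (0, 0).
outline = ellipse_outline(5.0, 1.5, 360)

# Sensors on one side (negative y) only capture the near half of the body.
visible = [p for p in outline if p[1] <= 0.0]

print(centroid(outline))  # approximately (0, 0): the true centre
print(centroid(visible))  # y is about -0.95: pulled toward the sensors
```

The full outline yields a COG at the true center, while the visible half shifts it by roughly 1 m toward the sensors even though the body itself has not moved.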

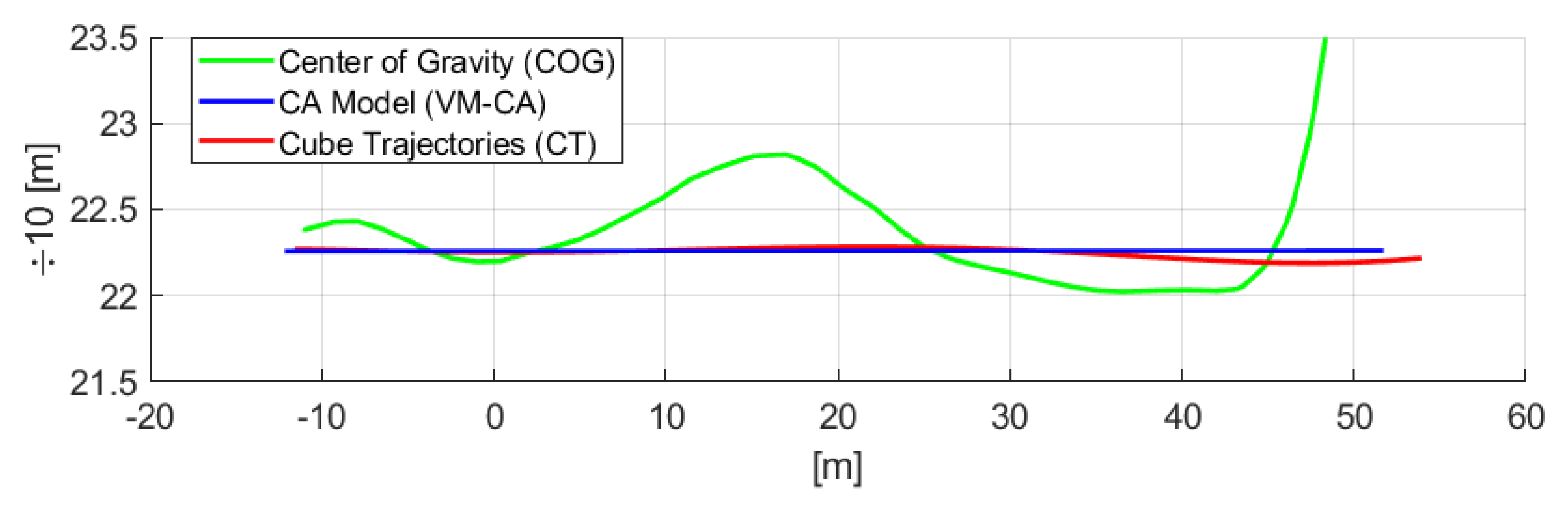

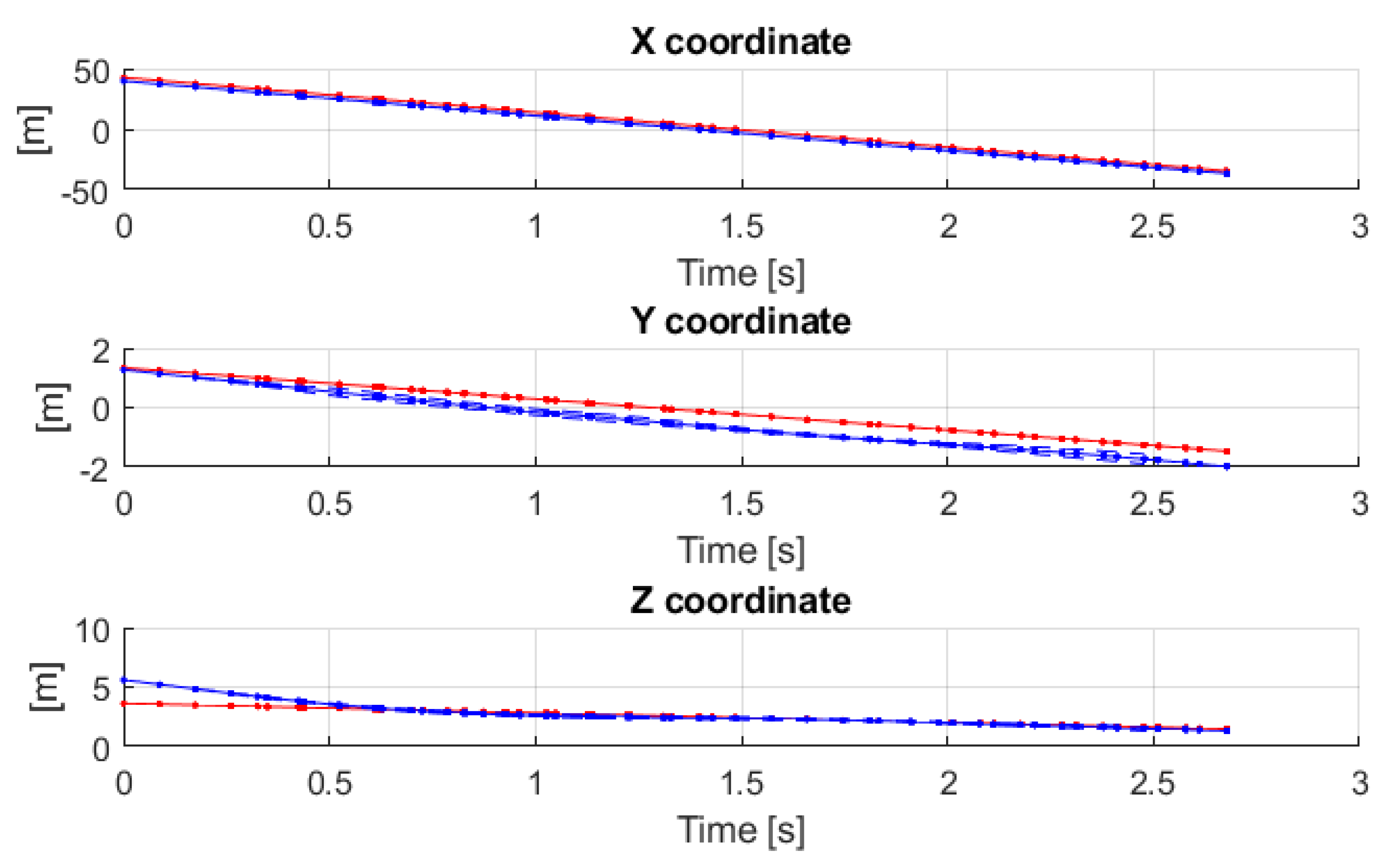

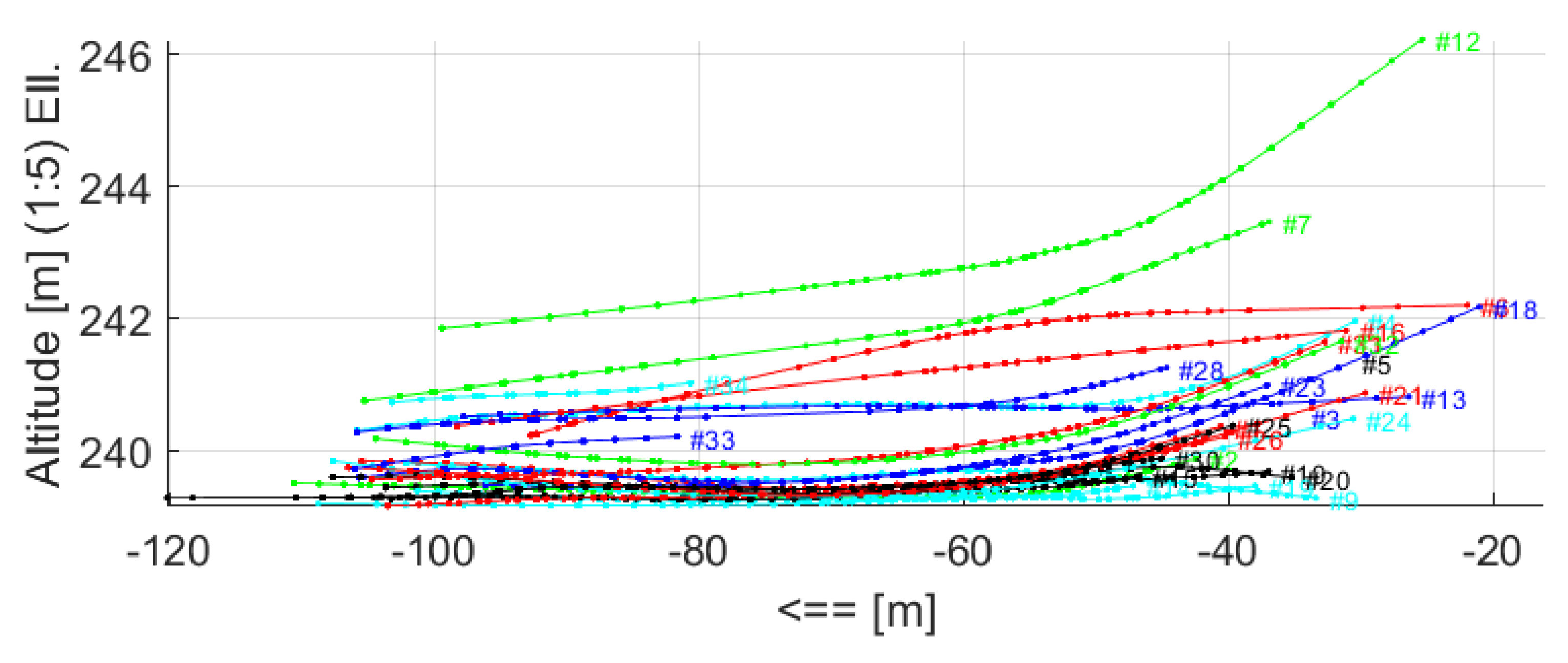

- The shape of the trajectory and the velocity profiles can also be used to assess performance. For instance, in Figure 13 for Test #1, the trajectory derived from COG is not realistic: the airplane could not have followed such a curvy trajectory. Note that the large tail at the end of the trajectory, which is clearly not a feasible motion, is caused by the lack of a sufficient number of captured points. In contrast, the VM-CA and CT results are feasible trajectories. However, it is unclear whether the small “waves” in the CT trajectory reflect the actual motion of the airplane or result from overfitting by the fourth-degree motion model. Nevertheless, the largest difference between the VM-CA and CT trajectories is about 10 cm, which is within the error envelope established in our previous studies. The trajectory along the X, Y, and Z directions for Test #2 is shown in Figure 16; the Z component shows the decreasing altitude expected for a landing aircraft. Note also that the CV model, shown in red in Figure 16, is a straight line, and it is very unlikely that this motion component actually behaves this way. The CT solution, shown in blue, follows a more realistic trajectory.
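To make the CV-versus-polynomial contrast concrete, the following sketch fits a first-degree (CV) and a fourth-degree polynomial to one trajectory component by ordinary least squares. The curved, descending profile is synthetic, and time is normalized to [-1, 1] to keep the normal equations well conditioned; this is a generic polynomial fit, not the CT algorithm itself.

```python
def polyfit(ts, ys, degree):
    """Least-squares polynomial coefficients [c0, c1, ..., c_degree] via the normal equations."""
    m = degree + 1
    # Normal equations (A^T A) c = A^T y for the Vandermonde matrix A[i][k] = t_i**k.
    ata = [[sum(t ** (i + j) for t in ts) for j in range(m)] for i in range(m)]
    aty = [sum(y * t ** i for t, y in zip(ts, ys)) for i in range(m)]
    # Gaussian elimination with partial pivoting.
    for col in range(m):
        pivot = max(range(col, m), key=lambda r: abs(ata[r][col]))
        ata[col], ata[pivot] = ata[pivot], ata[col]
        aty[col], aty[pivot] = aty[pivot], aty[col]
        for r in range(col + 1, m):
            f = ata[r][col] / ata[col][col]
            for c in range(col, m):
                ata[r][c] -= f * ata[col][c]
            aty[r] -= f * aty[col]
    # Back substitution.
    coeffs = [0.0] * m
    for r in range(m - 1, -1, -1):
        s = aty[r] - sum(ata[r][c] * coeffs[c] for c in range(r + 1, m))
        coeffs[r] = s / ata[r][r]
    return coeffs


def polyval(coeffs, t):
    """Evaluate sum(c_k * t**k)."""
    return sum(c * t ** k for k, c in enumerate(coeffs))


def rms(ts, ys, coeffs):
    """Root-mean-square residual of a polynomial fit."""
    return (sum((y - polyval(coeffs, t)) ** 2 for t, y in zip(ts, ys)) / len(ts)) ** 0.5


# Synthetic Z component (metres) on normalised time: descending and slightly curved.
ts = [-1.0 + 0.05 * k for k in range(41)]
zs = [20.0 - 8.0 * t + 1.5 * t * t for t in ts]

cv = polyfit(ts, zs, 1)       # constant-velocity model: a straight line
quartic = polyfit(ts, zs, 4)  # fourth-degree polynomial model
print(rms(ts, zs, cv), rms(ts, zs, quartic))
```

The CV fit cannot follow the curvature, so its residuals stay large, while the quartic reproduces it exactly. With noisy data, the same extra freedom is what lets a fourth-degree model follow small fluctuations, which is the overfitting concern raised above.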

Limitations and Possible Applications

6. Conclusions

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix A

Appendix B

Appendix C

Algorithm A1. Simulated annealing algorithm for volume minimization.
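The body of Algorithm A1 is not reproduced here. As a rough illustration of the idea only, the sketch below runs a generic simulated annealing loop over a constant-velocity (CV) model: each candidate velocity moves every scan back to a common epoch, and the cost is the number of occupied cells. Assumptions: 2D cells instead of cubes, a 0.5 m cell size, a synthetic rectangular outline scanned completely at five epochs, and illustrative annealing parameters (starting temperature, geometric cooling, Gaussian step) that are not taken from the paper.

```python
import math
import random


def occupied_cells(scans, velocity, cell):
    """Number of distinct grid cells hit by all scans after undoing a CV motion.

    scans: list of (t, points) pairs, points given as (x, y) in the sensor frame.
    velocity: candidate (vx, vy) of the constant-velocity model.
    cell: edge length of the square cells (a 2D stand-in for cubes).
    """
    vx, vy = velocity
    cells = set()
    for t, points in scans:
        for x, y in points:
            # Shift the point back to where it would have been at t = 0.
            ox, oy = x - vx * t, y - vy * t
            cells.add((math.floor(ox / cell), math.floor(oy / cell)))
    return len(cells)


def anneal_velocity(scans, cell=0.5, v0=(0.0, 0.0), temp0=10.0, cooling=0.997,
                    iterations=3000, step=0.3, seed=1):
    """Generic simulated annealing over (vx, vy) minimising the occupied-cell count."""
    rng = random.Random(seed)
    current = v0
    current_cost = occupied_cells(scans, current, cell)
    best, best_cost = current, current_cost
    temperature = temp0
    for _ in range(iterations):
        # Random Gaussian perturbation of the current velocity.
        candidate = (current[0] + rng.gauss(0.0, step),
                     current[1] + rng.gauss(0.0, step))
        cost = occupied_cells(scans, candidate, cell)
        delta = cost - current_cost
        # Metropolis rule: accept improvements, accept worse states with prob. exp(-delta/T).
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            current, current_cost = candidate, cost
            if cost < best_cost:
                best, best_cost = candidate, cost
        temperature *= cooling  # geometric cooling schedule
    return best, best_cost


# Synthetic data: outline of a 10 m x 3 m rectangle sampled every 0.2 m,
# moving at a hypothetical (6.0, 0.5) m/s and scanned completely at five epochs.
outline = [(0.25 + 0.2 * i, y) for i in range(51) for y in (0.25, 3.25)]
outline += [(x, 0.25 + 0.2 * j) for j in range(16) for x in (0.25, 10.25)]
true_v = (6.0, 0.5)
scans = [(t, [(x + true_v[0] * t, y + true_v[1] * t) for x, y in outline])
         for t in (0.0, 0.5, 1.0, 1.5, 2.0)]

v_est, n_cells = anneal_velocity(scans)
print(v_est, n_cells)
```

With the correct velocity, all five scans collapse onto the same cells, so the count is smallest there; a wrong velocity smears the outline across extra cells, which corresponds to the blur seen in poor reconstructions. Keeping the best state separately from the current state prevents the result from being lost to late uphill moves.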

References

- Toth, C.; Jóźków, G. Remote sensing platforms and sensors: A survey. ISPRS J. Photogramm. Remote Sens. 2016, 115, 22–36.

- Navarro-Serment, L.E.; Mertz, C.; Hebert, M. Pedestrian Detection and Tracking Using Three-Dimensional LADAR Data. In Field and Service Robotics; Springer Tracts in Advanced Robotics; Springer: Berlin, Germany, 2010; pp. 103–112. ISBN 978-3-642-13407-4.

- Cui, J.; Zha, H.; Zhao, H.; Shibasaki, R. Laser-based detection and tracking of multiple people in crowds. Comput. Vis. Image Underst. 2007, 106, 300–312.

- Sato, S.; Hashimoto, M.; Takita, M.; Takagi, K.; Ogawa, T. Multilayer lidar-based pedestrian tracking in urban environments. In Proceedings of the 2010 IEEE Intelligent Vehicles Symposium, San Diego, CA, USA, 21–24 June 2010; pp. 849–854.

- Tarko, P.; Ariyur, K.B.; Romero, M.A.; Bandaru, V.K.; Liu, C. Stationary LiDAR for Traffic and Safety Applications–Vehicles Interpretation and Tracking; USDOT: Washington, DC, USA, 2014.

- Börcs, A.; Nagy, B.; Benedek, C. On board 3D object perception in dynamic urban scenes. In Proceedings of the 2013 IEEE 4th International Conference on Cognitive Infocommunications (CogInfoCom), Budapest, Hungary, 2–5 December 2013; pp. 515–520.

- Toth, C.K.; Grejner-Brzezinska, D. Extracting dynamic spatial data from airborne imaging sensors to support traffic flow estimation. ISPRS J. Photogramm. Remote Sens. 2006, 61, 137–148.

- Statistical Summary of Commercial Jet Airplane Accidents Worldwide Operations. Available online: http://www.boeing.com/resources/boeingdotcom/company/about_bca/pdf/statsum.pdf (accessed on 16 December 2017).

- Mund, J.; Frank, M.; Dieke-Meier, F.; Fricke, H.; Meyer, L.; Rother, C. Introducing LiDAR Point Cloud-based Object Classification for Safer Apron Operations. In Proceedings of the International Symposium on Enhanced Solutions for Aircraft and Vehicle Surveillance Applications, Berlin, Germany, 7–8 April 2016.

- Mund, J.; Zouhar, A.; Meyer, L.; Fricke, H.; Rother, C. Performance Evaluation of LiDAR Point Clouds towards Automated FOD Detection on Airport Aprons. In Proceedings of the 5th International Conference on Application and Theory of Automation in Command and Control Systems, Toulouse, France, 30 September–2 October 2015.

- Koppanyi, Z.; Toth, C. Estimating Aircraft Heading based on Laserscanner Derived Point Clouds. In Proceedings of the ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Munich, Germany, 25–27 March 2015; Volume II-3/W4, pp. 95–102.

- Toth, C.; Jozkow, G.; Koppanyi, Z.; Young, S.; Grejner-Brzezinska, D. Monitoring Aircraft Motion at Airports by LiDAR. In Proceedings of the ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Prague, Czech Republic, 12–19 July 2016; Volume III-1, pp. 159–165.

- VLP-16 Manual. Available online: http://velodynelidar.com/vlp-16.html (accessed on 15 December 2017).

- HDL-32E Manual. Available online: http://velodynelidar.com/hdl-32e.html (accessed on 15 December 2017).

- Wang, D.Z.; Posner, I.; Newman, P. Model-free Detection and Tracking of Dynamic Objects with 2D Lidar. Int. J. Robot. Res. 2015, 34, 1039–1063.

- Pomerleau, F.; Colas, F.; Siegwart, R. A Review of Point Cloud Registration Algorithms for Mobile Robotics. Found. Trends Robot. 2015, 4, 1–104.

- Moosmann, F.; Stiller, C. Joint self-localization and tracking of generic objects in 3D range data. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 1146–1152.

- Dewan, A.; Caselitz, T.; Tipaldi, G.D.; Burgard, W. Motion-based detection and tracking in 3D LiDAR scans. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 4508–4513.

- Kaestner, R.; Maye, J.; Pilat, Y.; Siegwart, R. Generative object detection and tracking in 3D range data. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, Saint Paul, MN, USA, 14–18 May 2012; pp. 3075–3081.

- Dierenbach, K.; Jozkow, G.; Koppanyi, Z.; Toth, C. Supporting Feature Extraction Performance Validation by Simulated Point Cloud. In Proceedings of the ASPRS 2015 Annual Conference, Tampa, FL, USA, 4–8 May 2015.

- Saez, J.M.; Escolano, F. Entropy Minimization SLAM Using Stereo Vision. In Proceedings of the 2005 IEEE International Conference on Robotics and Automation, Barcelona, Spain, 18–22 April 2005; pp. 36–43.

- Saez, J.M.; Hogue, A.; Escolano, F.; Jenkin, M. Underwater 3D SLAM through entropy minimization. In Proceedings of the 2006 IEEE International Conference on Robotics and Automation, Orlando, FL, USA, 15–19 May 2006; pp. 3562–3567.

- Aarts, E.; Korst, J.; Michiels, W. Simulated Annealing. In Search Methodologies; Springer: Boston, MA, USA, 2005; pp. 187–210. ISBN 978-0-387-23460-1.

- Chou, C.P.; Cheng, H.J. Aircraft Taxiing Pattern in Chiang Kai-Shek International Airport. In Airfield and Highway Pavement: Meeting Today’s Challenges with Emerging Technologies, Proceedings of the Airfield and Highway Pavements Specialty Conference, Atlanta, GA, USA, 30 April–3 May 2006; American Society of Civil Engineers: Reston, VA, USA, 2016.

- Sholz, F. Statistical Extreme Value Analysis of ANC Taxiway Centerline Deviation for 747 Aircraft; FAA/Boeing Cooperative Research and Development Agreement; Federal Aviation Administration: Washington, DC, USA, 2003.

- Sholz, F. Statistical Extreme Value Analysis of JFK Taxiway Centerline Deviation for 747 Aircraft; FAA/Boeing Cooperative Research and Development Agreement; Federal Aviation Administration: Washington, DC, USA, 2003.

- Sholz, F. Statistical Extreme Value Concerning Risk of Wingtip to Wingtip or Fixed Object Collision for Taxiing Large Aircraft; Federal Aviation Administration: Washington, DC, USA, 2005.

| Constant Velocity | Constant Acceleration | General or Polynomial |
|---|---|---|
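The table above names the three motion-model families. For reference, their standard translation-only kinematic forms are given below, together with the corresponding object-space mapping of a point p_i captured at time t_i relative to a reference epoch t_0. This is a hedged summary that assumes no rotation (heading change) is modeled, which may differ from the paper's exact parameterization; the CT method uses the polynomial form with n = 4.

```latex
\begin{aligned}
\text{CV:} \quad & \mathbf{x}(t) = \mathbf{x}_0 + \mathbf{v}\,t \\
\text{CA:} \quad & \mathbf{x}(t) = \mathbf{x}_0 + \mathbf{v}\,t + \tfrac{1}{2}\,\mathbf{a}\,t^2 \\
\text{Polynomial (degree } n\text{):} \quad & \mathbf{x}(t) = \sum_{k=0}^{n} \mathbf{c}_k\, t^k \\
\text{Object space:} \quad & \mathbf{p}_i^{\mathrm{obj}} = \mathbf{p}_i - \bigl(\mathbf{x}(t_i) - \mathbf{x}(t_0)\bigr)
\end{aligned}
```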

| Test | Case | Goal | Sensors | Field of View * | Object–Sensor Distance |
|---|---|---|---|---|---|
| #1 | Taxiing | 2D performance at taxiing | 3 vertical VLP-16s, 1 horizontal HDL-32E | 70 m | 20 m |
| #2 | Landing | 3D performance at landing | 4 vertical VLP-16s, 1 horizontal HDL-32E | 100 m | 33 m |

| Method | Parameters |
|---|---|
| Center of Gravity (COG) | The COG points are filtered with a Gaussian kernel and resampled with spline interpolation |
| Volume Minimization with CA motion model (VM-CA) | Cube size is 1 m; other parameters are presented in Section 2.6. |
| Refinement with Cube Trajectories (CT) | Initial reconstruction using a CV model and simulated annealing (SA); the motion model is a fourth-degree polynomial |
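The Gaussian-kernel filtering listed for the COG method can be sketched as follows. This is an assumed form (normalized weights evaluated at each sample time, with σ in seconds) applied to a synthetic COG track with alternating ±0.3 m jitter; the spline resampling step is not shown.

```python
import math


def gaussian_smooth(times, values, sigma):
    """Smooth a time series with a normalised Gaussian kernel (sigma in the units of `times`)."""
    smoothed = []
    for t0 in times:
        weights = [math.exp(-0.5 * ((t - t0) / sigma) ** 2) for t in times]
        total = sum(weights)
        smoothed.append(sum(w * v for w, v in zip(weights, values)) / total)
    return smoothed


# Synthetic COG x-coordinates: 5 m/s motion sampled every 0.1 s, with +/-0.3 m alternating
# jitter standing in for different body parts being captured at different epochs.
times = [0.1 * k for k in range(41)]
truth = [5.0 * t for t in times]
raw = [x + 0.3 * (-1) ** k for k, x in enumerate(truth)]
smoothed = gaussian_smooth(times, raw, sigma=0.2)

interior = range(10, 31)  # skip the ends, where the kernel is one-sided
print(max(abs(raw[k] - truth[k]) for k in interior))
print(max(abs(smoothed[k] - truth[k]) for k in interior))
```

Only interior samples are compared, because near the ends of the track the kernel becomes one-sided and no longer preserves a linear trend.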

| Test | Average of Absolute Residuals, X (m/s) | Average of Absolute Residuals, Y (m/s) | Average of Absolute Residuals, Z (m/s) | Std. Dev. of Absolute Residuals, X (m/s) | Std. Dev. of Absolute Residuals, Y (m/s) | Std. Dev. of Absolute Residuals, Z (m/s) |
|---|---|---|---|---|---|---|
| #1 Taxiing | 0.69 | 0.06 | - | 0.44 | 0.00 | - |
| #2 Landing | 0.67 | 0.44 | 0.51 | 0.17 | 0.08 | 0.11 |
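For completeness, the per-axis statistics reported in the table can be computed as follows. The residual values are hypothetical, and the sketch assumes the sample (n-1) standard deviation; which estimator the paper used is not stated here.

```python
import statistics


def abs_residual_stats(residuals):
    """Mean and sample standard deviation of absolute residuals (needs at least 2 values)."""
    absolute = [abs(r) for r in residuals]
    return statistics.mean(absolute), statistics.stdev(absolute)


# Hypothetical velocity residuals (m/s) for one axis.
residuals_x = [0.5, -0.7, 0.9, -0.5]
mean_abs, sd_abs = abs_residual_stats(residuals_x)
print(round(mean_abs, 2), round(sd_abs, 2))
```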

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Koppanyi, Z.; Toth, C.K. Object Tracking with LiDAR: Monitoring Taxiing and Landing Aircraft. Appl. Sci. 2018, 8, 234. https://doi.org/10.3390/app8020234
